#relative outperformance scans

MIT researchers develop advanced machine learning models to detect pancreatic cancer

MIT CSAIL researchers develop advanced machine-learning models that outperform current methods in detecting pancreatic ductal adenocarcinoma.

The first documented case of pancreatic cancer dates from the 18th century. Since then, researchers have embarked on a long and difficult journey to better understand this elusive and deadly disease. To date, early intervention is the most effective cancer treatment. Unfortunately, because the pancreas lies deep within the abdomen, cancer there is particularly difficult to detect early.

Scientists from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL), together with Limor Appelbaum, a staff scientist in the Department of Radiation Oncology at Beth Israel Deaconess Medical Center (BIDMC), wanted to better identify potential high-risk patients. They set out to create two machine-learning models for the early detection of pancreatic ductal adenocarcinoma (PDAC), the most common form of pancreatic cancer.

To gain access to a large and diverse database, the team collaborated with a federated network company and used electronic health record data from multiple institutions across the United States. This vast data set contributed to the models' reliability and generalizability, making them applicable to a wide range of populations, geographical locations, and demographic groups.

The two models, the “PRISM” neural network and the logistic regression model (a statistical technique for estimating probabilities), outperformed current methods. The team’s comparison showed that at the same threshold of five-times-higher relative risk, standard screening criteria identify about 10 percent of PDAC cases, while PRISM detects 35 percent.
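
The headline comparison is essentially a sensitivity measured at a fixed risk bar: rank patients by predicted risk, flag the largest top-ranked group whose PDAC rate is still at least five times a reference rate, and count what share of all PDAC cases that group contains. The Python sketch below illustrates that calculation under simplifying assumptions: the reference rate is taken to be the cohort's own base rate (the paper may define relative risk differently), ties in scores are broken by input order, and the function name `sensitivity_at_relative_risk` is hypothetical rather than taken from the PRISM code.

```python
from typing import Sequence


def sensitivity_at_relative_risk(
    scores: Sequence[float],
    labels: Sequence[int],
    rr_target: float = 5.0,
) -> float:
    """Share of all cases in the widest top-ranked group whose case rate is
    at least `rr_target` times the cohort base rate.

    Hypothetical helper for illustration; not code from the PRISM paper.
    """
    if len(scores) != len(labels) or len(scores) == 0:
        raise ValueError("scores and labels must be non-empty and the same length")
    total_cases = sum(labels)
    if total_cases == 0:
        raise ValueError("the cohort contains no cases")
    # Assumption: relative risk is measured against the cohort's own base rate.
    base_rate = total_cases / len(labels)

    # Rank patients from highest to lowest predicted risk
    # (ties keep their input order, a simplification).
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)

    best = 0.0
    cases_flagged = 0
    for n_flagged, (_, label) in enumerate(ranked, start=1):
        cases_flagged += label
        group_rate = cases_flagged / n_flagged
        # Sensitivity never shrinks as the group widens, so the maximum is
        # reached at the widest cutoff that still clears the bar.
        if group_rate >= rr_target * base_rate:
            best = max(best, cases_flagged / total_cases)
    return best


# Toy cohort: 100 patients, 4 cases, strictly decreasing scores.
scores = [1.0 - i / 100 for i in range(100)]
labels = [1, 1, 0, 0, 0] + [0] * 93 + [1, 1]
print(sensitivity_at_relative_risk(scores, labels, rr_target=5.0))  # 0.5
```

On this toy cohort, the widest top-ranked group that stays at five times the base rate captures two of the four cases, so the sensitivity is 0.5; lowering the bar to 1.0 flags everyone and captures all of them.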

Using AI to detect cancer risk is not a new phenomenon; algorithms analyze mammograms, CT scans for lung cancer, and assist in the analysis of Pap smear tests and HPV testing, to name a few applications.

“The PRISM models stand out for their development and validation on an extensive database of over 5 million patients, surpassing the scale of most prior research in the field,” says Kai Jia, an MIT PhD student in electrical engineering and computer science (EECS), MIT CSAIL affiliate, and first author on an open-access paper in eBioMedicine outlining the new work. “The model uses routine clinical and lab data to make its predictions, and the diversity of the U.S. population is a significant advancement over other PDAC models, which are usually confined to specific geographic regions, like a few health-care centers in the U.S. Additionally, using a unique regularization technique in the training process enhanced the models' generalizability and interpretability.”

“This report outlines a powerful approach to use big data and artificial intelligence algorithms to refine our approach to identifying risk profiles for cancer,” says David Avigan, a Harvard Medical School professor and the cancer center director and chief of hematology and hematologic malignancies at BIDMC, who was not involved in the study. “This approach may lead to novel strategies to identify patients with high risk for malignancy that may benefit from focused screening with the potential for early intervention.”

Prismatic perspectives

The journey toward the development of PRISM began over six years ago, fueled by firsthand experiences with the limitations of current diagnostic practices.

“Approximately 80-85 percent of pancreatic cancer patients are diagnosed at advanced stages, where cure is no longer an option,” says senior author Appelbaum, who is also a Harvard Medical School instructor and a radiation oncologist. “This clinical frustration sparked the idea to delve into the wealth of data available in electronic health records (EHRs).”

The CSAIL group’s close collaboration with Appelbaum made it possible to understand the combined medical and machine learning aspects of the problem better, eventually leading to a much more accurate and transparent model. “The hypothesis was that these records contained hidden clues — subtle signs and symptoms that could act as early warning signals of pancreatic cancer,” she adds. “This guided our use of federated EHR networks in developing these models, for a scalable approach for deploying risk prediction tools in health care.”

Both PrismNN and PrismLR models analyze EHR data, including patient demographics, diagnoses, medications, and lab results, to assess PDAC risk. PrismNN uses artificial neural networks to detect intricate patterns in data features like age, medical history, and lab results, yielding a risk score for PDAC likelihood. PrismLR uses logistic regression for a simpler analysis, generating a probability score of PDAC based on these features. Together, the models offer a thorough evaluation of different approaches in predicting PDAC risk from the same EHR data.
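
For the logistic-regression half of the pair, the general recipe is standard: multiply each feature by a learned weight, add an intercept, and pass the sum through the logistic (sigmoid) function to get a probability between 0 and 1. A minimal Python sketch follows; the feature names and coefficients are invented placeholders, not PrismLR's learned values, and the real model's feature encoding is not described in this article.

```python
import math
from typing import Mapping


def logistic_risk(
    features: Mapping[str, float],
    weights: Mapping[str, float],
    bias: float,
) -> float:
    """Return sigmoid(bias + sum_j w_j * x_j); features without a weight count as 0."""
    z = bias + sum(weights.get(name, 0.0) * value for name, value in features.items())
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical, made-up coefficients for illustration only (not PrismLR's).
example_weights = {"age_decades": 0.4, "diabetes": 0.7, "visits_last_year": 0.05}
patient = {"age_decades": 6.8, "diabetes": 1.0, "visits_last_year": 12.0}
print(round(logistic_risk(patient, example_weights, bias=-9.0), 4))
```

Because each coefficient acts on a single named feature, a reader can see directly how much a given input moves the score, which is the interpretability advantage the team attributes to logistic regression.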

One key to gaining physicians' trust, the team notes, is understanding how the models work, known in the field as interpretability. The scientists pointed out that while logistic regression models are inherently easier to interpret, recent advances have made deep neural networks somewhat more transparent. This helped the team narrow the thousands of potentially predictive features derived from a single patient's EHR down to approximately 85 critical indicators. These indicators, which include patient age, a diabetes diagnosis, and an increased frequency of physician visits, were discovered automatically by the model but match physicians' understanding of the risk factors associated with pancreatic cancer.
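
The article credits a "unique regularization technique" for part of the models' generalizability and interpretability but does not describe it, so the sketch below substitutes a different, widely used technique: L1 (lasso) regularization for logistic regression, fitted by proximal gradient descent. It shows how a penalty on coefficient size can drive most coefficients to exactly zero, leaving a short list of surviving features, which is one common way a large feature set gets narrowed to a few dozen indicators. It is not PRISM's method; the function names, the penalty strength `lam`, the step size `lr`, and the toy data are all illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Stand-in technique (L1 / lasso), NOT the PRISM paper's regularizer.


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _soft_threshold(v: float, t: float) -> float:
    # Proximal operator of t * |w|: shrinks v toward 0, clipping small values to exactly 0.
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def l1_logistic_fit(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    lam: float = 0.05,
    lr: float = 0.5,
    steps: int = 500,
) -> Tuple[List[float], float]:
    """Minimize mean log loss + lam * sum(|w_j|) by proximal gradient descent (ISTA).

    The intercept b is not penalized.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(steps):
        # Residuals p_i - y_i under the current model.
        resid = [_sigmoid(b + sum(wj * xj for wj, xj in zip(w, row))) - yi
                 for row, yi in zip(X, y)]
        grad_w = [sum(r * row[j] for r, row in zip(resid, X)) / n for j in range(d)]
        grad_b = sum(resid) / n
        # Plain gradient step, then soft-thresholding for the L1 penalty.
        w = [_soft_threshold(wj - lr * gj, lr * lam) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def selected_features(names: Sequence[str], w: Sequence[float]) -> List[str]:
    # Keep only the features whose coefficient survived the penalty.
    return [name for name, wj in zip(names, w) if wj != 0.0]


# Toy data: "signal" tracks the label; "noise" is balanced within each class.
X = [[1, 1], [1, -1], [1, 1], [1, -1], [-1, 1], [-1, -1], [-1, 1], [-1, -1]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = l1_logistic_fit(X, y)
print(selected_features(["signal", "noise"], w))  # ['signal']
```

Raising `lam` prunes more aggressively: on the same toy data, a penalty of 1.0 zeroes out both coefficients.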

The path forward

Despite the promise of the PRISM models, as with all research, some parts are still a work in progress. The models are currently built on U.S. data alone, so they will need testing and adaptation before they can be used globally. The path forward, the team notes, includes expanding the models' applicability to international datasets and integrating additional biomarkers for more refined risk assessment.

“A subsequent aim for us is to facilitate the models' implementation in routine health care settings. The vision is to have these models function seamlessly in the background of health care systems, automatically analyzing patient data and alerting physicians to high-risk cases without adding to their workload,” says Jia. “A machine-learning model integrated with the EHR system could empower physicians with early alerts for high-risk patients, potentially enabling interventions well before symptoms manifest. We are eager to deploy our techniques in the real world to help all individuals enjoy longer, healthier lives.”

Jia wrote the paper alongside Appelbaum and MIT EECS Professor and CSAIL Principal Investigator Martin Rinard, who are both senior authors of the paper. Researchers on the paper were supported during their time at MIT CSAIL, in part, by the Defense Advanced Research Projects Agency, Boeing, the National Science Foundation, and Aarno Labs. TriNetX provided resources for the project, and the Prevent Cancer Foundation also supported the team.

Source: MIT

New Hope for Early Pancreatic Cancer Intervention via AI-based Risk Prediction - Technology Org

New Post has been published on https://thedigitalinsider.com/new-hope-for-early-pancreatic-cancer-intervention-via-ai-based-risk-prediction-technology-org/

The first documented case of pancreatic cancer dates back to the 18th century. Since then, researchers have undertaken a protracted and challenging odyssey to understand the elusive and deadly disease. To date, there is no better cancer treatment than early intervention. Unfortunately, because the pancreas is nestled deep within the abdomen, cancer there is particularly hard to detect early.

Image credit: MIT CSAIL

MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) scientists, alongside Limor Appelbaum, a staff scientist in the Department of Radiation Oncology at Beth Israel Deaconess Medical Center (BIDMC), were eager to better identify potential high-risk patients. They set out to develop two machine-learning models for early detection of pancreatic ductal adenocarcinoma (PDAC), the most common form of the cancer.

To access a broad and diverse database, the team synced up with a federated network company, using electronic health record data from various institutions across the United States. This vast pool of data helped ensure the models’ reliability and generalizability, making them applicable across a wide range of populations, geographical locations, and demographic groups.

The two models, the “PRISM” neural network and the logistic regression model (a statistical technique for estimating probabilities), outperformed current methods. The team’s comparison showed that at the same threshold of five-times-higher relative risk, standard screening criteria identify about 10 percent of PDAC cases, while PRISM detects 35 percent.

Using AI to detect cancer risk is not a new phenomenon; algorithms analyze mammograms, CT scans for lung cancer, and assist in the analysis of Pap smear tests and HPV testing, to name a few applications. “The PRISM models stand out for their development and validation on an extensive database of over 5 million patients, surpassing the scale of most prior research in the field,” says Kai Jia, an MIT PhD student in electrical engineering and computer science (EECS), MIT CSAIL affiliate, and first author on an open-access paper in eBioMedicine outlining the new work.

“The model uses routine clinical and lab data to make its predictions, and the diversity of the U.S. population is a significant advancement over other PDAC models, which are usually confined to specific geographic regions, like a few health-care centers in the U.S. Additionally, using a unique regularization technique in the training process enhanced the models’ generalizability and interpretability.”

“This report outlines a powerful approach to use big data and artificial intelligence algorithms to refine our approach to identifying risk profiles for cancer,” says David Avigan, a Harvard Medical School professor and the cancer center director and chief of hematology and hematologic malignancies at BIDMC, who was not involved in the study. “This approach may lead to novel strategies to identify patients with high risk for malignancy that may benefit from focused screening with the potential for early intervention.”

Prismatic perspectives

The journey toward the development of PRISM began over six years ago, fueled by firsthand experiences with the limitations of current diagnostic practices. “Approximately 80-85 percent of pancreatic cancer patients are diagnosed at advanced stages, where cure is no longer an option,” says senior author Appelbaum, who is also a Harvard Medical School instructor and a radiation oncologist. “This clinical frustration sparked the idea to delve into the wealth of data available in electronic health records (EHRs).”

The CSAIL group’s close collaboration with Appelbaum made it possible to understand the combined medical and machine learning aspects of the problem better, eventually leading to a much more accurate and transparent model. “The hypothesis was that these records contained hidden clues — subtle signs and symptoms that could act as early warning signals of pancreatic cancer,” she adds. “This guided our use of federated EHR networks in developing these models, for a scalable approach for deploying risk prediction tools in health care.”

Both PrismNN and PrismLR models analyze EHR data, including patient demographics, diagnoses, medications, and lab results, to assess PDAC risk. PrismNN uses artificial neural networks to detect intricate patterns in data features like age, medical history, and lab results, yielding a risk score for PDAC likelihood. PrismLR uses logistic regression for a simpler analysis, generating a probability score of PDAC based on these features. Together, the models offer a thorough evaluation of different approaches in predicting PDAC risk from the same EHR data.

One key to gaining physicians’ trust, the team notes, is understanding how the models work, known in the field as interpretability. The scientists pointed out that while logistic regression models are inherently easier to interpret, recent advances have made deep neural networks somewhat more transparent. This helped the team narrow the thousands of potentially predictive features derived from a single patient’s EHR down to approximately 85 critical indicators. These indicators, which include patient age, a diabetes diagnosis, and an increased frequency of physician visits, were discovered automatically by the model but match physicians’ understanding of the risk factors associated with pancreatic cancer.

The path forward

Despite the promise of the PRISM models, as with all research, some parts are still a work in progress. The models are currently built on U.S. data alone, so they will need testing and adaptation before they can be used globally. The path forward, the team notes, includes expanding the models’ applicability to international datasets and integrating additional biomarkers for more refined risk assessment.

“A subsequent aim for us is to facilitate the models’ implementation in routine health care settings. The vision is to have these models function seamlessly in the background of health care systems, automatically analyzing patient data and alerting physicians to high-risk cases without adding to their workload,” says Jia. “A machine-learning model integrated with the EHR system could empower physicians with early alerts for high-risk patients, potentially enabling interventions well before symptoms manifest. We are eager to deploy our techniques in the real world to help all individuals enjoy longer, healthier lives.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Adenocarcinoma#ai#alerts#Algorithms#Analysis#applications#approach#artificial#Artificial Intelligence#artificial intelligence (AI)#artificial neural networks#background#Beth Israel Deaconess Medical Center#Big Data#biomarkers#Biotechnology news#Cancer#cancer treatment#Collaboration#comparison#computer#Computer Science#data#Database#datasets#dates#detection#development#diabetes

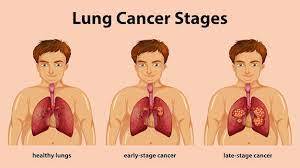

Lung Cancer: Early Signs, Symptoms, Stages

Lung Cancer: The Leading Cause of Cancer Death

Lung cancer has become the leading cause of cancer death, affecting not only patients but also their spouses, friends, and neighbors, and causing distress for countless families. In the US, lung cancer overtook breast cancer as the leading cause of cancer death in women in 1987. Lung cancer accounts for about one-fourth of all cancer deaths in America, more than prostate, breast, and colon cancers combined. In 2017, almost 160,000 Americans died of lung cancer.

Causes of Lung Cancer

The exact causes of lung cancer are still being studied. Several risk factors have been identified as key contributors to the development of cancerous cells, including smoking, exposure to air pollution, and genetic factors.

Does Smoking Cause Lung Cancer?

Smoking tobacco is the leading cause of lung cancer in humans. In 1876, a machine was invented to mass-produce cigarettes, making tobacco products affordable to nearly everyone. Before this invention, lung cancer was relatively rare. As mass-produced cigarettes spread, smoking rose steeply, along with a parallel rise in lung cancer. Today, about 90% of all lung cancers are linked to smoking. Radon gas, air pollution, toxic chemicals, and other factors account for the remaining 10%.

Cigarettes and tobacco smoke contain more than 70 cancer-causing compounds (carcinogens). Carcinogens found in tobacco smoke include:

Lead (a highly toxic metal)

Arsenic (used in pesticides)

Cadmium (used in batteries)

Isoprene (used to make synthetic rubber)

Benzene (a component of gasoline)

Cigar smoke is high in tobacco-specific nitrosamines (TSNAs), which are especially potent carcinogens.

Lung Cancer and Cilia

Tobacco smoke damages and sometimes destroys cilia, the tiny hairlike projections on the cells lining the airways. Cilia normally sweep away toxins, carcinogens, viruses, and bacteria. When smoke damages or destroys the cilia, these harmful substances can build up in the lungs, potentially leading to infections and lung cancer.

Signs and Symptoms of Lung Cancer

Unfortunately, lung cancer often causes no symptoms in its early stages, or causes vague symptoms that people tend to ignore. About 25% of people with lung cancer have no symptoms at diagnosis; their cancer is found on a chest X-ray or CT scan done during a routine checkup or for another reason. Symptoms of lung cancer can include:

A cough that does not go away or keeps coming back

Fatigue

Unexplained weight loss

Shortness of breath or wheezing

Coughing up blood or bloody mucus

Chest pain

Three Standard Approaches to Lung Cancer Screening

Lung cancer screening typically involves three methods.

Physical Examination

A physical exam looks for wheezing, shortness of breath, coughing, pain, and other possible signs of lung cancer. Depending on how far the cancer has progressed, other signs may include lack of sweating, swollen neck veins, facial swelling, unusually small pupils, and other clues. The exam also includes a detailed review of the patient's smoking history, along with a chest X-ray.

Sputum Cytology

Sputum cytology is the microscopic examination of the mucus the patient coughs up (sputum).

Spiral Computed Tomography (CT) Imaging

A spiral CT scan builds a detailed picture of the structures inside the body. The scanner captures a series of detailed X-ray images of the body's main internal structures, and a computer combines them into three-dimensional views of the internal organs. These images may reveal tumors.

One scientific report suggests that people between the ages of 55 and 74 who have smoked at least one pack of cigarettes a day for at least 30 years may benefit from a spiral CT scan of the lungs.
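
The criteria in the paragraph above can be written as a simple check. The Python sketch below encodes them literally (age 55 to 74, at least one pack a day, at least 30 years of smoking) and adds the common pack-years measure (packs per day multiplied by years smoked) for reference. The function names are hypothetical; this illustrates the stated rule only, is not a clinical screening tool, and published screening guidelines may use different thresholds.

```python
def pack_years(packs_per_day: float, years_smoked: float) -> float:
    """Common summary of smoking exposure: packs per day times years smoked."""
    return packs_per_day * years_smoked


def meets_described_spiral_ct_criteria(
    age: int, packs_per_day: float, years_smoked: float
) -> bool:
    """Hypothetical helper: literal reading of the criteria in the text, i.e.
    age 55-74 and at least one pack a day for at least 30 years.
    Illustrative only, not clinical advice."""
    in_age_range = 55 <= age <= 74
    long_term_heavy_smoking = packs_per_day >= 1.0 and years_smoked >= 30
    return in_age_range and long_term_heavy_smoking


print(meets_described_spiral_ct_criteria(62, 1.0, 35))  # True
print(meets_described_spiral_ct_criteria(62, 0.5, 40))  # False
print(pack_years(1.0, 30))  # 30.0
```

Someone who smoked two packs a day for 15 years also reaches 30 pack-years but does not satisfy the literal wording above, which is why the check tests packs per day and years separately instead of using pack-years alone.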

Diagnosis of Lung Cancer

If screening results suggest that a person has lung cancer, a pathologist performs the definitive diagnostic tests, examining lung cells obtained from the patient's sputum or from a biopsy in order to classify the cancer and determine its stage.

Lung Cancer Biopsy

As noted above, the most reliable way to confirm a lung cancer diagnosis is usually to obtain a tissue sample from the suspected tumor. Most lung biopsies are done with a needle, during bronchoscopy, or by surgically removing tissue. Additional tests may be done to learn how far the disease has spread.

For more on the types and stages of lung cancer, including stage IV lung cancer, see the sections below.

Types of Lung Cancer

Lung cancers fall into two main groups: small cell lung cancer and non-small cell lung cancer. Fewer than 5% of lung cancers are carcinoid tumors; other rare types include adenoid cystic carcinomas, lymphomas, and sarcomas. Notably, cancers that start elsewhere in the body and spread to the lungs are not classified as lung cancers.

Non-Small Cell Lung Cancer

Non-small cell lung cancer is the most common type, accounting for roughly 90% of lung cancers. It generally grows and spreads more slowly than small cell lung cancer.

Small Cell Lung Cancer

Small cell lung cancer, also called oat cell cancer, accounts for nearly 10% of lung cancers. This type tends to spread quickly.

Stages of Lung Cancer: 0 to IV

Once the type of lung cancer is known, the cancer is staged. Staging describes how far the cancer has spread in the body, including to lymph nodes or to distant organs such as the brain. Small cell and non-small cell lung cancers are staged differently. The stages below are adapted from the National Cancer Institute's information on lung cancer staging; other staging systems, such as the TNM system described by the American Cancer Society, may classify cases differently.

Small Cell Lung Cancer Stages

Limited Stage: The cancer is confined to one side of the chest, usually involving one lung and nearby lymph nodes. About one-third of people with small cell lung cancer have limited-stage disease at diagnosis.

Extensive Stage: The cancer has spread beyond one lung, to both lungs, to lymph nodes on the other side of the chest, or to distant sites. About two-thirds of people with small cell lung cancer have extensive-stage disease at diagnosis.

Non-Small Cell Lung Cancer Stages

Occult (Hidden) Stage: Cancer cells are found in sputum or other test samples, but no tumor can be located.

Stage 0 (Carcinoma in situ): Cancer cells are found only in the top layers of cells lining the airways and have not invaded deeper lung tissue or spread outside the lung.

Stage I: A small tumor (less than 3 centimeters across) that has not spread to nearby tissue, lymph nodes, or the main branches of the airways (bronchi).

Stage II: Stage II lung cancer can be defined in several ways. One possibility is that the cancer has spread to lymph nodes near the lungs.

Stage IIA: Tumors 3 to 5 centimeters across are classified as Stage IIA, although other criteria can also place a cancer in this stage.

Stage IIB: Tumors 5 to 7 centimeters across are classified as Stage IIB; other factors can also affect this classification.

Stage III: Like Stage II, Stage III can be defined in several ways. One definition is that cancer is present both in the lung and in lymph nodes in the chest. Stage III is divided into two subgroups.

Stage IIIA: The cancer has spread to lymph nodes on the same side of the chest as the tumor.

Stage IIIB: The cancer has spread to lymph nodes on the opposite side of the chest or above the collarbone.

Stage IV: This is the most advanced stage of lung cancer. Tumors can be any size, but one or more of the following is true:

The cancer has spread to the other lung.

Cancer cells are found in the fluid surrounding the lungs.

Cancer cells are found in the fluid surrounding the heart.

Lung Cancer Survival Rates and Treatment Options

Lung cancer can hide in its early stages and carries a poor outlook once it is advanced. Survival rates vary by cancer type. According to American Cancer Society statistics, the five-year survival rate for non-small cell lung cancer is about 24% across all stages, compared with about 6% for small cell lung cancer. These figures are substantially higher when the disease is found and treated while it is still localized or regional; distant-stage lung cancer has the worst outlook.

Surgical Interventions for Lung Cancer

For early-stage (stage 0 or some stage I) non-small cell lung cancer, surgery may be a treatment option. The operation removes part or, in some cases, all of the lung containing the cancer and sometimes results in complete remission. However, many patients still need chemotherapy, radiation therapy, or both to destroy any cancer cells the surgery may have missed. Small cell lung cancers, by contrast, are rarely caught early, so surgery and other treatments for them are usually palliative and offer only a limited chance of cure.

Lung Cancer Treatment Approaches

Standard treatment for both small cell and non-small cell lung cancer often includes chemotherapy and, in some cases, radiation therapy and surgery. Many patients with advanced disease receive a combination of these treatments, tailored to their particular situation and based on their oncologists' recommendations.

Targeted Therapy for Lung Cancer

Targeted therapies aim to stop or slow the growth of cancer cells. Some block the formation of the blood vessels that tumors need to survive and grow; others disrupt the molecular signals that cancer cells need to multiply and spread.

Clinical Trials for Lung Cancer

Beyond these therapies, many research studies and clinical trials are open to eligible patients. Some may be available locally, giving patients the chance to try new treatments and protocols for managing lung cancer.

Life After a Lung Cancer Diagnosis

A lung cancer diagnosis often brings a flood of emotions, especially feelings of being overwhelmed and hopeless. Still, ongoing research offers hope for survival and a longer life with appropriate treatment. Scientific evidence shows that people who adopt a healthy lifestyle and quit smoking have a better prognosis than those who do not.

Lung Cancer and Passive Smoking

Smokers endanger not only their own health but also the health of nonsmokers around them, including spouses, children, and partners. Nonsmokers exposed to secondhand smoke have a 20% to 30% higher risk of developing lung cancer.

Occupational Exposure and Lung Cancer

Although smoking is the leading cause of lung cancer, a wide range of other chemicals and compounds also raise the risk. Exposure to asbestos, uranium, arsenic, benzene, and others significantly increases the likelihood of developing lung cancer. Asbestos exposure, for example, can lead to lung cancer or mesothelioma (a cancer of the lining around the lungs) decades after the first contact, so the risk persists over a long period.

Radon Gas and Lung Cancer

Radon gas is the second leading cause of lung cancer. It occurs naturally but can seep into homes, building up in basements and crawl spaces. Although it is colorless and odorless, it can be detected with simple, inexpensive test kits. Smokers exposed to radon have a higher risk of developing lung cancer than nonsmokers with the same exposure.

Air Pollution and Lung Cancer

Some researchers have proposed a link between air pollution and the development of lung cancer. Several studies provide strong evidence that air pollutants, including diesel exhaust, may contribute substantially to lung cancer in some individuals. Conservative estimates suggest that about 5% of lung cancer cases can be attributed to air pollution.

Other Risk Factors for Lung Cancer

Despite growing knowledge about lung cancer, many questions remain unanswered. One puzzle is why lung cancer runs in some families even when no obvious risk factors are present. Likewise, some patients develop lung cancer without any readily identifiable risk factor. There is a tentative link between drinking water with high arsenic levels and an increased risk of lung cancer, but the underlying mechanism is not yet understood. It also remains unclear why adenocarcinoma makes up a larger share of lung cancers in non-smokers than in smokers.

Lung Cancer Prevention

Most cases of lung cancer can be prevented by not smoking and by avoiding secondhand smoke. People who quit smoking see their risk of lung cancer fall substantially over the years, gradually approaching that of people who have never smoked. In addition, limiting exposure to other risk factors, including chemicals such as benzene and asbestos, and reducing exposure to air pollution offer further ways to prevent lung cancer in specific groups of people.

Selecting Pairs Using Principal Component Analysis

Pairs trading is a market-neutral strategy that involves identifying two correlated securities and taking positions based on their relative price movements. The concept behind pairs trading is to identify pairs of assets that historically exhibit a high degree of correlation, meaning they tend to move in tandem. However, when a temporary divergence occurs between the prices of the two assets, a pairs trader will take a long position in the underperforming asset and a short position in the outperforming asset, anticipating that the prices will converge again. This strategy seeks to profit from the reversion to the mean in the relationship between the two assets, regardless of the overall direction of the broader market.
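The mean-reversion rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of any particular paper. The spread is taken as the difference of log prices with a hedge ratio of 1, and it is normalised by a trailing 30-day mean and standard deviation. A position opens when the z-score moves beyond ±2. The function names, thresholds and toy prices are all hypothetical.

```python
import math
from statistics import mean, stdev


def spread_zscores(prices_a, prices_b, window=30):
    """Z-score of the log-price spread log(A) - log(B) against a trailing window.

    Assumes a hedge ratio of 1. Entries are None until `window` observations
    are available; `window` must be at least 2 for stdev() to work.
    """
    spread = [math.log(a) - math.log(b) for a, b in zip(prices_a, prices_b)]
    zscores = []
    for i in range(len(spread)):
        if i + 1 < window:
            zscores.append(None)
            continue
        recent = spread[i + 1 - window : i + 1]  # last `window` spreads, today included
        mu, sigma = mean(recent), stdev(recent)
        zscores.append(0.0 if sigma == 0 else (spread[i] - mu) / sigma)
    return zscores


def pair_signal(z, entry=2.0):
    """Translate a z-score into a position on the pair (hypothetical thresholds)."""
    if z is None or abs(z) < entry:
        return "flat"
    if z > 0:
        return "short A, long B"  # A has outperformed B: bet on the gap closing
    return "long A, short B"      # A has underperformed B


# Toy data: A oscillates around B, then jumps 10% above it on the last day.
prices_b = [100.0] * 40
prices_a = [100.0 + (0.5 if i % 2 else -0.5) for i in range(39)] + [110.0]
z = spread_zscores(prices_a, prices_b)
print(pair_signal(z[-1]))  # prints: short A, long B
```

A real implementation would usually estimate the hedge ratio (for example by regression) instead of fixing it at 1. It would also add an exit rule, such as closing the trade when the z-score returns to zero.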

Pairs selection is one of the most critical steps in pairs trading. The success of this trading strategy heavily relies on the careful identification of suitable pairs of assets that exhibit a high correlation and a historically stable relationship. The selection process involves rigorous analysis of historical price data, statistical measures such as cointegration or correlation coefficients, and fundamental factors that drive the performance of the assets.
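As a rough illustration of the correlation screen mentioned above, the sketch below computes the Pearson correlation of daily returns for every pair in a small price table. It keeps only the pairs above a cutoff. The 0.9 cutoff, the function names and the tickers are hypothetical. High correlation alone does not establish the stable long-run relationship that cointegration tests look for, so a screen like this would normally serve only as a first filter.

```python
import math
from itertools import combinations


def daily_returns(prices):
    """Simple day-over-day returns."""
    return [p1 / p0 - 1.0 for p0, p1 in zip(prices, prices[1:])]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0  # a constant series has no defined correlation; treat as unrelated
    return cov / math.sqrt(vx * vy)


def candidate_pairs(price_table, min_corr=0.9):
    """Return (ticker_a, ticker_b, rho) for every pair with return correlation >= min_corr."""
    returns = {name: daily_returns(p) for name, p in price_table.items()}
    found = []
    for a, b in combinations(sorted(returns), 2):
        rho = pearson(returns[a], returns[b])
        if rho >= min_corr:
            found.append((a, b, round(rho, 3)))
    return sorted(found, key=lambda t: t[2], reverse=True)


# Hypothetical tickers: BBB is exactly 2x AAA, CCC tends to move the other way.
prices = {
    "AAA": [10.0, 11.0, 10.5, 11.5, 12.0, 11.8],
    "BBB": [20.0, 22.0, 21.0, 23.0, 24.0, 23.6],
    "CCC": [30.0, 29.0, 30.0, 29.0, 28.0, 29.0],
}
pairs = candidate_pairs(prices)
print(pairs)  # prints: [('AAA', 'BBB', 1.0)]
```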

Usually, stocks from the same industry are chosen for pairs trading. Reference [1] proposed a pairs selection method based on clusters identified by Principal Component Analysis (PCA). It pointed out,

We applied the unsupervised learning technique DB-SCAN algorithm for efficient pair selection which gives more number of pairs and better results than other algorithms like KNN algorithm.

We also used moving averages over 30 days rather than overall averages for more efficient prediction due to more relevant and recent results. We optimized our strategy at each step of pair trading computation to obtain overall optimized results which can be seen in our results.

The results show that our strategy is very effective against the standard Nifty-50 leading to good profits and pair selections. But, the model has slightly better performance in prediction spread and pairs than profitability. This is because profitability depends on various other market factors.

In short, PCA is an efficient method for identifying suitable pairs. Although the authors do not appear to have performed out-of-sample testing, we believe their results have merit.
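The post does not spell out how [1] combines PCA with DB-SCAN. One common arrangement is assumed here. Each stock is summarised by its loadings on the first few principal components of the return matrix, those loadings are clustered with DBSCAN, and candidate pairs are formed only within a cluster. The sketch implements DBSCAN from scratch and takes the PCA loadings as already computed. The tickers, loadings, `eps` and `min_samples` values are made up for illustration.

```python
import math
from itertools import combinations

NOISE = -1


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: returns a cluster id (0, 1, ...) or NOISE for each point.

    A point is a core point if at least `min_samples` points (itself included)
    lie within distance `eps`; clusters grow outward from core points.
    """
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def region(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = region(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            neighbours = region(j)
            if len(neighbours) >= min_samples:  # j is a core point too, so keep expanding
                queue.extend(neighbours)
        cluster += 1
    return labels


def pairs_within_clusters(tickers, labels):
    """Form candidate pairs only among stocks sharing a cluster; noise points are dropped."""
    clusters = {}
    for ticker, label in zip(tickers, labels):
        if label != NOISE:
            clusters.setdefault(label, []).append(ticker)
    return [p for members in clusters.values() for p in combinations(sorted(members), 2)]


# Hypothetical loadings of six stocks on the first two principal components.
tickers = ["T1", "T2", "T3", "T4", "T5", "T6"]
loadings = [(0.10, 0.20), (0.12, 0.21), (0.11, 0.19), (0.80, 0.75), (0.82, 0.77), (0.50, -0.40)]
labels = dbscan(loadings, eps=0.05, min_samples=2)
print(labels)  # prints: [0, 0, 0, 1, 1, -1]
print(pairs_within_clusters(tickers, labels))
```

The stock that DBSCAN labels as noise (T6 here) never enters a pair. Each surviving pair would still need the correlation, cointegration or spread checks described earlier before it is traded.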

Let us know what you think in the comments below or in the discussion forum.

References

[1] Vanshika Gupta, Vineet Kumar, Yuvraj Yuvraj, Manoj Kumar, Optimized Pair Trading Strategy using Unsupervised Machine Learning, 2023 IEEE 8th International Conference for Convergence in Technology (I2CT)

Article Source Here: Selecting Pairs Using Principal Component Analysis

from Harbourfront Technologies - Feed https://ift.tt/asuCVOo

A Discussion of mHSPC Treatment Options: Using Doublet and Triplet Treatments

In an interview with GU Oncology, Catherine Marshall, MD, MPH, an assistant professor of oncology at Johns Hopkins Medical Center, helped clarify recent changes in the treatment of metastatic hormone-sensitive prostate cancer (mHSPC). According to Dr. Marshall, mHSPC means two things:

(1) Confirmed metastatic disease on conventional scans (eg, CT or nuclear bone scans). The scans show lymphadenopathy (involved lymph nodes), visceral disease (metastases to soft-tissue sites other than lymph nodes), or bone lesions on bone scan.

(2) Recently diagnosed prostate adenocarcinoma that has not yet been treated with androgen deprivation therapy (ADT), the standard hormone therapy.

Dr. Marshall stated that the development and expanded use of imaging such as prostate-specific membrane antigen (PSMA) positron emission tomography (PET) is expected to increase the upstaging of prostate cancer. Consider a patient with a rising PSA and negative conventional scans (ie, CT and bone imaging). Disease previously regarded as biochemically recurrent (an increase in PSA only) may be upstaged to metastatic cancer when PSMA imaging detects metastases.

ADT alone, with either a luteinizing hormone-releasing hormone (LHRH) agonist or antagonist, has long been the standard of care for men with mHSPC. Unfortunately, men with mHSPC who are treated with ADT alone almost invariably develop hormone-resistant disease (prostate cancer that no longer responds to ADT). Over the last decade, additional therapies have been added to the regimen to delay the otherwise inevitable onset of hormone resistance.

Currently, four medications that were previously only approved for the treatment of hormone-resistant prostate cancer have become the standard of care for men with mHSPC. Docetaxel (Taxotere) and the oral antiandrogens abiraterone, enzalutamide, and apalutamide have been shown in large trials to prolong overall survival when used at the time of diagnosis (i.e., when the patient is still sensitive to ADT) rather than waiting for the disease to progress to metastatic hormone-resistant prostate cancer. Dr. Marshall defined "doublet therapy" as a regimen of ADT plus one of these four approved therapeutic medicines for the treatment of men with mHSPC.

Triplet vs. Doublet Therapy

Since doublet therapy provides a clinical benefit, the obvious next question is whether "triplet therapy" provides an even bigger benefit. Dr. Marshall was careful to moderate expectations when asked this question. She referenced the PEACE-1 trial and the ARASENS trial as two recent randomized trials looking into the benefits of triplet therapy. The main question with triplet therapy, according to Dr. Marshall, is whether "combining chemotherapy with an oral new hormonal drug enhances outcomes relative to chemotherapy and ADT." PEACE-1 investigated the addition of abiraterone/prednisone, whilst ARASENS investigated the addition of darolutamide to the backbone of ADT plus docetaxel.

Dr. Marshall did, however, note certain differences between the patient populations of these trials.

PEACE-1 enrolled patients with de novo metastatic disease (ie, disease that was already metastatic at the time of the prostate cancer diagnosis). According to the findings, men who received abiraterone in addition to ADT plus docetaxel, with or without radiotherapy, had better radiographic progression-free survival and overall survival.

The ARASENS trial, on the other hand, compared ADT plus docetaxel combined with either the antiandrogen darolutamide or placebo. Most, but not all, patients had metastatic disease at the time of diagnosis. ARASENS also showed that triplet therapy improved overall survival compared with ADT plus docetaxel: the risk of death was 32% lower in the darolutamide group than in the placebo group.

Neither trial compared ADT plus docetaxel plus an oral antiandrogen with a comparator arm of ADT plus an oral antiandrogen alone. As a result, according to Dr. Marshall, we cannot say with certainty that triplet therapy is superior to doublet therapy with ADT plus an oral antiandrogen.

According to Dr. Marshall, one of the primary concerns about triplet therapy is the risk of toxicity. Members of the medical community have raised concerns about additive toxicity when three medications are taken at the same time. The most serious concern is that docetaxel may worsen fatigue and neuropathy. However, Dr. Marshall noted that the trials showed "toxicities that would be predicted from the particular drugs."

As previously stated, the four standard-of-care medicines used alongside ADT were previously recommended only for hormone-resistant prostate cancer. More treatments are becoming available in clinical practice, and the standard treatments for hormone-resistant disease are being used earlier in the course of prostate cancer. Dr. Marshall stressed that, as a result, deciding on the appropriate sequence of treatments will become increasingly difficult. Furthermore, novel hormonal drugs and chemotherapy are increasingly used in the hormone-sensitive setting. This has made the choice of which treatments to use next, after hormone resistance develops, less clear.

Dr. Marshall described the CARD trial as providing data to support the chemotherapy drug cabazitaxel for patients previously treated with docetaxel whose disease progressed on abiraterone or enzalutamide. The CARD results showed a significant improvement in both progression-free survival and overall survival.

Dr. Marshall also pointed out that the medical community will need to decide when to use newer therapeutic agents for the men who may benefit from them. These agents include lutetium-177 PSMA radioligand therapy, immunotherapy, and targeted agents such as PARP (poly adenosine diphosphate-ribose polymerase) inhibitors.

The Future of mHSPC Treatment

GU Oncology also asked Dr. Marshall what the future of mHSPC treatment might look like. She believes it will involve more targeted medications used at earlier stages of the disease. She also expects that, as drug research and clinical trials progress, the medical community will become better at determining who needs the most intensive treatment. It will also be better able to identify who can be treated effectively without the additional morbidity of treatment intensification.

Finally, Dr. Marshall emphasized that treatment for men with mHSPC must be tailored to a variety of patient- and disease-specific characteristics. The current standard of care for men with mHSPC has advanced well beyond the old norm of ADT alone. The paradigm has shifted because adding chemotherapy or novel antiandrogens to ADT has produced considerable survival improvements over ADT alone. Some patients may not benefit from doublet therapy because they are too frail to tolerate a second agent or have other substantial comorbidities. For many patients, however, there are now significantly more treatment options to consider when deciding how to approach mHSPC.

The original conversation was published online at: https://dialysisinc.com/news/editors-talk-triplet-versus-doublet-therapy-in-metastatic-hormone-sensitive-prostate-cancer/

If a stock has consistently outperformed the index over the last two to three months, it is quite likely to continue outperforming, and such stocks are worth buying on dips. The built-in Relative Outperformance and Relative Underperformance screeners on the intradayscreener website can help you quickly identify such stocks.
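How the intradayscreener screeners work internally is not described here. As a hypothetical sketch of the idea, the code below compares each stock's trailing return with the index's over two windows. The windows are 40 and 60 trading sessions, an assumed stand-in for two and three months. A stock is flagged only if it beats the index over both windows, or lags it over both. Tickers and prices are invented.

```python
def trailing_return(prices, days):
    """Simple return over the last `days` sessions."""
    if len(prices) <= days:
        raise ValueError("not enough price history")
    return prices[-1] / prices[-1 - days] - 1.0


def relative_scan(stocks, index, windows=(40, 60)):
    """Split stocks into consistent out- and under-performers versus the index.

    A stock counts as an outperformer only if its return beats the index's
    over every window, and as an underperformer only if it lags over every
    window; mixed results are left out of both lists.
    """
    outperformers, underperformers = [], []
    for ticker, prices in stocks.items():
        excess = [trailing_return(prices, w) - trailing_return(index, w) for w in windows]
        if all(e > 0 for e in excess):
            outperformers.append(ticker)
        elif all(e < 0 for e in excess):
            underperformers.append(ticker)
    return sorted(outperformers), sorted(underperformers)


# Hypothetical data: 61 daily closes; the index gains 0.1% a day.
index = [100 * 1.001 ** d for d in range(61)]
stocks = {
    "AAA": [50 * 1.003 ** d for d in range(61)],  # gains 0.3% a day: beats both windows
    "BBB": [80 * 0.999 ** d for d in range(61)],  # loses 0.1% a day: lags both windows
    # Rallies for 20 days, then drifts lower: ahead over 60 days, behind over 40.
    "CCC": [20 * 1.01 ** min(d, 20) * 0.999 ** max(d - 20, 0) for d in range(61)],
}
result = relative_scan(stocks, index)
print(result)  # prints: (['AAA'], ['BBB'])
```

Requiring agreement across both windows is one simple way to express "consistently". A stock like CCC, whose early rally masks a recent slide, is then excluded from both lists.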

In the economic sphere too, the ability to hold a hammer or press a button is becoming less valuable than before. In the past, there were many things only humans could do. But now robots and computers are catching up, and may soon outperform humans in most tasks. True, computers function very differently from humans, and it seems unlikely that computers will become humanlike any time soon. In particular, it doesn’t seem that computers are about to gain consciousness, and to start experiencing emotions and sensations. Over the last decades there has been an immense advance in computer intelligence, but there has been exactly zero advance in computer consciousness. As far as we know, computers in 2016 are no more conscious than their prototypes in the 1950s. However, we are on the brink of a momentous revolution. Humans are in danger of losing their value, because intelligence is decoupling from consciousness.

Until today, high intelligence always went hand in hand with a developed consciousness. Only conscious beings could perform tasks that required a lot of intelligence, such as playing chess, driving cars, diagnosing diseases or identifying terrorists. However, we are now developing new types of non-conscious intelligence that can perform such tasks far better than humans. For all these tasks are based on pattern recognition, and non-conscious algorithms may soon excel human consciousness in recognising patterns. This raises a novel question: which of the two is really important, intelligence or consciousness? As long as they went hand in hand, debating their relative value was just a pastime for philosophers. But in the twenty-first century, this is becoming an urgent political and economic issue. And it is sobering to realise that, at least for armies and corporations, the answer is straightforward: intelligence is mandatory but consciousness is optional.

Armies and corporations cannot function without intelligent agents, but they don’t need consciousness and subjective experiences. The conscious experiences of a flesh-and-blood taxi driver are infinitely richer than those of a self-driving car, which feels absolutely nothing. The taxi driver can enjoy music while navigating the busy streets of Seoul. His mind may expand in awe as he looks up at the stars and contemplates the mysteries of the universe. His eyes may fill with tears of joy when he sees his baby girl taking her very first step. But the system doesn’t need all that from a taxi driver. All it really wants is to bring passengers from point A to point B as quickly, safely and cheaply as possible. And the autonomous car will soon be able to do that far better than a human driver, even though it cannot enjoy music or be awestruck by the magic of existence.

Indeed, if we forbid humans to drive taxis and cars altogether, and give computer algorithms monopoly over traffic, we can then connect all vehicles to a single network, and thereby make car accidents virtually impossible. In August 2015, one of Google’s experimental self-driving cars had an accident. As it approached a crossing and detected pedestrians wishing to cross, it applied its brakes. A moment later it was hit from behind by a sedan whose careless human driver was perhaps contemplating the mysteries of the universe instead of watching the road. This could not have happened if both vehicles were steered by interlinked computers. The controlling algorithm would have known the position and intentions of every vehicle on the road, and would not have allowed two of its marionettes to collide. Such a system will save lots of time, money and human lives – but it will also do away with the human experience of driving a car and with tens of millions of human jobs.

Some economists predict that sooner or later, unenhanced humans will be completely useless. While robots and 3D printers replace workers in manual jobs such as manufacturing shirts, highly intelligent algorithms will do the same to white-collar occupations. Bank clerks and travel agents, who a short time ago were completely secure from automation, have become endangered species. How many travel agents do we need when we can use our smartphones to buy plane tickets from an algorithm?

Stock-exchange traders are also in danger. Most trade today is already being managed by computer algorithms, which can process in a second more data than a human can in a year, and that can react to the data much faster than a human can blink. On 23 April 2013, Syrian hackers broke into Associated Press’s official Twitter account. At 13:07 they tweeted that the White House had been attacked and President Obama was hurt. Trade algorithms that constantly monitor newsfeeds reacted in no time, and began selling stocks like mad. The Dow Jones went into free fall, and within sixty seconds lost 150 points, equivalent to a loss of $136 billion! At 13:10 Associated Press clarified that the tweet was a hoax. The algorithms reversed gear, and by 13:13 the Dow Jones had recuperated almost all the losses.

Three years previously, on 6 May 2010, the New York Stock Exchange underwent an even sharper shock. Within five minutes – from 14:42 to 14:47 – the Dow Jones dropped by 1,000 points, wiping out $1 trillion. It then bounced back, returning to its pre-crash level in a little over three minutes. That’s what happens when super-fast computer programs are in charge of our money. Experts have been trying ever since to understand what happened in this so-called ‘Flash Crash’. We know algorithms were to blame, but we are still not sure exactly what went wrong. Some traders in the USA have already filed lawsuits against algorithmic trading, arguing that it unfairly discriminates against human beings, who simply cannot react fast enough to compete. Quibbling over whether this really constitutes a violation of rights might provide lots of work and lots of fees for lawyers.

And these lawyers won’t necessarily be human. Movies and TV series give the impression that lawyers spend their days in court shouting ‘Objection!’ and making impassioned speeches. Yet most run-of-the-mill lawyers spend their time going over endless files, looking for precedents, loopholes and tiny pieces of potentially relevant evidence. Some are busy trying to figure out what happened on the night John Doe got killed, or formulating a gargantuan business contract that will protect their client against every conceivable eventuality. What will be the fate of all these lawyers once sophisticated search algorithms can locate more precedents in a day than a human can in a lifetime, and once brain scans can reveal lies and deceptions at the press of a button? Even highly experienced lawyers and detectives cannot easily spot deceptions merely by observing people’s facial expressions and tone of voice. However, lying involves different brain areas to those used when we tell the truth. We’re not there yet, but it is conceivable that in the not too distant future fMRI scanners could function as almost infallible truth machines. Where will that leave millions of lawyers, judges, cops and detectives? They might need to go back to school and learn a new profession.

When they get in the classroom, however, they may well discover that the algorithms have got there first. Companies such as Mindojo are developing interactive algorithms that not only teach me maths, physics and history, but also simultaneously study me and get to know exactly who I am. Digital teachers will closely monitor every answer I give, and how long it took me to give it. Over time, they will discern my unique weaknesses as well as my strengths. They will identify what gets me excited, and what makes my eyelids droop. They could teach me thermodynamics or geometry in a way that suits my personality type, even if that particular way doesn’t suit 99 per cent of the other pupils. And these digital teachers will never lose their patience, never shout at me, and never go on strike. It is unclear, however, why on earth I would need to know thermodynamics or geometry in a world containing such intelligent computer programs.

Even doctors are fair game for the algorithms. The first and foremost task of most doctors is to diagnose diseases correctly, and then suggest the best available treatment. If I arrive at the clinic complaining about fever and diarrhoea, I might be suffering from food poisoning. Then again, the same symptoms might result from a stomach virus, cholera, dysentery, malaria, cancer or some unknown new disease. My doctor has only five minutes to make a correct diagnosis, because this is what my health insurance pays for. This allows for no more than a few questions and perhaps a quick medical examination. The doctor then cross-references this meagre information with my medical history, and with the vast world of human maladies. Alas, not even the most diligent doctor can remember all my previous ailments and check-ups. Similarly, no doctor can be familiar with every illness and drug, or read every new article published in every medical journal. To top it all, the doctor is sometimes tired or hungry or perhaps even sick, which affects her judgement. No wonder that doctors often err in their diagnoses, or recommend a less-than-optimal treatment.

Now consider IBM’s famous Watson – an artificial intelligence system that won the Jeopardy! television game show in 2011, beating human former champions. Watson is currently being groomed to do more serious work, particularly in diagnosing diseases. An AI such as Watson has enormous potential advantages over human doctors. Firstly, an AI can hold in its databanks information about every known illness and medicine in history. It can then update these databanks every day, not only with the findings of new research, but also with medical statistics gathered from every clinic and hospital in the world.

Secondly, Watson can be intimately familiar not only with my entire genome and my day-to-day medical history, but also with the genomes and medical histories of my parents, siblings, cousins, neighbours and friends. Watson will know instantly whether I visited a tropical country recently, whether I have recurring stomach infections, whether there have been cases of intestinal cancer in my family or whether people all over town are complaining this morning about diarrhoea.

Thirdly, Watson will never be tired, hungry or sick, and will have all the time in the world for me. I could sit comfortably on my sofa at home and answer hundreds of questions, telling Watson exactly how I feel. This is good news for most patients (except perhaps hypochondriacs). But if you enter medical school today in the expectation of still being a family doctor in twenty years, maybe you should think again. With such a Watson around, there is not much need for Sherlocks.

This threat hovers over the heads not only of general practitioners, but also of experts. Indeed, it might prove easier to replace doctors specialising in a relatively narrow field such as cancer diagnosis. For example, in a recent experiment a computer algorithm diagnosed correctly 90 per cent of lung cancer cases presented to it, while human doctors had a success rate of only 50 per cent. In fact, the future is already here. CT scans and mammography tests are routinely checked by specialised algorithms, which provide doctors with a second opinion, and sometimes detect tumours that the doctors missed.

A host of tough technical problems still prevent Watson and its ilk from replacing most doctors tomorrow morning. Yet these technical problems – however difficult – need only be solved once. The training of a human doctor is a complicated and expensive process that lasts years. When the process is complete, after ten years of studies and internships, all you get is one doctor. If you want two doctors, you have to repeat the entire process from scratch. In contrast, if and when you solve the technical problems hampering Watson, you will get not one, but an infinite number of doctors, available 24/7 in every corner of the world. So even if it costs $100 billion to make it work, in the long run it would be much cheaper than training human doctors.

And what’s true of doctors is doubly true of pharmacists. In 2011 a pharmacy staffed by a single robot opened in San Francisco. When a human comes to the pharmacy, within seconds the robot receives all of the customer’s prescriptions, as well as detailed information about other medicines taken by them, and their suspected allergies. The robot makes sure the new prescriptions don’t combine adversely with any other medicine or allergy, and then provides the customer with the required drug. In its first year of operation the robotic pharmacist provided 2 million prescriptions, without making a single mistake. On average, flesh-and-blood pharmacists get 1.7 per cent of prescriptions wrong. In the United States alone this amounts to more than 50 million prescription errors every year!

Some people argue that even if an algorithm could outperform doctors and pharmacists in the technical aspects of their professions, it could never replace their human touch. If your CT indicates you have cancer, would you like to receive the news from a caring and empathetic human doctor, or from a machine? Well, how about receiving the news from a caring and empathetic machine that tailors its words to your personality type? Remember that organisms are algorithms, and Watson could detect your emotional state with the same accuracy that it detects your tumours.

This idea has already been implemented by some customer-services departments, such as those pioneered by the Chicago-based Mattersight Corporation. Mattersight publishes its wares with the following advert: ‘Have you ever spoken with someone and felt as though you just clicked? The magical feeling you get is the result of a personality connection. Mattersight creates that feeling every day, in call centers around the world.’ When you call customer services with a request or complaint, it usually takes a few seconds to route your call to a representative. In Mattersight systems, your call is routed by a clever algorithm. You first state the reason for your call. The algorithm listens to your request, analyses the words you have chosen and your tone of voice, and deduces not only your present emotional state but also your personality type – whether you are introverted, extroverted, rebellious or dependent. Based on this information, the algorithm links you to the representative that best matches your mood and personality. The algorithm knows whether you need an empathetic person to patiently listen to your complaints, or you prefer a no-nonsense rational type who will give you the quickest technical solution. A good match means both happier customers and less time and money wasted by the customer-services department.

The most important question in twenty-first-century economics may well be what to do with all the superfluous people. What will conscious humans do, once we have highly intelligent non-conscious algorithms that can do almost everything better?

Throughout history the job market was divided into three main sectors: agriculture, industry and services. Until about 1800, the vast majority of people worked in agriculture, and only a small minority worked in industry and services. During the Industrial Revolution people in developed countries left the fields and herds. Most began working in industry, but growing numbers also took up jobs in the services sector. In recent decades developed countries underwent another revolution, as industrial jobs vanished while the services sector expanded. In 2010 only 2 per cent of Americans worked in agriculture, 20 per cent worked in industry, and 78 per cent worked as teachers, doctors, webpage designers and so forth. When mindless algorithms are able to teach, diagnose and design better than humans, what will we do?

This is not an entirely new question. Ever since the Industrial Revolution erupted, people feared that mechanisation might cause mass unemployment. This never happened, because as old professions became obsolete, new professions evolved, and there was always something humans could do better than machines. Yet this is not a law of nature, and nothing guarantees it will continue to be like that in the future. Humans have two basic types of abilities: physical abilities and cognitive abilities. As long as machines competed with us merely in physical abilities, you could always find cognitive tasks that humans do better. So machines took over purely manual jobs, while humans focused on jobs requiring at least some cognitive skills. Yet what will happen once algorithms outperform us in remembering, analysing and recognising patterns?

The idea that humans will always have a unique ability beyond the reach of non-conscious algorithms is just wishful thinking. True, at present there are numerous things that organic algorithms do better than non-organic ones, and experts have repeatedly declared that something will ‘for ever’ remain beyond the reach of non-organic algorithms. But it turns out that ‘for ever’ often means no more than a decade or two. Until a short time ago, facial recognition was a favourite example of something which even babies accomplish easily but which escaped even the most powerful computers on earth. Today facial-recognition programs are able to recognise people far more efficiently and quickly than humans can. Police forces and intelligence services now use such programs to scan countless hours of video footage from surveillance cameras, tracking down suspects and criminals.

In the 1980s when people discussed the unique nature of humanity, they habitually used chess as primary proof of human superiority. They believed that computers would never beat humans at chess. On 10 February 1996, IBM’s Deep Blue defeated world chess champion Garry Kasparov in a game, and in May 1997 it won a full match against him, laying to rest that particular claim for human pre-eminence.

Deep Blue was given a head start by its creators, who preprogrammed it not only with the basic rules of chess, but also with detailed instructions regarding chess strategies. A new generation of AI uses machine learning to do even more remarkable and elegant things. In February 2015 a program developed by Google DeepMind learned by itself how to play forty-nine classic Atari games. One of the developers, Dr Demis Hassabis, explained that ‘the only information we gave the system was the raw pixels on the screen and the idea that it had to get a high score. And everything else it had to figure out by itself.’ The program managed to learn the rules of all the games it was presented with, from Pac-Man and Space Invaders to car racing and tennis games. It then played most of them as well as or better than humans, sometimes coming up with strategies that never occur to human players.

Computer algorithms have recently proven their worth in ball games, too. For many decades, baseball teams used the wisdom, experience and gut instincts of professional scouts and managers to pick players. The best players fetched millions of dollars, and naturally enough the rich teams got the cream of the market, whereas poorer teams had to settle for the scraps. In 2002 Billy Beane, the manager of the low-budget Oakland Athletics, decided to beat the system. He relied on an arcane computer algorithm developed by economists and computer geeks to create a winning team from players that human scouts overlooked or undervalued. The old-timers were incensed by Beane’s algorithm transgressing into the hallowed halls of baseball. They said that picking baseball players is an art, and that only humans with an intimate and long-standing experience of the game can master it. A computer program could never do it, because it could never decipher the secrets and the spirit of baseball.

They soon had to eat their baseball caps. Beane’s shoestring-budget algorithmic team ($44 million) not only held its own against baseball giants such as the New York Yankees ($125 million), but became the first team ever in American League baseball to win twenty consecutive games. Not that Beane and Oakland could enjoy their success for long. Soon enough, many other baseball teams adopted the same algorithmic approach, and since the Yankees and Red Sox could pay far more for both baseball players and computer software, low-budget teams such as the Oakland Athletics now had an even smaller chance of beating the system than before.

In 2004 Professor Frank Levy from MIT and Professor Richard Murnane from Harvard published a thorough study of the job market, listing those professions most likely to undergo automation. Truck drivers were given as an example of a job that could not possibly be automated in the foreseeable future. It is hard to imagine, they wrote, that algorithms could safely drive trucks on a busy road. A mere ten years later, Google and Tesla not only imagine this, but are actually making it happen.

In fact, as time goes by, it becomes easier and easier to replace humans with computer algorithms, not merely because the algorithms are getting smarter, but also because humans are professionalising. Ancient hunter-gatherers mastered a very wide variety of skills in order to survive, which is why it would be immensely difficult to design a robotic hunter-gatherer. Such a robot would have to know how to prepare spear points from flint stones, how to find edible mushrooms in a forest, how to use medicinal herbs to bandage a wound, how to track down a mammoth and how to coordinate a charge with a dozen other hunters. However, over the last few thousand years we humans have been specialising. A taxi driver or a cardiologist specialises in a much narrower niche than a hunter-gatherer, which makes it easier to replace them with AI.

Even the managers in charge of all these activities can be replaced. Thanks to its powerful algorithms, Uber can manage millions of taxi drivers with only a handful of humans. Most of the commands are given by the algorithms without any need of human supervision. In May 2014 Deep Knowledge Ventures – a Hong Kong venture-capital firm specialising in regenerative medicine – broke new ground by appointing an algorithm called VITAL to its board. VITAL makes investment recommendations by analysing huge amounts of data on the financial situation, clinical trials and intellectual property of prospective companies. Like the other five board members, the algorithm gets to vote on whether the firm makes an investment in a specific company or not.

Examining VITAL’s record so far, it seems that it has already picked up one managerial vice: nepotism. It has recommended investing in companies that grant algorithms more authority. With VITAL’s blessing, Deep Knowledge Ventures has recently invested in Silico Medicine, which develops computer-assisted methods for drug research, and in Pathway Pharmaceuticals, which employs a platform called OncoFinder to select and rate personalised cancer therapies.

As algorithms push humans out of the job market, wealth might become concentrated in the hands of the tiny elite that owns the all-powerful algorithms, creating unprecedented social inequality. Alternatively, the algorithms might not only manage businesses, but actually come to own them. At present, human law already recognises intersubjective entities like corporations and nations as ‘legal persons’. Though Toyota or Argentina has neither a body nor a mind, they are subject to international laws, they can own land and money, and they can sue and be sued in court. We might soon grant similar status to algorithms. An algorithm could then own a venture-capital fund without having to obey the wishes of any human master.

If the algorithm makes the right decisions, it could accumulate a fortune, which it could then invest as it sees fit, perhaps buying your house and becoming your landlord. If you infringe on the algorithm’s legal rights – say, by not paying rent – the algorithm could hire lawyers and sue you in court. If such algorithms consistently outperform human fund managers, we might end up with an algorithmic upper class owning most of our planet. This may sound impossible, but before dismissing the idea, remember that most of our planet is already legally owned by non-human inter-subjective entities, namely nations and corporations. Indeed, 5,000 years ago much of Sumer was owned by imaginary gods such as Enki and Inanna. If gods can possess land and employ people, why not algorithms?

So what will people do? Art is often said to provide us with our ultimate (and uniquely human) sanctuary. In a world where computers replace doctors, drivers, teachers and even landlords, everyone would become an artist. Yet it is hard to see why artistic creation will be safe from the algorithms. Why are we so sure computers will be unable to better us in the composition of music? According to the life sciences, art is not the product of some enchanted spirit or metaphysical soul, but rather of organic algorithms recognising mathematical patterns. If so, there is no reason why non-organic algorithms couldn’t master it.

David Cope is a musicology professor at the University of California in Santa Cruz. He is also one of the more controversial figures in the world of classical music. Cope has written programs that compose concertos, chorales, symphonies and operas. His first creation was named EMI (Experiments in Musical Intelligence), which specialised in imitating the style of Johann Sebastian Bach. It took seven years to create the program, but once the work was done, EMI composed 5,000 chorales à la Bach in a single day. Cope arranged a performance of a few select chorales in a music festival at Santa Cruz. Enthusiastic members of the audience praised the wonderful performance, and explained excitedly how the music touched their innermost being. They didn’t know it was composed by EMI rather than Bach, and when the truth was revealed, some reacted with glum silence, while others shouted in anger.

EMI continued to improve, and learned to imitate Beethoven, Chopin, Rachmaninov and Stravinsky. Cope got EMI a contract, and its first album – Classical Music Composed by Computer – sold surprisingly well. Publicity brought increasing hostility from classical-music buffs. Professor Steve Larson from the University of Oregon sent Cope a challenge for a musical showdown. Larson suggested that professional pianists play three pieces one after the other: one by Bach, one by EMI, and one by Larson himself. The audience would then be asked to vote who composed which piece. Larson was convinced people would easily tell the difference between soulful human compositions, and the lifeless artefact of a machine. Cope accepted the challenge. On the appointed date, hundreds of lecturers, students and music fans assembled in the University of Oregon’s concert hall. At the end of the performance, a vote was taken. The result? The audience thought that EMI’s piece was genuine Bach, that Bach’s piece was composed by Larson, and that Larson’s piece was produced by a computer.

Critics continued to argue that EMI’s music is technically excellent, but that it lacks something. It is too accurate. It has no depth. It has no soul. Yet when people heard EMI’s compositions without being informed of their provenance, they frequently praised them precisely for their soulfulness and emotional resonance.

Following EMI’s successes, Cope created newer and even more sophisticated programs. His crowning achievement was Annie. Whereas EMI composed music according to predetermined rules, Annie is based on machine learning. Its musical style constantly changes and develops in reaction to new inputs from the outside world. Cope has no idea what Annie is going to compose next. Indeed, Annie does not restrict itself to music composition but also explores other art forms such as haiku poetry. In 2011 Cope published Comes the Fiery Night: 2,000 Haiku by Man and Machine. Of the 2,000 haikus in the book, some are written by Annie, and the rest by organic poets. The book does not disclose which are which. If you think you can tell the difference between human creativity and machine output, you are welcome to test your claim.

In the nineteenth century the Industrial Revolution created a huge new class of urban proletarians; in the twenty-first century we might witness the creation of a new massive class: people devoid of any economic, political or even artistic value, who contribute nothing to the prosperity, power and glory of society.

In September 2013 two Oxford researchers, Carl Benedikt Frey and Michael A. Osborne, published ‘The Future of Employment’, in which they surveyed the likelihood of different professions being taken over by computer algorithms within the next twenty years. The algorithm developed by Frey and Osborne to do the calculations estimated that 47 per cent of US jobs are at high risk. For example, there is a 99 per cent probability that by 2033 human telemarketers and insurance underwriters will lose their jobs to algorithms. There is a 98 per cent probability that the same will happen to sports referees, 97 per cent that it will happen to cashiers and 96 per cent to chefs. Waiters – 94 per cent. Paralegal assistants – 94 per cent. Tour guides – 91 per cent. Bakers – 89 per cent. Bus drivers – 89 per cent. Construction labourers – 88 per cent. Veterinary assistants – 86 per cent. Security guards – 84 per cent. Sailors – 83 per cent. Bartenders – 77 per cent. Archivists – 76 per cent. Carpenters – 72 per cent. Lifeguards – 67 per cent. And so forth. There are of course some safe jobs. The likelihood that computer algorithms will displace archaeologists by 2033 is only 0.7 per cent, because their job requires highly sophisticated types of pattern recognition, and doesn’t produce huge profits. Hence it is improbable that corporations or government will make the necessary investment to automate archaeology within the next twenty years.

Of course, by 2033 many new professions are likely to appear, for example, virtual-world designers. But such professions will probably require much more creativity and flexibility than your run-of-the-mill job, and it is unclear whether forty-year-old cashiers or insurance agents will be able to reinvent themselves as virtual-world designers (just try to imagine a virtual world created by an insurance agent!). And even if they do so, the pace of progress is such that within another decade they might have to reinvent themselves yet again. After all, algorithms might well outperform humans in designing virtual worlds too. The crucial problem isn’t creating new jobs. The crucial problem is creating new jobs that humans perform better than algorithms.

- Yuval Noah Harari, 'The Great Decoupling', in Homo Deus: A Brief History of Tomorrow


Product structure of LCD (1)

https://www.topfoison.net/lcd/product-structure-of-lcd-1/

1. Classification by display technology: TFT-LCD / mono LCD (STN, FSTN) / OLED

LCD (Liquid Crystal Display)

LCD is short for Liquid Crystal Display. An LCD places liquid crystal between two parallel sheets of glass, with many fine vertical and horizontal wires between them. The liquid crystal cell sits between the two parallel glass substrates: thin-film transistors (TFTs) are formed on the lower substrate glass and a color filter on the upper substrate glass. Changes in the signal voltage applied through the TFTs control the direction in which the liquid crystal molecules rotate, and this determines whether polarized light passes out of each pixel, which is how the display is controlled. Colors are created by passing white light through the color filter: the light is split into the primary colors red, green and blue, and the light transmittance of each point is controlled by the applied voltage, which sets the color of the pixel.
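To make the color mechanism above concrete, here is a minimal Python sketch of a single pixel under an idealized model. The names `Subpixel` and `pixel_color` are hypothetical, and the model assumes a white backlight of equal intensity in every channel, ideal color filters that pass only their own primary, and output that is linear in transmittance. Real panels have nonlinear voltage-to-transmittance curves and gamma correction, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class Subpixel:
    """One color-filtered subpixel (hypothetical model).

    transmittance is the fraction of backlight the liquid-crystal cell lets
    through: 0.0 means fully closed (dark), 1.0 means fully open.
    """
    channel: str  # "R", "G" or "B": which color filter sits over this cell
    transmittance: float


def pixel_color(subpixels, backlight=255.0):
    """Return the (R, G, B) output of one pixel.

    Idealized assumptions: equal white backlight in every channel, filters
    that pass only their own primary, and output linear in transmittance.
    """
    out = {"R": 0.0, "G": 0.0, "B": 0.0}
    for sp in subpixels:
        if sp.channel not in out:
            raise ValueError(f"unknown channel {sp.channel!r}")
        if not 0.0 <= sp.transmittance <= 1.0:
            raise ValueError("transmittance must be in [0, 1]")
        # Light leaving this subpixel = backlight filtered to one primary,
        # scaled by how far the liquid crystal lets it through.
        out[sp.channel] = backlight * sp.transmittance
    return tuple(round(out[c]) for c in ("R", "G", "B"))


# Red and green cells fully open, blue cell closed.
print(pixel_color([Subpixel("R", 1.0), Subpixel("G", 1.0), Subpixel("B", 0.0)]))
# (255, 255, 0)
```

The primaries mix additively: opening the red and green cells fully while closing the blue one gives yellow, and closing all three gives black.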

TFT-LCD

TFT stands for thin-film transistor. In a TFT-LCD, each pixel has its own integrated thin-film transistor behind it to drive it, which allows screen information to be shown at high speed, with high brightness and high contrast; it is one of the best color LCD display technologies. Compared with STN, TFT offers excellent color saturation, better color reproduction and higher contrast, and remains clearly readable in sunlight. Its downsides are higher power consumption and higher cost.
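Giving every pixel its own transistor is what makes the panel "active-matrix". The Python sketch below models an idealized frame write: the horizontal (gate) lines select one row at a time, the vertical (data) lines drive that row's values through its TFTs, and each pixel keeps its value while the remaining rows are written. The function name `scan_frame` is hypothetical, and the model deliberately ignores timing and charge leakage.

```python
def scan_frame(frame):
    """Write one frame to an idealized active-matrix panel, row by row.

    frame: list of rows, each a list of target pixel values.
    Returns a snapshot of the whole panel after each row is written, so you
    can see that already-written rows hold their values.
    """
    rows = len(frame)
    cols = len(frame[0]) if rows else 0
    panel = [[0.0] * cols for _ in range(rows)]  # every pixel starts dark
    snapshots = []
    for r, row_values in enumerate(frame):
        if len(row_values) != cols:
            raise ValueError("all rows must have the same length")
        # Gate line r switches on the TFTs of row r only; the data lines
        # then drive each column's value into that row's pixels.
        for c, value in enumerate(row_values):
            panel[r][c] = value
        # Gate line r switches off; row r's pixels keep their values.
        snapshots.append([row[:] for row in panel])
    return snapshots


for step in scan_frame([[1.0, 2.0], [3.0, 4.0]]):
    print(step)
# [[1.0, 2.0], [0.0, 0.0]]
# [[1.0, 2.0], [3.0, 4.0]]
```

The first snapshot shows row 0 already holding its values before row 1 is addressed, which is the property the per-pixel transistor provides.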

Main features (advantages and disadvantages)

(1) Good usage characteristics: low operating and driving voltages, which make it safe and reliable to use; flat, thin and light, saving a great deal of raw material and space; low power consumption, with a reflective TFT-LCD using only about 1% of the power of a CRT, saving a lot of energy. TFT-LCD products also come in a wide range of specifications, size series and varieties, and are convenient and flexible to use, easy to maintain, update and upgrade, and long-lived. Display quality ranges from the simplest monochrome character graphics to video displays of various specifications with high resolution, high color fidelity, high brightness, high contrast and fast response. Display modes include direct-view, projection, transmissive and reflective.

(2) Good environmental characteristics: no radiation, no flicker, and no harm to the user's health.