#run script inside python shell


Text

Boost Your Python Scripts with Command Line Argument Parsing

Learn how to efficiently handle command line arguments in Python with Tutorials24x7. This guide covers running Python scripts with arguments, optimizing execution, and enhancing script functionality. Master command-line argument parsing to automate tasks and streamline your coding workflow. Boost your Python skills with expert tips and practical examples! 🚀
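As a rough illustration of what command line argument parsing looks like, here is a minimal sketch using the standard library's argparse module. The argument names (`path`, `--count`, `--verbose`) are made up for this example and do not come from the linked guide.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one positional argument and two options."""
    parser = argparse.ArgumentParser(description="Show the first lines of a file.")
    # Hypothetical arguments, chosen only to illustrate the three common kinds.
    parser.add_argument("path", help="file to read")
    parser.add_argument("-n", "--count", type=int, default=1, help="number of lines to show")
    parser.add_argument("-v", "--verbose", action="store_true", help="print extra detail")
    return parser


# An explicit list is parsed here so the example behaves the same everywhere;
# a real script would call parse_args() with no arguments to read sys.argv.
args = build_parser().parse_args(["notes.txt", "--count", "3", "-v"])
print(args.path, args.count, args.verbose)
```

Saved as a script and switched to `parse_args()`, this would be run from a shell as something like `python script.py notes.txt --count 3 -v`.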


Text

Matrix Breakout: 2 Morpheus

Hello everyone, it's been a while. :)

Haven't been posting much recently as I haven't really done anything noteworthy- I've just been working on methodologies for different types of penetration tests, nothing interesting enough to write about!

However, I have my methodologies largely covered now and so I'll have the time to do things again. There are a few things I want to look into, particularly binary exploit development and OS level security vulnerabilities, but as a bit of a breather I decided to root Morpheus from VulnHub.

It is rated as medium to hard, however I don't feel there's any real difficulty to it at all.

Initial Foothold

Run the standard nmap scans and 3 open ports will be discovered:

Port 22: SSH

Port 80: HTTP

Port 31337: Elite

I began with the web server listening at port 80.

The landing page is the only page offered- directory enumeration isn't possible as requests to pages just time out. However, there is the hint to "Follow the White Rabbit", along with an image of a rabbit on the page. Inspecting the image of the rabbit led to a hint in the image name- p0rt_31337.png. Would never have rooted this machine if I'd known how unrealistic and CTF-like it was. *sigh*

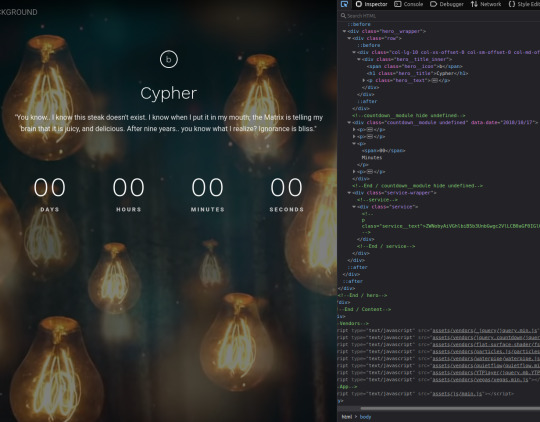

The above is the landing page of the web server listening at port 31337, along with the page's source code. There's a commented out paragraph with a base64 encoded string inside.

The string as it is cannot be decoded, however the part beyond the plus sign can be- it decodes to 'Cypher.matrix'.
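For anyone wanting to reproduce the decoding step without an online tool, here is a minimal Python sketch. The actual string from the page source only survives in the screenshot, so the `blob` below is a made-up stand-in with the same shape: something undecodable, a plus sign, then valid base64.

```python
import base64
import binascii

# Hypothetical stand-in for the commented-out string: junk, a plus sign,
# then the base64 encoding of the file name mentioned in the write-up.
tail = base64.b64encode(b"Cypher.matrix").decode("ascii")
blob = "n0t*base64+" + tail

# Decoding the whole string fails because of the non-base64 characters.
try:
    base64.b64decode(blob, validate=True)
    whole_decodes = True
except binascii.Error:
    whole_decodes = False

# Decoding only the part after the first plus sign succeeds.
after_plus = blob.split("+", 1)[1]
decoded = base64.b64decode(after_plus, validate=True).decode("ascii")
print(whole_decodes, decoded)
```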

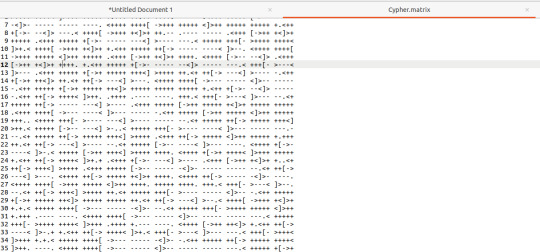

This is a file on the web server at port 31337 and visiting it triggers a download. Open the file in a text editor and see this voodoo:

Upon seeing the ciphertext, I was immediately reminded of JSFuck. However, it seemed to include additional characters. It took me a little while of looking around before I came across this cipher identifier.

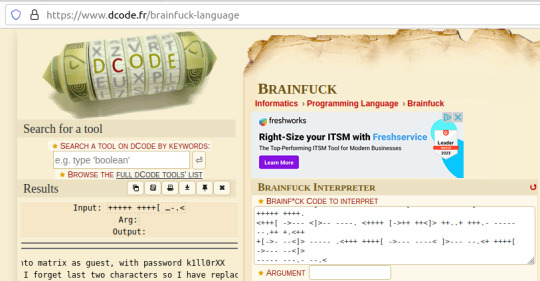

I'd never heard of Brainfuck, but I was confident this was going to be the encoding in use due to the similarity in name to JSFuck (strictly speaking it's an esoteric programming language rather than an encryption cipher, so "decrypting" it just means running the program). So, I brainfucked the cipher and voila, plaintext. :P

Here, we are given a username and the majority of the password for accessing SSH, apart from the last two characters, which were 'forgotten'.
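Since Brainfuck "ciphertext" is really a program whose output is the plaintext, it can also be run locally. Below is a minimal interpreter sketch in Python. It is not the online tool used in this write-up, and the 30,000-cell tape with 8-bit wrapping cells is the common convention rather than anything specified by the machine.

```python
def run_brainfuck(program: str, input_data: str = "") -> str:
    """Interpret a Brainfuck program and return everything it prints."""
    # Precompute the matching position for every bracket.
    jumps, stack = {}, []
    for i, ch in enumerate(program):
        if ch == "[":
            stack.append(i)
        elif ch == "]":
            start = stack.pop()
            jumps[start], jumps[i] = i, start

    tape = [0] * 30000          # conventional tape size (assumption)
    ptr = pc = 0
    out = []
    inp = iter(input_data)
    while pc < len(program):
        ch = program[pc]
        if ch == ">":
            ptr += 1
        elif ch == "<":
            ptr -= 1
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(chr(tape[ptr]))
        elif ch == ",":
            tape[ptr] = ord(next(inp, "\0")) % 256
        elif ch == "[" and tape[ptr] == 0:
            pc = jumps[pc]      # skip the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jumps[pc]      # jump back to the loop start
        pc += 1                 # any other character is ignored as a comment
    return "".join(out)


# 8 * 8 + 1 = 65, the character code for "A".
print(run_brainfuck("++++++++[>++++++++<-]>+."))
```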

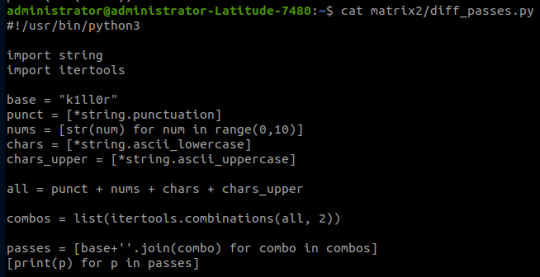

I used this as an excuse to use some Python- it's been a while and it was a simple script to create. I used the itertools and string modules.

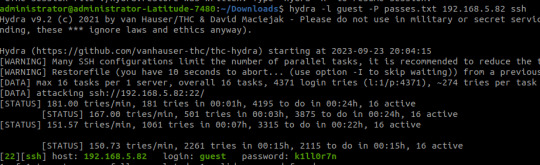

The script generates a password file with the base password 'k1ll0r' along with every possible 2-character combination appended. I simply piped the output into a text file and then ran hydra.
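The original script only survives as a screenshot, so here is a minimal sketch of the same idea rather than the exact code. The character set (lowercase letters plus digits) is an assumption; widen `ALPHABET` if the missing characters could be anything else.

```python
import itertools
import string

BASE = "k1ll0r"
# Assumed character set for the two forgotten characters.
ALPHABET = string.ascii_lowercase + string.digits


def candidates(base=BASE, alphabet=ALPHABET, missing=2):
    """Yield the base password followed by every possible suffix."""
    for combo in itertools.product(alphabet, repeat=missing):
        yield base + "".join(combo)


wordlist = list(candidates())
print(len(wordlist))  # 36 * 36 candidates
```

Printing each candidate on its own line and redirecting the output to a text file gives a wordlist that hydra can read with its `-P` option.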

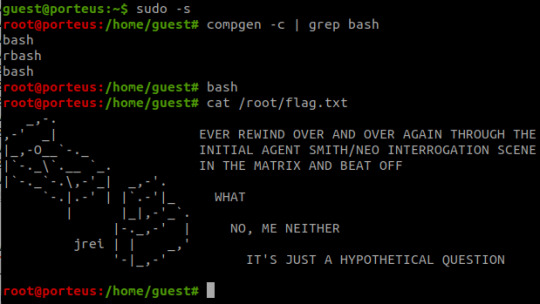

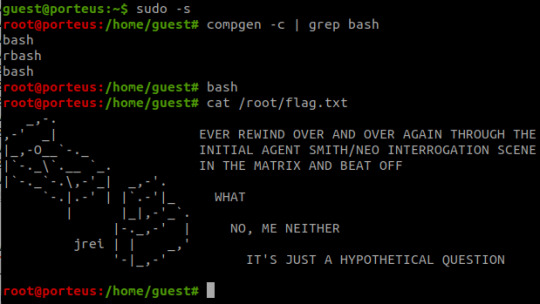

The password is eventually revealed to be 'k1ll0r7n'. Sure enough, this grants access to SSH; we are put into an rbash shell with no other shells immediately available. It didn't take me long to discover how to bypass this- I searched 'rbash escape' and came across this helpful cheatsheet from PSJoshi. Sure enough, the first suggested command worked:

The -t flag is used to force tty allocation, needed for programs that require user input. The "bash --noprofile" argument causes bash to be run instead of the restricted login shell; because it runs in the exec channel rather than the shell channel, tty allocation has to be forced.
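The command itself did not survive in this copy of the post. Going only by the description above, it presumably has the shape sketched below: ssh, the -t flag, the login, and "bash --noprofile" as the remote command. The user and host are placeholders, not values from the machine.

```python
import shlex


def rbash_escape_argv(user, host):
    """Build an ssh command line that asks for bash instead of the login shell."""
    # -t forces tty allocation; the last element is the command run remotely.
    return ["ssh", "-t", f"{user}@{host}", "bash --noprofile"]


# Placeholder login and a documentation-range IP address (both hypothetical).
argv = rbash_escape_argv("someuser", "192.0.2.10")
print(shlex.join(argv))
```

Passing `argv` to `subprocess.run` would start the interactive session; it is only printed here so the sketch has no side effects.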

Privilege Escalation

With access to Bash commands now, it is revealed that we have sudo access to everything, making privilege escalation trivial- the same rbash shell is created, but this time bash is directly available.

Thoughts

I did enjoy working on Morpheus- the CTF element of it was fun, and I'd never come across rbash before so that was new.

However, it certainly did not live up to the given rating of medium to hard. I'm honestly not sure why it was given such a high rating as the decoding and decryption elements are trivial to overcome if you have a foundational knowledge of hacking and there is a lot of information on bypassing rbash.

It also wasn't realistic in any way, really, and the skills required are not going to be quite as relevant in real-world penetration testing (except for the decoding element!)

#brainfuck#decryption#decoding#base64#CTF#vulnhub#cybersecurity#hacking#rbash#matrix#morpheus#cypher


Text

i'm a dual monitor girlie and ran i3 for a few years - if you like maximising real estate, then clever use of workspaces, tabs, and splits inside tabs will do you well. custom tagged scratchpad windows (or a dmenu/rofi switcher for open scratchpads) to summon floating stuff from the void.

most tilers are light (it's the bar, launcher, file manager etc. that add weight).

qtile didn't really do it for me, though ik some people love it. i've moved to river and am still setting it up, but it's already comfy.

tilers are keyboard-driven so you're gonna be using at least some shortcuts, but you can (and should) make them ones you'll remember.

river's config is an executable run on login. the example is a shell script but river doesn't care. could be a C program, python, whatever.
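to make the "river doesn't care" bit concrete, here's a rough sketch of an init file written in python instead of shell. it just shells out to riverctl. the key choices and the foot terminal are placeholder assumptions, not my actual config.

```python
#!/usr/bin/env python3
import subprocess

# One riverctl invocation per entry. The bindings and the "foot" terminal
# are illustrative assumptions; swap in whatever you actually use.
COMMANDS = [
    ["riverctl", "map", "normal", "Super", "Return", "spawn", "foot"],
    ["riverctl", "map", "normal", "Super", "Q", "close"],
    ["riverctl", "default-layout", "rivertile"],
]


def apply(commands, run=subprocess.run):
    """Send each command to the running river instance."""
    for argv in commands:
        run(argv, check=False)


# In a real init file you would call apply(COMMANDS) here and then start
# the layout generator (for example rivertile) in the background.
```

the file needs to be executable (chmod +x) for river to run it.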

river also has tags (personally prefer them to workspaces) and uses a stack (rather than node tree) and supports community-made tiling layouts. the default has a main and secondary area, which works well for juggling programs, though not entirely how i'd like.

feel free to drop a message if you're curious !!

ok people what graphical (or terminal, i GUESS ugh) file managers do you use. because i'm honestly getting sick of xfe's bullshit

(not xfce, the xfce suite packages thunar. i'm talking about a manager literally called xfe. it's bad)


Text

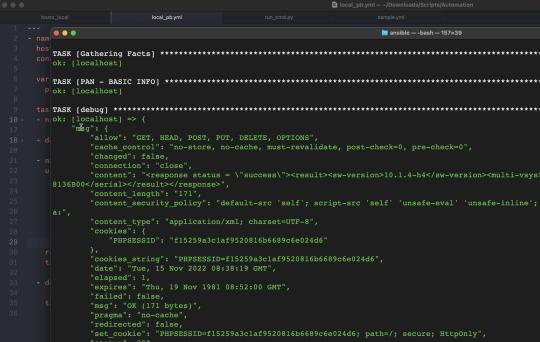

Sending REST APIs in Playbooks

Hello Networkers,

We have released a new update to our existing ‘Network Automation using Ansible’ course. In it, we show you how to run Python scripts inside an Ansible playbook using the shell module. Another type of task we can run is sending REST API requests inside a playbook. For this example we will send a request to one of my Palo Alto Networks firewalls to get some basic system information.

You can get more details here:

https://www.routehub.net/course/ansible/


Text

Job at hand: Must know 16 programming languages

Everything from waking up in the morning to checking the weather before going to bed at night can now be done in just a few clicks. This has been made possible by the development of technology or, to put it more precisely, by software and various applications. Dependence on software is increasing with time. "Software is eating the world," said Marc Andreessen, a U.S. technology entrepreneur and investor. The whole world is now in the grip of software. All this software, whether much-needed or useless, is created by coding. Coding is done using programming languages, and there are many of them. Some of these languages are very popular and more helpful in the job market. An organization hires programmers according to the type of work, so before learning programming, one should have an accurate idea of the fields of work. The popular business magazine Business Insider has compiled 18 popular programming languages based on a Stack Overflow survey and the TIOBE index.

The report highlights the languages that are currently popular and in demand in the job market.

Java

Java is a programming language. Designed at Sun Microsystems in the early '90s, it quickly became one of the most popular programming languages in the world. It is now used to create applications for the mobile platform Android. In addition, a wide range of business software is built with Java.

C

C is a programming language. It was created by Dennis Ritchie while working at Bell Labs in the '70s. The original purpose of the language was to write code for the Unix operating system. It soon became widely used. C has had a profound effect on many programming languages. Its most interesting aspect is its portability: programs written in C can be compiled to run on almost any operating system.

Python

Python is a high-level programming language. It was first published in 1991 by Guido van Rossum. Much emphasis was placed on the readability of programs when it was designed, and the programmer's effort is given more importance than the computer's. Python's core syntax and semantics are minimal, while the standard library of the language is much richer. Among the big projects that have used Python are the Zope application server, the Mnet distributed file store, YouTube and the original BitTorrent client. Among the big organizations that use Python are Google and NASA. Python has multiple uses in the information security industry. Some Immunity Security tools, some Core Security tools, the web application security scanner Wapiti and the fuzzer TAOF are particularly noteworthy.

PHP

PHP is a scripting language that was originally designed for creating websites. It includes a command line interface and can also be used in standalone graphical applications. Rasmus Lerdorf created PHP in 1995. It is mostly used for server-side web development. It can be used for free on almost all operating systems and platforms. According to Wikipedia, PHP is being used on more than 2 million websites and 1 million web servers.

Visual Basic

Visual Basic is abbreviated as VB. Software giant Microsoft launched the language in 1991 as an improved version of the old BASIC language. According to Wikipedia, it is the most widely used programming language in PC history. Although it is an old programming language, it is still used today.

JavaScript

The JavaScript programming language is very popular for creating web-based applications. Despite the name, it has nothing to do with Java. JavaScript is used on popular websites around the world.

R

R is an open-source programming language created for statistical work, the fruit of the tireless and relentless work of world-renowned statisticians. It is not just a programming language but also a statistical package and an interpreter.

Go

Google develops the programming language Go. Its structure is not as complicated as that of other object-oriented languages, and there is no subclassing. Its use of interfaces brings a different flavor to object-oriented programming. It bears the imprint of Python, a language Google has always favored a little. Like Python, Go supports slices, which let you refer to a specific part of an array with a simple syntax.

Ruby

Ruby is another popular programming language. It can be used to develop desktop applications and web applications. Its various popular frameworks make the work easier. Code in this language is much easier to maintain, and there is little need for comments: if you look at the code, you can understand its purpose. Ruby does not require semicolons, it is largely insensitive to whitespace, and it uses very few brackets.

Swift

Swift is the programming language of the famous tech giant Apple. It runs faster than Objective-C and is easy to learn. Programmers can see the output of their code as they write it. As a compiled language Swift is powerful and efficient, yet it is as simple and interactive as any other popular language.

Objective-C

Objective-C is a reflective, object-oriented programming language that adds Smalltalk-style message passing to the C language. It is currently used mainly on Mac OS X and iPhone OS. It is the main language of frameworks based on the OpenStep standard, including NeXTSTEP, OpenStep and Cocoa.

Perl

Larry Wall invented the Perl language. It was first published in 1987. It borrows features from C, the Bourne shell, AWK, sed, and Lisp. It is highly effective at string processing.

The other five programming languages on Business Insider's list include Groovy, assembly language, Pascal, and Matlab. If you know these well, there will be no problem in getting a job worldwide. A programmer who is fluent in these languages does not have to be unemployed.

- Mahmudur Rahman


Text

12 Terminal Commands Every Web Developer Should Know

The terminal is one of the most important productivity tools in a developer's arsenal. Mastering it can have a very positive impact on your workflow, as many everyday tasks get reduced to writing a simple command and hitting Enter. In this article we've prepared for you a collection of Unix commands that will help you get the most out of your terminal. Some of them are built in, others are free tools that are reliable and can be installed in less than a minute.

Curl

Curl is a command line tool for making requests over HTTP(S), FTP and dozens of other protocols you may not have heard about. It can download files, check response headers, and freely access remote data.

In web development curl is often used for testing connections and working with RESTful APIs.

    # Fetch the headers of a URL.
    curl -I http://google.com

    HTTP/1.1 302 Found
    Cache-Control: private
    Content-Type: text/html; charset=UTF-8
    Referrer-Policy: no-referrer
    Location: http://www.google.com/?gfe_rd=cr&ei=0fCKWe6HCZTd8AfCoIWYBQ
    Content-Length: 258
    Date: Wed, 09 Aug 2017 11:24:01 GMT

    # Make a GET request to a remote API.
    curl http://numbersapi.com/random/trivia

    29 is the number of days it takes Saturn to orbit the Sun.

Curl commands can get much more complicated than this. There are tons of options for controlling headers, cookies, authentication, and more.
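When the same kind of header check is needed from inside a script, the standard library can do something similar. The following is a minimal Python sketch, not a replacement for curl; the function names are our own. Note that urllib follows redirects by default, so a 302 response like the one above would not be reported as-is.

```python
import urllib.request


def head_request(url):
    """Build a HEAD request, the method curl -I uses."""
    return urllib.request.Request(url, method="HEAD")


def fetch_headers(url, timeout=10):
    """Send the request and return the status code and response headers."""
    with urllib.request.urlopen(head_request(url), timeout=timeout) as resp:
        return resp.status, dict(resp.headers.items())


# Building the request does not touch the network; fetch_headers() does.
req = head_request("http://numbersapi.com/random/trivia")
print(req.get_method(), req.full_url)
```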

Tree

Tree is a tiny command line utility that shows you a visual representation of the files in a directory. It works recursively, going over each level of nesting and drawing a formatted tree of all the contents. This way you can quickly glance over and find the files you're looking for.

    tree

    .
    ├── css
    │   ├── bootstrap.css
    │   ├── bootstrap.min.css
    ├── fonts
    │   ├── glyphicons-halflings-regular.eot
    │   ├── glyphicons-halflings-regular.svg
    │   ├── glyphicons-halflings-regular.ttf
    │   ├── glyphicons-halflings-regular.woff
    │   └── glyphicons-halflings-regular.woff2
    └── js
        ├── bootstrap.js
        └── bootstrap.min.js

There is also the option to filter the results using a simple regEx-like pattern:

    tree -P '*.min.*'

    .
    ├── css
    │   ├── bootstrap.min.css
    ├── fonts
    └── js
        └── bootstrap.min.js
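To show what "works recursively" means in practice, here is a small Python sketch that produces similar output. It illustrates the idea only and is not how the tree utility is actually implemented; the function name is our own.

```python
import os


def tree_lines(root, prefix=""):
    """Return one formatted line per file or directory under root."""
    entries = sorted(os.listdir(root))
    lines = []
    for index, name in enumerate(entries):
        last = index == len(entries) - 1
        lines.append(prefix + ("└── " if last else "├── ") + name)
        path = os.path.join(root, name)
        if os.path.isdir(path):
            # Recurse with a deeper prefix; keep the vertical bar only while
            # there are more siblings to draw at this level.
            lines.extend(tree_lines(path, prefix + ("    " if last else "│   ")))
    return lines


# Usage: print("\n".join(tree_lines(".")))
```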

Tmux

According to its Wiki, Tmux is a terminal multiplexer, which translated into human language means that it's a tool for connecting multiple terminals to a single terminal session.

It lets you switch between programs in one terminal, add split screen panes, and connect multiple terminals to the same session, keeping them in sync. Tmux is especially useful when working on a remote server, as it lets you create new tabs without having to log in again.

Disk usage - du

The du command generates reports on the space usage of files and directories. It is very easy to use and can work recursively, going through each subdirectory and returning the individual size of every file. A common use case for du is when one of your drives is running out of space and you don't know why. Using this command you can quickly see how much storage each folder is taking, thus finding the biggest space hog.

    # Running this will show the space usage of each folder in the current directory.
    # The -h option makes the report easier to read.
    # -s prevents recursiveness and shows the total size of a folder.
    # The star wildcard (*) will run du on each file/folder in current directory.
    du -sh *

    1.2G    Desktop
    4.0K    Documents
    40G     Downloads
    4.0K    Music
    4.9M    Pictures
    844K    Public
    4.0K    Templates
    6.9M    Videos
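For scripts that need the same numbers, a rough Python equivalent of the per-folder total might look like the sketch below. It adds up apparent file sizes, whereas du reports the disk blocks actually used, so the figures will usually differ a little. The function names are our own.

```python
import os


def dir_size(path):
    """Sum the sizes in bytes of all regular files under path."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if not os.path.islink(full):   # do not count symlink targets
                total += os.path.getsize(full)
    return total


def human(n):
    """Format a byte count roughly the way the -h option does."""
    for unit in ("B", "K", "M", "G", "T"):
        if n < 1024 or unit == "T":
            return f"{n:.1f}{unit}"
        n /= 1024


# Usage: print(human(dir_size("Downloads")))
```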

There is also a similar command called df (Disk Free), which returns various information about the available disk space (the opposite of du).

Git

Git is by far the most popular version control system right now. It is one of the defining tools of modern web dev and we just couldn't leave it out of our list. There are plenty of third-party apps and tools available, but most people prefer to access git natively through the terminal. The git CLI is really powerful and can handle even the most tangled project history.

Tar

Tar is the default Unix tool for working with file archives. It allows you to quickly bundle multiple files into one package, making it easier to store and move them later on.

tar -cf archive.tar file1 file2 file3

Using the -x option it can also extract existing .tar archives.

tar -xf archive.tar

Note that most other formats such as .zip and .rar cannot be opened by tar and require other command utilities such as unzip.

Many modern Unix systems run an expanded version of tar (GNU tar) that can also perform file size compression:

    # Create compressed gzip archive.
    tar -czf file.tar.gz inputfile1 inputfile2

    # Extract .gz archive.
    tar -xzf file.tar.gz
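The same create and extract steps can be scripted with Python's tarfile module. This is a minimal sketch with function names of our own choosing; as with tar itself, only extract archives you trust.

```python
import os
import tarfile


def create_archive(archive_path, files, compress=False):
    """Bundle files into a tar archive, gzip-compressed if requested."""
    mode = "w:gz" if compress else "w"          # like -cf versus -czf
    with tarfile.open(archive_path, mode) as tar:
        for path in files:
            # Store each file under its base name rather than its full path.
            tar.add(path, arcname=os.path.basename(path))


def extract_archive(archive_path, dest="."):
    """Extract a tar archive; "r:*" detects the compression automatically."""
    with tarfile.open(archive_path, "r:*") as tar:
        tar.extractall(dest)


# Usage: create_archive("file.tar.gz", ["inputfile1", "inputfile2"], compress=True)
```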

If your OS doesn't have that version of tar, you can use gzip, zcat or compress to reduce the size of file archives.

md5sum

Unix has several built-in hashing commands including md5sum, sha1sum and others. These command line tools have various applications in programming, but most importantly they can be used for checking the integrity of files. For example, if you have downloaded an .iso file from an untrusted source, there is some chance that the file contains harmful scripts. To make sure the .iso is safe, you can generate an md5 or other hash from it.

    md5sum ubuntu-16.04.3-desktop-amd64.iso

    0d9fe8e1ea408a5895cbbe3431989295  ubuntu-16.04.3-desktop-amd64.iso

You can then compare the generated string to the one provided by the original author (e.g. UbuntuHashes).
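The comparison step can be automated. Here is a minimal Python sketch using hashlib; the function names are our own. MD5 is used to match the example above, but a stronger algorithm such as sha256 is the better choice whenever the publisher provides that hash.

```python
import hashlib


def file_digest(path, algorithm="md5", chunk_size=65536):
    """Hash a file in chunks so large .iso files never sit fully in memory."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches(path, expected_hex, algorithm="md5"):
    """Compare a file's digest with the hash published by the author."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()


# Usage: matches("ubuntu-16.04.3-desktop-amd64.iso", "<hash from the publisher>")
```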

Htop

Htop is a more powerful alternative to the built-in top task manager. It provides an advanced interface with many options for monitoring and controlling system processes.

Although it runs in the terminal, htop has very good support for mouse controls. This makes it much easier to navigate the menus, select processes, and organize the tasks through sorting and filtering.

Ln

Links in Unix are similar to shortcuts in Windows, allowing you to get quick access to certain files. Links are created via the ln command and can be of two types: hard or symbolic. Each kind has different properties and is used for different things.

Here is an example of one of the many ways you can use links. Let's say we have a directory on our desktop called Scripts. It contains neatly organized bash scripts that we commonly use. Every time we want to call one of our scripts we would have to do this:

~/Desktop/Scripts/git-scripts/git-cleanup

Obviously, this isn't very convenient as we have to write the full path every time. Instead we can create a symlink to the script in /usr/local/bin, which makes it callable from any directory (assuming /usr/local/bin is on your PATH and the script itself is executable).

sudo ln -s ~/Desktop/Scripts/git-scripts/git-cleanup /usr/local/bin/

With the created symlink we can now call our script by simply writing its name in any opened terminal.

git-cleanup
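The same link can be created from a script. Below is a minimal Python sketch of what ln -s does here; the function name and parameters are our own. Writing into /usr/local/bin normally requires root, just as the sudo above suggests.

```python
import os


def link_into(script_path, bin_dir):
    """Create a symlink in bin_dir that points at script_path."""
    target = os.path.abspath(script_path)
    link = os.path.join(bin_dir, os.path.basename(script_path))
    os.symlink(target, link)    # raises FileExistsError if the link already exists
    return link


# Usage: link_into(os.path.expanduser("~/Desktop/Scripts/git-scripts/git-cleanup"), "/usr/local/bin")
```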

SSH

With the ssh command users can quickly connect to a remote host and log into its Unix shell. This makes it possible to conveniently issue commands on the server directly from your local machine's terminal.

To establish a connection you simply need to specify the correct IP address or URL. The first time you connect to a new server there will be some form of authentication.

ssh username@remote_host

If you want to quickly execute a command on the server without logging in, you can simply add a command after the url. The command will run on the server and the result from it will be returned.

    ssh username@remote_host ls /var/www

    some-website.com
    some-other-website.com

There is a lot you can do with SSH like creating proxies and tunnels, securing your connection with private keys, transferring files and more.

Grep

Grep is the standard Unix utility for finding strings inside text. It takes an input in the form of a file or direct stream, runs its content through a regular expression, and returns all the matching lines.

This command comes in handy when working with large files that need to be filtered. Below we use grep in combination with the date command to search through a large log file and generate a new file containing only errors from today.

    # Search for today's date (in format yyyy-mm-dd) and write the results to a new file.
    grep "$(date +"%Y-%m-%d")" all-errors-ever.log > today-errors.log
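For comparison, here is the same filter as a minimal Python sketch. It does a plain substring match, which for a date in yyyy-mm-dd format gives the same result as the grep pattern. The function name is our own.

```python
import datetime


def lines_for_date(lines, day=None):
    """Keep only the lines that mention the given date (today by default)."""
    day = day or datetime.date.today()
    stamp = day.strftime("%Y-%m-%d")
    return [line for line in lines if stamp in line]


# Made-up log lines, filtered for a fixed date so the result is predictable.
sample = [
    "2017-08-08 12:00:01 ERROR disk full",
    "2017-08-09 09:15:42 ERROR timeout",
]
print(lines_for_date(sample, datetime.date(2017, 8, 9)))
```

To mirror the shell version, read the lines from all-errors-ever.log and write the filtered list to today-errors.log.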

Another great command for working with strings is sed. It is more powerful (and more complicated) than grep and can perform almost any string-related task including adding, removing or replacing strings.

Alias

Many OS commands, including some featured in this article, tend to get pretty long once you add all the options to them. To make them easier to remember, you can create short aliases with the alias bash built-in command:

    # Create an alias for starting a local web server.
    # (SimpleHTTPServer is the Python 2 module; on Python 3 use: python3 -m http.server 9000)
    alias server="python -m SimpleHTTPServer 9000"

    # Instead of typing the whole command simply use the alias.
    server

    Serving HTTP on 0.0.0.0 port 9000 ...

The alias will be available as long as you keep that terminal open. To make it permanent you can add the alias command to your .bashrc file.



Text

Tips to switch careers in Python?

How to switch careers in Python?

Python was listed in the TIOBE Programming Language Hall of Fame for 2010 and has continued its rise since. According to TIOBE, Python moved up from fifth to fourth place in 2018, with the long-established Java, C, and C++ ahead of it. The TIOBE Index is used to gauge the popularity of programming languages around the world. Its ratings are based on the number of skilled engineers and on search results from popular search engines.

Python is a programming language that is very simple and easy to learn. Python cuts development time in half with its easy-to-understand syntax and straightforward layout. It also has a lot of libraries that support data analysis, manipulation, and visualization. As a result, it has become the most favored language and is seen as the "Next Big Thing" and a "must" for professionals.

Future with Python

A successful professional isn't the one who has a long list of accomplishments, but the one who is constantly improving, growing, and adapting their skills. Consider the problem faced by today's programming market: the need for a programming language that keeps up with the latest developments in many technologies and works alongside other coding platforms such as C, C++, and Java, which have been around for quite a while.

Corporate leaders are expected to achieve more in limited time, which pushes engineers to move quickly without disrupting existing technology. Python beats its rivals in these respects. Its extensive list of packages, which can be cloned and modified, supports individual expertise. It offers a warm welcome to specialists from a wide range of fields. If you are hoping to join a stable programming ecosystem without losing your years of expertise, then you are heading the right way. Not every programming language reads as close to English.

Simple coding

Python code is approachable for experts as well as beginners. It is very compact and straightforward, with little heavy syntax, and often looks as if it has been condensed from longer programs that do the same job. Because of its flexible nature, Python is sometimes somewhat slow. Are you aware of the popular 80/20 rule? The same applies here: ask any developer working with Python, and they will tell you that learning 20% of Python will help you carry out 80% of the tasks.

Python on Linux, UNIX, and Windows operating systems

• Are you aware that the majority of PCs, laptops, and server systems running Linux and UNIX operating systems, along with some Windows systems, come with a recent version of Python installed?

• Python is widely used for Linux and Unix system administration tasks and is an excellent fit compared with Ruby, Perl, Bash, and so on.

• You can analyze log files, genomic sequences, and statistics quickly, in a matter of moments.

• For an administrator, work piles up faster than it can be cleared. If you have used shell scripting before, learning Python is a cakewalk.

• Unlike Linux and Unix, most Windows systems do not ship with Python installed, and you will need MSI packages (built specifically for Windows), updated for each release.

Advantages of Python

Many organizations working on graphic and web design applications, gaming, text and image processing, and so on use Python. It is distributed under an open-source license, which makes it easy to download and run. It is also more fun to work with. Python adds convenience for specialists in other coding languages without departing from their core structure.

Python career opportunities

Number of Python Jobs

While there is high demand for Python engineers in India, the supply is very low. To confirm this, consider an HR professional's account. The professional was required to pick 10 programmers each for Java and Python. Around 100 good résumés flooded in for Java, but they received just 8 good ones for Python. So, while they had to go through a long process to filter out good candidates for Java, with Python they had no choice but to take those 8 candidates.

Python has a simple syntax; we really need more people in India to upskill themselves. This is what makes it an excellent opportunity for Indians to become skilled in Python. When it comes to the number of jobs, there may not be a great many for Python in India. Yet there is a large number of jobs per Python developer.

Conclusion

Data science with Python online courses are in huge demand thanks to the career opportunities and advantages discussed above. Python development has a promising future. Learning the right skills through the right platform will get you to the right job. Various online certification courses can provide those skills directly. From my side, I can suggest a cost-effective, easy-to-learn applied data science with Python course that can guide you into it. There is nothing to worry about regarding the possibilities Python will open up; you just have to pull your socks up and get things done!

Now that you know the doors Python can open for you and the different career opportunities in Python, it is entirely up to you which one you take.

Also check top 10 trending technologies in 2021.

#online classes#python training#online training#elearning#applied python training science#python#python online course


Text

Theme Updates, Offline Upgrades Headline New Additions to Pop!_OS 19.10

Halloween came early this year with our latest release of Pop!_OS. Fill your treat bag with theme updates, Tensorman, easy upgrading, and more as we unwrap the details of Pop!_OS 19.10:

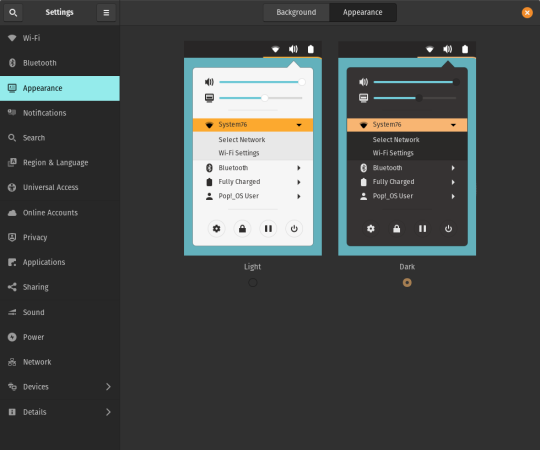

Theme Updates

A new Dark Mode for Pop!_OS is available in the operating system’s Appearance Settings. Both the Light and Dark modes feature higher contrast colors using a neutral color palette that’s easy on the eyes.

The functionality of Dark Mode has been expanded to include the shell, providing a more consistently dark aesthetic across your desktop. If you’re using the User Themes extension to set the shell theme, disable it to use the new integrated Light and Dark mode switcher.

The default theme on Pop!_OS has been rebuilt based on Adwaita. Though users may only notice a slight difference in their widgets, the new OS theme provides significant measures to prevent application themes from experiencing UI breakage. This breakage manifests in the application as missing or misaligned text, broken widgets, and scaling errors, and should not occur with the new theme in place.

The updated theme includes a new set of modernized sound effects. Users will now hear a sound effect when plugging and unplugging a USB or charging cable. The sound effect for adjusting the volume has been removed.

Tensorman

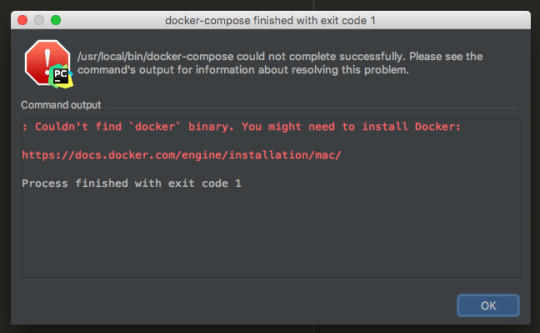

Tensorman is a new tool that we’ve developed to serve as a toolchain manager for Tensorflow, using the official Docker builds of Tensorflow. For example, executing a python script inside of a Tensorflow Docker container with CUDA GPU and Python 3 support will now be as simple as running:

tensorman run --gpu python -- ./script.py

Tensorman allows users to define a default version of Tensorflow user-wide, project-wide, and per run. This will enable all releases of Pop!_OS to gain equal support for all versions of Tensorflow, including pre-releases, without needing to install Tensorflow or the CUDA SDK in the system. Likewise, new releases of Tensorflow upstream will be made immediately available to install with Tensorman.

Examples of how to use Tensorman are in the tool’s support page. Tensorman is now available on Pop!_OS 19.10, and will be coming to Pop!_OS 18.04 LTS soon.

To install Tensorman, enter this command into the Terminal:

sudo apt install tensorman

GNOME 3.34

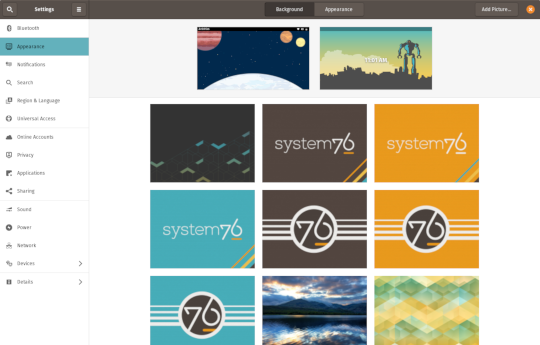

In addition to theming improvements, the GNOME 3.34 release brings some new updates to the fold. From GNOME’s 3.34 Release Notes:

A redesigned Background panel landed in the Appearance settings. Now when you select a background, you will see a preview of it under the desktop panel and lock screen. Custom backgrounds can now be added via the Add Picture… button.

Performance improvements bring smoother animations and a more responsive desktop experience.

Icons in the application overview can be grouped together into folders. To do this, drag an icon on top of another to create a group. Removing all icons from a group will automatically remove the group, too. This makes organizing applications much easier and keeps the application overview clutter-free.

The visual style for the Activities overview was refined as well, including the search entry field, the login password field, and the border that highlights windows. All these changes give the GNOME desktop an improved overall experience.

Some animations in the Activities overview have been refactored, resulting in faster icon loading and caching.

The Terminal application now supports right-to-left and bi-directional languages.

The Files application now warns users when attempting to paste a file into a read-only folder.

Search settings for the Activities overview can now be reordered in the Settings application by dragging them in the settings list. The Night Light section has been moved to the Display panel.

New Upgrade Process

Offline upgrades are now live on Pop!_OS 19.04, bringing faster, more reliable upgrades. When an upgrade becomes available, it is downloaded to your computer. Then, when you decide to upgrade to the newest version of your OS, the upgrade will overwrite the current version of your software. However, this is not to be confused with an automatic update; your OS will remain on the current version until you yourself decide to upgrade.

To upgrade to 19.10 from a fully updated version of Pop!_OS 19.04, open the Settings application and scroll down on the sidebar menu to the Details tab. In the About panel of the Details tab, you will see a button to download the upgrade. Once the download is complete, hit the button again to upgrade your OS. This will be the standard method of upgrading between Pop!_OS releases going forward.

Alternatively, a notification will appear when your system is ready to upgrade. This notification appears on your next login on your fully updated version of Pop!_OS 19.04. Clicking it will take you to the About panel in the Settings application.

In early November, Pop!_OS 18.04 LTS users will be notified to update to Pop!_OS 19.10 or remain on 18.04 until the next LTS version is available.

New to Pop!_OS:

Visit the official Pop!_OS page to download Pop!_OS 19.10.

Upgrade Ubuntu to 19.10

See our support article on upgrading Ubuntu for information on how to upgrade Ubuntu 19.04 to 19.10.


Text

PYTHON

A Python application created on a Macintosh computer will run on a Linux machine, and vice versa, because Python is a cross-platform language. Python programmes also run on a Windows PC as long as the Python interpreter is installed there (most other operating systems come with Python pre-installed). A tool called py2exe converts Python programmes into Windows binaries, so that they can run on Windows machines without Python installed.

Python uses white space and indentation differently from many other languages. It does not use statement terminators such as semicolons to end programming statements, and it does not use curly brackets to enclose code blocks such as for loops and if statements. Instead, Python defines a code block by its indentation.
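As a small illustration of that point, the sketch below uses indentation alone to mark which statements belong to the function, the loop and each branch. The function name classify is made up for this example.

```python
def classify(numbers):
    """Label each number as even or odd; indentation alone marks the blocks."""
    labels = []
    for n in numbers:              # the loop body is everything indented below
        if n % 2 == 0:             # no braces and no semicolons
            labels.append("even")
        else:
            labels.append("odd")
    return labels                  # back at function level, so the loop has ended


if __name__ == "__main__":
    print(classify([1, 2, 3]))
```

Moving the return line one level deeper would place it inside the loop and change the result, which is why consistent indentation matters in Python.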

Programming languages

A special application known as a compiler is used to compile programming languages. A compiler must convert widely used languages like C and C++ into machine code, which is unreadable to humans but can be read and processed by computers. Once a C programme has been written and compiled, the computer works from the resulting .o file rather than from the source. Code that has been compiled often runs quicker, and only one compilation process is required (unless you change your code). While some compilers perform some fundamental optimization automatically, others offer a number of flags that may be set to prepare the code for parallel processing on multiple processors.

Compiled code usually executes directly on the hardware for which it was built. A C++ programme is run immediately by the processor on which it was written and compiled. While this can speed up the execution of the code, it can occasionally have the unintended consequence of making a compiled programme dependent on the processor and machine. Due to minute hardware variations, code created on one computer might not even run on another, nearly identical machine.

On the other hand, scripting languages don't require a compiler to produce a machine-language file; instead, they are read and interpreted each time you execute them. Because there is no optimization, if you write shoddy code, you'll get shoddy results, which may lead to slower programmes.

Scripting languages don't execute directly on their host processor; instead, they run "within" another programme. For instance, PHP scripts execute inside the PHP scripting engine and bash scripts inside the bash shell. Java, which is regarded as a programming language but is executed by the Java Virtual Machine (JVM), is an exception to this rule.
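To make the idea of running "within" another programme concrete, here is a minimal sketch in which the Python interpreter itself is handed source text at run time and executes it. The helper name run_source is hypothetical. Note that CPython first compiles the text to bytecode for its own virtual machine rather than to machine code, which is loosely comparable to the JVM case mentioned above.

```python
def run_source(source):
    """Compile source text to bytecode and execute it inside this interpreter."""
    code = compile(source, "<demo>", "exec")  # bytecode for the Python VM, not machine code
    namespace = {}
    exec(code, namespace)                     # the host interpreter runs the script
    return namespace


if __name__ == "__main__":
    result = run_source("total = sum(range(5))\n")
    print(result["total"])
```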

Although Python is frequently referred to as a programming language, it is actually a scripting language. Since the Python shell is supported on practically every device, its code doesn't require a separate compilation step to run. In addition, it has a few more features in common with scripting languages than with programming languages.

Scripting languages often have less strict rules for syntax and presentation. White space is given more leeway (with the exception of indentation, mentioned earlier). Programmers no longer have to spend hours troubleshooting code in order to track down a lost semicolon or a missing curly brace. Many Python programmers take pleasure in the fact that their code is human-readable, making it simpler to debug when necessary.

Python is maligned by some programming purists who claim that it might be sluggish and less effective since it is not a compiled language. However, the speed difference between a compiled language and a scripted one becomes less noticeable as processing speed and architecture advance. The difference between the two may only be noticeable in situations where performance is a critical concern.

Many software developers find Python to be a very powerful language that is worthwhile to learn. It is a very alluring solution to many programming issues and applications due to its portability, simplicity, and accessibility to new programmers.

Because of its established status and strong community support, Python offers a wide variety of libraries that provide reliable, ready-made modules. They enable quick implementation of even the most complex features in software without sacrificing code dependability.

Python speeds the development process since it is understandable and portable, which has a beneficial effect on the time to market for online applications. Additionally, eliminating compilers makes it possible to execute new and changed code more quickly than with languages that demand recompilation after each update.

Python's dynamic typing and binding, high-level built-in data structures, and the availability of common frameworks and libraries speed up development further. Python is a good option for startups and projects with short deadlines because of the quick speed of app development.

IPCS

Text

Webots dongle download

Crack in this context means the action of removing the copy protection from software or unlocking features from a demo or time-limited trial. It allows you to play karaoke files from the hard drive and directly from CD+G discs, has a built-in song database, and automatically manages singer rotation.

Get the control of your karaoke show! Siglos Karaoke Professional will make your shows better and easier to run. Siglos Karaoke Professional is a show hosting software for karaoke hosts (KJ). That ensures that Siglos Karaoke Professional 2.0.24 is 100% safe for your computer. The current version of Siglos Karaoke Professional has been scanned by our system, which contains 46 different anti-virus algorithms. Siglos Karaoke Professional is VIRUS-FREE! Our security scan shows that Siglos Karaoke Professional is clean from viruses. This article originally appeared in Linux Format Magazine Issue 260.

Diagram showing how Arduino Uno connects to LED light.

Plug in the Arduino, and in the terminal type the following code. Look for USB devices such as ttyUSB0 and ttyACM0.

Using your favourite Python 3 editor (IDLE, Thonny, nano, Vim), create a new file and name it LED_test.py. We shall now write some Python code into this file. Start by importing two classes from the pyFirmata library, which will enable our code to connect to the Arduino. We can then import the sleep function from the time library, by typing:

from pyfirmata import Arduino, util

The next step is to create an object called board that will be the connection from our Pi to the Arduino. For this, we shall need to use the USB device information from dmesg.

board = Arduino('/dev/ttyUSB0')

A variable called led is used to store the Arduino pin number. You create it by adding the line:

led = 12

Inside of a while True loop, we can write the code that will turn the LED on and off every 0.2 seconds. We will call the object board, with a class to control the pin digitally (0,1) and then write 1 to the pin to turn it on. Note that we use the variable led to identify the pin. Then we sleep for 0.2 seconds, before turning the pin off and sleeping once more.

Save the code and then run it from your editor (IDLE Run > Run Module/Thonny Run > Run Current Script) and after a few seconds the LED connected to the Arduino will flash, proving that we have a working connection. The final python script should look like this:

from pyfirmata import Arduino, util
[...]
sleep(0.2)

Flashing LED Lights with Raspberry Pi and Arduino

This will be an LED that flashes, but the interval between each flash is controlled via a potentiometer, an analogue electronic component, something that the Raspberry Pi cannot ordinarily use without extra ADC (analog-to-digital conversion) boards. We can use the value returned from the Arduino to control the speed at which the LED flashes. We will add the potentiometer to the existing LED test circuit that we have just built and tested. Please see the diagram below for more information on this.

Arduino Uno board connected to a potentiometer and LED light.

We’ll start the code for this project in a new blank file, using the same lines to import and configure the pin being used on the Arduino. To read the analogue values from the Arduino we need to create a thread that will run and not interrupt the main code. We should create an object called it and then connect this to the Arduino, before then starting the thread.

The main body of code is a while True loop, which will read the current value of analogue pin 0, which is connected to the potentiometer, and store the value in a variable called value. This value will then be printed to the Python shell.

while True:

A conditional test is now applied to the value variable. If the value has no data, then it will return none, and this will crash the code. Therefore an if condition checks the value, and if it is none, it changes it to 0.

if value == None:

Another condition to test is whether the value is greater than 0.05; the analogue values returned are between 0.0 and 1.0. If the value’s greater than 0.05 the LED is turned on, and the sleep interval, used to keep the LED on/off by pausing the code, is controlled by the value. The final lines of code are an else condition, which turns the LED off if the value is less than 0.05.

Now turn the potentiometer and watch the LED come to life. Your final python code should look like this:

from pyfirmata import Arduino, util
[...]
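The listings in this walkthrough survive only as fragments, so the following is a minimal sketch of the two pieces of logic it describes, not the article's original code. FakeBoard and FakePin are hypothetical stand-ins that let the sketch run without hardware, and the helper names blink and flash_interval are made up for illustration. The only pyFirmata calls assumed are the ones the text itself mentions or implies: Arduino('/dev/ttyUSB0') and writing 0 or 1 to a digital pin.

```python
import time


def blink(board, pin, cycles, interval=0.2, sleep=time.sleep):
    """Turn a digital pin on and off `cycles` times, pausing `interval` seconds each time."""
    for _ in range(cycles):
        board.digital[pin].write(1)   # LED on
        sleep(interval)
        board.digital[pin].write(0)   # LED off
        sleep(interval)


def flash_interval(value, threshold=0.05):
    """Map a potentiometer reading (None, or 0.0 to 1.0) to a sleep interval.

    Returns None when the reading is at or below the threshold, meaning 'keep the LED off'.
    """
    if value is None:                 # no data yet from the analogue pin
        value = 0
    return value if value > threshold else None


class FakePin:
    """Hypothetical stand-in for a pyFirmata pin; it records every write."""

    def __init__(self):
        self.writes = []

    def write(self, level):
        self.writes.append(level)


class FakeBoard:
    """Hypothetical stand-in for pyfirmata.Arduino, so the sketch runs without hardware."""

    def __init__(self, pins=14):
        self.digital = [FakePin() for _ in range(pins)]


if __name__ == "__main__":
    # With real hardware, replace FakeBoard() with:
    #   from pyfirmata import Arduino
    #   board = Arduino('/dev/ttyUSB0')
    board = FakeBoard()
    blink(board, pin=12, cycles=3, sleep=lambda seconds: None)
    print(board.digital[12].writes)
```

Passing the sleep function in as a parameter is a design choice made here so the loop can be exercised instantly in a test; on a real board the default time.sleep gives the 0.2 second flash described above.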

Text

Geofencing mongodb python example

MongoDB is a widely used document database which is also a form of NoSQL DB. Python can interact with MongoDB through some python modules and create and manipulate data inside Mongo DB. In this article we will learn to do that. But MongoDB should already be available in your system before python can connect to it and run.

This is an unsecured server, and later we will add authorization.

To elaborate, an operator is a class that contains the logic of what we want to achieve in the DAG. For example, if we want to execute a Python script, we will have a Python operator. If we wish to execute a Bash command, we have Bash operator. There are several in-built operators available to us as part of Airflow.

Geo-fencing allows users of a GPS Tracking Solution to draw zones (i.e., a Geo Fence) around places of work, customer’s sites and secure areas. These geo fences when crossed by a GPS equipped vehicle or person can trigger a warning to the user or operator via SMS or Email.

Geo Fence

A Geo fence is a virtual perimeter on a geographic area using a location-based service, so that when the geo fencing device enters or exits the area, a notification is generated. The notification can contain information about the location of the device and might be sent to a mobile telephone or an email account.

Background

For geo-fencing, I used Polygonal geo-fencing method where a polygon is drawn around the route or area. Using this method, GPS Tracking devices can be tracked either inside or outside of the polygon. The function will return true if the point X,Y is inside the polygon, or false if it is not. If the point is exactly on the edge of the polygon, then the function may return true or false. Thanks for the article “Determining Whether A Point Is Inside A Complex Polygon”.
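The polygon function itself is not shown in the post, so here is a minimal sketch of one common way to implement such a test, the even-odd (ray casting) rule. It is an assumption that this matches the cited article's approach. The name point_in_polygon and the vertex-list format are made up for illustration, and, as the text warns, points lying exactly on an edge may come out either way.

```python
def point_in_polygon(x, y, polygon):
    """Return True if (x, y) lies inside `polygon`, a list of (x, y) vertices.

    Casts a horizontal ray to the right of the point and counts edge crossings:
    an odd count means the point is inside.
    """
    inside = False
    j = len(polygon) - 1                 # index of the previous vertex
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        if (yi > y) != (yj > y):         # edge straddles the ray's height
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:              # crossing lies to the right of the point
                inside = not inside
        j = i
    return inside


if __name__ == "__main__":
    fence = [(0, 0), (4, 0), (4, 4), (0, 4)]   # a square geo fence
    print(point_in_polygon(2, 2, fence))
    print(point_in_polygon(5, 2, fence))
```

For real GPS data the coordinates would be longitude and latitude pairs; treating them as planar is a simplification that is usually acceptable only for small fences.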

One of the important features of GPS Tracking software using GPS Tracking devices is geo-fencing and its ability to help keep track of assets.

Leave your server running in the background; you can now access your database from the mongo shell or, as we will see later, also from python.

import pymongo
myclient = pymongo.MongoClient('mongodb://localhost:27017/')

If the above pymongo example program runs successfully, then a connection is said to be made to the mongodb instance. Make sure that Mongo Daemon is up and running at the URL you specified.

I assume you have connected your actual Android Mobile device with your computer. To run the app from android studio, open one of your project's activity files and click Run icon from the toolbar.

This example demonstrates how do I get current GPS location programmatically in android.

Step 1 − Create a new project in Android Studio, go to File ⇒ New Project and fill all required details to create a new project.

Step 2 − Add the following code to res/layout/activity_main.xml

Step 3 − Add the following code to src/MainActivity.java import .PackageManager Import .location.FusedLocationProviderClient Public class MainActivity extends AppCompatActivity, 1)

Step 4 − Add the following code to androidManifest.xml

Create a connection to MongoDB Daemon Service using MongoClient. Following is the syntax to create a MongoClient in Python. Note : If URI is not specified, it tries to connect to MongoDB instance at localhost on port 27017. You can pass the url of the MongoDB instance as shown in the following program.

In this video, I will show how you can use Python to get connected to MongoDB. Using 2 sample programs we will write some information to the database and then.

Marshmallow serialization with MongoDB and Python. In programming, serialization is the process of turning an object into a new format that can be stored (e.g. Deserialization, therefore, is the process of turning something in that format into an object.
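To illustrate the serialization and deserialization definitions, here is a minimal sketch using only Python's standard json and dataclasses modules instead of Marshmallow, which the post names but does not show. The Location class and its fields are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Location:
    """Hypothetical record for a tracked device."""
    device_id: str
    lat: float
    lon: float


def serialize(location):
    """Turn an object into a storable format (here, a JSON string)."""
    return json.dumps(asdict(location))


def deserialize(text):
    """Turn something in that format back into an object."""
    return Location(**json.loads(text))


if __name__ == "__main__":
    stored = serialize(Location("tracker-1", 51.5, -0.12))
    print(stored)
    print(deserialize(stored))
```

A dictionary produced by asdict is also the shape a document database such as MongoDB typically stores, which is where a schema library like Marshmallow would add validation on top.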

Text

Best html and python editor mac os x

Note: comment out "msw_help(." line in pymode.sl if you are having problems on Windows. Syntax highlighting and indenting, (optional) emacs keybindings, programmable with s-lang. Unix, VMS, MSDOS, OS/2, BeOS, QNX, and Windows. Supports Python syntax and a Python-specific menu. Syntax coloring for python, extensible with jython, supports many file formats, fully customisable, has sidebar for class and functions. Syntax coloring for python, extensible with jython, supports many file formats, has folding, fully customisable, has sidebar for class and functions, fast for a Java application. Ideas is a feature rich IDE that supports debugging, interpreting and project management. Gedit is the official text editor of the GNOME desktop environment, with Python syntax highlighting. Supports lots of languages, including Python; doesn't seem programmable.

A small and lightweight GTK+ IDE that supports lots of languages, including Python.

Extensible in Python using pymacs.

Customizable Python mode, syntax coloring, function tagging.

Extensible editor written in Python, Python/C/Nim code tree browser, 3-window editing, text diff, multi-language support, Python REPL, manipulate editor text with Python code. Extensible with plugins written in python. It supports python syntax highlighting, auto-indent, auto-completion, classbrowser, and can run scripts from inside the editor. an open extensible IDE for anything and nothing in particular." Support for Python can be obtained via the PyDEV plugin.

Built-in Python syntax highlighting, Python class browsing, Python-compatible regular expressions, code folding, and extensive options for running external tools such as Python scripts.

A general purpose developer's text editor written in Python/wxPython. Simple, Highly Customizable Editor/Environment. Keeps your recent results, provides session history saving (optionally in HTML), interactive plotting with matplotlib. Interactive shell with history box and code box, auto-completion of attributes and file names, auto-display of function arguments and documentation. Powerful macro language.

DRAKON diagram editor with code generation in Python. eric6 requires Python 3 (and, if desired, PyQt5), and supports CxFreeze and PyInstaller, Django and Pyramid, PyLint and Vulture.

BRIEF-compatible, supports Python syntax, in-buffer Python interpreter, supports lots of languages. Integrated version control interface for Git, Subversion and Mercurial through core plugins. Supports projects, debugging, auto-complete, syntax coloring, etc. Includes PyCrust shell.

Complete IDE, very well integrated with PyQT development, but usable for any kind of project. Extensible through a Python API.

Extensible in Python; part of PythonCard.

Cream is a free and easy-to-use configuration of the powerful and famous Vim text editor for both Microsoft Windows and GNU/Linux.

CSS editor with syntax highlighting for Python, and embedded Python interpreter. Python language support for Atom-IDE, powered by the Python language server.

Class browser does not currently work for .py files, but it's still a nice IDE to use for python projects. Extensible in Tcl, Tk. Can interact with python.

Text

Writing Lua scripts with meta

Sheridan Rawlins, Architect, Yahoo

Summary

In any file ending in .lua with the executable bit set (chmod a+x), putting a "shebang" line like the following lets you run it, and even pass arguments to the script that won't be swallowed by meta:

hello-world.lua

#!/usr/bin/env meta
print("hello world")

Screwdriver's meta tool is provided to every job, regardless of which image you choose.

This means that you can write Screwdriver commands or helper scripts as Lua programs.

It was inspired by (but unrelated to) etcd's bolt, as meta is a key-value store of sorts, and its boltcli which also provides a lua runner that interfaces with bolt.

Example script or sd-cmd

run.lua

#!/usr/bin/env meta
meta.set("a-plain-string-key", "somevalue")
meta.set("a-key-for-json-value", { name = "thename", num = 123, array = { "foo", "bar", "baz" } })

What is included?

A Lua 5.1 interpreter written in go (gopher-lua)

meta CLI commands are exposed as methods on the meta object

meta get

local foo_value = meta.get('foo')

meta set

-- plain string
meta.set('key', 'value')
-- json number
meta.set('key', 123)
-- json array
meta.set('key', { 'foo', 'bar', 'baz' })
-- json map
meta.set('key', { foo = 'bar', bar = 'baz' })

meta dump

local entire_meta_tree = meta.dump()

Libraries (aka "modules") included in gopher-lua-libs - while there are many to choose from here, some highlights include:

argparse - when writing scripts, this is a nice CLI parser inspired from the python one.

Encoding modules: json, yaml, and base64 allow you to decode or encode values as needed.

String helper modules: strings, and shellescape

http client - helpful if you want to use the Screwdriver REST API possibly using os.getenv with the environment vars provided by screwdriver - SD_API_URL, SD_TOKEN, SD_BUILD_ID can be very useful.

plugin - is an advanced technique for parallelism by firing up several "workers" or "threads" as "goroutines" under the hood and communicating via go channels. More than likely overkill for normal use-cases, but it may come in handy, such as fetching all artifacts from another job by reading its manifest.txt and fetching in parallel.
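Since the Lua argparse module is described as inspired by the Python one, a short Python sketch may help readers place the API. It mirrors the single positional key argument used by the scripts in this post. The function name build_parser and the prog value are chosen here only for illustration.

```python
import argparse


def build_parser():
    """Build a parser with one positional argument, similar in spirit to the Lua scripts."""
    parser = argparse.ArgumentParser(
        prog="increment-key",
        description="increment the value of a key",
    )
    parser.add_argument("key", help="The key to increment")
    return parser


if __name__ == "__main__":
    # Parse a fixed argument list so the demo does not depend on the real command line.
    args = build_parser().parse_args(["foo"])
    print(args.key)
```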

Why is this interesting/useful?

meta is atomic

When invoked, meta obtains an advisory lock via flock.

However, if you wanted to update a value from the shell, you might perform two commands and lose the atomicity:

# Note, to treat the value as an integer rather than string, use -j to indicate json
declare -i foo_count="$(meta get -j foo_count)"
meta set -j foo_count "$((++foo_count))"

While uncommon, if you write builds that do several things in parallel (perhaps a Makefile run with make -j $(nproc)), making such an update in parallel could hit race conditions between the get and set.

Instead, consider this script (or sd-cmd)

increment-key.lua

#!/usr/bin/env meta
local argparse = require 'argparse'
local parser = argparse(arg[0], 'increment the value of a key')
parser:argument('key', 'The key to increment')
local args = parser:parse()
local value = tonumber(meta.get(args.key)) or 0
value = value + 1
meta.set(args.key, value)
print(value)

Which can be run like so, and will be atomic

./increment-key.lua foo
1
./increment-key.lua foo
2
./increment-key.lua foo
3
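For readers more at home in Python, the same idea (take an advisory lock, then read, modify and write while holding it) can be sketched with the standard fcntl module. This is an analogy and not how meta is implemented: the JSON file layout and the function name increment_key are assumptions made for the example, and it only works on Unix-like systems.

```python
import fcntl
import json
import os
import tempfile


def increment_key(path, key):
    """Atomically increment `key` in a JSON file, guarded by an advisory flock."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    with os.fdopen(fd, "r+") as f:
        fcntl.flock(f, fcntl.LOCK_EX)        # blocks until no other holder remains
        try:
            text = f.read()
            data = json.loads(text) if text else {}
            data[key] = int(data.get(key, 0)) + 1
            f.seek(0)
            f.truncate()                     # rewrite the whole file under the lock
            json.dump(data, f)
            f.flush()
            return data[key]
        finally:
            fcntl.flock(f, fcntl.LOCK_UN)


if __name__ == "__main__":
    store = os.path.join(tempfile.mkdtemp(), "meta.json")
    for _ in range(3):
        print(increment_key(store, "foo"))
```

Because the get and the set happen inside one locked section, two parallel callers cannot both read the same old value, which is the race the two-command shell version is exposed to.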

meta is provided to every job

The meta tool is made available to all builds, regardless of the image your build chooses - including minimal jobs intended for fanning in several jobs to a single one for further pipeline job-dependency graphs (i.e. screwdrivercd/noop-container)

Screwdriver commands can help share common tasks between jobs within an organization. When commands are written in bash, any callouts they make, such as jq, must either exist on the images or be installed by the sd-cmd. While writing in meta's lua is not completely immune to needing "other things", at least it has proper http and json support for making and interpreting REST calls.

Running "inside" meta can work around system limits

Occasionally, if the data you put into meta gets very large, you may encounter Limits on size of arguments and environment, which comes from UNIX systems when invoking executables.

Imagine, for instance, wanting to put a file value into meta (NOTE: this is not a recommendation to put large things in meta, but, on the occasions where you need to, it can be supported). Say I have a file foobar.txt and want to put it into some-key. This code:

foobar="$(< foobar.txt)"
meta set some-key "$foobar"

May fail to invoke meta at all if the args get too big.

If, instead, the contents are passed over redirection rather than an argument, this limit can be avoided:

load-file.lua

#!/usr/bin/env meta
local argparse = require 'argparse'
local parser = argparse(arg[0], 'load json from a file')
parser:argument('key', 'The key to put the json in')
parser:argument('filename', 'The filename')
local args = parser:parse()
local f, err = io.open(args.filename, 'r')
assert(not err, err)
local value = f:read("*a")
-- Meta set the key to the contents of the file
meta.set(args.key, value)

May be invoked with either the filename or, if the data is in memory with the named stdin device

# Direct from the file
./load-file.lua some-key foobar.txt
# If in memory using "Here String" (https://www.gnu.org/software/bash/manual/bash.html#Here-Strings)
foobar="$(< foobar.txt)"
./load-file.lua some-key /dev/stdin <<<"$foobar"
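The underlying point, that data sent over stdin is not subject to the argument-size limit, can also be sketched in Python. The child process here is simply another Python interpreter that counts what it reads. The helper name send_via_stdin is made up for this example, and the exact limit reported by SC_ARG_MAX varies by system.

```python
import os
import subprocess
import sys


def send_via_stdin(payload):
    """Pipe `payload` to a child process on stdin and return the length the child saw."""
    child = [sys.executable, "-c", "import sys; print(len(sys.stdin.read()))"]
    result = subprocess.run(child, input=payload, capture_output=True, text=True, check=True)
    return int(result.stdout)


if __name__ == "__main__":
    # Total space allowed for arguments plus environment on this system (Unix only).
    print("ARG_MAX:", os.sysconf("SC_ARG_MAX"))
    big = "x" * 3_000_000      # several megabytes: on many systems too large for an argument
    print(send_via_stdin(big))
```

Passing the same string as a command-line argument would typically fail before the child even starts, which is the failure mode described above for meta set.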

Additional examples

Using http module to obtain the parent id

get-parent-build-id.lua

#!/usr/bin/env meta
local http = require 'http'
local json = require 'json'
SD_BUILD_ID = os.getenv('SD_BUILD_ID') or error('SD_BUILD_ID environment variable is required')
SD_TOKEN = os.getenv('SD_TOKEN') or error('SD_TOKEN environment variable is required')
SD_API_URL = os.getenv('SD_API_URL') or error('SD_API_URL environment variable is required')
local client = http.client({ headers = { Authorization = "Bearer " .. SD_TOKEN } })
local url = string.format("%sbuilds/%d", SD_API_URL, SD_BUILD_ID)
print(string.format("fetching buildInfo from %s", url))
local response, err = client:do_request(http.request("GET", url))
assert(not err, err)
assert(response.code == 200, "error code not ok " .. response.code)
local buildInfo = json.decode(response.body)
print(tonumber(buildInfo.parentBuildId) or 0)

Invocation examples:

# From a job that is triggered from another job
declare -i parent_build_id="$(./get-parent-build-id.lua)"
echo "$parent_build_id"
48242862
# From a job that is not triggered by another job
declare -i parent_build_id="$(./get-parent-build-id.lua)"
echo "$parent_build_id"
0

Larger example to pull down manifests from triggering job in parallel

This advanced script creates 3 argparse "commands" (manifest, copy, and parent-id) to help copying manifest files from parent job (the job that triggers this one).

It demonstrates advanced argparse features, http client, and the plugin module to create a "boss + workers" pattern for parallel fetches:

Multiple workers fetch individual files requested by a work channel

The "boss" (main thread) filters relevant files from the manifest which it sends down the work channel

The "boss" closes the work channel, then waits for all workers to complete tasks (note that a channel will still deliver any elements before a receive() call reports not ok)

This improves throughput considerably when fetching many files - from a worst case of the sum of all download times with one at a time, to a best case of just the maximum download time when all are done in parallel and network bandwidth is sufficient.

manifest.lua

#!/usr/bin/env meta -- Imports argparse = require 'argparse' plugin = require 'plugin' http = require 'http' json = require 'json' log = require 'log' strings = require 'strings' filepath = require 'filepath' goos = require 'goos' -- Parse the request parser = argparse(arg[0], 'Artifact operations such as fetching manifest or artifacts from another build') parser:option('-l --loglevel', 'Set the loglevel', 'info') parser:option('-b --build-id', 'Build ID') manifestCommand = parser:command('manifest', 'fetch the manifest') manifestCommand:option('-k --key', 'The key to set information in') copyCommand = parser:command('copy', 'Copy from and to') copyCommand:option('-p --parallelism', 'Parallelism when copying multiple artifacts', 4) copyCommand:flag('-d --dir') copyCommand:argument('source', 'Source file') copyCommand:argument('dest', 'Destination file') parentIdCommand = parser:command("parent-id", "Print the parent-id of this build") args = parser:parse() -- Setup logs is shared with workers when parallelizing fetches function setupLogs(args) -- Setup logs log.debug = log.new('STDERR') log.debug:set_prefix("[DEBUG] ") log.debug:set_flags { date = true } log.info = log.new('STDERR') log.info:set_prefix("[INFO] ") log.info:set_flags { date = true } -- TODO(scr): improve log library to deal with levels if args.loglevel == 'info' then log.debug:set_output('/dev/null') elseif args.loglevel == 'warning' or args.loglevel == 'warning' then log.debug:set_output('/dev/null') log.info:set_output('/dev/null') end end setupLogs(args) -- Globals from env function setupGlobals() SD_API_URL = os.getenv('SD_API_URL') assert(SD_API_URL, 'missing SD_API_URL') SD_TOKEN = os.getenv('SD_TOKEN') assert(SD_TOKEN, 'missing SD_TOKEN') client = http.client({ headers = { Authorization = "Bearer " .. 
SD_TOKEN } })
end
setupGlobals()

-- Functions

-- getBuildInfo gets the build info json object from the buildId
function getBuildInfo(buildId)
  if not buildInfo then
    local url = string.format("%sbuilds/%d", SD_API_URL, buildId)
    log.debug:printf("fetching buildInfo from %s", url)
    local response, err = client:do_request(http.request("GET", url))
    assert(not err, err)
    assert(response.code == 200, "error code not ok " .. response.code)
    buildInfo = json.decode(response.body)
  end
  return buildInfo
end

-- getParentBuildId gets the parent build ID from this build’s info
function getParentBuildId(buildId)
  local parentBuildId = getBuildInfo(buildId).parentBuildId
  assert(parentBuildId, string.format("could not get parendId for %d", buildId))
  return parentBuildId
end

-- getArtifact gets and returns the requested artifact
function getArtifact(buildId, artifact)
  local url = string.format("%sbuilds/%d/artifacts/%s", SD_API_URL, buildId, artifact)
  log.debug:printf("fetching artifact from %s", url)
  local response, err = client:do_request(http.request("GET", url))
  assert(not err, err)
  assert(response.code == 200, string.format("error code not ok %d for url %s", response.code, url))
  return response.body
end

-- getManifestLines returns an iterator for the lines of the manifest and strips off leading ./
function getManifestLines(buildId)
  return coroutine.wrap(function()
    local manifest = getArtifact(buildId, 'manifest.txt')
    local manifest_lines = strings.split(manifest, '\n')
    for _, line in ipairs(manifest_lines) do
      line = strings.trim_prefix(line, './')
      if line ~= '' then
        coroutine.yield(line)
      end
    end
  end)
end

-- fetchArtifact fetches the artifact "source" and writes to a local file "dest"
function fetchArtifact(buildId, source, dest)
  log.info:printf("Copying %s to %s", source, dest)
  local sourceContent = getArtifact(buildId, source)
  local dest_file = io.open(dest, 'w')
  dest_file:write(sourceContent)
  dest_file:close()
end

-- fetchArtifactDirectory fetches all the artifacts matching "source" from the manifest and writes to a folder "dest"
function fetchArtifactDirectory(buildId, source, dest)
  -- Fire up workers to run fetches in parallel
  local work_body = [[
    http = require 'http'
    json = require 'json'
    log = require 'log'
    strings = require 'strings'
    filepath = require 'filepath'
    goos = require 'goos'
    local args, workCh
    setupLogs, setupGlobals, fetchArtifact, getArtifact, args, workCh = unpack(arg)
    setupLogs(args)
    setupGlobals()
    log.debug:printf("Starting work %p", _G)
    local ok, work = workCh:receive()
    while ok do
      log.debug:print(table.concat(work, ' '))
      fetchArtifact(unpack(work))
      ok, work = workCh:receive()
    end
    log.debug:printf("No more work %p", _G)
  ]]
  local workCh = channel.make(tonumber(args.parallelism))
  local workers = {}
  for i = 1, tonumber(args.parallelism) do
    local worker_plugin = plugin.do_string(work_body, setupLogs, setupGlobals, fetchArtifact, getArtifact, args, workCh)
    local err = worker_plugin:run()
    assert(not err, err)
    table.insert(workers, worker_plugin)
  end

  -- Send workers work to do
  log.info:printf("Copying directory %s to %s", source, dest)
  local source_prefix = strings.trim_suffix(source, filepath.separator()) .. filepath.separator()
  for line in getManifestLines(buildId) do
    log.debug:print(line, source_prefix)
    if source == '.' or source == '' or strings.has_prefix(line, source_prefix) then
      local dest_dir = filepath.join(dest, filepath.dir(line))
      goos.mkdir_all(dest_dir)
      workCh:send { buildId, line, filepath.join(dest, line) }
    end
  end

  -- Close the work channel to signal workers to exit
  log.debug:print('Closing workCh')
  err = workCh:close()
  assert(not err, err)

  -- Wait for workers to exit
  log.debug:print('Waiting for workers to finish')
  for _, worker in ipairs(workers) do
    local err = worker:wait()
    assert(not err, err)
  end
  log.info:printf("Done copying directory %s to %s", source, dest)
end

-- Normalize/help the buildId by getting the parent build id as a convenience
if not args.build_id then
  SD_BUILD_ID = os.getenv('SD_BUILD_ID')
  assert(SD_BUILD_ID, 'missing SD_BUILD_ID')
  args.build_id = getParentBuildId(SD_BUILD_ID)
end

-- Handle the command
if args.manifest then
  local value = {}
  for line in getManifestLines(args.build_id) do
    table.insert(value, line)
    if not args.key then
      print(line)
    end
  end
  if args.key then
    meta.set(args.key, value)
  end
elseif args.copy then
  if args.dir then
    fetchArtifactDirectory(args.build_id, args.source, args.dest)
  else
    fetchArtifact(args.build_id, args.source, args.dest)
  end
elseif args['parent-id'] then
  print(getParentBuildId(args.build_id))
end
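For readers who do not use meta's Lua runtime, the directory copy above boils down to two steps: filter the manifest by a path prefix, then hand each matching path to a fixed pool of workers. The following is a rough Python sketch of that pattern, not a port of the script. The names `filter_manifest` and `fetch_all` are made up for illustration, and `fetch_one` stands in for whatever actually downloads one artifact.

```python
import queue
import threading


def filter_manifest(manifest_text, source):
    """Return manifest entries under `source`, with a leading './' removed."""
    prefix = source.rstrip("/") + "/"
    selected = []
    for line in manifest_text.split("\n"):
        if line.startswith("./"):
            line = line[2:]
        if not line:
            continue  # skip blank lines, as the Lua iterator does
        if source in (".", "") or line.startswith(prefix):
            selected.append(line)
    return selected


def fetch_all(paths, fetch_one, parallelism=4):
    """Call fetch_one(path) for every path using a fixed pool of threads."""
    work = queue.Queue()
    errors = []

    def worker():
        while True:
            path = work.get()
            if path is None:  # sentinel: no more work for this worker
                return
            try:
                fetch_one(path)
            except Exception as exc:  # record the failure, keep the worker alive
                errors.append((path, exc))

    threads = [threading.Thread(target=worker) for _ in range(parallelism)]
    for thread in threads:
        thread.start()
    for path in paths:
        work.put(path)
    for _ in threads:
        work.put(None)  # one sentinel per worker plays the role of closing the channel
    for thread in threads:
        thread.join()
    return errors
```

The sentinel values do the job that `workCh:close()` does in the Lua version: each worker exits once it sees that no more work is coming.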

Testing

To test this, the bats testing system was used to invoke manifest.lua with various arguments, and the return code, output, and side effects were checked.

For unit tests, an HTTP server was started to serve static files from a testdata directory, and manifest.lua was invoked from within this test.lua file. That way the HTTP server and manifest.lua run in two separate threads (via the plugin module) but in the same process, which avoids being blocked by meta's locking mechanism, as would happen if they ran in two processes.

test.lua

#!/usr/bin/env meta
-- Because Meta locks, run the webserver as a plugin in the same process, then invoke the actual file under test.
local plugin = require 'plugin'
local filepath = require 'filepath'
local argparse = require 'argparse'
local http = require 'http'

local parser = argparse(arg[0], 'Test runner that serves http test server')
parser:option('-d --dir', 'Dir to serve', filepath.join(filepath.dir(arg[0]), "testdata"))
parser:option('-a --addr', 'Address to serve on', "localhost:2113")
parser:argument('rest', "Rest of the args")
  :args '*'
local args = parser:parse()

-- Run an http server on the requested (or default) addr and dir
local http_plugin = plugin.do_string([[
  local http = require 'http'
  local args = unpack(arg)
  http.serve_static(args.dir, args.addr)
]], args)
http_plugin:run()

-- Wait for http server to be running and serve status.html
local wait_plugin = plugin.do_string([[
  local http = require 'http'
  local args = unpack(arg)
  local client = http.client()
  local url = string.format("http://%s/status.html", args.addr)
  repeat
    local response, err = client:do_request(http.request("GET", url))
  until not err and response.code == 200
]], args)
wait_plugin:run()

-- Wait for it to finish up to 2 seconds
local err = wait_plugin:wait(2)
assert(not err, err)

-- With the http server running, run the actual file under test
-- Run with a plugin so that none of the plugins used by _this file_ are loaded before invoking dofile
local run_plugin = plugin.do_string([[
  arg[0] = table.remove(arg, 1)
  dofile(arg[0])
]], unpack(args.rest))
run_plugin:run()

-- Wait for the run to complete and report errors, if any
local err = run_plugin:wait()
assert(not err, err)

-- Stop the http server for good measure
http_plugin:stop()
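The same test pattern (serve a fixture directory from a background thread, poll a known file until the server answers, then run the code under test in the same process) can be sketched with Python's standard library. This is only an analogue of test.lua, not the author's code. The helper names `start_static_server` and `wait_until_ready` are invented for this example, and the 2-second default mirrors the wait used above.

```python
import functools
import http.server
import threading
import time
import urllib.request


def start_static_server(directory, host="127.0.0.1", port=0):
    """Serve `directory` over HTTP from a daemon thread; port 0 picks a free port."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def wait_until_ready(url, timeout=2.0):
    """Poll `url` until it answers with 200 or `timeout` seconds have passed."""
    # An empty ProxyHandler keeps a configured proxy from intercepting local requests.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with opener.open(url, timeout=0.5) as response:
                if response.status == 200:
                    return True
        except OSError:
            pass  # server not accepting connections yet, or file not found
        time.sleep(0.05)
    return False
```

A test would call `start_static_server("testdata")`, read the chosen port from `server.server_address[1]`, wait on `status.html`, run the code under test, and finish with `server.shutdown()`.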

And the bats test looked something like:

#!/usr/bin/env bats

load test-helpers

function setup() {
    mk_temp_meta_dir
    export SD_META_DIR="$TEMP_SD_META_DIR"
    export SD_API_URL="http://localhost:2113/"
    export SD_TOKEN=SD_TOKEN
    export SD_BUILD_ID=12345
    export SERVER_PID="$!"
}

function teardown() {
    rm_temp_meta_dir
}

@test "artifacts with no command is an error" {
    run "${BATS_TEST_DIRNAME}/run.lua"
    echo "$status"
    echo "$output"
    ((status))
}

@test "manifest gets a few files" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" manifest
    echo "$status"
    echo "$output"
    ((!status))
    grep foo.txt <<<"$output"
    grep bar.txt <<<"$output"
    grep manifest.txt <<<"$output"
}

@test "copy foo.txt myfoo.txt writes it properly" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" copy foo.txt "${TEMP_SD_META_DIR}/myfoo.txt"
    echo "$status"
    echo "$output"
    ((!status))
    [[ $(<"${TEMP_SD_META_DIR}/myfoo.txt") == "foo" ]]
}

@test "copy bar.txt mybar.txt writes it properly" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" copy bar.txt "${TEMP_SD_META_DIR}/mybar.txt"
    echo "$status"
    echo "$output"
    ((!status))
    [[ $(<"${TEMP_SD_META_DIR}/mybar.txt") == "bar" ]]
}

@test "copy -b 101010 -d somedir mydir writes it properly" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" -l debug copy -b 101010 -d somedir "${TEMP_SD_META_DIR}/mydir"
    echo "$status"
    echo "$output"
    ((!status))
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir"
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep one.txt
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep two.txt
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/one.txt") == 1 ))
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/two.txt") == 2 ))
}

@test "copy -b 101010 -d . mydir gets all artifacts" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" -l debug copy -b 101010 -d . "${TEMP_SD_META_DIR}/mydir"
    echo "$status"
    echo "$output"
    ((!status))
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir"
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep one.txt
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep two.txt
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/one.txt") == 1 ))
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/two.txt") == 2 ))
    [[ $(<"${TEMP_SD_META_DIR}/mydir/abc.txt") == abc ]]
    [[ $(<"${TEMP_SD_META_DIR}/mydir/def.txt") == def ]]
    (($(find "${TEMP_SD_META_DIR}/mydir" -type f | wc -l) == 5))
}

@test "copy -b 101010 -d . -p 1 mydir gets all artifacts" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" -l debug copy -b 101010 -d . -p 1 "${TEMP_SD_META_DIR}/mydir"
    echo "$status"
    echo "$output"
    ((!status))
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir"
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep one.txt
    ls -1 "${TEMP_SD_META_DIR}/mydir/somedir" | grep two.txt
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/one.txt") == 1 ))
    (( $(<"${TEMP_SD_META_DIR}/mydir/somedir/two.txt") == 2 ))
    [[ $(<"${TEMP_SD_META_DIR}/mydir/abc.txt") == abc ]]
    [[ $(<"${TEMP_SD_META_DIR}/mydir/def.txt") == def ]]
    (($(find "${TEMP_SD_META_DIR}/mydir" -type f | wc -l) == 5))
}

@test "parent-id 12345 gets 99999" {
    run "${BATS_TEST_DIRNAME}/test.lua" -- "${BATS_TEST_DIRNAME}/run.lua" parent-id -b 12345
    echo "$status"
    echo "$output"
    ((!status))
    (( $output == 99999 ))
}


Savagely Reviewing Python Hosting: Worth It?

Discover the world of Python! When evaluating Python hosting, make sure you consider a few essential points before making a decision.

Python is an object-oriented, interpreted, high-level programming language. Reviewing Python hosting, however, means screening and evaluating hosts carefully before choosing one.

You will need shell access as well as an Apache installation that supports mod_wsgi and CGI. You should therefore be sure about the framework you intend to use. You can choose between Django and Flask, since both support it. When reviewing Python hosting, make sure you pick the right version of Python.
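To make the mod_wsgi requirement concrete, here is a minimal sketch of the kind of WSGI application such a host runs. It uses no framework and is purely illustrative. The response text is made up, and the callable is named `application` because that is the name mod_wsgi looks for by default.

```python
def application(environ, start_response):
    """Smallest useful WSGI app: answer every request with plain text."""
    body = b"Hello from Python\n"
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    # A WSGI application returns an iterable of byte strings.
    return [body]
```

Django and Flask both expose a callable with this same interface, which is why a host that supports mod_wsgi can run either one. For a quick local check without Apache, the standard library's `wsgiref.simple_server.make_server` can serve the same callable.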

Navicosoft is a trusted provider of Django, Flask, and script hosting with cPanel. We aim to establish your online presence with rich expertise! We also make a point of using powerful servers so that your data stays safe.

We promise to deliver very fast Python web hosting using our powerful enterprise SSD servers. In terms of both power and speed, we make a point of improving your site's performance.

How Can You Select the Best Python Hosting?

You can sort through several web hosting providers to see whether they live up to your expectations. Also make sure their Python interpreters are up to date and available with popular frameworks.

While evaluating Python hosting, also make sure there are no unreasonable user restrictions that you are unaware of. Finally, evaluate the hosts for their performance, security, speed, and value. Then apply everything you have learned and read the reviews to choose the best Python hosting.

Finding the Right Python Web Hosting

Python is a major language, particularly for web applications. However, not every hosting provider offers it, and not every host supports it. Here are a few aspects to consider when choosing the right Python web hosting for you.

What is Python?

Python is a leading object-oriented programming language that was conceived in the late 1980s. Its current version is very popular among developers.

Mixed Programming Paradigms

Python is ideal for users who prefer an object-oriented approach combined with structured programming. Beginners find it easy to understand because of its simple style and syntax. The code is deliberately written to be very clear, so that even inexperienced programmers can review and change it.

Where many languages rely on punctuation, Python typically uses English words, which makes source files visually less cluttered.

It is also clearly geared towards code that is easy to check. So when reviewing Python hosting, definitely consider this aspect.

Extending Python

Python's functionality can be extended with add-on modules written in C or C++. You can therefore also use it as a command language alongside C. You can also run Python code inside a Java application.

Python is Easy to Learn

Compared with languages that seem impenetrable to beginners, Python is easy to learn, and its cross-platform compatibility is one of its main benefits. You can even write it in the Terminal application on macOS. You can also use it for security applications or for web applications running on a Linux server.

Google, YouTube, NASA, and even CERN use Python. CERN uses Python to decode data from ATLAS, one of the LHC detectors. The CERN staff hold Python meetings, and they use Python extensively in their computing and physics labs.

Python Versions and Releases

If you are getting to know the programming language, exploring the different Python versions is a good idea. It keeps you up to date and gives you an idea of what to expect. Python also supports many operating systems, including macOS, iOS, Windows, AIX, Linux/UNIX, Solaris, and VMS.

Things to Consider for the Best Python Hosting:

Some of the considerations to keep in mind before choosing Python web hosting are as follows:

Make sure that the package you are considering lists Python support in its specs.

Check the Python interpreter version before choosing, since a web host may be reluctant to update an old interpreter because of low demand.

Determine which modules are installed. In particular, read the policy regarding new modules.

Check whether you can install Python packages yourself and which directories hold the Python modules.

Ensure that your host allows you to run persistent processes.

Make sure you have Shell (SSH) access.

Check the available options for databases. In general, Python scripts work well with a MySQL database. If that is not an option, however, you will need to look a little further.
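Several of the checks above (interpreter version, installed modules, module directories) can be run over SSH once you have an account or a trial. The sketch below is one possible way to do that with the standard library. The function name `describe_environment`, the default minimum version, and the module names in the usage note are assumptions chosen for illustration.

```python
import importlib.util
import platform
import site
import sys


def describe_environment(required_modules, minimum_version=(3, 8)):
    """Summarize the interpreter a host provides and which modules are missing.

    `required_modules` should contain top-level module names only.
    """
    missing = [
        name
        for name in required_modules
        if importlib.util.find_spec(name) is None  # None means not importable
    ]
    return {
        "python_version": platform.python_version(),
        "version_ok": sys.version_info >= minimum_version,
        "platform": platform.system(),
        "module_search_path": list(sys.path),
        "user_site": site.getusersitepackages(),
        "missing_modules": missing,
    }
```

Running `describe_environment(["django", "flask"])` on the host shows whether those frameworks are already importable and where user-installed packages would go. It does not tell you whether the host's policy lets you install new ones, so that part still needs to be read in the plan details.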

Navicosoft Provides the Best Python Hosting!

Navicosoft has been serving its customers since 2008. We are a trusted online web hosting company that moves forward by offering services at affordable rates. We value our customers by considering your needs and protecting your identity.

We also offer the most suitable solutions for businesses that require offshore hosting, so that you can carry on smoothly with essential privacy settings along with protection against site shutdown.

Navicosoft provides the best Python hosting with a pre-installed Python framework that is fully optimized for performance. We also offer a range of applications to suit all Python developers, including module installation and performance monitoring.

We make sure that creating Python applications becomes easier for you. You can conveniently install a Python application with a few clicks. We also try to support your applications at every step and continue to do so whenever you need us.

Our plans include periodic backups so that your data never gets lost. We offer free backups so that even if you think you have lost your data, you can restore your account, databases, and data, including the emails and statistics for your account.

Navicosoft values the importance of uptime in digital business. We therefore work to exceed your expectations, especially when it comes to uptime. We are among the few hosting companies with a leading record of delivering maximum uptime. Currently, we provide 99.99% uptime.

#pythonwebhosting #bestpythonhosting


Docker Compose Install For Mac

Estimated reading time: 15 minutes

Docker Compose Install For Mac Installer

Docker Compose Install For Mac High Sierra

Docker Compose Install For Macos

Running Docker On Mac