#spring boot async api

Full Stack Web Development Coaching at Gritty Tech

Master Full Stack Development with Gritty Tech

If you're looking to build a high-demand career in web development, Gritty Tech's Full Stack Web Development Coaching is the ultimate solution. Designed for beginners, intermediates, and even experienced coders who want to upskill, our program offers intensive, hands-on training. You will master both front-end and back-end development, preparing you to create complete web applications from scratch.

At Gritty Tech, we believe in practical learning. That means you'll not only absorb theory but also work on real-world projects, collaborate in teams, and build a strong portfolio that impresses employers.

Why Choose Gritty Tech for Full Stack Coaching?

Gritty Tech stands out because of our commitment to excellence, personalized mentorship, and career-oriented approach. Here's why you should choose us:

Expert Instructors: Our trainers are seasoned professionals from leading tech companies.

Project-Based Learning: You build real applications, not just toy examples.

Career Support: Resume workshops, interview preparation, and networking events.

Flexible Learning: Evening, weekend, and self-paced options are available.

Community: Join a vibrant community of developers and alumni.

What is Full Stack Web Development?

Full Stack Web Development refers to the creation of both the front-end (client-side) and back-end (server-side) portions of a web application. A full stack developer handles everything from designing user interfaces to managing servers and databases.

Front-End Development

Front-end development focuses on what users see and interact with. It involves technologies like:

HTML5 for structuring web content.

CSS3 for designing responsive and visually appealing layouts.

JavaScript for adding interactivity.

Frameworks like React, Angular, and Vue.js for building scalable web applications.

Back-End Development

Back-end development deals with the server-side, databases, and application logic. Key technologies include:

Node.js, Python (Django/Flask), Ruby on Rails, or Java (Spring Boot) for server-side programming.

Databases like MySQL, MongoDB, and PostgreSQL to store and retrieve data.

RESTful APIs and GraphQL for communication between client and server.

Full Stack Tools and DevOps

Version Control: Git and GitHub.

Deployment: AWS, Heroku, Netlify.

Containers: Docker.

CI/CD Pipelines: Jenkins, GitLab CI.

Gritty Tech Full Stack Coaching Curriculum

Our curriculum is carefully crafted to cover everything a full stack developer needs to know:

1. Introduction to Web Development

Understanding the internet and how web applications work.

Setting up your development environment.

Introduction to Git and GitHub.

2. Front-End Development Mastery

HTML & Semantic HTML: Best practices for accessibility.

CSS & Responsive Design: Media queries, Flexbox, Grid.

JavaScript Fundamentals: Variables, functions, objects, and DOM manipulation.

Modern JavaScript (ES6+): Arrow functions, promises, async/await.

Front-End Frameworks: Deep dive into React.js.

3. Back-End Development Essentials

Node.js & Express.js: Setting up a server, building APIs.

Database Management: CRUD operations with MongoDB.

Authentication & Authorization: JWT, OAuth.

API Integration: Consuming third-party APIs.

4. Advanced Topics

Microservices Architecture: Basics of building distributed systems.

GraphQL: Modern alternative to REST APIs.

Web Security: Preventing common vulnerabilities (XSS, CSRF, SQL Injection).

Performance Optimization: Caching, lazy loading, code splitting.

5. DevOps and Deployment

CI/CD Fundamentals: Automating deployments.

Cloud Services: Hosting apps on AWS, DigitalOcean.

Monitoring & Maintenance: Tools like New Relic and Datadog.

6. Soft Skills and Career Coaching

Resume writing for developers.

Building an impressive LinkedIn profile.

Preparing for technical interviews.

Negotiating job offers.

Real-World Projects You'll Build

At Gritty Tech, you won't just learn; you'll build. Here are some example projects:

E-commerce Website: A full stack shopping platform.

Social Media App: Create a mini version of Instagram.

Task Manager API: Backend API to handle user tasks with authentication.

Real-Time Chat Application: WebSocket-based chat system.

Each project is reviewed by mentors, and feedback is provided to ensure continuous improvement.

Personalized Mentorship and Live Sessions

Our coaching includes one-on-one mentorship to guide you through challenges. Weekly live sessions provide deeper dives into complex topics and allow real-time Q&A. Mentors assist with debugging, architectural decisions, and performance improvements.

Tools and Technologies You Will Master

Languages: HTML, CSS, JavaScript, Python, SQL.

Front-End Libraries/Frameworks: React, Bootstrap, TailwindCSS.

Back-End Technologies: Node.js, Express.js, MongoDB.

Version Control: Git, GitHub.

Deployment: Heroku, AWS, Vercel.

Other Tools: Postman, Figma (for UI design basics).

Student Success Stories

Thousands of students have successfully transitioned into tech roles through Gritty Tech. Some notable success stories:

Amit, from a sales job to Front-End Developer at a tech startup within 6 months.

Priya, a stay-at-home mom, built a portfolio and landed a full stack developer role.

Rahul, a mechanical engineer, became a software engineer at a Fortune 500 company.

Who Should Join This Coaching Program?

This coaching is ideal for:

Beginners with no coding experience.

Working professionals looking to switch careers.

Students wanting to learn industry-relevant skills.

Entrepreneurs building their tech startups.

If you are motivated to learn, dedicated to practice, and open to feedback, Gritty Tech is the right place for you.

Career Support at Gritty Tech

At Gritty Tech, our relationship doesn’t end when you finish the course. We help you land your first job through:

Mock interviews.

Technical assessments.

Building an impressive project portfolio.

Alumni referrals and job placement assistance.

Certifications

After completing the program, you will receive a Full Stack Web Developer Certification from Gritty Tech. This certification is highly respected in the tech industry and will boost your resume significantly.

Flexible Payment Plans

Gritty Tech offers affordable payment plans to make education accessible to everyone. Options include:

Monthly Installments.

Pay After Placement (Income Share Agreement).

Early Bird Discounts.

How to Enroll

Enrolling is easy! Visit the Gritty Tech website and sign up for the Full Stack Web Development Coaching program. Our admissions team will guide you through the next steps.

Frequently Asked Questions (FAQ)

How long does the Full Stack Web Development Coaching at Gritty Tech take?

The program typically spans 6 to 9 months depending on your chosen pace (full-time or part-time).

Do I need any prerequisites?

No prior coding experience is required. We start from the basics and gradually move to advanced topics.

What job roles can I apply for after completing the program?

You can apply for roles like:

Front-End Developer

Back-End Developer

Full Stack Developer

Web Application Developer

Software Engineer

Is there any placement guarantee?

While we don't offer "guaranteed placement," our career services team works tirelessly to help you land a job by providing job referrals, mock interviews, and resume building sessions.

Can I learn at my own pace?

Absolutely. We offer both live cohort-based batches and self-paced learning tracks.

Ready to kickstart your tech career? Join Gritty Tech's Full Stack Web Development Coaching today and transform your future. Visit grittytech.com to learn more and enroll!

Example of @Async in Spring Boot for Beginners

The @Async annotation in Spring Boot allows you to run tasks asynchronously (in the background) without blocking the calling thread, such as the thread handling an HTTP request. This is useful for time-consuming tasks like sending emails, processing large files, or making API calls.

✅ Step-by-Step Guide

We will create a Spring Boot app where an API endpoint triggers an async task that runs in the background.

1️⃣ Add Required Dependencies

In…

A Guide to Creating APIs for Web Applications

APIs (Application Programming Interfaces) are the backbone of modern web applications, enabling communication between frontend and backend systems, third-party services, and databases. In this guide, we’ll explore how to create APIs, best practices, and tools to use.

1. Understanding APIs in Web Applications

An API allows different software applications to communicate using defined rules. Web APIs specifically enable interaction between a client (frontend) and a server (backend) using protocols like REST, GraphQL, or gRPC.

Types of APIs

RESTful APIs — Uses HTTP methods (GET, POST, PUT, DELETE) to perform operations on resources.

GraphQL APIs — Allows clients to request only the data they need, reducing over-fetching.

gRPC APIs — Uses protocol buffers for high-performance communication, suitable for microservices.

2. Setting Up a REST API: Step-by-Step

Step 1: Choose a Framework

Node.js (Express.js) — Lightweight and popular for JavaScript applications.

Python (Flask/Django) — Flask is simple, while Django provides built-in features.

Java (Spring Boot) — Enterprise-level framework for Java-based APIs.

Step 2: Create a Basic API

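Here’s an example of a simple REST API using Express.js (Node.js):

```javascript
const express = require('express');

const app = express();
app.use(express.json()); // parse JSON request bodies into req.body

// In-memory data store (reset whenever the server restarts)
let users = [{ id: 1, name: "John Doe" }];

// List all users
app.get('/users', (req, res) => {
  res.json(users);
});

// Create a user; reject requests that have no name
app.post('/users', (req, res) => {
  if (!req.body?.name) {
    return res.status(400).json({ error: 'name is required' });
  }
  const user = { id: users.length + 1, name: req.body.name };
  users.push(user);
  res.status(201).json(user);
});

app.listen(3000, () => console.log('API running on port 3000'));
```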

Step 3: Connect to a Database

APIs often need a database to store and retrieve data. Popular databases include:

SQL Databases (PostgreSQL, MySQL) — Structured data storage.

NoSQL Databases (MongoDB, Firebase) — Unstructured or flexible data storage.

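Example of integrating MongoDB using Mongoose in Node.js:

```javascript
const mongoose = require('mongoose');

// Connect once at startup. Mongoose 6+ no longer needs the old
// useNewUrlParser / useUnifiedTopology options.
mongoose.connect('mongodb://localhost:27017/mydb')
  .catch((err) => console.error('MongoDB connection error:', err));

const UserSchema = new mongoose.Schema({ name: { type: String, required: true } });
const User = mongoose.model('User', UserSchema);

// Reuses the Express `app` from the previous example
app.post('/users', async (req, res) => {
  try {
    const user = new User({ name: req.body.name });
    await user.save();
    res.status(201).json(user);
  } catch (err) {
    // Schema validation failures are client errors; anything else is a server error
    res.status(err.name === 'ValidationError' ? 400 : 500).json({ error: err.message });
  }
});
```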

3. Best Practices for API Development

🔹 Use Proper HTTP Methods:

GET – Retrieve data

POST – Create new data

PUT/PATCH – Update existing data

DELETE – Remove data
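The method list above maps directly onto CRUD operations. Here is a minimal, dependency-free sketch of that mapping. It assumes an in-memory Map instead of a database and uses a hypothetical handle(method, id, body) helper instead of Express routing. The sketch shows how each method might translate into an operation and the status code a REST API would typically return:

```javascript
// Hypothetical in-memory store keyed by numeric id.
const users = new Map([[1, { id: 1, name: "John Doe" }]]);
let nextId = 2;

const notFound = { status: 404, body: { error: "Not found" } };

// Translate an HTTP method (plus optional id and body) into a CRUD operation.
function handle(method, id, body = {}) {
  switch (method) {
    case "GET": // Retrieve one resource, or the whole collection when no id is given
      if (id === undefined) return { status: 200, body: [...users.values()] };
      return users.has(id) ? { status: 200, body: users.get(id) } : notFound;
    case "POST": { // Create: the server assigns the new id
      const user = { id: nextId++, name: body.name };
      users.set(user.id, user);
      return { status: 201, body: user };
    }
    case "PUT": // Replace the whole resource
    case "PATCH": { // Update only the supplied fields
      if (!users.has(id)) return notFound;
      const updated = method === "PUT"
        ? { ...body, id }
        : { ...users.get(id), ...body, id };
      users.set(id, updated);
      return { status: 200, body: updated };
    }
    case "DELETE": // Remove: 204 means success with no response body
      return users.delete(id) ? { status: 204, body: null } : notFound;
    default:
      return { status: 405, body: { error: "Method not allowed" } };
  }
}
```

For example, handle("POST", undefined, { name: "Ada" }) creates a user and returns status 201. Calling handle("GET", 99) for an unknown id returns 404.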

🔹 Implement Authentication & Authorization

Use JWT (JSON Web Token) or OAuth for securing APIs.

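Example of JWT authentication in Express.js:

```javascript
const jwt = require('jsonwebtoken');

// Read the signing secret from the environment instead of hard-coding it;
// jwt.sign throws if the secret is missing.
const token = jwt.sign({ userId: 1 }, process.env.JWT_SECRET, { expiresIn: '1h' });
```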
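To see what a call like jwt.sign produces, here is a sketch of an HS256 token built with only Node's crypto module. HS256 is the jsonwebtoken default algorithm. A token is three base64url segments (header, payload, signature), and the signature is an HMAC-SHA256 over the first two. The names signHS256 and verifyHS256 are hypothetical. The sketch skips checks a real library performs (such as validating the header's alg), so use a maintained library in production.

```javascript
const crypto = require("crypto");

// JWT segments are base64url-encoded JSON without padding.
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString("base64url");

const hmac = (secret, data) => crypto.createHmac("sha256", secret).update(data);

// Sign a payload, adding iat/exp claims (seconds since the epoch).
function signHS256(payload, secret, ttlSeconds, now = Math.floor(Date.now() / 1000)) {
  const header = encode({ alg: "HS256", typ: "JWT" });
  const body = encode({ ...payload, iat: now, exp: now + ttlSeconds });
  const signature = hmac(secret, `${header}.${body}`).digest("base64url");
  return `${header}.${body}.${signature}`;
}

// Check the signature and expiry; return the payload or throw.
function verifyHS256(token, secret, now = Math.floor(Date.now() / 1000)) {
  const [header, body, signature] = token.split(".");
  const expected = hmac(secret, `${header}.${body}`).digest();
  const given = Buffer.from(signature ?? "", "base64url");
  // timingSafeEqual avoids leaking timing information but requires equal lengths.
  if (given.length !== expected.length || !crypto.timingSafeEqual(given, expected)) {
    throw new Error("invalid signature");
  }
  const payload = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
  if (typeof payload.exp !== "number" || payload.exp <= now) {
    throw new Error("token expired");
  }
  return payload;
}
```

Because the payload is only encoded, not encrypted, anyone holding the token can read it. Keep secrets out of the claims.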

🔹 Handle Errors Gracefully

Return appropriate status codes (400 for bad requests, 404 for not found, 500 for server errors).

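Example:

```javascript
// Error-handling middleware must take four arguments so Express recognizes it.
// Use the status attached to the error (e.g. 400, 404) and fall back to 500.
app.use((err, req, res, next) => {
  const status = err.status || 500;
  res.status(status).json({ error: status === 500 ? 'Internal Server Error' : err.message });
});
```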
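Returning the right status code requires errors that carry one. The sketch below uses a hypothetical HttpError class and findUser helper to show how a handler can throw 400 or 404 errors. The errorHandler has the same (err, req, res, next) shape as Express error middleware. In Express 4, a synchronous throw inside a route handler reaches this middleware automatically, but errors in async handlers must be passed to next(err).

```javascript
// Hypothetical error type that carries an HTTP status code.
class HttpError extends Error {
  constructor(status, message) {
    super(message);
    this.name = "HttpError";
    this.status = status;
  }
}

// Validate input, then look up the user; throw 400 or 404 instead of a generic error.
function findUser(users, rawId) {
  const id = Number(rawId);
  if (!Number.isInteger(id) || id < 1) {
    throw new HttpError(400, "id must be a positive integer");
  }
  const user = users.find((u) => u.id === id);
  if (!user) throw new HttpError(404, `user ${id} not found`);
  return user;
}

// Express-style error middleware: known HttpErrors keep their status, others become 500.
function errorHandler(err, req, res, next) {
  const status = err instanceof HttpError ? err.status : 500;
  // Avoid leaking internal details for unexpected errors.
  const message = status === 500 ? "Internal Server Error" : err.message;
  res.status(status).json({ error: message });
}
```

In an app this might be wired as app.get('/users/:id', (req, res) => res.json(findUser(users, req.params.id))) followed by app.use(errorHandler).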

🔹 Use API Documentation Tools

Use Swagger or Postman to document and test APIs.

4. Deploying Your API

Once your API is built, deploy it using:

Cloud Platforms: AWS (Lambda, EC2), Google Cloud, Azure.

Serverless Functions: AWS Lambda, Vercel, Firebase Functions.

Containerization: Deploy APIs using Docker and Kubernetes.

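Example: Deploying with Docker

```dockerfile
# node:14 is end-of-life; a current LTS tag such as node:20 is assumed here
FROM node:20
WORKDIR /app

# Install dependencies first so this layer is cached when only source files change
COPY package*.json ./
RUN npm install

# Copy the rest of the application source
COPY . .

# Document the port the API listens on, then start the server
EXPOSE 3000
CMD ["node", "server.js"]
```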

5. API Testing and Monitoring

Use Postman or Insomnia for testing API requests.

Monitor API Performance with tools like Prometheus, New Relic, or Datadog.
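Monitoring tools like these rely on per-request measurements such as latency and request counts. As an illustration of that idea only (not how Prometheus, New Relic, or Datadog agents are implemented), here is an Express-style middleware that times each request until the response emits 'finish'. It records the result in an in-process Map. The metrics Map and responseTimer names are hypothetical, and req.path is Express-specific (plain Node would use req.url).

```javascript
// Hypothetical in-process metrics: request count and total latency per route.
const metrics = new Map();

// Middleware that starts a high-resolution timer and records the duration
// when the response has been fully sent.
function responseTimer(req, res, next) {
  const start = process.hrtime.bigint();
  res.on("finish", () => {
    const ms = Number(process.hrtime.bigint() - start) / 1e6; // nanoseconds to milliseconds
    const key = `${req.method} ${req.path}`;
    const entry = metrics.get(key) || { count: 0, totalMs: 0 };
    entry.count += 1;
    entry.totalMs += ms;
    metrics.set(key, entry);
  });
  next();
}
```

Register it with app.use(responseTimer) before the routes. In a real deployment these numbers would be exported to the monitoring tool, for example through a Prometheus client library, rather than kept in memory.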

Final Thoughts

Creating APIs for web applications involves careful planning, development, and deployment. Following best practices ensures security, scalability, and efficiency.

WEBSITE: https://www.ficusoft.in/python-training-in-chennai/

Full Stack Developer Roadmap: Skills, Tools, and Best Practices

Creating a Full Stack Developer Roadmap involves mapping out the essential skills, tools, and best practices required to become proficient in both front-end and back-end development. Here's a comprehensive guide to help you understand the various stages in the journey to becoming a Full Stack Developer:

1. Fundamentals of Web Development

Before diving into full-stack development, it's essential to understand the core building blocks of web development:

1.1. HTML/CSS

HTML: The markup language used for creating the structure of web pages.

CSS: Used for styling the visual presentation of web pages (layouts, colors, fonts, etc.).

Best Practices: Write semantic HTML, use CSS preprocessors like Sass, and ensure responsive design with media queries.

1.2. JavaScript

JavaScript (JS): The programming language that adds interactivity to web pages.

Best Practices: Use ES6+ syntax, write clean and maintainable code, and implement asynchronous JavaScript (promises, async/await).
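A short sketch of the async/await practices mentioned above, with a hypothetical delay helper standing in for real I/O such as network calls. Awaiting independent calls one after another adds up their wait times. Starting them together with Promise.all waits roughly as long as the slowest one. A rejected promise can be caught with an ordinary try/catch.

```javascript
// Simulated I/O: resolves with `value` after `ms` milliseconds.
const delay = (ms, value) => new Promise((resolve) => setTimeout(() => resolve(value), ms));

// Sequential: the second call starts only after the first finishes (~200 ms).
async function loadSequential() {
  const user = await delay(100, { id: 1 });
  const posts = await delay(100, ["hello"]);
  return { user, posts };
}

// Parallel: both calls start immediately (~100 ms total).
async function loadParallel() {
  const [user, posts] = await Promise.all([delay(100, { id: 1 }), delay(100, ["hello"])]);
  return { user, posts };
}

// A rejected promise surfaces as an exception at the await.
async function loadSafely() {
  try {
    await Promise.reject(new Error("network down"));
  } catch (err) {
    return `handled: ${err.message}`;
  }
}
```

Use the sequential form only when a later call depends on an earlier result.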

2. Front-End Development

The front end is what users see and interact with. A full-stack developer needs to master front-end technologies.

2.1. Front-End Libraries & Frameworks

React.js: A popular library for building user interfaces, focusing on reusability and performance.

Vue.js: A progressive JavaScript framework for building UIs.

Angular: A platform and framework for building single-page client applications.

Best Practices: Use state management tools (like Redux or Vuex), focus on component-based architecture, and optimize performance.
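State management libraries such as Redux are built around reducers: pure functions that take the current state and an action and return a new state without mutating the old one. Here is a minimal sketch without the library. The todo state shape and action type names are hypothetical.

```javascript
// Hypothetical initial state for a todo list.
const initialState = { todos: [], filter: "all" };

// Pure reducer: (state, action) => newState, never mutating its inputs.
function todosReducer(state = initialState, action) {
  switch (action.type) {
    case "todos/added":
      return {
        ...state,
        todos: [...state.todos, { id: action.id, text: action.text, done: false }],
      };
    case "todos/toggled":
      return {
        ...state,
        todos: state.todos.map((t) => (t.id === action.id ? { ...t, done: !t.done } : t)),
      };
    case "filter/changed":
      return { ...state, filter: action.filter };
    default:
      return state; // unknown actions leave the state untouched
  }
}
```

Because the reducer is pure, a sequence of actions can be replayed with Array.prototype.reduce, for example actions.reduce(todosReducer, undefined). That property also makes reducers easy to unit test.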

2.2. Version Control (Git)

Git: Essential for tracking changes and collaborating with others.

GitHub/GitLab/Bitbucket: Platforms for hosting Git repositories.

Best Practices: Commit often with meaningful messages, use branching strategies (like GitFlow), and create pull requests for review.

3. Back-End Development

The back end handles the data processing, storage, and logic behind the scenes. A full-stack developer must be proficient in server-side development.

3.1. Server-Side Languages

Node.js: JavaScript runtime for server-side development.

Python (Django/Flask): Python frameworks used for building web applications.

Ruby (Rails): A full-stack framework for Ruby developers.

PHP: Widely used for server-side scripting.

Java (Spring Boot): A powerful framework for building web applications in Java.

3.2. Databases

SQL Databases (e.g., PostgreSQL, MySQL): Used for relational data storage.

NoSQL Databases (e.g., MongoDB, Firebase): For non-relational data storage.

Best Practices: Design scalable and efficient databases, normalize data for SQL, use indexing and query optimization.

4. Web Development Tools & Best Practices

4.1. API Development and Integration

REST APIs: Learn how to create and consume RESTful APIs.

GraphQL: A query language for APIs, providing a more flexible and efficient way to retrieve data.

Best Practices: Design APIs with scalability in mind, use proper status codes, and document APIs with tools like Swagger.

4.2. Authentication & Authorization

JWT (JSON Web Tokens): A popular method for handling user authentication in modern web applications.

OAuth: Open standard for access delegation commonly used for logging in with third-party services.

Best Practices: Implement proper encryption, use HTTPS, and ensure token expiration.

4.3. Testing

Unit Testing: Testing individual components of the application.

Integration Testing: Testing how different components of the system work together.

End-to-End (E2E) Testing: Testing the entire application workflow.

Best Practices: Use testing libraries like Jest (JavaScript), Mocha, or PyTest (Python) and ensure high test coverage.

4.4. DevOps & Deployment

Docker: Containerization of applications for consistency across environments.

CI/CD Pipelines: Automating the process of building, testing, and deploying code.

Cloud Platforms: AWS, Azure, Google Cloud, etc., for deploying applications.

Best Practices: Use version-controlled deployment pipelines, monitor applications in production, and practice continuous integration.

4.5. Performance Optimization

Caching: Use caching strategies (e.g., Redis) to reduce server load and speed up response times.

Lazy Loading: Load parts of the application only when needed to reduce initial loading time.

Minification and Bundling: Minimize JavaScript and CSS files to improve load time.
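The caching idea above usually follows the cache-aside pattern: check the cache, and on a miss load from the slow source and store the result with a time-to-live (TTL). Here is an in-process sketch of that pattern. In production, Redis typically fills this role and can expire keys itself (for example, with a TTL set on SET). The TtlCache class and getOrLoad helper are hypothetical names.

```javascript
// Minimal in-memory cache whose entries expire after ttlMs milliseconds.
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock makes expiry easy to test
    this.entries = new Map();
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // evict stale entries lazily on read
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Cache-aside: serve from cache when possible, otherwise load and remember.
async function getOrLoad(cache, key, load) {
  const hit = cache.get(key);
  if (hit !== undefined) return hit;
  const value = await load(key);
  cache.set(key, value);
  return value;
}
```

An in-process cache like this is local to one server instance. A shared store such as Redis keeps cached data consistent when the API runs on several servers.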

5. Soft Skills & Best Practices

Being a full-stack developer also requires strong problem-solving skills and an ability to work collaboratively in teams.

5.1. Communication

Communicate effectively with team members, clients, and stakeholders, especially regarding technical requirements and issues.

5.2. Agile Development

Understand Agile methodologies (Scrum, Kanban) and work in sprints to deliver features incrementally.

5.3. Code Reviews & Collaboration

Regular code reviews help maintain code quality and foster learning within teams.

Practice pair programming and collaborative development.

6. Continuous Learning

The tech industry is always evolving, so it’s essential to stay up to date with new tools, languages, and frameworks.

Follow Blogs & Podcasts: Stay updated with the latest in full-stack development.

Contribute to Open Source: Engage with the developer community by contributing to open-source projects.

Build Side Projects: Continuously apply what you've learned by working on personal projects.

7. Additional Tools & Technologies

Webpack: A module bundler to optimize the workflow.

GraphQL: For efficient data fetching from APIs.

WebSockets: For real-time communication in web applications.

Conclusion

Becoming a proficient full-stack developer requires a combination of technical skills, tools, and a strong understanding of best practices. By mastering both front-end and back-end technologies, keeping up with industry trends, and continuously learning, you'll be equipped to build modern, scalable web applications.

hi

java executor service: how to use

join two collections in mongo

performance

mongorepository vs mongotemplate

angular header xml common keys

no sql spring boot

angular authentication header

how to set key …

synchronous vs asynchronous

resttemplate vs other types

hosted servers

how to produce/consume queue

how many servers were hosted

service goes down

fault tolerance hystrix

producing queue

spring boot microservices async communication

mule connectors

custom policies

resource level policies

convert map to list

call multiple apis

transactional attributes

transaction propagation

spring boot authentication active directory

performance optimization in angular

lazy loading in angular

load dynamic script block

immutable objects

system api vs process api

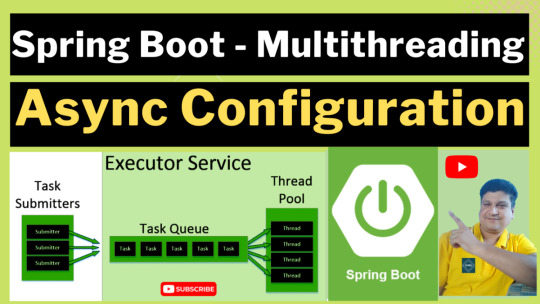

Spring Boot Multithreading using Async in Hindi | Complete Tutorial of Multithreading in Spring Boot in HINDI

Full Video Link: https://youtu.be/SSwhctye9jA

Hi, a new video on Spring Boot multithreading using the @Async annotation has been published on the codeonedigest YouTube channel. It is a complete tutorial guide to multithreading in a Spring Boot project using @Async.

Multithreading in Spring Boot is achieved using the @Async annotation together with a task executor (an executor service). Multithreading is similar to multitasking, except that multiple threads run concurrently inside a single process rather than as separate processes. A thread in Java is a sequence of programmed instructions that is managed by the operating system’s scheduler. Threads can be utilized in the background…

Full-Stack TypeScript/Java Developer at Tesla Government Inc.

Full-Stack TypeScript / Java Developer

Location: Tysons Corner, Virginia Employment Type: Full-time Citizenship: US Citizenship

What do we do?

We do work for the government: we build modern web apps that look good and work well. Our product has a front end built on TypeScript/JavaScript, React/Redux, and SASS. The back end is a REST API built on Spring Boot, Hibernate, and MySQL. We have custom integrations with some really neat applications such as Rocket Chat, ArcGIS, Tableau, and others.

What’s the job?

We are looking for a developer who is passionate about writing good code. Someone who understands the importance of doing things the right way, rather than cutting corners. Someone who believes that writing code is a way of life, not simply a means to an end. The perfect candidate will be able to embrace these ideals while still pumping out code at a good pace.

Who are we?

Why would you want to work for Tesla? (Not the car company, the other one.) It's a really good place to work. We make important software that people actually use. There are people here you can learn from. You will write new code, not just maintain old, buggy code. We work hard, but we don't work to death. We understand inspiration doesn't work on a time table. We're a small company, so we can do things the right way. We give our people 4% matching on 401k contributions, health insurance and pay decent salaries. We'll set you up with a MacBook Pro and two monitors so you can spread out. If you don't have a car, no big deal; we're close to Metro and bike trails, but if you do have a car, we have free parking (and EV chargers). We also have showers if you ride your bike (and a gym to go with those showers). Your daily tasks will vary between front-end and back-end work, so you should be comfortable and happy doing both.

Who are you?

Do you enjoy solving complex problems? Do you dream in code? Do you want to make great software that informs important decisions around the globe? If so, we'd like to meet you.

Now, on to the requirements. We are open to candidates with varying levels (at least 1 year) of experience in front-end and/or back-end development. You should have strong working knowledge in the following areas, frameworks, and tools...

Front-end

TypeScript/JavaScript, ES6/ESNext

Promises, async/await

HTML, CSS/SASS

React/Redux or similar front-end frameworks

RESTful web services

Git, NPM, Node

Jest for unit tests

Back-end

Java 8/11, lambdas, streams

Gradle/Maven

Spring Boot, Hibernate (HQL)

MySQL

JUnit

Extra points for: Linux, MongoDB, Docker, RPM, Jenkins

Where do I sign up?

Sound good? If you're up for it, prepare a cover letter including a brief (one paragraph) description of a development project you have worked on, your role, and the outcome. If you can, include a link to one of your projects on GitHub, or somewhere else on the ‘net.

TO APPLY: All interested applicants should submit relevant materials via the web portal here.

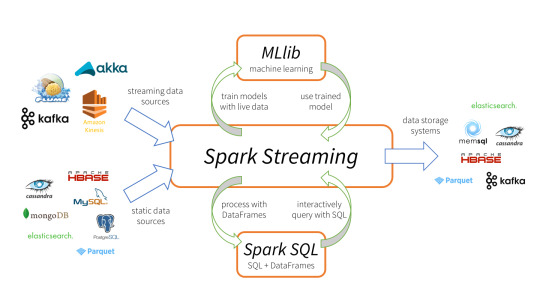

Angular 10, data grids, randomness, and checking some boxes

#494 — June 26, 2020

JavaScript Weekly

Lessons Learned Refactoring Optional Chaining Into a Large Codebase — Lea Verou, creator of Mavo, decided to refactor Mavo to use optional chaining (?.) and here’s some of what she discovered along the way. (As an aside, Lea was the subject of a neat 10 minute programming documentary recently – worth a watch!)

Lea Verou

A Little Bit of Plain JavaScript Can Do A Lot — For anyone more than happy to dive in and write JavaScript without dragging in an entire framework and tooling to manage it, there will be no surprises here, but this is a nice reminder otherwise. Do you always need a 'framework'? No.

Julia Evans

Creating a Voting App with Firestore and Wijmo — Learn how to build a realtime voting app quickly and easily with the Firestore database and Wijmo components. The app uses OAuth for authentication and allows users to submit and vote for ideas.

Wijmo by GrapeCity sponsor

Angular 10 Released — A major release for the popular Google-led framework, though smaller in scope than usual as Angular 9 only came out in February ;-) 10 gains a new date range picker, optional stricter settings, and an upgrade to TypeScript 3.9.

Stephen Fluin (Google)

What's Coming in TypeScript 4? — The first beta of TypeScript 4 is due any moment, with a final release due in August. New bits and pieces include variadic tuple types, labeled tuple elements, short-circuiting assignment operators (e.g. ||=) and more.

Tim Perry

⚡️ Quick bytes:

Chrome 85's DevTools have gained better support for working with styles created by CSSOM APIs (such as by CSS-in-JS frameworks). There's also syntax autocompletion for optional chaining and highlighting for private fields.

There's nothing to see just yet, but NativeScript is coming to Ionic.

The creator of AlpineJS has written about how he's passed $100K/yr in income using GitHub Sponsors.

Did you know there's a Slack theme for VS Code? 😆

▶️ The JS Party podcast crew discusses how their use of JavaScript syntax evolves (or not) over time.

engineeringblogs.xyz is a new aggregator site (by us!) that brings together what 507 (so far) product and software engineering blogs are talking about. Worth a skim.

💻 Jobs

JavaScript Developer at X-Team (Remote) — Join X-Team and work on projects for companies like Riot Games, FOX, Coinbase, and more. Work from anywhere.

X-Team

Find a Job Through Vettery — Vettery specializes in tech roles and is completely free for job seekers. Create a profile to get started.

Vettery

📚 Tutorials, Opinions and Stories

ECMAScript Proposal: Private Static Methods and Accessors in Classes — Dr. Axel takes a look at another forthcoming language feature (in this case being at stage 3 and already supported by Babel and elsewhere).

Dr. Axel Rauschmayer

npm v7 Series: Why Keep package-lock.json? — If npm v7 is going to support yarn.lock files, then why keep package-lock.json around as well? Isaac goes into some depth as to how yarn.lock works and why it doesn’t quite suit every npm use case.

Isaac Z. Schlueter

How to Dynamically Get All CSS Custom Properties on a Page — Some fun DOM and stylesheet wrangling on display here.

Tyler Gaw

Stream Chat API & JavaScript SDK for Custom Chat Apps — Build real-time chat in less time. Rapidly ship in-app messaging with our highly reliable chat infrastructure.

Stream sponsor

Getting Started with Oak for Building HTTP Services in Deno — A comprehensive Oak with Deno tutorial for beginners (which, I guess, we all are when it comes to Deno). Oak is essentially the most popular option for building HTTP-based apps in Deno right now.

Robin Wieruch

Understanding Generators in JavaScript — Generator functions can be paused and resumed and yield multiple values over time and were introduced in ES6/ES2015.

Tania Rascia
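A minimal sketch of the generator behaviour described above (this is not from the linked article, and idGenerator and range are hypothetical names). A function* pauses at each yield, resumes on the next call to next(), and can receive a value through next(value).

```javascript
// Infinite generator: pauses at each yield and can be reset via next(true).
function* idGenerator(prefix) {
  let n = 1;
  while (true) {
    const reset = yield `${prefix}-${n}`; // the value passed to next() arrives here
    n = reset ? 1 : n + 1;
  }
}

const ids = idGenerator("order");
console.log(ids.next().value); // order-1
console.log(ids.next().value); // order-2
console.log(ids.next(true).value); // order-1

// Finite generator: for...of and spread stop when the generator returns.
function* range(start, end) {
  for (let i = start; i < end; i++) yield i;
}
console.log([...range(0, 3)]); // [ 0, 1, 2 ]
```

Because values are produced lazily, the infinite idGenerator is safe as long as callers pull only as many values as they need.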

Build a CRUD App with Vue.js, Spring Boot, and Kotlin — It’s a fact of life that not everyone is building apps with JavaScript at every level of the stack. Sometimes.. people use Java too 🤫

Andrew Hughes

▶ Creating a Basic Implementation of 'Subway Surfers' — No modules, webpack or such-like here.. just exploring the joy of throwing a game mechanic together quickly using rough and ready JavaScript. Love it.

Shawn Beaton

Rubber Duck Debugging for JavaScript Developers — When you’re stuck with something, why not talk to a rubber duck?

Valeri Karpov

🔧 Code & Tools

Tabulator 4.7: An Interactive Table and Data Grid Library — Supports all major browsers and can be used with Angular, Vue, and React if you wish. 4.7 is a substantial release. Among other things is a new layout mode that resizes the table container to fit the data (example).

Oli Folkerd

Tragopan: A Minimal Dependency-Free Pan/Zoom Library — Try it out here. Claims to work faster due to use of native browser scrolling for panning (left/right/up/down) and transform/scale for zooming.

team.video

Builds Run Faster on Buildkite 🏃♀️ — Build times <5 mins at any scale. Self-hosted agents work with all languages, source control tools & platforms.

Buildkite sponsor

React Query 2: Hooks for Fetching, Caching and Updating Async Data — React Query is well worth checking out and has extensive documentation and even its own devtools. Main repo.

Tanner Linsley

Rando.js: A Helper for Making Randomness Easier — The rando function lets you get random integers in a range, floats in a range, pick between multiple items, return a random element from an array, and more. There’s also randosequence for a more shuffle-like experience.

nastyox

jinabox.js: A Customizable Omnibox for AI Powered Searching — Designed to be used with a Jina back-end. It’s all open source, but will take some digging around to understand fully.

Jina AI

IntersectionObserver Visualizer — If you’re new to using the IntersectionObserver API, this useful interactive demo might help you comprehend it a little better.

michelle barker

Polly.js 5.0: Record, Replay, and Stub HTTP Interactions

Netflix

Vest: Effortless Validations Inspired by Testing Frameworks — If you’re used to unit testing, the syntax used here will be familiar.

Evyatar

👻 Scary Item of the Week

Checkboxland: Render 'Anything' as HTML Checkboxes — This frivolous experiment is equal parts terrifying and impressive. It’s a JS library that displays animations, text, and arbitrary data using nothing but HTML checkboxes and, to be fair, they’ve presented it really well!

Bryan Braun

via JavaScript Weekly https://ift.tt/3g53MDU

Google Analytics (GA) like Backend System Architecture

There are numerous ways of designing a backend. We will take the microservices route because a Google Analytics (GA) like backend must scale to web traffic. Microservices let us scale horizontally and elastically in response to incoming network traffic, and a distributed stream processing pipeline scales in proportion to the load.

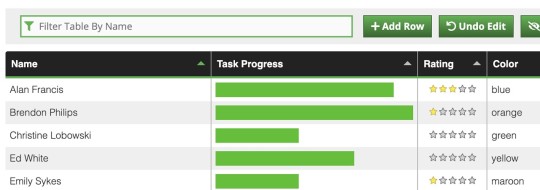

Here is the High Level architecture of the Google Analytics (GA) like Backend System.

Components Breakdown

Web/Mobile Visitor Tracking Code

Every web page or mobile site tracked by GA embeds tracking code that collects data about the visitor. The code loads an async script that assigns a tracking cookie to the user if one is not already set, and it sends an XHR request for every user interaction.

HAProxy Load Balancer

HAProxy, which stands for High Availability Proxy, is a popular open-source TCP/HTTP load balancer and proxying solution. Its most common use is to improve the performance and reliability of a server environment by distributing the workload across multiple servers. It is used in many high-profile environments, including GitHub, Imgur, Instagram, and Twitter.

A backend can contain one or many servers. Generally speaking, adding more servers to your backend increases your potential load capacity by spreading the load over multiple servers. It also increases reliability, in case some of your backend servers become unavailable.
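To make "spreading the load" concrete, here is a minimal round-robin sketch in Python. It is not HAProxy code or configuration: `RoundRobinBalancer` and the `zuul-*` server names are hypothetical, and a real HAProxy setup would express this in a `backend` section with health checks (`balance roundrobin` is HAProxy's default algorithm). The sketch only shows that each request goes to the next server in turn and that servers marked as unavailable are skipped, which is where the reliability gain comes from.

```python
import itertools
from typing import Iterable


class RoundRobinBalancer:
    """Toy round-robin balancer that skips servers marked as down (hypothetical, not HAProxy)."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._cycle = itertools.cycle(self.servers)

    def mark_down(self, server: str) -> None:
        # In a real deployment a failed health check would trigger this.
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        self.healthy.add(server)

    def pick(self) -> str:
        # Walk the ring at most once, skipping servers that are currently down.
        for _ in range(len(self.servers)):
            server = next(self._cycle)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy backend servers")


lb = RoundRobinBalancer(["zuul-a:8080", "zuul-b:8080", "zuul-c:8080"])
print([lb.pick() for _ in range(4)])  # a, b, c, then back to a
lb.mark_down("zuul-b:8080")
print([lb.pick() for _ in range(4)])  # b is skipped while it is down
```

Adding a server to the list raises capacity, and losing one only shrinks the rotation instead of failing requests, which mirrors the two benefits described above.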

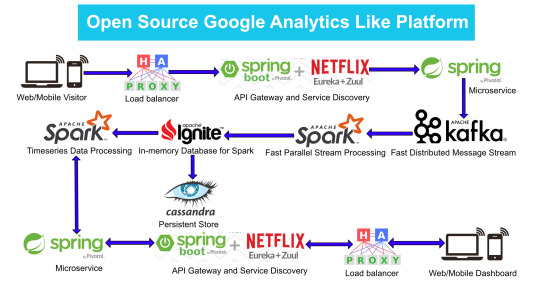

HAProxy routes the requests coming from web/mobile visitor sites to the solution's Zuul API gateway. Because the system is distributed, built for scalability, and handles requests and responses statelessly, we can spread the Zuul API gateways across geographies. HAProxy performs load balancing (layer 4 + proxy) across our Zuul nodes, and high availability (HA) for the HAProxy layer itself is provided via Keepalived.

Spring Boot & Netflix OSS Eureka + Zuul

Zuul is an API gateway and edge service that proxies requests to multiple backing services. It provides a unified “front door” to the application ecosystem, which allows any browser, mobile app or other user interface to consume services from multiple hosts. Zuul integrates with other Netflix stack components, such as Hystrix for fault tolerance and Eureka for service discovery, and it can be used to manage routing rules, filters and load balancing across your system. Most importantly, all of these components are well supported by the Spring framework through the Spring Boot/Spring Cloud approach.

An API gateway is a layer 7 (HTTP) router that acts as a reverse proxy for upstream services that reside inside your platform. API gateways are typically configured to route traffic based on URI paths and have become especially popular in the microservices world because exposing potentially hundreds of services to the Internet is both a security nightmare and operationally difficult. With an API gateway, one simply exposes and scales a single collection of services (the API gateway) and updates the API gateway’s configuration whenever a new upstream should be exposed externally. In our case, Zuul can automatically discover the services registered in the Eureka server.
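Path-based routing, the core of what an API gateway does, fits in a few lines. The route table, the service IDs, and the `route` helper below are hypothetical illustrations, not Zuul's API. The sketch assumes that the most specific (longest) matching prefix wins and that the matched prefix is stripped before the request is forwarded, which is a common gateway behaviour.

```python
from typing import Dict, Optional, Tuple

# Hypothetical route table: path prefix -> service id (in the spirit of "/serviceId/**" routes).
ROUTES: Dict[str, str] = {
    "/collect/": "ingest-service",
    "/collect/batch/": "batch-ingest-service",
    "/reports/": "reporting-service",
}


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[Tuple[str, str]]:
    """Return (service_id, downstream_path) for the longest matching prefix, or None."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None  # a real gateway would answer 404 here
    prefix = max(matches, key=len)  # the most specific route wins
    # Strip the matched prefix before forwarding to the upstream service.
    return routes[prefix], "/" + path[len(prefix):]


print(route("/collect/pageview?tid=UA-1"))  # ('ingest-service', '/pageview?tid=UA-1')
print(route("/collect/batch/events"))       # ('batch-ingest-service', '/events')
print(route("/admin"))                      # None
```

Exposing a new upstream then only means adding one entry to the table, which is the operational advantage the paragraph describes.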

The Eureka server acts as a registry: clients register themselves with it, and it is used for service discovery, so a service can find the IP address and port of any other service it wants to talk to. A Eureka server is also a Eureka client, and this property is what allows Eureka to be set up in a highly available way: we can run several Eureka instances that register with, and replicate to, one another.
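The register/renew/expire cycle behind a service registry can be sketched as follows. This is a hypothetical in-memory model, not Eureka's API or wire protocol: `ServiceRegistry`, the service name and the addresses are made up, and the 90-second lease is an assumption chosen to echo Eureka's commonly cited default. An injectable clock keeps the example deterministic.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ServiceRegistry:
    """Toy in-memory registry with heartbeat-based leases (hypothetical, not Eureka's API)."""
    lease_seconds: float = 90.0
    clock: Callable[[], float] = time.monotonic
    # service name -> {(host, port): time of the last heartbeat}
    _leases: Dict[str, Dict[Tuple[str, int], float]] = field(default_factory=dict, init=False)

    def register(self, service: str, host: str, port: int) -> None:
        self._leases.setdefault(service, {})[(host, port)] = self.clock()

    def heartbeat(self, service: str, host: str, port: int) -> None:
        # A heartbeat renews the lease; here it is the same bookkeeping as registering.
        self.register(service, host, port)

    def lookup(self, service: str) -> List[Tuple[str, int]]:
        """Return instances whose lease has not expired, evicting the stale ones."""
        now = self.clock()
        instances = self._leases.get(service, {})
        for addr, last_seen in list(instances.items()):
            if now - last_seen > self.lease_seconds:
                del instances[addr]
        return sorted(instances)


now = [0.0]  # fake clock for the example
registry = ServiceRegistry(lease_seconds=90.0, clock=lambda: now[0])
registry.register("ingest-service", "10.0.0.5", 8081)
registry.register("ingest-service", "10.0.0.6", 8081)
now[0] = 60.0
registry.heartbeat("ingest-service", "10.0.0.5", 8081)  # only .5 renews its lease
now[0] = 120.0
print(registry.lookup("ingest-service"))  # [('10.0.0.5', 8081)]
```

Peer replication between registry instances, which gives the HA property described above, is deliberately left out of the sketch.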

Spring Boot Microservices

Using a microservices approach to application development can improve resilience and expedite the time to market, but breaking apps into fine-grained services introduces complications. With fine-grained services and lightweight protocols, microservices offer increased modularity, making applications easier to develop, test, deploy, and, more importantly, change and maintain. With microservices, the code is broken into independent services that run as separate processes.

Scalability is the key aspect of microservices. Because each service is a separate component, we can scale up a single function or service without having to scale the entire application. Business-critical services can be deployed on multiple servers for increased availability and performance without impacting the performance of other services. Designing for failure is essential: we should be prepared to handle multiple kinds of failure, such as system downtime, slow services and unexpected responses. Here, load balancing is important. When a failure arises, the troubled service should keep running with degraded functionality rather than crashing the entire system. The Hystrix circuit breaker comes to the rescue in such failure scenarios.
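The circuit-breaker pattern that Hystrix implements can be sketched as a small state machine. This is a hypothetical illustration, not Hystrix's API: the class, thresholds and fallback functions are assumptions. After a number of consecutive failures the breaker opens and callers get the fallback immediately (degraded functionality); after a cool-down it lets a trial call through and closes again if that call succeeds.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Minimal circuit breaker sketch (hypothetical; not Hystrix's API).

    CLOSED: calls go through; consecutive failures are counted.
    OPEN: calls short-circuit to the fallback until `reset_after` seconds elapse.
    HALF_OPEN: the next call is a trial; success closes the circuit, failure reopens it.
    """

    def __init__(self, failure_threshold: int = 3, reset_after: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "CLOSED"
        if self.clock() - self.opened_at >= self.reset_after:
            return "HALF_OPEN"
        return "OPEN"

    def call(self, action: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.state == "OPEN":
            return fallback()  # degrade instead of hammering a failing service
        try:
            result = action()
        except Exception:
            self.failures += 1
            if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            return fallback()
        self.failures = 0
        self.opened_at = None  # a success closes the circuit
        return result


def flaky() -> str:
    raise ConnectionError("ingest-service timed out")


def cached() -> str:
    return "cached-response"


now = [0.0]  # fake clock for the example
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])
print([breaker.call(flaky, cached) for _ in range(3)], breaker.state)  # fallbacks, OPEN
now[0] = 10.0
print(breaker.call(lambda: "fresh-response", cached), breaker.state)  # fresh-response CLOSED
```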

The microservices are designed for scalability, resilience, fault tolerance and high availability, and, importantly, this can be achieved by deploying the services in a Docker Swarm or Kubernetes cluster. Distributed and geographically spread Zuul API gateways route requests from web and mobile visitors to the microservices registered in the load-balanced Eureka server.

The core processing logic of the backend system is designed for scalability, high availability, resilience and fault tolerance using distributed stream processing: the microservices ingest data into a Kafka Streams data pipeline.

Apache Kafka Streams

Apache Kafka is used for building real-time streaming data pipelines that reliably get data between many independent systems or applications.

It allows:

Publishing and subscribing to streams of records

Storing streams of records in a fault-tolerant, durable way

It provides a unified, high-throughput, low-latency, horizontally scalable platform that is used in production in thousands of companies.
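The publish/subscribe-with-storage model can be illustrated with a toy, in-memory partitioned log. Everything here is hypothetical and much simpler than Kafka: `MiniLog` is not the Kafka client API, replication and disk durability are omitted, and CRC32 stands in for Kafka's own partitioner (which uses a different hash). What it does show is that records with the same key land in the same partition in order, and that each consumer group tracks its own offset, so several groups can read the same stored records independently.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


class MiniLog:
    """In-memory, Kafka-inspired topic log (hypothetical; not the Kafka client API)."""

    def __init__(self, partitions: int = 3) -> None:
        self.partitions: List[List[Tuple[str, str]]] = [[] for _ in range(partitions)]
        # (consumer group, partition) -> next offset to read
        self.offsets: Dict[Tuple[str, int], int] = defaultdict(int)

    def publish(self, key: str, value: str) -> Tuple[int, int]:
        # crc32 is stable across processes; Python's built-in hash() is salted per process.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))  # append-only, records are never modified
        return p, len(self.partitions[p]) - 1   # (partition, offset)

    def poll(self, group: str, partition: int, max_records: int = 10) -> List[Tuple[str, str]]:
        start = self.offsets[(group, partition)]
        batch = self.partitions[partition][start:start + max_records]
        self.offsets[(group, partition)] = start + len(batch)  # "commit" the new offset
        return batch


log = MiniLog(partitions=3)
for page in ["/home", "/pricing", "/signup"]:
    part, offset = log.publish("visitor-42", page)
print(log.poll("sessionizer", part))    # all three pageviews, in publish order
print(log.poll("sessionizer", part))    # [] because the offset has already advanced
print(len(log.poll("archiver", part)))  # 3, another group reads the same records
```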

Kafka Streams gives us what we need: it is scalable, highly available and fault-tolerant, and it provides streams functionality (stateless and stateful transformations). On top of that, Kafka itself is a reliable and mature messaging system.

Kafka is run as a cluster on one or more servers that can span multiple datacenters spread across geographies. Those servers are usually called brokers.

Kafka uses ZooKeeper to store metadata about brokers, topics and partitions.

Kafka Streams is a fast, lightweight stream processing solution that works best when all of the data ingestion comes through Apache Kafka. In our design, Apache Spark reads the ingested data directly from Kafka for stream processing and creates time-series Ignite RDDs (Resilient Distributed Datasets).

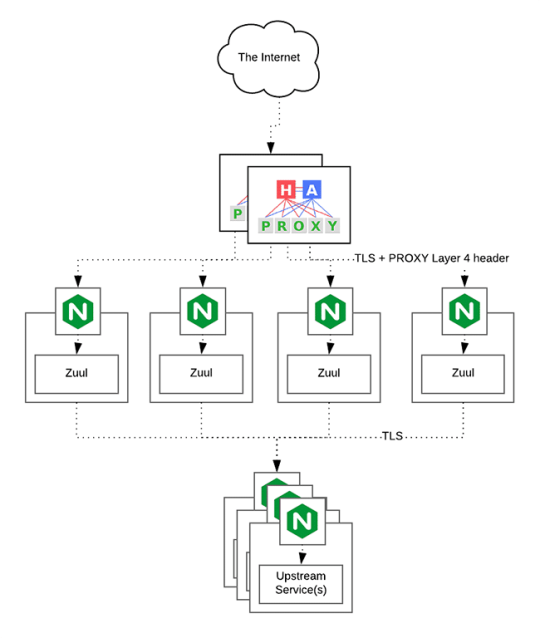

Apache Spark

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams.

It provides a high-level abstraction called a discretized stream, or DStream, which represents a continuous stream of data.

DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs (Resilient Distributed Datasets).
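The idea of a discretized stream, a continuous stream chopped into a sequence of small batches that are each processed the same way, can be sketched without Spark. The functions below are hypothetical stand-ins, not Spark's API: each micro-batch list plays the role of one RDD in the DStream, and the per-batch pageview count plays the role of a transformation applied to every RDD.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, page path)


def discretize(events: Iterable[Event], batch_seconds: float) -> Dict[int, List[str]]:
    """Group a continuous event stream into fixed-interval micro-batches.

    Each batch stands in for one RDD of a DStream (hypothetical, not Spark's API).
    """
    batches: Dict[int, List[str]] = {}
    for ts, page in events:
        batches.setdefault(int(ts // batch_seconds), []).append(page)
    return batches


def pageviews_per_batch(batches: Dict[int, List[str]]) -> Dict[int, Counter]:
    # The same transformation is applied to every batch, in batch order.
    return {i: Counter(pages) for i, pages in sorted(batches.items())}


stream = [(0.4, "/home"), (1.2, "/home"), (1.9, "/pricing"), (2.1, "/home"), (3.7, "/signup")]
for batch_id, counts in pageviews_per_batch(discretize(stream, batch_seconds=2.0)).items():
    print(batch_id, dict(counts))
```

With a 2-second batch interval, the first three events form batch 0 and the last two form batch 1; Spark additionally distributes each batch across the cluster, which this sketch does not attempt.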

Apache Spark is a perfect choice in our case because it achieves high performance for both batch and streaming data, using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.

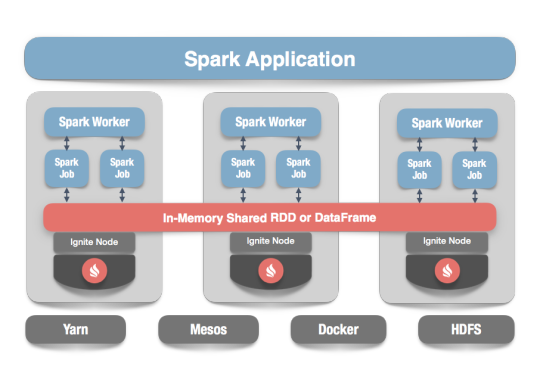

In our scenario, Spark Streaming processes the Kafka data streams and creates Ignite RDDs that are shared through Apache Ignite, a distributed memory-centric database and caching platform.

Apache Ignite

Apache Ignite is a distributed memory-centric database and caching platform that is used by Apache Spark users to:

Achieve true in-memory performance at scale and avoid data movement from a data source to Spark workers and applications.

More easily share state and data among Spark jobs.

Apache Ignite is designed for transactional, analytical, and streaming workloads, delivering in-memory performance at scale. Apache Ignite provides an implementation of the Spark RDD which allows any data and state to be shared in memory as RDDs across Spark jobs. The Ignite RDD provides a shared, mutable view of the same data in-memory in Ignite across different Spark jobs, workers, or applications.

An Ignite RDD is implemented as a view over a distributed Ignite table (a.k.a. cache). It can be deployed with an Ignite node either within the Spark job executing process, on a Spark worker, or in a separate Ignite cluster. This means that, depending on the chosen deployment mode, the shared state may exist only during the lifespan of a Spark application (embedded mode), or it may outlive the Spark application (standalone mode).

With Ignite, Spark users can configure primary and secondary indexes that can bring up to 1000x performance gains.

Apache Cassandra

We will use Apache Cassandra as the persistent store for writes coming from Ignite.

Apache Cassandra is a highly scalable and available distributed database for storing and managing high-velocity structured data across multiple commodity servers without a single point of failure.

Apache Cassandra is an extremely powerful open source distributed database system that handles huge volumes of records spread across multiple commodity servers extremely well. It can easily be scaled to meet sudden increases in demand by deploying multi-node Cassandra clusters, it meets high availability requirements, and it has no single point of failure.

Apache Cassandra offers excellent write and read performance.

Characteristics of Cassandra:

It is a wide-column (column-family) database

Tunably consistent, fault-tolerant, and scalable

The data model is based on Google Bigtable

The distributed design is based on Amazon Dynamo

Cassandra does not use B-trees to store data. Instead, it uses log-structured merge trees (LSM trees). This data structure is very good for high write volumes, turning updates and deletes into new writes.
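Why an LSM tree turns updates and deletes into new writes can be seen in a toy model. `TinyLSM` is a hypothetical illustration, not Cassandra's storage engine: writes go to an in-memory memtable, a full memtable is flushed as an immutable sorted run (an "SSTable"), deletes are recorded as tombstones, and reads check the newest data first. Compaction, commit logs and bloom filters are left out.

```python
from bisect import bisect_left
from typing import Dict, List, Optional, Tuple

TOMBSTONE = object()  # marker written in place of a value when a key is deleted


class TinyLSM:
    """Toy log-structured merge tree (illustrative only; not Cassandra's storage engine)."""

    def __init__(self, memtable_limit: int = 2) -> None:
        self.memtable: Dict[str, object] = {}
        self.sstables: List[List[Tuple[str, object]]] = []  # immutable sorted runs, newest last
        self.memtable_limit = memtable_limit

    def put(self, key: str, value: object) -> None:
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            # Flush: one sequential, append-only write of a sorted run; old runs stay untouched.
            self.sstables.append(sorted(self.memtable.items()))
            self.memtable = {}

    def delete(self, key: str) -> None:
        self.put(key, TOMBSTONE)  # a delete is just another write

    def get(self, key: str) -> Optional[object]:
        if key in self.memtable:
            value = self.memtable[key]
        else:
            value = None
            for table in reversed(self.sstables):  # the newest run wins
                keys = [k for k, _ in table]
                i = bisect_left(keys, key)          # binary search within the sorted run
                if i < len(keys) and keys[i] == key:
                    value = table[i][1]
                    break
        return None if value is TOMBSTONE else value


db = TinyLSM(memtable_limit=2)
db.put("visitor:1", "/home")
db.put("visitor:2", "/pricing")  # memtable full: flushed as SSTable #1
db.put("visitor:1", "/signup")   # the update is a new write; SSTable #1 is not modified
db.delete("visitor:2")           # tombstone; flushed as SSTable #2
print(db.get("visitor:1"), db.get("visitor:2"), len(db.sstables))  # /signup None 2
```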

In our scenario, we will configure Ignite to work in write-behind mode. Normally, a cache write involves putting data in memory and writing the same data to the persistent store, so there is a 1-to-1 mapping between cache writes and persistence writes. In write-behind mode, Ignite instead batches the writes and executes them periodically at the specified frequency. This limits the communication overhead between Ignite and the persistent store, and it makes the most sense when the data being written changes rapidly.
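The difference between write-through and write-behind is easiest to see with a rapidly changing counter. The class below is a hypothetical sketch, not Ignite's `CacheStore` API; Ignite exposes comparable knobs through cache configuration settings such as a write-behind flush frequency and flush size. The sketch assumes that repeated writes to the same key between flushes are coalesced, so only the latest value reaches the store.

```python
from typing import Callable, Dict, List


class WriteBehindCache:
    """Toy write-behind cache (hypothetical; not Ignite's CacheStore API)."""

    def __init__(self, write_batch: Callable[[Dict[str, int]], None], flush_size: int = 100) -> None:
        self.data: Dict[str, int] = {}   # in-memory copy that serves reads
        self.dirty: Dict[str, int] = {}  # pending writes not yet persisted
        self.write_batch = write_batch   # persists one batch, e.g. to Cassandra
        self.flush_size = flush_size

    def put(self, key: str, value: int) -> None:
        self.data[key] = value
        self.dirty[key] = value  # overwriting coalesces pending writes for this key
        if len(self.dirty) >= self.flush_size:
            self.flush()

    def get(self, key: str) -> int:
        return self.data[key]  # reads never wait for the store

    def flush(self) -> None:
        """In a real system a timer would call this at the configured frequency."""
        if self.dirty:
            self.write_batch(dict(self.dirty))
            self.dirty.clear()


batches: List[Dict[str, int]] = []
cache = WriteBehindCache(write_batch=batches.append, flush_size=100)
for _ in range(1000):  # a rapidly changing pageview counter
    cache.put("pageviews:/home", cache.data.get("pageviews:/home", 0) + 1)
cache.put("pageviews:/pricing", 7)
cache.flush()
print(cache.get("pageviews:/home"), batches)
```

Here 1,001 cache writes turn into a single batched store write, whereas a write-through cache would have issued 1,001 separate writes.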

Analytics Dashboard

Since we are talking about scalability, high availability, resilience and fault tolerance, our analytics dashboard backend should be designed in much the same way as the web/mobile visitor backend, using an HAProxy load balancer, a Zuul API gateway, Eureka service discovery and Spring Boot microservices.

Requests from the analytics dashboard will be routed through the microservices. Apache Spark will process the time-series data shared in Apache Ignite as Ignite RDDs, and the results will be sent back to the dashboard for visualization through the microservices.

RxJava 2.0 released with support for Android apps

The RxJava team has released version 2.0 of their reactive Java framework, after an 18-month development cycle. RxJava is part of the ReactiveX family of libraries and frameworks, which is, in their words, "a combination of the best ideas from the Observer pattern, the Iterator pattern, and functional programming". The project's "What's different in 2.0" page is a good guide for developers already familiar with RxJava 1.x.

RxJava 2.0 is a brand new implementation of RxJava. This release is based on the Reactive Streams specification, an initiative for providing a standard for asynchronous stream processing with non-blocking back pressure, targeting runtime environments (JVM and JavaScript) as well as network protocols.

Reactive implementations have concepts of publishers and subscribers, as well as ways to subscribe to data streams, get the next stream of data, handle errors and close the connection.

The Reactive Streams spec will be included in JDK 9 as java.util.concurrent.Flow. The following interfaces correspond to the Reactive Streams spec. As you can see, the spec is small, consisting of just four interfaces:

· Flow.Processor<T,R>: A component that acts as both a Subscriber and Publisher.

· Flow.Publisher<T>: A producer of items (and related control messages) received by Subscribers.

· Flow.Subscriber<T>: A receiver of messages.

· Flow.Subscription: Message control linking a Flow.Publisher and Flow.Subscriber.
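How these interfaces cooperate, and in particular how `request(n)` expresses backpressure, can be sketched as a small Python transliteration. The class names are hypothetical analogues of `Flow.Publisher`, `Flow.Subscriber` and `Flow.Subscription` (in Java one would implement the real interfaces, or use the JDK's `SubmissionPublisher`); `Flow.Processor` is omitted. The subscriber asks for three items at a time, the publisher never delivers more than was requested, and re-entrant `request` calls from `on_next` are folded into the running loop instead of recursing.

```python
from typing import Iterable, Iterator, List, Optional


class Subscription:
    """Flow.Subscription analogue: links one publisher to one subscriber and carries demand."""

    def __init__(self, source: Iterator[int], subscriber: "BatchingSubscriber") -> None:
        self.source = source
        self.subscriber = subscriber
        self.demand = 0          # items requested but not yet delivered
        self.emitting = False    # guards against recursive request() calls from on_next
        self.cancelled = False
        self.done = False

    def request(self, n: int) -> None:
        self.demand += n
        if self.emitting:
            return  # the loop already running below will honour the extra demand
        self.emitting = True
        while self.demand > 0 and not (self.cancelled or self.done):
            try:
                item = next(self.source)
            except StopIteration:
                self.done = True
                self.subscriber.on_complete()
                break
            self.demand -= 1     # never deliver more than was requested (backpressure)
            self.subscriber.on_next(item)
        self.emitting = False

    def cancel(self) -> None:
        self.cancelled = True


class IterablePublisher:
    """Flow.Publisher analogue: produces items from an iterable, but only on demand."""

    def __init__(self, items: Iterable[int]) -> None:
        self.items = items

    def subscribe(self, subscriber: "BatchingSubscriber") -> None:
        subscriber.on_subscribe(Subscription(iter(self.items), subscriber))


class BatchingSubscriber:
    """Flow.Subscriber analogue: requests `batch` items at a time."""

    def __init__(self, batch: int) -> None:
        self.batch = batch
        self.received: List[int] = []
        self.requests: List[int] = []
        self.completed = False
        self.error: Optional[Exception] = None
        self.subscription: Optional[Subscription] = None
        self.outstanding = 0

    def _request_batch(self) -> None:
        assert self.subscription is not None
        self.requests.append(self.batch)
        self.outstanding = self.batch
        self.subscription.request(self.batch)

    def on_subscribe(self, subscription: Subscription) -> None:
        self.subscription = subscription
        self._request_batch()

    def on_next(self, item: int) -> None:
        self.received.append(item)
        self.outstanding -= 1
        if self.outstanding == 0:  # batch consumed: signal demand for the next one
            self._request_batch()

    def on_error(self, error: Exception) -> None:
        self.error = error

    def on_complete(self) -> None:
        self.completed = True


subscriber = BatchingSubscriber(batch=3)
IterablePublisher(range(1, 8)).subscribe(subscriber)
print(subscriber.received, subscriber.requests, subscriber.completed)
```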

Spring Framework 5 is also going reactive. To see how this looks, refer to Josh Long's Functional Reactive Endpoints with Spring Framework 5.0.

To learn more about RxJava 2.0, InfoQ interviewed the main RxJava 2.0 contributor, David Karnok.

InfoQ: First of all, congrats on RxJava 2.0! 18 months in the making, that's quite a feat. What are you most proud of in this release?

David Karnok: Thanks! In some way, I wish it didn't take so long. There was a 10 month pause when Ben Christensen, the original author who started RxJava, left and there was no one at Netflix to push this forward. I'm sure many will contest that things got way better when I took over the lead role this June. I'm proud my research into more advanced and more performant Reactive Streams paid off and RxJava 2 is the proof all of it works.

InfoQ: What’s different in RxJava 2.0 and how does it help developers?

Karnok: There are a lot of differences between version 1 and 2 and it's impossible to list them all here, but you can visit the dedicated wiki page for a comprehensive explanation. In headlines, we now support the de-facto standard Reactive Streams specification, have significant overhead reduction in many places, no longer allow nulls, have split types into two groups based on support of or lack of backpressure and have explicit interop between the base reactive types.

InfoQ: Where do you see RxJava used the most (e.g. IoT, real-time data processing, etc.)?

Karnok: RxJava is more dominantly used by the Android community, based on the feedback I saw. I believe the server side is dominated by Project Reactor and Akka at the moment. I haven't specifically seen IoT mentioned or use RxJava (it requires Java), but maybe they use some other reactive library available on their platform. For real-time data processing people still tend to use other solutions, most of them not really reactive, and I'm not aware of any providers (maybe Pivotal) who are pushing for reactive in this area.

InfoQ: What benefits does RxJava provide Android more than other environments that would explain the increased traction?

Karnok: As far as I see, Android wanted to "help" their users solving async and concurrent problems with Android-only tools such as AsyncTask, Looper/Handler etc.

Unfortunately, their design and use is quite inconvenient, often hard to understand or predict and generally brings frustration to Android developers. These can largely contribute to callback hell and the difficulty of keeping async operations off the main thread.

RxJava's design (inherited from the ReactiveX design of Microsoft) is dataflow-oriented and orthogonalized where actions execute from when data appears for processing. In addition, error handling and reporting is a key part of the flows. With AsyncTask, you had to manually work out the error delivery pattern and cancel pending tasks, whereas RxJava does that as part of its contract.

In practical terms, having a flow that queries several services in the background and then presents the results in the main thread can be expressed in a few lines with RxJava (+Retrofit) and a simple screen rotation will cancel the service calls promptly.

This is a huge productivity win for Android developers, and the simplicity helps them climb the steep learning curve the whole reactive programming's paradigm shift requires. Maybe at the end, RxJava is so attractive to Android because it reduces the "time-to-market" for individual developers, startups and small companies in the mobile app business.

There was nothing of a comparable issue on the desktop/server side Java, in my opinion, at that time. People learned to fire up ExecutorServices and wait on Future.get(), knew about SwingUtilities.invokeLater to send data back to the GUI thread and otherwise the Servlet API, which is one thread per request only (pre 3.0) naturally favored blocking APIs (database, service calls).

Desktop/server folks are more interested in the performance benefits a non-blocking design of their services offers (rather than how easy one can write a service). However, unlike Android development, having just RxJava is not enough and many expect/need complete frameworks to assist their business logic as there is no "proper" standard for non-blocking web services to replace the Servlet API. (Yes, there is Spring (~Boot) and Play but they feel a bit bandwagon-y to me at the moment).

InfoQ: HTTP is a synchronous protocol and can cause a lot of back pressure when using microservices. Streaming platforms like Akka and Apache Kafka help to solve this. Does RxJava 2.0 do anything to allow automatic back pressure?

Karnok: RxJava 2's Flowable type implements the Reactive Streams interface/specification and does support backpressure. However, the Java level backpressure is quite different from the network level backpressure. For us, backpressure means how many objects to deliver through the pipeline between different stages where these objects can be non uniform in type and size. On the network level one deals with usually fixed size packets, and backpressure manifests via the lack of acknowledgement of previously sent packets. In classical setup, the network backpressure manifests on the Java level as blocking calls that don't return until all pieces of data have been written. There are non-blocking setups, such as Netty, where the blocking is replaced by implicit buffering, and as far as I know there are only individual, non-uniform and non Reactive Streams compatible ways of handling those (i.e., a check for canWrite which one has to spin over/retry periodically). There exist libraries that try to bridge the two worlds (RxNetty, some Spring) with varying degrees of success as I see it.

InfoQ: Do you think reactive frameworks are necessary to handle large amounts of traffic and real-time data?

Karnok: It depends on the problem complexity. For example, if your task is to count the number of characters in a big-data source, there are faster and more dedicated ways of doing that. If your task is to compose results from services on top of a stream of incoming data in order to return something detailed, reactive-based solutions are quite adequate. As far as I know, most libraries and frameworks around Reactive Streams were designed for throughput and not really for latency. For example, in high-frequency trading, the predictable latency is very important and can be easily met by Aeron but not the main focus for RxJava due to the unpredictable latency behavior.

InfoQ: Does HTTP/2 help solve the scalability issues that HTTP/1.1 has?

Karnok: This is not related to RxJava and I personally haven't played with HTTP/2 but only read the spec. Multiplexing over the same channel is certainly a win in addition to the support for explicit backpressure (i.e., even if the network can deliver, the client may still be unable to process the data in that volume) per stream. I don't know all the technical details but I believe Spring Reactive Web does support HTTP/2 transport if available but they hide all the complexity behind reactive abstractions so you can express your processing pipeline in RxJava 2 and Reactor 3 terms if you wish.

InfoQ: Java 9 is projected to be featuring some reactive functionality. Is that a complete spec?

Karnok: No. Java 9 will only feature 4 Java interfaces with 7 methods total. No stream-like or Rx-like API on top of that nor any JDK features built on that.

InfoQ: Will that obviate the need for RxJava if it is built right into the JDK?

Karnok: No and I believe there's going to be more need for a true and proven library such as RxJava. Once the toolchains grow up to Java 9, we will certainly provide adapters and we may convert (or rewrite) RxJava 3 on top of Java 9's features (VarHandles).

One of my fears is that once Java 9 is out, many will try to write their own libraries (individuals, companies) and the "market" gets diluted with big branded-low quality solutions, not to mention the increased amount of "how does RxJava differ from X" questions.

My personal opinion is that this is already happening today around Reactive Streams where certain libraries and frameworks advertise themselves as RS but fail to deliver based on it. My (likely biased) conjecture is that RxJava 2 is the closest library/technology to an optimal Reactive Streams-based solution that can be.

InfoQ: What's next for RxJava?

Karnok: We had fantastic reviewers, such as Jake Wharton, during the development of RxJava 2. Unfortunately, the developer previews and release candidates didn't generate enough attention and despite our efforts, small problems and oversights slipped into the final release. I don't expect major issues in the coming months but we will keep fixing both version 1 and 2 as well as occasionally adding new operators to support our user base. A few companion libraries, such as RxAndroid, now provide RxJava 2 compatible versions, but the majority of the other libraries don't yet or haven't yet decided how to go forward. In terms of RxJava, I plan to retire RxJava 1 within six months (i.e., only bugfixes then on), partly due to the increasing maintenance burden on my "one man army" for some time now; partly to "encourage" the others to switch to the RxJava 2 ecosystem. As for RxJava 3, I don't have any concrete plans yet. There are ongoing discussions about splitting the library along types or along backpressure support as well as making the so-called operator-fusion elements (which give a significant boost to our performance) a standard extension of the Reactive Streams specification.

What’s After the MEAN Stack?

Introduction

We reach for software stacks to simplify the endless sea of choices. The MEAN stack is one such simplification that worked very well in its time. Though the MEAN stack was great for the last generation, we need more; in particular, more scalability. The components of the MEAN stack haven’t aged well, and our appetites for cloud-native infrastructure require a more mature approach. We need an updated, cloud-native stack that can scale as far as our users expect, so that we can deliver superior experiences.

Stacks

When we look at software, we can easily get overwhelmed by the complexity of architectures or the variety of choices. Should I base my system on Python? Or is Go a better choice? Should I use the same tools as last time? Or should I experiment with the latest hipster toolchain? These questions and more stymie both seasoned and newbie developers and architects.

Some patterns emerged early on that help developers quickly provision a web property to get started with known-good tools. One way to do this is to gather technologies that work well together in “stacks.” A “stack” is not a prescriptive validation metric, but rather a guideline for choosing and integrating components of a web property. The stack often identifies the OS, the database, the web server, and the server-side programming language.

In the earliest days, the famous stacks were the “LAMP-stack” and the “Microsoft-stack”. The LAMP stack represents Linux, Apache, MySQL, and PHP or Python. LAMP is an acronym of these product names. All the components of the LAMP stack are open source (though some of the technologies have commercial versions), so one can use them completely for free. The only direct cost to the developer is the time to build the experiment.

The “Microsoft stack” includes Windows Server, SQL Server, IIS (Internet Information Services), and ASP (90s) or ASP.NET (2000s+). All these products are tested and sold together.

Stacks such as these help us get started quickly. They liberate us from decision fatigue, so we can focus instead on the dreams of our start-up, or the business problems before us, or the delivery needs of internal and external stakeholders. We choose a stack, such as LAMP or the Microsoft stack, to save time.

In each of these two example legacy stacks, we’re producing web properties. So no matter what programming language we choose, the end result of a browser’s web request is HTML, JavaScript, and CSS delivered to the browser. HTML provides the content, CSS makes it pretty, and in the early days, JavaScript was the quick form-validation experience. On the server, we use the programming language to combine HTML templates with business data to produce rendered HTML delivered to the browser.

We can think of this much like a mail merge: take a Word document with replaceable fields like first and last name, add an Excel file with a column for each field, and the engine produces a file for each row in the sheet.
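The mail-merge analogy translates almost directly into code. In this sketch, Python's standard `string.Template` plays the role of the server-side template engine; the template text and the rows are made-up sample data. Note that `Template` does no HTML escaping, which a real server-side template engine rendering user data would need to add.

```python
from string import Template
from typing import Dict, Iterable, List

# The "Word document": an HTML snippet with replaceable fields.
letter = Template("<p>Dear $first_name $last_name, your order #$order_id has shipped.</p>")

# The "Excel sheet": one row per recipient, one column per field (sample data).
rows: List[Dict[str, str]] = [
    {"first_name": "Ada", "last_name": "Lovelace", "order_id": "1001"},
    {"first_name": "Alan", "last_name": "Turing", "order_id": "1002"},
]


def mail_merge(template: Template, data: Iterable[Dict[str, str]]) -> List[str]:
    # substitute() raises KeyError if a row is missing a field, surfacing bad data early.
    return [template.substitute(row) for row in data]


for page in mail_merge(letter, rows):
    print(page)  # one rendered snippet per row
```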

As browsers evolved and JavaScript engines were tuned, JavaScript became powerful enough to make real-time, thick-client interfaces in the browser. Early examples of this kind of web application are Facebook and Google Maps.

These immersive experiences don’t require navigating to a fresh page on every button click. Instead, we could dynamically update the app as other users created content, or when the user clicks buttons in the browser. With these new capabilities, a new stack was born: the MEAN stack.

What is the MEAN Stack?

The MEAN stack was the first stack to acknowledge the browser-based thick client. Applications built on the MEAN stack primarily have user experience elements built in JavaScript and running continuously in the browser. We can navigate the experiences by opening and closing items, or by swiping or drilling into things. The old full-page refresh is gone.

The MEAN stack includes MongoDB, Express.js, Angular.js, and Node.js. MEAN is the acronym of these products. The back-end application uses MongoDB to store its data as binary-encoded JavaScript Object Notation (JSON) documents. Node.js is the JavaScript runtime environment, allowing you to do backend, as well as frontend, programming in JavaScript. Express.js is the back-end web application framework running on top of Node.js. And Angular.js is the front-end web application framework, running your JavaScript code in the user’s browser. This allows your application UI to be fully dynamic.

Unlike previous stacks, neither the programming language nor the operating system is specified, and for the first time, both the server framework and the browser-based client framework are specified.

In the MEAN stack, MongoDB is the data store. MongoDB is a NoSQL database, making a stark departure from the SQL-based systems in previous stacks. With a document database, there are no joins, no schema, no ACID compliance, and no transactions. What document databases offer is the ability to store data as JSON, which easily serializes from the business objects already used in the application. We no longer have to dissect the JSON objects into third normal form to persist the data, nor collect and rehydrate the objects from disparate tables to reproduce the view.

The MEAN stack webserver is Node.js, a thin wrapper around Chrome’s V8 JavaScript engine that adds TCP sockets and file I/O. Unlike previous generations’ web servers, Node.js was designed in the age of multi-core processors and millions of requests. As a result, Node.js is asynchronous to a fault, easily handling intense, I/O-bound workloads. The programming API is a simple wrapper around a TCP socket.
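Why an asynchronous, event-loop server copes so well with I/O-bound work can be shown with Python's `asyncio`, which follows the same single-threaded event-loop model as Node.js (this is an analogue, not Node code). `handle_request` and its simulated latency are hypothetical stand-ins for a database or network call. Because each request yields the thread while it waits, 100 requests with 0.1 s of I/O each finish in roughly 0.1 s of wall time rather than about 10 s.

```python
import asyncio
import time
from typing import List


async def handle_request(request_id: int, io_latency: float) -> str:
    # Stand-in for a database or network call; awaiting yields the thread to other requests.
    await asyncio.sleep(io_latency)
    return f"response {request_id}"


async def serve(n_requests: int, io_latency: float) -> List[str]:
    # All requests are in flight at once on a single thread, driven by one event loop.
    return await asyncio.gather(*(handle_request(i, io_latency) for i in range(n_requests)))


start = time.perf_counter()
responses = asyncio.run(serve(n_requests=100, io_latency=0.1))
elapsed = time.perf_counter() - start
print(len(responses), responses[0], f"{elapsed:.2f}s")
```

The flip side of the same design is that CPU-bound work inside a handler blocks every other request on the loop, which is why this model shines specifically for I/O-bound workloads.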

In the MEAN stack, JavaScript is the name of the game. Express.js is the server-side framework offering an MVC-like experience in JavaScript. Angular (now known as Angular.js or Angular 1) allows for simple data binding to HTML snippets. With JavaScript both on the server and on the client, there is less context switching when building features. Though the specific features of Express.js’s and Angular.js’s frameworks are quite different, one can be productive in each with little cross-training, and there are some ways to share code between the systems.

The MEAN stack rallied a web generation of start-ups and hobbyists. Since all the products are free and open-source, one can get started for only the cost of one’s time. Since everything is based in JavaScript, there are fewer concepts to learn before one is productive. When the MEAN stack was introduced, these thick-client browser apps were fresh and new, and the back-end system was fast enough, for new applications, that database durability and database performance seemed less of a concern.

The Fall of the MEAN Stack

The MEAN stack was good for its time, but a lot has happened since. Here’s an overly brief history of the fall of the MEAN stack, one component at a time.

Mongo got a real bad rap for data durability. In one Mongo meme, it was suggested that Mongo might implement the PLEASE keyword to improve the likelihood that data would be persisted correctly and durably. (A quick squint, and you can imagine the XKCD comic about “sudo make me a sandwich.”) Mongo also lacks native SQL support, making data retrieval slower and less efficient.

Express is aging, but it is still the de facto standard for Node web apps and APIs. Many of the modern frameworks, both MVC-based and Sinatra-inspired, still build on top of Express. Express would do well to move from callbacks to promises and to better handle async and await, but sadly, the Express 5 alpha hasn’t moved in more than a year.

Angular.js (1.x) was rewritten from scratch as Angular (2+). Arguably, the two products are so dissimilar that they should have been named differently. In the confusion as the Angular reboot was taking shape, there was a very unfortunate presentation at an Angular conference.

The talk was meant to be funny, but it was not taken that way. It showed headstones for many of the core Angular.js concepts, and sought to highlight how the presenters were designing a much easier system in the new Angular.

Sadly, this message landed really wrong. Much like the community backlash to Visual Basic’s plans they termed Visual Fred, the community was outraged. The core tenets they trusted every day for building highly interactive and profitable apps were getting thrown away, and the new system wouldn’t be ready for a long time. Much of the community moved on to React, and now Angular is struggling to stay relevant. Arguably, Angular’s failure here was the biggest factor in React’s success — much more so than any React initiative or feature.

Nowadays, frameworks in many languages have caught up to the lean, asynchronous experience pioneered in Node and Express. ASP.NET Core brings a similarly lightweight experience and was originally built on top of libuv, the cross-platform asynchronous I/O library that Node also uses. Flask has brought lightweight web apps to Python. Ruby on Rails is one way to get started quickly. Spring Boot brought similar microservices concepts to Java. These back-end frameworks aren’t JavaScript, so there is more context switching, but their performance is no longer a barrier, and strongly typed languages are coming back into vogue.

As a further sign of the MEAN stack’s decline, there are now frameworks named “mean,” including mean.io, meanjs.org, and others. These products seek to capitalize on the popularity of the “mean” term. Some offer more options on top of the original MEAN products, some provide scaffolding to get started faster, and some merely look to cash in on the SEO value of the term.

With MEAN losing its edge, many other stacks and methodologies have emerged.

The JAM Stack

The JAM stack is the next evolution of the MEAN stack. The JAM stack includes JavaScript, APIs, and Markup. In this stack, the back-end isn’t specified: neither the web server, the back-end language, nor the database.

In the JAM stack, we use JavaScript to build a thick client in the browser that calls APIs and mashes the data together with Markup, likely the same HTML templates we would build in the MEAN stack. The JavaScript frameworks have evolved as well. The new top contenders are React, Vue.js, and Angular, with additional players including Svelte, Aurelia, Ember, Meteor, and many others.

The frameworks have mostly standardized on common concepts like the virtual DOM, one-way data binding, and web components. Each framework then combines these concepts with the opinions and styles of its authors.

The JAM stack focuses exclusively on the thick-client browser environment, merely giving a nod to the APIs, as if magic happens behind them. This has given rise to backend-as-a-service products like Firebase, and to API innovations beyond REST, including gRPC and GraphQL. But just as legacy stacks ignored the browser thick client, the JAM stack marginalizes the back-end, to our detriment.

Maturing Application Architecture

As the web and the cloud have matured, we as system architects have also matured in how we think about designing web properties.

As technology has progressed, we’ve gotten much better at building highly scalable systems. Microservices offer a very different application model, where simple pieces are arranged into a mesh. Containers offer ephemeral compute that’s easy to spin up and replace, leading to utility computing.

As consumers and business users of systems, we almost take for granted that a system will be always on and infinitely scalable. We don’t even consider the complexity of geo-replication of data or the latency of trans-continental communication. If we need to wait more than a second or two, we move on to the next product or the next task.

With these maturing tastes, we now take for granted that an application can handle near-infinite load without degradation to users, and that features can be upgraded and replaced without downtime. Imagine the absurdity if Google Maps went down every day at 10 pm so they could upgrade the system, or if Facebook went down whenever a million or more people posted at the same time.

We now take for granted that our applications can scale, and the naive LAMP and MEAN stacks are no longer relevant.

Characteristics of the Modern Stack

What does the modern stack look like? What are the elements of a modern system? I propose a modern system is cloud-native, utility-billed, infinite-scale, low-latency, user-relevant using machine learning, stores and processes disparate data types and sources, and delivers personalized results to each user. Let’s dig into these concepts.

A modern system allows boundless scale. As a business user, I can’t tolerate my system slowing down when we add more users. If the site goes viral, it needs to continue serving requests, and if the site is seasonally slow, we need to turn down the spend to match revenue. Utility billing and cloud-native scale offer this opportunity. Mounds of hardware are available for us to scale into immediately upon request. If we design stateless, distributed systems, additional load doesn’t produce latency issues.

A modern system processes disparate data types and sources. Our systems produce logs of unstructured system behavior and failures. Events from sensors and user activity flood in as huge amounts of time-series events. Users produce transactions by placing orders or requesting services. And the product catalog or news feed is a library of documents that must be rendered completely and quickly. As users and stakeholders consume the system’s features, they don’t want or need to know how this data is stored or processed. They need only see that it’s available, searchable, and consumable.

A modern system produces relevant information. In the world of big data, and even bigger compute capacity, it’s our task to give users relevant information from all sources. Machine learning models can identify trends in data, suggesting related activities or purchases, delivering relevant, real-time results to users. Just as easily, these models can detect outlier activities that suggest fraud. As we gain trust in the insights gained from these real-time analytics, we can empower the machines to make decisions that deliver real business value to our organization.
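As a rough illustration of the outlier idea, here is a minimal Java sketch that flags purchases far from a customer’s historical baseline. It is a simple statistical stand-in (a z-score test), not a trained machine learning model, and the class name, sample amounts, and threshold are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy fraud check (hypothetical): flag amounts far from a historical baseline using a z-score. */
public class OutlierCheck {

    /** Returns the incoming amounts whose |z-score| against the history exceeds the threshold. */
    static List<Double> flagOutliers(double[] history, double[] incoming, double threshold) {
        if (history.length == 0) {
            throw new IllegalArgumentException("history must not be empty");
        }
        double mean = 0;
        for (double h : history) mean += h;
        mean /= history.length;

        double variance = 0;
        for (double h : history) variance += (h - mean) * (h - mean);
        double stdDev = Math.sqrt(variance / history.length);   // population standard deviation

        List<Double> flagged = new ArrayList<>();
        for (double amount : incoming) {
            // With no variation in the history, treat any deviation from the mean as an outlier.
            boolean outlier = stdDev == 0
                    ? amount != mean
                    : Math.abs((amount - mean) / stdDev) > threshold;
            if (outlier) flagged.add(amount);
        }
        return flagged;
    }

    public static void main(String[] args) {
        double[] history = {20, 22, 19, 21, 18};   // hypothetical past purchase amounts
        double[] incoming = {21, 500, 17};
        System.out.println(flagOutliers(history, incoming, 3.0));   // [500.0]
    }
}
```

A production fraud model would weigh many signals at once; the z-score only makes “far from this user’s normal behavior” concrete.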

MemSQL is the Modern Stack’s Database

Whether you choose to build your web properties in Java or C#, in Python or Go, in Ruby or JavaScript, you need a data store that can elastically and boundlessly scale with your application. One that solves the problems that Mongo ran into – that scales effortlessly, and that meets ACID guarantees for data durability.

We also need a database that supports the SQL standard for data retrieval. This brings two benefits: a SQL database “plays well with others,” supporting the vast number of tools out there that interface to SQL, as well as the vast number of developers and sophisticated end users who know SQL code. The decades of work that have gone into honing the efficiency of SQL implementations are also worth tapping into.

These requirements have called forth a new class of databases, which go by a variety of names; we will use the term NewSQL here. A NewSQL database is distributed, like Mongo, but meets ACID guarantees, providing durability, along with support for SQL. CockroachDB and Google Spanner are examples of NewSQL databases.

We believe that MemSQL brings the best SQL, distributed, and cloud-native story to the table. At the core of MemSQL is the distributed database. In the database’s control plane is a master node and other aggregator nodes responsible for splitting the query across leaf nodes, and combining the results into deterministic data sets. ACID-compliant transactions ensure each update is durably committed to the data partitions, and available for subsequent requests. In-memory skiplists speed up seeking and querying data, and completely avoid data locks.
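The aggregator/leaf split described above is a scatter-gather pattern. The Java sketch below is a toy, single-process model of that idea, not MemSQL’s implementation: the class names, the hash routing on a shard key, and the SUM-over-a-key-range query are all assumptions chosen for illustration. Each hypothetical leaf keeps its rows in `java.util.concurrent.ConcurrentSkipListMap`, the JDK’s concurrent skip list, whose operations can run from many threads at once without a global lock.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentSkipListMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Toy, single-process model of an aggregator fanning a query out to leaf partitions. */
public class ScatterGather {

    /** One hypothetical leaf partition: an ordered in-memory skip list from key to amount. */
    static final class Leaf {
        final ConcurrentSkipListMap<Long, Long> rows = new ConcurrentSkipListMap<>();

        /** Partial aggregate computed on this leaf: SUM(amount) for keys in [lo, hi). */
        long sumRange(long lo, long hi) {
            long sum = 0;
            for (long amount : rows.subMap(lo, true, hi, false).values()) {
                sum += amount;
            }
            return sum;
        }
    }

    private final List<Leaf> leaves = new ArrayList<>();

    ScatterGather(int leafCount) {
        for (int i = 0; i < leafCount; i++) {
            leaves.add(new Leaf());
        }
    }

    /** Routes a row to a leaf by hashing its key (the assumed shard key). */
    void insert(long key, long amount) {
        int idx = Math.floorMod(Long.hashCode(key), leaves.size());
        leaves.get(idx).rows.put(key, amount);
    }

    /** Aggregator: scatter the query to every leaf in parallel, then gather and combine. */
    long sumRange(long lo, long hi) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(leaves.size());
        try {
            List<Future<Long>> partials = new ArrayList<>();
            for (Leaf leaf : leaves) {
                partials.add(pool.submit(() -> leaf.sumRange(lo, hi)));   // scatter
            }
            long total = 0;
            for (Future<Long> partial : partials) {
                total += partial.get();                                   // gather
            }
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        ScatterGather db = new ScatterGather(4);
        for (long k = 1; k <= 100; k++) {
            db.insert(k, k);   // amount equals key, so sums are easy to check
        }
        System.out.println(db.sumRange(1, 11));   // 55 (1 + 2 + ... + 10)
    }
}
```

Because every row lives on exactly one leaf, adding the partial sums gives the same answer a single-node SUM would, which is what makes this kind of split deterministic.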

MemSQL Helios delivers the same boundless scale engine as a managed service in the cloud. No longer do you need to provision additional hardware or carve out VMs. Merely drag a slider up or down to ensure the capacity you need is available.

MemSQL is able to ingest data from Kafka streams, from S3 buckets of data stored in JSON, CSV, and other formats, and deliver the data into place without interrupting real-time analytical queries. Native transforms allow shelling out into any process to transform or augment the data, such as calling into a Spark ML model.

MemSQL stores relational data and document data (in JSON columns), provides time-series windowing functions, and supports both super-fast in-memory rowstore tables snapshotted to disk and disk-based columnstore tables that are heavily cached in memory.
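To make the rowstore/columnstore distinction concrete, the following Java sketch holds the same hypothetical orders table both ways. It only models the in-memory layout (it is not how MemSQL stores data), and it uses a `record`, so it assumes Java 16 or later.

```java
import java.util.List;

/** Toy illustration of the same hypothetical table held row-wise and column-wise. */
public class RowVsColumn {

    /** Rowstore-style: each record's fields stay together (suits point lookups and row updates). */
    record Order(long id, String customer, double amount) {}

    /** Columnstore-style: each field lives in its own contiguous array (suits scans of one column). */
    static final class OrderColumns {
        final long[] ids;
        final String[] customers;
        final double[] amounts;

        OrderColumns(List<Order> rows) {
            int n = rows.size();
            ids = new long[n];
            customers = new String[n];
            amounts = new double[n];
            for (int i = 0; i < n; i++) {
                Order o = rows.get(i);
                ids[i] = o.id();
                customers[i] = o.customer();
                amounts[i] = o.amount();
            }
        }

        /** SUM(amount) reads only the amounts array; ids and customers are never touched. */
        double totalAmount() {
            double sum = 0;
            for (double a : amounts) sum += a;
            return sum;
        }
    }

    /** Row-wise lookup of one whole record by id (a linear scan here, for brevity). */
    static Order findById(List<Order> rows, long id) {
        for (Order o : rows) {
            if (o.id() == id) return o;
        }
        return null;
    }

    public static void main(String[] args) {
        List<Order> rows = List.of(
                new Order(1, "ada", 10.0),
                new Order(2, "grace", 20.5),
                new Order(3, "linus", 30.0));
        OrderColumns columns = new OrderColumns(rows);
        System.out.println(columns.totalAmount());          // 60.5
        System.out.println(findById(rows, 2).customer());   // grace
    }
}
```

The trade-off is the usual one: a row layout keeps a whole record together for point reads and updates, while a column layout lets an aggregate such as SUM(amount) scan one dense array and skip the other columns entirely.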

As you craft the modern app stack, include MemSQL as your durable, boundless, cloud-native data store of choice.

Conclusion

Stacks have allowed us to simplify the sea of choices to a few packages known to work well together. The MEAN stack was one such toolchain that allowed developers to focus less on infrastructure choices and more on developing business value.

Sadly, the MEAN stack hasn’t aged well. We’ve moved on to the JAM stack, but this ignores the back-end completely.

As our tastes have matured, we assume more from our infrastructure. We need a cloud-native advocate that can boundlessly scale, as our users expect us to deliver superior experiences. Try MemSQL for free today, or contact us for a personalized demo.

[Source]: https://www.memsql.com/blog/whats-after-the-mean-stack/

62-hour MEAN Stack Developer Training covers MongoDB, JavaScript, AngularJS, and Node.js, plus live project development. A demo of the MEAN stack training is available.

RxJava 2.0 released, with support for Android

The RxJava team has released version 2.0 of their reactive Java framework, after an 18-month development cycle. RxJava is part of the ReactiveX family of libraries and frameworks, which is, in their words, "a combination of the best ideas from the Observer pattern, the Iterator pattern, and functional programming". The project's "What's different in 2.0" wiki page is a good guide for developers already familiar with RxJava 1.x.

RxJava 2.0 is a brand new implementation of RxJava. This release is based on the Reactive Streams specification, an initiative for providing a standard for asynchronous stream processing with non-blocking back pressure, targeting runtime environments (JVM and JavaScript) as well as network protocols.

Reactive implementations have the concepts of publishers and subscribers, as well as ways to subscribe to data streams, receive the next item in a stream, handle errors, and close the connection.

The Reactive Streams spec will be included in JDK 9 as java.util.concurrent.Flow. The following interfaces correspond to the Reactive Streams spec. As you can see, the spec is small, consisting of just four interfaces:

· Flow.Processor<T,R>: A component that acts as both a Subscriber and Publisher.

· Flow.Publisher<T>: A producer of items (and related control messages) received by Subscribers.

· Flow.Subscriber<T>: A receiver of messages.

· Flow.Subscription: Message control linking a Flow.Publisher and Flow.Subscriber.
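To see the four interfaces working together, here is a small Java 9+ pipeline built only on `java.util.concurrent.Flow` and the JDK’s `SubmissionPublisher` (this is the JDK API, not RxJava’s). A source publishes integers, a Processor squares them, and a Subscriber collects the results. Both the Processor and the Subscriber request one item at a time through their `Flow.Subscription`, which is the non-blocking back pressure the spec describes. The class names are hypothetical, and extending `SubmissionPublisher` to implement `Flow.Processor` is one common way to write a processor, not the only one.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

public class FlowPipeline {

    /** Processor: a Subscriber to the source and a Publisher downstream; squares each item. */
    static final class SquareProcessor extends SubmissionPublisher<Integer>
            implements Flow.Processor<Integer, Integer> {
        private Flow.Subscription upstream;

        @Override public void onSubscribe(Flow.Subscription subscription) {
            upstream = subscription;
            upstream.request(1);          // back pressure: ask for one item at a time
        }

        @Override public void onNext(Integer item) {
            submit(item * item);          // publish the transformed item downstream
            upstream.request(1);          // then signal demand for the next one
        }

        @Override public void onError(Throwable error) {
            closeExceptionally(error);    // propagate the failure downstream
        }

        @Override public void onComplete() {
            close();                      // propagate completion downstream
        }
    }

    /** Subscriber: collects items one at a time and records completion or failure. */
    static final class CollectingSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new CopyOnWriteArrayList<>();
        final CountDownLatch finished = new CountDownLatch(1);
        volatile Throwable failure;
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1);
        }

        @Override public void onNext(Integer item) {
            received.add(item);
            subscription.request(1);
        }

        @Override public void onError(Throwable error) {
            failure = error;
            finished.countDown();
        }

        @Override public void onComplete() {
            finished.countDown();
        }
    }

    /** Wires source -> processor -> subscriber, publishes 1..n, and waits for completion. */
    static List<Integer> run(int n) throws InterruptedException {
        CollectingSubscriber sink = new CollectingSubscriber();
        SquareProcessor squares = new SquareProcessor();
        squares.subscribe(sink);
        try (SubmissionPublisher<Integer> source = new SubmissionPublisher<>()) {
            source.subscribe(squares);
            for (int i = 1; i <= n; i++) {
                source.submit(i);
            }
        }   // closing the source sends onComplete after the already-submitted items
        if (!sink.finished.await(5, TimeUnit.SECONDS)) {
            throw new IllegalStateException("pipeline did not complete in time");
        }
        return sink.received;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(5));   // [1, 4, 9, 16, 25]
    }
}
```

Because demand is signaled with `request(1)`, a slow Subscriber is never sent more items than it asked for; `SubmissionPublisher` holds undelivered items in a per-subscriber buffer, and `submit` blocks if that buffer fills.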

Spring Framework 5 is also going reactive. To see how this looks, refer to Josh Long's Functional Reactive Endpoints with Spring Framework 5.0.

To learn more about RxJava 2.0, InfoQ interviewed its main contributor, David Karnok.

InfoQ: First of all, congrats on RxJava 2.0! 18 months in the making, that's quite a feat. What are you most proud of in this release?

David Karnok: Thanks! In some way, I wish it didn't take so long. There was a 10 month pause when Ben Christensen, the original author who started RxJava, left and there was no one at Netflix to push this forward. I'm sure many will contest that things got way better when I took over the lead role this June. I'm proud my research into more advanced and more performant Reactive Streams paid off and RxJava 2 is the proof all of it works.

InfoQ: What’s different in RxJava 2.0 and how does it help developers?

Karnok: There are a lot of differences between version 1 and 2 and it's impossible to list them all here, but you can visit the dedicated wiki page for a comprehensive explanation. In headlines, we now support the de-facto standard Reactive Streams specification, have significant overhead reduction in many places, no longer allow nulls, have split types into two groups based on support of or lack of backpressure and have explicit interop between the base reactive types.

InfoQ: Where do you see RxJava used the most (e.g. IoT, real-time data processing, etc.)?

Karnok: RxJava is more dominantly used by the Android community, based on the feedback I saw. I believe the server side is dominated by Project Reactor and Akka at the moment. I haven't specifically seen IoT projects mention or use RxJava (it requires Java), but maybe they use some other reactive library available on their platform. For real-time data processing people still tend to use other solutions, most of them not really reactive, and I'm not aware of any providers (maybe Pivotal) who are pushing for reactive in this area.

InfoQ: What benefits does RxJava provide Android more than other environments that would explain the increased traction?

Karnok: As far as I see, Android wanted to "help" their users solving async and concurrent problems with Android-only tools such as AsyncTask, Looper/Handler etc.