#sql failover

Aurora PostgreSQL VS Azure SQL Failover Speeds

Introduction

Have you ever wondered how different cloud SQL options compare when it comes to high availability and failover speed? As someone who manages critical databases in the cloud, I’m always evaluating the resilience and recovery capabilities of the platforms we use. Today, I want to share some insights into how Amazon Aurora PostgreSQL and the Microsoft Azure SQL options measure up in…

Building a multi-zone and multi-region SQL Server Failover Cluster Instance in Azure

Much has been written about SQL Server Always On Availability Groups, but the topic of SQL Server Failover Cluster Instances (FCI) that span both availability zones and regions is far less discussed. However, for organizations that require SQL Server high availability (HA) and disaster recovery (DR) without the added licensing costs of Enterprise Edition, SQL Server FCI remains a powerful and…

U.S. Cloud DBaaS Market Set for Explosive Growth Amid Digital Transformation Through 2032

The global Cloud Database and DBaaS Market was valued at USD 17.51 billion in 2023 and is expected to reach USD 77.65 billion by 2032, growing at a CAGR of 18.07% from 2024 to 2032.

Cloud Database and DBaaS Market is witnessing accelerated growth as organizations prioritize scalability, flexibility, and real-time data access. With the surge in digital transformation, U.S.-based enterprises across industries—from fintech to healthcare—are shifting from traditional databases to cloud-native solutions that offer seamless performance and cost efficiency.

U.S. Cloud Database & DBaaS Market Sees Robust Growth Amid Surge in Enterprise Cloud Adoption

The U.S. Cloud Database and DBaaS Market was valued at USD 4.80 billion in 2023 and is expected to reach USD 21.00 billion by 2032, growing at a CAGR of 17.82% from 2024 to 2032.
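As a quick arithmetic check on these growth figures: compound annual growth rate (CAGR) is (end value / start value)^(1/years) - 1. The sketch below assumes the 2023 valuation is the base and 2032 the end point, i.e. nine compounding years; the report does not state its exact method. Under that assumption the U.S. figures reproduce the stated 17.82%, while the global figures work out to roughly 18.0%, slightly below the quoted 18.07%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report, in USD billions.
# Assumption: 2023 -> 2032 counts as nine compounding years.
global_rate = cagr(17.51, 77.65, 9)
us_rate = cagr(4.80, 21.00, 9)

print(f"Global CAGR: {global_rate:.2%}")
print(f"U.S. CAGR:   {us_rate:.2%}")
```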

Cloud Database and DBaaS Market continues to evolve with strong momentum in the USA, driven by increasing demand for managed services, reduced infrastructure costs, and the rise of multi-cloud environments. As data volumes expand and applications require high availability, cloud database platforms are emerging as strategic assets for modern enterprises.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6586

Market Key Players:

Google LLC (Cloud SQL, BigQuery)

Nutanix (Era, Nutanix Database Service)

Oracle Corporation (Autonomous Database, Exadata Cloud Service)

IBM Corporation (Db2 on Cloud, Cloudant)

SAP SE (HANA Cloud, Data Intelligence)

Amazon Web Services, Inc. (RDS, Aurora)

Alibaba Cloud (ApsaraDB for RDS, ApsaraDB for MongoDB)

MongoDB, Inc. (Atlas, Enterprise Advanced)

Microsoft Corporation (Azure SQL Database, Cosmos DB)

Teradata (VantageCloud, ClearScape Analytics)

Ninox (Cloud Database, App Builder)

DataStax (Astra DB, Enterprise)

EnterpriseDB Corporation (Postgres Cloud Database, BigAnimal)

Rackspace Technology, Inc. (Managed Database Services, Cloud Databases for MySQL)

DigitalOcean, Inc. (Managed Databases, App Platform)

IDEMIA (IDway Cloud Services, Digital Identity Platform)

NEC Corporation (Cloud IaaS, the WISE Data Platform)

Thales Group (CipherTrust Cloud Key Manager, Data Protection on Demand)

Market Analysis

The Cloud Database and DBaaS (Database-as-a-Service) Market is being fueled by a growing need for on-demand data processing and real-time analytics. Organizations are seeking solutions that provide minimal maintenance, automatic scaling, and built-in security. U.S. companies, in particular, are leading adoption due to strong cloud infrastructure, high data dependency, and an agile tech landscape.

Public cloud providers like AWS, Microsoft Azure, and Google Cloud dominate the market, while niche players continue to innovate in areas such as serverless databases and AI-optimized storage. The integration of DBaaS with data lakes, containerized environments, and AI/ML pipelines is redefining the future of enterprise database management.

Market Trends

Increased adoption of multi-cloud and hybrid database architectures

Growth in AI-integrated database services for predictive analytics

Surge in serverless DBaaS models for agile development

Expansion of NoSQL and NewSQL databases to support unstructured data

Data sovereignty and compliance shaping platform features

Automated backup, disaster recovery, and failover features gaining popularity

Growing reliance on DBaaS for mobile and IoT application support

Market Scope

The market scope extends beyond traditional data storage, positioning cloud databases and DBaaS as critical enablers of digital agility. Businesses are embracing these solutions not just for infrastructure efficiency, but for innovation acceleration.

Scalable and elastic infrastructure for dynamic workloads

Fully managed services reducing operational complexity

Integration-ready with modern DevOps and CI/CD pipelines

Real-time analytics and data visualization capabilities

Seamless migration support from legacy systems

Security-first design with end-to-end encryption

Forecast Outlook

The Cloud Database and DBaaS Market is expected to grow substantially as U.S. businesses increasingly seek cloud-native ecosystems that deliver both performance and adaptability. With a sharp focus on automation, real-time access, and AI-readiness, the market is transforming into a core element of enterprise IT strategy. Providers that offer interoperability, data resilience, and compliance alignment will stand out as leaders in this rapidly advancing space.

Access Complete Report: https://www.snsinsider.com/reports/cloud-database-and-dbaas-market-6586

Conclusion

The future of data is cloud-powered, and the Cloud Database and DBaaS Market is at the forefront of this transformation. As American enterprises accelerate their digital journeys, the demand for intelligent, secure, and scalable database services continues to rise.

Related Reports:

Analyze U.S. market demand for advanced cloud security solutions

Explore trends shaping the Cloud Data Security Market in the U.S.

About Us:

SNS Insider is one of the leading market research and consulting agencies in the global market research industry. Our company's aim is to give clients the knowledge they require to function in changing circumstances. To give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1 315 636 4242 (US) | +44 20 3290 5010 (UK)

The Hidden Hero of Software Success: Inside EDSPL’s Unmatched Testing & QA Framework

When a software product goes live without glitches, users often marvel at its speed, design, or functionality. What they don’t see is the invisible layer of discipline, precision, and strategy that made it possible — Testing and Quality Assurance (QA). At EDSPL, QA isn’t just a step in the process; it’s the very spine that supports software integrity from start to finish.

As digital applications grow more interconnected, especially with advancements in network security, cloud security, application security, and infrastructure domains like routing, switching, and mobility, quality assurance becomes the glue holding it all together. EDSPL’s comprehensive QA and testing framework has been fine-tuned to ensure consistent performance, reliability, and security — no matter how complex the software environment.

Let’s go behind the scenes of EDSPL’s QA approach to understand why it is a hidden hero in modern software success.

Why QA Is More Crucial Than Ever

The software ecosystem is no longer siloed. Enterprises now rely on integrated systems that span cloud platforms, APIs, mobile devices, and legacy systems — all of which need to work in sync without error.

From safeguarding sensitive data through network security protocols to validating business-critical workflows on the cloud, EDSPL ensures that testing extends beyond functionality. It is a guardrail for security, compliance, performance, and user trust.

Without rigorous QA, a minor bug in a login screen could lead to a vulnerability that compromises an entire system. EDSPL prevents these catastrophes by placing QA at the heart of its delivery model.

QA Touchpoints Across EDSPL’s Service Spectrum

Let’s explore how EDSPL’s testing excellence integrates into different service domains.

1. Ensuring Safe Digital Highways through Network Security

In an era where cyber threats can cripple operations, QA isn’t just about validating code — it’s about verifying that security holds up under stress. EDSPL incorporates penetration testing, vulnerability assessments, and simulation-based security testing into its QA model to validate:

Firewall behavior

Data leakage prevention

Encryption mechanisms

Network segmentation efficacy

By integrating QA with network security, EDSPL ensures clients launch digitally fortified applications.

2. Reliable Application Delivery on the Cloud

Cloud-native and hybrid applications are central to enterprise growth, but they also introduce shared responsibility models. EDSPL’s QA ensures that deployment across cloud platforms is:

Secure from misconfigurations

Optimized for performance

Compliant with governance standards

Whether it’s AWS, Azure, or GCP, EDSPL’s QA framework validates data access policies, scalability limits, and containerized environments. This ensures smooth delivery across the cloud with airtight cloud security guarantees.

3. Stress-Testing Application Security

Modern applications are constantly exposed to APIs, users, and third-party integrations. EDSPL includes robust application security testing as part of QA by simulating real-world attacks and identifying:

Cross-site scripting (XSS) vulnerabilities

SQL injection points

Broken authentication scenarios

API endpoint weaknesses

By using both manual and automated testing methods, EDSPL ensures applications are resilient to threat vectors and function smoothly across platforms.
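As one illustration of an automated check for SQL injection points, here is a minimal Python sketch using the standard-library sqlite3 module. The users table, its rows, and the payload list are hypothetical. The idea is that a lookup built with a parameterized query should match no rows for classic injection strings and should leave the table intact. This is a sketch of the technique, not EDSPL's actual test suite.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized query: the driver binds `username` as data, never as SQL text.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def make_test_db() -> sqlite3.Connection:
    # In-memory database with hypothetical sample rows.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    return conn


# Classic payloads; a safe lookup must not match any rows for them.
INJECTION_PAYLOADS = ["' OR '1'='1", "alice' --", "x'; DROP TABLE users; --"]

conn = make_test_db()
for payload in INJECTION_PAYLOADS:
    assert find_user(conn, payload) == [], f"payload matched rows: {payload!r}"

# Legitimate lookups still work, and the DROP TABLE payload did nothing.
assert find_user(conn, "alice") == [(1, "alice")]
assert conn.execute("SELECT COUNT(*) FROM users").fetchone()[0] == 2
print("all injection checks passed")
```

If `find_user` instead built its SQL with string formatting, the first payload would return every row, which is exactly the kind of defect such a test is meant to catch.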

4. Validating Enterprise Network Logic through Routing and Switching

Routing and switching are the operational backbone of any connected system. When software solutions interact with infrastructure-level components, QA plays a key role in ensuring:

Data packets travel securely and efficiently

VLANs are correctly configured

Dynamic routing protocols function without interruption

Failover and redundancy mechanisms are effective

EDSPL’s QA team uses emulators and simulation tools to test against varied network topologies and configurations. This level of QA ensures that software remains robust across different environments.
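Failover checks usually come down to measuring how long a service was unreachable during a simulated fault. The sketch below assumes a hypothetical probe that records (timestamp, succeeded) health-check samples while a failover is triggered, and it computes the longest outage window from those samples. Its precision is limited by the probe interval: the true outage can differ from the reported value by up to one interval in either direction.

```python
from typing import Optional, Sequence, Tuple

# (seconds since the drill started, did the health check succeed?)
Sample = Tuple[float, bool]


def longest_outage(samples: Sequence[Sample]) -> float:
    """Longest span from the first failed check to the next successful one.

    An outage still open at the end of the run is measured up to the last sample.
    """
    longest = 0.0
    outage_start: Optional[float] = None
    last_ts = 0.0
    for ts, ok in sorted(samples):
        last_ts = ts
        if not ok and outage_start is None:
            outage_start = ts  # first failure after a healthy period
        elif ok and outage_start is not None:
            longest = max(longest, ts - outage_start)
            outage_start = None  # service is back
    if outage_start is not None:
        longest = max(longest, last_ts - outage_start)
    return longest


# Hypothetical probe results: primary fails at t=2.0s, standby answers at t=4.5s.
samples = [(0.0, True), (1.0, True), (2.0, False), (3.0, False), (4.5, True), (5.0, True)]
print(f"Longest outage: {longest_outage(samples):.1f}s")
```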

5. Securing Agile Teams on the Move with Mobility Testing

With a growing mobile workforce, enterprise applications must be optimized for mobile-first use cases. EDSPL’s QA team conducts deep mobility testing that includes:

Device compatibility across Android/iOS

Network condition simulation (3G/4G/5G/Wi-Fi)

Real-time responsiveness

Security over public networks

Mobile-specific security testing (root detection, data sandboxing, etc.)

This ensures that enterprise mobility solutions are secure, efficient, and universally accessible.

6. QA for Integrated Services

At its core, EDSPL offers an integrated suite of IT and software services. QA is embedded across all of them — from full-stack development to API design, cloud deployment, infrastructure automation, and cybersecurity.

Key QA activities include:

Regression testing for evolving features

Functional and integration testing across service boundaries

Automation testing to reduce human error

Performance benchmarking under realistic conditions

Whether it's launching a government portal or a fintech app, EDSPL's services rely on QA to deliver dependable digital experiences.

The QA Framework: Built for Resilience and Speed

EDSPL has invested in building a QA framework that balances speed with precision. Here's what defines it:

1. Shift-Left Testing

QA begins during requirements gathering, not after development. This reduces costs and rework and aligns product strategy with user needs.

2. Continuous Integration & Automated Testing

Automation tools are deeply integrated with CI/CD pipelines to support agile delivery. Tests run with every commit, giving developers instant feedback and reducing deployment delays.

3. Security-First QA Culture

Security checks are integrated into every QA cycle, not treated as separate audits. This creates a proactive defense mechanism and encourages developers to write secure code from day one.

4. Test Data Management

EDSPL uses production-simulated datasets to ensure test scenarios reflect real-world user behavior. This improves defect prediction and minimizes surprises post-launch.

5. Reporting & Metrics

QA results are analyzed using KPIs like defect leakage rate, test coverage, mean time to resolve, and user-reported issue rates. These metrics drive continuous improvement.
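These KPIs can be computed from ordinary defect and incident records. Below is a minimal sketch assuming common definitions: defect leakage as post-release defects divided by all defects found, coverage as covered items over total items, and mean time to resolve as the average of (resolved minus opened) times. Organizations define these metrics differently, so treat the formulas and the sample numbers as assumptions rather than EDSPL's own calculations.

```python
from datetime import datetime, timedelta
from statistics import mean


def defect_leakage_rate(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that were found only after release (0.0 to 1.0)."""
    total = found_before_release + found_after_release
    return found_after_release / total if total else 0.0


def coverage_ratio(covered_items: int, total_items: int) -> float:
    """Fraction of items (requirements, lines, branches...) exercised by tests."""
    return covered_items / total_items if total_items else 0.0


def mean_time_to_resolve(incidents: list) -> timedelta:
    """Average of (resolved - opened) over (opened, resolved) datetime pairs."""
    if not incidents:
        return timedelta(0)
    durations = [(resolved - opened).total_seconds() for opened, resolved in incidents]
    return timedelta(seconds=mean(durations))


# Hypothetical release data.
opened = datetime(2024, 3, 1, 9, 0)
incidents = [(opened, opened + timedelta(hours=2)), (opened, opened + timedelta(hours=4))]
print(f"Defect leakage: {defect_leakage_rate(95, 5):.1%}")
print(f"Coverage:       {coverage_ratio(412, 500):.1%}")
print(f"MTTR:           {mean_time_to_resolve(incidents)}")
```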

Case Studies: Impact Through Quality

A National Education Platform

EDSPL was tasked with launching a high-traffic education portal with live video, assessments, and resource sharing. The QA team created an end-to-end test architecture including performance, usability, and application security testing.

Results:

99.9% uptime during national rollout

Zero critical issues in the first 90 days

100K+ concurrent users supported with no lag

A Banking App with Cloud-Native Architecture

A private bank chose EDSPL for QA on a mobile app deployed on the cloud. The QA team validated the app’s security posture, cloud security, and resilience under high load.

Results:

Passed all OWASP compliance checks

Load testing confirmed support for 5,000+ concurrent sessions

Automated testing reduced release cycles by 40%

Future-Ready QA: AI, RPA, and Autonomous Testing

EDSPL’s QA roadmap includes:

AI-based test generation from user behavior patterns

Self-healing automation for flaky test cases

RPA integration for business process validation

Predictive QA using machine learning to forecast defects

These capabilities ensure that EDSPL’s QA framework not only adapts to today’s demands but also evolves with future technologies.

Conclusion: Behind Every Great Software Is Greater QA

While marketing, development, and design get much of the spotlight, software success is impossible without a strong QA foundation. At EDSPL, testing is not a checkbox — it’s a commitment to excellence, safety, and performance.

From network security to cloud security, from routing to mobility, QA is integrated into every layer of the digital infrastructure. It is the thread that ties all services together into a reliable, secure, and scalable product offering.

When businesses choose EDSPL, they’re not just buying software — they’re investing in peace of mind, powered by an unmatched QA framework.

Visit https://www.edspl.net/ to learn more.

Amazon Aurora | High-Performance Managed Relational Database

Amazon Aurora

Amazon Aurora is a fully managed relational database engine compatible with both MySQL and PostgreSQL. It’s engineered for high performance: AWS cites up to five times the throughput of standard MySQL and up to three times that of standard PostgreSQL. Aurora is ideal for high-demand applications requiring superior speed, availability, and scalability.

- Key Features:

- Automatic, continuous backups and point-in-time recovery.

- Multi-AZ deployment with automatic failover.

- Storage that automatically grows as needed up to 128 TB.

- Global database support for cross-region replication.

- Use Cases:

- High-traffic web and mobile applications.

- Enterprise applications requiring high availability and fault tolerance.

- Real-time analytics and e-commerce platforms.
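With Multi-AZ automatic failover, Aurora promotes a replica and updates the cluster endpoint to point at the new writer, but clients still see failed connections for a short window. A common client-side pattern is to retry with exponential backoff and jitter. The sketch below is driver-agnostic: `connect` is a hypothetical zero-argument function that opens a fresh connection to the cluster endpoint and raises ConnectionError on failure. Real PostgreSQL or MySQL drivers raise their own exception types, which you would catch instead.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_retry(
    connect: Callable[[], T],
    max_attempts: int = 6,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `connect` with capped exponential backoff and full jitter.

    `connect` is a hypothetical callable that opens a NEW connection each
    time, so a fresh DNS lookup has a chance to pick up the promoted writer.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the outage to the caller
            # Ceiling grows 0.5s, 1s, 2s, ... up to max_delay; the random wait
            # below it keeps many clients from retrying in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable")
```

Injecting `sleep` keeps the function testable without real delays; in production the default `time.sleep` is used.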

Key Benefits of Choosing the Right Amazon RDS Database:

1. Optimized Performance: Select an engine that matches your performance needs, ensuring efficient data processing and application responsiveness.

2. Scalability: Choose a database that scales seamlessly with your growing data and traffic demands, avoiding performance bottlenecks.

3. Cost Efficiency: Find a solution that fits your budget while providing the necessary features and performance.

4. Enhanced Features: Leverage advanced capabilities specific to each engine to meet your application's unique requirements.

5. Simplified Management: Benefit from managed services that reduce administrative tasks and streamline database operations.

Conclusion:

Choosing the right Amazon RDS database engine is critical for achieving the best performance, scalability, and functionality for your application. Each engine offers unique features and advantages tailored to specific use cases, whether you need the speed of Aurora, the extensibility of PostgreSQL, the enterprise features of SQL Server, or the robustness of Oracle. Understanding these options helps ensure that your database infrastructure meets your application’s needs, both now and in the future.

#youtube#Amazon RDS RDS Monitoring AWS Performance Insights Optimize RDS Amazon CloudWatch Enhanced Monitoring AWS AWS DevOps Tutorial AWS Hands-On C

Strategic Database Solutions for Modern Business Needs

Today’s businesses rely on secure, fast, and scalable systems to manage data across distributed teams and environments. As demand for flexibility and 24/7 support increases, database administration services have become central to operational stability. These services go far beyond routine backups—they include performance tuning, capacity planning, recovery strategies, and compliance support.

Adopting Agile Support with Flexible Engagement Models

Companies under pressure to scale operations without adding internal overhead are increasingly turning to outsourced database administration. This approach provides round-the-clock monitoring, specialised expertise, and faster resolution times, all without the cost of hiring full-time staff. With database workloads becoming more complex, outsourced solutions help businesses keep pace with technology changes while controlling costs.

What Makes Outsourced Services So Effective

The benefit of using outsourced database administration services lies in having instant access to certified professionals who are trained across multiple platforms—whether Oracle, SQL Server, PostgreSQL, or cloud-native options. These experts can handle upgrades, patching, and diagnostics with precision, allowing internal teams to focus on core business activities instead of infrastructure maintenance.

Cost-Effective Performance Management at Scale

Companies looking to outsource DBA roles often do so to reduce capital expenditure and increase operational efficiency. Outsourcing allows businesses to pay only for the resources they need, when they need them—without being tied to long-term contracts or dealing with the complexities of recruitment. This flexibility is especially valuable for businesses managing seasonal spikes or undergoing digital transformation projects.

Minimizing Downtime Through Proactive Monitoring

Modern database administration services go beyond traditional support models by offering real-time health checks, automatic alerts, and predictive performance analysis. These features help identify bottlenecks or security issues before they impact users. Proactive support allows organisations to meet service-level agreements (SLAs) and deliver consistent performance to customers and internal stakeholders.

How External Partners Fill Critical Skill Gaps

Working with experienced database administration outsourcing companies can close gaps in internal knowledge, especially when managing hybrid or multi-cloud environments. These companies typically have teams with varied technical certifications and deep domain experience, making them well-equipped to support both legacy systems and modern architecture. The result is stronger resilience and adaptability in managing database infrastructure.

Supporting Business Continuity with Professional Oversight

Efficient database administration includes everything from setting up new environments to handling failover protocols and disaster recovery planning. With dedicated oversight, businesses can avoid unplanned outages and meet compliance requirements, even during migrations or platform upgrades. The focus on stability and scalability helps maintain operational continuity in high-demand settings.

Windows Dedicated Hosting by CloudMinister Technologies

Unlock Peak Performance with Windows Dedicated Hosting at CloudMinister Technologies

In a world driven by data and performance, having the right hosting solution is no longer a luxury—it’s a necessity. Whether you’re managing enterprise applications, e-commerce platforms, or custom software environments, choosing a reliable and high-performance server can make or break your digital success.

That’s where Windows Dedicated Hosting by CloudMinister Technologies steps in, offering a powerful and secure hosting environment designed for businesses that demand the best.

What Is Windows Dedicated Hosting?

Windows Dedicated Hosting refers to a hosting setup where a physical server is dedicated exclusively to a single user or organization. Unlike shared or VPS hosting, you don’t share resources—CPU, RAM, storage, or bandwidth—with any other user. It runs on the Windows operating system, making it ideal for businesses that rely on Microsoft technologies such as:

ASP.NET and .NET Core applications

Microsoft SQL Server (MSSQL) databases

Microsoft Exchange and SharePoint servers

Remote Desktop Services (RDS)

Custom Windows-based enterprise applications

This hosting environment offers unparalleled control, performance, and flexibility, making it a top choice for mission-critical operations.

Why Choose CloudMinister Technologies for Windows Dedicated Hosting?

At CloudMinister Technologies, we understand that every business is different—and so are their hosting requirements. That’s why we offer fully customizable and scalable Windows Dedicated Hosting solutions that are optimized for both performance and security.

1. Uncompromised Performance

Our dedicated servers are equipped with enterprise-grade Intel Xeon processors, NVMe SSD storage, and high-speed DDR4 RAM. This ensures lightning-fast data processing, faster website load times, and seamless application execution.

2. 100% Resource Allocation

With Windows Dedicated Hosting at CloudMinister Technologies, you have complete control over your server’s resources. No noisy neighbors. No shared environments. Just pure, uninterrupted performance for your applications.

3. Full Administrator Access

We provide full administrative (RDP) access to your server, allowing you to install and manage your applications, software, and configurations with total freedom.

4. Advanced Security Measures

Security is a top priority. Our dedicated hosting comes with:

DDoS protection

Firewalls & Intrusion Detection Systems

Regular patch management

Real-time monitoring

Data backups and disaster recovery options

With CloudMinister Technologies, you get peace of mind knowing your digital assets are protected 24/7.

5. Tailored Solutions for Every Business

Whether you're a growing startup, an established enterprise, or a SaaS provider, our team works closely with you to design a custom Windows Dedicated Hosting plan that meets your specific needs—no cookie-cutter solutions here.

6. 24/7 Premium Technical Support

Our certified Windows Server experts are available around the clock to help you with everything from initial setup to complex server management. We don’t just offer support—we become an extension of your IT team.

7. High Availability and Uptime Guarantee

Downtime is costly. Our infrastructure is designed with redundancy and failover systems to ensure high availability. That’s why we offer a 99.99% uptime guarantee across all our Windows Dedicated Hosting services.
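To put an uptime percentage in concrete terms, the permitted downtime is the length of the period multiplied by (1 - uptime). The sketch below assumes a 30-day month and a 365-day year. Under those assumptions a 99.99% commitment allows about 4.32 minutes of downtime per month and about 52.56 minutes per year. How uptime is measured, and what is excluded (for example scheduled maintenance), is defined in the provider's SLA, not here.

```python
def allowed_downtime_minutes(uptime_pct: float, period_days: float) -> float:
    """Maximum downtime in minutes permitted by an uptime percentage over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_pct / 100)


# Assumed period lengths: 30-day month and 365-day year.
for label, days in [("30-day month", 30), ("365-day year", 365)]:
    print(f"99.99% over a {label}: {allowed_downtime_minutes(99.99, days):.2f} min")
```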

Key Features at a Glance

Fully Managed or Self-Managed Options

Latest Windows Server Versions (2019, 2022, etc.)

SSD & NVMe Storage for Ultra-Fast Performance

High Bandwidth & Network Redundancy

Daily Backups & Optional Disaster Recovery

Scalable Plans for Enterprise Growth

User-Friendly Control Panels (Plesk, Webuzo, etc.)

Who Should Use Windows Dedicated Hosting?

Windows Dedicated Hosting from CloudMinister Technologies is ideal for:

Enterprises running Windows-based ERP or CRM software

Developers deploying .NET-based web applications

Businesses needing isolated environments for sensitive data

E-commerce platforms needing robust security and high performance

Agencies managing high-traffic websites with custom integrations

Why CloudMinister Technologies Is a Trusted Choice

With years of experience in cloud infrastructure and hosting, CloudMinister Technologies has become a go-to provider for businesses seeking powerful, reliable, and scalable hosting environments.

We’re more than just a hosting provider—we’re a technology partner committed to your digital growth. Our customers trust us because we:

Deliver solutions that align with business goals

Offer honest, transparent pricing with no hidden fees

Provide fast onboarding and migration support

Operate with integrity and a customer-first approach

Final Thoughts

Choosing the right hosting solution can have a massive impact on your business performance, security, and growth. With Windows Dedicated Hosting from CloudMinister Technologies, you gain the power of exclusive server resources, enterprise-level infrastructure, and dedicated technical support—all optimized for your success.

For more information, visit www.cloudminister.com

#cloudminister technologies#hosting#servers#technology#information technology#windows dedicated hosting

Azure Data Factory for Financial Services: Ensuring Compliance and Secure Data Movement

Financial services organizations handle vast amounts of sensitive data, including customer financial transactions, regulatory reports, and risk assessments. Ensuring secure data movement while complying with industry regulations is crucial. Azure Data Factory (ADF) provides a robust, scalable, and secure ETL (Extract, Transform, Load) solution for financial institutions.

Key Compliance Considerations in Financial Services

Financial services organizations must adhere to various regulatory frameworks, such as:

General Data Protection Regulation (GDPR): Protects personal data and privacy.

Payment Card Industry Data Security Standard (PCI DSS): Ensures secure handling of credit card information.

Sarbanes-Oxley Act (SOX): Mandates financial reporting integrity.

Financial Industry Regulatory Authority (FINRA) rules: Govern securities firms and brokers.

How Azure Data Factory Ensures Compliance

1. Secure Data Integration and Movement

ADF provides secure data movement across hybrid and multi-cloud environments.

Encryption: Data in transit is protected with TLS, and ADF encrypts data at rest, optionally with customer-managed keys stored in Azure Key Vault.

Private Endpoints: Prevent data exposure by using Azure Private Link for secure connectivity.

Self-Hosted Integration Runtime: Enables on-premises to cloud data transfer while maintaining security controls.

2. Data Masking and Anonymization

To protect personally identifiable information (PII), ADF pipelines can work alongside Azure SQL Database Dynamic Data Masking and Azure Data Lake Storage access control lists (ACLs), which restrict access to sensitive data.

3. Audit Logging and Monitoring

Financial organizations need detailed audit trails. ADF offers:

Azure Monitor & Log Analytics: Tracks pipeline activities and security events.

Azure Policy & Compliance Dashboard: Helps enforce compliance across data pipelines.

Role-Based Access Control (RBAC): Ensures least privilege access to sensitive data.

4. Data Lineage and Governance

Using Microsoft Purview (formerly Azure Purview), financial institutions can track data lineage, ensuring transparency in data transformations, storage, and movement. This helps in meeting regulatory audit requirements.

5. Disaster Recovery and High Availability

Geo-Redundant Data Stores: Azure ensures business continuity with automated failover mechanisms.

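Backup & Restore: ADF pipeline definitions can be kept in Git source control for recovery, while the underlying data stores rely on their own backup services, such as Azure Backup, to prevent data loss.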

Use Case: Secure ETL for Fraud Detection

A financial institution needs to process large volumes of real-time transactional data to detect fraud.

Ingest Data: ADF securely pulls data from bank transactions, payment gateways, and mobile banking logs.

Transform & Mask Sensitive Data: Data transformations remove PII while preserving analytics value.

Load into a Data Warehouse: Data is securely loaded into Azure Synapse Analytics for fraud pattern detection.
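The masking step in this flow can be sketched in plain Python. In ADF this logic would typically live in a mapping data flow or in code invoked by the pipeline; the snippet only illustrates the idea. The field names and sample record are hypothetical, and in practice the key would come from a secret store such as Azure Key Vault rather than being hard-coded. A keyed hash (HMAC-SHA256) is used instead of a plain hash because account numbers have a small, structured keyspace that could be brute-forced against an unkeyed hash, while a stable token still lets analysts group transactions per account.

```python
import hashlib
import hmac

# Hypothetical direct identifiers that are dropped outright.
PII_FIELDS = {"customer_name", "email"}


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same account always maps to the same 64-hex-char token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_transaction(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and tokenize the account ID; keep analytic fields."""
    masked = {k: v for k, v in record.items() if k not in PII_FIELDS}
    masked["account_id"] = pseudonymize(record["account_id"], key)
    return masked


# Hypothetical input record and key (a real key would come from a secret store).
record = {
    "transaction_id": "T-1001",
    "account_id": "GB29NWBK60161331926819",
    "customer_name": "Jane Doe",
    "email": "jane@example.com",
    "amount": 249.99,
    "merchant": "Example Store",
}
key = b"replace-with-a-secret-from-key-vault"
print(mask_transaction(record, key))
```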

Conclusion

Azure Data Factory offers a compliant, secure, and scalable data integration solution for financial services. By leveraging encryption, access controls, audit logging, and compliance frameworks, financial institutions can confidently move sensitive data while meeting regulatory standards.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

A new SAP BASIS consultant faces several challenges when starting in the role. Here are the most common ones:

1. Complex Learning Curve

SAP BASIS covers a broad range of topics, including system administration, database management, performance tuning, and security.

Understanding how different SAP components (ERP, S/4HANA, BW, Solution Manager) interact can be overwhelming.

2. System Installations & Migrations

Setting up and configuring an SAP landscape requires deep knowledge of operating systems (Windows, Linux) and databases (HANA, Oracle, SQL Server).

Migration projects, such as moving from on-premises systems to SAP BTP or HANA, involve risks like downtime and data loss.

3. Performance Tuning & Troubleshooting

Identifying bottlenecks in SAP system performance can be challenging due to the complexity of memory management, work processes, and database indexing.

Log analysis and troubleshooting unexpected errors demand experience and knowledge of SAP Notes.

4. Security & User Management

Setting up user roles and authorizations correctly in SAP is critical to avoid security breaches.

Managing Single Sign-On (SSO) and integration with external authentication tools can be tricky.

5. Handling System Upgrades & Patching

Applying support packs, kernel upgrades, and enhancement packages requires careful planning to avoid system downtime or conflicts.

Ensuring compatibility with custom developments (Z programs) and third-party integrations is essential.

6. High Availability & Disaster Recovery

Understanding failover mechanisms, system clustering, and backup/restore procedures is crucial for minimizing downtime.

Ensuring business continuity in case of server crashes or database failures requires strong disaster recovery planning.

7. Communication & Coordination

Working with functional consultants, developers, and business users to resolve issues can be challenging if there’s a lack of clear communication.

Managing stakeholder expectations during system outages or performance issues is critical.

8. Monitoring & Proactive Maintenance

New BASIS consultants may struggle with configuring SAP Solution Manager for system monitoring and proactive alerts.

Setting up background jobs, spool management, and RFC connections efficiently takes practice.

9. Managing Transport Requests

Transporting changes across SAP environments (DEV → QA → PROD) without errors requires an understanding of transport logs and dependencies.

Incorrect transport sequences can cause system inconsistencies.
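Getting the import sequence right is a dependency-ordering problem. As a sketch, assuming you have already worked out which transport requests depend on which (for example, a report that uses a table delivered in an earlier request), a topological sort yields a safe order and flags circular dependencies. The request IDs are hypothetical, and Python's standard graphlib module (3.9+) is used only for illustration; it is not a replacement for the import queue handling in SAP's Transport Management System.

```python
from graphlib import CycleError, TopologicalSorter


def import_order(dependencies: dict) -> list:
    """Order requests so each one comes after the requests it depends on.

    `dependencies` maps a request ID to the set of request IDs it needs first.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical transport requests and their dependencies.
deps = {
    "DEVK900103": {"DEVK900101"},               # report uses a table from ...101
    "DEVK900104": {"DEVK900102", "DEVK900103"},
    "DEVK900102": set(),
    "DEVK900101": set(),
}
print(import_order(deps))
```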

10. Staying Updated with SAP Evolution

SAP is rapidly evolving, especially with the shift to SAP S/4HANA and cloud solutions.

Continuous learning is required to stay up to date with new technologies like SAP BTP, Cloud ALM, and AI-driven automation.


Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


Choosing Between Failover Cluster Instances and Availability Groups in SQL Server

In SQL Server, high availability and disaster recovery are crucial aspects of database management. Two popular options for achieving these goals are Failover Cluster Instances (FCIs) and Availability Groups (AGs). While both technologies aim to minimize downtime and ensure data integrity, they have distinct use cases and benefits. In this article, we’ll explore scenarios where Failover Cluster…


SQL Server: A Comprehensive Overview

SQL Server, developed by Microsoft, is a powerful relational database management system (RDBMS) used by organizations worldwide to store and manage data efficiently. It provides a robust platform for database operations, including data storage, retrieval, security, and analysis. SQL Server is known for its scalability, reliability, and integration with other Microsoft services, making it a common choice for businesses of all sizes.

Key Features of SQL Server

1. Scalability and Performance

SQL Server is designed to handle large-scale databases while maintaining high performance. With features like in-memory processing, indexing, and optimized query execution, it ensures fast data retrieval and efficient processing, even with massive datasets.

2. Security and Compliance

Data security is a critical concern, and SQL Server addresses this with advanced security features such as:

Transparent Data Encryption (TDE): Encrypts the database at rest.

Row-Level Security (RLS): Restricts access to specific rows within a table based on user permissions.

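Dynamic Data Masking (DDM): Protects sensitive data by masking it in query results returned to users who lack the UNMASK permission; the stored data itself is not changed.

Always Encrypted: Encrypts sensitive columns on the client side, so the data stays encrypted at rest, in transit, and while in use on the server; the encryption keys are held by the client rather than by the Database Engine.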
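To make the difference between masking and encryption concrete, the Python sketch below emulates the *output shape* of two documented DDM masking functions, `partial(prefix, padding, suffix)` and `email()`. It does not call SQL Server, and it is not how the Database Engine implements masking. The handling of values too short for the mask is an assumption of this sketch. The key point is that masking rewrites what a non-privileged user sees in results, while the underlying value stays readable to anyone with UNMASK.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Emulate DDM's partial(prefix, padding, suffix): keep `prefix` leading and
    `suffix` trailing characters and replace the middle with `padding`."""
    if prefix + suffix >= len(value):
        # Assumption for this sketch: mask fully rather than expose the whole value.
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]

def email_mask(value: str) -> str:
    """Emulate DDM's email() mask: first letter, then the constant 'XXX@XXXX.com'."""
    return value[:1] + "XXX@XXXX.com"

print(partial_mask("555-123-4567", 0, "XXX-XXX-", 4))  # XXX-XXX-4567
print(email_mask("alice@contoso.com"))                # aXXX@XXXX.com
```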

3. High Availability and Disaster Recovery

SQL Server ensures continuous availability through features such as:

Always On Availability Groups: Provides failover support and high availability for critical databases.

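Database Mirroring and Log Shipping: Maintain standby copies of a database. Mirroring can run synchronously but is deprecated in favor of Always On Availability Groups; log shipping copies and restores transaction log backups on a schedule, so the standby lags behind the primary rather than replicating in real time.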

Backup and Restore Capabilities: Ensures data recovery in case of system failures.

4. Business Intelligence and Data Analytics

SQL Server includes built-in tools for business intelligence (BI) and analytics, allowing organizations to gain insights from their data. Features include:

SQL Server Analysis Services (SSAS): Enables data mining and multidimensional analysis.

SQL Server Integration Services (SSIS): Facilitates data extraction, transformation, and loading (ETL).

SQL Server Reporting Services (SSRS): Allows for the creation of interactive reports and dashboards.

5. Integration with Cloud and AI

SQL Server seamlessly integrates with Microsoft Azure, enabling hybrid cloud solutions. Additionally, it supports artificial intelligence (AI) and machine learning (ML) capabilities, allowing users to perform predictive analytics and automate decision-making processes.

SQL Server Editions

Microsoft offers SQL Server in different editions to cater to various business needs:

Enterprise Edition: Designed for large-scale applications with high performance and security requirements.

Standard Edition: Suitable for mid-sized businesses with essential database functionalities.

Express Edition: A free version with limited features, ideal for small applications and learning purposes.

Developer Edition: Offers full Enterprise Edition capabilities but is licensed for development and testing only.

SQL Server vs. Other RDBMS

While SQL Server is a leading database management system, it competes with other RDBMS like MySQL, PostgreSQL, and Oracle Database. Here’s how it compares:

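Ease of Use: Tools such as SQL Server Management Studio (SSMS) give SQL Server a user-friendly graphical interface, particularly for Windows users.

Security Features: Includes built-in features such as TDE, Always Encrypted, and Dynamic Data Masking. MySQL and PostgreSQL offer comparable capabilities in some areas (PostgreSQL, for example, supports row-level security), but others depend on extensions or commercial editions.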

Integration with Microsoft Ecosystem: Works seamlessly with tools like Power BI, Azure, and Office 365.

Licensing Costs: SQL Server can be more expensive than open-source databases like MySQL and PostgreSQL.

Conclusion

SQL Server is a powerful and versatile database management system that supports businesses in managing their data efficiently. With features like scalability, security, high availability, and cloud integration, it remains a top choice for enterprises looking for a reliable RDBMS. Whether used for small applications or large-scale enterprise systems, SQL Server continues to evolve with new advancements, making it an essential tool for modern data management.


AWS NoSQL: A Comprehensive Guide to Scalable and Flexible Data Management

As big data and cloud computing continue to evolve, traditional relational databases often fall short in meeting the demands of modern applications. AWS NoSQL databases offer a scalable, high-performance solution for managing unstructured and semi-structured data efficiently. This blog provides an in-depth exploration of AWS NoSQL databases, highlighting their key benefits, use cases, and best practices for implementation.

An Overview of NoSQL on AWS

Unlike traditional SQL databases, NoSQL databases are designed with flexible schemas, horizontal scalability, and high availability in mind. AWS offers a range of managed NoSQL database services tailored to diverse business needs. These services empower organizations to develop applications capable of processing massive amounts of data while minimizing operational complexity.

Key AWS NoSQL Database Services

1. Amazon DynamoDB

Amazon DynamoDB is a fully managed key-value and document database engineered for consistent single-digit-millisecond latency and exceptional scalability. It offers automatic scaling, optional in-memory caching through DynamoDB Accelerator (DAX), and multi-Region replication through global tables, making it an excellent choice for high-traffic and mission-critical applications.

2. Amazon DocumentDB (with MongoDB Compatibility)

Amazon DocumentDB is a fully managed document database service that supports JSON-like document structures. It is particularly well-suited for applications requiring flexible and hierarchical data storage, such as content management systems and product catalogs.

3. Amazon ElastiCache

Amazon ElastiCache delivers in-memory data storage powered by Redis or Memcached. By reducing database query loads, it significantly enhances application performance and is widely used for caching frequently accessed data.
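A common way to use a cache like ElastiCache is the cache-aside (lazy loading) pattern: read from the cache first, fall back to the database on a miss, then store the result with a time-to-live. The sketch below is a minimal, assumption-laden illustration. A plain dict stands in for the Redis or Memcached client, and `load_from_db` is a hypothetical callback standing in for your real database query.

```python
import time
from typing import Callable, Dict, Tuple

class CacheAside:
    """Cache-aside: check the cache, load from the database on a miss, then
    populate the cache with a TTL. A dict stands in for an ElastiCache client."""

    def __init__(self, load_from_db: Callable[[str], str], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)
        self.db_reads = 0                               # counts cache misses

    def get(self, key: str) -> str:
        entry = self._store.get(key)
        if entry is not None and entry[1] > self._clock():
            return entry[0]                  # cache hit: no database round trip
        self.db_reads += 1                   # miss or expired entry
        value = self._load(key)
        self._store[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key: str) -> None:
        """Call after writing to the database so readers don't get stale data."""
        self._store.pop(key, None)

fake_db = {"product:42": "Blue kettle"}     # hypothetical data
cache = CacheAside(fake_db.__getitem__, ttl_seconds=30)
cache.get("product:42")
cache.get("product:42")
print(cache.db_reads)  # 1
```

The TTL bounds how stale a cached value can get; explicit invalidation on writes narrows that window further.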

4. Amazon Neptune

Amazon Neptune is a fully managed graph database service optimized for applications that rely on relationship-based data modeling. It is ideal for use cases such as social networking, fraud detection, and recommendation engines.

5. Amazon Timestream

Amazon Timestream is a purpose-built time-series database designed for IoT applications, DevOps monitoring, and real-time analytics. It efficiently processes massive volumes of time-stamped data with integrated analytics capabilities.

Benefits of AWS NoSQL Databases

Scalability – AWS NoSQL databases are designed for horizontal scaling, ensuring high performance and availability as data volumes increase.

Flexibility – Schema-less architecture allows for dynamic and evolving data structures, making NoSQL databases ideal for agile development environments.

Performance – Optimized for high-throughput, low-latency read and write operations, ensuring rapid data access.

Managed Services – AWS handles database maintenance, backups, security, and scaling, reducing the operational workload for teams.

High Availability – Features such as multi-region replication and automatic failover ensure data availability and business continuity.

Use Cases of AWS NoSQL Databases

E-commerce – Flexible and scalable storage for product catalogs, user profiles, and shopping cart sessions.

Gaming – Real-time leaderboards, session storage, and in-game transactions requiring ultra-fast, low-latency access.

IoT & Analytics – Efficient solutions for large-scale data ingestion and time-series analytics.

Social Media & Networking – Powerful graph databases like Amazon Neptune for relationship-based queries and real-time interactions.

Best Practices for Implementing AWS NoSQL Solutions

Select the Appropriate Database – Choose an AWS NoSQL service that aligns with your data model requirements and workload characteristics.

Design for Efficient Data Partitioning – Create well-optimized partition keys in DynamoDB to ensure balanced data distribution and performance.

Leverage Caching Solutions – Utilize Amazon ElastiCache to minimize database load and enhance response times for your applications.

Implement Robust Security Measures – Apply AWS Identity and Access Management (IAM), encryption protocols, and VPC isolation to safeguard your data.

Monitor and Scale Effectively – Use Amazon CloudWatch for performance monitoring and take advantage of auto-scaling capabilities to manage workload fluctuations efficiently.
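DynamoDB spreads items across partitions by partition key value, so one very hot key value (for example, today's date) can concentrate traffic on a single partition. The DynamoDB documentation describes "write sharding", which appends a random or calculated suffix to the key. The sketch below shows the calculated-suffix variant without any AWS SDK calls. The `date#n` key format and the shard count of 10 are assumptions chosen for illustration.

```python
import hashlib

SHARD_COUNT = 10  # assumption: size this to the write rate of the hot key

def sharded_partition_key(base_key: str, item_id: str, shards: int = SHARD_COUNT) -> str:
    """Derive a stable suffix from the item ID so writes for one logical key
    spread over several partition key values, and a known item can still be
    located directly without scanning every shard."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    suffix = int.from_bytes(digest[:4], "big") % shards
    return f"{base_key}#{suffix}"

def all_shard_keys(base_key: str, shards: int = SHARD_COUNT) -> list[str]:
    """Reading everything for the logical key means querying each shard and merging."""
    return [f"{base_key}#{n}" for n in range(shards)]

keys = {sharded_partition_key("2024-06-01", f"order-{i}") for i in range(1000)}
print(len(keys))  # distinct partition key values in use, at most SHARD_COUNT
```

The trade-off is on the read side: a query for the whole logical key now fans out to `SHARD_COUNT` queries.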

Conclusion

AWS NoSQL databases are a robust solution for modern, data-intensive applications. Whether your use case involves real-time analytics, large-scale storage, or high-speed data access, AWS NoSQL services offer the scalability, flexibility, and reliability required for success. By selecting the right database and adhering to best practices, organizations can build resilient, high-performing cloud-based applications with confidence.


Building Scalable Web Applications: Best Practices for Full Stack Developers

Scalability is one of the most crucial factors in web application development. In today’s dynamic digital landscape, applications need to be prepared to handle increased user demand, data growth, and evolving business requirements without compromising performance. For full stack developers, mastering scalability is not just an option—it’s a necessity. This guide explores the best practices for building scalable web applications, equipping developers with the tools and strategies needed to ensure their projects can grow seamlessly.

What Is Scalability in Web Development?

Scalability refers to a system’s ability to handle increased loads by adding resources, optimizing processes, or both. A scalable web application can:

Accommodate growing numbers of users and requests.

Handle larger datasets efficiently.

Adapt to changes without requiring complete redesigns.

There are two primary types of scalability:

Vertical Scaling: Adding more power (CPU, RAM, storage) to a single server.

Horizontal Scaling: Adding more servers to distribute the load.

Each type has its use cases, and a well-designed application often employs a mix of both.

Best Practices for Building Scalable Web Applications

1. Adopt a Microservices Architecture

What It Is: Break your application into smaller, independent services that can be developed, deployed, and scaled independently.

Why It Matters: Microservices limit the impact of a failure to the affected service and allow different parts of the application to scale based on their unique needs.

Tools to Use: Kubernetes, Docker, AWS Lambda.

2. Optimize Database Performance

Use Indexing: Ensure your database queries are optimized with proper indexing.

Database Partitioning: Divide large databases into smaller, more manageable pieces using horizontal or vertical partitioning.

Choose the Right Database Type:

Use SQL databases like PostgreSQL for structured data.

Use NoSQL databases like MongoDB for unstructured or semi-structured data.

Implement Caching: Use caching mechanisms like Redis or Memcached to store frequently accessed data and reduce database load.
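The effect of indexing can be checked directly by asking the database for its query plan. The sketch below uses SQLite only because it ships with Python. PostgreSQL's `EXPLAIN` serves the same purpose, though its output looks different. The `orders` table and `idx_orders_customer` index are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ?"

def plan(sql: str) -> str:
    """Return SQLite's description of how it would execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 is the readable detail

before = plan(QUERY)  # full table scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # index lookup, e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
print(before)
print(after)
```

The exact wording of the plan varies between SQLite versions, but the change from a scan to an index search is what matters.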

3. Leverage Content Delivery Networks (CDNs)

CDNs distribute static assets (images, videos, scripts) across multiple servers worldwide, reducing latency and improving load times for users globally.

Popular CDN Providers: Cloudflare, Akamai, Amazon CloudFront.

Benefits:

Faster content delivery.

Reduced server load.

Improved user experience.

4. Implement Load Balancing

Load balancers distribute incoming requests across multiple servers, ensuring no single server becomes overwhelmed.

Types of Load Balancing:

Hardware Load Balancers: Physical devices.

Software Load Balancers: Nginx, HAProxy.

Cloud Load Balancers: AWS Elastic Load Balancing, Google Cloud Load Balancing.

Best Practices:

Use sticky sessions if needed to maintain session consistency.

Monitor server health regularly.
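The two ideas above, distributing requests and skipping unhealthy servers, can be sketched as a round-robin balancer that consults a health check before choosing a backend. This is a simplified, in-process illustration under stated assumptions. The `is_healthy` callback stands in for the periodic probes that Nginx, HAProxy, or a cloud load balancer would run. The backend addresses are hypothetical, and sticky sessions are not modeled.

```python
import itertools
from typing import Callable, Iterable

class RoundRobinBalancer:
    """Cycle through backends in order, skipping any that fail a health check."""

    def __init__(self, backends: Iterable[str], is_healthy: Callable[[str], bool]):
        self._backends = list(backends)
        self._is_healthy = is_healthy
        self._cycle = itertools.cycle(self._backends)

    def next_backend(self) -> str:
        # Try each backend at most once per call, so a fully failed pool
        # raises an error instead of looping forever.
        for _ in range(len(self._backends)):
            candidate = next(self._cycle)
            if self._is_healthy(candidate):
                return candidate
        raise RuntimeError("no healthy backends available")

down = {"10.0.0.2"}  # pretend this server failed its health check
lb = RoundRobinBalancer(["10.0.0.1", "10.0.0.2", "10.0.0.3"], lambda b: b not in down)
picks = [lb.next_backend() for _ in range(4)]
print(picks)  # ['10.0.0.1', '10.0.0.3', '10.0.0.1', '10.0.0.3']
```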

5. Use Asynchronous Processing

Why It’s Important: Synchronous operations can cause bottlenecks in high-traffic scenarios.

How to Implement:

Use message queues like RabbitMQ, Apache Kafka, or AWS SQS to handle background tasks.

Implement asynchronous APIs with runtimes and frameworks such as Node.js or Django Channels.
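The producer/consumer shape behind message queues can be sketched with Python's `asyncio.Queue`. In this sketch, an in-process queue stands in for a broker such as RabbitMQ, Kafka, or SQS. With a real broker, producers and consumers run in separate processes and messages survive restarts, which this sketch does not attempt. The `order_ids` workload and the 10 ms sleep are placeholders for real background work.

```python
import asyncio

async def worker(name: str, queue: asyncio.Queue, results: list) -> None:
    """Consume tasks until cancelled; stands in for a queue consumer process."""
    while True:
        order_id = await queue.get()
        try:
            await asyncio.sleep(0.01)  # placeholder for slow work, e.g. sending an email
            results.append((name, order_id))
        finally:
            queue.task_done()

async def main(order_ids: list[int], worker_count: int = 3) -> list:
    queue: asyncio.Queue = asyncio.Queue()
    results: list = []
    workers = [asyncio.create_task(worker(f"w{n}", queue, results))
               for n in range(worker_count)]
    for order_id in order_ids:
        queue.put_nowait(order_id)  # a request handler would return right after this
    await queue.join()              # wait until every queued task is processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results

processed = asyncio.run(main(list(range(10))))
print(len(processed))  # 10
```

The request path only pays for the enqueue; the slow work is absorbed by however many workers you run.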

6. Embrace Cloud-Native Development

Cloud platforms provide scalable infrastructure that can adapt to your application’s needs.

Key Features to Leverage:

Autoscaling for servers.

Managed database services.

Serverless computing.

Popular Cloud Providers: AWS, Google Cloud, Microsoft Azure.
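Autoscalers differ between providers, but many follow a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * currentMetric / targetMetric)`, with a tolerance band to avoid flapping. The metric (for example, average CPU percent) and the min/max bounds are assumptions. AWS, Google Cloud, and Azure autoscaling policies may calculate capacity differently.

```python
import math

def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """HPA-style sizing: scale in proportion to how far the metric is from its
    target, skip changes within `tolerance` of 1.0, then clamp to the bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current               # close enough to target: no change
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```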

7. Design for High Availability (HA)

Ensure that your application remains operational even in the event of hardware failures, network issues, or unexpected traffic spikes.

Strategies for High Availability:

Redundant servers.

Failover mechanisms.

Regular backups and disaster recovery plans.
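A quick back-of-the-envelope calculation shows why redundant servers matter. If a service stays up while at least one of `n` copies is up, its availability is `1 - (1 - a)^n`. This holds only under the optimistic assumption that failures are independent. Shared power, networks, or bad deployments break that assumption. The 99% single-server figure below is hypothetical.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability when the service survives as long as one replica is up,
    assuming replica failures are independent (an optimistic simplification)."""
    return 1.0 - (1.0 - single) ** replicas

def downtime_minutes_per_year(availability: float) -> float:
    return (1.0 - availability) * 365 * 24 * 60

for n in (1, 2, 3):
    a = combined_availability(0.99, n)
    print(f"{n} replica(s): {a:.6f} availability, "
          f"{downtime_minutes_per_year(a):.1f} min/year downtime")
```

For reference, 99.99% availability corresponds to roughly 52.6 minutes of downtime per year.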

8. Optimize Front-End Performance

Scalability is not just about the back end; the front end plays a significant role in delivering a seamless experience.

Best Practices:

Minify and compress CSS, JavaScript, and HTML files.

Use lazy loading for images and videos.

Implement browser caching.

Use tools like Lighthouse to identify performance bottlenecks.

9. Monitor and Analyze Performance

Continuous monitoring helps identify and address bottlenecks before they become critical issues.

Tools to Use:

Application Performance Monitoring (APM): New Relic, Datadog.

Logging and Error Tracking: ELK Stack, Sentry.

Server Monitoring: Nagios, Prometheus.

Key Metrics to Monitor:

Response times.

Server CPU and memory usage.

Database query performance.

Network latency.
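When monitoring response times, percentiles usually tell you more than averages, because a few slow requests can hide behind a healthy-looking mean or distort it. The sketch below computes nearest-rank percentiles over hypothetical latency samples. APM tools such as New Relic or Datadog may use interpolated or approximate percentile methods instead.

```python
import math

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least `pct`%
    of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]

latencies_ms = [12, 15, 11, 14, 250, 13, 16, 12, 18, 900]  # hypothetical samples
print(sum(latencies_ms) / len(latencies_ms))  # mean, pulled up by two slow requests
print(percentile(latencies_ms, 50), percentile(latencies_ms, 95))  # 14 900
```

Here the median (14 ms) shows the typical request is fast, while p95 (900 ms) exposes the tail that some users actually experience.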

10. Test for Scalability

Regular testing ensures your application can handle increasing loads.

Types of Tests:

Load Testing: Simulate normal usage levels.

Stress Testing: Push the application beyond its limits to identify breaking points.

Capacity Testing: Determine how many users the application can handle effectively.

Tools for Testing: Apache JMeter, Gatling, Locust.
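Tools like JMeter, Gatling, and Locust run this kind of test at scale, often from distributed load generators. The core loop is simple enough to sketch in Python: fire a fixed number of requests with a given concurrency, then record errors, throughput, and latency. `handle_request` is a hypothetical stand-in for the system under test, with simulated failures. In a real test it would be an HTTP call.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(i: int) -> str:
    """Hypothetical system under test; replace with a real HTTP request."""
    time.sleep(0.005)
    if i % 50 == 49:
        raise RuntimeError("simulated failure")
    return "ok"

def timed_call(i: int) -> tuple[bool, float]:
    start = time.perf_counter()
    try:
        handle_request(i)
        ok = True
    except RuntimeError:
        ok = False
    return ok, time.perf_counter() - start

def run_load(total_requests: int, concurrency: int) -> dict:
    """Send `total_requests` calls using `concurrency` workers and summarize."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outcomes = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started
    return {
        "requests": total_requests,
        "errors": sum(1 for ok, _ in outcomes if not ok),
        "throughput_rps": total_requests / elapsed,
        "max_latency_s": max(latency for _, latency in outcomes),
    }

# Raising concurrency step by step approximates capacity testing; the level at
# which errors or latency climb sharply marks the practical limit.
for users in (5, 20):
    print(users, run_load(200, users))
```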

Case Study: Scaling a Real-World Application

Scenario: A growing e-commerce platform faced frequent slowdowns during flash sales.

Solutions Implemented:

Adopted a microservices architecture to separate order processing, user management, and inventory systems.

Integrated Redis for caching frequently accessed product data.

Leveraged AWS Elastic Load Balancer to manage traffic spikes.

Optimized SQL queries and implemented database sharding for better performance.

Results:

Improved application response times by 40%.

Seamlessly handled a 300% increase in traffic during peak events.

Achieved 99.99% uptime.

Conclusion

Building scalable web applications is essential for long-term success in an increasingly digital world. By implementing best practices such as adopting microservices, optimizing databases, leveraging CDNs, and embracing cloud-native development, full stack developers can ensure their applications are prepared to handle growth without compromising performance.

Scalability isn’t just about handling more users; it’s about delivering a consistent, reliable experience as your application evolves. Start incorporating these practices today to future-proof your web applications and meet the demands of tomorrow’s users.


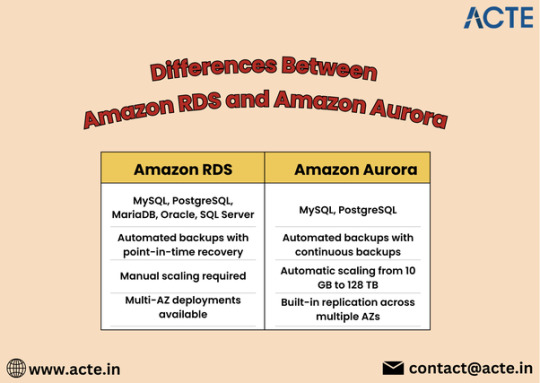

Comparing Amazon RDS and Aurora: Key Differences Explained

When it comes to choosing a database solution in the cloud, Amazon Web Services (AWS) offers a range of powerful options, with Amazon Relational Database Service (RDS) and Amazon Aurora being two of the most popular. Both services are designed to simplify database management, but they cater to different needs and use cases. In this blog, we’ll delve into the key differences between Amazon RDS and Aurora to help you make an informed decision for your applications.

If you want to advance your career with an AWS course in Pune, take a systematic approach and enroll in a course that matches your interests and broadens your learning path.

What is Amazon RDS?

Amazon RDS is a fully managed relational database service that supports multiple database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. It automates routine database tasks such as backups, patching, and scaling, allowing developers to focus more on application development rather than database administration.

Key Features of RDS

Multi-Engine Support: Choose from various database engines to suit your specific application needs.

Automated Backups: RDS automatically backs up your data and provides point-in-time recovery.

Read Replicas: Scale read operations by creating read replicas to offload traffic from the primary instance.

Security: RDS offers encryption at rest and in transit, along with integration with AWS Identity and Access Management (IAM).
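Read replicas only help if the application sends reads to them. A common approach is to route writes to the primary endpoint and spread reads across replica endpoints. The sketch below illustrates this under stated assumptions. The endpoint names are hypothetical, and the "starts with SELECT" check is deliberately naive: statements such as `SELECT ... FOR UPDATE` or queries beginning with `WITH` would need more careful handling. RDS read replicas replicate asynchronously, so reads that must see a just-committed write should go to the primary.

```python
import itertools

class ReadWriteRouter:
    """Send writes to the primary and spread reads across read replicas.
    Replicas can lag the primary, so callers needing fresh data can opt out."""

    def __init__(self, primary: str, replicas: list[str]):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def endpoint_for(self, sql: str, require_fresh: bool = False) -> str:
        # Naive classification; see the caveats in the lead-in above.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and not require_fresh and self._replicas is not None:
            return next(self._replicas)
        return self.primary

router = ReadWriteRouter("primary.example.internal",
                         ["replica-1.example.internal", "replica-2.example.internal"])
print(router.endpoint_for("SELECT * FROM products"))     # replica-1.example.internal
print(router.endpoint_for("UPDATE products SET stock = stock - 1 WHERE id = 7"))  # primary.example.internal
```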

What is Amazon Aurora?

Amazon Aurora is a cloud-native relational database designed for high performance and availability. It is compatible with MySQL and PostgreSQL, offering enhanced features that improve speed and reliability. Aurora is built to handle demanding workloads, making it an excellent choice for large-scale applications.

Key Features of Aurora

High Performance: AWS states that Aurora can deliver up to five times the throughput of standard MySQL and up to three times that of standard PostgreSQL, thanks to its purpose-built distributed storage architecture.

Auto-Scaling Storage: Automatically scales storage from 10 GB to 128 TB without any downtime, adapting to your needs seamlessly.

High Availability: Aurora automatically stores six copies of your data across three Availability Zones, providing robust fault tolerance and uptime.

Serverless Option: Aurora Serverless automatically adjusts capacity based on application demand, ideal for unpredictable workloads.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the AWS Online Training.

Key Differences Between Amazon RDS and Aurora

1. Performance and Scalability

One of the most significant differences lies in performance. Aurora is engineered for high throughput and low latency, making it a superior choice for applications that require fast data access. While RDS provides good performance, it may not match the efficiency of Aurora under heavy loads.

2. Cost Structure

The two services have different pricing models. RDS typically has a more straightforward pricing structure based on instance type and provisioned storage. Aurora charges for instances, the storage actually consumed, and, under the Aurora Standard configuration, I/O requests; the Aurora I/O-Optimized configuration has no per-request I/O charges. While Aurora may seem more expensive initially, its performance gains can result in cost savings for high-traffic applications.

3. High Availability and Fault Tolerance

Aurora inherently offers better high availability due to its design, which replicates data across multiple Availability Zones. While RDS does offer Multi-AZ deployments for high availability, Aurora’s replication and failover mechanisms provide additional resilience.

4. Feature Set

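Aurora includes advanced features such as Aurora Global Database, which replicates a cluster to secondary AWS Regions with typically sub-second lag. Standard RDS supports cross-Region read replicas for most engines but has no equivalent of Global Database. These capabilities make Aurora an excellent option for global applications that require low-latency access across regions.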

5. Management and Maintenance

Both services are managed by AWS, but Aurora requires less manual intervention for scaling and maintenance due to its automated features. This can lead to reduced operational overhead for businesses relying on Aurora.

When to Choose RDS or Aurora

Choose Amazon RDS if you need a straightforward, managed relational database solution with support for multiple engines and moderate performance needs.

Opt for Amazon Aurora if your application demands high performance, scalability, and advanced features, particularly for large-scale or global applications.

Conclusion

Amazon RDS and Amazon Aurora both offer robust solutions for managing relational databases in the cloud, but they serve different purposes. Understanding the key differences can help you select the right service based on your specific requirements. Whether you go with the simplicity of RDS or the advanced capabilities of Aurora, AWS provides the tools necessary to support your database needs effectively.
