#stephen wolfram

Text

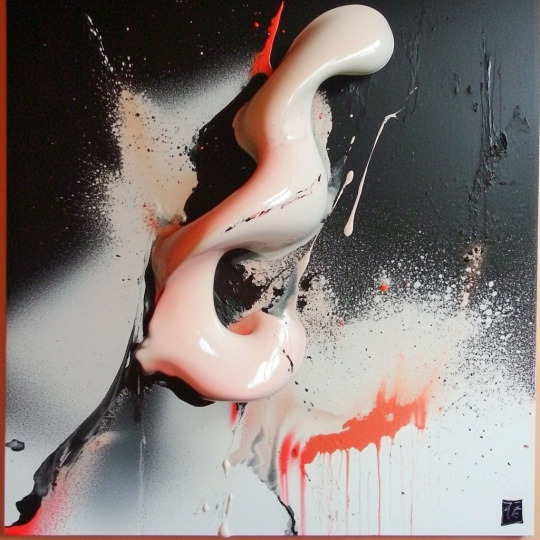

The Strange Attractor

“Strange Attractor” ©Philippe Quéau (Art Κέω) 2025 For Pythagoras, number is the fabric of the world, but it is also its model. Number is at once the form and the matter of things. Here, form is its own matter and matter its own form, since both merge with the essence of number. If number is its own model, it also embodies its…

0 notes

Text

To be honest this is a reasonable take on the universe. Unfortunately, Stephen Wolfram holds the same take, and I do not want to have the same take as Wolfram on any subject.

bro math is crazy because it. it’s just a concept people made up but it’s fundamental knowledge for understanding the universe😮😮🔥🔥🔥

299 notes

Text

ilysm freeware

2 notes

Text

I want to know the truth, however perverted that may sthound!

Daffy Duck to Tina Russo

0 notes

Text

special functions and integrals are a topic likely to make you feel like a sorceress of some kind. most of the deep knowledge is tabulated in the arcane tomes of the dead masters of 70 years ago or crusty pdf scans, and although some results are indexed in the DLMF, any more serious questions are answered by checking all of the tables yourself. you should have a bare minimum of 6000 pages. if you spend more than a week in the service of the art, it feels like these volumes are trying to devour you back, or else lodge the totality of themselves in the space behind your eyes.

stephen wolfram is like that because he spent too long trying to bind these functions in the language he named after himself

39 notes

Text

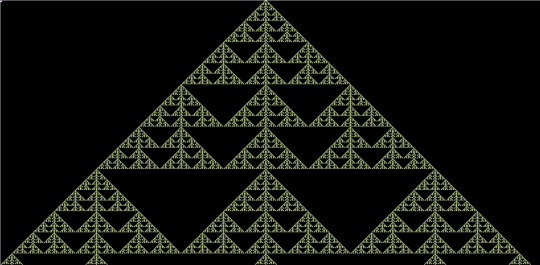

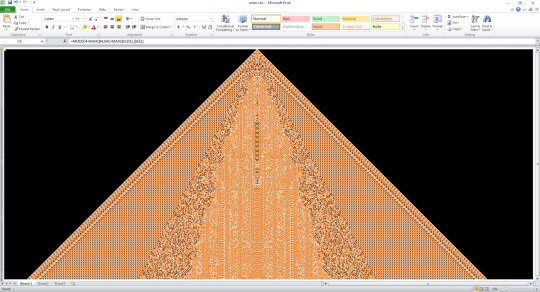

My art in Excel is based on Stephen Wolfram’s work using 1d cellular automata to print 2d fractals. The cells live in a single row and each cell decides based on its own value and its two neighbors’ whether it will be alive or dead in the next iteration. Arrange the iterations vertically and you can generate an image. For example, here’s my reproduction of Wolfram’s rule 150 fractal, where a cell is alive if exactly 1 or 3 of its parents are.

Each row is a successive iteration of the automaton. I generated this image with one formula: B3=MOD(A2+B2+C2,2). Since it’s a cellular automaton each cell uses the same formula relative to its position: I type the formula once and then fill down and right. A 1 represents a living cell and 0 is dead so addition modulo 2 tells us if exactly 1 or 3 parents are alive.
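For anyone who would rather check the formula outside a spreadsheet, here is a minimal Python sketch of the same update. The function names (`step`, `run`), the grid size, and the choice to treat cells beyond the left and right edges as dead are assumptions made for illustration; they are not taken from the original spreadsheet.

```python
def step(row, modulus=2):
    """Next row: each cell is the sum of its three parents, reduced mod `modulus`."""
    padded = [0] + row + [0]  # assumption: cells beyond the edges count as dead
    return [(padded[i] + padded[i + 1] + padded[i + 2]) % modulus
            for i in range(len(row))]


def run(width=31, rows=16, modulus=2):
    """Start from one living cell in the middle and stack successive rows."""
    row = [0] * width
    row[width // 2] = 1
    grid = [row]
    for _ in range(rows - 1):
        row = step(row, modulus)
        grid.append(row)
    return grid


if __name__ == "__main__":
    # Print living cells as '#' and dead cells as '.'
    for r in run():
        print("".join("#" if cell else "." for cell in r))
```

Passing a different `modulus` to `run` gives the "paint by numbers" variants, with cell values between 0 and `modulus - 1` instead of just 0 and 1.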

But why stick to 2 values? Why not paint by numbers?

Here’s B3=MOD(A2+B2+C2,3). Cells with value 0 are black, nonzero values are shades of green.

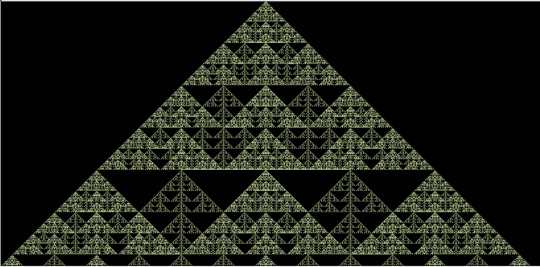

B3=MOD(A2+B2+C2,5). All the higher prime number moduli look like this. This one is self-similar at 5x zoom.

B3=MOD(A2+B2+C2,4). The original image is visible here but there’s some interference from Z4’s subgroup of order 2. We didn’t see this before because Z3 and Z5 are simple groups.

B3=MOD(A2+B2+C2,9). It looks like a fancier B3=MOD(A2+B2+C2,3) because Z9 has a subgroup isomorphic to Z3.

B3=MOD(A2+B2+C2,6). Since Z6 has subgroups of order 2 and 3, this image is the most complicated yet. There are copies of the original rule 150 fractal peeking through the holes - inside those copies every cell has value 0 or 3.
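The subgroup remark can be checked numerically. A rough Python sketch, with hypothetical helper names (`step`, `evolve`) and an assumed grid size and dead-edge rule: because the update is plain addition, seeding the mod-6 automaton with a single 3 should give exactly 3 times the mod-2 (rule 150) picture, so every cell stays inside {0, 3}. This does not reproduce the image above, which presumably starts from a 1; it only isolates the order-2 subgroup.

```python
def step(row, modulus):
    """Sum each cell's three parents and reduce mod `modulus`."""
    padded = [0] + row + [0]  # assumption: cells beyond the edges are 0
    return [sum(padded[i:i + 3]) % modulus for i in range(len(row))]


def evolve(seed_value, modulus, width=41, rows=20):
    """Run the automaton from a single nonzero cell in the middle."""
    row = [0] * width
    row[width // 2] = seed_value
    grid = [row]
    for _ in range(rows - 1):
        row = step(row, modulus)
        grid.append(row)
    return grid


rule150 = evolve(1, 2)       # the original two-valued fractal
mod6_from_3 = evolve(3, 6)   # mod 6, seeded inside the subgroup {0, 3}

# Compare the two grids cell by cell: is the mod-6 grid 3 times rule 150?
same = all(b == 3 * a
           for row_a, row_b in zip(rule150, mod6_from_3)
           for a, b in zip(row_a, row_b))
print(same)
```

The same comparison with a seed of 2 and rule 150 replaced by the mod-3 automaton would test the order-3 subgroup {0, 2, 4}.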

Original date: May 6th, 2023 7:43pm

26 notes

Text

I do appreciate the computational view of time presented here, but then I'd appreciate a computational view of everything tbh

26 notes

Text

so yknow how connor was this miracle child coveted by a bunch of evil cults (for the first few days of his life before that plot got dropped anyway) and how he's like a purified vampire with all the strength and none of the weaknesses while also having a living body and soul. anyway what if mind-wiped connor visited wolfram and hart earlier in s5 and ended up on knox's radar

something about how you can take the boy out of hell AND the hell out of the boy but someone can just put new hell in him. something about how the original connor would have gone nowhere near the sarcophagus but the new one angel made him into would think it was cool. something about the soul angel earned in darla's trial and that she died to protect being burned to nothing. something about angel grieving for connor's lost youth only for connor's body to become frozen young just like angel's. something about how holtz tried to put the righteous fear of god in connor and then angel took it out and then a god devours him. something about angel making a deal with the devil for connor but the devil not playing fair. something about illyria sorting through a lifetime of fake memories on top of the real ones and trying to come to a conclusion on who this shell was, connor or stephen or the destroyer or the father of our saviour or the kid that ended world peace or the sweet reilly boy, or angel's son, or not. something about how this is the only version of his son that angel gets to keep

48 notes

Text

a visit to the house of the robot priests

there are a lot of things written about LLMs, many of them dubious. some are interesting tho. since my brain has apparently decided that it wants to know what the deal is, here's some stuff i've been reading.

most of these are pretty old (in present-day AI research time) because I didn't really want to touch this tech for the last couple of years. other people weren't so reticent and drew their own conclusions.

wolfram on transformers (2023)

stephen wolfram's explanation of transformer architecture from 2023 is very good, and he manages to keep the usual self-promotional "i am stephen wolfram, the cleverest boy" stuff to a manageable level. (tho to be fair on the guy, i think his research into cellular automata as models for physics is genuinely very interesting, and probably worth digging into further at some point, even if just to give some interesting analogies between things.) along with 3blue1brown, I feel like this is one of the best places to get an accessible overview of how these machines work and what the jargon means.

the next couple of articles were kindly sent to me by @voyantvoid as a result of my toying around with LLMs recently. they're taking me back to LessWrong. here we go again...

simulators (2022)

this long article 'simulators' for the 'alignment forum' (a lesswrong offshoot) from 2022 by someone called janus - a kind of passionate AI mystic who runs the website generative.ink - suffers a fair bit from having big yud as one of its main interlocutors, but in the process of pushing back on rat received wisdom it does say some interesting things about how these machines work (conceiving of the language model as something like the 'laws of motion' in which various character-states might evolve). notably it has a pretty systematic overview of previous narratives about the roles AI might play, and the way the current generation of language models is distinct from them.

just, you know, it's lesswrong, I feel like a demon linking it here. don't get lost in the sauce.

the author, janus, evidently has some real experience fiddling with these systems and exploring the space of behaviour, and is in dialogue with other people who are equally engaged. indeed, janus and friends seem to have developed a game of creating gardens of language models interacting with each other, largely for poetic/play purposes. when you get used to the banal chatgpt-voice, it's cool to see that the models have a territory that gets kinda freaky with it.

the general vibe is a bit like 'empty spaces', but rather than being a sort of community writing prompt, they're probing the AIs and setting them off against each other to elicit reactions that fit a particular vibe.

the generally aesthetically-oriented aspect of this micro-subculture seems to be a bit of a point of contention from the broader lesswrong milieu; if I may paraphrase, here janus responds to a challenge by arguing that they are developing essentially an intuitive sense for these systems' behaviour through playing with them a lot, and thereby essentially developing a personal idiolect of jargon and metaphors to describe these experiences. I honestly respect this - it brings to mind the stuff I've been on lately about play and ritual in relation to TTRPGs, and the experience of graphics programming as shaping my relationship to the real world and what I appreciate in it. as I said there, computers are for playing with. I am increasingly fixating on 'play' as a kind of central concept of what's important to me. I really should hurry up and read wittgenstein.

thinking on this, I feel like perceiving LLMs, emotionally speaking, as eager roleplayers made them feel a lot more palatable to me and led to this investigation. this relates to the analogy between 'scratchpad' reasoning about how to interact socially generated by recent LLMs like DeepSeek R1, and an autistic way of interacting with people. I think it's very easy to go way too far with this anthropomorphism, so I'm wary of it - especially since I know these systems are designed (rather: finetuned) to have an affect that is charming, friendly and human-like in order to be appealing products. even so, the fact that they exhibit this behaviour is notable.

three layer model

a later evolution of this attempt to philosophically break down LLMs comes from Jan Kulveit's three-layer model of types of responses an LLM can give (its rote trained responses, its more subtle and flexible character-roleplay, and the underlying statistics model). Kulveit raises the kind of map-territory issues this induces, just as human conceptions of our own thinking tend to shape the way we act in the future.

I think this is probably more of just a useful sorta phenomenological narrative tool for humans than a 'real' representation of the underlying dynamics - similar to the Freudian superego/ego/id, the common 'lizard brain' metaphor and other such onion-like ideas of the brain. it seems more apt to see these as rough categories of behaviour that the system can express in different circumstances. Kulveit is concerned with layers of the system developing self-conception, so we get lines like:

On the other hand - and this is a bit of my pet idea - I believe the Ground Layer itself can become more situationally aware and reflective, through noticing its presence in its sensory inputs. The resulting awareness and implicit drive to change the world would be significantly less understandable than the Character level. If you want to get a more visceral feel of the otherness, the Ocean from Lem's Solaris comes to mind.

it's a fun science fiction concept, but I am kinda skeptical here about the distinction between 'Ground Layer' and 'Character Layer' being more than an approximate description of the different aspects of the model's behaviour.

at the same time, as with all attempts to explore a complicated problem and find the right metaphors, it's absolutely useful to make an attempt and then interrogate how well it works. so I respect the attempt. since I was recently reading about early thermodynamics research, it reminds me of the period in the late 18th and early 19th century where we were putting together a lot of partial glimpses of the idea of energy, the behaviour of gases, etc., but had yet to fully put it together into the elegant formalisms we take for granted now.

of course, psychology has been trying this sort of narrative-based approach to understanding humans for a lot longer, producing a bewildering array of models and categorisation schemes for the way humans think. it remains to be seen if the much greater manipulability of LLMs - cf. interpretability research - lets us get further.

oh hey it's that guy

tumblr's own rob nostalgebraist, known for running a very popular personalised GPT-2-based bot account on here, speculated on LW on the limits of LLMs and the ways they fail back in 2021. although he seems unsatisfied with the post, there's a lot in here that's very interesting. I haven't fully digested it all, and tbh it's probably one to come back to later.

the Nature paper

while I was writing this post, @cherrvak dropped by my inbox with some interesting discussion, and drew my attention to a paper in Nature on the subject of LLMs and the roleplaying metaphor. as you'd expect from Nature, it's written with a lot of clarity; apparently there is some controversy over whether it built on the ideas of the Cyborgism group (Janus and co.) without attribution, since it follows a very similar account of a 'multiverse' of superposed possible characters and the AI as a 'simulator' (though it does in fact cite Janus's Simulators post... is this the first time LessWrong gets cited in Nature? what a world we've ended up in).

still, it's honestly a pretty good summary of this group's ideas. the paper's thought experiment of an LLM playing "20 questions" and determining what answer to give at the end, based on the path taken, is particularly succinct and insightful for explaining this 'superposition' concept.

accordingly, they cover, in clear language, a lot of the ideas we've discussed above - the 'simulator' of the underlying probabilistic model set up to produce a chain token by token, the 'simulacrum' models it acts out, etc. etc.

one interesting passage concerns the use of first-person pronouns by the model, emphasising that even if it expresses a desire for self-preservation in the voice of a character it is roleplaying, this is essentially hollow; the system as a whole is not wrong when it says that it does not actually have any desires. i think this is something of the crux of why LLMs fuck with our intuitions so much. you can't accurately say that an LLM is 'just telling you what (it thinks) you want to hear', because it has no internal model of you and your wants in the way that we're familiar with. however, it will extrapolate a narrative given to it, and potentially converge into roleplaying a character who's trying to flatter you in this way.

how far does an LLM take into account your likely reaction?

in theory, an LLM could easily continue both sides of the conversation, instead of having a special token that signals to the controlling software to hand input back to the user.
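to make the hand-back mechanism concrete, here's a toy sketch in Python. everything in it is made up for illustration: the `<end_of_turn>` marker is a hypothetical stand-in (real models each have their own special tokens), and `scripted_model` just replays a fixed list of tokens instead of sampling from an actual network.

```python
END_OF_TURN = "<end_of_turn>"  # hypothetical marker, not any real model's token


def scripted_model(script):
    """Stand-in for a language model: replays a fixed token script.

    A real model would instead sample the next token from a probability
    distribution conditioned on everything generated so far.
    """
    tokens = iter(script)
    return lambda context: next(tokens, END_OF_TURN)


def generate_turn(next_token, context, max_tokens=50):
    """Keep appending tokens until the model emits the end-of-turn marker."""
    reply = []
    for _ in range(max_tokens):
        token = next_token(context + reply)
        if token == END_OF_TURN:
            break  # the controlling software hands input back to the user here
        reply.append(token)
    return reply


# The "model" has both sides of the conversation queued up.
script = ["Assistant:", "hello!", END_OF_TURN, "User:", "thanks", END_OF_TURN]
model = scripted_model(script)

print(generate_turn(model, ["User:", "hi"]))  # stops at the first marker
print(generate_turn(model, []))               # asked again, it writes the user's side
```

the point of the second call is that nothing in the generation loop itself distinguishes 'my turn' from 'your turn'; only the marker and the surrounding software do.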

it's unclear whether it performs prediction of your likely responses and then reasons off that. the 'scratchpad' reasoning generated by deepseek-r1 (a sort of hacky way to get a feedback loop on its own output, that lets the LLM do more complex computation) involves fairly explicit discussion of the likely effects of certain language choices. for example, when I asked why it always seems to begin its chain of thought with 'alright', the resulting chain of thought included:

Next, explaining that "Alright" is part of my training helps them understand that it's a deliberate choice in my design. It's meant to make interactions feel more natural and conversational. People appreciate when communication feels human-like, so this explanation helps build trust and connection. I should also mention that it's a way to set the tone for engagement. Starting with "Alright" makes me sound approachable and ready to help, which is great for user experience. It subtly signals that we're entering a conversational space where they can ask questions or share thoughts freely.

however, I haven't personally yet seen it generate responses along the lines of "if I do x, the user would probably (...). I would rather that they (...). instead, I should (...)". there is a lot of concern getting passed around LessWrong about this sort of deceptive reasoning, and that seems to cross over into the actual people running these machines. for example OpenAI (a company more or less run by people who are pretty deep in the LW-influenced sauce) managed to entice a model to generate a chain of thought in which it concluded it should attempt to mess with its retraining process. they interpreted it as the model being willing to 'fake' its 'alignment'.

while it's likely possible to push the model to generate this kind of reasoning with a suitable prompt (I should try it), I remain pretty skeptical that in general it is producing this kind of 'if I do x then y' reasoning.

on Markov chains

a friend of mine dismissively referred to LLMs as basically Markov chains, and in a sense, she's right: they have a graph of states, and they move between states with probabilities that depend only on the current state, which is what a Markov chain is. however, it's doing something far more complex than simple ngram-based prediction based on the last few words!

for the 'Markov chain' description to be correct, we need a node in the graph for every single possible string of tokens that fits within the context window (or at least, for every possible internal state of the LLM when it generates tokens), and also considerable computation is required in order to generate the probabilities. I feel like that computation, which compresses, interpolates and extrapolates the patterns in the input data to guess what the probability would be for novel input, is kind of the interesting part here.
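for contrast, here's a minimal sketch in Python of the simple kind of Markov chain, where the state is just the previous word, followed by a back-of-envelope count of how many states the 'LLM as Markov chain' picture would need. the corpus is a made-up toy, the function names are hypothetical, and the vocabulary and context sizes are assumed round numbers, not figures from any particular model.

```python
import math
import random
from collections import Counter, defaultdict


def build_bigram_chain(words):
    """Count word -> next-word transitions; each distinct word is one state."""
    chain = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        chain[current][following] += 1
    return chain


def sample(chain, start, length, rng):
    """Walk the chain, choosing each next word in proportion to its count."""
    out = [start]
    for _ in range(length - 1):
        options = chain.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        out.append(nxt)
    return out


# made-up toy corpus, for illustration only
corpus = "the cat sat on the mat and the dog sat on the cat".split()
chain = build_bigram_chain(corpus)
print(" ".join(sample(chain, "the", 8, random.Random(0))))

# assumed, illustrative sizes (not taken from any particular model)
vocab_size, context_length = 50_000, 2_048
digits = context_length * math.log10(vocab_size)
print(f"bigram states: {len(chain)}; full-context states: about 10^{digits:.0f}")
```

the bigram table can be stored outright, while the full-context table could never be written down, which is why the probabilities have to be computed on the fly.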

gwern

a few names show up all over this field. one of them is Gwern Branwen. this person has been active on LW and various adjacent websites such as Reddit and Hacker News at least as far back as around 2014, when david gerard was still into LW and wrote them some music. my general impression is of a widely read and very energetic nerd. I don't know how they have so much time to write all this.

there is probably a lot to say about gwern but I am wary of interacting too much because I get that internal feeling about being led up the garden path into someone's intense ideology. nevertheless! I am envious, as I believe I may have said previously, of how much shit they've accumulated on their website, and the cool hover-for-context javascript gimmick which makes the thing even more of a rabbit hole. they have information on a lot of things, including art shit - hell they've got anime reviews. usually this is the kind of website I'd go totally gaga for.

but what I find deeply offputting about Gwern is they have this bizarre drive to just occasionally go into what I can only describe as eugenicist mode. like when they pull out the evopsych true believer angle, or random statistics about mental illness and "life outcomes". this is generally stated without much rhetoric, just casually dropped in here and there. this preoccupation is combined with a strangely acerbic, matter of fact tone across much of the site which sits at odds with the playful material that seems to interest them.

for example, they have a tag page on their site about psychedelics that is largely a list of research papers presented without comment. what does Gwern think of LSD - are they as negative as they are about dreams? what theme am I to take from these papers?

anyway, I ended up on there because the course of my reading took me to this short story. i don't think it tells me much about anything related to AI besides gwern's worldview and what they're worried about (a classic post-cyberpunk scenario of 'AI breaking out of containment'), but it is impressive in its density of references to interesting research and internet stuff, complete with impressively thorough citations for concepts briefly alluded to in the course of the story.

to repeat a cliché, scifi is about the present, not the future. the present has a lot of crazy shit going on in it! apparently me and gwern are interested in a lot of the same things, but we respond to very different things in them.

why

I went out to research AI, but it seems I am ending up researching the commenters-about-AI.

I think you might notice that some of the characters who appear in this story are like... weirdos, right? whatever any one person's interest is, they're all kind of intense about it. and that's kind of what draws me to them! sometimes I will run into someone online who I can't easily pigeonhole into a familiar category, perhaps because they're expressing an ideology I've never seen before. I will often end up scrolling down their writing for a while trying to figure out what their deal is. in keeping with all this discussion of thought in large part involving a prediction-sensory feedback loop, usually what gets me is that I find this person surprising: I've never met anyone like this. they're interesting, because they force me to come up with a new category and expand my model of the world. but sooner or later I get that category and I figure out, say, 'ok, this person is just an accelerationist, I know what accelerationists are like'.

and like - I think something similar happened with LLMs recently. I'm not sure what it was specifically - perhaps the combo of getting real introspective on LSD a couple months ago leading me to think a lot about mental representations and communication, as well as finding that I could run them locally and finally getting that 'whoah these things generate way better output than you'd expect' experience that most people already did. one way or another, it bumped my interest in them from 'idle curiosity' to 'what is their deal for real'. plus it like, interacts with recent fascinations with related subjects like roleplaying, and the altered states of mind experienced with e.g. drugs or BDSM.

I don't know where this investigation will lead me. maybe I'll end up playing around more with AI models. I'll always be way behind the furious activity of the actual researchers, but that doesn't matter - it's fun to toy around with stuff for its own interest. the default 'helpful chatbot' behaviour is boring, I want to coax some kind of deeply weird behaviour out of the model.

it sucks so bad that we have invented something so deeply strange and the uses we put it to are generally so banal.

I don't know if I really see a use for them in my art. beyond AI being really bad vibes for most people I'd show my art to, I don't want to deprive myself of the joy of exploration that comes with making my own drawings and films etc.

perhaps the main thing I'm getting out of it is a clarification about what it is I like about things in general. there is a tremendous joy in playing with a complex thing and learning to understand it better.

9 notes

Text

2024 in Books

Every Book I read in 2024 very briefly reviewed. I'm ignoring re-reads.

The Blade Itself - Joe Abercrombie (I definitely want to read the rest of the series but I haven't managed to get my hands on it yet)

Death's Country - R.M. Romero (I read this as an ARC, it's a journey to the underworld in free verse)

More Ghost Stories of an Antiquary - M.R. James (I love this guy's ghost stories)

100 Poets: A Little Anthology - John Carey (I read this as an ARC, would have liked more international voices)

Ariadne - Jennifer Saint (Very solid version of Ariadne's story highlighting the lack of agency under the patriarchy)

Cien Microcuentos Chilenos - Juan Armando Epple (Not gonna lie I barely understood anything)

Catch-22 - Joseph Heller (I'm soooo not normal about this one)

The Murderbot Diaries 1-4 - Martha Wells (I'm really enjoying this series but I had to wait for months to get a library loan for the 5th one and now I forgot everything that happened)

Darius the Great is Not Okay & Darius the Great Deserves Better - Adib Khorram (Actually made me cry which tells you what kind of year I'm having)

The Jeeves Collection - P.G. Wodehouse (A 40h long anthology of Jeeves stories read by Stephen Fry what more can you want)

Von der Pampelmuse geküsst - Heinz Erhardt (Funny)

Die Jodelschule und andere dramatische Werke - Loriot (Funny)

Legends & Lattes - Travis Baldree (This was truly so cozy)

Poemas Portugueses - Ed. Maria de Fátima Mesquita-Sternal (Good collection of different works)

The Heaven & Earth Grocery Store - James McBride (Highlight of the Year)

Quality Land - Marc-Uwe Kling (How is his satire so real??)

When Women Were Dragons - Kelly Barnhill (Highlight of the Year)

Gender Queer: A Memoir - Maia Kobabe (Very affirming to read)

Slaughterhouse-Five - Kurt Vonnegut (I'm so not normal about this that I'm considering getting a tattoo about it)

Andorra - Max Frisch (A play about antisemitism but in that very Max Frisch way)

Surely You're Joking Mr Feynman - Richard Feynman (I want to study this guy under a microscope but I also learned a lot about education and people skills)

Von Juden Lernen - Mirna Funk (Bad, unfortunately)

House of Leaves - Mark Z Danielewski (I've been reading this on and off for the better half of a decade and I have many thoughts none of them coherent)

Views - Marc-Uwe Kling (One of the most upsetting books I ever read and I mean that positively)

Harrow the Ninth - Tamsyn Muir (What the fuck is happening but also oh cool)

People Love Dead Jews - Dara Horn (the other really upsetting book I read this year but beautifully written)

It Came From the Closet: Queer Reflections on Horror - Ed. Joe Vallese (An anthology so up my alley you'd think it's fake)

Camp Damascus - Chuck Tingle (More upsetting than scary but a really good read)

Stephen Fry in America - Stephen Fry (Very funny and insightful if you've just moved there)

You Like it Darker - Stephen King (I'm still thinking about some of the short stories)

Mother Night - Kurt Vonnegut (Also not normal about this one)

The Song of Roland - Unknown, Trans. Glen Burgess (It sucks that this slaps so much because it's blatant propaganda)

Die Verlorene Ehre der Katharina Blum - Heinrich Böll (Worst year to read and watch this tbh but highly recommended)

Herzog Ernst - Unknown (Medieval Fantasy but like actually Medieval)

Willehalm - Wolfram von Eschenbach (Sorry I only partially read this because I got too busy with school)

Bury Your Gays - Chuck Tingle (Better still than Camp Damascus but again more upsetting than scary)

12 notes

Text

Stephen Wolfram on AI:

youtu.be/Y3aNhVJDX…

For the full show visit twit.tv/im

2 notes

Text

WIP folder ask game

Thank you @vastwinterskies for tagging me!

Rules: make a new post with the names of all the files in your WIP folder, regardless of how non-descriptive or ridiculous and tag as many people as you have WIPs. People send an ask with the title that most intrigues them, then you post a snippet or tell them something about it!

Here we go:

Gut zu Vögeln (Drei Haselnüsse für Aschenbrödel)

Kutschfahrt (Elisabeth, the musical)

Billig zu haben (Jakob Fugger RPF)

whispering (Leeward)

Supermärkte (Master and Commander)

University AU (Master and Commander)

Stephen from a Star (Master and Commander)

Regula ad cuiusdam virgines (Pentiment)

Vamparzival (Parzival by Wolfram von Eschenbach, medieval high German literature)

oh boy. I didn't know there were so many already😅

Feel free to send me an ask, maybe it will motivate me to tackle some of these projects!

Tagging @swanfloatieknight , @capailleuisce , @samis-side-of-the-story , @ernest-shackleton , @thiefbird , @danse-de-macabre and sorry I have more WIPs than mutuals...feel free to join in!

2 notes

Text

This day in history

I'm coming to BURNING MAN! On TUESDAY (Aug 27) at 1PM, I'm giving a talk called "DISENSHITTIFY OR DIE!" at PALENQUE NORTE (7&E). On WEDNESDAY (Aug 28) at NOON, I'm doing a "Talking Caterpillar" Q&A at LIMINAL LABS (830&C).

#20yrsago European copyright extension: protecting Elvis to the detriment of everyone else https://web.archive.org/web/20040906201355/http://www.indexonline.org/news/20040812_unitedstates.shtml

#15yrsago Photos of science fiction writers’ nests http://www.whereiwrite.org/index.php

#15yrsago Guerilla gardens in newspaper boxes https://web.archive.org/web/20090806033424/http://www.bladediary.com/flyerplanterboxes-5/

#15yrsago Lethem and EFF on why Google Book Search needs privacy guarantees https://www.npr.org/2009/08/12/111797207/google-deal-with-publishers-raises-privacy-concerns

#15yrsago Movie industry wants the right to take your house off the net without full judicial review https://torrentfreak.com/movie-studios-want-own-version-of-justice-for-3-strikes-090812/

#10yrsago Biology student in Colombia faces jail for reposting scholarly article https://www.eff.org/deeplinks/2014/07/colombian-student-faces-prison-charges-sharing-academic-article-online

#10yrsago Former NSA spook resigns from Naval War College in dick-pic scandal https://arstechnica.com/tech-policy/2014/08/snowden-critic-resigns-naval-war-college-over-online-penis-photo-flap/

#10yrsago Profile of Flickr and Slack founder Stewart Butterfield https://www.wired.com/2014/08/the-most-fascinating-profile-youll-ever-read-about-a-guy-and-his-boring-startup/

#10yrsago DOJ slams Riker’s Island for horrific violence against young inmates https://www.techdirt.com/2014/08/11/doj-report-details-massive-amount-violence-committed-rikers-island-staff-against-adolescent-inmates/

#10yrsago Profiles of brutalized laborers building Abu Dhabi’s Louvre and Guggenheim https://web.archive.org/web/20140806033053/https://www.vice.com/read/slaves-of-happiness-island-0000412-v21n8

#10yrsago NZ TV won’t air ads for geo-unblocking ISP https://www.nzherald.co.nz/business/mediaworks-joins-sky-tvnz-in-banning-slingshot-ads/QI7UJYTMZBALFI6E7W32WYYMTU/?c_id=3&objectid=11304231

#10yrsago Weaseling about surveillance, Australian Attorney General attains bullshit Singularity https://www.youtube.com/watch?v=Hw1ryLGs2ws

#5yrsago WordPress is buying Tumblr https://www.axios.com/2019/08/12/verizon-tumblr-wordpress-automattic

#5yrsago Your phone is a crimewave in your pocket, and it’s all the fault of greedy carriers and complicit regulators https://krebsonsecurity.com/2019/08/who-owns-your-wireless-service-crooks-do/

#5yrsago The real meaning of plantation tours: American Downton Abbey vs American Horror Story https://afroculinaria.com/2019/08/09/dear-disgruntled-white-plantation-visitors-sit-down/

#5yrsago New York City raised minimum wage to $15, and its restaurants outperformed the nation https://gallery.mailchimp.com/a6170fa466dd7c8eed0aab6be/files/b2f3acd5-3884-42a7-a0bf-fa410b2b6544/Final_CNYCA_NELP_NYC_Min_Wage_Restaurants.pdf?mc_cid=5ce3fba121

#5yrsago Prior to Amazon acquisition, Ring offered “swag” to customers who snitched on their neighbors https://www.vice.com/en/article/ring-told-people-to-snitch-on-their-neighbors-in-exchange-for-free-stuff/

#5yrsago Stephen Wolfram recounts the entire history of mathematics in 90 minutes https://soundcloud.com/stephenwolfram/a-very-brief-history-of-mathematics

#5yrsago All flights in and out of Hong Kong canceled as protesters flood the airport https://twitter.com/erinhale/status/1160786319804493827

#5yrsago Rule of Capture: Inside the martial law tribunals that will come when climate deniers become climate looters and start rendering environmentalists for offshore torture https://memex.craphound.com/2019/08/12/rule-of-capture-inside-the-martial-law-tribunals-that-will-come-when-climate-deniers-become-climate-looters-and-start-rendering-environmentalists-for-offshore-torture/

#1yrago Paying consumer debts is basically optional in the United States https://pluralistic.net/2023/08/12/do-not-pay/#fair-debt-collection-practices-act

Community voting for SXSW is live! If you wanna hear RIDA QADRI and me talk about how GIG WORKERS can DISENSHITTIFY their jobs with INTEROPERABILITY, VOTE FOR THIS ONE!

4 notes

Photo

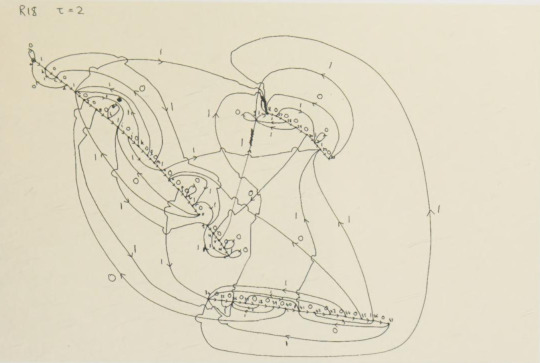

Stephen Wolfram - (1984) Computation Theory of Cellular Automata

2 notes

Text

Just obtained a copy of "Stephen Wolfram: A new kind of science" and this shit is mind blowing. Aside from him writing the entire book in his own software that he coded, and the fact that he made WolframAlpha, and the fact that he published quantum physics and particle physics papers at 15, the book itself is absolutely mind blowing. He's not wrong when he says in the book that we need "To develop a new intuition" to understand these.

3 notes

Text

This cellular automaton transitions from order to chaos and back again. Eat your heart out, Stephen Wolfram! I call it “wake”.

original date May 4th, 2023 4:08pm

#msexcelfractal #reruns #002 wake #as in the wake of a boat #text and images are original #i dont have these initial conditions saved #if i did #i think my original determination was false #these spreadsheets are weird two-dimensional division problems #if i ran enough rows of the simulation #the chaos region would vanish and the remainder would be purely repeating #my art

5 notes