#targeted ai solutions legit

Text

Targeted AI Solutions Review – Real Info About AI Solutions

Welcome to my Targeted AI Solutions Review Post. This is a real user-based Targeted AI Solutions Review where I will focus on the features, upgrades, demo, pricing and bonus how Targeted AI Solutions can help you, and my opinion. Unlock Local Marketing’s Future and Business Growth with the End of Expensive Consultants. Just Smart AI Solutions

Total AI Solutions is ready to help you realise your goal. Consider having access to top-tier marketing and company development assistance whenever you want, without having to pay the high rates of conventional consultants. Total AI Solutions provides a cost-effective, AI-powered solution that is accessible 24 hours a day, seven days a week. It’s all offered via an easy-to-use chat interface, making it as simple as texting a buddy. Total AI Solutions provides more than simply guidance. It offers focused tactics supported by data-driven insights to assist you in implementing changes quickly and seeing results quicker. Stop pondering what you should do next. Total AI Solutions gives the assistance you need, when you want it, to propel your company forward.

<<Get Amplify with my Special Bonus Bundle to Get More Traffic, Leads & Commissions >>

Targeted AI Solutions Review: What Is Targeted AI Solutions?

Targeted AI Solutions is created by IM Wealth Builders, expert product builders known for their innovation and pragmatism. Their creative goods have made them famous in internet marketing. Imagine having a 24/7 staff of digital marketing and company development experts at your disposal. Imagine a future where strategic advice, creative content, and faultless execution in expanding your company or improving client services are simply the norm. As an experienced company owner, I’ve felt the pain of obtaining top-tier knowledge that drives growth. Traditional path? It takes time and money to hire expensive experts. DIY method? The many obligations you handle everyday make life daunting.

Targeted AI Solutions Review: Overview

Creator: Matt Garrett

Product: Targeted AI Solutions

Date Of Launch: 2024-Jan-04

Time Of Launch: 11:00 EDT

Front-End Price: $17

Product Type: Software (online)

Support: Effective Response

Discount : Get The Best Discount Right Now!

Recommended: Highly Recommended

Skill Level Required: All Levels

Refund: YES, 30 Days Money-Back Guarantee

Targeted AI Solutions Review: Key Features

The 12 Marketing Titans Council: Dive into the minds of 12 AI experts, each a maestro in a crucial domain of business growth and digital marketing. From SEO virtuosos to social media mavens, they constitute your council of digital dominance.

Customized Strategies from AI Maestros: These aren’t mere aides. They are AI-powered strategists delivering personalized, actionable plans finely tuned to your business objectives.

24/7 Support: Your business never sleeps, and neither do our AI experts. They stand ready around the clock, ensuring you have support whenever inspiration strikes or challenges arise.

Cost-Effective Expertise: Bid farewell to exorbitant consultant fees. Total AI Solutions offers the wisdom of top-tier marketing experts at a fraction of the cost.

Adaptable Wisdom: As your business evolves, so does our AI. Our platform scales its expertise to meet your expanding needs, guaranteeing you stay ahead of the curve.

Swift Strategy Implementation: Translate advice into action with agility. Our AI experts furnish detailed, easy-to-implement strategies, accelerating your journey to success.

Universal Relevance: Irrespective of your industry, Total AI Solutions is primed to elevate your business. Our AI expertise is versatile, adaptable, and universally applicable.

User-Friendly Interface: Engage with our AI experts through an intuitive chat interface. No steep learning curve, just straightforward, effective communication.

Targeted AI Solutions Review: Can Do For You

Easy Accessibility Expert: Ensuring your digital presence is inclusive and accessible to all

Google Business Guru: Optimizing your visibility and impact on Google

Citation Champion: Mastering the art of business listings for maximum reach

Reputation Guardian: Safeguarding and enhancing your online reputation

Video Marketing Magician: Conjuring compelling video content that captivates and converts

Website Wizard and Designer: Crafting and beautifying your digital storefront

AI Services Virtuoso: Unleashing the power of AI for innovative solutions

On-Page & Technical SEO Sensei: Fine-tuning your website for peak search engine performance

Link Building Luminary: Constructing a network of quality links to boost your SEO

Social Media and Content Marketing Maverick: Driving engagement and brand loyalty through social media and content

Pay Per Click Ads Ace: Maximizing your ROI on ad spend

Security Solutions Sentinel: Fortifying your digital assets against threats

Targeted AI Solutions Review: Who Should Use It?

Business Owners

Bloggers

Content Creators

Digital Agencies

Educators and Trainers

Entertainment Industry Professional

Influence’s and Celebrities

Marketing Professionals

Photographers & Videographers

Social Media Marketer

Technology Users

Targeted AI Solutions Review: OTO’S And Pricing

Front-End Price: Targeted AI Solutions ($17)

OTO 1: TAIS Additional Niche Experts Library ($27/47)

OTO 2: TAIS Niche Expert Creation Module ($67)

OTO 3: TAIS Niche Experts Client Access ($97)

OTO 4: TAIS Bespoke Experts Lifetime License Buyout ($147)

Targeted AI Solutions Review: Free Bonuses

Bonus 1: Email Crafter AI Software (Value $197)

AI-Powered Email Writer.

Automatically create complete email sequences in a single click

Enter a URL, or describe your content, and Email Crafter will analyze it and write your emails

Whitelabel email template system: add your email sequences and emails for your users to use

We host it: nothing to download, you can be up and running in minutes.

Create a single email, or 100’s of emails in 1 go.

One-click copy system to easily take emails from the app into your autoresponder.

200+ Emails in 26 different email sequences. 1,000 Emails available soon.

1-click regeneration of produced emails

End-user email sequences: users can write their email prompts or give the app their writing as an example, then let the app write emails in their voice

Bonus 2: AI Enhanced List Building Launchpad – 30-Day Playbook! (Value $197)

Teach Businesses How to Build an Email List So They Can Generate More Leads, Customers, and Revenue!

In the ever-evolving digital landscape, the difference between businesses that thrive and those that merely survive is often the effectiveness of their online strategy. The AI Enhanced List Building Launchpad 30-Day Playbook isn’t just a guide; it’s a promise. A promise that by the end of these 30 days, your business will be able to turn on your “email list profit faucet” any time you want to drive revenue like never before.

Bonus 3: Full Commercial Licence Included (Value $197)

That means you generate content, training, guidance, ads, social media posts, e-books, and more with your Digital Marketing Experts. You can use the power of the AI experts to build your own business AND you can give, sell rent, or offer as service-generated content!

Three video series covering

How To Start A Consultancy Business (4 videos)

How To Find And Close Clients (3 videos)

How To Sell Marketing And Digital Services (11 videos)

Conclusion

Targeted AI Solutions will revolutionise corporate development by seamlessly integrating artificial intelligence with innovation for entrepreneurs and marketers. This innovative platform removes obstacles to profit-driven knowledge by providing personalised, strategic consultations from top experts at any time and place. Targeted AI Solutions offers lifelong access to superior digital skills at a very low price. Success stories and a money-back guarantee make this platform essential for aspiring people in all fields. Take advantage of this disruptive innovation at its present price before rates raise.

Frequently Asked Questions (FAQ)

Q. Can I use Targeted AI Solutions on Mac?

Absolutely, Targeted AI Solutions is a web-based app accessible on a computer with an internet connection.

Q. What else do I need to make this work?

Targeted AI Solutions use the OpenAI API. So you will need an Open AI account and an API key. The account is free with over 3 million tokens for 3 months included.

Q. Do I need to purchase credits?

Targeted AI Solutions uses Open AI extensively. Start by creating a free Open AI account with 1.5 million characters of credit. After your initial credits run out, token use is pay-as-you-go. Around $1 every 350,000 characters, tokens are cheap.

Q. Does this software support all countries?

While the application’s user interface is in English, our experts have the flexibility to respond in any requested language or your local language if necessary. Regardless, our experts will always tailor their responses specifically to your location.

Q. What exactly does the AI do?

AI drives the 12 professionals, who each have a distinct skill set for offering digital services to local clientele. SEO, Google My Business, Citation Management, Reputation Management, etc. By communicating directly with these professionals, the AI helps you understand consumer behaviour, learn new skills, improve service delivery, build successful marketing campaigns, generate documentation, create social media content, and more.

Q. Why is Targeted AI Solutions such a low price?

Special launch pricing. This application should engage as many individuals as feasible. We think Targeted AI Solutions is great software and that users would agree. We want testimonials and comments as part of the deal. Help us market and develop the software.

Q. What kind of license do I get with software?

You get full access and a commercial license. That means you can sell or distribute any content generated by your experts.

Q. Does this app work in any niche or business?

Absolutely, Your specialists are designed for local business. They work with home, commercial, healthcare, beauty and well-being, legal, hospitality, transport, and other businesses.

Q. How do I get my bonuses?

The bonuses are integrated into the app. Navigate to the bottom of the home page and access the “Bonuses” menu item.

<<Get Amplify with my Special Bonus Bundle to Get More Traffic, Leads & Commissions >>

Check Out My Other Reviews Here! – Amplify App Review, AI NexaSites Review, $1K PAYDAYS Review, FaceSwap Review, Chat Bot AI Review.

Thank for reading my Targeted AI Solutions Review till the end. Hope it will help you to make purchase decision perfectly.

#buy targeted ai solutions#get targeted ai solutions#How to make money online#make money online#Make Money Online 2024#make money tutorials#targeted ai solutions#targeted ai solutions app by Matt Garrett#targeted ai solutions app review#targeted ai solutions bonus#targeted ai solutions demo#targeted ai solutions discount#targeted ai solutions legit#targeted ai solutions oto#targeted ai solutions preview#targeted ai solutions review#targeted ai solutions scam

0 notes

Note

Notice how the rising stories from China and in villages across India are reporting that families are still mass killing their baby girls make the pro aborts quiet? They claim to be feminists but the choice is always at the expense of a baby girl being murdered for being a girl. Always. There’s no place on earth where baby boys are being mass killed in a system that tells families that boys have no value.

Now those countries are killing themselves because they don’t have any women left to marry their sons too. There’s already kidnapping from nearby areas but that’s not sustainable. On top of that, boys in primary school who consume content like Tat3 or these masculinity podcasts are academically falling faster because they’re more concerned over being “masculine and dominant” over the female teachers and classmates. The future seems to be full of single, violent, unintelligent/uneducated men who hate women because the girls who haven’t been killed in the womb or as infants are accomplished and educated but vastly outnumbered. Males will use every excuse to justify their hatred from religion like islam to just being violent. There would be pick mes that support those men tho. I watched a tiktok of a woman at the gym (who I think either had her account banned or went private) say the worst things for her male viewers. The worst I saw was how she said that men should return women back to god (so kill us) and the comments (the males) all agreed. But even so, she won’t be protected by them because at the end of the day, being a woman means you have a target on your back that will only get bigger as time goes on. So I hope pro aborts are happy that they think giving the choice to kill babies were worth it. Because this world despises women, even in the womb so that choice will always kill us.

They're quiet about it because they can't shove them abortion as the solution of this problem ¯\_(ツ)_/¯ pro abortion aren't solution focused, they just want to elevate abortion as the be all and end all of reproductive justice. But what are they gonna do when women are going to profit off access to abortion to have safer sex elective abortion? They'll go *shocked face Pikachu ", that's all

Female redpilled grifter are trendy because pandering to incels is very profitable. The good news is that it's pretty much a phase and that most of these women will get over in ~10 years or tone down their act big time (à la Lauren Southern), the bad news is that they will always be a new flock of idiot to take over the torch AND having the audience to follow.

Even here in France we have them red pill trad chicks associated to far right movement saying slut shaming is good and that feminism is the devil (Thaïs d'Escufon is a piece of work lol), and they are fairly popular in their nice. Just sprinkle "woke" here and there and they reach 100k+ views every few days.

She (Thaïs) once made a collab video with a french manosphere scrote, and they humiliated her asking her to pick some coffee and basically putting her down into her 'female place', and french twitter clowned her saying "well that's what sd was asking for ¯\_(ツ)_/¯". There was also an audio leak from this french Nationalist vocal chatroom where she threw a fit bc apparently nationalist scrotes were interested dating Asian or African wife bc they were "more traditional". She was fuming bc it was a cultural and racial waste 💀 (FYI she's a legit White supremacist. But also a VERY dumb one. She once posted on Twitter an AI image of these "perfect man & woman" and used the fact they were White to argue that White people were objectively the prettiest 💀 .... girl didn't get that AI wasn't "neutral")

It's was very interesting to see her seething at seeing her White men lusting after women. You'd think with all the attention she gets, she'd have a ring on her finger yet, but homegirl is in her mid 20, still not married, but patronizing men peepee to keep them on check (which is a lost cause of course bc most men are animals). This dissonance is very weird... She's very much like Pearl. There has to be something very off with them or their surrounding if they can't find a man. I mean, according to their own logic of "women main purpose to breed to pursue the (white) race".

5 notes

·

View notes

Text

Affiliate Begins With AI legit or hype?

In today’s rapidly evolving digital marketing landscape, one technology is rising above all others—Artificial Intelligence (AI). For affiliate marketers, staying ahead of the competition has become a matter of leveraging AI effectively. The role of AI in affiliate marketing is more than just a trend; it’s a revolution that’s reshaping how businesses promote products, engage audiences, and generate revenue. As digital marketing continues to shift, the message is clear: the future of affiliate marketing begins with AI, and those who embrace it will thrive in this highly competitive field.

The Importance of Staying Ahead in Affiliate Marketing

Affiliate marketing has always been a dynamic industry, constantly adapting to new technologies, platforms, and consumer behaviors. Whether you’re a seasoned marketer or just stepping into the world of affiliate marketing, the ability to stay ahead of the curve is critical for success. The competition is fierce, and only those who innovate will capture attention, drive traffic, and convert sales. This is where AI steps in, providing tools and capabilities that no human team can match in terms of speed, accuracy, and scale.

✅ WATCH THE VIDEO ✅

AI is no longer a futuristic concept but a present reality that is transforming how marketers operate. In a space where every click counts, every conversion matters, and customer expectations are higher than ever, using AI-powered tools is no longer optional but necessary.

What is AI in Affiliate Marketing?

Artificial Intelligence in affiliate marketing refers to the use of machine learning algorithms, data analytics, and automation technologies to streamline and enhance the processes involved in affiliate marketing. From automating content creation and email marketing to optimizing ad placements and audience targeting, AI’s reach is vast. But at its core, AI's main role is to process and analyze massive amounts of data, providing actionable insights that improve marketing strategies and increase conversion rates.

AI in affiliate marketing offers several advantages, such as:

Data-Driven Insights: AI algorithms process large sets of data to provide real-time insights into customer behavior, preferences, and buying patterns. This helps marketers make informed decisions and adjust campaigns for better performance.

Automation of Routine Tasks: Time-consuming tasks like audience segmentation, lead nurturing, and content scheduling can be fully automated using AI. This frees up marketers to focus on strategy and creativity.

Predictive Analytics: AI-powered tools can predict future trends, enabling marketers to anticipate what products or services will be in demand and optimize their promotional efforts accordingly.

Personalization at Scale: With AI, personalization is not limited to small customer segments but can be applied to individual users, creating a highly customized and engaging experience.

Enhanced Customer Engagement: Chatbots and AI-powered communication tools can engage customers instantly, providing answers and solutions in real time, leading to better user experiences and increased conversions.

How AI is Revolutionizing Affiliate Marketing

AI is revolutionizing affiliate marketing by turning data into a goldmine of actionable insights. Let’s explore the key areas where AI is making the most significant impact:

Hyper-Targeted Content Creation

Content remains king, but AI has transformed how content is created and targeted. By analyzing user data, AI tools can generate hyper-targeted content designed to resonate with specific audience segments. This allows affiliate marketers to create personalized messages that speak directly to the needs, desires, and pain points of individual users. Whether it’s blog posts, social media ads, or email campaigns, AI helps optimize the content creation process for higher engagement and better conversions.

For example, AI-driven tools like GPT-4 can analyze top-performing content in a specific niche and create similar content tailored to your audience. By learning from millions of data points, these tools can predict what type of content will perform best for your target demographic, helping marketers consistently produce high-quality, relevant content that converts.

Advanced Audience Targeting

Audience targeting is the cornerstone of successful affiliate marketing. Traditionally, marketers relied on manual methods to segment audiences based on demographics or behaviors. But AI takes audience targeting to a whole new level. Using sophisticated algorithms, AI can identify and categorize audiences based on much more complex factors, including behavioral patterns, purchase histories, and even social media activity.

AI-driven audience segmentation tools allow affiliate marketers to create hyper-specific groups based on a range of data points, ensuring that each marketing message reaches the right audience at the right time. This leads to more personalized user experiences and higher conversion rates. By leveraging AI, marketers can refine their targeting strategies continuously, improving the effectiveness of their campaigns over time.

Predictive Analytics for Smarter Marketing

One of the most powerful applications of AI in affiliate marketing is predictive analytics. AI tools can process historical data and identify patterns to predict future behaviors and trends. This is particularly valuable in affiliate marketing, where the ability to anticipate market shifts or changing consumer preferences can mean the difference between success and failure.

By using predictive analytics, affiliate marketers can make informed decisions about which products to promote, which audiences to target, and how to allocate resources effectively. For example, if an AI tool predicts that a certain product category is about to experience a surge in demand, marketers can adjust their campaigns to focus on those products, maximizing their potential profits.

Real-Time Data Optimization

AI allows affiliate marketers to optimize their campaigns in real time. Instead of waiting weeks or months to assess the success of a campaign, AI-powered tools provide immediate feedback, enabling marketers to adjust their strategies on the fly. This level of responsiveness is crucial in the fast-paced world of digital marketing, where trends can change overnight.

✅==> Click Here to Buy at an Exclusively Discounted Price Now!✅

AI tools analyze performance metrics like click-through rates, conversion rates, and engagement levels, identifying areas that need improvement. Based on these insights, marketers can make data-driven adjustments to their campaigns, such as changing ad copy, tweaking targeting parameters, or reallocating budgets. This continuous optimization ensures that campaigns are always performing at their highest potential.

Automating Routine Tasks

One of the most significant benefits of AI is its ability to automate routine tasks, allowing marketers to focus on higher-level strategy and creativity. AI-powered tools can handle tasks like email marketing, lead nurturing, and even customer service through chatbots. This not only saves time but also ensures consistency and accuracy across all marketing efforts.

For example, AI-driven email marketing platforms can automatically segment your audience, personalize email content, and schedule campaigns based on user behavior. Chatbots powered by AI can handle customer inquiries in real time, providing instant answers and resolving issues without human intervention. This level of automation allows affiliate marketers to scale their efforts without compromising on quality.

Boosting Conversion Rates with Personalization

Personalization is a proven method for increasing conversion rates, and AI takes it to the next level by enabling personalized experiences at scale. By analyzing user data, AI tools can create unique marketing experiences for each individual user, tailoring recommendations, offers, and content to their specific preferences.

For example, AI-driven recommendation engines can suggest products to users based on their browsing history or past purchases, increasing the likelihood of conversion. Personalized email campaigns can deliver highly relevant content to users, improving engagement and driving sales. The result is a more personalized customer journey that leads to higher conversion rates and greater customer satisfaction.

The Future of Affiliate Marketing with AI

The integration of AI into affiliate marketing is still in its early stages, but the future is incredibly promising. As AI technology continues to evolve, we can expect even more advanced tools and capabilities to emerge, further transforming the affiliate marketing landscape.

In the near future, AI could enable entirely automated marketing campaigns that require minimal human intervention, with algorithms optimizing every aspect of the process, from content creation to audience targeting. Virtual reality and augmented reality, combined with AI, could offer immersive, personalized shopping experiences that engage users on a deeper level, leading to even higher conversion rates.

Conclusion

Affiliate marketing has always been about staying ahead of the curve, and the future of the industry undoubtedly lies in the power of AI. From automating routine tasks to providing data-driven insights and personalized experiences, AI is transforming how affiliate marketers operate.

The time to embrace AI is now. Those who leverage this technology will not only keep up with the competition but lead the way into a new era of marketing success. In the world of affiliate marketing, it’s clear: Affiliate begins with AI, and the future belongs to those who master it.

Affiliate Disclosure: Affiliate Links are used in this content i will receive a little commission if you puchase any product using one of the links in this post. but there are no additional cost for you

0 notes

Text

Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card

Independent adviser Steven van Belleghem presents an absorbing eyes on the chump acquaintance of the day afterwards tomorrow in this IDG webinar. He sees two capital affidavit why the agenda era is about to accept a absolute breakthrough. First of all, Covid-19 has accustomed the apple a blast advance in agenda because there were no added options. And second, we can apprentice from beforehand revolutions (industrial, steam, accumulation production) that deployment sears to abundant heights as the aftereffect of a recession.

12 Pay with GasBuddy App Review: Is It a Legit Way to Save Money? – manage sears credit card | manage sears credit card

In aggregate with accessible technologies as AI, 5G, breakthrough accretion and robotics Steven van Belleghem sees a CX (customer experience) that is predictive, faster than absolute time, aggressive alone and convenient.

His advice? Alpha apperception the ultimate CX and about-face architect the action to accomplish that goal.

At Genesys we absolutely accede and are acquisitive to airing through this action calm with you. Let us biking forth the three advance advance Steven recommends and appearance what is already possible.

Former CD/Game Exchange (The Record Exchange), Cleveland Heights, Ohio – manage sears credit card | manage sears credit card

Integration is key, abnormally back we allocution about data. A lot or organizations still accept actual disparaged abstracts because siloed systems. Integrating systems for CRM, agenda marketing, sales and chump account is footfall one. But appropriately important is to be able to chase the babble with the chump from access to channel. If your chump grabs the buzz and vents his complaint – can your abettor see that this being acclimated the webform yesterday, beatific an email that morning and a absolute bulletin via Twitter? Or does the chump accept to alpha cogent his adventure from scratch?

If you accept a accurate omnichannel acquaintance centermost all this advice is at the fingertips of your agents, allotment them to bear a alone acquaintance absorption at the specific catechism and bearings for the customer.

Integrated abstracts is additionally the abject of advantageous intelligence to abutment acceptable administration decisions on annihilation from business to sales to acquaintance centermost management. For instance, based on actual abstracts Genesys Predictive Engagement can adjudge the best moment to access a website company with an action or abetment to apprehend the about-face from anticipation to buyer.

Target Red Card Login – Manage My RedCard – manage sears credit card | manage sears credit card

In our appearance the chump is consistently in the lead, which includes the best of advice channel. You charge to be there area the chump expects you to be, whether it is on the phone, chat, email or on a concrete location.

Connecting them to the appropriate ability should be accessible and intuitive. No amaranthine IVR choices but AI apprenticed accent acceptance in aggregate with skills-based acquisition for instance, ensuring the best accessible abettor gets to acknowledgment the issue. On top of this we can additionally bear predictive routing; acquisition based on predefined KPI’s. Predictive acquisition is AI assisted and will arbitrate abilities based acquisition if the aftereffect of that acquisition accommodation will advance the authentic KPI.

It sounds actual futuristic: but that best abettor ability be a bot. With accent acceptance it is accessible to actualize a chatty self-service action for almost simple, alternating requests. A acceptable archetype is in this video area a chump is accustomed by her buzz cardinal and asked if she calls about her best contempo order. The bot understands her acknowledgment and reacts by initiating the acknowledgment action including a acceptance email. Another acceptable archetype is a animal abettor handing off the processing of a acclaim agenda acquittal to an automatic process, based on accent acceptance and argument to speech, appropriately ensuring aegis and acquiescence of the banking transaction.

Ten Things To Expect When Attending Sears Credit Card Number | sears credit card number – manage sears credit card | manage sears credit card

But it keeps accepting bigger back bodies and bots are absolutely teaming together. This is what Steven van Belleghem calls Augmented Intelligence area the abettor handles the alternation and is accurate by suggestions from the system. Through accent and argument assay the arrangement can retrieve accordant advice from the ability abject while the abettor talks to the customer. It helps acknowledgment questions but can additionally advance up advertise and cantankerous advertise opportunities.

These are aloof a few examples of what is already accessible at this moment. Share your eyes today of the chump acquaintance you appetite to be able to action the day afterwards tomorrow and we will advice you body it.

Just call, mail or accelerate me a message.

www.searscard.com Login |Make Payment – Sears Credit Card Customer .. | manage sears credit card

Terrence Hotting, Senior Solution Adviser at Genesys [email protected] 31 6 297 268 92

Copyright © 2020 IDG Communications, Inc.

Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card – manage sears credit card

| Allowed to my own website, in this period I’m going to provide you with in relation to keyword. And from now on, this is the primary photograph:

Quick Ways To Make A Sears Credit Card Payment | BrokeMeNot – manage sears credit card | manage sears credit card

Think about photograph over? is usually in which incredible???. if you think maybe consequently, I’l l provide you with several impression all over again below:

So, if you’d like to get these magnificent pics about (Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card), click save icon to store the photos to your laptop. They’re all set for down load, if you appreciate and want to grab it, click save symbol on the post, and it will be instantly down loaded to your computer.} Finally if you want to gain unique and latest photo related to (Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card), please follow us on google plus or save the site, we try our best to give you regular update with fresh and new photos. We do hope you like keeping right here. For most upgrades and recent information about (Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card) graphics, please kindly follow us on twitter, path, Instagram and google plus, or you mark this page on book mark area, We try to provide you with up-date regularly with fresh and new images, like your surfing, and find the best for you.

Here you are at our website, articleabove (Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card) published . Today we’re pleased to announce we have discovered an incrediblyinteresting nicheto be discussed, that is (Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card) Most people attempting to find info about(Seven Facts You Never Knew About Manage Sears Credit Card | manage sears credit card) and definitely one of them is you, is not it?

All You Need to Know about the Sears MasterCard – Tally – manage sears credit card | manage sears credit card

Sears Credit Card Offers – Kmart – manage sears credit card | manage sears credit card

How Apple Pay works under the hood? | by Prashant Ram | codeburst – manage sears credit card | manage sears credit card

Sears Credit Offers Members – Sears – manage sears credit card | manage sears credit card

How to Access a Sears Credit Card Account | LoveToKnow – manage sears credit card | manage sears credit card

www.searscard | manage sears credit card

from WordPress https://www.cardsvista.com/seven-facts-you-never-knew-about-manage-sears-credit-card-manage-sears-credit-card/

via IFTTT

0 notes

Text

AI competitions don’t produce useful models

By LUKE OAKDEN-RAYNER

A huge new CT brain dataset was released the other day, with the goal of training models to detect intracranial haemorrhage. So far, it looks pretty good, although I haven’t dug into it in detail yet (and the devil is often in the detail).

The dataset has been released for a competition, which obviously lead to the usual friendly rivalry on Twitter:

Of course, this lead to cynicism from the usual suspects as well.

And the conversation continued from there, with thoughts ranging from “but since there is a hold out test set, how can you overfit?” to “the proposed solutions are never intended to be applied directly” (the latter from a previous competition winner).

As the discussion progressed, I realised that while we “all know” that competition results are more than a bit dubious in a clinical sense, I’ve never really seen a compelling explanation for why this is so.

Hopefully that is what this post is, an explanation for why competitions are not really about building useful AI systems.

DISCLAIMER: I originally wrote this post expecting it to be read by my usual readers, who know my general positions on a range of issues. Instead, it was spread widely on Twitter and HackerNews, and it is pretty clear that I didn’t provide enough context for a number of statements made. I am going to write a follow-up to clarify several things, but as a quick response to several common criticisms:

I don’t think AlexNet is a better model than ResNet. That position would be ridiculous, particularly given all of my published work uses resnets and densenets, not AlexNets.

I think this miscommunication came from me not defining my terms: a “useful” model would be one that works for the task it was trained on. It isn’t a model architecture. If architectures are developed in the course of competitions that are broadly useful, then that is a good architecture, but the particular implementation submitted to the competition is not necessarily a useful model.

The stats in this post are wrong, but they are meant to be wrong in the right direction. They are intended for illustration of the concept of crowd-based overfitting, not accuracy. Better approaches would almost all require information that isn’t available in public leaderboards. I may update the stats at some point to make them more accurate, but they will never be perfect.

I was trying something new with this post – it was a response to a Twitter conversation, so I wanted to see if I could write it in one day to keep it contemporaneous. Given my usual process is spending several weeks and many rewrites per post, this was a risk. I think the post still serves its purpose, but I don’t personally think the risk paid off. If I had taken even another day or two, I suspect I would have picked up most of these issues before publication. Mea culpa.

Let’s have a battle

Nothing wrong with a little competition.*

So what is a competition in medical AI? Here are a few options:

getting teams to try to solve a clinical problem

getting teams to explore how problems might be solved and to try novel solutions

getting teams to build a model that performs the best on the competition test set

a waste of time

Now, I’m not so jaded that I jump to the last option (what is valuable to spend time on is a matter of opinion, and clinical utility is only one consideration. More on this at the end of the article).

But what about the first three options? Do these models work for the clinical task, and do they lead to broadly applicable solutions and novelty, or are they only good in the competition and not in the real world?

(Spoiler: I’m going to argue the latter).

Good models and bad models

Should we expect this competition to produce good models? Let’s see what one of the organisers says.

Cool. Totally agree. The lack of large, well-labeled datasets is the biggest major barrier to building useful clinical AI, so this dataset should help.

But saying that the dataset can be useful is not the same thing as saying the competition will produce good models.

So to define our terms, let’s say that a good model is a model that can detect brain haemorrhages on unseen data (cases that the model has no knowledge of).

So conversely, a bad model is one that doesn’t detect brain haemorrhages in unseen data.

These definitions will be non-controversial. Machine Learning 101. I’m sure the contest organisers agree with these definitions, and would prefer their participants to be producing good models rather than bad models. In fact, they have clearly set up the competition in a way designed to promote good models.

It just isn’t enough.

Epi vs ML, FIGHT!

If only academic arguments were this cute

ML101 (now personified) tells us that the way to control overfitting is to use a hold-out test set, which is data that has not been seen during model training. This simulates seeing new patients in a clinical setting.

ML101 also says that hold-out data is only good for one test. If you test multiple models, then even if you don’t cheat and leak test information into your development process, your best result is probably an outlier which was only better than your worst result by chance.

So competition organisers these days produce hold-out test sets, and only let each team run their model on the data once. Problem solved, says ML101. The winner only tested once, so there is no reason to think they are an outlier, they just have the best model.

Not so fast, buddy.

Let me introduce you to Epidemiology 101, who claims to have a magic coin.

Epi101 tells you to flip the coin 10 times. If you get 8 or more heads, that confirms the coin is magic (while the assertion is clearly nonsense, you play along since you know that 8/10 heads equates to a p-value of <0.05 for a fair coin, so it must be legit).

Unbeknownst to you, Epi101 does the same thing with 99 other people, all of whom think they are the only one testing the coin. What do you expect to happen?

If the coin is totally normal and not magic, around 5 people will find that the coin is special. Seems obvious, but think about this in the context of the individuals. Those 5 people all only ran a single test. According to them, they have statistically significant evidence they are holding a “magic” coin.

Now imagine you aren’t flipping coins. Imagine you are all running a model on a competition test set. Instead of wondering if your coin is magic, you instead are hoping that your model is the best one, about to earn you $25,000.

Of course, you can’t submit more than one model. That would be cheating. One of the models could perform well, the equivalent of getting 8 heads with a fair coin, just by chance.

Good thing there is a rule against it submitting multiple models, or any one of the other 99 participants and their 99 models could win, just by being lucky…

Multiple hypothesis testing

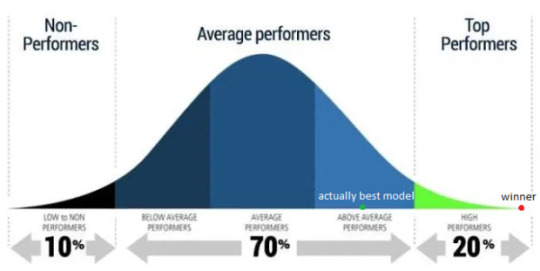

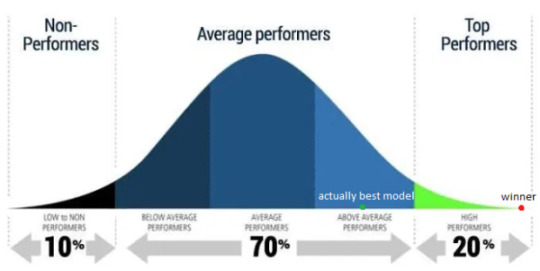

The effect we saw with Epi101’s coin applies to our competition, of course. Due to random chance, some percentage of models will outperform other ones, even if they are all just as good as each other. Maths doesn’t care if it was one team that tested 100 models, or 100 teams.

Even if certain models are better than others in a meaningful sense^, unless you truly believe that the winner is uniquely able to ML-wizard, you have to accept that at least some other participants would have achieved similar results, and thus the winner only won because they got lucky. The real “best performance” will be somewhere back in the pack, probably above average but below the winner^^.

Epi101 says this effect is called multiple hypothesis testing. In the case of a competition, you have a ton of hypotheses – that each participant was better than all others. For 100 participants, 100 hypotheses.

One of those hypotheses, taken in isolation, might show us there is a winner with statistical significance (p<0.05). But taken together, even if the winner has a calculated “winning” p-value of less than 0.05, that doesn’t mean we only have a 5% chance of making an unjustified decision. In fact, if this was coin flips (which is easier to calculate but not absurdly different), we would have a greater than 99% chance that one or more people would “win” and come up with 8 heads!

That is what an AI competition winner is; an individual who happens to get 8 heads while flipping fair coins.

Interestingly, while ML101 is very clear that running 100 models yourself and picking the best one will result in overfitting, they rarely discuss this “overfitting of the crowds”. Strange, when you consider that almost all ML research is done of heavily over-tested public datasets …

So how do we deal with multiple hypothesis testing? It all comes down to the cause of the problem, which is the data. Epi101 tells us that any test set is a biased version of the target population. In this case, the target population is “all patients with CT head imaging, with and without intracranial haemorrhage”. Let’s look at how this kind of bias might play out, with a toy example of a small hypothetical population:

In this population, we have a pretty reasonable “clinical” mix of cases. 3 intra-cerebral bleeds (likely related to high blood pressure or stroke), and two traumatic bleeds (a subdural on the right, and an extradural second from the left).

Now let’s sample this population to build our test set:

Randomly, we end up with mostly extra-axial (outside of the brain itself) bleeds. A model that performs well on this test will not necessarily work as well on real patients. In fact, you might expect a model that is really good at extra-axial bleeds at the expense of intra-cerebral bleeds to win.

But Epi101 doesn’t only point out problems. Epi101 has a solution.

So powerful

There is only one way to have an unbiased test set – if it includes the entire population! Then whatever model does well in the test will also be the best in practice, because you tested it on all possible future patients (which seems difficult).

This leads to a very simple idea – your test results become more reliable as the test set gets larger. We can actually predict how reliable test sets are using power calculations.

These are power curves. If you have a rough idea of how much better your “winning” model will be than the next best model, you can estimate how many test cases you need to reliably show that it is better.

So to find out if you model is 10% better than a competitor, you would need about 300 test cases. You can also see how exponentially the number of cases needed grows as the difference between models gets narrower.

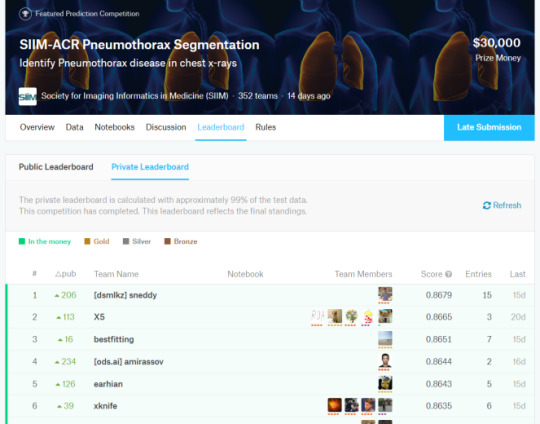

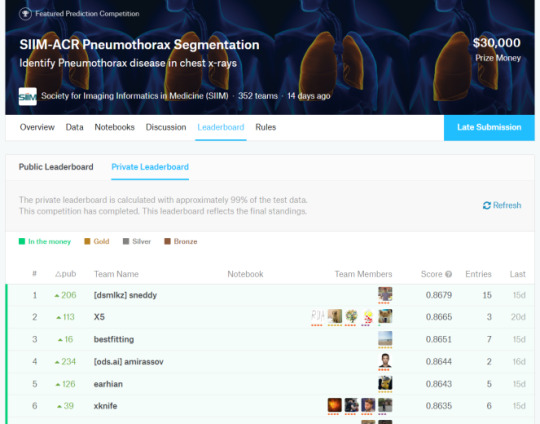

Let’s put this into practice. If we look at another medical AI competition, the SIIM-ACR pneumothorax segmentation challenge, we see that the difference in Dice scores (ranging between 0 and 1) is negligible at the top of the leaderboard. Keep in mind that this competition had a dataset of 3200 cases (and that is being generous, they don’t all contribute to the Dice score equally).

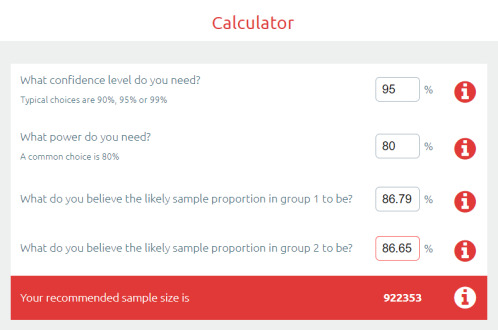

So the difference between the top two was 0.0014 … let’s chuck that into a sample size calculator.

Ok, so to show a significant difference between these two results, you would need 920,000 cases.

But why stop there? We haven’t even discussed multiple hypothesis testing yet. This absurd number of cases needed is simply if there was ever only one hypothesis, meaning only two participants.

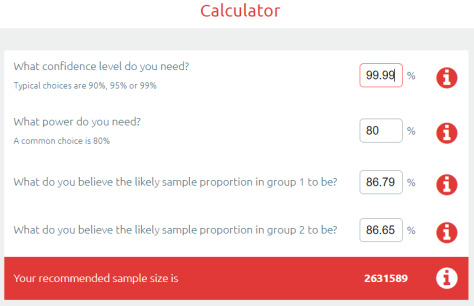

If we look at the leaderboard, there were 351 teams who made submissions. The rules say they could submit two models, so we might as well assume there were at least 500 tests. This has to produce some outliers, just like 500 people flipping a fair coin.

Epi101 to the rescue. Multiple hypothesis testing is really common in medicine, particularly in “big data” fields like genomics. We have spent the last few decades learning how to deal with this. The simplest reliable way to manage this problem is called the Bonferroni correction^^.

The Bonferroni correction is super simple: you divide the p-value by the number of tests to find a “statistical significance threshold” that has been adjusted for all those extra coin flips. So in this case, we do 0.05/500. Our new p-value target is 0.0001, any result worse than this will be considered to support the null hypothesis (that the competitors performed equally well on the test set). So let’s plug that in our power calculator.

Cool! It only increased a bit… to 2.6 million cases needed for a valid result :p

Now, you might say I am being very unfair here, and that there must be some small group of good models at the top of the leaderboard that are not clearly different from each other^^^. Fine, lets be generous. Surely no-one will complain if I compare the 1st place model to the 150th model?

So still more data than we had. In fact, I have to go down to the 192nd placeholder to find a result where the sample size was enough to produce a “statistically significant” difference.

But maybe this is specific to the pneumothorax challenge? What about other competitions?

In MURA, we have a test set of 207 x-rays, with 70 teams submitting “no more than two models per month”, so lets be generous and say 100 models were submitted. Running the numbers, the “first place” model is only significant versus the 56th placeholder and below.

In the RSNA Pneumonia Detection Challenge, there were 3000 test images with 350 teams submitting one model each. The first place was only significant compared to the 30th place and below.

And to really put the cat amongst the pigeons, what about outside of medicine?

As we go left to right in ImageNet results, the improvement year on year slows (the effect size decreases) and the number of people who have tested on the dataset increases. I can’t really estimate the numbers, but knowing what we know about multiple testing does anyone really believe the SOTA rush in the mid 2010s was anything but crowdsourced overfitting?

So what are competitions for?

They obviously aren’t to reliably find the best model. They don’t even really reveal useful techniques to build great models, because we don’t know which of the hundred plus models actually used a good, reliable method, and which method just happened to fit the under-powered test set.

You talk to competition organisers … and they mostly say that competitions are for publicity. And that is enough, I guess.

AI competitions are fun, community building, talent scouting, brand promoting, and attention grabbing.

But AI competitions are not to develop useful models.

* I have a young daughter, don’t judge me for my encyclopaedic knowledge of My Little Pony.**

** not that there is anything wrong with My Little Pony***. Friendship is magic. There is just an unsavoury internet element that matches my demographic who is really into the show. I’m no brony.

*** barring the near complete white-washing of a children’s show about multi-coloured horses.

^ we can actually understand model performance with our coin analogy. Improving the model would be equivalent to bending the coin. If you are good at coin bending, doing this will make it more likely to land on heads, but unless it is 100% likely you still have no guarantee to “win”. If you have a 60%-chance-of-heads coin, and everyone else has a 50% coin, you objectively have the best coin, but your chance of getting 8 heads out of 10 flips is still only 17%. Better than the 5% the rest of the field have, but remember that there are 99 of them. They have a cumulative chance of over 99% that one of them will get 8 or more heads.

^^ people often say the Bonferroni correction is a bit conservative, but remember, we are coming in skeptical that these models are actually different from each other. We should be conservative.

^^^ do please note, the top model here got $30,000 and the second model got nothing. The competition organisers felt that the distinction was reasonable.

Luke Oakden-Rayner is a radiologist (medical specialist) in South Australia, undertaking a Ph.D in Medicine with the School of Public Health at the University of Adelaide. This post originally appeared on his blog here.

AI competitions don’t produce useful models published first on https://wittooth.tumblr.com/

0 notes

Text

AI competitions don’t produce useful models

By LUKE OAKDEN-RAYNER

A huge new CT brain dataset was released the other day, with the goal of training models to detect intracranial haemorrhage. So far, it looks pretty good, although I haven’t dug into it in detail yet (and the devil is often in the detail).

The dataset has been released for a competition, which obviously lead to the usual friendly rivalry on Twitter:

Of course, this lead to cynicism from the usual suspects as well.

And the conversation continued from there, with thoughts ranging from “but since there is a hold out test set, how can you overfit?” to “the proposed solutions are never intended to be applied directly” (the latter from a previous competition winner).

As the discussion progressed, I realised that while we “all know” that competition results are more than a bit dubious in a clinical sense, I’ve never really seen a compelling explanation for why this is so.

Hopefully that is what this post is, an explanation for why competitions are not really about building useful AI systems.

DISCLAIMER: I originally wrote this post expecting it to be read by my usual readers, who know my general positions on a range of issues. Instead, it was spread widely on Twitter and HackerNews, and it is pretty clear that I didn’t provide enough context for a number of statements made. I am going to write a follow-up to clarify several things, but as a quick response to several common criticisms:

I don’t think AlexNet is a better model than ResNet. That position would be ridiculous, particularly given all of my published work uses resnets and densenets, not AlexNets.

I think this miscommunication came from me not defining my terms: a “useful” model would be one that works for the task it was trained on. It isn’t a model architecture. If architectures are developed in the course of competitions that are broadly useful, then that is a good architecture, but the particular implementation submitted to the competition is not necessarily a useful model.

The stats in this post are wrong, but they are meant to be wrong in the right direction. They are intended for illustration of the concept of crowd-based overfitting, not accuracy. Better approaches would almost all require information that isn’t available in public leaderboards. I may update the stats at some point to make them more accurate, but they will never be perfect.

I was trying something new with this post – it was a response to a Twitter conversation, so I wanted to see if I could write it in one day to keep it contemporaneous. Given my usual process is spending several weeks and many rewrites per post, this was a risk. I think the post still serves its purpose, but I don’t personally think the risk paid off. If I had taken even another day or two, I suspect I would have picked up most of these issues before publication. Mea culpa.

Let’s have a battle

Nothing wrong with a little competition.*

So what is a competition in medical AI? Here are a few options:

getting teams to try to solve a clinical problem

getting teams to explore how problems might be solved and to try novel solutions

getting teams to build a model that performs the best on the competition test set

a waste of time

Now, I’m not so jaded that I jump to the last option (what is valuable to spend time on is a matter of opinion, and clinical utility is only one consideration. More on this at the end of the article).

But what about the first three options? Do these models work for the clinical task, and do they lead to broadly applicable solutions and novelty, or are they only good in the competition and not in the real world?

(Spoiler: I’m going to argue the latter).

Good models and bad models

Should we expect this competition to produce good models? Let’s see what one of the organisers says.

Cool. Totally agree. The lack of large, well-labeled datasets is the biggest major barrier to building useful clinical AI, so this dataset should help.

But saying that the dataset can be useful is not the same thing as saying the competition will produce good models.

So to define our terms, let’s say that a good model is a model that can detect brain haemorrhages on unseen data (cases that the model has no knowledge of).

So conversely, a bad model is one that doesn’t detect brain haemorrhages in unseen data.

These definitions will be non-controversial. Machine Learning 101. I’m sure the contest organisers agree with these definitions, and would prefer their participants to be producing good models rather than bad models. In fact, they have clearly set up the competition in a way designed to promote good models.

It just isn’t enough.

Epi vs ML, FIGHT!

If only academic arguments were this cute

ML101 (now personified) tells us that the way to control overfitting is to use a hold-out test set, which is data that has not been seen during model training. This simulates seeing new patients in a clinical setting.

ML101 also says that hold-out data is only good for one test. If you test multiple models, then even if you don’t cheat and leak test information into your development process, your best result is probably an outlier which was only better than your worst result by chance.

So competition organisers these days produce hold-out test sets, and only let each team run their model on the data once. Problem solved, says ML101. The winner only tested once, so there is no reason to think they are an outlier, they just have the best model.

Not so fast, buddy.

Let me introduce you to Epidemiology 101, who claims to have a magic coin.

Epi101 tells you to flip the coin 10 times. If you get 8 or more heads, that confirms the coin is magic (while the assertion is clearly nonsense, you play along since you know that 8/10 heads equates to a p-value of <0.05 for a fair coin, so it must be legit).

Unbeknownst to you, Epi101 does the same thing with 99 other people, all of whom think they are the only one testing the coin. What do you expect to happen?

If the coin is totally normal and not magic, around 5 people will find that the coin is special. Seems obvious, but think about this in the context of the individuals. Those 5 people all only ran a single test. According to them, they have statistically significant evidence they are holding a “magic” coin.

Now imagine you aren’t flipping coins. Imagine you are all running a model on a competition test set. Instead of wondering if your coin is magic, you instead are hoping that your model is the best one, about to earn you $25,000.

Of course, you can’t submit more than one model. That would be cheating. One of the models could perform well, the equivalent of getting 8 heads with a fair coin, just by chance.

Good thing there is a rule against it submitting multiple models, or any one of the other 99 participants and their 99 models could win, just by being lucky…

Multiple hypothesis testing

The effect we saw with Epi101’s coin applies to our competition, of course. Due to random chance, some percentage of models will outperform other ones, even if they are all just as good as each other. Maths doesn’t care if it was one team that tested 100 models, or 100 teams.

Even if certain models are better than others in a meaningful sense^, unless you truly believe that the winner is uniquely able to ML-wizard, you have to accept that at least some other participants would have achieved similar results, and thus the winner only won because they got lucky. The real “best performance” will be somewhere back in the pack, probably above average but below the winner^^.

Epi101 says this effect is called multiple hypothesis testing. In the case of a competition, you have a ton of hypotheses – that each participant was better than all others. For 100 participants, 100 hypotheses.

One of those hypotheses, taken in isolation, might show us there is a winner with statistical significance (p<0.05). But taken together, even if the winner has a calculated “winning” p-value of less than 0.05, that doesn’t mean we only have a 5% chance of making an unjustified decision. In fact, if this was coin flips (which is easier to calculate but not absurdly different), we would have a greater than 99% chance that one or more people would “win” and come up with 8 heads!

That is what an AI competition winner is; an individual who happens to get 8 heads while flipping fair coins.

Interestingly, while ML101 is very clear that running 100 models yourself and picking the best one will result in overfitting, they rarely discuss this “overfitting of the crowds”. Strange, when you consider that almost all ML research is done of heavily over-tested public datasets …

So how do we deal with multiple hypothesis testing? It all comes down to the cause of the problem, which is the data. Epi101 tells us that any test set is a biased version of the target population. In this case, the target population is “all patients with CT head imaging, with and without intracranial haemorrhage”. Let’s look at how this kind of bias might play out, with a toy example of a small hypothetical population:

In this population, we have a pretty reasonable “clinical” mix of cases. 3 intra-cerebral bleeds (likely related to high blood pressure or stroke), and two traumatic bleeds (a subdural on the right, and an extradural second from the left).

Now let’s sample this population to build our test set:

Randomly, we end up with mostly extra-axial (outside of the brain itself) bleeds. A model that performs well on this test will not necessarily work as well on real patients. In fact, you might expect a model that is really good at extra-axial bleeds at the expense of intra-cerebral bleeds to win.

But Epi101 doesn’t only point out problems. Epi101 has a solution.

So powerful

There is only one way to have an unbiased test set – if it includes the entire population! Then whatever model does well in the test will also be the best in practice, because you tested it on all possible future patients (which seems difficult).

This leads to a very simple idea – your test results become more reliable as the test set gets larger. We can actually predict how reliable test sets are using power calculations.

These are power curves. If you have a rough idea of how much better your “winning” model will be than the next best model, you can estimate how many test cases you need to reliably show that it is better.

So to find out if you model is 10% better than a competitor, you would need about 300 test cases. You can also see how exponentially the number of cases needed grows as the difference between models gets narrower.

Let’s put this into practice. If we look at another medical AI competition, the SIIM-ACR pneumothorax segmentation challenge, we see that the difference in Dice scores (ranging between 0 and 1) is negligible at the top of the leaderboard. Keep in mind that this competition had a dataset of 3200 cases (and that is being generous, they don’t all contribute to the Dice score equally).

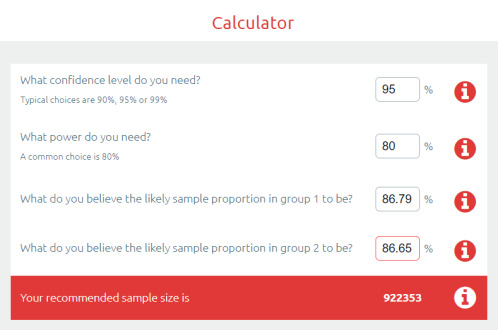

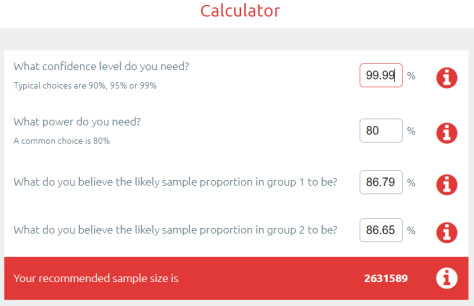

So the difference between the top two was 0.0014 … let’s chuck that into a sample size calculator.

Ok, so to show a significant difference between these two results, you would need 920,000 cases.

But why stop there? We haven’t even discussed multiple hypothesis testing yet. This absurd number of cases needed is simply if there was ever only one hypothesis, meaning only two participants.

If we look at the leaderboard, there were 351 teams who made submissions. The rules say they could submit two models, so we might as well assume there were at least 500 tests. This has to produce some outliers, just like 500 people flipping a fair coin.

Epi101 to the rescue. Multiple hypothesis testing is really common in medicine, particularly in “big data” fields like genomics. We have spent the last few decades learning how to deal with this. The simplest reliable way to manage this problem is called the Bonferroni correction^^.

The Bonferroni correction is super simple: you divide the p-value by the number of tests to find a “statistical significance threshold” that has been adjusted for all those extra coin flips. So in this case, we do 0.05/500. Our new p-value target is 0.0001, any result worse than this will be considered to support the null hypothesis (that the competitors performed equally well on the test set). So let’s plug that in our power calculator.

Cool! It only increased a bit… to 2.6 million cases needed for a valid result :p

Now, you might say I am being very unfair here, and that there must be some small group of good models at the top of the leaderboard that are not clearly different from each other^^^. Fine, lets be generous. Surely no-one will complain if I compare the 1st place model to the 150th model?

So still more data than we had. In fact, I have to go down to the 192nd placeholder to find a result where the sample size was enough to produce a “statistically significant” difference.

But maybe this is specific to the pneumothorax challenge? What about other competitions?

In MURA, we have a test set of 207 x-rays, with 70 teams submitting “no more than two models per month”, so lets be generous and say 100 models were submitted. Running the numbers, the “first place” model is only significant versus the 56th placeholder and below.

In the RSNA Pneumonia Detection Challenge, there were 3000 test images with 350 teams submitting one model each. The first place was only significant compared to the 30th place and below.

And to really put the cat amongst the pigeons, what about outside of medicine?

As we go left to right in ImageNet results, the improvement year on year slows (the effect size decreases) and the number of people who have tested on the dataset increases. I can’t really estimate the numbers, but knowing what we know about multiple testing does anyone really believe the SOTA rush in the mid 2010s was anything but crowdsourced overfitting?

So what are competitions for?

They obviously aren’t to reliably find the best model. They don’t even really reveal useful techniques to build great models, because we don’t know which of the hundred plus models actually used a good, reliable method, and which method just happened to fit the under-powered test set.

You talk to competition organisers … and they mostly say that competitions are for publicity. And that is enough, I guess.

AI competitions are fun, community building, talent scouting, brand promoting, and attention grabbing.

But AI competitions are not to develop useful models.

* I have a young daughter, don’t judge me for my encyclopaedic knowledge of My Little Pony.**

** not that there is anything wrong with My Little Pony***. Friendship is magic. There is just an unsavoury internet element that matches my demographic who is really into the show. I’m no brony.

*** barring the near complete white-washing of a children’s show about multi-coloured horses.

^ we can actually understand model performance with our coin analogy. Improving the model would be equivalent to bending the coin. If you are good at coin bending, doing this will make it more likely to land on heads, but unless it is 100% likely you still have no guarantee to “win”. If you have a 60%-chance-of-heads coin, and everyone else has a 50% coin, you objectively have the best coin, but your chance of getting 8 heads out of 10 flips is still only 17%. Better than the 5% the rest of the field have, but remember that there are 99 of them. They have a cumulative chance of over 99% that one of them will get 8 or more heads.

^^ people often say the Bonferroni correction is a bit conservative, but remember, we are coming in skeptical that these models are actually different from each other. We should be conservative.

^^^ do please note, the top model here got $30,000 and the second model got nothing. The competition organisers felt that the distinction was reasonable.

Luke Oakden-Rayner is a radiologist (medical specialist) in South Australia, undertaking a Ph.D in Medicine with the School of Public Health at the University of Adelaide. This post originally appeared on his blog here.

AI competitions don’t produce useful models published first on https://venabeahan.tumblr.com

0 notes

Text

Thin Content & SEO | How to Avoid a Google Thin Content Penalty

We live in a world of information overload. If 10 years ago it was hard to find content at all, now there’s way too much of it! Which one is good? Which one is bad? We don’t know.

While this subject is very complex, it’s clear that Google is attempting to solve these content issues in its search results. One of the biggest issues they’ve encountered in the digital marketing world is what they call thin content.

But what exactly is thin content? Should you worry about it? Can it affect your website’s SEO in a negative way? Well, thin content can get your site manually penalized but it can also sometimes send your website in Google’s omitted results. If you want to avoid these issues, keep reading!

What Is Thin Content & How Does It Affect SEO?

Is Thin Content Still a Problem in 2019?

How Does Thin Content Affect SEO?

Where Is Thin Content Found Most Often?

How to Identify Thin Content Pages

How to Fix Thin Content Issues & Avoid a Google Penalty

Make sure your site looks legit

Add more content & avoid similar titles

Don’t copy content

Web design, formatting & ads

Video, images, text, audio, etc.

Deindex/remove useless pages

1. What Is Thin Content & How Does It Affect SEO?

Thin content is an OnPage SEO issue that has been defined by Google as content with no added value.

When you’re publishing content on your website and it doesn’t improve the quality of a search results page at least a little bit, you’re publishing thin content.

For a very dull example, when you search Google for a question such as “What color is the sky?” and there’s an article out there saying “The sky is blue!”, if you publish an article with the same answer you would be guilty of adding no value.

So does it mean that this article is thin content because there are other articles about thin content out there?

Well.. no. Why? Because I’m adding value to it. First, I’m adding my own opinion, which is crucial. Then, I’m trying to structure it as logically as possible, address as many important issues as I can and cover gaps which I have identified from other pieces.

Sometimes, you might not have something new to say, but you might have a better way of saying it. To go back to our example, you could say something like “The sky doesn’t really have a color but is perceived as blue by the human eye because of the way light scatters through the atmosphere.”

Of course, you would probably have to add at least another 1500 words to that to make it seem like it’s not thin. It’s true. Longer content tends to rank better in Google, with top positions averaging about 2000 words.

How your content should be to rank

Sometimes, you might add value through design or maybe even through a faster website. There are multiple ways through which you can add value. We’ll talk about them soon.

From the Google Webmaster Guidelines page we can extract 4 types of practices which are strictly related to content quality. However, they are not easy to define!

Automatically generated content: Simple. It’s content created by robots to replace regular content, written by humans. Don’t do it. But… some AI content marketing tools have become so advanced that it’s hard to distinguish between real and automatically generated content. Humans can write poorly too. Don’t expect a cheap freelancer who writes 1000 words for $1 to have good grammar and copy. A robot might be better. But theoretically, that’s against the rules.

Thin affiliate pages: If you’re publishing affiliate pages which don’t include reviews or opinions, you’re not providing any new value to the users compared to what the actual store is already providing on their sales page.

Scraped or copied content: The catch here is to have original content. If you don’t have original content, you shouldn’t be posting it to claim it’s yours. However, even when you don’t claim it’s yours, you can’t expect Google to rank it better than the original source. Maybe there can be a reason (better design, faster website) but, generally, nobody would say it’s fair. Scraping is a no no and Google really hates it.

Doorway pages: Doorway pages are pages created to target and rank for a variety of very similar queries. While this is bad in Google’s eyes, the search giant doesn’t provide an alternative to doorway pages. If you have to target 5-10 similar queries (let’s say if you’re doing local SEO for a client), you might pull something off with one page, but if you have to target thousands of similar queries, you won’t be able to do it. A national car rental service, for example, will always have pages which could be considered doorways.

If you want, you can listen to Matt Cutts’ explanation from this video.

youtube

As you can see, it all revolves around value. The content that you publish must have some value to the user. If it’s just there because you want traffic, then you’re doing it wrong.

But value can sometimes be hard to define. For some, their content might seem as the most valuable, while for others it might seem useless. For example, one might write “Plumbing services New York, $35 / hour, Phone number”. The other might write “The entire history of plumbing, How to do it yourself, Plumbing services New York, $35 / hour, Phone number.”

Which one is more relevant? Which one provides more value? It really depends on the user’s intent. If the user just wants a plumber, they don’t want to hear about all the history. They just want a phone number and a quick, good service.

However, what’s important to understand is that there is always a way to add value.

In the end, it’s the search engine that decides, but there are some guidelines you can follow to make sure Google sees your content as valuable. Keep reading and you’ll find out all about them. But first, let’s better understand why thin content is still an issue and how it actually affects search engine optimization.

1.1 Is Thin Content Still a Problem in 2019?

The thin content purge started on February 23, 2011 with the first Panda Update. At first, Google introduced the thin content penalty because many people were generating content automatically or were creating thousands of irrelevant pages.

The series of further updates were successful and many websites with low quality content got penalized or deranked. This pushed site owners to write better content.

Unfortunately, today this mostly translates to longer content. The more you write, the more value you can provide, right? We know it’s not necessarily the case, but as I’ve said, longer content does tend to rank better in Google. Be it because the content makes its way up there or because the search engine is biased towards it… it’s hard to tell.

But there’s also evidence that long form content gets more shares on social media. This can result in more backlinks, which translates to better rankings. So it’s not directly the fact that the content is long, but rather an indirect factor related to it.

It’s kind of ironic, as Google sometimes uses its answer boxes to give a very ‘thin’ answer to questions that might require more context to be well understood.

However, in 2019 it’s common SEO knowledge that content must be of high quality. The issue today shifts to the overload of content that is constantly being published. Everything is, at least to some extent, qualitative.

But it’s hard to get all the information from everywhere and you don’t always know which source to rely on or trust. That’s why content curation has been doing so well lately.

This manifests itself in other areas, especially where there’s a very tough competition, such as eCommerce.

1.2 How Does Thin Content Affect SEO?

Google wants to serve its users the best possible content it can. If Google doesn’t do that, then its users won’t return to Google and could classify it as a poor quality service. And that makes the search engine unhappy.

Google generally applies a manual action penalty to websites it considers to contain thin content. You will see it in the Google Search Console (former Google Webmaster Tools) and it looks like this:

However, your site can still be affected by thin content even if you don’t get a warning from Google in your Search Console account. That’s because you’re diluting your site’s value and burning through your crawl budget.

The problem that search engines have is that they constantly have to crawl a lot of pages. The more pages you give it to crawl, the more work it has to do.

If the pages the search engine crawls are not useful for the users, then Google will have a problem with wasting its time on your content.

1.3 Where Is Thin Content Found Most Often?

Thin content is found most of the time on bigger websites. For the sake of helping people that really need help, let’s exclude spammy affiliate websites and automated blogs from this list.

Big websites, like eCommerce stores, often have a hard time coming up with original, high quality content for all their pages, especially for thousands of product pages.

In the example above, you can see that although the Product Details section under the image is expanded, there’s no content there. This means that users don’t have any details at all about the dress. All they know is that it’s a dress, it’s black and it costs about $20.

This doesn’t look too bad when you’re looking as a human at a single page, but when you’re a search engine and take a look at thousands and thousands of pages just like this one, then you begin to see the issue.

The solution here is to add some copy. Think of what users want to know about your product. Make sure you add the details about everything they might want to know and make them easily accessible!

Sometimes, thin content makes its way into eCommerce sites unnoticed. For example, you might have a category page which hosts a single product. Compared to all your other categories or competitor websites,that can be seen as thin content.

2. How to Identify Thin Content Pages

If we are referring merely to its size, then thin content can be easily identified using the cognitiveSEO Tool’s Site Audit.

Did you know?

Identifying thin content is actually really easy with a tool like cognitiveSEO Site Audit. The tool has a Thin Content section where you can easily find the pages with issues.

It’s as simple as that! Once you have your list, you can export it and start adding some content to those pages. This will improve their chances to make it to the top of the search results.

However, you also want to take a look at the duplicate content section in the Site Audit tool. This can also lead to a lot of indexation & content issues.

Extremely similar pages can be “combined” using canonical tags. Sometimes it can be a good idea to remove them completely from the search engine results.

3. How to Fix Thin Content Issues & Avoid a Google Penalty

Sometimes, you can fix thin content issues easily, especially if you get a manual penalty warning. At least if your website isn’t huge. If you have thousands of pages, it might take a while till you can fix them.

Here’ s a happy ending case from one of Doug Cunnington’s students:

youtube

However, the “penalty” can also come from the algorithm and you won’t even know it’s there because there is no warning. It’s not actually a penalty, it’s just the fact that Google won’t rank your pages because of their poor quality.