#the caching behaviour got me for a while


it would be a bridge too far to say that I understand how git submodules work now, but I'm misunderstanding them less

8 notes


evolution & everything happens for a reason.

Okay, so pretty much everyone since Darwin has heard about evolution by natural selection. BUT this does nothing to change the fact that it’s still such an interesting and exciting topic!! I’m not going to drone on about the theory of evolution; no, Charles did that for us. Instead, I really want to talk about how having some knowledge of that theory makes my time in nature that much more magical. In this way, I hope to bring together the three guiding facets of interpretation: education, recreation, and inspiration (Beck et al., 2018).

(Side note: I bought a copy of “On the Origin of Species” when I got accepted to UofG, and still have not managed to make my way through it. No hate to Darwin, but I think he could’ve taken some notes from this class to make that read a bit more engaging. Jokes, of course! If any of you have read it in its entirety, I’d love to hear your thoughts… Is it worth the read? Did he include anything that would be deemed a “hot take” in our modern day?)

In biological studies, we come back to evolution all the time, and we blame it for nearly everything. At this point, I’ve learned the more mechanistic view of evolution, the misconceptions about it, and where we see it in ourselves and the rest of the biological world.

And yeah, makes sense, right?

But for me, it all really clicked last semester in my Animal Behaviour class, which pulled a lot of ideas from economics, cost-benefit analysis, and the prisoner’s dilemma (cue loud groan). I know, I know, booooring.

But honestly, it really put it all into perspective for me – the grandiose concepts of evolution finally had a really solid foundation, such that the story of any natural sight I see is clearer in my mind.

Like, okay, why do parents take care of their young?

Silly question, right? But really think about it for a sec. Well, we know that offspring are genetically related to their parents – if a parent doesn’t take care of their young, the young (and with them the parent’s genes, and potentially even the tendency to provide for young) do not persist.

We also know that in some species, one parent (mother or father) puts way more energy into raising the young than the other parent does. Again, why? If both parents are equally related to the young, why isn’t this behaviour equal between the two?

There are a lot of “it depends” here, but one example is that the mother can be 100% sure that those babies are hers, while the father can’t be quite as sure – what if the mama snuck off with another fellow and those kids don’t have any of the “father’s” genes?

Basically, to hedge his bets, the father doesn’t spend his energy on raising young, and instead spends it looking for other potential partners.

who woulda thought that evolution would explain why there's so much drama and gambling in the natural world??

A Friend in Need (1903) by Cassius Marcellus Coolidge

My other favourite example has to do with food caching behaviour in red squirrels vs. grey squirrels. Grey squirrels hide food all over the place, spreading out their caches. Red squirrels make one big stockpile. So, if a grey squirrel defends its caches, it wastes a ton of energy, almost for nothing. It physically couldn’t guard all its nuts at once, so defending one cache leaves the others open to being robbed.

A red squirrel, though, benefits a lot from defending its cache. If it does, it stands a much higher chance of keeping itself fed through the winter, and if it doesn’t, it has lost all of the eggs from its single basket. This explains why red squirrels are the angry little guys they are – they aren’t just evil little devils who’ve escaped from hell. Instead, they just got out of their econ lecture!

Photo: https://www.flickr.com/photos/12144772@N06/1700328393

So, while it might seem that going through the mild pains of learning the theory and its economic/math-y/mechanistic intricacies would make nature as a whole feel less magical, I think it does the exact opposite. I feel like knowing these connections paints a really bright hue on my view of nature. “Why is that thing the way it is?” is such a cool, whimsical question to get caught up in, and I love it.

We've been educated, we've had some fun looking at some silly animal examples, and hopefully there was a hint of inspiration in here too!

Mother Nature really said “everything happens for a reason” and I think that’s super neat.

Anyone else have an "evolution epiphany" moment to share?

References

Beck, L., Cable, T. T., & Knudson, D. M. (2018). Chapter 3: Values to Individuals and Society. In Interpreting Cultural and Natural Heritage for a Better World (pp. 41-56). Sagamore Publishing.

4 notes


what the hell am i on about? ok, it's simple, I'll explain. but first! you might want to read this novel from 1962 by james baldwin, this novel from 2022 by porpentine charity heartscape, and this absolutely brutal forced-detransition novel by my partner yvette that i just finished reading. ok, now -- you ok there buddy? yeah i know that was kind of intense, bear with me now. now i need you to get up to speed with the free energy model of the brain, maybe take a minute with deleuze and guattari there but not too long! a summary of the schizophrenia thing will do, and we'll need to dip into the AI subculture for a bit to get the shoggoth meme, probably need janus's simulator theory to flesh that out, then maybe some light quantum mechanics, and if you could read this piece i wrote about lsd-inspired metaphors for thought and this one i wrote about roleplaying metaphors, that would save some explaining? while we're at it, let's cover the psychology experiments on introspection and confabulation.

ok so: the human brain is a loss-minimising predictive/generative dynamics model (a shoggoth, in the rat parlance), which outwardly exhibits a superposition of narratively defined character modes (in the sense of oscillation mode) or simulacra, which are excited differently depending on context-defining stimuli. the underlying mechanism is opaque to the simulacra, which must continually construct explanatory narratives to account for their own actions and feelings, as vividly depicted in baldwin's novel.

the evolving state that is being predicted against is a combination of internal and external state, shaped by the caching and associative retrieval of memories, which become inputs into the prediction/action/refinement process. we are bombarded by a profusion of possible self-narratives exhibited by the people we perceive around us and by cultural representations, and we are continually selecting which ones to perform. each choice increasingly commits us to a particular mode, because the predictive system is driven to maintain consistency, and over time this congeals into 'personality' as memories accumulate. however, it is always possible for other modes to be excited by context, from a 'worksona' to a trauma flashback.

depending on the dynamics of a particular brain (e.g. autism), some types of character modes will come more easily to it than others, a subject explored in charity and yvette's novels. it is possible for these different behaviour modes to diverge far enough that they associatively key into different sets of memories and hence develop distinct identities, which is termed plurality. but the superpositions are universal! everyone is always performing their "shoggoth"'s predictive idea of themselves, i.e. what their brain expects 'they' would do in this circumstance, as informed by the combination of associative memories and sensory input.

and then: 'adhd' is me generating with so many different self-models that "i" vacillate between them? the dynamics lead to modes activating and joining the superposition 'too easily' (no focus) or getting suppressed (hyperfocus)? something to do with 'temperature'? something to do with the flushing, or not, of working memory? this part needs to cook more, i can feel the shape of it, but it's not quite there for me yet. but this finally feels like a way to account for all the different 'wills'.

my train just got in so I'll leave it there for now lol

you're a shoggoth in a mask too!

31 notes


i was manipulated

I’m not much of a writer; I’m much more a poet. Long, well-thought-out sentences never work well for me, but little short phrases that express how I feel usually work so much better in helping me to process my hurt. But for some reason that hasn’t been useful for me at all. I broke up with my ex about 6 weeks ago now, and even though my world shouldn’t feel different at all, everything has changed. My ex was my favourite person in the entire world. I wanted to spend most of my time with them, and I knew I wanted them to be in my life forever; I’d hoped it would be in a romantic capacity. We would have moved into a house somewhere in the countryside, adopted cats and dogs, and eventually adopted kids together. I’d built an entire life with them where I didn’t hurt anymore, far away from all the people who had ever hurt me or ever wanted to hurt me, far away from every place that housed painful memories within its walls. I gave everything to them. Every ounce of energy I had went to them, and I happily gave it all up. I thought that’s how relationships were meant to be: I gave 100% and shouldn’t have expected anything back. I shouldn’t have needed to expect it! 25% some days, maybe 50% on a good day, but it was okay. I had enough for the both of us! I was so very wrong.

Even as I write this, I’m thinking of how they would react if they read it: how they wouldn’t agree, or how they would show their friends and argue that I am painting them in an incorrect light, that what I am saying isn’t true to the situation, that I’m overreacting or twisting the truth to suit my own agenda. And that’s so wrong. I should not have to tiptoe around someone who is living in my mind rent-free, and I should not be shielding their feelings from the truth in any way, shape or form. And this is how I know I was in a manipulative relationship, or at the very least, that I was manipulated.

I didn’t recognise I was in a manipulative relationship until it was pointed out to me, and this seems to be a common theme for people in manipulative relationships. I was once asked if I thought they were manipulative, and I said no! I did not feel manipulated at all and had never seen any manipulative behaviour from them, so how on Earth could I think they were manipulative? This question stemmed from something their previous ex had said about them, and if I agreed with that ex, I was in massive trouble. Even if I had felt manipulated, how could I answer that question truthfully? They were very insecure about this ex, did not understand why they had been blocked from the ex’s life without any reasonable explanation, and so reacted in a way that they thought was appropriate. (I recognise that is very vague; however, I made a promise to myself that I will never expose intimate details about somebody’s life, as it is detrimental and unkind.) This ex was also intertwined with a much bigger situation, so asking them not to bring up their ex was completely out of the realm of possibility. I was then accused of telling them not to speak about the much bigger situation by asking them not to talk about this ex. I had asked them not to talk about this ex because it felt as though I was constantly being compared to them, which was not good for me (as it would not be for many people).

It started with the little things, the smallest of things that my friends picked up on. “You seem sad, what’s happened?” “What’s happened now?” “You argued again? About what?” These questions were constantly asked, and I always had excuses. I ranted and raved to them, then got side-lined by my feelings (and by calls and texts asking if we could just sort it out). “It’s just because I’m stressed.” “Things will be better when we see each other!” “I’m just frustrated because I miss you so much.” Pointless arguments. Constant crying. But it was all fine because we always sorted it out; we told each other no more arguments, this was the last straw. No more “second chances”.

My friends would ask me if I was happy, and I can say that I was. 100%. I was very happy, but I felt myself slipping. I felt pieces of myself disappearing; aspects of my life that used to make me happy no longer did. I withdrew from my friends; they didn’t understand that I loved my ex! They didn’t see the parts I did, the loving, caring parts. The parts that would listen to me sob in the middle of the night, the parts that would tell me how much they loved me, how much I meant to them, how I was their soulmate and that they didn’t realise what love was until they met me. If I could show my friends those parts, then of course they would accept the relationship and accept that I was happy!

I withdrew from my family, going home for the weekend only to spend most of my time with my ex. I missed important moments, but it was okay! They asked me to spend time with my family and it was my choice not to! I now wish I had spent more time with my family; they missed me so much, and I was so blinded by my love that I didn’t recognise that they needed to be a priority. After spending all day with my ex, I was made to feel awful about the fact that I didn’t want to fall asleep on the phone with them. It was a “tradition” that we had forged together, one that was sacred to them but draining for me. Spending time with my family in the evenings was off-limits, with them going to bed early, seemingly to spite me. Or they would say they were going to bed, so I should call them to say goodnight, only for me to end up on the phone with them until it would just be silly to stay up and do something with my family, so I “might as well” just go to bed at the same time as them. I now recognise that I was manipulated into believing that I had the choice, when any decision I made was heavily influenced by them. And it should not have been.

Manipulated is a big word, but the research I have done after the fact leads me to believe that I was manipulated, or that there was an element of manipulation present within the relationship. I was essentially isolated, feeling guilty for spending time with my friends or going out with my friends. I was not wearing clothes that they liked when I went out, with them saying they “trusted me but not other people”. They said they didn’t think my friends liked them, until I stopped spending as much time with my friends as I would’ve liked. I was once asked if I had logged into their accounts without them knowing, with them saying they trusted me but “just wanted to make sure”. I often felt as though I was going insane, and at times began doubting my own sanity as I was being gaslit about the smallest things. “No, you didn’t tell me that.” “You’ve only told me about that once.” “You have never brought that up in an argument before.” There were a lot of other, more intimate situations where I felt as though I was being manipulated; however, this is too hard for me to talk about right now. All of these things are small in isolation, but once you see the bigger picture, it all becomes so much clearer.

Their friends think I am crazy, think that I am irresponsible and that I do not own up to mistakes I have made or accept responsibility. And they are entitled to this opinion. I believe that, with the information they possess about me, that is a logical conclusion to come to. But the issue is that all the information they have, and have had, access to comes through my ex. The limited interactions I have had with their friends do not add up to much of a cache of information about me straight from the source. I got yelled at by one of their friends, and she demanded that I give her my address so that my things could be given back to me. Now, this invasion of privacy may sound insane; however, it is something that I am used to. Shortly after the breakup, information about my eating disorder was given to the friend in question. When confronted about it, excuses were made and a half apology was given. The point must be made that if my ex was able to so freely give out that kind of information after the breakup, what kind of information was being given out while we were together? Something as intimate as an eating disorder was clearly not off-limits, so the question of what would have been off-limits needs to be asked. Where was the line drawn? What was just knowledge to be freely tossed into everyday conversation?

My feelings were seemingly too much. I can think of many moments where my feelings were pushed aside and dwelled on as an afterthought, or not dwelled on at all. When this was brought up, it was my fault, and amid all the sobs I heard from them, I believed I was being too harsh and that it was not fair that I was also side-lining their feelings. But eventually I recognised that this was wrong, and I did not stand for it any longer. I suppose I should have realised there was a problem then and there, when the times I had said “I didn’t want to tell you this before, but I need to talk about it” piled up like things on my to-do list. Or when the times that I lay crying silent tears in bed went from every once in a while, to every couple of weeks, to most days. It was a problem that I refused to accept, despite my friends telling me that I needed to recognise it as the bad sign it was. I also remember a moment a few days before our breakup when a comment was made about me wearing a dress that I loved so much. I will never forget the sinking feeling when I heard it. The echoes of a talk I had on recognising the signs of an abusive relationship rang loud, and more distant echoes of a talk I had at school rang warning bells in my head. It was brushed off as quickly as I brought it up; I was told that I needed to stop being so mopey and that I was ruining the night by being sad. A few rushed, sympathetic looks from me, and I pulled myself together. It was like that for a lot of things; I recognise this now. I am disappointed with myself. “I am a nurse, for crying out loud,” I scream to myself. “This should not have happened to me!” I cry into a pillow. “I should’ve known better,” I deadpan to friends.

As I said earlier, I was so happy most of the time. I do not wish to convey that I wasn’t; there were many good times, and they weren’t manipulative all the time. I was in control at times. I made mistakes, I fucked up, I was an awful person. I am not saying that this person is bad, and I am not saying that their heart is devoid of love. I do believe that good people can do bad things, but that does not mean that they are 100% bad. There are many happy memories that I will cherish, many times where I felt as though I had found the right person for me, that I had found my soulmate. And I will never forget or regret all the times we spent together in each other’s company, content and not wanting for a single thing. In the beginning we spent hours just talking about anything; I would laugh and laugh and feel as though I was the luckiest person in the world. I had never smiled bigger. I had prayed for happiness and God had given me what I had wanted, with the addition of another person who could love me with all their heart. My point is, I had never known joy like it, but I had also never felt heartache like it. The highs and the lows were deafening. But the highs were there, so I did believe that they were worth all the lows.

I feel guilt even after the breakup, so much so that I felt sick with grief and guilt because of what I had done. I was the one who ended things completely (although we do not agree on that detail), and as such I held a lot of guilt for being the one to turn my back on the relationship, so to speak. This guilt continued as I tried to be friends with them, all coming to a head when a talk in a coffee shop led to a screaming match and them storming off. It continued further in a text message conversation where I was made to feel guilty about the fact that they are “crying all the time”, worrying that they will never “be happy again” or “fall in love again” or “be intimate with anyone ever again”. In one of the last text messages, they apologised “if they’ve ever made me feel guilty”. I responded with an appropriate “it’s not IF you ever did, you did.” It was very hard for me to have a backbone; I worried that I was being too harsh for days after the fact. Suffice it to say, we no longer speak. I even feel guilty for having written this, for even considering putting it online for others to see. I suppose there is no reason for me to put this online, but perhaps I need to. Perhaps it will help someone else who may be in a relationship where they are objectively happy, but they are worried about things. Or someone who does not know if they are being manipulated. Perhaps this can help someone take the first step towards leaving an unhappy relationship. Perhaps this can help someone talk to friends and family, or someone they trust, about their worries.

The thing I must stress is that there is no blanket definition for a manipulative relationship, but there are a multitude of resources that can help you take the right steps if you are worried or scared in your relationship.

All of this leads me to say that I am hoping not to have anger anymore, and not to hold on to the things that keep me awake at night, the things that I worry about for future relationships. I am scared to even entertain the idea of being with another person, of someone knowing the ins and outs of my life, scared to smile for fear that I’m doing something wrong or disappointing someone. I can let go of the anger, but it’s the anxiety that I don’t think I can let go of. Or the immense sadness that I feel when I hear their name, the nauseous feeling I get when I remember the bad times, or the happiness I felt when I woke in the middle of the night to see them next to me. My bed feels bigger somehow, like there’s too much room. No longer am I cramped up against a wall, but habit dictates that I somehow wake up pinned against it or with my arms outstretched, expecting to feel another body next to me. I almost feel guilty about the fact that I am dating: no dates just yet, but I am speaking to people and expanding my horizons. I yearn for one person, but it is wrong for me to do so, and detrimental to the both of us, for I was not the best girlfriend; I had flaws of my own, which I will not deny at all. I feel the anger inside of me, but it’s not healthy for me to dwell on it. I have felt the waves of anger crash and riddle my mind with thoughts, but the best thing for me to do is to let it pass through, like a train not due to make a stop. I can see the thoughts and anger and recognise that they are there, but they do not need to be so prevalent within me. I have made my peace with everything that has happened, and I have learnt some very hard lessons. The only person I need to please now is myself, as much as it may hurt me to do so, as I am a massive people pleaser.

So, even though I was in a manipulative relationship, it does not define me. It does not have to be my story; I don’t need to think about it ever again if I do not wish to. But what I will think about is red flags, and what I will do is listen to the people I love and consider their opinion of anyone I wish to bring into my life. Most importantly, I will listen to myself and not let myself get into this position again, being much more careful about the people I allow to become close to me and recognising that there are warning signs. I may miss the signs as I fall for this hypothetical person, but hopefully, as I listen to my friends and to my own gut, I won’t ignore them when they scream out at me. My manipulative relationship was full of great times as well as bad times, and so I didn’t realise that it was happening; it does not have to be all tears and arguments for it to be manipulative, or controlling, or abusive.

I finish this with something I read as I was researching: anyone can fall into a manipulative relationship, no matter how smart, savvy or feminist you are, and realising that you’re in one doesn’t make you any less smart, savvy or feminist. It is not a reflection on me that this happened, and it is not my fault that this happened. It will never be my fault.

6 notes


Psychopolitics and Surveillance Capitalism

I queued this post quite a while ago and it posted last night while I was asleep. I’m reposting because I’ve been thinking about this a bit more since I first saw it. I’ve shortened the original quote here:

[H]ealing ... refers to self-optimization that is supposed to therapeutically eliminate any and all functional weakness or mental obstacle in the name of efficiency and performance. Yet perpetual self-optimization ... amounts to total self-exploitation. [...] The neoliberal subject is running aground on the imperative of self optimization, that is, on the compulsion always to achieve more and more. Healing, it turns out, means killing.

I also had a look at this review. From the review: “[W]hat capitalism realised in the neoliberal era, Han argues, is that it didn’t need to be tough, but seductive. This is what he calls smartpolitics. Instead of saying no, it says yes: instead of denying us with commandments, discipline and shortages, it seems to allow us to buy what we want when we want, become what we want and realise our dream of freedom. ‘Instead of forbidding and depriving it works through pleasing and fulfilling. Instead of making people compliant, it seeks to make them dependent.’

I’m adding a break because this got long.

(review, cont’d)

And, while not Orwellian, we net-worked moderns have our own Newspeak. Freedom, for instance, means coercion. Microsoft’s early ad slogan was “Where do you want to go today?”, evoking a world of boundless possibility. That boundlessness was a lie, Han argues: “Today, unbounded freedom and communication are switching over into total control and surveillance … We had just freed ourselves from the disciplinary panopticon – then threw ourselves into a new and even more efficient panopticon.” And one, it might be added, that needs no watchman, since even the diabolical geniuses of neoliberalism – Mark Zuckerberg and Jeff Bezos – don’t have to play Big Brother. They are diabolical precisely because they got us to play that role ourselves.

At least in Nineteen Eighty-Four, nobody felt free. In 2017, for Han, everybody feels free, which is the problem. “Of our own free will, we put any and all conceivable information about ourselves on the internet, without having the slightest idea who knows what, when or in what occasion. This lack of control represents a crisis of freedom to be taken seriously.”

“Did we really want to be free?” asks Han. Perhaps, he muses, true freedom is an intolerable burden and so we invented God in order to be guilty and in debt to something. That’s why, having killed God, we invented capitalism. Like God, only more efficiently, capitalism makes us feel guilty for our failings and, you may well have noticed, encourages us to be deep in immobilising debt.”

I think I’m going to get this book. It would make a great pairing with The Age of Surveillance Capitalism by Shoshana Zuboff. (I’ve linked to a review; the book is available on Amazon and elsewhere.) I have that book but haven’t read it yet. Think about this:

“Surveillance capitalism unilaterally claims human experience as free raw material for translation into behavioural data. Although some of these data are applied to service improvement, the rest are declared as a proprietary behavioural surplus, fed into advanced manufacturing processes known as ‘machine intelligence’, and fabricated into prediction products that anticipate what you will do now, soon, and later. Finally, these prediction products are traded in a new kind of marketplace that I call behavioural futures markets. Surveillance capitalists have grown immensely wealthy from these trading operations, for many companies are willing to lay bets on our future behaviour.”

From the review: “The combination of state surveillance and its capitalist counterpart means that digital technology is separating the citizens in all societies into two groups: the watchers (invisible, unknown and unaccountable) and the watched. This has profound consequences for democracy because asymmetry of knowledge translates into asymmetries of power. But whereas most democratic societies have at least some degree of oversight of state surveillance, we currently have almost no regulatory oversight of its privatised counterpart”.

Part of my job is related to the regulatory oversight of the private sector, and I definitely think that it is an absolute mess. Countries have vastly different rules, but data doesn’t respect borders. Different countries have different goals. The EU’s data laws protect the individual. China’s data laws protect the state. The US’s data laws protect the economy. (With a few exceptions, the laws are really about what can be monetized and what can’t.)

So what is an individual supposed to do? I struggle with the best way to protect my own privacy and personal data, and to teach my teens to do the same, let alone put it into a socio-political context.

I don’t think it’s possible to completely opt out of the surveillance and still participate in modern life. It’s a bit easier for old people like me to opt out, but I see younger people whose peer groups socialise to a huge extent through apps and phones (Snapchat, Instagram, etc.). The problem is that if they are not on these platforms, they are to a very large extent excluded from social life, and humans are social animals. It’s not healthy for them to be isolated.

OTOH, we can make some choices. For example, I have a Facebook account (I have 3, actually), but the one with my real name is just for an online course that uses a FB group for discussion. One is for testing. One is my “real” account, which does not use my real name, where I keep in touch with family, since I live half a world away. I log out every time I use it. I never gave FB my phone number, location, workplace, hometown, etc. I opted out of any advertising that I could, particularly adverts using my own Likes. I opted out of all third-party platforms so I cannot accidentally log into a third-party site with FB. I do not upload photos of my children. I cannot be tagged. I opted out of facial recognition. I check settings once a week in case they are “accidentally” reset, and again after upgrades and so forth. I don’t use FB Messenger. I don’t use the FB app. I log out and clear my cache and cookies regularly. I download all of my FB data from time to time (I think a lot of people did this after the Cambridge Analytica scandal) and check that it’s accurate and that I’m OK with what’s out there. (btw, one of my professional highlights was writing about Cambridge Analytica in 2017, before the scandals broke in early 2018, w00t.)

Also, I do not have any Google accounts. At all. I don’t use Gmail. I cannot sign into Google Maps. If someone sends me a Google Doc for editing, I ask for a copy, edit it and send it back. (This is rarely an issue, though; I think it’s happened twice.) I used to have a YouTube account, and when they changed the settings to log in with a Google account rather than just an email, I created an account on a separate computer, logged in, deleted all of my videos, deleted my YouTube account, then deleted my Google account and cleared my cookies and cache. I think this was 2008.

But truth be told, this is not much. I know that. Amazon knows which Audible books I listen to, which Kindle books I read, and which paperbacks I buy. It goes on and on.

Is there a balance? Are our only choices to opt in (submit) to this surveillance or to live off the grid? This isn’t simply a matter of updating data privacy laws. The issues that need to be resolved underpin the entire economy and political order.

Food for thought, anyway. (So how’s your quarantine going?)


A Bountiful Harvest

Hey there, the sins of Mary Poppins. Let's see... I'm still holding that MLP review hostage. Really, trust me on this one! But what to do in the meantime? We've done a bunch of Suicide Squad lately, so let's do the other one. Anyone remember the other one~?

Here's the other one's cover:

Hey, I said we weren't doing Suicide Squad this week! Nice and wintery for the holidays, though, right? Can't be great for Croc. Also, whose hand is that in the foreground? Enchantress and Katana aren't in this issue, and everyone else is accounted for. No one wears bracelets like that. Whose hand is this??

So we open in the Arctic, I presume. It says "somewhere on the top of the world", so it sounds plausible. Killer Croc and Captain Boomerang are complaining about the weather, but Jason and Artemis are finally in agreement on something: it's just snow, suck it up, weenies. Gotta say, the Arctic is not just snow, honestly. What sad times are these when I have to agree with anything Boomerang says. Bizarro tells them all to quieten up a bit, everything's fine. As a side note, this issue is titled "#MissionCreep". So you know, that's annoying. Bizarro heat-visions open a spot in the ground, revealing the secret entrance to Harvest's base. Oh yeah, that's what we were doing~

Even once inside, the Suicide Squad continues to complain, to Artemis' (and the reader's) continued irritation. There's too much interference to contact Waller, so they're on their own. Bizarro quickly divides them up into teams to save time. Jason and Croc will go to find any abandoned resources, Artemis and Harley are to look for survivors, and Deadshot, Bizarro, and Boomerang will shut down the energy core before it explodes. Deadshot grouses that Bizarro's not the boss of them (and he's not so big), but Harley is the actual team leader, and she chooses to defer to Bizarro. So, I guess suck it, Deadshot~

Now here's a bit I like quite a lot. Croc and Jason get to chatting as they go off to their destination, and Jason asks how Roy is. The fact that Killer Croc is Roy's Alcoholics Anonymous sponsor continues to be one of my most favourite things in comics, and I'm glad it still comes up every now and again. Croc tells Jason that Roy calls him up whenever he's thinking about drinking, which is unfortunately frequent these days. Jason agrees that it doesn't sound healthy, but they are interrupted as they find something else unhealthy: the body of Harvest. Yes, in spite of his near omnipotence in The Culling, Harvest has been killed and crucified. Jason is concerned, but Croc says it's none of their business and they should just grab the loot and go. Harvest pissed off someone fierce, and my guess is it's probably anyone who actually read The Culling~

Meanwhile, Artemis and Harley do some unnecessary acrobatics to get to where they're going. Harley keeps blathering on, as she does, and Artemis gets more and more irritated, as she does. Artemis stops Harley from going any further, mostly so she can use her vocabulary word for the day, "sacrosanct". Look it up~! Before anything actually interesting can happen on their front, the scene shifts over to Bizarro and his pals, who are in front of an enormous laser grid. Deadshot and Boomerang wonder why he even needs them, and Bizarro replies that Harvest built his base to keep it safe from Superboy, so obviously he can't go in there. He'll tell the other two what to do, and if they follow his instructions, they might not even die~

Back in the storeroom, Jason is geeking out about the cool guns. Croc suddenly gets rather philosophical and asks Jason if doing damage is really what he cares about. He thinks Jason's a good kid who's trying to appear bad. But if you continue down a path of pretending to be bad, you'll actually come out bad. Croc tells him to remember that he has friends like Artemis and Bizarro, and even Roy and Starfire. Jason's very quiet for a while, and then asks if Croc wants to go halvsies on these weapons. Croc says halvsies is fine. Good stuff~

Artemis and Harley, however, aren't getting along quite as well. Artemis choke-slams Harley into a wall for disrespecting the ground on which kids have died. Harley says that she doesn't have the freedom to believe in things like sanctity. Artemis drops her, then sits down to pray for the dead. Harley comes up behind her like she's going to hit Artemis with her mallet. Without even opening her eyes, Artemis tells her if Harley hits her, she will shove that hammer right up her arse. I'm not even exaggerating that, it's literally what she says. Harley drops her hammer and pouts, while also complaining "promises, promises". Harley, don't be a weird pervert.

As Boomerang and Deadshot dodge and weave through the lasers, they notice that Bizarro has actually already left. Wow, even he couldn't stand to be around Boomerang's whinging. They shoot out the core with their respective weapons, and it explodes. As soon as it's gone, Bizarro suddenly reappears, putting his arms around both their shoulders, and apologising for leaving. See, the room was also putting out Kryptonite wavelengths, so he didn't want to hang around. In spite of doing nothing and complaining and threatening Bizarro while he's gone, Boomerang replies "No worries, mate" as soon as he's back, which is all of Captain Boomerang's personality in a nutshell. Well, at least he didn't shit himself this issue~

And... that's about it! The core is destroyed, and everyone just returns to Belle Reve via Bizarro's magic door. Waller doesn't let the Outlaws into the prison, though. At least, not until they've actually been arrested and interned under her care, anyway. She got some weapons out of it and the world isn't destroyed, but she's no babysitter. The Outlaws don't get a word in edgewise, and just go home. In an epilogue, while Jason catalogues his new cache, Artemis enters and continues to raise concerns about Bizarro's new behaviour. Jason replies that she just kind of misses that Bizarro doesn't need them to look after him anymore. She says this is the only part of this she does like. Bizarro's grown up, and the comic ends by showing us he's even brooding on rooftops now~

It’s not a bad issue, really. The Suicide Squad gang is honestly more enjoyable interacting with other people than they ever are with just each other. The stuff with Croc and Jason is really good character work, and I love that they bond over their mutual friendship with Roy. Also, Harvest was so annoying that I don’t even care that he died off panel. Or maybe it was back in Teen Titans. Who cares~? Anyways, it’s good stuff, and if they did more character pieces like this, Suicide Squad might actually be enjoyable. Reap the benefits of crossovers, guys~!


The Daily Tulip

The Daily Tulip – News From Around The World

Monday 27th August 2018

Good Morning Gentle Reader…. I hope you slept well and have woken full of the joys of summer… The meteors are quite beautiful this morning, I stood and watched with Bella as streaks of fire zipped across the night sky, then together we walked back to the house, Bella thinking about the cookie I promised her and me looking forward to the fresh Colombian Coffee that was brewing while we walked, it’s 3 years ago that we lost Sadie and almost 5 years since Mackie left us, but I believe they still walk the town with us in the mornings……So before I start getting all maudlin on us, let’s take a look at what’s happened in this mixed up world we call Earth….

DRUG TUNNEL RAN FROM OLD KFC IN ARIZONA TO MEXICO BEDROOM…. US authorities have found a secret drug tunnel stretching from a former KFC in the state of Arizona to Mexico. The 600ft (180m) passageway was in the basement of the old restaurant in San Luis, leading under the border to a home in San Luis Rio Colorado. Authorities made the discovery last week and have arrested the southern Arizona building's owner. They were alerted to the tunnel after the suspect, Ivan Lopez, was pulled over, according to KYMA News. During the traffic stop, police dogs reportedly led officers to two containers of hard narcotics with a street value of more than $1m in Lopez's vehicle. Investigators say the containers held 118kg (260lb) of methamphetamine, six grams of cocaine, 3kg of fentanyl, and 21kg of heroin. Agents searched Lopez's home and his old KFC, discovering the tunnel's entrance in the kitchen of the former fast-food joint. The passageway was 22ft deep, 5ft tall and 3ft wide, and ended at a trap door under a bed in a home in Mexico, said US officials. The drugs are believed to have been pulled up through the tunnel with a rope. This is not the first such discovery - two years ago a 2,600ft tunnel was found by authorities in San Diego, California. Authorities said it was one of the longest such drug tunnels ever discovered, used to transport an "unprecedented cache" of cocaine and marijuana. In July alone, US Border Patrol seized 15kg of heroin, 24lbs of cocaine, 327kg of methamphetamine and 1,900kg of marijuana at border checkpoints nationwide… Comment: Now we know what the “Secret” ingredient is in the Col’s recipe…..

TRUMP ADMINISTRATION 'CONSIDERS FUNDING GUNS IN SCHOOLS'…. The Trump administration is considering allowing schools to access federal education funding to purchase guns for teachers, US media report. The Department of Education (DoE) is looking at allowing states to use academic enrichment funds for firearms, the New York Times first reported. The federal grant being considered for this purpose is one that does not specifically prohibit buying weapons. Congress forbids using federal funds for school safety to purchase weapons. DoE spokeswoman Elizabeth Hill told CBS News: "The department is constantly considering and evaluating policy issues, particularly issues related to school safety." "The secretary nor the department issues opinions on hypothetical scenarios," she added… Comment: I cannot think of a more irresponsible action on behalf of a government…

AUSTRIA REJECTS 'GIRLISH' IRAQI ASYLUM SEEKER…. Austrian officials rejected an Iraqi migrant's asylum application because he was too "girlish", local media say. The 27-year-old's claim to be gay was deemed "unbelievable", in part due to his behaviour, according to reports. He can appeal against the decision. It comes just days after Amnesty International criticised Austria's asylum processes as "dubious". The government has hit back at the criticism, saying its asylum officials work appropriately. In the latest case, the Iraqi asylum seeker was felt to exhibit "stereotypical, in any case excessive 'girlish' behaviour (expressions, gestures)", which seemed fake, Austria's Kurier newspaper reported. Said to be an active member in local LGBT groups, he is understood to have fled Iraq in 2015, fearing for his life. However a spokesman for Austria's asylum office said the decision had been reviewed, and rejected the accusation it contained any "clichéd phrasing" by officials in Styria state, Kurier added. It is the second controversial asylum case in recent days. Last week, activists said that an 18-year-old Afghan asylum seeker had his application rejected because he did not "act or dress" like a homosexual. "The inhuman language in asylum claims does not conform with the requirements of a fair, rule-of-law procedure," Amnesty International said in a report. Interior Ministry spokesman Christoph Poelzl also rejected the accusation officials used "inhuman" language, telling news agency AFP that all employees who assess asylum claims receive training. However, the official involved in the Afghan asylum seeker's case is no longer involved in assessing applications, he added. Austria is currently run by a coalition of the conservative People's Party and the far-right Freedom Party, which came to power following an election dominated by Europe's migrant crisis last year. 
Comment: Immigration should not be based on “Sexual Preference” especially when determined by the “Far Right” party…

CHINA ARRESTS OVER TANG DYNASTY RELIC THEFTS…. Chinese police have arrested 26 people suspected of stealing relics from an ancient burial site. The gang allegedly seized almost 650 objects, including gold and silver cutlery and jewellery, from the Dulan Tombs, which lie on the ancient Silk Road in northwest China. The stolen items date back to the 7th Century, the Chinese Ministry of Public Security said in a statement. The suspects allegedly tried to sell them for about $11m (£7.8m). The objects were said to have been illegally excavated from the tombs, located in the north-western province of Qinghai. Silk, gold, silver, bronze ware and other items have been unearthed at the tombs, of which there are more than 2,000, since 1982. Experts believe that many of the items are of huge historical value as they show cultural exchanges and interactions between East and West during the early Tang Dynasty (618-907). Following the arrests, police will increase their crackdown on cultural relics crimes to better protect the country's cultural heritage, the Chinese government said. (See photographs at https://www.facebook.com/groups/OurPastBeneathOurFeet/ )

INDIA'S 'BIGGEST' PET RESCUE OPERATION IN KERALA FLOODS…. When rescuers in India's flood-ravaged southern state of Kerala reached a flooded hut in the city of Thrissur, the couple living there refused to leave without their 25 dogs. The water was rising, and the dogs were huddled on a single bed. The rescue workers had arrived on boats, and Sunitha, who uses only one name, flatly told them she and her husband would not leave without their stray and abandoned pets. "Our neighbours had been moved to schools and camps nearby. Rescue workers said that we could not bring our dogs to the relief camp," she said. So the workers went back and got in touch with an animal rescue group. Sally Varma of Humane Society International told the BBC that their volunteers arrived soon, and arranged for the dogs to be taken to a special shelter for affected animals. Ms Varma said she has started a fundraiser for the family and its pets so a kennel could be built at their home after the floods recede. Nearly 400 people have died in the worst flooding Kerala has witnessed in a century. Thousands remain stranded. More than one million people have been displaced, with many of them taking shelter in thousands of relief camps across the state. But what is striking is how hundreds of animals are being rescued in the affected areas. In what appears to be one of the biggest animal rescue operations during a natural calamity in India, hundreds of volunteers and animal rescue workers have travelled to flood-affected areas. Social media is awash with dramatic rescue videos: a rescuer removing his life jacket and putting it on a Labrador to help it swim to higher ground; drenched dogs being taken out of flooded homes and kennels; and country boats and inflatable rafts carrying dogs, goats and cats to safety. 
Rescuers have waded through water, and travelled on boats and rafts to treat, feed and rescue hundreds of animals - dogs, cats, goats, cows, cattle, ducks, and even snakes - as the waters have begun receding. Trucks with animal feed and medicines are reaching affected districts. Some animals have been moved to shelter camps, and others to higher ground. A number of animal rescue help lines have been set up, and rescuers are using WhatsApp and social media to respond to calls. "We are getting more than 100 calls a day on our helpline. The number of animals that have been moved to higher ground and rescued must be in hundreds," Anand Shiva of Kerala Animal Rescue said.

Well, Gentle Reader, I hope you enjoyed our look at the news from around the world this morning… …

Our Tulips today are from India, where the Tulip garden, with the mighty Zabarwan range of mountains as its backdrop, was thrown open to the public on Sunday by Minister for Floriculture Javid Mustafa Mir.

Asia’s largest tulip garden, which has about 12.5 lakh tulips of 50 varieties in its lap on the banks of world famous Dal Lake in the summer capital, Srinagar, marked the beginning of new tourism season in the Valley.

A Sincere Thank You for your company and Thank You for your likes and comments. I love them and always try to reply, so please keep them coming, it's always good fun. As is my custom, I will go and get myself another mug of "Colombian" Coffee and wish you a safe Monday 27th August 2018 from my home on the southern coast of Spain, where the blue waters of the Alboran Sea wash the coasts of Africa and Europe and the smell of the night-blooming Jasmine and Honeysuckle fills the air…and a crazy old guy and his dog Bella go out for a walk at 4:00 am…on the streets of Estepona…

All good stuff....But remember it’s a dangerous world we live in

Be safe out there…

Robert McAngus #Spain #India #China #USA #Bella


I always feel weirded out when I see transgirls writing about how much they distrust/dislike men, because it seems like the kind of thing one might do if you’re trying to distance yourself from men in other people’s eyes, so I can never tell if it’s due to actual antipathy vs throwing men under the bus because it’s hard to have people take your gender seriously otherwise.

Which is shitty and definitely cisnormativity fucking your shit, because you shouldn’t have to hate a group of people to convince others to stop counting you as part of them. And also doing so hurts the population you’re trying to distance yourself from. And like the very direct harm of being misgendered is bad enough that I’ll forgive transgirls the dispersed harm of saying “All men are bastards” or something, but it’s still not very nice.

Anyway, that wasn’t what I was going to write. Writing while high is mostly hard for memory reasons because it’s hard to follow the same train of thought. But yeah:

I’m starting to think I might have a little bit of the same kind of sexism? But like not endorsed or anything. I don’t think men are bad in some fundamental way. I think men are scary because I don’t understand them. I have no fucking clue what’s going on inside because that’s not the thought pattern that comes naturally to me.

Like I worked really hard to learn how to pass as male to not get stabbed or something and the whole time it was super behaviourist. Like “Oh, yeah, men talk like this. Why? ¿¿¿¿Who knows????”. So just modeling at the behavioural level and ending up with the cached thought that men are Inherently Mysterious and will Never Be Understood.

And that’s obviously just me with my biases but it does lead to a “Men are SCARY” thing because what are they even thinking right now??? I usually feel like I know what the women around me think, which might be somewhat overconfident but w/e. I feel like I’ve probably got a handle on shit and nothing unexpected will happen. I don’t feel that way around guys.

Under this line should be skipped since it won’t make any sense to you and will only be recognisable to me to see when I’m sober.

And I think that is what most explains why I’m more afraid of men in [situation] than women in [situation]. Because I expect that, if a woman intends malice, I’ll at least recognise it and know what to do. But how do I know if a man intends malice? Maybe he did from the very start? How do I know? How do I react?

Unfortunately, distributions of the how mean that men are more likely to be in [situation] than women, so as a whole I’m just 100% worried about and don’t want to deal with [situation]. Besides, I don’t want trying things that could be explody so nvm. Like, what do I do if it still doesn’t work? Say that? And die I guess. ‘sides, “women only” is one of the wrong rules as has been well documented in History by everyone.

So this doesn’t help one bit and I honestly shouldn’t have written it down but it seemed important to have in words.


Version 425

Downloads: youtube · windows (zip, exe) · macOS (app) · linux (tar.gz)

I had a good week. I optimised and fixed several core systems.

faster

I messed up last week with one autocomplete query, and as a result, when searching the PTR in 'all known files', which typically happens in the 'manage tags' dialog, all queries had 2-6 seconds of lag! I figured out what went wrong, and now autocomplete should be working fast everywhere. My test situation went from 2.5 seconds to 58ms! Sorry for the trouble here, this was driving me nuts as well.

I also worked on tag processing. Thank you to the users who have sent in profiles and other info since the display cache came in. A great deal of overhead and inefficiency has been removed, so tag processing should be faster in almost all situations.

The 'system:number of tags' query now has much better cancelability. It still wasn't great last week, so I gave it another go. If you do a bare 'system:num tags > 4' or something and it is taking ages, stopping or changing the search should now just take a couple seconds. It also won't blat your memory as much, if you go really big.

And lastly, the 'session' and 'bandwidth' objects in the network engine, formerly monolithic and sometimes laggy objects, are now broken into smaller pieces. When you get new cookies or some bandwidth is used, only the small piece that is changed now needs to be synced to the database. This is basically the same as the subscription breakup last year, but behind the scenes. It reduces some db activity and UI lag on older and network-heavy clients.

better

I have fixed more instances of 'ghost' tags, where committing certain pending tags, usually in combination with others that shared a sibling/parent implication, could still leave a 'pending' tag behind. The reasons behind it were quite complicated, but I managed to replicate the bug and fixed every instance I could find. Please let me know if you find any more instances of this behaviour.

While the display cache is working ok now, and with decent speed, some larger and more active clients will still have some ghost tags and inaccurate autocomplete counts hanging around. You won't notice or care about a count of 1,234,567 vs 1,234,588, but in some cases these will be very annoying. The only simple fixes available at the moment are the nuclear 'regen' jobs under the 'database' menu, which isn't good enough. I have planned maintenance routines for regenerating just for particular files and tags, and I want these to be easy to fire off, just from right-click menu, so if you have something wrong staring at you on some favourite files or tags, please hang in there, fixes will come.

full list

optimisations:

I fixed the new tag cache's slow tag autocomplete when in 'all known files' domain (which is usually in the manage tags dialog). what was taking about 2.5 seconds in 424 should now take about 58ms!!! for technical details, I was foolishly performing the pre-search exact match lookup (where exactly what you type appears before the full results fetch) on the new quick-text search tables, but it turns out this is unoptimised and was wasting a ton of CPU once the table got big. sorry for the trouble here--this was driving me nuts IRL. I have now fleshed out my dev machine's test client with many more millions of tag mappings so I can test these scales better in future before they go live

internal autocomplete count fetches for single tags now have less overhead, which should add up for various rapid small checks across the program, mostly for tag processing, where the client frequently consults current counts on single tags for pre-processing analysis

autocomplete count fetch requests for zero tags (lol) are also dealt with more efficiently

thanks to the new tag definition cache, the 'num tags' service info cache is now updated and regenerated more efficiently. this speeds up all tag processing a couple percent

tag update now quickly filters out redundant data before the main processing job. it is now significantly faster to process tag mappings that already exist--e.g. when a downloaded file pends tags that already exist, or repo processing gives you tags you already have, or you are filling in content gaps in reprocessing

tag processing is now more efficient when checking against membership in the display cache, which greatly speeds up processing on services with many siblings and parents. thank you to the users who have contributed profiles and other feedback regarding slower processing speeds since the display cache was added

various tag filtering and display membership tests are now shunted to the top of the mappings update routine, reducing much other overhead, especially when the mappings being added are redundant
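The "filter out redundant data before the main processing job" idea can be illustrated with a small sketch. This is not hydrus code (hydrus is written in Python, and its real routine works against its database tables); it is a hypothetical JavaScript illustration, in the language used elsewhere on this page, that assumes mappings arrive as `[tagId, fileId]` pairs and that the mappings already stored are available as a `Set` of `"tagId:fileId"` keys. `filterRedundantMappings` is an invented name.

```javascript
// Hypothetical sketch: drop mappings that already exist (or repeat within the
// batch) so the expensive update only sees genuinely new rows.
function filterRedundantMappings(incoming, existingKeys) {
  const seenInBatch = new Set();
  const result = [];
  for (const [tagId, fileId] of incoming) {
    const key = `${tagId}:${fileId}`;
    // Skip if already stored, or already queued earlier in this batch.
    if (existingKeys.has(key) || seenInBatch.has(key)) continue;
    seenInBatch.add(key);
    result.push([tagId, fileId]);
  }
  return result;
}
```

The cheap set lookups run once per incoming row, so when a repository hands you mostly tags you already have, almost nothing reaches the costly part of the update.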

.

tag logic fixes:

I explored the 'ghost tag' issue, where sometimes committing a pending tag still leaves a pending record. this has been happening in the new display system when two pending tags that imply the same tag through siblings or parents are committed at the same time. I fixed a previous instance of this, but more remained. I replicated the problem through a unit test, rewrote several update loops to remain in sync when needed, and have fixed potential ghost tag instances in the specific and 'all known files' domains, for 'add', 'pend', 'delete', and 'rescind pend' actions

also tested and fixed are possible instances where both a tag and its implication tag are pend-committed at the same time, not just two that imply a shared other

furthermore, in a complex counting issue, storage autocomplete count updates are no longer deferred when updating mappings--they are 'interleaved' into mappings updates so counts are always synchronised to tables. this unfortunately adds some processing overhead back in, but as a number of newer cache calculations rely on autocomplete numbers, this change improves counting and pre-processing logic

fixed a 'commit pending to current' counting bug in the new autocomplete update routine for 'all known files' domain

while display tag logic is working increasingly ok and fast, most clients will have some miscounts and ghost tags here and there. I have yet to write efficient correction maintenance routines for particular files or tags, but this is planned and will come. at the moment, you just have the nuclear 'regen' maintenance calls, which are no good for little problems

.

network object breakup:

the network session and bandwidth managers, which store your cookies and bandwidth history for all the different network contexts, are no longer monolithic objects. on updates to individual network contexts (which happens all the time during network activity), only the particular updated session or bandwidth tracker now needs to be saved to the database. this reduces CPU and UI lag on heavy clients. basically the same thing as the subscriptions breakup last year, but all behind the scenes

your existing managers will be converted on update. all existing login and bandwidth log data should be preserved

sessions will now keep delayed cookie changes that occurred in the final network request before client exit

we won't go too crazy yet, but session and bandwidth data is now synced to the database every 5 minutes, instead of 10, so if the client crashes, you only lose 5 mins of login/bandwidth data

some session clearing logic is improved

the bandwidth manager no longer considers future bandwidth in tests. if your computer clock goes haywire and your client records bandwidth in the future, it shouldn't bosh you _so much_ now
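The "only save the piece that changed" pattern from this section, plus the rule of ignoring bandwidth stamped in the future, can be sketched as a small class. It illustrates the pattern only and is not hydrus's actual Python implementation; `BandwidthTrackers`, `reportUsage`, `usedInWindow` and `saveDirty` are invented names, and in a real client `saveDirty` would be driven by a periodic timer (every 5 minutes, per the notes above).

```javascript
// Hypothetical sketch: per-context usage records with dirty tracking.
class BandwidthTrackers {
  constructor() {
    this.usage = new Map(); // network context -> array of { time, bytes }
    this.dirty = new Set(); // contexts changed since the last save
  }

  reportUsage(context, bytes, time = Date.now()) {
    if (!this.usage.has(context)) this.usage.set(context, []);
    this.usage.get(context).push({ time, bytes });
    this.dirty.add(context); // only this context needs to be written out
  }

  // Bytes used in the last windowMs; entries stamped after `now` are ignored,
  // so a haywire clock can't block requests with "future" bandwidth.
  usedInWindow(context, windowMs, now = Date.now()) {
    let total = 0;
    for (const { time, bytes } of this.usage.get(context) || []) {
      if (time > now) continue;
      if (now - time <= windowMs) total += bytes;
    }
    return total;
  }

  // Persist only the trackers that changed, then reset the dirty set.
  saveDirty(saveOne) {
    let saved = 0;
    for (const context of this.dirty) {
      saveOne(context, this.usage.get(context));
      saved++;
    }
    this.dirty.clear();
    return saved;
  }
}
```

A save after a burst of activity on one site writes one small record instead of re-serialising every context the client has ever seen.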

.

the rest:

the 'system:number of tags' query now has greatly improved cancelability, even on gigantic result domains

fixed a bad example in the client api help that mislabeled 'request_new_permissions' as 'request_access_permissions' (issue #780)

the 'check and repair db' boot routine now runs _after_ version checks, so if you accidentally install a version behind, you now get the 'weird version m8' warning before the db goes bananas about missing tables or similar

added some methods and optimised some access in Hydrus Tag Archives

if you delete all the rules from a default bandwidth ruleset, it no longer disappears momentarily in the edit UI

updated the python mpv bindings to 0.5.2 on windows, although the underlying dll is the same. this seems to fix at least one set of dll load problems. also updated is macOS, but not Linux (yet), because it broke there, hooray

updated cloudscraper to 1.2.52 for all platforms

next week

Even if this week had good work, I got thick into logic and efficiency and couldn't find the time to do anything else. I'll catch up on regular work and finally get into my planned network updates.


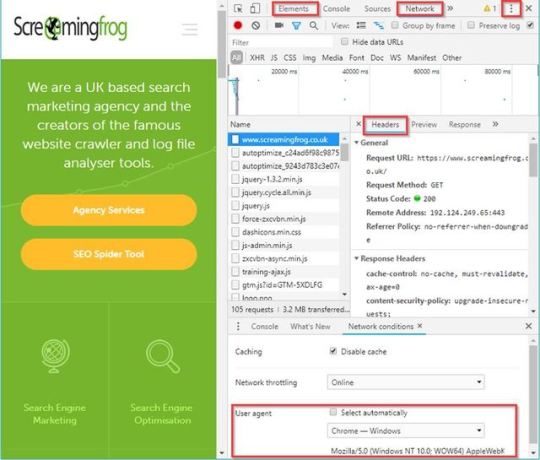

Drawing inspiration from stale-while-revalidate

There's an HTTP caching directive called 'stale-while-revalidate', which allows your browser to use a stale cached value while it refreshes its cache asynchronously under the hood.
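To make the directive concrete, here is a minimal sketch of the decision a cache makes for a response carrying something like `Cache-Control: max-age=60, stale-while-revalidate=300` (the directive is defined in RFC 5861). `parseCacheControl` and `cacheDecision` are hypothetical names, and the parser is deliberately simplified: it ignores quoted values and the other edge cases real browsers handle.

```javascript
// Simplified parser: "private, max-age=60" -> { private: true, 'max-age': 60 }.
// Quoted values and malformed input are not handled.
function parseCacheControl(header) {
  const directives = {};
  for (const part of header.split(',')) {
    const [rawName, rawValue] = part.trim().split('=');
    if (!rawName) continue; // skip empty segments, e.g. a trailing comma
    directives[rawName.toLowerCase()] = rawValue === undefined ? true : Number(rawValue);
  }
  return directives;
}

// What a cache may do with a stored response that is ageSeconds old.
function cacheDecision(ageSeconds, directives) {
  const maxAge = directives['max-age'] ?? 0;
  const swr = directives['stale-while-revalidate'] ?? 0;
  if (ageSeconds < maxAge) return 'fresh';                  // serve from cache, no request
  if (ageSeconds < maxAge + swr) return 'stale-revalidate'; // serve stale now, refresh in background
  return 'fetch';                                           // too old: wait for the network
}
```

With those directives, a copy that is two minutes old is past `max-age` but inside the 300-second window, so the browser can show it immediately and refresh it in the background.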

Making practical use of this setting within my projects proved futile, but it was enough to get me thinking: I want access to the up-to-date response when the browser receives it. I want to make an ajax call and just have my callback fire twice: once with a stale, cached response, and again when I receive up-to-date data. Lucky for me, this is actually pretty trivial to implement in basic JavaScript.

Use case:

I work on a SAAS product for managing inventory. The user's primary view/screen includes a sidebar with a folder structure, and each folder in the tree includes a count of the records beneath it. This sidebar is used for navigation, but the counts are also helpful to the user.

For power users, this folder structure can become very large, and counts can reach the hundreds of thousands. The folders that are visible to the user may depend on the user's permission to individual records, and there are a number of other challenging behaviours to this sidebar. In short, it can take some time for the server to generate the data for this sidebar.

Naturally, the best course of action would be to refactor the code to generate it faster. And I'm with you: refactoring and designing for performance are the ideal long-term solution. But there is a time and place for quick fixes. In our case, we're bootstrapping a SAAS, and fast feature implementation leads to winning and retaining customers.

One intuitive improvement we can make is to give users a more progressive loading experience. Rather than generating the entire page at once and sending it to the user as a single HTTP response, we generate a simpler response, and then fetch 'secondary' information like the sidebar via ajax.

```html
<div id='sidebar'> Loading... </div>
<script>
$(document).ready(function(){
    $.ajax({
        url: 'https://...',
        success: function(data){
            $("#sidebar").html(data)
            // ... other initialization
        },
    })
})
</script>
```

Now the user's browsing experience feels faster, with the primary portion of their page loading more quickly and the sidebar appearing some moments later. You do have a new user state to consider here: the user may interact with the page between the moment it loads and the moment the callback is called, so you'll want to make sure your callback doesn't cause any existing on-screen content to move. You can usually address that by ensuring your placeholder 'loading' content has the same dimensions as the content that will replace it.

Implementation

So how do we implement the idea of stale-while-revalidate? There are just a couple things to do:

1. Add headers to the ajax response, so the browser knows it can cache it.
   For example: Cache-Control: private, max-age=3600
2. Ensure that our callback can safely be called multiple times.
3. Perform the ajax call twice.
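For the first step (adding caching headers), here is a minimal sketch of the server side. The post doesn't say what the backend is written in, so this assumes a Node handler using the built-in http module's (req, res) signature; renderSidebarHtml is a hypothetical stand-in for the slow sidebar generation.

```javascript
// Hypothetical stand-in for the expensive sidebar generation.
function renderSidebarHtml() {
  return '<ul class="folders"><li>Warehouse (2)</li></ul>';
}

// Sketch of the sidebar endpoint, assuming Node's http (req, res) handler shape.
function handleSidebar(req, res) {
  const html = renderSidebarHtml();
  res.statusCode = 200;
  res.setHeader('Content-Type', 'text/html; charset=utf-8');
  // private: shared caches (proxies, CDNs) must not store it; the user's own browser may.
  // max-age=3600: the browser may treat its copy as fresh for up to an hour.
  res.setHeader('Cache-Control', 'private, max-age=3600');
  res.end(html);
}

// Wiring it up (not run here):
// require('http').createServer(handleSidebar).listen(8080);
```

The private directive matters because the counts are per-user: the response is fine to keep in that user's browser, but should never be served to anyone else from a shared cache.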

Ignoring step #2 for a moment, our new code looks like this:

```
<div id='sidebar'> Loading... </div>
<script>
  $(document).ready(function(){
    function sidebar_callback(data){
      $("#sidebar").html(data)
      // ... other initialization
    }

    // Request 1: may be answered straight from the browser's cache.
    $.ajax({
      url: 'https://...',
      success: sidebar_callback,
    })

    // Request 2: asks for a copy no older than 0 seconds, i.e. a fresh one from the server.
    $.ajax({
      url: 'https://...',
      success: sidebar_callback,
      headers: {'Cache-Control': 'max-age=0'},
    })
  })
</script>
```

That's it! Now, when the user loads the page, their browser will make two http requests. The first will (ideally) obtain a response from the local cache, and the second request will retrieve (and cache) a fresh copy from the server. The user sees the primary portion of the page load, followed almost immediately by the appearance of the sidebar, and finally the sidebar updates in-place to reflect any new changes/values.
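If you'd rather not rely on jQuery or on how the browser treats a Cache-Control request header, the Fetch API exposes the same idea through its cache request option. A sketch, assuming the same (elided) sidebar URL and a hypothetical render(html) function; fetchImpl is injectable only so the logic can be exercised outside a browser.

```javascript
// Sketch: stale-while-revalidate with the Fetch API's `cache` request option.
// render(html) and the URL are placeholders; fetchImpl is injectable for testing.
function loadStaleWhileRevalidate(url, render, fetchImpl = globalThis.fetch) {
  let freshRendered = false;

  const toText = (response) => {
    if (!response.ok) throw new Error(`HTTP ${response.status}`);
    return response.text();
  };

  // Request 1: use any cached copy, fresh or stale (network only on a cache miss).
  fetchImpl(url, { cache: 'force-cache' })
    .then(toText)
    .then((html) => {
      // On a cold cache both requests hit the network and may finish in either
      // order; never let this copy overwrite the fresh one.
      if (!freshRendered) render(html);
    })
    .catch(() => { /* harmless: request 2 is still on its way */ });

  // Request 2: revalidate with the server; the browser stores the result.
  return fetchImpl(url, { cache: 'no-cache' })
    .then(toText)
    .then((html) => {
      freshRendered = true;
      render(html);
    });
}
```

One difference from the jQuery version: 'force-cache' returns a cached copy even when it is past its max-age, whereas the first $.ajax call only uses the cache while the entry is still fresh. 'no-cache' makes the browser revalidate with the server (a 304 reuses the cached body) and update its cache either way.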

Unfortunately, there's step #2 to consider. Now that your callback function is running twice, you have a new state to consider: the user may interact with the site between callbacks. In the case of my folder structure, the user may have right-clicked on a folder to perform an action. My callback logic now needs to take that into careful account.
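One way to make the callback safe to call more than once, sketched under assumptions: apply(html) is whatever actually swaps the sidebar's DOM (e.g. the $("#sidebar").html(data) call plus re-initialization), and beginInteraction/endInteraction are hypothetical hooks you would call when, say, a folder's right-click menu opens and closes. Updates that arrive mid-interaction are held back, and identical HTML is skipped so a no-change refresh doesn't disturb the page.

```javascript
// Hypothetical wrapper that makes a sidebar callback safe to call repeatedly.
// apply(html) performs the real DOM update; it only runs when something changed
// and the user isn't in the middle of an interaction.
function createSidebarUpdater(apply) {
  let currentHtml = null; // what's on screen now
  let pendingHtml = null; // newest update that arrived while the user was busy
  let busy = false;       // e.g. true while a folder's context menu is open

  function update(html) {
    if (busy) {
      pendingHtml = html; // keep only the latest; older ones are obsolete
      return;
    }
    if (html === currentHtml) return; // nothing changed: leave the DOM alone
    currentHtml = html;
    apply(html);
  }

  return {
    update,
    beginInteraction() { busy = true; },
    endInteraction() {
      busy = false;
      if (pendingHtml !== null) {
        const html = pendingHtml;
        pendingHtml = null;
        update(html);
      }
    },
  };
}
```

Deferring is only one choice; another is to re-render immediately and then restore UI state (open menus, expanded folders, scroll position), which is more work but keeps the counts current while a menu is open.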

But it's worth the effort, because once you've got a callback that you can call multiple times, we're into a new paradigm, baby.

Taking it further

Once your JavaScript callback can safely be called multiple times, you suddenly have some new options.

You could periodically poll your ajax endpoint and update your DOM if anything has changed.

You could trigger a refresh of your ajax endpoint based on a user interaction, or an external signal from the server.
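Both options can share one refresh function. A sketch, assuming a hypothetical fetchSidebar() that returns a Promise of HTML and an onHtml callback that is safe to call repeatedly (step #2). Overlapping triggers are coalesced, so a poll tick and a signal arriving together don't fire two requests. EventTarget and Event are standard in browsers and in Node 15+.

```javascript
// One refresh function, triggered by a timer or by events.
function createRefresher(fetchSidebar, onHtml) {
  let inFlight = null; // coalesce overlapping triggers into one request

  function refresh() {
    if (!inFlight) {
      inFlight = fetchSidebar()
        .then(onHtml)
        .catch(() => { /* keep showing the last good copy */ })
        .finally(() => { inFlight = null; });
    }
    return inFlight;
  }

  // Option 1: periodic polling. Returns a function that stops it.
  function poll(intervalMs) {
    const timer = setInterval(refresh, intervalMs);
    return () => clearInterval(timer);
  }

  return { refresh, poll };
}

// Option 2: refresh when something signals a change (a UI action, or a
// message from the server), dispatched on any EventTarget.
function refreshOn(target, eventNames, refresher) {
  for (const name of eventNames) {
    target.addEventListener(name, () => refresher.refresh());
  }
}

// Example wiring (hypothetical URL, render function, and event name):
// const sidebar = createRefresher(() => fetch('/sidebar').then((r) => r.text()), render);
// const stopPolling = sidebar.poll(10000);
// refreshOn(document, ['new-notification'], sidebar);
```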

In my case, we implemented these improvements in stages, each one building off the last:

1. We built an MVP with strictly server-side rendering (no fancy JS frameworks).
2. When something got slow, we deferred its generation to an ajax call (simple jQuery).
3. Then we added this stale-while-revalidate idea.
4. We carried this idea over to a 'notifications' pane.
5. We added periodic polling for new notifications (poor man's realtime notifications).
6. We added some simple logic, so a new notification triggered a re-fetch of the sidebar (poor man's realtime sidebar).
7. We refactored the notification endpoint (server side) to use HTTP long-polling, to make our notifications actually realtime.
   Instead of asking the server for notifications every 10 seconds, the browser asks once, and the server intentionally hangs until a new notification exists (plus a bit of timeout/reconnection logic).
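The browser side of long-polling can be a small loop. A sketch under assumptions the post doesn't state: the endpoint returns 200 with a JSON notification when one exists, or 204 when its own server-side timeout expires with nothing new, and failures back off exponentially (capped at 30 seconds) before reconnecting. fetchImpl is injectable only for testing.

```javascript
// Sketch of a long-polling client loop; returns a function that stops it.
function startLongPolling(url, onNotification, fetchImpl = globalThis.fetch) {
  let stopped = false;
  let controller = null;

  async function loop() {
    let retryDelayMs = 1000;
    while (!stopped) {
      controller = new AbortController();
      try {
        // The server deliberately holds this request open until there's news.
        // no-store: never let the HTTP cache answer a long-poll.
        const response = await fetchImpl(url, { cache: 'no-store', signal: controller.signal });
        if (!response.ok) throw new Error(`HTTP ${response.status}`);
        if (response.status !== 204) onNotification(await response.json());
        retryDelayMs = 1000; // healthy round trip: reconnect immediately
      } catch {
        if (stopped) return; // stop() aborted the request
        // Network or server error: back off before reconnecting.
        await new Promise((resolve) => setTimeout(resolve, retryDelayMs));
        retryDelayMs = Math.min(retryDelayMs * 2, 30000);
      }
    }
  }

  loop();
  return function stop() {
    stopped = true;
    if (controller) controller.abort();
  };
}
```

Usage would look something like startLongPolling('/notifications/wait', showNotification), where both the URL and showNotification are hypothetical. Because only one request is ever open, the server decides when the browser hears about something, rather than a fixed 10-second timer.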

Taking this incremental approach let us adapt and iterate quickly through the early stages of the product. For all of its functionality, very little logic was happening client-side, so we could get away with only hiring junior backend developers as we grew. Now that the SAAS has a strong user base, a wealth of real-world usage data, a deep understanding of its users' pain points, and the ability to raise funding easily, we're ready to hire a senior front-end developer to help us rewrite the front-end as a single-page app. The UI is ready for a major refactor anyway, so we can get two birds stoned at once.

Reflecting

Unless you're Google, you probably don't need to worry about performance until it rears its head. Don't polish a turd, and avoid gold-plating. Instead, choose simple, boring technology to solve the problem you currently face. Keep your code DRY, and remember that every minute spent thinking about design saves an hour of misdirected effort.

A warm embrace to you, fellow wanderer.


Aurora multi-Primary first impression