Hello! I just saw your cat colors master post and you seem very knowledgeable on the subject, so I have a question. I was reading about the O/o gene for a little school assignment on X-linked genes. Since male cats only need one X to show orange or black, wouldn't there be more orange and black male cats than female cats? I always hear people say an orange cat is more likely to be male than female, but I never hear people say a black cat is more likely to be male than female. Is there some way for cats to be black that isn't linked to the O gene that changes the ratio of female to male black cats? Or are people just not noticing the ratio in black cats vs. orange cats for some reason?

Yes, you're right: there are more black males than black females, because the tortoiseshells reduce the numbers of both black and red females.

Let's calculate the ratios. Let p be the frequency of the black allele and q the frequency of the red allele. (This is also their probability: if you choose a random allele from the cat population, it'll be black with probability p and red with probability q.)

Black male: p/2 (1/2 of all cats are males, and a male carries the black allele with probability p, in which case he is black)

Red male: q/2 (similarly)

Black female: p²/2 (both of her alleles need to be black, so we need to choose twice)

Red female: q²/2 (similarly)

Tortoiseshell female: pq (we need to choose one black and one red allele, and we can do it in two orders, so this is the simplified form of 2pq/2)

Let's check: p/2 + q/2 + p²/2 + q²/2 + pq = (p+q+p²+q²+2pq)/2 = 1, because p+q=1 and p²+2pq+q²=(p+q)²=1²=1. Good.

What could the frequency of the red allele be? I don't know, but for the sake of simplicity let's say it's 0.2 (so every fifth male cat is orange).

Black male: p/2=0.8/2=0.4 (40%)

Red male: q/2=0.2/2=0.1 (10%)

Black female: p²/2=0.8²/2=0.64/2=0.32 (32%)

Red female: q²/2=0.2²/2=0.04/2=0.02 (2%)

Tortoiseshell female: pq=0.8*0.2=0.16 (16%)
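The numbers above can be reproduced in a few lines of Python. This is a minimal sketch assuming, as the post does, that half of all cats are male and that alleles pair up at random. The function name `phenotype_frequencies` is just an illustrative choice.

```python
def phenotype_frequencies(q: float) -> dict:
    """Expected share of each coat-colour class for the X-linked O/o locus.

    q is the red (O) allele frequency and p = 1 - q the black (o) one.
    Assumes half of all cats are male and alleles pair at random.
    """
    p = 1.0 - q
    return {
        "black male": p / 2,              # one X, carrying o
        "red male": q / 2,                # one X, carrying O
        "black female": p * p / 2,        # o on both X chromosomes
        "red female": q * q / 2,          # O on both X chromosomes
        "tortoiseshell female": p * q,    # one O and one o: 2pq/2
    }


freqs = phenotype_frequencies(0.2)
for name, share in freqs.items():
    print(f"{name}: {share:.0%}")

# The five classes always cover every cat, whatever q is.
for q in (0.05, 0.2, 0.5, 0.9):
    assert abs(sum(phenotype_frequencies(q).values()) - 1.0) < 1e-12
```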

So the male:female ratio in red cats is 10:2 = 5:1 (for every five males there's one female), while in black cats it's 40:32 = 5:4 (for every five males there are four females).

You can see why the imbalance is much easier to notice in orange cats.
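The calculation generalizes. Within a solid colour, the number of males per female is (q/2)/(q²/2) = 1/q for red and (p/2)/(p²/2) = 1/p for black. Here is a minimal Python sketch under the same assumptions as above; the function name is illustrative.

```python
def males_per_female(q: float) -> tuple:
    """Males per female among solid red and solid black cats.

    q is the red-allele frequency, p = 1 - q.
    Red: (q/2) / (q**2/2) = 1/q.  Black: (p/2) / (p**2/2) = 1/p.
    """
    p = 1.0 - q
    red = (q / 2) / (q * q / 2)
    black = (p / 2) / (p * p / 2)
    return red, black


for q in (0.1, 0.2, 0.3, 0.5):
    red, black = males_per_female(q)
    print(f"q = {q}: red {red:.2f}, black {black:.2f} males per female")
```

At q = 0.2 this gives 5 and 1.25 (the 5:1 and 5:4 above). At q = 0.5 both ratios are 2, so the difference between the colours disappears. The rarer the red allele, the more lopsided red cats look compared with black ones.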

#if we stay with p and q#reds: m:f=q:q²=1/q#blacks: m:f=p:p²=1/p#the smaller q is, the bigger the difference between the ratios#if p=q=0.5 then the difference is gone#ask and answer#cat genetics#orange

All-terrain tires are the coup de foudre of the internet. They’re neat, conspicuous, and multifunctional. We like to think of them as the one thing that all travelers and racers love to have. They work on every terrain imaginable, from wet to dry and even snowy.

The best all-terrain tires use clever tread patterns to fare well on every surface, while others focus more on the strength of the rubber.

For your convenience, we have prepared a list of the 10 best all-terrain tires available on the market, followed by an in-depth look into what makes these tires stand out.

BFGoodrich All-Terrain Radial Tire

The BFGoodrich has one of the best designs we have seen. It uses wide-set sipes and extra-hard rubber to give you maximum control and stability even on the toughest terrain. The extra-thick rubber also helps keep the tire safe during rocky rides.

We recommend the BFGoodrich for mountain and sandy terrain, but ultimately the tire can work on any surface. Its rubber compound is formulated to reduce wear and tear, even on hard, crusty gravel.

The tread features a premium, computer-generated pattern that helps keep gravel, microparticles, dust, mud, water, and snow from accumulating in the grooves. Even the sidewall has tough, bold markings to prevent this.

The BFGoodrich uses three-dimensional sipe technology, and the sidewall has hard rubber blocks protruding from it. As for size and speed specs, this model has a width of 285 mm and a sidewall aspect ratio of 75%.

The rim diameter is 16 inches, well suited to smaller trucks, Jeeps, and SUVs. The speed rating is a relatively low R (170 km/h), and the load index is 126 (1,700 kg per tire).
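As a worked example of what a size like 285/75R16 implies, here is a minimal Python sketch of the usual nominal-size arithmetic. The 16 is the rim diameter in inches. Sidewall height is the width times the aspect ratio, and overall diameter is the rim plus two sidewalls. These are nominal figures only, since actual dimensions vary by model and inflation, and `tire_dimensions` is an illustrative helper name.

```python
MM_PER_INCH = 25.4


def tire_dimensions(width_mm: float, aspect_pct: float, rim_in: float) -> dict:
    """Nominal dimensions of a metric tire size such as 285/75R16.

    sidewall = width * aspect ratio; overall diameter = rim + 2 sidewalls.
    """
    sidewall_mm = width_mm * aspect_pct / 100
    diameter_mm = rim_in * MM_PER_INCH + 2 * sidewall_mm
    return {
        "sidewall_mm": sidewall_mm,
        "diameter_mm": diameter_mm,
        "diameter_in": diameter_mm / MM_PER_INCH,
    }


dims = tire_dimensions(285, 75, 16)
print({name: round(value, 1) for name, value in dims.items()})
```

For 285/75R16 this works out to a sidewall of about 214 mm and an overall diameter of about 834 mm (roughly 32.8 inches).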

Hankook DynaPro ATM

The Hankook DynaPro is the best example of how dynamic this brand really is. It features wraparound tread technology and a tough new rubber formula, both of which enhance mobility and control.

Speaking of mobility, have you ever been driving a truck and gotten a rock stuck in your tread? It happens often. The DynaPro addresses this by keeping its grooves deep and wide, providing enough traction while keeping out unwanted pebbles.

The tread extends into the sidewall, with rubber blocks protruding to keep you grounded and balanced. The DynaPro also uses two-step sipes to increase the tire’s lifespan and performance. Regardless of the road, the DynaPro can traverse it all.

This tire has a speed rating of T (190 km/h) and a load index of 106 (950 kg). This makes it a good fit for light trucks and larger wagons or SUVs.

Its speed rating is higher than the other tires here, but its lower load index means it isn’t suited to heavier vehicles. We recommend it for all types of small trucks, vans, carriers, minibuses, and SUVs.

Toyo Tire Country

Toyo Tire uses well-regarded silica tread compounds. The tires have excellent mileage and can withstand all sorts of weather and terrain. Driving on wet or snowy roads has never been easier.

The Toyo Tire is best known for mud-terrain and off-road traction. With almost every tire optimized for water and snow, most people forget that dirt traction is a complicated task too.

This tire has a 3-layered polyester tread compound embedded with refined silica to give it a characteristic strength and shine. The tread pattern itself is open enough to lower accumulation of mud and water.

The tread blocks are mostly hook-shaped and extend over to the sidewall. This gives extra traction, as the sidewall can occasionally lose grip or drift. Another great feature is the deep siping on either side, which gives it better traction on water.

Toyo’s tires are made for heavier vehicles with rims of at least 20 inches in diameter. However, the speed rating is Q (160 km/h) and the load index is 121 (1,450 kg). This makes it a good fit for heavy trucks and SUVs, as well as dedicated off-road vehicles.

Since the speed rating is low and the load index is high, we do not recommend the Toyo Tire for passenger cars, touring vehicles, or light trucks.

Buying Guide For The Best All-Terrain Tires

If you like our top 10 list but can’t decide which one to choose, or if you want to choose a different brand altogether, then read on. We’ve prepared a list of the most important things to look for in an all-terrain tire.

Get The Right Size

Many people don’t even know what the numbers and letters on the side of a tire mean. Take 225/65 R 15 91 V as an example. These markings represent the tire’s width (225 mm), aspect ratio (65%), and construction (R for radial). They also give the rim diameter (15 inches), the load index (91), and the speed rating (V), respectively.
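To make the sidewall code concrete, here is a minimal Python sketch that splits a size such as `225/65 R 15 91 V` into its fields. It assumes the common metric format shown above. The lookup tables contain only the load indexes and speed ratings that appear in this article, taken from the standard tables. `parse_tire_size` and `TireSize` are illustrative names, not part of any library.

```python
import re
from dataclasses import dataclass

# Excerpts of the standard load-index and speed-rating tables,
# limited to the codes that appear in this article.
LOAD_INDEX_KG = {91: 615, 106: 950, 121: 1450, 126: 1700}
SPEED_RATING_KMH = {"Q": 160, "R": 170, "T": 190, "V": 240}

SIZE_PATTERN = re.compile(
    r"(?P<width>\d{3})/(?P<aspect>\d{2})\s*(?P<construction>[RDB])\s*"
    r"(?P<rim>\d{2})\s+(?P<load>\d{2,3})\s*(?P<speed>[A-Z])"
)


@dataclass
class TireSize:
    width_mm: int
    aspect_pct: int
    construction: str  # R = radial, D = diagonal (bias), B = bias-belted
    rim_in: int
    load_index: int
    speed_rating: str

    def max_load_kg(self):
        # None if the index is outside the excerpt above
        return LOAD_INDEX_KG.get(self.load_index)

    def max_speed_kmh(self):
        return SPEED_RATING_KMH.get(self.speed_rating)


def parse_tire_size(code: str) -> TireSize:
    match = SIZE_PATTERN.fullmatch(code.strip())
    if match is None:
        raise ValueError(f"unrecognised tire size: {code!r}")
    return TireSize(
        width_mm=int(match["width"]),
        aspect_pct=int(match["aspect"]),
        construction=match["construction"],
        rim_in=int(match["rim"]),
        load_index=int(match["load"]),
        speed_rating=match["speed"],
    )


size = parse_tire_size("225/65 R 15 91 V")
print(size, size.max_load_kg(), size.max_speed_kmh())
```

The same function accepts compact forms such as `285/75R16 126R`, because the spaces around the construction letter are optional in the pattern.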

The Difference Between All Season And All Terrain Tires

You may have heard the terms “all-season tires” and “all-terrain tires” quite often and thought they’re essentially the same thing. Most people do. After all, the only issue tires face in different seasons is the terrain, right?

What’s the difference between a winter tire and a tire optimized for snow? While the two may overlap in terms of terrain, they are two very different categories and should be treated as such.

All-season tires are optimized for the season, not just the surface. They are usually capable of not only traversing the terrain but also coping with temperature drops and pressure changes. Likewise, a summer tire is not just designed to work on sand and dry roads; it has special features to cope with heat, blowouts, and friction.

Conclusion

To conclude, you can benefit from an all-terrain tire on any surface and in almost any weather. From our top 10 list, we have no personal favorites; you can pick any one you like. If you’re unsure, consult our buying guide to learn how to choose the best all-terrain tire for you.

So there you have it. The top 10 best all-terrain tires and how to buy them. We hope you enjoyed our list, and stick around for more great lists just like this one.

50.17 Percent Profits in 75 Trading Days: The Success Story of RvR Ventures!

Forex, also known as foreign exchange, FX, or currency trading, is a decentralized global market where all the world's currencies trade. It is the largest and most liquid market in the world, with an average daily trading volume exceeding $5 trillion, much more than the volume on the New York Stock Exchange.

The most traded currency is the US dollar, which features in nearly 80% of all forex trades, and nearly 90% of forex trading is speculative. Most foreign exchange trades consist of spot transactions, forwards, foreign exchange swaps, currency swaps, and options. However, as a leveraged product, Forex carries plenty of risk and can result in substantial losses.

Challenging the risk-reward ratios and trading frequency of the biggest Forex traders and portfolio managers in the world, RvR Ventures achieved 50.17% profits trading on a Real ECN account of $1 million. The traders of RvR Ventures traded XAUUSD, EURUSD, GBPJPY, GBPUSD, EURGBP, and other cross-currency pairs on Real ECN accounts with 1:500 leverage. They traded in both automated and manual modes, using algorithms developed by RvR Ventures and a Dubai-based Indian cryptographer and Forex trader.

As per the data we verified on RvR Ventures' Track Record Verified and Trading Privileges Verified portfolios on MyFxBook.com (Link: https://www.myfxbook.com/members/rvr005/2739956):

RvR Ventures achieved a total gain of 50.17% on their portfolio RvR005, with a daily gain of up to 0.39%, a monthly gain of 12.62%, and a drawdown of 44.5%. A total profit of $502,116 was booked in 75 trading days, about 3 months (15 October 2018 to 27 January 2019), on a total portfolio size of $1,000,000. The portfolio posted 83% accuracy on 2,067 trades, with 20,876 lots executed during this span on RvR005, a Real ECN account with 1:500 leverage.

RvR Ventures achieved profit returns ranging from 5.78% to 68.44% on their various accounts. A total profit of $3,473,648.50 was booked, with a daily gain of 0.30% and a monthly gain of 12.64%. The average trading accuracy was 71% across a total of 46,811 trades executed with 66.579 lots, through manual and automated trading, as seen in Image 2.

Q&A with Mr. Kevin Albuquerque, Chief Trading Officer - RvR Ventures.

How can a trader make profits safely in a highly volatile, high-risk market?

"How much can I make as a trader?" This is one of the first questions many people ask me, followed by "How long will it take?" Each person is unique in their goals. Some traders want to make millions, while others want to improve their financial situation and enjoy the flexibility of Forex trading. Forex trading has unlimited possibilities, so one should not be greedy. One should be patient enough to focus on, calculate, and mitigate risk while planning and booking profits in highly volatile currencies. We took less than 5% risk on the basis of our automated trading algorithm and achieved up to 68% profits in 75 trading days.

What is the trading strategy of RvR Ventures?

At RvR Ventures, we focus on profits and work on a profit-sharing basis only. We charge no handling charges, service charges, trading commissions, or fund management fees. We started working on our algorithms in 2008 so that we could trade with maximum accuracy, minimizing risk to achieve higher profits in any kind of volatile market and on any currency pair. Our proposal is a simple win-win: we earn only if you earn.

Explaining further, Mr. Kevin Albuquerque said, "Forex is a highly leveraged market, with typical leverage ratios ranging from 1:100 to 1:1000. If you use the maximum available leverage, your account can be wiped out in a matter of seconds when the market moves against you. I generally prefer leverage of 1:400 to 1:500. In addition, I always make sure that the total risk I take doesn't exceed 10% of the total portfolio size at the very beginning. Once the profits from the first few trades are booked, we generally take calculated risks on the profits earned only, keeping the principal entirely safe. Most of our trades are closed the same day to minimize the risk to principal and profit in case of sudden one-directional volatility."
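To illustrate why high leverage can wipe out an account so quickly, here is a minimal Python sketch. It assumes the common convention that margin equals notional value divided by leverage, with a standard lot of 100,000 units of the base currency. Brokers may use different contract sizes, margin tiers, or stop-out levels, and those would close a position before the full margin is lost. The EURUSD price of 1.10 and the function names are hypothetical, chosen only for illustration.

```python
def required_margin(lots: float, price: float, leverage: float,
                    contract_size: float = 100_000) -> float:
    """Margin, in the quote currency, needed to open a position.

    Assumes margin = notional / leverage and 100,000-unit standard lots.
    """
    notional = lots * contract_size * price
    return notional / leverage


def adverse_move_to_lose_margin(leverage: float) -> float:
    """Fractional price move against a position that equals its full margin."""
    return 1.0 / leverage


# One standard lot of EURUSD at a hypothetical price of 1.10.
for lev in (100, 500, 1000):
    margin = required_margin(1, 1.10, lev)
    move = adverse_move_to_lose_margin(lev)
    print(f"1:{lev}: margin ${margin:,.2f}; a {move:.2%} move wipes it out")
```

Under these assumptions, at 1:500 a position opened with the minimum margin loses all of it on a 0.2% adverse move, ignoring spreads and swaps.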

Is Forex Trading A Gamble?

To many people, all trading appears so speculative as to be little more than legalized gambling: there are no guarantees, and making a profit on the exchange seems a totally random matter. The reality is that successful Forex trading is a highly skilled business that is not like betting at all. A common misconception is that Forex is gambling, but I personally do not consider it gambling. If you want to be successful at trading currencies, you need to take a Forex course. You also need to stay up to date on market trends, market conditions, political and economic current affairs, economic data, and the economies of various countries.

Does the Forex broker play an important role in Forex trading?

Yes, definitely: the trading platform provider and Forex broker play an important role. Tight spreads, negligible slippage, and timely execution of trades always boost the confidence and accuracy of a Forex trader, which is why we only work with regulated Forex brokers.

What do you prefer: Smaller Lots – Higher Profits or Bigger Lots – Smaller Profits?

Recently, we were able to book profits of up to $2,100 per trade on lot sizes of just 0.30, repeatedly and in sequence. On the other hand, we also booked $114,000 in profits in 48 trading hours by trading lot sizes of up to 100.00 each. It doesn't matter whether a trader trades big or small lots. What matters is how much profit he is able to book, and in how much time, without holding any floating losses.

The smaller the lots, the lower the risk. However, to book 20x or 30x profits on small lot sizes, you need accurate and in-depth knowledge of markets and volumes. You also need to know moving averages, pair range, market sentiment, pivot points, and the resistance and support levels of the particular pair you are trading. On the other hand, to trade higher lots ranging from 25.00 to 100.00 each and achieve higher profits in a few minutes, you need to be more alert, very cautious, and accurate in your strategy. A movement of 1000 pips can leave your account with a floating loss of $100,000 within minutes!
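The article doesn't say which pair or contract size its $100,000 figure assumes. As a hedged illustration, here is a minimal Python sketch of the usual pip arithmetic for a USD-quoted pair such as EURUSD. It assumes 100,000-unit standard lots and a pip of 0.0001, which makes one pip worth $10 per standard lot. Other instruments, such as JPY pairs or XAUUSD, use different pip and contract sizes, and `pip_pnl` is an illustrative name.

```python
def pip_pnl(lots: float, pips: float, pip_size: float = 0.0001,
            contract_size: float = 100_000) -> float:
    """Profit or loss, in the quote currency (USD here), of a move of `pips`.

    Defaults assume a USD-quoted pair: 100,000-unit lots and a 0.0001 pip,
    i.e. $10 per pip per standard lot.
    """
    return lots * contract_size * pip_size * pips


print(pip_pnl(1, 1))       # value of one pip on one standard lot
print(pip_pnl(0.30, 100))  # 0.30 lots, 100-pip move
print(pip_pnl(10, 1000))   # 10 lots, 1,000-pip move
```

Under these assumptions, 10 standard lots moving 1,000 pips comes to $100,000. The result scales linearly with lot size, so the same move on 100 lots would be ten times larger.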

What makes your automated trading more efficient & accurate?

We have integrated more than 132 parameters into a single algorithm. These include OHLC, market sentiment, volumes, live news-based volatility factors, EMA, SMA, pivot point-based volatility assumptions, and momentum. They also cover a summary of various successful indicators, technical analysis, fundamental analysis, RSI, Fibonacci retracement, SL/TP ratio, and session-based volatility calculations. Rounding out the list are ascending, descending, and symmetrical triangle strategies, the head and shoulders strategy, and dynamic support and resistance trackers. The aim of our automated trading and Forex trading strategy algorithm is the utmost efficiency, trading accuracy, and the right entry and exit points across different currency pairs.

Do you also offer Training?

Yes, we have recently started training courses for candidates who qualify for Forex trading. We offer extensive real-time training sessions based on technical and fundamental analysis, on Real ECN accounts, for more than 2,500 trading hours. This prepares candidates to trade with higher accuracy in highly volatile markets. We also offer the option to experience our automated trading strategy as a guide in the learning process.

What are your future expansion plans?

We are now planning to expand our portfolio management business to different countries, offering the option to copy our trades through the social trading platforms of various regulated Forex brokers. This will help beginners, experts, and professional Forex traders minimize risk and maximize the return on their investment in Forex trading. We have no plans to sell or reveal our automated trading robots, strategy, or trading algorithms to any party.

Dell S2422HG Review: Premium 24" Curved Gaming Monitor

Dell S2422HG

8.50 / 10

If you're looking for a gaming monitor with great specs, and don't mind having a curved screen that's more for style than practicality, the Dell S2422HG is worth checking out.

Key Features

165Hz Refresh Rate

1920 x 1080

1ms (MPRT)

4ms Gray-to-Gray (Super Fast mode)

Tilt -5° / 21°

Height Adjustable 100mm

Anti-glare with 3H hardness

AMD FreeSync

Specifications

Brand: Dell

Resolution: 1920 x 1080

Refresh Rate: 165Hz

Screen Size: 23.6"

Ports: 1 DP1.2a, 2 HDMI 2.0, 3.5mm Audio

Display Technology: LED, 1500R Curved Screen

Aspect Ratio: 16:9

Pros

Custom picture settings

Great for FPS games

Height and tilt adjustments

Sleek and compact design

Cons

No HDR

Premium price tag

Curved screen isn't a game changer on smaller screens

Are 24" gaming monitors like the Dell S2422HG a good fit for you? They offer several features that make them well suited to serious gaming, especially FPS games where you need to see all the action at once, though they're perhaps not the best choice for productivity.

Dell's new S2422HG is a curved gaming monitor that packs impressive specs, features and looks, but with its more premium price tag, does it offer enough to stand out from competing 24" models?

Many gaming monitors tend to be either too "gamey," with red accents and ostentatious branding, or cheap and bland, with thick bezels and minimal adjustments. The Dell S2422HG takes a rather unique direction, offering a sleek design and great height and tilt adjustments without feeling over the top or looking out of place.

Dimensions & Weight

As life resumes and we can go back to playing games together in person, fans of LAN parties will appreciate the portability and size of this slim monitor. The monitor weighs less than 10 pounds with its stand. If you keep the original box and packaging, which is probably the easiest and safest way to travel with it, you're looking at a total weight of about 16 lbs.

With Stand: 21w x 7.5d x 13.8h inches; weight 9.4 lbs.

Without Stand: 21w x 3.5d x 3.5h inches; weight 7.4 lbs.

Connectivity & Controls

Similar to most other monitors, the Dell S2422HG houses its ports directly behind the screen and facing downwards. There is plenty of space between the monitor's stand and the ports allowing you to easily attach and remove cables without needing to flip the monitor around.

In the box, you only get a single DisplayPort cable. Unfortunately, if your PC or device only supports HDMI, as is most common, you'll need to provide your own HDMI 2.0-compatible cable. With this monitor carrying a higher price tag than competing models, this is one area where I would have expected Dell to go the extra mile. A wider selection of cables would ensure it works for all users out of the box.

OSD & Customization

The OSD (On Screen Display) is controlled by a joystick and a series of buttons running vertically behind the right side of the panel.

The controls take a bit of getting used to, as you can't easily see which buttons you're pressing. When the OSD menu is up, visual indicators on the right-hand side of the screen help you navigate. I still would have preferred the buttons on the right side of the panel, where I can physically see them, rather than hidden behind it.

Dell includes a handful of gaming features, like Dark Stabilizer to enhance visibility in dark areas. It also has an FPS Counter, useful if you prefer that figure reported directly by the monitor rather than in-game or by third-party software.

AC Input

Thankfully, the power supply is built into the monitor, so you only need to plug in a single cable to power it up, with no external power brick to hide away. The monitor is relatively efficient, consuming only about 0.2 W in standby and a maximum of 37 W in use.

HDMI 2.0 (x2)

If you don't have a device that supports DisplayPort, you can still connect using either of the two HDMI 2.0 ports. Again, it's a bit odd that an HDMI cable is not included with your purchase.

DisplayPort 1.2a

While the DisplayPort input does have more bandwidth than the HDMI ports, there shouldn't be any noticeable difference or benefit to using it, as both support this monitor's maximum of 1080p at 165Hz.
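A quick back-of-the-envelope check supports this. The minimal Python sketch below computes the raw data rate of the visible pixels at 1920x1080, 165Hz, and 8-bit RGB (24 bits per pixel). It then compares that rate with the effective data rates of the two links. HDMI 2.0 carries 18 Gbit/s TMDS, or 14.4 Gbit/s after 8b/10b encoding. DisplayPort 1.2 HBR2 carries 4 lanes at 5.4 Gbit/s, or 17.28 Gbit/s after encoding. The sketch ignores blanking intervals, so the real requirement is somewhat higher, though still well within both links. The function and dictionary names are illustrative.

```python
def active_pixel_rate_gbps(width: int, height: int, refresh_hz: float,
                           bits_per_pixel: int = 24) -> float:
    """Uncompressed data rate of the visible pixels only, in Gbit/s.

    Blanking intervals are ignored, so this is a lower bound on link usage.
    """
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Effective data rates after 8b/10b encoding, in Gbit/s.
LINK_CAPACITY_GBPS = {
    "HDMI 2.0": 14.4,                   # 18 Gbit/s TMDS
    "DisplayPort 1.2a (HBR2)": 17.28,   # 4 lanes x 5.4 Gbit/s
}

needed = active_pixel_rate_gbps(1920, 1080, 165)
for link, capacity in LINK_CAPACITY_GBPS.items():
    print(f"{link}: needs {needed:.2f} of {capacity} Gbit/s ({needed / capacity:.0%})")
```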

3.5mm Headphone Jack

The Dell S2422HG doesn't have built-in speakers, but you can still output audio over HDMI or DisplayPort from your PC or device and then use the 3.5mm jack to connect external speakers or headphones.

Still, it would have been nice to have speakers included here. Although built-in speakers are notoriously bad, they can be especially convenient to have when you want to minimize your desk clutter or if you're frequently taking this on the go and don't want to pack speakers too.

Screen Size & Viewing Angle

Contrary to other monitors and screens designed primarily for content consumption or multi-tasking, bigger isn't always better when it comes to gaming. While larger gaming monitors do exist, they are typically much more expensive, and for more serious gamers, can come with a handful of disadvantages.

The Dell S2422HG is a 23.6" screen with a wide 178-degree viewing angle. Monitors around 24" make it easier for gamers to see all the on-screen action without turning their heads from side to side. This helps you spot that enemy sneaking up behind you more quickly than on a larger monitor, where enemies could be outside your peripheral vision.
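The 178-degree figure describes how far off-axis the image stays viewable. How much of your vision the screen fills is a separate question. The minimal Python sketch below compares the horizontal angle that different screen sizes subtend from a centred eye position. It assumes a flat 16:9 screen, ignoring the 1500R curve, viewed from 24 inches, a typical distance of about 2 feet. The function names are illustrative.

```python
import math


def screen_width_in(diagonal_in: float, aspect_w: float = 16,
                    aspect_h: float = 9) -> float:
    """Width of a flat screen from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def horizontal_angle_deg(diagonal_in: float, distance_in: float,
                         aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Horizontal angle the screen subtends, viewed head-on from the centre."""
    half_width = screen_width_in(diagonal_in, aspect_w, aspect_h) / 2
    return math.degrees(2 * math.atan(half_width / distance_in))


for size in (23.6, 27, 32):
    print(f'{size}" at 24 in: {horizontal_angle_deg(size, 24):.0f} degrees wide')
```

Under these assumptions a 23.6-inch screen spans roughly 46 degrees. That compares with about 52 degrees for 27 inches and about 60 degrees for 32 inches.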

The S2422HG takes this a step further with its 1500R curved screen which is supposed to make the whole experience feel slightly more immersive. Whether or not you'll really notice that curved screen, though, will vary.

On a 24" monitor, I didn't notice much of an advantage. Larger screens like my 49" Samsung ultrawide definitely benefit from a curve, but with this Dell, I frequently forgot it was curved.

Honestly, the biggest benefit of the curved screen on this smaller monitor might just be that it looks sleeker and more premium on your desk. Aside from that, I don't think it's a must-have feature for most people.

Design, Stand, and Mounting

The smaller footprint of 24" monitors is another advantage over larger options. Larger monitors usually need bigger, clunkier stands, which take up more desk space and can eat into mouse pad real estate.

The monitor's polygonal stand is quite compact compared to some competing models with a wider V-shaped design, which helps it fit on smaller surfaces. The stand offers tilt adjustment between -5° and 21°, with 100mm of height travel.

If you're a fan of wall mounting, the stand is easily removable with its quick-release back, revealing the 100 x 100mm VESA mount. On the back, you'll also find vents that allow for passive air cooling.

Response Time & Panel

When it comes to gaming monitors, especially for competitive esports titles, 24" models with high refresh rates and low response times are the most popular. Gaming monitors typically run at 120Hz or more and have a response time of 5ms or less.

The Dell S2422HG has a 165Hz refresh rate, a 1ms Moving Picture Response Time (MPRT), and a 4ms GtG (Gray to Gray) response time. This is great for reducing motion blur and, in competitive games, helps you keep up with the action. Adaptive-Sync is supported, including AMD FreeSync Premium with a 48–165Hz vertical refresh range.

One thing I didn't notice was any perceivable difference between 120Hz and 165Hz when gaming. If you're on a tighter budget and can't find a 165Hz monitor in your price range, don't hesitate to look at 120Hz models.
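One plausible reason is diminishing returns in frame time. The minimal Python sketch below converts refresh rates into the time between refreshes. Going from 60Hz to 120Hz cuts about 8.3 ms per frame, while going from 120Hz to 165Hz saves only about 2.3 ms. The function name is illustrative.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time between screen refreshes at a given refresh rate, in milliseconds."""
    return 1000.0 / refresh_hz


for hz in (60, 120, 144, 165):
    print(f"{hz} Hz: {frame_time_ms(hz):.2f} ms per frame")

print(f"60 -> 120 Hz saves {frame_time_ms(60) - frame_time_ms(120):.2f} ms")
print(f"120 -> 165 Hz saves {frame_time_ms(120) - frame_time_ms(165):.2f} ms")
```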

The screen has a matte anti-glare surface and features a 3000:1 static contrast ratio and 8-bit color. The backlight is a flicker-free WLED with 99% sRGB gamut coverage and a 350 cd/m² typical maximum luminance. HDR is not supported with this model, however.

When you're gaming at night or need to rest your eyes during longer sessions, you can enable a Low Blue Light (LBL) setting called 'ComfortView'.

Do You Need 4k?

Resolution is a bit of a hot debate. Does higher resolution always translate to a better gaming experience? 4k gaming monitors might seem like a no-brainer, but just as with increasing their physical size, increasing resolution also has its drawbacks.

For starters, you might not actually be able to easily perceive the resolution difference if you're sitting at a distance of about 2 feet from the screen, which is pretty common for a monitor this size. Even if you could, 4k gaming is still very demanding on even the most specced out PCs. You'll usually either have to compromise on framerate or turn down the graphical quality. Competitive gamers usually turn their settings to the lowest and really only care about getting the highest FPS.
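To put numbers on the resolution question, the minimal Python sketch below computes pixel density for a 23.6-inch 16:9 panel at 1080p and at 4K. It also computes how many pixels fall within one degree of vision at a 24-inch (2-foot) viewing distance. This is only a geometric measure. Whether the extra detail is noticeable still depends on eyesight, content, and scaling. The function names are illustrative.

```python
import math


def pixels_per_inch(h_px: int, v_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal size."""
    return math.hypot(h_px, v_px) / diagonal_in


def pixels_per_degree(ppi: float, distance_in: float) -> float:
    """Pixels spanned by one degree of visual angle at the screen centre."""
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


for label, (h, v) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    ppi = pixels_per_inch(h, v, 23.6)
    print(f"{label} on 23.6 in: {ppi:.0f} PPI, "
          f"{pixels_per_degree(ppi, 24):.0f} px/degree at 2 ft")
```

On a 23.6-inch panel this gives about 93 PPI for 1080p and about 187 PPI for 4K. At 2 feet, that is roughly 39 versus 78 pixels per degree.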

Value vs Style

If you're not too keen on multi-tasking, this monitor has all the specs and customizations that make it a great choice for gaming and other casual tasks.

Beyond that, it has a minimal yet very sleek design that doesn't scream "gaming" and can actually fit nicely in most spaces. That said, there are many competing models with similar if not better specs that cost less, but perhaps don't have the same refined and mature design as the Dell.

If you're looking for a gaming monitor with these specs and don't mind having a curved screen that's more for style than practicality, the Dell S2422HG is worth checking out.

Dell S2422HG Review: Premium 24" Curved Gaming Monitor published first on http://droneseco.tumblr.com/

Dell XPS 17 (9700) review: The 17-inch laptop is back, and it's spectacular

Dell's XPS lineup has been among the best for years, and the company has gradually refined whatever pain points it did have, such as when it used to put the webcam below the screen. But this year, the lineup underwent a major redesign, with Dell chopping down the bezels even more, something that I wouldn't have guessed was possible.

The firm has long touted how small the footprint is on its laptops, always saying that the XPS 15 fits in the footprint of a 13-inch laptop, and that the XPS 13 fits into the footprint of an 11-inch laptop. With the XPS 15 fitting into an even smaller footprint this year, there was room for something bigger.

Dell announced the new XPS 17 in May, and it's the first new XPS 17 in around a decade. If you read my review of the latest XPS 15, then there are pretty much two things to know. The screen is bigger, and it's more powerful with Nvidia RTX graphics. In fact, it's the first XPS laptop ever with RTX graphics.

Obviously, these specs are for the unit that Dell sent me. The base model starts at $1,399.99, although that one has integrated graphics, a Core i5-10300H, an FHD screen, and 8GB RAM.

Design

While the XPS 17 was introduced alongside the XPS 15 redesign in May, this design was actually first shown in January at CES with the XPS 13. It consists of a 16:10 display, narrow bezels on all four sides, and no USB Type-A ports. Indeed, if you put the XPS 13, 15, and 17 next to each other, they look nearly identical apart from their size.

The Dell XPS 17 is indeed the 17-inch laptop that can fit into the footprint of a 15-inch laptop. The most important thing that that means to me is that it can fit into a regular-sized bag. That's not always the case with 17-inch laptops; in fact, it's pretty rare. It's a bit heavy at five and a half pounds, but that's the kind of laptop that this is. It's got a lot of power under the hood, and it also fits into a small footprint. That combination makes the XPS 17 unique.

The top-down view is the one thing that looks the same. The chassis is made out of aluminum, and the laptop comes in a silver color with a chrome-colored Dell logo stamped in the lid.

The sides are silver-colored as well. This was a big change with the redesign, since the sides have traditionally been black. I think this gives it a much cleaner look. But as I mentioned, there are no USB Type-A ports, even on the 17-incher.

Instead, there are four Thunderbolt 3 ports, two on each side. The bad news is that they're not full Thunderbolt 3 ports, so if you're like me and work from a Thunderbolt 3 dock with two 4K monitors attached to it, you won't be able to use the full resolution. My workaround was to disconnect one of the monitors from the dock and connect it directly to the laptop. Still, it's disappointing, considering how premium and powerful this PC is.

The cool thing about having two Thunderbolt 3 ports on each side is that you can charge the PC from either side. I know that this sounds like a small thing, but it's really nice, and it's a rarity in laptops.

Also on the right side, you'll find an SD card reader and a 3.5mm audio jack. I'm kind of surprised that the SD card reader survived when everything else was cut, but it's nice to have.

Display and audio

The screen on the Dell XPS 17 measures exactly 17 inches, compared to 17.3 inches on a traditional 17-inch laptop. That's because it has a 16:10 display, and since screen size is measured diagonally, this display actually has more area than a 17.3-inch 16:9 screen. It comes in your choice of 3840x2400 or 1920x1200 resolution. Dell sent me the former, and it is absolutely beautiful.
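To check the claim that a 17-inch 16:10 panel is larger than a 17.3-inch 16:9 one, here is a small Python sketch that derives width, height and area from a diagonal and an aspect ratio. It assumes ideal rectangular panels at the nominal diagonal (real panels have rounded corners and slightly different active areas), and the function name is our own.

```python
import math

def screen_dimensions(diagonal, aspect_w, aspect_h):
    """Return (width, height, area) in inches for an ideal rectangular panel."""
    # The diagonal is the hypotenuse of a right triangle whose sides are in the
    # ratio aspect_w : aspect_h, so scale that unit triangle up to the real diagonal.
    unit_diagonal = math.hypot(aspect_w, aspect_h)
    width = diagonal * aspect_w / unit_diagonal
    height = diagonal * aspect_h / unit_diagonal
    return width, height, width * height

xps = screen_dimensions(17.0, 16, 10)      # 17-inch 16:10 panel
classic = screen_dimensions(17.3, 16, 9)   # traditional 17.3-inch 16:9 panel

for name, (w, h, area) in [("17in 16:10", xps), ("17.3in 16:9", classic)]:
    print(f"{name}: {w:.2f} x {h:.2f} in, {area:.1f} sq in")
```

Under those assumptions the 16:10 panel comes out at roughly 130 square inches against roughly 128 for the 16:9 one, while the 16:9 panel is slightly wider, which is the taller-but-narrower trade-off mentioned below.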

It comes in at 500-nit brightness, so it works great in bright sunlight, and indoors, I only found myself using it at about 25% brightness. It also has 100% Adobe RGB, 94% DCI-P3, and a 1600:1 contrast ratio.

The colors are also nearly perfect, and that actually goes for whatever angle you're viewing the display from. Dell promises a 178-degree viewing angle, and it delivers. You can look at this thing from any angle and not see any visible distortions.

Plus, it's big. I'm not always a fan when companies make taller screens like this because it means that it's also narrower. But at 17 inches, there's plenty of screen real estate for everything.

The company also has something called Dell Cinema, which includes CinemaColor, CinemaSound, and CinemaStream. CinemaColor includes HDR technologies and more, and there's actually an included app that lets you apply different display settings such as movie, evening, sports, and animation.

The bezels are small, but that doesn't mean Dell removed the webcam or moved it. The webcam has been shrunk to fit into that tiny top bezel, and there's an IR camera for facial recognition as well. You're not making any sacrifices in that department like you would have in the old days.

CinemaSound has to do with the Waves MaxxAudio Pro speakers. There's an app for that too, but this one is called MaxxAudio Pro instead of CinemaSound. The XPS 17 has large speakers on either side of the keyboard, and they sound fantastic. The dead giveaway is that it has both woofers and tweeters, a rarity on laptops.

Indeed, this has four speakers, two of which are 2.5W and two of which are 1.5W. Obviously, they're used for different frequencies. If you're looking for sound quality and volume in a laptop, you definitely came to the right place.

Keyboard and trackpad

The keyboard in the XPS 17 is the same one found in Dell's other clamshell laptops. Dell does have a technology called MagLev that it uses in the XPS 13 2-in-1 and XPS 15 2-in-1, but perhaps surprisingly, it didn't make it into the smaller, redesigned clamshells.

Dell didn't add a numpad, which is a decision that I'm happy with. I'm not a fan of the numpad, and it's not even easy to ignore because it moves the regular keyboard to the left, leaving it off-centered. I'll take the quad-speaker setup instead.

Key depth is 1.3mm, which is pretty standard for a consumer laptop these days. It's quite comfortable to type on, and it's definitely one of the better keyboards in a consumer laptop. If we were talking about commercial laptops, that might be another story, but we're not. I find that I make very few mistakes with this keyboard, which I appreciate after using some keyboards that gave me trouble.

There's a power button in the keyboard that doubles as a fingerprint sensor. Unfortunately, you have to scan your fingerprint after the PC boots up, unlike most other laptops with a fingerprint sensor in the power button, which read your finger when you press the button to turn the PC on.

Dell considers this to be a security issue, assuming that you might walk away from your PC between when you press the button and when it boots up and someone might sit in front of it. I have a bit more faith in the user than Dell does, and I think you'd get to know your PC and whether or not you're safe to grab a cup of coffee while it's booting up.

My favorite feature of the XPS 15 is on the XPS 17, which is that the Precision trackpad is massive. Huge trackpads are something that Apple introduced on its MacBook Pro PCs a while back, and I've been waiting for a Windows OEM to follow suit. If the real estate on the keyboard deck is there, I say use it. The large, clickable trackpad feels great, and it makes drag-and-drop operations a breeze.

Performance and battery life

Both performance and battery life are excellent on the XPS 17. This thing is great for anything. I used it for things from gaming with Forza Horizon 4 and Halo: Reach to 4K video editing to general work. Sure, there was the occasional bump in the road, particularly when it came to gaming, but it absolutely handled anything that I threw at it.

After all, this thing has top-end hardware for its class. It has an Intel Core i7-10875H processor, which has eight cores, 16 threads, and a 45W TDP. It's the better Core i7 from the H-series, the other one being the hexa-core Core i7-10750H. It's only bested by the Core i9-10885H, which is available in the XPS 17.

For graphics, it comes with an Nvidia GeForce RTX 2060 Max-Q with 6GB GDDR6. With RTX graphics, it supports things like real-time ray tracing and deep learning super sampling (DLSS). RTX graphics was how I knew it would support some solid gaming. You can get it with integrated graphics if you don't want the power at all, or you can get it with an Nvidia GeForce GTX 1650 Ti.

Keep in mind that this is a creator laptop, not a gaming laptop. It uses a 130W charger, while most gaming laptops are closer to the 230W range, and it doesn't have the thermals for it. This is primarily a work machine, but I'm here to let you know that it does have the power to play as well.

Even more impressive is battery life. I often say that you have to choose between power and battery life, and with the UHD+ display, you can bet that this uses a lot of power. I used it with the power slider one notch above the battery saver, and with the screen at around 25% brightness. I can tell you that you can easily get six hours out of this, and in many cases, you can take it further than that. With general work, I was able to get up to eight hours.

The touchscreen model also comes with a 97Whr battery. In other words, it has one of the biggest batteries you'll find in any laptop (any larger and you couldn't take it on a plane). The non-touch model comes with a 56Whr battery.

For benchmarks, I used PCMark 8, PCMark 10, 3DMark, VRMark, Geekbench, and Cinebench.

If you're not the type to go through benchmark scores, all you need to know is that this is a powerful machine.

Conclusion

My biggest complaint about the Dell XPS 17 is that it doesn't have full Thunderbolt 3 ports, which would have been able to handle two 4K displays on a single port. If that bothers you too, just wait for the next one. Intel's next generation of CPUs is going to support Thunderbolt 4, which is really just the full Thunderbolt 3 that I'm describing. My other gripe is that there's no cellular model. I realize that it's something of a rare feature on more powerful laptops, probably because it uses battery, but I don't care. It's 2020 and I should be able to work from anywhere.

Let's be clear that this is an absolutely incredible laptop that's nearly perfect. It's an absolute pleasure to use, no matter what you're using it for. If you're playing games, it can do that. If you're streaming movies, it's got a killer HDR display and stunning speakers. If you want to edit video, it's got the power for that as well.

All of it comes in a beautiful chassis and yes, a small footprint. The fact that this thing has a 17-inch display and can fit in a regular bag is a feat of engineering. Honestly, the Dell XPS 17 is in a class all its own, and I can't think of anything like it. If you're looking for a laptop that can do everything, this is it.

How to Train a Neural Network

As we know, one of the most important parts of deep learning is training the neural network.

So, let's learn how it actually works.

In this article, we will learn how a neural network is trained. We will also learn about the feed-forward and back-propagation methods in deep learning.

Why is training needed?

Training in deep learning is the process that helps a machine learn the function (or equation) that maps inputs to the desired outputs. We have to find the optimal values of a neural network's weights to get the desired output.

To train a neural network, we use an iterative method called gradient descent. We start by initializing the weights randomly. Then we make predictions on the data using forward propagation, compute the corresponding cost (or loss) function C, and update each weight w by subtracting an amount proportional to dC/dw, the derivative of the cost function with respect to that weight. The proportionality constant is known as the learning rate.
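As a minimal sketch of this loop, assuming the simplest possible "network" (a single linear neuron, y ≈ w·x + b, with a mean-squared-error cost) so the derivatives can be written by hand, here is gradient descent in plain Python. The data, the function name and the hyper-parameter values are illustrative, not taken from any particular library.

```python
import random

def train_linear(xs, ys, learning_rate=0.05, epochs=500, seed=0):
    """Fit y ≈ w*x + b by full-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)   # random initialization
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current weights.
        preds = [w * x + b for x in xs]
        # Cost C = (1/n) * sum((pred - y)^2); its derivatives w.r.t. w and b:
        errors = [p - y for p, y in zip(preds, ys)]
        dC_dw = 2 / n * sum(e * x for e, x in zip(errors, xs))
        dC_db = 2 / n * sum(errors)
        # Move each weight against its gradient, scaled by the learning rate.
        w -= learning_rate * dC_dw
        b -= learning_rate * dC_db
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]          # toy data generated from w=2, b=1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

A real network has many more weights and uses back-propagation to get the derivatives, but the update rule is the same.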

Now you might be wondering: what is the learning rate?

The learning rate is a hyper-parameter that controls how much we adjust the weights of our neural network with respect to the loss gradient. It determines how quickly the neural network updates what it has learned.

A learning rate should not be too low, as the network will then take a long time to converge, and it should not be too high either, as the network may never converge. So it is always desirable to find a good value for the learning rate, so that the network converges to something useful.
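To see both failure modes, here is a toy sketch that minimizes C(w) = w², whose gradient is 2w, with three hypothetical learning rates. Each step multiplies w by (1 - 2·lr), so a tiny rate crawls towards the minimum at 0, a moderate rate gets there quickly, and a rate above 1 makes |w| grow on every step.

```python
def descend(learning_rate, steps=50, w0=1.0):
    """Run gradient descent on C(w) = w**2 (minimum at w = 0); return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2 * w                  # dC/dw
        w -= learning_rate * grad
    return w

for lr in (0.01, 0.4, 1.1):
    print(f"lr={lr}: w after 50 steps = {descend(lr):.4g}")
```

With 0.01 the weight is still far from 0 after 50 steps, with 0.4 it is essentially 0, and with 1.1 it has blown up into the thousands.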

We can calculate the gradients efficiently using the back-propagation algorithm. The key observation of backward propagation (backprop) is that, because of the chain rule of differentiation, the gradient at each neuron can be calculated from the gradients at the neurons it has outgoing edges to. Hence, we calculate the gradients backwards: first for the output layer, then the top-most hidden layer, then the hidden layer before it, and so on, ending at the input layer.

The back-propagation algorithm is mostly implemented using a computational graph, in which each neuron is expanded into many nodes that each perform a simple mathematical operation such as addition or multiplication. The computational graph has no weights on its edges; instead, the weights become nodes of their own. The back-propagation algorithm is then run on this graph. Once the calculation is complete, only the gradients of the weight nodes are needed for the update; the rest can be discarded.
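A minimal sketch of this backward order, assuming a tiny chain network (one input, two tanh layers of one unit each, a linear output) and the cost C = ½(ŷ - y)²; the weights and data are arbitrary. Each gradient reuses the one computed for the layer above it, and a finite-difference check confirms the result.

```python
import math

def forward(ws, x):
    """Forward pass through a tiny chain: x -> tanh -> tanh -> linear output."""
    w1, w2, w3 = ws
    a1 = math.tanh(w1 * x)
    a2 = math.tanh(w2 * a1)
    y_hat = w3 * a2
    return a1, a2, y_hat

def cost(ws, x, y):
    return 0.5 * (forward(ws, x)[2] - y) ** 2

def backprop(ws, x, y):
    """Gradients of the cost, computed from the output layer back to the input."""
    w1, w2, w3 = ws
    a1, a2, y_hat = forward(ws, x)
    d_yhat = y_hat - y                  # dC/dy_hat: output layer first
    d_w3 = d_yhat * a2
    d_z2 = d_yhat * w3 * (1 - a2**2)    # reuses d_yhat; tanh'(z) = 1 - tanh(z)^2
    d_w2 = d_z2 * a1
    d_z1 = d_z2 * w2 * (1 - a1**2)      # reuses d_z2 from the layer above
    d_w1 = d_z1 * x
    return [d_w1, d_w2, d_w3]

def numerical_grad(ws, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for i in range(len(ws)):
        up, down = list(ws), list(ws)
        up[i] += eps
        down[i] -= eps
        grads.append((cost(up, x, y) - cost(down, x, y)) / (2 * eps))
    return grads

ws, x, y = [0.5, -1.2, 0.8], 1.5, 0.3
analytic = backprop(ws, x, y)
numeric = numerical_grad(ws, x, y)
for a, n in zip(analytic, numeric):
    print(f"{a:+.6f}  {n:+.6f}")
```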
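To make the computational-graph idea concrete, here is a minimal scalar sketch built from our own toy classes (not a real library's API). Every multiplication and addition becomes its own node, the weights are ordinary nodes, and `backward()` walks the graph in reverse topological order, applying the chain rule along each edge.

```python
class Node:
    """A node in a scalar computational graph: a value plus its gradient."""
    def __init__(self, value, parents=(), local_grads=()):
        self.value = value
        self.grad = 0.0
        self.parents = parents            # nodes this one was computed from
        self.local_grads = local_grads    # d(self)/d(parent), one per parent

    def __add__(self, other):
        return Node(self.value + other.value, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Node(self.value * other.value, (self, other), (other.value, self.value))

def backward(output):
    """Backprop over the graph, visiting nodes in reverse topological order."""
    order, seen = [], set()
    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent in node.parents:
                visit(parent)
            order.append(node)            # parents are appended before children
    visit(output)
    output.grad = 1.0
    for node in reversed(order):          # output first, inputs last
        for parent, local in zip(node.parents, node.local_grads):
            parent.grad += node.grad * local   # chain rule, summed over edges

# A neuron's pre-activation z = w1*x1 + w2*x2 + b, expanded into * and + nodes.
x1, x2 = Node(2.0), Node(-3.0)
w1, w2, b = Node(0.5), Node(0.25), Node(1.0)
z = w1 * x1 + w2 * x2 + b
backward(z)
print(z.value, w1.grad, w2.grad, b.grad)
```

Only `w1.grad`, `w2.grad` and `b.grad` would be used for a weight update; the gradients stored on `x1` and `x2` are computed along the way and can be discarded.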

Some of the optimization techniques are:

Gradient Descent Optimization Technique

One of the most commonly used optimization techniques is “gradient descent,” which adjusts the weights according to the error (loss) they caused.

A gradient is simply a slope. On an x-y graph, a slope represents how two variables are related to each other: the rise over the run, the change in distance over the change in time, and so on. In this case, the slope relates the network's error to a single weight; that is, it tells us how the error changes as the weight is varied.

To put it simply, we want to find the weights that produce the least error: weights that correctly represent the signals contained in the input data and translate them into a correct classification.

As a neural network learns, it slowly adjusts many weights so that they map signal to meaning correctly. The rate at which the network's error E changes with each of those weights is a derivative, dE/dw, which measures how much a slight change in a weight changes the error.

Each weight is just one factor in a deep neural network that involves many transforms: the weight's signal passes through activation functions and is summed over several layers, so we use the chain rule of calculus to work back through the network's activations and outputs. This leads us to the weight in question and its relationship to the overall error.

The two variables, error and weight, are linked by a third variable, the activation, through which the weight's signal passes. We can calculate how a change in the weight affects the error by first calculating how a change in the activation affects the error and how a change in the weight affects the activation, then multiplying the two (this is the chain rule).

The basic idea in deep learning is nothing more than adjusting a model's weights in response to the error it produces, until you can no longer reduce the error.

A deep net trains slowly if the gradient is small and quickly if it is large. Any inaccuracies in training lead to inaccurate outputs. The process of training the net from the output back to the input is called back propagation, or backprop. Forward propagation starts with the input and works forward; backprop does the opposite, calculating the gradient from right to left (from the output back to the input).
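For a single sigmoid neuron, the chain rule above reads dE/dw = (dE/da)·(da/dw). The sketch below assumes a squared error E = ½(a - y)² and a = sigmoid(w·x), with arbitrary values, and compares the chain-rule result with a numerical estimate of the same derivative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def error(w, x, y):
    """Squared error of a single sigmoid neuron: E = (sigmoid(w*x) - y)^2 / 2."""
    return 0.5 * (sigmoid(w * x) - y) ** 2

def dE_dw_chain(w, x, y):
    a = sigmoid(w * x)          # the activation, the intermediate variable
    dE_da = a - y               # how the error changes with the activation
    da_dw = a * (1 - a) * x     # how the activation changes with the weight
    return dE_da * da_dw        # chain rule: multiply the two

w, x, y = 0.7, 2.0, 1.0
eps = 1e-6
numeric = (error(w + eps, x, y) - error(w - eps, x, y)) / (2 * eps)
print(dE_dw_chain(w, x, y), numeric)
```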

Each time we calculate a gradient, we use all the previous gradients up to that point.

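Let us start at a node in the output layer: the edges leading into it use the gradient at that node. As we go back through the hidden layers, it gets more complex, because each gradient is the product of the local gradients along the way. The product of two numbers between 0 and 1 is smaller than either of them, so when those local gradients are below 1, the gradient keeps shrinking as it flows backwards. As a result, the early layers train very slowly and the network can end up with poor accuracy. This is known as the vanishing gradient problem.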
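A quick numerical illustration of this shrinking, assuming sigmoid activations (whose derivative is at most 0.25, reached at z = 0) and weights equal to 1 so that only the activation derivatives enter the product. Even in this best case, the gradient shrinks by a factor of four per layer.

```python
import math

def sigmoid_derivative(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1 - s)            # never larger than 0.25 (its value at z = 0)

def gradient_scale(depth):
    """Factor by which a gradient shrinks after flowing back through `depth`
    sigmoid layers, in the best case (every pre-activation is 0) and with all
    weights equal to 1 so they do not rescale anything."""
    factor = 1.0
    for _ in range(depth):
        factor *= sigmoid_derivative(0.0)   # one local gradient per layer
    return factor

for depth in (1, 5, 10, 20):
    print(f"{depth:2d} layers: gradient scaled by {gradient_scale(depth):.3e}")
```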

Challenges in Deep Learning Algorithms

There are certain challenges for both shallow and deep neural networks, such as overfitting and computation time.

DNNs are prone to overfitting because their added layers of abstraction allow them to model rare dependencies in the training data.

Regularization methods such as dropout, early stopping, data augmentation, and transfer learning are used during training to combat overfitting.

Dropout regularization randomly omits units from the hidden layers during training, which helps avoid rare dependencies. Training a DNN also involves choosing several parameters, such as its size (the number of layers and the number of units per layer), the learning rate, and the initial weights. Searching for the optimal parameters is not always practical because of the high cost in time and computational resources. Tricks such as batching can speed up computation, and the large processing power of GPUs has helped the training process significantly, since the required matrix and vector computations run very efficiently on GPUs.

Dropout

Dropout is a well-known regularization technique for neural networks. Deep neural networks are particularly prone to overfitting.

Let us now see what dropout is and how it works.

In the words of Geoffrey Hinton, one of the pioneers of Deep Learning, ‘If you have a deep neural net and it's not overfitting, you should probably be using a bigger one and using dropout’.

Dropout is a technique where during each iteration of gradient descent, we drop a set of randomly selected nodes. This means that we ignore some nodes randomly as if they do not exist.

Each neuron is kept with probability q and dropped with probability 1 - q. The value of q may differ from layer to layer. Keeping q = 0.5 for the hidden layers and q close to 1 (little or no dropout) for the input layer works well on a wide range of tasks.

During evaluation and prediction, no dropout is used. The output of each neuron is multiplied by q so that the input to the next layer has the same expected value.

The idea behind dropout is as follows: in a neural network without dropout regularization, neurons develop co-dependencies on each other that lead to overfitting. Randomly dropping neurons prevents any one of them from relying too heavily on specific others.
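A minimal sketch of the train-versus-evaluation behaviour described above, written as a plain Python function over a list of activations (the function name and values are hypothetical). During training each unit survives with probability q; at evaluation every unit is kept and scaled by q, so the average output matches.

```python
import random

def dropout_layer(activations, q, training, rng):
    """Classic dropout: during training keep each unit with probability q
    (otherwise output 0); during evaluation keep every unit but multiply its
    output by q so the next layer sees the same expected value."""
    if training:
        return [a if rng.random() < q else 0.0 for a in activations]
    return [a * q for a in activations]

rng = random.Random(42)              # fixed seed so the run is repeatable
acts = [1.0] * 10_000                # hypothetical layer output, all ones
q = 0.5
train_out = dropout_layer(acts, q, training=True, rng=rng)   # new mask per call
eval_out = dropout_layer(acts, q, training=False, rng=rng)
train_mean = sum(train_out) / len(train_out)
eval_mean = sum(eval_out) / len(eval_out)
print(f"mean during training ~ {train_mean:.3f}, during evaluation = {eval_mean}")
```

Each call draws a fresh random mask, which corresponds to dropping a different set of nodes on every gradient-descent iteration.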

Implementation trick

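Dropout is implemented in libraries such as TensorFlow and PyTorch by setting the output of the randomly selected neurons to 0. That is, although the neuron still exists, its output is overwritten with 0. These libraries also scale the surviving outputs up by 1/q during training (known as "inverted dropout"), so no rescaling is needed at evaluation time.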
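PyTorch's `nn.Dropout`, for example, zeroes units with drop probability p = 1 - q and scales the surviving outputs by 1/(1 - p) during training, so evaluation is a plain pass-through. The pure-Python function below is our own sketch of that inverted-dropout scheme, not the libraries' code.

```python
import random

def inverted_dropout(activations, q, training, rng):
    """Inverted dropout: while training, zero each unit with probability 1 - q
    and scale the survivors by 1/q; at evaluation, pass values through unchanged."""
    if not training:
        return list(activations)
    return [a / q if rng.random() < q else 0.0 for a in activations]

rng = random.Random(7)
acts = [1.0] * 10_000
q = 0.8                          # keep probability (a drop probability p of 0.2)
train_out = inverted_dropout(acts, q, training=True, rng=rng)
eval_out = inverted_dropout(acts, q, training=False, rng=rng)
print(sum(train_out) / len(train_out), sum(eval_out) / len(eval_out))
```

Both means come out close to 1.0, so the expected input to the next layer is the same in training and evaluation without touching the evaluation path.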

Early Stopping

As discussed above, we train neural networks using an iterative algorithm called gradient descent.

The idea behind early stopping is intuitive: we stop training when the error starts to increase. Here, by error, we mean the error measured on validation data, which is a part of the training data held out for tuning hyper-parameters. In this case, the hyper-parameter is the stopping criterion.
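A minimal sketch of early stopping, assuming a common refinement not mentioned above: a patience parameter, so that training stops only after the validation error has failed to improve for several epochs in a row, and the best epoch's weights are kept. The loss values are hypothetical; in a real run each one would come from evaluating the network on the validation data after an epoch.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose weights to keep: stop once the validation loss has
    not improved for `patience` consecutive epochs, and roll back to the best one."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break             # validation error kept rising: stop training
    return best_epoch

# Hypothetical validation losses: they fall, then rise as the net starts to overfit.
val_losses = [0.90, 0.70, 0.55, 0.48, 0.47, 0.49, 0.52, 0.56, 0.61]
print(early_stopping_epoch(val_losses))
```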

Data Augmentation

Data augmentation is the process of increasing the amount of data we have by applying transformations to the existing data. The exact transformations depend on the task we want to achieve, and which transformations help the neural net also depends on its architecture.

For instance, in many computer vision tasks such as object classification, an effective data augmentation technique is adding new data points that are cropped or translated versions of original data.

When a computer accepts an image as input, it takes in an array of pixel values. Suppose the whole image is shifted left by 15 pixels: every pixel value moves to a new position in the array, so to the network it is a new data point, even though it shows the same object with the same label. By applying many different shifts in different directions, we obtain an augmented dataset many times the size of the original.
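The shifting idea can be sketched in plain Python on a tiny image stored as a list of rows (a stand-in for a real pixel array). The helper names are hypothetical, uncovered pixels are filled with 0, and shifts of 1 pixel are used instead of 15 to suit the 4x4 example. Each shifted copy keeps the original label.

```python
def shift(image, dx, dy, fill=0):
    """Return a copy of a 2-D image (list of rows) shifted by dx columns
    (positive = right) and dy rows (positive = down), padding with `fill`."""
    height, width = len(image), len(image[0])
    out = [[fill] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            src_r, src_c = r - dy, c - dx
            if 0 <= src_r < height and 0 <= src_c < width:
                out[r][c] = image[src_r][src_c]
    return out

def augment(image, label, offsets):
    """Build (image, label) pairs: the original plus one shifted copy per offset."""
    return [(image, label)] + [(shift(image, dx, dy), label) for dx, dy in offsets]

img = [[0, 0, 0, 0],
       [0, 9, 9, 0],
       [0, 9, 9, 0],
       [0, 0, 0, 0]]
dataset = augment(img, "square", [(-1, 0), (1, 0), (0, -1), (0, 1)])
print(len(dataset))              # original + 4 shifted copies
for row in dataset[1][0]:        # the copy shifted left by 1 pixel
    print(row)
```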

Transfer Learning

The process of taking a pre-trained model and “fine-tuning” it with our own dataset is called transfer learning. There are several ways to do this. One common approach is described below:

We start with a model that has been pre-trained on a large dataset. Then we remove the last layer of the network and replace it with a new layer with random weights.

We then freeze the weights of all the other layers and train the network on our dataset as usual. Here, freezing a layer means its weights are not changed during gradient descent or optimization.

The concept behind this is that the pre-trained model will act as a feature extractor, and only the last layer will be trained on the current task.
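A minimal sketch of this recipe in plain Python. The "pre-trained" layer is a hypothetical fixed weight matrix standing in for a network trained on a large dataset, and the new task is contrived to be exactly solvable from its features so the result is easy to check. Only the freshly initialized last layer is updated; the frozen weights are read but never changed.

```python
import math
import random

# Hypothetical "pre-trained" layer. In practice these weights would come from a
# model trained on a large dataset; here they are just fixed numbers.
FROZEN_W = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Frozen layer used as a feature extractor: read, never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]

def train_new_head(data, learning_rate=0.2, epochs=2000, seed=0):
    """Train only a freshly initialized linear last layer (weights + bias) with
    stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    head = [rng.uniform(-0.1, 0.1) for _ in FROZEN_W]   # new layer, random weights
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            pred = sum(h * f for h, f in zip(head, feats)) + bias
            err = pred - y                   # derivative of 0.5 * (pred - y)**2
            head = [h - learning_rate * err * f for h, f in zip(head, feats)]
            bias -= learning_rate * err
            # FROZEN_W is never touched: that is what "freezing" means.
    return head, bias

# Hypothetical new task, solvable from the frozen features: y = 2*f0 - f1 + 0.5.
inputs = [(0, 0), (1, 0), (0, 1), (1, 1), (2, -1)]
data = []
for x in inputs:
    f0, f1 = extract_features(x)
    data.append((x, 2 * f0 - f1 + 0.5))

head, bias = train_new_head(data)
print([round(h, 3) for h in head], round(bias, 3))
```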

For more blogs/courses on data science, machine learning, artificial intelligence and new technologies do visit us at InsideAIML.

Thanks for reading

#insideaiml#artificial intelligence#machine learning#data science#datavisualization#python courses#deeplearning

Diamonds — Buyers Guide

When you buy a diamond ring, it’s important to consider the quality of its diamonds… especially if it’s a wedding ring, which you will wear for the rest of your life!

Diamond quality ultimately comes down to how well the diamond looks. A high-quality diamond is also a secure long-term purchase.

So whether you are buying a women’s diamond wedding ring or a men’s diamond wedding ring, make the perfect choice with our Diamonds Guide for your Wedding Ring!

The 4 C’s

To work out a diamond’s value and quality, there is a standard way of appraising it using criteria called the 4 C’s: the Clarity, Carat, Cut and Colour of a diamond. Diamond appraisal is a complex task, as the differences between grades are very minor and can only be determined using the relevant tools under strict lighting conditions.

To help simplify diamond appraisal, the 4 C’s are explained in greater detail below.

Diamond Clarity

Diamond clarity relates to the number of imperfections a diamond has. These imperfections are called inclusions, and technically they can be anything from minute cracks to small traces of non-crystallised carbon. Diamonds are natural products and will invariably have inclusions to some degree; however, the fewer the inclusions, the better.

Diamonds with clarity grades such as SI 1/2 technically have inclusions, but these are not visible to the naked eye and only become apparent when the diamond is magnified. These clarities offer a good balance of appearance and value for money.

To grade a diamond’s clarity a gemmologist will use a 10x magnification glass also known as a jeweller’s loupe to zoom in on the diamond and analyse the clarity.

Below are the standardised levels used for expressing a diamond’s clarity:

IF — absolutely free from inclusions, no imperfections visible through a 10x loupe.

VVS 1/2 — very very small inclusions, nearly invisible through a 10x loupe.

VS 1/2 — very small inclusions, barely visible through a 10x loupe.

SI 1/2 — small inclusions visible through a 10x loupe but invisible to the naked eye.

P1 — inclusions immediately evident with a 10x loupe though hard to see with the naked eye.

P2 — large/numerous inclusions visible to the naked eye and affecting brilliance.

P3 — large/numerous inclusions very visible to the naked eye and affecting brilliance.

Diamond Carat

Diamond carat relates to the weight of a diamond. Diamond carat does not relate to the size of a diamond.

One carat weighs precisely 200 milligrams (mg).

Jewellery that comprises more than one diamond will have a total diamond weight. Total diamond weights are not as valuable as the equivalent individual diamond weight, i.e. a ring with five diamonds with a total weight of 1 carat is not as valuable as a ring with one diamond weighing 1 carat.

Big diamonds have bigger prices: as the size of a diamond increases, so do its rarity and value. For example, a 2 carat diamond is around four times as valuable as a 1 carat diamond of equal quality.

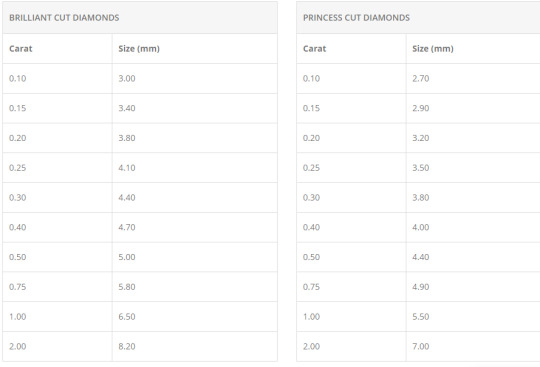

The chart below will help you understand the approximate size of a diamond for its stated weight.

Diamond Cut

Diamond cut refers to two things about a diamond.

Firstly, it refers to the actual shape of a diamond (e.g. round, square, oval). Round diamonds are called brilliant-cut diamonds and square diamonds are called princess-cut diamonds. The chosen shape determines the pattern used and how the diamond is actually cut and formed by a diamond cutter.

Once a diamond has been shaped, the cut then refers to its proportions, symmetry and polish. These are some of the most important factors in a diamond’s overall finish and determine how well it will sparkle.

The proportions of a diamond are very important, as there is an ideal ratio of width to depth for every weight. For example, a 1 carat brilliant-cut diamond could have a width of less than 6mm rather than the ideal 6.5mm and be a deeper stone. Once set in a ring, it would look like a smaller 0.75 carat diamond, yet cost a lot more than one. An ideally proportioned diamond is usually achieved at the expense of losing some of the diamond’s weight, which is why bigger isn’t always better.

A diamond’s symmetry relates to how well aligned its facets are. Alignment is one of the main factors in how much a diamond sparkles, as it determines how light travels through the diamond. Ideally, light entering a diamond should be reflected out of the top towards the person looking at it, not out of the side or downwards, as it would be in a poorly cut diamond.

The polish of a diamond is how smooth or rough the facets are, and the smoother the facets the better. A diamond with excellent polish can be likened to a well polished car, offering amazing depth and shine.

Diamond Colour

The colour of a diamond is graded on a standardised scale set by the Gemological Institute of America (GIA). To determine a diamond’s colour, it is compared side by side with a set of master stones. A gemmologist will perform this comparison under strict lighting conditions.

GIA’s diamond colour grading scale:

Colourless — D, E, F

Near Colourless — G, H, I, J

Faint — K, L, M

Very Light — N, O, P, Q, R

Light — S, T, U, V, W, X, Y, Z

Very few diamonds in the world are truly colourless, and these are the most expensive diamonds, assuming they are of excellent clarity, cut and carat. Generally speaking, the more colour a diamond has, the less valuable it becomes, although there are exceptions for very rare fancy colours.

It is also worth noting that the surrounding environment can affect a diamond’s apparent colour, which is why it’s best to let an expert gemmologist assess a diamond’s colour under strict lighting conditions.

Conflict Diamonds

We only select diamonds that are ethically sourced and free from worldwide conflict.

SafeGuard Jewellery Assessment

For additional peace of mind when purchasing stone-set rings from us, we can also offer a SafeGuard Jewellery Assessment Report. The report is an independent expert assessment carried out by SafeGuard, who are part of the Birmingham Assay Office.

The report is an overall description of the ring, but also includes:

Diamond Quality

Diamond Sizes and Gross Weights

Precious Metal Type

Digital Photograph of Ring

Individually Hologrammed Booklet

The standard timescale for a Jewellery Assessment is approximately 1 week, although an express service is also available.

We cannot offer a refund on Jewellery Assessments; however, we are happy to send your jewellery for appraisal after you have tried it first.

Please note: the SafeGuard Jewellery Assessment is not a valuation service. For independent valuations, we use SafeGuard Valuations.

Phantom Reactor Review - The Most Powerful Compact Speaker in 2019

OK, first off, let me just say: Phantom Reactor… that just might be the most awesome speaker name I’ve ever heard. If you know of a speaker with a better name, let me know.

So imagine you’ve got this speaker in your house and your friends are like “what wireless speaker is that?” …and you’re like “hey, you know, I just got myself a Phantom Reactor… it’s 900 watts, up to 98dB, bass as low as 18Hz… why, what speaker have you got?”

The Devialet Phantom Reactor. What a powerful name it has, and trust me, it is powerful! You WhatGear legends (YT subscribers) who have been subscribed to the channel for a while will know… I’m a big fan of Devialet.

Now we have this new addition to the formidable French high-fidelity company’s Phantom lineup. I can’t tell you how excited I am about this.

You know I was one of the first people on YouTube to put out a video on the Phantom. I was as excited then as I am now. Honestly, guys, the Phantom Reactor is going to open your ears and eyes.

Design

The Devialet Phantom Reactor is roughly a quarter the size of its bigger brother, the Devialet Gold/Silver Phantom. It measures roughly 16 x 17 x 22cm. It is tiny, but it is no lightweight: the Reactor weighs in at over 4kg, and it’s a heavy hitter.

Just to get an idea of its size, in the Devialet Phantom Reactor review video, you can see it side by side with a Bose SoundLink mini and my current favorite BT speaker the Sony SRS X7.

It is virtually identical to the original Phantom, with the white sides, the snowflake grille on the front, and the power button, ports and heat vents at the back. Of course, it has the iconic aluminum woofer covers on the sides, except this time they are 10cm in diameter.

There are a couple of differences compared to the big Phantoms. The Phantom Reactor is manufactured slightly differently: it’s still a single-piece cabinet, but now you’ve got capacitive touch buttons across the top.

The original Phantoms had a mid driver and a separate tweeter, whereas the Phantom Reactor has a single 3cm aluminum midrange/treble driver. This is due to the reduced size of the speaker.

Just so you know, there are 160 patents and 981 parts in this thing. So when it comes to design, the Reactor is certainly unique.

Usability

Let’s go back to the capacitive touch buttons. Across the top we have Bluetooth pairing, volume down, play/pause, volume up, and a sync button for the app. There’s also an LED indicator light to help you with pairing, etc.

As for inputs, you’ve got AirPlay, Bluetooth, Spotify Connect, UPnP, optical and analog.

I’m a little disappointed there isn’t Deezer integration, especially since Deezer is also a French company. Don’t worry, though; I’m sure that’s something they could add later.

To get full Hi-Res audio (24-bit/192kHz) out of the Reactor, you’re going to want to use AirPlay, UPnP, or the optical or analog input from a compatible source.

Now onto the app. The volume dial dominates most of the home screen.

All the options to switch sources are there. Because I use an Android phone, I needed to download the BubbleUPnP app to get the most out of the Phantom wirelessly.

It kind of works in tandem with the Devialet app. It would be nice to see this integrated into the Devialet app… but to be honest, once you set it up, it works pretty seamlessly.

You also have the option to add multiple Phantoms to the one app… and even pair two of them for stereo sound…

So that brings me on nicely to the Quality.

Quality

When it came to testing the sound quality, you know I wasn’t going to test this epic hi-fi compact speaker with some horribly compressed source. So I went out of my way to download some Hi-Res FLAC tracks, especially for you guys.

It is important you understand that what you are hearing in the video was recorded with a Rode VideoMic Pro, then rendered in Premiere Pro, then played back on the device you’re watching on… So the Phantom Reactor really is something you have to go to the store to hear and see for yourself.

I really did try my best to visualize the sound quality in the demo video. I hope you can appreciate that.

I’ve heard a lot of high-end speakers… I actually worked in the Harrods technology department (you know, that dusty old building in Knightsbridge, London) for a long time, so I’ve heard a lot of high-end stuff. Hopefully that means you can trust my opinion more than most.

The great thing about the sound quality is that even at lower volumes you still get that full sound. When it comes to the soundstage, it’s not fair to judge it without listening to a stereo pair.

Just know this. In my opinion a single Phantom Reactor packs more than enough power to fill a large room with powerful bass and amazing clarity and smooth mids.

The best thing about the Reactor’s sound quality is that even at its highest output level there is no harshness in the highs, and nothing is lost in the mids or bass. Nothing you will find on a speaker this small compares to it. I can promise you that.

And I don’t even need to tell you about the build quality, because you already know.

Awesome Features

ADH Technology

Devialet has its own patented amplification tech, ADH (Analog Digital Hybrid), which essentially combines class A and class D amplification.

The advantage is that the amp can be much smaller. You get the sound quality of a class A analog amplifier combined with the power and efficiency of a class D amp.

To break it down simply: you can blast this thing from zero to 100 in a second and suffer no distortion, no saturation and no background noise. Just clean, room-filling audio at whatever level you’re listening at.

It’s amazing that even at low volume you still get a really full, rich sound.

Heart Bass Implosion - HBI

Next up is the Heart Bass Implosion tech. Did you know the cabinet of this speaker has been sealed with 500 kg of pressure?

All that pressure just wants to explode out of this thing with those bass notes, and hopefully you could really see that in the sound test section.

Speaker Active Matching - SAM

With Speaker Active Matching, Devialet says the Phantom will match the exact rhythm and tempo of your music with absolute precision.

Active Cospherical Engine - ACE

The idea is that the Phantom outputs sound from both sides and from the front, so you get a smooth, even waveform coming from the speaker.

It’s sort of like the pattern you would see if you dropped a pebble into still water: that smooth ripple effect.

Woofer Covers

And the last awesome feature has got to be those woofer covers. What other hi-fi speaker out there is as visually pleasing as this?

Summary

I know the sticking point for a lot of people will be the price. All I can say is “You get what you pay for.”

Yes, there are lots of cheaper options out there, but that’s not what you’re looking for if you are watching this. The Devialet Phantom is head and shoulders above anything else of comparable size. That’s why it costs so much.

If I had the space, I would probably opt for the bigger Phantoms. That statement comes from real-life experience: I actually had a Gold Phantom here, and I quite literally couldn’t find anywhere to put it.

The Phantom Reactor, on the other hand, is so compact that it fits perfectly in any room in my apartment.

My dream setup would be two Phantom Reactors on either side of my TV, on the official Devialet stands. That would quite literally be the perfect setup for me.

The Phantom Reactor is best in class when it comes to sound-to-size ratio. Yes, you could get more bass and more mids from the Devialet Gold Phantom, but damn, it takes up a lot of space.

But not as much as a floor-standing speaker.

So should you buy the Phantom Reactor? Yes, if you are after the best-quality compact speaker on the market. If it’s power you’re after, then check out this video; you will like it.

See you in the next one. Don’t be late.

MORE DEVIALET PHANTOM VIDEOS FROM WHATGEAR

Check it out! One year later, I finally get my hands on maybe the FINEST wireless speaker known to man! Watch #WhatGear shootouts: https://youtu.be/x9oYZCs9vGg It’s the WhatGear Devialet Gold Phantom review. The Gold Phantom has 4,500 watts of power. Is it a sound investment?

Devialet have just launched a new addition to the Phantom range: the Devialet Gold Phantom. I caught up with Andy from Devialet to find out what’s up with this new speaker. Check it out!

Check the price: https://amzn.to/2GJ9kG2 This is a GROUNDBREAKING bit of kit from a French company. Engineered to perfection, it really takes wireless speakers to a whole new level. If you get a chance to actually hear one of these, I’m pretty sure you won’t regret it.

DEVIALET ON AMAZON: http://amzn.to/2IzQhf9 If you’re a Sky Q user, you can pick up a £799 Devialet speaker for as little as £249. The question is: is it really worth what they’re asking? Well, I’ve been using the Devialet Soundbox for some time now…

Source: https://www.whatgear.net/technology/devialet-phantom-reactor-review


Phantom Reactor Review - The Most Powerful Compact Speaker in 2019


OK, first off, let me just say: Phantom Reactor... that just might be the most awesome speaker name I've ever heard. If you know of a speaker with a better name, let me know.

So imagine you've got this speaker in your house and your friends are like, “What wireless speaker is that?” And you're like, “Hey, you know, I just got myself a Phantom Reactor... it's 900 watts, up to 98 dB, bass as low as 18 Hz... why, what speaker have you got?”

The Devialet Phantom Reactor. What a powerful name, and trust me, it is powerful! You WhatGear legends (YouTube subscribers) who have been subscribed to the channel for a while will know I'm a big fan of Devialet.

Now we have this new addition to the formidable French hi-fi company's Phantom lineup. I can't tell you how excited I am about this.

You know I was one of the first people on YouTube to put out a video on the Phantom. I was as excited then as I am now. Honestly, guys, the Phantom Reactor is going to open your ears and eyes.


Design

The Devialet Phantom Reactor is roughly a quarter the size of its bigger brother, the Devialet Gold/Silver Phantom, at roughly 16 × 17 × 22 cm. It is tiny, but it is no lightweight: the Reactor weighs in at over 4 kg, and it's a heavy hitter.

To give you an idea of its size, in the Devialet Phantom Reactor review video you can see it side by side with a Bose SoundLink Mini and my current favorite BT speaker, the Sony SRS-X7.

It looks virtually identical to the original Phantom, with the white sides and snowflake grille on the front, and the power button, ports and heat vents at the back. Of course, it has the iconic aluminum woofer covers on the sides, except this time they are 10 cm in diameter.

There are a couple of differences compared with the big Phantoms. The Phantom Reactor is manufactured slightly differently: it's still a single-piece cabinet, but now you've got capacitive touch buttons across the top.

The original Phantoms had a mid driver and a separate tweeter, whereas the Phantom Reactor has a single 3 cm aluminum midrange/treble driver. This is due to the reduction in the speaker's size.

Just so you know, there are 160 patents and 981 parts in this thing, so when it comes to design, the Reactor is certainly unique.

Usability

Let's go back to the capacitive touch buttons. Across the top we've got BT pairing, volume down, play/pause, volume up, and a sync button for the app. There's also an LED indicator light to help you with pairing, etc.

As for input methods, you've got AirPlay, BT, Spotify Connect, UPnP, optical and analog.

I'm a little disappointed there isn't Deezer integration, especially as Deezer is also a French company. Don't worry, though; I'm sure that's something they could add later.
