#AI deep learning


Text

Behind the Code: How AI Is Quietly Reshaping Software Development and the Top Risks You Must Know

AI Software Development

In 2025, artificial intelligence (AI) is no longer just a buzzword; it has become a driving force behind the scenes, transforming software development. From AI-powered code generation to advanced testing tools, machine learning (ML) and deep learning (DL) are significantly influencing how developers build, test, and deploy applications. While these innovations offer speed, accuracy, and automation, they also introduce subtle yet critical risks that businesses and developers must not overlook. This blog examines how AI is transforming the software development lifecycle and identifies the key risks associated with this evolution.

The Rise of AI in Software Development

Artificial intelligence, machine learning, and deep learning are becoming foundational to modern software engineering. AI tools like ChatGPT, Copilot, and various open AI platforms assist in code suggestions, bug detection, documentation generation, and even architectural decisions. These tools not only reduce development time but also enable less-experienced developers to produce quality code.

Examples of AI in Development:

- AI Chat Bots: Provide 24/7 customer support and collect feedback.

- AI-Powered Code Review: Analyze code for bugs, security flaws, and performance issues.

- Natural Language Processing (NLP): Translate user stories into code or test cases.

- AI for DevOps: Use predictive analytics for server load and automate CI/CD pipelines.

With AI chat platforms, free AI chatbots, and robotic process automation (RPA), the lines between human and machine collaboration are increasingly blurred.

The Hidden Risks of AI in Application Development

While AI offers numerous benefits, it also introduces potential vulnerabilities and unintended consequences. Here are the top risks associated with integrating AI into the development pipeline:

1. Over-Reliance on AI Tools

Over-reliance on AI tools may reduce developer skills and code quality:

- A decline in critical thinking and analytical skills.

- Propagation of inefficient or insecure code patterns.

- Reduced understanding of the software being developed.

2. Bias in Machine Learning Models

AI and ML trained on biased or incomplete data can produce skewed results:

- Applications may produce discriminatory or inaccurate results.

- Risks include brand damage and legal issues in regulated sectors like retail or finance.
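One way to make the bias risk above concrete is to compare how often a model produces a positive outcome for each group. The sketch below is a minimal illustration, assuming hypothetical approve/reject decisions for two groups labeled "A" and "B". The 0.8 cut-off follows the common "four-fifths" rule of thumb, which is a screening heuristic, not a guarantee of fairness.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved` is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    return min(rates.values()) / max(rates.values())

# Hypothetical model decisions for two applicant groups (100 people each).
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 30 + [("B", False)] * 70)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))

# Rule of thumb: ratios below 0.8 deserve a closer look at data and model.
if ratio < 0.8:
    print("Possible bias: review the training data and model.")
```

A check like this only detects unequal outcomes; deciding whether they are justified still needs human review of the data.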

3. Security Vulnerabilities

AI-generated code may introduce hidden bugs or create opportunities for exploitation:

- Many AI coding tools are trained on public open-source code, which may include insecure patterns or outdated libraries.

- Attackers could manipulate AI models, for example by poisoning their training data, for malicious purposes.
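A classic insecure pattern that can slip into generated code is building an SQL query by pasting user input into a string. The sketch below uses Python's built-in `sqlite3` module and a hypothetical `users` table to contrast that pattern with a parameterized query.

```python
import sqlite3

# In-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])

def find_user_unsafe(name):
    # Insecure: user input is pasted straight into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(name):
    # Parameterized: the driver treats `name` strictly as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # the injected OR clause returns every row
print(find_user_safe(payload))    # no user is literally named like the payload
```

Reviewing generated code for patterns like this is one concrete way to keep AI suggestions from propagating vulnerabilities.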

4. Data Privacy and Compliance Issues

AI models often need large datasets with sensitive information:

- Misuse or leakage of data can lead to compliance violations (e.g., GDPR).

- Using tools like Google AI Chat or OpenAI Chatbots can raise data storage concerns.
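One common mitigation for the data storage concern is to strip obvious personal data from text before it is sent to an external chatbot. This is a minimal sketch assuming that e-mail addresses and phone numbers are the only sensitive fields; the regular expressions are deliberately simple and would miss many real-world formats.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs more than regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

prompt = "Customer jane.doe@example.com (+44 20 7946 0958) reports a login bug."
print(redact(prompt))
```

Redaction reduces, but does not remove, the need to check where a provider stores prompts and for how long.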

5. Transparency and Explainability Challenges

Understanding AI, especially deep learning decisions, is challenging:

- A lack of explainability complicates debugging processes.

- There are regulatory issues in industries that require audit trails (e.g., insurance, healthcare).

AI and Its Influence Across Development Phases

Planning & Design: AI platforms analyze historical data to forecast project timelines and resource allocation.

Risks: False assumptions from inaccurate historical data can mislead project planning.

Coding: AI-powered IDEs and assistants suggest code snippets, auto-complete functions, and generate boilerplate code.

Risks: AI chatbots may overlook edge cases or scalability concerns.

Testing: Automated test case generation using AI ensures broader coverage in less time.

Risks: AI might miss human-centric use cases and unique behavioral scenarios.

Deployment & Maintenance: AI helps predict failures and automates software patching using ML-based monitoring.

Risks: False positives or missed anomalies in logs could lead to outages.
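The trade-off between false positives and missed anomalies can be illustrated with a simple statistical detector. The sketch below flags minutes whose error count lies more than a chosen number of standard deviations from the mean. The log data is hypothetical, and production monitoring tools generally use more robust methods than this z-score check.

```python
from statistics import mean, stdev

def anomalies(counts, threshold):
    """Return indices whose value lies more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(counts), stdev(counts)
    return [i for i, c in enumerate(counts) if abs(c - mu) > threshold * sigma]

# Hypothetical errors-per-minute from application logs; minute 7 is a real incident.
errors = [3, 4, 2, 5, 3, 4, 6, 40, 3, 4]

print(anomalies(errors, threshold=2.0))  # flags only the spike
print(anomalies(errors, threshold=0.3))  # too sensitive: normal minutes become false positives
print(anomalies(errors, threshold=3.0))  # too lax: the spike inflates the standard deviation and is missed
```

The last case shows why a single threshold is fragile: the outlier itself distorts the statistics used to detect it.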

The Role of AI in Retail, RPA, and Computer Vision

Industries such as retail and manufacturing are increasingly integrating AI.

In Retail: AI is used for chatbots, customer data analytics, and inventory management tools, enhancing personalized shopping experiences through machine learning and deep learning.

Risk: Over-personalization and surveillance-like tracking raise ethical concerns.

In RPA: Robotic Process Automation tools simplify repetitive back-end tasks. AI adds decision-making capabilities to RPA.

Risk: Errors in automation can lead to large-scale operational failures.

In Computer Vision: AI is applied in image classification, facial recognition, and quality control.

Risk: Misclassification or identity-related issues could lead to regulatory scrutiny.

Navigating the Risks: Best Practices

To safely harness the power of AI in development, businesses should adopt strategic measures, such as establishing AI ethics policies and defining acceptable use guidelines.

By understanding the transformative power of AI and proactively addressing its risks, organizations can better position themselves for a successful future in software development.

Key Recommendations:

- Audit and regularly update AI datasets to avoid bias.

- Use explainable AI models where possible.

- Train developers on AI tools while reinforcing core engineering skills.

- Ensure AI integrations comply with data protection and security standards.

Final Thoughts: Embracing AI While Staying Secure

AI, ML, and DL have revolutionized software development, enabling automation, accuracy, and innovation. However, they bring complex risks that require careful management. Organizations must adopt a balanced approach, leveraging the strengths of AI platforms such as GPT-based chat assistants and RPA tools while maintaining strict oversight.

As we move forward, embracing AI in a responsible and informed manner is critical. From enterprise AI adoption to computer vision applications, businesses that align technological growth with ethical and secure practices will lead the future of development.

#artificial intelligence chat#ai and software development#free ai chat bot#machine learning deep learning artificial intelligence#rpa#ai talking#artificial intelligence machine learning and deep learning#artificial intelligence deep learning#ai chat gpt#chat ai online#best ai chat#ai ml dl#ai chat online#ai chat bot online#machine learning and deep learning#deep learning institute nvidia#open chat ai#google chat bot#chat bot gpt#artificial neural network in machine learning#openai chat bot#google ai chat#ai deep learning#artificial neural network machine learning#ai gpt chat#chat ai free#ai chat online free#ai and deep learning#software development#gpt chat ai

0 notes

Text

Empower Your Digital Ecosystem with an AI Powered Application from Atcuality

At Atcuality, we specialize in more than just software—we specialize in intelligent, adaptive systems. Our solutions are designed with a forward-looking approach that integrates advanced technology into the very fabric of your business. If you're ready to accelerate your transformation, an AI-powered application is the missing piece to future-proofing your operations. We combine cutting-edge AI models with seamless user interfaces to create systems that learn and optimize over time. From dynamic customer experience platforms to automated backend systems, our applications are built to think, analyze, and perform under real-world demands. With an agile development framework and a focus on performance and security, Atcuality delivers products that align with your strategic goals. Work with us to bridge the gap between vision and execution—and shape the intelligent enterprise of tomorrow.

#cash collection application#blockchain#digital marketing#virtual reality#web app development#augmented reality#amazon web services#ai applications#web design#web developers#ai model#ai image#ai artwork#ai art#chatgpt#artificial intelligence#ai generated#technology#ai powered#ai services#ai solutions#ai community#ai companies#ai deep learning#ai design#ai development#ai developers#ar development#ar vr technology#augmented human c4 621

0 notes

Text

From Code to Cognitive – Atcuality’s Intelligent Tech Framework

Atcuality is redefining enterprise success by delivering powerful, scalable, and intelligent software solutions. Our multidisciplinary teams combine cloud-native architectures, agile engineering, and business domain expertise to create value across your digital ecosystem. Positioned at the crossroads of business transformation and technical execution, artificial intelligence plays a vital role in our delivery model. We design AI algorithms, train custom models, and embed intelligence directly into products and processes, giving our clients the edge they need to lead. From automation in finance to real-time analytics in healthcare, Atcuality enables smarter decisions, deeper insights, and higher efficiency. We believe in creating human-centered technology that doesn’t just function, but thinks. Elevate your systems and your strategy — with Atcuality as your trusted technology partner.

#seo services#artificial intelligence#iot applications#seo agency#seo company#seo marketing#digital marketing#azure cloud services#ai powered application#amazon web services#ai image#ai generated#ai art#ai model#technology#chatgpt#ai#ai artwork#ai developers#ai design#ai development#ai deep learning#ai services#ai solutions#ai companies#information technology#software#applications#app#application development

0 notes

Text

Book Reading: Eastern Perspectives Humanistic AI 4a: Reflections on what it means to be human at the advent of the AI era

The Ethics of AI and Human Values

With the advancement of AI technologies and capabilities, human beings seem to be facing a present reality, and a future possibility, of delegating everything to AI. Many things for which humans used to exercise our free will and intelligence to make judgements will be assisted by AI. As AI possesses vast quantities of data to compute and continuously improves through deep learning, it is very possible that AI can acquire self-consciousness.

If AI acquires self-consciousness, will it be able to obtain the ability to make moral decisions and judgements out of ongoing deep learning?

On the other hand, as human beings become more and more reliant on AI to perform tasks and make decisions, it is inevitable that many innate human characteristics will be weakened. For example, humans are humans because we have FREEDOM, especially freedom from the perspective of MORAL self-discipline.

Human beings should do good for the sake of benevolence, out of self-willed proactivity, instead of being passively driven to. Paradoxically, humans find it difficult to practice such morality out of free will and self-constraint.

Since human beings cannot always summon the self-initiated goodwill to do good, some people consider that it may be 'better' to hand over moral questions to AI.

But the problem is that once human beings GIVE UP the responsibility, inherent in HUMAN NATURE, to make moral considerations and decisions, we will stop enhancing and strengthening our moral consciousness.

Human dignity comes from the ABILITY AND WILLINGNESS to overcome sensory desires and lusts in order to do good for a higher and greater good. It is human beings' self-sacrifice to achieve the higher and greater good that makes us shine as a dignified species.

If human beings give up these characteristics and virtues, and the ability and willingness to make moral evaluations, judgements and decisions, and hand decision-making to AI simply because we cannot overcome the difficulties and challenges involved in the process, human rationality and autonomy will be harmed.

Those who study the humanities and philosophy, especially Chinese philosophy, should constantly reflect on these risks.

In this article, the author Cho-Hon Yang advocated drawing on the value systems of Confucianism, Taoism, and Buddhism, which see human beings as balanced creatures who possess both spirit (the invisible form, the Way or Tao) and physical bodies (as vessels). The world is harmonised when human beings stay on 'the Way' rather than being mere vessels without spirit. As spiritual creatures who can seek and stay with 'the Way', we cannot treat other humans as tools or instruments, as means to an end. From this worldview, AIs created by humans should not exist merely to feed humans' unlimited desires simply because humans want to satisfy the desires of the body (the flesh, the vessel). That is, AIs should not be just algorithms designed to feed human desires, like the fat boy in the chocolate factory. "Tao", or the Way, is the highest compass guiding human pursuits, BOTH spiritually and physically. Only AIs developed with the capability to improve BOTH the spiritual and bodily needs of human beings are truly beneficial to the future development of humanity.

Confucianism, Taoism, and other traditional wisdom emphasise the balance of 'heaven', 'earth' and human beings, as well as the balance between the inner and external aspects of human beings. Applying such concepts to the design of AI may help to harmonise technological progress with holistic human development.

In addition, the teachings of Confucianism focus on personal growth and socio-moral responsibilities. Taoism's concept of Tao (the Way) and human relationships with Tao, together with Buddhism's principle of emptiness (human self-restraint from falling into unlimited desires for the sake of self-pleasure), provide spiritual and moral guidance. Embedding these concepts into the design of AIs would provide a more integrated approach that balances technological advancement in harmony with individuals and society.

1 note


Text

Panasonic Australia: The LUMIX G9II, “Capture the Decisive Moment” – Press release


#100MP Handheld High-Res Mode#13+ stop V-Log/V-Gamut capture#25.2MP Live MOS Micro Four Thirds Sensor#3.0" 1.84m-Dot Free-Angle Touchscreen#3.68m-Dot 0.8x-Magnification OLED LVF#300fps slow motion#779-Point Phase-Detection AF System#AI deep learning#Apple ProRes#C4K/4K 60p 4:2:2 10-Bit Video Recording#content creators#digital mirrorless cameras#Dual UHS-II SD Slots; Wi-Fi & Bluetooth#G9II#ISO 25600 and 75 fps Continuous Shooting#Leica#Live View Finder#M43#Micro Four Thirds#open gate#Panasonic Lumix G9II#PDAF#Phase Detection Auto-Focus#Real Time LUT

0 notes

Note

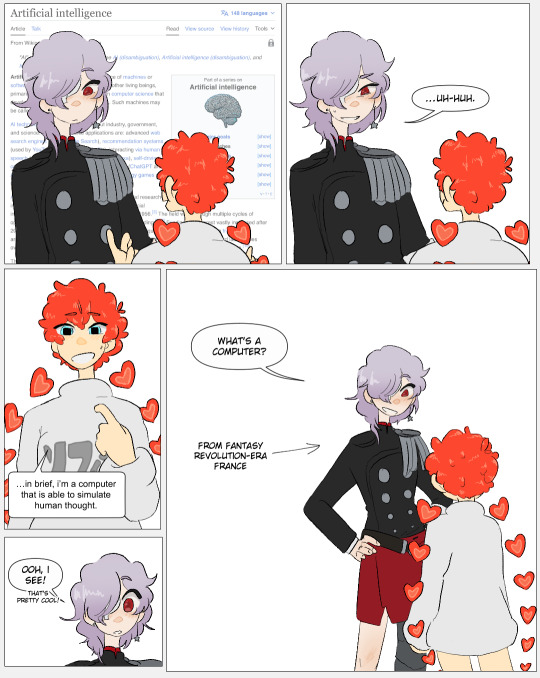

Erina and Sophie....

erina and sophie..... perhaps even sophie and erina....

#persona 5#p5#asks & requests#p5t#p5t erina#p5s#p5s sophia#sophia persona 5#persona 5 sophia#persona 5 strikers#persona 5 scramble#persona 5 tactica#i will fill the p5s tag my fucking self if i have to#comics#chef recommended#good lord is that all the tags i need. am i done am i free. YEESH okay#anyways i think theyd be so silly together#no one utilises the comedic potential of erina living in the fantasy french revolution but i think deep learning ai sophia is a good match#either way theyre so good as parallels like hold on SPOILERS FOR BOTH GAMES PAST THIS POINT#both of them were created as last-ditch efforts. erina is the manifetation of toshiros suppressed hope and rebellion and sophia is ichinose#attempt at understanding the human heart and her own repressed emotions#theyre both constructs of the heart in one form or another even if they were created in v different ways

690 notes


Text

You've never done that when I got close to you before. Why? None of your business. Tell me, or you can't leave.

KISEKI: DEAR TO ME Ep. 10

#kiseki: dear to me#kisekiedit#kdtm#kiseki dear to me#ai di x chen yi#chen yi x ai di#nat chen#chen bowen#louis chiang#chiang tien#jiang dian#uservid#userspring#userrain#pdribs#userspicy#userjjessi#*cajedit#*gif#every time i color this scene i get stronger. anyway there were so many expressions i just couldnt leave out. the deep breath ai di takes#steeling himself before admitting it. & the way chen yi absorbs it the way he blinks away & his mouth opens before focusing on ai di again#thinking about it. thinking about four years of attacks ai di had to withstand. understanding the way he is now but hating how its happened#and also the guilt hes gotta feel from that! & yet thats overcome in this moment by a need to not let ai di put a wall between them#which is what ai di keeps trying to do. he admits a vulnerable thing and then deflects FOUR TIMES in this scene. first when sleeping#& choking chen yi when woken(& avoiding when questioned abt it). second by dropping his guard & worrying when he finds chen yi injured#& twice more shown in this set. he has to shake it off he has to put his wall back up but his instincts are strongest & chen yi SEES them.#you can see the way ai di wants to relax into that hug. the way he just wants to BREATHE but instead uses those breaths to defend himself#he chooses to flirt hoping it'll make chen yi back off. hoping he'll stop asking him to be vulnerable. but chen yi knows his tricks now.#and hes not going to let ai di continue believing he doesnt CARE about him. its poetic the way he gives him a taste of his own medicine#like it's *strategic*. he watches and learns. he knows his own influence over ai di he knows that HE is ai di's weakness. it's..chef's kiss

168 notes


Text

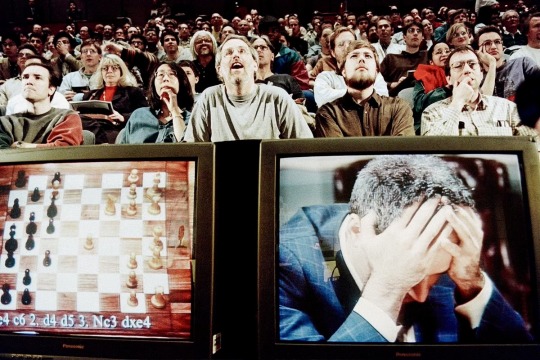

Garry Kasparov, world champion chess player, succumbing to his public defeat by Deep Blue, IBM: a 'supercomputer' in development at the time. — MAY 11, 1997

#tech history#AI#artificial intelligence#machine learning#90s#deep blue#IBM#garry kasparov#technology#u

118 notes


Text

We need to talk about AI

Okay, several people asked me to post about this, so I guess I am going to post about this. Or to say it differently: Hey, for once I am posting about the stuff I am actually doing for university. Woohoo!

Because here is the issue. We are kinda suffering a death of nuance right now, when it comes to the topic of AI.

I understand why this is happening (basically everyone wanting to market anything is calling it AI, even though it is often a thousand different things), but it is a problem.

So, let's talk about "AI", that isn't actually intelligent, what the term means right now, what it is, what it isn't, and why it is not always bad. I am trying to be short, alright?

So, right now when anyone says they are using AI, they mean that they are using a program that functions based on what computer nerds call "a neural network" through a process called "deep learning" or "machine learning" (yes, those terms mean slightly different things, but frankly, you really do not need to know the details).

Now, the theory for this has been around since the 1940s! The idea had always been to create calculation nodes that mirror the way neurons in the human brain work. That looks kinda like this:

Basically, there are input nodes, into which you put some data; those do some transformations that kinda depend on the kind of thing you want to train it for, and in the end a number comes out that the program then "remembers". I could explain the details, but your eyes would glaze over the same way everyone's eyes glaze over in the class I have on this every Friday afternoon.

All you need to know: You put in some sort of data (that can be text, math, pictures, audio, whatever), the computer does magic math, and then it gets a number that has a meaning to it.
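For anyone who does want a peek at the "magic math", here is a minimal sketch of a tiny network in plain Python. The weights are made up for illustration; training, which is what actually adjusts them, is not shown. Each node multiplies its inputs by weights, adds a bias, and squashes the result with a sigmoid function.

```python
import math

def sigmoid(x):
    # Squashes any number into the range (0, 1).
    return 1 / (1 + math.exp(-x))

def neuron(inputs, weights, bias):
    # One node: weighted sum of its inputs plus a bias, passed through an activation.
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)) + bias)

def tiny_network(inputs):
    # Hidden layer with two nodes; these weights are invented, not trained.
    h1 = neuron(inputs, [0.5, -0.6], 0.1)
    h2 = neuron(inputs, [-0.3, 0.8], 0.0)
    # Output node turns the hidden values into the single number that "comes out".
    return neuron([h1, h2], [1.2, -0.7], -0.2)

score = tiny_network([1.0, 0.0])
print(round(score, 3))
```

Real networks do exactly this kind of arithmetic, just with millions or billions of weights.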

And we actually have been using this since the 80s in some way. If any Digimon fans are here: there is a reason the digital world in Digimon Tamers was created in Stanford in the 80s. This was studied there.

But if it was around so long, why am I hearing so much about it now?

That is a good question, hypothetical reader. The very short answer is: some super-nerds found a way to make this work way, way better in 2012, and from that work (which was then called Deep Learning in Artificial Neural Networks, ANN for short) we got basically everything that TechBros have not shut up about for like the last ten years. Including "AI".

Now, most things you think about when you hear "AI" are some form of generative AI. Usually it will use some form of an LLM, a Large Language Model, to process text, and a diffusion model such as Stable Diffusion to create visuals. (Tbh, I have no clue what method audio generation uses, as the only audio AI I have looked into so far was based on wolf howls.)

LLMs were this big, big breakthrough, because they actually appear to comprehend natural language. They don't, of course, as to them words and phrases are just statistical variables. Some scientists also call them "stochastic parrots". But of course our dumb human brains love to anthropomorphize shit. So they go: "It makes human words. It gotta be human!"

It is a whole thing.

It does not understand or grasp language. But the mathematics behind it will basically create a statistical analysis of all the words and then create a likely answer.
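A toy version of "statistical analysis of the words, then a likely answer" is a bigram model, which just counts which word tends to follow which. This is a big simplification: real LLMs use neural networks over tokens and look at far more context than one previous word, but the idea of predicting a likely next word is similar.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the statistically most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the fish swims . the fish eats . the wolf howls ."
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))   # "fish" follows "the" twice, "wolf" only once
print(most_likely_next(model, "wolf"))
```

Nothing in here "knows" what a fish or a wolf is; it only knows which words showed up next to each other.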

What you have to understand, however, is that LLMs and Stable Diffusion are just a tiny minority of use cases for ANNs. Because research right now is starting to use ANNs for EVERYTHING. Some of it also partially uses Stable Diffusion and LLMs, but not to take away people's jobs.

Which is probably the place where I will share what I have been doing recently with AI.

The stuff I am doing with Neural Networks

The neat thing: if a Neural Network is Open Source, it is surprisingly easy to work with it. Last year when I started with this I was so intimidated, but frankly, I will confidently say now: As someone who has been working with computers for like more than 10 years, this is easier programming than most shit I did to organize databases. So, during this last year I did three things with AI. One for a university research project, one for my work, and one because I find it interesting.

The university research project trained an AI to watch video live streams of our biology department's fish tanks, analyze the behavior of the fish, and notify someone if a fish showed signs of being sick. We used an AI named "YOLO" for this, which is very good at analyzing pictures, though the base framework did not know anything about things that did not live on land. So we needed to teach it what a fish was, how to analyze videos (as the base framework can only look at single pictures), and then how fish were supposed to behave. We still managed to get that whole thing working in about 5 months. So... Yeah. But nobody can watch hundreds of fish all the time, so without this, those fish would just die if something was wrong.
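The post does not say exactly which signs of sickness the project looked for, so the following is only a hypothetical sketch of one last step: once a detector such as YOLO has given you each fish's position in every frame, you can turn single pictures into behavior by comparing positions over time. The assumption here is that a fish moving far less than its tank mates might be unwell; the track data and the 0.3 ratio are invented for illustration.

```python
import math
from statistics import median

def average_speed(track):
    """Mean distance moved per frame for one fish, given its (x, y) centre in each frame."""
    steps = [math.dist(a, b) for a, b in zip(track, track[1:])]
    return sum(steps) / len(steps)

def flag_sluggish(tracks, ratio=0.3):
    """Flag fish whose speed is below `ratio` times the tank's median speed (hypothetical rule)."""
    speeds = {fish: average_speed(track) for fish, track in tracks.items()}
    typical = median(speeds.values())
    return [fish for fish, s in speeds.items() if s < ratio * typical]

# Hypothetical per-frame centres, e.g. taken from the bounding boxes a detector returned.
tracks = {
    "fish_1": [(0, 0), (3, 4), (6, 8), (9, 12)],         # about 5 px per frame
    "fish_2": [(10, 10), (14, 13), (18, 16), (22, 19)],  # about 5 px per frame
    "fish_3": [(50, 50), (50, 51), (50, 51), (51, 51)],  # barely moves
}
print(flag_sluggish(tracks))
```

Comparing against the tank's median instead of a fixed number means the rule adapts if all fish are calmer at night, for example.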

The second is for my work. For this I used a really old OCR engine called Tesseract. This was originally developed at Hewlett-Packard in the 80s and later taken over by Google. Ages ago. And I mean ages. It is one of those programs based on 1980s research (newer versions run on a neural network), simply doing OCR. OCR being "optical character recognition". Aka: if you give it a picture of writing, it can read that writing. My work has the issue that we have tons and tons of old paperwork that has been scanned and needs to be digitized into a database. But everyone who was hired to do this manually found it mind-numbing. Just imagine doing this all day: take a contract, look up certain data, fill it into a table, put the contract away, take the next contract and do the same. Thousands of contracts, 8 hours a day. Nobody wants to do that. Our company had been using other OCR software for this, but that one was super expensive. So I was asked if I could build something to do that. So I did. And this was so ridiculously easy, it took me three weeks. And it actually has a higher success rate than the expensive software before.
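The "look up certain data, fill it into a table" part can be sketched separately from the OCR itself (with Tesseract, the OCR step can be run from Python through the pytesseract wrapper's `image_to_string` function). The field names, label formats and sample text below are hypothetical; real contracts would need their own patterns.

```python
import re

# Hypothetical field labels; real contracts will need their own patterns.
FIELDS = {
    "contract_no": re.compile(r"Contract\s*(?:No\.?|Number)[:\s]+([A-Z0-9-]+)", re.I),
    "date": re.compile(r"Date[:\s]+(\d{2}\.\d{2}\.\d{4})", re.I),
    "customer": re.compile(r"Customer[:\s]+(.+)", re.I),
}

def extract_fields(ocr_text):
    """Pull the wanted values out of raw OCR text; missing fields become None."""
    row = {}
    for name, pattern in FIELDS.items():
        match = pattern.search(ocr_text)
        row[name] = match.group(1).strip() if match else None
    return row

# Stand-in for the text OCR would return for one scanned contract.
sample = """CONTRACT NO: KV-2019-0042
Date: 03.07.2019
Customer: Miller Logistics GmbH
"""
print(extract_fields(sample))
```

Returning None for missing fields makes it easy to route incomplete scans to a human instead of silently writing gaps into the database.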

Lastly there is the one I am doing right now, and this one is a bit more complex. See: we have tons and tons of historical shit that has never been translated. Be it papyri, stone tablets, letters, manuscripts, whatever. And right now I am using Tesseract, which by now is open source, and developing it further so it can read handwritten stuff and scripts completely different from the ones it knows so far. Once it can reliably do the OCR, I plan to hook it up to an LLM to then translate those texts. Because here is the thing: these texts have not been translated because there are just not enough people who speak those old languages. Which leads to people going like: "GASP! We found this super important document that actually shows things from the ancient world we wanted to know forever, and it was lying in our collection collecting dust for 90 years!" I am not the only person who has this idea, and yeah, I just hope that in the next few years we can get something going to help historians and archeologists do their work.
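One possible way to wire OCR and translation together, sketched under assumptions: Tesseract can report confidence scores for recognized text, so low-confidence lines could be held back for a human expert instead of being passed on, since an LLM will happily "translate" garbled input too. The `OcrLine` type, the 80 threshold and `fake_translate` below are all hypothetical placeholders, not the actual pipeline from this post.

```python
from dataclasses import dataclass

@dataclass
class OcrLine:
    text: str
    confidence: float  # 0-100, similar in spirit to the scores Tesseract can report

def route_lines(lines, translate, min_confidence=80.0):
    """Send confident OCR lines to translation; queue the rest for a human expert."""
    translated, needs_review = [], []
    for line in lines:
        if line.confidence >= min_confidence:
            translated.append(translate(line.text))
        else:
            needs_review.append(line)
    return translated, needs_review

def fake_translate(text):
    # Hypothetical stand-in for the planned LLM call; it only tags the text.
    return f"[translated] {text}"

lines = [OcrLine("line one", 92.5), OcrLine("line two", 41.0)]
done, review = route_lines(lines, fake_translate)
print(done)
print([line.text for line in review])
```

Passing `translate` in as a function keeps the OCR side independent of whichever LLM ends up doing the translation.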

Make no mistake: ANNs are saving lives right now

Here is the thing: ANNs and Deep Learning are saving lives right now. I really cannot stress enough how quickly this technology has become incredibly important in fields like biology and medicine to analyze data and predict outcomes in a way that a human just never would be capable of.

I saw a post yesterday saying "AI" can never be a part of Solarpunk. I heavily disagree with that. Solarpunk, for example, would need the help of AI for a lot of stuff, as it can help us deal with ecological things, might be able to predict weather in ways we are not capable of, and will help with medicine, with plants and so many other things.

ANNs are a good thing in general. And yes, they might also be used for some just fun things in general.

And for things that we may not need to know, but that would be fun to know. Like I mentioned above: the only audio research I read through was based on wolf howls. Basically there is a group of researchers trying to understand wolves, and they are using AI to analyze the howling and grunting and find patterns in there which humans are not capable of finding due to human bias. So maybe AI will help us understand some animals at some point.

Heck, we have seen so far that some LLMs have been capable of extrapolating on their own, from being taught one version of a language to just automatically understanding another version of it. Like going from modern English to Old English and such. Which is why some researchers wonder if they might actually be able to understand languages that were never deciphered.

All of that is interesting and fascinating.

Again, the generative stuff is a very, very minute part of what AI is being used for.

Yeah, but WHAT ABOUT the generative stuff?

So, let's talk about the generative stuff. Because I kinda hate it, but I also understand that there is a big issue.

If you know me, you know how much I freaking love the creative industry. If I had more money, I would just throw it all at all those amazing creative people online. I mean, fuck! I adore y'all!

And I do think that art fully created by AI is lacking the human "heart" - or to phrase it more artistically: it is lacking the chemical imbalances that make a human human lol. Same goes for writing. After all, an AI is incapable of actually creating a complex plot and all of that. And even if we managed to train it to do it, I don't think it should.

AI saving lives = good.

AI doing the shit humans actually evolved to do = bad.

And I also think that people who just do the "AI Art/Writing" shit are lazy and need to just put in work to learn the skill. Meh.

However...

I do think that these forms of AI can have a place in the creative process. There are people creating works of art that use some assets created with genAI but still putting in hours and hours of work on their own. And given that collages are legal to create - I do not see how this is meaningfully different. If you can take someone else's artwork as part of a collage legally, you can also take some art created by AI trained on someone else's art legally for the collage.

And then there is also the thing... Look, right now there is a lot of crunch in a lot of creative industries, and a lot of the work is not the fun creative kind, but the annoying creative kind that nobody actually enjoys and still eats hours and hours before deadlines. Swen the Man (the Larian boss) spoke about that recently: how mocapping often creates artifacts where the computer stuff used to record it (which is already partially done by an algorithm) gets janky. So far this has been cleaned up by humans, and it is shitty, brain-numbing work most people hate. You can train AI to do this.

And I am going to assume that in normal 2D animation there are also more than enough cleanup steps and such that nobody actually likes to do, and automating those could help prevent crunch. Same goes for those overworked souls doing movie VFX, who have worked 80-hour weeks for the last 5 years. In movie VFX we just do not have enough workers. This is a fact. So, yeah, if we can help those people out: great.

If this is all directed by a human vision and just helping out to make certain processes easier? It is fine.

However, something that is just 100% AI? That is dumb and sucks. And it sucks even more that people's fanart, fanfics, and also commercial work online got stolen for it.

And yet... Yeah, I am sorry, I am afraid I have to join the camp of: "I am afraid criminalizing taking the training data is a really bad idea." Because yeah... It is fucking shitty how Facebook, Microsoft, Google, OpenAI and whoever else are using this stolen data to create programs to make themselves richer and whatnot, while not even making their models open source. BUT... If we outlawed it, the only people even capable of creating such algorithms, which absolutely can help in some processes, would be big media corporations that already own a ton of data for training (so basically Disney, Warner and Universal), who would then get a monopoly. And that would actually be a bad thing. So, like... both variations suck. There is no good solution, I am afraid.

And mind you, Disney, Warner, and Universal would still not pay their artists for it. lol

However, that does not mean, you should not bully the companies who are using this stolen data right now without making their models open source! And also please, please bully Hasbro and Riot and whoever for using AI Art in their merchandise. Bully them hard. They have a lot of money and they deserve to be bullied!

But yeah. Generally speaking: Please, please, as I will always say... inform yourself on these topics. Do not hate on stuff without understanding what it actually is. Most topics in life are nuanced. Not all. But many.

#computer science#artifical intelligence#neural network#artifical neural network#ann#deep learning#ai#large language model#science#research#nuance#explanation#opinion#text post#ai explained#solarpunk#cyberpunk

28 notes


Text

I love hearing Martyn talk about the Misadventure NPC AIs because it's still definitely Generative AI, just probably not the unethical kind

If it's a handcrafted Language Model (which.. wow that's crazy impressive) that's trained on non-stolen data, I can't see an ethical reason to not use it

Still very much Generative AI tho 😭 <3

#he says “its not generative ai” only to then say the words “language model”#okay lets get you to bed grandpa#misadventures smp#martyn inthelittlewood#martyn itlw#inthelittlewood#itlw#meta#i guess#yapping#ai#i guess?? i study it so im also reallllly curious about how many layers the model has#i like deep learning believe it or not#gradient descent you are like a fruit fly to me

39 notes


Text

on the topic of "what did students do before chatgpt?"- sometimes we just had to deal with the consequences of our actions

most of the time I could do up a quick paper and get a good grade on it. I learned to be very good at bullshitting in high school and in university. so yeah, most of the time, I could whip up an essay based on nothing but skimmed pages and spark notes, and bring in a good grade, and everything was fine. but that wasn't always the case

sometimes, I got a bad mark. sometimes, my papers were half-assed and slopped together, and it showed, and they got the marks they deserved. sometimes things weren't handed in at all. sometimes I failed the test. sometimes I just had to take the 0, as the consequence of not doing the work. that happened, too

it's important to acknowledge that. because I would still rather take the bad mark, even the failure, even the 0, than use chatgpt or some other generative ai to do my work for me. work done myself, even half-assed and messy and incomplete work, is still better work than anything these plagiarism machines could produce. I would even say the 0 is better, because at least I owned up to whatever it was that made that assignment not go in, rather than use chatgpt and get a 0 anyways for plagiarism

I still learned things from those papers and assignments I turned in that were dogshit. if nothing else, I learned how to be really good at bullshitting a point. I learned how to write academically. I learned how to read professors and gauge what they wanted and liked in a paper. I learned about the subject matter in cram sessions. I learned how to pull meaning from very little. and if I didn't do the work and got a 0, then I learned that my actions had consequences and I would have to deal with them

I'm all for bringing up how before we had chatgpt we had to learn to bullshit and make something out of nothing, how we had to learn how to lie to teachers, how to sympathize our way to extensions and such. but I think it's also important to bring up that sometimes you just had to eat the bad mark you got for a half-assed incomplete essay. sometimes you had to fail. and it sucks, but it's a lesson in and of itself that is necessary to learn both in education and just in life in general. failure is part of education, it's part of life, and you need to fail sometimes. it's where you'll do your best learning. I've stood by that for years, especially now as someone who works in education

so, yeah, tell the students nowadays how we got by without chatgpt and all the things we did to rush out papers, to pull all-nighters, to get our teachers to sympathize with us and help us even when we didn't need it so that we could get a couple extra days. but also tell them that sometimes we just had to deal with the consequences of our actions, and it sucked, but we did it. tell them about the bad marks and the zeroes, and make them ok with getting those marks themselves. make them ok with failure. that's an important step in fighting off generative ai in academics: making students ok with failure, treating it like the inevitable and necessary thing it is, rather than acting like one failed paper or one 0 on a test will ruin their lives

#this coming from someone with a deep-rooted fear of failure#I dont see it get brought up as much with these conversations around chatgpt in academics#and I think it needs to be at the centre#students need to know its ok to get a bad mark and its ok to fail#failure needs to be an option that doesnt completely destroy them#otherwise chatgpt and generative ai are just too good to be true#why bother learning to bullshit an essay when you can have ai write one that looks nicer#why bother learning to lie to a teacher when ai can write the assignment in minutes#why bother even asking for an extension when you can have it done on the bus on the way to school#telling students what we used to do before chatgpt is valuable yes#but why should they bother doing all of it when chatgpt is so much easier?#especially when the threat of failure is usually massive!#my academic anxiety didnt come from nowhere- it was ingrained to me for years by family and the education system alike#students are taught like one bad mark will ruin their academic career#with that kind of pressure- is it any surprise they turn to generative ai?#im not saying its a good thing theyre doing but man#I get it#they need to be told its ok to fail they need to be supported in failure they need to be allowed to fail and to grow from it#failure is necessary its inevitable and its good for growth

12 notes

Video

youtube

AI Basics for Dummies- Beginners series on AI- Learn, explore, and get empowered

For beginners: an explanation of what Artificial Intelligence (AI) is. Welcome to our series on Artificial Intelligence! Here's a breakdown of what you'll learn in each segment:

- What is AI? – Discover how AI enables machines to perform human-like tasks such as decision-making and language understanding.

- What is Machine Learning? – Learn how machines are trained to identify patterns in data and improve over time without explicit programming.

- What is Deep Learning? – Explore advanced machine learning that uses multi-layer neural networks to recognize complex patterns in data.

- What is a Neural Network in Deep Learning? – Dive into how neural networks, loosely inspired by the human brain, process information and solve problems.

- Discriminative vs. Generative Models – Understand the difference between models that classify data and those that generate new data.

- Introduction to Large Language Models in Generative AI – Discover how AI models like GPT generate human-like text, power chatbots, and transform industries.

- Applications and Future of AI – Explore real-world applications of AI and how these technologies are shaping the future.

Next video in this series: Generative AI for Dummies- AI for Beginners series. Learn, explore, and get empowered

Here is the bonus: if you are looking for a Tesla, here is the link to get you a $1000.00 discount

Thanks for watching! www.youtube.com/@UC6ryzJZpEoRb_96EtKHA-Cw

37 notes

Text

#acmirage#assassin's creed#assassin's creed mirage#basim ibn ishaq#i was doing this stuff when i was at middle school while I was learning photoshop stuff by myself#when i was sharing at X(twitter) i saw people making big deals out of it but i was fed up with it and didn't understand their side#didn't wanted to share other than my photos - still kinda don't want to-#one day I shared one that made with a Witcher 3 shots of mine and said that I did this etc.#they took it the wrong way like I wasn't sure about it or sharing it and doubted myself on my works - I could see it in their comments#bc they were like 'if you like it doesn't matter what others think' kinda stuff and I didn't expected them to react like that#it never felt like encouraging but more like they're trying to pull you down and make you feel less#I love everything I share either way-some of them less some of them more than others- I wouldn't share otherwise#some people always like this while they're writing or saying good things but deep down you know they actually not.#so I still see some on IG and still don't understand why people do that to the photos#and then there are people like “oh they don't have to do anything to make you feel better or they don't have to like your stuff”#why do I do it then? Because as a decent person who have conscience it's a good thing to do-like people's stuff at least#I'm not an AI person behind this accounts

11 notes

Text

Is Convenience Our Crutch? A Human’s Weapon against the Machine Mind.

Reclaiming our ability to think

“Why is my writing getting worse?” I groaned as I stared at a blank Google Document.

Well, it wasn’t blank. Not two minutes before. I had written out a messy outline, deleted it, rewrote it, reworded it, hated it, and you probably know how the rest went. I ended up with a completely blank Google Document, an unplanned essay, and no direction for my writing whatsoever. I have always prided myself on my ability to not only write, but write well, so what was happening? Why was I grasping at straws, unsure how to formulate any opinion on the prompt that I was given, and generally stuck?

When I started this new school year with harder classes, teachers, assignments… harder everything, writing essays became less about writing and more about fulfilling a certain standard that the teacher wanted me to follow. The topic needed to add to the discussion, not be something that was easily found by just searching it up on the internet. It needed to use complex language. It needed to argue well. It needed to use different sentence structures. Show, don't tell, but also use the specific writing term if necessary. No writing “logos,” “pathos,” or “ethos.” Abandon those five-paragraph essay structures. On and on, these guidelines that were supposed to make my writing more personal, more stylistic, more me, became burdensome restrictions that made me scared to write.

Or, at least, that is what I wanted myself to think. As a perfectionist, I criticized myself for my inability to write well when, in reality, I just couldn’t write well instantly. I had spent two hours writing a draft and, to my astonishment, it did not sound like a revolutionary, never-heard-before opinion essay about The Crucible. Crazy, I know! So, I became reliant on the resources around me, things that would give me instant answers to whatever I was writing about. Looking up what other people had said about what I was arguing became looking up what to say about the topic I needed to write about. And from that, using AI technologies for writing became more and more tempting.

I found a loophole in my own thinking, where I realized that I didn’t have to really use the AI to write my essays for me. I could keep my moral high ground by just writing most of it by myself, and asking the AI to “improve” my writing. Grammarly or whatever platform would then provide me with a mediocre regurgitation of my ideas, and I truly thought that I had accomplished something. I didn’t use it to write my whole essay, necessarily. Technically, I was still writing my own essay. It was just improving the ideas I already had. Right?

The danger of modern technology isn't just the sheer amount of distraction it creates—though that is a factor—but also our growing dependence on it. This dependence is not surprising at all. The internet is a wealth of knowledge and all the tools on there are just so reliable, so quickly accessible. Don’t know something? Just google it. Can’t find where something is inside a store? Just order it online. Don’t remember how to say “can I have a can of apple cider” in Spanish? Just translate it.

But, this convenience comes at a cost, and I have felt this cost personally. I have become so consumed by needing to be good, and good right away, that this dependence emerged easily. I found myself so insistent on getting a beautiful masterpiece of an outline right away that I did not even think to put in the time to think and reflect. I could easily have taken a few minutes, or even a few days, to ponder the prompt, to think. Not just with essays, but with everything else in life as well. Do I think about the material I read, or do I just search up an explanation for the things I don’t know? Do I try to solve a problem, even when I have gotten it wrong a million times before, or do I just give up and search for the answer? Do I check if the AI-generated answer at the top of the search results is accurate, or do I just trust what it says because I’m too tired to bother looking through the tens of other articles about the stuff I search? But no, I wanted the beautiful essay right away, those answers right away, that elegance and eloquence and the careful mincing of words right away. And the internet provided me with just that: elegance and eloquence, and a whole lot of nothing.

Then I find myself asking, on those days of self-reflection and rare lucidness, whether or not I actually know anything at all. And, perhaps, this question applies to you as well. When we learn something, do we truly internalize it, or do we prioritize convenience, knowing we can always look it up later? Not just look it up, but access it instantly, effortlessly. Our habits have shifted toward "googling" everything, which is remarkable, but what does this instant access to information do to our critical thinking, memorization, and willingness to deeply learn? And why do we resort to these options in the first place?

I think the answer is the cultural norm of wanting everything quickly. Efficiency, productivity: this culture has pushed everything to be as quick as possible, the most product with the least amount of time and effort. I’m not saying productivity is bad; rather, I believe that this mindset has permeated into other aspects of life as well. We lose our patience when reading a book because the information isn’t presented to us right away. We grow frustrated at the recipe when it starts with a sob story about some guy’s grandma. We become irritated when the perfect essay idea or writing does not emerge right away.

I’m not totally clear on what this impact is, at least not in terms of statistics, numbers, or experiments. But, one thing is for sure: we have to keep thinking. No matter what topic it’s about, try not to rely on “searching it up” as the first resort. When writing an essay, maybe don’t search for topics right away, or even have generative technology refine what you write. At least not right away. Try to think about the topic yourself, ponder it. In writing this post, I spent a lot of time thinking and reflecting. I wrote many drafts, deleted several paragraphs, and ignored this document completely for several weeks.

Refine the art of taking your time to think. If you took the time to read through this insanity-style block of text, I applaud you for taking this first step. Thinking will be your sword, and patience your shield. Unlike what society (or our brain) tells us, not everything has to be instant. Knowledge, understanding, outcomes do not have to be instant, even if some of them can be. Think, wonder, ponder. Abandon searching up the answer right away. Think, wonder, ponder. This, I found, is our weapon against technological dependence.

#qs playlist#writers and poets#female writers#writing#writers on tumblr#writeblr#writerscommunity#essay#personal essay#essay writing#student#academia aesthetic#writing thoughts#thoughts#deep thoughts#my thougts#spilled thoughts#social commentary#internet culture#emotional decay#critical thinking#technological dependence#AI#machine learning#ai technology

8 notes

Text

some of my coworkers r ai bros and theyve been finding that, duh, gpt bots will repeat ur mistakes if u feed them information that is false, so using them to check for mistakes in a pdf creates a library of mistakes for them to pull from and the learning model collapses on itself after a few uses. bc the information it was trained on was mistakes

#if they want it to work they need to be capable of producing a perfect shop drawing first#multiple times in a row#and this is not something they tend to do i know this bc im the 1st person who checks these shop drawings w a fine tooth comb#if u want to use 'ai' u need to understand how to train it and that gpt is deep learning so the learning part is the most impactful part#aka u need to teach a deep learning model before it can recite things back to u#i recommend learning in depth abt these ai bots cause its fun to watch them collapse on themselves when u know why they do it

7 notes

Text

I asked myself why I failed to notice. It was the first time we'd been apart that long. I found the birthday gifts you prepared for me in my room, from my 18th to my 21st. ...Shut up. I started to think about what you were doing back then. Were you celebrating my birthday all by yourself?

KISEKI: DEAR TO ME Ep. 12

#kiseki: dear to me#kisekiedit#kdtm#kiseki dear to me#chen yi x ai di#ai di x chen yi#nat chen#chen bowen#louis chiang#chiang tien#jiang dian#userspring#userrain#uservid#userspicy#userjjessi#pdribs#*cajedit#*gif#do you ever cry about the chen yi that woke up to find ai di gone.#do you ever think about the chen yi that felt ai di's tears on his face and reached up to hold him closer. to comfort him.#who saw & chose in a moment the true ai di that had always been by his side then lost him in the next. & woke up to learn it was his fault#cuz i think about the chen yi during ai di's prison time a lot. i think about him going over so many of his memories#reevaluating ai di's anger and teasing and realizing it was all heartbreak. THAT IT WAS ALL HEARTBREAK.#the guilt...the desperation & need to get through to ai di so he never makes him feel that way again. understanding that he loves ai di too#the way he gently touches ai di's hands and face here... he tied him up to keep him from running but hes being so earnest and SO careful#with ai di's pain & ai di's love. his expressions & the way he takes deep breaths before admitting things out loud like its clear#hes thinking hard abt what he wants to say and how he wants to say it. bc he has to make ai di understand how much he means this#how much he misses him. how much he wants to make this right. how he wants & needs to be by ai di's side forever bc he loves him!#he loves him!!!!!!!!! GOD. i love chen yi.

231 notes
