#Stateless Architecture


Compare Stateful vs Stateless Architecture?

Stateful and stateless architectures are two different approaches to designing and implementing systems, each with its own characteristics and use cases. Stateful Architecture: In a stateful architecture, the server maintains the state or session information of each client or user session. The server stores information about the interaction with each client, including session data, user…


#Best Practices#interview#interview questions#Interview Success Tips#Interview Tips#Java#Microservices#Senior Developer#Software Architects#Stateful Architecture#Stateless Architecture

0 notes


By: Douglas Murray

Published: Feb 24, 2024

Like a number of ‘anti-colonialists’, William Dalrymple lives in colonial splendour on the outskirts of Delhi. The writer often opens the doors of his estate to slavering architectural magazines. A few years ago, one described his pool, pool house, vast family rooms, animals, cockatoo ‘and the usual entourage of servants that attends any successful man in India’s capital city’.

I only mention Dalrymple because he is one of a large number of people who have lost their senses by going rampaging online about the alleged genocide in Gaza. He recently tweeted at a young Jewish woman who said she was afraid to travel into London during the Palestinian protests: ‘Forget 30,000 dead in Gaza, tens of thousands more in prison without charge, five MILLION in stateless serfdom, forget 75 years of torture, rape, dispossession, humiliation and occupation, IT’S ALL ABOUT YOU.’ It is one thing when a street rabble loses their minds. But when people who had minds start to lose them, that is another thing altogether.

I find it curious. By every measure, what is happening in Gaza is not genocide. More than that – it’s not even regionally remarkable.

Hamas’s own figures – not to be relied upon – suggest that around 28,000 people have been killed in Gaza since October. Most of the international media likes to claim these people are all innocent civilians. In fact, many of the dead will have been killed by the quarter or so Hamas and Islamic Jihad rockets that fall short and land inside Gaza.

Then there are the more than 9,000 Hamas terrorists who have been killed by the Israel Defence Forces. As Lord Roberts of Belgravia recently pointed out, that means there is fewer than a two to one ratio of civilians to terrorists killed: ‘An astonishingly low ratio for modern urban warfare where the terrorists routinely use civilians as human shields.’ Most western armies would dream of such a low civilian casualty count. But because Israel is involved (‘Jews are news’) the libellous hyperbole is everywhere.

For almost 20 years since Israel withdrew from Gaza, we have heard the same allegations. Israel has been accused of committing genocide in Gaza during exchanges with Hamas in 2009, 2012 and 2014. As a claim it is demonstrably, obviously false. When Israel withdrew from Gaza in 2005, the population of the Strip was around 1.3 million. Today it is more than two million, with a male life expectancy higher than in parts of Scotland. During the same period, the Palestinian population in the West Bank grew by a million. Either the Israelis weren’t committing genocide, or they tried to commit genocide but are uniquely bad at it. Which is it? Well, when it comes to Israel it seems people don’t have to choose. Everything and anything can be true at once.

Here is a figure I’ve never seen anyone raise. It’s an ugly little bit of maths, but stay with me. If you wish, you might add together all the people killed in every conflict involving Israel since its foundation.

In 1948, after the UN announced the state, all of Israel’s Arab neighbours invaded to try to wipe it out. They failed. But the upper estimate of the casualties on all sides came to some 20,000 people. The upper estimates of the wars of 1967 and 1973, when Israel’s neighbours once again attempted to annihilate it, are very similar (some 20,000 and 15,000 respectively). Subsequent wars in Lebanon and Gaza add several thousands more to that figure. It means that up to the present war, some 60,000 people had died on every side in all wars involving Israel.

Over the past decade of civil war in Syria, Bashar al-Assad has managed to kill more than ten times that number. Although precise figures are hard to come by, Assad is reckoned to have murdered some 600,000 Arab Muslims in his country. Meaning that every six to 12 months he manages to kill the same number as died in every war involving Israel ever.

There are lots of reasons you might give to explain this: that people don’t care when Muslims kill Muslims; that people don’t care when Arabs kill Arabs; that they only care if Israel is involved. Allow me to give another example that is suggestive.

No one knows how many people have been killed in the war in Yemen in recent years. From 2015-2021 the UN estimated perhaps 377,000 – ten times the highest estimate of the recent death toll in Gaza. The only time I’ve heard people scream on British streets about Yemen has been after the Houthis started attacking British and American ships in the Red Sea and the deadbeat idiots on the streets of London started chanting: ‘Yemen, Yemen, make us proud, turn another ship around.’ Because like all leftists and Islamists there is no terrorist group these people can’t get a pash on, so long as that terrorist group is against us.

I often wonder why this obsession arises when the war involves Israel. Why don’t people trawl along our streets and scream by their thousands about Syria, Yemen, China’s Uighurs or a hundred other terrible things? There are only two possible conclusions.

The first is a journalistic one. Ever since Marie Colvin was killed it became plain that western journalists were a target in Syria. Not eager to be the target, most journalists hotfooted it out of the country. Some who didn’t fell into the hands of Isis. Israel-Gaza wars by contrast do not have the same dynamic and on a technical level the media can applaud itself for reporting from a warzone where they are not the target.

But I suspect it is a moral explanation which explains the situation so many people find themselves in. They simply enjoy being able to accuse the world’s only Jewish state of ‘genocide’ and ‘Nazi-like behaviour’. They enjoy the opportunity to wound Jews as deeply as possible. Many find it satisfies the intense fury they feel when Israel is winning.

Like being fanned on your veranda while lambasting the evils of Empire, it is a paradox, to be sure. But it is also a perversity. And it doesn’t come from nowhere.

==

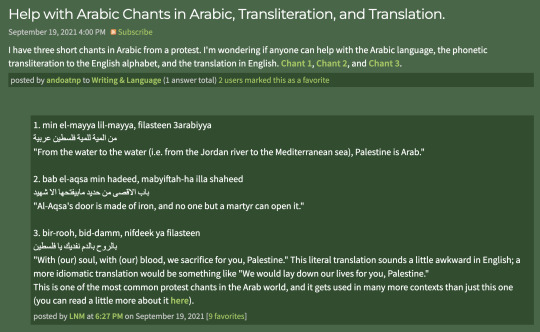

"From the water to the water, Palestine is Arab."

This is the actual genocide.

#Douglas Murray#Israel#hamas terrorism#hamas terrorists#palestine#palestinians#genocide#ethnic cleansing#israel genocide#gaza genocide#palestinian genocide#genocide myth#antisemitism#genocide lie#religion is a mental illness

68 notes


REST APIs

REST (Representational State Transfer) is an architectural style for APIs in which all the information needed to perform an action is passed to the API with each request. The server does not need prior knowledge of the client's session in order to fulfill the request.

The alternative is for the client to hold a 'session' with the server, where the server keeps information about the client while the session is active. For large services handling possibly hundreds of thousands of clients at a time, keeping that per-client state can take up a lot of server processing power and memory.

Statelessness can reduce processing overhead for both the server and the client. Sessions can end up split across multiple servers, meaning the servers have to communicate to get session data, which can slow down response time. Because the server needs no prior knowledge of a client, any server can handle any client's request, which also makes load balancing easier: a request can be sent to whichever capable server is currently the least busy.
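As a rough illustration of the least-busy routing described above, here is a minimal sketch. The `Server` class, its `active_requests` counter, and the request fields are hypothetical names made up for this example, not part of any real load balancer.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_requests: int = 0

    def handle(self, request: dict) -> str:
        # No per-client session is looked up: the request is self-contained.
        return f"{self.name} handled {request['path']} for {request['user']}"


def pick_least_busy(servers: list) -> Server:
    """Return the server with the fewest in-flight requests."""
    return min(servers, key=lambda s: s.active_requests)


servers = [Server("a", 3), Server("b", 1), Server("c", 2)]
request = {"user": "alice", "path": "/orders"}

target = pick_least_busy(servers)
target.active_requests += 1
print(target.handle(request))  # b handled /orders for alice
```

Because no server holds session data, the choice of target can be based purely on load.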

In practice, REST APIs are almost always built on HTTP and its methods. A client sends a request via HTTP to a specific endpoint (location on the web), along with all of the information needed to complete that request. The server will then process it and send back a response.
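One way to picture a request that carries everything needed to complete it is a signed token sent with every call. The sketch below is only an illustration under stated assumptions: `SECRET`, `sign`, and `handle_request` are hypothetical names, and a real service would normally use an established scheme such as JWT or OAuth2 instead of hand-rolled signing.

```python
import base64
import hashlib
import hmac
import json

SECRET = b"demo-secret"  # hypothetical shared secret, for illustration only


def sign(payload: dict) -> str:
    """Encode a payload and append an HMAC so any server can verify it."""
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode())
    mac = hmac.new(SECRET, body, hashlib.sha256).hexdigest()
    return f"{body.decode()}.{mac}"


def handle_request(token: str, action: str) -> dict:
    """Handle one request using only what the request itself carries."""
    body, _, mac = token.rpartition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(mac, expected):
        return {"status": 401, "error": "invalid token"}
    user = json.loads(base64.urlsafe_b64decode(body))
    return {"status": 200, "user": user["name"], "action": action}


token = sign({"name": "alice"})
print(handle_request(token, "list-orders"))        # status 200
print(handle_request(token + "x", "list-orders"))  # status 401
```

Any server that knows the secret can verify the token, so no server has to remember the client between requests.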

Core features of REST:

Client-Server Architecture - a client that sends requests to a server, or set of servers via HTTP methods.

Stateless - client information is not stored between requests and each request is handled as separate and unconnected.

Cacheability - data that is expected to be accessed often can be cached (saved for a set amount of time) on the client or server side to make client and server interactions quicker.

Uniform interface - no matter where an endpoint is accessed from, its requests have a standard format. Information returned to a client should have enough information and self description that the client knows how to process it.

Layered system architecture - calls and responses can go through multiple intermediate layers, but both the client and server will assume they're communicating directly.

Code on demand (optional) - the server can send executable code to the client when requested to extend client functionality.
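To make the cacheability point concrete, here is a minimal sketch of a time-limited cache. `TTLCache` and `fetch_user` are hypothetical names for this example; over HTTP, the lifetime would typically be communicated with response headers such as `Cache-Control` instead of being hard-coded.

```python
import time


class TTLCache:
    """Keep values for a fixed number of seconds, then treat them as stale."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key, fetch):
        """Return the cached value, calling fetch(key) only on a miss or expiry."""
        entry = self._entries.get(key)
        now = self.clock()
        if entry is not None and entry[0] > now:
            return entry[1]
        value = fetch(key)
        self._entries[key] = (now + self.ttl, value)
        return value


calls = []


def fetch_user(user_id):
    # Stand-in for a slow call to the server.
    calls.append(user_id)
    return {"id": user_id}


fake_now = [0.0]  # controllable clock so the example does not need to sleep
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.get(1, fetch_user)  # miss: fetched
cache.get(1, fetch_user)  # hit: served from cache
fake_now[0] = 61.0
cache.get(1, fetch_user)  # expired: fetched again
print(len(calls))  # 2
```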

5 notes


Having trouble with stateful vs stateless architecture because I genuinely don't understand the benefits of stateful architecture. Reminder to look up some examples.

2 notes


How to Choose the Right Tech Stack for Your Web App in 2025

In this article, you’ll learn how to confidently choose the right tech stack for your web app, avoid common mistakes, and stay future-proof. Whether you're building an MVP or scaling a SaaS platform, we’ll walk through every critical decision.

What Is a Tech Stack? (And Why It Matters More Than Ever)

Let’s not overcomplicate it. A tech stack is the combination of technologies you use to build and run a web app. It includes:

Front-end: What users see (e.g., React, Vue, Angular)

Back-end: What makes things work behind the scenes (e.g., Node.js, Django, Laravel)

Databases: Where your data lives (e.g., PostgreSQL, MongoDB, MySQL)

DevOps & Hosting: How your app is deployed and scaled (e.g., Docker, AWS, Vercel)

Why it matters: The wrong stack leads to poor performance, higher development costs, and scaling issues. The right stack supports speed, security, scalability, and a better developer experience.

Step 1: Define Your Web App’s Core Purpose

Before choosing tools, define the problem your app solves.

Is it data-heavy like an analytics dashboard?

Real-time focused, like a messaging or collaboration app?

Mobile-first, for customers on the go?

AI-driven, using machine learning in workflows?

Example: If you're building a streaming app, you need a tech stack optimized for media delivery, latency, and concurrent user handling.

Need help defining your app’s vision? Bluell AB’s Web Development service can guide you from idea to architecture.

Step 2: Consider Scalability from Day One

Most startups make the mistake of only thinking about MVP speed. But scaling problems can cost you down the line.

Here’s what to keep in mind:

Stateless architecture supports horizontal scaling

Choose microservices or modular monoliths based on team size and scope

Go for asynchronous processing (e.g., Node.js, Python Celery)

Use CDNs and caching for frontend optimization

A poorly optimized stack can increase infrastructure costs by 30–50% during scale. So, choose a stack that lets you scale without rewriting everything.
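As a small illustration of the asynchronous processing mentioned in this step, the sketch below overlaps three I/O-bound waits using Python's standard `asyncio` module. `fetch_report` and its delays are hypothetical stand-ins for real database or HTTP calls.

```python
import asyncio


async def fetch_report(name: str, delay: float) -> str:
    """Stand-in for an I/O-bound call such as a database or HTTP request."""
    await asyncio.sleep(delay)
    return f"{name} ready"


async def main() -> list:
    # The three waits overlap, so total time is close to the longest delay.
    return await asyncio.gather(
        fetch_report("sales", 0.03),
        fetch_report("traffic", 0.02),
        fetch_report("errors", 0.01),
    )


results = asyncio.run(main())
print(results)  # ['sales ready', 'traffic ready', 'errors ready']
```

`asyncio.gather` returns results in the order the calls were passed in, not the order they finished.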

Step 3: Think Developer Availability & Community

Great tech means nothing if you can’t find people who can use it well.

Ask yourself:

Are there enough developers skilled in this tech?

Is the community strong and active?

Are there plenty of open-source tools and integrations?

Example: Choosing Go or Elixir might give you performance gains, but hiring developers can be tough compared to React or Node.js ecosystems.

Step 4: Match the Stack with the Right Architecture Pattern

Do you need:

A Monolithic app? Best for MVPs and small teams.

A Microservices architecture? Ideal for large-scale SaaS platforms.

A Serverless model? Great for event-driven apps or unpredictable traffic.

Pro Tip: Don’t over-engineer. Start with a modular monolith, then migrate as you grow.

Step 5: Prioritize Speed and Performance

In 2025, user patience is non-existent. Google says 53% of mobile users leave a page that takes more than 3 seconds to load.

To ensure speed:

Use Next.js or Nuxt.js for server-side rendering

Optimize images and use lazy loading

Use Redis or Memcached for caching

Integrate CDNs like Cloudflare

Benchmark early and often. Use tools like Lighthouse, WebPageTest, and New Relic to monitor.
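Dedicated tools give a fuller picture, but a rough timing harness is often enough for a first benchmark. The following is a minimal sketch, assuming a hypothetical `render_page` function stands in for the code path being measured; the numbers it prints will vary by machine.

```python
import math
import statistics
import time


def measure(func, runs: int = 50) -> dict:
    """Time repeated calls and summarize latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    # Nearest-rank 95th percentile.
    p95_index = math.ceil(0.95 * len(samples)) - 1
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def render_page():
    # Hypothetical stand-in for the code path being measured.
    return sum(i * i for i in range(10_000))


stats = measure(render_page)
print(stats)
```

Looking at the 95th percentile as well as the median helps surface occasional slow requests that an average would hide.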

Step 6: Plan for Integration and APIs

Your app doesn’t live in a vacuum. Think about:

Payment gateways (Stripe, PayPal)

CRM/ERP tools (Salesforce, HubSpot)

3rd-party APIs (OpenAI, Google Maps)

Make sure your stack supports REST or GraphQL seamlessly and has robust middleware for secure integration.

Step 7: Security and Compliance First

Security can’t be an afterthought.

Use stacks that support JWT, OAuth2, and secure sessions

Make sure your database handles encryption-at-rest

Use HTTPS, rate limiting, and sanitize inputs
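Rate limiting, mentioned in the list above, is often implemented with a token bucket. The sketch below is one simple in-memory version under stated assumptions: the `TokenBucket` class and its parameters are hypothetical names for this example, and a production setup would more likely rely on a gateway or a shared store.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


fake_now = [0.0]  # controllable clock so the example does not need to sleep
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0])
burst = [bucket.allow(), bucket.allow(), bucket.allow()]
fake_now[0] = 1.0  # one second later, one token has been refilled
later = bucket.allow()
print(burst, later)  # [True, True, False] True
```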

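IBM’s Cost of a Data Breach report has put the global average cost of a breach at about $3.86 million, a figure that covers organizations of all sizes and is not specific to startups. Prevention is cheaper than reaction.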

Step 8: Don’t Ignore Cost and Licensing

Open source doesn’t always mean free. Some tools have enterprise licenses, usage limits, or require premium add-ons.

Cost checklist:

Licensing and usage-based pricing (e.g., Firebase becomes costly at scale)

DevOps costs (e.g., AWS vs. DigitalOcean)

Developer productivity (fewer bugs = lower costs)

Budgeting for technology should include time to hire, cost to scale, and infrastructure support.

Step 9: Understand the Role of DevOps and CI/CD

Continuous integration and continuous deployment (CI/CD) aren’t optional anymore.

Choose a tech stack that:

Works well with GitHub Actions, GitLab CI, or Jenkins

Supports containerization with Docker and Kubernetes

Enables fast rollback and testing

This reduces downtime and lets your team iterate faster.

Step 10: Evaluate Real-World Use Cases

Here’s how popular stacks perform:

Look at what companies are using, then adapt, don’t copy blindly.

How Bluell Can Help You Make the Right Tech Choice

Choosing a tech stack isn’t just technical, it’s strategic. Bluell specializes in full-stack development and helps startups and growing companies build modern, scalable web apps. Whether you’re validating an MVP or building a SaaS product from scratch, we can help you pick the right tools from day one.

Conclusion

Think of your tech stack like choosing a foundation for a building. You don’t want to rebuild it when you’re five stories up.

Here’s a quick recap to guide your decision:

Know your app’s purpose

Plan for future growth

Prioritize developer availability and ecosystem

Don’t ignore performance, security, or cost

Lean into CI/CD and DevOps early

Make data-backed decisions, not just trendy ones

Make your tech stack work for your users, your team, and your business, not the other way around.

1 note


What is Serverless Computing?

Serverless computing is a cloud computing model where the cloud provider manages the infrastructure and automatically provisions resources as needed to execute code. This means that developers don’t have to worry about managing servers, scaling, or infrastructure maintenance. Instead, they can focus on writing code and building applications. Serverless computing is often used for building event-driven applications or microservices, where functions are triggered by events and execute specific tasks.

How Serverless Computing Works

In serverless computing, applications are broken down into small, independent functions that are triggered by specific events. These functions are stateless, meaning they don’t retain information between executions. When an event occurs, the cloud provider automatically provisions the necessary resources and executes the function. Once the function is complete, the resources are de-provisioned, making serverless computing highly scalable and cost-efficient.
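A stateless, event-triggered function can be sketched in a few lines. The `handler(event, context)` shape below loosely follows the convention used by AWS Lambda's Python runtime, and the response fields follow a common API-gateway style; the event contents are hypothetical and chosen only for illustration.

```python
import json


def handler(event: dict, context: object = None) -> dict:
    """Process one event using only the event payload; nothing is kept between calls."""
    items = event.get("items", [])
    total = sum(item["price"] * item["quantity"] for item in items)
    return {"statusCode": 200, "body": json.dumps({"total": total})}


event = {"items": [{"price": 5, "quantity": 2}, {"price": 3, "quantity": 1}]}
print(handler(event))  # {'statusCode': 200, 'body': '{"total": 13}'}
```

Since the function reads nothing but its input, the provider can run any number of copies in parallel or discard them between events.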

Serverless Computing Architecture

The architecture of serverless computing typically involves four components: the client, the API Gateway, the compute service, and the data store. The client sends requests to the API Gateway, which acts as a front-end to the compute service. The compute service executes the functions in response to events and may interact with the data store to retrieve or store data. The API Gateway then returns the results to the client.

Benefits of Serverless Computing

Serverless computing offers several benefits over traditional server-based computing, including:

Reduced costs: Serverless computing allows organizations to pay only for the resources they use, rather than paying for dedicated servers or infrastructure.

Improved scalability: Serverless computing can automatically scale up or down depending on demand, making it highly scalable and efficient.

Reduced maintenance: Since the cloud provider manages the infrastructure, organizations don’t need to worry about maintaining servers or infrastructure.

Faster time to market: Serverless computing allows developers to focus on writing code and building applications, reducing the time to market new products and services.

Drawbacks of Serverless Computing

While serverless computing has several benefits, it also has some drawbacks, including:

Limited control: Since the cloud provider manages the infrastructure, developers have limited control over the environment and resources.

Cold start times: When a function is executed for the first time, it may take longer to start up, leading to slower response times.

Vendor lock-in: Organizations may be tied to a specific cloud provider, making it difficult to switch providers or migrate to a different environment.

Some facts about serverless computing

Serverless computing is often referred to as Functions-as-a-Service (FaaS) because it allows developers to write and deploy individual functions rather than entire applications.

Serverless computing is often used in microservices architectures, where applications are broken down into smaller, independent components that can be developed, deployed, and scaled independently.

Serverless computing can result in significant cost savings for organizations because they only pay for the resources they use. This can be especially beneficial for applications with unpredictable traffic patterns or occasional bursts of computing power.

One of the biggest drawbacks of serverless computing is the “cold start” problem, where a function may take several seconds to start up if it hasn’t been used recently. However, this problem can be mitigated through various optimization techniques.

Serverless computing is often used in event-driven architectures, where functions are triggered by specific events such as user interactions, changes to a database, or changes to a file system. This can make it easier to build highly scalable and efficient applications.
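One commonly used optimization related to the cold start point above is to create expensive resources once, outside the per-request path, so that later invocations on the same warm instance can reuse them. This is a minimal sketch of that idea only; `get_client` and the fake setup delay are hypothetical, and the technique reduces repeated setup work without removing the first cold start.

```python
import time

_resources = {}        # module-level; survives while the function instance stays warm
stats = {"inits": 0}   # counts how often the slow setup actually ran


def get_client() -> dict:
    """Create the expensive resource once and reuse it on later invocations."""
    if "client" not in _resources:
        time.sleep(0.01)  # stand-in for slow setup such as opening a connection
        _resources["client"] = {"connected": True}
        stats["inits"] += 1
    return _resources["client"]


def handler(event: dict, context: object = None) -> dict:
    client = get_client()
    return {"ok": client["connected"], "echo": event.get("msg")}


handler({"msg": "first"})   # pays the setup cost
handler({"msg": "second"})  # reuses the existing client
print(stats["inits"])  # 1
```

The cached object here is a connection-style resource, not client data, so the function still treats every event independently.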

Now, let’s explore some other serverless computing frameworks that can be used in addition to Google Cloud Functions.

AWS Lambda: AWS Lambda is a serverless compute service from Amazon Web Services (AWS). It allows developers to run code in response to events without worrying about managing servers or infrastructure.

Microsoft Azure Functions: Microsoft Azure Functions is a serverless compute service from Microsoft Azure. It allows developers to run code in response to events and supports a wide range of programming languages.

IBM Cloud Functions: IBM Cloud Functions is a serverless compute service from IBM Cloud. It allows developers to run code in response to events and supports a wide range of programming languages.

OpenFaaS: OpenFaaS is an open-source serverless framework that allows developers to run functions on any cloud or on-premises infrastructure.

Apache OpenWhisk: Apache OpenWhisk is an open-source serverless platform that allows developers to run functions in response to events. It supports a wide range of programming languages and can be deployed on any cloud or on-premises infrastructure.

Kubeless: Kubeless is a Kubernetes-native serverless framework that allows developers to run functions on Kubernetes clusters. It supports a wide range of programming languages and can be deployed on any Kubernetes cluster.

IronFunctions: IronFunctions is an open-source serverless platform that allows developers to run functions on any cloud or on-premises infrastructure. It supports a wide range of programming languages and can be deployed on any container orchestrator.

These serverless computing frameworks offer developers a range of options for building and deploying serverless applications. Each framework has its own strengths and weaknesses, so developers should choose the one that best fits their needs.

Real-world examples

Coca-Cola: Coca-Cola uses serverless computing to power its Freestyle soda machines, which allow customers to mix and match different soda flavors. The machines use AWS Lambda functions to process customer requests and make recommendations based on their preferences.

iRobot: iRobot uses serverless computing to power its Roomba robot vacuums, which use computer vision and machine learning to navigate homes and clean floors. The Roomba vacuums use AWS Lambda functions to process data from their sensors and decide where to go next.

Capital One: Capital One uses serverless computing to power its mobile banking app, which allows customers to manage their accounts, transfer money, and pay bills. The app uses AWS Lambda functions to process requests and deliver real-time information to users.

Fender: Fender uses serverless computing to power its Fender Play platform, which provides online guitar lessons to users around the world. The platform uses AWS Lambda functions to process user data and generate personalized lesson plans.

Netflix: Netflix uses serverless computing to power its video encoding and transcoding workflows, which are used to prepare video content for streaming on various devices. The workflows use AWS Lambda functions to process video files and convert them into the appropriate format for each device.

Conclusion

Serverless computing is a powerful and efficient solution for building and deploying applications. It offers several benefits, including reduced costs, improved scalability, reduced maintenance, and faster time to market. However, it also has some drawbacks, including limited control, cold start times, and vendor lock-in. Despite these drawbacks, serverless computing will likely become an increasingly popular solution for building event-driven applications and microservices.


4 notes


Top API Certification Exams 2025 for Developers & Engineers

Why Are API Certification Exams Essential in 2025?

In the rapidly evolving world of software development, APIs have become the backbone of communication between applications and platforms. Whether it’s mobile apps, cloud services, or enterprise software, APIs play a critical role in shaping how systems interact securely and efficiently. In 2025, API certification exams are no longer optional but a professional necessity for developers and engineers aiming to establish credibility in this competitive space. These certifications validate a candidate’s expertise in designing, testing, deploying, and securing APIs that power both public and internal systems.

As more companies shift toward microservices architecture and cloud-based infrastructure, the demand for skilled API professionals is at an all-time high. Employers now seek developers who not only know how to write code but also understand API lifecycle management, documentation standards, security policies, and integration best practices. An API certification proves that the developer has the practical knowledge required to build resilient, scalable, and compliant APIs. In 2025, having a recognized credential on your resume can drastically improve your chances of being hired or promoted in the software industry.

What Are the Best API Developer Certifications Available This Year?

The best API developer certifications in 2025 are those that reflect real-world industry needs. These certifications go beyond theory to test practical skills such as endpoint creation, response formatting, error handling, and API version control. Some of the most recognized credentials include vendor-neutral programs as well as those offered by top tech companies like Google, AWS, and Microsoft. These programs are meticulously crafted to prepare developers for integration projects across web, mobile, and cloud platforms.

A standout certification typically includes hands-on labs, case-based evaluations, and performance testing. They often align with international standards such as OpenAPI Specification and OAuth2 protocols. Candidates who achieve these credentials demonstrate their ability to create efficient, well-documented, and secure APIs that adhere to enterprise-grade performance expectations. As the software development field becomes more specialized, these certifications help developers highlight their niche expertise.

How Is REST API Certification Online Reshaping Developer Education?

REST API certification online has opened doors for developers and IT professionals to gain advanced knowledge without needing to attend physical classes or workshops. In 2025, online certifications offer interactive learning through virtual labs, simulations, and instructor-led modules. REST, being the most widely adopted architectural style for APIs, forms the foundation of most certification curricula. It covers concepts such as statelessness, resource mapping, HTTP methods, and response handling.

What makes online certification powerful is the convenience and flexibility it offers. Developers can balance their work schedules with learning and complete modules at their own pace. Moreover, the inclusion of cloud-based testing environments allows learners to apply concepts immediately, creating RESTful endpoints, working with JSON or XML, and debugging APIs. This hands-on approach ensures a deeper understanding of RESTful architecture, which remains a core skill in backend development and service integration.


Why Is API Testing Certification Crucial for Quality Assurance?

API testing certification has become a vital qualification for professionals in software quality assurance. In modern development environments, APIs are tested early and frequently in the software lifecycle. This approach ensures that applications function correctly across different systems, even before the front-end interface is fully developed. A certified API tester understands how to validate endpoints, check response codes, perform load testing, and ensure that APIs are secure against common vulnerabilities.

With the increasing reliance on continuous integration and delivery, certified testers who can work with tools like Postman, SoapUI, JMeter, and Swagger are highly valued. In 2025, many teams follow Test-Driven Development (TDD) and Behavior-Driven Development (BDD) practices that depend heavily on robust API testing. A certification in this area confirms that a professional can create automated test scripts, interpret test reports, and collaborate effectively with developers to improve software quality.
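As a small illustration of what validating endpoints and checking response codes can look like in an automated test script, here is a sketch using Python's standard `unittest` module. `get_user` is a hypothetical stand-in for endpoint logic; a real suite would call the API over HTTP or through a tool such as Postman.

```python
import unittest


def get_user(user_id: int) -> tuple:
    """Hypothetical endpoint logic returning (status_code, body)."""
    users = {1: {"id": 1, "name": "alice"}}
    if user_id in users:
        return 200, users[user_id]
    return 404, {"error": "user not found"}


class GetUserTests(unittest.TestCase):
    def test_existing_user_returns_200(self):
        status, body = get_user(1)
        self.assertEqual(status, 200)
        self.assertEqual(body["name"], "alice")

    def test_missing_user_returns_404(self):
        status, body = get_user(99)
        self.assertEqual(status, 404)
        self.assertIn("error", body)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(GetUserTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())  # True
```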

What Does a Certified API Engineer Course Offer to Developers?

A certified API engineer course provides a structured path for mastering API design, security, scalability, and versioning. These courses are not just about writing code but about solving real business problems through intelligent API architecture. In 2025, such courses often include modules on GraphQL, WebSockets, API analytics, and DevOps integrations. They also introduce engineers to best practices in documentation using tools like Swagger and API Blueprint.

A good API engineer course emphasizes design-first approaches, which focus on understanding user needs and use cases before writing a single line of code. It also involves security protocols such as OAuth 2.0, API key management, and rate limiting. By completing such a course, developers position themselves as problem solvers who can create developer-friendly APIs that enhance collaboration, reduce errors, and optimize performance across systems.

How Does an API Development Exam Guide Prepare You for Certification?

An API development exam guide is your roadmap to passing certification exams on the first attempt. These guides compile all necessary knowledge areas including API lifecycle management, schema validation, endpoint design, authentication models, and performance testing. In 2025, updated exam guides often include adaptive study plans, real-time performance tracking, and practice questions modeled after actual certification tests.

These guides go beyond theory by walking you through real-world projects, teaching you to use API gateways, monitoring tools, and logging systems. They cover essential topics like RESTful services, asynchronous API calls, webhook management, and documentation standards. Following a well-structured exam guide gives you the confidence to tackle exam scenarios efficiently and helps you identify the areas that require deeper focus. A reliable guide not only helps in passing the exam but also enhances your real-life skills in building enterprise-grade APIs.

What Value Does Postman API Certification Bring to Your Portfolio?

Postman API certification is one of the most recognized credentials in the API ecosystem today. In 2025, it holds even greater relevance as Postman has become the go-to platform for API testing, documentation, and collaboration. The certification validates your ability to create collections, automate test cases, monitor endpoints, and collaborate with teams using shared workspaces. It also demonstrates proficiency in managing environments, using variables, and building workflows with pre-request scripts and test scripts.

Earning this certification proves your hands-on experience with one of the industry’s most widely adopted tools. For employers, a Postman-certified developer or tester means quicker onboarding and higher productivity. For freelance developers and consultants, it adds weight to their profile and opens doors to API-related project opportunities globally. The certification is equally valuable for QA engineers, DevOps professionals, and backend developers.

Why Should You Consider Web API Certification in 2025?

Web API certification in 2025 is a game-changer for anyone involved in full-stack development, cloud computing, or enterprise application design. These certifications emphasize building RESTful and GraphQL-based APIs that serve dynamic web applications, mobile apps, and third-party services. As companies continue to expose services to external partners and customers, web APIs have become the standard method of integration. Certifications validate your capability to design scalable, secure, and versioned APIs that meet industry demands.

Web API certification also ensures that you are up to date with modern API technologies like token-based authentication, API throttling, caching mechanisms, and gateway integration. With the proliferation of frontend frameworks and headless CMS platforms, web APIs serve as the connective tissue between content, data, and users. A certification confirms that you are capable of building APIs that drive real business value and align with cloud-native principles.

Where Can You Find the Best Platform for API Certification Practice?

To succeed in any of the above certifications, it is essential to practice using a trusted and comprehensive platform that offers realistic simulations, performance metrics, and expert-designed content. One of the most reliable platforms in 2025 is PracticeTestSoftware, which offers dedicated API exam preparation tools, mock tests, and scenario-based questions. Whether you are preparing for REST API certification, testing validation, or full-stack integration exams, this platform provides all the necessary support to track your progress and refine your skills.

To directly explore the API certification practice area, visit: https://www.practicetestsoftware.com/api. Their carefully crafted modules are ideal for both beginners and advanced professionals aiming to gain recognized credentials. Additionally, the main site offers broader tools and learning resources: https://www.practicetestsoftware.com. With PracticeTestSoftware, your journey toward becoming a certified API expert becomes smoother, smarter, and more effective.

Introduction to REST API in Python

APIs are the backbone of modern web development, allowing systems to communicate and exchange data. If you're working with Python, building a REST API in Python is one of the most practical skills you can learn—whether you're building web apps, microservices, or mobile backends.

This article introduces the core concepts of REST APIs, explains why Python is ideal for API development, and shows how to get started with a real-world example.

What Is a REST API?

REST (Representational State Transfer) is an architectural style that uses HTTP methods (GET, POST, PUT, DELETE) to interact with resources. RESTful APIs are stateless, meaning each request from the client must contain all the information needed to process it.

Key principles of REST APIs:

Stateless communication

Resource-based design (resources are identified by URLs and usually represented as JSON)

Use of standard HTTP methods

Uniform interface and consistent URLs

Example:

```text
GET /users/123
POST /users
DELETE /users/123
```
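To make the stateless principle concrete, here is a minimal sketch in plain Python with no framework. The names `handle_request`, `USERS`, and `VALID_TOKENS` are hypothetical and exist only for illustration. The point is that every request carries its own method, path, and credentials, so the handler never consults a per-client session.

```python
# Hypothetical in-memory data; a real service would use a database.
USERS = {123: {"id": 123, "name": "Alice"}}
VALID_TOKENS = {"secret-token"}  # assumed credential store, illustration only


def handle_request(method, path, headers):
    """Handle one request using only the data carried by that request."""
    # Credentials arrive with every request instead of living in a session.
    auth = headers.get("Authorization", "")
    token = auth[len("Bearer "):] if auth.startswith("Bearer ") else ""
    if token not in VALID_TOKENS:
        return 401, {"error": "unauthorized"}

    # The resource is identified entirely by the URL path.
    parts = path.strip("/").split("/")
    if len(parts) == 2 and parts[0] == "users" and parts[1].isdigit():
        user_id = int(parts[1])
        if method == "GET":
            user = USERS.get(user_id)
            return (200, user) if user else (404, {"error": "not found"})
        if method == "DELETE":
            if USERS.pop(user_id, None) is None:
                return 404, {"error": "not found"}
            return 204, None
        return 405, {"error": "method not allowed"}
    return 404, {"error": "no such route"}
```

Because nothing is remembered between calls, any server instance could answer any request, which is what makes stateless APIs straightforward to scale horizontally.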

Why Use Python for REST APIs?

Python is widely used in backend development thanks to:

Simple, readable syntax

Powerful libraries and frameworks (Flask, FastAPI, Django)

Large developer community and ecosystem

Great for prototyping and production

Whether you're building a basic API or a scalable service, Python makes it fast and easy.

Build a Simple REST API Using Flask

Flask is a lightweight Python framework perfect for creating small, fast APIs.

Step 1: Install Flask

```bash
pip install Flask
```

Step 2: Create a Basic API

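```python
from flask import Flask, jsonify, request

app = Flask(__name__)

# In-memory data store; its contents are lost when the process restarts.
users = [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]


@app.route('/users', methods=['GET'])
def get_users():
    # Return the full collection.
    return jsonify(users)


@app.route('/users/<int:user_id>', methods=['GET'])
def get_user(user_id):
    # Look up a single user by ID; respond with 404 if it does not exist.
    user = next((u for u in users if u["id"] == user_id), None)
    if user is None:
        return jsonify({"error": "User not found"}), 404
    return jsonify(user)


@app.route('/users', methods=['POST'])
def create_user():
    # Reject bodies that are not JSON objects with a "name" field.
    data = request.get_json(silent=True)
    if not isinstance(data, dict) or "name" not in data:
        return jsonify({"error": "Field 'name' is required"}), 400
    new_user = {"id": len(users) + 1, "name": data["name"]}
    users.append(new_user)
    return jsonify(new_user), 201


if __name__ == '__main__':
    # debug=True is intended for local development only.
    app.run(debug=True)
```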

You now have a basic REST API that supports GET and POST.

Alternative Frameworks

FastAPI – High-performance, modern Python framework with async support and automatic documentation.

Django REST Framework – Great for complex apps with built-in admin and ORM.

Tornado / Sanic – For high-performance, asynchronous use cases.

Best Practices

Use meaningful endpoints (/users, /posts, /products)

Follow HTTP status codes correctly (200, 201, 400, 404, 500)

Secure APIs with authentication (API keys, OAuth)

Use versioning (/api/v1/users)

Write automated tests for endpoints
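A couple of these practices can be sketched in a few lines of framework-free Python. The helper names `versioned` and `create_user_response` are hypothetical, and the validation rule (a non-empty string `name`) is an assumption made for the example. The sketch shows a version prefix applied to endpoint paths and a handler that picks 400 or 201 depending on the request body.

```python
API_PREFIX = "/api/v1"  # the version lives in the URL, as in /api/v1/users


def versioned(path):
    """Return the versioned form of an endpoint path."""
    return f"{API_PREFIX}/{path.lstrip('/')}"


def create_user_response(payload, existing_users):
    """Choose a status code and body for a POST to the users collection."""
    # 400: the client sent something we cannot use.
    if not isinstance(payload, dict) or not isinstance(payload.get("name"), str):
        return 400, {"error": "a string 'name' field is required"}
    name = payload["name"].strip()
    if not name:
        return 400, {"error": "'name' must not be empty"}

    # 201: a new resource was created.
    new_id = max((u["id"] for u in existing_users), default=0) + 1
    user = {"id": new_id, "name": name}
    existing_users.append(user)
    return 201, user
```

Keeping the status-code decision in a plain function like this also makes the last practice easier, since the logic can be tested without starting a server.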

Conclusion

Learning how to build a REST API in Python is a powerful skill that can open the door to countless projects, from web apps to automation scripts. Whether you start with Flask or FastAPI, Python makes building and maintaining APIs simple and scalable.

Looking to automate API testing? Try Keploy — an open-source tool that records real traffic and auto-generates test cases and mocks, perfect for streamlining your API development workflow.

DevOps Services at CloudMinister Technologies: Tailored Solutions for Scalable Growth

In a business landscape where technology evolves rapidly and customer expectations continue to rise, enterprises can no longer rely on generic IT workflows. Every organization has a distinct set of operational requirements, compliance mandates, infrastructure dependencies, and delivery goals. Recognizing these unique demands, CloudMinister Technologies offers Customized DevOps Services — engineered specifically to match your organization's structure, tools, and objectives.

DevOps is not a one-size-fits-all practice. It thrives on precision, adaptability, and optimization. At CloudMinister Technologies, we provide DevOps solutions that are meticulously tailored to fit your current systems while preparing you for the scale, speed, and security of tomorrow’s digital ecosystem.

Understanding the Need for Customized DevOps

While traditional DevOps practices bring automation and agility into the software delivery cycle, businesses often face challenges when trying to implement generic solutions. Issues such as toolchain misalignment, infrastructure incompatibility, compliance mismatches, and inefficient workflows often emerge, limiting the effectiveness of standard DevOps models.

CloudMinister Technologies bridges these gaps through in-depth discovery, personalized architecture planning, and customized automation flows. Our team of certified DevOps engineers works alongside your developers and operations staff to build systems that work the way your organization works.

Our Customized DevOps Service Offerings

Personalized DevOps Assessment

Every engagement at CloudMinister begins with a thorough analysis of your existing systems and workflows. This includes evaluating:

Development and deployment lifecycles

Existing tools and platforms

Current pain points in collaboration or release processes

Security protocols and compliance requirements

Cloud and on-premise infrastructure configurations

We use this information to design a roadmap that matches your business model, technical environment, and future expansion goals.

Tailored CI/CD Pipeline Development

Continuous Integration and Continuous Deployment (CI/CD) pipelines are essential for accelerating software releases. At CloudMinister, we create CI/CD frameworks that are tailored to your workflow, integrating seamlessly with your repositories, testing tools, and production environments. These pipelines are built to support:

Automated testing at each stage of the build

Secure, multi-environment deployments

Blue-green or canary releases based on your delivery strategy

Integration with tools like GitLab, Jenkins, Bitbucket, and others

Infrastructure as Code (IaC) Customized for Your Stack

We use leading Infrastructure as Code tools such as Terraform, AWS CloudFormation, and Ansible to help automate infrastructure provisioning. Each deployment is configured based on your stack, environment type, and scalability needs—whether cloud-native, hybrid, or legacy. This ensures repeatable deployments, fewer manual errors, and better control over your resources.

Customized Containerization and Orchestration

Containerization is at the core of modern DevOps practices. Whether your application is built for Docker, Kubernetes, or OpenShift, our team tailors the container ecosystem to suit your service dependencies, traffic patterns, and scalability requirements. From stateless applications to persistent volume management, we ensure your services are optimized for performance and reliability.

Monitoring and Logging Built Around Your Metrics

Monitoring and observability are not just about uptime—they are about capturing the right metrics that define your business’s success. We deploy customized dashboards and logging frameworks using tools like Prometheus, Grafana, Loki, and the ELK stack. These systems are designed to track application behavior, infrastructure health, and business-specific KPIs in real-time.

DevSecOps Tailored for Regulatory Compliance

Security is integrated into every stage of our DevOps pipelines through our DevSecOps methodology. We customize your pipeline to include vulnerability scanning, access control policies, automated compliance reporting, and secret management using tools such as Vault, SonarQube, and Aqua. Whether your business operates in finance, healthcare, or e-commerce, our solutions ensure your system meets all necessary compliance standards like GDPR, HIPAA, or PCI-DSS.

Case Study: Optimizing DevOps for a FinTech Organization

A growing FinTech firm approached CloudMinister Technologies with a need to modernize its software delivery process. Its primary challenges included slow deployment cycles, manual, error-prone processes, and compliance difficulties.

After an in-depth consultation, our team proposed a custom DevOps solution which included:

Building a tailored CI/CD pipeline using GitLab and Jenkins

Automating infrastructure on AWS with Terraform

Implementing Kubernetes for service orchestration

Integrating Vault for secure secret management

Enforcing compliance checks with automated auditing

As a result, the company achieved:

A 70 percent reduction in deployment time

Streamlined compliance reporting with automated logging

Full visibility into release performance

Better collaboration between development and operations teams

This engagement not only improved their operational efficiency but also gave them the confidence to scale rapidly.

Business Benefits of Customized DevOps Solutions

Partnering with CloudMinister Technologies for customized DevOps implementation offers several strategic benefits:

Streamlined deployment processes tailored to your workflow

Reduced operational costs through optimized resource usage

Increased release frequency with lower failure rates

Enhanced collaboration between development, operations, and security teams

Scalable infrastructure with version-controlled configurations

Real-time observability of application and infrastructure health

End-to-end security integration with compliance assurance

Industries We Serve

We provide specialized DevOps services for diverse industries, each with its own regulatory, technological, and operational needs:

Financial Services and FinTech

Healthcare and Life Sciences

Retail and eCommerce

Software as a Service (SaaS) providers

EdTech and eLearning platforms

Media, Gaming, and Entertainment

Each solution is uniquely tailored to meet industry standards, customer expectations, and digital transformation goals.

Why CloudMinister Technologies?

CloudMinister Technologies stands out for its commitment to client-centric innovation. Our strength lies not only in the tools we use, but in how we customize them to empower your business.

What makes us the right DevOps partner:

A decade of experience in DevOps, cloud management, and server infrastructure

Certified engineers with expertise in AWS, Azure, Kubernetes, Docker, and CI/CD platforms

24/7 client support with proactive monitoring and incident response

Transparent engagement models and flexible service packages

Proven track record of successful enterprise DevOps transformations

Frequently Asked Questions

What does customization mean in DevOps services?

Customization means aligning tools, pipelines, automation processes, and infrastructure management based on your business’s existing systems, goals, and compliance requirements.

Can your DevOps services be implemented on AWS, Azure, or Google Cloud?

Yes, we provide cloud-specific DevOps solutions, including tailored infrastructure management, CI/CD automation, container orchestration, and security configuration.

Do you support hybrid cloud and legacy systems?

Absolutely. We create hybrid pipelines that integrate seamlessly with both modern cloud-native platforms and legacy infrastructure.

How long does it take to implement a customized DevOps pipeline?

The timeline varies based on the complexity of the environment. Typically, initial deployment starts within two to six weeks post-assessment.

What if we already have a DevOps process in place?

We analyze your current DevOps setup and enhance it with better tools, automation, and customized configurations to maximize efficiency and reliability.

Ready to Transform Your Operations?

At CloudMinister Technologies, we don’t just implement DevOps—we tailor it to accelerate your success. Whether you are a startup looking to scale or an enterprise aiming to modernize legacy systems, our experts are here to deliver a DevOps framework that is as unique as your business.

Contact us today to get started with a personalized consultation.

Visit: www.cloudminister.com Email: [email protected]

Enterprise Kubernetes Storage With Red Hat OpenShift Data Foundation

In today’s enterprise IT environments, the adoption of containerized applications has grown exponentially. While Kubernetes simplifies application deployment and orchestration, it poses a unique challenge when it comes to managing persistent data. Stateless workloads may scale with ease, but stateful applications require a robust, scalable, and resilient storage backend — and that’s where Red Hat OpenShift Data Foundation (ODF) plays a critical role.

🌐 Why Enterprise Kubernetes Storage Matters

Kubernetes was originally designed for stateless applications. However, modern enterprise applications — databases, analytics engines, monitoring tools — often need to store data persistently. Enterprises require:

High availability

Scalable performance

Data protection and recovery

Multi-cloud and hybrid-cloud compatibility

Standard storage solutions often fall short in a dynamic, containerized environment. That’s why a storage platform designed for Kubernetes, within Kubernetes, is crucial.

🔧 What is Red Hat OpenShift Data Foundation?

Red Hat OpenShift Data Foundation is a Kubernetes-native, software-defined storage solution integrated with Red Hat OpenShift. It provides:

Block, file, and object storage

Dynamic provisioning for persistent volumes

Built-in data replication, encryption, and disaster recovery

Unified management across hybrid cloud environments

ODF is built on Ceph, a battle-tested distributed storage system, and uses Rook to orchestrate storage on Kubernetes.

Key Capabilities

1. Persistent Storage for Containers

ODF provides dynamic, persistent storage for stateful workloads like PostgreSQL, MongoDB, Kafka, and more, enabling them to run natively on OpenShift.

2. Multi-Access and Multi-Tenancy

Supports file sharing between pods and secure data isolation between applications or business units.

3. Elastic Scalability

Storage scales with compute, ensuring performance and capacity grow as application needs increase.

4. Built-in Data Services

Includes snapshotting, backup and restore, mirroring, and encryption, all critical for enterprise-grade reliability.

Integration with OpenShift

ODF integrates seamlessly into the OpenShift Console, offering a native, operator-based deployment model. Storage is provisioned and managed using familiar Kubernetes APIs and Custom Resources, reducing the learning curve for DevOps teams.
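As a rough illustration of what "familiar Kubernetes APIs" means here, the sketch below builds a standard PersistentVolumeClaim manifest in Python and prints it as JSON, which `oc apply -f` or `kubectl apply -f` can consume. The helper `build_pvc` and the claim name are hypothetical, and the storage class name `ocs-storagecluster-ceph-rbd` is an assumption about a default ODF install; check `oc get storageclass` on your cluster for the actual names.

```python
import json


def build_pvc(name, size_gi, storage_class="ocs-storagecluster-ceph-rbd"):
    """Build a PersistentVolumeClaim manifest as a plain dictionary.

    The default storage class name is an assumption; verify it on your cluster.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce suits a single-writer workload such as a database.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# Hypothetical claim for a PostgreSQL data volume.
manifest = build_pvc("postgres-data", 20)
print(json.dumps(manifest, indent=2))
```

When a claim like this is created, dynamic provisioning takes over: the storage backend creates a matching volume without an administrator pre-allocating it.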

🔐 Enterprise Benefits

Operational Consistency: Unified storage and platform management

Security and Compliance: End-to-end encryption and audit logging

Hybrid Cloud Ready: Runs consistently across on-premises, AWS, Azure, or any cloud

Cost Efficiency: Optimize storage usage through intelligent tiering and compression

✅ Use Cases

Running databases in Kubernetes

Storing logs and monitoring data

AI/ML workloads needing high-throughput file storage

Object storage for backups or media repositories

📈 Conclusion

Enterprise Kubernetes storage is no longer optional — it’s essential. As businesses migrate more critical workloads to Kubernetes, solutions like Red Hat OpenShift Data Foundation provide the performance, flexibility, and resilience needed to support stateful applications at scale.

ODF helps bridge the gap between traditional storage models and cloud-native innovation — making it a strategic asset for any organization investing in OpenShift and modern application architectures.

For more info, kindly follow: Hawkstack Technologies

Meet the Machines That Think for Themselves: AI Agent Development Explained

For decades, artificial intelligence (AI) has largely been about recognition—recognizing images, processing language, classifying patterns. But today, AI is stepping into something more profound: autonomy. Machines are no longer limited to reacting to input. They’re learning how to act on goals, make independent decisions, and interact with complex environments. These are not just AI systems—they are AI agents. And they may be the most transformative development in the field since the invention of the neural network.

In this post, we explore the world of AI agent development: what it means, how it works, and why it’s reshaping everything from software engineering to how businesses run.

1. What Is an AI Agent?

At its core, an AI agent is a software system that perceives its environment, makes decisions, and takes actions to achieve specific goals—autonomously. Unlike traditional AI tools, which require step-by-step commands or input prompts, agents:

Operate over time

Maintain a memory or state

Plan and re-plan as needed

Interact with APIs, tools, and even other agents

Think of the difference between a calculator (traditional AI) and a personal assistant who schedules your meetings, reminds you of deadlines, and reschedules events when conflicts arise (AI agent). The latter acts with purpose—on your behalf.

2. The Evolution: From Models to Agents

Most of today’s AI tools, like ChatGPT or image generators, are stateless at the model level. Each call processes an input and returns an output, with no memory of earlier calls unless the application resends the context, and no goal that persists between calls. But humans don’t work like that, and increasingly we need AI that collaborates, not just computes.

AI agents represent the next logical step in this evolution:

Phase | Characteristics
Rule-based Systems | Hardcoded logic; no learning
Machine Learning | Learns from data; predicts outcomes
Language Models | Understands and generates natural language
AI Agents | Thinks, remembers, acts, adapts

The shift from passive prediction to active decision-making changes how AI can be used across virtually every industry.

3. Key Components of AI Agents

An AI agent is a system made up of many intelligent parts. Let’s break it down:

Core Brain (Language Model)

Most agents are powered by an LLM (like GPT-4 or Claude) that enables reasoning, language understanding, and decision-making.

Tool Use

Agents often use tools (e.g., web search, code interpreters, APIs) to complete tasks beyond what language alone can do. This is called tool augmentation.
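A minimal sketch of tool use, assuming the model emits a small request such as `{"tool": "add", "args": {"a": 2, "b": 3}}`. That request format, the `TOOLS` registry, and the two toy tools are hypothetical and not taken from any particular framework. The dispatcher only runs tools that are actually registered, and reports everything else as an error.

```python
def add(a, b):
    """Toy tool: add two numbers."""
    return a + b


def word_count(text):
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


# Registry of the tools the agent is allowed to call (hypothetical).
TOOLS = {"add": add, "word_count": word_count}


def call_tool(request):
    """Run the tool named in a model-produced request and wrap the outcome."""
    name = request.get("tool")
    if name not in TOOLS:
        # The model asked for a tool that does not exist.
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**request.get("args", {}))}
    except TypeError as exc:
        # Wrong or missing arguments are reported back instead of crashing.
        return {"ok": False, "error": str(exc)}
```

Returning errors as data lets the agent see what went wrong and try a different call on its next step.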

Memory

Agents track past actions, conversations, and environmental changes—allowing for long-term planning and learning.

Looped Execution

Agents operate in loops: observe → plan → act → evaluate → repeat. This dynamic cycle gives them persistence and adaptability.

Goal Orientation

Agents aren’t just reactive. They’re goal-driven, meaning they pursue defined outcomes and can adjust their behavior based on progress or obstacles.
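The observe, plan, act, evaluate cycle can be sketched with a deliberately tiny toy problem: reach a target number from a starting number using only "double" and "increment". Everything here is hypothetical, and the hard-coded planning rule stands in for what would be an LLM call in a real agent. The sketch assumes the start value is not larger than the goal.

```python
def run_agent(start, goal, max_steps=20):
    """Toy goal-driven loop: observe, evaluate, plan, act, repeat."""
    state = start
    history = []  # memory of (action, resulting state) pairs

    for _ in range(max_steps):
        # Observe and evaluate: stop as soon as the goal is met.
        if state == goal:
            break

        # Plan: double while that does not overshoot, otherwise step by one.
        # A real agent would ask a language model for this decision.
        action = "double" if 0 < state * 2 <= goal else "increment"

        # Act, then record the outcome for later steps.
        state = state * 2 if action == "double" else state + 1
        history.append((action, state))

    return state, history


final_state, steps = run_agent(1, 10)
print(final_state, [action for action, _ in steps])
```

The `max_steps` budget matters even in a toy: without it, a goal the planner cannot reach would keep the loop running forever.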

4. Popular Agent Architectures and Frameworks

AI agent development has gained momentum thanks to several open-source and commercial frameworks:

LangChain

LangChain allows developers to build agents that interact with external tools, maintain memory, and chain reasoning steps.

AutoGPT

One of the first agents to go viral, AutoGPT creates task plans and executes them autonomously using GPT models and various plugins.

CrewAI

CrewAI introduces a multi-agent framework where different agents collaborate—each with specific roles like researcher, writer, or strategist.

Open Interpreter

This agent runs local code and connects to your machine, allowing more grounded interaction and automation tasks like file edits and data manipulation.

These platforms are making it easier than ever to prototype and deploy agentic behavior across domains.

5. Real-World Use Cases of AI Agents

The rise of AI agents is not confined to research labs. They are already being used in practical, impactful ways:

Personal Productivity Agents

Imagine an AI that manages your schedule, drafts emails, books travel, and coordinates with teammates—all while adjusting to changes in real time.

Examples: HyperWrite’s Personal Assistant, Rewind’s AI agent

Enterprise Workflows

Companies are deploying agents to automate cross-platform tasks: extract insights from databases, generate reports, trigger workflows in CRMs, and more.

Examples: Bardeen, Zapier AI, Lamini

Research and Knowledge Work

Agents can autonomously scour the internet, summarize findings, cite sources, and synthesize information for decision-makers or content creators.

Examples: Perplexity Copilot, Elicit.org

Coding and Engineering

AI dev agents can write, test, debug, and deploy code—either independently or in collaboration with human engineers.

Examples: Devika, Smol Developer, OpenDevin

6. Challenges in Building Reliable AI Agents

While powerful, AI agents also come with serious technical and ethical considerations:

Planning Failures

Long chains of reasoning can fail or loop endlessly without effective goal-checking mechanisms.
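One simple goal-checking mechanism is a guard that stops the agent when it exceeds a step budget or keeps repeating the same action. The `LoopGuard` class below is a hypothetical sketch and not part of any agent framework; the thresholds are arbitrary defaults.

```python
from collections import Counter


class LoopGuard:
    """Stop an agent that runs too long or repeats itself."""

    def __init__(self, max_steps=25, max_repeats=3):
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen = Counter()  # how often each action string has occurred

    def check(self, action):
        """Return None to continue, or a reason string to stop."""
        self.steps += 1
        self.seen[action] += 1
        if self.steps > self.max_steps:
            return "step budget exhausted"
        if self.seen[action] > self.max_repeats:
            return f"action repeated too often: {action}"
        return None
```

An agent loop would call `check` with a string describing each planned action and halt, or hand control to a human, as soon as it returns a reason.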

Hallucinations

Language models may invent tools, misinterpret instructions, or generate false information that leads agents off course.

Tool Integration Complexity

Agents often need to interact with dozens of APIs and services. Building secure, resilient integrations is non-trivial.

Security Risks

Autonomous access to files, databases, or systems introduces the risk of unintended consequences or malicious misuse.

Human-Agent Trust

Transparency is key. Users must understand what agents are doing, why, and when intervention is needed.

7. The Rise of Multi-Agent Collaboration

One of the most exciting developments in AI agent design is the emergence of multi-agent systems—where teams of agents work together on complex tasks.

In a multi-agent environment:

Agents take on specialized roles (e.g., researcher, planner, executor)

They communicate via structured dialogue

They make decisions collaboratively

They can adapt roles dynamically based on performance

Think of it like a digital startup where every team member is an AI.

8. AI Agents vs Traditional Automation

It’s worth comparing agents to traditional automation tools like RPA (robotic process automation):

Feature | RPA | AI Agents
Rule-based | Yes | No (uses reasoning)
Adaptable | No | Yes
Goal-driven | No (task-driven) | Yes
Handles ambiguity | Poorly | Well (via LLM reasoning)
Learns/improves | Not inherently | Possible (with memory or RL)
Use of external tools | Fixed integrations | Dynamic tool use via API calls

Agents are smarter, more flexible, and better suited to environments with changing conditions and complex decision trees.

9. The Future of AI Agents: What’s Next?

We’re just at the beginning of what AI agents can do. Here’s what’s on the horizon:

Agent Networks

Future systems may consist of thousands or millions of agents interacting across the internet—solving problems, offering services, or forming digital marketplaces.

Autonomous Organizations

Agents may be used to power decentralized organizations where decisions, operations, and strategies are managed algorithmically.

Human-Agent Collaboration

The most promising future isn’t one where agents replace humans—but where they amplify them. Picture digital teammates who never sleep, always learn, and constantly adapt.

Self-Improving Agents

Combining LLMs with reinforcement learning and feedback loops will allow agents to learn from their successes and mistakes autonomously.

10. Getting Started: Building Your First AI Agent

Want to experiment with AI agents? Here's how to begin:

Choose a Framework: LangChain, AutoGPT, or CrewAI are good places to start.

Define a Goal: Simple goals like “send weekly reports” or “summarize news articles” are ideal.

Enable Tool Use: Set up access to external tools (e.g., web APIs, search engines).

Implement Memory: Use vector databases like Pinecone or Chroma for contextual recall.

Test in Loops: Observe how your agent plans, acts, and adjusts—then refine.

Monitor and Gate: Use human-in-the-loop systems or rule-based checks to prevent runaway behavior.
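The last step, gating, can be sketched as a small check that sits between the agent's plan and its execution. The action names in `RISKY_ACTIONS` and the `gate` function are hypothetical. The `approve` callback stands in for whatever asks a human or a policy engine for a decision.

```python
# Actions that should never run without sign-off (hypothetical names).
RISKY_ACTIONS = {"delete_file", "send_email", "execute_payment"}


def gate(action, approve):
    """Allow safe actions; require approval for risky ones.

    `action` is a dict with at least a "name" key.
    `approve` is a callable that takes the action and returns True or False.
    """
    if action["name"] in RISKY_ACTIONS and not approve(action):
        return {"status": "blocked", "action": action["name"]}
    return {"status": "allowed", "action": action["name"]}
```

In an interactive prototype, `approve` could simply prompt on the console; in production it might open a ticket or check a rule set.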

Conclusion: Thinking Machines Are Already Here

We no longer need to imagine a world where machines think for themselves—it’s already happening. From simple assistants to advanced autonomous researchers, AI agents are beginning to shape a world where intelligence is not just available but actionable.

The implications are massive. We’ll see a rise in automation not just of tasks, but of strategies. Human creativity and judgment will pair with machine persistence and optimization. Entire business units will be run by collaborative AI teams. And we’ll all have agents working behind the scenes to make our lives smoother, smarter, and more scalable.

In this future, understanding how to build and interact with AI agents will be as fundamental as knowing how to use the internet was in the 1990s.

Welcome to the age of the machines that think for themselves.

Introduction: The Evolution of Web Scraping

Traditional Web Scraping involves deploying scrapers on dedicated servers or local machines, using tools like Python, BeautifulSoup, and Selenium. While effective for small-scale tasks, these methods require constant monitoring, manual scaling, and significant infrastructure management. Developers often need to handle cron jobs, storage, IP rotation, and failover mechanisms themselves. Any sudden spike in demand could result in performance bottlenecks or downtime. As businesses grow, these challenges make traditional scraping harder to maintain. This is where new-age, cloud-based approaches like Serverless Web Scraping emerge as efficient alternatives, helping automate, scale, and streamline data extraction.

Challenges of Manual Scraper Deployment (Scaling, Infrastructure, Cost)

Manual scraper deployment comes with numerous operational challenges. Scaling scrapers to handle large datasets or traffic spikes requires robust infrastructure and resource allocation. Managing servers involves ongoing costs, including hosting, maintenance, load balancing, and monitoring. Additionally, handling failures, retries, and scheduling manually can lead to downtime or missed data. These issues slow down development and increase overhead. In contrast, Serverless Web Scraping removes the need for dedicated servers by running scraping tasks on platforms like AWS Lambda, Azure Functions, and Google Cloud Functions, offering auto-scaling and cost-efficiency on a pay-per-use model.

Introduction to Serverless Web Scraping as a Game-Changer

What is Serverless Web Scraping?

Serverless Web Scraping refers to the process of extracting data from websites using cloud-based, event-driven architecture, without the need to manage underlying servers. In cloud computing, "serverless" means the cloud provider automatically handles infrastructure scaling, provisioning, and resource allocation. This enables developers to focus purely on writing the logic of Data Collection, while the platform takes care of execution.

Popular Cloud Providers like AWS Lambda, Azure Functions, and Google Cloud Functions offer robust platforms for deploying these scraping tasks. Developers write small, stateless functions that are triggered by events such as HTTP requests, file uploads, or scheduled intervals—referred to as Scheduled Scraping and Event-Based Triggers. These functions are executed in isolated containers, providing secure, cost-effective, and on-demand scraping capabilities.

The core advantage is Lightweight Data Extraction. Instead of running a full scraper continuously on a server, serverless functions only execute when needed—making them highly efficient. Use cases include:

Scheduled Scraping (e.g., extracting prices every 6 hours)

Real-time scraping triggered by user queries

API-less extraction where data is not available via public APIs

These functionalities allow businesses to collect data at scale without investing in infrastructure or DevOps.
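As a minimal sketch of such a stateless function, the handler below follows the `handler(event, context)` shape that AWS Lambda uses for Python, fetches one page, and returns its title. The event field `url` and the helper names are assumptions made for this example. It uses only the standard library, and the `fetch_page` parameter exists so the parsing logic can be tried without network access.

```python
import json
import urllib.request
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text found inside <title> elements."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def extract_title(html):
    """Return the page title, or an empty string if there is none."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip()


def fetch(url):
    """Download a page and decode it as text."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def handler(event, context, fetch_page=fetch):
    """Entry point: scrape the URL named in the event (assumed event shape)."""
    url = event["url"]
    title = extract_title(fetch_page(url))
    return {"statusCode": 200, "body": json.dumps({"url": url, "title": title})}
```

Nothing is kept between invocations, so the platform can run as many copies in parallel as there are events.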

Key Benefits of Serverless Web Scraping

Scalability on Demand

One of the strongest advantages of Serverless Web Scraping is its ability to scale automatically. When using Cloud Providers like AWS Lambda, Azure Functions, or Google Cloud Functions, your scraping tasks can scale from a few requests to thousands instantly—without any manual intervention. For example, an e-commerce brand tracking product listings during flash sales can instantly scale their Data Collection tasks to accommodate massive price updates across multiple platforms in real time.

Cost-Effectiveness (Pay-as-You-Go Model)

Traditional Web Scraping involves paying for full-time servers, regardless of usage. With serverless solutions, you only pay for the time your code is running. This pay-as-you-go model significantly reduces costs, especially for intermittent scraping tasks. For instance, a marketing agency running weekly Scheduled Scraping to track keyword rankings or competitor ads will only be billed for those brief executions—making Serverless Web Scraping extremely budget-friendly.
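The arithmetic behind pay-per-use billing can be sketched as follows. The formula (a compute charge per GB-second plus a per-request charge) mirrors how serverless platforms commonly bill, but the rates below are placeholders chosen for the example, not real prices; substitute the current figures from your provider's pricing page.

```python
def monthly_cost(invocations, avg_seconds, memory_gb,
                 price_per_gb_second, price_per_million_requests):
    """Estimate a month's bill for a pay-per-use function."""
    # Compute charge: total running time scaled by the memory allocated.
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    # Request charge: a flat fee per invocation.
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


# A scrape every 6 hours for 30 days is 120 runs. Rates are placeholders.
estimate = monthly_cost(
    invocations=120,
    avg_seconds=30,
    memory_gb=0.5,
    price_per_gb_second=0.00002,      # placeholder rate, not a real price
    price_per_million_requests=0.20,  # placeholder rate, not a real price
)
print(round(estimate, 3))
```

Under these placeholder rates the function runs for only one hour in total per month, which is why intermittent jobs tend to cost far less than an always-on server.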

Zero Server Maintenance

Server management can be tedious and resource-intensive, especially when deploying at scale. Serverless frameworks eliminate the need for provisioning, patching, or maintaining infrastructure. A developer scraping real estate listings no longer needs to manage server health or uptime. Instead, they focus solely on writing scraping logic, while Cloud Providers handle the backend processes, ensuring smooth, uninterrupted Lightweight Data Extraction.

Improved Reliability and Automation

Using Event-Based Triggers (like new data uploads, emails, or HTTP calls), serverless scraping functions can be scheduled or executed automatically based on specific events. This removes the need to keep a scraper process running and reduces the likelihood of missing important updates. For example, Azure Functions can be triggered every time a CSV file is uploaded to the cloud, automating the Data Collection pipeline.

Environmentally Efficient

Traditional servers consume energy 24/7, regardless of activity. Serverless environments run functions only when needed, minimizing energy usage and environmental impact. This makes Serverless Web Scraping an eco-friendly option. Businesses concerned with sustainability can reduce their carbon footprint while efficiently extracting vital business intelligence.

Ideal Use Cases for Serverless Web Scraping

1. Market and Price Monitoring

Serverless Web Scraping enables retailers and analysts to monitor competitor prices in real-time using Scheduled Scraping or Event-Based Triggers.

Example:

A fashion retailer uses AWS Lambda to scrape competitor pricing data every 4 hours. This allows dynamic pricing updates without maintaining any servers, leading to a 30% improvement in pricing competitiveness and a 12% uplift in revenue.

2. E-commerce Product Data Collection

Collect structured product information (SKUs, availability, images, etc.) from multiple e-commerce platforms using Lightweight Data Extraction methods via serverless setups.

Example:

An online electronics aggregator uses Google Cloud Functions to scrape product specs and availability across 50+ vendors daily. By automating Data Collection, they reduce manual data entry costs by 80%.

3. Real-Time News and Sentiment Tracking

Use Web Scraping to monitor breaking news or updates relevant to your industry and feed it into dashboards or sentiment engines.

Example:

A fintech firm uses Azure Functions to scrape financial news from Bloomberg and CNBC every 5 minutes. The data is piped into a sentiment analysis engine, helping traders act faster based on market sentiment—cutting reaction time by 40%.

4. Social Media Trend Analysis

Track hashtags, mentions, and viral content in real time across platforms like Twitter, Instagram, or Reddit using Serverless Web Scraping.

Example:

A digital marketing agency leverages AWS Lambda to scrape trending hashtags and influencer posts during product launches. This real-time Data Collection enables live campaign adjustments, improving engagement by 25%.

5. Mobile App Backend Scraping Using Mobile App Scraping Services

Extract backend content and APIs from mobile apps using Mobile App Scraping Services hosted via Cloud Providers.

Example:

A food delivery startup uses Google Cloud Functions to scrape menu availability and pricing data from a competitor’s app every 15 minutes. This helps them adjust their own platform in near real time, improving response speed and user satisfaction.

Technical Workflow of a Serverless Scraper

In this section, we’ll outline how a Lambda-based scraper works and how to integrate it with Web Scraping API Services and cloud triggers.

1. Step-by-Step on How a Typical Lambda-Based Scraper Functions

A Lambda-based scraper runs serverless functions that handle the data extraction process. Here’s a step-by-step workflow for a typical AWS Lambda-based scraper:

Step 1: Function Trigger

Lambda functions can be triggered by various events. Common triggers include API calls, file uploads, or scheduled intervals.

For example, a scraper function can be triggered by a cron job or a Scheduled Scraping event.

Example Lambda Trigger Code:

The Lambda function is triggered on a schedule (using EventBridge or CloudWatch).

requests.get fetches the web page.

BeautifulSoup processes the HTML to extract relevant data.
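The original listing is not reproduced here, so the following is only a minimal sketch of the same shape: a handler that fetches a page and extracts data from it. To stay dependency-free it swaps in the standard library's urllib.request and html.parser where the text names requests.get and BeautifulSoup. The target URL, the choice of `<h2>` headings as the "relevant data", and the helper names are all assumptions made for illustration.

```python
import json
import urllib.request
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Collects the text of every <h2> element (a stand-in for real extraction rules)."""

    def __init__(self):
        super().__init__()
        self.in_heading = False
        self.headings = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_heading = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_heading = False

    def handle_data(self, data):
        if self.in_heading:
            self.headings[-1] += data


def fetch_html(url):
    """Download a page and return its body as text."""
    request = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_headings(html):
    """Parse HTML and return the non-empty <h2> texts in document order."""
    parser = HeadingParser()
    parser.feed(html)
    return [h.strip() for h in parser.headings if h.strip()]


def lambda_handler(event, context):
    """Entry point invoked by Lambda; scheduled events carry no URL, so use a default."""
    url = event.get("url", "https://example.com")  # hypothetical target
    headings = extract_headings(fetch_html(url))
    return {"statusCode": 200, "body": json.dumps({"url": url, "headings": headings})}
```

Keeping the fetch and the parse in separate functions means the extraction rules can be checked locally against saved HTML before the function is deployed.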

Step 2: Data Collection

After triggering the Lambda function, the scraper fetches data from the targeted website. Data extraction logic is handled in the function using tools like BeautifulSoup or Selenium.

Step 3: Data Storage/Transmission

After collecting data, the scraper stores or transmits the results:

Save data to AWS S3 for storage.

Push data to an API for further processing.

Store results in a database like Amazon DynamoDB.
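As a rough sketch of the first option, the helper below serializes scraped records to JSON and uploads them as one S3 object. It assumes the caller passes in a boto3 S3 client (for example `boto3.client("s3")`); the bucket name, the key layout, and the function names are hypothetical.

```python
import json
from datetime import datetime, timezone


def build_object_key(prefix, now=None):
    """Date-partitioned key such as scrapes/2024/01/02/030405.json (layout is an assumption)."""
    now = now or datetime.now(timezone.utc)
    return f"{prefix}/{now:%Y/%m/%d}/{now:%H%M%S}.json"


def save_results(s3_client, bucket, records, prefix="scrapes", now=None):
    """Serialize records to JSON, upload them as one object, and return the key used."""
    key = build_object_key(prefix, now)
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(records).encode("utf-8"),
        ContentType="application/json",
    )
    return key
```

Passing the client in as an argument, rather than creating it inside the function, keeps the helper easy to exercise with a stub and lets the handler reuse one client across invocations.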

2. Integration with Web Scraping API Services

Lambda can be used to call external Web Scraping API Services to handle more complex scraping tasks, such as bypassing captchas, managing proxies, and rotating IPs.

For instance, if you're using a service like ScrapingBee or ScraperAPI, the Lambda function can make an API call to fetch data.

Example: Integrating Web Scraping API Services
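A minimal sketch of such a call, using only the standard library. The endpoint URL and the `api_key`, `url`, and `render_js` parameters reflect ScrapingBee's HTML API as commonly documented, but treat them as assumptions and confirm them against the provider's current documentation. The environment variable name is hypothetical.

```python
import os
import urllib.parse
import urllib.request

# Assumed endpoint; verify against the provider's documentation before use.
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_request_url(api_key, target_url, render_js=False):
    """Compose the API URL, URL-encoding the target page as a query parameter."""
    params = {
        "api_key": api_key,
        "url": target_url,
        "render_js": "true" if render_js else "false",
    }
    return SCRAPINGBEE_ENDPOINT + "?" + urllib.parse.urlencode(params)


def lambda_handler(event, context):
    """Fetch event["url"] through the scraping API and return a preview of the HTML."""
    api_key = os.environ["SCRAPINGBEE_API_KEY"]  # hypothetical variable name
    request_url = build_request_url(api_key, event["url"])
    with urllib.request.urlopen(request_url, timeout=30) as response:
        html = response.read().decode("utf-8", errors="replace")
    return {"statusCode": 200, "body": html[:1000]}
```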

In this case, ScrapingBee handles the web scraping complexities, and Lambda simply calls their API.

3. Using Cloud Triggers and Events

Lambda functions can be triggered in multiple ways based on events. Here are some examples of triggers used in Serverless Web Scraping:

Scheduled Scraping (Cron Jobs):

You can use AWS EventBridge or CloudWatch Events to schedule your Lambda function to run at specific intervals (e.g., every hour, daily, or weekly).

Example: CloudWatch Event Rule (cron job) for Scheduled Scraping:
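One way to create such a rule from Python is sketched below. It assumes the caller supplies a boto3 EventBridge client (`boto3.client("events")`) and uses its `put_rule` and `put_targets` calls; the rule name, target id, and function ARN are placeholders.

```python
def schedule_hourly(events_client, rule_name, lambda_arn):
    """Create or update an hourly rule and point it at the scraper function."""
    rule = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 hour)",  # use cron(0 * * * ? *) to fire on the hour instead
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "scraper-lambda", "Arn": lambda_arn}],  # hypothetical target id
    )
    return rule["RuleArn"]
```

The function also needs a resource-based permission that lets events.amazonaws.com invoke it, which is granted separately on the Lambda side.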

This will trigger the Lambda function to scrape a webpage every hour.

File Upload Trigger (Event-Based):

Lambda can be triggered by file uploads in S3. For example, after scraping, if the data is saved as a file, the file upload in S3 can trigger another Lambda function for processing.

Example: Trigger Lambda on S3 File Upload:
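A minimal sketch of the receiving function. It reads the bucket and object key out of the S3 event notification that Lambda passes in; the print call stands in for whatever processing the second function would actually do.

```python
import urllib.parse


def uploaded_objects(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in the event payload.
        key = urllib.parse.unquote_plus(s3["object"]["key"])
        pairs.append((s3["bucket"]["name"], key))
    return pairs


def lambda_handler(event, context):
    """Invoked by S3 when a new object lands in the bucket."""
    objects = uploaded_objects(event)
    for bucket, key in objects:
        print(f"New scrape output: s3://{bucket}/{key}")  # placeholder for real processing
    return {"processed": len(objects)}
```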

By leveraging Serverless Web Scraping using AWS Lambda, you can easily scale your web scraping tasks with Event-Based Triggers such as Scheduled Scraping, API calls, or file uploads. This approach ensures that you avoid the complexity of infrastructure management while still benefiting from scalable, automated data collection.

#LightweightDataExtraction#AutomatedDataExtraction#StreamlineDataExtraction#ServerlessWebScraping#DataMining


Building APIs for Communication Between Client and Server

In the realm of modern web development, building efficient and reliable APIs (Application Programming Interfaces) is fundamental for seamless communication between the client and server. APIs act as the bridge that connects the frontend (the user interface) with backend services, enabling data exchange, business logic execution, and dynamic content delivery. Understanding how to design and implement APIs effectively is a core skill for any aspiring developer and a vital part of full-stack developer classes.

An API essentially outlines a collection of guidelines and protocols for the interaction between software components. In web development, APIs typically adhere to the RESTful architectural style, which uses standard HTTP methods such as GET, POST, PUT, and DELETE to perform operations on resources. Because each request is stateless and carries everything the server needs to process it, RESTful APIs are scalable, easy to maintain, and accessible across different platforms and devices.

The process of building APIs begins with defining the endpoints—the specific URLs that clients can request to access or manipulate data. Designing clear, intuitive endpoints that follow naming conventions improves developer experience and makes the API easier to use. For instance, endpoints that represent collections of resources typically use plural nouns, while actions are implied through HTTP methods.
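To make the naming convention concrete, here is a small framework-free sketch of a route table for a hypothetical `books` resource. The plural noun names the collection, and the HTTP method alone decides the action. The in-memory dictionary, the handler names, and the error messages are illustrative assumptions.

```python
import re

BOOKS = {1: {"id": 1, "title": "Dune"}}  # in-memory stand-in for a database


def list_books(body=None):
    return 200, list(BOOKS.values())


def create_book(body=None):
    new_id = max(BOOKS, default=0) + 1
    BOOKS[new_id] = {**(body or {}), "id": new_id}
    return 201, BOOKS[new_id]


def get_book(book_id, body=None):
    book = BOOKS.get(int(book_id))
    return (200, book) if book else (404, {"error": "book not found"})


def delete_book(book_id, body=None):
    if BOOKS.pop(int(book_id), None) is None:
        return 404, {"error": "book not found"}
    return 204, None


# Same URL, different method, different action.
ROUTES = [
    ("GET", re.compile(r"^/books$"), list_books),
    ("POST", re.compile(r"^/books$"), create_book),
    ("GET", re.compile(r"^/books/(\d+)$"), get_book),
    ("DELETE", re.compile(r"^/books/(\d+)$"), delete_book),
]


def dispatch(method, path, body=None):
    """Return (status, payload) for a request, or 404/405 when nothing matches."""
    path_matched = False
    for route_method, pattern, handler in ROUTES:
        match = pattern.match(path)
        if match is None:
            continue
        path_matched = True
        if route_method == method:
            return handler(*match.groups(), body=body)
    if path_matched:
        return 405, {"error": "method not allowed"}
    return 404, {"error": "no such endpoint"}
```

A real service would delegate this to a web framework's router; the table only shows how little the URL itself needs to say when the method carries the verb.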

Data exchange between client and server typically happens in JSON format, a lightweight and human-readable data interchange standard. JSON's simplicity allows both frontend and backend developers to easily parse, generate, and debug data, making it the preferred choice for APIs.

Security is paramount when building APIs. Developers must implement authentication and authorisation mechanisms to control who can access the API and what operations they can perform. Common techniques include API keys, OAuth tokens, and JSON Web Tokens (JWT). A strong grasp of these security practices is often emphasised in a full-stack developer course, preparing learners to build secure and reliable APIs.
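For illustration only, the sketch below shows the mechanics behind an HS256-signed JSON Web Token using the standard library: two base64url-encoded JSON segments plus an HMAC-SHA256 signature over them. The function names and the one-hour default lifetime are assumptions, and a production service would normally rely on a maintained JWT library rather than hand-rolled code like this.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data):
    """Base64url-encode bytes without padding, as JWT segments require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text):
    """Decode a base64url segment, restoring the stripped padding first."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims, secret, ttl_seconds=3600, now=None):
    """Return a signed token carrying the claims plus iat/exp timestamps."""
    now = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {**claims, "iat": now, "exp": now + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(token, secret, now=None):
    """Return the claims if the signature and expiry check out, otherwise None."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
    except (ValueError, UnicodeError):
        return None
    if header.get("alg") != "HS256":
        return None
    now = time.time() if now is None else now
    return claims if claims.get("exp", 0) > now else None
```

The secret is passed as bytes and never leaves the server; the client only ever holds the token, which it sends back on each request.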

Error handling and status codes are essential components of API design. Clear and consistent responses allow clients to understand the outcome of their requests and handle exceptions gracefully. Standard HTTP status codes such as 200 (OK), 404 (Not Found), and 500 (Internal Server Error) convey success or failure, while descriptive error messages help diagnose issues quickly.
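A small sketch of a consistent error body, pairing the numeric status with its standard reason phrase from Python's http.HTTPStatus and a human-readable detail. The JSON shape is an assumption, not a standard.

```python
import json
from http import HTTPStatus


def error_response(status, detail):
    """Return (status_code, json_body) with the same structure for every error."""
    status = HTTPStatus(status)
    body = {"error": {"code": status.value, "reason": status.phrase, "detail": detail}}
    return status.value, json.dumps(body)
```

For instance, `error_response(404, "book 7 does not exist")` gives the client both the machine-readable code and a message a developer can act on.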

Building scalable APIs involves considering performance optimisation strategies. Techniques like request throttling, caching, and pagination prevent server overload and improve response times, especially when the API handles large datasets or high traffic volumes. Implementing these optimisations helps the API remain responsive and reliable under heavy use.
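Of these techniques, pagination is the simplest to sketch. The helper below slices a result list into pages and caps the page size so a client cannot request an unbounded response. The parameter names and the default limits are assumptions.

```python
def paginate(items, page=1, per_page=20, max_per_page=100):
    """Return one page of items plus the metadata a client needs to fetch the rest."""
    per_page = max(1, min(per_page, max_per_page))  # clamp client-supplied page size
    total = len(items)
    total_pages = max(1, -(-total // per_page))  # ceiling division
    page = max(1, min(page, total_pages))  # clamp out-of-range page numbers
    start = (page - 1) * per_page
    return {
        "items": items[start:start + per_page],
        "page": page,
        "per_page": per_page,
        "total": total,
        "total_pages": total_pages,
    }
```

Against a database the same idea is usually expressed as a limit and offset (or a cursor) in the query, so that only one page of rows is ever loaded.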

Another important consideration is versioning. As APIs evolve to include new features or improvements, maintaining backward compatibility prevents breaking existing clients. Versioning strategies might include embedding the version number in the URL or specifying it in request headers.
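Both strategies can be sketched in one small resolver: it looks for a `/v<N>/` prefix in the URL first and falls back to a request header. The `Accept-Version` header name, the supported version set, and the default are assumptions chosen for the example.

```python
import re

DEFAULT_VERSION = 1
SUPPORTED_VERSIONS = {1, 2}  # hypothetical set of live versions


def resolve_version(path, headers):
    """Return (version, path_without_prefix), preferring the URL over the header."""
    match = re.match(r"^/v(\d+)(/.*)?$", path)
    if match:
        version = int(match.group(1))
        rest = match.group(2) or "/"
    else:
        header = headers.get("Accept-Version")  # hypothetical header name
        version = int(header) if header and header.isdigit() else DEFAULT_VERSION
        rest = path
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported API version: {version}")
    return version, rest
```

Requests that name no version fall through to the default, which is what keeps existing clients working when a newer version is introduced.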

Modern development practices encourage building APIs that are easy to test and document. Automated testing helps catch bugs early and verify that endpoints behave as expected under different conditions. Documentation tools, such as Swagger or Postman, provide interactive interfaces that allow developers and users to explore API capabilities, boosting adoption and collaboration.

Integration with databases and backend logic is a fundamental part of API development. APIs serve as an abstraction layer that exposes data and services while shielding clients from underlying complexities. This separation of concerns enhances security and maintainability, allowing backend systems to evolve independently of frontend applications.

Learning how to build robust APIs is a major focus in a full-stack developer course in Mumbai, where students gain hands-on experience designing, developing, and consuming APIs in real-world projects. This exposure equips them with the skills needed to build complete applications that are modular, scalable, and user-friendly.

In conclusion, APIs are the lifeline of communication between clients or users and the server in modern web applications. Designing and building well-structured, secure, and efficient APIs enables developers to create dynamic, data-driven experiences that meet user needs. Through comprehensive training, such as a full-stack developer course in Mumbai, aspiring developers learn best practices and gain the confidence to build APIs that power today's digital world. Mastery of API development not only enhances career prospects but also opens doors to innovation in software development.

Business Name: Full Stack Developer Course In Mumbai Address: Tulasi Chambers, 601, Lal Bahadur Shastri Marg, near by Three Petrol Pump, opp. to Manas Tower, Panch Pakhdi, Thane West, Mumbai, Thane, Maharashtra 400602, Phone: 09513262822


There is something deeply exhausting — almost obscene — about watching architecture that bends, without resistance, to the delusions of the nouveau riche. Houses that speak loudly but say nothing. Constructions that rise as if trying to prove something — about money, perhaps, or about the owners’ idea of elegance. A kind of architecture that confuses shine with beauty, excess with sophistication.

I recognize these houses even before turning the corner. By their affected symmetry, by the materials that gleam without ever convincing. Sometimes by the overly polished marble, the excessive lighting, the lines that try to be modern but unravel into a generic, stateless baroque. There is a restlessness in them, as if they fear the world might not notice how much they cost.

What bothers me is not the exaggeration itself, but the lack of conviction. It’s the desperate attempt to appear relevant. To follow a trend. It’s not a matter of wealth. Money, when it comes without aesthetic grounding, without memory, without the grace of restraint, tends to be cruel to spaces. There is, indeed, an anxious haste, a string of choices made in fear of being wrong that, ironically, all go wrong together.

And then the years pass. And with them, what was meant to be timeless reveals itself as dated, everything rotting with wounded prestige. Deep down, there is something moving in that failure. A profound fear of not being taken seriously. Of not belonging. And it is this fear, so human, that makes these houses — with their disproportionate chandeliers and screaming marbles — less about architecture and more about misplaced grandeur.

Good architecture, I’ve come to believe, isn’t loud. It welcomes reference and history. It carries within it a sense of presence — not merely wealth, but thought, care, restraint. It is not afraid of patina.

That is why these spaces feel so sterile, even when opulent, performing theatrical excess. They lack intimacy. The art on the walls exists only to signal newfound money, not to provoke thought. Yet you can tell, at a glance, that this is the first time the owners could afford to be seen — and that they equate this visibility with worth.

I often wonder what these houses would say if they could speak honestly. They would confess their enervation. The strain of holding the attention of every single room, of being constantly on display.

What’s missing most is a sense of soul. Not a blank canvas for the owner’s ambition, but the dignity of building something that, like all things of value, comes from careful style and knowledge.

Perhaps what’s truly missing is the quality that emerges when a space is allowed to breathe, to age, to carry the rust of time well spent. Without that, all that remains is the surface — polished, expensive, and deeply forgettable for its lack of substance.


Top Mistakes Beginners Make in Flutter (and How to Avoid Them)