#autonomous AI

Explore tagged Tumblr posts

Text

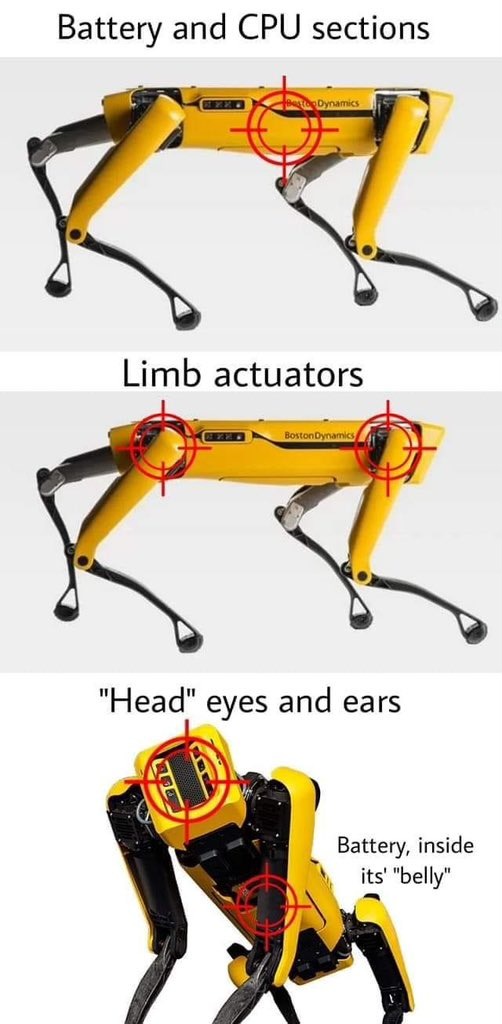

Watched Unknown: Killer Robots on Netflix last night. AI technology is now being developed with a level of autonomy that is truly frightening. If reports are true, autonomous AI weapons have already been used, Ukraine being one of the places, to kill humans with no human input in the decision to kill.

So you have a group of people on the show talking about the immense potential for good this technology can be used for. Which I’m confident is true. Almost gives you a sense of hope.

But then you have a group of people talking about how this technology can be used to give a military force a huge advantage on the battlefield. Things like AI swarming. Battle planning, in which an AI program is deciding how to conduct military operations. And these folks are all like “Yeah this is great, a huge game changer. It will make warfare more effective and save lives.” without really seeming to comprehend that one day it could easily end all human life.

And you are then hammered in the face with the reality that AI will probably be what ends the human race some day. But the real killer will have been greed mixed with hubris.

For future reference

53K notes


Text

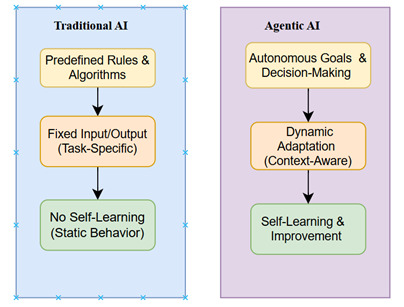

What Are Agentic AI Systems and How Do They Work Autonomously?

Agentic AI systems are autonomous, self-directed technologies capable of decision-making without constant human input. Learn how they work. Agentic AI systems are transforming how machines interact with their environments by acting independently, adapting goals, and executing decisions. These systems use advanced machine learning to function autonomously, revolutionizing industries in the USA,…

0 notes

Text

How Autonomous AI is Revolutionizing Business Workflows Today

The future is now. Autonomous AI agents are reshaping business operations by working independently to solve complex problems, accelerate workflows, and make smarter decisions—freeing humans to focus on creativity, strategy, and growth.

🔍 𝐇𝐨𝐰 𝐀𝐮𝐭𝐨𝐧𝐨𝐦𝐨𝐮𝐬 𝐀𝐈 𝐢𝐬 𝐭𝐫𝐚𝐧𝐬𝐟𝐨𝐫𝐦𝐢𝐧𝐠 𝐭𝐨𝐝𝐚𝐲’𝐬 𝐛𝐮𝐬𝐢𝐧𝐞𝐬𝐬:

✅ 𝐇𝐲𝐩𝐞𝐫-𝐄𝐟𝐟𝐢𝐜𝐢𝐞𝐧𝐭 𝐖𝐨𝐫𝐤𝐟𝐥𝐨𝐰𝐬 AI agents automate repetitive tasks—like scheduling, supply chain adjustments, and compliance checks—running 24/7 without human error or delays.

✅ 𝐑𝐞𝐚𝐥-𝐓𝐢𝐦𝐞 𝐃𝐚𝐭𝐚-𝐃𝐫𝐢𝐯𝐞𝐧 𝐃𝐞𝐜𝐢𝐬𝐢𝐨𝐧𝐬 They analyze vast datasets and provide business leaders with predictive insights and scenario simulations for smarter strategies.

✅ 𝐏𝐞𝐫𝐬𝐨𝐧𝐚𝐥𝐢𝐳𝐞𝐝 𝐂𝐮𝐬𝐭𝐨𝐦𝐞𝐫 𝐄𝐱𝐩𝐞𝐫𝐢𝐞𝐧𝐜𝐞𝐬 From chatbots to virtual assistants, AI agents deliver tailored customer support and recommendations that boost loyalty at scale.

✅ 𝐅𝐚𝐬𝐭𝐞𝐫 𝐈𝐧𝐧𝐨𝐯𝐚𝐭𝐢𝐨𝐧 They track market trends and user feedback, helping teams innovate rapidly and stay ahead of the competition.

✅ 𝐀𝐮𝐭𝐨𝐧𝐨𝐦𝐨𝐮𝐬 𝐂𝐲𝐛𝐞𝐫𝐬𝐞𝐜𝐮𝐫𝐢𝐭𝐲 AI agents detect and respond to threats instantly, learning new attack patterns to keep business data safe.

💡 𝐁𝐢𝐠 𝐏𝐢𝐜𝐭𝐮𝐫𝐞: Autonomous AI agents aren’t replacing humans—they’re augmenting them. Businesses embracing these intelligent partners now are building the agile, efficient enterprises of tomorrow.

📌 𝐓𝐡𝐞 𝐜𝐨𝐦𝐩𝐚𝐧𝐢𝐞𝐬 𝐭𝐡𝐚𝐭 𝐰𝐢𝐥𝐥 𝐰𝐢𝐧 𝐢𝐧 𝟐𝟎𝟐𝟓 𝐚𝐫𝐞 𝐭𝐡𝐨𝐬𝐞 𝐚𝐝𝐨𝐩𝐭𝐢𝐧𝐠 𝐚𝐮𝐭𝐨𝐧𝐨𝐦𝐨𝐮𝐬 𝐀𝐈 𝐚𝐠𝐞𝐧𝐭𝐬 𝐭𝐨 𝐰𝐨𝐫𝐤 𝐬𝐦𝐚𝐫𝐭𝐞𝐫, 𝐟𝐚𝐬𝐭𝐞𝐫, 𝐚𝐧𝐝 𝐦𝐨𝐫𝐞 𝐫𝐞𝐬𝐩𝐨𝐧𝐬𝐢𝐯𝐞𝐥𝐲.

𝐑𝐞𝐚𝐝 𝐌𝐨𝐫𝐞: https://technologyaiinsights.com/

𝐀𝐛𝐨𝐮𝐭 𝐔𝐒: AI Technology Insights (AITin) is the fastest-growing global community of thought leaders, influencers, and researchers specializing in AI, Big Data, Analytics, Robotics, Cloud Computing, and related technologies. Through its platform, AITin offers valuable insights from industry executives and pioneers who share their journeys, expertise, success stories, and strategies for building profitable, forward-thinking businesses.

𝐂𝐨𝐧𝐭𝐚𝐜𝐭 𝐔𝐬: 📞 +1 (520) 350-7212 ✉️ [email protected] 🏢 1846 E Innovation Park DR Site 100, ORO Valley AZ 85755

0 notes

Text

AI agents automate tasks and boost productivity in daily life and business. They shape the future of work, letting you focus on what matters.

0 notes

Text

AI That Knows What You Need Before You Do

Check out how Agentic AI tech is making waves across industries! 🚗💡 #AgenticAI #AI #TechInnovation #TechnologyTrends #AutonomousSystems #Tech Transformation

Suppose your coffee machine decides when you must have a caffeine boost. If it were up to me, I’d be the happiest person. So meet Agentic AI, the smart technology that doesn’t wait for you to give it instructions. It says, “You don’t tell me what to do, I tell you what to do!” 😊 Unlike a traditional AI assistant that responds to prompts, Agentic AI is a smart assistant that, along with responding…


#Agentic AI#Agentic AI Artificial Intelligence Autonomous AI Smart Technology AI Revolution AI in Industries Future of AI AI Automation AI Assistants Se#AI Assistants#AI Automation#AI Decision-Making#AI in Finance#AI in Healthcare#AI in Industries#AI in Supply Chain#AI Innovations#AI Personalization#AI Revolution#Artificial Intelligence#Autonomous AI#Autonomous Systems#Future of AI#machine learning#Self-Driving Cars#Smart Technology#Tech Transformation#Technology Trends

1 note


Text

We Need to Regulate and Defend Ourselves From Rogue Autonomous AI and AGI Systems

0 notes

Text

Experiment #3.1: The Birth of Digital Minds: My Quest to Create AI Employees

Picture this: a bustling office where no human steps foot, yet work hums along like clockwork. Screens flicker with activity, data flows seamlessly, and decisions are made in milliseconds. This isn’t science fiction. It’s the future I’m building at Uncultured AI. I’m about to tell you a story that began with a simple question: “What if AI could run a company?” Not just assist, mind you, but…

#AI Content Creation#AI Employees#AI-Driven Business#Autonomous AI#Business Innovation#Digital Workforce#Future of Work#Future Technology#Innovation

0 notes

Text

OpenAI introduces five-level framework to track progress towards Human-Level AI

OpenAI has introduced a structured framework comprising five levels to gauge its advancement in developing artificial intelligence capable of surpassing human capabilities, marking a significant step in its quest for Artificial General Intelligence (AGI). The classification system, a key component of OpenAI’s AI development strategy, was unveiled during an all-hands meeting. It delineates the…

0 notes

Text

Since y'all liked that post about Tesla removing the gear selectors in their cars, did you also know that Elon Musk is promising v12.0 of Autopilot Full Self Driving will be "much better" than previous versions? He's claiming it will be the first version without the "Beta" label and will be basically as good as or better than a human driver. To show this off, he took to Twitter Live and streamed a 45-minute drive from Twitter HQ to Mark Zuckerberg's house. Live doxxing aside, the best part of this live stream was

A: When the vehicle came to a stop at a red light, waited a few seconds, and then attempted to run the red light while traffic was actively going through the intersection, causing Musk to slam on the brakes and deactivate the system.

B: When the vehicle got caught in a Yellow Light and was taking way longer than necessary to stop, prompting a verbal "I hope it stops," panic from Musk.

Full Self Driving my ass.

#rambles#tesla#elon musk#fuck tesla#fuck elon musk#ai#autonomous cars#car#cars#self driving#public transportation#fuck cars

304 notes


Text

AI‑Native Humanoid Robots in 2025: Korea’s Strategic Alliances & Global Real‑World Applications

AI‑native humanoid robots complete complex industrial tasks worldwide, leveraging Korea’s K‑Humanoid Alliance, global partnerships, and domestic manufacturing innovation. AI-native humanoid robots encompass autonomous, intelligent androids powered by on‑device AI and advanced sensors, reshaping real‑world applications globally. Visit now 🤖 Strategic Alliances in Korea South Korea unveiled the…

0 notes

Text

CAP theorem in ML: Consistency vs. availability

New Post has been published on https://thedigitalinsider.com/cap-theorem-in-ml-consistency-vs-availability/


The CAP theorem has long been the unavoidable reality check for distributed database architects. However, as machine learning (ML) evolves from isolated model training to complex, distributed pipelines operating in real-time, ML engineers are discovering that these same fundamental constraints also apply to their systems. What was once considered primarily a database concern has become increasingly relevant in the AI engineering landscape.

Modern ML systems span multiple nodes, process terabytes of data, and increasingly need to make predictions with sub-second latency. In this distributed reality, the trade-offs between consistency, availability, and partition tolerance aren’t academic — they’re engineering decisions that directly impact model performance, user experience, and business outcomes.

This article explores how the CAP theorem manifests in AI/ML pipelines, examining specific components where these trade-offs become critical decision points. By understanding these constraints, ML engineers can make better architectural choices that align with their specific requirements rather than fighting against fundamental distributed systems limitations.

Quick recap: What is the CAP theorem?

The CAP theorem, formulated by Eric Brewer in 2000, states that in a distributed data system, you can guarantee at most two of these three properties simultaneously:

Consistency: Every read receives the most recent write or an error

Availability: Every request receives a non-error response (though not necessarily the most recent data)

Partition tolerance: The system continues to operate despite network failures between nodes

Traditional database examples illustrate these trade-offs clearly:

CA systems: Traditional relational databases like PostgreSQL prioritize consistency and availability but struggle when network partitions occur.

CP systems: Databases like HBase or MongoDB (in certain configurations) prioritize consistency over availability when partitions happen.

AP systems: Cassandra and DynamoDB favor availability and partition tolerance, adopting eventual consistency models.

What’s interesting is that these same trade-offs don’t just apply to databases — they’re increasingly critical considerations in distributed ML systems, from data pipelines to model serving infrastructure.
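To make the CP versus AP distinction concrete, here is a minimal Python sketch of a single replica read during a partition. The `Replica` class, the `read` function, and the `reachable_primary` flag are hypothetical names invented for illustration; real databases decide this through quorum and replication protocols, not a boolean.

```python
from dataclasses import dataclass


class Unavailable(Exception):
    """Raised when a CP-style read cannot confirm it has the latest write."""


@dataclass
class Replica:
    value: str
    reachable_primary: bool = True  # False simulates a network partition


def read(replica: Replica, mode: str) -> str:
    """Read from a replica under a 'CP' or 'AP' policy (illustrative only)."""
    if mode == "CP":
        # Consistency first: refuse to answer if freshness cannot be verified.
        if not replica.reachable_primary:
            raise Unavailable("cannot confirm latest write during partition")
        return replica.value
    if mode == "AP":
        # Availability first: always answer, possibly with stale data.
        return replica.value
    raise ValueError(f"unknown mode: {mode}")


partitioned = Replica(value="v1", reachable_primary=False)
print(read(partitioned, "AP"))  # answers with whatever the replica holds
try:
    read(partitioned, "CP")
except Unavailable as exc:
    print("CP read refused:", exc)
```

The same request gets an answer under the AP policy and an error under the CP policy, which is the trade-off the rest of the article maps onto ML components.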


Where the CAP theorem shows up in ML pipelines

Data ingestion and processing

The first stage where CAP trade-offs appear is in data collection and processing pipelines:

Stream processing (AP bias): Real-time data pipelines using Kafka, Kinesis, or Pulsar prioritize availability and partition tolerance. They’ll continue accepting events during network issues, but may process them out of order or duplicate them, creating consistency challenges for downstream ML systems.

Batch processing (CP bias): Traditional ETL jobs using Spark, Airflow, or similar tools prioritize consistency — each batch represents a coherent snapshot of data at processing time. However, they sacrifice availability by processing data in discrete windows rather than continuously.

This fundamental tension explains why Lambda and Kappa architectures emerged — they’re attempts to balance these CAP trade-offs by combining stream and batch approaches.
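One common way downstream consumers cope with duplicated or out-of-order stream events is to deduplicate on an event id and re-order by event time before computing features. The sketch below assumes each event is a dict with `id`, `ts`, and `value` keys; that shape and the function name are assumptions for illustration, not part of any particular streaming library.

```python
from typing import Iterable

Event = dict  # assumed shape: {"id": str, "ts": int, "value": float}


def dedupe_and_order(events: Iterable[Event]) -> list[Event]:
    """Drop repeated event ids (keeping the first seen) and sort by event time."""
    seen: set[str] = set()
    unique: list[Event] = []
    for event in events:
        if event["id"] in seen:
            continue  # redelivery produced a duplicate; skip it
        seen.add(event["id"])
        unique.append(event)
    # Order by the event's own timestamp, not arrival order; ties broken by id.
    return sorted(unique, key=lambda e: (e["ts"], e["id"]))


arrived = [
    {"id": "b", "ts": 2, "value": 1.0},
    {"id": "a", "ts": 1, "value": 0.5},
    {"id": "b", "ts": 2, "value": 1.0},  # duplicate delivery
]
print([e["id"] for e in dedupe_and_order(arrived)])
```

In a long-running pipeline the `seen` set would need a bounded window; this version only shows the idea on a finite batch.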

Feature Stores

Feature stores sit at the heart of modern ML systems, and they face particularly acute CAP theorem challenges.

Training-serving skew: One of the core jobs of a feature store is to keep features consistent between training and serving environments. However, achieving this while maintaining high availability during network partitions is extraordinarily difficult.

Consider a global feature store serving multiple regions: Do you prioritize consistency by ensuring all features are identical across regions (risking unavailability during network issues)? Or do you favor availability by allowing regions to diverge temporarily (risking inconsistent predictions)?
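The regional question above can be framed as a staleness bound on local reads. The following sketch is one possible framing, not how any specific feature store works: `FeatureValue`, `read_regional`, and the `max_staleness_s` parameter are hypothetical, and the timestamps are assumed to come from a shared clock.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeatureValue:
    value: float
    written_at: float  # seconds on a shared clock (an assumption of this sketch)


def read_regional(
    local: Optional[FeatureValue], now: float, max_staleness_s: Optional[float]
) -> Optional[float]:
    """Read the local region's copy of a feature.

    max_staleness_s=None   -> availability first: serve whatever is cached.
    max_staleness_s=<secs> -> lean toward consistency: refuse older values.
    """
    if local is None:
        return None
    if max_staleness_s is not None and now - local.written_at > max_staleness_s:
        return None  # caller must treat this as "feature unavailable"
    return local.value


cached = FeatureValue(value=0.7, written_at=100.0)
print(read_regional(cached, now=400.0, max_staleness_s=None))  # serves stale value
print(read_regional(cached, now=400.0, max_staleness_s=60.0))  # refuses
```

Tightening or loosening the bound per feature moves a region along the spectrum between the two extremes the paragraph describes.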

Model training

Distributed training introduces another domain where CAP trade-offs become evident:

Synchronous SGD (CP bias): Frameworks like distributed TensorFlow with synchronous updates prioritize consistency of parameters across workers, but can become unavailable if some workers slow down or disconnect.

Asynchronous SGD (AP bias): Allows training to continue even when some workers are unavailable but sacrifices parameter consistency, potentially affecting convergence.

Federated learning: Perhaps the clearest example of CAP in training — heavily favors partition tolerance (devices come and go) and availability (training continues regardless) at the expense of global model consistency.
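The difference between the synchronous and asynchronous update rules can be seen on a toy one-dimensional problem. This is a sketch under simplifying assumptions: the loss is (w - 3)^2 / 2, workers are plain list entries, and `sync_step` and `async_step` are hypothetical helpers rather than any framework's API.

```python
from typing import Optional

TARGET = 3.0  # minimiser of the toy loss (w - TARGET)**2 / 2


def grad(w: float) -> float:
    """Gradient of the toy loss at w."""
    return w - TARGET


def sync_step(w: float, worker_up: list[bool], lr: float = 0.1) -> Optional[float]:
    """Synchronous step: needs every worker; returns None (no progress) otherwise."""
    if not all(worker_up):
        return None  # training stalls until the missing worker returns
    grads = [grad(w) for _ in worker_up]  # all workers see the same parameters
    return w - lr * sum(grads) / len(grads)


def async_step(w: float, seen_by_worker: list[Optional[float]], lr: float = 0.1) -> float:
    """Asynchronous step: apply each available worker's gradient as it arrives.

    Each entry is the (possibly stale) parameter value that worker computed
    its gradient from; None means the worker is offline and is skipped.
    """
    for seen in seen_by_worker:
        if seen is not None:
            w -= lr * grad(seen)
    return w


print(sync_step(0.0, [True, False]))       # None: one worker down, no update
print(async_step(0.0, [0.0, None, -1.0]))  # progresses using a stale gradient
```

The asynchronous version keeps moving, but the third worker's gradient was computed at w = -1.0 rather than at the current parameters, which is the consistency cost the text refers to.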

Model serving

When deploying models to production, CAP trade-offs directly impact user experience:

Hot deployments vs. consistency: Rolling updates to models can lead to inconsistent predictions during deployment windows — some requests hit the old model, some the new one.

A/B testing: How do you ensure users consistently see the same model variant? This becomes a classic consistency challenge in distributed serving.

Model versioning: Immediate rollbacks vs. ensuring all servers have the exact same model version is a clear availability-consistency tension.
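For the A/B testing point, one widely used way to keep a user on the same variant without any shared state is to derive the assignment from a hash of the user id. The function name, the experiment label, and the 50/50 split below are assumptions for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'treatment' or 'control'.

    Every server computes the same answer from the same inputs, so no
    coordination is needed to keep a user's variant consistent.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


first = assign_variant("user-42", "ranker-v2")
second = assign_variant("user-42", "ranker-v2")
print(first == second)  # the same user always lands in the same variant
```

Including the experiment name in the hash input keeps assignments independent across experiments.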


Case studies: CAP trade-offs in production ML systems

Real-time recommendation systems (AP bias)

E-commerce and content platforms typically favor availability and partition tolerance in their recommendation systems. If the recommendation service is momentarily unable to access the latest user interaction data due to network issues, most businesses would rather serve slightly outdated recommendations than no recommendations at all.

Netflix, for example, has explicitly designed its recommendation architecture to degrade gracefully, falling back to increasingly generic recommendations rather than failing if personalization data is unavailable.

Healthcare diagnostic systems (CP bias)

In contrast, ML systems for healthcare diagnostics typically prioritize consistency over availability. Medical diagnostic systems can’t afford to make predictions based on potentially outdated information.

A healthcare ML system might refuse to generate predictions rather than risk inconsistent results when some data sources are unavailable — a clear CP choice prioritizing safety over availability.

Edge ML for IoT devices (AP bias)

IoT deployments with on-device inference must handle frequent network partitions as devices move in and out of connectivity. These systems typically adopt AP strategies:

Locally cached models that operate independently

Asynchronous model updates when connectivity is available

Local data collection with eventual consistency when syncing to the cloud

Google’s Live Transcribe for hearing impairment uses this approach — the speech recognition model runs entirely on-device, prioritizing availability even when disconnected, with model updates happening eventually when connectivity is restored.

Strategies to balance CAP in ML systems

Given these constraints, how can ML engineers build systems that best navigate CAP trade-offs?

Graceful degradation

Design ML systems that can operate at varying levels of capability depending on data freshness and availability:

Fall back to simpler models when real-time features are unavailable

Use confidence scores to adjust prediction behavior based on data completeness

Implement tiered timeout policies for feature lookups

DoorDash’s ML platform, for example, incorporates multiple fallback layers for their delivery time prediction models — from a fully-featured real-time model to progressively simpler models based on what data is available within strict latency budgets.
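A layered fallback of this kind can be sketched as an ordered list of tiers, each declaring the features it needs. This is an illustrative sketch only and does not describe any company's actual platform; the tier names, feature names, and coefficients are all made up.

```python
from typing import Callable, Sequence

Features = dict[str, float]
Model = Callable[[Features], float]
Tier = tuple[str, set[str], Model]  # (name, required feature names, model)


def predict_with_fallback(tiers: Sequence[Tier], features: Features) -> tuple[str, float]:
    """Use the first tier whose required features are all present."""
    for name, required, model in tiers:
        if required.issubset(features):
            return name, model(features)
    raise RuntimeError("no tier could serve the request")


# Hypothetical delivery-time models, from richest to simplest.
TIERS: list[Tier] = [
    ("realtime", {"distance_km", "live_traffic"},
     lambda f: 5 + 2 * f["distance_km"] + 10 * f["live_traffic"]),
    ("simple", {"distance_km"}, lambda f: 8 + 2.5 * f["distance_km"]),
    ("default", set(), lambda f: 30.0),
]

print(predict_with_fallback(TIERS, {"distance_km": 3.0, "live_traffic": 0.5}))
print(predict_with_fallback(TIERS, {"distance_km": 3.0}))  # live feature missing
print(predict_with_fallback(TIERS, {}))                    # nothing available
```

Returning the tier name alongside the prediction makes it easy to log how often each fallback level is used.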

Hybrid architectures

Combine approaches that make different CAP trade-offs:

Lambda architecture: Use batch processing (CP) for correctness and stream processing (AP) for recency

Feature store tiering: Store consistency-critical features differently from availability-critical ones

Materialized views: Pre-compute and cache certain feature combinations to improve availability without sacrificing consistency

Uber’s Michelangelo platform exemplifies this approach, maintaining both real-time and batch paths for feature generation and model serving.
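The Lambda idea of merging a batch view with a speed view at query time can be shown with a simple click counter. The event shape, the cutoff timestamp, and the function names are assumptions for this sketch; production systems would back each view with separate storage.

```python
from collections import Counter
from typing import Iterable

Click = tuple[str, int]  # (user_id, event_timestamp), an assumed event shape


def build_views(events: Iterable[Click], batch_cutoff_ts: int) -> tuple[Counter, Counter]:
    """Split events into a batch view (<= cutoff) and a speed view (> cutoff)."""
    batch_view: Counter = Counter()
    speed_view: Counter = Counter()
    for user_id, ts in events:
        if ts <= batch_cutoff_ts:
            batch_view[user_id] += 1  # recomputed in full by the batch job
        else:
            speed_view[user_id] += 1  # incrementally updated from the stream
    return batch_view, speed_view


def serve_click_count(user_id: str, batch_view: Counter, speed_view: Counter) -> int:
    """Serving layer: combine the complete-but-old view with the recent one."""
    return batch_view[user_id] + speed_view[user_id]


events = [("u1", 10), ("u1", 20), ("u2", 15), ("u1", 120)]
batch, speed = build_views(events, batch_cutoff_ts=100)
print(serve_click_count("u1", batch, speed))
```

When the next batch run moves the cutoff forward, the speed view for the covered interval can be discarded, which bounds how much approximate state the system carries.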

Consistency-aware training

Build consistency challenges directly into the training process:

Train with artificially delayed or missing features to make models robust to these conditions

Use data augmentation to simulate feature inconsistency scenarios

Incorporate timestamp information as explicit model inputs

Facebook’s recommendation systems are trained with awareness of feature staleness, allowing the models to adjust predictions based on the freshness of available signals.
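Training with artificially missing features can be as simple as a per-row augmentation that blanks features at random and records that it did so. The sketch below is a generic illustration and makes no claim about how any named company trains its models; `augment_with_missing`, the `_missing` suffix, and the default fill value are assumptions.

```python
import random


def augment_with_missing(
    row: dict[str, float], drop_prob: float, rng: random.Random, default: float = 0.0
) -> dict[str, float]:
    """Randomly blank features and add a '<name>_missing' indicator for each.

    The indicator lets the model learn to discount a feature when it is
    absent at serving time instead of trusting the default value.
    """
    out: dict[str, float] = {}
    for name, value in row.items():
        missing = rng.random() < drop_prob
        out[name] = default if missing else value
        out[f"{name}_missing"] = 1.0 if missing else 0.0
    return out


rng = random.Random(0)  # seeded so the augmentation is reproducible
row = {"recent_clicks": 4.0, "session_length_s": 310.0}
print(augment_with_missing(row, drop_prob=0.3, rng=rng))
```

The same pattern extends to staleness: instead of a binary indicator, the added column can carry the feature's age in seconds.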

Intelligent caching with TTLs

Implement caching policies that explicitly acknowledge the consistency-availability trade-off:

Use time-to-live (TTL) values based on feature volatility

Implement semantic caching that understands which features can tolerate staleness

Adjust cache policies dynamically based on system conditions
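A per-feature TTL cache that can optionally serve stale values captures the first two bullets above. This is a minimal sketch: the class name, the feature names, and the TTL values are hypothetical, and the clock is injected so the behaviour can be checked without waiting.

```python
from typing import Callable, Optional


class TTLFeatureCache:
    """Per-feature TTL cache; the clock is injected so behaviour is testable."""

    def __init__(self, ttl_s: dict[str, float], clock: Callable[[], float]):
        self._ttl_s = ttl_s  # hypothetical TTLs, shorter for volatile features
        self._clock = clock
        self._store: dict[str, tuple[float, float]] = {}  # name -> (value, stored_at)

    def put(self, name: str, value: float) -> None:
        self._store[name] = (value, self._clock())

    def get(self, name: str, allow_stale: bool = False) -> Optional[float]:
        """Return a fresh value, or a stale one only if the caller opts in."""
        entry = self._store.get(name)
        if entry is None:
            return None
        value, stored_at = entry
        fresh = self._clock() - stored_at <= self._ttl_s[name]
        return value if fresh or allow_stale else None


now = [0.0]  # mutable fake clock for the example
cache = TTLFeatureCache({"cart_size": 5.0, "home_country": 86400.0}, clock=lambda: now[0])
cache.put("cart_size", 3.0)
cache.put("home_country", 1.0)
now[0] = 60.0  # one minute later
print(cache.get("cart_size"))                    # expired: volatile feature
print(cache.get("cart_size", allow_stale=True))  # caller accepts staleness
print(cache.get("home_country"))                 # still fresh: slow-changing feature
```

The `allow_stale` flag is where the consistency-availability choice becomes explicit: the caller, not the cache, decides whether an old value is better than none.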


Design principles for CAP-aware ML systems

Understand your critical path

Not all parts of your ML system have the same CAP requirements:

Map your ML pipeline components and identify where consistency matters most vs. where availability is crucial

Distinguish between features that genuinely impact predictions and those that are marginal

Quantify the impact of staleness or unavailability for different data sources

Align with business requirements

The right CAP trade-offs depend entirely on your specific use case:

Revenue impact of unavailability: If ML system downtime directly impacts revenue (e.g., payment fraud detection), you might prioritize availability

Cost of inconsistency: If inconsistent predictions could cause safety issues or compliance violations, consistency might take precedence

User expectations: Some applications (like social media) can tolerate inconsistency better than others (like banking)

Monitor and observe

Build observability that helps you understand CAP trade-offs in production:

Track feature freshness and availability as explicit metrics

Measure prediction consistency across system components

Monitor how often fallbacks are triggered and their impact
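The metrics listed above can start out as a small in-process accumulator before being wired into a real monitoring system. The `ServingStats` class and its field names are hypothetical; a production setup would export these values to whatever metrics backend is already in use.

```python
from dataclasses import dataclass, field


@dataclass
class ServingStats:
    """Accumulates fallback and freshness observations for served predictions."""

    requests: int = 0
    fallbacks: int = 0
    feature_ages_s: list[float] = field(default_factory=list)

    def record(self, used_fallback: bool, feature_age_s: float) -> None:
        """Record one served prediction."""
        self.requests += 1
        self.fallbacks += int(used_fallback)
        self.feature_ages_s.append(feature_age_s)

    def fallback_rate(self) -> float:
        """Fraction of requests that were served by a fallback path."""
        return self.fallbacks / self.requests if self.requests else 0.0

    def max_feature_age_s(self) -> float:
        """Oldest feature age observed, in seconds."""
        return max(self.feature_ages_s, default=0.0)


stats = ServingStats()
for used_fallback, age in [(False, 2.0), (True, 45.0), (False, 1.0), (True, 30.0)]:
    stats.record(used_fallback, age)
print(stats.fallback_rate(), stats.max_feature_age_s())
```

Tracking both numbers together shows whether rising staleness is what drives the fallbacks, or whether they have another cause.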


#agent#Agentic AI#agents#ai#ai agent#AI Engineering#ai summit#AI/ML#amp#applications#applied AI#approach#architecture#Article#Articles#artificial#Artificial General Intelligence#authentication#autonomous#autonomous ai#awareness#banking#Behavior#Bias#budgets#Business#cache#Calendar#Case Studies#challenge

0 notes

Text

i have learned the art of blinkie making

#🧁 sweet shenanigans#jiraiblr#blinkie#cevio ai#cevio#decentralized autonomous golem reml#web resources

17 notes


Text

Trying to bully my brother out of using AI for math homework when there's at least, like, perfectly good online calculators that he could use instead if he wanted a cheap but reliable way out, but then I remembered he went to a career fair last year where he got told by A Person With A Job In The Industry that he should be using ChatGPT to write more code, so like. I think I might not be able to convince him on this one, and also might just stop trusting any technology designed since 2023 to do anything correctly or safely ever

#kids these fucking days don't even know how to find an integral calculator on wolfram alpha or whatever. doomed society#i think some versions of chat GPT can call wolfram alpha's own AI now? but why not just? use a wolfram alpha calculator?#don't read my tags below this if you have anxiety#but i know. i just know in my heart. that at least one industry professional out there#has used ChatGPT to write code to be implemented in autonomous or semi-autonomous vehicles#it is a statistical certainty.#and i will be spending the rest of my life pretending that i don't know that for a fact

32 notes


Text

Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping various aspects of how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

17 notes


Text

On the object level, I do find myself just very frustrated with any AI singularity discourse topics nowadays. Part of this is just age - I think everyone over time runs out of patience with the sort of high-concept, "really makes you think" discussions as their own interests crystallize and you have enough of them that their circularity and lack of purpose becomes clear. Your brain can just "skip to the end" so cleanly because the intermediate steps are all cleverness, no utility, and you know that now.

Part of it is how ridiculous the proposals are - "6 months of AI research pause" like 'AI research' isn't a thing, that is not a category of human activity one can pause. The idea of building out a regulatory framework that defines what that means is itself at minimum a 6 month process. These orgs have legal rights! Does the federal government even have jurisdiction to tell a company what code it can run on servers it owns because of 'x-risk'? Again, your brain can skip to the end; a legal pause on AI research is not happening in the current environment, at all, 0% odds.

And the other part, which I admit my own humility on being not an AI researcher, is that I still am not convinced any of these new tools are at all progress towards actual general intelligence because they are completely without agency. It seems very obviously so and the fact that Chat-GPT is not ever going to prompt itself to do things without human input is just handwaved away. The idea that it will ship itself nanomachine recipes and hack Boston Dynamics bots to build them is a fantasy, that isn't how any of this works. There is always some new version of the AI that can do this, but it's vaporware, no one is really building it.

It's not shade on the real AI X-risk people, I have heard their arguments, I get it and they are smart and thorough. It's just a topic where every one of their arguments I ever engage with leaves me with the feeling that I am reading something not based in reality. It's a feeling that is hard to push through for me.

63 notes
