#in-context learning

Explore tagged Tumblr posts

Text

mom and baby

#just for context on the first two images i hc kris as autistic and wanted to portray them having sensory issues w their hair specifically#and toriel is still learning about the things that make them uncomfortable as kris is a nonverbal/very quiet kid#and they dont really express their discomfort with words#deltarune#kris dreemurr#toriel#fanart#my art!#tarteaumiau

23K notes

·

View notes

Text

MoRA: High-Rank Updating for Parameter-Efficient Fine-Tuning

New Post has been published on https://thedigitalinsider.com/mora-high-rank-updating-for-parameter-efficient-fine-tuning/

MoRA: High-Rank Updating for Parameter-Efficient Fine-Tuning

Owing to its robust performance and broad applicability when compared to other methods, LoRA or Low-Rank Adaption is one of the most popular PEFT or Parameter Efficient Fine-Tuning methods for fine-tuning a large language model. The LoRA framework employs two low-rank matrices to decompose, and approximate the updated weights in the FFT or Full Fine Tuning, and the LoRA framework modifies these trainable parameters accordingly by adjusting the rank of the matrices. The major benefit of implementing the process is that it facilitates the LoRA framework to merge these matrices without the inference latency after fine-tuning. Furthermore, although recent large language models deliver remarkable performance on in-context learning tasks, certain scenarios still require fine-tuning, and can be categorized broadly into three types. The first type, instruction tuning, aims to align LLMs better with end tasks and user preferences without enhancing the knowledge and capabilities of LLMs, an approach that simplifies the process of dealing with varied tasks and complex instructions. The second type includes complex reasoning tasks like mathematical problem solving. Finally, the third type is continual pretraining, an approach that attempts to enhance the overall domain-specific capabilities of large language models.

In this article, we will talk about whether low-rank updating impacts the performance of the LoRA framework as it has been observed that low-rank updating mechanism might hamper the ability of the large language model to learn and memorize new knowledge. Building on the same, in this article we will talk about MoRA, a new method that achieves high-rank updating while maintaining the same number of trainable parameters, by employing a square matrix. To achieve this, the MoRA framework reduces input dimension and increases output dimension for the square matrix by introducing the corresponding non-parameter operators. Furthermore, these operators ensure that the weight can be merged back into LLMs, which makes the MoRA framework deployable like LoRA.

This article aims to cover the MoRA framework in depth, and we explore the mechanism, the methodology, the architecture of the framework along with its comparison with state of the art frameworks. So let’s get started.

As the size and the capabilities of the language models are increasing, PEFT or Parameter Efficient Fine-Tuning is emerging as one of the most popular and efficient methods to adapt LLMs to specific downstream tasks. Compared to FFT or Full Fine Tuning, that updates all parameters, PEFT only modifies a fraction of the total parameters as on some tasks it can achieve similar performance as FFT by updating less than 1% of the total parameters, thus reducing memory requirements for optimizer significantly while facilitating the storage and deployment of models. Furthermore, amongst all the existing PEFT methods, LoRA is the one most popular today, especially for LLMs. One of the major reasons why LoRA methods deliver better performance when compared to PEFT methods like adapters or prompt tuning is that LoRA uses low-rank matrices to update parameters, with the framework having the control to merge these matrices into the original model parameters, without adding to the computational requirements during inference. Although there are numerous methods that attempt to improve LoRA for large language models, a majority of these models rely on GLUE to validate their efficiency, either by requiring few trainable parameters, or by achieving better performance.

Furthermore, experiments conducted on LoRA across a wide array of tasks including continual pretraining, mathematical reasoning, and instruction tuning indicate that although LoRA-based frameworks demonstrate similar performance across these tasks, and deliver performance on instruction tuning tasks comparable to FFT-based methods. However, the LoRA-based models could not replicate the performance on continual pretraining, and mathematical reasoning tasks. A possible explanation for this lack of performance can be the reliance on LoRA on low-rank matrix updates, since the low-rank update matrix might struggle to estimate the full-rank updates in FFT, especially in memory intensive tasks that require memorizing domain-specific knowledge like continual pretraining. Since the rank of the low-rank update matrix is smaller than the full rank, it caps the capacity to store new information using fine-tuning. Building on these observations, the MoRA attempts to maximize the rank in the low-rank update matrix while maintaining the same number trainable parameters, by employing a square matrix as opposed to the use of low-rank matrices in traditional LoRA-based models. The following figure compares the MoRA framework with LoRA under the same number of trainable parameters.

In the above image, (a) represents LoRA, and (b) represents MoRA. W is the frozen weight from the model, M is the trainable matrix in MoRA, A and B are trainable low-rank matrices in LoRA, and r represents the rank in LoRA and MoRA. As it can be observed, the MoRA framework demonstrates a greater capacity than LoRA-based models with a large rank. Furthermore, the MoRA framework develops corresponding non-parameter operators to reduce the input dimension and increase the output dimension for the trainable matrix M. Furthermore, the MoRA framework grants the flexibility to use a low-rank update matrix to substitute the trainable matrix M and the operators, ensuring the MoRA method can be merged back into the large language model like LoRA. The following table compares the performance of FFT, LoRA, LoRA variants and our method on instruction tuning, mathematical reasoning and continual pre-training tasks.

MoRA : Methodology and Architecture

The Influence of Low-Rank Updating

The key principle of LoRA-based models is to estimate full-rank updates in FFT by employing low-rank updates. Traditionally, for a given pre-trained parameter matrix, LoRA employs two low-rank matrices to calculate the weight update. TO ensure the weight updates are 0 when the training begins, the LoRA framework initializes one of the low-rank matrices with a Gaussian distribution while the other with 0. The overall weight update in LoRA exhibits a low-rank when compared to fine-tuning in FFT, although low-rank updating in LoRA delivers performance on-par with full-rank updating on specific tasks including instruction tuning and text classification. However, the performance of the LoRA framework starts deteriorating for tasks like continual pretraining, and complex reasoning. On the basis of these observations, MoRA proposes that it is easier to leverage the capabilities and original knowledge of the LLM to solve tasks using low-rank updates, but the model struggles to perform tasks that require enhancing capabilities and knowledge of the large language model.

Methodology

Although LLMs with in-context learning are a major performance improvement over prior approaches, there are still contexts that rely on fine-tuning broadly falling into three categories. There are LLMs tuning for instructions, by aligning with user tasks and preferences, which do not considerably increase the knowledge and capabilities of LLMs. This makes it easier to work with multiple tasks and comprehend complicated instructions. Another type is about involving complex reasoning tasks that are like mathematical problem-solving for which general instruction tuning comes short when it comes to handling complex symbolic multi-step reasoning tasks. Most related research is in order to improve the reasoning capacities of LLMs, and it either requires designing corresponding training datasets based on larger teacher models such as GPT-4 or rephrasing rationale-corresponding questions along a reasoning path. The third type, continual pretraining, is designed to improve the domain-specific abilities of LLMs. Unlike instruction tuning, fine-tuning is required to enrich related domain specific knowledge and skills.

Nevertheless, the majority of the variants of LoRA almost exclusively use GLUE instruction tuning or text classification tasks to evaluate their effectiveness in the context of LLMs. As fine-tuning for instruction tuning requires the least resources compared to other types, it may not represent proper comparison among LoRA variants. Adding reasoning tasks to evaluate their methods better has been a common practice in more recent works. However, we generally employ small training sets (even at 1M examples, which is quite large). LLMS struggle to learn proper reasoning from examples of this size. For example, some approaches utilize the GSM8K with only 7.5K training episodes. However, these numbers fall short of the SOTA method that was trained on 395K samples and they make it hard to judge the ability of these methods to learn the reasoning power of NLP.

Based on the observations from the influence of low-rank updating, the MoRA framework proposes a new method to mitigate the negative effects of low-rank updating. The basic principle of the MoRA framework is to employ the same trainable parameters to the maximum possible extent to achieve a higher rank in the low-rank update matrix. After accounting for the pre-trained weights, the LoRA framework uses two low-rank matrices A and B with total trainable parameters for rank r. However, for the same number of trainable parameters, a square matrix can achieve the highest rank, and the MoRA framework achieves this by reducing the input dimension, and increasing the output dimension for the trainable square matrix. Furthermore, these two functions ought to be non parameterized operators and expected to execute in linear time corresponding to the dimension.

MoRA: Experiments and Results

To evaluate its performance, the MoRA framework is evaluated on a wide array of tasks to understand the influence of high-rank updating on three tasks: memorizing UUID pairs, fine-tuning tasks, and pre-training.

Memorizing UUID Pairs

To demonstrate the improvements in performance, the MoRA framework is compared against FFT and LoRA frameworks on memorizing UUID pairs. The training loss from the experiment is reflected in the following image.

It is worth noting that for the same number of trainable parameters, the MoRA framework is able to outperform the existing LoRA models, indicating it benefitted from the high-rank updating strategy. The character-level training accuracy report at different training steps is summarized in the following table.

As it can be observed, when compared to LoRA, the MoRA framework takes fewer training steps to memorize the UUID pairs.

Fine-Tuning Tasks

To evaluate its performance on fine-tuning tasks, the MoRA framework is evaluated on three fine-tuning tasks: instruction tuning, mathematical reasoning, and continual pre-training, designed for large language models, along with a high-quality corresponding dataset for both the MoRA and LoRA models. The results of fine-tuning tasks are presented in the following table.

As it can be observed, on mathematical reasoning and instruction tuning tasks, both the LoRA and MoRA models return similar performance. However, the MORA model emerges ahead of the LoRA framework on continual pre-training tasks for both biomedical and financial domains, benefitting from high-rank update approach to memorize new knowledge. Furthermore, it is vital to understand that the three tasks are different from one another with different requirements, and different fine-tuning abilities.

Pre-Training

To evaluate the influence of high-rank updating on the overall performance, the transformer within the MoRA framework is trained from scratch on the C4 datasets, and performance is compared against the LoRA and ReLoRA models. The pre-training loss along with the corresponding complexity on the C4 dataset are demonstrated in the following figures.

As it can be observed, the MoRA model delivers better performance on pre-training tasks when compared against LoRA and ReLoRA models with the same amount of trainable parameters.

Furthermore, to demonstrate the impact of high-rank updating on the rank of the low-rank update matrix, the MoRA framework analyzes the spectrum of singular values for the learned low-rank update matrix by pre-training the 250M model, and the results are contained in the following image.

Final Thoughts

In this article, we have talked about whether low-rank updating impacts the performance of the LoRA framework as it has been observed that low-rank updating mechanism might hamper the ability of the large language model to learn and memorize new knowledge. Building on the same, in this article we will talk about MoRA, a new method that achieves high-rank updating while maintaining the same number of trainable parameters, by employing a square matrix. To achieve this, the MoRA framework reduces input dimension and increases output dimension for the square matrix by introducing the corresponding non-parameter operators. Furthermore, these operators ensure that the weight can be merged back into LLMs, which makes the MoRA framework deployable like LoRA.

#accounting#approach#architecture#Art#Article#Artificial Intelligence#Building#comparison#complexity#datasets#deployment#domains#effects#efficiency#Exhibits#explanation#financial#Fine Tuning#Fine Tuning LLM#Fraction#framework#Full#GPT#GPT-4#Grants#impact#Impacts#in-context learning#inference#it

0 notes

Text

Michael learns of Henry and William’s FNAF lore..

#myart#chloesimagination#comic#fnaf#five nights at freddy's#fnaf fanart#michael afton#william afton#henry emily#fnaf pizzeria simulator#Michael isn’t ready to learn about their lore#he doesn’t trust Williams word dude only lies#but Henry agreeing? now he’s worried#I kinda wish we got to have Henry and scraptrap etc talk#cause Henry’s lines directed at William go unbelievable hard#maybe they’ll get more scenes or at least context for them in the future#in games of course cause silver eyes is a lil different etc

6K notes

·

View notes

Text

i love being late to trends. my new years resolution is to be late to every trend ever. and you know im serious because im even late to new years.

(theres an inverse version of this by @chamiryokuroi , you should go check it out! i started making this before i saw theirs, but i think its cool that now theres both versions)

#art#fanart#digital art#dc comics#bernard dowd#timber#timbern#tim drake#tim drake fanart#bernard dowd fanart#red robin#red robin fanart#dc fanart#i dont usually do more graphic styles like this so it was a fun challenge#theres so many little parts to the original that i had to notice and include#i didnt wanna download or make my own heart and star stamps just to use them maybe 6 times each so those are all hand drawn#i adjusted the colors of the heart outlines like 6 times before i was satisfied#also the fact that bernard has a red jacket and pink shirt in this changes the color profile completely so i had to change some things about#-the OG colors so it fit in well#but im happy to report that i didnt use any major blend mode layers over everything at the end to get the colors to mesh well#which is a thing ive been doing for a long time but isnt very conducive to actually learning color theory#also also i spent like a full 45 minutes trying to get the text to look right#bc i dint have whatever font that is so i had to improvise with the fonts i did have and a little bit of editing#and then i had to duplicate it for the shadow and outline it and everything#it was pretty fun tho#seeing the end product was especially satisfying#i havent read ‘go for it nakamura!’ but i assume from context clues the little squid things on the cover are-#-calling him a simp/being supportive wingmen so i replaced them with steph and dick#who i imagine are watching bernard and tim’s relationship like a soapy romcom#and occasionally heckling them (affectionately!) when theyre being lovey-dovey

2K notes

·

View notes

Text

fanart of [REDACTED] because who doesn't love a good bord

#gravity falls#billford#bill cipher#stanford pines#m.png#there is so little context in this one theres no reason i cant post now right. right. right. right.#right.#one day i'll learn how to do blood better but this is fine for now#DONT WORRYYYYYYYY its his own blood

2K notes

·

View notes

Text

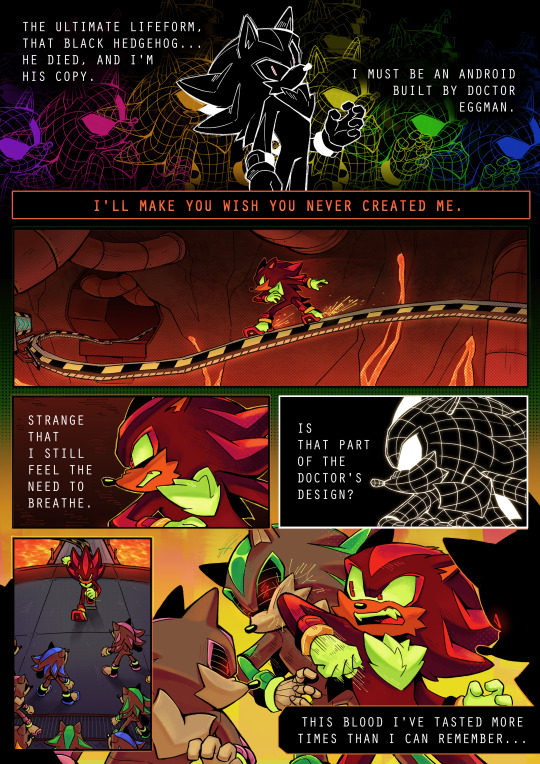

Messy comic of my SHIT ASS AU!!!!! 😭😭😭❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️❤️

have decided to call it dm dusk au Tehe. theres like a whole wordplay thing going on here . the kr word (not common. its js a term that exists) for meeting someone after a long time of not seeing them is haehoo. hae by itself means sun and hoo by itself means after. i was like okay sun after. and then i was like okay well sun after is like. sunset. and whats after sunset ? dusk. also the confession event happens at sunset . i assume some other important event happens during dusk in the aus main plot. Fuckkk im crazy

#my art#detey#for context they both start learning asl on their own and they treat it like a competition bc theyre annoying and stupid#learning asl to talk to each other is Too friendly so they treat it like a competiton so they can keep pretending they dont like each other#Im crazy i fear.#dm dusk au#dogman#dm aus

2K notes

·

View notes

Text

On one hand, Young Justice is kind of neglected by the actual superheroes that should be looking out for them in a lot of crucial ways and very much failed by the adults around them

But on the other hand Red Tornado straight up hosts a parent-teacher conference where their respective legal guardians all show up, barring Batman who’s in traffic so Nightwing fills in instead because Robin’s dad does not know he’s a vigilante which is objectively hilarious

#Superboy does not have a name and his ass is constantly getting groomed like Clark please take a more active role in his life#And all of these guys need a healthy interaction with their respective legacies that does not involve heroing#Take them out for lunch! Play a video game with them! Let them be a kid around you without getting criticized!#Red Tornado had barely reconnected with his own humanity and he’s taken a more active role in being like they are safe happy and learning#compared to the people who literally are the reason they exist in the first place#and is the only one who seems to recognize their potential and ability as a team! and he wants others to know that!#and it’s kind of heartbreaking because the JLA should be paying attention to them and noticing how they succeed and instead just show up#at the worst possible time and take things out of context and criticize them and bestie I bet you they are a lot better of a hero#than *you* were at 14-16 because they actually are going out and making a difference and saving people#but the ones who should support them the most are barely there for them at all#someone give these kids healthy and appropriate emotional support I am begging you#yj#young just us#young justice#yj98#bart allen#tim drake#kon el#conner kent#superboy#robin#dc impulse#cassie sandsmark#wonder girl#cissie king jones#arrowette#greta hayes#slobo dc#empress#anita fite

3K notes

·

View notes

Text

i have been trying to figure out why the whole 'fae god' and katniss everdeen things with kendrick lamar on here were bothering me, and i think i finally put it into words.

most posts like that are probably coming from well-meaning white people (i am also partially a White People, to be clear), who otherwise dont really listen to rap. they cannot find a way to 'relate' to this black man who sings largely about issues that affect the black community– and rather than try and meet him where he is, they have to fit him into these little tumblr cultural boxes before he can be 'palatable' to them.

they have to shave off the rougher/more abrasive aspects of his work and activism because it makes them uncomfortable, that way they can pigeonhole him into something that allows them to enjoy his work without the critical analysis that MUST come with it

he is not your fae god, he is not a YA protagonist, he is not a little gremlin or a cinnamon roll or a blorbo. He is a human being with opinions and beliefs that deeply permeate his work, and to ignore that truth is to ignore the entire point. PLEASE try to engage with artists' work outside of the lens of tumblr fandom, and i mean that as nicely as possible. you are doing YOURSELF a disservice

#kendrick lamar#to clarify#i am a white person that isnt super familiar with rap culture as a whole#but thats more because im like that with literally every musician#half the time i can barely even name the lead singers of some of my favorite bands#i also only really learned of kendrick through the context of the disses he released last year#but the way people were reacting had me incredibly intrigued#so i DUG. i watched reaction videos. i watched people dissect the lyrics and explain#i watched FD signifiers breakdown of the whole history of the beef#and because of that ive been following the story as it developed#because i find kendricks cultural influence astonishing#and it makes me sad to see people just. ignore the history and culture of the conflict#while claiming to be invested in whats happening

1K notes

·

View notes

Text

even your most basic introductory teaching course in Latin America teaches about the social, cultural and historical context of education, meanwhile it seems that MIT researchers in the United States see students as barely more than slightly smarter laboratory rats

#cosas mias#I've met many people who were apolitical and didn't care much about history or social issues having their minds changed#by just learning about the context in which our education happens

634 notes

·

View notes

Text

In which Oskar gathers enough skills to write a whole ass script for his fantasy scenario with Ed

#sfr draws#siren and scientist#context: Oskar just learned to read and write so this is a big deal for Ed#but it reads like a fanfic a 10 year old made on wattpad

2K notes

·

View notes

Text

Being even more cringe than usual

Featuring my friends drawing of Joel, who I’m pretty sure they don’t even know, @dustystripe is the friend

#fanart#hermitblr#hermitcraft#geminitay#smallishbeans#hermits#god I’m getting cringer by the day#mcyt fanart#mcyt#mcytblr#hermitcraft season 10#idk why I made gem a lion fish but I just think they look cool#plus I mean they’re pretty scary so it fits or whatever#Joel is a tanuki because I asked my friend out of context if I should do shrek ears or tanuki#what do people even tag stuff#ugh#posting for different fandoms is so annoying because I have to learn the tags#be prepared for my next 20 posts to be hermitcraft#I’m sorry to my booster gold heads#joel smallishbeans#do they have a duo name or some shit#aughhhh#and are duo names even different than ship names? I’m unclear on that#bilby art tag#artists on tumblr

2K notes

·

View notes

Text

Fenton, the Ghost Hunter Hero

So! When Danny first saw a Ghost attacking his school, he was still terrified of his parents finding out about his Powers.

He looked exactly the same in his Ghost Form, sounded the same, he even had the Hazmat Suit his parents had custom made for him on as a Ghost. There was no way anybody wouldn't immediately find him out if he tried to stop Lunch Lady with his Powers, it was so obvious!

But he couldn't just leave her there. She had crossed through the Portal that he opened, and was attacking his friends. He needed to stop her somehow!

So he tried, he just didn't use his Powers. He stole a bunch of his parents Inventions, fixed the broken ones so they actually worked, and ran in to stop Lunch Lady as a Human. The battle lasted far longer than he would have liked, but eventually he managed to stop her and shove her into the Thermos.

And from there on out, he just kept doing it. Danny became the Town's defacto Hero, since his parents were too Incompetent and he had the ability to actually beat the Ghosts, he had to protect the people he had endangered.

Soon enough people began to notice his Heroics. Mr Lancer didn't stop him when he ran out of the classroom, Dash stopped shoving him in Lockers, and his parents were Ecstatic when they found out he had gone into the "Family Business".

He still kept his Ghost Form hidden from his parents and the Public though. It was still too dangerous.

He only ever used his Ghost Form while in the Ghost Zone so he could blend in, and avoid being attacked by the multiple Ghosts who he had forced back in there. Danny Fenton was a Ghost Hunter, Phantom was just another Ghost wandering the Ghost Zone.

(Though he did gain some infamy by defeating some powerful ghosts, like Aragon or Plasmius)

Years down the line, Fenton remained the respected Ghost Hunting Hero of Amity Park, his greatest accomplishment being the defeat of Pariah Dark, the Ghost King.

That battle had actually drawn outside attention to the town for a change, and it wasn't long before Danny was offered a spot on the Justice League's Junior Team. It wasn't every day when the evil Ruler of another Dimension was defeated by a non-powered Human, so it actually sparked some interest in the Town.

Unfortunately, Danny couldn't accept the Invitation.

If he joined the Justice League, it ws only a matter of time before one of their multitude of Magic Users realized the truth and outed him as a Ghost. He couldn't take that chance.

He was content staying as a small town Hero dealing with a "minor" Ghost Problem, no need to overcomplicate matters.

That is, until the JL contacted him again a few months later. Apparently, their Time Travelers had warned of an Evil Ghost known as Phantom, who would one day grow so powerful he would destroy the world and leave it in ruins. They needed his help as an expert Ghost Hunter to track down Phantom, for the safety of the world.

Problem. This version of Danny had never actually met Dan, since his history went so differently. Now he is terrified of what event could have led to him becoming the Worst Supervillain in History.

#Dpxdc#Dp x dc#Dcxdp#Dc x dp#Danny Phantom#Dc#Dcu#Danny is a Hero as a Human#Fenton is a Hero#Phantom is a random Ghost#Danny has Sam and Tucker act as his Sidekicks/Guys in the Chair#They have their powers but also hide them for the same reasons as Danny#Danny never encountered Dan or Clockwork in his AU#This Danny was forgiven more in Class because he was a known Hero so he didn't need to steal the Test Answers#So his friends and family never died and he never became Phantom#But that future with an Evil Danny still exists in this AU somehow#So how the hell did he turn into a Supervillain in this version of events?#Was he destined to become a Supervillain or did the JLA just kickstart a self fulfilling Prophecy?#Danny is scared#The Evil Future Phantom matches him Exactly down to the Powerset and Appearance so he knows it must be him#For context Danny is 16 when he is first offered to join the JLA#And 17 when he learns about his Future

2K notes

·

View notes

Text

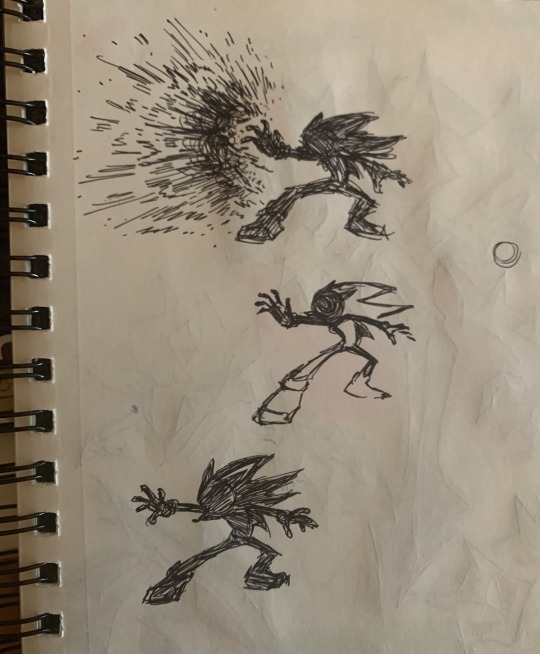

my comic from the @neverturnbackzine! truly one of my favorite zines i've been a part of :]

some extra insight/fun facts about the process of this piece below the cut 💥💥💥

posting pieces from collaborative zines is always something i struggle with because i look back and think of how i would do things differently now, but i learned a lot working on this comic and even developed some style techniques that i still use!

Fun Fact 1: the panel where shadow Fucking Disintegrates That Guy is technically traditionally drawn! i couldn't get it right in clip studio so i just started frantically scribbling in a notebook and got it eventually lol

highly highly recommend scribbling stuff out in a notebook, scanning it on your phone, and then dropping it into a canvas to edit later if you ever have trouble sketching something.

Fun Fact 2: a lot of the overlay/background effects were made in Kid Pix Deluxe 3D. i created a whole collection of various textures/abstract effects for this comic that i've been using in my art since last year. you can even find them scattered through my team dark zine lol. here's a few of them:

similarly, the background at the bottom of page 2 is actually a warped photo i took of a bunch of headphone wires. this is the original:

Fun Fact 3: i made this comic during a very busy and wild period of time last year so this is what the final panel looked like for a while before i fully finished it LMAO

ok yay thanks for reading bye

#ah yes the comic that i kept showing to my friends for notes and asking “hey guys is this even REMOTELY comprehensible”#very fun to work on! learned a lot :] for context i finished this in july of last year#fern's sketchbook#eyestrain#sth#shadow the hedgehog

4K notes

·

View notes

Text

OK but like...Gethsemane. Gethsemane, guys. I cannot express enough how important Gethsemane is and what happened there. Not to downplay the incredible importance of what happened on the Cross, of course not, but I find people really don't talk about Gethsemane enough in comparison, so I'm going to do it myself.

Gethsemane is found at the foot of the Mount of Olives in Jerusalem. Fitting its name, the garden itself is full of olive trees, a kind of tree which holds great significance in scripture, probably the most revered plant in the bible. It is a symbol of peace, new life, prosperity, and reconciliation. It was the branch of an olive tree that the dove brought back to Noah while on the ark, signifying that the floods were receding. An olive tree is used to describe Jesus's jewish roots as the stem of David, two olive trees are used as symbolism in Revelations, and the people of Israel are likened to an olive tree and its branches. And this is only a few of the many references to olive trees in the Bible.

Olive Trees were and still are highly valued for their oil, which can be retrieved from the olives themselves. Olive oil was used as medicine, for light, for making food, etc. It was a very valuable resource, and still is.

The very name of this garden, Gethsemane, means “Oil Press”, where oil is obtained from the olives-by squeezing and crushing them. Only by being crushed can this precious oil be obtained.

When the olives are first crushed, the liquid begins to leak out, but rather than coming out as the golden-green color that we are used to, it instead comes out as a dark red hue, looking eerily similar to blood.

Only later does the oil turn into its famous golden-green color.

Like the olives being crushed for their oil, Jesus Christ was being pressed and crushed by the weight of the Atonement in the garden, suffering through such incomprehensible anguish and agony that, according to Luke's account, He literally started sweating blood.

KJV Luke 22: 44: "And being in agony he prayed more earnestly: and his sweat was like great drops of blood falling down to the ground."

(Believe it or not, sweating blood is an actual medical condition called Hematridosis, which is caused by an extreme level of stress.)

Another important thing about olive oil's use during Christ's time: it was also used for ceremonial annointing, especially for sacred rituals performed in the temple, consecrating those like priests, kings and prophets. To be annointed means to be chosen or set apart for a specific role by God, often signified by smearing oil on the body or head, and what is the true meaning of Christ's title as the Messiah?

"The Annointed One."

And yet now, here in Gethsemane, Jesus has rather become the olive. He is the one being crushed, for the sins and pains of the world in the shadow of the Mount of Olives, His blood, like the sacred oil, to be used to annoint us, to not only save us but to make us into something greater than we could ever be by ourselves.

Also, very very interesting that Gethsemane is described as a garden. Only so many gardens are mentioned in the Bible, the most well known and one of the only other named gardens being the Garden of Eden, the paradise where humankind was first created and dwelt with God. Eden was a place of beauty, of innocence, and represented humanity's oneness with the Father.

But when Adam and Eve partook of the fruit of the Tree of Knowledge of Good and Evil, that innocence was lost, and thus the first man and woman could no longer dwell in Eden, and were driven out. Because of transgression, humankind had fallen.

In Eden's garden, beneath a tree, mankind lost its innocence resulting in the Fall, and became seperated from its creator, but in another garden millennia later, beneath the olive trees, that same creator would begin the agonizing process required to save us and lift us, mankind, from the consequences of that fall, to bring us back to the true garden of the Lord.

In the place of the Oil Press Jesus Christ allowed Himself to be pressed and crushed in our stead, letting Himself to eventually be led to the cross, taking upon Himself the demands of justice so that we might not suffer a similar fate, if we so choose to follow Him.

That is what happened in Gethsemane.

#Just...gah! it's absolutely beautiful!#Learning more about the context and symbolism of everything just makes me appreciate the Atonement(and the scriptures) that much more#I honestly have so much more I want to say on this but this is all I could get out#At least in time for today anyway so forgive any minor errors#Gethsemane#holy thursday#Bible#Christianity#Jesus Christ#Easter#Holy Week#Eden

446 notes

·

View notes

Text

more hades zombie apocalypse au sketches

#hades#hades game#hades supergiant#zagreus hades#hades achilles#hades zombie apocalypse au#context for the first sketch uhh i forgot *lying*#idk zagreus wants ice cream or something but its the apocalypse#so they hijack a fast food place#and achilles tries to learn how to use an ice cream machine#hes probably never worked fast food in his life but anything for his little guy

426 notes

·

View notes

Text

this is from two? three? months ago fksjhf i spent like weeks and weeks working on it and then got rly scared of posting it so it's been sitting in my drafts ¯\_(ツ)_/¯

shinyduo au where gem n pearl are characters in a legend forced to play out the same story over n over again and to always forget the times before. until the cycle breaks & they have to work together to escape <3 (loosely inspired by wolfwalkers and set to the vibes of aeseaes's megalomaniac)

edit: you guys?? thank you so much for the support on this :’0 i’ve been hopefully trying to continue the story as a fic so stay tuned for updates on that,,

#i was rly rly hyped about telling this story for a while and then started worrying that i'd mischaracterized them & psyched myself out :[#so whatever this exists without context now i guess. haha#geminitay#pearlescentmoon#hermitcraft#trafficblr#shiny duo#cw blood#ourgh i can draw sm better now 😔 learned a lot from this#aurie's art#shapeshifter shinyduo au

2K notes

·

View notes