#scrapy enterprise solutions

Hire Expert Scrapy Developers for Scalable Web Scraping & Data Automation

Looking to extract high-value data from the web quickly and accurately? At Prospera Soft, we offer top-tier Scrapy development services to help businesses automate data collection, gain market insights, and scale operations with ease.

Our team of Scrapy experts specializes in building robust, Python-based web scrapers that deliver 10X faster data extraction, 99.9% accuracy, and full cloud scalability. From price monitoring and sentiment analysis to lead generation and product scraping, we design intelligent, secure, and GDPR-compliant scraping solutions tailored to your business needs.

Why Choose Our Scrapy Developers?

✅ Custom Scrapy Spider Development for complex and dynamic websites

✅ AI-Optimized Data Parsing to ensure clean, structured output

✅ Middleware & Proxy Rotation to bypass anti-bot protections

✅ Seamless API Integration with BI tools and databases

✅ Cloud Deployment via AWS, Azure, or GCP for high availability

Whether you're in e-commerce, finance, real estate, or research, our scalable Scrapy solutions power your data-driven decisions.

#Hire Expert Scrapy Developers#scrapy development company#scrapy development services#scrapy web scraping#scrapy data extraction#scrapy automation#hire scrapy developers#scrapy company#scrapy consulting#scrapy API integration#scrapy experts#scrapy workflow automation#best scrapy development company#scrapy data mining#hire scrapy experts#scrapy scraping services#scrapy Python development#scrapy no-code scraping#scrapy enterprise solutions

NLP Sentiment Analysis | Reviews Monitoring for Actionable Insights

NLP Sentiment Analysis-Powered Insights from 1M+ Online Reviews

Business Challenge

A global enterprise with diversified business units in retail, hospitality, and tech was inundated with customer reviews across dozens of platforms: Amazon, Yelp, Zomato, TripAdvisor, Booking.com, Google Maps, and more. Each platform housed thousands of unstructured reviews written in multiple languages, a natural fit for NLP sentiment analysis that extracts structured value from raw consumer feedback.

The client's existing review monitoring efforts were manual, disconnected, and slow. They lacked a modern review monitoring tool to streamline analysis. Key business leaders had no unified dashboard for customer experience (CX) trends, and emerging issues often went unnoticed until they impacted brand reputation or revenue.

The lack of a central sentiment intelligence system meant missed opportunities for service improvements, pricing optimization, and product redesign, as well as for implementing a robust Brand Reputation Management Service capable of safeguarding long-term consumer trust.

Key pain points included:

No centralized system for analyzing cross-platform review data

Manual tagging that lacked accuracy and scalability

Absence of real-time CX intelligence for decision-makers

Objective

The client set out to:

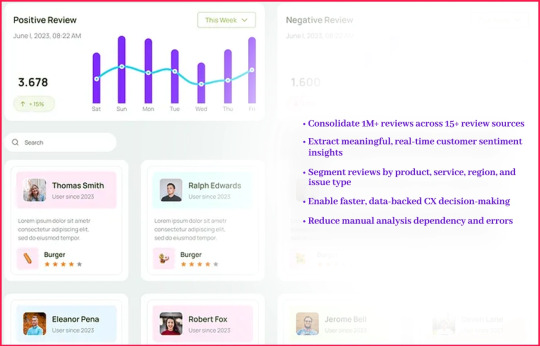

Consolidate 1M+ reviews across 15+ review sources

Extract meaningful, real-time customer sentiment insights

Segment reviews by product, service, region, and issue type

Enable faster, data-backed CX decision-making

Reduce manual analysis dependency and errors

Their goal: Build a scalable sentiment analysis system using a robust Sentiment Analysis API to drive operational, marketing, and strategic decisions across business units.

Our Approach

DataZivot designed and deployed a fully-managed NLP-powered review analytics pipeline, customized for the client's data structure and review volume. Our solution included:

1. Intelligent Review Scraping

Automated scraping from platforms like Zomato, Yelp, Amazon, Booking.com

Schedule-based data refresh (daily & weekly)

Multi-language support (English, Spanish, German, Hindi)

2. NLP Sentiment Analysis

Hybrid approach combining rule-based tagging with transformer-based models (e.g., BERT, RoBERTa)

Sentiment scores (positive, neutral, negative) and sub-tagging (service, delivery, product quality)

Topic modeling to identify emerging concerns
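The hybrid approach described above can be sketched in a few lines. This is only an illustration under stated assumptions: the keyword lists, the sub-tag names, and the `model` callable (a stand-in for a transformer classifier such as a BERT or RoBERTa pipeline) are hypothetical and not taken from the actual DataZivot pipeline.

```python
import re
from typing import Callable, Optional

# Hypothetical keyword lists; a real deployment would curate these per domain.
POSITIVE = {"friendly", "great", "excellent", "fast", "clean"}
NEGATIVE = {"slow", "rude", "dirty", "late", "broken"}
SUB_TAGS = {
    "service": {"staff", "service", "waiter"},
    "delivery": {"delivery", "courier", "shipping"},
    "product quality": {"quality", "broken", "fresh"},
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def rule_based_label(text: str) -> Optional[str]:
    """Return a label only when the keyword evidence is one-sided."""
    words = tokens(text)
    pos, neg = len(words & POSITIVE), len(words & NEGATIVE)
    if pos and not neg:
        return "positive"
    if neg and not pos:
        return "negative"
    return None  # mixed or no evidence: defer to the model


def classify(text: str, model: Optional[Callable[[str], str]] = None) -> dict:
    """Apply the rules first, then fall back to the (assumed) model."""
    label = rule_based_label(text)
    if label is None:
        label = model(text) if model else "neutral"
    tags = sorted(tag for tag, kws in SUB_TAGS.items() if tokens(text) & kws)
    return {"sentiment": label, "tags": tags}
```

Calling `classify("Friendly staff, fast delivery")` is settled by the rules alone, while a mixed review such as "Great food but slow service" is handed to whatever model is plugged in.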

3. Categorization & Tagging

Entity recognition (locations, product names, service mentions)

Keyword extraction for trend tracking

Complaint type detection (delay, quality, attitude, etc.)

4. Insights Dashboard Integration

Custom Power BI & Tableau dashboards

Location, time, sentiment, and keyword filters

Export-ready CSV/JSON options for internal analysts

Results & Competitive Insights

DataZivot's solution produced measurable results within the first month:

These improvements gave the enterprise:

Faster product feedback loops

Better pricing and menu optimization for restaurants

Localized insights for store/service operations

Proactive risk mitigation (e.g., before issues trended on social media)

Want to See the Dashboard in Action?

Book a demo or download a Sample Reviews Dataset to experience the power of our sentiment engine firsthand.

Contact Us Today!

Dashboard Highlights

The custom dashboard provided by DataZivot enabled:

Review Sentiment Dashboard featuring sentiment trend graphs (daily, weekly, monthly)

Top Keywords by Sentiment Type ("slow service", "friendly staff")

Geo Heatmaps showing regional sentiment fluctuations

Comparative Brand Insights (across subsidiaries or competitors)

Dynamic Filters by platform, region, product, date, language

Tools & Tech Stack

To deliver the solution at scale, we utilized:

Scraping Frameworks: Scrapy, Selenium, BeautifulSoup

NLP Libraries: spaCy, TextBlob, Hugging Face Transformers (BERT, RoBERTa)

Cloud Infrastructure: AWS Lambda, S3, EC2, Azure Functions

Dashboards & BI: Power BI, Tableau, Looker

Languages Used: Python, SQL, JavaScript (for dashboard custom scripts)

Strategic Outcome

By leveraging DataZivot’s NLP infrastructure, the enterprise achieved:

Centralized CX Intelligence: CX leaders could make decisions based on real-time, data-backed feedback

Cross-Industry Alignment: Insights across retail, hospitality, and tech units led to unified improvement strategies

Brand Perception Tracking: Marketing teams tracked emotional tone over time and correlated with ad campaigns

Revenue Impact: A/B-tested updates (product tweaks, price changes) showed double-digit improvements in review sentiment and NPS

Conclusion

This case study proves that large-scale review analytics is not only possible — it’s essential for modern enterprises managing multiple consumer-facing touchpoints. DataZivot’s approach to scalable NLP and real-time sentiment tracking empowered the client to proactively manage their brand reputation, uncover hidden customer insights, and drive growth across verticals.

If your organization is facing similar challenges with fragmented review data, inconsistent feedback visibility, or a slow response to customer sentiment — DataZivot’s sentiment intelligence platform is your solution.

#NLPSentimentAnalysis#CrossPlatformReviewData#SentimentAnalysisAPI#BrandReputationManagement#ReviewMonitoringTool#IntelligentReviewScraping#ReviewSentimentDashboard#RealTimeSentimentTracking#ReviewAnalytics

Travel Data Scraping Tools And Techniques For 2025

Introduction

The travel industry generates massive amounts of data every second, from fluctuating flight prices to real-time hotel availability. Businesses harnessing this information effectively gain a significant competitive advantage in the market. Travel Data Scraping has emerged as a crucial technique for extracting valuable insights from various travel platforms, enabling companies to make informed decisions and optimize their strategies.

Modern travelers expect transparency, competitive pricing, and comprehensive options when planning their journeys. To meet these demands, travel companies must continuously monitor competitor pricing, track market trends, and analyze consumer behavior patterns. Given the scale and speed at which travel data changes, extracting this information manually would be impossible.

Understanding the Fundamentals of Travel Data Extraction

Travel data scraping involves automated information collection from travel websites, booking platforms, and related online sources. This process utilizes specialized software and programming techniques to navigate through web pages, extract relevant data points, and organize them into structured formats for analysis.

The complexity of travel websites presents unique challenges for data extraction. Many platforms implement dynamic pricing algorithms, use JavaScript-heavy interfaces, and employ anti-bot measures to protect their data. Successfully navigating these obstacles requires sophisticated Travel Data Intelligence systems that can adapt to changing website structures and security measures.

Key components of effective travel data extraction include:

Target identification: Determining which websites and data points are most valuable for your business objectives.

Data parsing: Converting unstructured web content into organized, analyzable formats.

Quality assurance: Implementing validation mechanisms to ensure data accuracy and completeness.

Scalability management: Handling large volumes of requests without overwhelming target servers.

The extracted information typically includes pricing data, availability schedules, customer reviews, amenities descriptions, and geographical information. This comprehensive dataset enables businesses to analyze competition, identify market opportunities, and develop data-driven strategies.
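As a rough illustration of the data parsing and quality assurance components, the sketch below turns a raw price string into a structured record and rejects anything that fails basic checks. The `Listing` fields, the supported currency symbols, and the assumption of US-style thousands separators are all hypothetical choices made for the example.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Listing:
    name: str
    price: float
    currency: str


# Assumes a currency symbol followed by a US-style number such as 1,249.50.
PRICE_RE = re.compile(r"(?P<currency>[$€£])\s*(?P<amount>\d[\d,]*(?:\.\d+)?)")


def parse_listing(name: str, raw_price: str) -> Optional[Listing]:
    """Return a structured Listing, or None when validation fails."""
    match = PRICE_RE.search(raw_price)
    if not name.strip() or match is None:
        return None  # missing name or no recognizable price
    amount = float(match.group("amount").replace(",", ""))
    if amount <= 0:
        return None  # a zero price is treated as a scraping error
    return Listing(name.strip(), amount, match.group("currency"))
```

Returning `None` instead of raising keeps the scraping loop moving while still letting you count and inspect rejected rows later.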

Essential Tools and Technologies for 2025

The landscape of modern data extraction has evolved significantly, with advanced solutions offering enhanced capabilities for handling complex travel websites. Python-based frameworks like Scrapy and BeautifulSoup remain popular for custom development, while cloud-based platforms provide scalable solutions for enterprise-level operations.

Travel Scraping API services have gained prominence by offering pre-built integrations with major travel platforms. These APIs handle the technical complexities of data extraction while providing standardized access to travel information. Popular providers include RapidAPI, Amadeus, and specialized travel data services focusing on industry needs.

Browser automation tools such as Selenium and Playwright excel at handling JavaScript-heavy websites that traditional scraping methods cannot access. These tools simulate human browsing behavior, making them particularly effective for sites with dynamic content loading and complex user interactions.

Advanced practitioners increasingly adopt machine learning approaches to improve Real-Time Travel Data Extraction accuracy. These systems can adapt to website changes automatically, recognize content patterns more effectively, and handle anti-bot measures with greater sophistication.

Flight Price Data Collection Strategies

Airlines constantly adjust their pricing based on demand, seasonality, route popularity, and competitive factors. Flight Price Data Scraping enables businesses to track these fluctuations across multiple carriers and booking platforms simultaneously. This information proves invaluable for travel agencies, price comparison sites, and market researchers.

Effective flight data collection requires monitoring multiple sources, including airline websites, online travel agencies, and metasearch engines. Each platform may display different prices for identical flights due to exclusive deals, booking fees, or promotional campaigns. Comprehensive coverage across these sources, using Web Scraping Tools For Travel, gives a more accurate picture of the market.

Key considerations for flight data extraction include:

Timing optimization: Prices change frequently, requiring strategic scheduling of data collection activities.

Route coverage: Monitoring popular routes while also tracking emerging destinations.

Fare class differentiation: Distinguishing between economy, business, and first-class offerings.

Additional fees tracking: Capturing baggage costs, seat selection charges, and other ancillary fees.

The challenge lies in handling the dynamic nature of flight search results. Many websites generate prices on demand based on search parameters, requiring sophisticated query management and result processing capabilities.
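To make the fare class and ancillary fee considerations concrete, here is a minimal sketch of a fare record that keeps fees separate from the base fare and then picks the cheapest total per route and class. The field names, source labels, and sample figures are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FareQuote:
    source: str        # e.g. airline site, online travel agency
    route: str         # e.g. "JFK-LHR"
    fare_class: str    # economy, business, first
    base_fare: float
    fees: dict[str, float] = field(default_factory=dict)  # baggage, seat, ...

    @property
    def total(self) -> float:
        """Base fare plus all ancillary fees, rounded to cents."""
        return round(self.base_fare + sum(self.fees.values()), 2)


def cheapest_by_class(quotes: list[FareQuote]) -> dict[tuple[str, str], FareQuote]:
    """Keep the lowest total price for each (route, fare class) pair."""
    best: dict[tuple[str, str], FareQuote] = {}
    for quote in quotes:
        key = (quote.route, quote.fare_class)
        if key not in best or quote.total < best[key].total:
            best[key] = quote
    return best
```

Comparing totals rather than base fares matters here: a lower headline price can end up more expensive once baggage and seat charges are added.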

Hotel Industry Data Mining Techniques

The hospitality sector presents unique opportunities for data extraction, with thousands of properties across various booking platforms offering different rates, amenities, and availability windows. Hotel Data Scraping involves collecting information from major platforms like Booking.com, Expedia, Hotels.com, and individual hotel websites.

Property data encompasses room types, pricing structures, guest reviews, amenities lists, location details, and availability calendars. This comprehensive information enables competitive analysis, market positioning, and customer preference identification. Revenue management teams benefit from understanding competitor pricing strategies and occupancy patterns through Travel Scraping API solutions.

Modern hotel data extraction must account for the following:

Multi-platform presence: Hotels often list varying information on multiple booking sites.

Dynamic pricing models: Rates change based on demand, events, and seasonal factors.

Review authenticity: Filtering genuine customer feedback from promotional content.

Geographic clustering: Understanding local market dynamics and competitive landscapes.

These solutions incorporate advanced filtering and categorization features to handle the complexity of hotel data effectively.
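One simple way to work with multi-platform presence is to group scraped rates by property and measure how far the platforms diverge. The sketch below assumes each observation is a `(hotel_id, platform, nightly_rate)` tuple; that shape and the platform names in the usage note are illustrative only.

```python
from collections import defaultdict


def rate_spread(rates):
    """Summarize the cheapest and priciest platform per hotel.

    `rates` is an iterable of (hotel_id, platform, nightly_rate) tuples.
    """
    by_hotel = defaultdict(dict)
    for hotel_id, platform, rate in rates:
        by_hotel[hotel_id][platform] = rate  # last observation wins

    report = {}
    for hotel_id, platforms in by_hotel.items():
        low = min(platforms, key=platforms.get)
        high = max(platforms, key=platforms.get)
        report[hotel_id] = {
            "lowest": low,
            "highest": high,
            "spread": round(platforms[high] - platforms[low], 2),
        }
    return report
```

A hotel listed at 120.0 on `site-a` and 135.5 on `site-b` would show a spread of 15.5, which is the kind of gap a revenue management team might want to review.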

Car Rental Market Intelligence

The car rental industry operates with complex pricing models influenced by vehicle availability, location demand, seasonal patterns, and local events. Modern data extraction provides insights into fleet availability, pricing strategies, and market trends across different geographic regions.

Major rental companies like Hertz, Avis, Enterprise, and Budget maintain an extensive online presence with real-time inventory management systems. Extracting data from these platforms with the Best Travel Data Extraction Software requires understanding their booking workflows and availability calculation methods.

Essential data points for car rental analysis include:

Vehicle categories: From economy cars to luxury vehicles and specialty equipment.

Location-based pricing: Airport versus city locations often have different rate structures.

Seasonal variations: Holiday periods and local events significantly impact availability and costs.

Add-on services: Insurance options, GPS rentals, and additional driver fees.

The challenge is the relationship between pickup/dropoff locations, rental duration, and vehicle availability. These factors interact in complex ways that require sophisticated data modeling approaches supported by Travel Data Intelligence systems.

Vacation Rental Platform Analysis

The rise of platforms like Airbnb, VRBO, and HomeAway has created new opportunities for travel data extraction. Scraping these platforms yields information about property listings, host profiles, guest reviews, pricing calendars, and booking availability.

Unlike traditional hotels, vacation rentals operate with unique pricing models, often including cleaning fees, security deposits, and variable nightly rates. Understanding these cost structures requires comprehensive Travel Data Scraping capabilities and analysis.

Key aspects of vacation rental data include:

Property characteristics: Number of bedrooms, amenities, location ratings, and unique features.

Host information: Response times, acceptance rates, and guest communication patterns.

Pricing strategies: Base rates, seasonal adjustments, and additional fee structures.

Market saturation: Understanding supply and demand dynamics in specific locations.

Real-Time Travel Data Extraction becomes particularly important for vacation rentals due to the personal nature of these properties and the impact of local events on availability and pricing.

Building Effective Data Intelligence Systems

Modern intelligence systems transform raw extracted information into actionable business insights. This process involves data cleaning, normalization, analysis, and visualization to support decision-making across various business functions.

Successful intelligence systems integrate data from multiple sources to provide comprehensive market views. They combine pricing information with availability data, customer sentiment analysis, and competitive positioning metrics to create holistic business intelligence dashboards using Web Scraping Tools For Travel.

Key components of effective systems include:

Data quality management: Ensuring accuracy, completeness, and consistency across all data sources.

Automated analysis: Implementing algorithms to identify trends, anomalies, and opportunities.

Customizable reporting: Providing stakeholders with relevant, timely, and actionable information.

Predictive modeling: Using historical data to forecast future trends and market conditions.

The integration of artificial intelligence and machine learning technologies enhances the capability of Travel Scraping API systems to provide deeper insights and more accurate predictions.
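The automated analysis component can start much simpler than machine learning. As one possible sketch, the function below flags price anomalies with a modified z-score based on the median absolute deviation. This technique is our choice for the example, not something prescribed above, and the 3.5 threshold is a commonly used default that you would likely tune.

```python
from statistics import median


def flag_anomalies(prices: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indexes of prices that look like outliers.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    """
    med = median(prices)
    mad = median([abs(p - med) for p in prices])
    if mad == 0:
        return []  # no measurable spread, so the score is undefined
    return [
        i for i, p in enumerate(prices)
        if 0.6745 * abs(p - med) / mad > threshold
    ]
```

Median-based scores are used here because a single extreme price would distort a mean and standard deviation, which is exactly the situation the check is meant to catch.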

Real-Time Data Processing Capabilities

Modern travel businesses require up-to-the-minute information to remain competitive in fast-paced markets. Advanced processing systems operate continuously, monitoring changes across multiple platforms and updating business intelligence systems accordingly.

The technical infrastructure for real-time processing must handle high-frequency data updates while maintaining system performance and reliability. This requires distributed computing approaches, efficient data storage solutions, and robust error handling mechanisms, all of which the Best Travel Data Extraction Software should support.

Critical aspects of real-time systems include:

Low-latency processing: Minimizing delays between data availability and business intelligence updates.

Scalable architecture: Handling varying data volumes and processing demands.

Fault tolerance: Maintaining operations despite individual component failures.

Data freshness: Ensuring information accuracy and relevance for time-sensitive decisions.

Advanced Flight Price Data Scraping systems exemplify these capabilities by providing instant updates on pricing changes across multiple airlines and booking platforms.
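Two of the aspects above, fault tolerance and data freshness, can be illustrated with small helpers. This is a sketch under assumptions: `fetch` stands for any zero-argument function you supply, and the retry counts, delays, and maximum age are placeholder values.

```python
import time
from datetime import datetime, timedelta, timezone


def fetch_with_retry(fetch, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call `fetch`, retrying with exponential backoff on failure.

    Catches every Exception for brevity; a real system would likely
    retry only on network or timeout errors.
    """
    for attempt in range(attempts):
        try:
            return fetch()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...


def is_fresh(collected_at: datetime, max_age: timedelta, now=None) -> bool:
    """True when the record is recent enough for time-sensitive use."""
    now = now or datetime.now(timezone.utc)
    return now - collected_at <= max_age
```

Passing `sleep` and `now` as parameters is a deliberate choice: it lets you test the timing logic without actually waiting.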

Legal and Ethical Considerations

Travel data scraping tools, including modern Hotel Data Scraping tools, must operate within legal boundaries, adhering to website terms of service and data protection laws. As data extraction regulations evolve, businesses must stay compliant while gathering valuable travel insights.

Best practices include respecting robots.txt files, implementing reasonable request rates, and avoiding actions that could disrupt website operations. Many travel companies now offer official APIs as alternatives to scraping, providing structured access to their data while maintaining control over usage terms.

Important considerations include:

Terms of service compliance: Understanding and adhering to platform-specific usage policies.

Data privacy regulations: Ensuring compliance with GDPR, CCPA, and other privacy laws.

Rate limiting: Implementing respectful crawling practices that don't overwhelm target servers.

Attribution requirements: Properly crediting data sources when required.

Data scraping in the hotel sector must prioritize guest privacy and protect reservation confidentiality. Similarly, Car Rental Data Scraping should consider competitive pricing and ensure it doesn't interfere with booking platforms.
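Respecting robots.txt and crawl delays can be checked in code before any request is sent. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content, the `example-bot` agent name, and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def build_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Hypothetical robots.txt for an imaginary booking site.
ROBOTS = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

AGENT = "example-bot"
policy = build_policy(ROBOTS)

# Listing pages are allowed, checkout pages are not.
allowed = policy.can_fetch(AGENT, "https://example.com/hotels/paris")
blocked = policy.can_fetch(AGENT, "https://example.com/checkout/cart")

# Seconds to wait between requests, or None if the site sets no delay.
delay = policy.crawl_delay(AGENT)
```

A crawler would typically skip any URL where `can_fetch` returns `False` and sleep for at least `delay` seconds between requests to the same host. Note that robots.txt is only one input; terms of service and privacy law still apply.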

Future Trends and Innovations

The evolution of travel data extraction is rapidly advancing, driven by AI, machine learning, and cloud innovations. Amid this progress, Travel Data Intelligence unlocks deeper insights, greater accuracy, and scalable solutions for travel businesses.

Emerging trends such as natural language processing for review analysis, computer vision for extracting image-based data, and blockchain for secure data verification and sharing are transforming the landscape. These innovations enhance the value and scope of Vacation Rental Data Scraping systems across all market segments.

How Can Travel Scrape Help You?

We provide comprehensive data extraction solutions tailored to your business needs. Our expert team understands the complexities of Travel Data Scraping and offers cutting-edge tools to give you a competitive advantage.

Custom API Development: We create specialized solutions that integrate seamlessly with your existing systems and workflows.

Multi-Platform Coverage: Our services encompass data collection across hundreds of travel websites and booking platforms.

Real-Time Intelligence: Implement continuous monitoring capabilities that keep your business informed of market changes as they happen.

Advanced Analytics: Transform raw data into actionable insights using sophisticated analysis and reporting tools.

Scalable Infrastructure: Our solutions grow with your business, handling increased data volumes and additional platforms.

Compliance Management: We ensure all data collection activities adhere to legal requirements and industry best practices.

24/7 Support: Our dedicated team provides continuous monitoring and technical support to maintain optimal system performance.

Conclusion

The travel industry's data-driven transformation requires sophisticated information collection and analysis approaches. Travel Data Scraping has become an essential capability for businesses seeking to understand market dynamics, optimize pricing strategies, and deliver superior customer experiences. Modern Travel Aggregators rely heavily on comprehensive data extraction systems to provide accurate, timely information to their users.

Success in today's competitive environment demands robust Travel Industry Web Scraping capabilities that can adapt to changing technologies and market conditions. By implementing the right tools, strategies, and partnerships, travel businesses can harness the power of data to drive growth and innovation.

Ready to transform your travel business with comprehensive data intelligence? Contact Travel Scrape today to discover how we can provide the competitive advantage you need.

Read more: https://www.travelscrape.com/how-travel-data-scraping-works-2025.php

#TravelDataScrapingWork#TheBestToolsToUseIn2025#TravelDataIntelligence#VacationRentalDataScraping#FlightPriceDataScraping#TravelScrapingAPI#HotelDataScraping#TravelIndustryWebScraping#TravelAggregators

How to Integrate a WooCommerce Scraper into Your Business Workflow

In today’s fast-paced eCommerce environment, staying ahead means automating repetitive tasks and making data-driven decisions. If you manage a WooCommerce store, you’ve likely spent hours handling product data, competitor pricing, and inventory updates. That’s where a WooCommerce Scraper becomes a game-changer. Integrated seamlessly into your workflow, it can help you collect, update, and analyze data more efficiently, freeing up your time and boosting operational productivity.

In this blog, we’ll break down what a WooCommerce scraper is, its benefits, and how to effectively integrate it into your business operations.

What is a WooCommerce Scraper?

A WooCommerce scraper is a tool designed to extract data from WooCommerce-powered websites. This data could include:

Product titles, images, descriptions

Prices and discounts

Reviews and ratings

Stock status and availability

Such a tool automates the collection of this information, which is useful for e-commerce entrepreneurs, data analysts, and digital marketers. Whether you're monitoring competitors or syncing product listings across multiple platforms, a WooCommerce scraper can save hours of manual work.

Why Businesses Use WooCommerce Scrapers

Before diving into the integration process, let’s look at the key reasons businesses rely on scraping tools:

Competitor Price Monitoring

Stay competitive by tracking pricing trends across similar WooCommerce stores. Automated scrapers can pull this data daily, helping you optimize your pricing strategy in real time.

Bulk Product Management

Import product data at scale from suppliers or marketplaces. Instead of manually updating hundreds of SKUs, use a scraper to auto-populate your database with relevant information.

Enhanced Market Research

Get a snapshot of what’s trending in your niche. Use scrapers to gather data about top-selling products, customer reviews, and seasonal demand.

Inventory Tracking

Avoid stockouts or overstocking by monitoring inventory availability from your suppliers or competitors.

How to Integrate a WooCommerce Scraper Into Your Workflow

Integrating a WooCommerce scraper into your business processes might sound technical, but with the right approach, it can be seamless and highly beneficial. Whether you're aiming to automate competitor tracking, streamline product imports, or maintain inventory accuracy, aligning your scraper with your existing workflow ensures efficiency and scalability. Below is a step-by-step guide to help you get started.

Step 1: Define Your Use Case

Start by identifying what you want to achieve. Is it competitive analysis? Supplier data syncing? Or updating internal catalogs? Clarifying this helps you choose the right scraping strategy.

Step 2: Choose the Right Scraper Tool

There are multiple tools available, ranging from browser-based scrapers to custom-built Python scripts. Some popular options include:

Octoparse

ParseHub

Python-based scrapers using BeautifulSoup or Scrapy

API integrations for WooCommerce

For enterprise-level needs, consider working with a provider like TagX, which offers custom scraping solutions with scalability and accuracy in mind.

Step 3: Automate with Cron Jobs or APIs

For recurring tasks, automation is key. Set up cron jobs or use APIs to run scrapers at scheduled intervals. This ensures that your database stays up-to-date without manual intervention.

Step 4: Parse and Clean Your Data

Raw scraped data often contains HTML tags, formatting issues, or duplicates. Use tools or scripts to clean and structure the data before importing it into your systems.
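Here is a minimal sketch of what this cleaning step might look like for a single product record. The record keys (`sku`, `title`, `price`) are hypothetical, and the regex-based tag stripping is a simplification: for messy markup, a proper HTML parser is usually the safer choice.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")


def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, and collapse whitespace."""
    no_tags = TAG_RE.sub(" ", raw)
    return " ".join(html.unescape(no_tags).split())


def clean_product(raw: dict) -> dict:
    """Normalize one scraped product (keys are assumed, not standard)."""
    return {
        "sku": raw["sku"].strip().upper(),
        "title": clean_text(raw["title"]),
        # Keep digits and the decimal point only, e.g. "$1,299.00" -> 1299.0
        "price": float(re.sub(r"[^\d.]", "", raw["price"])),
    }
```

For example, a title scraped as `<p>Blue  Mug &amp; <b>Saucer</b></p>` comes out as `Blue Mug & Saucer`.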

Step 5: Integrate with Your CMS or ERP

Once cleaned, import the data into your WooCommerce backend or link it with your ERP or PIM (Product Information Management) system. Many scraping tools offer CSV or JSON outputs that are easy to integrate.
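If your scraper does not already produce CSV or JSON, both formats are easy to generate with Python's standard library. The column names below are hypothetical; check which fields your WooCommerce importer, ERP, or PIM actually expects before relying on them.

```python
import csv
import io
import json

FIELDS = ["sku", "title", "price"]  # hypothetical column names


def to_csv(rows: list[dict]) -> str:
    """Serialize product rows as CSV text with a header line."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize product rows as pretty-printed JSON."""
    return json.dumps(rows, indent=2)
```

Writing to an in-memory buffer keeps the example self-contained; in practice you would write the same output to a file or send it to the target system.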

Common Challenges in WooCommerce Scraping (And Solutions)

Changing Site Structures

WooCommerce themes can differ, and any update might break your script. Solution: Use dynamic selectors or AI-powered tools that adapt automatically.

Rate Limiting and Captchas

Some sites use rate limiting or CAPTCHAs to block bots. Solution: Use rotating proxies, headless browsers like Puppeteer, or work with scraping service providers.

Data Duplication or Inaccuracy

Messy data can lead to poor business decisions. Solution: Implement deduplication logic and validation rules before importing data.
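Deduplication and validation can be as simple as the sketch below, which assumes each record is a dictionary with `sku`, `title`, and `price` keys. Those keys and the specific rules are illustrative assumptions; your own rules will depend on your catalog.

```python
def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    if not record.get("sku"):
        errors.append("missing sku")
    if not record.get("title"):
        errors.append("missing title")
    price = record.get("price")
    if not isinstance(price, (int, float)) or price <= 0:
        errors.append("invalid price")
    return errors


def dedupe_and_validate(records: list[dict]):
    """Split records into accepted rows and rejected (row, errors) pairs."""
    seen = set()
    accepted, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
            continue
        if record["sku"] in seen:
            continue  # duplicate SKU: keep the first occurrence only
        seen.add(record["sku"])
        accepted.append(record)
    return accepted, rejected
```

Keeping the rejected rows together with their error messages, rather than silently dropping them, makes it easier to spot when a site change has broken the scraper.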

Tips for Maintaining an Ethical Scraping Strategy

Respect Robots.txt Files: Always check the site’s scraping policy.

Avoid Overloading Servers: Schedule scrapers during low-traffic hours.

Use the Data Responsibly: Don’t scrape copyrighted or sensitive data.

Why Choose TagX for WooCommerce Scraping?

While it's possible to set up a basic WooCommerce scraper on your own, scaling it, maintaining data accuracy, and handling complex scraping tasks require deep technical expertise. TagX’s professionals offer end-to-end scraping solutions tailored specifically for e-commerce businesses, whether you're looking to automate product data extraction, monitor competitor pricing, or implement web scraping using AI at scale.

Key Reasons to Choose TagX:

AI-Powered Scraping: Go beyond basic extraction with intelligent scraping powered by machine learning and natural language processing.

Scalable Infrastructure: Whether you're scraping hundreds or millions of pages, TagX ensures high performance and minimal downtime.

Custom Integration: TagX enables seamless integration of scrapers directly into your CMS, ERP, or PIM systems, ensuring a streamlined workflow.

Ethical and Compliant Practices: All scraping is conducted responsibly, adhering to industry best practices and compliance standards.

With us, you’re not just adopting a tool—you’re gaining a strategic partner that understands the nuances of modern eCommerce data operations.

Final Thoughts

Integrating a WooCommerce scraper into your business workflow is no longer just a technical choice—it’s a strategic advantage. From automating tedious tasks to extracting market intelligence, scraping tools empower businesses to operate faster and smarter.

As your data requirements evolve, consider exploring web scraping using AI to future-proof your automation strategy. And for seamless implementation, TagX offers the technology and expertise to help you unlock the full value of your data.

Web Scraper Software Market Size, Share, Key Growth Drivers, Trends, Challenges and Competitive Landscape

Web Scraper Software Market - Size, Share, Demand, Industry Trends and Opportunities

Global Web Scraper Software Market, By Type (General-Purpose Web Crawlers, Focused Web Crawlers, Incremental Web Crawlers, Deep Web Crawlers), Vertical (Retail & Ecommerce, Advertising & Media, Real Estate, Finance, Automotive, Others), Country (U.S., Canada, Mexico, Brazil, Argentina, Rest of South America, Germany, Italy, U.K., France, Spain, Netherlands, Belgium, Switzerland, Turkey, Russia, Rest of Europe, Japan, China, India, South Korea, Australia, Singapore, Malaysia, Thailand, Indonesia, Philippines, Rest of Asia-Pacific, Saudi Arabia, U.A.E, South Africa, Egypt, Israel, Rest of Middle East and Africa) Industry Trends

**Segments**

- **Type**: The web scraper software market can be segmented based on type, including general-purpose web scraping tools and specialized web scraping tools catering to specific industries or needs.
- **Deployment Mode**: Another key segmentation factor is the deployment mode of the software, with options such as on-premise, cloud-based, or hybrid solutions.
- **End-User**: End-users of web scraper software vary widely, ranging from individual users and small businesses to large enterprises across various industries.
- **Application**: The market can also be segmented based on the specific applications of web scraper software, such as e-commerce, market research, competitive analysis, content aggregation, and more.

**Market Players**

- **Octoparse**
- **Import.io**
- **Scrapy**
- **ParseHub**
- **Apify**
- **Diffbot**
- **Common Crawl**
- **Dexi.io**
- **Mozenda**
- **Content Grabber**

The global web scraper software market is a dynamic and rapidly growing industry, driven by the increasing need for data extraction, competitive intelligence, and automation across various sectors. The market segmentation based on type allows customers to choose between general-purpose tools that provide a broad range of functionalities and specialized tools that cater to specific niche requirements. Deployment mode segmentation offers flexibility in adopting the software based on different infrastructure needs and preferences. The diverse end-user base further underscores the widespread utility of web scraper software in serving the data extraction needs of individual users, small businesses, and large enterprises operating in sectors like e-commerce, finance, healthcare, and more. Additionally, the segmentation by application highlights the versatility of web scraper software in enabling tasks such as competitive analysis, content aggregation, market research, and beyond.

In the competitive landscape of the web scrapper software market, there are several key players that offer innovative solutions and play a significant role in shaping the industry. Companies like Octoparse, Import.io, and Scrapy are known for their user-friendly interfaces and robust scraping capabilities. ParseHub and Apify excel in providing customizable and scalable web scraping tools for businesses of all sizes. Meanwhile, players like Diffbot, Common Crawl, and Dexi.io offer advanced features such as AI-powered data extraction and web crawling services. Other market players like Mozenda and Content Grabber also contribute to the market's growth by providing efficient and reliable web scraping solutions tailored to meet specific business requirements.

https://www.databridgemarketresearch.com/reports/global-web-scrapper-software-market

The global web scrapper software market is witnessing significant growth due to the escalating demand for data extraction, competitive intelligence, and process automation across various industries. This surge is further fueled by the increasing reliance on digital platforms and the need for real-time data to drive informed decision-making. As businesses strive to stay competitive and agile in a data-driven world, web scraping tools have become essential for extracting valuable insights from the vast expanse of online information. The market segmentation based on type, deployment mode, end-user, and application reflects the diverse needs and preferences of customers looking to leverage web scrapper software for various purposes.

In this competitive landscape, market players such as Octoparse, Import.io, and Scrapy stand out for their user-friendly interfaces and robust scraping capabilities, making them popular choices among businesses of all sizes. These companies are continually innovating to enhance their tools' efficiency, scalability, and adaptability to meet evolving market demands. ParseHub and Apify, on the other hand, cater to businesses seeking customizable and scalable web scraping solutions tailored to their specific requirements, thereby offering a more personalized approach to data extraction. Companies like Diffbot, Common Crawl, and Dexi.io leverage advanced technologies such as machine learning and AI to provide cutting-edge data extraction services, empowering businesses with streamlined processes and accurate insights. Moreover, market players like Mozenda and Content Grabber excel in delivering efficient and reliable web scraping solutions that address the unique needs of different industries and business functions.

Looking ahead, the web scrapper software market is poised for continued growth as organizations across sectors recognize the pivotal role of data in driving innovation, improving decision-making, and gaining a competitive edge. The market is likely to witness increased adoption of cloud-based and hybrid deployment models, offering businesses greater flexibility, scalability, and cost-efficiency in deploying web scraping solutions. Moreover, the expanding range of applications for web scrapper software, including e-commerce optimization, market research, competitive analysis, and content aggregation, will open up new opportunities for market players to innovate and diversify their offerings. With a focus on user experience, data accuracy, and compliance with data privacy regulations, web scrapper software providers will continue to play a vital role in helping businesses unlock the full potential of web data for strategic growth and operational excellence.

Global Web Scrapper Software Market, By Type (General-Purpose Web Crawlers, Focused Web Crawlers, Incremental Web Crawlers, Deep Web Crawler), Vertical (Retail & Ecommerce, Advertising & Media, Real Estate, Finance, Automotive, Others), Country (U.S., Canada, Mexico, Brazil, Argentina, Rest of South America, Germany, Italy, U.K., France, Spain, Netherlands, Belgium, Switzerland, Turkey, Russia, Rest of Europe, Japan, China, India, South Korea, Australia, Singapore, Malaysia, Thailand, Indonesia, Philippines, Rest of Asia-Pacific, Saudi Arabia, U.A.E, South Africa, Egypt, Israel, Rest of Middle East and Africa) Industry Trends and Forecast to 2028

In the evolving landscape of the global web scrapper software market, segmentation plays a crucial role in understanding the diverse needs and preferences of customers across different industries. The differentiation based on type, such as general-purpose web crawlers, focused web crawlers, incremental web crawlers, and deep web crawlers, enables businesses to select tools that align with their specific data extraction requirements. Moreover, vertical segmentation highlights the varied applications of web scrapper software across industries like retail & ecommerce, advertising & media, real estate, finance, automotive, and more, showcasing the versatility and widespread adoption of these tools in enhancing business operations and competitiveness. The country-wise segmentation further provides insights into regional market trends, regulatory landscapes, and opportunities for market expansion, helping industry players tailor their strategies to local market dynamics.

As the global web scrapper software market continues to witness robust growth driven by the escalating demand for data-driven insights and automation, the competitive landscape is characterized by key market players striving to innovate and meet the evolving needs of businesses worldwide. Companies like Octoparse, Import.io, and Scrapy have established themselves as industry leaders renowned for their user-friendly interfaces and advanced scraping capabilities, attracting a broad clientele spanning from small enterprises to large corporations. These players focus on continuous enhancement of their tools to ensure efficiency, accuracy, and compliance with evolving data privacy regulations, thereby instilling trust among users seeking reliable web scraping solutions.

In parallel, emerging players like ParseHub and Apify are carving their niche in the market by offering customizable and scalable web scraping tools tailored to the unique requirements of businesses operating in diverse sectors. Their emphasis on flexibility and personalized solutions resonates well with organizations looking to optimize their data extraction processes while maintaining a competitive edge. Additionally, companies like Diffbot, Common Crawl, and Dexi.io leverage cutting-edge technologies such as AI and machine learning to deliver advanced data extraction services that empower businesses with real-time insights and streamlined operations, setting new benchmarks for efficiency and innovation in the market.

Looking ahead, the future outlook of the web scrapper software market is promising, with a continued focus on enhancing user experience, data accuracy, and compliance standards to meet the evolving needs of businesses in an increasingly digitized world. The adoption of cloud-based and hybrid deployment models is expected to rise, enabling organizations to leverage scalable and cost-effective web scraping solutions to drive operational efficiencies and strategic decision-making. Furthermore, the expanding applications of web scrapper software across sectors like e-commerce optimization, market research, competitive analysis, and content aggregation will create new growth opportunities for market players to innovate, diversify, and address the evolving needs of customers seeking data-driven insights for sustainable growth and competitive advantage.

The report provides insights on the following pointers:

Market Penetration: Comprehensive information on the product portfolios of the top players in the Web Scrapper Software Market.

Product Development/Innovation: Detailed insights on the upcoming technologies, R&D activities, and product launches in the market.

Competitive Assessment: In-depth assessment of the market strategies, geographic and business segments of the leading players in the market.

Market Development: Comprehensive information about emerging markets. This report analyzes the market for various segments across geographies.

Market Diversification: Exhaustive information about new products, untapped geographies, recent developments, and investments in the Web Scrapper Software Market.

Table of Content:

Part 01: Executive Summary

Part 02: Scope of the Report

Part 03: Global Web Scrapper Software Market Landscape

Part 04: Global Web Scrapper Software Market Sizing

Part 05: Global Web Scrapper Software Market Segmentation by Product

Part 06: Five Forces Analysis

Part 07: Customer Landscape

Part 08: Geographic Landscape

Part 09: Decision Framework

Part 10: Drivers and Challenges

Part 11: Market Trends

Part 12: Vendor Landscape

Part 13: Vendor Analysis

This study answers the following key questions:

What are the key factors driving the Web Scrapper Software Market?

What are the challenges to market growth?

Who are the key players in the Web Scrapper Software Market?

What are the market opportunities and threats faced by the key players?


About Data Bridge Market Research:

Data Bridge has set itself forth as an unconventional and neoteric market research and consulting firm with an unparalleled level of resilience and integrated approaches. We are determined to unearth the best market opportunities and foster efficient information for your business to thrive in the market. Data Bridge endeavors to provide appropriate solutions to complex business challenges and initiates an effortless decision-making process.

Contact Us:

Data Bridge Market Research

US: +1 614 591 3140

UK: +44 845 154 9652

APAC : +653 1251 975

Email: [email protected]


How Python Powers Modern Web Applications

Python has become one of the most widely used programming languages for web development, powering everything from small websites to large-scale enterprise applications. Its simplicity, versatility, and robust ecosystem make it an ideal choice for building modern web applications.

With the support of a Python Course in Chennai, learning Python becomes much more enjoyable, whatever your level of experience or reason for switching from another programming language.

Here’s how Python plays a crucial role in web development.

User-Friendly and Efficient Development

Python’s clean and readable syntax allows developers to write web applications faster with fewer lines of code. This makes development more efficient and reduces errors, making Python an excellent choice for both beginners and experienced developers.

Powerful Web Frameworks

Python offers several powerful web frameworks that simplify development and enhance productivity. Some of the most popular ones include:

Django – A full-stack framework that provides built-in tools for authentication, database management, and security. It is used by major platforms like Instagram and Pinterest.

Flask – A lightweight and flexible framework that gives developers complete control over their web applications. It is ideal for small projects and microservices.

FastAPI – Optimized for building high-performance APIs with features like asynchronous programming and automatic data validation.

Backend Development and API Integration

Python is widely used for server-side programming: handling requests, processing data, and managing user authentication. It is also essential for building RESTful APIs that connect web applications with mobile apps, databases, and third-party services. Online training and placement programs, which offer comprehensive training and job placement support to anyone looking to develop their skills, can make it easier to learn these tools and advance your career.
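To make the request-handling idea concrete, here is a minimal sketch of a JSON endpoint written against WSGI, the standard interface that Flask and Django applications expose to web servers. It uses only the standard library. The `USERS` data, the `/users/<id>` route, and the `call` helper are assumptions made up for illustration, not part of any framework.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical in-memory data; a real backend would query a database.
USERS = {"1": {"id": 1, "name": "Ada"}, "2": {"id": 2, "name": "Linus"}}


def app(environ, start_response):
    """Minimal WSGI app that serves GET /users/<id> as JSON."""
    parts = [p for p in environ.get("PATH_INFO", "").split("/") if p]
    is_get = environ.get("REQUEST_METHOD") == "GET"
    if is_get and len(parts) == 2 and parts[0] == "users":
        user = USERS.get(parts[1])
        if user is not None:
            status, payload = "200 OK", user
        else:
            status, payload = "404 Not Found", {"error": "user not found"}
    else:
        status, payload = "404 Not Found", {"error": "unknown route"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(path):
    """Invoke the app in-process, the way a WSGI server would."""
    environ = {}
    setup_testing_defaults(environ)  # fills in a default GET request
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)
```

Calling `call("/users/1")` exercises the endpoint without opening a socket. A framework adds routing, validation, and authentication on top of this same request/response cycle.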

Seamless Database Management

Python supports various databases, making it easy to store and retrieve data efficiently. Some commonly used databases include:

SQL databases – MySQL, PostgreSQL, SQLite (managed with Django ORM and SQLAlchemy).

NoSQL databases – MongoDB and Firebase for handling large and flexible data structures.
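As a small illustration of storing and retrieving data, the sketch below uses the standard library's `sqlite3` module with an in-memory SQLite database. The `posts` table and its columns are assumptions invented for the example. An ORM such as Django ORM or SQLAlchemy would generate similar SQL behind the scenes.

```python
import sqlite3


def demo():
    """Create a table, insert rows, and query them back."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    conn.execute(
        "CREATE TABLE posts ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, views INTEGER DEFAULT 0)"
    )
    conn.executemany(
        "INSERT INTO posts (title, views) VALUES (?, ?)",
        [("Hello", 10), ("Scraping 101", 42)],
    )
    conn.commit()
    rows = conn.execute(
        "SELECT title, views FROM posts WHERE views > ? ORDER BY views DESC",
        (5,),
    ).fetchall()
    conn.close()
    return rows
```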

Web Scraping and Automation

Python is frequently used for web scraping, which involves extracting data from websites using libraries like BeautifulSoup and Scrapy. It also automates repetitive tasks such as content updates, email notifications, and form submissions.
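The core of scraping is parsing HTML and pulling out the pieces you need. The sketch below does this with the standard library's `html.parser` as a dependency-free stand-in for BeautifulSoup or Scrapy, which offer much richer selectors. The `LinkExtractor` class, the `extract_links` helper, and the sample HTML are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from every <a href="..."> tag."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


SAMPLE = (
    '<html><body><a href="/pricing">Pricing</a> '
    '<a href="https://example.org/docs">Docs</a> <a>no href</a></body></html>'
)
```

In practice the HTML would come from an HTTP response rather than a string literal, and the site's terms of service should be checked before scraping it.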

AI and Machine Learning Integration

Many modern web applications leverage artificial intelligence for personalization, chatbots, and predictive analytics. Python’s powerful AI and machine learning libraries, such as TensorFlow, Scikit-learn, and OpenCV, enable developers to build intelligent web applications with advanced features.

Security and Scalability

Python-based web applications are known for their security and scalability. Django, for example, includes built-in security features that protect against common threats like SQL injection and cross-site scripting. Python also allows applications to scale seamlessly, handling growing user demands without compromising performance.
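Django's ORM parameterizes queries and its templates escape output automatically. The two underlying ideas can be sketched with the standard library alone, as below. The `users` table and the `find_user` and `render_comment` helpers are hypothetical names used only for this illustration.

```python
import html
import sqlite3


def find_user(conn, username):
    """Look up a user with a bound parameter, so input is never parsed as SQL."""
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def render_comment(text):
    """Escape user text before embedding it in HTML to prevent script injection."""
    return "<p>" + html.escape(text) + "</p>"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.execute("INSERT INTO users (username) VALUES ('alice')")
```

With this setup, a classic injection string such as `' OR '1'='1` is treated as an ordinary (non-matching) username, and a `<script>` tag in a comment is rendered as inert text.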

Conclusion

Python continues to power modern web applications by offering ease of development, powerful frameworks, seamless database integration, and AI-driven capabilities. Whether you’re building a personal project, an e-commerce platform, or a large enterprise solution, Python provides the tools and flexibility needed to create high-quality web applications.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python certification#python online classes


The Role of Residential Proxies in Enhancing Business Data Scraping

In order to obtain accurate and comprehensive market intelligence, many companies choose to collect information through online data crawling. However, with the strengthening of network protection measures, direct data crawling often faces many challenges, such as IP blocking, access restrictions, etc. At this time, residential proxies, as an effective solution, are gradually becoming an important tool for online data crawling for enterprises.

What is a residential proxy?

A residential proxy is a special type of proxy server that uses an IP address from a real home or residential network to make network requests. Unlike data center proxies, residential proxy IP addresses are more authentic and trustworthy because they share the same network environment as real Internet users.

How do residential proxies help with online data scraping?

1. Bypass IP blocking and access restrictions

When performing large-scale data scraping, companies often encounter problems with IP blocking or restricted access. This is because the target website sets up an anti-crawler mechanism to prevent crawlers from abusing resources. Residential proxies can effectively bypass these restrictions by providing a large number of real residential IP addresses, which can simulate the access behavior of different users in different locations. When an IP address is blocked, the company can quickly switch to another residential IP to ensure the continuity and success rate of data scraping.

2. Improve the anonymity and security of data scraping

When using residential proxies for data scraping, the company's real IP address and identity are hidden and replaced by the IP address of the proxy server. This makes it difficult for the target website to track the company's real identity and location, thereby improving the anonymity and security of data scraping. This is particularly important for companies that need to protect trade secrets or avoid unnecessary competition risks.

3. Get more authentic and comprehensive data samples

The IP addresses of residential proxies come from real users, who are diverse and cover different demographic groups and geographic locations. Therefore, using residential proxies for data scraping can obtain more authentic and comprehensive data samples. These data samples are more able to reflect real human behavior and consumption habits, and provide more accurate basis for the company's market analysis and decision-making.

4. Support global data scraping

With the accelerated development of globalization, companies have an increasing demand for global market data. Residential proxies are usually located all over the world, which means that companies can access target websites from different geographical locations and conduct global data scraping. This helps companies understand global market dynamics, grasp market trends, and provide strong support for cross-border operations and market competition.
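The switching behavior described in point 1 (move to another IP when one is blocked) can be sketched as a simple round-robin pool. This is a minimal illustration using only the standard library, not any provider's actual API: the `ProxyRotator` class is hypothetical, and real proxy addresses and credentials would come from your provider.

```python
import urllib.request


class ProxyRotator:
    """Cycle through a pool of proxies, skipping ones marked as blocked."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._proxies = list(proxies)
        self._blocked = set()
        self._index = 0

    def mark_blocked(self, proxy):
        """Record that the target site has blocked this proxy."""
        self._blocked.add(proxy)

    def next_proxy(self):
        """Return the next usable proxy in round-robin order."""
        for _ in range(len(self._proxies)):
            proxy = self._proxies[self._index]
            self._index = (self._index + 1) % len(self._proxies)
            if proxy not in self._blocked:
                return proxy
        raise RuntimeError("all proxies are blocked")

    def opener(self):
        """Build a urllib opener that routes traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

A scraper would call `opener()` before each request and call `mark_blocked()` when the response indicates a block (for example, an HTTP 403 or 429), so that later requests skip that address.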

Advantages of Swiftproxy residential proxies

1. Authenticity and credibility:

The IP addresses of residential proxies provided by Swiftproxy come from real home or residential networks, which makes them more authentic and credible. Compared with data center proxies, residential proxies are less likely to be identified as crawlers by websites and platforms.

2. Rich IP resources:

Swiftproxy has 70 million residential IPs, enough to support data scraping tasks of different sizes and requirements. These IP resources can also be dynamically allocated and updated according to an enterprise's needs.

3. Stability and reliability:

Swiftproxy provides high-quality network connections and stable proxy services. This ensures that enterprises will not encounter problems such as network interruptions or proxy failures when scraping data.

Precautions for using residential proxies

Although residential proxies have many advantages in online data scraping, enterprises still need to pay attention to the following points when using them:

1. Comply with laws and regulations:

When using residential proxies for data scraping, enterprises should comply with relevant laws, regulations and platform regulations. No illegal or malicious data scraping behavior shall be carried out.

2. Protect user privacy:

When using residential proxies for data scraping, enterprises should ensure that users' personal information and privacy data will not be leaked or abused.

Conclusion

In summary, residential proxies, as an effective online data scraping tool, are gradually becoming an important means for enterprises to obtain market intelligence. Residential proxies provide enterprises with a more convenient and efficient data capture solution by bypassing IP blocking, improving anonymity and security, obtaining more authentic and comprehensive data samples, and supporting global data capture. However, when using them, enterprises should also pay attention to reasonably planning capture strategies and complying with laws and regulations to ensure the legality and effectiveness of data capture.


Data extraction services

Data extraction has become an essential aspect of modern business operations, enabling companies to collect, process, and leverage information from diverse sources. This forum thread discusses the importance, applications, and best practices related to data extraction services.

What is Data Extraction?

Data extraction refers to the process of retrieving data from various sources, including websites, documents, databases, or APIs. This data can then be organized and used for various purposes like analytics, reporting, machine learning, or decision-making. It’s crucial for businesses to transform raw data into structured formats for better analysis and insights.

Types of Data Extraction

Web Scraping: This is the most common form of data extraction, where data is extracted from websites. This can include information like product prices, customer reviews, and news updates. Web scraping tools like BeautifulSoup, Scrapy, and Selenium are frequently used to automate the process. Web scraping can be both legal and ethical, but it’s important to respect the terms and conditions of the websites from which the data is being extracted.

Text Extraction from Documents: Companies often need to extract text data from documents like PDFs, Word files, and scanned images. OCR (Optical Character Recognition) tools, such as Tesseract and Adobe Acrobat, are widely used for this purpose. These tools convert scanned images into editable text, facilitating the extraction of valuable data.

API Data Extraction: Many modern businesses rely on APIs to fetch data from various sources. This method involves using APIs to access data in a structured format (e.g., JSON, XML) and integrate it with internal systems. API extraction is commonly used for accessing financial data, social media metrics, or even IoT device data.
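For API extraction, the work is mostly parsing a structured response and keeping the fields you need. The sketch below parses a JSON payload with the standard library. The response shape, the field names, and the `extract_quotes` helper are assumptions invented for the example, since every API defines its own schema.

```python
import json

# Hypothetical API response; real payloads follow the provider's schema.
SAMPLE_RESPONSE = """
{"data": [
  {"symbol": "ABC", "price": "12.50", "currency": "USD"},
  {"symbol": "XYZ", "price": "7.25", "currency": "EUR"}
], "next_page": null}
"""


def extract_quotes(raw_json):
    """Turn the raw JSON text into a list of typed records."""
    payload = json.loads(raw_json)
    return [
        {
            "symbol": item["symbol"],
            "price": float(item["price"]),  # convert string prices to numbers
            "currency": item["currency"],
        }
        for item in payload.get("data", [])
    ]
```

The resulting list of dictionaries can be loaded directly into a database table or a reporting tool.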

Why Data Extraction Matters

Business Intelligence: The ability to extract, transform, and analyze data is vital for making informed decisions. Data extraction allows businesses to collect data from multiple sources, providing a more comprehensive view of their industry, competitors, and market trends.

Automation: By automating data extraction processes, companies can save time and reduce human error. Automated systems can continuously extract and process data, ensuring that businesses always have up-to-date information.

Data Integration: Extracted data can be integrated into business applications, customer relationship management (CRM) systems, enterprise resource planning (ERP) software, and other platforms to enable better data-driven decision-making.

Challenges in Data Extraction

Despite its numerous benefits, data extraction does come with some challenges:

Data Quality: Extracting accurate and high-quality data is essential. Poor-quality data can lead to faulty analysis, making it important to use the right tools and verify the sources.

Legal and Ethical Concerns: In some cases, extracting data from websites or third-party sources may violate terms of service or privacy laws (such as GDPR). Organizations need to ensure they comply with legal regulations when collecting data.

Data Structuring: Raw extracted data often needs significant transformation before it can be used effectively. This might involve cleaning the data, standardizing formats, and dealing with missing values.
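The structuring step can be illustrated with a short cleaning routine that trims text, standardizes dates and prices, and handles missing values. This is a minimal sketch under assumed inputs: the row layout, the two date formats, and the rule of dropping rows without a name are all choices made for the example.

```python
from datetime import datetime

# Hypothetical raw rows, as they might come out of a scraper.
RAW_ROWS = [
    {"name": "  Widget A ", "price": "$1,299.00", "scraped": "2024-03-05"},
    {"name": "Widget B", "price": "", "scraped": "05/03/2024"},
    {"name": "", "price": "15", "scraped": "2024-03-06"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def parse_price(text):
    """Strip currency symbols and separators; missing prices become None."""
    cleaned = text.replace("$", "").replace(",", "").strip()
    return float(cleaned) if cleaned else None


def clean_rows(rows):
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        if not name:
            continue  # drop rows missing the required field
        cleaned.append({
            "name": name,
            "price": parse_price(row["price"]),
            "scraped": parse_date(row["scraped"]),
        })
    return cleaned
```

Keeping missing prices as `None` rather than guessing a value lets downstream analysis decide how to treat the gaps.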

Best Practices for Data Extraction

Choose the Right Tools: Selecting the appropriate data extraction tools based on the type of data you need is critical. Consider web scraping tools, OCR solutions, and APIs carefully to match your needs.

Prioritize Data Privacy: Ensure that you follow legal guidelines such as GDPR when extracting personal or sensitive data. Consent should be obtained where necessary.

Ensure Data Accuracy: Implement processes to verify the accuracy of the extracted data. Use algorithms to clean and correct errors in the dataset.

Scalability: Ensure that your data extraction system can handle the increasing volume of data as your business grows. Scalable tools like cloud-based data extraction services are ideal for large-scale operations.

Conclusion

Data extraction services play a pivotal role in streamlining data collection processes and enhancing business intelligence. Whether it involves web scraping, document processing, or API integrations, having the right tools and strategies is essential for successfully leveraging extracted data. However, businesses must also be aware of the challenges, such as data quality and compliance, to maximize the effectiveness of their data extraction efforts.

#Data extraction#data mining#data transmission#data translation#data analytics#video analytics#cctv surveillance#cctv monitoring#branding#business#big data#data#adobe indesign


Best Web Scraping Software to Automate Data Collection

Choosing the right web scraping software is key to extracting accurate and valuable data. Popular options like Scrapy, Octoparse, and ParseHub offer powerful features to help you gather data quickly and efficiently. Need expert advice on the best web scraping software?

Contact us now : https://outsourcebigdata.com/top-10-web-scraping-software-you-should-explore/

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built quite a few AI & ML solutions, AI-driven web scraping solutions, AI-data Labeling, AI-Data-Hub, and Self-serving BI solutions. We started in 2012 and have successfully delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI driven web scraping & workflow automation platform

APISCRAPY is an AI driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI augmented annotation & labeling solution

AI-Labeler is an AI augmented data annotation platform that combines the power of artificial intelligence with in-person involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data that allows enterprises to obtain high-quality data easily and efficiently and use it for training and developing AI models.

PRICESCRAPY: AI enabled real-time pricing solution

An AI and automation driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs, so that companies can leverage that data and integrate the APIs into their systems and applications.

Locations:

USA: 1-30235 14656

Canada: +1 4378 370 063

India: +91 810 527 1615

Australia: +61 402 576 615

Email: [email protected]


Top 5 Web Scraping Tools in 2021

Web scraping, also known as web harvesting or web data extraction, is the process of obtaining and analyzing data from a website. The extracted data is then saved in a local database for various purposes. Web scraping can be performed manually or automatically through software. There is no doubt that automated processes are more cost-effective than manual ones. Because there is a large amount of digital information online, companies equipped with these tools can collect more data at a lower cost than companies without them, and gain a competitive advantage in the long run.

How web scraping benefits businesses

Modern companies run on good data. Although the Internet is effectively the largest database in the world, it is full of unstructured data that organizations cannot use directly. Web scraping helps overcome this hurdle by turning websites into structured data, which in many cases is of great value.

Sales and marketing teams benefit from contact scraping, a specific type of web scraping that collects business contact information from websites. This helps attract more sales leads, close more deals, and improve marketing. By monitoring job board updates with a web scraper, recruiters can find their ideal candidates with very specific searches. Financial analysts use web scraping to collect data about global stock markets, financial markets, transactions, commodities, and economic indicators to make better decisions. E-commerce and travel sites get product prices and availability from competitors and use the extracted data to maintain a competitive advantage. Data from social media and review sites (Facebook, Twitter, Yelp, etc.) lets you monitor the impact of your brand and take brand reputation and customer review management to a new level.

It is also a great tool for data scientists and journalists. An automated web scraper can collect millions of data points into your database in just a few minutes. This data can be used to support data models and academic research. And if you are a journalist, you can collect rich data online to practice data-driven journalism.

Web scraping tools list

In many cases, you can use a web scraper to extract website data for your own use. You can use browser tools to extract data from the website you are browsing in a semi-automatic way, or you can use free APIs or paid services to automate the scraping process. If you are technically proficient, you can even use programming languages like Python to develop your own web scraping applications.

No matter what your goal is, there are some tools that suit your needs. This is our curated list of top web crawlers.

Scraper site API

License: FREE

Website: https://www.scrapersite.com/

Scraper site enables you to create scalable web scrapers. It can handle proxies, browsers, and CAPTCHAs on your behalf, so you can get data from any webpage with a simple API call.

Scraper site is easy to integrate. Just send a GET request with your API key and the target URL to their API endpoint, and it returns the HTML.
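The request pattern described here (a GET request carrying an API key and a target URL) might look like the sketch below. The endpoint address and the `api_key` and `url` parameter names are assumptions for illustration only; check the service's own documentation for the real values.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint; not the service's documented address.
API_ENDPOINT = "https://api.example.com/scrape"


def build_request_url(api_key, target_url, endpoint=API_ENDPOINT):
    """Encode the API key and target URL as query parameters."""
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{endpoint}?{query}"


def fetch_html(api_key, target_url, timeout=30):
    """Send the GET request and return the response body as text."""
    request_url = build_request_url(api_key, target_url)
    with urlopen(request_url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Encoding the target URL matters: without it, any query string in the page address would be mistaken for parameters of the API call itself.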

Scraper site is an extension for Chrome that is used to extract data from web pages. You can create a sitemap that defines how and where the content should be extracted. Then you can export the captured data to CSV.

Web Scraping Function List:

Scrape multiple pages

Scraped data stored in local storage

Multiple data selection types

Extract data from dynamic pages (JavaScript + AJAX)

Browse the captured data

Export the captured data to CSV

Import and export sitemaps

Depends only on the Chrome browser

The Chrome extension is completely free to use

Highlights: sitemap, e-commerce website, mobile page.

Beautiful Soup

License: Free

Site: https://www.crummy.com/software/BeautifulSoup/

Beautiful Soup is a popular Python library designed for web scraping.

Features list:

• Simple ways to navigate, search, and modify the parse tree
• Document encodings are handled automatically
• Support for different parsing strategies

Highlights: Completely free, highly customizable, and developer-friendly.

dexi.io

License: Commercial, starting at $119 a month.

Site: https://dexi.io/

Dexi provides leading web scraping software for enterprises. Their solutions include web scraping, interaction, monitoring, and processing software to provide fast data insights that lead to better decisions and better business performance.

Features list:

• Scraping the web on a large scale
• Intelligent data mining
• Real-time data points

Import.io

License: Commercial, starting at $299 per month.

Site: https://www.import.io/

Import.io provides a comprehensive web data integration solution that makes it fast, easy, and affordable to increase the strategic value of your web data. It also has a professional services team that can help clients maximize the value of the solution.

Features list:

• Point-and-click training
• Interactive workflow
• Scheduled scraping
• Machine learning suggestions
• Works with logins
• Generates website screenshots
• Notifications upon completion
• URL generator

Highlights: Point and Click, Machine Learning, URL Builder, Interactive Workflow.

Scrapinghub

License: Commercial, starting at $299 per month.

Site: https://scrapinghub.com/

Scrapinghub is the main creator and maintainer of Scrapy, the most popular web scraping framework written in Python. They also provide data services on demand for the following use cases: product and price information, alternative financial data, competition and market research, sales and market research, news and content monitoring, and retail and distribution monitoring.

Features list:

• Cloud-hosted crawling with Scrapy Cloud
• Handling of anti-bot countermeasures and other challenges

Highlights: scalable scraping cloud.

Summary

The web is a huge repository of data. Firms that use web scraping do so to maintain a competitive advantage. Although all of the web scraping tools and services above share the goal of turning a website into data, they differ in functionality, price, ease of use, and more. We hope you can find the one that best suits your needs. Happy scraping!

2 notes

Text

What is Web Scraping Amazon Inventory?

Amazon's e-commerce platform offers a wide range of services, but Amazon does not give easy access to its product data. As a result, many businesses in the e-commerce market end up scraping Amazon product listings in some form. Whether you need competitor research, online shopping data, or an API for your app project, web scraping Amazon inventory can address the problem. Scraping Amazon data is not limited to smaller businesses either: big companies like Walmart also scrape Amazon product data to keep a record of prices and policies.

Reasons behind Scraping Amazon Product Data

Amazon holds a huge amount of data, such as products, ratings, and reviews. Sellers and vendors both benefit from web scraping Amazon inventory. You will need an understanding of how much data is involved and how many websites you want to scrape to fetch all the information. Amazon data scraping automates an extraction task that would otherwise consume a lot of time.

1. Enhancing Product Design using Web Scraping Amazon Inventory

Every product passes through several stages of development. After the initial phases of product creation, it's important to place the product on the market. Client feedback or other issues, on the other hand, will ultimately arise, demanding a redesign or enhancement. Scraping Amazon design data such as size, material, and colors makes it simpler to continuously improve your product design.

2. Consider Customer Inputs

After scraping for basic designs and exploring improvements, it is a good time to consider customer feedback. While customer reviews are not the same as product information, they often include comments about the design or the buying procedure. It's essential to analyze client feedback while changing or updating designs. Scrape Amazon reviews to identify common sources of customer confusion. E-commerce data scraping allows you to compare and contrast evaluations, enabling you to spot trends or common difficulties.
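As a rough illustration of spotting common difficulties in reviews, the sketch below counts the most frequent words across a few review texts. The reviews, the stop-word list, and the common_terms helper are all invented for this example; a real analysis would need a fuller stop-word list and more careful text processing.

```python
import re
from collections import Counter

# Hypothetical review texts, invented for this example.
reviews = [
    "The zipper broke after a week and the strap is too short.",
    "Great bag, but the zipper sticks.",
    "Strap is comfortable. Zipper feels cheap.",
]

# Small hand-picked stop-word list; a real project would use a fuller one.
STOP_WORDS = {"the", "a", "and", "is", "too", "but", "after", "feels"}


def common_terms(texts, top_n=3):
    """Return the top_n most frequent non-stop-words across all texts."""
    counts = Counter()
    for text in texts:
        # Lowercase and keep alphabetic runs only.
        words = re.findall(r"[a-z]+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(top_n)


print(common_terms(reviews))
```

In this made-up sample, "zipper" appears in every review and "strap" in two of them, which is the kind of recurring complaint worth feeding back into the design.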

3. Searching for the Best Deal

Despite the importance of materials and style, many clients place a premium on price. When browsing through Amazon product search results, the first attribute that distinguishes otherwise identical options is price. Scraping price data for your own and your competitors' items gives you a clear view of the pricing range. Once the range is determined, it becomes easier to find the ideal price point for your company, taking manufacturing and shipping costs into account.
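The arithmetic behind this can be sketched in a few lines. All prices and costs below are hypothetical, and price_position is an illustrative helper, not part of any scraping tool.

```python
from statistics import median

# Hypothetical competitor prices (USD) for comparable listings.
competitor_prices = [19.99, 24.50, 22.00, 29.99, 21.49]

# Hypothetical per-unit costs for your own product.
manufacturing_cost = 11.00
shipping_cost = 4.50


def price_position(prices, unit_cost):
    """Summarize the competitor price range and the margin at the median price."""
    low, high, mid = min(prices), max(prices), median(prices)
    return {
        "low": low,
        "median": mid,
        "high": high,
        # Margin per unit if you priced at the competitor median.
        "margin_at_median": round(mid - unit_cost, 2),
    }


summary = price_position(competitor_prices, manufacturing_cost + shipping_cost)
print(summary)
```

Pricing at the median is only one possible choice; the same summary can be used to test a price anywhere between the low and high ends of the range.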

Web Scraping Amazon Inventory

Scraping Amazon product lists will help your business in a variety of ways. Manually gathering Amazon data is far more difficult than it appears. For instance, looking out for every product link when finding a specific product category can be time-consuming. Furthermore, thousands of products flood your Amazon display when you look for a particular product, and you can't navigate through each product link to obtain information. Instead, you may use Amazon product scraping tools to swiftly scrape product listings and other product information. This includes the following:

1. Product Name:

Scraping product names is essential. E-commerce data scraping can surface many ideas, including how to name your products and create a unique identity.

2. Price:

Pricing is the most important step to consider. If you know the pricing strategies used in the market, it becomes easier to price your own product. Scrape Amazon product listings to learn how comparable products are priced.

3. Amazon Bestsellers:

Scraping Amazon Bestsellers gives you an overview of your main competitors and how they operate.

4. Ratings and Reviews:

Amazon collects a wealth of user input in the form of sales, customer reviews, and ratings. Scrape Amazon data and reviews to better understand your customers and their preferences.

5. Product Features

Product characteristics can assist you in understanding the technical aspects of the product, allowing you to quickly identify your USP and how this will benefit the user.

6. Product Description

For a seller, the product is everything. And you'll need a detailed and compelling product description to entice customers.
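The six fields listed above map naturally onto a single record per listing. The sketch below is one possible shape for such a record; the ProductRecord class, its field names, and the sample values are assumptions made for illustration and do not reflect any Amazon schema.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class ProductRecord:
    """One scraped listing; field names are illustrative, not an Amazon schema."""
    name: str
    price: Optional[float] = None
    bestseller_rank: Optional[int] = None
    rating: Optional[float] = None
    review_count: int = 0
    features: List[str] = field(default_factory=list)
    description: str = ""


# Hypothetical values, invented for this example.
record = ProductRecord(
    name="Example Travel Backpack",
    price=39.99,
    bestseller_rank=12,
    rating=4.4,
    review_count=1280,
    features=["Water resistant", "Laptop sleeve"],
    description="A 30-litre backpack for daily commuting.",
)

# Serialize to JSON so the record can be stored or passed downstream.
print(json.dumps(asdict(record), indent=2))
```

Optional fields default to empty values so that a listing with missing data (for example, no bestseller rank) can still be stored.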

Ways to Scrape Amazon Inventory

1. Web Scraping using Python Libraries

Scrapy is a Python framework for large-scale web scraping. It comes with everything you need to quickly extract information from websites, process it as necessary, and store it in the structure and format of your choice. There is no “one-size-fits-all” technique for extracting data from websites because sites differ so much from one another.

2. Choosing Web Scraping Services

You'll require skilled, professional staff who can organize all of the data logically for web scraping Amazon inventory. The e-commerce scraping solution from X-Byte Enterprise Crawling can provide you with the information you need quickly.

If you are looking for Amazon inventory data scraping then you can contact X-Byte Enterprise Crawling or ask for a free quote!

For more visit: https://www.xbyte.io/what-is-web-scraping-amazon-inventory.php

#Amazon Data Scraping#Scrape Amazon Product Data#Amazon Product Details#Pricing data scraping#Amazon Reviews Scraping#web scraping services#Amazon Price Intelligence

0 notes

Text

Web scraping is mainly done in the following ways

1.Build your very own scraper from scratch

This is for code-savvy folks who love experimenting with site layouts and tackling blocking problems, and who are well versed in a programming language such as Python, R, or Perl. Just as in their routine programming for any data science project, a student or researcher can easily build a scraping solution with open-source frameworks such as the Python-based Scrapy or the rvest and RCrawler packages in R.

2.Developer-friendly tools to host efficient scrapers

These web scraping tools are aimed mostly at developers, who can construct custom scraping agents with programming logic in a visual manner. You can liken these tools to the Eclipse IDE for Java EE applications. Features for rotating IPs, hosting agents, and parsing data are available in this range for customization.

3.DIY Point-and-click web scraping tools for the no-coders

For the self-confessed non-techie with no coding knowledge, there’s a bunch of visually appealing point-and-click tools that help you build sales lists or populate product information for your catalog with zero manual scripting.

4.Outsourcing the entire web scraping project

For enterprises that look for extensively scaled scraping or time-pressed projects where you don’t have a team of developers to put together a scraping solution, web scraping services come to the rescue.
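The IP rotation mentioned for the developer-friendly tools can be pictured as a simple round-robin assignment of requests to proxies. The sketch below only builds the assignment plan and sends no requests; the proxy addresses, the URLs, and the assign_proxies helper are hypothetical and chosen purely for illustration.

```python
from itertools import cycle

# Hypothetical proxy addresses (documentation-range IPs), not real servers.
PROXIES = [
    "http://192.0.2.10:8080",
    "http://192.0.2.11:8080",
    "http://192.0.2.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]


# Hypothetical target pages.
urls = [f"https://example.com/page/{n}" for n in range(1, 6)]
plan = assign_proxies(urls, PROXIES)
for url, proxy in plan:
    print(url, "via", proxy)
```

Hosted tools usually handle this behind the scenes, often with extra logic such as retiring proxies that start getting blocked.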

If you are going with the tools, then here are the advantages and drawbacks of popular web scraping tools that fall in the 2nd and 3rd category.


0 notes

Text

Best 5 Web Scraping Tools

1. Cheerio

Who is this for: NodeJS developers who want a straightforward way to parse HTML.

Why you should use it: Cheerio offers an API similar to jQuery, so developers familiar with jQuery will immediately feel at home using Cheerio to parse HTML. It is blazing fast, and offers many helpful methods to extract text, html, classes, ids, and more. It is by far the most popular HTML parsing library written in NodeJS.

2. Beautiful Soup

Who is this for: Python developers who just want an easy interface to parse HTML, and don't necessarily need the power and complexity that comes with Scrapy.

Why you should use it: Like Cheerio for NodeJS developers, Beautiful Soup is by far the most popular HTML parser for Python developers. It's been around for over a decade now and is extremely well documented, with many tutorials on using it to scrape various websites in both Python 2 and Python 3.

3. Puppeteer

Who is this for: Puppeteer is a headless Chrome API for NodeJS developers who want very granular control over their scraping activity.

Why you should use it: As an open source tool, Puppeteer is completely free. It is well supported and actively being developed and backed by the Google Chrome team itself. It is quickly replacing Selenium and PhantomJS as the default headless browser automation tool. It has a well thought out API, and automatically installs a compatible Chromium binary as part of its setup process, meaning you don't have to keep track of browser versions yourself.

4. Content Grabber

Who is this for: Enterprises looking to build robust, large scale web data extraction agents.

Why you should use it: Content Grabber provides end-to-end service, offering knowledgeable experts to develop custom web data extraction solutions for clients. In addition, they offer training and implementation services, as well as the ability to host their solutions on premises.

5. Mozenda

Who is this for: Enterprises looking for a cloud based self serve web scraping platform need look no further. With over 7 billion pages scraped, Mozenda has experience in serving enterprise customers from all around the world.

Why you should use it: Mozenda allows enterprise customers to run web scrapers on their robust cloud platform. They set themselves apart with the customer service (providing both phone and email support to all paying customers). Its platform is highly scalable and will allow for on premise hosting as well.


0 notes

Text

Stop Paying for Web Data Scraping Tools (And Try This Instead)

In the ever-expanding digital landscape, big data continues to drive innovation and growth. With the exponential increase in data generation predicted to reach the equivalent of 212,765,957 DVDs daily by 2025, businesses are turning to big data and analytics to gain insights and fuel success in the global marketplace.

Web data scraping has emerged as a vital tool for businesses seeking to harness the wealth of information available on the internet. By extracting non-tabular or poorly structured data and converting it into a usable format, web scraping enables businesses to align strategies, uncover new opportunities, and drive growth.

Free vs. Paid Web Scraping Tools: Making the Right Choice

When it comes to web data scraping, businesses have the option to choose between free and paid tools. While both options serve the purpose of data extraction, paid tools often offer additional features and functionalities. However, for businesses looking to save on operational costs without compromising on quality, free web scraping tools present a viable solution.

Top Free Web Scrapers in the Market

ApiScrapy: ApiScrapy offers advanced, easy-to-use web scraping tools tailored to meet the diverse data needs of businesses across industries. With support for various data formats such as JSON, XML, and Excel, ApiScrapy's web scraper ensures seamless data extraction from websites with anti-bot protection. Leveraging AI technologies, ApiScrapy adapts to website structures, delivering high-quality data for quick analysis.

Octoparse: Octoparse is a user-friendly web scraping tool designed for professionals with no coding skills. It handles both static and dynamic websites efficiently, delivering data in TXT, CSV, HTML, or XLSX formats. While the free edition limits users to creating up to 10 crawlers, paid plans offer access to APIs and a wider range of IP proxies for faster and continuous data extraction.

Pattern: Pattern is a web data extraction software for Python programming language users, offering accurate and speedy data extraction. With its easy-to-use toolkit, Pattern enables users to extract data effortlessly, making it ideal for both coders and non-coders alike.