#scrapy in python

Explore tagged Tumblr posts

Text

Venkatesh (Rahul Shetty) Academy-Lifetime Access Get lifetime access to Venkatesh (Rahul Shetty) Academy and learn software testing, AI testing, automation tools, and more. Learn at your own pace with real projects and expert guidance. Perfect for beginners and professionals who want long-term learning support.

#ai generator tester#ai software testing#ai automated testing#ai in testing software#playwright automation javascript#playwright javascript tutorial#playwright python tutorial#scrapy playwright tutorial#api testing using postman#online postman api testing#postman automation api testing#postman automated testing#postman performance testing#postman tutorial for api testing#free api for postman testing#api testing postman tutorial#postman tutorial for beginners#postman api performance testing#automate api testing in postman

0 notes

Text

Hire Expert Scrapy Developers for Scalable Web Scraping & Data Automation

Looking to extract high-value data from the web quickly and accurately? At Prospera Soft, we offer top-tier Scrapy development services to help businesses automate data collection, gain market insights, and scale operations with ease.

Our team of Scrapy experts specializes in building robust, Python-based web scrapers that deliver 10X faster data extraction, 99.9% accuracy, and full cloud scalability. From price monitoring and sentiment analysis to lead generation and product scraping, we design intelligent, secure, and GDPR-compliant scraping solutions tailored to your business needs.

Why Choose Our Scrapy Developers?

✅ Custom Scrapy Spider Development for complex and dynamic websites

✅ AI-Optimized Data Parsing to ensure clean, structured output

✅ Middleware & Proxy Rotation to bypass anti-bot protections

✅ Seamless API Integration with BI tools and databases

✅ Cloud Deployment via AWS, Azure, or GCP for high availability

Whether you're in e-commerce, finance, real estate, or research, our scalable Scrapy solutions power your data-driven decisions.

#Hire Expert Scrapy Developers#scrapy development company#scrapy development services#scrapy web scraping#scrapy data extraction#scrapy automation#hire scrapy developers#scrapy company#scrapy consulting#scrapy API integration#scrapy experts#scrapy workflow automation#best scrapy development company#scrapy data mining#hire scrapy experts#scrapy scraping services#scrapy Python development#scrapy no-code scraping#scrapy enterprise solutions

0 notes

Text

Telegram-Scraper - A Powerful Python Script That Allows You To Scrape Messages And Media From Telegram Channels Using The Telethon Library

A powerful Python script that allows you to scrape messages and media from Telegram channels using the Telethon library. Features include real-time continuous scraping, media downloading, and data export capabilities. ___________________ _________\__ ___/ _____/ / _____/| | / \ ___ \_____ \ | | \ \_\ \/ \|____| \______ /_______ /\/ \/ Features 🚀 Scrape messages from multiple Telegram…

#KitPloit#OSINT#OSINT Python#OSINT Tool#Osinttool#Scraper#Scraping#Scrapy#Telegram#TeleGram-Scraper#Tracking

0 notes

Text

Master Web Scraping with Python: Beautiful Soup, Scrapy, and More

Learn web scraping with Python using Beautiful Soup, Scrapy, and other tools. Extract and automate data collection for your projects seamlessly.

#WebScraping#Python#BeautifulSoup#Scrapy#Automation#PythonProgramming#DataScience#TechLearning#PythonDevelopment

0 notes

Text

Unlock the Secrets of Python Web Scraping for Data-Driven Success

Ever wondered how to extract data from websites without manual effort? Python web scraping is the answer!

This blog covers everything you need to know to harness Python’s powerful libraries like BeautifulSoup, Scrapy, and Requests.

Whether you're scraping for research, monitoring prices, or gathering content, this guide will help you turn the web into a vast source of structured data.

Learn how to set up Python for scraping, handle errors, and ensure your scraping process is both legal and efficient.

If you're ready to dive into the world of information mining, this article is your go-to resource.

0 notes

Text

Why Should You Do Web Scraping for python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content. This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.

If you'd like guidance on getting started or exploring specific use cases, let me know!

2 notes

·

View notes

Text

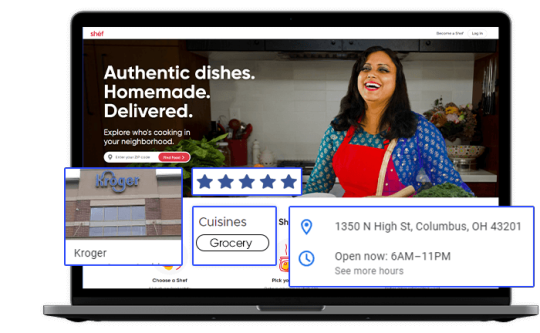

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction

4 notes

·

View notes

Text

Python simplifies web scraping using libraries like BeautifulSoup, Requests, and Selenium. You can fetch web pages, parse HTML, and extract data such as text, images, or links. This data can then be cleaned, structured, and stored in files or databases for analysis, automation, or business intelligence tasks.

0 notes

Text

Making your very first Scrapy spider - 01 - Python scrapy tutorial for inexperienced persons https://www.altdatum.com/wp-content/uploads/2019/10/Making-your-very-first-Scrapy-spider-01-Python.jpg #inexperienced #Making #persons #Python #Pythoncourse #pythoncourseforbeginners #pythonforbeginners #Pythonprogramming #PythonScrapytutorial #pythonscrapywebcrawler #pythontrainingforbeginners #PythonTutorial #pythontutorialforbeginners #pythonwebscrapingtutorial #Scrapy #scrapyframework #scrapyframeworkinpython #scrapyspider #scrapyspiderexample #scrapyspiderpython #scrapyspidertutorial #Scrapytutorial #spider #tutorial #webscraping #webscrapingpython https://www.altdatum.com/making-your-very-first-scrapy-spider-01-python-scrapy-tutorial-for-inexperienced-persons/?feed_id=138654&_unique_id=687ccc421907b

0 notes

Text

Python is no longer just a programming language for developers; it’s becoming an invaluable tool for SEO looking to sharpen their strategies. Imagine wielding the power of automation and data analysis to elevate your search engine optimization efforts. With Python, you can dive deep into keyword relevance and search intent, unraveling mysteries that traditional methods might overlook.

As the digital landscape evolves, so too must our approaches to SEO. Understanding what users want when they type a query into Google is crucial. The right keywords can make or break your online visibility. That’s where Python comes in—streamlining processes and providing insights that drive results.

Ready to unlock new possibilities? Let’s explore how Python can transform your SEO game by offering innovative ways to track keyword relevance and decode search intent with ease. Whether you’re a seasoned pro or just starting out, this journey promises valuable tools tailored for modern SEO challenges.

Understanding Keyword Relevance and Search Intent

Keyword relevance is the heart of effective SEO. It refers to how closely a keyword matches the content on your page. Choosing relevant keywords helps search engines understand what your site offers.

Search intent goes deeper. It’s about understanding why users perform a specific query. Are they looking for information, trying to make a purchase, or seeking navigation? Grasping this concept is crucial for creating content that resonates with audiences.

Different types of search intents exist: informational, transactional, navigational, and commercial investigation. Each type requires tailored strategies to meet user expectations effectively.

By aligning keyword relevance with search intent, you can craft content that not only attracts visitors but also engages them meaningfully. This dual focus enhances user experience and boosts rankings in SERPs over time.

Using Python for Keyword Research

Keyword research is a crucial step in any SEO strategy. Python simplifies this process significantly, allowing you to analyze large datasets efficiently.

With libraries like Pandas and NumPy, you can manipulate and clean keyword data quickly. These tools help you uncover valuable insights that drive content creation.

You can also use the Beautiful Soup library for web scraping. This enables you to gather keywords from competitor sites or industry forums effortlessly.

Additionally, integrating Google Trends API within your scripts offers real-time keyword popularity metrics. This feature helps identify rising trends that are relevant to your niche.

Python scripts automate repetitive tasks, freeing up time for more strategic initiatives. By leveraging these capabilities, you’re better equipped to optimize your campaigns effectively without getting bogged down by manual processes.

Tracking Search Intent with Python Scripts

Understanding search intent is crucial for any SEO strategy. With Python, you can automate the process of analyzing user queries and determining their underlying motivations.

Using libraries like BeautifulSoup or Scrapy, you can scrape SERPs to gather data on keyword rankings and associated content. This helps identify patterns in how users interact with different topics.

Additionally, Natural Language Processing (NLP) tools such as NLTK or spaCy can assist in categorizing keywords based on intent—whether informational, transactional, or navigational.

By implementing custom scripts, you can quickly assess which keywords align best with your audience’s needs. This not only saves time but also enhances your ability to target content effectively.

Automating this analysis allows for regular updates on changing search behaviors. Staying ahead of trends means better optimization strategies that resonate with real user intent.

Integrating Python into your SEO Workflow

Integrating Python into your SEO workflow can transform how you approach data analysis and keyword tracking. By automating repetitive tasks, you free up valuable time for strategic thinking and creative exploration.

Start by leveraging libraries like Pandas to analyze large datasets efficiently. This helps in identifying trends or anomalies that might be missed with traditional methods.

You can also use Beautiful Soup for web scraping, allowing you to gather competitor insights directly from their sites. Extracting relevant information becomes a breeze, enhancing your research capabilities.

Consider creating custom scripts tailored to your specific needs. Whether it’s monitoring rankings or analyzing backlinks, Python allows unprecedented flexibility.

Incorporate visualizations using Matplotlib or Seaborn to present data clearly. These visuals make it easier to share findings with team members or stakeholders who may not be as familiar with the technical aspects of SEO.

Tips and Tricks for Mastering Search Intent Analysis with Python

Mastering search intent analysis with Python can elevate your SEO strategy. Start by leveraging libraries like Pandas and Beautiful Soup for efficient data manipulation and web scraping.

Utilize Natural Language Processing (NLP) techniques to understand user queries better. Libraries such as NLTK or SpaCy can help you analyze keywords, phrases, and their contexts.

Make your code modular. Break down functions into smaller components for cleaner debugging and easier updates in the future.

Experiment with machine learning models to predict user behavior based on historical data. Tools like Scikit-learn offer a range of algorithms that can enhance your insights dramatically.

Stay updated with SEO trends. Adapt your scripts regularly to reflect changes in search engine algorithms and user preferences. Continuous learning is key in this ever-evolving field!

Conclusion

As the digital landscape continues to evolve, SEO professionals must adapt and innovate. Python has emerged as a powerful tool that can transform how you approach keyword relevance and search intent.

With Python, you can streamline your keyword research process, analyze massive datasets quickly, and effectively interpret user intent behind searches. The ability to automate repetitive tasks frees up time for deeper strategic thinking.

Integrating Python into your SEO workflow enhances efficiency and results in more targeted strategies. Real-world applications showcase its versatility—from scraping data from SERPs to analyzing trends over time.

By leveraging Python for SEO activities, you’re not only keeping pace with the industry but also setting yourself apart as a forward-thinking professional ready to tackle the challenges of tomorrow’s search engines. Embrace this technology; it could very well be your secret weapon in achieving online success.

0 notes

Text

AI Automated Testing Course with Venkatesh (Rahul Shetty) Join our AI Automated Testing Course with Venkatesh (Rahul Shetty) and learn how to test software using smart AI tools. This easy-to-follow course helps you save time, find bugs faster, and grow your skills for future tech jobs. To know more about us visit https://rahulshettyacademy.com/

#ai generator tester#ai software testing#ai automated testing#ai in testing software#playwright automation javascript#playwright javascript tutorial#playwright python tutorial#scrapy playwright tutorial#api testing using postman#online postman api testing#postman automation api testing#postman automated testing#postman performance testing#postman tutorial for api testing#free api for postman testing#api testing postman tutorial#postman tutorial for beginners#postman api performance testing#automate api testing in postman#java automation testing#automation testing selenium with java#automation testing java selenium#java selenium automation testing#python selenium automation#selenium with python automation testing#selenium testing with python#automation with selenium python#selenium automation with python#python and selenium tutorial#cypress automation training

0 notes

Text

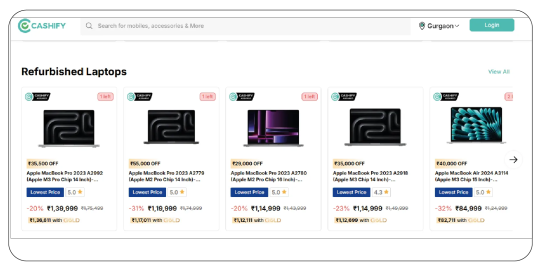

Extract Laptop Resale Value from Cashify

Introduction

In India’s fast-evolving second-hand electronics market, Cashify has emerged as a leading platform for selling used gadgets, especially laptops. This research report investigates how to Extract laptop resale value from Cashify, using data-driven insights derived from Web scraping laptop listings from Cashify and analyzing multi-year pricing trends.

This report also explores the potential of building a Cashify product data scraping tool, the benefits of Web Scraping E-commerce Websites, and how businesses can leverage a Custom eCommerce Dataset for strategic pricing.

Market Overview: The Rise of Second-Hand Laptops in India

In India, the refurbished and second-hand electronics segment has witnessed double-digit growth over the last five years. Much of this boom is driven by the affordability crisis for new electronics, inflationary pressure, and the rising acceptance of certified pre-owned gadgets among Gen Z and millennials. Platforms like Cashify have revolutionized this space by building trust through verified listings, quality checks, and quick payouts. For brands, resellers, or entrepreneurs, the ability to extract laptop resale value from Cashify has become crucial for shaping buyback offers, warranty pricing, and trade-in deals.

Web scraping laptop listings from Cashify allows stakeholders to get a clear, real-time snapshot of average selling prices across brands, conditions, and configurations. Unlike OLX or Quikr, where listings can be inconsistent or scattered, Cashify offers structured data points — model, age, wear and tear, battery health, and more — making it a goldmine for second-hand market intelligence. By combining this structured data with a Cashify product data scraping tool, businesses can identify underpriced segments, negotiate better supplier rates, and create competitive refurbished offerings.

With millions of laptops entering the resale loop every year, the scope of scraping and analyzing Cashify’s data goes far beyond academic interest. For retailers, this data can translate into practical business actions — from customizing trade-in bonuses to launching flash sale campaigns for old stock. The bigger goal is to build an adaptive pricing model that updates dynamically. This is where Web Scraping Cashify.in E-Commerce Product Data proves indispensable for data-driven decision-making.

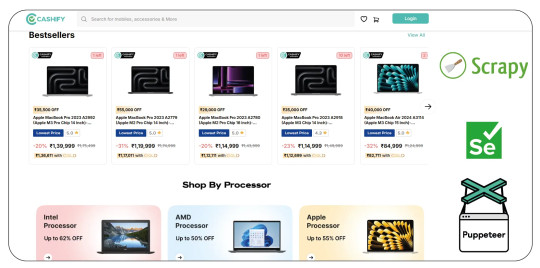

Technology & Tools: How to Scrape Laptop Prices from Cashify India

Building an efficient pipeline to scrape laptop prices from Cashify India demands more than just basic scraping scripts. Cashify uses dynamic content loading, pagination, and real-time pricing updates, which means your scraper must be robust enough to handle AJAX calls, handle IP blocks, and store large datasets securely. Many modern scraping stacks use Python libraries like Scrapy, Selenium, or Puppeteer, which can render JavaScript-heavy pages and pull detailed product listings, price fluctuations, and time-stamped snapshots.

Setting up a Cashify web scraper for laptop prices India can help businesses automate daily price checks, generate real-time price drop alerts, and spot sudden changes in average resale value. Combining this with a smart notification system ensures refurbishers and second-hand retailers stay one step ahead of market fluctuations.

Additionally, deploying a custom eCommerce dataset extracted from Cashify helps link multiple data points: for example, pairing model resale values with the original launch price, warranty status, or historical depreciation. This layered dataset supports advanced analytics, like predicting when a specific model’s resale value will hit rock bottom — an insight invaluable for maximizing margins on bulk procurement.

A good Cashify product data scraping tool should include error handling, proxy rotation, and anti-bot bypass methods. For larger operations, integrating this tool with CRM or ERP software automates workflows — from setting competitive buyback quotes to updating storefront listings. Ultimately, the technical strength behind web scraping e-commerce websites is what makes data actionable, turning raw pricing into real profit.

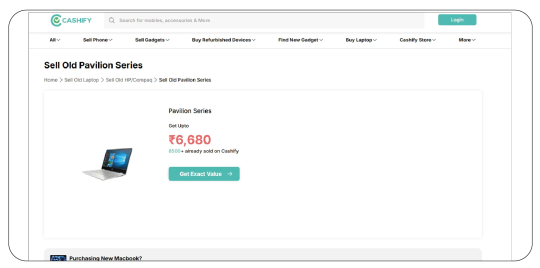

Opportunities: Turning Scraped Cashify Data into Business Strategy

Once you extract laptop resale value from Cashify, the next step is turning this raw pricing intelligence into a clear business advantage. For individual resellers, knowing the exact resale price of a MacBook Air or HP Pavilion in real-time can mean the difference between a profit and a loss. For larger refurbishing chains or online marketplaces, scraped data powers dynamic pricing engines, localized offers, and even targeted marketing campaigns for specific models or city clusters.

For instance, with a robust Cashify.com laptop pricing dataset India, a company can forecast upcoming spikes in demand — say during the start of the academic year when students buy affordable laptops — and stock up on popular mid-range models in advance. Additionally, trends in price drop alerts help predict when it’s cheaper to buy in bulk. With a Cashify web scraper for laptop prices India, these insights update automatically, ensuring no opportunity is missed.

Beyond pricing, the data can reveal supply gaps — like when certain brands or specs become scarce in specific cities. Using Web Scraping Solutions, retailers can then launch hyperlocal campaigns, offering better trade-in deals or doorstep pickups in under-supplied zones. This level of precision turns simple scraping into a strategic tool for growth.

In summary, the real power of web scraping laptop listings from Cashify lies not just in collecting prices, but in transforming them into a sustainable, profitable second-hand business model. With a solid scraping stack, well-defined use cases, and data-driven action plans, businesses can stay ahead in India’s booming refurbished laptop market.

Key Insights

Growing Popularity of Used Laptops

Analysis:

With over 7 million units projected for 2025, there’s a clear demand for affordable laptops, boosting the need to extract laptop resale value from Cashify for resale arbitrage and trade-in programs.

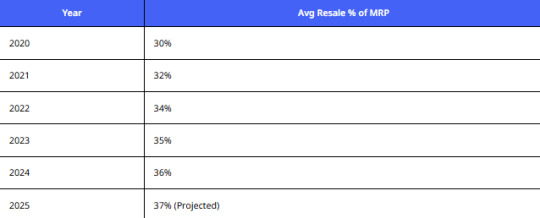

Average Resale Value Trend

Analysis:

Consumers get back an average of 30–37% of the original price. This data justifies why many refurbishers and dealers scrape laptop prices from Cashify India to negotiate smarter buyback deals.

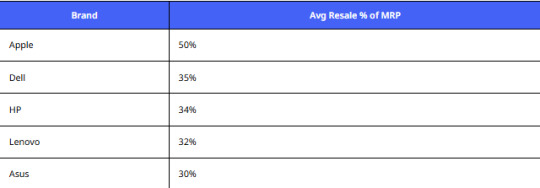

Brand-wise Resale Premium

Analysis:

Apple retains the highest value — a key insight for businesses using a Cashify.com laptop pricing dataset India to optimize refurbished stock.

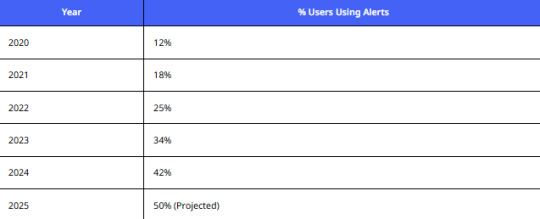

Price Drop Alerts Influence

Analysis:

More users want real-time price drop alerts for laptops on Cashify, pushing resellers to deploy a Cashify web scraper for laptop prices India to monitor and react instantly.

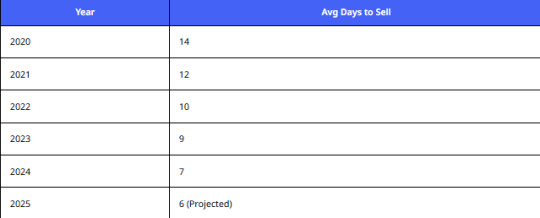

Average Listing Time Before Sale

Analysis:

Faster selling cycles demand real-time tracking. Extract laptop resale value from Cashify in near real-time with a robust Cashify product data scraping tool.

Popular Price BracketsPrice Band (INR)% Share< 10,00020%10,000–20,00045%20,000–30,00025%>30,00010%

Analysis:

The ₹10k–₹20k band dominates, highlighting why Web Scraping Cashify.in E-Commerce Product Data is crucial for budget-focused segments.

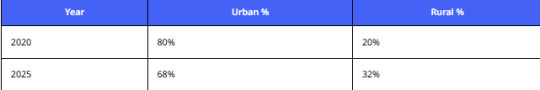

Urban vs Rural Split

Analysis:

Growth in rural demand shows the need for local price intelligence via Web Scraping Solutions tailored for regional buyers.

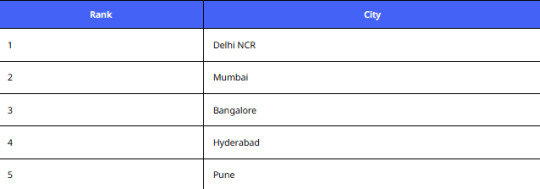

Top Cities by Resale Listings

Analysis:

A Custom eCommerce Dataset from Cashify helps brands target these hubs with region-specific offers.

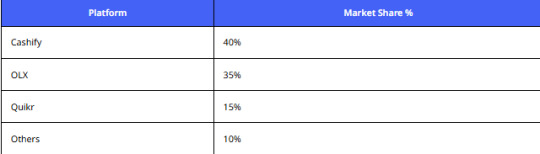

Analysis:

Cashify’s stronghold makes web scraping laptop listings from Cashify vital for second-hand market trend research.

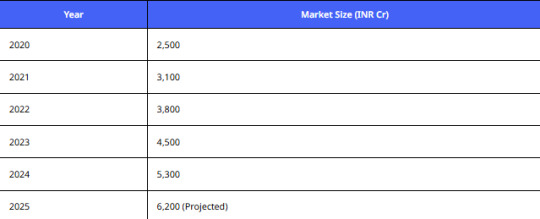

Projected Market Value

Analysis:

The second-hand laptop market will surpass INR 6,000 Cr by 2025 — a clear opportunity to build a Cashify web scraper for laptop prices India and lead the arbitrage game.

Conclusion

From real-time price tracking to building custom pricing datasets, this research shows that to stay ahead in the resale game, businesses must extract laptop resale value from Cashify with smart Web Scraping E-commerce Websites strategies. Ready to unlock hidden profits? Start scraping smarter with a custom Cashify product data scraping tool today!

Know More >> https://www.productdatascrape.com/extract-laptop-resale-value-cashify-market-trends.php

#ExtractLaptopResaleValueFromCashify#WebScrapingLaptopListingsFromCashify#ScrapeLaptopPricesFromCashifyIndia#CashifyComLaptopPricingDatasetIndia#CashifyProductDataScrapingTool#WebScrapingEcommerceWebsites

0 notes

Text

Crush It with Twitter Web Scraping Tips

Picking the Perfect Twitter Scraping Tool

One of the first lessons I learned? Not all scraping tools are created equal. A good Twitter scraping tool can make or break your project. I’ve tried everything from Python libraries like Tweepy to more advanced X scraping APIs. My go-to? Tools that balance ease of use with flexibility. For beginners, something like BeautifulSoup paired with Requests in Python is a solid start. If you’re ready to level up, X data API like the official X API (if you can get access) or third-party solutions can save you time. Pro tip: always check rate limits to avoid getting blocked!

Ethical Web Scraping: My Golden Rule

Here’s a story from my early days: I got a bit too excited scraping X and hit a rate limit. Ouch. That taught me the importance of ethical web scraping X data. Always respect X’s terms of service and robots.txt file. Use X data APIs when possible — they’re designed for this! Also, stagger your requests to avoid overwhelming servers. Not only does this keep you on the right side of the rules, but it also ensures your data collection is sustainable.

Step-by-Step Twitter Scraping Tips

Ready to get your hands dirty? Here’s how I approach Twitter web scraping:

Set Clear Goals: Are you after tweets, user profiles, or hashtags? Knowing what you want helps you pick the right Twitter scraping tool.

Use Python for Flexibility: Libraries like Tweepy or Scrapy are my favorites for Data Scraping X. They’re powerful and customizable.

Leverage X Data APIs: If you can, use official X data APIs for cleaner, structured data. They’re a bit pricier but worth it for reliability.

Handle Data Smartly: Store your scraped data in a structured format like CSV or JSON. I once lost hours of work because I didn’t organize my data properly — don’t make that mistake!

Stay Updated: X’s platform evolves, so keep an eye on API changes or new scraping tools to stay ahead.

Overcoming Common Challenges

Scraping isn’t always smooth sailing. I’ve hit roadblocks like CAPTCHAs, changing APIs, and messy data outputs. My fix? Use headless browsers like Selenium for tricky pages, but sparingly — they’re resource-heavy. Also, clean your data as you go. Trust me, spending 10 minutes filtering out irrelevant tweets saves hours later. If you’re using X scraping APIs, check their documentation for updates to avoid surprises.

Turning Data into Action

Here’s where the magic happens. Once you’ve scraped your data, analyze it! I love using tools like Pandas to spot trends or visualize insights with Matplotlib. For example, I once scraped X data to track sentiment around a product launch — game-changer for my client’s strategy. With web scraping X.com, you can uncover patterns that drive smarter decisions, whether it’s for SEO, marketing, or research.

Final Thoughts: Scrape Smart, Win Big

Twitter web scraping has been a game-changer for me, and I’m confident it can be for you too. Start small, experiment with a Twitter scraping tool, and don’t be afraid to dive into X data APIs for bigger projects. Just remember to scrape ethically and organize your data like a pro. Got a favorite scraping tip or tool? Drop it in the comments on X — I’d love to hear your thoughts!

Happy scraping, and let’s crush it!

0 notes

Text

How to Scrape Data from Amazon: A Quick Guide

How to scrape data from Amazon is a question asked by many professionals today. Whether you’re a data analyst, e-commerce seller, or startup founder, Amazon holds tons of useful data — product prices, reviews, seller info, and more. Scraping this data can help you make smarter business decisions.

In this guide, we’ll show you how to do it the right way: safely, legally, and without getting blocked. You’ll also learn how to deal with common problems like IP bans, CAPTCHA, and broken scrapers.

Is It Legal to Scrape Data from Amazon?

This is the first thing you should know.

Amazon’s Terms of Service (TOS) say you shouldn’t access their site with bots or scrapers. So technically, scraping without permission breaks their rules. But the laws on scraping vary depending on where you live.

Safer alternatives:

Use the Amazon Product Advertising API (free but limited).

Join Amazon’s affiliate program.

Buy clean data from third-party providers.

If you still choose to scrape, make sure you’re not collecting private data or hurting their servers. Always scrape responsibly.

What Kind of Data Can You Scrape from Amazon?

Here are the types of data most people extract:

1. Product Info:

You can scrape Amazon product titles, prices, descriptions, images, and availability. This helps with price tracking and competitor analysis.

2. Reviews and Ratings:

Looking to scrape Amazon reviews and ratings? These show what buyers like or dislike — great for product improvement or market research.

3. Seller Data:

Need to know who you’re competing with? Scrape Amazon seller data to analyze seller names, fulfillment methods (like FBA), and product listings.

4. ASINs and Rankings:

Get ASINs, category info, and product rankings to help with keyword research or SEO.

What Tools Can You Use to Scrape Amazon?

You don’t need to be a pro developer to start. These tools and methods can help:

For Coders:

Python + BeautifulSoup/Scrapy: Best for basic HTML scraping.

Selenium: Use when pages need to load JavaScript.

Node.js + Puppeteer: Another great option for dynamic content.

For Non-Coders:

Octoparse and ParseHub: No-code scraping tools.

Just point, click, and extract!

Don’t forget:

Use proxies to avoid IP blocks.

Rotate user-agents to mimic real browsers.

Add delays between page loads.

These make scraping easier and safer, especially when you’re trying to scrape Amazon at scale.

How to Scrape Data from Amazon — Step-by-Step

Let’s break it down into simple steps:

Step 1: Pick a Tool

Choose Python, Node.js, or a no-code platform like Octoparse based on your skill level.

Step 2: Choose URLs

Decide what you want to scrape — product pages, search results, or seller profiles.

Step 3: Find HTML Elements

Right-click > “Inspect” on your browser to see where the data lives in the HTML code.

Step 4: Write or Set Up the Scraper

Use tools like BeautifulSoup or Scrapy to create scripts. If you’re using a no-code tool, follow its visual guide.

Step 5: Handle Pagination

Many listings span multiple pages. Be sure your scraper can follow the “Next” button.

Step 6: Save Your Data

Export the data to CSV or JSON so you can analyze it later.

This is the best way to scrape Amazon if you’re starting out.

How to Avoid Getting Blocked by Amazon

One of the biggest problems? Getting blocked. Amazon has smart systems to detect bots.

Here’s how to avoid that:

1. Use Proxies:

They give you new IP addresses, so Amazon doesn’t see repeated visits from one user.

2. Rotate User-Agents:

Each request should look like it’s coming from a different browser or device.

3. Add Time Delays:

Pause between page loads. This helps you look like a real human, not a bot.

4. Handle CAPTCHAs:

Use services like 2Captcha, or manually solve them when needed.

Following these steps will help you scrape Amazon products without being blocked.

Best Practices for Safe and Ethical Scraping

Scraping can be powerful, but it must be used wisely.

Always check the site’s robots.txt file.

Don’t overload the server by scraping too fast.

Never collect sensitive or private information.

Use data only for ethical and business-friendly purposes.

When you’re learning how to get product data from Amazon, ethics matter just as much as technique.

Are There Alternatives to Scraping?

Yes — and sometimes they’re even better:

Amazon API:

This is a legal, developer-friendly way to get product data.

Third-Party APIs:

These services offer ready-made solutions and handle proxies and errors for you.

Buy Data:

Some companies sell clean, structured data — great for people who don’t want to build their own tools.

Common Errors and Fixes

Scraping can be tricky. Here are a few common problems:

Error 503:

This usually means Amazon is blocking you. Fix it by using proxies and delays.

Missing Data:

Amazon changes its layout often. Re-check the HTML elements and update your script.

JavaScript Not Loading:

Switch from BeautifulSoup to Selenium or Puppeteer to load dynamic content.

The key to Amazon product scraping success is testing, debugging, and staying flexible.

Conclusion:

To scrape data from Amazon, use APIs or scraping tools with care. While it violates Amazon’s Terms of Service, it’s not always illegal. Use ethical practices: avoid private data, limit requests, rotate user-agents, use proxies, and solve CAPTCHAs to reduce detection risk.

Looking to scale your scraping efforts or need expert help? Whether you’re building your first script or extracting thousands of product listings, you now understand how to scrape data from Amazon safely and smartly. Let Iconic Data Scrap help you get it done right.

Contact us today for custom tools, automation services, or scraping support tailored to your needs.

#iconicdatascrap#howtoscrapedatafromamazon#amazondatascraping#amazonwebscraping#scrapeamazonproducts#extractdatafromamazon#amazonscraper#amazonproductscraper#bestwaytoscrapeamazon#scrapeamazonreviews#scrapeamazonprices#scrapeamazonsellerdata#extractproductinfofromamazon#howtogetproductdatafromamazon#webscrapingtools#pythonscraping#beautifulsoupamazon#amazonapialternative#htmlscraping#dataextraction#scrapingscripts#automateddatascraping#howtoscrapedatafromamazonusingpython#isitlegaltoscrapeamazondata#howtoscrapeamazonreviewsandratings#toolstoscrapeamazonproductlistings#scrapeamazonatscale

1 note

·

View note

Text

Python Automation Ideas: Save Hours Every Week with These Scripts

Tired of repeating the same tasks on your computer? With just a bit of Python knowledge, you can automate routine work and reclaim your time. Here are 10 Python automation ideas that can help you boost productivity and eliminate repetitive tasks.

1. Auto Email Sender

Use Python’s smtplib and email modules to send customized emails in bulk—perfect for reminders, reports, or newsletters.

2. File Organizer Script

Tired of a messy Downloads folder? Automate the sorting of files by type, size, or date using os and shutil.

3. PDF Merger/Splitter

Automate document handling by merging or splitting PDFs using PyPDF2 or pdfplumber.

4. Rename Files in Bulk

Rename multiple files based on patterns or keywords using os.rename()—great for photos, reports, or datasets.

5. Auto Backup Script

Schedule Python to back up files and folders to another directory or cloud with shutil or third-party APIs.

6. Instagram or Twitter Bot

Use automation tools like Selenium or APIs to post, like, or comment—ideal for marketers managing multiple accounts.

7. Invoice Generator

Automate invoice creation from Excel or CSV data using reportlab or docx. Perfect for freelancers and small businesses.

8. Weather Notifier

Set up a daily weather alert using the OpenWeatherMap API and send it to your phone or email each morning.

9. Web Scraper

Extract data from websites (news, prices, job listings) using BeautifulSoup or Scrapy. Automate market research or data collection.

10. Keyboard/Mouse Automation

Use pyautogui to simulate mouse clicks, keystrokes, and automate desktop workflows—great for repetitive UI tasks.

🎯 Want help writing these automation scripts or need hands-on guidance? Visit AllHomeworkAssignments.com for expert support, script writing, and live Python tutoring.

#PythonAutomation#TimeSavingScripts#LearnPython#ProductivityHacks#PythonProjects#AllHomeworkAssignments#AutomateWithPython

0 notes

Text

How Quick Commerce Apps Use Web Scraping for Competitive Pricing

Introduction

In the rapidly changing market of quick commerce, competition is fierce for businesses to win customer loyalty and subsequently market share. Pricing is undoubtedly one of the key factors influencing consumer decisions in the instant delivery arena. To remain ahead of the game, these quick commerce apps use perhaps the most powerful tactic: web scraping for the collection, analysis, and dynamic alteration of pricing strategies.

This blog discusses how web scraping quick commerce applications enable businesses in real-time price information extraction, evaluating competitor prices, and institute solid pricing strategies. We will also consider the methods, the challenges along the way, and the best practices for quick commerce app data extraction.

Understanding Quick Commerce and Its Competitive Landscape

Quick commerce (Q-commerce) is an advanced e-commerce model that focuses on ultra-fast deliveries, typically within 10 to 30 minutes. Leading players such as Instacart, Gopuff, Getir, Zapp, and Blinkit have redefined customer expectations by offering instant grocery and essential item deliveries.

Given the high competition and dynamic pricing models in the industry, quick commerce apps data scraper solutions are increasingly being used to gain pricing intelligence and make real-time adjustments.

Why Quick Commerce Apps Use Web Scraping for Competitive Pricing

1. Real-Time Price Monitoring

Quick commerce companies continuously scrape competitor websites to ensure their pricing remains competitive.

Scraping quick commerce apps data enables businesses to track price changes and adjust accordingly.

2. Dynamic Pricing Optimization

Real-time data extraction helps companies implement automated price adjustments based on competitor pricing trends.

AI-driven algorithms use scraped pricing data to set optimal pricing strategies.

3. Competitor Benchmarking

Quick commerce apps data collections offer insights into competitor pricing, discounts, and promotions.

Businesses can assess market trends and consumer purchasing behavior to position themselves strategically.

4. Consumer Behavior Analysis

Price sensitivity varies among different customer demographics.

Quick commerce apps data extractor solutions analyze historical price trends to identify customer behavior patterns.

5. Stock and Availability Insights

Web scraping also helps track product availability across various competitors.

Businesses can adjust their inventory and pricing strategies based on demand trends.

How Web Scraping Works for Quick Commerce Pricing Intelligence

1. Identifying Target Quick Commerce Apps

Before implementing a quick commerce apps data scraper, businesses must identify the top competitors in their industry. Some popular quick commerce apps include:

Instacart – Grocery delivery from multiple retailers

Gopuff – Instant delivery of everyday essentials

Getir – On-demand grocery delivery

Zapp – 24/7 convenience store at your doorstep

Blinkit – Ultra-fast grocery delivery in India

2. Data Points Extracted for Competitive Pricing

Web scraping quick commerce apps involves extracting crucial pricing-related data points such as:

Product Name

Brand and Category

Competitor Pricing

Discounts and Promotions

Stock Availability

Delivery Fees and Charges

3. Web Scraping Tools and Technologies Used

To perform efficient quick commerce apps data extraction, businesses use:

Scrapy – A Python-based web scraping framework.

Selenium – Automates browser-based scraping for dynamic pages.

BeautifulSoup – Extracts structured data from HTML pages.

Proxies & Rotating IPs – Prevents blocking while scraping large datasets.

4. Data Cleaning and Processing

Once extracted, quick commerce apps data collections are structured into organized formats such as JSON, CSV, or databases for analysis.

5. Implementing Pricing Strategies

AI-based algorithms analyze the scraped data to:

Adjust prices dynamically based on real-time competitor data.

Optimize discount offerings.

Enhance consumer engagement through personalized pricing strategies.

6. Automated Price Adjustment

Businesses integrate scraped data into pricing algorithms to dynamically modify their product prices.

Price adjustments can be triggered based on competitor movements, stock levels, or peak demand periods.

7. Predictive Pricing Models

Machine learning models process scraped pricing data to forecast future pricing trends.

Retailers can use predictive analytics to set optimal pricing strategies before competitors adjust their prices.

8. Custom Alerts and Notifications

Businesses set up alerts when competitors change pricing structures, allowing real-time responses.

Notifications can be automated through dashboard integrations for instant decision-making.

Challenges in Scraping Quick Commerce Apps Data

1. Anti-Scraping Mechanisms

Many quick commerce platforms use CAPTCHAs, IP blocking, and bot detection to prevent automated scraping.

Solutions: Use rotating proxies, user-agent rotation, and headless browsing to bypass restrictions.

2. Frequent Website Structure Changes

Quick commerce apps regularly update their UI, requiring frequent updates to web scrapers.

Solutions: Implement dynamic XPath selectors and use AI-powered adaptive scrapers.

3. Dynamic Content Loading

Many platforms use JavaScript-rendered content, making scraping more challenging.

Solutions: Use Selenium or Puppeteer for headless browser interactions.

4. Legal and Ethical Considerations

Businesses must comply with data privacy laws and terms of service.

Solutions: Focus on publicly available data and respect robots.txt policies.

Best Practices for Web Scraping Quick Commerce Apps

Use Headless Browsing – To navigate JavaScript-heavy content efficiently.

Leverage API Access Where Possible – Some quick commerce apps offer APIs for structured data extraction.

Monitor and Adapt to Changes – Regularly update scrapers to adapt to changing website structures.

Ensure Data Accuracy and Deduplication – Implement validation checks to avoid redundant data entries.

Utilize Cloud-Based Scraping Services – To handle large-scale scraping tasks more efficiently.

Future of Competitive Pricing with Web Scraping

1. AI-Driven Pricing Algorithms

AI and machine learning will play a significant role in predictive pricing based on web-scraped competitor data.

2. Integration with Business Intelligence Tools

Web-scraped data will be seamlessly integrated with BI platforms for real-time pricing analysis.

3. Blockchain for Transparent Pricing

Blockchain technology may improve data transparency and reliability in pricing comparisons.

4. Automated Decision-Making Systems

Businesses will automate price adjustments using real-time scraped data and dynamic pricing models.

Conclusion

Web scraping from instant commerce platforms has opened up new avenues for pricing in today's intense competition in e-commerce. The scraped data of instant commerce applications become indeed instrumental for organizations to track their competitors, modify their pricing strategies in real-time, and make their customer engagement even better. As the name implies, in-depth pricing intelligence will be revolutionized by data, and leaving this exact technological edge will be one of the requisites for sustained success in the market.

Know More : https://www.crawlxpert.com/blog/quick-commerce-apps-use-web-scraping

#WebScrapingCompetitivePricing#WebScrapingQuickCommerce#ScrapingQuickCommerceAppsData#ScrapeQuickCommerceAppsData

0 notes