#Apache Hadoop

Explore tagged Tumblr posts

Photo

Hive Tutorial | Hive Course For Beginners | Intellipaat - YouTube ☞ http://go.codetrick.net/d68b7e0dba #bigdata #hadoop

0 notes

Photo

Hive Tutorial | Hive Course For Beginners | Intellipaat - YouTube ☞ http://go.codetrick.net/d68b7e0dba #bigdata #hadoop

0 notes

Text

Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more. 🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience. 🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure. 🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation. 🔹 Flexible Learning Modes: Classroom and online training options available. 🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics ✔️ Software developers, system administrators, and data engineers ✔️ Business intelligence professionals and database administrators ✔️ Anyone passionate about Big Data and Machine Learning

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune

0 notes

Text

What is PySpark? A Beginner’s Guide

Introduction

The digital era gives rise to continuous expansion in data production activities. Organizations and businesses need processing systems with enhanced capabilities to process large data amounts efficiently. Large datasets receive poor scalability together with slow processing speed and limited adaptability from conventional data processing tools. PySpark functions as the data processing solution that brings transformation to operations.

The Python Application Programming Interface called PySpark serves as the distributed computing framework of Apache Spark for fast processing of large data volumes. The platform offers a pleasant interface for users to operate analytics on big data together with real-time search and machine learning operations. Data engineering professionals along with analysts and scientists prefer PySpark because the platform combines Python's flexibility with Apache Spark's processing functions.

The guide introduces the essential aspects of PySpark while discussing its fundamental elements as well as explaining operational guidelines and hands-on usage. The article illustrates the operation of PySpark through concrete examples and predicted outputs to help viewers understand its functionality better.

What is PySpark?

PySpark is an interface that allows users to work with Apache Spark using Python. Apache Spark is a distributed computing framework that processes large datasets in parallel across multiple machines, making it extremely efficient for handling big data. PySpark enables users to leverage Spark’s capabilities while using Python’s simple and intuitive syntax.

There are several reasons why PySpark is widely used in the industry. First, it is highly scalable, meaning it can handle massive amounts of data efficiently by distributing the workload across multiple nodes in a cluster. Second, it is incredibly fast, as it performs in-memory computation, making it significantly faster than traditional Hadoop-based systems. Third, PySpark supports Python libraries such as Pandas, NumPy, and Scikit-learn, making it an excellent choice for machine learning and data analysis. Additionally, it is flexible, as it can run on Hadoop, Kubernetes, cloud platforms, or even as a standalone cluster.

Core Components of PySpark

PySpark consists of several core components that provide different functionalities for working with big data:

RDD (Resilient Distributed Dataset) – The fundamental unit of PySpark that enables distributed data processing. It is fault-tolerant and can be partitioned across multiple nodes for parallel execution.

DataFrame API – A more optimized and user-friendly way to work with structured data, similar to Pandas DataFrames.

Spark SQL – Allows users to query structured data using SQL syntax, making data analysis more intuitive.

Spark MLlib – A machine learning library that provides various ML algorithms for large-scale data processing.

Spark Streaming – Enables real-time data processing from sources like Kafka, Flume, and socket streams.

How PySpark Works

1. Creating a Spark Session

To interact with Spark, you need to start a Spark session.

Output:

2. Loading Data in PySpark

PySpark can read data from multiple formats, such as CSV, JSON, and Parquet.

Expected Output (Sample Data from CSV):

3. Performing Transformations

PySpark supports various transformations, such as filtering, grouping, and aggregating data. Here’s an example of filtering data based on a condition.

Output:

4. Running SQL Queries in PySpark

PySpark provides Spark SQL, which allows you to run SQL-like queries on DataFrames.

Output:

5. Creating a DataFrame Manually

You can also create a PySpark DataFrame manually using Python lists.

Output:

Use Cases of PySpark

PySpark is widely used in various domains due to its scalability and speed. Some of the most common applications include:

Big Data Analytics – Used in finance, healthcare, and e-commerce for analyzing massive datasets.

ETL Pipelines – Cleans and processes raw data before storing it in a data warehouse.

Machine Learning at Scale – Uses MLlib for training and deploying machine learning models on large datasets.

Real-Time Data Processing – Used in log monitoring, fraud detection, and predictive analytics.

Recommendation Systems – Helps platforms like Netflix and Amazon offer personalized recommendations to users.

Advantages of PySpark

There are several reasons why PySpark is a preferred tool for big data processing. First, it is easy to learn, as it uses Python’s simple and intuitive syntax. Second, it processes data faster due to its in-memory computation. Third, PySpark is fault-tolerant, meaning it can automatically recover from failures. Lastly, it is interoperable and can work with multiple big data platforms, cloud services, and databases.

Getting Started with PySpark

Installing PySpark

You can install PySpark using pip with the following command:

To use PySpark in a Jupyter Notebook, install Jupyter as well:

To start PySpark in a Jupyter Notebook, create a Spark session:

Conclusion

PySpark is an incredibly powerful tool for handling big data analytics, machine learning, and real-time processing. It offers scalability, speed, and flexibility, making it a top choice for data engineers and data scientists. Whether you're working with structured data, large-scale machine learning models, or real-time data streams, PySpark provides an efficient solution.

With its integration with Python libraries and support for distributed computing, PySpark is widely used in modern big data applications. If you’re looking to process massive datasets efficiently, learning PySpark is a great step forward.

youtube

#pyspark training#pyspark coutse#apache spark training#apahe spark certification#spark course#learn apache spark#apache spark course#pyspark certification#hadoop spark certification .#Youtube

0 notes

Text

Big Data Battle Alert! Apache Spark vs. Hadoop: Which giant rules your data universe? Spark = Lightning speed (100x faster in-memory processing!) Hadoop = Batch processing king (scalable & cost-effective).Want to dominate your data game? Read more: https://bit.ly/3F2aaNM

0 notes

Text

Hadoop . . . for more information and tutorial https://bit.ly/4hPFcGk check the above link

0 notes

Text

Is Apache spark going to replace Hadoop?

Explore Apache Spark, a high-speed data processing framework, and its relationship with Hadoop. Discover its key features, use cases, and why it's not a Hadoop replacement.

0 notes

Text

https://www.ksolves.com/blog/hadoop/hdfs-overview-everything-you-need-to-know

These are all the information about the HDFS and how it can help you to store a huge amount of data without any hassle. This tool is a great addition to enhance business efficiency. This tool will help you read all the data from a single machine.

1 note

·

View note

Quote

カーパシー氏は、ソフトウェアというものが過去2回にわたって急速に変化したものと考えています。最初に登場したのがソフトウェア 1.0です。ソフトウェア1.0は誰もがイメージするような基本的なソフトウェアのことです。 ソフトウェア1.0がコンピュータ向けに書くコードであるのに対し、ソフトウェア2.0は基本的にニューラルネットワークであり、特に「重み」のことを指します。開発者はコードを直接書くのではなく、データセットを調整し、最適化アルゴリズムを実行してこのニューラルネットワークのパラメーターを生成するのです。 ソフトウェア 1.0に当たるGitHub上のプロジェクトは、それぞれを集約して関係のあるプロジェクトを線で結んだ「Map of GitHub」で表せます。 ソフトウェア 2.0は同様に「Model Atlas」で表されます。巨大な円の中心にOpenAIやMetaのベースとなるモデルが存在し、そのフォークがつながっています。 生成AIが洗練されるにつれ、ニューラルネットワークの調整すらAIの助けを得て行えるようになりました。これらは専門的なプログラミング言語ではなく、「自然言語」で実行できるのが特徴です。自然言語、特に英語で大規模言語モデル(LLM)をプログラミング可能になった状態を、カーパシー氏は「ソフトウェア 3.0」と呼んでいます。 まとめると、コードでコンピューターをプログラムするのがソフトウェア 1.0、重みでニューラルネットワークをプログラムするのがソフトウェア 2.0、自然言語のプロンプトでLLMをプログラムするのがソフトウェア 3.0です。 カーパシー氏は「おそらくGitHubのコードはも��や単なるコードではなく、コードと英語が混在した新しい種類のコードのカテゴリーが拡大していると思います。これは単に新しいプログラミングパラダイムであるだけでなく、私たちの母国語である英語でプログラミングしている点も驚くべきことです。私たちは3つの完全に異なるプログラミングパラダイムを有しており、業界に参入するならば、これらすべてに精通していることが非常に重要です。なぜなら、それぞれに微妙な長所と短所があり、特定の機能は1.0や2.0、3.0でプログラミングする必要があるかもしれません。ニューラルネットワークをトレーニングするべきか、LLMにプロンプトを送信するべきか。指示は明示的なコードであるべきでしょうか?つまり、私たちは皆、こうした決定を下し、実際にこれらのパラダイム間を流動的に移行できる可能性を秘めているのです」と述べました。 ◆AIは「電気」である カーパシー氏は「AIは新しい電気である」と捉えています。OpenAI、Google、Anthropic などのLLMラボはトレーニングのために設備投資を行っていて、これは電気のグリッドを構築することとよく似ています。企業はAPIを通じてAIを提供するための運用コストもかかります。通常、100万件など一定単位ごとに料金を請求する仕組みです。このAPIには、低遅延、高稼働率、安定した品質などさまざまなバリューがあります。これらの点に加え、過去に多くのLLMがダウンした影響で人々が作業不能に陥った現象も鑑みると、AIは電気のようななくてはならないインフラに当たるというのがカーパシー氏の考えです。 しかし、LLMは単なる電気や水のようなものではなく、もっと複雑なエコシステムが構築されています。OSだとWindowsやMacのようなクローズドソースのプロバイダーがいくつかあり、Linuxのようなオープンソースの代替案があります。LLMにおいても同様の構造が形成されつつあり、クローズドソースのプロバイダーが競合している中、LlamaのようなオープンソースがLLM界におけるLinuxのようなものへと成長するかもしれません。 カーパシー氏は「LLMは新しい種類のOSのようなものだと感じました。CPUの役割を果たすような存在で、LLMが処理できるトークンの長さ(コンテキストウィンドウ)はメモリに相当し、メモリと計算リソースを調整して問題解決を行うのです。これらの機能をすべて活用しているため、客観的に見ると、まさにOSに非常に似ています。OSだとソフトウェアをダウンロードして実行できますが、LLMでも同様の操作ができるものもあります」と述べました。 ◆AIは発展途中 LLMの計算リソースはコンピューターにとってまだ非常に高価であり、性能の良いLLMはほとんどクラウドサーバーで動作しています。ローカルで実行できるDeepSeek-R1のようなモデルも出てきていますが、やはり何百万円もするような機器を何百台とつなげて動かしているようなクラウドサーバーと個人のPCでは出力結果に大きな差が現れます。 カーパシー氏は「個人用コンピューター革命はまだ起こっていません。経済的ではないからです。意味がありません。しかし、一部の人々は試みているかもしれません。例えば、Mac miniは一部のLLMに非常に適しています。将来的にどのような形になるかは不明です。もしかしたら、皆さんがこの形や仕組みを発明するかもしれません」と述べました。 また、PCでは当たり前に使われているグラフィカルユーザーインターフェース(GUI)がLLMには中途半端にしか導入されていないという点も特徴です。ChatGPTなどのチャットボットは、基本的にテキスト入力欄を提供しているだけです。カーパシー氏は「まだ一般的な意味でのGUIが発明されていないと思います」と話しています。 ◆AIは技術拡散の方向が逆 これまでのPCは、政府が軍事用に開発し、企業などがそれを利用し、その後広くユーザーに使われるという歴史をたどってきました。一方でAIは政府や企業ではなくユーザーが広く利用し、その集合知が体系化され、企業が利用するようになります。カーパシー氏は「実際、企業や政府は、私たちが技術を採用するような速度に追いついていません。これは逆行していると言えるでしょう。新しい点であり前例がないといえるのは、LLMが少数の人々や企業の手中にあるのではなく、私たち全員の手中にあることです。なぜなら、私たちは皆コンピュータを持っており、それはすべてソフトウェアであり、ChatGPTは数十億の人々に瞬時に、一夜にしてコンピュータに配信されたからです。これは信じられないことです」と語りました。 ◆人類はAIと協力関係にある AIが利用されるときは、通常、AIが生成を行い、人間である私たちが検証を行うという作業が繰り返されます。このループをできるだけ高速化することは人間にとってもAIにとってもメリットがあります。 これを実現する方法としてカーパシー氏が挙げるのが、1つは検証を大幅にスピードアップすることです。これはGUIを導入することで実現できる可能性があります。長いテキストだけを読むことは労力がかかりますが、絵など文字以外の物を見ることで容易になります。 2つ目は、AIを制御下に置く必要がある点です。カーパシー氏は「多くの人々がAIエージェントに過剰に興奮している」と指摘しており、AIの出力すべてを信じるのではなく、AIが正しいことを行っているか、セキュリティ上の問題がないかなどを確かめることが重要だと述べています。LLMは基本的にもっともらしい言葉をもっともらしく並べるだけの機械であり、出力結果が必ずしも正しいとは限りません。結果を常に検証することが大切です。 この記事のタイトルとURLをコピーする ・関��記事 Metaが既存の生成AIにあるトークン制限をはるかに上回る100万トークン超のコンテンツ生成を可能にする次世代AIアーキテクチャ「Megabyte」を発表 - GIGAZINE 世界最長のコンテキストウィンドウ100万トークン入力・8万トークン出力対応にもかかわらずたった7800万円でトレーニングされたAIモデル「MiniMax-M1」がオープンソースで公開され誰でもダウンロード可能に - GIGAZINE AppleがXcodeにAIでのコーディング補助機能を追加&Apple Intelligenceの基盤モデルフレームワークが利用可能に - GIGAZINE AnthropicがAIモデルの思考内容を可視化できるオープンソースツール「circuit-tracer」を公開 - GIGAZINE DeepSeekと清華大学の研究者がLLMの推論能力を強化する新しい手法を発表 - GIGAZINE 「現在のAIをスケールアップしても汎用人工知能は開発できない」と考える科学者の割合は76% - GIGAZINE ・関連コンテンツ TwitterやFacebookで使われている「Apache Hadoop」のメリットや歴史を作者自らが語る 仮想通貨暴落などで苦境に立たされたマイニング業者は余ったGPUを「AIトレーニング用のリソース」として提供している 「AI懐疑論者の友人はみんな頭がおかしい」というブログが登場、賛否両論さまざまなコメントが寄せられる 私たちが何気なく使っているソフトウェアはどのように開発されているのか? グラフをはみ出した点と線が世界に広がっていく手描きアニメ「Extrapolate」が圧巻 「アルゴリズムって何?」を専門家が分かりやすく解説 機械学習でコンピューターが音楽を理解することが容易ではない理由 生きてるだけでお金がもらえるベーシックインカムこそ「資本主義2.0」だとの主張、その理由とは?

講演「ソフトウェアは再び変化している」が海外で大反響、その衝撃的な内容とは? - GIGAZINE

5 notes

·

View notes

Text

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its trajectory for the future is even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to continue thriving in the coming years. Considering the kind support of Python Course in Chennai Whatever your level of experience or reason for switching from another programming language, learning Python gets much more fun.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy and PyCrypto make Python an excellent choice for ethical hacking and security professionals. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to thrive.

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Frameworks like MicroPython and CircuitPython enable developers to build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a dominant language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

2 notes

·

View notes

Text

Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more. 🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience. 🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure. 🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation. 🔹 Flexible Learning Modes: Classroom and online training options available. 🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics ✔️ Software developers, system administrators, and data engineers ✔️ Business intelligence professionals and database administrators ✔️ Anyone passionate about Big Data and Machine Learning

Course Highlights:

✅ Introduction to Big Data & Hadoop Framework ✅ HDFS (Hadoop Distributed File System) – Storage & Processing ✅ MapReduce Programming – Core of Hadoop Processing ✅ Apache Spark – Fast and Unified Analytics Engine ✅ Hive, Pig, HBase – Data Querying & Management ✅ Data Ingestion Tools – Sqoop & Flume ✅ Real-time Project Implementation

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune

0 notes

Text

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

To use PySpark, you need to install it and set up a Spark session. Once initialized, Spark allows users to read, process, and analyze large datasets.

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.

Running PySpark on a Cluster

PySpark can run on a single machine or be deployed on a cluster using a distributed computing system like Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For Free Tutorials for Programming Languages Visit-https://www.tpointtech.com/

2 notes

·

View notes

Text

Hadoop . . . for more information and tutorial https://bit.ly/3EaIiXD check the above link

0 notes

Text

From Curious Novice to Data Enthusiast: My Data Science Adventure

I've always been fascinated by data science, a field that seamlessly blends technology, mathematics, and curiosity. In this article, I want to take you on a journey—my journey—from being a curious novice to becoming a passionate data enthusiast. Together, let's explore the thrilling world of data science, and I'll share the steps I took to immerse myself in this captivating realm of knowledge.

The Spark: Discovering the Potential of Data Science

The moment I stumbled upon data science, I felt a spark of inspiration. Witnessing its impact across various industries, from healthcare and finance to marketing and entertainment, I couldn't help but be drawn to this innovative field. The ability to extract critical insights from vast amounts of data and uncover meaningful patterns fascinated me, prompting me to dive deeper into the world of data science.

Laying the Foundation: The Importance of Learning the Basics

To embark on this data science adventure, I quickly realized the importance of building a strong foundation. Learning the basics of statistics, programming, and mathematics became my priority. Understanding statistical concepts and techniques enabled me to make sense of data distributions, correlations, and significance levels. Programming languages like Python and R became essential tools for data manipulation, analysis, and visualization, while a solid grasp of mathematical principles empowered me to create and evaluate predictive models.

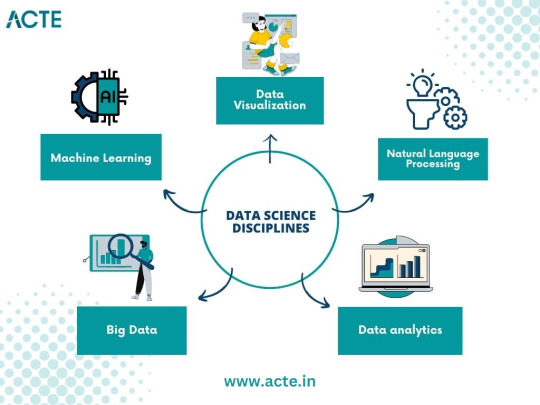

The Quest for Knowledge: Exploring Various Data Science Disciplines

A. Machine Learning: Unraveling the Power of Predictive Models

Machine learning, a prominent discipline within data science, captivated me with its ability to unlock the potential of predictive models. I delved into the fundamentals, understanding the underlying algorithms that power these models. Supervised learning, where data with labels is used to train prediction models, and unsupervised learning, which uncovers hidden patterns within unlabeled data, intrigued me. Exploring concepts like regression, classification, clustering, and dimensionality reduction deepened my understanding of this powerful field.

B. Data Visualization: Telling Stories with Data

In my data science journey, I discovered the importance of effectively visualizing data to convey meaningful stories. Navigating through various visualization tools and techniques, such as creating dynamic charts, interactive dashboards, and compelling infographics, allowed me to unlock the hidden narratives within datasets. Visualizations became a medium to communicate complex ideas succinctly, enabling stakeholders to understand insights effortlessly.

C. Big Data: Mastering the Analysis of Vast Amounts of Information

The advent of big data challenged traditional data analysis approaches. To conquer this challenge, I dived into the world of big data, understanding its nuances and exploring techniques for efficient analysis. Uncovering the intricacies of distributed systems, parallel processing, and data storage frameworks empowered me to handle massive volumes of information effectively. With tools like Apache Hadoop and Spark, I was able to mine valuable insights from colossal datasets.

D. Natural Language Processing: Extracting Insights from Textual Data

Textual data surrounds us in the digital age, and the realm of natural language processing fascinated me. I delved into techniques for processing and analyzing unstructured text data, uncovering insights from tweets, customer reviews, news articles, and more. Understanding concepts like sentiment analysis, topic modeling, and named entity recognition allowed me to extract valuable information from written text, revolutionizing industries like sentiment analysis, customer service, and content recommendation systems.

Building the Arsenal: Acquiring Data Science Skills and Tools

Acquiring essential skills and familiarizing myself with relevant tools played a crucial role in my data science journey. Programming languages like Python and R became my companions, enabling me to manipulate, analyze, and model data efficiently. Additionally, I explored popular data science libraries and frameworks such as TensorFlow, Scikit-learn, Pandas, and NumPy, which expedited the development and deployment of machine learning models. The arsenal of skills and tools I accumulated became my assets in the quest for data-driven insights.

The Real-World Challenge: Applying Data Science in Practice

Data science is not just an academic pursuit but rather a practical discipline aimed at solving real-world problems. Throughout my journey, I sought to identify such problems and apply data science methodologies to provide practical solutions. From predicting customer churn to optimizing supply chain logistics, the application of data science proved transformative in various domains. Sharing success stories of leveraging data science in practice inspires others to realize the power of this field.

Cultivating Curiosity: Continuous Learning and Skill Enhancement

Embracing a growth mindset is paramount in the world of data science. The field is rapidly evolving, with new algorithms, techniques, and tools emerging frequently. To stay ahead, it is essential to cultivate curiosity and foster a continuous learning mindset. Keeping abreast of the latest research papers, attending data science conferences, and engaging in data science courses nurtures personal and professional growth. The journey to becoming a data enthusiast is a lifelong pursuit.

Joining the Community: Networking and Collaboration

Being part of the data science community is a catalyst for growth and inspiration. Engaging with like-minded individuals, sharing knowledge, and collaborating on projects enhances the learning experience. Joining online forums, participating in Kaggle competitions, and attending meetups provides opportunities to exchange ideas, solve challenges collectively, and foster invaluable connections within the data science community.

Overcoming Obstacles: Dealing with Common Data Science Challenges

Data science, like any discipline, presents its own set of challenges. From data cleaning and preprocessing to model selection and evaluation, obstacles arise at each stage of the data science pipeline. Strategies and tips to overcome these challenges, such as building reliable pipelines, conducting robust experiments, and leveraging cross-validation techniques, are indispensable in maintaining motivation and achieving success in the data science journey.

Balancing Act: Building a Career in Data Science alongside Other Commitments

For many aspiring data scientists, the pursuit of knowledge and skills must coexist with other commitments, such as full-time jobs and personal responsibilities. Effectively managing time and developing a structured learning plan is crucial in striking a balance. Tips such as identifying pockets of dedicated learning time, breaking down complex concepts into manageable chunks, and seeking mentorships or online communities can empower individuals to navigate the data science journey while juggling other responsibilities.

Ethical Considerations: Navigating the World of Data Responsibly

As data scientists, we must navigate the world of data responsibly, being mindful of the ethical considerations inherent in this field. Safeguarding privacy, addressing bias in algorithms, and ensuring transparency in data-driven decision-making are critical principles. Exploring topics such as algorithmic fairness, data anonymization techniques, and the societal impact of data science encourages responsible and ethical practices in a rapidly evolving digital landscape.

Embarking on a data science adventure from a curious novice to a passionate data enthusiast is an exhilarating and rewarding journey. By laying a foundation of knowledge, exploring various data science disciplines, acquiring essential skills and tools, and engaging in continuous learning, one can conquer challenges, build a successful career, and have a good influence on the data science community. It's a journey that never truly ends, as data continues to evolve and offer exciting opportunities for discovery and innovation. So, join me in your data science adventure, and let the exploration begin!

#data science#data analytics#data visualization#big data#machine learning#artificial intelligence#education#information

17 notes

·

View notes

Text

Java's Lasting Impact: A Deep Dive into Its Wide Range of Applications

Java programming stands as a towering pillar in the world of software development, known for its versatility, robustness, and extensive range of applications. Since its inception, Java has played a pivotal role in shaping the technology landscape. In this comprehensive guide, we will delve into the multifaceted world of Java programming, examining its wide-ranging applications, discussing its significance, and highlighting how ACTE Technologies can be your guiding light in mastering this dynamic language.

The Versatility of Java Programming:

Java programming is synonymous with adaptability. It's a language that transcends boundaries and finds applications across diverse domains. Here are some of the key areas where Java's versatility shines:

1. Web Development: Java has long been a favorite choice for web developers. Robust and scalable, it powers dynamic web applications, allowing developers to create interactive and feature-rich websites. Java-based web frameworks like Spring and JavaServer Faces (JSF) simplify the development of complex web applications.

2. Mobile App Development: The most widely used mobile operating system in the world, Android, mainly relies on Java for app development. Java's "write once, run anywhere" capability makes it an ideal choice for creating Android applications that run seamlessly on a wide range of devices.

3. Desktop Applications: Java's Swing and JavaFX libraries enable developers to craft cross-platform desktop applications with sophisticated graphical user interfaces (GUIs). This cross-platform compatibility ensures that your applications work on Windows, macOS, and Linux.

4. Enterprise Software: Java's strengths in scalability, security, and performance make it a preferred choice for developing enterprise-level applications. Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) software, and supply chain management solutions often rely on Java to deliver reliability and efficiency.

5. Game Development: Java isn't limited to business applications; it's also a contender in the world of gaming. Game developers use Java, along with libraries like LibGDX, to create both 2D and 3D games. The language's versatility allows game developers to target various platforms.

6. Big Data and Analytics: Java plays a significant role in the big data ecosystem. Popular frameworks like Apache Hadoop and Apache Spark utilize Java for processing and analyzing massive datasets. Its performance capabilities make it a natural fit for data-intensive tasks.

7. Internet of Things (IoT): Java's ability to run on embedded devices positions it well for IoT development. It is used to build applications for smart homes, wearable devices, and industrial automation systems, connecting the physical world to the digital realm.

8. Scientific and Research Applications: In scientific computing and research projects, Java's performance and libraries for data analysis make it a valuable tool. Researchers leverage Java to process and analyze data, simulate complex systems, and conduct experiments.

9. Cloud Computing: Java is a popular choice for building cloud-native applications and microservices. It is compatible with cloud platforms such as AWS, Azure, and Google Cloud, making it integral to cloud computing's growth.

Why Java Programming Matters:

Java programming's enduring significance in the tech industry can be attributed to several compelling reasons:

Platform Independence: Java's "write once, run anywhere" philosophy allows code to be executed on different platforms without modification. This portability enhances its versatility and cost-effectiveness.

Strong Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools that expedite development and provide solutions to a wide range of challenges. Developers can leverage these resources to streamline their projects.

Security: Java places a strong emphasis on security. Features like sandboxing and automatic memory management enhance the language's security profile, making it a reliable choice for building secure applications.

Community Support: Java enjoys the support of a vibrant and dedicated community of developers. This community actively contributes to its growth, ensuring that Java remains relevant, up-to-date, and in line with industry trends.

Job Opportunities: Proficiency in Java programming opens doors to a myriad of job opportunities in software development. It's a skill that is in high demand, making it a valuable asset in the tech job market.

Java programming is a dynamic and versatile language that finds applications in web and mobile development, enterprise software, IoT, big data, cloud computing, and much more. Its enduring relevance and the multitude of opportunities it offers in the tech industry make it a valuable asset in a developer's toolkit.

As you embark on your journey to master Java programming, consider ACTE Technologies as your trusted partner. Their comprehensive training programs, expert guidance, and hands-on experiences will equip you with the skills and knowledge needed to excel in the world of Java development.

Unlock the full potential of Java programming and propel your career to new heights with ACTE Technologies. Whether you're a novice or an experienced developer, there's always more to discover in the world of Java. Start your training journey today and be at the forefront of innovation and technology with Java programming.

8 notes

·

View notes