
Agentic AI: the rise of agents

Why Agentic AI is the Next Big Thing in Technology

Introduction

Rapid technological advancements are changing sectors and reshaping customer expectations, social conventions, and international economic dynamics. Artificial intelligence, connectivity, sustainable practices, digital security, and data privacy are the significant developments that will reshape the world of technology in 2025. These advancements create new opportunities for business leaders, IT specialists, and industry analysts.

Agentic AI allows systems to make decisions on their own and define goals in an adaptable way.

According to Gartner, agentic AI will be incorporated into 33% of enterprise software applications by 2028, up from less than 1% in 2024, and will enable 15% of day-to-day work decisions to be made autonomously. By using task-oriented techniques, real-time data, context awareness, continuous learning, and sophisticated problem-solving approaches, it will enable more flexible and intelligent operations across a wide range of applications.

What are the primary uses of agentic AI?

Healthcare: Treatment regimens may be tailored by automatically assessing patient data and adjusting suggestions as conditions change.

Finance: Strategies for managing investment portfolios can be dynamically adjusted in response to market movements and risk considerations.

Consumer Support: Chatbots and virtual assistants may respond to consumer questions and proactively handle problems.

Gaming: Agentic AI can improve NPC behavior in video games by allowing them to respond to players' methods and actions, resulting in a more enjoyable and dynamic gameplay experience.

Autonomous Vehicles: Agentic AI can help self-driving cars understand their environment, make judgments, and travel safely in real-time.

Smart Cities: Anticipating and responding to real-time demands improves traffic flow, energy usage, and public services.

Industry-specific use cases for AI agents

AI agents have many applications in e-commerce, sales, marketing, customer service, and hospitality. Let's go over these use cases in depth.

E-Commerce

AI agents may help you optimize inventory management, provide tailored product suggestions, and speed up checkout. Amazon's recommendation engine uses AI to propose goods based on user activity, increasing sales and enhancing customer experience.

Sales and Marketing

Artificial intelligence agents are used in sales and marketing to generate leads, segment customers, and optimize campaigns. Chatbots may help validate leads and respond to client queries, while AI algorithms improve ad targeting.

Hospitality

Hotel AI agents assist with booking, personalized suggestions, and even room service automation. For example, AI-powered hotel virtual assistants may help visitors with check-ins, room choices, and activity recommendations, improving the guest experience.

Benefits of using AI Agents for your business:

Reduced Costs

AI agents reduce the need for manual labor by executing activities autonomously. This cuts operating expenses in industries such as customer service and logistics, where agents can handle request processing and route optimization efficiently.

Informed Decision-Making

AI agents process massive volumes of data to provide insights and inform decisions. From stock trading to supply chain optimization, they can provide real-time suggestions based on data trends, resulting in better-informed decisions.

Improved Customer Experience

AI agents, such as chatbots and virtual assistants, deliver quick replies, which boosts client happiness. These systems may operate 24 hours a day, seven days a week, providing clients with individualized help and timely answers.

Improved Productivity

AI agents may automate monotonous operations, allowing human workers to focus on more challenging and creative tasks. Whether automating customer service with chatbots or simplifying industrial processes, AI agents significantly boost efficiency.

Wrapping Up:

AI agents, increasingly autonomous, independent, and ethical, are transforming numerous sectors by enhancing daily living and tackling key business concerns. These agents, from virtual assistants to autonomous systems, are powering a new era of technology and human connection.


Generative AI: The Game Changer of 2025

Generative AI in action

Generative AI can revolutionize business operations by optimizing text, images, and code creation. This year, numerous companies are expected to transition their generative AI initiatives from pilot to full production, resulting in workforce implications that may surpass previously conceived possibilities.

Revolutionizing financial services

Generative AI is gaining prominence in the financial services sector because of potential benefits such as cost reduction and faster customer resolution. It automates manual processes in digital data transfer and opens new opportunities in repetitive, high-volume tasks such as real-time fraud detection. This makes financial institutions more competitive and can reduce false-positive rates, particularly in fraud detection, where traditional systems are reactive and prone to high false-positive rates.

Generative AI is driving a profound transformation in financial services, fostering innovation and streamlining operations

With its broad applications, artificial intelligence is enhancing customer service, boosting risk management and reshaping capital markets

Balancing the opportunities and challenges of AI, the banking sector is on a strategic journey toward an AI-enabled future

Future of Generative AI in Banking and Financial Institutions

Generative AI is poised to disrupt the banking and finance industries by improving operational efficiency and client experience. Advanced data processing enables it to automate complicated activities, provide tailored services, and improve fraud detection. Future uses of generative AI in banking will include predictive analytics for risk management, enhanced credit scoring, and individualized financial advising, all of which will result in simplified processes and cost savings. Despite its promise, generative AI raises issues such as data privacy and regulatory compliance, requiring banks to maintain transparency and security in their AI systems. By using this technology, financial institutions will be better positioned to satisfy client expectations and remain competitive in the sector.

Enhancing healthcare

Generative AI is also improving healthcare and services. Its application in the medical industry has the potential to greatly improve treatment. Generative AI can evaluate large volumes of medical data to help healthcare practitioners diagnose illnesses, prescribe therapies, and anticipate patient outcomes, resulting in more accurate and timely care.

Applications of Generative AI in the Healthcare Industry

Automating administrative tasks

Medical imaging

Drug discovery and development

Medical research and data analysis

Risk prediction for pandemic preparedness

Generating synthetic medical data

Personalized medicine

Conclusion

In 2025, organizations are projected to prioritize strategic planning, form collaborations between business and IT to support generative AI efforts, and transition from pilot projects employing large language models (LLMs) to full-scale deployments. Smaller language models are expected to gain popularity, handling specialized tasks without placing undue demand on data center resources and energy usage. Companies will use novel technologies and frameworks to improve data and AI management, resulting in the return of predictive AI.


Trends and Forecasts for Test Automation in 2025 and Beyond

Overview

The demand for advanced test automation is rising due to rapid advances in AI, ML, and software development. The scope of test automation is expanding from basic functionality tests to complex domains like security, data integrity, and user experience. The future of Quality Engineering will see new standards for efficiency, accuracy, and resilience.

AI-Powered Testing Will Set the Standard

By 2025, AI-driven testing is expected to dominate test automation, with machine learning enabling earlier detection of defects. AI-powered pattern recognition will make regression testing faster and more reliable. Over 75% of test automation frameworks are projected to have AI-based self-healing capabilities by then, creating a more robust and responsive testing ecosystem.
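To make "self-healing" concrete, here is a minimal sketch of the underlying idea: when a test's primary element selector stops matching, the framework tries alternatives and remembers the one that worked. This is a rule-based stand-in, not how any particular tool implements it; production tools typically rank candidate selectors with a learned model. The class name `SelfHealingLocator`, the selectors, and the `lookup` callback are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SelfHealingLocator:
    """Hypothetical locator: tries a primary selector, then fallbacks."""
    primary: str
    fallbacks: list[str]
    healed_from: list[str] = field(default_factory=list)

    def find(self, lookup: Callable[[str], Optional[object]]) -> object:
        # `lookup` is an assumed callback that returns an element or None.
        for selector in [self.primary, *self.fallbacks]:
            element = lookup(selector)
            if element is None:
                continue
            if selector != self.primary:
                # "Heal": promote the working selector so later runs try it first,
                # and keep the broken one as a fallback in case it comes back.
                self.healed_from.append(self.primary)
                self.fallbacks = [
                    s for s in [self.primary, *self.fallbacks] if s != selector
                ]
                self.primary = selector
            return element
        raise LookupError(f"No selector matched (primary: {self.primary})")


# A dict stands in for the page under test: the old "#submit-btn" id is gone.
page = {"[data-test=submit]": "<button>"}
locator = SelfHealingLocator("#submit-btn", ["[data-test=submit]", "button.submit"])
element = locator.find(page.get)
```

After the call, `locator.primary` is the selector that matched, and the healing event is recorded in `locator.healed_from` so it can be reviewed rather than silently accepted.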

No-Code and Low-Code Testing Platforms' Ascent

The rapid pace of software development is driving the rise of no-code and low-code test automation solutions. These platforms allow technical and non-technical users to write and run tests without advanced programming skills. By 2026, they are predicted to be used in 80% of test automation procedures, promoting wider adoption across teams.

Testing that is Autonomous and Highly Automated

Hyper-automation, a combination of AI, machine learning, and robotic process automation, is revolutionizing commercial processes, particularly testing. By 2027, enterprises can automate up to 85% of their testing operations, enabling continuous testing and faster delivery times, reinforcing DevOps and agile methodologies.

Automated Testing for Privacy and Cybersecurity

Test automation is advancing in ensuring apps comply with global security standards and regulations, including GDPR, HIPAA, and CCPA. By 2025, security-focused test automation is expected to grow by 70%, becoming crucial in businesses requiring privacy and data integrity. This technology will enable real-time monitoring, threat detection, and mitigation in the face of increasing cyberattacks.

Testing Early in the Development Cycle

Shift-left testing is a popular method for detecting and addressing flaws in the early stages of development, reducing rework, and improving software quality. It is expected to increase as tests are integrated with advanced automation technologies. By 2025, DevOps-focused firms will use shift-left testing, reducing defect rates by up to 60% and shortening time-to-market.

Testing's Extension to Edge Computing and IoT

The increasing prevalence of IoT devices and edge computing will significantly complicate testing, necessitating numerous setup changes and real-time data handling due to network and device differences. By 2026, IoT and edge computing test automation will account for 45% of the testing landscape, with increasing demand in healthcare, manufacturing, and logistics.

The Need for Instantaneous Test Analytics and Reports

Real-time analytics are crucial in test automation, enabling data-driven decisions and improved test coverage, defect rates, and quality. By 2025, 65% of QA teams will use real-time analytics to monitor and optimize test automation tactics, resulting in a 30% increase in testing productivity.
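As a rough illustration of the kind of metrics such analytics might track, the sketch below aggregates a window of test results into a pass rate, a mean duration, and a list of flaky tests. The `RunResult` record, the `summarize` function, and the definition of "flaky" (a test that both passed and failed within the same window) are assumptions made for this example, not part of any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    """Hypothetical record for one execution of one test."""
    test_id: str
    passed: bool
    duration_s: float


def summarize(results: list[RunResult]) -> dict:
    """Aggregate a window of results into simple quality metrics."""
    if not results:
        return {"pass_rate": 0.0, "mean_duration_s": 0.0, "flaky": []}
    outcomes = defaultdict(set)
    for r in results:
        outcomes[r.test_id].add(r.passed)
    return {
        "pass_rate": sum(r.passed for r in results) / len(results),
        "mean_duration_s": sum(r.duration_s for r in results) / len(results),
        # Assumed definition: seen both passing and failing in this window.
        "flaky": sorted(t for t, seen in outcomes.items() if len(seen) == 2),
    }


window = [
    RunResult("login", True, 1.0),
    RunResult("login", False, 3.0),
    RunResult("checkout", True, 2.0),
    RunResult("search", True, 2.0),
]
summary = summarize(window)
```

Here `summary` reports a pass rate of 0.75, a mean duration of 2.0 seconds, and `login` as the only flaky test. In a real pipeline the window would be fed continuously from the test runner rather than built by hand.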

Testing Across Platforms for Applications with Multiple Experiences

Multi-experience applications, which work across multiple platforms, require extensive testing for compatibility, responsiveness, and UX. By 2025, 80% of businesses will have implemented cross-platform test automation technologies, enhancing multi-experience application quality by 45%. AI-based tools will replicate human interaction across multiple platforms.

Using Containerization and Virtualization to Simulate Environments

Test automation relies heavily on virtualization and containerization, with Docker and Kubernetes technologies enabling virtualized environments that resemble production. By 2025, containerized testing environments will enable 65% of test automation, allowing quicker and more flexible testing solutions, reducing dependencies, and increasing testing scalability and accuracy.

The Expanding Function of AI-Powered RPA in Test Automation

RPA integrates with AI to create sophisticated automation solutions, increasing productivity in repetitive testing operations, data transfer, and system integrations. By 2026, AI-enhanced RPA will account for 45% of test automation in industries with highly repetitive testing, such as banking, healthcare, and manufacturing, enabling complex judgments and dependable outcomes.

An increasing emphasis on accessibility testing

As accessibility becomes a higher priority, demand for accessibility testing has surged. Automated tools will detect issues with color contrast, screen reader compatibility, and keyboard navigation, helping ensure WCAG compliance. By 2025, over 65% of enterprises are expected to aim for inclusive and accessible user experiences.
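Color contrast is one of the checks that automates cleanly, because WCAG 2.x defines it as a formula. The sketch below computes the contrast ratio between two sRGB colors and compares it against the Level AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are chosen for this example only; real accessibility tools also have to work out which foreground and background colors actually apply to each element.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    # The lighter color always goes in the numerator.
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg, bg, large_text: bool = False) -> bool:
    """Check the WCAG Level AA contrast threshold for text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


black, white, light_grey = (0, 0, 0), (255, 255, 255), (170, 170, 170)
max_ratio = contrast_ratio(black, white)      # the maximum possible ratio, 21:1
grey_on_white_ok = passes_aa(light_grey, white)
```

Black on white reaches the maximum ratio of 21:1, while light grey (#AAAAAA) on white falls well below 4.5:1 and would be flagged.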

Accepting the Future of Automated Testing

The next generation of test automation, utilizing AI, machine learning, and RPA, holds immense potential for creating high-quality, secure, and user-friendly apps. Sun Technologies, a leading testing solutions provider, works to stay ahead of these trends to provide clients with the most modern testing solutions available.

Are you set to transform your test automation journey?

Contact us at suntechnologies.com to learn more about how we can help you grasp the newest testing trends and technology.

Together, let's define the future of quality engineering!


From GenAI to Quantum Computing: Tech trends that will define 2025

As we approach 2025, the technology environment is poised for dramatic change, predicted to redefine many sectors and affect how people live and work. The following are the key trends to watch as we enter this new era of innovation:

Advancement of quantum computing.

Quantum computing is no longer a future idea; it is poised to become a game changer for sectors that rely on intricate problem-solving. By 2025, quantum computing capabilities will likely develop, with substantial implications for banking and healthcare.

Use Cases:

Material simulation: Researching medicines and battery chemistry.

Banking and Finance: Pricing optimization and fraud detection.

Automotive and Aerospace: The paint shop problem and fluid dynamics.

Metaverse's Evolution

By 2025, the metaverse, a virtual realm where individuals may communicate, work, and play, will have evolved significantly. Companies like Meta (previously Facebook) and Epic Games are at the forefront of developing virtual environments where users can conduct business meetings, social interactions, and entertainment. Nike, for example, has already built a virtual store in the metaverse, where people can purchase and experience the brand digitally.

Usage

Virtual event management, Metaverse e-commerce, virtual education platforms, Metaverse games, social networking platforms, tourism experiences, NFT markets, and other offerings.

Agentic AI

Organizations have long wanted to promote high-performing teams, improve cross-functional collaboration, and coordinate issues across team networks. Agentic AI, software that plans and acts autonomously toward a goal, helps CIOs achieve their vision for generative AI to boost productivity by performing tasks independently and surfacing insights from derivative events.

Use Cases:

Automating customer interactions by using data analysis to make calculated decisions at every step.

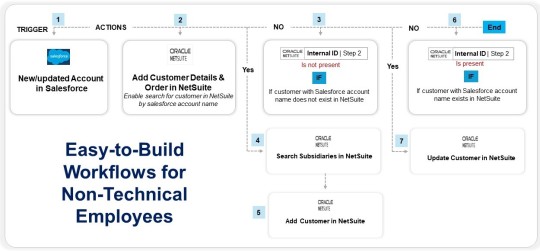

Using plain language, workers may build and manage increasingly complex technological tasks.

AI Governance Platforms

AI governance platforms are rapidly being employed in businesses with stringent requirements to manage and oversee AI systems ethically and responsibly. By 2028, organizations that use AI governance systems are likely to outperform their competitors in terms of consumer trust ratings and regulatory compliance scores. These platforms help verify that AI systems make fair judgments, secure data, and follow rules, making them an essential tool for IT leaders in industries like banking.

Use Cases:

Assessing the possible risks and problems that AI systems may cause, such as prejudice, privacy infringement, and negative social consequences.

Guiding AI models through the model governance process, ensuring that all required gates and controls are followed throughout the model's life cycle.

Hybrid Computing

Hybrid computing is an approach that combines different technologies to address complicated computational problems, allowing organizations to expand rapidly, save money, and remain flexible. It enables enterprises to run core programs on local servers for security and control while using the cloud for high-performance activities such as data analytics and artificial intelligence. It also enables firms to use emerging technologies such as biocomputing and quantum systems for disruptive impact.

Use Cases:

Cost-effective scalability: Keeping critical workloads in-house for security reasons, with the cloud handling peak demand during busy seasons.

Optimizing Data Security and Compliance: Maintaining critical data on-premises, adhering to tight data protection standards, and leveraging the cloud for less sensitive activities or analytics.

Promoting innovation and development: Using cloud-based development tools while preserving safe on-premises settings for production.
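The three use cases above amount to a placement policy, which can be sketched as a small rule set. Everything here is illustrative: the `Workload` fields, the `place` function, and the 0.8 burst threshold are assumptions for this example, and a real policy would also weigh cost, latency, and regulatory constraints.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on-prem"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload to be placed."""
    name: str
    handles_sensitive_data: bool
    is_production: bool
    expected_load: float  # fraction of on-prem capacity; 1.0 means fully used


def place(w: Workload, burst_threshold: float = 0.8) -> Target:
    # Rule 1: sensitive data stays on-premises for security and compliance.
    if w.handles_sensitive_data:
        return Target.ON_PREM
    # Rule 2: peak demand that would strain local capacity bursts to the cloud.
    if w.expected_load > burst_threshold:
        return Target.CLOUD
    # Rule 3: production stays on-premises; development and analytics use the cloud.
    return Target.ON_PREM if w.is_production else Target.CLOUD


core_ledger = Workload("core-ledger", True, True, 0.95)
holiday_storefront = Workload("storefront", False, True, 0.95)
dev_sandbox = Workload("dev-sandbox", False, False, 0.10)
placements = {w.name: place(w) for w in (core_ledger, holiday_storefront, dev_sandbox)}
```

With these rules the ledger stays on-premises even under heavy load, the storefront bursts to the cloud during peak season, and the development sandbox runs in the cloud.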

Conclusion

The technological developments that will shape 2025 provide many opportunities for innovation and expansion in various sectors. Adopting these trends can help businesses stay competitive while fostering a safer, sustainable, and interconnected future.

Read More:

How Generative AI Can Help Transform Loan Underwriting While Meeting all Compliance Needs?

How Our GenAI Bots Enhance 401K Regulatory Compliance and Form 5500 Report Generation?


Where Are Banking Technologies Heading in 2025?

Key Innovations and Trends

Digital transformation in the banking sector is an ongoing process that has been reshaping the industry for several decades. Banks must adapt swiftly to meet customers' evolving expectations of seamless digital experiences, facilitated by mobile banking, blockchain technology, cloud computing, and artificial intelligence. Financial institutions are increasingly embracing innovative technologies to maintain their competitive edge, and mobile and online channels have become the predominant methods for customer account management: 48% of US consumers prefer mobile banking, while 23% prefer online banking accessed through laptops or PCs.

[Figure: Americans' banking practices, most often used banking method. Mobile app banking is the most preferred.]

Why is the Transition to Digital Essential in the Banking Industry?

1. Customer Expectations and Competition

Modern customers demand quick, fluid digital interactions, and financial institutions can meet these expectations by offering 24/7 services, personalized support, and seamless integration with mobile and online platforms.

2. Increased Operational Efficiency through Automation

Automation in banking lowers labor costs, decreases human error, and provides consumers with faster, more accurate services. Real-time processing capabilities help banks to make more informed choices, increase efficiency, and prioritize client engagement and innovation.

3. Enhanced Security and Fraud Prevention

AI-powered fraud detection and blockchain technology assist banks in developing solid security procedures for digital transactions, lowering the risk of fraud and increasing consumer confidence.

4. Strengthening Regulatory Compliance

The banking industry is undergoing digital transformation, leveraging AI and machine learning to automate compliance procedures, enhancing efficiency, precision, and risk mitigation.

5. Long-Term Competitiveness and Market Growth

Digital transformation helps traditional banks compete with fintech by providing quicker and innovative services, broadening market penetration, retaining clientele, and sustaining their position in a rapidly evolving industry.

6. Better Customer Experience

Digital transformation improves customer experience by providing immediate responses, round-the-clock access, self-service capabilities, and personalized advice.

7. Improved Risk Management

Digital transformation enables financial institutions to identify risks in real-time using analytics and AI, enabling predictive analytics for informed decisions, effective liquidity management, and customer confidence.

8. Increased Revenue Opportunities

Digital banking transformation enhances revenue generation, personalized services, and customer satisfaction through advanced analytics, artificial intelligence, mobile banking, and digital wallets, fostering long-lasting relationships.

9. Greater Agility and Adaptability

Digital technologies have made banks more adaptable, allowing them to respond to changes in consumer requirements and market dynamics, securing a competitive advantage.

Technologies Helping Digital Transformation in Banking

Digital transformation in banking is driven by sophisticated technologies that alter operational processes, customer interactions, and service delivery mechanisms. Banks must adapt to meet evolving customer expectations and employ advanced tools for effective service provision.

1. AI and Machine Learning

AI is crucial in the banking sector, enabling personalized services, predictive analytics, and real-time customer support. It also helps detect fraudulent activities and refine services for operational efficiency and personalization.

2. Blockchain Technology

Blockchain technology enhances security, transparency, and efficiency in the banking sector by utilizing decentralized ledger systems for cross-border transactions and self-executing agreements.

3. Cloud Computing

Cloud computing improves banking operations by providing on-demand access to computing resources, reducing IT infrastructure expenses, enabling efficient scaling, data storage, and collaboration among teams. It is crucial for banks to respond to evolving consumer demands.

4. IoT (Internet of Things)

The Internet of Things (IoT) is transforming the banking sector by enhancing operational efficiency and the customer experience. IoT enables banks to provide personalized services tailored to user behavior, and bolsters security by enabling more accurate authentication methods.

Leading Digital Innovations Revolutionizing the Banking Sector

Customer-Centric Approach

Invisible Authentication for Secure and Effortless Access

Embedded Finance for Effortless Transactions within Applications

Regulatory Technology for Automated Compliance and Privacy Management

Quantum Computing for Improved Security Measures

AI-Driven Personalized Financial Assistance for Customized Money Management

APIs and Open Banking for Integrated Financial Management

Does Your Team Possess the Necessary Skills for Guiding Digital Transformation?

Data Analytics: Banking teams should receive data analytics training to improve personalized services, operational efficiency, and ethical management of customer information.

AI Proficiency: AI is crucial for digital transformation in banking, enhancing automation, decision-making, and customer engagement. Tools such as ChatGPT can be applied in banking to improve customer support and maintain a competitive edge.

Fundamentals of Cybersecurity and Building Cyber Resilience: Financial institutions need to establish a strong cybersecurity foundation through training, encryption protocols, and effective security strategies.

Leveraging Agile Methodologies for Project Management and Marketing Strategy in the Digital Transformation Era: Agile methodologies aid in project management and marketing strategy for digital transformation, allowing for quick modifications and adaptability to evolving customer requirements.

Managing Change and Effective Communication: Team members need comprehensive training in change management, including communication, emotional intelligence, and stakeholder engagement, to navigate organizational transitions smoothly.

Awareness of Regulatory Compliance: Banks need to comply with regulations such as AML and GDPR. Ongoing learning initiatives and a competency matrix can inform training strategies. Specialized certifications in data analytics, cybersecurity, and digital transformation are valuable.

What Does the Future Hold for Digital Transformation within the Banking Industry?

The banking industry is expected to become more agile, customer-centric, and data-driven. Key trends include the proliferation of artificial intelligence, the adoption of blockchain technology, and the integration of banking services within non-financial platforms. Banks will collaborate with fintech entities and prioritize cybersecurity and regulatory compliance. Quantum cryptography and real-time risk management tools will play a crucial role in safeguarding sensitive data.

Sun Technologies is fully equipped, experienced, and ready to take your organization to the next level with our wide array of services tailored specifically to the BFSI industry.

The BFSI industry faces continuous challenges due to changing trends and compliance regulations. Because of this, staying ahead of the game has never been more important. We help the BFSI sector realize measurable outcomes by improving business agility and speed.

We excel in providing BFSI services with our ideal digital operating model, thereby improving the customer journey experience. Our Financial Services Team tailors every solution based on the industry’s best practices and continuously improves quality. Our scalable, flexible, and future-proof solutions enable the public sector to achieve transformation seamlessly.

We have experience in working with the BFSI sector on a wide array of project solutions, such as

Modernize Legacy apps to Java, AngularJS/Apache Tomcat framework

Maintenance and enhancements of existing custom applications

QA Testing

Functional and integration Testing of applications

Test Automation using HP UFT and SWAUT Selenium framework

Cloud Migration

Migrate In-house Database and applications to cloud environment


Retirement Industry Leveraging Artificial Intelligence for Maximum Benefit

What influence will AI have on the US retirement industry?

In the past, the US retirement sector has hesitated to embrace cutting-edge innovations. The entry of numerous young, tech-savvy individuals into the US workforce forces the US retirement plan industry to adapt to evolving clientele expectations. AI, including machine learning (ML), large language models, and even ChatGPT, is used by tech-inclined retirement plan providers, recordkeepers, and third-party administrators to digitize and automate monotonous and repetitive administrative tasks, which reduces costs and burdens for plan sponsors while also improving the retirement experience for participants and sponsors.

AI can play a vital role in the following areas:

Benefits: AI's increased efficiency streamlines the process for employees to access benefits and makes it easier, faster, and less expensive for plan sponsors to offer them. When AI provides these advantages, it can also aid in lowering the related legal and compliance concerns. Other possible dangers can be anticipated and flagged by technology, which can also notify plan sponsors of late deposits or other problems.

Marketing and Engagement: To better serve clients and increase conversion rates, AI may help with writing code and personalizing marketing materials. AI may also maximize participant engagement: through a multi-channel strategy, chatbots, robo-advisors, and other algorithm-based technologies can guide and educate participants on demand and from any location. These technologies can also be used to train the advisors who counsel clients.

Retirement Plan Design: Artificial Intelligence (AI) tools, including Machine Learning (ML) and predictive analysis, offer significant potential for enhancing the customization of retirement plan designs and supporting plan advisers in devising more effective savings strategies. This is particularly advantageous when there is a lack of comprehensive information pertaining to employees. By leveraging data from additional interconnected data sources and the unique information of each employee, it becomes possible to identify patterns and develop more precise or customized solutions.

Investment Strategies and Risks: Artificial Intelligence can be incorporated into the company's customer relationship management (CRM) system to gather and utilize extensive data. Advanced AI can generate a portfolio distribution based on the investment options available or recommend the appropriate savings amount without the need for employees to respond to the typical risk tolerance inquiries.

Business Development: AI can facilitate the prospecting process for retirement participants by eliminating irrelevant discussions, such as promoting offerings that a plan sponsor may not require. This could simplify the process of business development.

Onboarding of employees: New employees can be onboarded with the assistance of AI, and accounts and investments can be established with just a few keystrokes.

Exploring the Adoption of Artificial Intelligence Among Elite Retirement Players Across the Value Spectrum

Several prominent participants in the US retirement industry have announced a variety of initiatives, and some have already implemented this technology. The following are examples of such players:

Utilizing Artificial Intelligence for Robo-Advisory Services: Leveraging the firm's Exchange-Traded Funds (ETFs) to craft tailored retirement portfolios for clients.

Artificial Intelligence-driven chatbot designed for customers to seek assistance or initiate transactions.

Employ artificial intelligence technologies to enhance client experiences, optimize self-service operations, and improve analytical capabilities, thereby facilitating more effective interactions within its National Contact Center (NCC).

Artificial Intelligence (AI) is integrated across the entirety of its Financial Wellness suite, encompassing its 401k platform.

Utilizes artificial intelligence to assist participants in making well-informed decisions regarding their financial well-being and provides guidance to employers in the design and structuring of their retirement plans.

To promptly and precisely address client concerns

Artificial Intelligence (AI) is also being utilized to enhance productivity levels. The implementation of automated reminders not only diminishes the volume of paperwork but also aids in facilitating informed decisions regarding retirement planning.

Artificial Intelligence (AI) assists in pinpointing potential retirement planning concerns that may be of significance to the client. Subsequently, it enables us to ascertain whether the advisor has addressed these issues with the client, and if not, it can encourage the advisor to initiate discussions on these matters as insights. Furthermore, we possess the capability to identify pivotal events, such as the approach to Social Security retirement age. This allows us to pose pertinent questions concerning these occurrences.

Exploring the potential of the most recent advancements in artificial intelligence to unlock new avenues for business growth, such as integrating AI technology into call centers to enhance products, thereby improving the overall experience for both clients and their employees.

Participated in more than 130,000 customer engagements across businesses specializing in wealth and health solutions, successfully resolving over 70% of cases following the introduction of its AI technology.

Instituted 401kAI, a platform engineered to enhance the efficacy of advisors' strategies, research, and endeavors in business expansion.

Artificial Intelligence (AI) facilitates rapid and effective communication with participants on a large scale. AI has the capability to identify opportunities for action, and subsequently, we can employ highly personalized digital nudges through various channels such as text messages, emails, and phone calls from our advisors.

What can be anticipated for the future of the retirement industry, its participants, and its stakeholders?

Predicting the future landscape of the retirement industry, its participants, and stakeholders is a task fraught with complexity. Potential developments include enhancing employees' access to retirement plans, augmenting investment offerings to better align with participants' behaviors and characteristics and offering more comprehensive advice. A pivotal use case involves plan advisers leveraging artificial intelligence for asset management decisions, marking an advanced iteration of 'robo-advisory' services and the creation of a drawdown strategy during retirement.

Retirement companies are presently contributing significant data to the systems and instructing large language models on the art of investment. This approach is aimed at training these models on various financial principles, thereby enhancing their capacity to comprehend, scrutinize, and address investment challenges.

While AI already shows remarkable capabilities and holds the potential for more, complete dependence on AI for financial guidance is unlikely to be realized in the foreseeable future. Industry professionals believe that the human element cannot be entirely removed, particularly given the emotional nuances associated with financial matters.

In what ways may Sun Technologies help you?

We are equipped to provide significant artificial intelligence assistance to the retirement sector by tackling major obstacles and improving operational efficiency, personalization, and risk mitigation. As an information technology services company, we can also provide essential IT support to the United States retirement sector, keeping its technology infrastructure running smoothly, strengthening security measures, and improving the user experience. Here is how an information technology services company can offer support:

Infrastructure Management & Optimization

Cybersecurity & Data Protection

Disaster Recovery & Business Continuity

IT Helpdesk & User Support

Compliance and Regulatory Support

Cloud & SaaS Implementation

Data Management & Analytics Support

Software Development & Customization

Client Portal & Mobile App Development

Network Management & Connectivity

Automation & Workflow Improvements

The Many Ways Our AI Configurations Can Help

Configuring AI to Automate 401(k) Plan Administration Manual Tasks

Robo Advisors: AI Bots can double up as Robo Advisors to provide tailored advice to 401(k) plan participants.

Form 5500 preparation: Use data automation and AI Bot amplified review to correctly list plan sponsor information

Track Deadlines: Configure AI driven alerts to ensure plan sponsors and administrators are always on track with compliance deadlines

Raise Auto-Alerts: AI can help raise alerts to ensure timely deposit of employee contributions and document loan defaults

Amplify Review: AI can speed up the process of annual reviews that have to be undertaken by the plan fiduciaries

Send timely notices: AI Bots can gather all necessary information and format it to send notices to participants, beneficiaries, and eligible employees
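As a rough illustration of the deadline-tracking and auto-alert ideas above, here is a minimal sketch in Python. The `Deadline` record, the plan identifiers, the dates, and the 30-day alert window are all assumptions made for the example; they do not describe an actual Sun Technologies configuration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Deadline:
    plan_id: str  # hypothetical plan identifier
    task: str     # short description of the compliance task
    due: date     # illustrative due date


def upcoming_alerts(deadlines, today, window_days=30):
    """Return (deadline, days_left) pairs that are overdue or due within the window, soonest first."""
    horizon = today + timedelta(days=window_days)
    hits = [(d, (d.due - today).days) for d in deadlines if d.due <= horizon]
    return sorted(hits, key=lambda pair: pair[1])


# Illustrative data only.
deadlines = [
    Deadline("PLAN-001", "Form 5500 filing", date(2025, 7, 31)),
    Deadline("PLAN-001", "Participant notice", date(2025, 12, 1)),
    Deadline("PLAN-002", "Deposit employee contributions", date(2025, 7, 10)),
]

for d, days_left in upcoming_alerts(deadlines, today=date(2025, 7, 15)):
    status = "OVERDUE" if days_left < 0 else f"due in {days_left} days"
    print(f"{d.plan_id}: {d.task} ({status})")
```

In practice the alert list would feed whatever notification channel the plan administrator already uses; the sketch only covers the selection logic.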

Impact

Automate 90% of all manual calculations done by plan administrators

Save up to 80% of time spent on coordinating with sponsors manually

Automate 95% of all sponsor uploads and data extraction tasks

Sun Technologies DevOps-As-A-Service and Testing Centers of Excellence (CoE)

Here’s how we are helping top Fortune 500 Companies to automate application testing & CI/CD: Build, Deploy, Test & Commit.

Powering clients with the right DevOps talent for the following: Continuous Development | Continuous Testing | Continuous Integration | Continuous Delivery | Continuous Monitoring

How Our Testing and Deployment Expertise Ensures Zero Errors

Test

Dedicated Testing Team: Prior to promoting changes to production, the product goes through a series of automated vulnerability assessments and manual tests

Proven QA Frameworks: Ensures architectural and component level modifications don’t expose the underlying platform to security weaknesses

Focus on Security: Design requirements stated during the Security Architecture Review are validated against what was built

Deploy

User-Acceptance Environments: All releases are first pushed into user-acceptance environments and then, when they are ready, into production

No-Code Release Management: Supports quick deployment of applications by enabling non-technical Creators and business users

No-Code platform orientation and training: Helps release multiple deploys together, increasing productivity while reducing errors

Our approach to ensure seamless deployments at scale

Testing Prior to Going Live: Get a secure place to test and prepare major software updates and infrastructural changes.

Creating New Live Side: Before going all in, we will first make room to test changes on small amounts of production traffic.

Gradual Deployments at Scale: Rolls deployment out to production by gradually increasing the percentage served to the new live side.
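The gradual rollout described above can be sketched as a simple loop: raise the share of traffic on the new live side step by step, and send everything back to the old side if a health check fails. The step percentages and the `is_healthy` callback are assumptions for illustration; a real rollout would read error rates or latency from monitoring.

```python
def gradual_rollout(steps, is_healthy):
    """Increase traffic to the new live side step by step.

    steps: increasing percentages of production traffic for the new side.
    is_healthy: callable that takes the current percentage and returns True or False.
    Returns (final_percentage, failed_at), where failed_at is None on success.
    """
    served = 0
    for pct in steps:
        served = pct
        if not is_healthy(pct):
            # Roll back: all traffic returns to the old live side.
            return 0, pct
    return served, None


# A rollout where every step stays healthy.
print(gradual_rollout([1, 5, 25, 50, 100], lambda pct: True))      # (100, None)

# A rollout where problems appear once 25% of traffic is on the new side.
print(gradual_rollout([1, 5, 25, 50, 100], lambda pct: pct < 25))  # (0, 25)
```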

Arriving at the best branching and version control strategy that delivers the highest-quality software quickly and reliably

Discovery assessment to evaluate existing branching strategies: Git Flow, GitHub Flow, Trunk-Based Development, GitLab Flow

Sun DevOps teams use a library that allows developers to test their changes: For example, changes can be tested in Jenkins without having to commit to trunk.

Sun’s deployment team uses a one-button web-based deployment: Makes code deployment as easy and painless as possible.

The deployment pipeline passes through our staging environment before going into production: Staging uses the same data stores, networks, and resources as production.

Config flags (feature flags) are an integral part of our deployment process: Leverages an internal A/B testing tool and API builder to test new features.

New features are made live for a certain percentage of users: We will understand its behavior before making it live on a global scale.
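One common way to make a feature live for a fixed percentage of users is to hash each user ID into a stable bucket. The sketch below assumes that approach; the function name, flag name, and user IDs are hypothetical and are not taken from Sun's internal A/B testing tool.

```python
import hashlib


def in_rollout(flag_name, user_id, percent):
    """Return True if this user falls inside the rollout percentage for the flag.

    The same user always lands in the same bucket for a given flag, so raising
    the percentage only adds users and never removes anyone already included.
    """
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in the range 0..99
    return bucket < percent


# Roughly 20% of a hypothetical user base sees the new feature.
users = [f"user-{i}" for i in range(10_000)]
enabled = sum(in_rollout("new-checkout", u, 20) for u in users)
print(f"{enabled} of {len(users)} users see the feature")
```

Including the flag name in the hash keeps the buckets independent across flags, so the same users are not always the first to receive every new feature.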

Case Study: How Sun Technologies Helped a Leading US Bank Implement CI/CD Pipelines

Customer Challenges:

Legacy Systems: The bank grappled with legacy systems and traditional software development methodologies, hindering agility and slowing down the delivery of new features and updates.

Regulatory Compliance: The financial industry is highly regulated, requiring strict adherence to compliance standards and rigorous testing processes, which often led to delays in software releases.

Customer Expectations: With the rise of digital banking and fintech startups, customers increasingly expect seamless and innovative banking experiences. The bank faced pressure to meet these expectations while ensuring the security and reliability of its software systems.

Our Solution:

Automated Build and Integration: Automated the build and integration process to trigger automatically upon code commits, ensuring that new features and bug fixes are integrated and tested continuously.

Automated Testing: Integrated automated testing into the pipeline, including unit tests, integration tests, and security tests, to detect and address defects early in the development cycle and ensure compliance with regulatory standards.

Continuous Deployment: Implemented continuous deployment to automate the deployment of validated code changes to production and staging environments, reducing manual effort and minimizing the risk of deployment errors.

Monitoring and Logging: Integrated monitoring and logging tools into the pipeline to track performance metrics, monitor system health, and detect anomalies in real time, enabling proactive problem resolution and continuous improvement.

Compliance and Security Checks: Incorporated security scanning tools and compliance checks into the pipeline to identify and remediate security vulnerabilities and ensure compliance with regulatory requirements before software releases.
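To show the fail-fast idea behind such a pipeline, here is a minimal sketch in Python. It is not the bank's Jenkins configuration; the stage names and the stub checks are assumptions chosen to mirror the steps listed above.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order; once one fails, mark every later stage as skipped."""
    results = []
    failed = False
    for name, check in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = check()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


# Stub checks stand in for real build, test, and scan steps.
stages = [
    ("build", lambda: True),
    ("unit tests", lambda: True),
    ("security scan", lambda: False),  # simulated vulnerability finding
    ("deploy to staging", lambda: True),
]

for name, status in run_pipeline(stages):
    print(f"{name}: {status}")
```

Because the security scan fails in this example, the deployment stage is skipped, which is the behaviour that keeps unvalidated changes out of production.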

Tools and Technologies Used:

Jenkins, Bitbucket, JFrog Artifactory, SonarQube, Ansible, Vault

Engagement Highlights:

Employee Training and Adoption: The bank invested in comprehensive training programs to upskill employees on CI/CD practices and tools, fostering a culture of continuous learning and innovation.

Cross-Functional Collaboration: The implementation of DevOps practices encouraged collaboration between development, operations, and security teams, breaking down silos and promoting shared ownership of software delivery and quality.

Feedback and Improvement Loop: The bank established feedback mechanisms to gather input from stakeholders, including developers, testers, and end-users, enabling continuous improvement of the CI/CD pipeline and software development processes.

Impact:

Accelerated Delivery: CI/CD pipelines enabled swift deployment of new features, keeping the bank agile and competitive.

Enhanced Quality: Automated testing and integration processes bolstered software quality, reducing errors and boosting satisfaction.

Improved Security and Compliance: Integrated checks ensured regulatory adherence, fostering trust with customers and regulators.

Cost Efficiency: Automation curtailed manual tasks, slashing costs and optimizing resources.

Competitive Edge: CI/CD adoption facilitated innovation and agility, positioning the bank as a market leader.

Code Migration and Modernization: Why Manual Tests are Necessary

Many reasons motivate application owners to modernize an existing code base. Some of the objectives outlined by most of our top clients include the following:

Making the code safer to change in the future without breaking functionality

Ensuring the code becomes more container friendly and is easy to update

Moving to a less expensive and easy-to-maintain application server

Moving off a deprecated version of Java to the most recent version

Migrating from a hybrid codebase to traditional Java code

Ensuring the code is testable and that writing tests becomes faster and easier

Ensuring code and configurations are more container friendly

For most of our clients, another key roadblock is finding the talent and skills to write manual test cases. When the state of the code undergoes many changes over the years, it can create problems in adding test coverage. Therefore, in many applications, it may also require manual creation of test cases.

In most cases, our clients come to us because their application teams have to deal with the problems of legacy code modernization and testing. Legacy code is typically code that has been in use for a long time, may not have been well documented, and often lacks automated tests.

Here’s what makes preparation of manual test cases necessary:

Lack of Automated Tests

No Existing Test Coverage: Legacy code often lacks automated tests, which means that there is no existing suite of tests to rely on. Writing manual test cases helps ensure that the code behaves as expected before any changes are made.

Gradual Test Automation: While the long-term goal might be to automate testing, starting with manual test cases allows for immediate validation and helps in identifying critical areas for automated test development.

Understanding Complex and Untested Code

Code Complexity: Legacy systems can be complex and difficult to understand, especially if the code has evolved over time without proper refactoring. Manual testing allows testers to interact with the system in a way that automated tests may not easily facilitate, helping to uncover edge cases and unexpected behavior.

Exploratory Testing: Manual testing allows for exploratory testing, where testers can use their intuition and experience to find issues that are not covered by predefined test cases. This is particularly important in legacy systems where the code’s behavior might be unpredictable.

High Risk of Breaking Changes

Fragility of Legacy Systems: Legacy code is often fragile, and small changes can lead to significant issues elsewhere in the system. Manual test cases allow for careful and deliberate testing, reducing the risk of introducing breaking changes.

Regression Testing: Manual regression testing is often necessary to ensure that new changes do not negatively impact existing functionality, especially when automated regression tests are not available.

Lack of Documentation

Poor or Outdated Documentation: Legacy code is often poorly documented, if at all. Manual test cases can serve as a form of documentation, helping developers and testers understand the expected behavior of the system.

Knowledge Transfer: Manual test cases can also help in knowledge transfer, especially when working with code that was originally written by developers who are no longer with the organization.

Limited Tooling and Automation Compatibility

Incompatibility with Modern Tools: Legacy systems may not be compatible with modern testing frameworks and tools, making it difficult to implement automated testing without significant investment in refactoring or tool adaptation. In such cases, manual testing might be the most feasible option.

Custom or Proprietary Systems: If the legacy code is part of a custom or proprietary system, existing automated testing tools might not work out of the box, necessitating manual test case development.

Interdependencies with Other Legacy Systems

Complex Interactions: Legacy code often interacts with other legacy systems, and the complexity of these interactions may not be fully understood. Manual testing allows testers to observe and verify the behavior of the system as a whole, which can be difficult to achieve with automated tests alone.

End-to-End Testing: Manual end-to-end testing is often necessary in legacy environments to ensure that all components of the system work together as expected.

Identifying Test Scenarios for Automation

Test Case Identification: Writing manual test cases helps identify critical and high-value test scenarios that should be automated in the future. This can serve as a roadmap for gradually building an automated test suite.

Incremental Automation: Starting with manual tests allows teams to prioritize and incrementally automate the most important or frequently executed test cases.

Case Study: Executing Manual Regression Testing for a Legacy Collateral Management System of a Federal bank

Background:

A top Federal Bank had been using a legacy loan advances system for over a decade. The system was originally developed in COBOL and had undergone numerous small updates over the years. However, it lacked automated test coverage, and the codebase was complex, with many interdependencies. The system’s functionality was critical, as it handled the calculation of mortgages and distribution of loans.

A typical problem:

The bank’s application team decided to make a small but significant change to the system. This meant updating the tax calculation logic to comply with new government policy regulations. This change required modification in risk calculations of loan applicants.

Given the age and complexity of the system, there were concerns about the potential for unintended side effects. The loan advances system is tightly integrated with other legacy systems, used by auditors and risk compliance, and a bug could lead to incorrect calculations, which would be a serious issue.

The system had no automated test suite due to its age and the lack of modern testing practices when it was originally developed.

The codebase was poorly documented, making it difficult to fully understand the impact of the changes.

The potential risk of failure was high, as any error in tax calculations could lead to legal compliance issues and unhappy customers.

Decision:

Due to the lack of automated tests and the critical nature of the loan advances system, it was decided that a manual regression test would be conducted. The regression test would focus on ensuring that the recent changes to the tax calculation logic did not break any existing functionality.

Steps Taken:

The testing team identified key test cases that covered the most critical functionalities of the loan advances system, including:

Accurate calculation of collateral value for different types of assets

Correct application of federal and state taxes

Proper handling of risk and compliance

Accurate generation of loan disbursal stubs and financial reports

Creation of Test Data: The testers created a set of test data that included loan applicants from different tax brackets, states, and employment categories. This data was designed to cover various scenarios that might be affected by the calculation changes.

Manual Execution of Test Cases: The testing team manually executed the identified test cases. This involved:

Running the collateral calculations for different brackets as separate test scenarios

Verifying that the calculations were correct according to the new regulations

Checking that no other interlinked processes were affected by the changes (e.g., credit check, risk analysis, compliance)

Validation of Results: Testers cross-checked the results against expected outcomes. They manually calculated and validated results for each scenario and compared these with the outputs from the system.

Exploratory Testing: In addition to predefined test cases, testers performed exploratory testing to uncover any unexpected issues. This involved running the loan advances process under various edge cases, such as unusual deduction combinations or high-income applicants in multiple states.
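The validation step, where hand-calculated results are compared with system outputs, can be supported by a small comparison helper. The sketch below is illustrative only: the test case IDs, the amounts, and the one-cent tolerance are assumptions, and `Decimal` is used so that currency values are compared without floating-point rounding noise.

```python
from decimal import Decimal


def compare_results(expected, actual, tolerance=Decimal("0.01")):
    """Return (case_id, expected, actual) for every mismatch or missing system output."""
    mismatches = []
    for case_id, exp in expected.items():
        act = actual.get(case_id)
        if act is None or abs(exp - act) > tolerance:
            mismatches.append((case_id, exp, act))
    return mismatches


# Hand-calculated expectations versus values read from the system (made-up numbers).
expected = {
    "TC-01": Decimal("1250.00"),
    "TC-02": Decimal("980.50"),
    "TC-03": Decimal("432.10"),
}
actual = {
    "TC-01": Decimal("1250.00"),
    "TC-02": Decimal("980.75"),
}

for case_id, exp, act in compare_results(expected, actual):
    print(f"{case_id}: expected {exp}, got {act}")
```

Here TC-02 is reported because it differs by more than the tolerance, and TC-03 is reported because the system produced no output for it.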

Outcome:

The manual regression testing uncovered a few minor issues where the mortgage calculations were slightly off for specific edge cases. These issues were documented, fixed, and re-tested manually. The overall system was confirmed to be stable after the changes, with all critical functionalities working as expected.

The manual regression test provided confidence that the collateral system would function correctly in production. As a result, the company successfully updated the system to comply with the new tax regulations without any disruptions.

Lessons Learned:

Importance of Manual Testing: In environments where automated testing is not feasible, manual regression testing is essential to ensure that critical systems continue to function correctly after changes.

Documentation: The process highlighted the importance of documenting test cases and results, especially in legacy systems where knowledge is often scattered or lost over time.

Incremental Improvement: The Federal Bank recognized the need to gradually build an automated test suite for the collateral system to reduce reliance on manual testing in the future.

Need Expert Testing and Code Migration Services for Your COBOL-Based Applications? Schedule a call today!

Logistics Software Testing: How to Avoid Interruptions to Logistics Operations Caused by Inadequate Testing of Software Updates

Inadequate logistics software testing is a common root cause of interruptions to critical daily operations. Logistics software applications can include modules for route optimization, shipment tracking, warehouse management, and customer notifications. When a logistics company decides to roll out a significant software update aimed at improving route optimization algorithms and enhancing the customer notification system, it often faces tight deadlines and budget constraints. The testing phase for such an update can get shortened, and as a result the testing team can end up focusing primarily on new features and basic functionality while neglecting comprehensive integration, load, and regression testing. Several critical issues may then go unidentified before the update is deployed to the live environment.

Join us to uncover a real-world scenario to see how our dedicated Testing CoE can help deploy new software updates with minimal disruptions, ensuring a smooth transition and maintaining high levels of customer satisfaction.

How Adequate Testing Helps Avoid Warehouse Management System (WMS) Problems

Inventory Inaccuracies

Operational Disruption: Warehouse staff must spend additional time verifying and correcting inventory records manually, delaying order fulfilment and increasing the risk of errors.

Resolution: Ensure adequate testing to detect issues related to inventory counting and tracking.

Impact: Inventory data becomes accurate, ensuring elimination of issues related to stockouts or overstock situations.

Order Fulfilment Delays

Operational Disruption: Customer satisfaction declines due to delayed deliveries, and operational costs increase due to the need for expedited shipping to meet deadlines.

Resolution: Ensure adequate testing before releasing any new software updates to detect any inefficiencies or errors in order processing workflows.

Impact: Avoid slower shipment processing time and ensure shipments are always on time.

System Downtime

Operational Disruption: Workers cannot access the system to pick, pack, or ship orders, leading to significant delays and potential financial losses.

Resolution: Ensure adequate testing to see if the tested updates cause system crashes or unresponsiveness.

Impact: The WMS is always available, thereby never halting warehouse operations.

Integration Failures

Operational Disruption: Misalignment between systems can cause discrepancies in order status, inventory levels, and shipment details, necessitating manual intervention to reconcile data.

Resolution: Ensure adequate testing of interfaces with other systems (e.g., ERP, CRM).

Impact: Avoid data synchronization issues that can lead to inconsistent information across systems.
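An integration test for this kind of interface often ends with a reconciliation check between the two systems. The following sketch assumes each system can export a simple SKU-to-quantity mapping; the SKUs and quantities are made up for illustration.

```python
def reconcile(wms, erp):
    """Compare SKU -> quantity maps and return discrepancies as {sku: (wms_qty, erp_qty)}.

    A quantity of None means the SKU is missing from that system.
    """
    diffs = {}
    for sku in sorted(set(wms) | set(erp)):
        wms_qty, erp_qty = wms.get(sku), erp.get(sku)
        if wms_qty != erp_qty:
            diffs[sku] = (wms_qty, erp_qty)
    return diffs


wms_inventory = {"SKU-1": 40, "SKU-2": 12, "SKU-3": 7}
erp_inventory = {"SKU-1": 40, "SKU-2": 15, "SKU-4": 3}

for sku, (wms_qty, erp_qty) in reconcile(wms_inventory, erp_inventory).items():
    print(f"{sku}: WMS={wms_qty} ERP={erp_qty}")
```

An empty result after a test run suggests the update left synchronization intact; any entry points to a record that would otherwise need manual intervention.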

User Interface Bugs

Operational Disruption: Reduced efficiency and productivity among warehouse staff, leading to slower operations and potential training needs for the updated system.

Resolution: A smooth transition to a modern UI/UX, accompanied by change management measures, ensures user adoption and ease of use.

Impact: Avoid increased time to complete tasks and a higher rate of user errors.

How Adequate Testing Helps Avoid Transportation Management System (TMS) Problems

Route Optimization Errors

Operational Disruption: Deliveries are delayed, and transportation costs rise, negatively impacting profitability and customer satisfaction.

Resolution: Ensure any system glitches do not go undetected during updates to route optimization algorithms.

Impact: Avoid inefficiencies in route optimization, longer delivery times, and increased fuel costs.

Shipment Tracking Failures

Operational Disruption: Customers cannot track their shipments, leading to increased calls to customer service and potential loss of trust in the service.

Resolution: Ensure any new update does not introduce glitches that affect real-time tracking.

Impact: Avoid inaccurate shipment tracking information or unavailable data.

Carrier Integration Issues

Operational Disruption: Shipments are delayed or misrouted, causing additional administrative work to correct issues and potentially incurring extra shipping costs.

Resolution: Ensure properly tested updates to avoid disruptive integration issues with carrier systems.

Impact: Avoid inaccurate or failed communication with carriers regarding shipment details.

Billing and Documentation Errors

Operational Disruption: Financial discrepancies arise, requiring time-consuming reconciliations and possibly leading to disputes with carriers or customers.

Resolution: Ensure software updates do not affect the generation of shipping documents and billing statements.

Impact: Always have an available system that supports accurate billing and documentation.

Performance Degradation

Operational Disruption: Sluggish system performance hampers the ability of logistics managers to plan and execute shipments efficiently, leading to operational delays and potential missed delivery windows.

Resolution: Ensure updates are always tested for performance under load.

Impact: The system will never slow down during peak usage times.

Practical Steps Undertaken by Sun Technologies Testing Team to Ensure Logistics Software Updates Don’t Cause Operational Disruptions

Incremental Rollouts:

Deploy updates incrementally rather than all at once to minimize risk. This allows for easier rollback if issues are found.

Disaster Recovery Testing:

Regularly test disaster recovery procedures to ensure quick recovery in case of system failures.

Training and Support:

Provide adequate training for users and support teams on new features and changes introduced by software updates.

Version Control:

Use version control to manage and track changes in the software. This helps in maintaining consistency and facilitates easier rollback if needed.

User Interface (UI) Testing:

Ensure the UI is intuitive and responsive across different devices and screen sizes. Test for usability to ensure a positive user experience.

Example Scenario of Implementing Best Practices for a Logistics IT Team

Context:

A logistics software company is rolling out a major update to its WMS and TMS. The update includes a new route optimization algorithm, enhanced shipment tracking, and improved user interface features.

Steps to be Taken

Detailed Requirements Gathering:

Workshops with stakeholders to gather detailed requirements and develop use case scenarios.

Comprehensive Testing Strategy:

Developing a strategy that includes functional, performance, security, integration, and user acceptance testing.

Automation:

Automated regression and load testing using industry-standard tools.

Integration Testing:

Conduct end-to-end testing and API testing to ensure seamless integration with external systems.

Real Data Usage Simulation:

Use anonymized real data to test the new features, ensuring realistic test conditions.

Performance and Load Testing:

Simulate peak load conditions to identify and address performance bottlenecks.

Security Testing:

Perform vulnerability scanning and penetration testing to ensure the system is secure.

User Acceptance Testing:

Engage end-users in the testing process and gather feedback on the new features.

Continuous Testing:

Integrate testing into the CI/CD pipeline to catch issues early and ensure continuous quality.

Documentation and Communication:

Maintain clear documentation of all test cases and results, and ensure effective communication between teams.

Post-Deployment Monitoring:

Implement real-time monitoring and establish a user feedback loop to continuously improve the system.
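As an illustration of the performance and load testing step in the list above, here is a minimal Python sketch that fires concurrent requests and reports the 95th-percentile latency. The `handle_request` function is a hypothetical stand-in for a real call to the system under test, and the request count and concurrency level are arbitrary; dedicated load-testing tools would be used for a production-grade run.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def handle_request(i):
    """Stand-in for a real call to the system under test (hypothetical)."""
    time.sleep(0.001)
    return i


def timed_call(i):
    """Return how long one request took, in seconds."""
    start = time.perf_counter()
    handle_request(i)
    return time.perf_counter() - start


def run_load_test(num_requests=200, concurrency=20):
    """Issue requests from a thread pool and summarize the observed latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(num_requests)))
    p95 = quantiles(latencies, n=100)[94]  # 95th percentile cut point
    return {
        "requests": len(latencies),
        "p95_seconds": p95,
        "max_seconds": max(latencies),
    }


print(run_load_test())
```

Tracking the 95th percentile rather than the average makes slowdowns during peak usage visible even when most requests are still fast.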

#qa #logistics #automation #automatedtesting #testing #supplychain

Generative Artificial Intelligence in the Financial Sector: Exploring Promising Use Cases and Potential Challenges

Generative Artificial Intelligence (AI) transforms the finance and banking sectors by enabling real-time fraud detection, anticipating customer requirements, and providing superior customer experiences. This industry has undergone significant digital transformations, enhancing efficiency, convenience, and security. Generative AI can revolutionize various aspects of our lives, including work, banking, and investment, potentially resolving challenges related to talent shortages in software development, risk and compliance, and front-line personnel. The influence of Generative AI is expected to be as profound as that of the internet or mobile devices.

Generative Artificial Intelligence (Gen AI) offers three primary capabilities that are beneficial for businesses and institutions:

Facilitating conversational online interactions (e.g., through the use of conversational journeys, customer service automation, and knowledge access, among others).

Simplifying the access and understanding of complex data (e.g., through enterprise search, product discovery and recommendation functionalities, and business process automation, among others).

Creating content with the mere press of a button (e.g., through the generation of creative content, document creation functionalities, and enhancing developer efficiency, among others).

Generative AI Use Cases in Financial Services: A Closer Look

Enhancing Efficiency Through the Automation of Repetitive Tasks

Problem. The necessity to manage redundant and time-consuming responsibilities, including the manual entry of data and the summarization of extensive documents, detracts attention from more valuable tasks.

Solution.

Finding important data in different types of documents and correctly filling in records or worksheets.

Shortening complex financial reports, articles about finance, or documents about rules into understandable outlines, emphasizing important details and patterns.

Converting complicated terms related to a specific sector into simple language, making information easier to understand for more people.

Impact. Artificial Intelligence liberates experts to focus on higher-level projects that demand deep reasoning and evaluation. It also results in quicker response periods, enhanced efficiency throughout processes, and a deep comprehension of intricate financial information.

Improving the Assessment and Management of Risks

Problem. The assessment of vulnerabilities within the sector continues to be a multifaceted and intricate procedure. Conventional approaches frequently depend on constrained historical documentation or manual investigation, which may result in erroneous forecasts and overlooked warning signs.

Solution.

Creating believable artificial data to enhance the training sets for machine learning algorithms.

Creating different situations to evaluate financial models and find potential weaknesses.

Examining a range of sources to discover overlooked risks and offer a detailed analysis of potential errors.

Impact. The integration of technology facilitates enhanced decision-making processes, thereby minimizing the risk of potential losses for organizations. The prompt recognition of emerging risks allows for the implementation of proactive mitigation strategies.

Creating Financial Documentation and Analysis

Problem. Generating precise and perceptive financial reports is a process that demands considerable effort and time. It requires analysts to collect information from diverse sources, execute intricate computations, and develop comprehensible narratives, frequently operating under stringent deadlines.

Solution.

Collect data from various datasets, thereby generating reports that automatically incorporate customized insights and visual representations.

Perform regular calculations, reconciliations, and amalgamations, guaranteeing mathematical accuracy.

Compile periodic management documents, encompassing both quantitative and textual elements, to underscore trends or irregularities.

Impact. The integration of generative AI (GAI) in the generation of reports frees up the expertise of professionals, allowing them to allocate more time towards strategic analysis. It also diminishes the likelihood of errors, thereby enhancing the accuracy of the reports. Furthermore, it expedites the process of pinpointing essential recommendations aimed at augmenting agility.

Enhancing Customer Experience and Service Personalization

Problem. Consumers are increasingly seeking personalized digital experiences and bespoke deals, presenting a challenge for enterprises constrained by limited resources and conventional service methodologies.

Solution.

Conducting an analysis of user data to formulate distinct recommendations for investment portfolios, financial products, and services.

Developing finance AI chatbots capable of engaging in natural language conversations, comprehending complex queries, and offering context-aware, beneficial responses to consumers round the clock.

Supporting customer support representatives by locating pertinent information, condensing escalated cases, and proposing solutions, thereby optimizing the process of problem resolution.

Impact. With proactive support and well-curated recommendations, these innovations markedly increase client satisfaction, which in turn drives loyalty and engagement. Ultimately, a superior, customised customer experience (CX) gives financial institutions a competitive advantage.

Optimising Fraud Identification and Avoidance

Problem. The dynamic nature of fraudulent activity poses a challenge to the efficacy of typical monitoring systems. This damages client confidence and exposes financial service providers to financial losses.

Solution.

Producing realistic synthetic data that replicates sophisticated fraud patterns to improve the training and resilience of detection systems.

Analysing transactions in real time to spot irregularities and suspicious activity, allowing fraud to be identified quickly.

Streamlining the investigation process by automating the flagging of potentially criminal conduct, which relieves the load on investigative staff.

Impact. AI-powered fraud management protects client assets, strengthens security, improves brand image, and eases the operational burden on investigative teams.
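To make the idea of spotting irregular transactions concrete, the sketch below flags amounts that sit far from a customer's historical spending. It deliberately uses a plain statistical z-score rather than a generative or machine-learning model, and the function name, sample amounts, and threshold of 3 standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(history, incoming, threshold=3.0):
    """Return incoming amounts more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every historical amount is identical, so any different amount is unusual.
        return [amount for amount in incoming if amount != mu]
    return [amount for amount in incoming if abs(amount - mu) / sigma > threshold]


# Hypothetical card history and a batch of new transactions.
history = [42.0, 38.5, 51.0, 45.2, 40.1, 47.8, 39.9, 44.4]
incoming = [43.0, 980.0, 49.5]

flagged = flag_anomalies(history, incoming)
print(flagged)
```

A production system would combine many more signals (merchant, location, device, timing), but the shape is the same: score each event against a baseline and route the outliers to investigators.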

Enhancing Analysis and Forecasts of Market Trends

Problem. Traditional techniques of evaluation are out of step with the constantly changing financial markets, leaving investors open to missing out on opportunities.

Solution. The use of generative AI allows for the simulation of market conditions, stress testing of strategies, and the early detection of possible dangers and opportunities.

Impact. Businesses may quickly and effectively take advantage of changes in the industry by using GAI to maximize profits and outperform rivals.

Some of the top generative AI use cases highlighted by financial organizations

75% Improved virtual assistants

70% Financial document search and synthesis

80% Personalized financial recommendations

72% Capital market research

Key applications of GenAI for the BFSI sector

Internal processes

Decision making

Cybersecurity

Document analysis & processing

Customer service & support

Key considerations for secure GenAI deployment

While not a panacea, artificial intelligence is a valuable tool that must be used carefully and thoughtfully, particularly in the banking and fintech industries. This post has shown a number of areas where AI is now being used cautiously and producing real benefits like cost reductions and increased operational efficiencies.

Selecting the appropriate AI service provider

AI technology is rapidly evolving due to competition among tech companies. Significant advancements are expected in generative AI, necessitating continuous experimentation and participation in scientific research to refine and validate AI solutions. This includes testing diverse methodologies for engineering and technology stacks.

Years of Experience in Implementing AI for Commercial Applications

Deploying AI in commercial BFSI (Banking, Financial Services, and Insurance) settings demands a carefully crafted and meticulous strategy. Therefore, when selecting an AI consultant or service provider, it’s crucial to examine their track record across a broad spectrum of AI implementations.

In summary, the integration of Generative AI within the realm of financial services introduces a set of distinct challenges. However, the potential benefits justify the investment of effort. To guarantee success, it is imperative to focus on enhancing information quality, the development of explainable models, the establishment of robust data governance frameworks, and the implementation of comprehensive risk management strategies. We are committed to collaborating with you to devise strategies that address these challenges, thereby facilitating the realization of the transformative advantages associated with Generative AI.


Essential Skills for Testing Applications in Different Environments

Testing applications in different environments requires a diverse set of skills to ensure the software performs well under various conditions and configurations. Here are the essential skills needed for this task:

1. Understanding of Different Environments

Development, Staging, and Production: Knowledge of the differences between development, staging, and production environments, and the purpose of each.

Configuration Management: Understanding how to configure and manage different environments, including handling environment-specific settings and secrets.

2. Test Planning and Strategy

Test Plan Creation: Ability to create comprehensive test plans that cover different environments.

Environment-specific Test Cases: Designing test cases that take into account the specific characteristics and constraints of each environment.

3. Automation Skills

Automated Testing Tools: Proficiency with automated testing tools like Selenium, JUnit, TestNG, or Cypress.

Continuous Integration/Continuous Deployment (CI/CD): Experience with CI/CD tools like Jenkins, GitLab CI, or Travis CI to automate the testing process across different environments.

4. Configuration Management Tools

Infrastructure as Code (IaC): Familiarity with IaC tools like Terraform, Ansible, or CloudFormation to manage and configure environments consistently.

Containerization: Knowledge of Docker and Kubernetes for creating consistent and isolated testing environments.

5. Version Control Systems

Git: Proficiency in using Git for version control, including branching, merging, and handling environment-specific code changes.

6. Test Data Management

Data Masking and Anonymization: Skills in anonymizing sensitive data for testing purposes.

Synthetic Data Generation: Ability to create synthetic test data that mimics real-world scenarios.

7. Performance Testing

Load Testing: Experience with load testing tools like JMeter, LoadRunner, or Gatling to assess performance under different conditions.

Stress Testing: Ability to perform stress testing to determine the application's breaking point.

8. Security Testing

Vulnerability Scanning: Knowledge of tools like OWASP ZAP, Burp Suite, or Nessus for identifying security vulnerabilities in different environments.

Penetration Testing: Skills in conducting penetration tests to assess security risks.

9. Cross-Browser and Cross-Device Testing

Browser Testing: Proficiency with tools like BrowserStack or Sauce Labs for testing across different browsers.

Device Testing: Experience with testing on different devices and operating systems to ensure compatibility.

10. API Testing

API Testing Tools: Experience with tools like Postman, SoapUI, or RestAssured for testing APIs.

Contract Testing: Knowledge of contract testing frameworks like Pact to ensure consistent API behavior across environments.

11. Monitoring and Logging

Monitoring Tools: Familiarity with monitoring tools like Prometheus, Grafana, or New Relic to observe application performance and health in different environments.

Log Management: Skills in using log management tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk for troubleshooting and analysis.

12. Soft Skills

Attention to Detail: Meticulous attention to detail to identify environment-specific issues.

Problem-solving: Strong problem-solving skills to troubleshoot and resolve issues quickly.

Collaboration: Ability to work effectively with development, operations, and product teams to manage and troubleshoot environment-related issues.
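The configuration management skill in section 1 can be illustrated with a small sketch of environment-specific settings. Everything here is hypothetical: the `APP_ENV` and `API_TOKEN` variable names, the URLs, and the `Settings` fields are assumptions for the example, not a convention of any specific framework.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    base_url: str
    debug: bool
    api_token: str


# Non-secret defaults per environment (hypothetical values).
DEFAULTS = {
    "development": {"base_url": "http://localhost:8000", "debug": True},
    "staging": {"base_url": "https://staging.example.test", "debug": False},
    "production": {"base_url": "https://app.example.test", "debug": False},
}


def load_settings(environ=None):
    """Build settings for the environment named in APP_ENV; secrets come from the environment."""
    environ = os.environ if environ is None else environ
    env_name = environ.get("APP_ENV", "development")
    if env_name not in DEFAULTS:
        raise ValueError(f"unknown environment: {env_name}")
    token = environ.get("API_TOKEN", "")
    if env_name != "development" and not token:
        # Fail fast instead of running staging or production tests without credentials.
        raise ValueError("API_TOKEN must be set outside development")
    return Settings(api_token=token, **DEFAULTS[env_name])


settings = load_settings({"APP_ENV": "staging", "API_TOKEN": "dummy-token"})
print(settings.base_url, settings.debug)
```

Passing a plain dictionary instead of `os.environ` keeps the loader easy to unit test, and keeping the token out of `DEFAULTS` means no secret is committed to version control.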

Practical Steps for Testing in Different Environments

Environment Setup:

Define the infrastructure and configuration needed for each environment.

Use IaC tools to automate environment setup and teardown.

Configuration Management:

Manage environment-specific configurations and secrets securely.

Use tools like Consul or Vault for managing secrets.

Automate Testing:

Integrate automated tests into your CI/CD pipeline.

Ensure tests are run in all environments as part of the deployment process.

Test Data Management:

Use consistent and reliable test data across all environments.

Implement data seeding or generation scripts as part of your environment setup.

Performance and Security Testing:

Conduct regular performance and security tests in staging and production-like environments.

Monitor application performance and security continuously.
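The test data steps above (masking sensitive values and seeding consistent data) might look like the following minimal sketch. The helper names, the salt, and the record fields are assumptions for illustration. Note that a salted hash is pseudonymization rather than full anonymization, so it should be treated as a starting point only.

```python
import hashlib
import random


def mask_email(email, salt="test-salt"):
    """Replace the local part of an email with a stable salted hash, keeping the domain."""
    local, _, domain = email.partition("@")
    digest = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@{domain}"


def generate_customers(count, seed=1234):
    """Generate reproducible synthetic customer records from a fixed seed."""
    rng = random.Random(seed)
    return [
        {
            "id": i,
            "email": f"customer{i}@example.test",
            "balance": round(rng.uniform(0, 5000), 2),
        }
        for i in range(1, count + 1)
    ]


customers = generate_customers(3)
masked = mask_email("jane.doe@example.com")
print(masked)
print(customers)
```

Because the seed is fixed, every environment that runs the script gets the same records, which keeps test results comparable between staging and production-like setups.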

Sun Technologies has testers with the skills listed above who help ensure that applications are robust, secure, and performant across different environments, leading to higher-quality software and better user experiences. Contact us for a free assessment of the CI/CD automation opportunities you can unlock with Sun Technologies’ Testing Center-of-Excellence (CoE).


10 Key Factors for Maintaining HIPAA Compliance in an Office 365 Migration

When migrating to Office 365 while maintaining HIPAA compliance, several essentials need to be considered:

Business Associate Agreement (BAA): Ensure that Microsoft signs a Business Associate Agreement (BAA) with your organization. This agreement establishes the responsibilities of Microsoft as a HIPAA business associate, outlining their obligations to safeguard protected health information (PHI).

Data Encryption: Use encryption to protect PHI within Office 365, such as Transport Layer Security (TLS) for data in transit and BitLocker for data at rest.

Access Controls: Implement strict access controls and authentication mechanisms to ensure that only authorized personnel have access to PHI stored in Office 365. Utilize features like Azure Active Directory (AAD) for user authentication and role-based access control (RBAC) to manage permissions.

Data Loss Prevention (DLP): Configure DLP policies within Office 365 to prevent unauthorized sharing or leakage of PHI. DLP policies can help identify and restrict the transmission of sensitive information via email, SharePoint, OneDrive, and other Office 365 services.

Audit Logging and Monitoring: Enable audit logging within Office 365 to track user activities and changes made to PHI. Regularly review audit logs and implement monitoring solutions to detect suspicious activities or unauthorized access attempts.

Secure Email Communication: Implement secure email communication protocols, such as Secure/Multipurpose Internet Mail Extensions (S/MIME) or Microsoft Information Protection (MIP), to encrypt email messages containing PHI and ensure secure transmission.

Data Retention Policies: Define and enforce data retention policies to ensure that PHI is retained for the required duration and securely disposed of when no longer needed. Use features like retention labels and retention policies in Office 365 to manage data lifecycle.

Mobile Device Management (MDM): Implement MDM solutions to enforce security policies on mobile devices accessing Office 365 services. Use features like Intune to manage device encryption, enforce passcode policies, and remotely wipe devices if lost or stolen.

Training and Awareness: Provide HIPAA training and awareness programs to employees who handle PHI in Office 365. Educate them about their responsibilities, security best practices, and how to identify and respond to potential security incidents.

Regular Risk Assessments: Conduct regular risk assessments to identify vulnerabilities and risks associated with PHI in Office 365. Address any identified gaps or deficiencies promptly to maintain HIPAA compliance.
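To show the kind of pattern matching that a DLP policy relies on, here is a small Python sketch that scans text for identifiers that may indicate PHI. It is not how Office 365 implements DLP; the pattern names and the medical record number (MRN) format are assumptions made up for this example.

```python
import re

# Hypothetical patterns: a US Social Security number and an invented MRN format.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}


def find_phi(text):
    """Return the sorted names of all patterns that match somewhere in the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


hits = find_phi("Patient MRN: 00482913, SSN 123-45-6789, follow-up next week.")
print(hits)
```

A script like this can be useful before migration to estimate how much existing content a DLP policy would flag, while enforcement itself stays with the policies configured in the platform.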

By incorporating these essentials into your Office 365 migration strategy, you can help your organization remain HIPAA compliant while benefiting from the platform's productivity and collaboration features. It is also essential to stay up to date with changes in HIPAA regulations and Microsoft's security features so that you can adapt your compliance measures accordingly.

Are You Looking for a Migration Partner to Ensure HIPAA Compliance in Office 365 Migration?

Read this insightful article to learn more about the essential steps your data migration expert must follow to ensure a smooth and successful transition of data to OneDrive.


Scenarios in Which Kubernetes is Used for Container Orchestration of a Web Application

Kubernetes is commonly used to orchestrate containerized web applications when scalability, reliability, and efficient workload management are required. Here are some typical scenarios:

Microservices Architecture:

Scenario: When deploying a web application composed of multiple microservices.