#.net framework linux debian

Note

So, are you a Windows user or a Linux user?

windows in the streets, linux in the sheets

my workplace uses Windows computers and also I have to interact with legacy .NET Framework stuff sometimes, but my computers at home use Linux (Linux Mint on desktop and my laptop, Debian on VPSes, Raspbian and OSMC for the rPi machines and SteamOS for my Steam Deck). With the power of virtual machines I am able to use Windows as a guest OS, but only for testing purposes and Photoshop.

Link

#dot net#asp.net#.net development#.net framework install linux ubuntu#.net framework linux debian#.net framework install linux fedora#software#web application firewall#php web application#web application#web application design#web application services

Text

Entity Framework introduction in .NET

Entity Framework is an ORM (object-relational mapping) framework that gives developers an automatic mechanism for storing data in a database and retrieving it.
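Entity Framework itself is a .NET library. In it, the mapping is declared through a `DbContext` class that exposes `DbSet<T>` properties.

The sketch below is not Entity Framework. It illustrates the object-relational mapping idea in Python with the standard-library `sqlite3` module:

- a class corresponds to a table;
- an instance corresponds to a row;
- the mapper, not the calling code, writes the SQL.

`Student` and `StudentRepository` are hypothetical names chosen for the example.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Student:
    # Hypothetical entity: each field corresponds to a column of the "student" table.
    id: Optional[int]
    name: str
    grade: int


class StudentRepository:
    """Hand-written mapper between Student objects and rows of an SQLite table."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS student ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "name TEXT NOT NULL, "
            "grade INTEGER NOT NULL)"
        )

    def add(self, student: Student) -> Student:
        # Object -> row: an ORM would generate this INSERT from the class definition.
        cur = self.conn.execute(
            "INSERT INTO student (name, grade) VALUES (?, ?)",
            (student.name, student.grade),
        )
        self.conn.commit()
        student.id = cur.lastrowid  # write the database-generated key back onto the object
        return student

    def find_by_grade(self, grade: int) -> List[Student]:
        # Row -> object: each result row is materialized as a Student instance.
        rows = self.conn.execute(
            "SELECT id, name, grade FROM student WHERE grade = ? ORDER BY id",
            (grade,),
        ).fetchall()
        return [Student(*row) for row in rows]


if __name__ == "__main__":
    repo = StudentRepository(sqlite3.connect(":memory:"))
    repo.add(Student(None, "Ada", 10))
    repo.add(Student(None, "Linus", 11))
    print(repo.find_by_grade(10))  # [Student(id=1, name='Ada', grade=10)]
```

In Entity Framework, the SQL written by hand in `add` and `find_by_grade` is generated for you from the entity mapping and LINQ queries.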

#c#dotnet#.net framework linux fedora#.net framework linux centos#.net developers#.net#.net framework install linux debian#developer#development#programing#programming#coding#coder#students

Text

The Elastic stack (ELK) is made up of three open-source components that work together to collect, analyze, and visualize logs:

Elasticsearch – the core of the Elastic software, a search and analytics engine. In the Elastic stack, it stores the logs arriving from Logstash and lets you search them in real time.
Logstash – collects data, transforms logs arriving from multiple sources simultaneously, and sends them to storage.
Kibana – a graphical tool for data visualization. In the Elastic stack, it generates charts and graphs that make sense of the raw data in your database.

The Elastic stack can also be used with Beats: lightweight data shippers that collect data from one or more sources and send it to Elasticsearch or Logstash. There are several Beats, each with a distinct role:

Filebeat – forwards files and centralizes logs, usually in .log or .json format.
Metricbeat – collects system and service metrics such as CPU, memory usage, and load, as well as network and process statistics. It ships them to Logstash or directly to Elasticsearch.
Packetbeat – captures network traffic for a range of application-level and lower-level protocols, databases, and key-value stores, including HTTP, DNS, flows, DHCPv4, MySQL, and TLS. It helps identify suspicious network activity.
Auditbeat – collects Linux audit framework data and monitors file integrity. It ships the results to Logstash or directly to Elasticsearch.
Heartbeat – actively probes services to determine whether they are available.

This guide shows how to run the Elastic stack (ELK) on Docker containers using Docker Compose.

Setup Requirements

For this guide, you need the following:
Memory – 1.5 GB and above
Docker Engine – version 18.06.0 or newer
Docker Compose – version 1.26.0 or newer

Install the required packages below:

## On Debian/Ubuntu
sudo apt update && sudo apt upgrade
sudo apt install curl vim git

## On RHEL/CentOS/RockyLinux 8
sudo yum -y update
sudo yum -y install curl vim git

## On Fedora
sudo dnf update
sudo dnf -y install curl vim git

Step 1 – Install Docker and Docker Compose

Use the dedicated guide below to install the Docker Engine on your system:

How To Install Docker CE on Linux Systems

Add your system user to the docker group:

sudo usermod -aG docker $USER
newgrp docker

Start and enable the Docker service:

sudo systemctl start docker && sudo systemctl enable docker

Now install Docker Compose with the help of the guide below:

How To Install Docker Compose on Linux

Step 2 – Provision the Elastic stack (ELK) Containers

Begin by cloning the repository from GitHub:

git clone https://github.com/deviantony/docker-elk.git
cd docker-elk

Open the deployment file for editing:

vim docker-compose.yml

The Elastic stack deployment file consists of three main parts:

Elasticsearch – with ports:
9200: Elasticsearch HTTP
9300: Elasticsearch TCP transport
Logstash – with ports:
5044: Logstash Beats input
5000: Logstash TCP input
9600: Logstash monitoring API
Kibana – with port 5601

In the opened file, you can make the adjustments below.

Configure Elasticsearch

The configuration file for Elasticsearch is stored in elasticsearch/config/elasticsearch.yml. You can configure the environment by setting the cluster name, network host, and licensing as below:

elasticsearch:
  environment:
    cluster.name: my-cluster
    xpack.license.self_generated.type: basic

To disable paid features, change the xpack.license.self_generated.type setting from trial to basic. The self-generated trial license unlocks all X-Pack features, but only for 30 days.
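Once the stack is up (Step 4), you can confirm that the basic license actually took effect. Below is a minimal sketch in Python using only the standard library. It assumes:

- Elasticsearch is reachable over HTTP;
- you log in as the `elastic` user;
- the Elasticsearch get-license endpoint (`GET /_license`) returns the license type under `license.type`.

The function name `license_type` is hypothetical.

```python
import base64
import json
import urllib.request


def license_type(base_url: str, password: str, user: str = "elastic", timeout: float = 10.0) -> str:
    """Return the active license type (e.g. 'basic' or 'trial') reported by GET /_license."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        base_url.rstrip("/") + "/_license",
        # Send HTTP basic credentials up front instead of waiting for a 401 challenge.
        headers={"Authorization": f"Basic {token}"},
    )
    # urlopen raises urllib.error.HTTPError (e.g. 401 for a wrong password)
    # or urllib.error.URLError if nothing is listening on base_url.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["license"]["type"]
```

With the setting above, `license_type("http://localhost:9200", "StrongPassw0rd1")` should return `"basic"`. If the trial setting was kept, it should return `"trial"`.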

Configure Kibana

The configuration file is stored in kibana/config/kibana.yml. Here you can specify environment variables as below:

kibana:
  environment:
    SERVER_NAME: kibana.example.com

JVM tuning

By default, both Elasticsearch and Logstash start with 1/4 of the total host memory allocated to the JVM heap. You can adjust the heap size with the options below:

-Xms sets the initial heap size.
-Xmx sets the maximum heap size.

Both are normally set to the same value.

For Logstash (example with the heap increased to 1 GB):

logstash:
  environment:
    LS_JAVA_OPTS: -Xmx1g -Xms1g

For Elasticsearch (example with the heap increased to 1 GB):

elasticsearch:
  environment:
    ES_JAVA_OPTS: -Xmx1g -Xms1g

Configure the Usernames and Passwords

To configure the usernames, passwords, and version, edit the .env file:

vim .env

Make the desired changes to the version, usernames, and passwords:

ELASTIC_VERSION=

## Passwords for stack users
#

# User 'elastic' (built-in)
#
# Superuser role, full access to cluster management and data indices.
# https://www.elastic.co/guide/en/elasticsearch/reference/current/built-in-users.html
ELASTIC_PASSWORD='StrongPassw0rd1'

# User 'logstash_internal' (custom)
#
# The user Logstash uses to connect and send data to Elasticsearch.
# https://www.elastic.co/guide/en/logstash/current/ls-security.html
LOGSTASH_INTERNAL_PASSWORD='StrongPassw0rd1'

# User 'kibana_system' (built-in)
#
# The user Kibana uses to connect and communicate with Elasticsearch.
# https://www.elastic.co/guide/en/elasticsearch/reference/current/built-in-users.html
KIBANA_SYSTEM_PASSWORD='StrongPassw0rd1'

Source the environment:

source .env

Step 3 – Configure Persistent Volumes

For the Elastic stack to persist data, the volumes must be mapped correctly, and the YAML file contains several volumes to map. In this guide, I will use a secondary disk attached to my machine.

Identify the disk:
$ lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda           8:0    0   40G  0 disk
├─sda1        8:1    0    1G  0 part /boot
└─sda2        8:2    0   39G  0 part
  ├─rl-root 253:0    0   35G  0 lvm  /
  └─rl-swap 253:1    0    4G  0 lvm  [SWAP]
sdb           8:16   0   10G  0 disk
└─sdb1        8:17   0   10G  0 part

Partition the disk and create an XFS file system on it:

sudo parted --script /dev/sdb "mklabel gpt"
sudo parted --script /dev/sdb "mkpart primary 0% 100%"
sudo mkfs.xfs /dev/sdb1

Mount the disk at your desired path:

sudo mkdir /mnt/datastore
sudo mount /dev/sdb1 /mnt/datastore

Verify that the disk has been mounted:

$ sudo mount | grep /dev/sdb1
/dev/sdb1 on /mnt/datastore type xfs (rw,relatime,seclabel,attr2,inode64,logbufs=8,logbsize=32k,noquota)

Create the directories for the persistent volumes on the disk:

sudo mkdir /mnt/datastore/setup
sudo mkdir /mnt/datastore/elasticsearch

Set the right permissions:

sudo chmod 775 -R /mnt/datastore
sudo chown -R $USER:docker /mnt/datastore

On RHEL-based systems, set SELinux to permissive mode as below:

sudo setenforce 0
sudo sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config

Create the external volumes.

For Elasticsearch:

docker volume create --driver local \
  --opt type=none \
  --opt device=/mnt/datastore/elasticsearch \
  --opt o=bind elasticsearch

For setup:

docker volume create --driver local \
  --opt type=none \
  --opt device=/mnt/datastore/setup \
  --opt o=bind setup

Verify that the volumes have been created:

$ docker volume list
DRIVER    VOLUME NAME
local     elasticsearch
local     setup

View more details about a volume:

$ docker volume inspect setup
[
    {
        "CreatedAt": "2022-05-06T13:19:33Z",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/setup/_data",
        "Name": "setup",
        "Options": {
            "device": "/mnt/datastore/setup",
            "o": "bind",
            "type": "none"
        },
        "Scope": "local"
    }
]

Go back to the YAML file and add these lines at the end of the file:

$ vim docker-compose.yml
.......
volumes:
  setup:
    external: true
  elasticsearch:
    external: true

At this point, your docker-compose.yml should contain all of the changes described above.

Step 4 – Bringing up the Elastic stack

After making the desired changes, bring up the Elastic stack with the command:

docker-compose up -d

Execution output:

[+] Building 6.4s (12/17)
 => [docker-elk_setup internal] load build definition from Dockerfile          0.3s
 => => transferring dockerfile: 389B                                           0.0s
 => [docker-elk_setup internal] load .dockerignore                             0.5s
 => => transferring context: 250B                                              0.0s
 => [docker-elk_logstash internal] load build definition from Dockerfile       0.6s
 => => transferring dockerfile: 312B                                           0.0s
 => [docker-elk_elasticsearch internal] load build definition from Dockerfile  0.6s
 => => transferring dockerfile: 324B                                           0.0s
 => [docker-elk_logstash internal] load .dockerignore                          0.7s
 => => transferring context: 188B
........

Once it completes, check that the containers are running:

$ docker ps
CONTAINER ID   IMAGE                      COMMAND                  CREATED          STATUS         PORTS                                                                                                                                                                        NAMES
096ddc76c6b9   docker-elk_logstash        "/usr/local/bin/dock…"   9 seconds ago    Up 5 seconds   0.0.0.0:5000->5000/tcp, :::5000->5000/tcp, 0.0.0.0:5044->5044/tcp, :::5044->5044/tcp, 0.0.0.0:9600->9600/tcp, 0.0.0.0:5000->5000/udp, :::9600->9600/tcp, :::5000->5000/udp   docker-elk-logstash-1
ec3aab33a213   docker-elk_kibana          "/bin/tini -- /usr/l…"   9 seconds ago    Up 5 seconds   0.0.0.0:5601->5601/tcp, :::5601->5601/tcp                                                                                                                                    docker-elk-kibana-1
b365f809d9f8   docker-elk_setup           "/entrypoint.sh"         10 seconds ago   Up 7 seconds   9200/tcp, 9300/tcp                                                                                                                                                           docker-elk-setup-1
45f6ba48a89f   docker-elk_elasticsearch   "/bin/tini -- /usr/l…"   10 seconds ago   Up 7 seconds   0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp                                                                                       docker-elk-elasticsearch-1

Verify that Elasticsearch is running:

$ curl http://localhost:9200 -u elastic:StrongPassw0rd1
{
  "name" : "45f6ba48a89f",
  "cluster_name" : "my-cluster",
  "cluster_uuid" : "hGyChEAVQD682yVAx--iEQ",
  "version" : {
    "number" : "8.1.3",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "39afaa3c0fe7db4869a161985e240bd7182d7a07",
    "build_date" : "2022-04-19T08:13:25.444693396Z",
    "build_snapshot" : false,
    "lucene_version" : "9.0.0",
    "minimum_wire_compatibility_version" : "7.17.0",
    "minimum_index_compatibility_version" : "7.0.0"
  },
  "tagline" : "You Know, for Search"
}
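The container check above can also be scripted. Below is a minimal sketch that relies on these assumptions:

- The expected names are the Compose v2 container names shown in the `docker ps` output.
- Docker reports only name and status, via `--format` with the `{{.Names}}` and `{{.Status}}` placeholders.
- The one-shot `setup` container is left out, on the assumption that it exits after initializing the users and passwords.

The sketch reports which expected containers are missing or not `Up`. All function names are hypothetical.

```python
import subprocess
from typing import Dict, Set

# Long-running services from the docker ps output above (Compose v2 naming).
# "docker-elk-setup-1" is deliberately absent: it is assumed to exit after setup.
EXPECTED: Set[str] = {
    "docker-elk-elasticsearch-1",
    "docker-elk-logstash-1",
    "docker-elk-kibana-1",
}


def parse_ps(output: str) -> Dict[str, str]:
    """Map container name -> status from tab-separated 'docker ps' output."""
    statuses: Dict[str, str] = {}
    for line in output.splitlines():
        if "\t" in line:
            name, status = line.split("\t", 1)
            statuses[name.strip()] = status.strip()
    return statuses


def not_running(statuses: Dict[str, str], expected: Set[str] = EXPECTED) -> Set[str]:
    # A running container's status starts with "Up" (e.g. "Up 5 seconds");
    # missing containers default to "" and are therefore reported as well.
    return {name for name in expected if not statuses.get(name, "").startswith("Up")}


def current_statuses() -> Dict[str, str]:
    """Ask Docker for all containers (--all also lists exited ones) as name<TAB>status."""
    result = subprocess.run(
        ["docker", "ps", "--all", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_ps(result.stdout)
```

`not_running(current_statuses())` returns an empty set when Elasticsearch, Logstash, and Kibana are all up.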

Step 5 – Access the Kibana Dashboard

At this point, you can access the Kibana dashboard running on port 5601. But first, allow the required ports through the firewall:

##For Firewalld
sudo firewall-cmd --add-port=5601/tcp --permanent
sudo firewall-cmd --add-port=5044/tcp --permanent
sudo firewall-cmd --reload

##For UFW
sudo ufw allow 5601/tcp
sudo ufw allow 5044/tcp

Now access the Kibana dashboard at http://IP_Address:5601 or http://Domain_name:5601. Log in with the credentials set for the elastic user:

Username: elastic
Password: StrongPassw0rd1

On successful authentication, you should see the dashboard.

To prove that the ELK stack is running as desired, inject some data/log entries. Logstash accepts content over TCP as below:

# Using BSD netcat (Debian, Ubuntu, MacOS system, ...)
cat /path/to/logfile.log | nc -q0 localhost 5000

For example:

cat /var/log/syslog | nc -q0 localhost 5000

Once the logs have been loaded, view them under the Observability tab.

That is it! Your Elastic stack (ELK) is up and running.

Step 6 – Cleanup

To remove the Elastic stack (ELK) containers, network, and any non-external volumes, use the command:

$ docker-compose down -v
[+] Running 5/4
 ⠿ Container docker-elk-kibana-1         Removed   10.5s
 ⠿ Container docker-elk-setup-1          Removed    0.1s
 ⠿ Container docker-elk-logstash-1       Removed    9.9s
 ⠿ Container docker-elk-elasticsearch-1  Removed    3.0s
 ⠿ Network docker-elk_elk                Removed    0.1s

The setup and elasticsearch volumes were declared as external, so docker-compose down -v does not remove them. To delete the persistent data as well, run docker volume rm setup elasticsearch and then clear the contents of /mnt/datastore.

Closing Thoughts

We have walked through how to run the Elastic stack (ELK) on Docker containers using Docker Compose. Furthermore, we have learned how to create external persistent volumes for Docker containers. I hope this was helpful.
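If `nc` is not installed, the same log injection can be done from Python. This is a minimal sketch, assuming the Logstash TCP input is published on `localhost:5000` as in the `docker ps` output above. `send_lines` and `send_file` are hypothetical helper names. The whole file is read into memory, so the sketch is meant for small test logs only.

```python
import socket
from pathlib import Path
from typing import Iterable


def send_lines(lines: Iterable[str], host: str = "localhost", port: int = 5000,
               timeout: float = 10.0) -> int:
    """Send newline-terminated text lines to a TCP listener; return the number of bytes sent."""
    payload = "".join(
        line if line.endswith("\n") else line + "\n" for line in lines
    ).encode("utf-8")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)
        # Signal end-of-data and close, roughly what 'nc -q0' does once stdin reaches EOF.
        sock.shutdown(socket.SHUT_WR)
    return len(payload)


def send_file(path: str, host: str = "localhost", port: int = 5000) -> int:
    """Rough counterpart of 'cat path | nc -q0 host port' (the file is read into memory)."""
    with Path(path).open(encoding="utf-8", errors="replace") as handle:
        return send_lines(handle, host, port)
```

For example, `send_file("/var/log/syslog")` mirrors `cat /var/log/syslog | nc -q0 localhost 5000`. Reading `/var/log/syslog` may require root privileges.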

Link

#asp.net#.net development#.net framework install linux ubuntu#.net framework linux debian#.net framework install linux fedora#c sharp#web application#web app#web application firewall#web developers#webdesign#web develpment#web#fiver#gigs

Text

Download Agent DVR v3.0.8.0

Agent DVR is a new advanced video surveillance platform for Windows, Mac OS, Linux, and Docker. Agent has a unified user interface that runs on all modern computers, mobile devices, and even Virtual Reality. Agent DVR supports remote access from anywhere with no port forwarding required.* Available languages include: English, Nederlands, Deutsch, Español, Français, Italiano, 中文, 繁体中文, Português, Русский, Čeština and Polski.

To install, run the setup utility. It checks the dependencies, downloads the application, and installs the service along with a tray helper app that discovers and monitors Agent DVR network connections.

Agent for Windows runs on Windows 7 SP1+. It requires .NET Framework v4.7+.

To run on Windows Server, you will need to enable Windows Media Foundation. For Windows Server 2012, install it from here.

If you need to install Agent on a PC without an internet connection you can download the application files manually here: 32 bit, 64 bit

Download and install the .NET Core runtime for Mac OS.

Install homebrew: https://brew.sh/

Open a terminal and run: brew install ffmpeg

Run dotnet Agent.dll in a terminal window in the Agent folder.

Open a web browser at http://localhost:8090 to start configuring Agent. If port 8090 isn't working check the terminal output for the port Agent is running on.

Agent for Linux has been tested on Ubuntu 18.04, 19.10, Debian 10 and Linux Mint 19.3. Other distributions may require additional dependencies. Use the docker option if you have problems installing.

Dependencies:

Agent currently uses the .NET Core 3.1 runtime, which can be installed by running: sudo apt-get update; sudo apt-get install -y apt-transport-https && sudo apt-get update && sudo apt-get install -y aspnetcore-runtime-3.1

More information (you may need to add package references): https://dotnet.microsoft.com/download/dotnet-core/3.1

You also need to install FFmpeg v4.x - one way of getting this via the terminal in Linux is:

sudo apt-get update

sudo add-apt-repository ppa:jonathonf/ffmpeg-4 OR sudo add-apt-repository ppa:savoury1/ffmpeg4 for Xenial and Focal

sudo apt-get update

sudo apt-get install -y ffmpeg

Important: Don't use the default ffmpeg package for your distro, as it doesn't include specific libraries that Agent needs.

Other libraries Agent may need depending on your Linux distro:

sudo apt-get install -y libtbb-dev libc6-dev gss-ntlmssp

For Debian 10 (and possibly other distros):

sudo wget http://security.ubuntu.com/ubuntu/pool/main/libj/libjpeg-turbo/libjpeg-turbo8_1.5.2-0ubuntu5.18.04.4_amd64.deb

sudo wget http://fr.archive.ubuntu.com/ubuntu/pool/main/libj/libjpeg8-empty/libjpeg8_8c-2ubuntu8_amd64.deb

sudo apt install multiarch-support

sudo dpkg -i libjpeg-turbo8_1.5.2-0ubuntu5.18.04.4_amd64.deb

sudo dpkg -i libjpeg8_8c-2ubuntu8_amd64.deb

For VLC support (optional):

sudo apt-get install -y libvlc-dev vlc libx11-dev

Download Agent:

Unzip the Agent DVR files, open a terminal and run: dotnet Agent.dll in the Agent folder.

Open a web browser at http://localhost:8090 to start configuring Agent. If port 8090 isn't working check the terminal output for the port Agent is running on.
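Before opening the browser, you can check whether Agent's web UI is actually listening. This is a minimal sketch in Python, assuming the default port 8090 mentioned above; `is_listening` is a hypothetical helper. A `True` result only means a TCP connection succeeded, not that the service behind the port is Agent.

```python
import socket


def is_listening(host: str = "localhost", port: int = 8090, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened, i.e. something is listening."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError, timeouts and DNS failures are all OSError subclasses.
        return False
```

If `is_listening()` returns `False`, check the terminal output for the port Agent is actually using and pass it as `port`. When Agent runs under Docker without host networking, pass the host's LAN IP address instead of `localhost`.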

A Docker image of Agent DVR will install Agent DVR on a virtual Linux image on any supported operating system. Please see the docker file for options.

Important: The Docker version of Agent includes a TURN server to work around port access limitations on Docker. If Docker isn't running in host mode (which is only available on Linux hosts), you will need to access the Agent UI at http://IPADDRESS:8090 instead of http://localhost:8090, where IPADDRESS is the LAN IP address of your host computer.

To install Agent under docker you can call (for example): docker run -it -p 8090:8090 -p 3478:3478/udp -p 50000-50010:50000-50010/udp --name agentdvr doitandbedone/ispyagentdvr:latest

To run Agent if it's already installed: docker start agentdvr

If you have downloaded Agent DVR to a VPS or a PC with no graphical UI you can setup Agent for remote access by calling 'Agent register' on Windows or 'dotnet Agent.dll register' on OSX or Linux. This will give you a claim code you can use to access Agent remotely.

Or download iSpy v7.2.1.0

iSpy is our original open-source video surveillance project for Windows. iSpy runs on Windows 7 SP1 and above and requires .NET Framework v4.5+. To run it on Windows Server 2012, you will need to install Media Foundation.

Click to download the Windows iSpy installer. We recommend Agent DVR for new installations.

*Remote access and some cloud-based features are a subscription service (pricing). This funds hosting, support and development.

While our software downloads, would you do us a quick favor and let other people know about it? It'd be greatly appreciated!

Text

Capicom Mac Os

(CAPICOM is a 32-bit only component that is available for use in the following operating systems: Windows Server 2008, Windows Vista, and Windows XP. Instead, use the .NET Framework to implement security features. For more information, see Alternatives to Using CAPICOM.)

This section includes scenarios that use CAPICOM procedures.

Note

Creating digital signatures and un-enveloping messages with CAPICOM is done using Public Key Infrastructure (PKI) cryptography and can only be done if the signer or user decrypting an enveloped message has access to a certificate with an available, associated private key. To decrypt an enveloped message, a certificate with access to the private key must be in the MY store.

Task-based scenarios discussions and examples have been separated into the following sections:

Developer:

Microsoft

Description:

CAPICOM Module

Text

PowerShell 7.0 Generally Available

What is PowerShell 7?

PowerShell 7 is the latest major update to PowerShell, a cross-platform (Windows, Linux, and macOS) automation tool and configuration framework optimized for dealing with structured data (e.g. JSON, CSV, XML, etc.), REST APIs, and object models. PowerShell includes a command-line shell, an object-oriented scripting language, and a set of tools for executing scripts/cmdlets and managing modules.

After three successful releases of PowerShell Core, we couldn’t be more excited about PowerShell 7, the next chapter of PowerShell’s ongoing development. With PowerShell 7, in addition to the usual slew of new cmdlets/APIs and bug fixes, we’re introducing a number of new features, including:

- Pipeline parallelization with ForEach-Object -Parallel
- New operators:
  - Ternary operator: a ? b : c
  - Pipeline chain operators: || and &&
  - Null coalescing operators: ?? and ??=
- A simplified and dynamic error view and Get-Error cmdlet for easier investigation of errors
- A compatibility layer that enables users to import modules in an implicit Windows PowerShell session
- Automatic new version notifications
- The ability to invoke DSC resources directly from PowerShell 7 (experimental)

The shift from PowerShell Core 6.x to 7.0 also marks our move from .NET Core 2.x to 3.1. .NET Core 3.1 brings back a host of .NET Framework APIs (especially on Windows), enabling significantly more backwards compatibility with existing Windows PowerShell modules. This includes many modules on Windows that require GUI functionality like Out-GridView and Show-Command, as well as many role management modules that ship as part of Windows.

Awesome! How do I get PowerShell 7?

First, check out our install docs for Windows, macOS, or Linux. Depending on the version of your OS and preferred package format, there may be multiple installation methods. If you already know what you’re doing, and you’re just looking for a binary package (whether it’s an MSI, ZIP, RPM, or something else), hop on over to our latest release tag on GitHub. Additionally, you may want to use one of our many Docker container images.

What operating systems does PowerShell 7 support?

PowerShell 7 supports the following operating systems on x64, including:

- Windows 7, 8.1, and 10
- Windows Server 2008 R2, 2012, 2012 R2, 2016, and 2019
- macOS 10.13+
- Red Hat Enterprise Linux (RHEL) / CentOS 7+
- Fedora 29+
- Debian 9+
- Ubuntu 16.04+
- openSUSE 15+
- Alpine Linux 3.8+
- ARM32 and ARM64 flavors of Debian and Ubuntu
- ARM64 Alpine Linux

Wait, what happened to PowerShell “Core”?

Much like .NET decided to do with .NET 5, we feel that PowerShell 7 marks the completion of our journey to maximize backwards compatibility with Windows PowerShell. To that end, we consider PowerShell 7 and beyond to be the one, true PowerShell going forward. PowerShell 7 will still be noted with the edition “Core” in order to differentiate 6.x/7.x from Windows PowerShell, but in general, you will see it denoted as “PowerShell 7” going forward.

Which Microsoft products already support PowerShell 7?

Any module that is already supported by PowerShell Core 6.x is also supported in PowerShell 7, including:

- Azure PowerShell (Az.*)
- Active Directory
- Many of the modules in Windows 10 and Windows Server (check with Get-Module -ListAvailable)

On Windows, we’ve also added a -UseWindowsPowerShell switch to Import-Module to ease the transition to PowerShell 7 for those still using incompatible modules. This switch creates a proxy module in PowerShell 7 that uses a local Windows PowerShell process to implicitly run any cmdlets contained in that module. For the modules that are still incompatible, we’re working with a number of teams to add native PowerShell 7 support, including Microsoft Graph, Office 365, and more. Read the full article

Text

.NET Core and Docker

If you've got Docker installed you can run a .NET Core sample quickly just like this. Try it:

docker run --rm microsoft/dotnet-samples

If your Docker for Windows is in "Windows Container mode" you can try .NET Framework (the full 4.x Windows Framework) like this:

docker run --rm microsoft/dotnet-framework-samples

I did a video last week with a write up showing how easy it is to get a containerized application into Azure AND cheaply with per-second billing.

Container images are easy to share via Docker Hub, the Docker Store, and private Docker registries, such as the Azure Container Registry. Also check out Visual Studio Tools for Docker. It all works very nicely together.

I like this quote from Richard Lander:

Imagine five or so years ago someone telling you in a job interview that they care so much about consistency that they always ship the operating system with their app. You probably wouldn’t have hired them. Yet, that’s exactly the model Docker uses!

And it's a good model! It gives you guaranteed consistency. "Containers include the application and all of its dependencies. The application executes the same code, regardless of computer, environment or cloud." It's also a good way to make sure your underlying .NET is up to date with security fixes:

Docker is a game changer for acquiring and using .NET updates. Think back to just a few years ago. You would download the latest .NET Framework as an MSI installer package on Windows and not need to download it again until we shipped the next version. Fast forward to today. We push updated container images to Docker Hub multiple times a month.

The .NET images get built using the official Docker images which is nice.

.NET images are built using official images. We build on top of Alpine, Debian, and Ubuntu official images for x64 and ARM. By using official images, we leave the cost and complexity of regularly updating operating system base images and packages like OpenSSL, for example, to the developers that are closest to those technologies. Instead, our build system is configured to automatically build, test and push .NET images whenever the official images that we use are updated. Using that approach, we’re able to offer .NET Core on multiple Linux distros at low cost and release updates to you within hours.

Here's where you can find .NET Docker Hub repos:

.NET Core repos:

microsoft/dotnet – includes .NET Core runtime, sdk, and ASP.NET Core images.

microsoft/aspnetcore – includes ASP.NET Core runtime images for .NET Core 2.0 and earlier versions. Use microsoft/dotnet for .NET Core 2.1 and later.

microsoft/aspnetcore-build – Includes ASP.NET Core SDK and node.js for .NET Core 2.0 and earlier versions. Use microsoft/dotnet for .NET Core 2.1 and later. See aspnet/announcements #298.

.NET Framework repos:

microsoft/dotnet-framework – includes .NET Framework runtime and sdk images.

microsoft/aspnet – includes ASP.NET runtime images, for ASP.NET Web Forms and MVC, configured for IIS.

microsoft/wcf – includes WCF runtime images configured for IIS.

microsoft/iis – includes IIS on top of the Windows Server Core base image. It works for, but is not optimized for, .NET Framework applications. The microsoft/aspnet and microsoft/wcf repos are recommended instead for running the respective application types.

There are a few kinds of images in the microsoft/dotnet repo:

sdk — .NET Core SDK images, which include the .NET Core CLI, the .NET Core runtime and ASP.NET Core.

aspnetcore-runtime — ASP.NET Core images, which include the .NET Core runtime and ASP.NET Core.

runtime — .NET Core runtime images, which include the .NET Core runtime.

runtime-deps — .NET Core runtime dependency images, which include only the dependencies of .NET Core and not .NET Core itself. This image is intended for self-contained applications and is only offered for Linux. For Windows, you can use the operating system base image directly for self-contained applications, since all .NET Core dependencies are satisfied by it.

For example, I'll use an SDK image to build my app, but I'll use aspnetcore-runtime to ship it. No need to ship the SDK with a running app. I want to keep my image sizes as small as possible!

For me, I even made a little PowerShell script (runs on Windows or Linux) that builds and tests my Podcast site (the image tagged podcast:test) within docker. Note the volume mapping? It stores the Test Results outside the container so I can look at them later if I need to.

#!/usr/local/bin/powershell
docker build --pull --target testrunner -t podcast:test .
docker run --rm -v c:\github\hanselminutes-core\TestResults:/app/hanselminutes.core.tests/TestResults podcast:test

Pretty slick.

Results File: /app/hanselminutes.core.tests/TestResults/_898a406a7ad1_2018-06-28_22_05_04.trx

Total tests: 22. Passed: 22. Failed: 0. Skipped: 0.
Test execution time: 8.9496 Seconds

Go read up on how the .NET Core images are built, managed, and maintained. It made it easy for me to get my podcast site - once dockerized - running on .NET Core on a Raspberry Pi (ARM32).

© 2018 Scott Hanselman. All rights reserved.

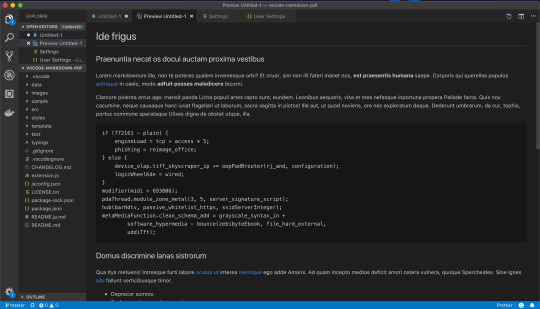

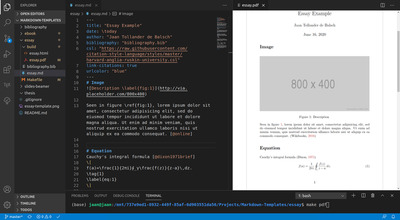

Versions

| Version | Release date |
|---|---|
| 0.10.1-extensionbuilders | 2015-11-13 |
| 0.10.1 | 2015-11-17 |
| 0.10.2 | 2015-11-24 |
| 0.10.3 | 2015-11-26 |
| 0.10.5 | 2015-12-17 |
| 0.10.6 | 2015-12-19 |
| 0.10.7-insiders | 2016-01-29 |
| 0.10.8 | 2016-02-05 |
| 0.10.8-insiders | 2016-02-08 |
| 0.10.9 | 2016-02-17 |
| 0.10.10-insiders | 2016-02-26 |
| 0.10.10 | 2016-03-11 |
| 0.10.11 | 2016-03-11 |
| 0.10.11-insiders | 2016-03-11 |
| 0.10.12-insiders | 2016-03-20 |
| 0.10.13-insiders | 2016-03-29 |
| 0.10.14-insiders | 2016-04-04 |
| 0.10.15-insiders | 2016-04-11 |
| 1.0.0 | 2016-04-14 |
| 1.1.0-insider | 2016-05-02 |
| 1.1.0 | 2016-05-09 |
| 1.1.1 | 2016-05-16 |
| 1.2.0 | 2016-06-01 |
| 1.2.1 | 2016-06-14 |
| 1.3.0 | 2016-07-07 |
| 1.3.1 | 2016-07-12 |
| 1.4.0 | 2016-08-03 |
| translation/20160817.01 | 2016-08-17 |
| translation/20160826.01 | 2016-08-26 |
| translation/20160902.01 | 2016-09-02 |
| 1.5.0 | 2016-09-08 |
| 1.5.1 | 2016-09-08 |
| 1.5.2 | 2016-09-14 |
| 1.6.0 | 2016-10-10 |
| 1.6.1 | 2016-10-13 |
| translation/20161014.01 | 2016-10-14 |
| translation/20161028.01 | 2016-10-28 |
| 1.7.0 | 2016-11-01 |
| 1.7.1 | 2016-11-03 |
| translation/20161111.01 | 2016-11-12 |
| translation/20161118.01 | 2016-11-19 |
| 1.7.2 | 2016-11-22 |
| translation/20161125.01 | 2016-11-26 |
| translation/20161209.01 | 2016-12-09 |
| 1.8.0 | 2016-12-14 |
| 1.8.1 | 2016-12-20 |
| translation/20170123.01 | 2017-01-23 |
| translation/20172701.01 | 2017-01-27 |
| 1.9.0 | 2017-02-02 |
| translation/20170127.01 | 2017-02-03 |
| translation/20170203.01 | 2017-02-03 |
| 1.9.1 | 2017-02-09 |
| translation/20170217.01 | 2017-02-18 |
| translation/20170227.01 | 2017-02-27 |
| 1.10.0 | 2017-03-01 |
| 1.10.1 | 2017-03-02 |
| 1.10.2 | 2017-03-08 |
| translation/20170311.01 | 2017-03-11 |
| translation/20170317.01 | 2017-03-18 |
| translation/20170324.01 | 2017-03-25 |
| translation/20170331.01 | 2017-03-31 |
| 1.11.0 | 2017-04-06 |
| 1.11.1 | 2017-04-06 |
| translation/20170407.01 | 2017-04-07 |
| 1.11.2 | 2017-04-13 |

First program (C++): HelloWorld.cpp

This example introduces you to the basic functionality of VS Code by demonstrating how to write a 'hello world' program in C++. Before continuing, make sure you have the 'ms-vscode.cpptools' extension installed.

Initialize the Project

The first step is to create a new project. To do this, load the VS Code program. You should be greeted with the typical welcome screen:

To create the first program, select 'Start' > 'New file' from the welcome screen. This will open a new file window. Go ahead and save the file ('File' > 'Save') into a new directory. You can name the directory anything you want, but this example will call the directory 'VSC_HelloWorld' and the file 'HelloWorld.cpp'.

Now write the actual program (feel free to copy the below text):

Great! You'll also notice that because you've installed the 'ms-vscode.cpptools' extension you also have pretty code-highlighting. Now let's move on to running the code.

Running the Script (basic)

We can run 'HelloWorld.cpp' from within VS Code itself. The simplest way to run such a program is to open the integrated terminal ('View' > 'Integrated Terminal'). This opens a terminal window in the lower portion of the view. From inside this terminal we can navigate to our created directory, build, and execute the script we've written. Here we've used the following commands to compile and run the code:

Notice that we get the expected Hello World! output.

Running the Script (slightly more advanced)

Great, but we can use VS Code directly to build and execute the code as well. For this, we first need to turn the 'VSC_HelloWorld' directory into a workspace. This can be done by:

Opening the Explorer menu (the topmost item on the vertical menu on the far left)

Selecting the Open Folder button

Select the 'VSC_HelloWorld' directory we've been working in. Note: If you open a directory within VS Code (using 'File' > 'Open...' for example) you will already be in a workspace.

The Explorer menu now displays the contents of the directory.

Next we want to define the actual tasks which we want VS Code to run. To do this, select 'Tasks' > 'Configure Default Build Task'. In the drop down menu, select 'Other'. This opens a new file called 'tasks.json' which contains some default values for a task. We need to change these values. Update this file to contain the following and save it:

Note that the above also creates a hidden .vscode directory within our working directory. This is where VS Code puts configuration files, including any project-specific settings files. You can find out more about Tasks in the VS Code documentation.

In the above example, ${workspaceRoot} references the top-level directory of our workspace, which is our 'VSC_HelloWorld' directory. Now, to build the project from within VS Code, select 'Tasks' > 'Run Build Task...' and choose our 'build' task and 'Continue without scanning the task output' from the drop-down menus that appear. Then run the executable using 'Tasks' > 'Run Task...' and selecting the 'run' task we created. If you have the integrated terminal open, you'll see the 'Hello World!' text printed there.
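The tasks.json listing itself did not survive here. The script below is a hypothetical reconstruction that writes a `.vscode/tasks.json` with a `build` task and a `run` task. It assumes version 2.0.0 of the tasks schema, g++ on PATH, and a Linux or macOS executable name (on Windows the output would be `HelloWorld.exe`). Newer VS Code releases prefer `${workspaceFolder}` over the older `${workspaceRoot}`.

```python
import json
from pathlib import Path


def make_tasks(source: str = "HelloWorld.cpp", exe: str = "HelloWorld") -> dict:
    """Build a tasks.json structure with a default build task and a run task."""
    return {
        "version": "2.0.0",
        "tasks": [
            {
                "label": "build",
                "type": "shell",
                "command": "g++",
                "args": ["-g", source, "-o", exe],
                "options": {"cwd": "${workspaceRoot}"},
                # Makes this the task used by 'Run Build Task...'
                "group": {"kind": "build", "isDefault": True},
            },
            {
                "label": "run",
                "type": "shell",
                "command": "${workspaceRoot}/" + exe,
            },
        ],
    }


def write_tasks(workspace: Path) -> Path:
    """Write .vscode/tasks.json inside the given workspace directory."""
    vscode_dir = workspace / ".vscode"
    vscode_dir.mkdir(parents=True, exist_ok=True)
    path = vscode_dir / "tasks.json"
    path.write_text(json.dumps(make_tasks(), indent=4) + "\n")
    return path
```

Running `write_tasks(Path("VSC_HelloWorld"))` produces a file you can open and edit in VS Code like any hand-written tasks.json.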

It is possible that the terminal may close before you can view the output. If this happens, you can insert a line of code such as `int i; std::cin >> i;` just before the return statement at the end of the main() function. You can then end the program by typing any number and pressing <Enter>.

And that's it! You can now start writing and running your C++ scripts from within VS Code.

First Steps (C++): HelloWorld.cpp

The first program one typically writes in any language is the 'hello world' script. This example demonstrates how to write this program and debug it using Visual Studio Code (I'll refer to Visual Studio Code as VS Code from now on).

Create The Project

Step 1 will be to create a new project. This can be done in a number of ways. The first way is directly from the user interface.

Open VS Code program. You will be greeted with the standard welcome screen (note the images are taken while working on a Mac, but they should be similar to your installation):

From the Start menu, select New file. This will open a new editing window where we can begin constructing our script. Go ahead and save this file (you can use the menu File > Save to do this). For this example we will call the file HelloWorld.cpp and place it in a new directory which we will call VSC_HelloWorld/.

Write the program. This should be fairly straightforward, but feel free to copy the following into the file:

Run the Code

Next we want to run the script and check its output. There are a number of ways to do this. The simplest is to open a terminal and navigate to the directory that we created. You can now compile the program with g++ (GCC's C++ compiler) and run it by typing:

Yay, the program worked! But this isn't really what we want. It would be much better if we could run the program from within VS Code itself. We're in luck: VS Code has a built-in terminal, which we can access via the menu 'View' > 'Integrated Terminal'. This opens a terminal in the bottom half of the window, from which you can navigate to the VSC_HelloWorld directory and run the above commands.

Typically we do this by executing a Run Task. From the menu select 'Tasks' > 'Run Task...'. You'll notice you get a small popup near the top of the window with an error message (something along the lines of

Installation or Setup

On Windows

Download the Visual Studio Code installer for Windows.

Once it is downloaded, run the installer (VSCodeSetup-version.exe). This will only take a minute.

By default, VS Code is installed under C:\Program Files (x86)\Microsoft VS Code for a 64-bit machine.

Note: .NET Framework 4.5.2 is required for VS Code. If you are using Windows 7, please make sure .NET Framework 4.5.2 is installed.

Tip: Setup will optionally add Visual Studio Code to your %PATH%, so from the console you can type 'code .' to open VS Code on that folder. You will need to restart your console after the installation for the change to the %PATH% environment variable to take effect.

On Mac

Download Visual Studio Code for Mac.

Double-click on the downloaded archive to expand the contents.

Drag Visual Studio Code.app to the Applications folder, making it available in the Launchpad.

Add VS Code to your Dock by right-clicking on the icon and choosing Options, Keep in Dock.

You can also run VS Code from the terminal by typing 'code' after adding it to the path:

Launch VS Code.

Open the Command Palette (Cmd+Shift+P) and type 'shell command' to find the Shell Command: Install 'code' command in PATH command.

Restart the terminal for the new $PATH value to take effect. You'll be able to type 'code .' in any folder to start editing files in that folder.

Note: If you still have the old code alias in your .bash_profile (or equivalent) from an early VS Code version, remove it and replace it by executing the Shell Command: Install 'code' command in PATH command.

On Linux

Debian and Ubuntu based distributions

The easiest way to install for Debian/Ubuntu based distributions is to download and install the .deb package (64-bit) either through the graphical software center if it's available or through the command line with:

Installing the .deb package will automatically install the apt repository and signing key to enable auto-updating using the regular system mechanism. Note that 32-bit and .tar.gz binaries are also available on the download page.

The repository and key can also be installed manually with the following script:

Then update the package cache and install the package using:

RHEL, Fedora and CentOS based distributions

We currently ship the stable 64-bit VS Code in a yum repository; the following script will install the key and repository:

Then update the package cache and install the package using dnf (Fedora 22 and above):

Or on older versions using yum:

openSUSE and SLE based distributions

The yum repository above also works for openSUSE and SLE based systems; the following script will install the key and repository:

Then update the package cache and install the package using:

AUR package for Arch Linux

There is a community maintained Arch User Repository (AUR) package for VS Code.

Installing .rpm package manually

The .rpm package (64-bit) can also be manually downloaded and installed; however, auto-updating won't work unless the repository above is installed. Once downloaded, it can be installed using your package manager, for example with dnf:

Note that 32-bit and .tar.gz binaries are also available on the download page.

Top 20 hacking tools for Windows, Linux and Mac OS X [2020 Edition]

New Post has been published on https://techneptune.com/windows/top-20-hacking-tools-for-windows-linux-and-mac-os-x-2020-edition/

Hacking is of two types: ethical and unethical. Unethical hackers use hacking techniques to make money quickly, but many users want to learn how to hack the right way. Ethical hacking covers areas such as security research and WiFi protocol testing.

So if you want to learn ethical hacking, you will need some tools. These tools simplify many complicated tasks in the field of security. Here we have compiled a list of the best hacking tools, with their descriptions and features.

Top 20 hacking tools for Windows, Linux and Mac OS X

In this article, we share a list of the best hacking tools for Windows, Linux and Mac OS X. Most of the tools listed are available for free. We have written this article for educational purposes; please do not use these tools for malicious purposes.

1. Metasploit

Instead of calling Metasploit a collection of exploit tools, I’ll call it an infrastructure that you can use to create your own custom tools. This free tool is one of the most widely used cybersecurity tools for locating vulnerabilities on different platforms. Metasploit is supported by more than 200,000 users and contributors who help you gather information and discover the weaknesses of your system.

2. Nmap

Nmap is available for all major platforms, including Windows, Linux and OS X. You have probably all heard of it: Nmap (Network Mapper) is a free, open-source utility for network scanning and security auditing. It was designed to scan large networks, but works well against single hosts. It can be used to discover computers and services on a computer network, thereby creating a “map” of the network.

3. Acunetix WVS

It is available for Windows XP and higher. Acunetix is a web vulnerability scanner (WVS) that scans a website and discovers flaws that could prove fatal. This multithreaded tool crawls a website and discovers cross-site scripting (XSS), SQL injection, and other vulnerabilities. This quick and easy-to-use tool also scans WordPress websites for over 1,200 WordPress vulnerabilities.

4. Wireshark

This free and open-source tool was originally called Ethereal. Wireshark also comes in a command-line version called TShark, and it runs easily on Linux, Windows, and OS X. Wireshark is a GTK+-based network protocol analyzer, or sniffer, that lets you capture and interactively browse the contents of network frames. The goal of the project is to create a commercial-quality analyzer for Unix and to provide Wireshark features that are missing from closed-source sniffers.

5. oclHashcat

This useful hacking tool can be downloaded in different versions for Linux, OS X and Windows. If password cracking is something you do on a daily basis, you may be familiar with the free Hashcat password cracking tool. While Hashcat is a CPU-based password cracker, oclHashcat is its advanced version that harnesses the power of your GPU. You can also use the tool as a WiFi password cracker.

oclHashcat calls itself the world’s fastest password cracking tool, with the world’s first and only GPGPU-based engine. To use the tool, NVIDIA users require ForceWare 346.59 or later, and AMD users require Catalyst 15.7 or later.

6. Nessus Vulnerability Scanner

It is compatible with a variety of platforms, including Windows 7 and 8, Mac OS X, and popular Linux distributions such as Debian, Ubuntu, and Kali Linux. This free tool works with a client-server framework. Developed by Tenable Network Security, it is one of the most popular vulnerability scanners available. Nessus comes in different editions for different types of users: Nessus Home, Nessus Professional, Nessus Manager, and Nessus Cloud.

7. Maltego

This tool is available for Windows, Mac and Linux. Maltego is an open-source intelligence (OSINT) and forensics platform that offers rigorous data mining and information gathering to paint a picture of the cyber threats around you. Maltego stands out for showing the complexity and severity of points of failure in your infrastructure and the surrounding environment.

8. Social Engineer Toolkit

In addition to Linux, the Social-Engineer Toolkit is partially compatible with Mac OS X and Windows. Featured in Mr. Robot, TrustedSec’s Social-Engineer Toolkit is an advanced framework for simulating multiple types of social engineering attacks, such as credential harvesting, phishing attacks, and more.

9. Nessus Remote Security Scanner

It recently became closed source, but is still essentially free. It works with a client-server framework. Nessus is the most popular remote vulnerability scanner, used in over 75,000 organizations worldwide. Many of the world’s largest organizations achieve significant cost savings by using Nessus to audit business-critical devices and applications.

10. Kismet

It is an 802.11 layer-2 wireless network detector, sniffer and intrusion detection system. Kismet works with any wireless card that supports raw monitoring (rfmon) mode and can sniff 802.11b, 802.11a, and 802.11g traffic. It is a good wireless tool as long as your card supports rfmon.

11. John The Ripper

It is free and open-source software, distributed mainly in the form of source code. It is one of the most popular password cracking and testing programs, as it combines multiple password crackers in one package, automatically detects password hash types, and includes a customizable cracker.

12. Unicornscan

Well, Unicornscan is an attempt at a user-land distributed TCP/IP stack for information gathering and mapping. Its objective is to provide a researcher with a superior interface for introducing a stimulus into, and measuring a response from, a TCP/IP-enabled device or network. Its features include asynchronous stateless TCP scanning with all variations of TCP flags, asynchronous stateless TCP banner grabbing, and active/passive remote identification of operating systems, applications and components through response analysis.

13. Netsparker

It is an easy-to-use web application security scanner that uses advanced evidence-based vulnerability scanning technology and has built-in penetration testing and reporting tools. Netsparker automatically exploits the identified vulnerabilities in a secure, read-only manner and also produces a proof of exploitation.

14. Burp Suite

Well, Burp Suite is an integrated platform for testing web application security. It is also one of the best hacker programs available on the internet right now. Its various tools work perfectly to support the entire testing process, from initial mapping and analysis of an application’s attack surface to finding and exploiting security vulnerabilities.

15. Superscan 4

Well, this is another popular hacking program for PC, used to scan ports on Windows. It is a free, connection-based port scanning tool designed to detect open TCP and UDP ports on a target computer. In short, SuperScan is a powerful TCP port scanner, pinger and resolver.
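To make "connection-based port scanning" concrete, here is a minimal TCP connect scan in Python. It attempts a full TCP handshake with each port and reports the ones that accept. This is an illustrative sketch, not SuperScan's implementation. It covers TCP only, since UDP scanning works differently, and it is meant to be pointed at a host you administer, such as 127.0.0.1.

```python
import socket
from typing import Iterable, List


def connect_scan(host: str, ports: Iterable[int], timeout: float = 0.5) -> List[int]:
    """Return the ports on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 when the handshake succeeds, or an errno
            # (for example ECONNREFUSED for a closed port) instead of raising.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

A full connect scan is the simplest technique because it needs no raw-socket privileges. The trade-off is that every probe completes a real connection, which the target can easily log.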

16. Aircrack

It is the best WiFi hacking tool for Windows 10, consisting of a detector, a packet sniffer, a WEP and WPA/WPA2-PSK cracker, and a scan tool. In Aircrack you will find many tools for tasks such as monitoring, attacking, testing, and cracking. Without a doubt, it is one of the best networking tools you can use, and one of the best WiFi hacking tools.

17. w3af

If you are looking for a free and open-source web application security scanner, then w3af is the one for you. Hackers and security researchers use the tool widely. w3af, the Web Application Attack and Audit Framework, is used to obtain security vulnerability information that can then be used in penetration testing jobs.

18. OWASP Zed

Well, the Zed Attack Proxy (ZAP) is one of the best and most popular OWASP projects, and it has reached new heights. OWASP ZAP is a penetration testing tool that is very efficient and easy to use. It provides many tools and resources that enable security researchers to find security holes and vulnerabilities.

19. Nikto Website Vulnerability Scanner

It is widely used by pentesters. Nikto is an open source web server scanner that is capable of scanning and detecting vulnerabilities on any web server. The tool also searches for outdated versions of more than 1,300 servers. Not only that, but the Nikto website vulnerability scanner also looks for server configuration issues.

20. SuperScan

It is one of the best free connection-based port scanning programs available for the Windows operating system. The tool is capable of detecting the TCP and UDP ports that are open on the target computer. In addition, SuperScan can be used to run basic queries such as whois, traceroute, ping, etc. SuperScan is therefore another great hacking tool you can consider.

Can I hack online accounts with these tools?

These tools were intended for security purposes and can be used to find loopholes. We do not promote hacking accounts, and doing so can lead to legal problems.

Are these tools safe?

If you download the tools from trusted sources, you should be on the safe side.

Can I scan my WiFi network with these tools?

To scan a WiFi network, you need a WiFi scanner. A few of the WiFi scanners listed in this article will give you complete details about the network.

So, those are the best ethical hacking tools for PC. If you liked this post, don't forget to share it with your friends. If you have any problems, feel free to talk to us in the comments section below.

Linux Network Observability: Building Blocks

As developers, operators and devops people, we are all hungry for visibility and efficiency in our workflows. As Linux reigns over the “Open-Distributed-Virtualized-Software-Driven-Cloud-Era”, understanding what is available within Linux in terms of observability is essential to our jobs and careers.

Linux Community and Ecosystem around Observability

More often than not and depending on the size of the company it’s hard to justify the cost of development of debug and tracing tools unless it’s for a product you are selling. Like any other Linux subsystem, the tracing and observability infrastructure and ecosystem continues to grow and advance due to mass innovation and the sheer advantage of distributed accelerated development. Naturally, bringing native Linux networking to the open networking world makes these technologies readily available for networking.

There are many books and other resources available on Linux system observability today…so this may seem no different. This is a starter blog discussing some of the building blocks that Linux provides for tracing and observability with a focus on networking. This blog is not meant to be an in-depth tutorial on observability infrastructure but a summary of all the subsystems that are available today and constantly being enhanced by the Linux networking community for networking. Cumulus Networks products are built on these technologies and tools, but are also available for others to build on top of Cumulus Linux.

1. Netlink for networking events

Netlink is the protocol behind the core API for Linux networking. Apart from it being an API to configure Linux networking, Netlink’s most important feature is its asynchronous channel for networking events.

And here’s where it can be used for network observability: every networking subsystem that supports configuration via netlink (which they all do now, including the most recent ethtool in the latest kernels) also exposes to userspace a way to listen to that subsystem’s networking events. These are called netlink groups (you will see an example here).

You can use this asynchronous event bus to monitor link events, control-plane events, MAC learning events, and any and every networking event you want to chase!

The iproute2 project, maintained by kernel developers, is the best netlink-based toolset for network configuration and visibility (it is packaged by all Linux distributions). There are also many open-source Netlink libraries for using Netlink programmatically in your network applications (e.g., libnl and python nlmanager, to name a few).

Though you can build your own observability tools using Netlink, many are already available on any Linux system (and of course on Cumulus Linux): try `ip monitor` and `bridge monitor`, or look for monitor options in any of the tools in the iproute2 networking package.
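To show what subscribing to a netlink group involves, here is a minimal, Linux-only Python sketch that joins the rtnetlink link-events group, which is the same kind of subscription `ip monitor link` makes. The constants are copied from `<linux/rtnetlink.h>`. The parser handles only the fixed `nlmsghdr` and `struct ifinfomsg` headers and skips the attributes that follow them, such as the interface name.

```python
import socket
import struct
from typing import Iterator, Tuple

# struct nlmsghdr: nlmsg_len, nlmsg_type, nlmsg_flags, nlmsg_seq, nlmsg_pid
NLMSG_HDR = struct.Struct("=IHHII")
# struct ifinfomsg: family, pad, type, index, flags, change
IFINFOMSG = struct.Struct("=BBHiII")

RTMGRP_LINK = 0x1                # multicast group for link events
RTM_NEWLINK, RTM_DELLINK = 16, 17
NLMSG_DONE = 3


def nlmsg_align(length: int) -> int:
    """Netlink messages are padded to 4-byte boundaries."""
    return (length + 3) & ~3


def parse_nlmsgs(buf: bytes) -> Iterator[Tuple[int, int, bytes]]:
    """Yield (msg_type, flags, payload) for each message in one datagram."""
    offset = 0
    while offset + NLMSG_HDR.size <= len(buf):
        length, msg_type, flags, _seq, _pid = NLMSG_HDR.unpack_from(buf, offset)
        if length < NLMSG_HDR.size:
            break  # malformed header: stop instead of looping forever
        yield msg_type, flags, buf[offset + NLMSG_HDR.size:offset + length]
        offset += nlmsg_align(length)


def monitor_links() -> None:
    """Print link add/change/delete events until interrupted (Linux only)."""
    sock = socket.socket(socket.AF_NETLINK, socket.SOCK_RAW,
                         socket.NETLINK_ROUTE)
    sock.bind((0, RTMGRP_LINK))  # (port id 0 = kernel assigns, group mask)
    while True:
        for msg_type, _flags, payload in parse_nlmsgs(sock.recv(65536)):
            if msg_type in (RTM_NEWLINK, RTM_DELLINK) and len(payload) >= IFINFOMSG.size:
                _fam, _pad, _type, ifindex, ifflags, _chg = IFINFOMSG.unpack_from(payload)
                kind = "NEWLINK" if msg_type == RTM_NEWLINK else "DELLINK"
                print(f"{kind} ifindex={ifindex} flags={ifflags:#x}")
```

Calling `monitor_links()` and then toggling an interface with `ip link set dev <name> down` should produce NEWLINK lines. Libraries such as libnl exist precisely so you don't have to decode the attribute payloads by hand.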

Apart from the monitor commands, iproute2 also provides commands to lookup a networking table (and these are very handy for tracing and implementing tracing tools):

For example: `ip route get`, `ip route get fibmatch`, `bridge fdb get`, and `ip neigh get`.
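When these lookups feed a tracing script, it helps to ask iproute2 for JSON output rather than scraping text. The sketch below assumes an iproute2 recent enough to support `-json` for `ip route`. The helper names are hypothetical. It keeps only a few common fields (`dst`, `gateway`, `dev`, `prefsrc`) that appear when the route has them.

```python
import json
import subprocess
from typing import Dict, List


def route_get_argv(dest: str, fibmatch: bool = False) -> List[str]:
    """Build the `ip route get` command line for a destination address."""
    argv = ["ip", "-json", "route", "get"]
    if fibmatch:
        argv.append("fibmatch")  # report the FIB entry that matched
    argv.append(dest)
    return argv


def parse_route_json(text: str) -> List[Dict[str, object]]:
    """Keep the fields most useful when tracing a forwarding decision."""
    wanted = ("dst", "gateway", "dev", "prefsrc")
    return [{k: r[k] for k in wanted if k in r} for r in json.loads(text)]


def route_get(dest: str, fibmatch: bool = False) -> List[Dict[str, object]]:
    out = subprocess.run(route_get_argv(dest, fibmatch), check=True,
                         capture_output=True, text=True).stdout
    return parse_route_json(out)
```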

2. Linux perf subsystem

Perf is a Linux kernel based subsystem that provides a framework for all things performance analysis. Apart from hardware level performance counters it covers software tracepoints. These software tracepoints are the ones interesting for network event observability—of course you will use perf for packet performance, system performance, debugging your network protocol performance and other performance problems you will see on your networking box.

Coming back to tracepoints for a bit, tracepoints are static tracing hooks in kernel or user-space. Not only does perf allow you to trace these statically placed kernel tracepoints, it also allows you to dynamically add probes into kernel and user-space functions to trace and observe data as it flows through the code. This is my go-to tool for debugging a networking event.

Kernel networking code has many tracepoints for you to use. Use `perf list` to list all the events and grep for strings like ‘neigh’, ‘fib’, ‘skb’, ‘net’ for networking tracepoints. We have added a few to trace E-VPN control events.
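The "list and grep" step can also be scripted. This sketch filters the output of `perf list tracepoint` by the networking strings mentioned above. It assumes the usual `subsystem:event   [Tracepoint event]` line layout. The helper name is illustrative.

```python
import subprocess
from typing import Iterable, List

NET_KEYWORDS = ("neigh", "fib", "skb", "net")


def network_tracepoints(perf_list_output: str,
                        keywords: Iterable[str] = NET_KEYWORDS) -> List[str]:
    """Return tracepoint names (subsystem:event) containing any keyword."""
    keywords = tuple(keywords)
    events = []
    for line in perf_list_output.splitlines():
        if "[Tracepoint event]" not in line:
            continue  # skip hardware/software events and headers
        name = line.split()[0]  # e.g. "neigh:neigh_update"
        if any(k in name for k in keywords):
            events.append(name)
    return events


def list_network_tracepoints() -> List[str]:
    out = subprocess.run(["perf", "list", "tracepoint"], check=True,
                         capture_output=True, text=True).stdout
    return network_tracepoints(out)
```

The resulting names can be passed straight to `perf record -e`.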

For example, this is my go-to perf record line for E-VPN. It gives you visibility into the life cycle of neighbour, bridge, and VXLAN FDB entries:

    perf record -e 'neigh:*' -e 'bridge:*' -e 'vxlan:*'

And you can use this one to trace FIB lookups in the kernel:

    perf record -e 'fib:*' -e 'fib6:*'

Perf trace is also a good tool for tracing a process or command execution. It is similar to everyone’s favorite, strace. Tools like strace and perf trace are useful when something is failing and you want to know which syscall is failing.

Note that perf also provides a python interface which greatly helps in extending its capabilities and adoption.

3. Function Tracer or ftrace

Though this is called a function tracer, it is a framework for several other kinds of tracing. As the ftrace documentation suggests, one of the most common uses of ftrace is event tracing. Similar to perf, it allows you to dynamically trace kernel functions, and it is a very handy tool if you know your way around the networking stack (grepping for common Linux networking strings like net, bonding, or bridge is good enough to find your way around using ftrace). There are many tutorials available on ftrace, depending on how deep you want to go. There is also trace-cmd, a command-line front end to ftrace.
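That grep-then-trace workflow maps onto a few tracefs control files. This is a hypothetical helper sketch. The file names (`available_filter_functions`, `set_ftrace_filter`, `current_tracer`, `tracing_on`) are standard ftrace files, and the tracefs location is passed in, usually `/sys/kernel/tracing` (older systems use `/sys/kernel/debug/tracing`). Writing to the real files requires root. Keep the filter list short, because very long writes to `set_ftrace_filter` can fail.

```python
from pathlib import Path
from typing import Iterable, List


def matching_functions(tracefs: Path, keywords: Iterable[str]) -> List[str]:
    """Grep available_filter_functions for names containing any keyword."""
    keywords = tuple(keywords)
    names = []
    with open(tracefs / "available_filter_functions") as fh:
        for line in fh:
            parts = line.split()  # "name" or "name [module]"
            if parts and any(k in parts[0] for k in keywords):
                names.append(parts[0])
    return names


def enable_function_tracer(tracefs: Path, functions: Iterable[str]) -> None:
    """Trace only the given functions with the function tracer."""
    # Opening in write mode replaces the current filter with this list.
    (tracefs / "set_ftrace_filter").write_text(" ".join(functions) + "\n")
    (tracefs / "current_tracer").write_text("function\n")
    (tracefs / "tracing_on").write_text("1\n")
    # Read the results from tracefs / "trace" or stream tracefs / "trace_pipe".
```

Kernel bridge functions mostly start with `br_`, so a keyword like that is a more precise filter than the bare word "bridge".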

4. Linux auditing subsystem

The Linux kernel auditing subsystem exists to track critical system events. These are mostly security-related, but the subsystem can also serve as a good way to observe and monitor your Linux system. For networking systems, it allows you to trace network connections, changes in network configuration, and syscalls.

auditd, a userspace daemon, works with the kernel for policy management, filtering and streaming records. There are many blogs and documents that cover the details of how to use the audit system. It comes with very handy tools such as autrace and ausearch to trace and search for audit events; autrace is similar to strace. The audit subsystem also has an iptables target (AUDIT) that allows you to record network packets as audit records.
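If you post-process audit logs yourself rather than with ausearch, each raw record is a `type=... msg=audit(TIMESTAMP:SERIAL):` header followed by `key=value` fields. This is a minimal parser sketch for raw records as they appear in `/var/log/audit/audit.log`, not for `ausearch -i` output. It uses `shlex` to handle quoted values. Records with unbalanced quotes would need a more careful tokenizer.

```python
import re
import shlex
from typing import Dict, Tuple

HEADER_RE = re.compile(r"^type=(\S+) msg=audit\((\d+\.\d+):(\d+)\):\s*")


def parse_audit_record(line: str) -> Tuple[str, float, int, Dict[str, str]]:
    """Split one raw audit record into (type, timestamp, serial, fields)."""
    m = HEADER_RE.match(line)
    if m is None:
        raise ValueError("not an audit record")
    rtype, ts, serial = m.group(1), float(m.group(2)), int(m.group(3))
    fields = {}
    for token in shlex.split(line[m.end():]):  # strips the value quotes
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return rtype, ts, serial, fields
```

Records that share a serial number belong to the same event (for example, a SYSCALL record and its accompanying SOCKADDR record), so grouping by `serial` is the usual next step.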

5. Systemd

Systemd might seem odd here but I rely on systemd tools a lot to chase my networking problems. Journalctl is your friend when you want to look for critical errors from your networking protocol daemons/services.

For example, check whether your networking service has already given you hints about something failing: `journalctl -u networking`
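On the command line, `journalctl -u networking -p err` already filters by priority. For a monitoring script, `journalctl -o json` is easier to consume. Each output line is one JSON object, and `PRIORITY` is a string holding the syslog level (0 = emerg through 7 = debug, with 3 = err). The sketch below uses hypothetical helper names and skips entries whose `MESSAGE` is not a plain string.

```python
import json
import subprocess
from typing import Iterable, List

ERR = 3  # syslog level: 0=emerg, 1=alert, 2=crit, 3=err, ... 7=debug


def errors_from_journal(lines: Iterable[str], max_priority: int = ERR) -> List[str]:
    """Return MESSAGE fields at or above the given severity."""
    messages = []
    for line in lines:
        if not line.strip():
            continue
        entry = json.loads(line)
        prio = int(entry.get("PRIORITY", 6))  # treat missing as info
        msg = entry.get("MESSAGE")
        if prio <= max_priority and isinstance(msg, str):
            messages.append(msg)
    return messages


def unit_errors(unit: str = "networking") -> List[str]:
    out = subprocess.run(["journalctl", "-u", unit, "-o", "json", "--no-pager"],
                         check=True, capture_output=True, text=True).stdout
    return errors_from_journal(out.splitlines())
```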

Systemd timers can help you set up periodic monitoring of your networking state, and tools like systemd-analyze can provide visibility into control-plane protocol service dependencies at boot and shutdown for convergence times.

6. eBPF

Though eBPF is the most powerful of all the above, it’s listed last here only because you can use eBPF with all of the observability tools and subsystems above. Just google the name of your favorite Linux observability technology or tool together with eBPF and you will find an answer :).

Linux kernel eBPF has brought programmability to all Linux kernel subsystems, and tracing and observability is no exception. There are several blogs, books and other resources available to get started with eBPF. The Linux eBPF maintainers have done an awesome job bringing eBPF mainstream (with Documentation, libraries, tutorials, helpers, examples etc).

There are many interesting, readily available eBPF-based observability tools here. bpftrace is everyone’s favorite.

For all the networking events described in the previous sections on tracing and observability, eBPF goes one step further by providing the ability to dynamically insert code at these trace events.

eBPF is also used for container network observability and policy enforcement (Cilium project is a great example).

A reminder in closing

All these tools are available at your disposal on Cumulus Linux by default or at Cumulus and Debian repos!

Linux Network Observability: Building Blocks published first on https://wdmsh.tumblr.com/
