#.net framework install linux debian

Entity Framework introduction in .NET

Entity Framework is an ORM (Object Relational Mapping) framework for .NET. It gives developers an automatic mechanism for storing and retrieving database data through .NET objects instead of hand-written data-access code.

The Elastic stack (ELK) is made up of three open source components that work together to collect, analyze, and visualize logs:

Elasticsearch – the core of the Elastic stack. It is a search and analytics engine that stores incoming logs from Logstash and lets you search them in real time.

Logstash – collects data, transforms logs arriving from multiple sources simultaneously, and sends them to storage.

Kibana – a graphical tool for data visualization. In the Elastic stack it generates charts and graphs that make sense of the raw data in your database.

The Elastic stack can also be used with Beats, lightweight data shippers that collect data from multiple sources and send it to Elasticsearch or Logstash. There are several Beats, each with a distinct role:

Filebeat – forwards files and centralizes logs, usually in .log or .json format.

Metricbeat – collects metrics from systems and services, including CPU, memory usage, and load, as well as network and process statistics, and ships them to Logstash or directly to Elasticsearch.

Packetbeat – captures traffic for application-level and lower-level network protocols, databases, and key-value stores, including HTTP, DNS, Flows, DHCPv4, MySQL, and TLS. It helps identify suspicious network activity.

Auditbeat – collects Linux audit framework data and monitors file integrity, then ships the data to Logstash or directly to Elasticsearch.

Heartbeat – actively probes services to determine whether they are available.

This guide shows in detail how to run the Elastic stack (ELK) on Docker containers using Docker Compose.

Setup Requirements

For this guide, you need the following:

Memory – 1.5 GB and above
Docker Engine – version 18.06.0 or newer
Docker Compose – version 1.26.0 or newer

Install the required packages below:

## On Debian/Ubuntu
sudo apt update && sudo apt upgrade
sudo apt install curl vim git

## On RHEL/CentOS/RockyLinux 8
sudo yum -y update
sudo yum -y install curl vim git

## On Fedora
sudo dnf update
sudo dnf -y install curl vim git

Step 1 – Install Docker and Docker Compose

Use the dedicated guide below to install the Docker Engine on your system.

How To Install Docker CE on Linux Systems

Add your system user to the docker group.

sudo usermod -aG docker $USER
newgrp docker

Start and enable the Docker service.

sudo systemctl start docker && sudo systemctl enable docker

Now install Docker Compose with the aid of the guide below:

How To Install Docker Compose on Linux

Step 2 – Provision the Elastic stack (ELK) Containers

Begin by cloning the repository from GitHub:

git clone https://github.com/deviantony/docker-elk.git
cd docker-elk

Open the deployment file for editing:

vim docker-compose.yml

The Elastic stack deployment file consists of 3 main parts.

Elasticsearch – with ports:
9200: Elasticsearch HTTP
9300: Elasticsearch TCP transport

Logstash – with ports:
5044: Logstash Beats input
5000: Logstash TCP input
9600: Logstash monitoring API

Kibana – with port 5601

In the opened file, you can make the adjustments below.

Configure Elasticsearch

The configuration file for Elasticsearch is stored in elasticsearch/config/elasticsearch.yml. You can configure the environment by setting the cluster name, network host, and licensing as below:

elasticsearch:
  environment:
    cluster.name: my-cluster
    xpack.license.self_generated.type: basic

To disable paid features, change the xpack.license.self_generated.type setting from trial (a self-generated license that gives access to all X-Pack features, but only for 30 days) to basic.

Configure Kibana

The configuration file is stored in kibana/config/kibana.yml. Here you can specify the environment variables as below.

kibana:
  environment:
    SERVER_NAME: kibana.example.com

JVM tuning

Normally, both Elasticsearch and Logstash start with 1/4 of the total host memory allocated to the JVM heap. You can adjust the memory by setting the options below.

For Logstash (an example that sets the heap to 1 GB):

logstash:
  environment:
    LS_JAVA_OPTS: -Xmx1g -Xms1g

For Elasticsearch (an example that sets the heap to 1 GB):

elasticsearch:
  environment:
    ES_JAVA_OPTS: -Xmx1g -Xms1g

Configure the Usernames and Passwords

To configure the usernames, passwords, and version, edit the .env file.

vim .env

Make the desired changes for the version, usernames, and passwords.

ELASTIC_VERSION=

## Passwords for stack users
#

# User 'elastic' (built-in)
#
# Superuser role, full access to cluster management and data indices.
# https://www.elastic.co/guide/en/elasticsearch/reference/current/built-in-users.html
ELASTIC_PASSWORD='StrongPassw0rd1'

# User 'logstash_internal' (custom)
#
# The user Logstash uses to connect and send data to Elasticsearch.
# https://www.elastic.co/guide/en/logstash/current/ls-security.html
LOGSTASH_INTERNAL_PASSWORD='StrongPassw0rd1'

# User 'kibana_system' (built-in)
#
# The user Kibana uses to connect and communicate with Elasticsearch.
# https://www.elastic.co/guide/en/elasticsearch/reference/current/built-in-users.html
KIBANA_SYSTEM_PASSWORD='StrongPassw0rd1'

Source the environment:

source .env

Step 3 – Configure Persistent Volumes

For the Elastic stack to persist data, we need to map the volumes correctly. The YAML file has several volumes to be mapped. In this guide, I will use a secondary disk attached to my device.

Identify the disk.

$ lsblk
NAME        MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
sda           8:0    0  40G  0 disk
├─sda1        8:1    0   1G  0 part /boot
└─sda2        8:2    0  39G  0 part
  ├─rl-root 253:0    0  35G  0 lvm  /
  └─rl-swap 253:1    0   4G  0 lvm  [SWAP]
sdb           8:16   0  10G  0 disk
└─sdb1        8:17   0  10G  0 part

Partition the disk and create an XFS file system on it.

sudo parted --script /dev/sdb "mklabel gpt"
sudo parted --script /dev/sdb "mkpart primary 0% 100%"
sudo mkfs.xfs /dev/sdb1

Mount the disk to your desired path.

sudo mkdir /mnt/datastore
sudo mount /dev/sdb1 /mnt/datastore

Verify that the disk has been mounted.

$ sudo mount | grep /dev/sdb1
/dev/sdb1 on /mnt/datastore type xfs (rw,relatime,seclabel,attr2,inode64,logbufs=8,logbsize=32k,noquota)

Create the persistent volume directories on the disk.

sudo mkdir /mnt/datastore/setup
sudo mkdir /mnt/datastore/elasticsearch

Set the right permissions.

sudo chmod 775 -R /mnt/datastore
sudo chown -R $USER:docker /mnt/datastore

On RHEL-based systems, set SELinux to permissive mode as below.

sudo setenforce 0
sudo sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config

Create the external volumes.

For Elasticsearch:

docker volume create --driver local \
  --opt type=none \
  --opt device=/mnt/datastore/elasticsearch \
  --opt o=bind elasticsearch

For setup:

docker volume create --driver local \
  --opt type=none \
  --opt device=/mnt/datastore/setup \
  --opt o=bind setup

Verify that the volumes have been created.

$ docker volume list
DRIVER    VOLUME NAME
local     elasticsearch
local     setup

View more details about a volume.

$ docker volume inspect setup
[
    {
        "CreatedAt": "2022-05-06T13:19:33Z",
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/setup/_data",
        "Name": "setup",
        "Options": {
            "device": "/mnt/datastore/setup",
            "o": "bind",
            "type": "none"
        },
        "Scope": "local"
    }
]

Go back to the YAML file and add these lines at the end of the file.

$ vim docker-compose.yml
.......
volumes:
  setup:
    external: true
  elasticsearch:
    external: true

The YAML file should now contain the changes made in the areas above.

Step 4 – Bringing up the Elastic stack

After the desired changes have been made, bring up the Elastic stack with the command:

docker-compose up -d

Execution output:

[+] Building 6.4s (12/17)
 => [docker-elk_setup internal] load build definition from Dockerfile 0.3s
 => => transferring dockerfile: 389B 0.0s
 => [docker-elk_setup internal] load .dockerignore 0.5s
 => => transferring context: 250B 0.0s
 => [docker-elk_logstash internal] load build definition from Dockerfile 0.6s
 => => transferring dockerfile: 312B 0.0s
 => [docker-elk_elasticsearch internal] load build definition from Dockerfile 0.6s
 => => transferring dockerfile: 324B 0.0s
 => [docker-elk_logstash internal] load .dockerignore 0.7s
 => => transferring context: 188B
........

Once complete, check that the containers are running:

$ docker ps
CONTAINER ID   IMAGE                      COMMAND                  CREATED          STATUS         PORTS   NAMES
096ddc76c6b9   docker-elk_logstash        "/usr/local/bin/dock…"   9 seconds ago    Up 5 seconds   0.0.0.0:5000->5000/tcp, :::5000->5000/tcp, 0.0.0.0:5044->5044/tcp, :::5044->5044/tcp, 0.0.0.0:9600->9600/tcp, 0.0.0.0:5000->5000/udp, :::9600->9600/tcp, :::5000->5000/udp   docker-elk-logstash-1
ec3aab33a213   docker-elk_kibana          "/bin/tini -- /usr/l…"   9 seconds ago    Up 5 seconds   0.0.0.0:5601->5601/tcp, :::5601->5601/tcp   docker-elk-kibana-1
b365f809d9f8   docker-elk_setup           "/entrypoint.sh"         10 seconds ago   Up 7 seconds   9200/tcp, 9300/tcp   docker-elk-setup-1
45f6ba48a89f   docker-elk_elasticsearch   "/bin/tini -- /usr/l…"   10 seconds ago   Up 7 seconds   0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp   docker-elk-elasticsearch-1

Verify that Elasticsearch is running:

$ curl http://localhost:9200 -u elastic:StrongPassw0rd1
{
  "name" : "45f6ba48a89f",
  "cluster_name" : "my-cluster",
  "cluster_uuid" : "hGyChEAVQD682yVAx--iEQ",
  "version" : {
    "number" : "8.1.3",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "39afaa3c0fe7db4869a161985e240bd7182d7a07",
    "build_date" : "2022-04-19T08:13:25.444693396Z",
    "build_snapshot" : false,
    "lucene_version" : "9.0.0",
    "minimum_wire_compatibility_version" : "7.17.0",
    "minimum_index_compatibility_version" : "7.0.0"
  },
  "tagline" : "You Know, for Search"
}

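Elasticsearch can take a little while to accept requests after the containers start, so it may help to poll before running the curl check. The sketch below is not part of docker-elk: the function names probe and wait_for, the WAIT_SLEEP variable, and the default of 30 attempts are all assumptions made for illustration.

```shell
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# probe() is kept separate so it can be swapped for a different check.
probe() {
    # -f makes curl return non-zero on HTTP errors; the password is the
    # ELASTIC_PASSWORD value sourced from .env.
    curl -fsS -u "elastic:${ELASTIC_PASSWORD:-}" "$1" >/dev/null 2>&1
}

wait_for() {
    url=$1
    tries=${2:-30}          # assumed default: 30 attempts
    i=0
    while [ "$i" -lt "$tries" ]; do
        if probe "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "${WAIT_SLEEP:-2}"   # assumed default: 2 seconds between attempts
    done
    return 1
}

# Example (not run here):
#   wait_for http://localhost:9200 && echo "Elasticsearch is up"
```

The function returns non-zero when the attempts are exhausted, so it can gate later steps in a script.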
Step 5 – Access the Kibana Dashboard

At this point, you can access the Kibana dashboard running on port 5601. But first, allow the required ports through the firewall.

##For Firewalld
sudo firewall-cmd --add-port=5601/tcp --permanent
sudo firewall-cmd --add-port=5044/tcp --permanent
sudo firewall-cmd --reload

##For UFW
sudo ufw allow 5601/tcp
sudo ufw allow 5044/tcp

Now access the Kibana dashboard with the URL http://IP_Address:5601 or http://Domain_name:5601.

Log in using the credentials set for the Elasticsearch user:

Username: elastic
Password: StrongPassw0rd1

On successful authentication, you should see the dashboard.

To prove that the ELK stack is running as desired, we will inject some log entries. Logstash allows us to send content via TCP as below.

# Using BSD netcat (Debian, Ubuntu, MacOS system, ...)
cat /path/to/logfile.log | nc -q0 localhost 5000

For example:

cat /var/log/syslog | nc -q0 localhost 5000

Once the logs have been loaded, view them under the Observability tab.

That is it! You have your Elastic stack (ELK) running.

Step 6 – Cleanup

To completely remove the Elastic stack (ELK) containers, network, and Compose-managed volumes, use the command:

$ docker-compose down -v
[+] Running 5/4
 ⠿ Container docker-elk-kibana-1 Removed 10.5s
 ⠿ Container docker-elk-setup-1 Removed 0.1s
 ⠿ Container docker-elk-logstash-1 Removed 9.9s
 ⠿ Container docker-elk-elasticsearch-1 Removed 3.0s
 ⠿ Network docker-elk_elk Removed 0.1s

Closing Thoughts

We have walked through how to run the Elastic stack (ELK) on Docker containers using Docker Compose. Furthermore, we have learned how to create an external persistent volume for Docker containers. I hope this was helpful.
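One caveat on the cleanup step: Compose does not remove volumes declared as external, so the elasticsearch and setup volumes created in Step 3, and the data under /mnt/datastore, are still there after docker-compose down -v. A minimal sketch of the extra commands follows. The function name print_cleanup is hypothetical, and it only prints the commands (a dry run) so nothing is deleted by accident.

```shell
# Hypothetical dry-run helper: print the commands that would remove the
# external volumes created in Step 3. Nothing is executed.
print_cleanup() {
    for name in "$@"; do
        printf 'docker volume rm %s\n' "$name"
    done
}

print_cleanup elasticsearch setup
```

Review the printed commands and run them by hand. Delete the directories under /mnt/datastore only if the stored log data is no longer needed.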

B Series Intranet Search Add Settings Descargar

Download Agent DVR v3.0.8.0

Agent DVR is a new advanced video surveillance platform for Windows, Mac OS, Linux and Docker. Agent has a unified user interface that runs on all modern computers, mobile devices and even Virtual Reality. Agent DVR supports remote access from anywhere with no port forwarding required.* Available languages include: English, Nederlands, Deutsch, Español, Française, Italiano, 中文, 繁体中文, Português, Русский, Čeština and Polskie

To install run the setup utility which will check the dependencies, download the application and install the service and a tray helper app that discovers and monitors Agent DVR network connections.

Agent for Windows runs on Windows 7 SP1 and later. It requires the .NET Framework v4.7 or later.

To run on Windows Server you will need to enable Windows Media Foundation. On Windows Server 2012 it has to be installed separately.

If you need to install Agent on a PC without an internet connection you can download the application files manually here: 32 bit, 64 bit

Download and install the .NET Core runtime for macOS

Install homebrew: https://brew.sh/

Open a terminal and run: brew install ffmpeg

Run dotnet Agent.dll in a terminal window in the Agent folder.

Open a web browser at http://localhost:8090 to start configuring Agent. If port 8090 isn't working check the terminal output for the port Agent is running on.

Agent for Linux has been tested on Ubuntu 18.04, 19.10, Debian 10 and Linux Mint 19.3. Other distributions may require additional dependencies. Use the docker option if you have problems installing.

Dependencies:

Agent currently uses the .NET Core 3.1 runtime, which can be installed by running:

sudo apt-get update; sudo apt-get install -y apt-transport-https && sudo apt-get update && sudo apt-get install -y aspnetcore-runtime-3.1

More information (you may need to add package references): https://dotnet.microsoft.com/download/dotnet-core/3.1
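To confirm the runtime is present before starting Agent, the output of dotnet --list-runtimes can be checked for an ASP.NET Core 3.1.x entry. This is a minimal sketch; the function name has_aspnetcore_31 is hypothetical, and it assumes the usual one-runtime-per-line output of that command.

```shell
# Hypothetical helper: succeed if the text on stdin (the output of
# `dotnet --list-runtimes`) lists an ASP.NET Core 3.1.x runtime.
has_aspnetcore_31() {
    grep -q '^Microsoft\.AspNetCore\.App 3\.1\.'
}

# Example (not run here):
#   dotnet --list-runtimes | has_aspnetcore_31 && echo "ASP.NET Core 3.1 runtime found"
```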

You also need to install FFmpeg v4.x - one way of getting this via the terminal in Linux is:

sudo apt-get update

sudo add-apt-repository ppa:jonathonf/ffmpeg-4

OR, for Xenial and Focal:

sudo add-apt-repository ppa:savoury1/ffmpeg4

sudo apt-get update

sudo apt-get install -y ffmpeg

Important: Don't use the default ffmpeg package for your distro as it doesn't include specific libraries that Agent needs
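A quick way to see which FFmpeg is on the path is to read the major version from the first line of ffmpeg -version. The sketch below is an illustration only: the function name ffmpeg_major is hypothetical, and it checks the version number, not which libraries the build includes.

```shell
# Hypothetical helper: extract the major version from the first line of
# `ffmpeg -version`. Prints nothing if the line does not start with a
# plain numeric version (some builds use other version strings).
ffmpeg_major() {
    printf '%s\n' "$1" | sed -n 's/^ffmpeg version \([0-9][0-9]*\)\..*/\1/p'
}

# Example (not run here):
#   [ "$(ffmpeg_major "$(ffmpeg -version | head -n 1)")" = "4" ] || echo "FFmpeg 4.x not found"
```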

Other libraries Agent may need depending on your Linux distro:

sudo apt-get install -y libtbb-dev libc6-dev gss-ntlmssp

For Debian 10 (and possibly other distros):

sudo wget http://security.ubuntu.com/ubuntu/pool/main/libj/libjpeg-turbo/libjpeg-turbo8_1.5.2-0ubuntu5.18.04.4_amd64.deb

sudo wget http://fr.archive.ubuntu.com/ubuntu/pool/main/libj/libjpeg8-empty/libjpeg8_8c-2ubuntu8_amd64.deb

sudo apt install multiarch-support

sudo dpkg -i libjpeg-turbo8_1.5.2-0ubuntu5.18.04.4_amd64.deb

sudo dpkg -i libjpeg8_8c-2ubuntu8_amd64.deb

For VLC support (optional):

sudo apt-get install -y libvlc-dev vlc libx11-dev

Download Agent:

Unzip the Agent DVR files, open a terminal and run: dotnet Agent.dll in the Agent folder.

Open a web browser at http://localhost:8090 to start configuring Agent. If port 8090 isn't working check the terminal output for the port Agent is running on.

A Docker image of Agent DVR will install Agent DVR on a virtual Linux image on any supported operating system. Please see the docker file for options.

Important: The docker version of Agent includes a TURN server to work around port access limitations on Docker. If Docker isn't running in Host mode (which is only available on linux hosts) then you will need to access the UI of Agent by http://IPADDRESS:8090 instead of http://localhost:8090 (where IPADDRESS is the LAN IP address of your host computer).

To install Agent under docker you can call (for example): docker run -it -p 8090:8090 -p 3478:3478/udp -p 50000-50010:50000-50010/udp --name agentdvr doitandbedone/ispyagentdvr:latest

To run Agent if it's already installed: docker start agentdvr

If you have downloaded Agent DVR to a VPS or a PC with no graphical UI, you can set up Agent for remote access by calling 'Agent register' on Windows or 'dotnet Agent.dll register' on macOS or Linux. This will give you a claim code you can use to access Agent remotely.

Download iSpy v7.2.1.0

iSpy is our original open source video surveillance project for Windows. iSpy runs on Windows 7 SP1 and above and requires the .NET Framework v4.5 or later. To run on Windows Server 2012 you will need to install Media Foundation.

Click to download the Windows iSpy installer. We recommend Agent DVR for new installations.

*Remote access and some cloud based features are a subscription service (pricing) . This funds hosting, support and development.

Capicom Mac Os

Operating system: clos. It includes a collection of utilities, a PKCS#11 module fully compatible with Mozilla/Firefox, the repository for upgrades, and the clauer manager for Firefox (from the Firefox Tools menu). Binary .deb package for Debian lenny (656K). Binary .deb package for Debian sid (652K).

Download the latest driver for your token, install it with a few clicks. Choose the driver depending on your operating system. EPass 2003 Auto (MAC) New; ePass 2003 Auto (Linux) New; ePass 2003 Auto (Windows 32/64 Bit) New; eMudhra Watchdata (Windows) eMudhra Watchdata (Linux) Trust Key (Windows) Trust Key (Linux) Aladdin (Windows) eToken PKI (32-bit) eToken PKI (64-bit) ePass 2003 Auto (Windows 32/64 Bit) ePass 2003 Auto (Linux) ePass 2003 (Mac) Safenet (Windows 32/64.

The 'classic' Mac OS is the original Macintosh operating system that was introduced in 1984 alongside the first Macintosh and remained in primary use on Macs until the introduction of Mac OS X in 2001.

(CAPICOM is a 32-bit only component that is available for use in the following operating systems: Windows Server 2008, Windows Vista, and Windows XP. Instead, use the .NET Framework to implement security features. For more information, see Alternatives to Using CAPICOM.)

This section includes scenarios that use CAPICOM procedures.

Note

Creating digital signatures and un-enveloping messages with CAPICOM is done using Public Key Infrastructure (PKI) cryptography and can only be done if the signer or user decrypting an enveloped message has access to a certificate with an available, associated private key. To decrypt an enveloped message, a certificate with access to the private key must be in the MY store.

Task-based scenarios discussions and examples have been separated into the following sections:

Developer: Microsoft

Description: CAPICOM Module

Click “Download Now” to get the PC tool that comes with the capicom.dll. The utility will automatically determine missing DLLs and offer to install them. It is an easy-to-use alternative to manual installation. Limitations: the trial version offers an unlimited number of scans, backup, and restore of your Windows registry for free; the full version must be purchased. It supports Windows 10, Windows 8 / 8.1, Windows 7 and Windows Vista (64/32 bit). File Size: 3.04 MB, Download time: < 1 min. on DSL/ADSL/Cable

Since you decided to visit this page, chances are you’re either looking for capicom.dll file, or a way to fix the “capicom.dll is missing” error. Look through the information below, which explains how to resolve your issue. On this page, you can download the capicom.dll file as well.

PowerShell 7.0 Generally Available

What is PowerShell 7?

PowerShell 7 is the latest major update to PowerShell, a cross-platform (Windows, Linux, and macOS) automation tool and configuration framework optimized for dealing with structured data (e.g. JSON, CSV, XML, etc.), REST APIs, and object models. PowerShell includes a command-line shell, object-oriented scripting language, and a set of tools for executing scripts/cmdlets and managing modules.

After three successful releases of PowerShell Core, we couldn’t be more excited about PowerShell 7, the next chapter of PowerShell’s ongoing development. With PowerShell 7, in addition to the usual slew of new cmdlets/APIs and bug fixes, we’re introducing a number of new features, including:

Pipeline parallelization with ForEach-Object -Parallel
New operators:
  Ternary operator: a ? b : c
  Pipeline chain operators: || and &&
  Null coalescing operators: ?? and ??=
A simplified and dynamic error view and Get-Error cmdlet for easier investigation of errors
A compatibility layer that enables users to import modules in an implicit Windows PowerShell session
Automatic new version notifications
The ability to invoke DSC resources directly from PowerShell 7 (experimental)

The shift from PowerShell Core 6.x to 7.0 also marks our move from .NET Core 2.x to 3.1. .NET Core 3.1 brings back a host of .NET Framework APIs (especially on Windows), enabling significantly more backwards compatibility with existing Windows PowerShell modules. This includes many modules on Windows that require GUI functionality like Out-GridView and Show-Command, as well as many role management modules that ship as part of Windows.

Awesome! How do I get PowerShell 7?

First, check out our install docs for Windows, macOS, or Linux. Depending on the version of your OS and preferred package format, there may be multiple installation methods. If you already know what you’re doing, and you’re just looking for a binary package (whether it’s an MSI, ZIP, RPM, or something else), hop on over to our latest release tag on GitHub. Additionally, you may want to use one of our many Docker container images.

What operating systems does PowerShell 7 support?

PowerShell 7 supports the following operating systems on x64, including:

Windows 7, 8.1, and 10
Windows Server 2008 R2, 2012, 2012 R2, 2016, and 2019
macOS 10.13+
Red Hat Enterprise Linux (RHEL) / CentOS 7+
Fedora 29+
Debian 9+
Ubuntu 16.04+
openSUSE 15+
Alpine Linux 3.8+
ARM32 and ARM64 flavors of Debian and Ubuntu
ARM64 Alpine Linux

Wait, what happened to PowerShell “Core”?

Much like .NET decided to do with .NET 5, we feel that PowerShell 7 marks the completion of our journey to maximize backwards compatibility with Windows PowerShell. To that end, we consider PowerShell 7 and beyond to be the one, true PowerShell going forward. PowerShell 7 will still be noted with the edition “Core” in order to differentiate 6.x/7.x from Windows PowerShell, but in general, you will see it denoted as “PowerShell 7” going forward.

Which Microsoft products already support PowerShell 7?

Any module that is already supported by PowerShell Core 6.x is also supported in PowerShell 7, including:

Azure PowerShell (Az.*)
Active Directory
Many of the modules in Windows 10 and Windows Server (check with Get-Module -ListAvailable)

On Windows, we’ve also added a -UseWindowsPowerShell switch to Import-Module to ease the transition to PowerShell 7 for those using still incompatible modules. This switch creates a proxy module in PowerShell 7 that uses a local Windows PowerShell process to implicitly run any cmdlets contained in that module.

For those modules still incompatible, we’re working with a number of teams to add native PowerShell 7 support, including Microsoft Graph, Office 365, and more.

.NET Core and Docker

If you've got Docker installed you can run a .NET Core sample quickly just like this. Try it:

docker run --rm microsoft/dotnet-samples

If your Docker for Windows is in "Windows Container mode" you can try .NET Framework (the full 4.x Windows Framework) like this:

docker run --rm microsoft/dotnet-framework-samples

I did a video last week with a write up showing how easy it is to get a containerized application into Azure AND cheaply with per-second billing.

Container images are easy to share via Docker Hub, the Docker Store, and private Docker registries, such as the Azure Container Registry. Also check out Visual Studio Tools for Docker. It all works very nicely together.

I like this quote from Richard Lander:

Imagine five or so years ago someone telling you in a job interview that they care so much about consistency that they always ship the operating system with their app. You probably wouldn’t have hired them. Yet, that’s exactly the model Docker uses!

And it's a good model! It gives you guaranteed consistency. "Containers include the application and all of its dependencies. The application executes the same code, regardless of computer, environment or cloud." It's also a good way to make sure your underlying .NET is up to date with security fixes:

Docker is a game changer for acquiring and using .NET updates. Think back to just a few years ago. You would download the latest .NET Framework as an MSI installer package on Windows and not need to download it again until we shipped the next version. Fast forward to today. We push updated container images to Docker Hub multiple times a month.

The .NET images get built using the official Docker images which is nice.

.NET images are built using official images. We build on top of Alpine, Debian, and Ubuntu official images for x64 and ARM. By using official images, we leave the cost and complexity of regularly updating operating system base images and packages like OpenSSL, for example, to the developers that are closest to those technologies. Instead, our build system is configured to automatically build, test and push .NET images whenever the official images that we use are updated. Using that approach, we’re able to offer .NET Core on multiple Linux distros at low cost and release updates to you within hours.

Here's where you can find .NET Docker Hub repos:

.NET Core repos:

microsoft/dotnet – includes .NET Core runtime, sdk, and ASP.NET Core images.

microsoft/aspnetcore – includes ASP.NET Core runtime images for .NET Core 2.0 and earlier versions. Use microsoft/dotnet for .NET Core 2.1 and later.

microsoft/aspnetcore-build – Includes ASP.NET Core SDK and node.js for .NET Core 2.0 and earlier versions. Use microsoft/dotnet for .NET Core 2.1 and later. See aspnet/announcements #298.

.NET Framework repos:

microsoft/dotnet-framework – includes .NET Framework runtime and sdk images.

microsoft/aspnet – includes ASP.NET runtime images, for ASP.NET Web Forms and MVC, configured for IIS.

microsoft/wcf – includes WCF runtime images configured for IIS.

microsoft/iis – includes IIS on top of the Windows Server Core base image. Works for but not optimized for .NET Framework applications. The microsoft/aspnet and microsoft/wcf repos are recommended instead for running the respective application types.

There's a few kinds of images in the microsoft/dotnet repo:

sdk — .NET Core SDK images, which include the .NET Core CLI, the .NET Core runtime and ASP.NET Core.

aspnetcore-runtime — ASP.NET Core images, which include the .NET Core runtime and ASP.NET Core.

runtime — .NET Core runtime images, which include the .NET Core runtime.

runtime-deps — .NET Core runtime dependency images, which include only the dependencies of .NET Core and not .NET Core itself. This image is intended for self-contained applications and is only offered for Linux. For Windows, you can use the operating system base image directly for self-contained applications, since all .NET Core dependencies are satisfied by it.

For example, I'll use an SDK image to build my app, but I'll use aspnetcore-runtime to ship it. No need to ship the SDK with a running app. I want to keep my image sizes as small as possible!
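The build-with-sdk, ship-with-runtime split can be summarized as a small lookup from what you are doing to the image variant listed above. This is only a sketch: the function name image_variant and the use-case labels (build, web, console, self-contained) are made up for illustration, and only the variant names come from the list.

```shell
# Hypothetical mapping from a use-case label to the image variant names
# listed above. The labels are assumptions made for this sketch.
image_variant() {
    case "$1" in
        build)          echo "sdk" ;;                 # compile and test
        web)            echo "aspnetcore-runtime" ;;  # ship an ASP.NET Core app
        console)        echo "runtime" ;;             # ship a plain .NET Core app
        self-contained) echo "runtime-deps" ;;        # app brings its own runtime (Linux only)
        *)              echo "unknown use-case: $1" >&2; return 1 ;;
    esac
}

image_variant build
image_variant web
```

The two calls print sdk and aspnetcore-runtime, matching the build-then-ship choice described above.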

For me, I even made a little PowerShell script (runs on Windows or Linux) that builds and tests my Podcast site (the image tagged podcast:test) within docker. Note the volume mapping? It stores the Test Results outside the container so I can look at them later if I need to.

#!/usr/local/bin/powershell
docker build --pull --target testrunner -t podcast:test .
docker run --rm -v c:\github\hanselminutes-core\TestResults:/app/hanselminutes.core.tests/TestResults podcast:test

Pretty slick.

Results File: /app/hanselminutes.core.tests/TestResults/_898a406a7ad1_2018-06-28_22_05_04.trx

Total tests: 22. Passed: 22. Failed: 0. Skipped: 0.
Test execution time: 8.9496 Seconds

Go read up on how the .NET Core images are built, managed, and maintained. It made it easy for me to get my podcast site - once dockerized - running on .NET Core on a Raspberry Pi (ARM32).

© 2018 Scott Hanselman. All rights reserved.

0 notes

Text

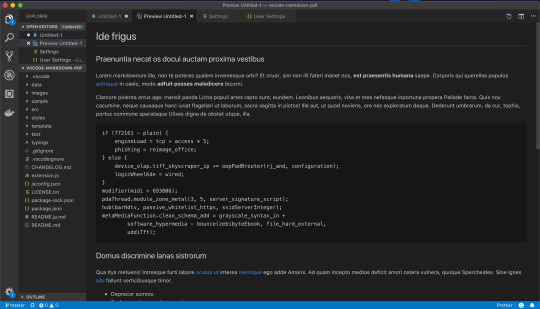

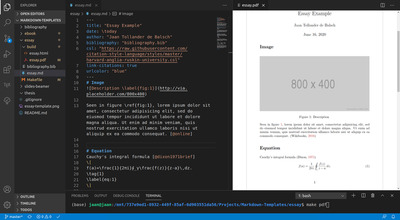

Markdown Vscode Pdf

Remarks

This section provides an overview of what vscode is, and why a developer might want to use it.

It should also mention any large subjects within vscode, and link out to the related topics. Since the Documentation for vscode is new, you may need to create initial versions of those related topics.

Versions

| Version | Release date |
| --- | --- |
| 0.10.1-extensionbuilders | 2015-11-13 |
| 0.10.1 | 2015-11-17 |
| 0.10.2 | 2015-11-24 |
| 0.10.3 | 2015-11-26 |
| 0.10.5 | 2015-12-17 |
| 0.10.6 | 2015-12-19 |
| 0.10.7-insiders | 2016-01-29 |
| 0.10.8 | 2016-02-05 |
| 0.10.8-insiders | 2016-02-08 |
| 0.10.9 | 2016-02-17 |
| 0.10.10-insiders | 2016-02-26 |
| 0.10.10 | 2016-03-11 |
| 0.10.11 | 2016-03-11 |
| 0.10.11-insiders | 2016-03-11 |
| 0.10.12-insiders | 2016-03-20 |
| 0.10.13-insiders | 2016-03-29 |
| 0.10.14-insiders | 2016-04-04 |
| 0.10.15-insiders | 2016-04-11 |
| 1.0.0 | 2016-04-14 |
| 1.1.0-insider | 2016-05-02 |
| 1.1.0 | 2016-05-09 |
| 1.1.1 | 2016-05-16 |
| 1.2.0 | 2016-06-01 |
| 1.2.1 | 2016-06-14 |
| 1.3.0 | 2016-07-07 |
| 1.3.1 | 2016-07-12 |
| 1.4.0 | 2016-08-03 |
| translation/20160817.01 | 2016-08-17 |
| translation/20160826.01 | 2016-08-26 |
| translation/20160902.01 | 2016-09-02 |
| 1.5.0 | 2016-09-08 |
| 1.5.1 | 2016-09-08 |
| 1.5.2 | 2016-09-14 |
| 1.6.0 | 2016-10-10 |
| 1.6.1 | 2016-10-13 |
| translation/20161014.01 | 2016-10-14 |
| translation/20161028.01 | 2016-10-28 |
| 1.7.0 | 2016-11-01 |
| 1.7.1 | 2016-11-03 |
| translation/20161111.01 | 2016-11-12 |
| translation/20161118.01 | 2016-11-19 |
| 1.7.2 | 2016-11-22 |
| translation/20161125.01 | 2016-11-26 |
| translation/20161209.01 | 2016-12-09 |
| 1.8.0 | 2016-12-14 |
| 1.8.1 | 2016-12-20 |
| translation/20170123.01 | 2017-01-23 |
| translation/20172701.01 | 2017-01-27 |
| 1.9.0 | 2017-02-02 |
| translation/20170127.01 | 2017-02-03 |
| translation/20170203.01 | 2017-02-03 |
| 1.9.1 | 2017-02-09 |
| translation/20170217.01 | 2017-02-18 |
| translation/20170227.01 | 2017-02-27 |
| 1.10.0 | 2017-03-01 |
| 1.10.1 | 2017-03-02 |
| 1.10.2 | 2017-03-08 |
| translation/20170311.01 | 2017-03-11 |
| translation/20170317.01 | 2017-03-18 |
| translation/20170324.01 | 2017-03-25 |
| translation/20170331.01 | 2017-03-31 |
| 1.11.0 | 2017-04-06 |
| 1.11.1 | 2017-04-06 |
| translation/20170407.01 | 2017-04-07 |
| 1.11.2 | 2017-04-13 |

First program (C++): Hello World.cpp

This example introduces you to the basic functionality of VS Code by demonstrating how to write a 'hello world' program in C++. Before continuing, make sure you have the 'ms-vscode.cpptools' extension installed.

Initialize the Project

The first step is to create a new project. To do this, load the VS Code program. You should be greeted with the typical welcome screen:

To create the first program, select 'Start' > 'New file' from the welcome screen. This will open a new file window. Go ahead and save the file ('File' > 'Save') into a new directory. You can name the directory anything you want, but this example will call the directory 'VSC_HelloWorld' and the file 'HelloWorld.cpp'.

Now write the actual program (feel free to copy the below text):

Great! You'll also notice that because you've installed the 'ms-vscode.cpptools' extension you also have pretty code-highlighting. Now let's move on to running the code.

Running the Script (basic)

We can run 'HelloWorld.cpp' from within VS Code itself. The simplest way to run such a program is to open the integrated terminal ('View' > 'Integrated Terminal'). This opens a terminal window in the lower portion of the view. From inside this terminal we can navigate to our created directory, build, and execute the script we've written. Here we've used the following commands to compile and run the code:

Notice that we get the expected Hello World! output.

Running the Script (slightly more advanced)

Great, but we can use VS Code directly to build and execute the code as well. For this, we first need to turn the 'VSC_HelloWorld' directory into a workspace. This can be done by:

Opening the Explorer menu (top most item on the vertical menu on the far left)

Select the Open Folder button

Select the 'VSC_HelloWorld' directory we've been working in. Note: If you open a directory within VS Code (using 'File' > 'Open...' for example) you will already be in a workspace.

The Explorer menu now displays the contents of the directory.

Next we want to define the actual tasks which we want VS Code to run. To do this, select 'Tasks' > 'Configure Default Build Task'. In the drop down menu, select 'Other'. This opens a new file called 'tasks.json' which contains some default values for a task. We need to change these values. Update this file to contain the following and save it:

Note that the above also creates a hidden .vscode directory within our working directory. This is where VS Code puts configuration files, including any project-specific settings files. You can find out more about Tasks here.

In the above example, ${workspaceRoot} references the top level directory of our workspace, which is our 'VSC_HelloWorld' directory. Now, to build the project from inside VS Code, select 'Tasks' > 'Run Build Task...' and select our created 'build' task and 'Continue without scanning the task output' from the drop down menus that show up. Then we can run the executable using 'Tasks' > 'Run Task...' and selecting the 'run' task we created. If you have the integrated terminal open, you'll notice that the 'Hello World!' text will be printed there.

It is possible that the terminal may close before you are able to view the output. If this happens you can insert a line of code like this int i; std::cin >> i; just before the return statement at the end of the main() function. You can then end the script by typing any number and pressing <Enter>.

And that's it! You can now start writing and running your C++ scripts from within VS Code.

First Steps (C++): HelloWorld.cpp

The first program one typically writes in any language is the 'hello world' script. This example demonstrates how to write this program and debug it using Visual Studio Code (I'll refer to Visual Studio Code as VS Code from now on).

Create The Project

Step 1 will be to create a new project. This can be done in a number of ways. The first way is directly from the user interface.

Open VS Code program. You will be greeted with the standard welcome screen (note the images are taken while working on a Mac, but they should be similar to your installation):

From the Start menu, select New file. This will open a new editing window where we can begin constructing our script. Go ahead and save this file (you can use the menu File > Save to do this). For this example we will call the file HelloWorld.cpp and place it in a new directory which we will call VSC_HelloWorld/.

Write the program. This should be fairly straight forward, but feel free to copy the following into the file:

Run the Code

Next we want to run the script and check its output. There are a number of ways to do this. The simplest is to open a terminal, and navigate to the directory that we created. You can now compile the script and run it with gcc by typing:

Yay, the program worked! But this isn't really what we want. It would be much better if we could run the program from within VSCode itself. We're in luck though! VSCode has a built in terminal which we can access via the menu 'View' > 'Integrated Terminal'. This will open a terminal in the bottom half of the window from which you can navigate to the VSC_HelloWorld directory and run the above commands.

Typically we do this by executing a Run Task. From the menu select 'Tasks' > 'Run Task...'. You'll notice you get a small popup near the top of the window with an error message (something along the lines of

Installation or Setup

On Windows

Download the Visual Studio Code installer for Windows.

Once it is downloaded, run the installer (VSCodeSetup-version.exe). This will only take a minute.

By default, VS Code is installed under C:\Program Files (x86)\Microsoft VS Code for a 64-bit machine.

Note: .NET Framework 4.5.2 is required for VS Code. If you are using Windows 7, please make sure .NET Framework 4.5.2 is installed.

Tip: Setup will optionally add Visual Studio Code to your %PATH%, so from the console you can type 'code .' to open VS Code on that folder. You will need to restart your console after the installation for the change to the %PATH% environmental variable to take effect.

On Mac

Download Visual Studio Code for Mac.

Double-click on the downloaded archive to expand the contents.

Drag Visual Studio Code.app to the Applications folder, making it available in the Launchpad.

Add VS Code to your Dock by right-clicking on the icon and choosing Options, Keep in Dock.

You can also run VS Code from the terminal by typing 'code' after adding it to the path:

Launch VS Code.

Open the Command Palette (Cmd+Shift+P on a Mac) and type 'shell command' to find the Shell Command: Install 'code' command in PATH command.

Restart the terminal for the new $PATH value to take effect. You'll be able to type 'code .' in any folder to start editing files in that folder.

Note: If you still have the old code alias in your .bash_profile (or equivalent) from an early VS Code version, remove it and replace it by executing the Shell Command: Install 'code' command in PATH command.

On Linux

Debian and Ubuntu based distributions

The easiest way to install for Debian/Ubuntu based distributions is to download and install the .deb package (64-bit) either through the graphical software center if it's available or through the command line with:

Installing the .deb package will automatically install the apt repository and signing key to enable auto-updating using the regular system mechanism. Note that 32-bit and .tar.gz binaries are also available on the download page.

The repository and key can also be installed manually with the following script:

Then update the package cache and install the package using:

RHEL, Fedora and CentOS based distributions

We currently ship the stable 64-bit VS Code in a yum repository, the following script will install the key and repository:

Then update the package cache and install the package using dnf (Fedora 22 and above):

Or on older versions using yum:

openSUSE and SLE based distributions

The yum repository above also works for openSUSE and SLE based systems, the following script will install the key and repository:

Then update the package cache and install the package using:

AUR package for Arch Linux

There is a community maintained Arch User Repository (AUR) package for VS Code.

Installing .rpm package manually

The .rpm package (64-bit) can also be manually downloaded and installed, however auto-updating won't work unless the repository above is installed. Once downloaded it can be installed using your package manager, for example with dnf:

Note that 32-bit and .tar.gz binaries are also available on the download page.

The new .NET Core

.NET Core is the new cross-platform member of the .NET family. Its main characteristics are the following:

1. Flexible deployment. It can be included in your app or installed side-by-side, either user-wide or machine-wide.

2. Cross platform. It runs on macOS, Linux and Windows.

3. Compatible. .NET Core is compatible with Xamarin, .NET Framework and Mono, through the .NET Standard Library.

4. Command-line tools. All product scenarios can be exercised at the command line.

5. Microsoft-supported. Supported by Microsoft, per .NET Core support.

6. Open source. It is open source and uses the Apache 2 and MIT licenses. Documentation is licensed under CC-BY. It is a .NET Foundation project.

.NET CORE PARTS

.NET Core is composed of the following parts:

1. A .NET runtime that provides a type system, garbage collector, assembly loading, native interoperability and other basic services.

2. A set of SDK tools and language compilers that enable the base developer experience, available in the .NET Core software development kit.

3. A set of framework libraries that provide app composition types, primitive data types and fundamental utilities.

4. A .NET app host that is used to launch .NET Core applications. It selects and hosts the runtime, provides an assembly loading policy and launches the application. The same host is also used to launch the SDK tools in a similar way.

THE .NET CORE PROJECT

Open source is an integral theme of the .NET Core project. Over time, we noticed that all major web platforms were open source. ASP.NET MVC has been open source for some time, but the platform under it, the .NET Framework, was not. With these releases, ASP.NET Core is now a web platform that is open source from top to bottom. Even the documentation is open source. .NET Core is a good option for anyone who requires an open source web stack.

INCREASED INTEREST IN .NET CORE

Increased interest in .NET Core has also driven deeper engagement in the .NET Foundation, which now manages over 60 projects. The newest member is Samsung. In April, JetBrains, Red Hat and Unity were welcomed to the .NET Foundation Technical Steering Group.

.NET is a wonderful technology that dramatically boosts developer productivity. Samsung has been contributing to .NET Core on GitHub, particularly in the area of ARM support. The company is looking forward to working with the open source community. Samsung is happy to join the .NET Foundation's Technical Steering Group and help more developers enjoy the benefits of .NET.

MICROSOFT SPREADS LOVE WITH .NET CORE

Microsoft has extended .NET to the open source side, together with several other important frameworks and tools. Taken together, the releases make up Microsoft's most comprehensive move yet into the open source environment. Microsoft has also released a new Visual Studio version and announced a common protocol for language servers, designed to make it easy for organizations to add language components and support to the Visual Studio programming ecosystem.

A subset of the .NET Framework, .NET Core enables the creation of complex, complete web-based apps. The source packages can be downloaded from GitHub rather than from Microsoft servers. This move shows that ASP.NET Core, .NET Core and Entity Framework Core 1.0 are open in more than name alone. Microsoft is serious about open source software, as indicated by the breadth of software that accompanies the three new tools and by the depth of the releases' impact on software development.

NEW ERA FOR .NET

.NET Core has a couple of major components. It includes a small runtime built from the same codebase as the .NET Framework Common Language Runtime. The .NET Core runtime has the same JIT and GC, but does not include features such as Code Access Security and Application Domains. The runtime is delivered through NuGet as part of the ASP.NET 5 core package. .NET Core also includes the base class libraries. These libraries are largely the same code as the .NET Framework class libraries, but factored to enable shipping a smaller set of libraries. ASP.NET 5 is the first workload to adopt .NET Core, and it runs on both .NET Core and the .NET Framework. A major value of ASP.NET 5 is that it can run on multiple versions of .NET Core 5 on the same machine.

GETTING STARTED WITH .NET CORE

.NET Core can be downloaded for Linux, Windows or Mac, or used through a pre-built Docker container from Microsoft. Visual Studio users need Update 3, also recently released, before the .NET Core tools will install. On Linux, one needs a recent distribution, such as Debian 8.2. Once it is installed, one can begin with a few simple commands that create and run skeleton projects. The first time the command-line tools run, Microsoft warns that it collects telemetry by default, and also explains how to opt out. According to the company's notes, no personal code or data is collected.

There is a lot to discover about the .NET Core open source system. It has been made open source to take advantage of the skills of different developers.

Ars Technica System Guide: July 2013. Cheaper, better, faster, stronger. (There was a new Daft Punk album this year.)

New shiny

Intel's new fourth-generation Core i-series processors, codenamed Haswell, bring decent improvements in performance and platform power consumption, but the Haswell processors available at launch are really only suitable for the Hot Rod. Haswell-E for Xeon processors won't be out until deep into 2014, and cheaper dual-core Haswell parts in the Budget Box price range won't hit until the second wave of Haswell processors later this year.

Overclockers have not been terribly happy overclocking Intel's 22nm processors (Ivy Bridge and Haswell), but in general, the IPC (instructions per clock) improvement and reduced power consumption have made Haswell a good update all around. In the Hot Rod and God Box, AMD's current processors (based on Piledriver) lagged behind Ivy Bridge and fare worse against Haswell, but surprisingly, the very modest changes in its latest APUs, codenamed Richland, keep them very relevant in cheaper systems such as the Budget Box.

Video cards are a little easier. AMD's lineup changes are minor, but Nvidia's GK110 and GK104 GPUs get refreshed products with new price points, making the new GeForce GTX 700-series and (not so new) GTX Titan graphics cards strong candidates for the Hot Rod and God Box. This also knocks down prices on older GPUs that are relevant to the Budget Box, although the price drops aren't huge. It's not as exciting as an entirely new generation of GPUs, but the extra performance is always nice.

Other areas are a little more dull; incremental improvements in solid-state disks (SSDs) hit around the last update, and the shiny new 4K monitors now trickling into consumer hands are unfortunately extremely expensive. They are far out of the typical System Guide builder's range right now.

We continue to focus on the tangible benefits for the System Guide: better overall performance and performance for your dollar (otherwise known as value) while trying to stay within the average enthusiast's budget for a new system.

System Guide Fundamentals

The main Ars System Guide is a three-system affair, with the traditional Budget Box, Hot Rod, and God Box addressing three different price points in the market, from modest to slightly crazy. The main System Guide's boxes are general-purpose systems with a strong gaming focus, which results in fairly well-rounded machines suitable for most enthusiast use. They also make a solid starting point to spin off into a variety of configurations.

At the low end of the scale, the Budget Box is still a capable gaming machine despite its reasonable price tag ($600-$800). A capable discrete video card gives it some punch for gaming, while sufficient CPU power and memory ensure it's good for everything else. The Hot Rod represents what we think is a solid higher-end general-purpose PC that packs plenty of gaming performance. We've adjusted the price tag a few times recently, from $1400-1600 down to $1200-1400... and now, perhaps back up to the old point to reflect new capabilities and jumps in performance. The God Box remains a showcase or a starting point for workstation builders or enthusiasts who believe in overkill with a capital "O." It may not do exactly what you want, but it should be an excellent starting point for anyone with a good idea of their truly high-end computing needs, be it gaming to excess after winning the lottery, taking advantage of GPU computing, or storing and editing tons of HD video.

For the short version: the Budget Box is for those who are looking for the most bang for their buck. The Hot Rod is for enthusiasts with a bigger budget who still know that there's a sweet spot between performance and price. The God Box, as excessive as it may be, always has a slight dose of restraint (as mentioned in past guides, "God wouldn't be an indulgent person").

Each box has a full set of recommendations, down to the mouse, keyboard, and speakers. As these are general-purpose boxes, we skip things like game controllers and $100 gaming mice, although the God Box gets something a little nicer. We also discuss alternative configurations and upgrades.

Operating Systems

For the typical System Guide user, this comes down to Windows or Linux. Windows occupies the vast majority of desktop space, with Windows 8 available and 8.1 now on the way. Standard Windows 8 does fine for most, while Windows 8 Professional includes additional features such as BitLocker, Remote Desktop Connection, and domain support that home users may not need. Windows 7 Home Premium and Professional sport similar differences, and these are equally viable. Some find the Windows 8 UI changes unfortunate and prefer Windows 7, but we don't have a strong preference either way.

God Box builders sticking with Windows will want at least Windows 7 Professional or Windows 8 Professional (for a desktop OS) due to memory and CPU socket limits on some versions, or specific versions of Windows Server 2008 R2 (HPC, Enterprise, or Datacenter) or Windows Server 2012 (Standard, Datacenter, or Hyper-V) for their support for large amounts of memory.

Microsoft has a detailed list of Windows memory limits.

Linux is a solid alternative, although support for some applications is somewhat limited. Gamers in particular are probably stuck with Windows for most mainstream games. If you do go the Linux route, Linux Mint, Ubuntu, Fedora, Mageia, Debian, Arch Linux, and tons of others are around.

We don't attempt to cover every operating system or front-end here. We don't begin to touch media center-oriented ones (such as XBMC, MediaPortal, or Plex), storage-focused ones (such as FreeNAS), or many others outside the focus and scope of the main three-box System Guide. So poke and tinker away; there's a lot more we can't begin to cover.

Those trying to build hackintosh systems for OS X are also outside the scope of the main three-box System Guide. If that is your goal, you should give our own Mac Achaia as well as sites such as tonymacx86 and OSx86 a look.

Intended as a solid foundation for a moderate gaming box that is also suitable for all-around use, the Budget Box is full of compromises that we believe are good (or at least reasonable). Moving to the upper end of our preferred price range nets a box with decent performance at 1920×1080 in modern games, 8GB of memory, and an SSD for what amounts to an extremely snappy setup for the money.

Individual builders can change components to better fit their specific needs. In particular, removing the video card would make the Budget Box a nice starting point for an office PC or HTPC, or a video card downgrade could better fit a user with more modest performance requirements.

CPU, motherboard, and memory

Intel Core i3-3220 retail

MSI H77MA-G43 motherboard

Crucial 8GB DDR3-1600 CL9 1.5v memory

The Budget Box processor choice has been an interesting one these past few years. If we inch closer to the upper end of the Budget Box price range, an Intel Core i3-3220 (3.3GHz, 3MB cache, 55W TDP) barely squeezes in. It faces a formidable challenge from AMD's new Richland APUs, particularly the A10-6700 and A10-6800K. While AMD does well in multithreaded applications, overall performance works out fairly close, and in gaming, Intel's Core i3 maintains the lead. Given the Budget Box's slight tilt towards gaming, Intel's Core i3-3220 makes sense. Factor in power consumption and things definitely go Intel's way.

Haswell in dual-core Core i3 and Pentium variants isn't expected until Q3 2013, so unfortunately it is not yet a factor in the Budget Box. It should further lower platform power consumption and improve performance, which will keep things interesting.

Aiming a little lower, the Pentium G2130/G2120/G2020 and Celeron G1620/G1610 go up against AMD's lower-end A4, A6, and A8-series Richland APUs. For a general-purpose box the differences are small, although builders who don't need the additional performance of a discrete graphics card will find that AMD's onboard graphics are vastly superior to Intel's for light-duty gaming. As the Budget Box performance requirements mandate a discrete GPU, the impressive integrated performance of AMD's APUs is not a factor in the Budget Box.

Balancing features, performance, and cost is a little more delicate with an Intel board in this price range. The H77 chipset does not permit overclocking, but it supports onboard video (should any Budget Box builder skip a discrete video card), SATA 6Gbps, and USB 3.0, which is finally standard on Intel 7-series chipsets. Switching to a Z77-based motherboard allows overclocking but costs a few bucks more. This is best done with a K-series processor, which is considerably beyond the Budget Box's price range.

AMD builders will want to look at AMD A85-based boards with Socket FM2 for AMD Trinity APUs. SATA 6Gbps and USB 3.0 are standard everywhere now and finding a suitable board is generally not a big deal.

The MSI H77MA-G43 motherboard may be fairly basic, but it supports four DDR3 slots, two PCI-e 2.0 x16 slots (x16 and x4 electrical), two PCI-e 2.0 x1 slots, two SATA 6Gbps ports, four SATA 3Gbps ports, two USB 3.0 ports (plus more internally), VGA and DVI-D out, Ethernet, and all the basics.

Heatsink: make sure to get a retail boxed CPU, as the included heatsink/fan is more than adequate. Overclockers buying a Z87 board can look at heatsinks such as the Coolermaster Hyper 212+ without worrying too much about cost. And memory, right now, is extremely cheap. We stick with major name-brand DDR3-1600 at the JEDEC-standard 1.5v for optimal compatibility. 8GB of memory is so cheap it's worth the extra cost in the Budget Box to just not have to worry about it in the future.

Which operating systems do "professional" hackers use? 13 hacker OSes

Which operating system do "real" hackers use? "Real" here means cybercriminals and hacktivists, not security researchers and white hat hackers. You can call these "real" hackers black or gray hat hackers, because they use their skills against governments, media organizations and businesses, either for profit or as a protest. Such black hat hackers need an operating system that cannot be traced back to them while still offering better features and free hacking tools. So which operating system do black hat or gray hat hackers use? Although there are thousands of blog articles saying that hackers prefer the Linux operating system for their black hat operations, that may not be the case. Many high-risk hacks show that some "real hackers" use MS Windows to hide in plain sight. Windows is the intended but, for most hackers, hated target, and it lets hackers work with Windows-only environments such as the .NET Framework and Windows-based malware, viruses or Trojans. They use a cheap burner laptop bought from Craigslist to build a lightweight bootable image that cannot be traced back to them. This type of burner laptop has USB and SD card storage options, which makes the storage easy to hide, destroy or even swallow if needed. Many go a step further and use read-only partitions for the operating system plus a second writable space for limited persistent local storage. Some paranoid types add a hotkey panic button for quick RAM scrubbing to avoid leaving any trace behind. The new bootable ghost OS image is then written to an encrypted SD card. The burner laptop is dismantled and thoroughly destroyed. Hackers pay special attention to the physical destruction of the hard drive, network card and RAM. Sometimes they even use a blowtorch or a hammer to destroy such computers.

While some black hat hackers prefer the Windows operating system, many others choose the following Linux distributions:

1. Kali Linux

Kali Linux is a Debian-derived Linux distribution designed for digital forensics and penetration testing. It is maintained and funded by Offensive Security Ltd. Mati Aharoni and Devon Kearns of Offensive Security developed it by rewriting BackTrack. Kali Linux is the most versatile and extended penetration testing distro. Kali is kept updated, and its tools are available for many different platforms, such as VMware and ARM.

2. Parrot-sec forensic OS

Parrot Security is an operating system based on Debian GNU/Linux, mixed with Frozenbox OS and Kali Linux, to provide the best penetration and security testing experience. It is an operating system for IT security and penetration testing developed by the Frozenbox development team. It is a GNU/Linux distribution based on Debian and mixed with Kali.

3. DEFT

DEFT is an Ubuntu customization with a collection of computer forensics programs and documents created by thousands of individuals, teams and companies. Each of these works may come under a different license. Its license policy describes the process followed to determine which software is shipped by default on the DEFT installation CD.

4. Live Hacking OS

Live Hacking OS is a Linux distribution that comes with a large package of tools useful for ethical hacking and penetration testing. It includes the GNOME graphical interface built in. A second variant is command line only and has very low hardware requirements.

5. Samurai Web Security Framework

The Samurai Web Testing Framework is a live Linux environment that has been preconfigured to function as a web penetration testing environment. The CD contains the best of the free and open source tools that focus on testing and attacking websites. In developing this environment, we based our selection of tools on the ones we use in our own security practice. We have included the tools used in all four steps of a web pen test.

6. Network Security Toolkit (NST)

The Network Security Toolkit (NST) is a bootable live CD based on Fedora Core. The toolkit was designed to provide easy access to best-of-breed open source network security applications and should run on most x86 platforms. The main purpose of developing this toolkit was to provide the network security administrator with a comprehensive set of open source network security tools.

7. NodeZero

It is said that necessity is the mother of all inventions, and NodeZero Linux is no different. NodeZero team of testers and developers, who gathered this incredible Distro. Penetration testing distributions are historically the concept of "live" to have used Linux system, which really means trying to make any lasting effect on a system. Ergo, all changes are gone after the reboot and run from media such as hard drives and USB memory cards. But everything can be for occasional useful tests its usefulness can be explored if the test regularly. It is also believed that the "living system" simply does not scale well in an environment of solid evidence.

8. Pentoo

Pentoo is a live CD and live USB designed for penetration testing and security assessment. Based on Gentoo Linux, Pentoo is available as both a 32-bit and a 64-bit installable live CD. Pentoo is also available as an overlay for an existing Gentoo installation. It features Wi-Fi drivers patched for packet injection, GPGPU cracking software, and a variety of tools for penetration testing and security assessment. The Pentoo kernel includes grsecurity and PaX hardening and other patches, with binaries compiled from a hardened toolchain and the latest nightly versions of some tools.

9. GnackTrack

GnackTrack is an open and free project that merges penetration testing tools with the GNOME Linux desktop. GnackTrack is a live (and installable) Linux distribution designed for penetration testing and is based on Ubuntu.

GnackTrack comes with several tools that are really useful for effective penetration testing, including Metasploit, Armitage, w3af, and other wonderful tools.

10. Blackbuntu

Blackbuntu is a Linux distribution designed for penetration testing, developed especially for security training students and information security professionals. It is a penetration testing distribution with the GNOME desktop environment. It is currently built on Ubuntu 10.10, with Back|Track as a reference.

11. Knoppix STD

Knoppix STD (Security Tools Distribution) is a Linux live CD distribution based on Knoppix that focuses on computer security tools. It contains GPL-licensed tools in the following categories: authentication, password cracking, encryption, forensics, firewalls, honeypots, intrusion detection systems, network utilities, penetration, sniffers, assemblers, vulnerability assessment, and wireless networking. Knoppix STD version 0.1 was published on January 24, 2004, based on Knoppix 3.2. Since then the project has stalled, and it lacks updated drivers and packages. No release date for version 0.2 has been announced. The list of tools is on the official website.

12. Weakerth4n

Weakerth4n is a penetration testing distribution built on Debian Squeeze. It uses Fluxbox as its desktop environment. This operating system is particularly suited to WiFi hacking because it contains a large number of wireless tools. It has a very clean website and a dedicated forum.

The tools cover: wireless attacks, SQL hacking, Cisco exploitation, password cracking, web hacking, Bluetooth, VoIP hacking, social engineering, information gathering, fuzzing, Android hacking, networking, and creating shells.

13. Cyborg Hawk

Many hackers consider this the most advanced and powerful penetration testing distribution yet. It comes with an up-to-date collection of tools for professional ethical hackers and cyber security experts. It has more than 700 tools, whereas Kali has more than 300, along with dedicated tools and a menu for mobile security and malware analysis. It also invites direct comparison with Kali, since it aims to be a better operating system than Kali.

via Blogger http://ift.tt/2l3qTT0
