#360 Spatial Sound

Text

Sony Unveils INZONE Buds – Truly Wireless Gaming Earbuds

Sony has released INZONE Buds – new truly wireless gaming earbuds packed with Sony’s renowned audio technology designed to help you win. INZONE Buds offer both PC and console gamers an immersive gaming experience thanks to personalised sound, unrivalled 12 hours of battery life and low latency.

Accompanying this innovation is the INZONE H5 wireless headset, which offers up to an astonishing 28…

#360 Spatial Sound#3D sound positioning#active noise cancellation#advanced spatial reproduction#AI-based noise reduction#console gaming#crystal-clear communication#customisable tap functions#Dynamic Driver X#esports collaboration#esports performance#extended battery life#Fnatic partnership#immersive gaming experience#INZONE H5 wireless headset#INZONE H9 wireless headset#lightweight design#Long Battery Life#low latency#new Black colour option#noise cancelling#PC gaming#personalised sound#professional gaming advice#Sony audio technology#Sony INZONE Buds#Sony new releases#Sound Field Optimisation#Sound Tone Personalisation#USB-C dongle connection

0 notes

Text

[231228] Sound BOMB 360° Behind Photo | Make ATEEZ’s voice more vivid with a spatial sound live that you can feel🎧 Like every day we spend with ATINY, it was a #DreamyDay day that felt like a dream☁

167 notes

Text

Apple’s Mysterious Fisheye Projection

If you’ve read my first post about Spatial Video, the second about Encoding Spatial Video, or if you’ve used my command-line tool, you may recall a mention of Apple’s mysterious “fisheye” projection format. Mysterious because they’ve documented a CMProjectionType.fisheye enumeration with no elaboration, they stream their immersive Apple TV+ videos in this format, yet they’ve provided no method to produce or play back third-party content using this projection type.

Additionally, the format is undocumented, they haven’t responded to an open question on the Apple Discussion Forums asking for more detail, and they didn’t cover it in their WWDC23 sessions. As someone who has experience in this area – and a relentless curiosity – I’ve spent time digging into Apple’s fisheye projection format, and this post shares what I’ve learned.

As stated in my prior post, I am not an Apple employee, and everything I’ve written here is based on my own history, experience (specifically my time at immersive video startup, Pixvana, from 2016-2020), research, and experimentation. I’m sure that some of this is incorrect, and I hope we’ll all learn more at WWDC24.

Spherical Content

Imagine sitting in a swivel chair and looking straight ahead. If you tilt your head to look straight up (at the zenith), that’s 90 degrees. Likewise, if you were looking straight ahead and tilted your head all the way down (at the nadir), that’s also 90 degrees. So, your reality has a total vertical field-of-view of 90 + 90 = 180 degrees.

Sitting in that same chair, if you swivel 90 degrees to the left or 90 degrees to the right, you’re able to view a full 90 + 90 = 180 degrees of horizontal content (your horizontal field-of-view). If you spun your chair all the way around to look at the “back half” of your environment, you would spin past a full 360 degrees of content.

When we talk about immersive video, it’s common to only refer to the horizontal field-of-view (like 180 or 360) with the assumption that the vertical field-of-view is always 180. Of course, this doesn’t have to be true, because we can capture whatever we’d like, edit whatever we’d like, and play back whatever we’d like.

But when someone says something like VR180, they really mean immersive video that has a 180-degree horizontal field-of-view and a 180-degree vertical field-of-view. Similarly, 360 video is 360-degrees horizontally by 180-degrees vertically.

Projections

When immersive video is played back in a device like the Apple Vision Pro, the Meta Quest, or others, the content is displayed as if a viewer’s eyes are at the center of a sphere watching video that is displayed on its inner surface. For 180-degree content, this is a hemisphere. For 360-degree content, this is a full sphere. But it can really be anything in between; at Pixvana, we sometimes referred to this as any-degree video.

It's here where we run into a small problem. How do we encode this immersive, spherical content? All the common video codecs (H.264, VP9, HEVC, MV-HEVC, AVC1, etc.) are designed to encode and decode data to and from a rectangular frame. So how do you take something like a spherical image of the Earth (i.e. a globe) and store it in a rectangular shape? That sounds like a map to me. And indeed, that transformation is referred to as a map projection.

Equirectangular

While there are many different projection types that each have useful properties in specific situations, spherical video and images most commonly use an equirectangular projection. This is a very simple transformation to perform (it looks more complicated than it is). Each x location on a rectangular image represents a longitude value on a sphere, and each y location represents a latitude. That’s it. Because of these relationships, this kind of projection can also be called a lat/long.
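The x-to-longitude and y-to-latitude relationship can be sketched in a few lines. This is only an illustration: the function names are made up, and the conventions (longitude 0 at the horizontal center, latitude +90 at the top row, sampling at pixel centers) are assumptions rather than anything a particular player requires.

```python
def pixel_to_lon_lat(x, y, width, height, h_fov=360.0, v_fov=180.0):
    """Map the center of pixel (x, y) to (longitude, latitude) in degrees.

    Assumed conventions: longitude 0 is the horizontal center of the image,
    latitude +v_fov/2 is the top edge, and y grows downward.
    """
    lon = ((x + 0.5) / width - 0.5) * h_fov
    lat = (0.5 - (y + 0.5) / height) * v_fov
    return lon, lat


def lon_lat_to_pixel(lon, lat, width, height, h_fov=360.0, v_fov=180.0):
    """Inverse mapping: (longitude, latitude) in degrees to fractional pixel coords."""
    x = (lon / h_fov + 0.5) * width - 0.5
    y = (0.5 - lat / v_fov) * height - 0.5
    return x, y
```

Passing `h_fov=180.0` gives the same mapping for a half-equirect frame; nothing else changes.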

Imagine “peeling” thin one-degree-tall strips from a globe, starting at the equator. We start there because it’s the longest strip. To transform it to a rectangular shape, start by pasting that strip horizontally across the middle of a sheet of paper (in landscape orientation). Then, continue peeling and pasting up or down in one-degree increments. Be sure to stretch each strip to be as long as the first, meaning that the very short strips at the north and south poles are stretched a lot. Don’t break them! When you’re done, you’ll have a 360-degree equirectangular projection that looks like this.

If you did this exact same thing with half of the globe, you’d end up with a 180-degree equirectangular projection, sometimes called a half-equirect. Performed digitally, it’s common to allocate the same number of pixels to each degree of image data. So, for a full 360-degree by 180-degree equirect, the rectangular video frame would have an aspect ratio of 2:1 (the horizontal dimension is twice the vertical dimension). For 180-degree by 180-degree video, it’d be 1:1 (a square). Like many things, these aren’t hard and fast rules, and for technical reasons, sometimes frames are stretched horizontally or vertically to fit within the capabilities of an encoder or playback device.

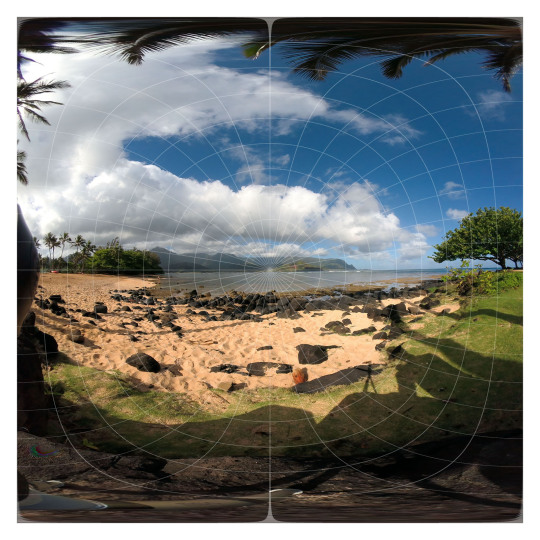

This is a 180-degree half equirectangular image overlaid with a grid to illustrate its distortions. It was created from the standard fisheye image further below. Watch an animated version of this transformation.

What we’ve described so far is equivalent to monoscopic (2D) video. For stereoscopic (3D) video, we need to pack two of these images into each frame…one for each eye. This is usually accomplished by arranging two images in a side-by-side or over/under layout. For full 360-degree stereoscopic video in an over/under layout, this makes the final video frame 1:1 (because we now have 360 degrees of image data in both dimensions). As described in my prior post on Encoding Spatial Video, though, Apple has chosen to encode stereo video using MV-HEVC, so each eye’s projection is stored in its own dedicated video layer, meaning that the reported video dimensions match that of a single eye.
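To make the aspect-ratio arithmetic concrete, here is a small sketch that computes frame dimensions from a field-of-view and a fixed pixels-per-degree budget. The helper name and the layout strings are hypothetical, and a real encoder may stretch or pad frames as noted earlier.

```python
def frame_size(h_fov, v_fov, pixels_per_degree, layout="mono"):
    """Return (width, height) of a packed equirectangular frame.

    layout is one of "mono", "side-by-side", or "over-under"
    (illustrative labels, not a standard API).
    """
    width = round(h_fov * pixels_per_degree)
    height = round(v_fov * pixels_per_degree)
    if layout == "side-by-side":
        width *= 2   # two eye images next to each other
    elif layout == "over-under":
        height *= 2  # two eye images stacked vertically
    elif layout != "mono":
        raise ValueError(f"unknown layout: {layout}")
    return width, height


# Mono 360 is 2:1, mono 180 is 1:1, and stereo 360 over/under is 1:1.
print(frame_size(360, 180, 10))                # (3600, 1800)
print(frame_size(180, 180, 10))                # (1800, 1800)
print(frame_size(360, 180, 10, "over-under"))  # (3600, 3600)
```

With MV-HEVC, each eye lives in its own layer, so the reported dimensions correspond to the "mono" case.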

Standard Fisheye

Most immersive video cameras feature one or more fisheye lenses. For 180-degree stereo (the short way of saying stereoscopic) video, this is almost always two lenses in a side-by-side configuration, separated by ~63-65mm, very much like human eyes.

The raw frames that are captured by these cameras are recorded as fisheye images where each circular image area represents ~180 degrees (or more) of visual content. In most workflows, these raw fisheye images are transformed into an equirectangular or half-equirectangular projection for final delivery and playback.

This is a 180 degree standard fisheye image overlaid with a grid. This image is the source of the other images in this post.

Apple’s Fisheye

This brings us to the topic of this post. As I stated in the introduction, Apple has encoded the raw frames of their immersive videos in a “fisheye” projection format. I know this, because I’ve monitored the network traffic to my Apple Vision Pro, and I’ve seen the HLS streaming manifests that describe each of the network streams. This is how I originally discovered and reported that these streams – in their highest quality representations – are ~50Mbps, HDR10, 4320x4320 per eye, at 90fps.

While I can see the streaming manifests, I am unable to view the raw video frames, because all the immersive videos are protected by DRM. This makes perfect sense, and while I’m a curious engineer who would love to see a raw fisheye frame, I am unwilling to go any further. So, in an earlier post, I asked anyone who knew more about the fisheye projection type to contact me directly. Otherwise, I figured I’d just have to wait for WWDC24.

Lo and behold, not a week or two after my post, an acquaintance introduced me to Andrew Chang who said that he had also monitored his network traffic and noticed that the Apple TV+ intro clip (an immersive version of this) is streamed in-the-clear. And indeed, it is encoded in the same fisheye projection. Bingo! Thank you, Andrew!

Now, I can finally see a raw fisheye video frame. Unfortunately, the frame is mostly black and featureless, including only an Apple TV+ logo and some God rays. Not a lot to go on. Still, having a lot of experience with both practical and experimental projection types, I figured I’d see what I could figure out. And before you ask, no, I’m not including the actual logo, raw frame, or video in this post, because it’s not mine to distribute.

Immediately, just based on logo distortions, it’s clear that Apple’s fisheye projection format isn’t the same as a standard fisheye recording. This isn’t too surprising, given that it makes little sense to encode only a circular region in the center of a square frame and leave the remainder black; you typically want to use all the pixels in the frame to send as much data as possible (like the equirectangular format described earlier).

Additionally, instead of seeing the logo horizontally aligned, it’s rotated 45 degrees clockwise, aligning it with the diagonal that runs from the upper-left to the lower-right of the frame. This makes sense, because the diagonal is the longest dimension of the frame, and as a result, it can store more horizontal (post-rotation) pixels than if the frame wasn’t rotated at all.

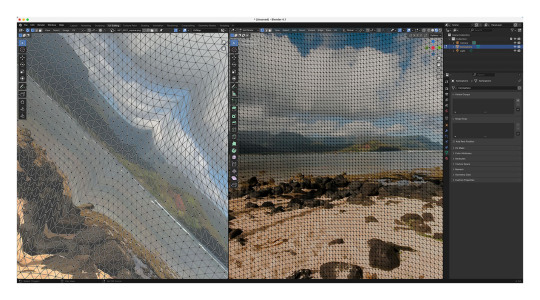

This is the same standard fisheye image from above transformed into a format that seems very similar to Apple’s fisheye format. Watch an animated version of this transformation.

Likewise, the diagonal from the lower-left to the upper-right represents the vertical dimension of playback (again, post-rotation) providing a similar increase in available pixels. This means that – during rotated playback – the now-diagonal directions should contain the least amount of image data. Correctly-tuned, this likely isn’t visible, but it’s interesting to note.
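As a rough check on the "longest dimension" argument, the diagonal of a square frame is the side length times the square root of two. The sketch below is plain geometry with a hypothetical helper name; it says nothing about how Apple actually samples pixels along those diagonals.

```python
import math


def diagonal_gain(side):
    """Return (diagonal length in pixels, diagonal / side) for a square frame."""
    diagonal = math.hypot(side, side)
    return diagonal, diagonal / side


# For the 4320x4320 per-eye frame mentioned earlier, the diagonal is
# roughly 41% longer than an edge.
length, gain = diagonal_gain(4320)
print(round(length), round(gain, 3))
```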

More Pixels

You might be asking, where do these “extra” pixels come from? I mean, if we start with a traditional raw circular fisheye image captured from a camera and just stretch it out to cover a square frame, what have we gained? Those are great questions that have many possible answers.

This is why I liken video processing to turning knobs in a 747 cockpit: if you turn one of those knobs, you more-than-likely need to change something else to balance it out. Which leads to turning more knobs, and so on. Video processing is frequently an optimization problem just like this. Some initial thoughts:

It could be that the source video is captured at a higher resolution, and when transforming the video to a lower resolution, the “extra” image data is preserved by taking advantage of the square frame.

Perhaps the camera optically transforms the circular fisheye image (using physical lenses) to fill more of the rectangular sensor during capture. This means that we have additional image data to start and storing it in this expanded fisheye format allows us to preserve more of it.

Similarly, if we record the image using more than two lenses, there may be more data to preserve during the transformation. For what it’s worth, it appears that Apple captures their immersive videos with a two-lens pair, and you can see them hiding in the speaker cabinets in the Alicia Keys video.

There are many other factors beyond the scope of this post that can influence the design of Apple’s fisheye format. Some of them include distortion handling, the size of the area that’s allocated to each pixel, where the “most important” pixels are located in the frame, how high-frequency details affect encoder performance, how the distorted motion in the transformed frame influences motion estimation efficiency, how the pixels are sampled and displayed during playback, and much more.

Blender

But let’s get back to that raw Apple fisheye frame. Knowing that the image represents ~180 degrees, I loaded up Blender and started to guess at a possible geometry for playback based on the visible distortions. At that point, I wasn’t sure if the frame encodes faces of the playback geometry or if the distortions are related to another kind of mathematical mapping. Some of the distortions are more severe than expected, though, and my mind couldn’t imagine what kind of mesh corrected for those distortions (so tempted to blame my aphantasia here, but my spatial senses are otherwise excellent).

One of the many meshes and UV maps that I’ve experimented with in Blender.

Radial Stretching

If you’ve ever worked with projection mappings, fisheye lenses, equirectangular images, camera calibration, cube mapping techniques, and so much more, Google has inevitably led you to one of Paul Bourke’s many fantastic articles. I’ve exchanged a few e-mails with Paul over the years, so I reached out to see if he had any insight.

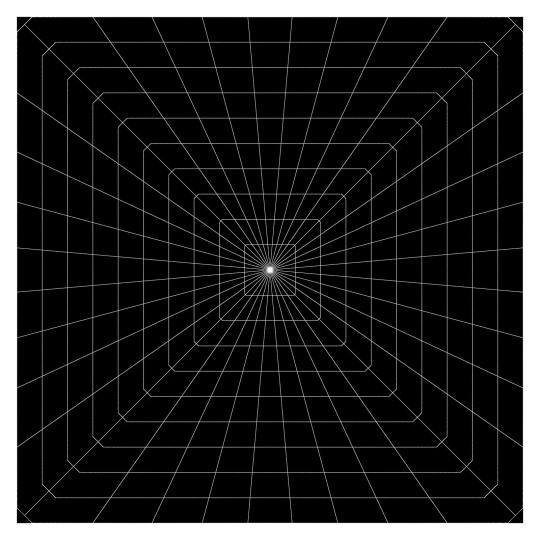

After some back-and-forth discussion over a couple of weeks, we both agreed that Apple’s fisheye projection is most similar to a technique called radial stretching (with that 45-degree clockwise rotation thrown in). You can read more about this technique and others in Mappings between Sphere, Disc, and Square and Marc B. Reynolds’ interactive page on Square/Disc mappings.

Basically, though, imagine a traditional centered, circular fisheye image that touches each edge of a square frame. Now, similar to the equirectangular strip-peeling exercise I described earlier with the globe, imagine peeling one-degree wide strips radially from the center of the image and stretching those along the same angle until they touch the edge of the square frame. As the name implies, that’s radial stretching. It’s probably the technique you’d invent on your own if you had to come up with something.
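A minimal sketch of textbook radial stretching between a unit disc and the square [-1, 1] x [-1, 1] follows. It is not Apple's actual mapping: it leaves out the 45-degree rotation and any special handling along the diagonals, and the function names are made up for illustration.

```python
import math


def disc_to_square(u, v):
    """Stretch a point in the unit disc radially so the disc fills the square.

    Along a given angle, the square's edge is 1 / max(|cos|, |sin|) away from
    the center, so each point is pushed outward by that factor.
    """
    r = math.hypot(u, v)
    if r == 0.0:
        return 0.0, 0.0
    scale = r / max(abs(u), abs(v))
    return u * scale, v * scale


def square_to_disc(x, y):
    """Inverse: pull a point in the square back onto the unit disc."""
    r = math.hypot(x, y)
    if r == 0.0:
        return 0.0, 0.0
    scale = max(abs(x), abs(y)) / r
    return x * scale, y * scale
```

Points on the horizontal and vertical axes do not move at all, while points on the diagonals move the most, which is why the corners of the square are where this mapping distorts the image hardest.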

By performing the reverse of this operation on a raw Apple fisheye frame, you end up with a pretty good looking version of the Apple TV+ logo. But, it’s not 100% correct. It appears that there is some additional logic being used along the diagonals to reduce the amount of radial stretching and distortion (and perhaps to keep image data away from the encoded corners). I’ve experimented with many approaches, but I still can’t achieve a 100% match. My best guess so far uses simple beveled corners, and this is the same transformation I used for the earlier image.

It's also possible that this last bit of distortion could be explained by a specific projection geometry, and I’ve iterated over many permutations that get close…but not all the way there. For what it’s worth, I would be slightly surprised if Apple was encoding to a specific geometry because it adds unnecessary complexity to the toolchain and reduces overall flexibility.

While I have been able to play back the Apple TV+ logo using the techniques I’ve described, the frame lacks any real detail beyond its center. So, it’s still possible that the mapping I’ve arrived at falls apart along the periphery. Guess I’ll continue to cross my fingers and hope that we learn more at WWDC24.

Conclusion

This post covered my experimentation with the technical aspects of Apple’s fisheye projection format. Along the way, it’s been fun to collaborate with Andrew, Paul, and others to work through the details. And while we were unable to arrive at a 100% solution, we’re most definitely within range.

The remaining questions I have relate to why someone would choose this projection format over half-equirectangular. Clearly Apple believes there are worthwhile benefits, or they wouldn’t have bothered to build a toolchain to capture, process, and stream video in this format. I can imagine many possible advantages, and I’ve enumerated some of them in this post. With time, I’m sure we’ll learn more from Apple themselves and from experiments that all of us can run when their fisheye format is supported by existing tools.

It's an exciting time to be revisiting immersive video, and we have Apple to thank for it.

As always, I love hearing from you. It keeps me motivated! Thank you for reading.

12 notes

Text

[SUB] [Sound BOMB 360˚] NMIXX(엔믹스) 'Run For Roses' | 싸운드밤 삼육공 공간음향 라이브 | Spatial Audio 4K LIVE

8 notes

Text

youtube

[SUB] [Sound BOMB 360˚] ATEEZ(에이티즈) '꿈날(Dreamy Day)' | 싸운드밤 삼육공 공간음향 라이브 | Spatial Audio 4K LIVE

Credit: 스브스케이팝 X INKIGAYO

#ateez#hongjoong#kim hongjoong#seonghwa#park seonghwa#jeong yunho#yunho#yeosang#kang yeosang#san#choi san#mingi#song mingi#wooyoung#jung wooyoung#jongho#choi jongho#kq entertainment#p: youtube#sound bomb 360#Youtube

5 notes

Text

youtube

[Sound BOMB 360˚] VIXX(빅스) 'Amnesia' | 싸운드밤 삼육공 공간음향 라이브 | Spatial Audio 4K LIVE | @SBSKPOP

#vixx#taekwoon#leo#jung taekwoon#vixx leo#jaehwan#ken#lee jaehwan#vixx ken#sanghyuk#han sanghyuk#hyuk#vixx hyuk#vixx 5th mini album <continuum>#231127#*v:p#🖤#💕#🐻#Youtube

6 notes

Text

The Inversion of Melisma

“This isn’t spoken word. It’s the reinvention of Sugar Hill.” - Sole

Originally posted at bluevelvetreview.com

You can’t discuss recitation in America without interfacing with Rap music. I mean. You can. But it would be disingenuous to do so. Not that I’m totally opposed to being disingenuous. There are times when being disingenuous is totally necessary. Just not in this particular case. When I’m discussing music theory and shit.

But what makes rap Rap exactly. No. Let’s. Just this one time. In the service of actually discussing the purely musical components of what we deem quote-unquote “rap.” Let’s strip the subjectivity from the equation completely. Subjectivity is. Honestly? It’s so 20th Century to me. This notion of so-called personal experience. Ugh. It’s so sterile. This is perhaps post-subjectivity.

Anyway. What makes rap Rap? Musically? Well it’s obviously speed. It’s tempo. I mean. Okay. To some extent it’s rhyme. It’s the concept of the bar. These are true. But it’s mostly tempo. It’s speech. But contracted so that it operates at an accelerated pace. Obviously the speech needs to be stylistic. In one way or another. It needs to be good. But beyond that. What chiefly distinguishes rap from. For example. Spoken word poetry. Is that it has an increased tempo. And that tempo has a relationship with a piece of music. Even if it’s an electronic loop (most of the time). Now. Sure. You can make an argument that a slower paced delivery. With a temporal relationship to a beat. That that’s still rap. Sure. I don’t disagree. That’s a valid exception to the rule. People can and do rap at slower tempos.

But what about melisma? Isn’t melisma. From Byzantine chant to the Qurra of the Islamic world to the Gospel singers of America. Isn’t that what people generally view as an apex of sorts? An ecstasy of sorts? Where the signifier of the syllable within the grammatical structure of language gets stretched into pure sound? Becomes perhaps unintelligible. Or at least less intelligible. But. Isn’t the inverse of that process double. Triple. Quadruple time rap? Except rather than an expansion of the signifier into (relative) unintelligibility we have the contraction of the signifier into (relative) unintelligibility? Doesn’t that. Make perfect sense conceptually?

I think it does. The most quote-unquote technical rappers are the ones who. Generally speaking. Are on the faster side. Big Daddy Kane and Myka 9 started this like over thirty years ago now. And the realm of rap is. Whether you like it or not. Where the most advanced recitative singing and/or vocalization is done in the English language. The English language. With its 44 phonemes. And. What? Eleven vowel sounds? Is preternaturally disposed to the contraction of itself. As opposed to the expansion that the Romance languages are. Consonants are everywhere in English.

Yet one place where Rap has. At least very rarely. Dared to go is outside of this concept of bar. The vast (vast!) majority of rap is constructed on this concept. That the relationship between the vocal and the music is one of syncopation on the bar level. This is in the vernacular. The line of the rapper is supposed to match up with the bar of music. Obviously you should rhyme too. But the rhyme should always. Ideally. Land on the same snare. Or kick. Of each line of music. This is essentially a spatial relationship. The lines extend the same length. Length resides in space.

But you could have a temporal relationship too. Right? My idea is that. I don’t know. Maybe you write unequal lines of text. But the vocal and the music exist in a temporal relationship. Now that relationship doesn’t necessarily need to be 1:1. In fact I think it’s better if it’s not. But if you have a 4/4 beat at 90 BPM then you could equate each syllable of text to. Say. A 16th note. Which at 90 BPM would impute 360 syllables per minute rapped. So if you’re rapping at or around that rate. Then you’re in a 4x temporal relationship with the beat.

It’s really that simple! You could increase the BPM of that 4/4 beat to 180 BPM. The vocals can stay static. You’d be at a 2x relationship. Or syllables would be essentially 8th notes. This is audible. Even as the signifier becomes less. Yet in this instance there’s another inversion. There’s an inverted melisma. But compositionally. Realistically. You’re probably setting the BPM based on the vocal. As opposed to selecting a beat and then constructing a verse to rap over it at that set tempo.
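The arithmetic here is easy to check. A quick sketch, with hypothetical function names, and assuming the quarter note carries the beat in 4/4 so that a 16th note is a quarter of a beat:

```python
def syllables_per_minute(bpm, syllables_per_beat):
    """Syllable rate when each beat is split into equal syllable slots."""
    return bpm * syllables_per_beat


def syllables_per_beat(syllable_rate, bpm):
    """The temporal ratio between a fixed vocal rate and the beat."""
    return syllable_rate / bpm


# One syllable per 16th note at 90 BPM: 4 slots per beat.
rate = syllables_per_minute(90, 4)
print(rate)                           # 360
print(syllables_per_beat(rate, 90))   # 4.0, the 4x relationship
print(syllables_per_beat(rate, 180))  # 2.0, syllables land on 8th notes
```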

But to fit these many syllables into a verse? How uneven should they be? I’d personally say they should occupy the 8th interval of the Fibonacci sequence. Sitting somewhere between 34 and 55 syllables. Each line. That gives each line enough variability. But not too much variability. And it packs enough syllables into a single line that velocity can be reached. But there’s still room to. You know. Breathe?

Melisma is the. Extended technique? That brings the signifier of language into. As Charlie Looker notably said. Not into abstraction. But into raw material. Raw sound. There is no longer any representational reference. This is done by slowing. Expanding. By assigning many notes to a single syllable. The inversion of this is the opposite. But circuitously ends at a very similar result. By assigning many syllables to a single note. Quadruple time. The Ison and Byzantine cantor. The text. Of course it’s textual. But it’s. Via melisma. Or the inversion of melisma. It achieves a breaking with the signifier. A text as raw sound. As opposed to signifying representational items. It’s not a coincidence that the inversion of melisma has achieved popularity in America.

In the English language. Melisma never sounds as good in English as it does in. Literally any other language. But especially the Romance languages. The Latin languages. Or the Semitic languages. But rap. The inversion of melisma. It never sounds quite as good in those Romance languages. The vowel-based languages. With fewer phonemes. They can’t stylize the inversion of melisma the way English can. Just as English. With 87 vowel sounds surrounded by infinite consonants. Can never get melisma to quite the technical level of Italian. Or Greek. Or Arabic. Yet this inversion of melisma. I mean. Melisma isn’t a bar-based style. Rap as we understand it today? It’s incapable of truly reaching appropriately unhinged levels of inverted Melisma. Melisma is naturally uneven. So to truly invert melisma. It requires a method to make the lines uneven. But still somehow relate to the specific music as well. Which has been shown here.

2 notes

Audio

Franck Biyong - Kunde - guitarist’s new “Afro Electronic Body Music Odyssey”

“Franck Biyong's new body of work is a fascinating foray

into industrial ambiance mixed and imagined exclusively

in 360 Reality Audio, a new immersive music experience

driven by spatial sound technology.

KUNDE does not get lost in the EBM barrel but sounds

contemporary, dark, and displaying a detailed kind of

craftsmanship in each shadowy beat thump. It is still

reminiscent of old school, yet it has a new age-sounding

edge in the aggressive instrumental moments and then

the open and calm expressionisms.

This African psychedelic flower of musical innovation's

beauty resembles some of King Crimson's improvisation

style with more successful subtle focus, and then follows

dramatic closure movements inspired by Krautrock's

trademark cosmic voyage-like tones.

KUNDE relies on electronic Afro meditation set against

towering synths, a sinister wall of distortion, hard-hitting

rhythms and melodic moods...A slow soothing space

climax that will send you off into another dimension…”

MUSICIANS

Vox & Percussion - Djanuno Dabo

Bass & Keyboards - Louis Sommer

Keyboards, Beats & Laptop - Benjamin Lafont

Guitars, Keyboards & Samples - FB

7 notes

Video

youtube

[VIDEO] 221014 Sound 360° | MAMAMOO - 'ILLELLA (일낼라)' Sound Remastered Spatial Acoustics Ver.ㅣKBS Music Bank

8 notes

Text

Sony Bravia Theatre U Neckband Speaker With 360-Degree Spatial Sound Support Launched in India | Daily Reports Online

The Sony Bravia Theatre U neckband speaker was launched in India on Monday. The wearable speaker is backed by Dolby Atmos and supports 360-degree spatial audio. It is equipped with an X-Balanced speaker unit and advanced noise isolation technologies. The lightweight design, together with the adjustable, cushioned material, is said to ensure a relaxed fit around the user's neck and shoulders. The Bravia…

0 notes

Photo

Transpanner PRO offers easy control over sound positioning and depth, enabling audio professionals to craft realistic 3D soundscapes with a standard 2-loudspeakers setup. Its sleek UI allows fine-tuning of sound angle, diffusion, and realistic motion parameters.

How to download:

Select a Version: Choose either the Demo (free) or Full Version ($49.90 or more).

Enter Your Email: Provide your email address to receive the plugin files and follow the instructions on the download page.

Install the Plugin: Open your DAW and add Transpanner PRO to a stereo track. Enjoy!

What's Inside:

Choose the Angle and Distance: Transpanner PRO's polar pad interface features a thumb that can be dragged around the pad's center, enabling precise control over sound direction. Set the position between any angle from 0 to 360 degrees and choose the distance. At 0% distance, only the dry sound is heard, while at 100% distance the far field parameters are fully engaged.

Far Field Parameters: The far field emphasizes sound diffusion and timbral characteristics. Transpanner PRO facilitates this with reverb and low/high cut sections. Achieve realistic distance effects and fine-tune reverb to range from smooth diffusion to highly reflective settings. The offset parameter shifts the reverb position at an angle offset from the dry sound, adding spatial complexity.

Doppler Effect: Simulate the Doppler effect with Transpanner PRO, which alters pitch and volume based on the relative motion of the sound source and listener. This effect can be finely tuned, offering control over the intensity of the Doppler shift for dynamic and evolving soundscapes.

Additional parameters:

Input: Choose the input to be mono or stereo.

Gains: The gains of the far and near fields can be adjusted with the gain and far gain control dials.

Inversion button: The inversion button switches the near and far fields on the pad, so the far field is either in the center and the near field in the outer ring, or vice versa. By inverting, you can choose whether to have more angular precision in the near field or the far field.

Compatibility:

Mac (Intel/M1/M2): macOS 10.11 and newer (Formats: AU & VST3)

Windows (x64): Windows 10 and newer (Format: VST3)

Any DAW that supports VST3 or AU formats.

Any Question?

Please refer to our Support Page or join our Discord Channel.

0 notes

Text

Sony BRAVIA, KD-55X85L, 55 Inch, Full Array LED, Smart TV, 4K HDR, Google TV, ECO PACK, BRAVIA CORE, Seamless Edge Design HT-A3000 3.1 channel Dolby Atmos® Soundbar

4K Full Array LED TV with 4K HDR Processor X1, X-Balanced Speaker, Google TV and Flush Surface. Enhance the experience with the HT-A3000 3.1 channel soundbar with S-Force PRO Front Surround, Vertical Surround Engine, 360 Spatial Sound Mapping with optional wireless rear speakers, and Sound Field Optimization with optional speakers. Date First Available : …

0 notes

Text

[231226] Sound BOMB 360

ATEEZ Dreamy Day Acoustic Live ver.

🎧12.28 12(KST) for the first time!🎧

|UP NEXT

ATEEZ <Dreamy Day> (Spatial Audio Live)

🎧12. 27 (WED) 10PM(EST)🎧

#ateez#m: all#type: photo#content: twt#content: sbs#content: inkigayo#era: towards the light : will to power

80 notes

Text

The Best Gaming Gear You Need to Own This Year

Gaming has become more than just a hobby; it is a lifestyle. To improve your playing experience, the right gaming gear is key. It not only boosts performance but also gives you more comfort and satisfaction while playing. Here is a list of the best gaming gear you should own this year for an optimal playing experience.

1. Gaming Headset: Dive Deeper into the Game World

One of the most crucial pieces of gear is a gaming headset. A high-quality headset not only delivers clear sound but also provides advanced features such as noise cancellation, surround sound, and a quality microphone for clear communication. Some of this year's best options are:

SteelSeries Arctis Pro Wireless: Known for superior sound quality and outstanding comfort. This headset has a dual wireless feature that lets you play without being bothered by cables.

Razer BlackShark V2 Pro: With THX Spatial Audio technology, this headset offers an immersive 360-degree sound experience.

2. Mechanical Keyboard: Durability and Responsiveness

Mechanical keyboards have always been a favorite among gamers thanks to their durability and responsiveness. Mechanical keys give better and faster feedback than ordinary membrane keyboards. This year's best mechanical keyboards include:

Corsair K95 RGB Platinum XT: Equipped with Cherry MX Speed switches and customizable RGB lighting, this keyboard is not only fast but also stylish.

Razer Huntsman Elite: Using optical switches, this keyboard offers very fast response, making it an ideal choice for competitive gamers.

3. Gaming Mouse: Precision at Your Fingertips

The right gaming mouse can improve accuracy and reaction time in games. Some of this year's best gaming mice worth considering are:

Logitech G Pro X Superlight: This mouse is known for its lightweight design and HERO 25K sensor, delivering high accuracy and smooth movement.

Razer DeathAdder V2: With an ergonomic design and Focus+ optical sensor, this mouse delivers comfort and top performance across many kinds of games.

4. Gaming Monitor: Sharper, Faster Visuals

A monitor with a high refresh rate and high resolution is very important for a smooth playing experience and sharp detail. This year's best gaming monitors include:

Asus ROG Swift PG259QN: With a 360Hz refresh rate, this monitor is the best choice for competitive gamers who need top performance.

LG UltraGear 27GN950: This 4K monitor with a 144Hz refresh rate offers sharp visuals and vivid colors, suited to graphically demanding games.

5. Gaming Chair: Irreplaceable Comfort

A gaming chair may seem like an accessory, but comfort during long sessions should not be underestimated. Some of this year's best gaming chairs include:

Secretlab Titan Evo 2022: Known for premium materials and ergonomic design, this chair offers maximum comfort and supports healthy posture.

Herman Miller x Logitech G Embody: Combining Herman Miller ergonomics with a gaming touch from Logitech, this chair is not only comfortable but also designed for optimal performance.

6. Controller: Better Flexibility and Control

For console or PC gamers who like to play certain games with a controller, owning a quality controller is a must. This year's best options are:

Xbox Elite Wireless Controller Series 2: With extensive customization and sturdy build quality, this controller is a top choice for flexible play.

Sony DualSense Wireless Controller: With haptic feedback and adaptive triggers, this controller offers a more immersive playing experience, especially on PlayStation 5.

Conclusion

Owning the best gaming gear is a worthwhile investment for gamers. From headsets with clear sound quality to chairs that support maximum comfort, this gear not only improves performance but also makes playing more enjoyable. Be sure to choose gear that fits your needs and play style so you can feel a real difference in every session.

Are you ready to upgrade your gaming experience? Complete your setup with this year's best gear now!

0 notes

Text

#UKDEALS Sony INZONE H3 Gaming Headset - 360 Spatial Sound for Gaming - Boom microphone - PC/PlayStation5 https://www.bargainshouse.co.uk/?p=107848

0 notes