#3D Motion Capture Software


Text

looking through the dual destinies and spirit of justice sprites really has me like "did ace attorney have a rough transition to 3d or do some of the sprite animations just not work in 3d like they do in 2d"

#yes theres a difference. “rough transition to 3d” generally means the entire game is messy #additionally usually “rough transition to 3d” implies they couldn't help how the 3d stuff turned out #100% convinced that if they hadn't stuck to some of the 2d sprite animations it wouldn't be QUITE as awkward as it is #not all the sprites in dd and soj are awkward either. they just decided to keep the 2d sprite animations #for some characters that works (ema and trucy for example) but for others (APOLLO) it feels SO awkward on some animations #klavier. i just hate klavier's model. i love his animations though lol. his hair flip after his laughing animation? 10/10 #the other issue is that dual destinies and spirit of justice feel like they were animated using a regular 3d animation software #im 100% convinced that the reason the animation on tgaa's sprites is so nice in comparison is because they used motion capture #i dont know if dd and/or soj used mocap but it feels like they didnt and i think its one of the issues with the sprites #most of the designs arent any more or less realistic than tgaa's designs. mocap would work for dd and soj's sprites #anyway enjoy in-the-tags the ramblings about the sprites of a game i havent played yet lmao #ace attorney #dual destinies #spirit of justice #ramble in the tags

13 notes


Text

The "Mario In Real-Time" engine let Charles Martinet and other voice actors project their movements onto a floating 3D Mario head via motion capture, so they could interact with live-show audiences as Mario. The footage shows it in action at the 1992 Summer Consumer Electronics Show.

The earliest iterations of the engine had no safeguards against the head clipping into the camera. As a result, when the voice actor tilted his head too far forward, Mario's head would pass through the screen and its inside would become visible, as seen in the footage. This was fixed in later versions of the software.
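The glitch described above happens when the head's geometry crosses the camera's near clipping plane. The source doesn't say how later versions fixed it. The following is only a hypothetical sketch of one common kind of guard: it approximates the head as a sphere and assumes camera-space coordinates, with the camera at the origin looking down +Z. Whenever the head would cross the near plane, it pushes the head back. `Vec3`, `clamp_head_depth`, and the default margin are illustrative names and values, not part of the original software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """A point in camera space (hypothetical helper type)."""
    x: float
    y: float
    z: float  # distance in front of the camera, which looks down +Z


def clamp_head_depth(head_center: Vec3, head_radius: float,
                     near_plane: float, margin: float = 0.05) -> Vec3:
    """Keep a spherical head entirely in front of the near clipping plane.

    Hypothetical guard: if the tracked head moves too close to the camera,
    its depth is clamped so the sphere's front edge stays `margin` units
    past the near plane. X and Y are left untouched.
    """
    min_z = near_plane + head_radius + margin
    return Vec3(head_center.x, head_center.y, max(head_center.z, min_z))


# A performer leaning in far enough to put the head center at z = 0.5:
leaned_in = clamp_head_depth(Vec3(0.0, 0.0, 0.5), head_radius=1.0, near_plane=0.1)
# leaned_in.z is now about 1.15, so the inside of the head stays hidden.
```

Clamping only the depth keeps the head's sideways and vertical motion intact, so the performer's tilts still read on screen.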

Source: DigitalNeohuman

1K notes


Note

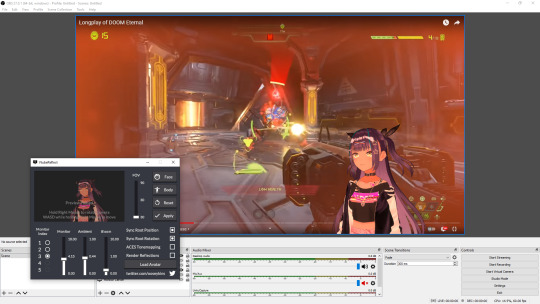

How do you do your model capture for twitch?

I use a Logitech C930e webcam (not the most ideal option, but a good affordable one), VSeeFace (there's a lot of 3D Vtubing software out there that gives a loooot more options for Gimmicks n shit, but honestly I don't feel like learning how they work sahfghafj), and a Leap Motion hand tracker for my hands (I tried using a Leap Motion 2; it was Actively Worse).

One little thing I wanna say while we're on the topic: regardless of your Vtuber software, if you feel your mouth tracking doesn't look right, try turning down mouth sync smoothing (or whatever similar name it has in your program). Mouth sync smoothing makes the mouth take longer to transition between the sounds you make, so with too much of it the mouth can't keep up with normal talking speed and doesn't actually mimic yours properly.
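Here is a minimal sketch of why heavy smoothing makes the mouth lag. It models the setting as a simple exponential moving average over per-frame mouth-open values, where 0 is closed and 1 is fully open. That filter is an assumption for illustration. VSeeFace and other programs may smooth differently, and `smooth_mouth` is a hypothetical name.

```python
from typing import List, Sequence


def smooth_mouth(values: Sequence[float], smoothing: float) -> List[float]:
    """Exponentially smooth per-frame mouth-open values.

    smoothing = 0.0 passes the raw tracking through; values closer to 1.0
    make each frame hold on to more of the previous frame's value, so the
    mouth reacts more slowly.
    """
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0.0, 1.0)")
    smoothed: List[float] = []
    current = float(values[0]) if values else 0.0
    for target in values:
        # Blend the previous output with the newly tracked value.
        current = smoothing * current + (1.0 - smoothing) * target
        smoothed.append(current)
    return smoothed


# Fast talking: the real mouth opens and closes every frame.
talking = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
raw = smooth_mouth(talking, smoothing=0.0)    # follows the input exactly
heavy = smooth_mouth(talking, smoothing=0.8)  # never gets past about 0.41 open
```

With heavy smoothing the avatar's mouth barely moves during fast speech, which matches the "can't keep up with normal talking speed" symptom described above.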

29 notes


Text

Link! Like! LoveLive! SPECIAL STAFF INTERVIEW

[This interview was published online on the G's Channel website, and is also printed in the Link! Like! LoveLive! FIRST FAN BOOK, released Dec 27, 2023.]

The Hasunosora Jogakuin School Idol Club is a group of virtual school idols that made their striking debut in April this year. How did their fan engagement app “Link! Like! LoveLive!” come about? Fujimoto Yoshihiro and Sato Kazuki discuss the app’s origin and what they think about the project.

It’s all about enjoying Love Live!—! The untold story behind the birth of the fan engagement app LLLL

—The smartphone app “Link! Like! LoveLive!” (which we’ll refer to as “LLLL”) celebrated its six-month anniversary in November. Let’s look back on the past six months and discuss the birth of LLLL. To start us off, could you two tell us about your roles in developing this app?

Sato: I’m Sato Kazuki from CERTIA, a producer for this app. I’ve worked for game companies as a planner and director, and now I’m a self-directed game producer. From arcade games to consumer software to apps, my path has involved a variety of media. I’ve been following Love Live! as a fan since the µ’s era, and became a producer for Hasunosora’s game after being consulted for a Love Live! app where the members stream.

Fujimoto: I’m Fujimoto Yoshihiro from Bandai Namco Filmworks, and I’m also a producer for LLLL. I started with the desire to make a Love Live! smartphone game.

***

—LLLL’s official start of service was May 20, 2023, but I heard that the road there was quite long. Could you talk about that?

Fujimoto: If we want to start from when development began… we’ll have to go back five years.

Sato: A big part of the appeal of Love Live! comes from these school idols being independent and putting their souls into it as a club activity, so there is a certain kind of enjoyment and emotional payoff from supporting them.

If you insert a “player” who can essentially play god into this, it inevitably feels like a mismatch. Then wouldn’t it be great to turn that idea on its head and instead have an app where you support these independent idols? And streaming would be a great match for that. As we worked out this concept where you cheer on the members as just another fan, this “school idol fan engagement app” took form.

—What led up to integrating motion capture into the app?

Sato: From the very beginning, we had decided that virtual concerts and streams would use 3D models. The development team already had the know-how for streaming with 3D models, so of course we were going to use them.

The key idea is to have the virtual concerts, live streams, and story intimately linked to make the entire experience real-time, so we decided it would be best to have them appear in the same form in both the streams and the activity log (story), which is why the story also uses 3D models. Because the visuals needed to match precisely, we carefully animated the story with 3D models.

Fujimoto: It is quite rare for the cast to also do motion capture, but it was necessary to realize the vision that Sato-san has described. Yes, it would technically be doable to have the cast only voice the characters and instead have actors do the motion capture, but that would be a lot less palatable.

Sato: It certainly feels a lot more real when the cast is both voicing and moving their characters. The fact that this project is a part of the Love Live! series helped a lot in being able to implement this. The cast loves Love Live!, and it’s because of how much the project staff and cast members put into this that we could make it a reality.

—As an example of this hard work: the cast had intense training on motion and talking, with lessons and rehearsals starting about a year before the app was released.

Sato: In both the live streams and virtual concerts, the cast do the motion capture, in real-time too! Even now, this fact isn’t well-known, or rather, it seems like there are a lot of people who think “No way they go that far!”. Yes, the cast do it themselves!

—So why does doing it in real-time make it good?

Sato: What a pointed question! (laughs)

Fujimoto: What’s important depends on what you value. What we’re aiming for is the feeling of a real concert.

Sato: Putting it concisely, the feeling that it’s live is very important to LLLL. It seems to me that the feeling of being live has recently been highly valued, not just in concerts but in the entertainment industry as a whole. We can look back on works of the past whenever we want through subscription services and the like, so following something now has its own appeal that can evoke a certain zeal. Bearing witness to this moment in history is now viewed as being more significant than it used to be. That’s why we maximize that feeling of being live by having the cast do the motion capture and by streaming in real-time. We felt that those two points are essential.

—So rather than just being parts of the concept, these are essential to the project.

Sato: At one point, we considered doing only the talk and MC in real-time, while having the performance part be recorded. But because we want to get at the heart of the pleasure of having it be real-time, we couldn’t make that compromise.

Actually, the cast do occasionally hit the wrong notes or mess up their dance formations, but these are little things that signal that it’s live—in fact, such happenings can increase how real it feels, which can make the performance feel special if you approach it with the right mindset.

***

—Can I ask about the Fes×LIVE production, like the camerawork, lighting, and outfits? It feels like the production value goes even higher every time.

Fujimoto: I think that’s probably because, just like how the Hasunosora members are growing, the staff is as well, going further each time after saying things like “For the next performance, I want more of this!”.

Sato: Things like flying over the stage or swapping outfits in the instant that the lighting changes—we won’t do those. A core principle is that we aim for a production that matches what school idols would do and constrain ourselves to portray what’s possible in reality.

***

—The story following school idols at a school steeped in tradition—that’s a new challenge, too.

Sato: We had certain reasons for setting this at a school with a long history, so long that this is its 103rd year. There’s this common image of streamers debuting as no-names, and then working their way up to become more popular. But if Hasunosora were like that, having a lot of fans from the very beginning would be very unrealistic. After all, there’s no way your average rookie streamer could be like “This is my first stream!” and yet have 5000 viewers. (laughs)

We thought hard about how to resolve this in a way that makes sense. Then, we figured out that it would work to have a tradition-laden school that was already a “powerhouse”. So in the end, we made it a point to have the school be a powerhouse with a history of having won Love Live! before.

—So the school already had fans following it, with high expectations for the new school idols: Kaho and the other first years.

Sato: Yeah, it’s meant to be like one of those schools that are regular contenders at Koshien, the national high school baseball championship.

—The world-building is done so thoroughly that such an answer could be arrived at pretty quickly.

Sato: We consulted with many people, starting with the writer team, about implementing the concepts that we mentioned before—having the cast do motion capture to maximize the live feeling, and doing the streams in real-time.

In the beginning, there was a lot of resistance to the idea of trying to successfully operate an app while constructing the story and streams such that there would be no contradictions between them. If you do streams in real-time, such contradictions can certainly arise—you have to work out the setting in quite a lot of detail, and even then, things can happen that are impossible to predict.

But my thinking was always that this is doable if we adjust how often we synchronize the story and streams. I think it’s impossible to stream every day and release a new story every day. But on the other side of the spectrum, if we were to write a year’s worth of story ahead of time, and then do however many streams within that year, that would certainly be doable.

Following that line of thought, in the early stages, we had a lot of discussion about how often we could do streams, searching for the limit of what would be possible to implement. As a compromise, we arrived at the system of fully synchronizing once a month. For each month, we can keep the setting relatively flexible, not setting everything precisely in stone until the month is over. We thought that a monthly frequency should make it possible to both maintain the charm of the story and implement streams.

But right now we’re streaming three times a week, so there end up being a lot of things like “they shouldn’t know this yet” or “this event hasn’t happened yet”. It feels like putting together a complicated puzzle.

Fujimoto: There’s one aspect that we haven’t made clear before—I don’t think there are many people that have realized it. It’s that the talk streams are the furthest along in the timeline, while the story shows what has already happened. It’s not that the story happens on the day that we update it, but rather that the story describes what happened up to that day. People who have realized this have probably read quite closely.

Sato: That’s why it’s called the “activity log”. The With×MEETS serve the important role of synchronizing what happened in the activity log, the world of the story, with reality, so we carefully prepare for them each time.

—That makes sense! One nice thing is that if you keep up with the With×MEETS in real-time, then watch the Fes×LIVE, it feels like keeping up paid off: “Good thing I watched the With×MEETS!”

Fujimoto: It would be great if our efforts got through to everyone enjoying LLLL.

—If you set things up that carefully, it must be difficult when irregularities happen. In August, several cast members had to take a break to recover from COVID-19, causing many With×MEETS to be canceled. How did you deal with that?

Sato: The silver lining of that period was that it avoided the most critical timing. If that had been off by even a week… it might not have been possible to recover from that. This project always has this feeling of tension, because there is no redoing things.

***

Tackling the Love Live! Local Qualifiers! The culmination of the story put viewers on the edges of their seats!!

—On how people have reacted to LLLL: was there anything that went exactly as planned, and on the other hand, was there anything that defied your expectations?

Fujimoto: To bring up a recent event, the Love Live! Local Qualifiers in October very much stood out. There was tension all around—even I was nervous about how it would go. The feeling that “these girls really are going to take on this challenge, at this very moment”. It was like watching the championship of the World Baseball Classic… Well, maybe that’s an overstatement, but an atmosphere similar to that. I’m really happy that we managed to create such an atmosphere with the cast and everyone who participated as a user.

Sato: Not just in sports, but also in the world of anime or fiction, there is definitely a certain excitement that arises when you “witness” something. Bearing witness to the moment that “drama” is born produces an impulse, something that hits deep. This is not something that can be produced in an instant. Rather, such an overwhelming concentration of passion can come about only because so much has happened up to that point.

That’s why, aiming for “that moment”, we first have people experience the Hasunosora members’ existence and reality day after day. After having connected with the members and viscerally felt the sense of being in sync with their “now”, something new will be born…

The closest we’ve gotten to a truly real atmosphere of tension is what Fujimoto-san just mentioned, the Love Live! Local Qualifiers. I think part of why that was such an “incredible” event was the weight of everything that happened in the Love Live! series up to that point.

—Is there anything else from the fans’ reactions and the like that was unexpected?

Fujimoto: For the talk streams, we have a system where everyone can write comments in real-time. Some users go all in on the setting and act as if they’re in that world.

Sato: The characters are not aware that their everyday lives can be observed in the story, so these users adhere to the rule of not talking about anything that’s only depicted in the story, commenting as if they don’t know what happened there. And if you do that, your comment might get read. So you really can be a participant in the performance. We were surprised at just how many people were earnestly participating like that.

—It’s like making a fan work in real-time, or rather, it’s like playing a session of a tabletop RPG.

Sato: I think what we’re doing here is very much like a game. It’s the “role-playing” of a tabletop RPG taken to an extreme, a pillar of a certain kind of “game”, or maybe more like a participatory form of entertainment.

—Oh, I see! It’s as if we’ve returned to the classic reader participation projects of Dengeki G’s magazine, which is kind of touching.

[Translator’s note: Love Live! originated from Sunrise collaborating with the Dengeki G’s magazine editorial department, bringing Lantis in for music.]

Sato: Yes, indeed. In the era of magazine participatory projects, the back-and-forth would have several months in between. In comparison, a real-time system makes it easier to participate, which I think is a significant and useful advancement. For now, in the first year, it feels like we’ve been grasping in the dark while trying to do the best with what we have. But we’re thinking about how best to adjust the frequency and volume of activities from here on.

Fujimoto: Also, the users have largely figured out all the hints we cooked in!

Sato: They’ve been looking in quite some detail. (laughs) Our cooking has paid off.

***

—Is there any challenge that LLLL is taking on anew?

Sato: Going back to what I said at the beginning, I was a huge fan of µ’s, so I started by trying to analyze and put into words just what made me so attracted to, so crazy about them.

A big part of the charm was that it involved youth and a club activity, so by following their story and cheering them on, you could vicariously re-live the passion of those three years in high school. So I wanted to make it so that you could re-live that experience of youth in depth.

My goal is to have it feel so overwhelmingly real—through LLLL’s real-time nature—that you can unwittingly delude yourself into the sense that you’re re-living your high school years.

Fujimoto: If we’re talking about a new challenge, maybe it’d be good to touch on how frequent the interactions are.

For example, let’s consider a one-cour anime. No matter how much you’d like to keep watching it, it ends after three months. Even if it turns out there’s a continuation, you’ll have to wait before you can watch that.

In contrast, if we turn to virtual content, people are streaming three times a week on YouTube. In that vein, what we’re aiming for with this project is this: you can follow this group you like throughout that limited time between matriculation and graduation, and during that time they’ll always be there for you to meet.

***

—Some people are expecting an anime as one of the future mixed-media developments. To put it bluntly, what do you think about that?

Sato: What we are doing right now with Hasunosora would not work outside the structure of an app. As mentioned before, it’s not quite a tabletop RPG but rather a participatory form of entertainment, and it’s that structure that allows fans to experience the true spirit of the Hasunosora Jogakuin School Idol Club.

—So for now, we should experience it through the streams in the app! Is that what you’re saying?

Fujimoto: Exactly. It wouldn’t be an exaggeration to say that LLLL is a project to make you fall for these girls throughout their streams—that’s how much effort we’re putting into it.

Sato: Starting LLLL by going through the story and then participating in the With×MEETS streams might be more common, but you can also start enjoying it in a novel way by just jumping into the latest With×MEETS stream. The members’ engagement with the comments has been especially noticeable in the most recent streams—it feels like a symbiotic relationship.

***

—Finally, a word for those who are looking forward to the project’s future.

Sato: I can confidently say that LLLL is a new experience made possible because of the times we live in. Because of that, it is very high-context, so it’s hard to explain how it will make you feel or what kind of game it is—you must play it to understand. We are very aware of how much of a hurdle this is, and how it might be difficult to get a grasp on if you start later.

But if you’re willing to take that first step into the world of LLLL, we promise to bring you content that is worth following. So to those reading this interview: please do consider trying it out once, even if it’s with a window-shopping mindset.

Fujimoto: Hasunosora’s story can only happen because of everyone watching. We hope you’ll keep supporting us!

Sato: We’ve prepared plenty of tricks up our sleeves for the end of the school year in March, so please look forward to it!

Credits

Translation: ramen

Translation check: xIceArcher

Various suggestions: Yahallo, Yujacha, zura

44 notes


Text

Effective XMLTV EPG Solutions for VR & CGI Use

In today’s fast-paced digital landscape, effective XMLTV EPG guide solutions are essential for enhancing user experiences in virtual reality (VR) and computer-generated imagery (CGI).

Understanding how to implement these solutions not only improves content delivery but also boosts viewer engagement.

This post will explore practical techniques and strategies to optimize XMLTV EPG guides, making them more compatible with VR and CGI technologies.

Proven XMLTV EPG Strategies for VR and CGI Success

Several organizations have successfully integrated VR and CGI into their training and operational processes.

For example, Vodafone has recreated their UK Pavilion in VR to enhance employee training on presentation skills, complete with AI-powered feedback and progress tracking.

Similarly, Johnson & Johnson has developed VR simulations for training surgeons on complex medical procedures, significantly improving learning outcomes compared to traditional methods. These instances highlight the scalability and effectiveness of VR CGI in creating detailed, interactive training environments across different industries.

Challenges and Solutions in Adopting VR CGI Technology

Adopting Virtual Reality (VR) and Computer-Generated Imagery (CGI) technologies presents a set of unique challenges that can impede their integration into XMLTV technology blogs.

One of the primary barriers is the significant upfront cost associated with 3D content creation. Capturing real-world objects and converting them into detailed 3D models requires substantial investment, which can be prohibitive for many content creators.

Additionally, the complexity of developing VR and AR software involves specialized skills and resources, further escalating the costs and complicating the deployment process.

Hardware Dependencies and User Experience Issues

Most AR/VR experiences hinge heavily on the capabilities of the hardware used. Current devices often have a limited field of view, typically around 90 degrees, which can detract from the immersive experience that is central to VR's appeal.

Moreover, these devices, including the most popular VR headsets, are frequently tethered, restricting user movement and impacting the natural flow of interaction.

Usability issues such as bulky, uncomfortable headsets and the high power consumption of AR/VR devices add layers of complexity to user adoption.

For many first-time users, the initial experience can be daunting, with motion sickness and headaches being common complaints. These factors collectively pose significant hurdles to the widespread acceptance and enjoyment of VR and AR technologies.

Solutions and Forward-Looking Strategies

Despite these hurdles, there are effective solutions and techniques for overcoming many of the barriers to VR and CGI adoption.

VPL Research was one of the first companies to develop and sell virtual reality products.

For example, improving the design and aesthetics of VR technology may boost its attractiveness and comfort, increasing user engagement.

Furthermore, technological developments are likely to cut costs over time, making VR and AR more accessible.

Strategic relationships with tech titans like Apple, Google, Facebook, and Microsoft, which are constantly innovating in AR, can help improve XMLTV EPG guide experiences for IPTV blogs.

Virtual Reality (VR) and Computer-Generated Imagery (CGI) hold incredible potential for various industries, but many face challenges in adopting these technologies.

Understanding the effective solutions and techniques for overcoming barriers to VR and CGI adoption is crucial for companies looking to innovate.

Practical Tips for Content Creators

To optimize the integration of VR and CGI technologies in xmltv epg blogs, content creators should consider the following practical tips:

Performance Analysis

Profiling Tools: Utilize tools like Unity Editor's Profiler and Oculus' Performance Heads-Up Display (HUD) to monitor VR application performance. These tools help in identifying and addressing performance bottlenecks.

Custom FPS Scripts: Implement custom scripts to track frames per second in real-time, allowing for immediate adjustments and optimization.
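A custom FPS script mostly comes down to averaging recent frame durations. The sketch below is engine-agnostic Python rather than a Unity script. `FpsCounter`, its window size, and the 90 FPS target in the usage note are illustrative assumptions. In an engine you would feed it each frame's delta time.

```python
from collections import deque


class FpsCounter:
    """Rolling-average FPS from per-frame durations (hypothetical helper)."""

    def __init__(self, window: int = 60) -> None:
        # Only the most recent `window` frame durations are kept.
        self._frame_times = deque(maxlen=window)

    def _average_frame_time(self) -> float:
        return sum(self._frame_times) / len(self._frame_times)

    def tick(self, frame_seconds: float) -> float:
        """Record one frame's duration and return the rolling-average FPS."""
        if frame_seconds <= 0:
            raise ValueError("frame_seconds must be positive")
        self._frame_times.append(frame_seconds)
        return 1.0 / self._average_frame_time()

    def below_target(self, target_fps: float) -> bool:
        """True when the rolling average is under the target frame rate."""
        if not self._frame_times:
            return False
        return 1.0 / self._average_frame_time() < target_fps
```

In a render loop you might take `now = time.perf_counter()` each frame and call `counter.tick(now - last)`. You could then display a warning whenever `below_target(90)` returns True on a headset that expects 90 Hz. Averaging over a window keeps a single slow frame from making the readout jump around.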

Optimization Techniques

3D Model Optimization: Reduce the triangle count and use similar materials across models to decrease rendering time.

Lighting and Shadows: Convert real-time lights to baked or mixed lights, and use Reflection Probes and Light Probes to enhance visual quality without compromising performance.

Camera Settings: Optimize camera settings by reducing the far clipping plane distance and enabling features like Frustum and Occlusion Culling.
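Frustum culling skips anything whose bounds lie completely outside the camera's view volume. Pulling the far plane in shrinks that volume, so more distant objects get skipped. Unity already frustum-culls renderers on its own, so this is only an illustration of the idea. It is a sketch of the classic bounding-sphere test in Python, assuming camera-space coordinates with the camera at the origin looking down +Z. `Camera` and `sphere_visible` are hypothetical names, not Unity API.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Camera:
    """Perspective camera in its own space: origin, looking down +Z (assumed)."""
    vertical_fov_deg: float
    aspect: float  # width / height
    near: float
    far: float


def sphere_visible(cam: Camera, center: Tuple[float, float, float],
                   radius: float) -> bool:
    """Conservative frustum test for a bounding sphere.

    Returns False only when the sphere lies entirely outside at least one
    frustum plane; spheres near the frustum's corners may still be reported
    visible, which is the usual trade-off of this test.
    """
    x, y, z = center
    # Near and far planes are perpendicular to the view direction.
    if z + radius < cam.near or z - radius > cam.far:
        return False
    half_v = math.radians(cam.vertical_fov_deg) / 2.0
    half_h = math.atan(math.tan(half_v) * cam.aspect)
    # Each side plane passes through the camera; coord*cos(a) - z*sin(a)
    # is the signed distance to it, positive outside the frustum.
    for coord, half_angle in ((x, half_h), (-x, half_h), (y, half_v), (-y, half_v)):
        if coord * math.cos(half_angle) - z * math.sin(half_angle) > radius:
            return False
    return True


cam = Camera(vertical_fov_deg=60.0, aspect=16 / 9, near=0.3, far=100.0)
print(sphere_visible(cam, (0.0, 0.0, 60.0), 1.0))      # True
shorter = Camera(60.0, 16 / 9, 0.3, far=50.0)
print(sphere_visible(shorter, (0.0, 0.0, 60.0), 1.0))  # False: beyond the far plane
```

The same object is drawn or skipped depending only on the far plane. That is why trimming the far distance is a cheap win when distant scenery isn't needed.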

Building and Testing

Platform-Specific Builds: Ensure that the VR application is built and tested on intended platforms, such as desktop or Android, to guarantee optimal performance across different devices.

Iterative Testing: Regularly test new builds to identify any issues early in the development process, allowing for smoother final deployments.

By adhering to these guidelines, creators can enhance the immersive experience of their XMLTV blogs, making them more engaging and effective in delivering content.

Want to learn more? You can hop over to this website to gain clear insights into how to elevate your multimedia projects and provide seamless access to EPG channels.


7 notes


Text

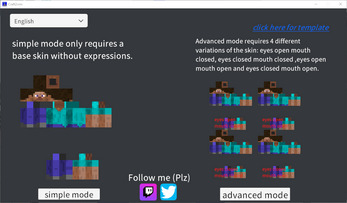

VTUBER SOFTWARE

XR Animator DL

Full body tracking via webcam.

Able to use props.

Able to import motion data files.

Able to import videos to render motion data.

Able to pose.

Able to export the data via VMC to VSeeFace or other applications.

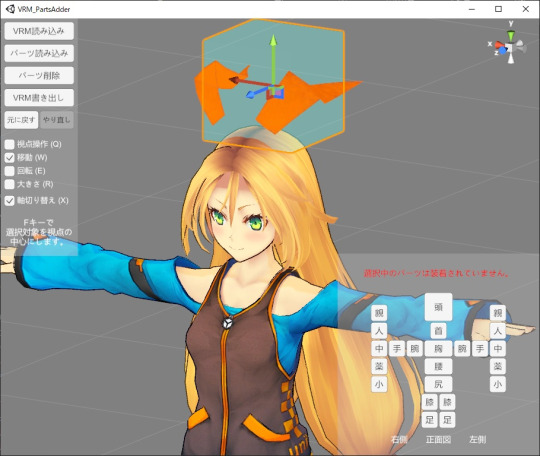

VRM Part Adder DL

Able to attach props to a VRM file without using Unity.

Texture Replacer DL

Able to fix the props' textures if they are missing.

Hana App DL

Able to add 52 blendshapes for PerfectSync without using Unity.

VNyan DL

VNyan is 3D animation software.

VtubeReflect DL

VtubeReflect captures your desktop and projects light onto your vtuber avatar.

Craft2VRM DL

Use Minecraft skin as a vtuber model.

Dollplayer DL

Able to pose VRM.

ThreeDPoseTracker DL

Able to generate motion data based on video.

VDraw DL

Able to show drawing and keyboard-typing animations through a VRM.

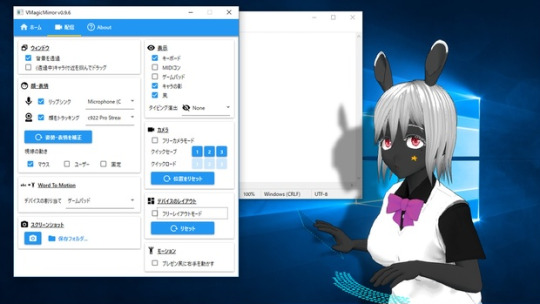

VMagicMirror DL

Able to show drawing and keyboard-typing animations through a VRM.

Virtual Motion Capture DL

Track avatar movement through VR.

Mechvibes DL

One of the only free applications that'll let you change the sound of your keyboard to anything!

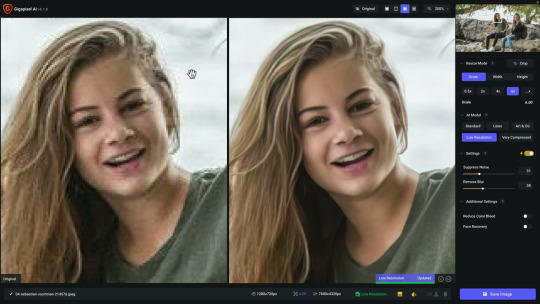

Gigapixel DL

Picture upscaler.

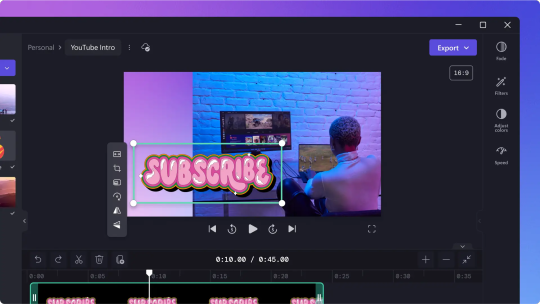

Clipchamp DL

Free text-to-speech tools.

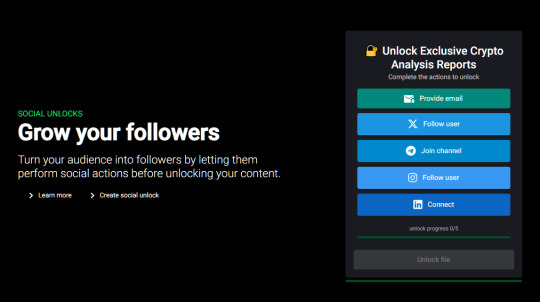

Rekonise LINK

Content creator tools.

VRoom LINK

Places your VRM in a world.

VRM games LINK

List of games/applications that allow the import of VRM.

4 notes


Text

^ it's extremely extremely funny that none of this is proved at all, it's just people's gut feelings (no, that Microsoft paper supposedly proving the decline of critical thinking due to AI does not say what you think it says! if you believe this, you have been bamboozled!)

some of you will go "erm, it's fancy autocomplete" but also "erm, you are hurting yourself by using it, it's a special kind of evil tool that isn't a tool, for reasons" and then developers like me will say "we've had fancy autocomplete like IntelliSense for years now and we're fine, what's GitHub Copilot going to do? make our code worse than the noble tradition of copypasting from StackOverflow?" and the only thing we get in response is misinformation, fearmongering or a blank stare.

there are entire creative subcultures that just simply don't share your romantic, extremely American Protestant view of struggling. there's widely-spread programmer wisdom about the virtues of laziness! the reason you can play so many wonderful indie games today is because the glorious art of struggling to code your own physics system has been automated by game engines.

reducing the amount of struggling so you can move on to more personally-appealing and valuable decision-making is in fact an entire part of the human experience. sorry that you don't value this, but i am not learning how to make my own physics system in order to make games with physics in them, for the same reason i am not learning how to draw to make games with drawings in them. it just doesn't appeal to me, and the idea that i'm harming myself as a result

all in all, your protesting is completely indistinguishable from past movements complaining about the destruction of creative/critical-thinking skills, with absolutely no actual evidence, based solely on fear and the age-old belief that kids these days are so much stupider than you and civilization is crumbling.

all the contemptible fossils that came before you yelling This Technology Is Monstrously Harmful To Your Growth As A Human Bean about writing, the printing press, photography, personal computers, calculators, phones, digital art software, 3D modeling, motion capture, drum machines, synthesizers, the internet, wikipedia, stackoverflow, etc etc etc etc., surely these guys were wrong, but you're right and this time history will validate you! surely this time! for sure!

Something I don't think we talk enough about in discussions surrounding AI is the loss of perseverance.

I have a friend who works in education and he told me about how he was working with a small group of HS students to develop a new school sports chant. This was a very daunting task for the group, in large part because many had learning disabilities related to reading and writing, so coming up with a catchy, hard-hitting, probably rhyming, poetry-esque piece of collaborative writing felt like something outside of their skill range. But it wasn't! I knew that, he knew that, and he worked damn hard to convince the kids of that too. Even if the end result was terrible (by someone else's standards), we knew they had it in them to complete the piece and feel super proud of their creation.

Fast-forward a few days and he reports back that yes they have a chant now... but it's 99% AI. It was made by ChatGPT. Once the kids realized they could just ask the bot to do the hard thing for them - and do it "better" than they (supposedly) ever could - that's the only route they were willing to take. It was either use ChatGPT or don't do it at all. And I was just so devastated to hear this because Jesus Christ, struggling is important. Of course most 14-18 year olds aren't going to see the merit of that, let alone understand why that process (attempting something new and challenging) is more valuable than the end result (a "good" chant), but as adults we all have a responsibility to coach them through that messy process. Except that's become damn near impossible with an Instantly Do The Thing app in everyone's pocket. Yes, AI is fucking awful because of plagiarism and misinformation and the environmental impact, but it's also keeping people - particularly young people - from developing perseverance. It's not just important that you learn to write your own stuff because of intellectual agency, but because writing is hard and it's crucial that you learn how to persevere through doing hard things.

Write a shitty poem. Write an essay where half the textual 'evidence' doesn't track. Write an awkward as fuck email with an equally embarrassing typo. Every time you do, you're not just developing that particular skill, you're also learning that you did something badly and the world didn't end. You can get through things! You can get through challenging things! Not everything in life has to be perfect but you know what? You'll only improve at the challenging stuff if you do a whole lot of it badly first. The ability to say, "I didn't think I could do that but I did it anyway. It's not great, but I did it," is SO IMPORTANT for developing confidence across the board, not just in these specific tasks.

Idk I'm just really worried about kids having to grow up in a world where (for a variety of reasons beyond just AI) they're not given the chance to struggle through new and challenging things like we used to.

#tech#ai#i am getting tired of this shit#so many artists going “i don't want to draw backgrounds so i stole a photograph off google images” “this is acceptable!”#and simultaneously “AI IS DESTROYING YOUR CAPABILITIES”. absolute goofball behavior


The Rise of Multilayer PMMA Temporary Prostheses: Revolutionizing Dental Restorations

In modern dentistry, temporary prostheses play a critical role in ensuring patient comfort, aesthetics, and functionality while awaiting permanent restorations. With advancements in digital dentistry, multilayer PMMA (polymethyl methacrylate) has emerged as a game-changer for temporary crowns, bridges, and dentures. This blog post explores why temporary prostheses are essential, compares multilayer versus monolayer PMMA for patient options, and outlines the streamlined workflow from intraoral scanning to final multilayer PMMA prostheses. For dental labs seeking high-quality materials, we’ll also highlight why Dental Lab Shop is a go-to source for multilayer PMMA blocks.

Why Temporary Prostheses Are Essential

Temporary prostheses serve as interim solutions during the transition from tooth preparation to permanent restoration. They are vital for several reasons:

Protection: Temporaries shield prepared teeth or implants from sensitivity, bacterial infiltration, and damage, preserving the underlying structure.

Aesthetics: They maintain a natural smile, boosting patient confidence during the healing or fabrication period.

Functionality: Temporaries restore chewing and speech capabilities, ensuring patients can maintain their daily routines.

Occlusal Stability: They help stabilize the bite and prevent tooth migration, ensuring proper alignment for the final restoration.

Evaluation: Temporaries allow dentists to assess fit, function, and aesthetics before crafting permanent prostheses, reducing adjustments later.

Temporary prostheses are typically needed for weeks to months, depending on the complexity of the case, making material choice critical for durability and patient satisfaction.

Multilayer vs. Monolayer PMMA: Patient-Centric Options

When selecting materials for temporary prostheses, PMMA is a popular choice due to its versatility, biocompatibility, and ease of milling. However, multilayer PMMA offers distinct advantages over monolayer PMMA, especially for patient-focused outcomes.

For patients seeking a balance of aesthetics, durability, and comfort, multilayer PMMA is the superior choice, particularly for extended temporization or anterior restorations.

Digital Workflow: From Intraoral Scan to Multilayer PMMA Prostheses

The integration of digital dentistry has transformed the fabrication of temporary prostheses, making the process faster, more precise, and patient-friendly. Here’s a step-by-step workflow leveraging intraoral scanners and CAD/CAM technology:

Capture Digital Impression:

Use an intraoral scanner (e.g., Medit, 3Shape, or Planmeca) to obtain a high-resolution 3D digital impression of the patient’s oral anatomy.

Share Data with Dental Laboratory:

Transmit the digital impression file (STL format) securely to the dental lab via a cloud-based platform for real-time collaboration.

Design Prosthesis in CAD Software:

Import the digital impression into CAD software (e.g., Exocad or 3Shape) and design the temporary prosthesis, aligning it with the multilayer PMMA block’s shade gradient for optimal aesthetics.

Mill Prosthesis Using CAM:

Send the design to a CAD/CAM milling machine (e.g., Amann Girrbach Ceramill Motion 2, Zirkonzahn, or Sirona CEREC) to mill the multilayer PMMA block into the temporary prosthesis.

Finish and Polish:

Polish the milled prosthesis to a high gloss using standard dental polishing tools, leveraging the material’s smooth transitions for minimal adjustments.

Deliver and Place Prosthesis:

Send the finished temporary prosthesis to the dentist for placement, typically within 24–48 hours, and cement it using resin-modified hybrid cement for secure retention.

This digital workflow reduces turnaround time, enhances precision, and improves patient outcomes compared to traditional methods.

Source High-Quality Multilayer PMMA from Dental Lab Shop

For dental labs seeking top-tier multilayer PMMA blocks, look no further than Dental Lab Shop. They offer a vast in-stock selection of multilayer PMMA materials for open systems as well as Zirkonzahn, Amann Girrbach, and CEREC systems. Available in 16 Vita shades, these blocks ensure seamless shade matching and natural aesthetics. Dental Lab Shop’s multilayer PMMA is designed for superior millability, high strength, and easy polishing, making it a reliable choice for temporary crowns, bridges, and dentures. For more details, visit their reference page at Dental Lab Shop.

Conclusion

Multilayer PMMA temporary prostheses represent a leap forward in dental restorations, offering unmatched aesthetics, durability, and patient comfort compared to monolayer PMMA. Coupled with digital workflows—leveraging intraoral scanners, CAD software, and advanced CAD/CAM milling machines—dental labs can deliver high-quality temporaries in record time. By sourcing multilayer PMMA blocks from trusted suppliers like Dental Lab Shop, labs can meet the growing demand for lifelike, long-lasting temporary restorations. Embrace this technology to transform smiles and elevate patient care.


AI VidForest Review - Create & Sell High-Demand 8k AI Videos!

AI VidForest Review - Introduction

Hello, Internet Marketers! Welcome to my AI VidForest review. Are video marketing and creation the next big trend of 2025? Absolutely! Video is already dominating the marketing world, but what truly makes it stand out is its uniqueness. No one wants to watch a dull, text-heavy video or outdated content. To capture attention, your videos must be fresh, engaging, and interactive.

As the video content landscape continues to evolve, the flood of best practices, tips, and trends can feel overwhelming. But here's the truth: creating beautiful content alone won’t guarantee success anymore. To truly stand out, your content needs to be accessible and inclusive, and to resonate with diverse audiences. Video isn’t just a trend; it’s a revolution. From website designs to social media feeds, digital interfaces, and beyond, video is the glue holding together the future of digital communication. AI VidForest has created 421,792+ AI video songs, AI ads, AI cinematic movies, AI stories, AI animations, AI cartoon videos, and AI whiteboard videos. Over 150 top agency owners and marketers, plus more than 2,000 customers, have started making money with AI VidForest.

AI VidForest Review - Overview

AI VidForest Creator: Akshat Gupta

Product Title: AI VidForest

Front-end price: $14.95 (One-time payment)

Available Coupon: Apply code “VIDFORADMIN” for 30% off

Bonus: Yes, Huge Bonuses

Niche: Software (Online)

Guarantee: 30-day, 100% money-back guarantee

Front-End Sales Page: Click Here To Grab AI VidForest.

AI VidForest Review - What is AI VidForest?

AI VidForest is the world's first fully AI-powered app that turns any idea, voice, or even prompt into breathtaking "multi-sense hyper-realistic 8K creations" such as AI Video Songs, AI Ads, AI Cinematic Movies, AI Storyteller videos, AI Animations, Motion Videos, Live 3D Model Videos, AI Cartoon Videos, Green Screen Videos, AI Whiteboard Videos, AI Dynamic Emotion-Based Videos, AI Specific Camera Angle Videos & many more, all in a single dashboard.

Step 1: Give an idea, prompt, or voice command.

Step 2: Craft anything you desire, from AI videos to AI music to AI content.

Step 3: Sell these "high-in-demand" AI videos, music, content, or anything you want to our built-in 168M+ audience & profit $232.32 per day.

AI VidForest Review – How Does AI VidForest Work?

Start Your Own Video Agency & Sell Unlimited Videos For Huge Profit In 3 Simple Steps.

STEP #1 - Login & GIVE

Any Prompt Or Even Voice (or pick a done-for-you template)

STEP #2 - AI Generates Unlimited

Multiple Videos Like AI Video Song, AI Ads Maker, AI Cinematic Movies, AI Storyteller, AI Animations, AI Cartoon Videos, AI Whiteboard Videos & Many More in a Few Minutes.

STEP #3 - Download

Sell, or Use It—KEEP 100% PROFITS!

AI VidForest Review – Features & Benefits

AI Video Songs – Type or speak an idea, and AI generates a full musical video with vocals, beats & visuals.

AI Ads Maker – Create high-converting ads for Facebook, TikTok, YouTube & more in minutes.

AI Cinematic Videos – Hollywood-style scenes, AI actors, scripts & effects—no filming needed.

AI Storyteller Videos – Turn blogs, scripts, or prompts into engaging animated story videos.

AI Cartoon & Animation Videos – Make Pixar-style cartoons or anime videos with just a text prompt.

AI Whiteboard Videos – Perfect for explainers, tutorials & sales videos with auto-drawing visuals.

8K Ultra HD Quality – Crisp, studio-grade videos with AI-enhanced visuals & effects.

Realistic AI Voiceovers – 500+ voices, emotions & languages (perfect for dubbing!).

AI Music Composer – Custom soundtracks that match your video’s mood automatically.

AI Script Writer – Struggling for ideas? AI generates scripts, dialogues & scenes for you.

AI Thumbnail & Titles – Auto-generate click-worthy thumbnails & viral-ready titles.

1-Click Social Export – Optimized for YouTube, Instagram, TikTok, Ads & more.

Commercial License – Sell videos to clients (ads, songs, cartoons—keep 100% profits!).

Go Viral Globally – Auto-translate & dub videos in 100+ languages.

Reuse & Remix – Turn one idea into 10+ video formats (TikTok clips, YouTube videos, ads, etc.).

Made for Everyone – Zero Learning Curve!

Prompt-to-Video – Just type, speak, or even hum an idea—AI handles the rest.

Few Minutes Creation – No waiting hours to render—fast, smooth & buffer-free.

30-Day Money-Back Guarantee – Try it risk-free!


AI VidForest Review – Bonus

Check out these bonuses you'll get for free if you grab this profitable business opportunity today.

Bonus #1 - LogoMaker Pro

LogoMaker Pro is your go-to AI-powered app for designing stunning logos in just minutes. Whether you're launching a brand, creating a YouTube channel, or building a client’s identity, this tool makes logo creation effortless. Choose from 1000s of modern templates, customize fonts, icons, and colors, and export in high resolution. No design skills? No problem! This drag-and-drop interface is beginner-friendly but powerful enough for pros. Plus, every logo you create is 100% unique. Save time, save money, and look professional from day one with LogoMaker Pro in your toolkit.

Bonus #2 - ThumbnailGenius

ThumbnailGenius helps you create scroll-stopping YouTube and social media thumbnails that boost your click-through rates. With proven templates optimized for virality, this tool gives you the edge needed to grab attention in busy feeds. Add bold text, catchy graphics, and emotion-driven visuals with a simple editor that anyone can use. Whether you're a YouTuber, content creator, or video marketer, ThumbnailGenius gives your content the visual punch it needs. No Photoshop required—just results. Get more views, more engagement, and more growth with thumbnails that speak louder than words.

Bonus #3 - AI Image Generator

Transform your imagination into visuals using the AI Image Generator. Just type what you want to see—“a flying robot in Tokyo” or “minimalist beach background”—and AI creates it instantly. Perfect for video backgrounds, thumbnails, social posts, or creative design work. You don’t need a camera or designer: this tool makes unlimited custom images in minutes. Choose from styles like cartoon, realistic, abstract, or 3D. With support for 100+ languages, it’s global-ready.

Bonus #4 - Intro/Outro App Maker

Make your videos look professional from start to finish with the Intro/Outro Maker App. Choose from pre-animated templates or build your own with logos, music, and transitions. Whether you're branding a YouTube channel, launching a promo, or delivering a tutorial, your video will leave a lasting impression. Add your name, logo, social handles, and calls-to-action—fully customizable in minutes. With cinematic effects and drag-and-drop ease, this app ensures every video starts strong and ends memorably.

Bonus #5 - AI Script Generator

Say goodbye to writer’s block! The AI Script Generator writes high-converting, audience-grabbing video scripts in minutes. Just enter your topic, product, or keyword, and get a full video script ready to use. Whether you're creating YouTube videos, sales videos, or explainers, this tool crafts intros, hooks, bullet points, and CTAs automatically. Choose tones like professional, casual, or humorous, and get scripts in multiple languages too.

Bonus #6 - Background Removal App

Quickly remove backgrounds from images or videos using this powerful AI tool, no green screen needed! Whether you're editing product shots, placing yourself in new environments, or designing creative overlays, the Background Remover Tool does it in one click. Upload any image or frame, and the AI instantly detects and isolates the subject.

Bonus #7 - Reels & Shorts Resizer

Want to post your video on multiple platforms without re-editing? The Reel & Shorts Resizer App resizes your content for Instagram Reels, YouTube Shorts, TikTok, and Stories in one click. Upload your video and choose your platform—this smart tool adjusts dimensions, framing, and quality automatically. Save hours of manual cropping and exporting. Maintain high resolution, adjust focus areas, and even preview before publishing.

Bonus #8 - Video Ads Templates Pack

Launch winning video ads faster with this ready-to-use Video Ads Template Pack. Get access to 100+ customizable templates for various industries like eCommerce, coaching, real estate, and digital services. Each template includes eye-catching visuals, proven CTA structures, and attention-grabbing animations. Just drop in your content, edit the text, and export. Perfect for marketers and business owners who want to run ads without hiring a team or starting from scratch.

Bonus #9 - Caption Pro (Subtitle Maker)

Boost engagement and accessibility with CaptionPro. This smart subtitle generator automatically transcribes your video audio into accurate, timed captions in 100+ languages. Just upload your video, and the tool handles everything—perfect for YouTube, Instagram, and silent-scroll platforms. Customize font style, size, and position to match your branding. No more manual typing or syncing headaches. Captions improve SEO, viewer retention, and reach, especially in global markets.

Bonus #10 - Mockup Maker

Present your videos, websites, or designs like a pro using MockupMagic. Choose from hundreds of realistic device frames (phones, tablets, laptops, desktops) and place your content inside in minutes. Great for showcasing apps, YouTube channels, thumbnails, websites, or digital products. Add backgrounds, lighting effects, and shadows for a polished look. Whether you're creating sales pages, portfolio pieces, or client demos, this tool gives your content a professional edge.

AI VidForest Review – Full Funnel Breakdown & Pricing

🔹 Front-End: AI VidForest – $14.95 (One-Time)

Grab lifetime access to AI VidForest at its lowest-ever price—just $14.95. Don’t wait—this special deal will soon switch to a recurring monthly plan.

✅ Risk-Free Guarantee: If you’re unable to access the software and support can’t resolve the issue within 30 days, you’ll receive a full refund—no questions asked.

💸 Extra Savings: Use code “VIDFORADMIN” to get 30% off instantly!

🔹 AI VidForest OTOs (One-Time Offers)

OTO 1: Unlimited Access

Unlimited Basic: $45

Unlimited Premium: $47

OTO 2: Pro Features

Pro Basic: $45

Pro Full Access: $47

OTO 3: DFY Profitizer – $67

Ready-made profit-generating tools for instant use.

OTO 4: Autopilot Profit – $47

Automate your video profits with minimal effort.

OTO 5: Profit Templates – $67

Access to high-converting video and marketing templates.

OTO 6: DFY Traffic Hub – $37

Plug-and-play traffic solution to drive visitors fast.

OTO 7: AiFortune – $197

Advanced AI suite for scaling your income streams.

OTO 8: Agency Edition – $97

Sell AI VidForest as a service to clients.

OTO 9: Full Reseller License – $97

Keep 100% profits by selling AI VidForest as your own.

OTO 10: Giant Mega Bundle – $197

Massive value pack loaded with premium bonuses and upgrades.


Frequently Asked Questions (FAQ)

Q. Do I need experience or tech/design skills to get started?

A. AI VidForest is created keeping newbies in mind. So, it’s 100% newbie-friendly & requires no prior design or tech skills.

Q. How are you different from available tools in the market?

A. This tool is packed with industry-leading features that have never been offered before. Also, if you’re on this page, it probably means you have already checked out most of the available tools and are looking for a complete solution. You won’t find these features at such a low price anywhere else, so rest assured about your purchase.

Q. Does your software work easily on Mac and Windows?

A. Definitely. AI VidForest is 100% cloud-based and AI-powered, so you can use it on any Mac or Windows machine.

Q. Is step-by-step training included?

A. Yes. AI VidForest comes with step-by-step video training that keeps things simple and easy, guiding you through the entire process with no turbulence.

Q. Do you provide any support?

A. Yes, we’re always on our toes to deliver you an unmatched experience. Drop us an email if you ever have any query, and we’ll be more than happy to help.

Q. Do you provide a money back guarantee?

A. Absolutely yes. We’ve already mentioned on the page that you’re getting a 30-day, no-questions-asked money-back guarantee. Rest assured, your investment is in safe hands.

AI VidForest Review - In Summary

AI VidForest is a game-changer in video creation, transforming simple text prompts into stunning cinematic visuals with ease. It empowers users to unlock both creative expression and income potential—without the burden of costly subscriptions. Whether you're a marketer, content creator, or entrepreneur, AI VidForest makes it effortless to produce high-quality videos and connect with a wider audience. It's a must-have tool for anyone seeking powerful, affordable, and efficient video solutions.



Retail Store Digital Signage Trends to Watch in 2025

As the world changes fast, retail store digital signage is becoming a must-have for brands that want to engage customers, deliver real-time messaging and drive in-store sales. In 2025, digital signage is no longer a nice-to-have; it's a business imperative. From AI to personalisation, here are the top retail store digital signage trends to look out for this year.

1. Personalized, Data-Driven Displays

In 2025, personalized digital signage will dominate the retail scene. Using customer data such as past purchases, browsing behavior, or loyalty program activity, retailers can tailor signage content in real time. Imagine walking into a store and seeing promotions curated just for you based on your preferences. This data-driven approach to retail marketing is boosting dwell time and conversion rates like never before.

2. AI-Powered Content Automation

Using AI to create retail signage content takes this a step further. AI tools can automate signage content based on factors such as time of day, weather, inventory levels, and customer foot traffic. For instance, if a product is low on stock, the signage can change dynamically to urge customers to buy now. This type of responsive advertising helps keep your message relevant and timely.

3. Interactive Digital Signage Experiences

Static displays are a thing of the past. In 2025, interactive digital signage, such as touch kiosks and motion-sensitive screens, is changing how customers shop in-store. These smart displays let shoppers browse a product catalogue, check prices, view size charts and even place orders with little help from sales staff. This reduces friction and improves the customer experience, particularly in fashion and electronics retail.

4. Integration with Mobile and Omnichannel Platforms

Retail store digital signage is increasingly integrated with mobile apps, QR codes, and NFC (near-field communication) technology. Shoppers can scan content from signage to get more information, save an offer, or even purchase the item on their phone. This tight integration supports an omnichannel retail strategy and allows a seamless switch between online and offline shopping.

5. Sustainable and Energy-Efficient Signage Solutions

In a push for sustainability, retailers have started investing in energy-efficient LED digital signage and display screens. Besides consuming less power, these signs are made of green materials. With sustainability ingrained in the brand story, green digital signage helps stores communicate their values and reduce operating costs.

6. Real-Time Analytics and Performance Tracking

Modern digital signage software in 2025 offers advanced analytics and reporting features. Using embedded sensors and cameras, retailers can capture impressions, dwell time, interaction rates, and demographic data. This makes it possible to A/B test signage content and deliver the best possible messaging based on real-time performance data.

7. 3D and Motion Graphics in Visual Merchandising

Retailers are pushing creative boundaries by using 3D animation, motion graphics, and HD video for visual merchandising. These engaging visuals draw customer attention and convey brand messages better than traditional print materials.

Final Thoughts

As retail continues to evolve, retail store digital signage will play an even more pivotal role in shaping customer journeys and delivering personalized content that supports a seamless omnichannel experience. In 2025, smart, sustainable, and interactive signage will not only attract consumers but also engage and convert them.

Whether you have a boutique store or a large chain, staying on top of these trends will give your business the edge it needs in a digital retail world. At Engagis we specialise in smart, scalable and fully integrated digital signage solutions for the retail environment. From interactive displays to AI-powered content management, we help brands turn customer engagement into real business results.

#Retail store digital signage#interactive digital signage#LED digital signage#digital signage software#Digital signage solutions#digital signage


Graphic Design: The Art of Visual Communication

Walk into a store, scroll through Instagram, or browse a website — chances are, you’ve already seen dozens of examples of graphic design today. It’s all around us, quietly shaping how we see, feel, and interact with the world. But what exactly is graphic design, and why does it matter so much?

What Is Graphic Design, Really?

Graphic design is more than just "making things look pretty." At its heart, it’s about solving problems visually. It’s the creative process of combining text, imagery, colors, and layout to communicate a message, evoke emotion, and guide the viewer’s attention.

From the layout of a magazine to the logo of your favorite coffee shop, graphic design is responsible for the way information is presented — and whether it captures attention or gets ignored.

Why Graphic Design Matters

We live in a highly visual world. A widely cited (though poorly sourced) statistic claims that the human brain processes visuals 60,000 times faster than text. Either way, in such an environment good design isn't optional; it's essential.

Here’s why graphic design matters:

First Impressions Count: People judge brands within seconds. Design helps you make a positive, lasting impact.

Trust and Credibility: Professional design signals that you're serious and trustworthy.

Communication Made Clear: Infographics, icons, and layouts make complex information easier to understand.

Stronger Branding: Consistent visual elements build recognition and loyalty.

Main Areas of Graphic Design

1. Visual Identity Design

This includes logos, color schemes, and brand style guides. It’s what gives a business its unique look and feel.

2. Marketing and Advertising

Social media posts, flyers, posters, banners, and email templates are all tools to attract and convert customers.

3. Publication Design

Books, magazines, newspapers, and digital editorial layouts all rely on effective graphic design.

4. User Interface (UI) Design

The visual layout of websites, apps, and software that users interact with directly.

5. Packaging Design

Everything from cereal boxes to skincare bottles. Good packaging design can make the difference between a sale and a pass.

6. Motion Graphics

Animated visuals like explainer videos, transitions, and social media reels that bring static elements to life.

Tools of the Trade

Graphic designers use a range of tools — both traditional and digital — to bring their ideas to life:

Adobe Photoshop (photo editing, composites)

Adobe Illustrator (vector graphics, logos)

Figma (UI/UX and collaborative design)

Canva (accessible design for non-professionals)

Procreate (digital drawing on iPad)

What Makes a Design Great?

It’s not just about creativity. Great design is:

Purposeful – It communicates a clear message.

User-Centric – It considers how the viewer will interact with it.

Consistent – It matches the overall brand or message.

Visually Balanced – Colors, shapes, and space are used effectively.

Innovative – It brings something new or unexpected.

Trends to Watch in 2025

Graphic design is always evolving. Some current trends include:

Bold, expressive typography

3D and immersive visuals

Minimalist design with a twist

Dark mode interfaces

Authentic, hand-drawn illustrations

Graphic Design as a Career

If you're interested in becoming a graphic designer, it’s a fantastic time to start. The demand for design skills spans every industry, from tech and fashion to entertainment and education.

Start by:

Learning the fundamentals (online courses, tutorials, or design school)

Practicing with real or mock projects

Building a portfolio to showcase your work

Collaborating with others and getting feedback

Staying inspired by following trends, artists, and brands you admire

Final Thoughts

Graphic design is everywhere — and it’s here to stay. Whether it’s helping businesses grow, making technology easier to use, or simply adding beauty to everyday life, graphic design plays a critical role in shaping how we see and engage with the world.

Whether you're a business owner, content creator, or aspiring designer, understanding the basics of graphic design can help you communicate more effectively and stand out in a visually crowded world.

#graphic design#graphics design#digital marketing#digital art#seo services#digital marketing services


Visual Effects (VFX) Software Market Growth Driven by Demand for High-Quality Content in Film and Gaming

Growth in the Visual Effects (VFX) software market is gaining momentum as global demand for high-quality digital content intensifies across the film and gaming industries. With consumers increasingly expecting realistic visuals and immersive experiences, content creators are investing heavily in advanced VFX tools to meet and exceed audience expectations. This shift marks a significant evolution in how entertainment is produced, consumed, and valued worldwide.

Rising Demand from Film Industry

The film industry remains one of the primary drivers of the VFX software market. Blockbuster movies and streaming services are competing to deliver visually stunning narratives that captivate global audiences. Studios are leveraging VFX software to create lifelike characters, breathtaking environments, and complex action sequences that would be impossible or cost-prohibitive to film in real life.

High-profile films and series are integrating VFX at every stage, from pre-visualization to post-production. As a result, production companies are prioritizing software that offers advanced simulation, compositing, and rendering capabilities. The continuous evolution of 3D modeling and motion capture technologies has also expanded the creative possibilities for filmmakers, fostering innovation and taking visual storytelling to a whole new level.

Gaming Industry: A Powerhouse for VFX Innovation

Parallel to the film industry, the gaming sector has become a dominant force in driving VFX software adoption. Modern video games now feature highly realistic graphics and intricate visual effects that mirror cinematic quality. Game developers utilize VFX software to craft dynamic environments, fluid character movements, and special effects such as explosions, weather patterns, and light reflections.

The popularity of immersive gaming experiences, including virtual reality (VR) and augmented reality (AR), is pushing VFX software to new heights. These platforms require even more detailed visual elements, prompting software developers to innovate continuously and provide tools that support real-time rendering and efficient asset creation. Consequently, the gaming industry's rapid technological progression is accelerating the growth of the VFX software market.

Technological Advancements Fueling Market Expansion

The market is also benefiting from advances in cloud computing, artificial intelligence (AI), and machine learning (ML). These technologies are revolutionizing the way VFX software operates, offering faster rendering, improved workflow automation, and greater scalability. AI-driven tools can assist artists with tasks like rotoscoping, texture mapping, and facial animation, significantly reducing production timelines and costs.

Cloud-based VFX platforms allow teams to collaborate globally, enabling studios to access a broader talent pool and maintain continuity in cross-border productions. This flexibility has become especially valuable in a post-pandemic world, where remote work remains a common practice in the creative sector.

Market Opportunities and Competitive Landscape

There is a significant market opportunity in developing cost-effective VFX software tailored for indie filmmakers, small studios, and mobile game developers. As the democratization of content creation continues, demand for scalable and user-friendly software solutions will grow. This presents opportunities for new entrants and established players to tap into emerging customer segments.

Major players in the VFX software market include Adobe, Autodesk, Foundry, SideFX, and Maxon, among others. These companies are investing in R&D to enhance their product offerings, integrate emerging technologies, and retain a competitive edge. Strategic partnerships, acquisitions, and licensing agreements are also common as firms aim to expand their global footprint.

Future Outlook

The Visual Effects (VFX) Software Market is poised for sustained growth in the coming years, driven by increasing content consumption, rapid technological innovation, and heightened competition among entertainment platforms. As digital storytelling becomes more sophisticated, VFX software will continue to play a pivotal role in shaping the future of visual media.

In conclusion, the demand for high-quality content in film and gaming is not just a passing trend—it is a long-term catalyst that will continue to elevate the VFX software market. Stakeholders who prioritize innovation, flexibility, and user experience will be well-positioned to thrive in this dynamic and rapidly evolving landscape.


Sketching the Future: Why Traditional Drawing Still Drives Modern Animation

In a world full of sophisticated digital aids, motion capture, and CGI magic, one might think that old-fashioned drawing has gone the way of the dinosaur. Yet beneath some of the most compelling scenes in contemporary animation lies a hand-drawn pencil sketch, the seed of what later dazzles on screen. This harmony of analog beauty and digital skill is the essence of modern animation, and proof that drawing is far from dead: it is stronger and more vital than ever.

The Foundation Never Fades

Hand drawing is more than a throwback to the golden age of animation; it is the ground on which all visual storytelling begins. Whether for a storyboard, a concept piece, a character design, or a full-fledged environment, almost every animation project starts with hand-drawn sketches. These first lines allow animators to explore visual possibilities, establish mood, and define character personality well before animation software comes into play. Even as 3D modeling and AI-driven software take center stage, animators who draw well tend to produce fuller, more expressive work, because they can visualize anatomy, movement, perspective, and emotion in ways a mouse click cannot.

A Resurgence in 2D Appeal

In recent years, the warmth of hand-drawn animation has made a comeback in feature films. Netflix, for example, released Klaus in 2019, a film that blended traditional 2D animation with state-of-the-art lighting. Its success sparked new discussions within the industry about the emotional resonance of handmade art.

More recently, The Peasants (2023), a hand-painted animated feature from the team behind Loving Vincent, continued along this path by showcasing the sheer beauty of frame-by-frame painting. Audiences responded not only to the narrative but to the craftsmanship itself, valuing the human touch in each frame. This renewed appreciation suggests a real hunger for traditional aesthetics, particularly when they are creatively combined with digital innovation.

Why Sketching Still Matters in Digital Workflows

Even in studios with robust animation tools such as Toon Boom, Blender, or After Effects, old-school drawing is at the heart of pre-production. Pencil tests, rough sketches, and animatics offer a low-stress, high-impact way to test ideas prior to full-scale production.

Additionally, artists trained in traditional drawing tend to have a stronger command of timing, spacing, and fluid motion, principles essential to both 2D and 3D animation. Disney's famed "12 Principles of Animation," originally developed to guide hand-drawn work, still inform the techniques used in CGI-heavy productions today. Principles such as squash and stretch, anticipation, and exaggeration are usually first learned with a pencil and sketchpad.

Education Still Focuses on Drawing Ability

Animation schools are not dropping drawing; many are doubling down on it. Syllabi are being reworked to combine classic methods with digital workflows. Students learn to draw by hand not because they will animate every frame that way, but because it trains the eye and builds discipline.

Intriguingly, the call for drawing-oriented animation training has increased, particularly in cities such as Boston, where the creative economy is flourishing. With more studios, game developers, and marketing firms wanting 2D imagery for mobile media and social storytelling, students taking an Animation course in Boston frequently discover that their sketchbook is as valuable as their tablet.

Old Drawing and New Technology: A Harmonious Blend

What is interesting about the current animation era is not a conflict between old and new, but how the two blend together. Hand-drawn characters are now scanned and rigged in software. Artists use tablets designed to recreate the tactile feel of pencil on paper, and apps such as Procreate and Adobe Fresco simulate brushes, inks, and watercolors with remarkable fidelity.

AI models are even being trained on classical art. Recent advances in machine learning enable algorithms to predict how a character might move or emote based on hand-drawn frames. But while AI can automate in-between frames or background elements, the spark of inspiration (the personality in a character's eyes or the shape of a smile) still comes from the artist's hand.

This balance leaves room for experimentation. Productions such as Riot Games' Arcane combine hand-painted textures with 3D modeling to create a distinctive visual language that feels handcrafted yet operates at the scale of a blockbuster.

The Indie Revolution and Hand-Drawn Revival

Yet another area where traditional drawing is making a comeback is the independent animation world. Free from the limitations of popular trends, independent animators frequently prefer 2D techniques for their honesty and emotive capabilities. Short movies, web series, and music videos often rely on the pure energy of hand-drawn pieces.

For example, in early 2025, animation forums were buzzing with the viral popularity of Daydreams, a short film by up-and-coming artist Reina Park. Animated completely in pencil with little post-production, it accumulated millions of views on Vimeo and YouTube—reminding everyone that simplicity, when executed well, can outshine even the most elaborate renders.

This renaissance is motivating a new generation of animators to pick up the pencil again, not out of nostalgia, but for the creative control and emotional impact it offers.

What the Industry Says

Top animators and directors frequently stress the importance of drawing. In an interview with Animation Magazine, Glen Keane (legendary Disney animator and director of Over the Moon) said:

"Drawing is thinking. You can't create an interesting character, whether you're doing 2D or 3D, unless you can think with your pencil."

Studio Ghibli, with its commitment to hand-drawn animation, remains a guiding influence even on CGI-dominated productions. Its recent film, Hayao Miyazaki's The Boy and the Heron (released in Japan as How Do You Live?), was animated almost entirely by hand, a testament to the enduring relevance of traditional techniques.

At the same time, Disney+ and HBO Max are actively investing in 2D projects, a sign that the market recognizes the value of this art form.

The Road Ahead

As technology becomes more accessible, the future of animation will most likely be hybrid, with traditional skills and new tools working in harmony. Drawing will remain the pulse of storytelling, not out of nostalgia, but because it brings simplicity, expression, and humanity to an increasingly mechanized field.

Aspiring animators, then, would be well advised to take up traditional drawing, not as a substitute for digital tools, but as an essential ally that enriches every stage of contemporary production.

In cities with thriving creative industries, such as Boston, schools are already adapting. Curricula in character design, motion graphics, and frame-by-frame storytelling are bridging analog and digital practice, giving students the best of both. One example is the 2D visualization course in Boston, which pairs drawing fundamentals with state-of-the-art software to prepare animators for the demands of today's industry.

Conclusion

Even as technology continues to reshape the tools in animators' arsenals, animation still depends on the fundamental gesture of drawing. From the earliest concept doodles to the final on-screen illusion, the time-tested act of drawing is what defines animation. And as studios worldwide look for artists who combine digital proficiency with a deep understanding of hand-drawn skills, there is renewed emphasis on developing these timeless abilities. That is why Animation courses are growing in popularity: in animation, everything significant still starts with a line.


Bathymetry: An Overview of Underwater Mapping | Epitome

In a world increasingly reliant on precise geospatial data, bathymetry surveys stand out as an indispensable tool for understanding the hidden landscapes beneath bodies of water. From enhancing maritime navigation to aiding scientific research and supporting infrastructure development, bathymetric surveys serve as the cornerstone for any project requiring detailed information about underwater topography.

This blog delves into the essence of bathymetry surveys, why they are vital, and how modern technology has redefined the way we map the seafloor.

What Is Bathymetry?

Bathymetry is the study and mapping of the underwater features of oceans, seas, rivers, and lakes. A bathymetric survey measures the depth of a water body to determine the shape and features of its seabed or riverbed. It is essentially the aquatic counterpart to topography: depth data are collected and converted into charts or 3D models.
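As a rough illustration of the step from raw depth readings to a chart or 3D surface, the Python sketch below bins scattered soundings into a regular grid and averages the depths in each cell. It is a minimal, hypothetical example: the function name `grid_soundings`, the assumption that positions are already in projected metres, and the simple mean-per-cell rule are illustrative choices, not Epitome's workflow. Production hydrographic software typically also weighs measurement uncertainty and rejects outliers before building a surface.

```python
import math
from collections import defaultdict


def grid_soundings(soundings, cell_size_m):
    """Average scattered (x, y, depth) soundings into square grid cells.

    Hypothetical helper for illustration. x and y are assumed to be projected
    coordinates in metres (e.g. easting, northing); depth is in metres.
    Returns a dict mapping (column, row) -> mean depth of that cell.
    """
    if cell_size_m <= 0:
        raise ValueError("cell size must be positive")
    sums = defaultdict(float)   # running depth total per cell
    counts = defaultdict(int)   # number of soundings per cell
    for x, y, depth in soundings:
        # floor() keeps negative coordinates in the correct cell
        cell = (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        sums[cell] += depth
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}
```

With a 2 m cell size, soundings at (0.5, 0.5, 10.0), (1.5, 0.2, 12.0), and (0.9, 0.9, 11.0) all fall in cell (0, 0) and average to 11.0 m. Mean binning is easy to follow but smooths away small features, which is one reason survey-grade tools use more robust estimators.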

Why Bathymetric Surveys Matter

Accurate bathymetric data is critical across a wide range of industries and applications:

Maritime Navigation: Helps ensure safe passage for ships by identifying shallow areas, submerged obstacles, and underwater channels.

Dredging Projects: Provides pre- and post-dredging data to guide excavation and verify results.

Flood Modeling and Risk Management: Supports hydrological modeling and floodplain mapping for better disaster planning.

Environmental Studies: Assists in assessing aquatic habitats, sediment transport, and underwater ecosystems.

Infrastructure Development: Informs the design and placement of bridges, pipelines, ports, and offshore structures.

How Bathymetric Surveys Are Conducted

Modern bathymetric surveys employ various technologies to capture precise data:

Single-Beam Echo Sounders (SBES): Emit a single sonar beam directly below the survey vessel to measure depth. Ideal for smaller, simpler projects.

Multi-Beam Echo Sounders (MBES): Offer broader coverage by emitting multiple sonar beams, allowing high-resolution mapping of large areas quickly and accurately.

LiDAR (Light Detection and Ranging): Airborne systems that use lasers to map shallow coastal waters, combining bathymetric and topographic data.

Side Scan Sonar: Provides detailed images of the seafloor's texture and structure, often used alongside MBES for comprehensive analysis.

GPS and Motion Sensors: Integrated to improve the accuracy of position and depth data by compensating for vessel motion (heave, pitch, and roll) and drift caused by waves and currents.
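Single-beam and multi-beam echo sounders share the same basic arithmetic: sound travels to the bottom and back, so the range is half the speed of sound times the two-way travel time, and the vertical depth of an angled beam is that range times the cosine of the beam angle. The Python sketch below applies that arithmetic and adds common corrections (transducer draft, heave, and tide) to reduce one ping to chart datum. It is a simplified, hypothetical model: the function `reduced_depth`, its parameter names and sign conventions, and the constant 1,500 m/s sound speed are assumptions for illustration. Real surveys use measured sound-velocity profiles, ray tracing, and full motion compensation.

```python
import math

# Assumed nominal sound speed in seawater; real surveys measure a velocity profile.
NOMINAL_SOUND_SPEED_MS = 1500.0


def reduced_depth(two_way_time_s: float,
                  beam_angle_deg: float = 0.0,
                  sound_speed_ms: float = NOMINAL_SOUND_SPEED_MS,
                  transducer_draft_m: float = 0.0,
                  heave_m: float = 0.0,
                  tide_m: float = 0.0) -> float:
    """Convert one echo's two-way travel time to a depth below chart datum.

    Simplified, hypothetical model:
    - sound travels in a straight line at constant speed (no ray bending),
    - beam_angle_deg is measured from vertical (0 = nadir, the single-beam
      case; larger angles mimic the outer beams of a multibeam swath),
    - heave_m is the transducer's upward displacement from its mean position,
    - tide_m is the water level above chart datum at the time of the ping.
    """
    if two_way_time_s <= 0:
        raise ValueError("travel time must be positive")
    # The pulse goes down and back up, so halve the total distance travelled.
    slant_range_m = sound_speed_ms * two_way_time_s / 2.0
    # Project the slanted range onto the vertical.
    depth_below_transducer = slant_range_m * math.cos(math.radians(beam_angle_deg))
    # The transducer sits draft metres below the waterline, minus any upward heave.
    depth_below_surface = depth_below_transducer + transducer_draft_m - heave_m
    # Subtract the tide height to reduce the sounding to chart datum.
    return depth_below_surface - tide_m
```

For example, `reduced_depth(0.02, transducer_draft_m=0.5, tide_m=1.2)` gives about 14.3 m: a 15 m range below the transducer, plus 0.5 m of draft, minus 1.2 m of tide. Keeping each correction as a separate parameter makes it easy to see how a small error in any one of them (for instance, an inaccurate sound speed) propagates directly into the charted depth.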

The Epitome of Precision and Technology

Today’s bathymetry surveys are not just about depth—they’re about data accuracy, spatial resolution, and the ability to visualize the unseen. The use of real-time kinematic positioning (RTK), autonomous surface vessels (ASVs), and advanced data processing software ensures that surveys deliver pinpoint precision. High-definition 3D models and GIS integration allow stakeholders to make informed decisions faster and more confidently.

Applications Across Industries

Whether it’s for offshore wind farms, marine construction, coastal management, or pipeline routing, bathymetry surveys play a pivotal role. Ports and harbors rely on them for dredging and safe navigation. Oil and gas companies use them for subsea asset installation. Governments depend on them for environmental monitoring and disaster resilience.

Conclusion