#ALTER TABLE SQL Server


Adding a Column with Default Value to an Existing Table in SQL Server

To add a column with a default value to an existing table in SQL Server, you can use the ALTER TABLE statement combined with the ADD clause. The general syntax for adding a new column with a default value is as follows:

ALTER TABLE TableName ADD ColumnName DataType DEFAULT DefaultValue;

Here’s a step-by-step breakdown of the syntax:

ALTER TABLE TableName: This specifies that you’re modifying…
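A runnable sketch of this pattern follows. It uses Python's built-in sqlite3 module and an in-memory database only so that it runs anywhere; SQLite is a stand-in for SQL Server here, and the Orders table and Status column are hypothetical. The new column is declared NOT NULL on purpose: SQL Server fills existing rows with the default only when the new column is NOT NULL or the statement adds WITH VALUES, and otherwise leaves those rows NULL. The T-SQL equivalent would be ALTER TABLE Orders ADD Status VARCHAR(20) NOT NULL DEFAULT 'Pending';

```python
import sqlite3


def add_status_column():
    """Add a NOT NULL column with a default to a table that already has rows."""
    conn = sqlite3.connect(":memory:")  # SQLite stands in for SQL Server in this sketch
    conn.execute("CREATE TABLE Orders (OrderId INTEGER PRIMARY KEY, Amount REAL)")
    conn.execute("INSERT INTO Orders (OrderId, Amount) VALUES (1, 19.99)")

    # Same shape as: ALTER TABLE TableName ADD ColumnName DataType DEFAULT DefaultValue;
    conn.execute("ALTER TABLE Orders ADD Status TEXT NOT NULL DEFAULT 'Pending'")

    # A later insert that omits Status also receives the default.
    conn.execute("INSERT INTO Orders (OrderId, Amount) VALUES (2, 5.00)")
    rows = conn.execute("SELECT OrderId, Status FROM Orders ORDER BY OrderId").fetchall()
    conn.close()
    return rows


print(add_status_column())  # [(1, 'Pending'), (2, 'Pending')]
```

Both the row that existed before the ALTER and the row inserted afterwards report 'Pending'.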


Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, popularly called SQL (pronounced "ess-cue-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant database language around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured information.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don’t need to tell SQL how to fetch the data; the database figures that out.

Standardized: SQL has been standardized by organizations such as ANSI and ISO; most database systems implement the core language and add their own extensions.

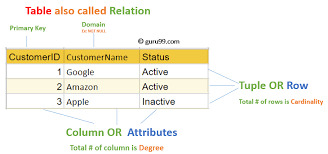

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several main categories of commands, each with a distinct purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects such as tables, schemas, and indexes.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but keep its structure.

Example:

```sql
CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);
```

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

```sql
INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);
```
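The DML commands above can be tried end to end with the sketch below. It rests on a few assumptions: it runs against an in-memory SQLite database through Python's sqlite3 module rather than a server RDBMS, and the extra employees (Bob Lee, Cara Diaz) are made-up sample rows. The ? placeholders pass values separately from the SQL text, which is the usual defense against SQL injection.

```python
import sqlite3


def dml_demo():
    conn = sqlite3.connect(":memory:")
    # DDL from the example above; SQLite accepts INT, VARCHAR(100) and DECIMAL(10,2).
    conn.execute("""CREATE TABLE employees (
                        id INT PRIMARY KEY,
                        name VARCHAR(100),
                        salary DECIMAL(10,2))""")

    # INSERT: add new rows (values bound through ? placeholders).
    conn.executemany(
        "INSERT INTO employees (id, name, salary) VALUES (?, ?, ?)",
        [(1, "Alice Johnson", 75000.00), (2, "Bob Lee", 52000.00), (3, "Cara Diaz", 64000.00)],
    )
    # UPDATE: modify an existing row.
    conn.execute("UPDATE employees SET salary = salary + 3000 WHERE id = 2")
    # DELETE: remove a row.
    conn.execute("DELETE FROM employees WHERE id = 3")

    # SELECT: read back what is left.
    rows = conn.execute("SELECT id, name, salary FROM employees ORDER BY id").fetchall()
    conn.close()
    return rows


for row in dml_demo():
    print(row)
```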

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

```sql
SELECT name, salary FROM employees WHERE salary > 60000;
```

This command retrieves names and salaries of employees earning more than 60,000.

4. Data Control Language (DCL)

DCL commands handle permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

```sql
GRANT SELECT, INSERT ON employees TO john_doe;
```

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

```sql
BEGIN;  -- SQL Server uses BEGIN TRANSACTION;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
```
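The sketch below shows the TCL commands working together, including a SAVEPOINT that lets part of a transaction be undone. It assumes SQLite via Python's sqlite3 module, opened in autocommit mode so that the transaction statements are issued explicitly, as in the text; the before_bonus savepoint name is hypothetical.

```python
import sqlite3


def rollback_demo():
    # isolation_level=None: the sqlite3 module issues no implicit BEGIN/COMMIT,
    # so the BEGIN / SAVEPOINT / ROLLBACK / COMMIT statements below are in control.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, salary REAL)")
    conn.execute("INSERT INTO employees (id, salary) VALUES (1, 1000.0)")

    conn.execute("BEGIN")
    conn.execute("UPDATE employees SET salary = salary * 1.10")   # 10% raise
    conn.execute("SAVEPOINT before_bonus")
    conn.execute("UPDATE employees SET salary = salary + 500")    # bonus
    conn.execute("ROLLBACK TO SAVEPOINT before_bonus")            # undo only the bonus
    conn.execute("COMMIT")                                        # keep the raise

    salary = conn.execute("SELECT salary FROM employees WHERE id = 1").fetchone()[0]
    conn.close()
    return salary


print(round(rollback_demo(), 2))
```

The 10% raise survives the COMMIT, while the bonus applied after the savepoint is rolled back.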

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows that share a value.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.
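To see how WHERE, GROUP BY, HAVING, and ORDER BY interact in one query, here is a small sketch on an in-memory SQLite database via Python's sqlite3. The dept column and the sample rows are hypothetical. The order of operations matters: WHERE removes individual rows first, GROUP BY forms groups from what remains, HAVING then discards whole groups, and ORDER BY sorts the result.

```python
import sqlite3


def headcount_by_department(min_headcount):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary REAL)")
    conn.executemany("INSERT INTO employees VALUES (?, ?, ?)", [
        ("Alice", "Sales", 70000), ("Bob", "Sales", 50000),
        ("Cara", "IT", 90000), ("Dan", "IT", 80000), ("Eve", "IT", 70000),
        ("Finn", "HR", 38000), ("Gus", "HR", 52000),
    ])
    rows = conn.execute(
        """SELECT dept, COUNT(*) AS headcount, AVG(salary) AS avg_salary
           FROM employees
           WHERE salary > 40000      -- filters individual rows before grouping
           GROUP BY dept             -- one group per department
           HAVING COUNT(*) >= ?      -- filters whole groups
           ORDER BY headcount DESC""",
        (min_headcount,),
    ).fetchall()
    conn.close()
    return rows


print(headcount_by_department(2))
```

With min_headcount=2, HR drops out: WHERE filters Finn, leaving a one-row group that HAVING rejects.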

Example with JOIN:

```sql
SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;
```

Types of Joins in SQL

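INNER JOIN: Returns rows with matching values in both tables.

LEFT JOIN: Returns all rows from the left table, plus the matching rows from the right table (NULLs where there is no match).

RIGHT JOIN: The opposite of LEFT JOIN; returns all rows from the right table, plus the matching rows from the left.

FULL JOIN: Returns all rows from both tables, matching them where possible and filling in NULLs where there is no match.

SELF JOIN: Joins a table to itself.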
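The difference between INNER JOIN and LEFT JOIN is easiest to see on a row that has no match. The sketch below assumes SQLite through Python's sqlite3 module and hypothetical sample data in which one employee has no department. RIGHT and FULL joins are left out because SQLite versions before 3.39 do not support them.

```python
import sqlite3


def join_demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
        INSERT INTO departments VALUES (1, 'Sales'), (2, 'IT');
        INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', 2), (3, 'Cara', NULL);
    """)
    # INNER JOIN keeps only employees whose dept_id matches a department.
    inner = conn.execute("""
        SELECT e.name, d.name FROM employees e
        INNER JOIN departments d ON e.dept_id = d.id
        ORDER BY e.id""").fetchall()
    # LEFT JOIN keeps every employee; unmatched department columns become NULL (None).
    left = conn.execute("""
        SELECT e.name, d.name FROM employees e
        LEFT JOIN departments d ON e.dept_id = d.id
        ORDER BY e.id""").fetchall()
    conn.close()
    return inner, left


inner, left = join_demo()
print(inner)
print(left)
```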

Subqueries and Nested Queries

A subquery is a query nested inside another query.

Example:

```sql
SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);
```

This finds employees who earn more than the average salary.
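Running the subquery example on a few rows makes the logic concrete. This sketch assumes SQLite via Python's sqlite3 and made-up salaries. The inner SELECT does not depend on the outer row, so it produces a single value (the average, about 63,667 here) that each row is compared against.

```python
import sqlite3


def above_average_earners():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (name TEXT, salary REAL)")
    conn.executemany("INSERT INTO employees VALUES (?, ?)",
                     [("Alice", 75000), ("Bob", 52000), ("Cara", 64000)])
    # Uncorrelated subquery: AVG(salary) is a single value used by the outer WHERE.
    rows = conn.execute("""
        SELECT name FROM employees
        WHERE salary > (SELECT AVG(salary) FROM employees)
        ORDER BY name""").fetchall()
    conn.close()
    return [name for (name,) in rows]


print(above_average_earners())
```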

Functions in SQL

SQL includes built-in functions for performing calculations and formatting (exact names vary by vendor):

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW() and CURDATE() (MySQL), DATEADD() (SQL Server)

Conversion Functions: CAST(), CONVERT()

Indexes in SQL

An index is used to speed up searches.

Example:

```sql
CREATE INDEX idx_name ON employees(name);
```

Indexes help improve the performance of queries on large tables.
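One way to confirm that an index is actually used is to inspect the query plan before and after creating it. The sketch below assumes SQLite, whose EXPLAIN QUERY PLAN output is read here through Python's sqlite3; other systems expose the same idea differently (execution plans in SQL Server, EXPLAIN in PostgreSQL and MySQL). The exact plan wording varies between SQLite versions, so the checks only look for key words: SCAN means a full table scan, SEARCH means an index lookup.

```python
import sqlite3


def plan_details(conn, sql, params=()):
    """Return the human-readable 'detail' column from EXPLAIN QUERY PLAN."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


def index_demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
    query = "SELECT id, salary FROM employees WHERE name = ?"

    before = plan_details(conn, query, ("Alice",))  # no index on name yet
    conn.execute("CREATE INDEX idx_name ON employees(name)")
    after = plan_details(conn, query, ("Alice",))   # planner can now use idx_name
    conn.close()
    return before, after


before, after = index_demo()
print("before:", before)
print("after: ", after)
```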

Views in SQL

A view is a virtual table defined by a query.

Example:

```sql
CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;
```

Views are beneficial for:

Security (hide certain columns)

Simplifying complex queries

Reusability
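A short sketch of a view in action follows, assuming SQLite via Python's sqlite3. The ssn column is a hypothetical sensitive field that the view leaves out. Because a view stores the query rather than the rows, later changes to the base table appear in it automatically. A view on its own does not enforce security; that comes from granting users access to the view instead of the underlying table, which server databases such as SQL Server and PostgreSQL support and SQLite (which has no user permissions) does not.

```python
import sqlite3


def view_demo():
    conn = sqlite3.connect(":memory:")
    # ssn is a hypothetical sensitive column that the view does not expose.
    conn.execute("CREATE TABLE employees (name TEXT, salary REAL, ssn TEXT)")
    conn.executemany("INSERT INTO employees VALUES (?, ?, ?)",
                     [("Alice", 95000, "111-11-1111"), ("Bob", 52000, "222-22-2222")])
    conn.execute("""CREATE VIEW high_earners AS
                    SELECT name, salary FROM employees WHERE salary > 80000""")

    cur = conn.execute("SELECT * FROM high_earners")
    columns = [d[0] for d in cur.description]  # only the columns the view exposes
    rows = cur.fetchall()

    # The view re-runs its query, so a base-table change shows up immediately.
    conn.execute("UPDATE employees SET salary = 85000 WHERE name = 'Bob'")
    names_after = [r[0] for r in conn.execute("SELECT name FROM high_earners ORDER BY name")]
    conn.close()
    return columns, rows, names_after


print(view_demo())
```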

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used with data analytics tools like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: Storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.


How to Improve Database Performance with Smart Optimization Techniques

Database performance is critical to the efficiency and responsiveness of any data-driven application. As data volumes grow and user expectations rise, ensuring your database runs smoothly becomes a top priority. Whether you're managing an e-commerce platform, financial software, or enterprise systems, sluggish database queries can drastically hinder user experience and business productivity.

In this guide, we’ll explore practical and high-impact strategies to improve database performance, reduce latency, and increase throughput.

1. Optimize Your Queries

Poorly written queries are one of the most common causes of database performance issues. Avoid using SELECT * when you only need specific columns. Analyze query execution plans to understand how data is being retrieved and identify potential inefficiencies.

Use indexed columns in WHERE, JOIN, and ORDER BY clauses to take full advantage of the database indexing system.
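The advice about SELECT * can be checked in a query plan. In the sketch below (SQLite via Python's sqlite3; the customers table and idx_customers_city_email index are hypothetical), a query that needs only indexed columns is answered from the index alone, which SQLite reports as a COVERING INDEX, while SELECT * must also visit the table rows to fetch the remaining columns. Other databases word their plans differently, but covering indexes work on the same principle.

```python
import sqlite3


def plan_details(conn, sql, params=()):
    """Return the 'detail' column from SQLite's EXPLAIN QUERY PLAN output."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


def covering_index_demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, city TEXT, notes TEXT)")
    conn.execute("CREATE INDEX idx_customers_city_email ON customers(city, email)")

    # Only indexed columns are needed: the index alone can answer the query.
    narrow = plan_details(conn, "SELECT email FROM customers WHERE city = ?", ("Oslo",))
    # SELECT * also needs `notes`, so each match requires a lookup in the table.
    star = plan_details(conn, "SELECT * FROM customers WHERE city = ?", ("Oslo",))
    conn.close()
    return narrow, star


narrow, star = covering_index_demo()
print("specific columns:", narrow)
print("SELECT *:        ", star)
```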

2. Index Strategically

Indexes are essential for speeding up data retrieval, but too many indexes can hurt write performance and consume excessive storage. Prioritize indexing on columns used in search conditions and join operations. Regularly review and remove unused or redundant indexes.

3. Implement Connection Pooling

Connection pooling allows multiple application users to share a limited number of database connections. This reduces the overhead of opening and closing connections repeatedly, which can significantly improve performance, especially under heavy load.
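The core mechanism of a pool fits in a few lines. The sketch below is illustrative only: ConnectionPool is a hypothetical class, in-memory sqlite3 connections stand in for real database connections, and in practice most drivers and frameworks already provide pooling. Connections are opened once and handed out from a queue; callers return them instead of closing them, and a caller waits (up to a timeout) when every connection is busy.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Minimal fixed-size connection pool (hypothetical helper for illustration)."""

    def __init__(self, factory, size):
        self._idle = queue.Queue(maxsize=size)
        self.created = 0
        for _ in range(size):
            self._idle.put(factory())  # open every connection once, up front
            self.created += 1

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks while all connections are in use; raises queue.Empty after `timeout` seconds.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand the connection back instead of closing it

    def idle_count(self):
        return self._idle.qsize()


def make_conn():
    # check_same_thread=False lets a pooled sqlite3 connection be used from other threads.
    return sqlite3.connect(":memory:", check_same_thread=False)


pool = ConnectionPool(make_conn, size=2)
results = []
for i in range(10):  # ten "requests" share the same two connections
    with pool.connection() as conn:
        results.append(conn.execute("SELECT ?", (i,)).fetchone()[0])
```

Ten requests are served, but only two connections are ever opened.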

4. Cache Frequently Accessed Data

Use caching layers to avoid unnecessary hits to the database. Frequently accessed and rarely changing data—such as configuration settings or product catalogs—can be stored in in-memory caches like Redis or Memcached. This reduces read latency and database load.
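The usual pattern here is cache-aside: check the cache first, and on a miss read from the database and store the result with an expiry time. The sketch below uses a plain Python dictionary as a stand-in for Redis or Memcached; TTLCache, load_setting, and the injectable clock are hypothetical names, and the fake clock exists only so expiry can be shown without waiting.

```python
import time


class TTLCache:
    """In-process cache-aside helper; a stand-in for Redis or Memcached in this sketch."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                 # hit: no database round trip
        value = loader(key)                 # miss or expired: read from the source
        self._store[key] = (now + self.ttl, value)
        return value


# Fake "database" loader that records every read it serves.
db_reads = []


def load_setting(key):
    db_reads.append(key)
    return {"currency": "EUR"}.get(key)


fake_now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.get_or_load("currency", load_setting)  # miss: loads from the source
cache.get_or_load("currency", load_setting)  # hit: served from memory
fake_now[0] = 61.0                           # move past the 60-second TTL
cache.get_or_load("currency", load_setting)  # expired: loads again
```

The TTL bounds how stale cached data can get, which is why this pattern suits data that changes rarely.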

5. Partition Large Tables

Partitioning splits a large table into smaller, more manageable pieces without altering the logical structure. This improves performance for queries that target only a subset of the data. Choose partitioning strategies based on date, region, or other logical divisions relevant to your dataset.
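Databases such as SQL Server and PostgreSQL offer built-in partitioning that routes rows to partitions transparently. The sketch below only imitates the idea by hand so the mechanics are visible: it assumes SQLite (which has no native partitioning) via Python's sqlite3, and the orders_<year> tables and partition_for function are hypothetical. Rows are range-partitioned by year, and a report about one year reads a single partition.

```python
import sqlite3
from datetime import date


def partition_for(order_date):
    """Range-partition by year (hypothetical naming scheme: orders_<year>)."""
    return f"orders_{order_date.year}"


def partition_demo():
    conn = sqlite3.connect(":memory:")
    orders = [(1, date(2023, 5, 1), 40.0), (2, date(2024, 2, 9), 15.0), (3, date(2024, 7, 3), 25.0)]
    for order_id, order_date, amount in orders:
        # The table name is derived from a date object, never from user-supplied text.
        table = partition_for(order_date)
        conn.execute(f"CREATE TABLE IF NOT EXISTS {table} "
                     "(id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)")
        conn.execute(f"INSERT INTO {table} VALUES (?, ?, ?)",
                     (order_id, order_date.isoformat(), amount))

    # A 2024-only report touches one partition instead of every order ever placed.
    total_2024 = conn.execute(
        f"SELECT SUM(amount) FROM {partition_for(date(2024, 1, 1))}").fetchone()[0]
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    conn.close()
    return total_2024, tables


print(partition_demo())
```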

6. Monitor and Tune Regularly

Database performance isn’t a one-time fix—it requires continuous monitoring and tuning. Use performance monitoring tools to track query execution times, slow queries, buffer usage, and I/O patterns. Adjust configurations and SQL statements accordingly to align with evolving workloads.
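Built-in tools usually do this job, for example Query Store in SQL Server, pg_stat_statements in PostgreSQL, or MySQL's slow query log. To show the underlying idea, here is a small wrapper that times each statement and records those above a threshold. TimedConnection is a hypothetical helper, and SQLite via Python's sqlite3 stands in for a production database.

```python
import sqlite3
import time


class TimedConnection:
    """Wraps a DB-API connection and logs statements slower than a threshold."""

    def __init__(self, conn, threshold_ms, clock=time.perf_counter):
        self.conn = conn
        self.threshold_ms = threshold_ms
        self.clock = clock
        self.slow_log = []  # entries of (elapsed_ms, sql)

    def execute(self, sql, params=()):
        start = self.clock()
        rows = self.conn.execute(sql, params).fetchall()
        elapsed_ms = (self.clock() - start) * 1000
        if elapsed_ms >= self.threshold_ms:
            self.slow_log.append((round(elapsed_ms, 3), sql))
        return rows


# threshold_ms=0 logs every statement, which is handy while testing.
db = TimedConnection(sqlite3.connect(":memory:"), threshold_ms=0.0)
db.execute("CREATE TABLE t (x INTEGER)")
db.execute("INSERT INTO t VALUES (?)", (1,))
rows = db.execute("SELECT x FROM t")
for elapsed_ms, sql in db.slow_log:
    print(f"{elapsed_ms:8.3f} ms  {sql}")
```

In production the threshold would be set to whatever counts as slow for the workload.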

7. Offload Reads with Replication

Use read replicas to distribute query load, especially for read-heavy applications. Replication allows you to spread read operations across multiple servers, freeing up the primary database to focus on write operations and reducing overall latency.
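Read/write splitting is usually handled by a proxy, a driver feature, or the data-access layer. The sketch below shows only the routing decision: ReadWriteRouter is a hypothetical class, and the targets are plain names rather than real connections. Classifying statements by their first keyword is deliberately naive (it would misroute, for example, a SELECT ... FOR UPDATE). Because replicas can lag behind the primary, the needs_fresh_read flag sends reads that must see just-written data to the primary.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the primary and spread reads across replicas round-robin."""

    WRITE_PREFIXES = ("INSERT", "UPDATE", "DELETE", "CREATE", "ALTER", "DROP")

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def target_for(self, sql, needs_fresh_read=False):
        is_write = sql.lstrip().upper().startswith(self.WRITE_PREFIXES)
        if is_write or needs_fresh_read or self._replicas is None:
            return self.primary
        return next(self._replicas)


router = ReadWriteRouter("primary-db", ["replica-1", "replica-2"])
for sql in ["INSERT INTO orders VALUES (1)", "SELECT * FROM orders", "SELECT COUNT(*) FROM orders"]:
    print(router.target_for(sql), "<-", sql)
```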

8. Control Concurrency and Locking

Poor concurrency control can lead to lock contention and delays. Ensure your transactions are short and efficient. Use appropriate isolation levels to avoid unnecessary locking, and understand the impact of each level on performance and data integrity.


How to enable and disable constraints in SQL Server

```sql
-- Disable all foreign key and CHECK constraints on every table
EXEC sp_MSforeachtable 'ALTER TABLE ? NOCHECK CONSTRAINT ALL';

-- Re-enable them and revalidate the existing rows
EXEC sp_MSforeachtable 'ALTER TABLE ? WITH CHECK CHECK CONSTRAINT ALL';
```
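A few notes on these commands: sp_MSforeachtable is an undocumented Microsoft procedure, so its behavior may change without notice; NOCHECK CONSTRAINT ALL disables CHECK constraints as well as foreign keys; and WITH CHECK CHECK revalidates existing rows when re-enabling, which is what lets SQL Server trust the constraints again. The underlying hazard can be reproduced locally with the sketch below. It assumes SQLite via Python's sqlite3, where foreign key enforcement is toggled with PRAGMA foreign_keys instead; the tables are hypothetical. Turning enforcement back on does not re-examine existing rows, so PRAGMA foreign_key_check plays the role of the WITH CHECK validation.

```python
import sqlite3


def orphan_check_demo():
    # Autocommit mode: PRAGMA foreign_keys is a no-op inside an open transaction.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, "
                 "dept_id INTEGER REFERENCES departments(id))")

    # With enforcement on, a row pointing at a missing department is rejected.
    try:
        conn.execute("INSERT INTO employees (id, dept_id) VALUES (1, 99)")
        rejected = False
    except sqlite3.IntegrityError:
        rejected = True

    # Disable enforcement (compare: NOCHECK CONSTRAINT ALL) and the orphan gets in.
    conn.execute("PRAGMA foreign_keys = OFF")
    conn.execute("INSERT INTO employees (id, dept_id) VALUES (2, 99)")

    # Re-enabling does not re-examine old rows; an explicit check does (compare: WITH CHECK).
    conn.execute("PRAGMA foreign_keys = ON")
    violations = conn.execute("PRAGMA foreign_key_check").fetchall()
    conn.close()
    return rejected, violations


print(orphan_check_demo())
```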


How to Create Interactive Reports in Power BI

In today's data-driven world, businesses need powerful tools to visualize, analyze, and share insights effectively. Microsoft Power BI is one such tool that enables users to create interactive, real-time reports and dashboards with ease. Whether you're a business analyst, student, or aspiring data professional, learning to build interactive reports in Power BI is a key skill that adds tremendous value.

If you're looking for Power BI training in KPHB, this article will guide you through the fundamentals of creating engaging, user-friendly reports in Power BI.

What is an Interactive Report in Power BI?

An interactive report in Power BI allows users to:

Filter and slice data with visual controls

Drill down into data for deeper insights

Use tooltips and visuals for storytelling

Explore data dynamically without editing the report

Interactivity transforms static charts into powerful data exploration tools.

Step-by-Step: Creating Interactive Reports in Power BI

1. Connect to Your Data

Power BI supports multiple data sources—Excel, SQL Server, SharePoint, and many more.

Open Power BI Desktop

Click on “Get Data” and select your data source

Load the data into your report

2. Clean and Prepare Your Data

Use Power Query Editor to:

Remove unwanted columns

Fix formatting issues

Create calculated columns or measures

Clean data ensures accurate and reliable reporting.

3. Add Visualizations

Drag and drop fields onto the canvas to create visuals like:

Bar and column charts

Pie charts

Maps

Tables

Cards and KPIs

4. Enable Interactivity

You can make your report interactive by:

Adding Slicers (e.g., date range, region, category)

Enabling Drillthrough pages for detailed views

Using Bookmarks and Buttons for navigation

Setting up Tooltips to show extra details on hover

These features allow users to explore data without altering the actual dataset.

5. Use Filters and Sync Slicers

Apply visual-level, page-level, or report-level filters to control what data appears. Sync slicers across pages to maintain consistency in filtering.

6. Publish and Share

Save and publish your report to the Power BI Service

Set up permissions and share with your team or clients

Schedule data refreshes to keep the published report up to date

Tips for Designing User-Friendly Reports

Keep visuals simple and clear

Use consistent colors and fonts

Highlight key metrics using cards or KPIs

Group related visuals together

Use white space effectively for readability

Who Should Learn Power BI?

Power BI is essential for:

Business Analysts

Data Analysts

Project Managers

Marketing Professionals

Students aspiring to enter the data field

If you're in KPHB or nearby, enrolling in a Power BI training in KPHB can give you hands-on experience and help you master these concepts faster.

Frequently Asked Questions

Q1. Do I need coding skills to use Power BI? No! Power BI is designed with a drag-and-drop interface. Basic knowledge of DAX (Data Analysis Expressions) can be helpful but is not required for beginners.

Q2. Can I use Power BI for real-time dashboards? Yes. With the right data connection and refresh settings, you can build real-time dashboards.

Q3. Is Power BI free? Power BI Desktop is free. Power BI Pro and Premium offer additional sharing and collaboration features at a cost.

➡️ For more FAQs, visit our website.

Ready to Learn More?

If you're serious about mastering Power BI and want expert guidance, consider joining a Power BI training in KPHB. It’s a great way to build your skills and get certified under professional mentorship.


The Significance of Scalable NoSQL Databases in Contemporary Applications

In the era of big data, businesses encounter unprecedented demands for the processing, storage, and management of vast amounts of information in real-time. Traditional relational databases (SQL) often struggle to meet the evolving needs of modern applications, particularly regarding scalability and flexibility. This is where NoSQL databases prove invaluable, offering a versatile solution for businesses that require high performance, scalability, and flexibility in managing unstructured or semi-structured data.

NoSQL databases have become essential tools for contemporary, data-intensive applications, especially those operating in dynamic environments such as e-commerce, social networks, IoT (Internet of Things), and cloud-native applications. In this blog post, we will examine the advantages of scalable NoSQL databases and how they effectively address the challenges of today's data-centric landscape.

What is a NoSQL Database?

NoSQL, or "Not Only SQL," encompasses a range of non-relational database technologies designed to manage large volumes of data that may not conform to the strict structures of traditional relational databases. Unlike conventional SQL databases, which organize data in rows and tables, NoSQL databases utilize more flexible formats, including documents, graphs, key-value pairs, and wide-column stores.

Some of the most widely used NoSQL databases include MongoDB, Cassandra, Redis, Couchbase, and Amazon DynamoDB. These databases are engineered for horizontal scalability, making them highly effective at managing extensive datasets distributed across multiple servers.

Why Scalability Matters

Scalability is a crucial consideration for any database system employed in contemporary applications, particularly for organizations confronting substantial datasets or fluctuations in traffic. NoSQL databases provide horizontal scalability, enabling businesses to distribute their data across multiple servers or clusters in response to increasing demand. This approach ensures that the database can accommodate heightened workloads without compromising performance.

In contrast, traditional SQL databases generally rely on vertical scalability, which entails augmenting resources (CPU, memory, storage) on a single server. However, vertical scaling has inherent limitations and can become costly and less efficient as datasets expand. This is where NoSQL databases truly excel.

Benefits of Scalable NoSQL Databases

Horizontal Scalability

NoSQL databases are engineered to scale horizontally by distributing data across multiple servers or nodes. This capability presents a significant advantage for applications that must manage large datasets or high traffic volumes, as horizontal scaling allows for the addition of servers in response to data growth, thereby ensuring consistent performance. For instance, platforms such as Facebook and Twitter, which handle millions of users and real-time updates, leverage NoSQL databases like Cassandra to achieve horizontal scalability, enabling them to process vast amounts of unstructured data without encountering performance bottlenecks.
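The data-placement idea behind horizontal scaling can be sketched with consistent hashing, a simplified form of the token-ring approach used by Dynamo-style stores such as Cassandra. In the sketch below, HashRing and the node names are hypothetical. Each node is placed on a hash ring at many points (virtual nodes), and a key belongs to the first node clockwise from its hash. MD5 is used only because it gives the same hash on every run (Python's built-in hash() of strings is randomized per process), not for security.

```python
import bisect
import hashlib


def _hash(value):
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Consistent-hash ring with virtual nodes (illustrative sketch)."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (hash, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def node_for(self, key):
        # First ring point at or after the key's hash, wrapping around the end.
        idx = bisect.bisect(self._ring, (_hash(key),))
        return self._ring[idx % len(self._ring)][1]


keys = [f"user:{i}" for i in range(1000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.node_for(k) for k in keys}
ring.add_node("node-d")
after = {k: ring.node_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved")
```

When a fourth node joins, only the keys that now fall on its ring segments move (roughly a quarter), and every moved key moves to the new node. Naive hash(key) % N placement would instead reshuffle most keys when N changes.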

Flexibility and Schema-Less Design

NoSQL databases provide enhanced flexibility regarding data storage. Unlike SQL databases that necessitate a predefined schema, NoSQL databases can accommodate data in various formats, such as JSON, XML, or key-value pairs. This schema-less characteristic empowers developers to modify and adapt the data structure as applications evolve, facilitating the management of diverse data types without the need to alter the underlying database structure.

High Performance for Big Data

Scalable NoSQL databases are optimized for big data handling, offering accelerated read and write operations critical for real-time applications where response times are crucial. By dispersing data across multiple nodes, NoSQL databases mitigate bottlenecks and ensure that data retrieval and processing occur concurrently across different servers. For example, e-commerce giants like Amazon utilize NoSQL databases to manage customer orders, inventory, and transactions, guaranteeing smooth and prompt responses even during peak sales periods.

Cost-Effective Scaling

NoSQL databases offer horizontal scalability, enabling businesses to expand their storage and computing capacity by adding commodity servers rather than investing in costly, high-performance machines. This distributed architecture renders NoSQL databases more cost-effective for large-scale applications, allowing organizations to scale in alignment with their needs without incurring significant capital expenditures.

Handling Unstructured Data

Modern applications generate a wide array of unstructured or semi-structured data types, including social media interactions, sensor data, multimedia content, and logs. NoSQL databases are specifically designed to accommodate this diversity, making them an ideal choice for IoT applications, real-time analytics, and content management systems.

Use Cases for Scalable NoSQL Databases

E-commerce Platforms: With rapidly changing inventory, customer data, and transactional histories, e-commerce platforms require databases that can scale swiftly while delivering real-time analytics. NoSQL solutions such as MongoDB and Cassandra provide the scalability and performance necessary to maintain smooth operations, even during peak traffic events like Black Friday.

Social Networks: Applications like Instagram and Twitter must process vast amounts of data from users in real-time. NoSQL databases can efficiently store and retrieve this data without compromising the user experience, even as the network expands to accommodate millions of users.

IoT and Big Data: IoT devices generate massive data streams that need to be processed and analyzed in real-time. Scalable NoSQL databases can ingest, store, and analyze this data, delivering faster insights and promoting more efficient operations.

Conclusion

In today's landscape, where businesses must process extensive volumes of diverse data in real-time, scalable NoSQL databases offer the flexibility, performance, and cost-effective scalability essential to address these challenges. By enabling horizontal scaling, accommodating unstructured data, and providing high performance for big data applications, NoSQL databases are crucial for any organization aiming to remain competitive in the digital era. Whether developing a cloud-native application, an IoT platform, or a data-intensive service, a scalable NoSQL database is pivotal for fostering innovation and driving growth.


How to Install PostgreSQL on Windows Step by Step

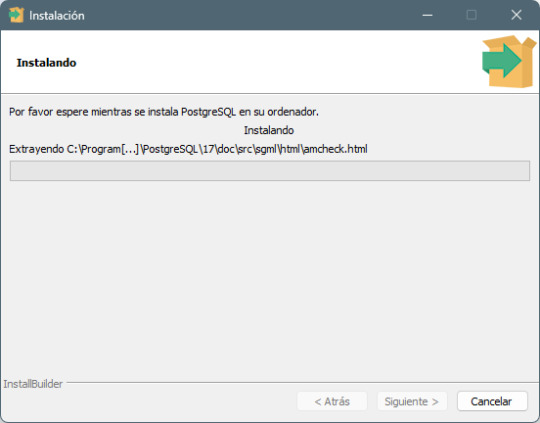

PostgreSQL is an open-source relational database system widely used for its robustness and its support for advanced features such as JSON and geospatial operations. Below, we show how to install PostgreSQL on Windows, followed by a personalized exercise to confirm that students have completed the installation and can use the system.

Prerequisites
- Operating system: Windows 10 or later.
- Minimum RAM: 4 GB.
- Free disk space: at least 2 GB.
- An internet connection to download the installer.

For this topic, it is important to take screenshots of the entire process so that you can submit them as evidence of your work.

Step-by-step PostgreSQL installation

Step 1: Download the installer
- Go to the official PostgreSQL website: Download PostgreSQL.
- Select Windows and click the link to download the corresponding installer.

Step 2: Run the installer
- Once it has downloaded, double-click the file postgresql-17.0-1-windows.exe.
- On the first screen, click Next.

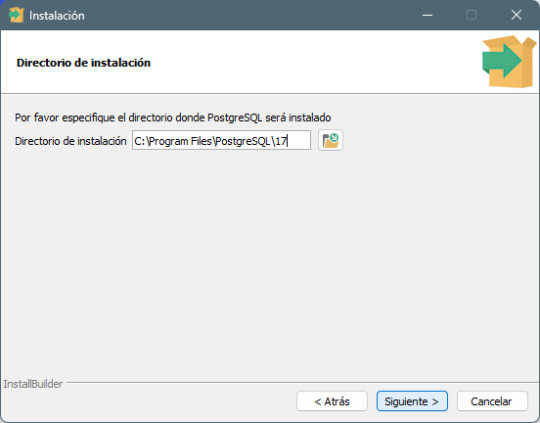

Step 3: Choose the installation directory
- Choose the folder where you want to install PostgreSQL, or keep the default.
- Click Next.

Step 4: Select components
- Make sure the following are selected:
  - PostgreSQL Server
  - pgAdmin 4 (a graphical tool for managing databases)
- Click Next.

Step 5: Set the superuser password
- Enter and confirm a password for the postgres user (write it down; you will need it later).
- Click Next.

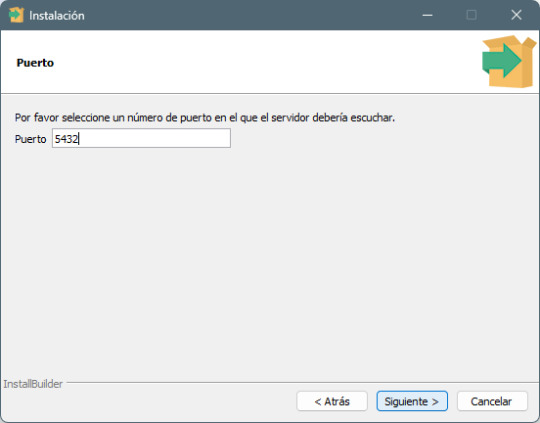

Step 6: Configure the connection port
- Keep the default port, 5432 (unless you need to change it for some reason).
- Click Next.

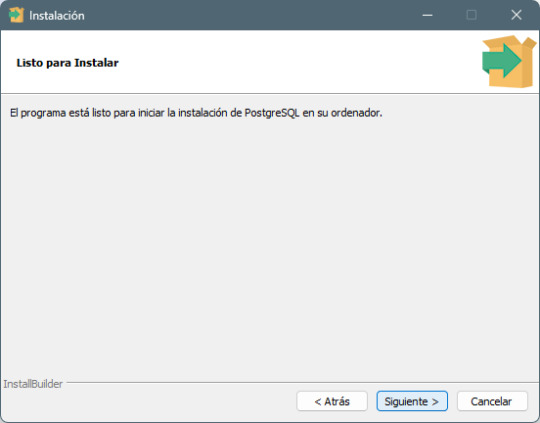

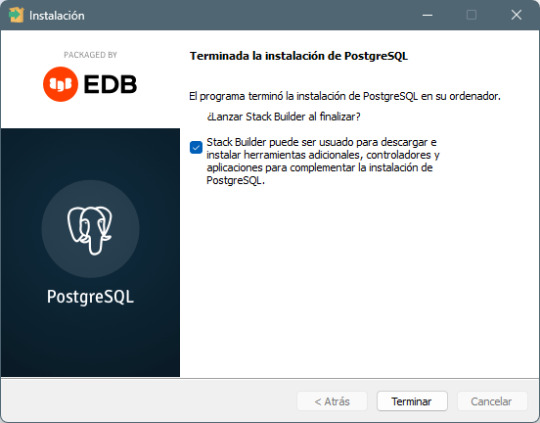

Step 7: Finish the installation
- Click Next and then Finish to complete the installation.
- PostgreSQL and pgAdmin 4 are now ready to use.

Step-by-step guide to using PostgreSQL with pgAdmin 4

Step 1: Open pgAdmin 4

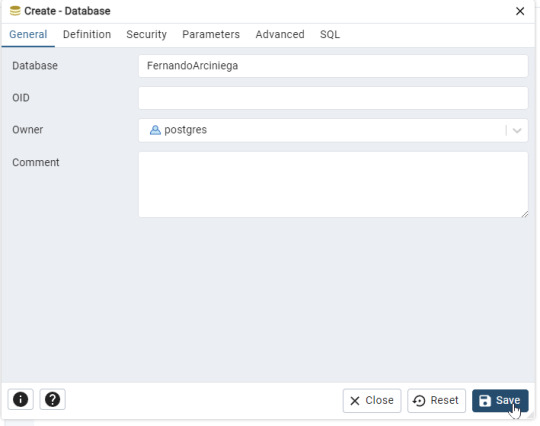

- From the Start menu, open pgAdmin 4.
- Enter the password you set for the postgres user.

Step 2: Create a new database
- In the left panel, right-click Databases and select Create > Database.

- In the Database Name field, enter your first name and first surname (for example: FernandoArciniega).
- Click Save to create the database.

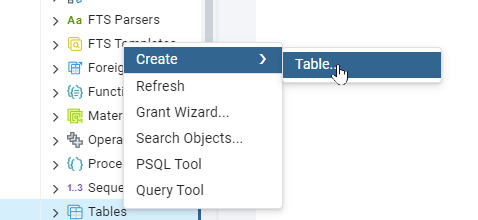

Step 3: Create a custom table
- Select your database and go to Schemas > Tables.

- Right-click Tables and select Create > Table.

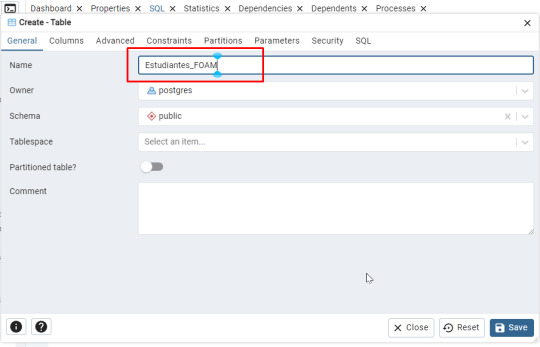

- Give the table a name with the prefix Estudiantes_ (for example: Estudiantes_FOAM).

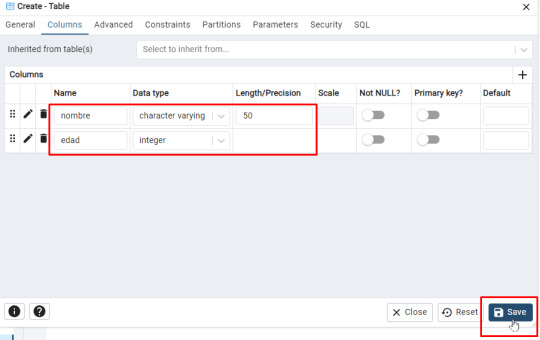

Add two columns (Columns tab):

- Nombre (type: character varying(50))
- Edad (type: integer)

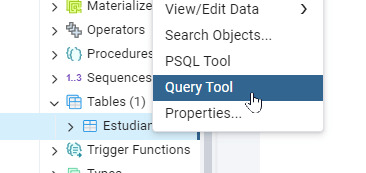

- Save the table by clicking Save. The following code will appear:

```sql
-- Table: public.Estudiantes_FOAM

-- DROP TABLE IF EXISTS public."Estudiantes_FOAM";

CREATE TABLE IF NOT EXISTS public."Estudiantes_FOAM"
(
    nombre character varying(50) COLLATE pg_catalog."default",
    edad integer
)
TABLESPACE pg_default;

ALTER TABLE IF EXISTS public."Estudiantes_FOAM"
    OWNER to postgres;
```

Step 4: Insert data into the table
- Right-click the table and select Query Tool.

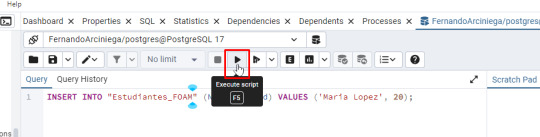

- Write the following SQL query to insert a record:

```sql
INSERT INTO "Estudiantes_FOAM" (Nombre, Edad) VALUES ('Maria Lopez', 20);
```

- Click Run (or press F5) to execute the query.

Step 5: Verify the data
- Right-click the table and select View/Edit Data > All Rows.
- Check that the record was inserted correctly.

Insert 14 more records so that your table has 15 in total.

Practice: Demonstrate the installation and use of PostgreSQL

Personalized submission instructions:
- Custom database: each student must create a database named with their full name.
- Custom table: create a table with the prefix Estudiantes_ (for example: Estudiantes_FOAM).
- Data insertion: insert 15 records in total, with names and ages.

Submitting the work
- Format: printed.
- Teams of 4 people.
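Instead of running 14 separate INSERT statements, PostgreSQL accepts several rows in a single VALUES list. The Python helper below is a hypothetical convenience for writing that statement: the student names are made-up sample data, and the column names nombre and edad match the table pgAdmin generated. It doubles any single quote inside a name (as in O'Connor) so the string literal stays valid, and, as an optional local check, runs the statement against an in-memory SQLite copy of the table before you paste it into pgAdmin's Query Tool. For application code, parameterized queries through a database driver are safer than building SQL text.

```python
import sqlite3


def sql_literal(value):
    """Render a str or int as a SQL literal (single quotes doubled inside strings)."""
    if isinstance(value, str):
        return "'" + value.replace("'", "''") + "'"
    return str(int(value))


def build_multi_insert(table, rows):
    """Build one INSERT statement with several VALUES rows for (nombre, edad)."""
    values = ",\n".join(f"  ({sql_literal(n)}, {sql_literal(e)})" for n, e in rows)
    return f'INSERT INTO "{table}" (nombre, edad) VALUES\n{values};'


students = [("Ana O'Connor", 21), ("Luis Perez", 19)]  # sample data; add your own rows
statement = build_multi_insert("Estudiantes_FOAM", students)
print(statement)

# Optional local check: run the statement against an in-memory SQLite copy of the table.
check = sqlite3.connect(":memory:")
check.execute('CREATE TABLE "Estudiantes_FOAM" (nombre varchar(50), edad integer)')
check.execute(statement)
inserted = check.execute('SELECT COUNT(*) FROM "Estudiantes_FOAM"').fetchone()[0]
check.close()
```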


Creating and Managing SSRS Reports in Dynamics 365 Finance and Operations

In Dynamics 365 Finance and Operations (D365FO), SQL Server Reporting Services (SSRS) is an effective tool for creating, organizing, and distributing reports. By providing comprehensive insights into corporate data, SSRS reports help users optimize operational efficiency and make well-informed decisions. This article walks you through creating, modifying, and maintaining SSRS reports in D365FO, with practical advice and best practices to help you get the most out of your reporting.

What does SSRS mean in Dynamics 365 Finance and Operations?

SSRS in D365FO refers to creating and managing reports by integrating SQL Server Reporting Services with the ERP system. These reports can include operational indicators, financial statements, and other crucial business data. Because SSRS reports are highly flexible, they can be tailored to specific reporting requirements, supporting both standard and customized reports.

Key Steps to Create and Manage SSRS Reports in D365FO

1. Understand the Report Development Environment

Development Tools: SSRS reports are created in Visual Studio or SQL Server Data Tools (SSDT). Make sure the right development environment is configured.

Data Sources: In D365FO, report data can be pulled from tables, data entities, and ERP system queries, among other sources.

2. Create the Report

Create a New Report Project: To get started, create a new SSRS report project in SSDT or Visual Studio. Define the report's parameters and format based on your needs.

Define the Data Sources: Connect to the D365FO database or data entities to obtain the required data. Use existing data entities, or create new ones if necessary.

Design the Report Layout: Use the report designer to arrange data fields, tables, charts, and other graphical components. Make sure the design meets reporting requirements and is easy to use.

3. Create Report Queries

Use Data Entities: In D365FO, data entities offer a more straightforward way to access data for most reports. Create queries against these entities to get the necessary data.

Optimize Performance: Make your queries as efficient as possible, particularly when working with large datasets. Avoid unnecessarily complex joins and use indexes wisely.

4. Implement Report Parameters

Include Parameters: Add parameters to your reports so that users can filter and adjust the information shown. Date ranges, project codes, and other relevant criteria are typical examples.

Set Up Default Values: Define default parameter values where appropriate to improve the user experience.

5. Deploy and Test the Report

Deploy the Report: Once development is finished, deploy the report to the D365FO environment. This involves configuring the required parameters and uploading the report to the report server.

Test the Report: Test the report thoroughly to make sure it performs as intended. Verify its accuracy, functionality, and user interface, and confirm that it meets all operational needs.

6. Monitor and Maintain Reports

Update Reports: Regularly update reports to reflect changes in data structures or business requirements. Use version control to manage report modifications.

Track Performance: Monitor report performance and user feedback to find and fix problems. Optimize as necessary to keep data retrieval and display efficient.

Best Practices for SSRS Reports in D365FO

Keep Reports User-Centric: Think about the end user when creating reports. Make sure reports are clear, easy to read, and provide essential information without overwhelming users.

Ensure Data Accuracy: Verify that the data displayed in reports is accurate and timely. Review and reconcile report data against source systems on a regular basis.

Optimize for Performance: Write efficient queries and make use of indexing. Where feasible, minimize complex calculations and large datasets.

Follow Security Procedures: Put the necessary security measures in place so that only authorized individuals can access sensitive data in reports. Set access limits and report permissions appropriately.

Summary

In Dynamics 365 Finance and Operations, SSRS reports are crucial resources for producing meaningful insights and supporting data-driven decision-making. Understanding how to create, modify, and maintain SSRS reports can help you improve your reporting skills and give stakeholders useful information. Following best practices helps keep reports accurate, efficient, and user-friendly, which ultimately improves business results and operational efficiency. Gaining proficiency in SSRS reporting will help you take advantage of D365FO's full capabilities and help your company make more strategic, well-informed decisions.


How To Use The SQL Server ALTER Keyword To Modify Database Objects

The SQL Server ALTER keyword is a fundamental tool in a database administrator’s toolkit, allowing for modifications to database objects without the need to drop and recreate them. This powerful command is versatile, enabling changes to tables, stored procedures, views, functions, triggers, and more. Understanding how to use the ALTER keyword effectively can significantly enhance your ability to manage and optimize your SQL Server databases...

Learn more here:

https://www.nilebits.com/blog/2024/08/alter-modify-database-objects/
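The most common use of `ALTER` is adding a column to an existing table in place. The example below is a minimal sketch of that operation. It uses SQLite, through Python's built-in `sqlite3` module, as a stand-in for SQL Server, and the `customers` table and `status` column are hypothetical. In T-SQL, you omit the `COLUMN` keyword and can name the default constraint, for example `ALTER TABLE dbo.customers ADD status varchar(20) NOT NULL CONSTRAINT DF_customers_status DEFAULT 'active';`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table with rows that exist before the schema change.
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.executemany("INSERT INTO customers (name) VALUES (?)", [("Ada",), ("Grace",)])

# Modify the table in place instead of dropping and recreating it.
# A NOT NULL column needs a default so that existing rows get a value.
conn.execute("ALTER TABLE customers ADD COLUMN status TEXT NOT NULL DEFAULT 'active'")

# New rows that omit the column also receive the default.
conn.execute("INSERT INTO customers (name) VALUES ('Linus')")

rows = conn.execute("SELECT name, status FROM customers ORDER BY id").fetchall()
print(rows)  # [('Ada', 'active'), ('Grace', 'active'), ('Linus', 'active')]
```

Because the new column is `NOT NULL` and has a default, existing rows pick up the default value. In SQL Server, if you add a nullable column with a default, existing rows stay `NULL` unless you also specify `WITH VALUES`.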


SQL, or Structured Query Language, is a standardized programming language used for managing and manipulating relational databases. It allows users to perform various operations on data stored in databases, such as:

Querying: Retrieving specific data from a database (e.g., using the SELECT statement).

Inserting: Adding new records to a table (e.g., using the INSERT INTO statement).

Updating: Modifying existing data within a table (e.g., using the UPDATE statement).

Deleting: Removing records from a table (e.g., using the DELETE statement).

Creating and Modifying Structures: Creating new tables, modifying existing ones, and defining relationships between them (e.g., using CREATE TABLE, ALTER TABLE).

SQL is the standard query language of relational database management systems (RDBMS) such as MySQL, PostgreSQL, SQL Server, and SQLite, although each system adds its own extensions. It provides a way to interact with both the data and the structure within these systems, making it a crucial skill for anyone working with databases.
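The short script below shows all five kinds of operations listed above. This sketch uses SQLite through Python's built-in `sqlite3` module rather than one of the server databases, and the `products` table and its data are hypothetical. The `INSERT`, `UPDATE`, `DELETE`, and `SELECT` statements are plain standard SQL, while the column types in `CREATE TABLE` follow SQLite's conventions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Creating structures: define a table (CREATE TABLE).
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# Inserting: add new records (INSERT INTO).
conn.executemany(
    "INSERT INTO products (name, price) VALUES (?, ?)",
    [("pen", 1.50), ("notebook", 4.00), ("stapler", 12.00)],
)

# Updating: modify existing data (UPDATE).
conn.execute("UPDATE products SET price = 3.50 WHERE name = 'notebook'")

# Deleting: remove records (DELETE).
conn.execute("DELETE FROM products WHERE name = 'stapler'")

# Querying: retrieve specific data (SELECT).
rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(rows)  # [('notebook', 3.5), ('pen', 1.5)]
conn.close()
```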


6 Different Types of Cyber Threats


Table of Contents

What Are Cyber Threats?

Different Types of Cyber Threats

Ransomware

Malware

Phishing & Spam

Internal Threats

Denial-of-Service Attacks

SQL Injection

Key Takeaways

Conclusion

FAQs

What Are Cyber Threats?

Cyber threats refer to the risk of malicious attacks aiming to breach a network, corrupt data, or steal sensitive information. Both individuals and organizations face these threats, which can lead to severe consequences like data breaches or system failures. As cyber threats grow more advanced, it's crucial to implement robust security measures and stay informed about the different types of threats to ensure adequate protection.

Different Types of Cyber Threats

Ransomware

Ransomware is a form of malicious software that blocks users from accessing their systems. Attackers gain control over the system and demand payment, often in cryptocurrencies like Bitcoin, to restore access. Ransomware encrypts files on the infected network, and the decryption key is kept by the cybercriminals. The victim is then required to pay a ransom to regain access to their data.

Protection Tips: To defend against ransomware, focus on prevention. Employ strong security practices, train employees, and establish effective incident response and business continuity plans.

Malware

Malware, short for "malicious software," encompasses various threats like viruses, worms, trojans, spyware, and ransomware. Malware typically infiltrates a system through untrusted links, emails, or downloads. Once inside, it can gather private information, alter or delete data, and compromise system integrity.

Protection Tips: Install and regularly update anti-malware software to add a layer of security. Ensure it scans downloaded files automatically to detect and neutralize potential threats.

Phishing & Spam

Phishing involves attempts to obtain sensitive information by masquerading as a trustworthy entity. Cybercriminals may send emails or texts that seem to come from legitimate sources, urging you to click on malicious links or provide personal data. Spam refers to unsolicited messages, which may also be used for phishing.

Protection Tips: Be cautious of emails that prompt you to click links or open attachments, especially if they ask for personal information. Verify the sender’s authenticity and avoid interacting with suspicious messages.

Internal Threats

Internal threats come from individuals within an organization, such as employees, contractors, or former staff, who misuse their access to cause harm. These threats can stem from malicious intent or from negligence, and they can lead to significant financial and reputational damage.

Protection Tips: Cultivate a strong security culture within your organization. Implement access controls, monitor employee activities, and provide regular training to recognize and mitigate potential insider threats.

Denial-of-Service Attacks

Denial-of-Service (DoS) attacks aim to overwhelm a system, server, or network with excessive traffic, causing service disruptions. Distributed Denial-of-Service (DDoS) attacks involve multiple systems working together to launch the attack. These attacks can vary in severity, from minor inconveniences to major financial losses.

Protection Tips: Conduct network vulnerability assessments to identify and address potential weaknesses. Employ multi-layered protection strategies and monitor for signs of attack to mitigate the impact of DoS attacks.

SQL Injection

SQL Injection attacks involve inserting malicious SQL code into a web application’s input fields, which can manipulate or access the database in unintended ways. This technique can expose, modify, or delete data, and in some cases, execute commands on the underlying operating system.

Protection Tips: Validate input, and use prepared statements (parameterized queries) with bind variables. Bound parameters are sent to the database as values rather than as part of the SQL text, so attacker-supplied input cannot change the structure of the query.
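Here is a minimal sketch of why bind variables help. It uses SQLite through Python's built-in `sqlite3` module, and the `users` table and both lookup functions are hypothetical. The unsafe version pastes the input directly into the SQL text, so the classic `' OR '1'='1` payload rewrites the `WHERE` clause. The safe version passes the same input through a `?` placeholder.

```python
import sqlite3

def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "a-secret"), ("bob", "b-secret")])
    return conn

def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input becomes part of the SQL statement itself.
    sql = f"SELECT username FROM users WHERE username = '{username}'"
    return conn.execute(sql).fetchall()

def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # SAFE: the ? placeholder binds the input as a value, never as SQL.
    return conn.execute(
        "SELECT username FROM users WHERE username = ?", (username,)
    ).fetchall()

conn = setup()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # returns every user
print(find_user_safe(conn, payload))    # []
```

The same principle applies to SQL Server. Use your client library's parameter objects, or use `sp_executesql` with typed parameters for dynamic SQL inside T-SQL, instead of building statements by string concatenation.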

Key Takeaways

Cyber threats are continually evolving, making it essential to stay informed and adopt robust security measures. Common threats include malware, phishing, DoS attacks, and SQL injections, each requiring specific protective strategies. Employing anti-malware software, practicing vigilance with emails, and implementing strong access controls are crucial steps in defending against these threats.

Conclusion

Protecting yourself from cyber threats involves a proactive approach. Use strong passwords, enable multi-factor authentication, and keep your software up to date. Investing in a comprehensive cybersecurity solution is vital, whether for personal or business use. Effective cybersecurity measures are essential in safeguarding against the growing risks of cyber attacks.

For tailored cybersecurity solutions, consider iBovi Cybersecurity. We offer cost-effective and easy-to-implement services for both individuals and organizations.


FAQs

Q. Are people aware of cybersecurity? While awareness of cybersecurity is increasing, many people are still insufficiently protected. The field of effective cybersecurity is continually evolving.

Q. Are the threats to cybersecurity growing? Yes, cyber threats are becoming more sophisticated and numerous. Reports indicate a rise in external attacks, especially from criminal organizations and foreign entities.

Q. What does a cyberattack cost? In 2020, victims paid approximately $350 million in ransom, a significant increase from previous years. Reports have estimated the global cost of cybercrime at roughly $600 billion per year.

Q. How should you respond to a potential phishing email? Verify the sender’s identity and avoid clicking on links or opening attachments from unknown sources. If suspicious, report and delete the email. Contact the organization directly using verified contact information, not the details provided in the email.


Discover the Full Potential of Your Data with Tableau Certification Training

The ability to evaluate and visualize data is an essential skill in today's data-driven environment. Taking a Tableau course can change your career, whether you're a business analyst, a data scientist, or just someone trying to improve your data skills. Tableau is one of the best tools for data visualization, and it excels at turning raw data into shareable, interactive dashboards. If you want to become proficient with this powerful tool, signing up for a Tableau Certification Training Course is a wise decision.

Tableau: What is it?

Tableau is one of the leading business intelligence tools that companies use for data analysis. It offers numerous features, such as collaboration, real-time analysis, and data blending. Its user-friendly interface lets both technical and non-technical users create insightful, engaging visualizations without in-depth coding knowledge.

Why Get Certified in Tableau?

Industry Recognition

A Tableau certification is an internationally accepted qualification that attests to your proficiency with Tableau's data analysis and visualization features. It lets potential employers know that you have the know-how to use Tableau to meet their demands.

Better Career Prospects

Tableau-certified professionals are highly sought after in a variety of sectors, including technology, retail, healthcare, and finance. A certification can lead to well-paid positions such as data scientist, business intelligence developer, and analyst.

Understanding Tableau's Features

The whole range of Tableau's features is covered in the certification course. You'll learn all you need to know to become an expert Tableau user, from setting up connections to data sources and carrying out intricate calculations to building sophisticated charts and dashboards.

Overview of a Tableau Certification Training Course

A well-organized Tableau Certification Training Course aims to give you practical experience and a thorough understanding of Tableau. Such a course typically covers the following:

Module 1: Overview of Visualization Techniques

Understanding the value and advantages of data visualization.

Tableau overview: installation, user interface, and main functionalities.

Establishing a Data Connection: connecting Tableau to a range of data sources, including cloud-based, SQL, and Excel sources.

Module 2: Fundamental Methods of Visualization

Making Simple Charts: Pie, line, and bar charts.

Interactive Dashboards: Dashboard creation and customization.

Filters and Parameters: increasing interactivity.

Module 3: Advanced Analytics and Visualization

Advanced Charts: Heat maps, scatter plots, and dual-axis charts.

Table Calculations: Handling intricate computations.

Data Blending and Joins: integrating data from several sources.

Module 4: Real-Time Data and Mapping

Real-Time Data: connecting to live data sources for real-time analysis.

Geospatial Visualization: creating maps and geographic data visualizations.

Module 5: Sharing and Collaboration

Tableau Online and Tableau Server: publishing dashboards on both platforms.

Permissions and Security: Controlling data security and user permissions.

Module 6: Study Guide and Exam Strategies

Exam Format: understanding the structure and question types of the certification exam.

Practice Exams: mock tests to gauge your level of preparedness.

Study Advice: Useful tools and techniques for studying.

Becoming Certified: Tableau has multiple certification tiers, such as:

Tableau Desktop Specialist: Covers fundamental abilities, perfect for novices.

Tableau Desktop Certified Associate: This certification is for intermediate users and emphasizes advanced features.

Tableau Desktop Certified Professional: This advanced certification has a strong emphasis on complex data solutions and is intended for seasoned users.

Tableau Server: focuses on architecture, management, and security.

Exam Format: multiple-choice questions plus practical assignments.

Duration: generally 60 to 120 minutes, depending on the certification level.

Cost: ranges from $100 to $600, depending on the certification.

In summary

Anyone hoping to advance a career in data analytics and visualization should consider investing in a Tableau Certification Training Course. The knowledge and certification you gain will strengthen your CV and help you make data-driven decisions that help your company succeed. Because data is becoming more important in many industries, developing your Tableau skills can put you ahead of the competition in the job market.

Start your Tableau certification path now to revolutionize the way you perceive and convey data!


So, I've eliminated a few paths already. One had nice examples that the author says are scripts. They're not Batch commands. If they're PowerShell, I don't have the right module (and they don't look right to my untrained eye). So what are they? Another was supposedly about learning to use ScriptDOM, but it never explains what to create. Maybe I'm too inexperienced to understand some of this, but if you don't include at least a file type, I'm fairly sure you skipped something.

So I'm trying this. It's worth a shot. First step, have a database project in VS. Uhm... I've never done that. I know why we should. But my work has a history of not requiring programmers to document what we do on production systems. Finally got the server admins doing it a while ago, but folks like me live dangerously. Grumble.

So - step 1, create a database. It's not a listed step, but apparently you don't do the creation in VS. There's no step for it in the template listing at least.

So instead I'm doing https://medium.com/hitachisolutions-braintrust/create-your-first-visual-studio-database-project-e6c22e45145b

Step one: in SSMS run the command:

CREATE DATABASE TCommon

T for temporary, and Common is a database I've already got going. It's for non-secure tools/programs/etc. that any of the other databases should be able to access.

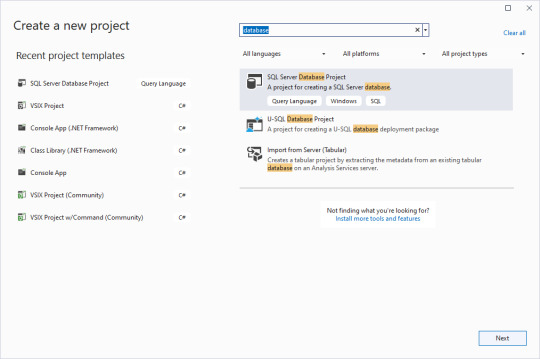

Now to start up VS 2022. We begin a new project and search for database templates.

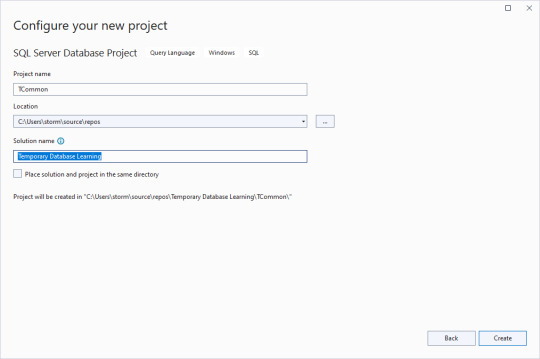

Clear the checkbox for putting the solution and project in the same directory, and give an overarching name to the solution. That way you can have multiple database projects worked on inside of one solution.

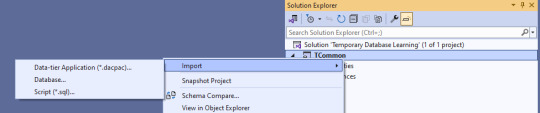

Next, we import the blank database so we have a test bed based on what is in production. Right-click on the solution name, select Import, then Database.

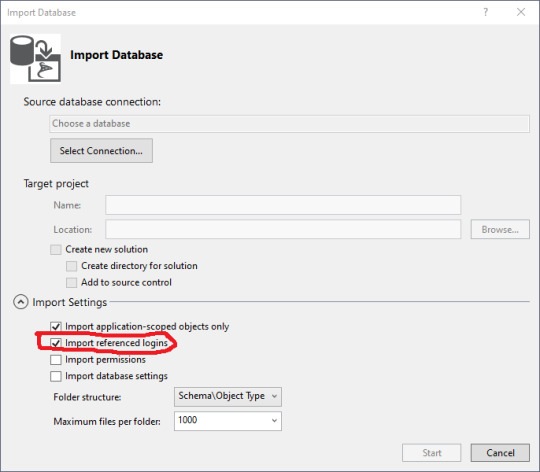

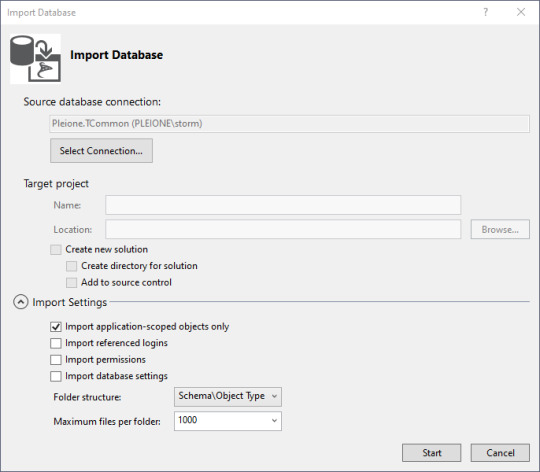

The import database wizard looks like this after the connection is set.

Blackburn suggests turning off the import of referenced logins so you don't accidentally alter permissions. Sound strategy.

Then you can click on the "Select Connection" button.

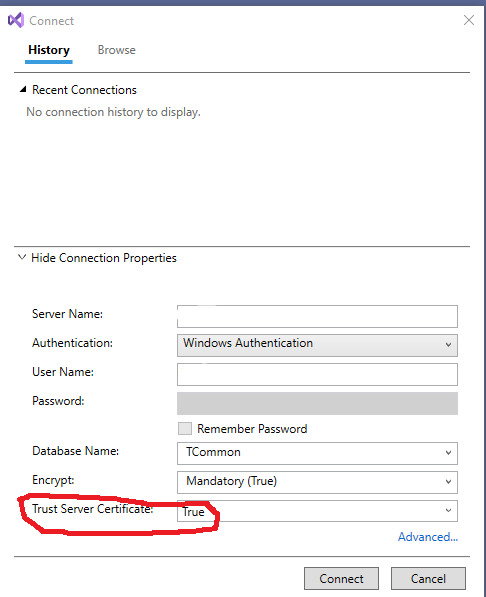

On my workstation, I have to Show Connection Properties, then change the default for Trust Server Certificate to True for it to make a connection. I'm running a test version of SQL Server and didn't set up the certificates.

Click on Connect. Then on the Import Database window, click Start.

With a blank database, it's fairly anticlimactic, but there really is a connection now to the database, and the properties are copied to your work area. The summary tells you where the log is stored. Then click "Finish" to continue on.

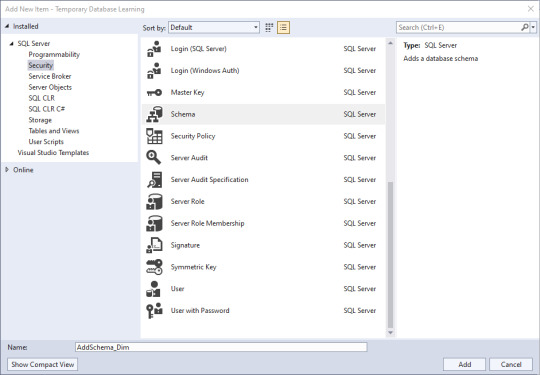

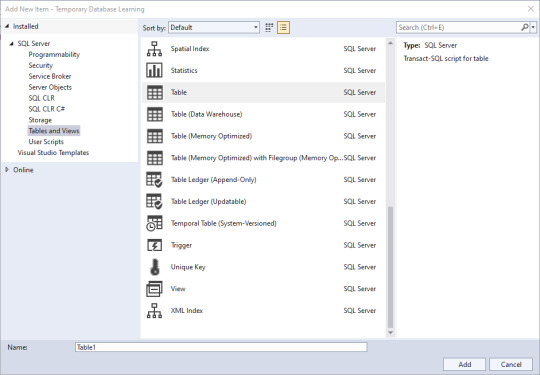

Next, we'll add some objects. Right-click in the Solution Explorer pane, then click Add, then New Item. Lots of little goodies to play with. Since I've been trying to match a project from another site, I need to create a schema to store the objects in. Schemas are part of Security, and there's my little object. I select the schema, give it a name down below, and click Add.

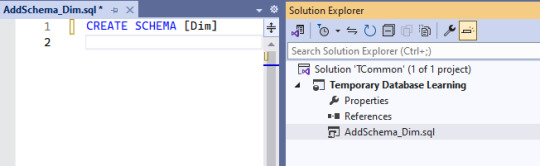

Well, not quite what I expected to happen: CREATE SCHEMA [AddSchema_Dim]

But that's changeable. And in making that change, the solution's object has the name I wanted, and the code has the actual name of the schema I want.

Now, let's add a table.

If you're like me, you've used a few of these, but not all of them. Time to do research if you're unsure, but I'm going to go with a simple table for this demonstration. Since I know the name of the solution object will take the name I put in the bottom, I'll name this one AddTable_Dim.Date, and know that I need to edit the actual code.

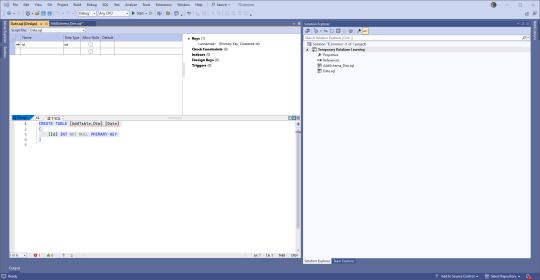

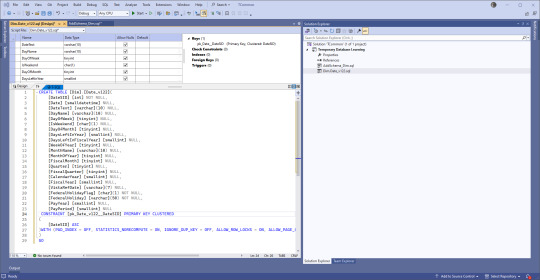

You have choices. If you're used to creating tables using the upper part of the pane where there is a GUI type of set up, go for that. If you're used to typing things out, go to the lower part. Or mix and match! VS will keep the two in sync.

First, instead of 'ID' we use 'SID', for Surrogate Identifier. The intake process assigns SID values that are unique within a table and follows rules that help us trace issues back to where the data originally came from.

Second, there's a version number in there. We have the same tables across various enclaves (groups of servers), and we keep the versions the same in all of them except our development enclave. But instead of forcing our developers and end users to keep up, we expose the data through views in the databases they work from. Many times we don't need to change the views at all, which spares people from memorizing a few hundred tables and their variations.
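Here is a minimal sketch of the view-over-versioned-tables idea described above. It uses SQLite, through Python's built-in `sqlite3` module, as a stand-in for SQL Server. SQLite has no schemas, so the `Dim` schema is left out. The table names `Date_v1` and `Date_v2`, the view `DateView`, and the `IsHoliday` column are all hypothetical. The physical table changes version, but the consumer's query against the view stays the same.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical version 1 of the physical table; SID is the surrogate identifier.
conn.execute("""
    CREATE TABLE Date_v1 (
        SID INTEGER PRIMARY KEY,
        CalendarDate TEXT NOT NULL,
        FiscalYear INTEGER NOT NULL
    )""")
conn.execute("INSERT INTO Date_v1 VALUES (1, '2024-01-01', 2024)")

# Consumers query a stable view name, not the versioned table.
conn.execute("CREATE VIEW DateView AS SELECT SID, CalendarDate, FiscalYear FROM Date_v1")
before = conn.execute("SELECT * FROM DateView").fetchall()

# Version 2 adds a column; copy the data across and repoint the view.
conn.execute("""
    CREATE TABLE Date_v2 (
        SID INTEGER PRIMARY KEY,
        CalendarDate TEXT NOT NULL,
        FiscalYear INTEGER NOT NULL,
        IsHoliday INTEGER NOT NULL DEFAULT 0
    )""")
conn.execute("INSERT INTO Date_v2 (SID, CalendarDate, FiscalYear) "
             "SELECT SID, CalendarDate, FiscalYear FROM Date_v1")
conn.execute("DROP VIEW DateView")
conn.execute("CREATE VIEW DateView AS SELECT SID, CalendarDate, FiscalYear FROM Date_v2")

after = conn.execute("SELECT * FROM DateView").fetchall()
print(before == after)  # True: the consumer's query and results did not change
```

In SQL Server, you could repoint the view with `CREATE OR ALTER VIEW` (available since SQL Server 2016 SP1) instead of dropping and recreating it.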

I'm going to cut this off here, and start working on the next post. Back soon!


Tracking Changes in SQL Server 2022

In the latest update of SQL Server 2022, keeping an eye on database table alterations has gotten a bit easier for the folks who build and maintain our databases. This piece is all about giving you the lowdown on how to make the most of SQL Server’s built-in goodies for change tracking. We’re zeroing in on Change Data Capture (CDC) and Temporal Tables, which are like superheroes for making sure…
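SQL Server 2016 and later support system-versioned temporal tables natively (`SYSTEM_VERSIONING = ON` with a history table, queried with `FOR SYSTEM_TIME`), and you enable CDC with `sys.sp_cdc_enable_db` and `sys.sp_cdc_enable_table`. SQLite has neither feature, so the sketch below only emulates the core temporal-table idea. It runs through Python's built-in `sqlite3` module and uses a trigger to copy the old row version into a history table on every update. The `price` and `price_history` tables and the trigger name are hypothetical. This illustrates the concept only; it is not how SQL Server implements it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical current-state table.
    CREATE TABLE price (
        item TEXT PRIMARY KEY,
        amount REAL NOT NULL,
        valid_from TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
    );
    -- History table that holds superseded row versions.
    CREATE TABLE price_history (
        item TEXT NOT NULL,
        amount REAL NOT NULL,
        valid_from TEXT NOT NULL,
        valid_to TEXT NOT NULL
    );
    -- Copy the old version into history whenever a row is updated.
    CREATE TRIGGER price_versioning AFTER UPDATE ON price
    BEGIN
        INSERT INTO price_history (item, amount, valid_from, valid_to)
        VALUES (OLD.item, OLD.amount, OLD.valid_from, CURRENT_TIMESTAMP);
    END;
""")

conn.execute("INSERT INTO price (item, amount) VALUES ('widget', 10.0)")
conn.execute("UPDATE price SET amount = 12.0, valid_from = CURRENT_TIMESTAMP "
             "WHERE item = 'widget'")

current = conn.execute("SELECT item, amount FROM price").fetchall()
history = conn.execute("SELECT item, amount FROM price_history").fetchall()
print(current)  # [('widget', 12.0)]
print(history)  # [('widget', 10.0)]
```

A fuller emulation would also capture deleted rows with an `AFTER DELETE` trigger. SQL Server's temporal tables handle both updates and deletes automatically.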


#CDC SQL Server#Change Data Capture#Data Auditing SQL Server#System-Versioned Temporal Tables#Temporal Tables SQL Server
