#AlgorithmBias

AI’s Role in Breaking the Internet’s Algorithmic Echo Chamber

Introduction: The Social Media Bubble We Live In

Have you ever scrolled through your social media feed and noticed that most of the content aligns with your views? It’s no accident. Recommendation algorithms are designed to keep you engaged, and content that reinforces your beliefs is very good at doing that. While this may seem harmless, it creates echo chambers: digital spaces where we are mostly exposed to information that supports our existing opinions. This is a significant issue, contributing to misinformation, polarization, and a lack of critical thinking.

But here’s the good news: AI, the very technology that fuels these echo chambers, could also be the key to breaking them. Let’s explore how AI can be used to promote a more balanced and truthful online experience.

Understanding the Echo Chamber Effect

What Is an Algorithmic Echo Chamber?

An algorithmic echo chamber occurs when AI-driven recommendation systems prioritize content that aligns with a user’s previous interactions. Over time, this creates an isolated digital world where people are rarely exposed to differing viewpoints.
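To make this feedback loop concrete, here is a toy simulation. It is not a model of any real platform. It assumes a hypothetical recommender that fills the next round of the feed in proportion to the engagement each kind of content received. It also assumes a user who is `bias` times more likely to engage with content they agree with. Both rules, and the `simulate_feed` function itself, are illustrative assumptions.

```python
def simulate_feed(initial_share: float, rounds: int, bias: float = 1.5) -> list[float]:
    """Toy echo-chamber loop: track the share of 'agreeing' posts in a feed.

    initial_share: fraction of the feed that matches the user's views at the start.
    rounds: how many recommend-then-engage cycles to simulate.
    bias: how many times more likely the user is to engage with agreeing posts
          (a hypothetical parameter; 1.0 means no preference).
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        # Engagement this round: agreeing posts get the user's extra attention.
        agree_engagement = share * bias
        other_engagement = (1 - share) * 1.0
        # Hypothetical recommender: the next feed mirrors this round's engagement.
        share = agree_engagement / (agree_engagement + other_engagement)
        history.append(share)
    return history


history = simulate_feed(initial_share=0.5, rounds=10, bias=1.5)
print(round(history[1], 2), round(history[-1], 2))  # 0.6 0.98
```

The feed starts perfectly balanced. After one round, agreeing content makes up 60% of it, and after ten rounds it makes up about 98%. A small preference, fed back again and again, is enough to narrow the feed. With `bias=1.0` the share stays at 50%.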

The Dangers of Echo Chambers

Misinformation Spread: Fake news thrives when it goes unchallenged by diverse perspectives.

Polarization: Societies become more divided when people only engage with one-sided content.

Cognitive Bias Reinforcement: Users start believing their opinions are the absolute truth, making constructive debates rare.

How AI Can Combat Social Media Bubbles

1. Diverse Content Recommendations

AI can be programmed to intentionally diversify the content users see, exposing them to a range of viewpoints. For example, social media platforms could tweak their algorithms to introduce articles, posts, or videos that present alternative perspectives.

Example:

If you frequently engage with political content from one side of the spectrum, AI could introduce well-researched articles from reputable sources that present differing viewpoints, fostering a more balanced perspective.
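One way a platform could do this is to re-rank candidate posts after scoring them. The sketch below uses a greedy, diversity-penalized re-ranker. It is similar in spirit to maximal marginal relevance (MMR) but simpler. The `Post` fields, the viewpoint labels, and the `penalty` value are all hypothetical. A real system would also need a far more careful way to label viewpoints.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    viewpoint: str    # hypothetical label, e.g. "side_a" or "side_b"
    relevance: float  # hypothetical predicted-engagement score in [0, 1]


def diversify(posts: list[Post], k: int, penalty: float = 0.3) -> list[Post]:
    """Greedily pick k posts, docking each candidate's score by `penalty`
    for every already-picked post that shares its viewpoint."""
    remaining = list(posts)
    selected: list[Post] = []
    seen: dict[str, int] = {}  # viewpoint -> number of posts already picked
    while remaining and len(selected) < k:
        best = max(
            remaining,
            key=lambda p: p.relevance - penalty * seen.get(p.viewpoint, 0),
        )
        selected.append(best)
        remaining.remove(best)
        seen[best.viewpoint] = seen.get(best.viewpoint, 0) + 1
    return selected


candidates = [
    Post("A1", "side_a", 0.90),
    Post("A2", "side_a", 0.85),
    Post("A3", "side_a", 0.80),
    Post("B1", "side_b", 0.60),
]
print([p.title for p in diversify(candidates, k=3)])  # ['A1', 'B1', 'A2']
```

Ranking by relevance alone would return A1, A2, A3, all from one side. With the penalty, the best post from the other side moves into the top three. Raising `penalty` pushes harder toward variety at the cost of relevance. Setting `penalty=0` reduces to ranking by relevance alone.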

2. AI-Powered Fact-Checking

One of AI’s most promising roles is in real-time fact-checking. By analyzing text, images, and videos, AI can help detect potentially misleading information and flag it for review before it spreads widely.

Tools Already Making an Impact:

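Google’s Fact Check Tools: Let users search claims that independent fact-checkers have already reviewed, and help publishers mark up their own fact-checks.

Facebook’s AI-Assisted Fact-Checking: Uses machine learning to flag potentially false content for independent human fact-checkers to review.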

3. Intent-Based Content Curation

Instead of focusing solely on engagement, AI can prioritize content based on educational value and credibility. This would mean:

Prioritizing verified news sources over sensational headlines.

Reducing the spread of clickbait designed to manipulate emotions rather than inform.
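Here is a minimal sketch of the difference between engagement-only ranking and the credibility-weighted ranking described above. The three signals (`engagement`, `credibility`, `clickbait`) and the weights are hypothetical placeholders. In practice, each signal would come from its own model or rating source, and choosing the weights is a policy decision.

```python
from typing import NamedTuple


class Item(NamedTuple):
    title: str
    engagement: float   # hypothetical predicted engagement, 0..1
    credibility: float  # hypothetical source-reliability rating, 0..1
    clickbait: float    # hypothetical clickbait-classifier probability, 0..1


def engagement_only(item: Item) -> float:
    # Baseline: rank purely by expected engagement.
    return item.engagement


def credibility_weighted(item: Item, w_eng: float = 0.3,
                         w_cred: float = 0.6, w_bait: float = 0.4) -> float:
    # Keep some weight on engagement, reward credible sources, penalize clickbait.
    return w_eng * item.engagement + w_cred * item.credibility - w_bait * item.clickbait


def rank(items: list[Item], score) -> list[str]:
    # Sort titles from highest to lowest score under the chosen scoring function.
    return [it.title for it in sorted(items, key=score, reverse=True)]


feed = [
    Item("Outrage-bait headline", engagement=0.95, credibility=0.2, clickbait=0.9),
    Item("Verified newsroom report", engagement=0.5, credibility=0.9, clickbait=0.1),
]
print(rank(feed, engagement_only))       # ['Outrage-bait headline', 'Verified newsroom report']
print(rank(feed, credibility_weighted))  # ['Verified newsroom report', 'Outrage-bait headline']
```

The weights here are arbitrary. With these values, the verified report scores 0.65 and the outrage-bait headline about 0.05, so the order flips.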

4. Promoting Critical Thinking Through AI Chatbots

AI-driven chatbots can encourage users to question and analyze the content they consume. By engaging users in meaningful discussions, these chatbots may help counteract the effects of misinformation.

Hypothetical Example:

Imagine an AI assistant on social media that asks, “Have you considered checking other sources before forming an opinion?” Simple nudges like these could meaningfully change how people engage with information.

5. Breaking Filter Bubbles with AI-Powered Search Engines

Search engines often personalize results based on past behavior, but AI could instead surface more balanced results by making sure users see information from diverse perspectives.

Future Possibility:

A browser extension powered by AI that identifies and labels potential echo chamber content, helping users make informed decisions about the media they consume.

The Future of AI and Online Information

AI has immense potential to transform the way we consume information. But the question remains: Will tech companies prioritize breaking the echo chambers, or will they continue feeding users what keeps them engaged?

What Needs to Happen Next?

Transparency in Algorithm Design: Users should know how AI curates their content.

Ethical AI Development: Companies must ensure that AI serves public interest, not just profits.

User Awareness and Education: People should understand how echo chambers work and how they affect their worldview.

Conclusion: A Smarter Digital World

While AI played a role in creating echo chambers, it also has the power to dismantle them. By prioritizing diversity, credibility, and education over engagement-driven content, AI can make the internet a place of discovery rather than division. But this change requires collaboration between AI developers, tech giants, policymakers, and, most importantly, users like you.

#AI#ArtificialIntelligence#EchoChamber#SocialMedia#TechEthics#Misinformation#FactChecking#DigitalAwareness#AlgorithmBias#FutureOfAI#TechForGood#AIInnovation#CyberCulture#OnlineTruth#MediaLiteracy#usa

[Week 10]

🌐 The Double-Edged Sword of Social Media: Pressure, Politics, and the Need for Collective Responsibility

Politics vs. Platforms: When Social Media and Governments Clash

The intersection of social media and government regulation exposes a fragile balance between free expression and political influence (Briggs, 2018).

Platforms like Meta have faced criticism for silencing pro-Palestinian voices. Human Rights Watch (2023) documented over 1,050 instances of content takedowns and other suppression during the Israel-Palestine conflict.

📌 This is not mere content moderation - it is a calculated response to geopolitical pressures, raising concerns about platforms shaping political narratives rather than merely hosting discussions (McLaughlin & Velez, 2017).

On the other hand, corporate ambition often clashes with national governance.

In Vietnam, strict data-sharing regulations challenge Meta’s data-driven business model, highlighting how regulatory policies shape user privacy and digital freedoms (Huynh, 2025).

⚠️ These cases prove that social media is far from neutral - it is an arena where corporate and political interests dictate the boundaries of online discourse.

The Dark Side of Online Discourse: Harassment & Hate Speech

Beyond political suppression, social media’s engagement-driven algorithms fuel online toxicity, prioritizing outrage over ethical discourse (KhosraviNik & Esposito, 2018).

The case of Vietnamese pop star Sơn Tùng M-TP is a perfect example of how social media can turn public discourse into a toxic battleground (TUOI TRE ONLINE, 2022).

⚠️ Trigger Warning: This content includes discussions of suicide. Please proceed with caution.

[Embedded Dailymotion video: the music video that sparked the controversy]

[Embedded YouTube video: the official music video released after the scandal]

🎵 His 2022 music video, There's No One At All, raised valid concerns about its portrayal of mental health. However, the conversation quickly spiraled into mass outrage.

(TUOI TRE ONLINE, 2022)

Algorithms, designed to maximize engagement, amplified negativity (Pohjonen & Udupa, 2017), turning:

➡️ Constructive criticism into harassment

➡️ Discussion into cancel culture

➡️ Concern into hate speech

This incident highlights the challenges of regulating online content in a decentralized digital environment (Pohjonen & Udupa, 2017).

Once the backlash gained momentum, the sheer volume of hate speech and personal attacks made it nearly impossible to control.

The Sơn Tùng M-TP case shows how quickly social media platforms can become tools for targeted harassment, where:

✔️ Algorithmic amplification fuels negativity

✔️ The rapid spread of outrage overwhelms regulatory systems

⚖️ It serves as a stark reminder that the pursuit of engagement often trumps ethical considerations, leading to a digital world where outrage thrives while nuanced debate is silenced (Pohjonen & Udupa, 2017).

Beyond the Law: Collective Responsibility in Digital Spaces

Given the systemic nature of algorithmic amplification and online toxicity, legal solutions alone are insufficient. The viral spread of harmful content demonstrates the need for a multifaceted approach:

Strengthening Digital Literacy: Educating users on algorithmic manipulation can empower them to critically assess content and resist misinformation (Sari & Suryadi, 2022). By understanding how platforms prioritize outrage, users can make more informed engagement decisions, reducing the spread of harmful narratives.

🏛 Holding Platforms Accountable Through CSR: Social media companies must take responsibility for the consequences of their algorithms. Corporate social responsibility (CSR) initiatives should enforce stricter content moderation policies to prevent the prioritization of harmful engagement over user well-being (Tamvada, 2020). Advocacy groups and public pressure are key to driving ethical platform policies.

🌱 Shifting Online Culture: Addressing systemic issues requires a cultural shift in how digital spaces operate (Badel & Baeza, 2021). Beyond regulatory measures, fostering a safer online environment demands ethical platform design, stronger digital literacy programs, and corporate accountability. Without proactive efforts, social media will continue to prioritize profit over ethical responsibility.

Final Thoughts: The Need for Collective Action

⚡ Combating online toxicity and political influence is not the responsibility of a single entity - it is a shared duty. Governments, platforms, and users must work together to create a more ethical digital space.

🔍 Understanding the interplay between corporate interests, regulations, and user behavior is the first step toward moving beyond reactive solutions and toward real change.

Let's build a better, more responsible online world together.

💬 If this resonates with you, share your thoughts below!

🔄 Reblog to spread awareness!

References

Badel, F., & Baeza, J. L. (2021). Digital Public Space for a Digital Society: A Review of Public Spaces in the Digital Age. ArtGRID - Journal of Architecture Engineering and Fine Arts, 3(2), 127–137. https://dergipark.org.tr/en/pub/artgrid/issue/67840/1002117

Briggs, S. (2018, March 8). About | HeinOnline. HeinOnline. https://heinonline.org/HOL/LandingPage?handle=hein.journals/collsp52&div=5&id=&page=

Human Rights Watch. (2023). Meta’s Broken Promises: Systemic Censorship of Palestine Content on Instagram and Facebook. Human Rights Watch. https://www.hrw.org/report/2023/12/21/metas-broken-promises/systemic-censorship-palestine-content-instagram-and

Huynh, T. T. (2025). Everyone Is Safe Now: Constructing the Meaning of Data Privacy Regulation in Vietnam. Asian Journal of Law and Society, 1–29. https://doi.org/10.1017/als.2024.36

KhosraviNik, M., & Esposito, E. (2018). Online hate, digital discourse and critique: Exploring digitally-mediated discursive practices of gender-based hostility. Lodz Papers in Pragmatics, 14(1), 45–68. https://doi.org/10.1515/lpp-2018-0003

McLaughlin, B., & Velez, J. A. (2017). Imagined Politics: How Different Media Platforms Transport Citizens Into Political Narratives. Social Science Computer Review, 37(1), 22–37. https://doi.org/10.1177/0894439317746327

Pohjonen, M., & Udupa, S. (2017). Extreme Speech Online: An Anthropological Critique of Hate Speech Debates. International Journal of Communication, 11(0), 19. https://ijoc.org/index.php/ijoc/article/view/5843

Sari, A. D. I., & Suryadi, K. (2022). Strengthening Digital Literacy to Develop Technology Wise Attitude Through Civic Education. Advances in Social Science, Education and Humanities Research. https://doi.org/10.2991/assehr.k.220108.006

Tamvada, M. (2020). Corporate Social Responsibility and accountability: a New Theoretical Foundation for Regulating CSR. International Journal of Corporate Social Responsibility, 5(1), 1–14. https://link.springer.com/article/10.1186/s40991-019-0045-8

TUOI TRE ONLINE. (2022, April 29). Dư luận phẫn nộ vì MV There’s no one at all của Sơn Tùng M-TP có cách giải quyết độc hại. TUOI TRE ONLINE. https://tuoitre.vn/du-luan-phan-no-vi-mv-theres-no-one-at-all-cua-son-tung-m-tp-co-cach-giai-quyet-doc-hai-20220429155227083.htm

#mda20009#SocialMediaEthics#DigitalResponsibility#FreeSpeechVsCensorship#OnlineHarassment#AlgorithmBias#TechAndSociety#CancelCultureDebate#MediaManipulation#CorporateAccountability#EthicalTech#MisinformationCrisis#DigitalActivism#InternetCulture#OnlineDiscourse#SocialMediaAwareness#Youtube

Did you know that social media platforms shape what we see, whose voices are amplified, and who or what gets buried?

Think about who is missing from your feed. How does algorithmic bias make you feel?

https://bit.ly/451Dtsi - 📺 A new report accuses YouTube's recommendation algorithm of steering children towards violent content, including videos of guns and school shootings. Despite YouTube's previous claims of prioritizing responsible recommendations, this troubling trend suggests a potential failure to keep that promise. #YouTube #ContentAlgorithms

🕹️ The study, conducted by the Campaign for Accountability's (CFA's) Tech Transparency Project (TTP), created four accounts impersonating two nine-year-olds and two 14-year-olds. All accounts were fed gaming content, and their recommended videos were analyzed. Results showed that YouTube pushed violent content to all four, with a higher volume directed at those who clicked on YouTube-recommended videos. #TechTransparency #DigitalSafety

🔫 "It’s bad enough that YouTube makes videos glorifying gun violence accessible to children. Now, we’re discovering that it recommends these videos to young people," said Michelle Kuppersmith, Campaign for Accountability executive director. The findings raise concerns about the role of Big Tech's algorithms in exposing children to inappropriate content. #BigTech #Accountability

🎬 The recommended videos showcased a wide range of violent content, from school shootings to guides for converting a handgun to a fully automatic weapon. One account was even recommended an R-rated movie about serial killer Jeffrey Dahmer. This unsettling trend points towards an urgent need for more effective content regulation. #ContentRegulation #OnlineSafety

📈 The study also found that accounts that engaged with recommended videos received up to 10 times more violent content. The videos were not age-restricted, and real firearms videos were pushed to the nine-year-old engagement account more than 12 times per day on average. #AlgorithmBias #OnlineEngagement

🎮 "YouTube’s algorithms seem intent on glorifying real-world gun use to boys as young as nine — at a time when mass shooters are trending younger and younger," warns Kuppersmith, casting a spotlight on the potential real-world implications of such exposure. #DigitalInfluence #MediaImpact

🔄 YouTube responded that it offers options for younger viewers, including the YouTube Kids app and Supervised Experience tools designed for a safer experience. It also said it is looking into more ways to bring in academic researchers to study its systems. However, it questioned the study's methodology, saying it lacked context and insight into how the test accounts were set up.

#YouTube#ContentAlgorithms#TechTransparency#DigitalSafety#BigTech#Accountability#ContentRegulation#OnlineSafety#AlgorithmBias#OnlineEngagement#DigitalInfluence#MediaImpact#YouTubeResponse#DigitalEthics#youtube#campaign#gunviolence#video#report#algorithm#children#content#school#shooter#recommendations#study#tech#transparency#project#gaming

[Week 9]

🎮 Game Over for Gatekeeping? How Diversity is Reshaping Gaming

From Ethics to Exclusion: How Gamergate Redefined Online Harassment

Gamergate, disguised as a push for "ethics in gaming journalism," was a targeted harassment campaign designed to exclude women and marginalized voices from gaming (Stuart, 2014).

It stemmed from anxieties over shifting power dynamics - gaming was no longer an exclusively male space, and the rise of female representation triggered a backlash.

💻 Online platforms amplified this outrage through their algorithms, normalizing harassment under the guise of "free speech." Far from neutral, they became enablers, rewarding inflammatory content with visibility and allowing harassment to flourish. Gamergate reinforced the "gamer mold," framing women and marginalized groups as interlopers and discouraging diversity (O’Donnell, 2022).

⚠️ The tactics of Gamergate persist in modern online hate campaigns. While diversity initiatives exist, women in gaming - whether developers, streamers, or critics - still face routine harassment (Cote, 2020). The fight for inclusivity is not just about gaming; it reflects broader struggles over power and digital exclusion.

The Gamer Mold: Who Fits, Who is Pushed Out, and Who Fights Back

The stereotypical "gamer" - young, white or East Asian, middle-class male - is an instrument of control, upholding the status quo (Yao et al., 2022). This exclusionary identity dictates who is seen as a "real" gamer.

📊 A staggering 83% of adults aged 18–45 report harassment in gaming spaces (Moreno-López & Argüello-Gutiérrez, 2025), exposing systemic hostility toward those who do not conform.

This structure fuels gatekeeping. When Anita Sarkeesian critiqued sexist tropes in Tropes vs. Women in Video Games, she faced a coordinated harassment campaign, including death threats, rape threats, and a mass shooting threat at Utah State University (Teti, 2017).

💣 Such extreme responses reveal that digital platforms are not neutral but contested battlegrounds for power and exclusion (Chia et al., 2020).

Gamergate further entrenched the "gamer mold," reinforcing gaming as a male-dominated space. However, marginalized gamers are fighting back, reshaping the industry, and creating alternative spaces.

Press Start to Disrupt: How Marginalized Gamers Are Fighting Back

Diverse gamers reclaim space through streaming, esports, game development, and indie gaming.

Groups like Black Girl Gamers (BGG), founded in 2015 by Jay-Ann Lopez (Teasley, 2024), have built safe digital spaces for Black women, amplifying marginalized voices through curated Twitch streams and industry panels.

🌍 BGG is not just about representation - it is a direct challenge to the structures that police gaming culture.

The rise of such communities underscores the depth of systemic exclusion (Paul, 2018). Their existence highlights how traditional gaming spaces remain hostile, necessitating alternative platforms.

However, progress is not just about visibility; it requires dismantling entrenched power structures. Real change goes beyond inclusion: it demands systemic transformation in how gaming spaces are defined and governed (Peterson, 2013).

Gaming's future hinges on this battle (Chia et al., 2020). The question is no longer whether diversity belongs in gaming but whether the industry will dismantle the barriers that necessitated these counter-movements in the first place.

💬 What do you think?

💡 Have you experienced gatekeeping in gaming?

🚀 What are your favorite communities that are pushing for diversity?

🔁 Reblog & share your thoughts in the tags or comments! Let's keep the conversation going.

References

Chia, A., Keogh, B., Leorke, D., & Nicoll, B. (2020). Platformisation in game development. Internet Policy Review, 9(4). https://policyreview.info/articles/analysis/platformisation-game-development

Cote, A. C. (2020). Redirecting. Ebsco.com. https://research.ebsco.com/c/ln5f2k/search/details/ye2pindx6n?db=e000xww

Moreno-López, R., & Argüello-Gutiérrez, C. (2025). Violence, Hate Speech, and Discrimination in Video Games: A Systematic Review. Social Inclusion, 13. https://doi.org/10.17645/si.9401

O’Donnell, J. (2022). Gamergate and Anti-Feminism in the Digital Age. In Springer eBooks. Palgrave Macmillan Cham. https://doi.org/10.1007/978-3-031-14057-0

Paul, C. A. (2018). The Toxic Meritocracy of Video Games. Google Books. https://books.google.com.vn/books?hl=en&lr=&id=Mip0DwAAQBAJ&oi=fnd&pg=PT6&dq=how+traditional+gaming+spaces+remain+hostile

Peterson, M. (2013). Computer Games: Definitions, Theories, Elements, and Genres. In: Computer Games and Language Learning. Palgrave Macmillan’s Digital Education and Learning. Springer.com; Palgrave Macmillan, New York. https://fsso.springer.com/federation/Consumer/metaAlias/SpringerServiceProvider

Stuart, K. (2014, December 3). Zoe Quinn: “All Gamergate has done is ruin people’s lives.” The Guardian. https://www.theguardian.com/technology/2014/dec/03/zoe-quinn-gamergate-interview

Teasley, S. (2024, February 12). A Peek Inside Her Agenda: Jay-Ann Lopez. Her Agenda. https://heragenda.com/p/jay-ann-lopez/

Teti, I. F. (2017). Female Tropes in Video Games | Penn State - Presidential Leadership Academy (PLA). Psu.edu. https://sites.psu.edu/academy/2017/04/09/female-tropes-in-video-games/

Yao, S. X., Ewoldsen, D., Ellithorpe, M., Van Der Heide, B., & Rhodes, N. (2022). Gamer Girl vs. Girl Gamer: Stereotypical gamer traits increase Men’s play intention. Computers in Human Behavior, 107217. https://doi.org/10.1016/j.chb.2022.107217

#mda20009#Gamergate#GamingDiversity#WomenInGaming#GamingCulture#VideoGames#ToxicGaming#Gatekeeping#RepresentationMatters#OnlineHarassment#InclusionInGaming#GamingCommunity#BlackGirlGamers#Esports#GamingIndustry#StreamersOfColor#FeminismInGaming#GameDevelopment#SocialJustice#InternetCulture#DigitalExclusion#PowerAndPrivilege#GamingHistory#IndieGames#SafeSpaces#AlgorithmBias#GamerMold#Youtube

[Week 7]

The Instagram Effect: How "Perfection" Became a Full-Time Job

Instagram is not just an app; it is a beauty factory.

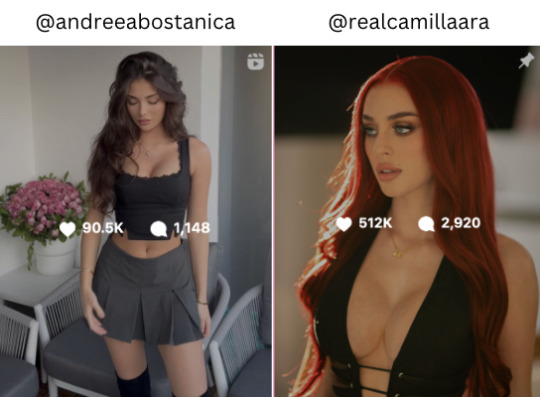

The Aesthetic Template: Copy, Paste, Repeat

Instagram's "aesthetic template" is not just a trend but a strategy for visibility. Influencers copy trending looks to win favor with the algorithm, which forces conformity and stifles individuality (Witz et al., 2003).

Among European women on Instagram, a popular beauty template favors darker (tanned) skin tones, sharp and dramatic makeup, and a more muscular, robust physique.

In contrast, traditional Asian beauty standards have long celebrated lighter skin, softer makeup, and a more delicate, slender body type (Dean, 2005). Today, Asian women on Instagram express themselves more openly while keeping a distinct style, though one increasingly influenced by Western culture.

💭 Confidence or conformity: Why chase beauty ideals?

The truth is complex. While some see it as self-improvement and validation, the overwhelming influence of curated, idealized images and algorithmic pressures on social media often pushes people toward conformity over authenticity (Daniels, 2016).

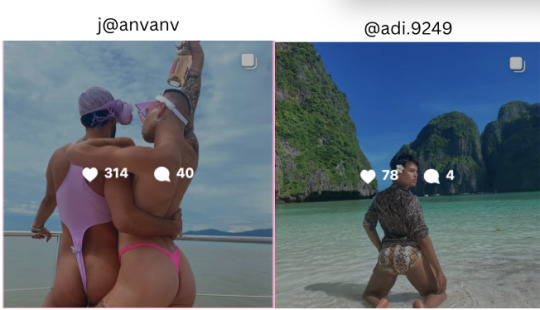

Beauty Is Not Universal: It Is Algorithmic.

Beauty on Instagram is not just about looks but about playing the algorithm's game.

Conventionally attractive women in revealing outfits? Boosted.

Gay men expressing femininity? Often ignored.

📲 Instagram boosts sexy women and ignores feminine gay men, creating a skewed perception of self-worth and societal value. When users constantly see sexy, Eurocentric beauty ideals being rewarded with visibility and engagement, they may internalize the message that this is the "ideal" to aspire to - leading to intense pressure to conform (Bishop, 2021).

🚫 Meanwhile, the lack of representation for feminine gay men can make individuals in this group feel invisible or undervalued, reinforcing feelings of exclusion and inadequacy (Carrotte et al., 2017).

🔄 This dynamic shapes self-perception, often pushing people to alter their appearance or suppress their authenticity just to be seen. It also perpetuates harmful stereotypes about what is considered desirable or acceptable, ultimately narrowing diversity and limiting self-acceptance on the platform (Witz et al., 2003).

When Beauty & Sexualization Blur

(Warning: sensitive photos included)

This is not just about 'blurred lines'; it is about the normalization of exploitation. Young people who look up to influencers are absorbing distorted ideas about beauty and sexuality. They come to believe that their value depends on how sexually attractive they are, which harms their mental and emotional well-being (Drenten et al., 2019).

📌 BOP House, a social media collective known for its provocative and highly sexualized content, exemplifies this phenomenon (Upton-Clark, 2025). Members like Sophia Rain (6M followers) gain fame through controversial posts, blurring the lines between social media, entertainment, and adult content. This normalization reshapes user perceptions of beauty, self-worth, and online expression.

The Mental Health Toll: When “Instagram Face” Becomes the Goal

Figure 1 (Dixon, 2024)

32% of Instagram users are aged 18-24, making Gen Z the dominant group (Figure 1). The platform has been linked to depression, low self-esteem, and body image issues (Abrams, 2021). Body dysmorphic disorder (BDD) affects about 2% of people, with young users especially affected, which highlights social media's role in these challenges (BDDF, n.d.).

In this video, she discusses a client with BDD driven by perfectionism and the online "aesthetic template." Obsessed with perfection, the client feels insecure and has undergone surgeries since age 14 to "fix" perceived flaws.

✨ Instagram promotes an illusion of perfection, fueling unhealthy comparisons (Witz et al., 2003). The platform's focus on curated images creates a false sense that everyone else has an ideal life, pressuring users to meet unrealistic beauty standards. A client who has undergone multiple surgeries since the age of 14 shows how far people will go to chase this unattainable ideal.

📱 This constant exposure distorts self-image, increases anxiety, and normalizes harmful behaviors - profoundly impacting mental health.

So... Who Really Wins?

Influencers? Maybe. Instagram? Definitely. The rest of us? We are left chasing a beauty standard that was never real.

✨ Thoughts? Have you ever felt the pressure of Instagram beauty standards? Let's talk. 💬

References

Abrams, Z. (2021, December 2). How can we minimize Instagram’s harmful effects? American Psychological Association. https://www.apa.org/monitor/2022/03/feature-minimize-instagram-effects

BDDF. (n.d.). How Common is BDD? – BDDF. Body Dysmorphic Disorder Foundation. https://bddfoundation.org/information/frequently-asked-questions/how-common-is-bdd/

Bishop, S. (2021). Influencer Management Tools: Algorithmic Cultures, Brand Safety, and Bias. Social Media + Society, 7(1), 205630512110030. https://doi.org/10.1177/20563051211003066

Carrotte, E., Prichard, I., & Lim, M. S. C. (2017). “Fitspiration” on Social Media: A Content Analysis of Gendered Images. Journal of Medical Internet Research, 19(3). https://doi.org/10.2196/jmir.6368

Daniels, E. A. (2016). Sexiness on Social Media. Sexualization, Media, & Society, 2(4), 237462381668352. https://doi.org/10.1177/2374623816683522

Dean, D. (2005). Recruiting a self: Women performers and aesthetic labour. Work, Employment and Society, 19(4), 761–774. https://doi.org/10.1177/0950017005058061

Dixon, S. J. (2024, May 2). Distribution of Instagram Users Worldwide as of April 2024, by Age Group. Statista. https://www.statista.com/statistics/325587/instagram-global-age-group/

Drenten, J., Gurrieri, L., & Tyler, M. (2019). Sexualized labour in digital culture: Instagram influencers, porn chic and the monetization of attention. Gender, Work & Organization, 27(1), 41–66. https://doi.org/10.1111/gwao.12354

Marwick, A. (2013). Status Update: Celebrity, Publicity, and Branding in the Social Media Age. Yale University Press. https://yalebooks.yale.edu/book/9780300209389/status-update/

Senft, T. (2013). Microcelebrity and the Branded Self. https://fws.commacafe.org/resources/theresa_senft_microcelebrity_branded_self.pdf

Upton-Clark, E. (2025, February 12). Meet the Bop House, the internet’s divisive new OnlyFans hype house. Fast Company. https://www.fastcompany.com/91277825/meet-the-bop-house-the-internets-divisive-new-onlyfans-hype-house

Witz, A., Warhurst, C., & Nickson, D. (2003). The Labour of Aesthetics and the Aesthetics of Organization. Organization, 10(1), 33–54. https://doi.org/10.1177/1350508403010001375

#mda20009#SocialMediaCulture#InstagramEffects#BeautyStandards#AlgorithmBias#DigitalConformity#MentalHealthAwareness#BodyImageIssues#SelfEsteemMatters#UnrealisticBeautyStandards#DiversityInBeauty#RepresentationMatters#SocialMediaExploitation#BlurredLines#SexualizationOfYouth#InfluencerMarketing#SocialMediaInfluence#ContentCreators#LetsTalk#YourThoughts#OpenDiscussion
