#Amazon Machine Images(AMIs)


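Key Points for Using Splunk on AWS Marketplace

Benefits of Deploying Splunk via AWS Marketplace

Deploying Splunk through AWS Marketplace offers a number of benefits.

First, it can significantly reduce total cost of ownership (TCO).

Compared with traditional procurement, there are reported cases in which license and operating costs were cut by more than 20%.

In addition, the administrative work of contracting and management is reduced, contributing to overall cost savings of more than 30%.

Rapid Setup of a Splunk Environment

AWS Marketplace offers a Splunk Enterprise AMI (Amazon Machine Image).

With this AMI, you can build a Splunk environment in a short time.

For example, you can launch a Splunk instance with a command like the following: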

aws ec2 run-instances --image-id…
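The command in the original post is truncated. As a hedged sketch only, the Python function below assembles the request parameters that the EC2 RunInstances API expects; with boto3, the resulting dict could be passed as `ec2_client.run_instances(**params)`, but the function itself makes no AWS calls. The AMI ID, instance type, key pair name, security group ID, and `Name` tag value are hypothetical placeholders: take the real Splunk Enterprise AMI ID for your Region from its AWS Marketplace listing.

```python
import re

# AMI IDs are "ami-" followed by 8 (older format) or 17 hexadecimal characters.
_AMI_ID = re.compile(r"ami-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def build_run_instances_params(image_id: str, instance_type: str, key_name: str,
                               security_group_ids: list[str]) -> dict:
    """Return keyword arguments shaped like EC2 RunInstances parameters.

    No AWS call is made here; the dict is meant for ec2_client.run_instances(**params).
    """
    if not _AMI_ID.fullmatch(image_id):
        raise ValueError(f"not an AMI ID: {image_id!r}")
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "KeyName": key_name,
        "SecurityGroupIds": security_group_ids,
        "MinCount": 1,  # launch exactly one instance
        "MaxCount": 1,
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Name", "Value": "splunk-enterprise"}],  # hypothetical tag
        }],
    }


if __name__ == "__main__":
    params = build_run_instances_params(
        "ami-0123456789abcdef0",   # hypothetical AMI ID
        "m5.xlarge",               # hypothetical size; check Splunk's sizing guidance
        "my-key-pair",             # hypothetical key pair name
        ["sg-0123456789abcdef0"],  # hypothetical security group ID
    )
    print(params["ImageId"], params["InstanceType"])
```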


Setting Up a Free VPN on AWS: A Step-by-Step Guide

AWS (Amazon Web Services), with its powerful and flexible cloud infrastructure, is a great platform for setting up your own VPN server. In particular, the 750 hours of free t2.micro usage per month make this even more attractive.
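The 750-hour figure is easiest to judge with a little arithmetic: even a 31-day month has only 31 × 24 = 744 hours, so one t2.micro running around the clock stays within the allowance, while two running all month would use 1,488 hours. The Python sketch below does this calculation. The function name is hypothetical, and it assumes the allowance is shared across all eligible instances in the account, so check the current AWS Free Tier terms for your account before relying on it.

```python
FREE_TIER_HOURS = 750  # monthly allowance cited in this guide


def billable_hours(instances: int, days_in_month: int,
                   free_hours: int = FREE_TIER_HOURS) -> int:
    """Hours beyond the free allowance for instances running around the clock.

    Assumes the allowance is shared across all eligible instances in the account.
    """
    used = instances * days_in_month * 24
    return max(0, used - free_hours)


if __name__ == "__main__":
    print(billable_hours(1, 31))  # 744 hours used -> 0 billable
    print(billable_hours(2, 31))  # 1488 hours used -> 738 billable
```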

Requirements:

- An AWS account

- An SSH client (such as PuTTY)

- An OpenVPN client application (for Windows, macOS, or Linux)

Step-by-Step Guide:

Sign In to the AWS Console:

- Sign in to your AWS account and select the EC2 (Elastic Compute Cloud) service.

Create a New Instance:

- Click the "Launch Instance" button.

- AMI (Amazon Machine Image): Type "openvpn" in the search bar and choose a suitable AMI (for example, the OpenVPN Appliance on AWS Marketplace).

- Instance Type: Choose t2.micro (to stay within the free usage allowance).

- Key Pair: Create a new key pair or choose an existing one. This key will be used to connect to your instance over SSH.

- Security Group: Create a security group that allows all traffic or choose an existing one (you can switch to a more secure configuration later).

- Instance Launch: Launch the instance.

Connect via SSH:

- Note the Public DNS name or public IP address of the new instance.

- Connect to the instance using your SSH client.

- Command: ssh -i your-key-pair.pem ec2-user@your-public-ip (replace your-key-pair.pem with the name of your own key file and your-public-ip with the instance's public IP. The default user name depends on the AMI; ec2-user is the default for Amazon Linux.)
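Before running the command above, the key file's permissions matter: OpenSSH refuses to use a private key that other users can read, which is why AWS's instructions suggest `chmod 400`. The Python sketch below checks for group/other permission bits and assembles the same `ssh -i` command. The function names are hypothetical, the key file name and the IP address (from the 203.0.113.0/24 documentation range) are placeholders, and the permission check assumes a POSIX system such as Linux or macOS.

```python
import os
import shlex
import stat


def key_permissions_ok(key_path: str) -> bool:
    """True if the key file grants no permissions to group or others."""
    mode = stat.S_IMODE(os.stat(key_path).st_mode)
    return (mode & 0o077) == 0


def build_ssh_command(key_path: str, user: str, host: str) -> list[str]:
    """Assemble argv for `ssh -i KEY USER@HOST`."""
    return ["ssh", "-i", key_path, f"{user}@{host}"]


if __name__ == "__main__":
    key = "your-key-pair.pem"  # placeholder from the guide
    host = "203.0.113.10"      # placeholder public IP (documentation range)
    if os.path.exists(key) and not key_permissions_ok(key):
        os.chmod(key, 0o400)   # owner read-only, same as `chmod 400`
    print(shlex.join(build_ssh_command(key, "ec2-user", host)))
```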

OpenVPN Configuration:

- Download the Configuration File: It is usually found under the /etc/openvpn directory.

- Edit the Configuration File: You can adjust it to your needs (server, port, protocols, etc.).

- Client Setup: Add the downloaded configuration file to your OpenVPN client application and connect.

Additional Information and Tips:

- Security:

  - Security Group: Open only the ports you need (for OpenVPN, usually 1194/UDP).

  - Key Pair: Store your key in a safe place.

  - Encryption: Use the strong encryption methods that OpenVPN provides.

- Performance:

  - Instance Type: You can choose a more powerful instance type depending on your needs.

- Free Usage:

  - Charges begin once you exceed the 750 free hours per month.

- Other VPN Solutions:

  - SoftEther: Another VPN solution that offers more customization options.

  - WireGuard: A newer, faster VPN protocol.
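Following the tip to open only the OpenVPN port, the hedged Python sketch below builds an inbound rule for UDP port 1194 limited to a single network instead of 0.0.0.0/0. It only constructs the `IpPermissions` structure used by EC2's AuthorizeSecurityGroupIngress API (with boto3, `ec2_client.authorize_security_group_ingress(GroupId=..., IpPermissions=...)`) and makes no AWS calls. The function name and the example CIDR are placeholders, and a Marketplace appliance may need additional ports (for example, for a web interface), so check the appliance's own documentation.

```python
import ipaddress


def openvpn_ingress_permissions(client_cidr: str, port: int = 1194) -> list[dict]:
    """Build an IpPermissions list allowing only OpenVPN over UDP from one IPv4 CIDR."""
    network = ipaddress.ip_network(client_cidr)  # raises ValueError on malformed input
    if network.version != 4:
        raise ValueError("this sketch handles IPv4 only; IPv6 would use Ipv6Ranges")
    return [{
        "IpProtocol": "udp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": str(network), "Description": "OpenVPN clients"}],
    }]


if __name__ == "__main__":
    # 198.51.100.0/24 is a documentation range; use your own network instead.
    print(openvpn_ingress_permissions("198.51.100.0/24"))
```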

Video Guides:

- On YouTube, you can find many detailed videos by searching for keywords such as "free VPN setup on AWS".

Important Note: This guide provides a general framework. For a setup tailored exactly to your needs, it is recommended that you review the AWS documentation and related resources in detail.

Using this guide, you can easily set up your own VPN server and secure your internet connection.

If you have any other questions, feel free to ask.

Additional Note: The information in this guide is for general informational purposes only and comes with no warranty. You are responsible for any changes you make to your system.


#AWSsecuritygroups#AWSVPNcost#AWSfreetier#cloudVPNbenefits#VPNforworkingfromhome#OpenVPNclientapp#OpenVPNconfiguration#cybersecuritymeasures#SSHconnection#t2.microinstance#remoteworksolutions#securinginternetconnectionwithVPN#VPNsetupstepbystep#VPNprotocols


How to Connect GitHub to Your EC2 Instance: Easy-to-Follow Step-by-Step Guide

Connecting GitHub with AWS EC2 Instance

Are you looking to seamlessly integrate your GitHub repository with an Amazon EC2 instance? Connecting GitHub to your EC2 instance allows you to easily deploy your code, automate workflows, and streamline your development process.

In this comprehensive guide, we'll walk you through the step-by-step process of setting up this connection, from creating an EC2 instance to configuring webhooks and deploying your code.

By the end of this article, you'll have a fully functional GitHub-EC2 integration, enabling you to focus on writing great code and delivering your projects efficiently.

Before you begin

Before we dive into the process of connecting GitHub to your EC2 instance, make sure you have the following prerequisites in place:


1️⃣ AWS Account:

An AWS account with access to the EC2 service

2️⃣ GitHub Account:

A GitHub account with a repository you want to connect to your EC2 instance

3️⃣ Basic Knowledge:

Basic knowledge of AWS EC2 and GitHub

With these prerequisites in hand, let's get started with the process of creating an EC2 instance.

Discover the Benefits of Connecting GitHub to Your EC2 Instance

1. Automation: Connecting your GitHub repository to your EC2 instance enables you to automate code deployments. Changes pushed to your repo can trigger automatic updates on the EC2 instance, making the development and release process much smoother.

2. Centralized Code: GitHub acts as a central hub for your project code. This allows multiple developers to work on the same codebase simultaneously, improving collaboration and code sharing.

3. Controlled Access: GitHub's access control mechanisms let you manage who can view, modify, and deploy your code. This helps in maintaining the security and integrity of your application.

Creating an EC2 Instance

The first step in connecting GitHub to your EC2 instance is to create an EC2 instance. Follow these steps to create a new instance:

- Log in to the AWS Management Console and navigate to the EC2 dashboard.

- Click on the "Launch Instance" button to start the instance creation wizard.

- Choose an Amazon Machine Image (AMI) that suits your requirements. For this guide, we'll use the Amazon Linux 2 AMI.

- Select an instance type based on your computational needs and budget. A t2.micro instance is sufficient for most basic applications.

- Configure the instance details, such as the number of instances, network settings, and IAM role (if required).

- Add storage to your instance. The default settings are usually sufficient for most use cases.

- Add tags to your instance for better organization and management.

- Configure the security group to control inbound and outbound traffic to your instance. We'll dive deeper into this in the next section.

- Review your instance configuration and click on the "Launch" button.

- Choose an existing key pair or create a new one. This key pair will be used to securely connect to your EC2 instance via SSH.

- Launch your instance and wait for it to reach the "Running" state.

Congratulations! You have successfully created an EC2 instance. Let's move on to configuring the security group to allow the necessary traffic.

Configuring Security Groups on AWS

Security groups act as virtual firewalls for your EC2 instances, controlling inbound and outbound traffic. To connect GitHub to your EC2 instance, you need to configure the security group to allow SSH and HTTP/HTTPS traffic. Follow these steps:

Easy Steps for Configuring Security Groups on AWS

- In the EC2 dashboard, navigate to the “Security Groups” section under “Network & Security.”

- Select the security group associated with your EC2 instance.

- In the “Inbound Rules” tab, click on the “Edit inbound rules” button.

- Add a new rule for SSH (port 22) and set the source to your IP address or a specific IP range.

- Add rules for HTTP (port 80) and HTTPS (port 443) and set their source to “Anywhere” or a specific IP range, depending on your requirements.

- Save the inbound rules.

Your security group is now configured to allow the necessary traffic for connecting GitHub to your EC2 instance.
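The rule set above (SSH limited to your own address, HTTP/HTTPS open) can also be audited in code. The hedged Python sketch below scans rules in the `IpPermissions` shape that EC2's DescribeSecurityGroups API returns (in boto3, `describe_security_groups()["SecurityGroups"][i]["IpPermissions"]`) and flags any that expose SSH to 0.0.0.0/0 or ::/0. The function names are hypothetical, and the check ignores rules that reference other security groups or prefix lists.

```python
WORLD_V4 = "0.0.0.0/0"
WORLD_V6 = "::/0"


def _covers_port(rule: dict, port: int) -> bool:
    """True if the rule's protocol and port range include the given TCP port."""
    protocol = str(rule.get("IpProtocol"))
    if protocol == "-1":  # "-1" means all protocols and all ports
        return True
    if protocol in ("tcp", "6"):  # SSH runs over TCP (protocol number 6)
        return rule.get("FromPort", 0) <= port <= rule.get("ToPort", 65535)
    return False


def find_world_open_ssh(ip_permissions: list[dict]) -> list[dict]:
    """Return the inbound rules that expose TCP port 22 to the whole internet."""
    risky = []
    for rule in ip_permissions:
        open_v4 = any(r.get("CidrIp") == WORLD_V4 for r in rule.get("IpRanges", []))
        open_v6 = any(r.get("CidrIpv6") == WORLD_V6 for r in rule.get("Ipv6Ranges", []))
        if _covers_port(rule, 22) and (open_v4 or open_v6):
            risky.append(rule)
    return risky


if __name__ == "__main__":
    rules = [
        # SSH only from one address (203.0.113.25 is a documentation example)
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "203.0.113.25/32"}]},
        # HTTP and HTTPS from anywhere, as in the steps above
        {"IpProtocol": "tcp", "FromPort": 80, "ToPort": 80,
         "IpRanges": [{"CidrIp": WORLD_V4}]},
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": WORLD_V4}]},
    ]
    print(find_world_open_ssh(rules))  # []
```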

Installing Git on the EC2 Instance

To clone your GitHub repository and manage version control on your EC2 instance, you need to install Git. Follow these steps to install Git on your Amazon Linux 2 instance:

- Connect to your EC2 instance using SSH. Use the key pair you specified during instance creation.

- Update the installed packages by running the following command: sudo yum update -y

- Install Git by running the following command: sudo yum install git -y

- Verify the installation by checking the Git version: git --version

Git is now installed on your EC2 instance, and you're ready to clone your GitHub repository.

Generating SSH Keys

To securely connect your EC2 instance to GitHub, you need to generate an SSH key pair. Follow these steps to generate SSH keys on your EC2 instance:

- Connect to your EC2 instance using SSH.

- Run the following command to generate an SSH key pair: ssh-keygen -t rsa -b 4096 -C "your_email@example.com"

Replace your_email@example.com with your GitHub email address.

- Press Enter to accept the default file location for saving the key pair.

- Optionally, enter a passphrase for added security. Press Enter if you don't want to set a passphrase.

- The SSH key pair will be generated and saved in the specified location (default: ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub).

Add SSH Key to GitHub account

To enable your EC2 instance to securely communicate with GitHub, you need to add the public SSH key to your GitHub account. Follow these steps:

- On your EC2 instance, run the following command to display the public key: cat ~/.ssh/id_rsa.pub

- Copy the entire contents of the public key.

- Log in to your GitHub account and navigate to the "Settings" page.

- Click on "SSH and GPG keys" in the left sidebar.

- Click on the "New SSH key" button.

- Enter a title for the key to identify it easily (e.g., "EC2 Instance Key").

- Paste the copied public key into the "Key" field.

- Click on the "Add SSH key" button to save the key.

Your EC2 instance is now linked to your GitHub account using the SSH key. Let's proceed to cloning your repository.
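Because the steps above ask you to paste the entire public key, it can help to check that what you copied is really the `.pub` line and not the private key. The Python sketch below is a heuristic, not a full parser: it relies on the OpenSSH public key format, in which the base64 blob begins with a 4-byte big-endian length followed by the key type string. The function name is hypothetical. As a side note, GitHub's documentation currently recommends Ed25519 keys (`ssh-keygen -t ed25519 -C "your_email@example.com"`), with RSA as a fallback, and you can test the connection afterwards with `ssh -T git@github.com`.

```python
import base64
import binascii
import os
import struct

KNOWN_KEY_TYPES = {
    "ssh-ed25519",
    "ssh-rsa",
    "ecdsa-sha2-nistp256",
    "ecdsa-sha2-nistp384",
    "ecdsa-sha2-nistp521",
}


def looks_like_public_key(line: str) -> bool:
    """Heuristically check that `line` is an OpenSSH public key line.

    Expected layout: "<key-type> <base64 blob> [comment]".
    """
    parts = line.strip().split(maxsplit=2)
    if len(parts) < 2 or parts[0] not in KNOWN_KEY_TYPES:
        return False  # e.g. "-----BEGIN OPENSSH PRIVATE KEY-----"
    try:
        blob = base64.b64decode(parts[1], validate=True)
    except binascii.Error:
        return False
    if len(blob) < 4:
        return False
    (type_len,) = struct.unpack(">I", blob[:4])
    # The blob must repeat the key type named in the first field.
    return blob[4:4 + type_len] == parts[0].encode("ascii")


if __name__ == "__main__":
    path = os.path.expanduser("~/.ssh/id_rsa.pub")  # default path from the guide
    if os.path.exists(path):
        with open(path) as f:
            print(looks_like_public_key(f.read()))
```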

Cloning a Repository

To clone your GitHub repository to your EC2 instance, follow these steps:

- Connect to your EC2 instance using SSH.

- Navigate to the directory where you want to clone the repository.

- Run the following command to clone the repository using SSH: git clone git@github.com:your-username/your-repository.git

Replace "your-username" with your GitHub username and "your-repository" with the name of your repository.

- Enter the passphrase for your SSH key, if prompted.

- The repository will be cloned to your EC2 instance.

You have successfully cloned your GitHub repository to your EC2 instance. You can now work with the code locally on your instance.

Configure a GitHub webhook in 7 easy steps

Webhooks allow you to automate actions based on events in your GitHub repository. For example, you can configure a webhook to automatically deploy your code to your EC2 instance whenever a push is made to the repository. Follow these steps to set up a webhook:

- In your GitHub repository, navigate to the "Settings" page.

- Click on "Webhooks" in the left sidebar.

- Click on the "Add webhook" button.

- Enter the payload URL: the URL of the endpoint on your EC2 instance that will receive the webhook events. It must be reachable from the internet.

- Select the content type as "application/json."

- Choose the events that should trigger the webhook. For example, you can select "Push events" to trigger the webhook whenever a push is made to the repository.

- Click on the "Add webhook" button to save the webhook configuration.

Your webhook is now set up, and GitHub will send POST requests to the specified payload URL whenever the selected events occur.
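Anyone who knows the payload URL can send POST requests to it, so it is worth verifying that requests really come from GitHub. If you enter a value in the webhook's Secret field when adding it (a field the steps above do not mention), GitHub signs each delivery and sends the signature in the `X-Hub-Signature-256` header. The Python sketch below checks that header using only the standard library. The function name, secret, and payload are placeholders, and it must be given the raw request body bytes, before any JSON parsing.

```python
import hashlib
import hmac


def verify_github_signature(secret: bytes, payload: bytes, signature_header: str) -> bool:
    """Return True if the X-Hub-Signature-256 header matches the raw request body.

    GitHub sends "sha256=" followed by the hex HMAC-SHA256 of the body,
    keyed with the webhook secret.
    """
    if not signature_header or not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # compare_digest is designed to prevent timing attacks; bytes avoid non-ASCII errors.
    return hmac.compare_digest(expected.encode(), signature_header.encode())


if __name__ == "__main__":
    secret = b"replace-with-your-webhook-secret"  # hypothetical secret
    body = b'{"ref": "refs/heads/main"}'          # raw bytes exactly as received
    header = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    print(verify_github_signature(secret, body, header))         # True
    print(verify_github_signature(secret, body + b" ", header))  # False: body changed
```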

Deploying to AWS EC2 from GitHub

With the webhook configured, you can automate the deployment of your code to your EC2 instance whenever changes are pushed to your GitHub repository. Here's a general outline of the deployment process:

- Create a deployment script on your EC2 instance, along with a small HTTP endpoint that receives the webhook and runs the script.

- The deployment script should perform the following tasks:

  - Pull the latest changes from the GitHub repository.

  - Install any necessary dependencies.

  - Build and compile your application, if required.

  - Restart any services or application servers.

- Configure your web server (e.g., Apache or Nginx) on the EC2 instance to serve your application.

- Ensure that the necessary ports (e.g., 80 for HTTP, 443 for HTTPS) are open in your EC2 instance's security group.

- Test your deployment by making a change to your GitHub repository and verifying that the changes are automatically deployed to your EC2 instance.

The specific steps for deploying your code will vary depending on your application's requirements and the technologies you are using. You may need to use additional tools like AWS CodeDeploy or a continuous integration/continuous deployment (CI/CD) pipeline to streamline the deployment process.
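To make the outline above concrete, the Python sketch below shows the decision logic a webhook receiver might use: act only on `push` events (GitHub names the event in the `X-GitHub-Event` request header and sends a `ping` event when a webhook is first created) whose `ref` is the branch you deploy from, then run the deployment steps in order. The branch, repository path, and `your-app.service` unit name are hypothetical placeholders; dependency installation and build commands would go between the two steps. The HTTP server part is left out, and a real receiver should also verify the request signature before doing anything.

```python
import json
import subprocess

DEPLOY_REF = "refs/heads/main"  # assumption: deploy only pushes to the main branch

# Hypothetical deployment steps; the path and service name are placeholders.
DEPLOY_STEPS = [
    ["git", "-C", "/home/ec2-user/your-repository", "pull", "--ff-only"],
    ["sudo", "systemctl", "restart", "your-app.service"],
]


def should_deploy(event_name: str, raw_body: bytes) -> bool:
    """Deploy only for push events on the configured branch."""
    if event_name != "push":  # e.g. GitHub's initial "ping" event is ignored
        return False
    payload = json.loads(raw_body)
    return payload.get("ref") == DEPLOY_REF


def run_deploy(dry_run: bool = True) -> list[str]:
    """Run the steps in order, stopping at the first failure.

    With dry_run=True the commands are only collected, not executed.
    """
    executed = []
    for step in DEPLOY_STEPS:
        if not dry_run:
            subprocess.run(step, check=True)  # raises CalledProcessError on failure
        executed.append(" ".join(step))
    return executed


if __name__ == "__main__":
    body = b'{"ref": "refs/heads/main"}'
    if should_deploy("push", body):
        for command in run_deploy(dry_run=True):
            print(command)
```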


Tips for Troubleshooting Common Technology Issues While Connecting GitHub to your EC2 Instance

1. Secure Port: Ensure that your EC2 instance's security group is configured correctly to allow incoming SSH and HTTP/HTTPS traffic.

2. SSH Verification: Verify that your SSH key pair is correctly generated and added to your GitHub account.

3. Payload URL Checking: Double-check the payload URL and the events selected for your webhook configuration.

4. Logs on EC2 Instance: Check the logs on your EC2 instance for any error messages related to the deployment process.

5. Necessary Permissions: Ensure that your deployment script has the necessary permissions to execute and modify files on your EC2 instance.

6. Check Dependencies: Verify that your application's dependencies are correctly installed and configured on the EC2 instance.

7. Test Everything Locally First: Test your application locally on the EC2 instance to rule out any application-specific issues.

If you still face issues, consult the AWS and GitHub documentation (Troubleshooting Connections) or seek assistance from the respective communities or support channels.

Conclusion

Connecting GitHub to your EC2 instance provides a seamless way to deploy your code and automate your development workflow. By following the steps outlined in this guide, you can create an EC2 instance, configure security groups, install Git, generate SSH keys, clone your repository, set up webhooks, and deploy your code to the instance.

Remember to regularly review and update your security settings, keep your EC2 instance and application dependencies up to date, and monitor your application's performance and logs for any issues.

With GitHub and EC2 connected, you can focus on writing quality code, collaborating with your team, and delivering your applications efficiently.


EC2 Auto Recovery: Ensuring High Availability In AWS

In the modern world of cloud computing, high availability is a critical requirement for many businesses. AWS offers a wide range of services that can help achieve high availability, including EC2 Auto Recovery. In this article, we will explore what it is, how it works, and why it is important for ensuring high availability in AWS.

What is EC2 Auto Recovery?

EC2 Auto Recovery is a feature provided by AWS that automatically recovers an EC2 instance if it becomes impaired due to a problem with the underlying AWS hardware or software (a failed system status check). It works by monitoring the health of EC2 instances and automatically initiating the recovery process to restore the instance to a healthy state.

How does EC2 Auto Recovery work?

It works by leveraging the capabilities of the underlying AWS infrastructure. It continuously monitors the EC2 instances and their associated system status checks. If it detects an issue with an instance, it automatically triggers the recovery process.

The recovery process migrates the impaired instance to healthy underlying hardware. It does not relaunch the instance from an AMI: the recovered instance is the same instance, restarted on a new host, so any data held in memory is lost.

A recovered instance is identical to the original instance, including its instance ID, private IP addresses, Elastic IP addresses, attached EBS volumes, and all instance metadata. This ensures that the recovered instance is as close to the original state as possible.

Why is EC2 Auto Recovery important?

It is important for ensuring high availability in AWS for several reasons:

1. Automated recovery:

EC2 Auto Recovery automates the recovery process, reducing the need for manual intervention in the event of an instance impairment. This helps in minimizing downtime and ensuring that the services running on the EC2 instance are quickly restored.

2. Proactive monitoring:

EC2 Auto Recovery continuously monitors the health of EC2 instances through their system status checks. This allows infrastructure problems to be detected early and recovery to start without waiting for someone to notice the failure, which helps maintain the overall health and stability of the infrastructure.

3. Simplified management:

Managing the recovery process of impaired instances manually can be complex and time-consuming. EC2 Auto Recovery simplifies management by automating the entire process, saving time and effort for administrators.

4. Enhanced availability:

By automatically recovering impaired instances, EC2 Auto Recovery enhances the availability of EC2 instances and the services running on them. It helps in minimizing the impact of hardware and software failures on the overall system availability.

Enabling EC2 Auto Recovery

Enabling EC2 Auto Recovery for an instance is a straightforward process. On supported instance types, simplified automatic recovery is enabled by default. You can also configure recovery explicitly with an Amazon CloudWatch alarm, through the AWS Management Console, the AWS CLI, or the AWS SDKs. The following steps outline the alarm-based approach in the AWS Management Console:

1. Open the CloudWatch console and start creating a new alarm.

2. Select the EC2 per-instance metric StatusCheckFailed_System for the target instance.

3. Set the condition so that the alarm fires when the metric is greater than or equal to 1.

4. Under the alarm actions, add the EC2 action "Recover this instance", then create the alarm.

Once recovery is configured for an instance, AWS monitors its system status checks and automatically initiates the recovery process when necessary.
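The alarm-based setup can also be scripted. The hedged Python sketch below only builds the parameters for CloudWatch's PutMetricAlarm API (with boto3, `cloudwatch.put_metric_alarm(**params)`) and makes no AWS calls. The function name, alarm name, the two 1-minute evaluation periods, and the example instance ID and Region are assumptions, while `arn:aws:automate:<region>:ec2:recover` is the ARN CloudWatch uses for the built-in recover action in the standard AWS partition.

```python
def recover_alarm_params(instance_id: str, region: str) -> dict:
    """Build PutMetricAlarm parameters for an alarm that recovers an instance
    after its system status check fails for two consecutive minutes."""
    return {
        "AlarmName": f"recover-{instance_id}",     # hypothetical naming scheme
        "AlarmDescription": "Recover instance on system status check failure",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",  # 1 when the check fails, else 0
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,                              # seconds
        "EvaluationPeriods": 2,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # Built-in EC2 recover action (standard AWS partition)
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:recover"],
    }


if __name__ == "__main__":
    params = recover_alarm_params("i-0123456789abcdef0", "us-east-1")  # example values
    print(params["AlarmActions"][0])  # arn:aws:automate:us-east-1:ec2:recover
```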

Limitations and Best Practices

While EC2 Auto Recovery is a powerful feature, it is important to be aware of its limitations and follow best practices to ensure optimal usage. Some of the limitations and best practices include:

1. Instance types:

Not all instance types are currently supported by EC2 Auto Recovery. It is important to check the AWS documentation for the list of supported instance types before enabling it.

2. Elastic IP addresses:

A recovered instance keeps its Elastic IP addresses, so they do not need to be reassociated after recovery. Recovery does involve a restart, however, so for workloads that cannot tolerate even brief interruptions, consider running several instances behind an Elastic Load Balancer, optionally combined with Route 53 DNS failover records.

3. Custom monitoring and recovery:

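EC2 Auto Recovery acts only on system status check failures. It does not respond to instance status check failures (for example, an operating system that fails to boot) or to application-level problems. If you rely on custom monitoring for those cases, make sure it is integrated with your recovery strategy; for example, a CloudWatch alarm on StatusCheckFailed_Instance can reboot the instance.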

4. Testing and validation:

It is recommended to test and validate the recovery process regularly to ensure that it works as expected. This can be done by manually triggering a recovery or using the AWS Command Line Interface (CLI) or SDKs.

Conclusion

EC2 Auto Recovery is a powerful feature provided by AWS that helps ensure high availability by automatically recovering impaired instances. By automating the recovery process, it reduces downtime, simplifies management, and enhances overall availability. It is important to be aware of its limitations and follow best practices to ensure optimal usage. By leveraging this feature, businesses can effectively improve the reliability and resilience of their cloud infrastructure.


5 Paid Udemy Courses for Free with Certification (Limited Time for Enrollment)

1. HTML & CSS - Certification Course for Beginners

Learn the Foundations of HTML & CSS to Create Fully Customized, Mobile Responsive Web Pages

What you'll learn

The Structure of an HTML Page

Core HTML Tags

HTML Spacing

HTML Text Formatting & Decoration

HTML Lists (Ordered, Unordered)

HTML Image Insertion

HTML Embedding Videos

Absolute vs. Relative File Referencing

Link Creation, Anchor Tags, Tables

Table Background Images

Form Tags and Attributes - Buttons, Input Areas, Select Menus

Parts of a CSS Rule

CSS - Classes, Spans, Divisions

CSS Text Properties, Margins, & Padding

CSS Borders, Backgrounds, & Transparency

CSS Positioning - Relative, Absolute, Fixed, Float, Clear

CSS Z-Index, Styling Links, Tables

Responsive Web Page Design using CSS


2. Bootstrap & jQuery - Certification Course for Beginners

Learn to Create fully Animated, Interactive, Mobile Responsive Web Pages using Bootstrap & jQuery Library.

What you'll learn

How to create Mobile-Responsive web pages using the Bootstrap Grid System

How to create custom, drop-down navigation menus with animation

How to create collapse panels, accordion menus, pill menus and other types of UI elements

Working with Typography in Bootstrap for modern, stylish fonts

Working with Lists and Pagination to organize content

How to add events to page elements using jQuery

How to create animations in jQuery (Fade, Toggle, Slide, Animate, Hide-Show)

How to add and remove elements using Selectors (Id, Class)

How to use the Get Content function to retrieve Values and Attributes

How to use the jQuery Callback, and Chaining Function

Master the use of jQuery Animate with Multiple Params, Relative Values, and Queue Functionality


3. AWS Beginner to Intermediate: EC2, IAM, ELB, ASG, Route 53

AWS Accounts | Billing | IAM Admin | EC2 Config | Ubuntu | AWS Storage | EBS | EFS | AMI | Load Balancers | Route 53

What you'll learn

AWS Account Registration and Administration

Account Billing and Basic Security

AWS Identity and Access Management (IAM)

Creating IAM Users, Groups, Policies, and Roles

Deploying and Administering Amazon EC2 Instances

Creating Amazon Machine Images

Navigating the EC2 Instances Console

Working with Elastic IPs

Remote Instance Administration using Terminal and PuTTY

Exploring various AWS Storage Solutions (EBS, EFS)

Creating EBS Snapshots

Working with the EC2 Image Builder

Working with the Elastic File System (EFS)

Deploying Elastic Load Balancers (ELB)

Working with Auto Scaling Groups (ASG)

Dynamic Scaling using ELB + ASG

Creating Launch Templates

Configuring Hosted-Zones using Route 53


4. Google Analytics 4 (GA4) Certification. How to Pass the Exam

A Step-by-Step Guide to Passing the Google Analytics 4 (GA4) Certification Exam!

What you'll learn

Master key terms and concepts to effortlessly pass the Google Analytics 4 Certification Exam

Understand GA4 settings to optimize data flow to your site

Utilize the power of tags and events for effective data collection

Learn to track important metrics like events, conversions, LTV, etc. for operational decisions

Navigate GA4’s user-friendly interface to create and interpret impactful reports and analyses

Gain insider tips and sample questions to effortlessly pass the certification test


5. The Complete C & C++ Programming Course - Mastering C & C++

Complete C & C++ Programming Course basic to advanced

What you'll learn

Fundamentals of Programming

No outdated C++ Coding Style

Loops - while, do-while, for

The right way to code in C++

Gain confidence in C++ memory management


What is AWS?

Here's a beginner-friendly tutorial for getting started with AWS (Amazon Web Services):

Step 1: Sign Up for an AWS Account

Go to the AWS website (https://aws.amazon.com/).

Click "Create an AWS Account" at the top right corner.

Follow the prompts to create a new AWS account.

Step 2: Navigate the AWS Management Console

Once you've created an account and logged in, you'll be taken to the AWS Management Console.

Take some time to familiarize yourself with the layout and navigation of the console.

Step 3: Understand AWS Services

AWS offers a wide range of services for various purposes such as computing, storage, databases, machine learning, etc.

Start by exploring some of the core services like EC2 (Elastic Compute Cloud), S3 (Simple Storage Service), and RDS (Relational Database Service).

Step 4: Launch Your First EC2 Instance

EC2 is a service for virtual servers in the cloud.

Click on "EC2" from the AWS Management Console.

Follow the wizard to launch a new EC2 instance.

Choose an Amazon Machine Image (AMI), instance type, configure instance details, add storage, configure security groups, and review.

Finally, launch the instance.

Step 5: Create a Simple S3 Bucket

S3 is a scalable object storage service.

Click on "S3" from the AWS Management Console.

Click on "Create bucket" and follow the prompts to create a new bucket.

Choose a unique bucket name, select a region, configure options, set permissions, and review.
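Choosing a bucket name is where beginners most often get stuck. The Python sketch below checks a subset of the documented naming rules for S3 general purpose buckets: 3 to 63 characters; only lowercase letters, numbers, periods, and hyphens; beginning and ending with a letter or number; no adjacent periods; not formatted like an IPv4 address; and not using the reserved `xn--` prefix or `-s3alias` suffix. It is not exhaustive (AWS reserves other prefixes and suffixes too), and it cannot check global uniqueness, which only S3 itself can confirm when you create the bucket. The function name is hypothetical.

```python
import ipaddress
import re

# Lowercase letters, digits, periods and hyphens; must start and end with a letter or digit.
_BUCKET_CHARS = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Check a subset of the S3 general purpose bucket naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    if not _BUCKET_CHARS.fullmatch(name):
        return False
    if ".." in name:
        return False
    if name.startswith("xn--") or name.endswith("-s3alias"):
        return False
    try:
        ipaddress.IPv4Address(name)  # names like 192.168.5.4 are not allowed
    except ValueError:
        return True
    return False


if __name__ == "__main__":
    for candidate in ("my-first-bucket-2024", "My_Bucket", "192.168.5.4"):
        print(candidate, is_valid_bucket_name(candidate))
```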

Step 6: Explore Other Services

Spend some time exploring other AWS services like RDS (Relational Database Service), Lambda (Serverless Computing), IAM (Identity and Access Management), etc.

Each service has its own documentation and tutorials available.

Step 7: Follow AWS Documentation and Tutorials

AWS provides extensive documentation and tutorials for each service.

Visit the AWS documentation website (https://docs.aws.amazon.com/) and search for the service or topic you're interested in.

Follow the step-by-step tutorials to learn how to use different AWS services.

Step 8: Join AWS Training and Certification Programs

AWS offers various training and certification programs for individuals and organizations.

Consider enrolling in AWS training courses or preparing for AWS certification exams to deepen your understanding and skills.

Step 9: Join AWS Community and Forums

Join the AWS community and forums to connect with other users, ask questions, and share knowledge.

Participate in AWS events, webinars, and meetups to learn from industry experts and network with peers.

Step 10: Keep Learning and Experimenting

AWS is constantly evolving with new services and features being added regularly.

Keep learning and experimenting with different AWS services to stay updated and enhance your skills.

By following these steps, you'll be able to get started with AWS and begin your journey into cloud computing. Remember to take your time, explore at your own pace, and don't hesitate to ask for help or clarification when needed. Happy cloud computing!

Watch now: https://www.youtube.com/watch?v=bYYAejIfcNE&t=3s


Unleashing the Power of Amazon Machine Images (AMIs): A Comprehensive Guide


Introduction:

In the dynamic world of cloud computing, Amazon Machine Images (AMIs) emerge as a cornerstone for building and deploying applications seamlessly on the Amazon Web Services (AWS) platform. This blog post delves into the creation, uses, and importance of Amazon Machine Images, unraveling the potential…
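As a small illustration of AMI creation, the hedged Python sketch below builds parameters for EC2's CreateImage API (with boto3, `ec2_client.create_image(**params)`), which creates an AMI from an existing instance. The function name, the description text, and the timestamp suffix are assumptions made for illustration; the allowed-character check follows the documented AMI name constraints (3 to 128 characters; letters, numbers, parentheses, square brackets, spaces, periods, slashes, hyphens, apostrophes, at signs, and underscores), which are worth re-checking against the current API reference.

```python
import re
from datetime import datetime, timezone

# Allowed characters per the documented CreateImage name constraints.
_AMI_NAME = re.compile(r"[A-Za-z0-9()\[\] ./'@_-]{3,128}")


def create_image_params(instance_id: str, base_name: str, no_reboot: bool = False) -> dict:
    """Build CreateImage parameters for an AMI of an existing instance.

    A UTC timestamp is appended so that repeated runs get distinct AMI names.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    name = f"{base_name}-{stamp}"
    if not _AMI_NAME.fullmatch(name):
        raise ValueError(f"invalid AMI name: {name!r}")
    return {
        "InstanceId": instance_id,
        "Name": name,
        "Description": f"Image of {instance_id}",
        # With NoReboot=False (the API default), EC2 attempts to shut down and
        # reboot the instance first so that file systems are consistent.
        "NoReboot": no_reboot,
    }


if __name__ == "__main__":
    print(create_image_params("i-0123456789abcdef0", "web-server")["Name"])
```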


Qualcomm Cloud AI 100 Lifts AWS’s Latest EC2!

Qualcomm Cloud AI 100 in AWS EC2

With the general availability of the new Amazon Elastic Compute Cloud (Amazon EC2) DL2q instances, the Qualcomm Cloud AI 100 launch, which builds on the company's technology collaboration with AWS, marks the first significant milestone in the two companies' joint efforts. Amazon EC2 DL2q instances are the first deployment of a Qualcomm artificial intelligence (AI) solution in the cloud.

The Qualcomm Cloud AI 100 accelerator’s multi-core architecture is both scalable and flexible, making it suitable for a broad variety of use-cases, including:

Large Language Models (LLMs) and Generative AI: Supports models with up to 16B parameters on a single card and 8x that in a single DL2q instance, addressing use cases related to creativity and productivity.

Classic AI: This includes computer vision and natural language processing.

Qualcomm showcased a variety of applications running on Amazon EC2 DL2q instances powered by Qualcomm Cloud AI 100 at AWS re:Invent 2023:

A conversational AI application using the 7B-parameter Llama 2 LLM.

Text-to-image generation using the Stable Diffusion model.

Simultaneous transcription of multiple audio streams using the Whisper Lite model.

Translation between several languages using the transformer-based Opus model.

Nakul Duggal, SVP & GM, Automotive & Cloud Computing at Qualcomm Technologies, Inc., stated, “Working with AWS is empowering us to build on our established industry leadership in high-performance, low-power deep learning inference acceleration technology.” The work the two companies have done so far shows how well cloud technologies can be integrated into software development and deployment cycles.

An affordable revolution in AI

EC2 customers can run inference on a variety of models with best-in-class performance per total cost of ownership (TCO) thanks to the Amazon EC2 DL2q instance. For example:

For DL inference models, there is a price-performance advantage of up to 50% when compared to the latest generation of GPU-based Amazon EC2 instances.

For computer vision (CV)-based security, the number of inference cards needed drops by more than three times, resulting in a significantly more affordable system solution.

Optimization of 2.5x smaller models, such as Deci.ai models, on Qualcomm Cloud AI 100.

The Qualcomm AI Stack, which offers a consistent developer experience across Qualcomm AI in the cloud and other Qualcomm products, is a feature of the DL2q instance.

The DL2q instances and Qualcomm edge devices are powered by the same Qualcomm AI Stack and base AI technology, giving users a consistent developer experience with a single application programming interface (API) across their:

Cloud,

Automotive,

Compute,

Extended reality (XR), and

Smartphone development environments.

Customers can use the AWS Deep Learning AMI (DLAMI), which comes prepackaged with popular machine learning frameworks such as PyTorch and TensorFlow, along with Qualcomm's SDK.

Read more on Govindhtech.com


Understanding Amazon EC2 in AWS: A Comprehensive Overview

Amazon Elastic Compute Cloud (Amazon EC2) is one of the foundational services offered by Amazon Web Services (AWS). It’s a crucial component for businesses and developers looking to deploy scalable, flexible, and cost-effective computing resources in the cloud. In this blog post, we’ll delve into what EC2 is, how it works, its key features, and why it’s a go-to choice for cloud computing.

What is Amazon EC2?

Key Features of Amazon EC2

1. Scalability: EC2 instances are highly scalable. You can launch as many instances as you need, and you can choose from various instance types, each optimized for different use cases. This scalability makes it easy to handle changing workloads and traffic patterns.

2. Instance Types: There is a wide range of instance types available, from general-purpose to memory-optimized, compute-optimized, and GPU instances. This allows you to choose the right instance type for your specific use case.

3. Pricing Options: EC2 offers flexible pricing options, including On-Demand, Reserved, and Spot Instances. This flexibility enables you to optimize costs based on your usage patterns and budget.

4. Security: EC2 instances can be launched within a Virtual Private Cloud (VPC), and security groups and network access control lists (ACLs) can be configured to control inbound and outbound traffic. Additionally, EC2 instances can be integrated with other AWS security services for enhanced protection.

5. Elastic Load Balancing: EC2 instances can be used in conjunction with Elastic Load Balancing (ELB) to distribute incoming traffic across multiple instances, ensuring high availability and fault tolerance.

6. Elastic Block Store (EBS): EBS volumes, which provide scalable and durable block storage for your data, can be attached to EC2 instances.

How Does Amazon EC2 Work?

Amazon EC2 operates on the principle of virtualization. It leverages a hypervisor to run multiple virtual instances on a single physical server. Users can choose from various Amazon Machine Images (AMIs), which are pre-configured templates for instances. These AMIs contain the necessary information to launch an instance, including the operating system, software, and any additional configurations.

When you launch an EC2 instance, you select an AMI, specify the instance type, and configure network settings and storage options. Once your instance is up and running, you can connect to it remotely and start using it just like a physical server.
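To make the launch steps above more concrete, here is a minimal Python sketch that only assembles the parameters of a launch request, in the keyword-argument shape used by boto3's `run_instances` call. It does not contact AWS. The AMI ID, key pair name, security group ID, `t3.micro` instance type, and `/dev/xvda` root device name are illustrative assumptions, and `build_run_instances_params` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Any


def build_run_instances_params(
    image_id: str,
    instance_type: str,
    key_name: str,
    security_group_ids: list[str],
    root_volume_gib: int = 8,
) -> dict[str, Any]:
    """Assemble keyword arguments in the shape boto3's EC2 run_instances expects.

    Nothing is sent to AWS here; the dict is only built and returned.
    """
    if not image_id.startswith("ami-"):
        raise ValueError("image_id should be an AMI ID such as 'ami-...'")
    return {
        "ImageId": image_id,            # the AMI: OS, software, configuration
        "InstanceType": instance_type,  # CPU/memory profile of the instance
        "MinCount": 1,                  # launch exactly one instance
        "MaxCount": 1,
        "KeyName": key_name,            # key pair used for SSH access
        "SecurityGroupIds": security_group_ids,  # network access rules
        "BlockDeviceMappings": [
            {
                "DeviceName": "/dev/xvda",  # root device name; depends on the AMI
                "Ebs": {
                    "VolumeSize": root_volume_gib,  # size in GiB
                    "VolumeType": "gp3",
                    "DeleteOnTermination": True,
                },
            }
        ],
    }


params = build_run_instances_params(
    image_id="ami-0123456789abcdef0",             # hypothetical placeholder ID
    instance_type="t3.micro",
    key_name="my-key-pair",                       # hypothetical key pair name
    security_group_ids=["sg-0123456789abcdef0"],  # hypothetical group ID
)
# With credentials configured, this could then be passed on as:
#   boto3.client("ec2").run_instances(**params)
```

Keeping the request as plain data makes it easy to review, or unit-test, exactly which AMI, instance type, and storage settings will be used before anything is launched.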

Use Cases of Amazon EC2

Amazon EC2 is a versatile service with a wide range of use cases, including but not limited to:

1. Web Hosting: Host your websites and web applications on EC2 instances for easy scalability and high availability.

2. Development and Testing: Use EC2 to set up development and testing environments without the need for physical hardware.

3. Data Processing: EC2 is ideal for running data analytics, batch processing, and scientific computing workloads.

4. Machine Learning: Train machine learning models on GPU-backed EC2 instances for accelerated performance.

5. Databases: Deploy and manage databases on EC2 instances, and scale them as needed.

Amazon EC2 is a fundamental building block of AWS, offering users the flexibility to configure and run virtual instances tailored to their specific needs. With its scalability, variety of instance types, security features, and cost-effectiveness, EC2 is a popular choice for businesses and developers looking to harness the power of the cloud.

Whether you’re a startup, a large enterprise, or an individual developer, Amazon EC2 can be a valuable resource in your cloud computing toolkit.

ACTE Technologies is one of the best AWS training institutes in Hyderabad. ACTE Technologies aims to provide trainees with both academic knowledge and hands-on training to maximize their exposure. They are expanding fast and are ranked as a top-notch training institute. Highly recommended as a professional AWS training provider in Hyderabad; I got my certificate from them. For your bright future, get certified now!

Follow me to get answers about the topic of AWS.

AWS Cloud Practitioner - study notes

Machine Learning

------------------------------------------------------

Rekognition:

Automate image and video analysis.

Image and video analysis

Identify custom labels in images and videos

Face and text detection in images and videos

Comprehend:

Natural-language processing (NLP) service which finds relationships in text.

Natural-language processing service

Finds insights and relationships

Analyzes text

Polly:

Text to speech.

Mimics natural-sounding human speech

Several voices across many languages

Can create a custom voice

SageMaker:

Build, train and deploy machine learning models.

Prepare data for models

Train and deploy models

Provides Deep Learning AMIs

Translate:

Language translation.

Provides real-time and batch language translation

Supports many languages

Translates many content formats

Lex:

Build conversational interfaces like chatbots.

Recognizes speech and understands language

Build engaging chatbots

Powers Amazon Alexa

Selecting AWS: Six Compelling Reasons

Amazon Web Services (AWS) is a comprehensive suite of remote computing services, commonly known as web services, that together form a cloud computing platform delivered over the Internet by Amazon.com. Among the most prominent AWS offerings are Amazon EC2 (Elastic Compute Cloud) and Amazon S3 (Simple Storage Service). In this article, we'll explore the six compelling reasons that led us to select AWS as our preferred cloud provider.

AWS: A Host for the Modern World

AWS is a versatile hosting suite designed to simplify the complexities of traditional hosting. Renowned services like Dropbox and platforms like Reddit have embraced AWS for their hosting needs, reflecting the high standards it upholds.

Our choice to join the AWS ecosystem places us in esteemed company. AWS isn't just for giants like Dropbox; it accommodates businesses of all sizes, including individuals like you and me. We've experienced the benefits firsthand through hosting an enterprise web application tailored for the mortgage servicing industry. Our application, which sees substantial traffic fluctuations throughout the day, thrives on AWS's adaptability.

On-Demand Pricing for Every Occasion

Amazon introduced a refreshing approach to hosting pricing by adopting an "à la carte" model for AWS services. This means you pay only for what you utilize—a game-changer in server infrastructure. This approach is particularly apt for traffic patterns that exhibit bursts of activity, saving costs during periods of inactivity.

The Gateway: AWS Free Tier

One common deterrent to adopting AWS is the initial learning curve. AWS, with its dynamic infrastructure designed for rapid server provisioning and de-provisioning, could be intimidating for IT professionals accustomed to traditional hosting. The AWS Free Tier, however, alleviates this concern. It provides sufficient credits to run an EC2 micro instance 24/7 throughout the month, encouraging developers to experiment and integrate AWS's API into their software.

Unmatched Performance

AWS's speed is undeniable. Elastic Block Store (EBS) nearly matches the speed of S3 while offering distinct features. EC2 Compute Units deliver Xeon-class performance at an hourly rate. The platform's reliability often surpasses that of private data centers, and when problems do occur, they typically result in reduced capacity rather than a complete outage.

Our real-world experience with Chaos Monkey, a tool that randomly shuts down components in a cloud environment, demonstrated AWS's high availability. The Multi-AZ feature seamlessly failed our database over to another instance when needed, and for our web servers, Auto Scaling automatically launched replacements, ensuring uninterrupted service.
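The Chaos Monkey test and the Auto Scaling response described above can be illustrated with a toy Python model. This is not the AWS Auto Scaling API or Netflix's Chaos Monkey; `ToyAutoScalingGroup` and `chaos_monkey` are hypothetical names for a simulation of the core idea: a group that keeps a desired number of instances running launches a replacement whenever one is terminated.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ToyAutoScalingGroup:
    """Toy stand-in for an Auto Scaling group: keeps desired_capacity instances running."""
    desired_capacity: int
    instances: set[str] = field(default_factory=set)
    _next_id: int = 0

    def _launch(self) -> str:
        # Invent a sequential, fake instance ID for the new instance.
        self._next_id += 1
        instance_id = f"i-{self._next_id:04d}"
        self.instances.add(instance_id)
        return instance_id

    def reconcile(self) -> list[str]:
        """Launch replacements until the group is back at its desired capacity."""
        launched = []
        while len(self.instances) < self.desired_capacity:
            launched.append(self._launch())
        return launched


def chaos_monkey(group: ToyAutoScalingGroup, rng: random.Random) -> str:
    """Terminate one randomly chosen instance, in the spirit of Chaos Monkey."""
    victim = rng.choice(sorted(group.instances))
    group.instances.remove(victim)
    return victim


group = ToyAutoScalingGroup(desired_capacity=3)
group.reconcile()                                # initial launch: i-0001 to i-0003
killed = chaos_monkey(group, random.Random(42))  # seeded for repeatability
replacements = group.reconcile()                 # launches one replacement, i-0004
```

The real service adds health checks, cooldowns, and placement across Availability Zones, but the reconcile-toward-desired-capacity loop is the part that keeps service uninterrupted when an instance disappears.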

Lightning-Fast Deployment

Provisioning a hosted web service through traditional providers can be a time-consuming ordeal, taking anywhere from 48 to 96 hours. AWS revolutionizes this process, reducing deployment to mere minutes. By utilizing Amazon Machine Images (AMIs), a server can be deployed and ready to accept connections in a remarkably short time frame.

Robust Security

AWS provides a robust security framework through AWS Identity and Access Management (IAM), allowing precise control over who can access which resources and reducing the risk of misuse.
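As a rough illustration of how IAM decides whether a request is allowed, the following Python sketch evaluates a policy document written in IAM's JSON format. It is a simplification that assumes a single identity-based policy and ignores conditions, principals, permission boundaries, and other policy types, but it keeps two real rules of IAM evaluation: requests are implicitly denied unless something allows them, and an explicit `Deny` overrides any `Allow`. The bucket name `reports-bucket` and the helper names are hypothetical, and `fnmatch` is only an approximation of IAM wildcard matching.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single value or a list in Statement, Action, and Resource."""
    return value if isinstance(value, list) else [value]


def _matches(patterns, value: str, ignore_case: bool = False) -> bool:
    """Approximate IAM wildcard matching (* and ?) with fnmatch."""
    if ignore_case:
        value = value.lower()
    for pattern in _as_list(patterns):
        if ignore_case:
            pattern = pattern.lower()
        if fnmatchcase(value, pattern):
            return True
    return False


def evaluate(policy: dict, action: str, resource: str) -> str:
    """Return "Allow", "Deny", or "ImplicitDeny" for one action on one resource."""
    decision = "ImplicitDeny"  # nothing is allowed unless a statement allows it
    for stmt in _as_list(policy.get("Statement", [])):
        # Action names are case-insensitive in IAM; resource ARNs are not.
        if not _matches(stmt["Action"], action, ignore_case=True):
            continue
        if not _matches(stmt["Resource"], resource):
            continue
        if stmt["Effect"] == "Deny":
            return "Deny"  # an explicit Deny always wins
        decision = "Allow"
    return decision


policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::reports-bucket/*"},
        {"Effect": "Deny", "Action": "s3:*",
         "Resource": "arn:aws:s3:::reports-bucket/private/*"},
    ],
}
```

With this policy, reading `reports-bucket/2024/q1.csv` is allowed, reading anything under `private/` is explicitly denied, and any action the policy does not mention, such as `s3:PutObject`, falls through to an implicit deny.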

For added security, AWS offers Amazon Virtual Private Cloud (VPC), which we can use to host our services on a private network that is not accessible from the Internet but can communicate with resources in the same network. Resources in this private network can be accessed through AWS VPN or open-source alternatives such as OpenVPN.
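One practical step when designing such a private network is splitting the VPC's private address range into subnets: some public (given a route to an internet gateway) and some private (with no direct route from the Internet). The sketch below uses Python's standard `ipaddress` module to plan that split. The `10.0.0.0/16` range, the `/24` subnet size, and the `plan_subnets` helper are illustrative assumptions; actually creating the subnets and route tables in AWS is a separate step not shown here.

```python
import ipaddress
from itertools import islice


def plan_subnets(vpc_cidr: str, public: int, private: int,
                 new_prefix: int = 24) -> dict[str, list[str]]:
    """Split a private VPC range into consecutive, equal-size subnets."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if not vpc.is_private:
        raise ValueError(f"{vpc_cidr} is not a private address range")
    wanted = public + private
    # subnets() yields blocks in address order; take only as many as needed.
    blocks = [str(net) for net in islice(vpc.subnets(new_prefix=new_prefix), wanted)]
    if len(blocks) < wanted:
        raise ValueError("VPC range is too small for the requested subnets")
    return {
        "public": blocks[:public],   # to be given a route to an internet gateway
        "private": blocks[public:],  # no direct route from the Internet
    }


plan = plan_subnets("10.0.0.0/16", public=2, private=2)
```

Here `plan["public"]` holds `10.0.0.0/24` and `10.0.1.0/24`, and `plan["private"]` holds the next two blocks.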

Conclusion

In summary, AWS stands as a flexible, efficient, and cost-effective solution for hosting and cloud computing needs. With AWS, server management is a thing of the past, and our custom-tailored AWS ecosystem adapts seamlessly to changing demands. As our chosen cloud provider, AWS empowers us to deliver superior performance, scalability, and security to our clients. AWS is more than just a hosting platform; it's a strategic asset for navigating the modern digital landscape.

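DLAMI (Deep Learning Amazon Machine Image): Preinstalled Deep Learning Software and Libraries for EC2 Instances

Overview and Features of DLAMI

DLAMI is a specialized EC2 instance image provided by Amazon Web Services (AWS).

It comes with the software and libraries needed for deep learning preinstalled, giving researchers and data scientists an environment in which they can start projects quickly.

A key feature of DLAMI is that popular deep learning frameworks such as TensorFlow and PyTorch are already set up.

In addition, GPU-related software such as NVIDIA CUDA drivers and cuDNN is preconfigured, so GPU-accelerated computation is available right away.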
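After launching an instance from a DLAMI, a quick sanity check is to confirm that the expected frameworks are importable from the Python environment you are using. The sketch below relies only on the standard library's `importlib.util.find_spec`; the helper name `available_frameworks` and the default package names `torch` and `tensorflow` are assumptions, and on a DLAMI you may first need to activate the environment that contains the framework.

```python
import importlib.util


def available_frameworks(names=("torch", "tensorflow")) -> dict[str, bool]:
    """Map each top-level package name to whether it can be imported here."""
    # find_spec returns None for a top-level module that is not installed,
    # and it locates the package without importing it.
    return {name: importlib.util.find_spec(name) is not None for name in names}


print(available_frameworks())
```

If PyTorch is present, `torch.cuda.is_available()` can then confirm that the GPU drivers are usable from that environment.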

Types of DLAMI and How to Choose

DLAMI comes in two main variants.

The first is "Deep Learning AMI with…

AWS | Episode 37 | Introduction to AMI | Understanding AMI (Amazon Machine Image) #awscloud #aws #iam

Create AWS EC2 Instance

AWS EC2 (Elastic Compute Cloud) is a web service that provides resizable compute capacity in the cloud. It is designed to make web-scale cloud computing easier for developers. With EC2, you can quickly and easily create virtual machines (instances) in the cloud, configure them as per your requirements, and launch them in minutes. AWS EC2 instances can be launched from a variety of pre-configured Amazon Machine Images (AMIs) or you can create your own custom AMIs. You can also choose from a range of instance types optimized for different workloads and applications. EC2 instances can be managed via the AWS Management Console, command line interface, or using SDKs and APIs.

Amazon Web Service & Adobe Experience Manager:- A Journey together (Part-11)

In the previous parts (1 to 10), we discussed how the digital marketing leader, AEM, met a friend, AWS, in the cloud, and how the two became a very popular pair that brings many gifts to digital marketers. We then explored the foundation and structure of the digital marketing leader's house, mainly the CRX repository and the way it is organized, how the two can live together, and which smaller modules they use to gain architectural benefits. We also looked at how they are combined to do more with AEM eCommerce and Adobe Creative Cloud. In the last part, we discussed how AEM OpenCloud, an open source solution, makes it effortless to take advantage of AWS; that was the first part of the story. In this part, we continue with more of its interesting details.

As promised in part 8, we started our journey into AEM OpenCloud, and in the previous part we explored a few interesting facts about it. In this part, we will continue looking at AEM OpenCloud, a variant of AEM in the cloud that provides an open source platform for running AEM on AWS.

I hope you are now ready to continue this journey with AEM OpenCloud and its open source benefits, all in one bundled solution.

So let's get going!

In part 10, we saw how the AEM OpenCloud full-set architecture is arranged and how it works to deliver full functionality.

Now we will look at another variation and see how it fits into your AEM solution for digital marketing.

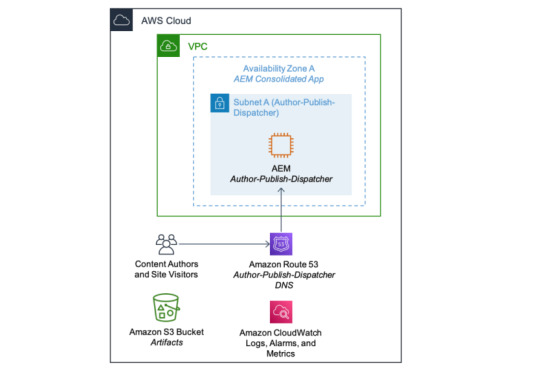

Consolidated Architecture:

A consolidated architecture is a cut-down environment where an AEM Author Primary, an AEM Publish, and an AEM Dispatcher are all running on a single Amazon EC2 instance.

This architecture is a low-cost alternative suitable for development and testing environments.

It also offers the same three types of backup as the full-set architecture, and the AEM package backups and EBS snapshots are interchangeable between consolidated and full-set environments.

This makes it useful for restoring a production backup from a full-set environment to multiple development environments running the consolidated architecture.

Another use case is to upgrade an AEM repository to a newer version in a development environment, which is then pushed through to testing, staging, and eventually production.

Both full-set and consolidated architectures require environment management.

Environment Management:

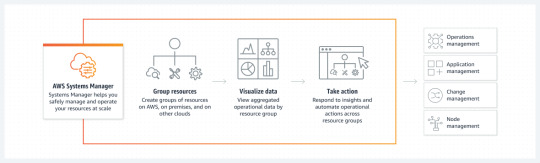

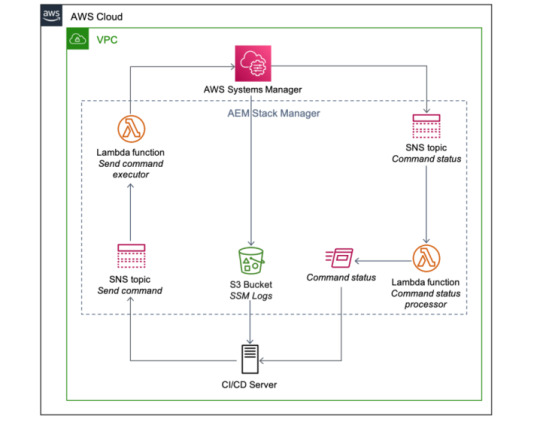

To manage multiple environments with a mixture of full-set and consolidated architectures, AEM OpenCloud has a Stack Manager that handles command execution within AEM instances via AWS Systems Manager (as illustrated in the picture).

These commands include taking backups, checking environment readiness, running the AEM security checklist, enabling and disabling CRXDE and SAML, deploying multiple AEM packages configured in a descriptor, flushing the AEM Dispatcher cache, and promoting the AEM Author Standby instance to Primary.

In addition to the Stack Manager, there is AEM OpenCloud Manager, which currently provides Jenkins pipelines for creating and terminating AEM full-set and consolidated architectures, baking AEM Amazon Machine Images (AMIs), executing operational tasks via Stack Manager, and upgrading an AEM repository between versions (e.g. from AEM 6.2 to 6.4, or from AEM 6.4 to 6.5).

AEM OpenCloud Stack Manager

On this journey, we keep walking through AEM OpenCloud, an open source variant of AEM on AWS. A few partners provide a quick start that deploys it in a few clicks, which makes this variation a quick and effortless way to deliver holistic, personalized experiences at scale, tailored to each moment of your digital marketing journey.

For more details on this journey, you can browse back through parts 1 to 10.

Keep reading!

8 of the Most Common AWS Security Pitfalls

The vast ecosystem of features and capabilities provided by Amazon Web Services (AWS) is without a doubt one of the most appealing aspects of the public cloud platform and web development services. However, because of its complexity, it is easy for administrators to forget about or mishandle a crucial security option. This may result in disastrous security problems.

Even though one error might not seem like much, it can have severe repercussions. By one widely cited industry forecast, through 2025, 99% of cloud security failures will be caused by simple customer error. Such errors can lead to service outages, lost business, costly data breaches, and fines or penalties for violating data privacy laws.

Given how widely AWS is used in today's digital world, professional cloud engineers, including those at mobile application development companies in Sri Lanka, must understand how to keep sensitive data from being leaked or stolen.

What are the typical AWS security pitfalls in web and mobile application development, and how can you avoid them?

Overly permissive S3 bucket permissions.

Amazon Simple Storage Service (S3) lets AWS users store and retrieve data reliably and affordably. To store data, users choose an AWS Region, create a bucket there, and upload their objects to the bucket. S3 stores objects redundantly on multiple devices across multiple facilities in the selected Region, and once an object is saved, S3 maintains durability by quickly detecting and repairing any lost redundancy.

S3 buckets are private by default, but an administrator can choose to make a bucket public. This becomes a problem if a user then uploads personal data to that public bucket.

Whether granting public access rights such as read or write is a problem depends on the bucket and the objects it holds. However, any bucket that grants access to "Everyone" should be reviewed immediately, because unchecked anonymous access could lead to data being stolen or compromised. For instance, Symantec reported in 2018 that misconfigured S3 buckets alone had led to more than 70 million records being stolen or leaked.
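A first-pass audit for this problem is to look for ACL grants to S3's predefined public groups. The Python sketch below takes a dict shaped like the response of boto3's `get_bucket_acl` call and reports grants to the `AllUsers` group (anyone, including anonymous users) and the `AuthenticatedUsers` group (any AWS account). The sample ACL and the `public_grants` helper are hypothetical, and ACLs are only one path to public access: bucket policies and S3 Block Public Access settings also need to be checked.

```python
PUBLIC_GROUP_URIS = {
    # Anyone on the Internet, including anonymous (unsigned) requests.
    "http://acs.amazonaws.com/groups/global/AllUsers",
    # Any authenticated AWS principal, from any AWS account.
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers",
}


def public_grants(acl: dict) -> list[tuple[str, str]]:
    """Return (group name, permission) for each ACL grant to a public group."""
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        uri = grantee.get("URI")
        if grantee.get("Type") == "Group" and uri in PUBLIC_GROUP_URIS:
            findings.append((uri.rsplit("/", 1)[-1], grant["Permission"]))
    return findings


# Hypothetical ACL, shaped like boto3's get_bucket_acl response.
acl = {
    "Owner": {"ID": "example-owner-id"},
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "example-owner-id"},
         "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group",
                     "URI": "http://acs.amazonaws.com/groups/global/AllUsers"},
         "Permission": "READ"},
    ],
}
```

For the sample ACL, the owner's own grant is ignored and the single finding is `("AllUsers", "READ")`.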

Permissions granted directly to IAM users.

AWS Identity and Access Management (IAM) lets AWS users control access to their accounts by creating and managing users and permissions. In addition to users, IAM supports groups: permissions granted to a group apply to every user who is a member of that group.

Managing permissions through groups makes it easier for administrators to see which permissions each user has, because the permissions come from the groups the user belongs to rather than from a separate set attached to each individual user. Users who have their own individually assigned permissions should have those permissions removed and be added to an appropriate group instead.
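The group-based approach can be checked with a small script. The Python sketch below works on a simplified, hypothetical data shape (one dict per user listing group memberships and directly attached policy names) rather than a real API response; in practice this information could be gathered with IAM calls such as `list_groups_for_user`, `list_attached_user_policies`, and `list_user_policies`. It flags users with directly attached policies and lists the policy names each user ends up with, which is a bookkeeping view, not a full permission evaluation.

```python
def users_with_direct_policies(users: dict[str, dict]) -> list[str]:
    """Names of users that have policies attached directly (not via a group)."""
    return sorted(name for name, info in users.items() if info.get("policies"))


def effective_policy_names(user: dict, groups: dict[str, list[str]]) -> set[str]:
    """Union of the user's direct policies and the policies of their groups."""
    names = set(user.get("policies", []))
    for group in user.get("groups", []):
        names.update(groups.get(group, []))
    return names


# Hypothetical data; the AWS managed policy names are used only as labels.
groups = {
    "developers": ["ReadOnlyAccess"],
    "admins": ["AdministratorAccess"],
}
users = {
    "alice": {"groups": ["developers"], "policies": []},
    "bob": {"groups": ["developers"], "policies": ["AmazonS3FullAccess"]},
}
```

Here `bob` is flagged because of his directly attached policy; moving that permission into a group would make his access visible from group membership alone.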

Unintentionally public AMIs.

Amazon Machine Images (AMIs) contain all the information required to launch an instance on Amazon Elastic Compute Cloud (EC2). They serve as a template for the software configuration of the launched instance, including the operating system, application server, and applications. AWS users have three options for AMIs: create their own, buy custom AMIs, or use public AMIs.

An AMI can be made private, shared only with certain AWS accounts, or made public by the user when they build the AMI. All AWS accounts have access to the AMI library and can launch public AMIs. However, it is advised that AMIs always be set to private because they frequently contain confidential or sensitive information. Any AMIs that are available to the general public ought to be thoroughly examined.
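Public AMIs owned by an account can be found by inspecting the `Public` field that EC2 returns for each image (for example, from `describe_images` with `Owners=["self"]`). The Python sketch below filters such a list and builds the arguments that boto3's `modify_image_attribute` call accepts to remove the public launch permission. The image IDs and names are hypothetical, the helper names are illustrative, and nothing here calls AWS.

```python
def find_public_images(images: list[dict]) -> list[tuple[str, str]]:
    """Return (ImageId, Name) for every image whose Public flag is True."""
    return sorted(
        (image["ImageId"], image.get("Name", ""))
        for image in images
        if image.get("Public") is True
    )


def make_private_request(image_id: str) -> dict:
    """Arguments for EC2 modify_image_attribute that remove public launch permission."""
    return {
        "ImageId": image_id,
        "LaunchPermission": {"Remove": [{"Group": "all"}]},
    }


# Hypothetical sample, shaped like describe_images(Owners=["self"])["Images"].
images = [
    {"ImageId": "ami-0aaaaaaaaaaaaaaaa", "Name": "web-base", "Public": False},
    {"ImageId": "ami-0bbbbbbbbbbbbbbbb", "Name": "app-with-config", "Public": True},
]
requests = [make_private_request(image_id) for image_id, _ in find_public_images(images)]
# With credentials configured, each could be sent as:
#   boto3.client("ec2").modify_image_attribute(**request)
```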

Lack of encryption usage.

Almost all traffic should be encrypted, and financial and medical information needs even stronger protection. Encrypting and decrypting data has little impact on speed and helps earn users' trust when they submit forms. This practice is known as encryption in transit.

In storage arrays, data should also be shielded from prying eyes. The term "encryption at rest" refers to this. When it comes to protecting sensitive data, businesses must make sure that there are no weak links in the security chain.
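For encryption in transit to S3, a common pattern is a bucket policy that denies any request not made over TLS, using the `aws:SecureTransport` condition key. The Python sketch below builds such a policy as JSON. The bucket name `example-bucket` and the helper name are hypothetical, and the policy would still need to be attached to the bucket, for example with boto3's `put_bucket_policy`. It covers only data in transit; encryption at rest is configured separately.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects any S3 request not sent over HTTPS/TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" when the request used plain HTTP.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


policy_json = json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2)
# Hypothetical next step, with credentials configured:
#   boto3.client("s3").put_bucket_policy(Bucket="example-bucket", Policy=policy_json)
```

Using an explicit `Deny` means the rule holds even if another policy statement grants broad access to the bucket.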

Not keeping track of access information.

With AWS CloudTrail, AWS users can access a history of the API calls made in their account, including calls made through the AWS Management Console, SDKs, command-line programmes, and other AWS services. CloudTrail writes this data to log files and stores them in a specified S3 bucket. Among other details, each log entry records the date and time of the call and the source IP address.

To always know who is using the AWS account and from where, administrators should enable CloudTrail in the account.
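Once CloudTrail is delivering logs, answering "who used the account, and from where" is largely a matter of reading a few fields from each record. The Python sketch below counts (caller ARN, source IP address, API call) combinations using the `userIdentity.arn`, `sourceIPAddress`, and `eventName` fields of CloudTrail records, and includes a loader for the gzip-compressed JSON files CloudTrail writes to S3, assumed to have been downloaded locally first. The helper names and sample records are hypothetical.

```python
import gzip
import json
from collections import Counter


def load_log_file(path: str) -> list[dict]:
    """Read one CloudTrail log file: gzip-compressed JSON with a "Records" list."""
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        return json.load(fh)["Records"]


def summarize_records(records: list[dict]) -> Counter:
    """Count how often each (caller ARN, source IP, API call) combination appears."""
    counts: Counter = Counter()
    for record in records:
        caller = record.get("userIdentity", {}).get("arn", "unknown")
        source_ip = record.get("sourceIPAddress", "unknown")
        event = record.get("eventName", "unknown")
        counts[(caller, source_ip, event)] += 1
    return counts


# Hypothetical sample records using documentation-only IPs and account ID.
alice = {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/alice"}
bob = {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/bob"}
sample_records = [
    {"eventTime": "2024-05-01T10:00:00Z", "eventName": "RunInstances",
     "sourceIPAddress": "203.0.113.10", "userIdentity": alice},
    {"eventTime": "2024-05-01T10:05:00Z", "eventName": "RunInstances",
     "sourceIPAddress": "203.0.113.10", "userIdentity": alice},
    {"eventTime": "2024-05-01T11:00:00Z", "eventName": "DeleteBucket",
     "sourceIPAddress": "198.51.100.7", "userIdentity": bob},
]
summary = summarize_records(sample_records)
```

`summary.most_common()` then lists the busiest caller, address, and API combinations first, which is a quick way to spot an unfamiliar IP address or an unexpected call.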

Overly broad IP address ranges in virtual private clouds.

Similar to a VPN, a Virtual Private Cloud (VPC) enables customers to launch AWS resources on a separate virtual network. Depending on the level of security required, administrators can manage who has access to the virtual private cloud by choosing IP address ranges, setting up subnets, configuring route tables, and setting up network gateways.

Because a VPC is highly customizable, cloud administrators are responsible for configuring access within their VPC environment. Only specific IP ranges and the ports that are actually required should be opened. Leaving the VPC open to all ports and IP addresses is strongly discouraged, because it gives hostile users a wide attack surface.
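A concrete place where overly broad ranges show up is in security group inbound rules. The Python sketch below scans rules in the shape returned by EC2's `describe_security_groups` (`IpPermissions` entries with `IpProtocol`, `FromPort`, `ToPort`, `IpRanges`, and `Ipv6Ranges`) and flags anything open to `0.0.0.0/0` or `::/0` beyond an allowlist of public ports. Treating only ports 80 and 443 as acceptably public is an assumption to adjust per workload, and the sample rules and the `risky_rules` helper are hypothetical. Network ACLs would need a similar but separate check.

```python
OPEN_IPV4 = "0.0.0.0/0"
OPEN_IPV6 = "::/0"


def risky_rules(ip_permissions: list[dict],
                allowed_public_ports: frozenset[int] = frozenset({80, 443})) -> list[str]:
    """Return human-readable findings for inbound rules open to the whole Internet."""
    findings = []
    for perm in ip_permissions:
        # Collect any "anywhere" ranges on this rule, IPv4 and IPv6.
        open_ranges = [r["CidrIp"] for r in perm.get("IpRanges", [])
                       if r.get("CidrIp") == OPEN_IPV4]
        open_ranges += [r["CidrIpv6"] for r in perm.get("Ipv6Ranges", [])
                        if r.get("CidrIpv6") == OPEN_IPV6]
        if not open_ranges:
            continue  # rule is limited to specific address ranges
        where = ", ".join(open_ranges)
        protocol = perm.get("IpProtocol")
        if protocol == "-1":
            # "-1" means all protocols and all ports.
            findings.append(f"all traffic open to {where}")
            continue
        low, high = perm["FromPort"], perm["ToPort"]
        # Flag the rule if any port in its range is outside the allowlist.
        if any(port not in allowed_public_ports for port in range(low, high + 1)):
            findings.append(f"{protocol} ports {low}-{high} open to {where}")
    return findings


# Hypothetical inbound rules, shaped like a security group's IpPermissions.
rules = [
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
     "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
    {"IpProtocol": "-1", "Ipv6Ranges": [{"CidrIpv6": "::/0"}]},
]
```

For the sample rules, HTTPS open to the world and PostgreSQL limited to the VPC range pass, while SSH open to `0.0.0.0/0` and the all-traffic IPv6 rule are flagged.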

AWS can do a lot for its customers, but it is also complex for businesses of all kinds. Even the largest information security teams and the best-trained cloud technicians need to be aware of the security flaws that incorrect AWS configurations and permissions can introduce.
