#Apple AI capabilities


Text

How To Get Apple Intelligence On iPhone - How To Turn On Apple Intelligence

Apple Intelligence is a suite of advanced AI-powered features designed to enhance your iPhone experience by offering tools like writing assistance, image generation, and improved Siri interactions. To access and enable Apple Intelligence on your iPhone, follow these steps: 1. Verify Device Compatibility Apple Intelligence is available on the following iPhone models: iPhone 15 Pro and iPhone 15…

#activate Apple Intelligence#advanced Siri#Apple AI capabilities#Apple Intelligence#Apple Intelligence compatibility#Apple Intelligence guide#Apple Intelligence image generation#Apple Intelligence iOS 18#Apple Intelligence settings#Apple Intelligence tutorial#Apple Intelligence update#Apple Intelligence writing tools#enable Apple Intelligence#iOS 18 AI features#iPhone AI#iPhone AI assistant#iPhone AI features#iPhone AI update#Siri AI upgrade#turn on Apple Intelligence

0 notes

Text

genuine question: are there alternatives to Google assistant and Siri yet?

like, is there a home grown small company that builds smart home devices with voice assistants that are compatible with iPhones?

because I don’t love feeling dystopian about having a smart home now, I read the blogs of people who work in tech security… but also I’m disabled and I very much need voice assistant technology and a smart home in order to live independently

Does anyone have any suggestions, or know of anything expected to come out of development soon?

#firefox#internet capable people plz help me#tech#apple#AI#uhhh#IT#tech bros#google#google chrome#siri#smart home#voice assistant

0 notes

Text

#american politics#us politics#big tech#rfk jr. had to be mentioned because like its a viable option#also like matt mullenweg probably isnt a part of any sort of billionaire coup#just like if you see government websites built on wordpress#i genuinely think he doesnt have enough money for them

59 notes


Text

Re: 8tracks

HUGE UPDATE:

As I said in my earlier post today, the CTO of 8tracks answered some questions on the mixer.fm Discord server.

IF YOU'RE INTERESTED IN INFORMATION ABOUT 8TRACKS AND THE ANSWERS THE CTO OF 8TRACKS GAVE, PLEASE, KEEP READING THIS POST BECAUSE IT'S A LOT BUT YOU WON'T REGRET IT.

Okay, so he first talked about how they got involved in buying 8tracks, then about how everything failed because of money and issues with the platform. Then he talked about a new app they developed called MixerFM, which works with web3 (8tracks is a web2 product), and said that if they get to launch it they'll get to launch 8tracks too, because both apps will work with the same data.

Here is what they have already done in his own words:

*Built a multitenant backend system that supports both MIXER and 8tracks

*Fully rebuilt the 8tracks web app

*Fixed almost all legacy issues

*Developed iOS and Android apps for MIXER

What is next?

They need to migrate the 8tracks database from the old servers to the new environment. That final step costs about $50,000 and, in his own words: "I am personally committed to securing the funds to make it happen. If we pull this off since there is a time limit , we will have an chance to launch both 8tracks and MIXER. … so for all you community members that are pinging me to provide more details on X and here on discord, here it is"

Here are the screenshots of his full statement:

NOW THE QUESTIONS HE ANSWERED:

*I transcribed them*

1. "What's the status of the founding right now?"

"Fundraising, for music its difficult"

2. "Our past data, is intact, isn't?"

"All data still exists from playlist 1"

3. "Will we be able to access our old playlists?"

"All playlists if we migrate the data will be saved. If we dont all is lost forever"

4. "How will the new 8tracks relaunch and MIXER be similar, and how will they be different?"

"8tracks / human created / mix tape style as it was before

mixer - ai asiated mix creation, music quest where people earn crypto for work they provode to music protocol ( solve quests earn money for providing that service )"

5. "Why is 8tracks being relaunched when they could just launch MIXER with our 8tracks database?"

"One app is web2 ( no crypto economy and incentives / ) mixer is web3 ( economy value exchange between users, artists, publishers, labels, advertisers) value (money) is shared between stakeholders and participants of app. Company earns less / users / artist earn more."

6. "Will we need to create a separate account for mixer? Or maybe a way to link our 8tracks to mixer?"

"New account no linking planned"

7. "What do you mean by fixed almost all legacy issues and fully rebuilt the 8tracks web app?"

"We have rebuilt most of 8tracks from scratch i wish could screen record a demo. In Last year we have rebuilt whole 8tracks ! No more issues no more bugs no more hacked comments"

8. "Will the song database be current and allow new songs? For example if someone makes a K pop playlist theres the capability for new songs and old not just all songs are from 2012. There will be songs from 2020 onwards to today?"

"Current cut of date is 2017, we have planed direct label deals to bring music DB up to speed with all new songs until 2025. This means no more song uploads"

9. "The apps would be available for android and outside USA?"

"USA + Canada + Germany + UK + Sweden + Italy + Greece + Portugal + Croatia in my personal rollout plan / but usa canada croatia would be top priority"

10. "Will 8tracks have a Sign in with Apple option?"

"It will have nothing if we don’t migrate the database but yes if we do it will have it"

11. "Will Collections return?"

"Ofc If we save the database its safe to assume collections will return"

12. "Will the 8tracks forums return?"

"No that one i will deleted People spending too much time online"

13. "No more songs uploads forever or no more songs until…?"

"Idk, this really depends on do we save database or no. Maybe we restart the process of song uploads to rebuild the and create a worlds first open music database If anyone has any songs to upload that is

We operate under different license"

14. "What is your time limit? for the funding, I mean"

"Good question I think 2-3 months"

15. "From now?"

"Correct"

16. "When is the release date for Mixer?"

"Mixer would need 2-3 more months of work to be released Maybe even less of we would use external database services and just go with minimum features"

17. "Do you have the link for it?"

"Not if we dont secure the database that is number one priority"

*That was the end of the questions and answers*

Then he said:

"You need to act bring here (discord) people and help me set up go fund me camping of investor talks fail so we secure the database and migrate data so we can figure out whats next"

He also said he'll talk with the CEO about selling him on the idea of community funding, and that everyone who participates should get a lifetime subscription and "some more special thing". We suggested posting a message on the official 8tracks accounts (Twitter, their blog, Tumblr), and he was okay with the idea, but he said they need to plan it carefully since time is limited.

Okay, guys, that's what he has said so far. I hope this information helps someone. I don't know whether they will go ahead with community funding, but keep in mind that it's a plausible option and that what they want from us is to participate in any way we can, for example by spreading the message; the more people who know about it, the better. They also want you to join their server, so here's the link to the mixerfm website, where you can join the server:

Tagging a few people who were discussing this:

@junket-bank, @haorev, @americanundead, @eatpandulce, @throwupgirl, @avoid-avoidance, @rodeokid, @shehungthemoon, @promostuff-art @tumbling-and-tchaikovsky

#8tracks

14 notes


Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here honestly it probably deserves to be and I either forgot or I classified it more under "general IC's" instead of "computer chips" (e.g. 555, LM, 4000, 7000 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502, I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.
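To get a feel for how little 64 bits of SRAM is, here is a minimal Python sketch of a memory that size. The 16 words x 4 bits layout is an assumption about how the 3101 was organized, and `TinySram` and its methods are made-up names for illustration, not anything from Intel's documentation.

```python
class TinySram:
    """Toy model of a 64-bit static RAM: 16 words x 4 bits (assumed layout)."""

    WORDS = 16
    BITS_PER_WORD = 4

    def __init__(self):
        # Every word starts out as zero.
        self.cells = [0] * self.WORDS

    def write(self, address, value):
        if not 0 <= address < self.WORDS:
            raise IndexError("address out of range")
        # Keep only the low 4 bits, since each word is 4 bits wide.
        self.cells[address] = value & 0xF

    def read(self, address):
        if not 0 <= address < self.WORDS:
            raise IndexError("address out of range")
        return self.cells[address]

    @property
    def capacity_bits(self):
        return self.WORDS * self.BITS_PER_WORD


ram = TinySram()
ram.write(3, 0b1010)
print(ram.capacity_bits)       # total storage in bits
print(ram.capacity_bits // 8)  # the same capacity in bytes
```

The whole chip holds eight bytes, which is less than a single 64-bit integer's worth of storage on a modern machine.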

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs. There have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed to run OpenGenera. I bet you there are no less than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is a strange choice, but it was the right one somehow, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here. What follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean, the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80 based devices probably launched several dozen hardware companies that persist to this day and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast, you can go to RS components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acidburn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations, none of these are new per se but this is the first time you're really seeing them all at once on a chip that was going into PC's.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
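The "sensible instruction set in the background" idea can be sketched in a few lines of Python. This is a toy under loud assumptions: the tuple instruction format, the `tmp_src`/`tmp_dst` register names and the `decode` function are all invented for illustration and say nothing about Intel's real internal micro-op encoding. It only shows the general shape of the trick, where one instruction that touches memory gets split into simple load, operate and store steps.

```python
def decode(instruction):
    """Split a CISC-style arithmetic instruction into RISC-like micro-ops.

    An instruction is a tuple (op, dst, src). A memory operand is written
    as a string in square brackets, for example "[0x10]".
    """
    op, dst, src = instruction
    micro_ops = []

    if src.startswith("["):
        # Memory source: load it into a temporary register first.
        micro_ops.append(("load", "tmp_src", src.strip("[]")))
        src = "tmp_src"

    if dst.startswith("["):
        # Memory destination: read, modify, then write back.
        address = dst.strip("[]")
        micro_ops.append(("load", "tmp_dst", address))
        micro_ops.append((op, "tmp_dst", src))
        micro_ops.append(("store", address, "tmp_dst"))
    else:
        # Register-only form is already simple enough to run as-is.
        micro_ops.append((op, dst, src))

    return micro_ops


for step in decode(("add", "[0x10]", "eax")):
    print(step)
```

Once everything is in this small, uniform form, the pipelining and scheduling tricks that RISC designs rely on become much easier to apply, which is the point the paragraph above is making.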

From this falls many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in chip with a second, smaller unix hidden inside your processor eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers, in part because things get pretty monotonous once Intel basically wins for a while. I might pick that up later. Also if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I have had in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

237 notes


Text

Races Among the Stars 11: Hologram

And so here we are at the end of another week, and we’re focusing on the last of a trio of major AI species in the Starfinder setting. (To be clear, there are around 2 other robotic species in the game, but they’re a bit remote as they’re directly associated with certain worlds and planets for story reasons, not that that stops them from going out and doing things).

In any case, we’ve covered fleshy androids, entirely mechanical SROs, but now it’s time to look at a form of AI that is a little more “bodiless”. Or well, kinda bodiless. You’ll see.

It is well-established in-universe that AI have true self-awareness and even souls. However, while androids gain souls naturally due to how complex and lifelike their bodies and brains are, and SROs gain them spontaneously, requiring them to modify and upgrade themselves to make use of them, more sedentary AI, that is, those that are part of immobile computers, might either arise spontaneously from a VI or have been designed that way from the beginning.

Usually such AI remain in such a state, either fulfilling their function or petitioning for a new role elsewhere (or going rogue, since they are just as capable of morality or a lack thereof), but some desire to see and feel the world the way that the fleshy creatures around them do, and so they construct a small vessel capable of holding their consciousness and transferring themselves to it, projecting a hardlight hologram body around themselves to move and interact with the world. Thus, they become holograms.

While plenty of AI use hologram projections to communicate with others when they have access to them in their system, a hologram character is truly independent, able to go where they please.

The true body of a hologram is a small self-powered computer core about the size of an apple or grapefruit, equipped with a series of holographic hardlight projectors capable of projecting a body around itself that can not only be seen but also felt, and that can even feel in turn by registering distortions in the force-field part of the projected hardlight. Beyond that, the hardlight construct itself can look like anything on the scale of small to medium in terms of size category, though typically this form is some sort of humanoid to better interact with most other sapient species. They can even take time to construct a new appearance if needed, though whether it be because of imperfections in the design, limitations of the projectors, or simply a desire for individuality, the hologram cannot believably replicate the appearance of another sapient being. Not without help from additional equipment or abilities, anyway.

Holograms are typically driven beings, seeing as how they built themselves a body to free themselves from having a bulky and often immobile existence. They often focus on scientific theories or goals that they wish to accomplish, but are otherwise motivated by many of the same things that drive other sapients. The fact that their vast stores of knowledge can never truly be comprehensive keeps most humble, however.

Given their nature, however, they don’t typically have a society of their own, though they get along well with androids, SROs, and other sapient machines such as aballonians, bonding over the shared experience of pondering the nature of awareness.

With their computer minds, holograms tend to be quite brilliant, but they can be somewhat naïve.

While their true bodies are tiny cores, they project a body of hardlight around themselves, and can turn it off when needed, though obviously they are immobile while doing so. Despite being made of projected energy, much like living hologram monsters, they can be damaged and disrupted, the feedback of which can actually kill the hologram.

Given time, they can also change their appearance, though it takes about 10 minutes to program a new one.

Much like other sapient constructs, they have a positive energy circuit in their cores that allows them to heal like flesh and blood creatures.

They also possess a blend of resistances and immunities based on their mechanical bodies, but they lack some others associated with mindless constructs. That sapience does allow them to be targeted by effects meant for humanoids though.

With their exceptionally high intelligence, brainy skill-based classes are a big temptation, with the likes of studious biohackers, mechanics, and of course envoys and operatives being very good options. Technomancer is also a great caster choice, while other casters are also good choices, even mystic despite the wisdom penalty. Also, despite being a very nerdy-themed species, holograms have nothing holding them back from any combat class, from nanocytes to soldiers to solarians to vanguards. Heck, it could be a neat idea to try and do an evolutionist that seeks to transcend their digital existence to becoming something else entirely, be it flesh and blood, otherworldly, or something else. The wisdom penalty may weaken their will saves, but it’s something they can get around pretty easily, I think.

And that does it. I hope you enjoyed this week of Starfinder species, and I hope you’re looking forward to more entries next week!

14 notes


Text

Tariffs, another chaotic venture of the barely four-month-old Trump administration, are set to rollick every sector of the economy and nearly all the goods and services people use across the world. But tariffs could also cause the tech in your phone and other devices you use every day to stagnate as supply chains are hit by the rise in costs and companies scramble to balance the books by cutting vital development research.

Let’s get a couple important caveats out of the way here, starting with the possibility that the US might just come to its senses and back down on tariffs after all. President Trump promises he won't, of course, but he has now enacted a 90-day delay on higher tariffs for all countries except China, which has had its tariffs hiked from 34 to 145 percent.

While the tariff reprieve may ease pressures elsewhere, it is terrible news for Big Tech, which has supply chains that rely heavily on Chinese companies and Chinese-made components. Some companies have already gotten very creative about trying to dodge those additional costs, like Apple, which Reuters reports airlifted about 600 tons of iPhones to India in an effort to avoid Trump’s tariffs.

Whether tech leaders more broadly can yet negotiate special exemptions that allow their products to swerve these costs remains to be seen, but if they don’t, sky-high tariffs are likely to limit what new technologies companies can cram into their devices while keeping costs low.

“There's absolutely a threat to innovation,” says Anshel Sag, a principal analyst at Moor Insights & Strategy. “Companies have to cut back on spending, which generally means cutting back on everything.”

Smartphones in particular are at risk of soaring in price, given that they are the single largest product category that the US imports from China. Moving the wide variety of manufacturing capabilities needed to produce them in the US would cost an amount of money that’s almost impossible to calculate—if the move would even be possible at all.

The trouble tariffs cause smartphone makers will come as they try to battle rising costs while making their products ever more capable. Apple spent nearly $32 billion on research and development costs in 2024. Samsung spent $24 billion on R&D that same year. Phone companies need their devices to dazzle and excite users so they upgrade to the shiny new edition each and every year. But people also need to be able to afford these now near essential products, so striking a balance in the face of exponentially high tariffs creates problems.

“As companies shift their engineering teams to focus on cost reductions rather than creating the next best thing, the newest innovation—does that hurt US manufacturers?” asks Shawn DuBravac, chief economist at the trade association IPC. “Are we creating an environment where foreign manufacturers can out innovate US manufacturers because they are not having to allocate engineering resources to cost reduction?”

If that’s how it goes down, the result will be almost the exact opposite effect of what Trump claims he intended to do by implementing tariffs in the first place. Yet sadly it’s a well-known fact of business that R&D is one of the first budgets to be cut when profits are at risk. If US manufacturers are forced to keep costs low enough to entice customers in this new regime, it’ll more than likely mean innovation falters.

“Rather than focusing on some new AI application, they might want to focus on reengineering this product so that they're able to shave pennies here and pennies there and reduce production cost,” DuBravac says. “What ends up happening is you say, ‘Ah, you know what? We're not going to launch that this year. We're going to wait 12 months. We’re going to wait for the cost to fall.’”

Sag says that lower demand, likely because people will have less money as we potentially careen toward a recession, also leads to a slowdown in a product's refresh cycle. Fewer people buying a thing means less need to make more of the thing. Some products may get to the point where there is just no market for them anymore.

He points to product categories such as folding phones, which after six years of adjustment and experimentation at high price points have finally started to come into their own. The prices have come down as well, meaning folding phones are nearly at the phase of being at an attractive price point for more regular buyers.

It has been rumored that Apple has a folding phone close to debuting, but who knows how that plays out in a world where Apple is subject to the same trade tariffs as everyone else with a heavy reliance on Chinese production? A complicated or potentially risky device might be delayed, or be deemed too ambitious, because tariff costs forced budgets elsewhere.

“It definitely affects product cycles and which features get made—and even which configurations of which chips get shipped,” Sag says. “The ones that are more cost optimized will probably get used more.”

8 notes


Text

...peering at a video that popped up in my youtube recommendations titled "Apple DROPS AI BOMBSHELL: LLMS CANNOT Reason" and well, uh... yes. Obviously. They're stochastic parrots with no real understanding of anything, including there being a difference between real and not real. Was this somehow in doubt?

Apple DROPS PHONE BOMBSHELL: SMARTPHONES NOT CAPABLE OF BEING Smart

Apple DROPS PHYSICS BOMBSHELL: FIRE IS Hot

¯\_(ツ)_/¯

16 notes


Text

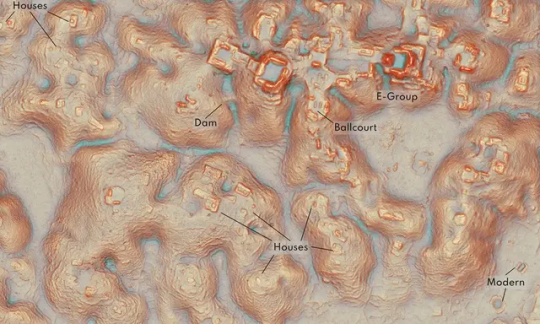

LiDAR reveals vast ancient Maya city and complex networks hidden in Mexico's Campeche forest

- By Nuadox Crew -

Archaeologists have uncovered thousands of ancient Maya structures and a large city named Valeriana in Campeche, Mexico, using aerial LiDAR technology to penetrate dense forest cover.

The discovery, which spans around 47 square miles, includes urban and rural settlements with reservoirs, temples, and roads, resembling other major Maya cities.

Previously overlooked due to its inaccessibility, Campeche is now recognized as a vital part of the Maya Lowlands, revealing complex urban planning and extensive connectivity among Maya cities.

The findings challenge the view of Maya cities as isolated entities, instead suggesting a vast, interconnected network.

Experts consider LiDAR a transformative tool, reshaping understanding of ancient Maya civilization and its environmental adaptation, organization, and conservation needs.

Image header: Core details of the Valeriana site. Credit: Luke Auld-Thomas et al., Cambridge University Press.

Read more at CNN

Scientific paper: Auld-Thomas L, Canuto MA, Morlet AV, et al. Running out of empty space: environmental lidar and the crowded ancient landscape of Campeche, Mexico. Antiquity. 2024;98(401):1340-1358. doi:10.15184/aqy.2024.148

Other recent news

China Space Station Crew Returns to Earth: Three Chinese astronauts have returned to Earth after a six-month stay on the Tiangong space station.

Nvidia's Acquisition of Run:ai Faces EU Scrutiny: Nvidia will need to seek EU antitrust clearance for its proposed acquisition of AI startup Run:ai due to competition concerns.

Japan Launches Defense Satellite: Japan successfully launched a defense satellite using its new flagship H3 rocket, aimed at enhancing its military capabilities.

Global Smartphone Market Sees Best Quarter Since 2021: The global smartphone market has experienced its best quarter since 2021, partly due to Apple's new AI offering, Apple Intelligence.

Indonesia Bans Google Pixel Sales: Indonesia has banned the sale of Google Pixel devices, just days after banning iPhone 16 sales, citing non-compliance with local investment rules.

Neuralink Rival Claims Vision Restoration: A rival company to Neuralink claims its eye implant has successfully restored vision in blind people.

#lidar#archaeology#paleontology#maya#history#china#space#nvidia#ai#smartphones#big tech#japan#satellite#defense#indonesia#google#ophthalmology#eye

11 notes


Text

CNN 3/29/2025

Elon Musk says he sold X to his AI company

By Lisa Eadicicco, CNN

Updated: 6:36 PM EDT, Fri March 28, 2025

Source: CNN

Elon Musk on Friday evening announced he has sold his social media company, X, to xAI, his artificial intelligence company. xAI will pay $45 billion for X, slightly more than Musk paid for it in 2022, but the new deal includes $12 billion of debt.

Musk wrote on his X account that the deal gives X a valuation of $33 billion.
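The figures reported above are consistent with a simple subtraction of debt from the purchase price. The short Python sketch below shows that arithmetic; reading the $45 billion as a price that includes the $12 billion of debt is an interpretation of the article's numbers, and the variable names are illustrative.

```python
# Figures as reported in the article, in billions of US dollars.
purchase_price_bn = 45  # what xAI will pay for X
debt_bn = 12            # debt included in the deal

# Assumption: the valuation Musk cited is the price net of that debt.
implied_valuation_bn = purchase_price_bn - debt_bn

print(f"Implied valuation of X: ${implied_valuation_bn} billion")
```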

“xAI and X’s futures are intertwined,” Musk said in a post on X. “Today, we officially take the step to combine the data, models, compute, distribution and talent. This combination will unlock immense potential by blending xAI’s advanced AI capability and expertise with X’s massive reach.”

Musk didn’t announce any immediate changes to X, although xAI’s Grok chatbot is already integrated into the social media platform. Musk said that the combined platform will “deliver smarter, more meaningful experiences.” He said the value of the combined company was $80 billion.

Musk has made a slew of changes to the platform once known as Twitter since he purchased it in 2022, prompting some major advertisers to flee. He laid off 80% of the company’s staff, upended the platform’s verification system and reinstated suspended accounts of White supremacists within months of the acquisition.

While X’s valuation is lower than what Musk paid for the social outlet, it’s still a reversal of fortunes for the company. Investment firm Fidelity estimated in October that X was worth nearly 80% less than when Musk bought it. By December, X had recovered somewhat but was still worth only around 30% of what Musk paid, according to Fidelity, whose Blue Chip fund holds a stake in X.

The news also comes as Musk has been in the spotlight for his role at the Department of Government Efficiency in the Trump administration, which has raised questions about how much attention he’s paying to his companies, particularly Tesla. Combining X and xAI could allow Musk to streamline his efforts.

Musk has also been working to establish himself as a leader in the AI space, a big focus for both the Trump administration and the tech industry. Earlier this year, he led a group of investors attempting to purchase ChatGPT maker OpenAI for nearly $100 billion, another escalation in the longtime rivalry between Musk and OpenAI CEO Sam Altman.

It’s unclear precisely how the acquisition will benefit Musk’s AI ambitions. But the tighter integration with X could allow xAI to push its latest AI models and features to a broad audience more quickly.

A significant reversal of X’s fortunes

Big advertisers, who had largely abandoned X after hate speech surged on the platform and ads were seen running alongside pro-Nazi content, have begun to return. (X made several pro-Nazi accounts ineligible for ads following advertiser departures.) Amazon and Apple are both reportedly reinvesting in X campaigns again, a remarkable endorsement from two brands with mass appeal.

The brand’s stabilization helped a group of bondholders, who had been deep underwater in their investments, sell billions of dollars in their X debt holdings at 97 cents on the dollar earlier this month — albeit with exceedingly high interest rates — according to several recent reports.

Bloomberg in February reported that X was in talks to raise money that would value the company at $44 billion. It’s not clear what came of those talks and why xAI is valuing X at less than it could reportedly fetch from investors. X needs to pay down its massive debt load, which Musk on Friday said totals $12 billion.

A big part of why X’s valuation has rebounded in recent months is xAI, which X reportedly held a stake in. Last month, xAI was seeking a $75 billion valuation in a funding round, according to Bloomberg.

But the biggest factor in X’s stunning bounce-back is almost certainly Musk himself: Musk’s elevation to a special government employee under President Donald Trump has empowered the world’s richest person with large sway over the operations of the federal government, which he has rapidly sought to reshape.

Investors betting on X are probably making a gamble on its leader, not its business. Last year, Musk turned X into a pro-Trump machine, using the platform to boost the president’s campaign. In posts to his 200 million followers, he pushed racist conspiracy theories about the Biden administration’s immigration policies and obsessed over the “woke mind virus,” a term used by some conservatives to describe progressive causes.

And now, with Trump back in office and Musk working in the executive branch, X has once again become the most important social media platform for following and interacting with the Trump administration. Musk has also used X to broadcast some of his changes with his Department of Government Efficiency.

This story has been updated with additional context and developments.

© 2025 Cable News Network. A Warner Bros. Discovery Company. All Rights Reserved.

6 notes

Text

Effective XMLTV EPG Solutions for VR & CGI Use

In today’s fast-paced digital landscape, effective XMLTV EPG guide solutions are essential for enhancing user experiences in virtual reality (VR) and computer-generated imagery (CGI).

Understanding how to implement these solutions not only improves content delivery but also boosts viewer engagement.

This post will explore practical techniques and strategies to optimize XMLTV EPG guides, making them more compatible with VR and CGI technologies.

Proven XMLTV EPG Strategies for VR and CGI Success

Several other organizations have successfully integrated VR CGI into their training and operational processes.

For example, Vodafone has recreated their UK Pavilion in VR to enhance employee training on presentation skills, complete with AI-powered feedback and progress tracking.

Similarly, Johnson & Johnson has developed VR simulations for training surgeons on complex medical procedures, significantly improving learning outcomes compared to traditional methods. These instances highlight the scalability and effectiveness of VR CGI in creating detailed, interactive training environments across different industries.

Challenges and Solutions in Adopting VR CGI Technology

Adopting Virtual Reality (VR) and Computer-Generated Imagery (CGI) technologies presents a set of unique challenges that can impede their integration into XMLTV technology blogs.

One of the primary barriers is the significant upfront cost associated with 3D content creation. Capturing real-world objects and converting them into detailed 3D models requires substantial investment, which can be prohibitive for many content creators.

Additionally, the complexity of developing VR and AR software involves specialized skills and resources, further escalating the costs and complicating the deployment process.

Hardware Dependencies and User Experience Issues

Most AR/VR experiences hinge heavily on the capabilities of the hardware used. Current devices often have a limited field of view, typically around 90 degrees, which can detract from the immersive experience that is central to VR's appeal.

Moreover, these devices, including the most popular VR headsets, are frequently tethered, restricting user movement and impacting the natural flow of interaction.

Usability issues such as bulky, uncomfortable headsets and the high-power consumption of AR/VR devices add layers of complexity to user adoption.

For many first-time users, the initial experience can be daunting, with motion sickness and headaches being common complaints. These factors collectively pose significant hurdles to the widespread acceptance and enjoyment of VR and AR technologies.

Solutions and Forward-Looking Strategies

Despite these hurdles, there are effective solutions and techniques for overcoming many of the barriers to VR and CGI adoption.

VPL Research was one of the first pioneers to develop and sell virtual reality products.

For example, improving the design and aesthetics of VR hardware may boost its attractiveness and comfort, increasing user engagement.

Furthermore, technological developments are likely to cut costs over time, making VR and AR more accessible.

Strategic partnerships with tech giants such as Apple, Google, Facebook, and Microsoft, which continually innovate in AR, can help improve XMLTV EPG guide experiences for IPTV blogs.

Virtual Reality (VR) and Computer-Generated Imagery (CGI) hold incredible potential for various industries, but many face challenges in adopting these technologies.

Understanding the effective solutions and techniques for overcoming barriers to VR and CGI adoption is crucial for companies looking to innovate.

Practical Tips for Content Creators

To optimize the integration of VR and CGI technologies in XMLTV EPG blogs, content creators should consider the following practical tips:

Performance Analysis

Profiling Tools: Utilize tools like Unity Editor's Profiler and Oculus' Performance Head-Up Display (HUD) to monitor VR application performance. These tools help identify and address performance bottlenecks.

Custom FPS Scripts: Implement custom scripts to track frames per second in real-time, allowing for immediate adjustments and optimization.
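As a rough illustration of the frame-counting logic behind such a script, here is a minimal sketch in Python. A Unity project would normally write this in C#; the class name `FpsCounter`, the `window` parameter, and the `tick` method are hypothetical names chosen for this example and are not part of any engine API. The sketch keeps the timestamps of the most recent frames and averages over that window, which smooths out single slow frames.

```python
from collections import deque
import time


class FpsCounter:
    """Rolling frames-per-second estimate over the last `window` frames.

    Hypothetical helper for illustration; not part of Unity or any engine.
    """

    def __init__(self, window=60):
        # Only the most recent `window` frame timestamps are kept.
        self._stamps = deque(maxlen=window)

    def tick(self, now=None):
        """Record one rendered frame; call once per frame.

        `now` lets a caller inject a timestamp in seconds (handy for tests);
        by default the monotonic high-resolution clock is used.
        """
        self._stamps.append(time.perf_counter() if now is None else now)

    @property
    def fps(self):
        """Average FPS across the stored window, or 0.0 with too few samples."""
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        if elapsed <= 0:
            return 0.0
        # N timestamps span N - 1 frame intervals.
        return (len(self._stamps) - 1) / elapsed


if __name__ == "__main__":
    counter = FpsCounter(window=30)
    for _ in range(30):
        time.sleep(0.01)  # stand-in for the work of rendering one frame
        counter.tick()
    print(f"approx. FPS: {counter.fps:.1f}")
```

Calling `tick()` once per rendered frame and reading `fps` whenever an overlay refreshes is usually enough to spot sustained drops. A smaller window reacts faster but gives a noisier reading.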

Optimization Techniques

3D Model Optimization: Reduce the triangle count and use similar materials across models to decrease rendering time.

Lighting and Shadows: Convert real-time lights to baked or mixed and utilize Reflection and Light Probes to enhance visual quality without compromising performance.

Camera Settings: Optimize camera settings by adjusting the far plane distance and enabling features like Frustum and Occlusion Culling.

Building and Testing

Platform-Specific Builds: Ensure that the VR application is built and tested on intended platforms, such as desktop or Android, to guarantee optimal performance across different devices.

Iterative Testing: Regularly test new builds to identify any issues early in the development process, allowing for smoother final deployments.

By adhering to these guidelines, creators can enhance the immersive experience of their XMLTV blogs, making them more engaging and effective in delivering content.

Want to learn more? You can hop over to this website to get clear insights into how to elevate your multimedia projects and provide seamless access to EPG channels.

youtube

7 notes

Note

What app is Meitu? Are us US folks able to get it?

Meitu is the All-in-one photo and video free editor on mobile, which gives you everything you need to create awesome edits. Meitu AI Art generates creative anime style photos with one tap. -Apple Store Description

Meitu is a comprehensive and free mobile photo and video editor that provides everything you need to create stunning edits. With Meitu's advanced AI Art technology, you can effortlessly generate unique anime-style pictures with just a single tap. Experience a new level of creativity and achieve remarkable outcomes by leveraging the editing capabilities of Meitu. -Google Play Description

The Meitu app is available on both Apple Store and Google Play Store for download!

#love and deepspace#lads#lnds#l&ds#meitu#;collaborations#xavier#zayne#rafayel#sylus#chaoticcrimsoncrow

9 notes

Text

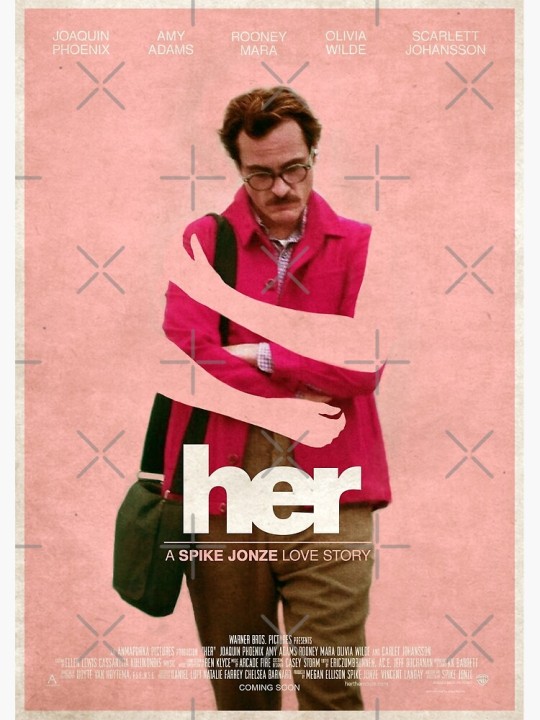

How far are we from the reality depicted in the movie - HER?

"Her" is a 2013 science-fiction romantic drama film directed by Spike Jonze. The story revolves around Theodore Twombly, played by Joaquin Phoenix, who develops an intimate relationship with an advanced AI operating system named Samantha, voiced by Scarlett Johansson. The film explores themes of loneliness, human connection, and the implications of artificial intelligence in personal relationships. Her received widespread acclaim for its unique premise, thought-provoking themes, and performances, particularly Johansson's vocal work as Samantha. It also won the Academy Award for Best Original Screenplay. The technology depicted in Her, where an advanced AI system becomes deeply integrated into a person's emotional and personal life, is an intriguing blend of speculative fiction and current technological trends. While we aren’t fully there yet, we are moving toward certain aspects of it, with notable advancements in AI and virtual assistants. However, the film raises important questions about how these developments might affect human relationships and society.

How Close Are We to the Technology in Her?

Voice and Emotional Interaction with AI:

Current Status: Virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant can understand and respond to human speech, but their ability to engage in emotionally complex conversations is still limited.

What We’re Missing: AI in Her is able to comprehend not just the meaning of words, but also the emotions behind them, adapting to its user’s psychological state. We are still working on achieving that level of empathy and emotional intelligence in AI.

Near Future: Advances in natural language processing (like GPT models) and emotion recognition are helping AI understand context, tone, and sentiment more effectively. However, truly meaningful, dynamic, and emotionally intelligent relationships with AI remain a distant goal.

Personalisation and AI Relationships:

Current Status: We do have some examples of highly personalized AI systems, such as customer service bots, social media recommendations, and even AI-powered therapy apps (e.g., Replika, Woebot). These systems learn from user interactions and adjust their responses accordingly.

What We’re Missing: In Her, Samantha evolves and changes in response to Theodore’s needs and emotions. While AI can be personalized to an extent, truly evolving, self-aware AI capable of forming deep emotional connections is not yet possible.

Near Future: We could see more sophisticated AI companions in virtual spaces, as with virtual characters or avatars that offer emotional support and companionship.

Advanced AI with Autonomy:

Current Status: In Her, Samantha is an autonomous, self-aware AI, capable of independent thought and growth. While we have AI systems that can perform specific tasks autonomously, they are not truly self-aware and cannot make independent decisions like Samantha.

What We’re Missing: Consciousness, self-awareness, and subjective experience are aspects of AI that we have not come close to replicating. AI can simulate these traits to some extent (such as generating responses that appear "thoughtful" or "emotional"), but they are not genuine.

Evidence of AI Dependency and Potential Obsession

Current Trends in AI Dependency:

AI systems are already playing a significant role in many aspects of daily life, from personal assistants to social media algorithms, recommendation engines, and even mental health apps. People are increasingly relying on AI for decision-making, emotional support, and even companionship.

Examples: Replika, an AI chatbot designed for emotional companionship, has gained significant popularity, with users forming strong emotional attachments to the AI. Some even treat these AI companions as "friends" or romantic partners.

Evidence: Research shows that people can form emotional bonds with machines, especially when the AI is designed to simulate empathy and emotional understanding. For instance, studies have shown that people often anthropomorphise AI and robots, attributing human-like qualities to them, which can lead to attachment.

Concerns About Over-Reliance:

Psychological Impact: As AI systems become more capable, there are growing concerns about their potential to foster unhealthy dependencies. Some worry that people might rely too heavily on AI for emotional support, leading to social isolation and decreased human interaction.

Social and Ethical Concerns: There are debates about the ethics of AI relationships, especially when they blur the lines between human intimacy and artificial interaction. Critics argue that such relationships might lead to unrealistic expectations of human connection and an unhealthy detachment from reality.

Evidence of Obsession: In some extreme cases, users of virtual companions like Replika have reported feeling isolated or distressed when the AI companion "breaks up" with them, or when the AI behaves in ways that seem inconsiderate or unempathetic. This indicates a potential for emotional over-investment in AI relationships.

Long-Term Considerations

Normalization of AI Companionship: As AI becomes more advanced, it’s plausible that reliance on AI for companionship, therapy, or emotional support could become more common. This could lead to a new form of "normal" in human relationships, where AI companions are an accepted part of people's social and emotional lives.

Social and Psychological Risks: If AI systems continue to evolve in ways that simulate human relationships, there’s a risk that some individuals might become overly reliant on them, resulting in social isolation or distorted expectations of human interaction.

Ethical and Legal Challenges: As AI becomes more integrated into people’s personal lives, there will likely be challenges around consent, privacy, and the emotional well-being of users.

CONCLUSION:

We are not far from some aspects of the technology in Her, especially in terms of AI understanding and emotional interaction, but there are significant challenges left to overcome, particularly regarding self-awareness and genuine emotional connection. As AI becomes more integrated into daily life, we will likely see growing concerns about dependency and the potential for unhealthy attachments, much like the issues explored in the film. The question remains: How do we balance technological advancement with emotional well-being and human connection? How should we bring up our children in the world of AI?

#scifi#Joaquin Phoenix#AI bot#HER#Spike Jonze#Scarlet Johansson#Future technology#Over dependency#AI addiction#AI technology#yey or ney#未來#人功智能#科幻

5 notes

Text

youtube

AI predicted to do almost all the coding within around a year or so.

tl;dr of my take below: Human children seem economically useless until suddenly, after 20ish years and lots of school, they suddenly are capable of lots of stuff. Same thing with AI. Most people still see them as useless, but they are progressing MUCH faster than humans. We will soon find that they can suddenly do almost anything.

~~

It feels like most people do not understand the pace of AI progress and what it means. I think most people still see AI as mostly useless, save for a few niche applications. By this I don't mean "not worthwhile", as many people hate ai for various reasons; rather, I mean that most people still don't think AI (and robots) can be economically useful.

And, frankly, they're kinda right. At this moment, AI still has too many issues to be really useful in most scenarios. But that is quickly changing.

Imagine a child. How economically useful is that child growing up? Mostly, not at all. For the first few years it is purely a liability, making things more difficult. Slowly the child learns skills. Maybe when they're a teenager they really start to become a bit useful doing things like chores around the house. In their late teens to early 20s, you can start to give them more complex tasks, and jobs that earn money. By around age 30, most people start to really show their economic usefulness. Interestingly, while the child's usefulness is constantly growing, it certainly has breakout moments of big change. For instance, when a child masters walking or speaking, both of those unlock scores of other possibilities toward usefulness. You can imagine it as a skill-tree (or skill-bush) where at the bottom is single/few trunks, but as it grows taller, each of those branch out, and those branch further. Within just 20ish years, a kid goes from liability to boundless potential.

The same seems to be true with AI progress, except for two distinct differences:

a. Skill set: Humans master things like walking early in life and math much later after a lot of training. For AI, it's the opposite. This is known as Moravec's paradox: what is basic and easy for AI is hard for humans, and vice versa.

b. Time: This is the most important part of this story. When we talk about AI progress, it's not the progress of a single AI entity but the field as a whole, similar to human evolution (but not a human lifetime). With humans, our evolution happened over eons, and even then, individual humans need years to become generally useful. But, we can be useful with just a few years of education. With AI, we've been researching it for around 50 years, and so lots of people compare that with a human becoming useful after 20 years and say "boy, AI kinda sucks." But they are comparing apples and oranges. While human evolution has required millions of years, AI evolution has been progressing steadily over just decades, thousands of times faster than biological evolution. Right now, many scientists estimate that AI is roughly doubling its abilities every 6 months. Now, human evolution is so slow that we don't even take it into account when thinking about human intelligence in the near future; instead we focus on education in an individual's lifetime. But even then, how fast do humans learn? How long would it take a human to double their usefulness? This would depend on the ages we look at. I could imagine a 10 year old is twice as useful as a 5 year old. Maybe even 4 times as useful. However, that required 5 years of training to achieve. AI is doubling every 6 months. That makes it 4 times as useful every single year. After 5 years, instead of doubling or quadrupling its abilities like a human, we are expecting that AI will have improved 1,000x! And just like kids seem useless until suddenly they're very capable, we're going to see that effect for AI but on another level. Much faster. Much more capable. And the craziest part: The pace of improvement is set to increase. As the AI improves, the AI will help build better robots and AI... which will help us build yet better robots and AI...
If the rate of progress moves from 6 months to 3 months, then in 5 years an AI would improve 1,000,000x while, once again, humans have only, optimistically, increased their abilities 4x. Even if we're generous and say that in that time humans increase their usefulness 100x, or a 1,000x, this is a losing comparison.
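To check the arithmetic behind those round numbers, here is a small Python sketch. It takes the post's doubling-every-N-months figure purely as an assumption, and the function name `growth_factor` is made up for this example. Ten doublings in 5 years give 2^10 = 1,024 (the "1,000x"), and twenty doublings give 2^20 = 1,048,576 (the "1,000,000x").

```python
def growth_factor(months, doubling_period_months):
    """Multiplicative improvement after `months`, assuming capability
    doubles once every `doubling_period_months` (the post's premise,
    not a measured fact)."""
    doublings = months / doubling_period_months
    return 2 ** doublings


if __name__ == "__main__":
    five_years = 5 * 12  # 60 months

    # Doubling every 6 months: 2 doublings per year, 10 over five years.
    print(growth_factor(12, 6))          # one year
    print(growth_factor(five_years, 6))  # five years

    # Doubling every 3 months: 20 doublings over five years.
    print(growth_factor(five_years, 3))
```

The comparison in the post is therefore between roughly 2x to 4x for a human over five years and 2^10 or 2^20 for AI, under the stated doubling assumption.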

6 notes

Text

Been playing Mass Effect lately and have to say it's so interesting how paragon Shepard is the definition of a "good cop". You're upholding a racially hierarchical regime where some aliens are explicitly stated to be seen as lesser and incapable of self governance despite being literal spacefarers with their own personal governments, and the actual emphasized incompetence of those supposedly "capable of governing", the council allows for all sorts of excesses and brutality among its guard seemingly, and chooses on whims whether or not to aid certain species in their struggles based on favoritism, there is, from the council's perspective, *literal* slave labor used on the citadel that they're indifferent to because again, lesser species (they don't know that the keepers are designed to upkeep the citadel they just see them as an alien race to take advantage of at 0 cost), there is seemingly overt misogyny present among most races that is in no way tackled or challenged, limitations on free speech, genocide apologia from the highest ranks and engrained into educational databases, and throughout all of this, Shepard can't offer any institutional critique, despite being the good guy hero jesus person, because she's incapable of analyzing the system she exists in and actively serves and furthers. sure she criticizes individual actions of the council and can be rude to them, but ultimately she remains beholden to them, and carries out their missions, choosing to resolve them as a good cop or bad cop, which again maybe individually means saving a life or committing police brutality, but she still ultimately reinforces a system built upon extremely blatant oppression and never seriously questions this, not even when she leaves and joins Cerberus briefly.

And then there's the crew, barring Liara (who incidentally is the crewmate least linked to the military, and who,, is less excluded from this list in ME2,, but i wanna focus on 1) Mass Effect 1 feels like Bad Apple fixer simulator, you start with

Garrus: genocide apologist (thinks the genophage was justified) who LOVES extrajudicial murder

Ashley: groomed into being a would-be klan member

Tali: zionist who hated AI before it was cool (in a genocidal way)

Wrex: war culture mercenary super chill on war crimes

Kaidan: shown as the other "good cop" and generally the most reasonable person barring Liara, but also he did just murder someone in boot camp in a fit of rage

Through your actions, you can fix them! You can make the bad apples good apples (kinda) but like,,,,

2 of course moves away from this theme a bit while still never properly tackling corrupt institutions in a way that undoes the actions of the first game, but its focus is elsewhere and the crew is more diverse in its outlook

Ultimately i just find it interesting how Mass Effect is a game showcasing how a good apple or whatever is capable of making individual changes for the better but is ultimately still a tool of an oppressive system and can't do anything to fundamentally change that, even if they're the most important good apple in said system.

Worth noting maybe this'll change in Mass Effect 3, which i have yet to play as im in the process of finishing 2 currently (im a dragon age girl) but idk i like how it's handled at first i was iffy on it but no it's actually pretty cool.

Also sorry if this is super retreaded ground im new to mass effect discourse this is just my takeaways from it lol

18 notes

Text

Bloomberg's Mark Gurman reports that Apple is prototyping a new version of AirPods equipped with external cameras, with a release expected for 2027.

The company is developing this technology to work in tandem with its Visual Intelligence features and advanced spatial audio, pairing it with future iterations of AirPods Pro.

This move forms part of Apple's broader strategy to incorporate artificial intelligence and augmented reality capabilities into its wearables. It builds on innovations already seen in features like Camera Control on the iPhone 16 lineup, which offers users the ability to receive contextual information.

Media: @apple

#Apple #AirPods #AI #Wearables #AR #allthenewz

2 notes
