#Azure blob storage tiering


Which Cloud Computing Platform Is Best to Learn in 2025?

Cloud computing is no longer optional—it’s essential for IT jobs, developers, data engineers, and career switchers.

But here’s the question everyone’s Googling: Which cloud platform should I learn first? Should it be AWS, Azure, or GCP? Which one gets me hired faster? And where do I start if I’m a fresher?

This article answers it all, using simple language, real use cases, and proven guidance from NareshIT’s cloud training experts.

☁️ What Is a Cloud Platform?

Cloud platforms let you run software, manage storage, or build apps using internet-based infrastructure—without needing your own servers.

The 3 most popular providers are:

🟡 Amazon Web Services (AWS)

🔵 Microsoft Azure

🔴 Google Cloud Platform (GCP)

🔍 AWS vs Azure vs GCP for Beginners

Let’s compare them based on what beginners care about—ease of learning, job market demand, and use case relevance.

✅ Learn AWS First – Most Versatile & Job-Friendly

Best cloud certification for freshers (AWS Cloud Practitioner, Solutions Architect)

Huge job demand across India & globally

Tons of free-tier resources + real-world projects

Ideal if you want to land your first cloud job fast

✅ Learn Azure – Best for Enterprise & System Admin Roles

Works great with Microsoft stack: Office 365, Windows Server, Active Directory

AZ-900 and AZ-104 are beginner-friendly

Popular in government and large MNC jobs

✅ Learn GCP – Best for Developers, Data & AI Enthusiasts

Strong support for Python, ML, BigQuery, Kubernetes

Associate Cloud Engineer is the top beginner cert

Clean UI and modern tools

🧑‍🎓 Which Cloud Course Is Best at NareshIT?

No matter which provider you choose, our courses help you start with real cloud labs, not theory. Ideal for:

Freshers

IT support staff

Developers switching careers

Data & AI learners

🟡 AWS Cloud Course

EC2, IAM, Lambda, S3, VPC

Beginner-friendly with certification prep

60 Days, job-ready in 3 months

🔵 Azure Cloud Course

AZ-900 + AZ-104 covered

Learn Azure Portal, Blob, AD, and DevOps

Perfect for enterprise IT professionals

🔴 GCP Cloud Course

Compute Engine, IAM, App Engine, BigQuery

30–45 Days, with real-time labs

Ideal for developers and data engineers

📅 Check new batches and enroll. Both online and classroom formats are available.

🛠️ Beginner Cloud Engineer Guide (In 4 Simple Steps)

Choose one platform: AWS is best to start

Learn core concepts: IAM, storage, compute, networking

Practice using free-tier accounts and real labs

Get certified → Apply for entry-level cloud roles

🎯 Final Thought: Don’t Wait for the “Best.” Start Smart.

If you're waiting to decide which cloud is perfect, you’ll delay progress. All three are powerful. Learning one cloud platform well is better than learning all poorly.

NareshIT helps you start strong and grow faster—with hands-on training, certifications, and placement support.

📅 Start your cloud journey with us: DevOps with Multi Cloud Training in KPHB

At NareshIT, we’ve helped thousands of learners go from “I don’t get it” to “I got the job.”

Related articles:

What is Cloud Computing? A Practical Guide for Beginners in 2025

Where to Start Learning Cloud Computing? A Beginner’s Guide for 2025

Entry-level cloud computing jobs salary?

Cloud Computing Job Roles for Freshers: What You Need to Know in 2025

Cloud Computing Learning Roadmap (2025): A Realistic Path for Beginners

How to Learn Cloud Computing Step by Step (From a Beginner’s Perspective)

How to Become a Cloud Engineer in 2025

How to become a cloud engineer?

Cloud Computing Salaries in India (2025) – Career Scope, Certifications & Job Trends

Where to Start Learning Google Cloud Computing? A Beginner’s Guide by NareshIT

Future Scope of Cloud Computing in 2025 and Beyond

#BestCloudToLearn #AWSvsAzurevsGCP #NareshITCloudCourses #CloudForBeginners #LearnCloudComputing #CareerInCloud2025 #CloudCertificationsIndia #ITJobsForFreshers #CloudLearningRoadmap #CloudTrainingIndia


Understanding DP-900: Microsoft Azure Data Fundamentals

The DP-900, or Microsoft Azure Data Fundamentals, is an entry-level certification designed for individuals looking to build foundational knowledge of core data concepts and Microsoft Azure data services. This certification validates a candidate’s understanding of relational and non-relational data, data workloads, and the basics of data processing in the cloud. It serves as a stepping stone for those pursuing more advanced Azure data certifications, such as the DP-203 (Azure Data Engineer Associate) or the DP-300 (Azure Database Administrator Associate).

What Is DP-900?

The DP-900 exam, officially titled "Microsoft Azure Data Fundamentals," tests candidates on fundamental data concepts and how they are implemented using Microsoft Azure services. It is a fundamentals-level certification rather than a role-based one, aimed at beginners who want to explore data-related roles in the cloud. The exam does not require prior experience with Azure, making it accessible to students, career changers, and IT professionals new to cloud computing.

Exam Objectives and Key Topics

The DP-900 exam covers four primary domains:

1. Core Data Concepts (20-25%)

- Understanding relational and non-relational data.
- Differentiating between transactional and analytical workloads.
- Exploring data processing options (batch vs. real-time).

2. Working with Relational Data on Azure (25-30%)

- Overview of Azure SQL Database, Azure Database for PostgreSQL, and Azure Database for MySQL.
- Basic provisioning and deployment of relational databases.
- Querying data using SQL.

3. Working with Non-Relational Data on Azure (25-30%)

- Introduction to Azure Cosmos DB and Azure Blob Storage.
- Understanding NoSQL databases and their use cases.
- Exploring file, table, and graph-based data storage.

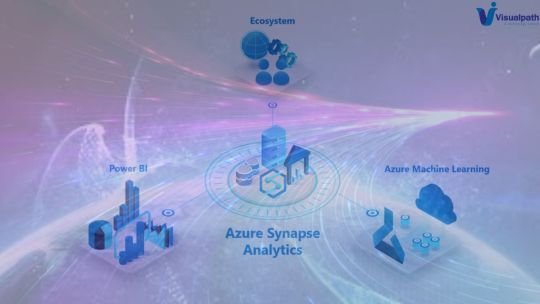

4. Data Analytics Workloads on Azure (20-25%)

- Basics of Azure Synapse Analytics and Azure Databricks.
- Introduction to data visualization with Power BI.
- Understanding data ingestion and processing pipelines.

Who Should Take the DP-900 Exam?

The DP-900 certification is ideal for:

- Beginners with no prior Azure experience who want to start a career in cloud data services.
- IT Professionals looking to validate their foundational knowledge of Azure data solutions.
- Students and Career Changers exploring opportunities in data engineering, database administration, or analytics.
- Business Stakeholders who need a high-level understanding of Azure data services to make informed decisions.

Preparation Tips for the DP-900 Exam

1. Leverage Microsoft’s Free Learning Resources

Microsoft offers free online training modules through Microsoft Learn, covering all exam objectives. These modules include hands-on labs and interactive exercises.

2. Practice with Hands-on Labs

Azure provides a free tier with limited services, allowing candidates to experiment with databases, storage, and analytics tools. Practical experience reinforces theoretical knowledge.

3. Take Practice Tests

Practice exams help identify weak areas and familiarize candidates with the question format. Websites like MeasureUp and Whizlabs offer DP-900 practice tests.

4. Join Study Groups and Forums

Online communities, such as Reddit’s r/AzureCertification or Microsoft’s Tech Community, provide valuable insights and study tips from past exam takers.

5. Review Official Documentation

Microsoft’s documentation on Azure data services is comprehensive and frequently updated. Reading through key concepts ensures a deeper understanding.

Benefits of Earning the DP-900 Certification

1. Career Advancement

The certification demonstrates foundational knowledge of Azure data services, making candidates more attractive to employers.

2. Pathway to Advanced Certifications

DP-900 is not a formal prerequisite for higher-level Azure data certifications, but it lays the groundwork for them and helps professionals move on to specialize in data engineering or database administration.

3. Industry Recognition

Microsoft certifications are globally recognized, adding credibility to a resume and increasing job prospects.

4. Skill Validation

Passing the exam confirms a solid grasp of cloud data concepts, which is valuable in roles involving data storage, processing, or analytics.

Exam Logistics

- Exam Format: Multiple-choice questions (single and multiple responses).
- Duration: 60 minutes.
- Passing Score: 700 out of 1000.
- Languages Available: English, Japanese, Korean, Simplified Chinese, and more.
- Cost: $99 USD (prices may vary by region).

Conclusion

The DP-900 Microsoft Azure Data Fundamentals certification is an excellent starting point for anyone interested in cloud-based data solutions. By covering core data concepts, relational and non-relational databases, and analytics workloads, it provides a well-rounded introduction to Azure’s data ecosystem. With proper preparation, candidates can pass the exam and use it as a foundation for more advanced certifications. Whether you’re a student, IT professional, or business stakeholder, earning the DP-900 certification can open doors to new career opportunities in the growing field of cloud data management.


Cloud Cost Optimization: Smart Strategies to Reduce Spending Without Compromising Performance

As cloud adoption continues to grow, organizations are reaping the benefits of scalability, flexibility, and on-demand access to computing resources. However, with this growth comes a common challenge—escalating cloud costs. Businesses often find themselves over-provisioning resources, underutilizing services, or struggling with surprise billing, all of which directly impact the bottom line.

At Salzen Cloud, we help organizations implement cost optimization strategies that maintain performance while ensuring every dollar spent delivers value.

Why Cloud Costs Spiral Out of Control

Cloud platforms like AWS, Azure, and Google Cloud offer hundreds of services with countless configuration options. Without clear cost management practices in place, it’s easy to:

Leave unused or idle resources running

Overestimate capacity needs

Use expensive storage tiers unnecessarily

Miss opportunities for reserved instances or discounts

As environments scale, so do the risks of waste—especially in multi-team or multi-cloud setups.

Principles of Effective Cloud Cost Optimization

To control and reduce cloud spending without affecting performance, organizations must follow three core principles:

Visibility – Know what you’re using, where, and how much it costs.

Accountability – Assign ownership of resources to teams or services.

Efficiency – Continuously improve resource usage, eliminate waste, and right-size deployments.

Proven Strategies for Cloud Cost Optimization

Here are key cost-saving tactics that Salzen Cloud helps clients adopt:

🔍 1. Enable Detailed Cost Monitoring and Alerts

Use tools like AWS Cost Explorer, Azure Cost Management, or third-party solutions like CloudHealth and Spot.io to track spending by service, team, or region. Set up alerts for budget thresholds to prevent surprise charges.

⚙️ 2. Right-Size Your Resources

Often, cloud instances are over-provisioned “just in case.” Regularly review and adjust virtual machines, storage sizes, and compute capacity based on actual utilization using built-in recommendations from your cloud provider.

🌐 3. Leverage Auto-Scaling and Spot Instances

Set up auto-scaling groups to automatically adjust resources based on traffic. For non-critical workloads, use spot or preemptible instances, which offer significant savings—up to 90%—compared to on-demand pricing.

💾 4. Optimize Storage Costs

Move infrequently accessed data to lower-cost storage tiers (e.g., S3 Glacier, Azure Cool Blob Storage). Delete outdated backups or unused volumes. Implement lifecycle policies to automate storage management.
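As a minimal sketch of what such a lifecycle policy can look like on Azure, the Python snippet below builds one rule in the JSON shape used by Azure Storage lifecycle management. The rule name, the container prefix, and the day thresholds are illustrative assumptions, not recommendations from this article.

```python
import json


def build_lifecycle_policy(prefix: str, cool_after_days: int,
                           archive_after_days: int, delete_after_days: int) -> dict:
    """Build a lifecycle policy that tiers block blobs down as they age.

    All thresholds count days since the blob was last modified.
    """
    if not 0 <= cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("thresholds must increase: cool < archive < delete")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "ageOutOldBlobs",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    # Only block blobs under the given prefix are affected.
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                            "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    # "mycontainer/logs/" is a placeholder container/prefix.
    print(json.dumps(build_lifecycle_policy("mycontainer/logs/", 30, 90, 365), indent=2))
```

The printed JSON can be saved to a file and attached to a storage account as its management policy. Other providers, such as S3 lifecycle rules, use a different schema for the same idea.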

📅 5. Use Reserved Instances and Savings Plans

For predictable workloads, commit to long-term usage through Reserved Instances (RIs) or Savings Plans to enjoy up to 75% cost reductions compared to on-demand pricing.
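To make "predictable workloads" concrete, here is a rough break-even sketch. It assumes a simplified model in which a reservation is billed for every hour of the month whether or not the instance runs, while on-demand is billed only for hours used. The hourly rate is a made-up figure, and the 75% discount is simply the upper bound quoted above.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly billing estimates


def on_demand_monthly(hourly_rate: float, utilization: float) -> float:
    """Monthly on-demand cost when the instance runs `utilization` (0..1) of the time."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return hourly_rate * HOURS_PER_MONTH * utilization


def reserved_monthly(hourly_rate: float, discount: float) -> float:
    """Monthly cost of a reservation, paid for all hours regardless of use."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be between 0 and 1")
    return hourly_rate * (1.0 - discount) * HOURS_PER_MONTH


def break_even_utilization(discount: float) -> float:
    """Utilization above which the reservation is cheaper than on-demand."""
    return 1.0 - discount


if __name__ == "__main__":
    rate = 0.10  # hypothetical on-demand price per hour
    for utilization in (0.2, 0.5, 1.0):
        print(utilization, on_demand_monthly(rate, utilization), reserved_monthly(rate, 0.75))
```

Under this model a 75% discount pays off once the instance runs more than a quarter of the time. Smaller discounts push the break-even point higher, which is why commitments suit steady workloads and not bursty ones.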

🧠 6. Implement FinOps Culture Across Teams

Cost optimization isn't just a task for IT—make it a shared responsibility. Adopt FinOps principles by encouraging collaboration between finance, engineering, and operations to make data-driven spending decisions.

🔁 7. Automate Cleanup of Unused Resources

Use scripts or policies to regularly clean up unused resources like:

Stale snapshots and old AMIs

Idle load balancers

Detached volumes

Unused IP addresses

Automation ensures you're not paying for resources you don’t use.
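A cleanup script usually starts by deciding what counts as "unused." The sketch below shows one possible filter over an inventory of resources. The `Resource` record, its fields, and the 30-day idle threshold are hypothetical; a real script would fill the inventory from the cloud provider's API and would likely report candidates for review before deleting anything.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Resource:
    resource_id: str
    kind: str            # e.g. "snapshot", "volume", "load_balancer", "ip_address"
    attached: bool       # True if something is still using the resource
    last_used: datetime  # timezone-aware timestamp of last activity


def find_cleanup_candidates(resources, now: datetime, max_idle_days: int = 30):
    """Return resources that are detached and idle for longer than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [r for r in resources if not r.attached and r.last_used < cutoff]


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    inventory = [
        Resource("vol-1", "volume", attached=False, last_used=now - timedelta(days=90)),
        Resource("vol-2", "volume", attached=True, last_used=now - timedelta(days=90)),
        Resource("ip-1", "ip_address", attached=False, last_used=now - timedelta(days=3)),
    ]
    for candidate in find_cleanup_candidates(inventory, now):
        print(candidate.resource_id)
```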

Salzen Cloud’s Approach to Cost Optimization

At Salzen Cloud, our experts help you take a strategic approach to cost control without sacrificing cloud capabilities. We offer:

Detailed cost audits and recommendations

Implementation of monitoring and auto-scaling tools

Infrastructure as Code (IaC) templates for consistent and efficient provisioning

Automation pipelines for cleanup and resource scheduling

Training and policy development to embed cost-awareness into your DevOps workflows

Final Thoughts

Cloud cost optimization is not a one-time event—it’s an ongoing process of visibility, accountability, and continuous improvement. With the right tools, strategies, and cultural mindset, your organization can maximize ROI while maintaining performance, reliability, and innovation.

Salzen Cloud empowers businesses to gain full control over their cloud expenses and grow with confidence. Ready to reduce costs without compromise? Let’s optimize your cloud together.


Cloud Computing for Programmers

Cloud computing has revolutionized how software is built, deployed, and scaled. As a programmer, understanding cloud services and infrastructure is essential to creating efficient, modern applications. In this guide, we’ll explore the basics and benefits of cloud computing for developers.

What is Cloud Computing?

Cloud computing allows you to access computing resources (servers, databases, storage, etc.) over the internet instead of owning physical hardware. Major cloud providers include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Key Cloud Computing Models

IaaS (Infrastructure as a Service): Provides virtual servers, storage, and networking (e.g., AWS EC2, Azure VMs)

PaaS (Platform as a Service): Offers tools and frameworks to build applications without managing servers (e.g., Heroku, Google App Engine)

SaaS (Software as a Service): Cloud-hosted apps accessible via browser (e.g., Gmail, Dropbox)

Why Programmers Should Learn Cloud

Deploy apps quickly and globally

Scale applications with demand

Use managed databases and storage

Integrate with AI, ML, and big data tools

Automate infrastructure with DevOps tools

Popular Cloud Services for Developers

AWS: EC2, Lambda, S3, RDS, DynamoDB

Azure: App Services, Functions, Cosmos DB, Blob Storage

Google Cloud: Compute Engine, Cloud Run, Firebase, BigQuery

Common Use Cases

Hosting web and mobile applications

Serverless computing for microservices

Real-time data analytics and dashboards

Cloud-based CI/CD pipelines

Machine learning model deployment

Getting Started with the Cloud

Create an account with a cloud provider (AWS, Azure, GCP)

Start with a free tier or sandbox environment

Launch your first VM or web app

Use the provider’s CLI or SDK to deploy code

Monitor usage and set up billing alerts

Example: Deploying a Node.js App on Heroku (PaaS)

    # Step 1: Log in (assumes the Heroku CLI is already installed)
    heroku login

    # Step 2: Create a new Heroku app
    heroku create my-node-app

    # Step 3: Deploy your code
    git push heroku main

    # Step 4: Open your app
    heroku open

Tools and Frameworks

Docker: Containerize your apps for portability

Kubernetes: Orchestrate containers at scale

Terraform: Automate cloud infrastructure with code

CI/CD tools: GitHub Actions, Jenkins, GitLab CI

Security Best Practices

Use IAM roles and permissions

Encrypt data at rest and in transit

Enable firewalls and VPCs

Regularly update dependencies and monitor threats

Conclusion

Cloud computing enables developers to build powerful, scalable, and reliable software with ease. Whether you’re developing web apps, APIs, or machine learning services, cloud platforms provide the tools you need to succeed in today’s tech-driven world.


Hosting Options for Full Stack Applications: AWS, Azure, and Heroku

Introduction

When deploying a full-stack application, choosing the right hosting provider is crucial. AWS, Azure, and Heroku offer different hosting solutions tailored to various needs. This guide compares these platforms to help you decide which one is best for your project.

1. Key Considerations for Hosting

Before selecting a hosting provider, consider:

✅ Scalability: Can the platform handle growth?

✅ Ease of Deployment: How simple is it to deploy and manage apps?

✅ Cost: What is the pricing structure?

✅ Integration: Does it support your technology stack?

✅ Performance & Security: Does it offer global availability and robust security?

2. AWS (Amazon Web Services)

Overview

AWS is a cloud computing giant that offers extensive services for hosting and managing applications.

Key Hosting Services

🚀 EC2 (Elastic Compute Cloud): Virtual servers for hosting web apps

🚀 Elastic Beanstalk: PaaS for easy deployment

🚀 AWS Lambda: Serverless computing

🚀 RDS (Relational Database Service): Managed databases (MySQL, PostgreSQL, etc.)

🚀 S3 (Simple Storage Service): File storage for web apps

Pros & Cons

✔️ Highly scalable and flexible

✔️ Pay-as-you-go pricing

✔️ Integration with DevOps tools

❌ Can be complex for beginners

❌ Requires manual configuration

Best For: Large-scale applications, enterprises, and DevOps teams.

3. Azure (Microsoft Azure)

Overview

Azure provides cloud services with seamless integration for Microsoft-based applications.

Key Hosting Services

🚀 Azure Virtual Machines: Virtual servers for custom setups

🚀 Azure App Service: PaaS for easy app deployment

🚀 Azure Functions: Serverless computing

🚀 Azure SQL Database: Managed database solutions

🚀 Azure Blob Storage: Cloud storage for apps

Pros & Cons

✔️ Strong integration with Microsoft tools (e.g., VS Code, .NET)

✔️ High availability with global data centers

✔️ Enterprise-grade security

❌ Can be expensive for small projects

❌ Learning curve for advanced features

Best For: Enterprise applications, .NET-based applications, and Microsoft-centric teams.

4. Heroku

Overview

Heroku is a developer-friendly PaaS that simplifies app deployment and management.

Key Hosting Features

🚀 Heroku Dynos: Containers that run applications

🚀 Heroku Postgres: Managed PostgreSQL databases

🚀 Heroku Redis: In-memory caching

🚀 Add-ons Marketplace: Extensions for monitoring, security, and more

Pros & Cons

✔️ Easy to use and deploy applications

✔️ Managed infrastructure (scaling, security, monitoring)

✔️ Low-cost entry plans for small projects (Heroku discontinued its free tier in November 2022)

❌ Limited customization compared to AWS & Azure

❌ Can get expensive for large-scale apps

Best For: Startups, small-to-medium applications, and developers looking for quick deployment.

5. Comparison Table

| Feature | AWS | Azure | Heroku |
| --- | --- | --- | --- |
| Scalability | High | High | Medium |
| Ease of Use | Complex | Moderate | Easy |
| Pricing | Pay-as-you-go | Pay-as-you-go | Fixed plans |
| Best For | Large-scale apps, enterprises | Enterprise apps, Microsoft users | Startups, small apps |
| Deployment | Manual setup, automated pipelines | Integrated DevOps | One-click deploy |

6. Choosing the Right Hosting Provider

✅ Choose AWS for large-scale, high-performance applications.

✅ Choose Azure for Microsoft-centric projects.

✅ Choose Heroku for quick, hassle-free deployments.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/


How to Integrate PowerApps with Azure AI Search Services

In today’s digital world, businesses require efficient and intelligent search functionalities within their applications. Microsoft PowerApps, a low-code development platform, allows users to build powerful applications, while Azure AI Search Services enhances data retrieval with AI-driven search capabilities. By integrating PowerApps with Azure AI Search, organizations can optimize their applications for better search performance, user experience, and data accessibility.

This article provides a step-by-step guide on how to integrate PowerApps with Azure AI Search Services to create an intelligent and responsive search solution.

Prerequisites

Before starting, ensure you have the following:

A Microsoft Azure account and subscription

An Azure AI Search service instance

A PowerApps environment set up

A data source (SQL Database, Cosmos DB, or Blob Storage) indexed in Azure AI Search.

Step 1: Set Up Azure AI Search

Create an Azure AI Search Service

Sign in to the Azure Portal and search for “Azure AI Search.”

Click Create and configure settings such as subscription, resource group, and pricing tier.

Choose a service name and location, then click Review + Create to deploy the service.

Create and Populate an Index

In your Azure AI Search service, navigate to Indexes and click Add Index.

Define the necessary fields, including ID, Title, Description, and other relevant attributes.

Navigate to Data Sources and select the source you want to index (SQL, Blob Storage, etc.).

Set up an Indexer to populate the index automatically and keep it updated.

Once the index is created and populated, you can query it using REST API endpoints.

Step 2: Create a Custom Connector in PowerApps

To connect PowerApps with Azure AI Search, a custom connector is required to communicate with the search API.

Set Up a Custom Connector

Open PowerApps and navigate to Custom Connectors.

Click New Custom Connector and select Create from Blank.

Provide a connector name and continue to the configuration page.

Configure API Connection

Enter the Base URL of your Azure AI Search service

Select API Key Authentication and enter the Azure AI Search Admin Key found in the Azure portal under the Keys section.

Define API Actions

Click Add Action and configure it as follows:

Verb: GET

Endpoint URL: /indexes/{index-name}/docs?api-version=2023-07-01-Preview&search={search-text}

Define request parameters such as index-name and search-text.

Save and test the connection to ensure it retrieves data from Azure AI Search.
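Before wiring up the connector, it can help to see the request it will send. The following Python sketch assembles the same GET request outside PowerApps, using the endpoint path and API version from the action above. The service name, index name, and key are placeholders, and the function only builds the URL and headers without sending anything.

```python
from urllib.parse import quote, urlencode

API_VERSION = "2023-07-01-Preview"  # the version used in the endpoint URL above


def build_search_request(service_name: str, index_name: str,
                         search_text: str, api_key: str):
    """Return (url, headers) for a simple query against an Azure AI Search index."""
    base_url = f"https://{service_name}.search.windows.net"
    query = urlencode({"api-version": API_VERSION, "search": search_text})
    url = f"{base_url}/indexes/{quote(index_name, safe='')}/docs?{query}"
    # Azure AI Search expects the key in the "api-key" request header.
    headers = {"api-key": api_key, "Accept": "application/json"}
    return url, headers


if __name__ == "__main__":
    # "my-search", "products", and "<your-key>" are placeholders.
    url, headers = build_search_request("my-search", "products", "blob storage", "<your-key>")
    print(url)
```

Sending the request with any HTTP client returns JSON with the matching documents, which is the payload the gallery will later display. For read-only queries like this, a query key is normally sufficient, so it may be worth using one in place of the admin key.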

Step 3: Integrate PowerApps with Azure AI Search

Add the Custom Connector to PowerApps

Open your PowerApps Studio and create a Canvas App.

Navigate to Data and add the newly created Custom Connector.

Implement Search Functionality

Insert a Text Input field where users can enter search queries.

Add a Button labeled "Search."

Insert a Gallery Control to display search results.

Step 4: Test and Deploy

After setting up the integration, test the app by entering search queries and verifying that results are retrieved from Azure AI Search. If necessary, refine the search logic and adjust index configurations.

Once satisfied with the functionality, publish and share the PowerApps application with users.

Benefits of PowerApps Azure AI Search Integration

Enhanced Search Performance: AI-driven search provides fast and accurate results.

Scalability: Supports large datasets with minimal performance degradation.

Customization: Allows tailored search functionalities for different business needs.

Improved User Experience: Enables intelligent and context-aware search results.

Conclusion

Integrating PowerApps with Azure AI Search Services is a powerful way to enhance application functionality with AI-driven search capabilities. This step-by-step guide provides the necessary steps to set up and configure both platforms, allowing you to create efficient and intelligent search applications.

By leveraging the power of Azure AI Search, PowerApps users can significantly improve data accessibility and user experience, making applications more intuitive and efficient. Start integrating today to unlock the full potential of your applications!

Visualpath is the Leading and Best Institute for learning in Hyderabad. We provide PowerApps and Power Automate Training. You will get the best course at an affordable cost.

Call on – +91-7032290546

Visit: https://www.visualpath.in/online-powerapps-training.html

#PowerApps Training #Power Automate Training #PowerApps Training in Hyderabad #PowerApps Online Training #Power Apps Power Automate Training #PowerApps and Power Automate Training #Microsoft PowerApps Training Courses #PowerApps Online Training Course #PowerApps Training in Chennai #PowerApps Training in Bangalore #PowerApps Training in India #PowerApps Course In Ameerpet


What Is Azure Blob Storage? And Azure Blob Storage Cost

Microsoft Azure Blob Storage

Scalable, highly secure, and cost-effective cloud object storage

Highly secure and scalable object storage for high-performance computing, archiving, data lakes, cloud-native workloads, and machine learning.

What is Azure Blob Storage?

Microsoft’s cloud-based object storage solution is called Blob Storage. It is optimized for storing massive volumes of unstructured data. Unstructured data, such as text or binary data, is data that does not adhere to a particular data model or definition.

Scalable storage and retrieval of unstructured data

Azure Blob Storage offers storage for developing robust cloud-native and mobile apps, as well as assistance in creating data lakes for your analytics requirements. For your long-term data, use tiered storage to minimize expenses, and scale up flexibly for tasks including high-performance computing and machine learning.

Build robust cloud-native apps

Azure Blob Storage was designed from the ground up to meet the availability, security, and scale demands of cloud-native, web, and mobile application developers. Use it as a foundation for serverless architectures such as Azure Functions. According to Microsoft, Blob Storage offers a premium, SSD-based object storage tier for low-latency and interactive applications, and it supports the most widely used development frameworks, including Java, .NET, Python, and Node.js.

Store petabytes of data cost-effectively

Store enormous volumes of infrequently or rarely accessed data cost-effectively with multiple storage tiers and automated lifecycle management. Azure Blob Storage can replace your tape archives, and you won’t have to worry about migrating between hardware generations.

Build robust data lakes

One of the most affordable and scalable data lake options for big data analytics is Azure Data Lake Storage. It helps you accelerate your time to insight by fusing the strength of a high-performance file system with enormous scalability and economy. Data Lake Storage is tailored for analytics workloads and expands the possibilities of Azure Blob Storage.

Scale out for billions of IoT devices or scale up for HPC

Azure Blob Storage offers the volume required to enable storage for billions of data points coming in from IoT endpoints while also satisfying the rigorous, high-throughput needs of HPC applications.

Features

Scalable, robust, and accessible

Designed for sixteen nines of durability with geo-replication, with the flexibility to scale as needed.

Secure

Authentication with Microsoft Entra ID (previously Azure Active Directory), role-based access control (RBAC), encryption at rest, and advanced threat protection.

Optimized for data lakes

A file namespace and multi-protocol access support analytics workloads for data insights.

Comprehensive data management

End-to-end lifecycle management, policy-based access control, and immutable (WORM) storage.

Built-in security and compliance

Every year, Microsoft spends about $1 billion on cybersecurity research and development.

Over 3,500 security professionals who are committed to data security and privacy work for it.

Azure boasts one of the biggest portfolios of compliance certifications in the sector.

Azure Blob storage cost

Block blob storage is used for streaming and storing documents, videos, images, backups, and other unstructured text or binary data.

Blob storage accounts provide access to the latest features, but they do not support page blobs, files, queues, or tables. General-purpose v2 storage accounts are recommended for most users.

Block blob storage’s overall cost is determined by:

Volume of data stored per month.

Number and types of operations performed, along with any data transfer costs.

Data redundancy option selected.
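These cost drivers can be combined into a rough monthly estimate. The sketch below is only an illustration: every price in it is a made-up placeholder, not an Azure rate. It assumes operations are priced per 10,000 and that the redundancy choice is already reflected in the per-GB storage price you pass in.

```python
def estimate_monthly_cost(stored_gb: float, price_per_gb: float,
                          write_ops: int, read_ops: int,
                          write_price_per_10k: float, read_price_per_10k: float,
                          egress_gb: float = 0.0,
                          egress_price_per_gb: float = 0.0) -> float:
    """Rough monthly block blob cost: storage + operations + data transfer."""
    storage = stored_gb * price_per_gb                    # depends on tier and redundancy
    operations = (write_ops / 10_000) * write_price_per_10k \
        + (read_ops / 10_000) * read_price_per_10k        # billed per 10,000 operations
    transfer = egress_gb * egress_price_per_gb            # outbound data transfer
    return storage + operations + transfer


if __name__ == "__main__":
    # All prices below are hypothetical placeholders.
    total = estimate_monthly_cost(
        stored_gb=1_000, price_per_gb=0.02,
        write_ops=100_000, read_ops=1_000_000,
        write_price_per_10k=0.05, read_price_per_10k=0.004,
        egress_gb=10, egress_price_per_gb=0.08,
    )
    print(round(total, 2))
```

Running the same inputs with the per-GB and per-operation prices of a cooler tier shows the usual trade-off: storage gets cheaper while reads and writes get more expensive.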

Flexible pricing with reserved options to meet your cloud storage needs

Depending on how frequently you expect to access your data, you can choose among several storage tiers. Keep frequently accessed data in Hot, infrequently accessed data in Cool and Cold, performance-sensitive data in Premium, and rarely accessed data in Archive. You can save significantly by reserving storage capacity.
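The tier guidance above can be turned into a simple rule of thumb. In this sketch the 30, 90, and 180 day cut-offs are assumptions chosen to mirror the minimum retention periods of the Cool, Cold, and Archive tiers; they are not an official recommendation, and real decisions should also weigh retrieval costs and latency.

```python
def suggest_access_tier(days_since_last_access: int,
                        needs_low_latency: bool = False) -> str:
    """Suggest a Blob Storage tier from how recently the data was accessed."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")
    if needs_low_latency:
        return "Premium"   # performance-sensitive data
    if days_since_last_access < 30:
        return "Hot"       # frequently accessed
    if days_since_last_access < 90:
        return "Cool"      # infrequently accessed
    if days_since_last_access < 180:
        return "Cold"      # accessed even less often
    return "Archive"       # rarely accessed; must be rehydrated before reading


if __name__ == "__main__":
    for days in (5, 45, 120, 400):
        print(days, suggest_access_tier(days))
```

Two caveats apply. Archive data is offline and can take hours to rehydrate. Premium is chosen when the storage account is created, so it is not a tier you can move existing blobs into later.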

Once your initial credit is used up, move to pay-as-you-go to keep building with the same free services. You pay only if your monthly usage exceeds the free amounts.

After 12 months, you continue to get more than 55 services at no cost, and you are charged only for what you use beyond the free monthly amounts.

Read more on Govindhtech.com

#AzureBlobStorage #BlobStorage #machinelearning #Cloudcomputing #cloudstorage #DataLakeStorage #datasecurity #News #Technews #Technology #Technologynews #Technologytrends #govindhtech


Where to learn cloud computing for free?

💡 Where to Learn Cloud Computing for Free? Your First Steps in 2025

So you’ve heard about cloud computing. Maybe you’ve seen the job titles—Cloud Engineer, DevOps Specialist, AWS Associate—or heard stories of people getting hired after just a few months of learning.

Naturally, you wonder: "Can I learn cloud computing for free?"

Yes, you can. But you need to know where to look, and what free learning can (and can’t) do for your career.

☁️ What Can You Learn for Free?

Free cloud resources help you understand:

What cloud computing is

How AWS, Azure, or GCP platforms work

Basic terminology: IaaS, SaaS, EC2, S3, VPC, IAM

Free-tier services (you can try cloud tools without being charged)

Entry-level certification concepts

🌐 Best Places to Learn Cloud for Free (Legit Sources)

✅ AWS Free Training & AWS Skill Builder

📍 https://aws.amazon.com/training

Learn cloud basics with self-paced videos

Good for AWS Cloud Practitioner prep

Access real AWS Console via the free tier

✅ Microsoft Learn – Azure

📍 https://learn.microsoft.com/en-us/training

Offers free Azure paths for beginners

Gamified learning experience

Ideal for preparing for AZ-900

✅ Google Cloud Skills Boost

📍 https://cloud.google.com/training

Interactive quests and labs

Learn Compute Engine, IAM, BigQuery

Some credits may be needed for advanced labs

✅ YouTube Channels

FreeCodeCamp: Full cloud crash courses

Simplilearn, Edureka, NareshIT: Basic tutorials

AWS, Azure official channels: Real demos

✅ GitHub Repos & Blogs

Open-source lab guides

Resume projects (e.g., deploy a website on AWS)

Real-world practice material

⚠️ But Wait—Here’s What Free Learning Misses

While free content is great to start, most learners eventually hit a wall:

❌ No structured syllabus

❌ No mentor to answer questions

❌ No feedback on real projects

❌ No resume guidance or placement support

❌ Certification confusion (what to take, when, why?)

That’s where formal, hands-on training can make the difference—especially if you want to get hired.

🎓 Want to Learn Faster, Smarter? Try NareshIT’s Cloud Courses

At NareshIT, we’ve helped over 100,000 learners start their cloud journey—with or without a tech background.

We bridge the gap between free concepts and job-ready skills.

🔹 AWS Cloud Beginner Program

Duration: 60 Days

Covers EC2, IAM, S3, Lambda

Includes: AWS Cloud Practitioner & Associate exam prep

Ideal For: Freshers, support engineers, and non-coders

🔹 Azure Fundamentals Course

Duration: 45 Days

Learn VMs, Azure AD, Blob Storage, and DevOps basics

Prepares you for AZ-900 and AZ-104 certifications

Best For: IT admins and .NET developers

🔹 Google Cloud (GCP) Basics

Duration: 30 Days

Practice labs + GCP Associate Cloud Engineer training

Perfect for: Python devs, data learners, AI enthusiasts

📅 View all cloud training batches at NareshIT

👣 Final Words: Start Free. Scale Smart.

If you’re serious about cloud, there’s no shame in starting with free videos or cloud tutorials. That’s how many great engineers begin.

But when you’re ready to:

Work on real projects

Earn certifications

Prepare for interviews

Get career guidance

Then it’s time to consider a guided course like the ones at NareshIT.

📌 Explore new batches →


Smart Cloud Cost Optimization: Reducing Expenses Without Sacrificing Performance

As businesses scale their cloud infrastructure, cost optimization becomes a critical priority. Many organizations struggle to balance cost efficiency with performance, security, and scalability. Without a strategic approach, cloud expenses can spiral out of control.

This blog explores key cost optimization strategies to help businesses reduce cloud spending without compromising performance—ensuring an efficient, scalable, and cost-effective cloud environment.

Why Cloud Cost Optimization Matters

Cloud services provide on-demand scalability, but improper management can lead to wasteful spending. Some common cost challenges include:

❌ Overprovisioned resources leading to unnecessary expenses.

❌ Unused or underutilized instances wasting cloud budgets.

❌ Lack of visibility into spending patterns and cost anomalies.

❌ Poorly optimized storage and data transfer costs.

A proactive cost optimization strategy ensures businesses pay only for what they need while maintaining high availability and performance.

Key Strategies for Cloud Cost Optimization

1. Rightsize Compute Resources

One of the biggest sources of cloud waste is overprovisioned instances. Businesses often allocate more CPU, memory, or storage than necessary.

✅ Use auto-scaling to adjust resources dynamically based on demand.

✅ Leverage rightsizing tools (AWS Compute Optimizer, Azure Advisor, Google Cloud Recommender).

✅ Monitor CPU, memory, and network usage to identify underutilized instances.

🔹 Example: Switching from an overprovisioned EC2 instance to a smaller instance type or serverless computing can cut costs significantly.
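As a rough illustration of the monitoring step above, here is a minimal Python sketch that flags instances whose average CPU utilization falls below a threshold. The instance names, the sample values, and the 20% threshold are all assumptions made for this example; in practice the numbers would come from your monitoring tool, and a rightsizing decision should also weigh memory and network usage.

```python
from statistics import mean


def find_underutilized(cpu_samples, threshold_pct=20.0):
    """Return the IDs of instances whose average CPU utilization is below threshold_pct."""
    return sorted(
        instance_id
        for instance_id, samples in cpu_samples.items()
        if samples and mean(samples) < threshold_pct
    )


# Hypothetical CPU utilization samples (percent), e.g. exported from a monitoring tool.
cpu_samples = {
    "web-1": [12.0, 9.5, 14.0, 11.0],
    "batch-1": [78.0, 85.5, 91.0, 66.0],
    "cache-1": [4.0, 6.5, 5.0, 3.5],
}

underutilized = find_underutilized(cpu_samples)
print(underutilized)
```

Instances on the resulting list are candidates for a smaller instance type, not automatic downsizing targets.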

2. Implement Reserved and Spot Instances

Cloud providers offer discounted pricing models for long-term or flexible workloads:

✔️ Reserved Instances (RIs): Up to 72% savings for predictable workloads (AWS RIs, Azure Reserved VMs).

✔️ Spot Instances: Ideal for batch processing and non-critical workloads, at discounts of up to 90%.

✔️ Savings Plans: Flexible commitment-based pricing for compute services.

🔹 Example: Running batch jobs on AWS EC2 Spot Instances instead of on-demand instances significantly reduces compute costs.
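To make the arithmetic concrete, the sketch below compares the cost of a batch job at on-demand pricing with the same job at a discounted rate. The hourly rate, the number of hours, and the 70% discount are hypothetical figures chosen for illustration; real Spot discounts vary by instance type, region, and time.

```python
def batch_job_cost(hours, on_demand_rate, discount=0.0):
    """Cost of running for `hours` at an hourly rate reduced by `discount` (0.0 to 1.0)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return hours * on_demand_rate * (1.0 - discount)


# Hypothetical inputs: 200 hours of batch work at $0.10 per hour on demand,
# with an assumed 70% Spot discount.
on_demand_cost = batch_job_cost(200, 0.10)
spot_cost = batch_job_cost(200, 0.10, discount=0.70)
savings = on_demand_cost - spot_cost

print(f"On-demand: ${on_demand_cost:.2f}, Spot: ${spot_cost:.2f}, saved: ${savings:.2f}")
```

The same function works for Reserved Instance estimates by passing the committed discount instead of the Spot discount.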

3. Optimize Storage Costs

Cloud storage costs can escalate quickly if data is not managed properly.

✅ Move infrequently accessed data to low-cost storage tiers (AWS S3 Glacier, Azure Cool Blob Storage).

✅ Implement automated data lifecycle policies to delete or archive unused files.

✅ Use compression and deduplication to reduce storage footprint.

🔹 Example: Instead of storing all logs in premium storage, use tiered storage solutions to balance cost and accessibility.
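The lifecycle idea above boils down to a rule that maps how long ago data was last accessed to a storage tier. Here is a minimal sketch of such a rule in Python. The tier names and the 30-day and 180-day thresholds are assumptions for this example, not provider defaults; real lifecycle policies are configured in the storage service itself.

```python
from datetime import date


def choose_tier(last_accessed, today, cool_after_days=30, archive_after_days=180):
    """Pick a storage tier from the number of days since the data was last accessed."""
    age_days = (today - last_accessed).days
    if age_days >= archive_after_days:
        return "archive"
    if age_days >= cool_after_days:
        return "cool"
    return "hot"


# Hypothetical log files with their last-access dates.
today = date(2025, 1, 1)
tiers = {
    "app-2024-12-25.log": choose_tier(date(2024, 12, 25), today),
    "app-2024-11-01.log": choose_tier(date(2024, 11, 1), today),
    "app-2024-01-01.log": choose_tier(date(2024, 1, 1), today),
}
print(tiers)
```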

4. Reduce Data Transfer and Network Costs

Hidden data transfer fees can inflate cloud bills if not monitored.

✅ Minimize inter-region and inter-cloud data transfers to avoid high egress costs.

✅ Use content delivery networks (CDNs) (AWS CloudFront, Azure CDN) to cache frequently accessed data.

✅ Optimize API calls and batch data transfers to reduce unnecessary network usage.

🔹 Example: Hosting a website with AWS CloudFront CDN reduces bandwidth costs by caching content closer to users.

5. Automate Cost Monitoring and Governance

A lack of visibility into cloud spending can lead to uncontrolled costs.

✅ Use cost monitoring tools like AWS Cost Explorer, Azure Cost Management, and Google Cloud Billing Reports.

✅ Set up budget alerts and automated cost anomaly detection.

✅ Implement tagging policies to track costs by department, project, or application.

🔹 Example: With Salzen Cloud’s automated cost optimization solutions, businesses can track and control cloud expenses effortlessly.
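Tag-based cost tracking and budget alerts can be sketched in a few lines of plain Python. The line items, tag key, and budget figures below are hypothetical; in a real setup they would come from your provider's billing export, and the alert would be sent to a notification channel rather than printed.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum costs per value of tag_key; items without the tag are grouped as 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return the names whose total cost exceeds their budget (no budget means no alert)."""
    return sorted(
        name for name, total in totals.items()
        if total > budgets.get(name, float("inf"))
    )


# Hypothetical billing line items and monthly budgets.
line_items = [
    {"cost": 120.0, "tags": {"project": "analytics"}},
    {"cost": 80.0, "tags": {"project": "analytics"}},
    {"cost": 45.5, "tags": {"project": "web"}},
    {"cost": 10.0},
]
budgets = {"analytics": 150.0, "web": 100.0}

totals = cost_by_tag(line_items, "project")
alerts = over_budget(totals, budgets)
print(totals, alerts)
```

The "untagged" bucket is worth watching on its own, since costs that land there cannot be attributed to any team or project.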

6. Adopt Serverless and Containerization for Efficiency

Traditional VM-based architectures can be cost-intensive compared to modern alternatives.

✅ Use serverless computing (AWS Lambda, Azure Functions, Google Cloud Functions) to pay only for execution time.

✅ Adopt containers and Kubernetes for efficient resource allocation.

✅ Scale workloads dynamically using container orchestration tools like Kubernetes.

🔹 Example: Running a serverless API on AWS Lambda eliminates idle costs compared to running a dedicated EC2 instance.

How Salzen Cloud Helps Optimize Cloud Costs

At Salzen Cloud, we offer AI-driven cloud cost optimization solutions to help businesses:

✔️ Automatically detect and eliminate unused cloud resources.

✔️ Optimize compute, storage, and network costs without sacrificing performance.

✔️ Implement real-time cost monitoring and forecasting.

✔️ Apply smart scaling, reserved instance planning, and serverless strategies.

With Salzen Cloud, businesses can maximize cloud efficiency, reduce expenses, and enhance operational performance.

Final Thoughts

Cloud cost optimization is not about cutting resources—it’s about using them wisely. By rightsizing workloads, leveraging reserved instances, optimizing storage, and automating cost governance, businesses can reduce cloud expenses while maintaining high performance and security.

🔹 Looking for smarter cloud cost management? Salzen Cloud helps businesses streamline costs without downtime or performance trade-offs.

🚀 Optimize your cloud costs today with Salzen Cloud!


Azure Storage Plays The Same Role in Azure

Azure Storage is an essential service within the Microsoft Azure ecosystem, providing scalable, reliable, and secure storage solutions for a vast range of applications and data types. Whether it's storing massive amounts of unstructured data, enabling high-performance computing, or ensuring data durability, Azure Storage is the backbone that supports many critical functions in Azure.

Understanding Azure Storage is vital for anyone pursuing Azure training, Azure admin training, or Azure Data Factory training. This article explores how Azure Storage functions as the central hub of Azure services and why it is crucial for cloud professionals to master this service.

The Core Role of Azure Storage in Cloud Computing

Azure Storage plays a pivotal role in cloud computing, acting as the central hub where data is stored, managed, and accessed. Its flexibility and scalability make it an indispensable resource for businesses of all sizes, from startups to large enterprises.

Data Storage and Accessibility: Azure Storage enables users to store vast amounts of data, including text, binary data, and large media files, in a highly accessible manner. Whether it's a mobile app storing user data or a global enterprise managing vast data lakes, Azure Storage is designed to handle it all.

High Availability and Durability: Data stored in Azure is replicated across multiple locations to ensure high availability and durability. Azure offers various redundancy options, such as Locally Redundant Storage (LRS), Geo-Redundant Storage (GRS), and Read-Access Geo-Redundant Storage (RA-GRS), ensuring data is protected against hardware failures, natural disasters, and other unforeseen events.

Security and Compliance: Azure Storage is built with security at its core, offering features like encryption at rest, encryption in transit, and role-based access control (RBAC). These features ensure that data is not only stored securely but also meets compliance requirements for industries such as healthcare, finance, and government.

Integration with Azure Services: Azure Storage is tightly integrated with other Azure services, making it a central hub for storing and processing data across various applications. Whether it's a virtual machine needing disk storage, a web app requiring file storage, or a data factory pipeline ingesting and transforming data, Azure Storage is the go-to solution.

Azure Storage Services Overview

Azure Storage is composed of several services, each designed to meet specific data storage needs. These services are integral to any Azure environment and are covered extensively in Azure training and Azure admin training.

Blob Storage: Azure Blob Storage is ideal for storing unstructured data such as documents, images, and video files. It supports various access tiers, including Hot, Cool, and Archive, allowing users to optimize costs based on their access needs.

File Storage: Azure File Storage provides fully managed file shares in the cloud, accessible via the Server Message Block (SMB) protocol. It's particularly useful for lifting and shifting existing applications that rely on file shares.

Queue Storage: Azure Queue Storage is used for storing large volumes of messages that can be accessed from anywhere in the world. It’s commonly used for decoupling components in cloud applications, allowing them to communicate asynchronously.

Table Storage: Azure Table Storage offers a NoSQL key-value store for rapid development and high-performance queries on large datasets. It's a cost-effective solution for applications needing structured data storage without the overhead of a traditional database.

Disk Storage: Azure Disk Storage provides persistent, high-performance storage for Azure Virtual Machines. It supports both standard and premium SSDs, making it suitable for a wide range of workloads from general-purpose VMs to high-performance computing.

Azure Storage and Azure Admin Training

In Azure admin training, a deep understanding of Azure Storage is crucial for managing cloud infrastructure. Azure administrators are responsible for creating, configuring, monitoring, and securing storage accounts, ensuring that data is both accessible and protected.

Creating and Managing Storage Accounts: Azure admins must know how to create and manage storage accounts, selecting the appropriate performance and redundancy options. They also need to configure network settings, including virtual networks and firewalls, to control access to these accounts.

Monitoring and Optimizing Storage: Admins are responsible for monitoring storage metrics such as capacity, performance, and access patterns. Azure provides tools like Azure Monitor and Application Insights to help admins track these metrics and optimize storage usage.

Implementing Backup and Recovery: Admins must implement robust backup and recovery solutions to protect against data loss. Azure Backup and Azure Site Recovery are tools that integrate with Azure Storage to provide comprehensive disaster recovery options.

Securing Storage: Security is a top priority for Azure admins. This includes managing encryption keys, setting up role-based access control (RBAC), and ensuring that all data is encrypted both at rest and in transit. Azure provides integrated security tools to help admins manage these tasks effectively.

Azure Storage and Azure Data Factory

Azure Storage plays a critical role in the data integration and ETL (Extract, Transform, Load) processes managed by Azure Data Factory. Azure Data Factory training emphasizes the use of Azure Storage for data ingestion, transformation, and movement, making it a key component in data workflows.

Data Ingestion: Azure Data Factory often uses Azure Blob Storage as a staging area for data before processing. Data from various sources, such as on-premises databases or external data services, can be ingested into Blob Storage for further transformation.

Data Transformation: During the transformation phase, Azure Data Factory reads data from Azure Storage, applies various data transformations, and then writes the transformed data back to Azure Storage or other destinations.

Data Movement: Azure Data Factory facilitates the movement of data between different Azure Storage services or between Azure Storage and other Azure services. This capability is crucial for building data pipelines that connect various services within the Azure ecosystem.

Integration with Other Azure Services: Azure Data Factory integrates seamlessly with Azure Storage, allowing data engineers to build complex data workflows that leverage Azure Storage’s scalability and durability. This integration is a core part of Azure Data Factory training.

Why Azure Storage is Essential for Azure Training

Understanding Azure Storage is essential for anyone pursuing Azure training, Azure admin training, or Azure Data Factory training. Here's why:

Core Competency: Azure Storage is a foundational service that underpins many other Azure services. Mastery of Azure Storage is critical for building, managing, and optimizing cloud solutions.

Hands-On Experience: Azure training often includes hands-on labs that use Azure Storage in real-world scenarios, such as setting up storage accounts, configuring security settings, and building data pipelines. These labs provide valuable practical experience.

Certification Preparation: Many Azure certifications, such as the Azure Administrator Associate or Azure Data Engineer Associate, include Azure Storage in their exam objectives. Understanding Azure Storage is key to passing these certification exams.

Career Advancement: As cloud computing continues to grow, the demand for professionals with expertise in Azure Storage increases. Proficiency in Azure Storage is a valuable skill that can open doors to a wide range of career opportunities in the cloud industry.

Conclusion

Azure Storage is not just another service within the Azure ecosystem; it is the central hub that supports a wide array of applications and services. For anyone undergoing Azure training, Azure admin training, or Azure Data Factory training, mastering Azure Storage is a crucial step towards becoming proficient in Azure and advancing your career in cloud computing.

By understanding Azure Storage, you gain the ability to design, deploy, and manage robust cloud solutions that can handle the demands of modern businesses. Whether you are a cloud administrator, a data engineer, or an aspiring Azure professional, Azure Storage is a key area of expertise that will serve as a strong foundation for your work in the cloud.


Tips for improving pipeline execution speed and cost-efficiency.

Improving pipeline execution speed and cost-efficiency in Azure Data Factory (ADF) or any ETL/ELT workflow involves optimizing data movement, transformation, and resource utilization. Here are some key strategies:

Performance Optimization Tips

Use the Right Integration Runtime (IR)

Use Azure IR for cloud-based operations.

Use Self-Hosted IR for on-premises data movement and hybrid scenarios.

Scale out IR by increasing node count for better performance.

Optimize Data Movement

Use staged copy (e.g., from on-premises to Azure Blob before loading into SQL).

Enable parallel copy for large datasets.

Use compression and column pruning to reduce data transfer size.

Optimize Data Transformations

Use push-down computations in Azure Synapse, SQL, or Snowflake instead of ADF Data Flows.

Use partitioning in Data Flows to process data in chunks.

Leverage cache in Data Flows to reuse intermediate results.

Reduce Pipeline Execution Time

Optimize pipeline dependencies using concurrency and parallelism.

Use Lookups efficiently — avoid fetching large datasets.

Minimize the number of activities in a pipeline.

Use Delta Processing Instead of Full Loads

Implement incremental data loads using watermark columns (e.g., last modified timestamp).

Use Change Data Capture (CDC) in supported databases to track changes.
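The watermark approach to incremental loads can be sketched as follows. This is a simplified in-memory illustration, not ADF code: the row structure and the modified_at column name are assumptions, and a real pipeline would push the filter down to the source query and persist the watermark between runs.

```python
from datetime import datetime


def incremental_load(rows, last_watermark):
    """Return the rows modified after last_watermark, plus the new watermark value."""
    changed = [row for row in rows if row["modified_at"] > last_watermark]
    new_watermark = max((row["modified_at"] for row in changed), default=last_watermark)
    return changed, new_watermark


# Hypothetical source rows with a last-modified timestamp column.
rows = [
    {"id": 1, "modified_at": datetime(2024, 12, 31, 8, 0)},
    {"id": 2, "modified_at": datetime(2025, 1, 2, 9, 30)},
    {"id": 3, "modified_at": datetime(2025, 1, 3, 17, 45)},
]

# First run picks up only the rows changed since the stored watermark.
changed, watermark = incremental_load(rows, datetime(2025, 1, 1))
print([row["id"] for row in changed], watermark)

# A second run with the updated watermark finds nothing new to load.
changed_again, watermark_again = incremental_load(rows, watermark)
print(changed_again, watermark_again)
```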

Cost-Efficiency Tips

Optimize Data Flow Execution

Choose the right compute size for Data Flows (low for small datasets, high for big data).

Reduce execution time to avoid unnecessary compute costs.

Use debug mode wisely to avoid extra billing.

Monitor & Tune Performance

Use Azure Monitor and Log Analytics to track pipeline execution time and bottlenecks.

Set up alerts and auto-scaling for self-hosted IR nodes.

Leverage Serverless and Pay-As-You-Go Models

Use Azure Functions or Databricks for certain transformations instead of Data Flows.

Utilize reserved instances or spot pricing for cost savings.

Reduce Storage and Data Transfer Costs

Store intermediate results in low-cost storage (e.g., Azure Blob Storage Hot/Cool tier).

Minimize data movement across regions to reduce egress charges.

Automate Pipeline Execution Scheduling

Use event-driven triggers instead of fixed schedules to reduce unnecessary runs.

Consolidate multiple pipelines into fewer, more efficient workflows.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Cloud Computing Programming: Harnessing the Power of the Cloud

Cloud computing has revolutionized the way businesses and developers approach computing resources. It provides on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. Cloud computing programming involves writing code that runs on cloud platforms, leveraging their scalable and flexible infrastructure. This article explores the basics of cloud computing programming, its benefits, and how to get started with courses that teach this valuable skill.

What is Cloud Computing Programming?

Cloud computing programming refers to the process of developing software applications that run on cloud environments. This includes utilizing services such as computing power, storage, databases, networking, and analytics provided by cloud service providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Key Concepts in Cloud Computing Programming

Scalability: One of the primary advantages of cloud computing is the ability to scale resources up or down based on demand. This means applications can handle varying loads without significant changes to the underlying infrastructure.

Flexibility: Cloud platforms offer a wide range of services and configurations, allowing developers to choose the best tools for their specific needs. This flexibility speeds up development and deployment processes.

Cost Efficiency: With pay-as-you-go pricing models, cloud computing can reduce costs as businesses only pay for what they use. This eliminates the need for significant upfront investment in hardware.

Global Reach: Cloud providers have data centers around the world, enabling applications to serve a global audience with low latency and high availability.

Getting Started with Cloud Computing Programming

To start with cloud computing programming, it's essential to familiarize yourself with at least one major cloud platform. Here are some steps to guide you:

Choose a Cloud Platform: AWS, Azure, and GCP are the most popular cloud platforms. Each has its own set of services and tools. Starting with one of these platforms will give you a strong foundation.

Learn the Basics: Understand core cloud services such as virtual machines (EC2 in AWS, Compute Engine in GCP, Virtual Machines in Azure), storage (S3 in AWS, Blob Storage in Azure, Cloud Storage in GCP), and databases (RDS in AWS, SQL Database in Azure, Cloud SQL in GCP).

Hands-on Practice: Most cloud providers offer free tiers or trial credits. Use these to practice deploying and managing resources. Try building simple applications and gradually move to more complex projects.

Understand DevOps: Familiarize yourself with DevOps practices, as cloud programming often involves deploying and managing applications in a continuous integration/continuous deployment (CI/CD) pipeline.

Courses to Learn Cloud Computing Programming

AWS Certified Developer - Associate: This course provides a comprehensive overview of developing applications on AWS, including best practices and design patterns. Available on platforms like Coursera and Udemy.

Microsoft Azure Developer Certification: Offered by Microsoft, this certification track includes courses that cover building, testing, and maintaining cloud applications on Azure.

Google Cloud Professional Cloud Developer: Google offers this course for developers looking to build scalable and efficient applications on GCP. It includes hands-on labs and projects.

Coursera's "Cloud Computing Specialization": This specialization, offered by the University of Illinois, covers cloud computing concepts, infrastructure, and services, with a focus on developing cloud-native applications.

edX's "Introduction to Cloud Computing": Provided by IBM, this course covers the fundamental concepts of cloud computing and includes practical exercises on IBM Cloud.

Conclusion

Cloud computing programming is an essential skill for modern developers, offering the ability to build scalable, flexible, and cost-efficient applications. By understanding the core concepts and gaining hands-on experience with major cloud platforms, developers can leverage the full power of the cloud. Enrolling in targeted courses can accelerate the learning process and provide the necessary knowledge and skills to excel in this dynamic field.


Azure Data Engineer Course | Azure Data Engineer Training

Azure data distribution and partitions

When it comes to distributing data and managing partitions in Azure, you're typically dealing with services like Azure SQL Database, Azure Cosmos DB, Azure Data Lake Storage, or Azure Blob Storage.


Azure SQL Database: In Azure SQL Database, you can distribute data across multiple databases using techniques like sharding or horizontal partitioning. You can also leverage Azure SQL Elastic Database Pools for managing resources across multiple databases. Additionally, Azure SQL Database provides built-in support for partitioning tables, which allows you to horizontally divide your table data into smaller, more manageable pieces.


Azure Cosmos DB: Azure Cosmos DB is a globally distributed, multi-model database service. It can replicate data across the regions you choose and provides tunable consistency levels. Within each container, Cosmos DB partitions data by a partition key that you select, and throughput can be provisioned at the database or container level, so storage and throughput scale independently.

Azure Data Lake Storage: Azure Data Lake Storage supports the concept of hierarchical namespaces and allows you to organize your data into directories and folders. You can distribute data across multiple storage accounts for scalability and performance. Additionally, you can use techniques like partitioning and file formats optimized for big data processing, such as Parquet or ORC, to improve query performance.

Azure Blob Storage: Azure Blob Storage provides object storage for a wide variety of unstructured data, including images, videos, and more. You can distribute data across multiple storage accounts and containers for scalability and fault tolerance. Access tiers (Hot, Cool, Archive) manage storage cost rather than partitioning, while features such as blob index tags help you find blobs by key-value tags without listing whole containers.

In all of these Azure services, distributing data and managing partitions are essential for achieving scalability, performance, and fault tolerance in your applications. Depending on your specific use case and requirements, you can choose the appropriate Azure service and partitioning strategy to optimize data distribution and query performance.
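Two of the techniques mentioned above, sharding by key and partitioned folder layouts in a data lake, can be sketched in plain Python. This is an illustrative sketch under stated assumptions rather than an Azure API: the function names, the shard count, and the year/month/day folder convention are choices made for this example.

```python
import hashlib
from datetime import date


def shard_for(key, shard_count):
    """Map a key to a shard index using a stable hash, so a key always lands on the same shard."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def partition_path(root, event_date, filename):
    """Build a Hive-style partitioned path (year=/month=/day=) for a data lake file."""
    return (
        f"{root}/year={event_date.year:04d}"
        f"/month={event_date.month:02d}"
        f"/day={event_date.day:02d}/{filename}"
    )


# Hypothetical customer key routed to one of four database shards.
shard = shard_for("customer-42", 4)
print(shard)

# Hypothetical Parquet file placed under a date-partitioned folder.
path = partition_path("sales", date(2024, 3, 5), "part-0001.parquet")
print(path)
```

A stable hash (rather than Python's built-in hash, which is randomized per process for strings) keeps the routing consistent across runs. Date-based folders let query engines skip partitions that a filter rules out.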

Visualpath is the Best Software Online Training Institute in Hyderabad. Avail complete Azure Data Engineer Training worldwide. You will get the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

WhatsApp: https://www.whatsapp.com/catalog/919989971070

Visit https://visualpath.in/azure-data-engineer-online-training.html


BigLake Tables: Future of Unified Data Storage And Analytics

Introduction to BigLake external tables

This article introduces BigLake and assumes familiarity with database tables and Identity and Access Management (IAM). To query data in a supported data store, first create BigLake tables and then query them using GoogleSQL:

Create Cloud Storage BigLake tables and query them.

Create Amazon S3 BigLake tables and query them.

Create Azure Blob Storage BigLake tables and query them.

BigLake tables let you query structured data in external data stores with access delegation. Access delegation decouples access to the BigLake table from access to the underlying data store. The connection to the data store is made through an external connection associated with a service account. Because the service account retrieves the data from the data store, users only need access to the BigLake table. This lets you enforce fine-grained security at the table level, including row-level and column-level security. Dynamic data masking is also available for BigLake tables based on Cloud Storage. For multi-cloud analytics that combine BigLake tables with Amazon S3 or Blob Storage data, see BigQuery Omni.

Temporary table support

BigLake tables based on Cloud Storage can be temporary or permanent.

BigLake tables based on Amazon S3 or Blob Storage must be permanent.

Multiple source files

You can create a BigLake table from multiple external data sources, provided they share the same schema.

Cross-cloud joins

Cross-cloud joins let you run queries that span Google Cloud and BigQuery Omni regions. A GoogleSQL JOIN can analyze data across AWS, Azure, public datasets, and other Google Cloud services. Cross-cloud joins remove the need to copy data between sources before running a query.

You can reference a BigLake table in a SELECT statement like any other BigQuery table, including in DML and DDL statements that use subqueries to retrieve data. A single query can combine BigLake tables from different clouds with BigQuery tables. All BigQuery tables in the query must be in the same region.
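To show roughly what such a query looks like, the Python sketch below assembles a GoogleSQL statement that joins a BigQuery table with a BigLake table. Every table, column, and filter name here is hypothetical, and the helper function is an illustration rather than part of any BigQuery library.

```python
def cross_cloud_join_sql(local_table, remote_table, join_column, columns, remote_filter):
    """Build a GoogleSQL query that joins a BigQuery table with a BigLake table.

    Identifiers are interpolated directly into the string, so pass trusted values only.
    """
    select_list = ", ".join(columns)
    return (
        f"SELECT {select_list}\n"
        f"FROM `{local_table}` AS l\n"
        f"JOIN `{remote_table}` AS r\n"
        f"  ON l.{join_column} = r.{join_column}\n"
        f"WHERE {remote_filter}"
    )


# Hypothetical names: a BigQuery table joined with a BigLake table over Amazon S3 data.
sql = cross_cloud_join_sql(
    local_table="myproject.hr.departments",
    remote_table="myproject.aws_dataset.employees",
    join_column="department_id",
    columns=["l.department_name", "r.employee_name"],
    remote_filter="r.level > 3",
)
print(sql)
```

Selecting explicit columns instead of SELECT * avoids duplicate column names in the result. The resulting string could then be submitted with whichever BigQuery client you use.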

Required permissions for cross-cloud joins

Ask your administrator to grant you the BigQuery Data Editor (roles/bigquery.dataEditor) IAM role on the project where the cross-cloud join runs. For details on granting roles, see Manage project, folder, and organization access.

Cross-cloud join costs

BigQuery splits a cross-cloud join query into local and remote parts. The local part is treated as a regular query. The remote part is converted into a CREATE TABLE AS SELECT (CTAS) operation on the referenced BigLake table in the BigQuery Omni region, which creates a temporary BigQuery table. BigQuery uses this temporary table to run your cross-cloud join and deletes it after eight hours.

Data transfer costs apply to the data moved from BigLake tables. BigQuery reduces these costs by transferring only the BigLake table columns and rows that the query references. Google Cloud recommends making column filters as narrow as possible to reduce transfer costs further. The CTAS job appears in your job history and shows the number of bytes transferred. A successful transfer incurs costs even if the main query fails.

For example, a query that joins an employees table (filtered on level) with an active employees table involves two transfers, one from each table. BigQuery performs the join after the transfers complete. If one transfer succeeds and the other fails, the successful transfer still incurs data transfer costs.

Cross-cloud join limitations

Cross-cloud joins aren't supported in the BigQuery free tier or in the BigQuery sandbox.

Aggregations might not be pushed down to the BigQuery Omni regions if the query contains JOIN statements.

Each temporary table is used for a single cross-cloud query and isn't reused, even if the same query is repeated.

Each transfer is limited to 60 GB. That is, the result of filtering a BigLake table and loading it must be smaller than 60 GB. You can request a higher quota if needed. There is no limit on scanned bytes.

Cross-cloud join queries are subject to an internal rate limit. If the query rate exceeds the quota, you might get an "All our servers are busy processing data sent between regions" error. Retrying the query usually works. To support a higher query rate, ask support to increase the internal quota.

Cross-cloud joins are supported only between BigQuery regions and their colocated BigQuery Omni regions, and in the US and EU multi-regions. Cross-cloud joins that run in the US or EU multi-regions can access data only in BigQuery Omni regions.

A cross-cloud join query that references 10 or more BigQuery Omni datasets might fail with the error "Dataset was not found in location". To avoid this, explicitly specify a location when you run a cross-cloud join that references more than 10 datasets. Note that if you explicitly specify a BigQuery region and your query contains only BigLake tables, the query runs as a cross-cloud query and incurs data transfer costs.

You can't query the _FILE_NAME pseudo-column with cross-cloud joins.

When you reference BigLake table columns in a WHERE clause, you can't use INTERVAL or RANGE literals.

Cross-cloud join jobs don't report the number of bytes processed and transferred from other clouds. This information is available in the child CTAS jobs created during cross-cloud query execution.

Authorized views and authorized routines that reference BigQuery Omni tables or views are supported only in BigQuery Omni regions.

If a cross-cloud query references STRUCT or JSON columns, no pushdowns are applied to the remote subqueries. To improve performance, consider creating a view in the BigQuery Omni region that filters the STRUCT and JSON columns and returns only the necessary fields as individual columns.

Cross-cloud joins don't support time travel queries.

Connectors

BigQuery connectors let you access data in Cloud Storage-based BigLake tables from other data processing tools. For example, you can access BigLake tables from Apache Spark, Apache Hive, TensorFlow, Trino, or Presto. The BigQuery Storage API enforces row- and column-level governance policies on all access to BigLake table data, including access through connectors.

For example, the BigQuery Storage API lets Apache Spark users access only the data they are authorized to see. (Diagram omitted. Image credit: Google Cloud.)

BigLake tables on object stores

For data lake administrators, BigLake lets you set access controls on tables rather than on files, which gives you finer-grained control over user access to the data lake.

Because BigLake tables simplify access control in this way, Google Cloud recommends using them to build and maintain connections to external object stores.

You can use external tables where governance isn't a requirement, or for ad hoc data discovery and manipulation.

Limitations

All limitations for external tables apply to BigLake tables.

BigLake tables on object stores are subject to the same limitations as BigQuery tables.

BigLake doesn't support downscoped credentials from Dataproc Personal Cluster Authentication. As a workaround, to use clusters with Personal Cluster Authentication, inject your credentials using an empty Credential Access Boundary with the --access-boundary=<(echo -n "{}") flag.

Example: the following command enables a credential propagation session in the project myproject for the cluster mycluster:

gcloud dataproc clusters enable-personal-auth-session \
    --region=us \
    --project=myproject \
    --access-boundary=<(echo -n "{}") \
    mycluster

BigLake tables are read-only. You can't modify BigLake tables using DML statements or other methods.

These formats are supported by BigLake tables:

Avro

CSV

Delta Lake

Iceberg

JSON

ORC

Parquet

BigQuery requires Apache Iceberg’s manifest file information, hence BigLake external tables for Apache Iceberg can’t use cached metadata.

AWS and Azure don’t have BigQuery Storage API.

The following limits apply to cached metadata:

Only BigLake tables that utilize Avro, ORC, Parquet, JSON, and CSV may use cached metadata.

After you create, update, or delete files in Amazon S3, queries do not return the updated data until the metadata cache refreshes. This can lead to unexpected results. For example, if you delete a file and write a new one, your query results may exclude both the old and the new file, depending on when the cached metadata was last updated.

BigLake tables containing Amazon S3 or Blob Storage data cannot use CMEK with cached metadata.

Secure model

Managing and utilizing BigLake tables often involves several organizational roles:

Data lake administrators. These administrators typically manage IAM policies on Cloud Storage buckets and objects.

Data warehouse administrators. These administrators typically create, delete, and update tables.

Data analysts. Analysts typically read and query data.

Data lake administrators create connections and share them with data warehouse administrators. Data warehouse administrators in turn create tables, configure the appropriate access controls, and share the tables with analysts.

Performance metadata caching

Cached metadata can improve query performance on some types of BigLake tables. Metadata caching is especially helpful when you work with large numbers of files or with Hive-partitioned data. The following types of BigLake tables support metadata caching:

Amazon S3 BigLake tables

Cloud Storage BigLake tables

The cached metadata includes row counts, file names, and partitioning information. You can enable or disable metadata caching on each table. Metadata caching works best for queries with Hive partition filters and for queries over large numbers of files.

Without metadata caching, queries on the table must read the external data source to get object metadata. Listing millions of files from the external data source can take several minutes, which increases query latency. With metadata caching, queries can skip listing files in the external data source and can partition and prune files more quickly.

Two properties govern this feature:

Maximum staleness specifies when operations against the table use cached metadata.

Metadata cache mode specifies how the metadata is collected.

You set the maximum metadata staleness for table operations when metadata caching is enabled. If the interval is 1 hour, actions against the table utilize cached information if it was updated within an hour. If cached metadata is older than that, Amazon S3 or Cloud Storage metadata is retrieved instead. Staleness intervals range from 30 minutes to 7 days.
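The staleness rule above can be sketched in a few lines of Python. This is only an illustration of the decision described in the text, not BigQuery's actual implementation. The function name and constants are hypothetical, and treating metadata that is exactly as old as the interval as still usable is an assumption.

```python
from datetime import datetime, timedelta, timezone

# Bounds on the staleness interval, as stated in the text above.
MIN_STALENESS = timedelta(minutes=30)
MAX_STALENESS = timedelta(days=7)


def uses_cached_metadata(last_refresh: datetime,
                         staleness_interval: timedelta,
                         now: datetime) -> bool:
    """Return True if an operation would read cached metadata.

    Hypothetical helper: it mirrors the rule in the text, where cached
    metadata is used only if it was refreshed within the staleness
    interval. Otherwise metadata is read from the datastore instead.
    """
    if not MIN_STALENESS <= staleness_interval <= MAX_STALENESS:
        raise ValueError("staleness interval must be between 30 minutes and 7 days")
    # Assumption: an age exactly equal to the interval still counts as fresh.
    return now - last_refresh <= staleness_interval


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
one_hour = timedelta(hours=1)
fresh = uses_cached_metadata(now - timedelta(minutes=45), one_hour, now)
stale = uses_cached_metadata(now - timedelta(minutes=90), one_hour, now)
```

With a 1-hour interval, metadata refreshed 45 minutes ago is used from the cache, while metadata refreshed 90 minutes ago is not.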

Cache refresh may be done manually or automatically:

Automatic cache refreshes occur at a system-defined interval, usually between 30 and 60 minutes. Automatic refresh is a good approach if files in the datastore are added, deleted, or modified at random intervals. If you need to control the timing of the refresh, for example to trigger it at the end of an extract-transform-load job, use manual refresh.

To refresh the metadata cache manually, call the BQ.REFRESH_EXTERNAL_METADATA_CACHE system procedure on a schedule that meets your requirements. For BigLake tables, you can refresh the metadata selectively by passing subdirectories of the table data directory, which avoids unnecessary metadata processing. Manual refresh is a good approach if files in the datastore are added, deleted, or modified at known intervals, for example as the output of a pipeline.
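A manual refresh might look like the following Python sketch. The helper names, the table ID, and the gs:// paths are hypothetical placeholders. The CALL form with an optional array of subdirectory URIs reflects my reading of the procedure's documented signature, so check it against the current BigQuery reference before relying on it.

```python
from typing import Optional, Sequence


def _quote(value: str) -> str:
    """Wrap a value in single quotes, rejecting characters that would break the literal."""
    if "'" in value or "\\" in value:
        raise ValueError(f"unsupported character in {value!r}")
    return f"'{value}'"


def build_refresh_statement(table_id: str,
                            subdirectory_uris: Optional[Sequence[str]] = None) -> str:
    """Build a CALL statement for BQ.REFRESH_EXTERNAL_METADATA_CACHE.

    Hypothetical helper. table_id is 'project.dataset.table'; the optional
    subdirectory URIs restrict the refresh to part of the table data.
    """
    args = [_quote(table_id)]
    if subdirectory_uris:
        args.append("[" + ", ".join(_quote(u) for u in subdirectory_uris) + "]")
    return f"CALL BQ.REFRESH_EXTERNAL_METADATA_CACHE({', '.join(args)})"


def run_refresh(sql: str) -> None:
    """Run the statement. Needs the google-cloud-bigquery package and credentials."""
    from google.cloud import bigquery  # imported lazily so the builder works offline
    bigquery.Client().query(sql).result()  # block until the refresh job finishes


full_refresh = build_refresh_statement("myproject.mydataset.mytable")
partial_refresh = build_refresh_statement(
    "myproject.mydataset.mytable",
    ["gs://mybucket/data/2025/*"],  # placeholder path
)
```

Calling run_refresh(full_refresh) at the end of a pipeline run is one way to line the refresh up with the moment new files land.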

If you issue two manual refreshes at the same time, only one succeeds.

The metadata cache expires after 7 days if it isn't refreshed.

Both manual and automatic cache refreshes run with INTERACTIVE query priority.

If you use automatic refreshes, create a reservation, and then create an assignment with a BACKGROUND job type for the project that runs the metadata cache refresh jobs. This prevents the refresh jobs from competing with user queries for resources and from failing when too few resources are available.

Before setting staleness interval and metadata caching mode, examine their interaction. Consider these instances:

If you manually refresh the metadata cache for a table and set the staleness interval to 2 days, you must call BQ.REFRESH_EXTERNAL_METADATA_CACHE every 2 days or less if you want operations against the table to use cached metadata.

If you automatically refresh the metadata cache for a table and set the staleness interval to 30 minutes, some operations against the table may read from the datastore if the refresh takes longer than 30 to 60 minutes.
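One way to sanity-check a planned configuration is to compare the staleness interval with the longest gap you expect between refreshes. The Python sketch below uses a hypothetical helper name and a deliberately simplified model that ignores how long the refresh job itself runs.

```python
from datetime import timedelta


def may_fall_back_to_datastore(staleness_interval: timedelta,
                               longest_refresh_gap: timedelta) -> bool:
    """Return True if some operations may bypass the cache.

    Hypothetical planning helper: if the cache can go unrefreshed for
    longer than the staleness interval, operations in that window read
    metadata from the datastore instead.
    """
    return longest_refresh_gap > staleness_interval


# Manual refresh every 2 days with a 2-day staleness interval.
manual_case = may_fall_back_to_datastore(timedelta(days=2), timedelta(days=2))

# Automatic refresh (up to 60 minutes apart) with a 30-minute staleness interval.
automatic_case = may_fall_back_to_datastore(timedelta(minutes=30), timedelta(minutes=60))
```

Under this model the manual schedule keeps every operation on cached metadata, while the automatic case leaves a window in which operations read from the datastore.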

Tables with materialized views and cache

Materialized views over BigLake metadata cache-enabled tables can improve performance and efficiency when you query structured data stored in Cloud Storage or Amazon S3. They behave like materialized views over BigQuery-managed storage tables, including automatic refresh and smart tuning.

Integrations

BigLake tables are available via other BigQuery features and gcloud CLI services, including the following.

Analytics Hub

Analytics Hub supports BigLake tables. Datasets that contain BigLake tables can be published as Analytics Hub listings. Analytics Hub subscribers can subscribe to these listings, which provision a read-only linked dataset in their project. Subscribers can query every table in the linked dataset, including the BigLake tables.

BigQuery ML

You can use BigQuery ML to train and run models on BigLake tables in Cloud Storage.

Sensitive Data Protection

Sensitive Data Protection scans your BigLake tables to identify and classify sensitive data. Its de-identification transformations can mask, delete, or otherwise obscure that data.

Read more on Govindhtech.com

#BigLaketable#DataStorage#BigQueryOmni#AmazonS3#BigQuery#Crosscloud#ApacheSpark#CloudStoragebucket#news#technews#technology#technologynews#technologytrends#govindhtech

Text

Becoming an Azure Administration Expert

Introduction

In the rapidly evolving world of cloud computing, Microsoft Azure has established itself as one of the leading platforms for cloud services and solutions. Azure provides a wide range of cloud-based infrastructure and services, making it a highly sought-after skill for IT professionals. If you aspire to become an expert in Azure administration, this comprehensive guide will serve as your roadmap to navigate the Azure ecosystem successfully.

Understanding Microsoft Azure

In this initial chapter, we will delve into the fundamental concepts of Microsoft Azure. You will gain insights into the history and significance of Azure, understand its global datacenter presence, and explore the core services and offerings provided. A solid grasp of Azure's ecosystem is essential as you embark on your journey to master it.

Setting Up Your Azure Account

To begin your Azure journey, you must first create an Azure account. This chapter will guide you through the account creation process, emphasizing crucial factors such as selecting the right subscription, securing your account, and enabling multi-factor authentication (MFA). We'll also discuss Azure's free tier, which allows you to explore Azure services within usage limits at no cost.

Navigating the Azure Portal

The Azure Portal is your gateway to Azure services and resources. In this chapter, we'll explore the portal's features, teach you how to configure preferences, and introduce you to the Azure Command-Line Interface (CLI) for those who prefer a text-based approach to managing resources.

Virtual Machines and Compute Services

Compute services form the backbone of any cloud platform. Azure provides a variety of compute offerings, including Azure Virtual Machines (VMs), Azure App Service for web applications, and Azure Functions for serverless computing. This chapter will teach you how to create and manage VMs, configure scaling, and develop and deploy web and serverless applications.

Azure Storage Services

Effective data storage is a critical component of cloud infrastructure. Azure offers a range of storage solutions, such as Azure Blob Storage for object storage, Azure Disk Storage for block storage, and Azure SQL Database for managed databases. This chapter will guide you through these storage options, best practices for data management, and strategies for optimizing costs.

Azure Networking

Networking plays a vital role in Azure administration. This chapter covers Azure Virtual Networks, Azure Load Balancers, and Azure DNS. You will learn how to design secure and scalable network architectures, set up load balancers, and manage domain names and DNS services.

Databases and Data Management in Azure

Databases are at the core of many applications, and Azure offers a variety of database services. This chapter explores Azure SQL Database for managed relational databases, Azure Cosmos DB for NoSQL databases, and other data management solutions. You'll discover how to create and manage databases, perform backups, and ensure high availability.

Identity and Access Management (IAM)

Security is paramount in Azure administration. This chapter delves into Microsoft Entra ID (formerly Azure Active Directory, or Azure AD) for identity and access management, encryption, and best practices for securing your Azure environment. We'll also explore Azure's identity federation and single sign-on (SSO) capabilities.

Monitoring and Management

To maintain a healthy Azure environment, you must effectively monitor and manage your resources. Azure provides services like Azure Monitor for monitoring and Azure Automation for task automation. In this chapter, you'll learn how to set up alerts, collect and analyze logs, and automate routine tasks.

Azure DevOps and Deployment

DevOps practices are crucial for efficient software development and deployment. Azure offers tools like Azure DevOps Services, Azure Resource Manager templates, and Azure Functions for automating deployments and continuous integration/continuous deployment (CI/CD) pipelines. This chapter will guide you through DevOps practices in Azure.

Advanced Azure Services

Azure provides a wide range of advanced services, including machine learning with Azure Machine Learning, serverless computing with Azure Functions, and data analytics with Azure Data Lake Analytics. This chapter offers an overview of these services and how to get started with them.

Azure Cost Management and Optimization

Effective cost management is a key concern for organizations using Azure. In this chapter, you'll learn how to set budgets, monitor spending, and use Azure Cost Management tools to analyze your expenses. We'll discuss strategies for cost optimization, helping you make the most of your cloud investment.

Azure Certifications

Azure certifications are highly regarded in the IT industry. This chapter explains the various Azure certification paths and provides tips for preparing and passing the exams, which can validate your expertise as an Azure administrator.

Azure Community and Partners

Azure has a vibrant community and ecosystem of partners. This chapter explores how you can connect with fellow Azure enthusiasts, seek help, and share your knowledge through community forums, events, and meetups. Additionally, we'll discuss Azure partner programs that can enhance your Azure expertise.

Real-World Use Cases

In the final chapter, we'll explore real-world Azure use cases, including case studies from prominent companies that have successfully leveraged Azure services. These examples will inspire you to apply Azure in various industries and scenarios, from startups to enterprises.

Conclusion

Becoming an expert in Azure administration is a journey that requires dedication, learning, and continuous practice. This comprehensive guide has provided you with a roadmap to becoming proficient in Microsoft Azure, enabling you to excel in the ever-expanding world of cloud computing. Whether you're an aspiring cloud administrator, an experienced IT professional, or a business owner looking to harness the power of the cloud, this guide is your key to success. With the skills and knowledge gained along this journey, you can confidently navigate the intricacies of Azure administration and advance your career in cloud computing.

Text

Costs involved to delete/purge the blob that has Archive access tier

A blob cannot be read directly from the Archive tier. To read a blob in the Archive tier, a user must first change the tier to Hot or Cool. For example, to retrieve and read a single 1,000-GB archived blob that has been in the Archive tier for 90 days, the following charges would apply: Data retrieval (per GB) from the Archive tier: $0.022/GB x 1,000 GB = $22. Rehydrate operation…
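The data retrieval figure quoted above can be reproduced with a short Python calculation. The rate is the one given in the excerpt and should be treated as illustrative, since actual Azure prices vary by region and change over time. The function name is hypothetical, and the remaining charges are left out because the excerpt is truncated.

```python
from decimal import Decimal

# Per-GB retrieval rate quoted in the excerpt above (illustrative only).
ARCHIVE_RETRIEVAL_RATE_PER_GB = Decimal("0.022")


def archive_retrieval_cost(size_gb: int,
                           rate_per_gb: Decimal = ARCHIVE_RETRIEVAL_RATE_PER_GB) -> Decimal:
    """Return the data retrieval charge in dollars for reading size_gb from Archive."""
    return rate_per_gb * size_gb


retrieval_cost = archive_retrieval_cost(1000)  # the 1,000-GB blob from the example
```

Using Decimal instead of float keeps the result exact, which matters once several line items are summed.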

View On WordPress
