#Ceph File System


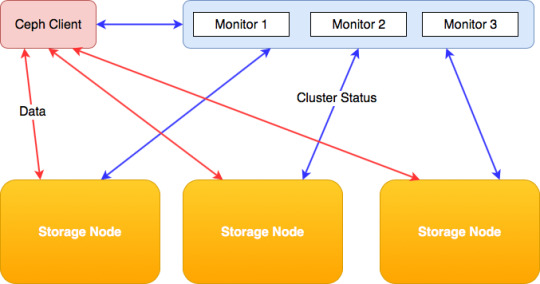

Mastering Ceph Storage Configuration in Proxmox 8 Cluster


The need for highly scalable storage solutions that are fault-tolerant and offer a unified system is undeniably significant in data storage. One such solution is Ceph Storage, a powerful and flexible storage system that facilitates data replication and provides data redundancy. In conjunction with Proxmox, an open-source virtualization management platform, it can help manage important business…


#Ceph and Proxmox Integration#Ceph Block Storage#Ceph Cluster Management#Ceph File System#Ceph OSD Daemons#Ceph Storage Configuration#Data Replication in Ceph#High Scalability Storage Solution#Object Storage in Ceph#Proxmox Cluster Setup


Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In today’s fast-paced cloud-native world, managing storage across containers and Kubernetes platforms can be complex and resource-intensive. Red Hat OpenShift Data Foundation (ODF), formerly known as OpenShift Container Storage (OCS), provides an integrated and robust solution for managing persistent storage in OpenShift environments. One of Red Hat’s key training offerings in this space is the DO370 course – Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation.

In this blog post, we’ll explore the highlights of this course, what professionals can expect to learn, and why ODF is a game-changer for enterprise Kubernetes storage.

What is Red Hat OpenShift Data Foundation?

Red Hat OpenShift Data Foundation is a software-defined storage solution built on Ceph and tightly integrated with Red Hat OpenShift. It provides persistent, scalable, and secure storage for containers, enabling stateful applications to thrive in a Kubernetes ecosystem.

With ODF, enterprises can manage block, file, and object storage across hybrid and multi-cloud environments—without the complexities of managing external storage systems.

Course Overview: DO370

The DO370 course is designed for developers, system administrators, and site reliability engineers who want to deploy and manage Red Hat OpenShift Data Foundation in an OpenShift environment. It is a hands-on, lab-intensive course that emphasizes practical experience over theory.

Key Topics Covered:

Introduction to storage challenges in Kubernetes

Deployment of OpenShift Data Foundation

Managing block, file, and object storage

Configuring storage classes and dynamic provisioning

Monitoring, troubleshooting, and managing storage usage

Integrating with workloads such as databases and CI/CD tools

Why DO370 is Essential for Modern IT Teams

1. Storage Made Kubernetes-Native

ODF integrates seamlessly with OpenShift, giving developers self-service access to dynamic storage provisioning without needing to understand the underlying infrastructure.

2. Consistency Across Environments

Whether your workloads run on-prem, in the cloud, or at the edge, ODF provides a consistent storage layer, which is critical for hybrid and multi-cloud strategies.

3. Data Resiliency and High Availability

With Ceph at its core, ODF provides high availability, replication, and fault tolerance, ensuring data durability across your Kubernetes clusters.

4. Hands-on Experience with Industry-Relevant Tools

DO370 includes hands-on labs with tools like NooBaa for S3-compatible object storage and integrates storage into realistic OpenShift use cases.
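The self-service dynamic provisioning mentioned in point 1 boils down to a developer submitting a PersistentVolumeClaim that names a storage class. Below is a minimal sketch of such a claim, built as a Python dictionary and printed as JSON. The claim name `db-data`, the 10Gi size, and the storage class name `ocs-storagecluster-ceph-rbd` are assumptions for illustration and do not come from the course material; check the classes actually present on a cluster with `oc get storageclass`.

```python
import json


def make_pvc(name, size, storage_class, access_mode="ReadWriteOnce"):
    """Build a Kubernetes PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            # Assumption: this class name must match one defined on the cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claim for a database volume backed by block storage.
pvc = make_pvc("db-data", "10Gi", "ocs-storagecluster-ceph-rbd")
print(json.dumps(pvc, indent=2))
```

The printed JSON can be saved to a file and applied like any other manifest; the provisioner behind the storage class then creates the volume on demand.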

Who Should Take This Course?

OpenShift Administrators looking to extend their skills into persistent storage.

Storage Engineers transitioning to container-native storage solutions.

DevOps professionals managing stateful applications in OpenShift environments.

Teams planning to scale enterprise workloads that require reliable data storage in Kubernetes.

Certification Pathway

DO370 is part of the Red Hat Certified Architect (RHCA) infrastructure track and is a valuable step for anyone pursuing expert-level certification in OpenShift or storage technologies. Completing this course helps prepare for the EX370 certification exam.

Final Thoughts

As enterprises continue to shift towards containerized and cloud-native application architectures, having a reliable and scalable storage solution becomes non-negotiable. Red Hat OpenShift Data Foundation addresses this challenge, and the DO370 course is the perfect entry point for mastering it.

If you're an IT professional looking to gain expertise in Kubernetes-native storage and want to future-proof your career, Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370) is the course to take. For more details, visit www.hawkstack.com.

Cloud Native Storage Market Insights: Industry Share, Trends & Future Outlook 2032

The Cloud Native Storage Market size was valued at USD 16.19 billion in 2023 and is expected to reach USD 100.09 billion by 2032, growing at a CAGR of 22.5% over the forecast period 2024-2032.

The cloud native storage market is experiencing rapid growth as enterprises shift towards scalable, flexible, and cost-effective storage solutions. The increasing adoption of cloud computing and containerization is driving demand for advanced storage technologies.

The cloud native storage market continues to expand as businesses seek high-performance, secure, and automated data storage solutions. With the rise of hybrid cloud, Kubernetes, and microservices architectures, organizations are investing in cloud native storage to enhance agility and efficiency in data management.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3454

Key Market Players:

Microsoft (Azure Blob Storage, Azure Kubernetes Service (AKS))

IBM (IBM Cloud Object Storage, IBM Spectrum Scale)

AWS (Amazon S3, Amazon EBS (Elastic Block Store))

Google (Google Cloud Storage, Google Kubernetes Engine (GKE))

Alibaba Cloud (Alibaba Object Storage Service (OSS), Alibaba Cloud Container Service for Kubernetes)

VMware (VMware vSAN, VMware Tanzu Kubernetes Grid)

Huawei (Huawei FusionStorage, Huawei Cloud Object Storage Service)

Citrix (Citrix Hypervisor, Citrix ShareFile)

Tencent Cloud (Tencent Cloud Object Storage (COS), Tencent Kubernetes Engine)

Scality (Scality RING, Scality ARTESCA)

Splunk (Splunk SmartStore, Splunk Enterprise on Kubernetes)

Linbit (LINSTOR, DRBD (Distributed Replicated Block Device))

Rackspace (Rackspace Object Storage, Rackspace Managed Kubernetes)

Robin.Io (Robin Cloud Native Storage, Robin Multi-Cluster Automation)

MayaData (OpenEBS, Data Management Platform (DMP))

Diamanti (Diamanti Ultima, Diamanti Spektra)

Minio (MinIO Object Storage, MinIO Kubernetes Operator)

Rook (Rook Ceph, Rook EdgeFS)

Ondat (Ondat Persistent Volumes, Ondat Data Mesh)

Ionir (Ionir Data Services Platform, Ionir Continuous Data Mobility)

Trilio (TrilioVault for Kubernetes, TrilioVault for OpenStack)

Upcloud (UpCloud Object Storage, UpCloud Managed Databases)

Arrikto (Kubeflow Enterprise, Rok (Data Management for Kubernetes))

Market Size, Share, and Scope

The market is witnessing significant expansion across industries such as IT, BFSI, healthcare, retail, and manufacturing.

Hybrid and multi-cloud storage solutions are gaining traction due to their flexibility and cost-effectiveness.

Enterprises are increasingly adopting object storage, file storage, and block storage tailored for cloud native environments.

Key Market Trends Driving Growth

Rise in Cloud Adoption: Organizations are shifting workloads to public, private, and hybrid cloud environments, fueling demand for cloud native storage.

Growing Adoption of Kubernetes: Kubernetes-based storage solutions are becoming essential for managing containerized applications efficiently.

Increased Data Security and Compliance Needs: Businesses are investing in encrypted, resilient, and compliant storage solutions to meet global data protection regulations.

Advancements in AI and Automation: AI-driven storage management and self-healing storage systems are revolutionizing data handling.

Surge in Edge Computing: Cloud native storage is expanding to edge locations, enabling real-time data processing and low-latency operations.

Integration with DevOps and CI/CD Pipelines: Developers and IT teams are leveraging cloud storage automation for seamless software deployment.

Hybrid and Multi-Cloud Strategies: Enterprises are implementing multi-cloud storage architectures to optimize performance and costs.

Increased Use of Object Storage: The scalability and efficiency of object storage are driving its adoption in cloud native environments.

Serverless and API-Driven Storage Solutions: The rise of serverless computing is pushing demand for API-based cloud storage models.

Sustainability and Green Cloud Initiatives: Energy-efficient storage solutions are becoming a key focus for cloud providers and enterprises.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3454

Market Segmentation:

By Component

Solution

Object Storage

Block Storage

File Storage

Container Storage

Others

Services

System Integration & Deployment

Training & Consulting

Support & Maintenance

By Deployment

Private Cloud

Public Cloud

By Enterprise Size

SMEs

Large Enterprises

By End Use

BFSI

Telecom & IT

Healthcare

Retail & Consumer Goods

Manufacturing

Government

Energy & Utilities

Media & Entertainment

Others

Market Growth Analysis

Factors Driving Market Expansion

The growing need for cost-effective and scalable data storage solutions

Adoption of cloud-first strategies by enterprises and governments

Rising investments in data center modernization and digital transformation

Advancements in 5G, IoT, and AI-driven analytics

Industry Forecast 2032: Size, Share & Growth Analysis

The cloud native storage market is projected to grow significantly over the next decade, driven by advancements in distributed storage architectures, AI-enhanced storage management, and increasing enterprise digitalization.

North America leads the market, followed by Europe and Asia-Pacific, with China and India emerging as key growth hubs.

The demand for software-defined storage (SDS), container-native storage, and data resiliency solutions will drive innovation and competition in the market.

Future Prospects and Opportunities

1. Expansion in Emerging Markets

Developing economies are expected to witness increased investment in cloud infrastructure and storage solutions.

2. AI and Machine Learning for Intelligent Storage

AI-powered storage analytics will enhance real-time data optimization and predictive storage management.

3. Blockchain for Secure Cloud Storage

Blockchain-based decentralized storage models will offer improved data security, integrity, and transparency.

4. Hyperconverged Infrastructure (HCI) Growth

Enterprises are adopting HCI solutions that integrate storage, networking, and compute resources.

5. Data Sovereignty and Compliance-Driven Solutions

The demand for region-specific, compliant storage solutions will drive innovation in data governance technologies.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-storage-market-3454

Conclusion

The cloud native storage market is poised for exponential growth, fueled by technological innovations, security enhancements, and enterprise digital transformation. As businesses embrace cloud, AI, and hybrid storage strategies, the future of cloud native storage will be defined by scalability, automation, and efficiency.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

#cloud native storage market#cloud native storage market Scope#cloud native storage market Size#cloud native storage market Analysis#cloud native storage market Trends


[Silas didn't have anything else to go off of, so he decided to dutifully take Sonny's word for it.]

It sounds... rather dreadful. I'm sure once things are back on track you can make a good difference with what you have made.

[There's another bit of quiet, one of Silas' fins twitching at small sounds outside of the cave. The few birds left this time of year trilling away, he supposed.]

Here, you just... do what you need to survive. If you are gifted with claws and teeth and the will to live, you make it out here. If you aren't, you feed someone else who will.

[He had begun to run out of rations a week prior, himself. Hard to hunt right now, and GOD was he tired of dried fish. The idea of food made his stomach pang, yet with a slight hiss he pushed it back. He'll hunt later. He was needed currently, if even as a mere distraction from nagging voices and blithering fools.]

Intellect is measured by how well you survive and nothing more out here... However, I imagine that within the ceph's walls, it is quite different.

What are you working on, currently?

- Silas

"Claws and teeth, money and brains... Really, there's no difference. At the end of the day, they're both gifts you've got to be lucky enough to be born into. It would seem our worlds are more similar than you might realize; they're just wearing different coats of paint."

He mused absently, rubbing at the dark bristles under his chin with a talon.

"As for this machine. It's to be a physical interface for tracking purposes; it'll ping whatever important items and files that I set it to search for. Without a map, I'll only be able to see the general location in blank space, but it's better than nothing, at least for now... Most importantly, it'll mask my location, so if that orange bastard tries the same thing to try and find me he'll be shit out of luck."

A rounded, forest green screen began to appear on the topmost surface, looking like a radar display you'd see on a ship. He hesitated before presenting his next words, aware that it was much more sensitive information.

"...I didn't mention this before, but I've already got the first code... The one for the credentials. It's the easiest in the set to remember, as it's just my own name. I mean, obviously. I never stopped being admin out there, after all."

He rolled his eyes with a bemused smirk.

"As for the rest... They are based in a homebrew cryptographic scheme. Unfortunately, tackling it by brute force is not an option given the time crunch, so I'm going to need to retrieve them manually."

The fabrication of the unit paused, and Sonny leaned forward and squinted at the screen as he stopped to figure out some kinks in the code. Typical dev stuff.

"Sam will likely figure out or find the code for the credentials - including its format - given enough time, but for now I'm at an advantage as I can start looking for the other keys right away. He doesn't know the system nearly as intimately as I."


Seagate manufactures hard drives that specifically address the demand for hyperscale cloud scalability. As the flagship of the Seagate® X class, the Exos® X18 enterprise hard drives are the highest-capacity hard drives in the fleet.

Maximum Storage Capacity for Highest Rack Space Efficiency

Market-leading 18TB HDD offering the highest capacity available for more petabytes per rack

Highly reliable performance with enhanced caching, making it the logical choice for cloud data center and massive scale-out data center applications

Hyperscale SATA model tuned for large data transfers and low latency

PowerBalance™ feature optimizes Watts/TB

Maximize total cost of ownership savings through lower power and weight with helium sealed-drive design

Proven helium side-sealing weld technology for added handling robustness and leak protection

Digital environmental sensors to monitor internal drive conditions for optimal operation and performance

Data protection and security: Seagate Secure™ features for safe, affordable, fast, and easy drive retirement

Proven enterprise-class reliability backed by 5-year limited warranty and 2.5M-hr MTBF rating

Scalable hyperscale applications/cloud data centers

Massive scale-out data centers

Big data applications

High-capacity density RAID storage

Mainstream enterprise external storage arrays

Distributed file systems, including Hadoop and Ceph

Enterprise backup and restore: D2D, virtual tape

Centralized surveillance

SSD Capacities: Accessing the Potential

Real-world workloads enable huge SSD capacities and efficient data granularity

Large SSDs (30TB+) present new challenges. Two important ones are:

High-density NAND, like QLC (quad-level cell NAND that stores 4 bits of data per cell), enables large SSDs but presents more challenges than TLC.

SSD capacity growth requires local DRAM memory growth for maps that have traditional 1:1000 DRAM to Storage Capacity ratios.

We cannot sustain the 1:1000 ratio. Do we need it? Why not 1:4000? Or 1:8000? Those ratios would cut DRAM demand by a factor of 4 or 8. What’s stopping us?

This blog examines this approach and proposes a solution for large-capacity SSDs.

First, why must DRAM and NAND capacity be 1:1000? The SSD must map system logical block addresses (LBA) to NAND pages and keep a live copy of them to know where to write or read data. Since LBAs are 4KB and map addresses are 32 bits (4 bytes), we need one 4-byte entry per 4KB LBA, hence the 1:1000 ratio. We’ll stick to this ratio because it simplifies the reasoning and won’t change the outcome. Very large capacities would need more.
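The arithmetic behind the ratio can be sketched in a few lines. This is a minimal illustration, assuming a flat map with one fixed-size entry per mapped unit; the 30 TiB capacity and the function names are hypothetical and chosen only to make the numbers concrete.

```python
KIB = 1024
TIB = 1024 ** 4


def map_dram_bytes(capacity_bytes, granularity_bytes=4 * KIB, entry_bytes=4):
    """DRAM needed for a flat map with one entry per mapped unit."""
    entries = capacity_bytes // granularity_bytes
    return entries * entry_bytes


def capacity_per_dram_byte(granularity_bytes=4 * KIB, entry_bytes=4):
    """Bytes of storage covered by each byte of map DRAM (the '1:N' ratio)."""
    return granularity_bytes // entry_bytes


capacity = 30 * TIB  # hypothetical 30 TiB drive
for granularity_kib in (4, 16, 32):
    granularity = granularity_kib * KIB
    dram = map_dram_bytes(capacity, granularity)
    print(
        f"{granularity_kib:>2} KiB per entry -> 1:{capacity_per_dram_byte(granularity)}, "
        f"{dram / 1024 ** 3:.2f} GiB of map DRAM"
    )
```

With 4 KiB per entry the exact ratio is 1:1024, which the text rounds to 1:1000. One caveat under the same assumptions: a 32-bit entry can distinguish only 2^32 units, so at 4 KiB granularity it covers at most 16 TiB, which is consistent with the remark that very large capacities would need more.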

One map entry per LBA is the best granularity because it lets the system write at the smallest unit it addresses. 4KB random writes are used to benchmark SSD write performance and endurance.

Long-term, this may not work. What about one map entry every four LBAs? 8, 16, 32+ LBAs? One map entry every 4 LBAs (i.e., 16KB) may save DRAM space, but what happens when the system wants to write 4KB? Since there is one entry per 16KB, the SSD must read the 16KB page, modify the 4KB to be written, and write back the entire 16KB page. This would affect performance (“read 16KB, modify 4KB, write back 16KB”, rather than just “write 4KB”) and endurance (the system writes 4KB but the SSD writes 16KB to NAND), reducing SSD life by a factor of 4.

This is especially concerning on QLC technology, which has a more demanding endurance profile. For QLC, endurance is essential!
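The read-modify-write cycle can be made concrete with a toy model. This is a sketch under simplifying assumptions, not a description of real firmware: the `CoarseMappedDrive` class is hypothetical, it rewrites every touched 16KB unit in place, and it ignores garbage collection and caching. It only counts the bytes the host sends against the bytes programmed to NAND.

```python
IU = 16 * 1024   # bytes covered by one map entry in this toy model
LBA = 4 * 1024   # host logical block size


class CoarseMappedDrive:
    """Toy drive with one map entry per 16KB unit; counts bytes written."""

    def __init__(self, num_units):
        self.nand = [bytes(IU) for _ in range(num_units)]
        self.host_bytes = 0
        self.nand_bytes = 0

    def write(self, offset, data):
        self.host_bytes += len(data)
        pos = 0
        while pos < len(data):
            unit, unit_off = divmod(offset + pos, IU)
            chunk = data[pos:pos + IU - unit_off]
            page = bytearray(self.nand[unit])               # read the whole unit
            page[unit_off:unit_off + len(chunk)] = chunk    # modify part of it
            self.nand[unit] = bytes(page)                   # write the whole unit back
            self.nand_bytes += IU
            pos += len(chunk)

    @property
    def waf(self):
        """Write amplification: NAND bytes per host byte (0.0 before any write)."""
        return self.nand_bytes / self.host_bytes if self.host_bytes else 0.0


drive = CoarseMappedDrive(num_units=4)
drive.write(LBA, b"\xab" * LBA)  # one 4KB host write at LBA 1
print(f"host={drive.host_bytes} B, nand={drive.nand_bytes} B, WAF={drive.waf:.1f}x")
```

A single 4KB host write makes the model program 16KB, which is the factor of 4 described above. A full 16KB aligned write would program the same 16KB, so it carries no penalty.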

Common wisdom holds that changing the map granularity (or Indirection Unit, or “IU”) would severely reduce SSD life (endurance).

All of the above is true, but do systems actually issue 4KB writes? And how often? One can buy a system to run FIO with a 4KB random-write (RW) profile, but most people don’t. They buy them for databases, file systems, object stores, and applications. Does anyone use 4KB writes?

We measured it. We measured how many 4KB writes are issued and how they contribute to Write Amplification, i.e., extra writes that reduce device life, on a set of application benchmarks from TPC-H (data analytics) to YCSB (cloud operations), running on various databases (Microsoft SQL Server, RocksDB, Apache Cassandra), file systems (EXT4, XFS), and, in some cases, entire software-defined storage solutions like Red Hat Ceph Storage.

Before discussing the analysis, we must discuss why write size matters when endurance is at stake.

Writing 16KB to modify 4KB gives a Write Amplification Factor (WAF) of 4x for a 4KB write. What about an 8KB write? If it falls within a single IU, “write 16KB to modify 8KB” gives WAF=2x. A bit better. And a 16KB write? If aligned, it adds nothing to WAF, because one writes 16KB to modify 16KB. Only small writes contribute to WAF.

Writes may also be misaligned with IU boundaries, which adds to WAF, but that contribution decreases rapidly as write size grows.

The chart below shows this trend:

Large writes barely affect WAF. A 256KB write has no effect (WAF=1x) if aligned and only a small one (WAF=1.06x) if misaligned. Much better than 4KB writes’ 4x!
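The per-write figures quoted above follow from counting how many 16KB units a write touches. The sketch below assumes a simple model in which every touched unit is rewritten in full; the function name and the 4KB misalignment offset are illustrative choices, not taken from the measurements.

```python
KIB = 1024


def write_waf(offset, size, iu=16 * KIB):
    """WAF of one write, assuming every IU it touches is rewritten in full."""
    first_iu = offset // iu
    last_iu = (offset + size - 1) // iu
    touched = last_iu - first_iu + 1
    return touched * iu / size


for size_kib in (4, 8, 16, 64, 256):
    aligned = write_waf(0, size_kib * KIB)
    shifted = write_waf(4 * KIB, size_kib * KIB)  # starts 4KB into an IU
    print(f"{size_kib:>3}KB write: aligned {aligned:.2f}x, misaligned {shifted:.2f}x")
```

In this model an aligned 256KB write touches 16 units and a misaligned one touches 17, giving 17/16, or about 1.06x, which matches the figure in the text.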

To calculate the WAF contribution, we must profile every SSD write and check how it aligns within an IU; the larger the write, the better. We instrumented the system to trace IOs for several benchmarks. After 20 minutes of tracing, we post-processed the samples (100–300 million per benchmark), checking each IO's size and IU alignment and adding up its contribution to WAF.

The table below shows how many IOs each size bucket holds:

As shown, most writes fit in the 4–8KB (bad) or 256KB+ (good) buckets.

If we assume every IO is misaligned and apply the WAF chart above, the “Worst case” column shows that most WAF values are 1.x, a few are 2.x, and very rarely 3.x. That is better than 4x but not enough to make the approach viable.

IOs are not always misaligned. Why would they be? Why would modern file systems misalign structures at such small scales? They don’t.

We measured and post-processed each benchmark’s 100+ million IOs against a 16KB IU. The last column, “Measured” WAF, shows the result. WAF is <=1.05x, so one can grow the IU size fourfold and build large SSDs using QLC NAND and existing, smaller DRAM technologies at a life cost of under 5%, not 400%! These results are amazing.

One might object that many 4KB and 8KB writes contribute 400% or 200% to WAF. Given the small but numerous IO contributions, shouldn’t the aggregated WAF be much higher? Though numerous, these writes are small and carry a small payload, which minimises their impact by volume. A 4KB write and a 256KB write are both counted as single writes in the above table, but the latter carries 64x the data.
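The difference between counting IOs and counting bytes can be shown with a small calculation. The IO mix below is made up purely for illustration and is not the measured data from the benchmarks; it assumes IU-aligned writes and a 16KB IU.

```python
KIB = 1024
IU = 16 * KIB


def nand_bytes(size, iu=IU):
    """Bytes programmed for one IU-aligned host write of `size` bytes."""
    units = -(-size // iu)  # ceiling division
    return units * iu


# Hypothetical mix: write size in bytes -> number of writes (NOT measured data).
mix = {4 * KIB: 1000, 256 * KIB: 100}

host_total = sum(size * count for size, count in mix.items())
nand_total = sum(nand_bytes(size) * count for size, count in mix.items())

# Averaging the per-IO WAF treats a 4KB write like a 256KB write.
count_weighted = sum(count * nand_bytes(size) / size for size, count in mix.items())
count_weighted /= sum(mix.values())

# The WAF the NAND actually experiences is bytes programmed per byte requested.
volume_weighted = nand_total / host_total

print(f"count-weighted WAF:  {count_weighted:.2f}x")
print(f"volume-weighted WAF: {volume_weighted:.2f}x")
```

Even though small writes outnumber large ones ten to one in this invented mix, the large writes carry most of the bytes, so the volume-weighted figure sits far below the per-IO average.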

Adjusting the above table for IO Volume (i.e., each IO size and data moved) rather than IO count yields the following representation:

The colour grading for more intense IOs is now skewed to the right, indicating that large IOs move a lot of data and have a small WAF contribution.

Finally, not all SSD workloads are suitable for this approach. For instance, the metadata portion of a Ceph storage node issues very small IOs, causing a high WAF of 2.35x. Metadata is unsuitable for large IU drives. The combined WAF is minimally affected if we mix data and metadata in Ceph (a common approach with NVMe SSDs), as data is much larger than metadata.

Moving to a 16KB IU works for real applications and most benchmarks, according to our testing. The industry must be convinced to stop benchmarking SSDs with 4KB random writes in FIO, which is unrealistic and holds back progress.

Ceph and Minio are the two main self-hostable distributed file storage systems I'm aware of. I've only used Minio programmatically but they seem to have a web interface. A friend of a friend is a big fan of Ceph.

anybody know any good FOSS alternatives to google drive?


DreamHost is a Los Angeles-based web hosting provider and domain name registrar. It is owned by New Dream Network, LLC, founded in 1996 by Dallas Bethune, Josh Jones, Michael Rodriguez and Sage Weil, undergraduate students at Harvey Mudd College in Claremont, California, and registered in 1997 by Michael Rodriguez. DreamHost began hosting customers' sites in 1997. In May 2012, DreamHost spun off Inktank, a professional services and support company for the open source Ceph file system. In November 2014, DreamHost spun off Akanda, an open source network virtualization project. As of February 2016, DreamHost has about 200 employees and close to 400,000 customers.

interesting discussion of something I was chewing on yesterday: if you want to implement an efficient database you want to skip over the filesystem abstraction entirely and actually see what hardware you’re talking to.


File Systems Unfit as Distributed Storage Back Ends: 10 Years of Ceph

http://muratbuffalo.blogspot.com/2019/11/sosp19-file-systems-unfit-as.html

GlusterFS vs Ceph: Two Different Storage Solutions with Pros and Cons


I have been trying out various storage solutions in my home lab environment over the past couple of months. Two that I have been testing extensively are GlusterFS and Ceph, specifically CephFS, which is Ceph’s file system running on top of Ceph's underlying storage. I wanted to give you a list of pros and cons of GlusterFS vs Ceph that I have seen in working with…

Unlocking Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In today’s cloud-native world, Kubernetes has become the de facto platform for orchestrating containerized applications. However, with this rise comes the critical need for persistent, reliable, and scalable storage solutions that can keep up with dynamic workloads. This is where Red Hat OpenShift Data Foundation (ODF) steps in as a powerful storage layer for OpenShift clusters.

In this blog post, we explore how the DO370 training course—Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation—equips IT professionals with the skills to deploy and manage advanced Kubernetes storage solutions in an enterprise environment.

What is OpenShift Data Foundation?

Red Hat OpenShift Data Foundation (formerly OpenShift Container Storage) is an integrated, software-defined storage solution for containers. It provides a unified platform to manage block, file, and object storage directly within your OpenShift cluster. Built on Ceph and Rook, ODF is designed for high availability, scalability, and performance—making it ideal for enterprise applications.

Key Features of ODF:

Seamless integration with Red Hat OpenShift

Dynamic provisioning of persistent volumes

Support for multi-cloud and hybrid storage scenarios

Built-in data replication, encryption, and disaster recovery

Monitoring and management through the OpenShift console

About the DO370 Course

The DO370 course is designed for infrastructure administrators, storage engineers, and DevOps professionals who want to master enterprise-grade storage in OpenShift environments.

Course Highlights:

Install and configure OpenShift Data Foundation on OpenShift clusters

Manage storage classes, persistent volume claims (PVCs), and object storage

Implement monitoring and troubleshooting for storage resources

Secure data at rest and in motion

Explore advanced topics like snapshotting, data resilience, and performance tuning

Hands-On Labs:

Red Hat’s training emphasizes practical, real-world labs. In DO370, learners get hands-on experience setting up ODF, deploying workloads, managing storage resources, and performing disaster recovery simulations—all within a controlled OpenShift environment.

Why DO370 Matters for Enterprises

As enterprises transition from legacy systems to cloud-native platforms, managing stateful workloads on Kubernetes becomes a top priority. DO370 equips your team with the tools to:

Deliver high-performance storage for databases and big data applications

Ensure business continuity with built-in data protection features

Optimize storage usage across hybrid and multi-cloud environments

Reduce infrastructure complexity by consolidating storage on OpenShift

Ideal Audience

This course is ideal for:

Red Hat Certified System Administrators (RHCSA)

OpenShift administrators and site reliability engineers (SREs)

Architects designing storage for containerized applications

Teams adopting DevOps and CI/CD practices with stateful apps

Conclusion

If your organization is embracing Kubernetes at scale, you cannot afford to overlook storage. DO370: Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation gives your teams the knowledge and confidence to deploy resilient, scalable, and secure storage in OpenShift environments.

Whether you're modernizing legacy applications or building new cloud-native solutions, OpenShift Data Foundation is your answer for enterprise-grade Kubernetes storage—and DO370 is the path to mastering it.

Interested in enrolling in DO370 for your team? At HawkStack Technologies, we offer Red Hat official training and corporate packages, including access to Red Hat Learning Subscription (RHLS). Contact us today to learn more! 🌐 www.hawkstack.com


Crysis 3 Crack Download

Crysis 3 CRACK NEW 100% WORKING - YouTube.

Crysis 3 Keygen and Crack Download GamesC.

Crysis 2 Activation Crack - trueyfile.

DOWNLOAD CRYSIS 3 CRACK FOR FREE.

Crysis 3 - CRACK v_3 - FIX | U.

Crysis 3 - Kho Game Offline Cũ.

Crysis® 3 for PC | Origin.

Free Download Crysis 3 Game for PC - DoubleGames.

Crysis 2 and 3 Multiplayer Crack file ~ Free Download.

Crysis 3 Crack Reloaded Crack.

Crysis 3 crack.

Crysis - PC Game Trainer Cheat PlayFix No-CD No-DVD | GameCopyWorld.

Crysis 3 Torrent Download - CroTorrents.

Crysis 3 CRACK NEW 100% WORKING - YouTube.

Crysis 3: Digital Deluxe Edition v1.3 - FitGirl. The fate of the world is in your hands. New and old enemies threaten the peace you worked so hard to achieve 24 years ago. Your search for the Alpha Ceph continues, but this time you'll also need to expose the truth behind the C.E.L.L. corporation.

Crysis 3 Keygen and Crack Download GamesC.

1. Open your browser and go to www(dot)crysis-3-crack-download(dot)BlogSpot (dot) com 2. Go to the Crysis 3 Crack Page 3. Download the crack by clicking the button 4. Unzip / extract the downloaded crack (use preinstalled winzip or winrar) 5. Cut the extracted file and paste it in the Bin32 folder of the Crysis 3 game 6. Create a shorcut for.

Crysis 2 Activation Crack - trueyfile.

Crysis 3 Mission 2 Crack Fix 11 -- DOWNLOAD (Mirror #1) bb84b2e1ba Crysis 3 - PC Game Trainer Cheat PlayFix No-CD No-DVD. 25 MB) Crysis 3 Crack Fix.... Mission Pack 1: Time of the Mutants · Mission:In Boxes · Mist Survival · Mistfal · MISTOVER · M The Game · MLB 2K11 · MLB 2K12 · MMORPG Tycoon 2. Crysis 3 KEYGEN & CRACK (DOWNLOAD)2013 100% Working Keygen Check Yourself - YouTube. dm_5122d03d0f2a7. Trending Shinzo Abe. Trending. Shinzo Abe. 4:28. DH NewsRush | July 8 | Shinzo Abe | Mohammed Zubair | Rohit Ranjan | Amnesty India | Aakar Patel | Rishi Sunak. Deccan Herald. 34:41. Download Crysis 3 crack only FIX. Crack Fix Boss Alpha Celph | Direct. Pass vnsharing. Các Bạn Down về bỏ cùng một Folder sau đó down tiếp file NÀY về bỏ cùng folder, sau đó nhấn open file bat thì các file sẽ tự chuyển EVM sang rar. NEXT.

DOWNLOAD CRYSIS 3 CRACK FOR FREE.

More Crysis 3 Fixes. Crysis 3 v1.0 Fix 2 Internal All No-DVD (Reloaded) Crysis 3 v1.1 All No-DVD (Reloaded) Crysis 3 v1.2 All No-DVD (Reloaded)

Crysis 3 - CRACK v_3 - FIX | U.

Crysis 3 Free Download Crack Full Version with Activation Code/Serial for. This is a full crack version of Crysis 3 and it is free to download and play!. Crysis 3; The quick and easy way to download and play Crysis 3 for free. However, if you want to play the game. Crysis 3 on PC Crack is here to download and play.

Crysis 3 - Kho Game Offline Cũ.

May 09, 2016 · 1.Mount file dari folder DVD1 dengan PowerISO. 2.Jalankan originalinstaller dan instal. 3.Jika muncul “Next volume is required” maka mount file di DVD2 dan pilih ok. 4.Pilih exit jika sudah ada pesan “External component has thrown an exception.”. 5.Buka my computer > Crysis 3 Disk 2. 6.Copy file dari folder Crack dan paste di direktori.

Crysis® 3 for PC | Origin.

Crysis 3 download torrent 15 3 Release Date: 2013 Genre: Action / Shooters Developer: Electronic Arts Publisher: Crytek Language: EN / Multi Crack: Not required System Requirements CPU: Dual core CPU RAM: 3 GB OS: Windows Vista, Windows 7 or Windows 8 Video Card: DirectX 11 graphics card with 1Gb Video RAM (Geforce GTS 450/Radeon HD 5770). Play Instructions: Install the game - Full Installation. Replace the original <GameDir>\BIN32\CRYSIS.EXE & <GameDir>\BIN64\CRYSIS.EXE files with the one from the File Archive. Play the Game! Note: Most likely this Fixed EXE also works as a No-DVD and for other languages, but this has not been confirmed yet!.

Free Download Crysis 3 Game for PC - DoubleGames.

Crysis 3 Türkçe İndir Full Son final sürüm Tek Link Drive Mega Free Torrent oyun direkt indir tüm dlc,repack türkçe yama kurulum Crysis 3 Digital Deluxe Edition.... CRACK YAPARKEN CARCK DOSYASININ İÇERİSİNDEKİLERİ OYUNUN KURULU OLDUĞU KLASÖRE ATINCA OLMUYOR.Crack dosyalarını (C ve ) oyunun kurulu olduğu C. Đây đã là phần thứ 3 trong loạt series Crysis do Crytek phát triển và EA phát hành năm 2013. Trong bài viết này, Freetuts sẽ chia sẻ link Download Crysis 3 Full miễn phí cho PC, hướng dẫn cài đặt chi tiết cho các bạn. Cấu hình PC để chơi game Crysis 3. If you want to download the crack get it here in my blog its safe and tested.. DOWNLOAD Crysis 3 Crack NOW! Watch the video demonstration below. It is quite simple and easy so just go through the instructions. If playback doesn't begin shortly, try restarting your device.

Crysis 2 and 3 Multiplayer Crack file ~ Free Download.

MegaGames - founded in 1998, is a comprehensive hardcore gaming resource covering PC, Xbox Series X, Xbox One, PS5, PS4, Nintendo Switch, Wii U, Mobile Games, News. Download below to solve your dll problem. We currently have 1 version available for this file. If you have other versions of this file, please contribute to the community by uploading that dll file. , File description: Errors related to can arise for a few different different reasons..

Crysis 3 Crack Reloaded Crack.

Download Crysis. File information File name CRYSIS.V1..ALL.VISTAX64.RAZOR19... File size 8.56 MB Mime type Stdin has more than one entry--rest ignored compressed-encoding=application/zip; charset=binary Other info Zip archive data, at least v1.0 to extract. Download. user name. I double dare you to fill this field! Popular Videos. Dolmen. Crysis-3-D.-D.-E.-No files in this folder. Sign in to add files to this folder. Main menu. Google apps.

Crysis 3 crack.

Crysis 3 è il terzo capitolo della serie di sparatutto in prima persona Crytek e ci riporta ancora una volta tra le macere di New York.... Commenti aventi ad oggetto indicazioni o richieste per il download completo del gioco saranno cancellati. Crysis 3 Update v1.3 INTERNAL-RELOADED... Copy over the cracked content from the /Crack directory. Crysis 3 Crack Download. 1.Game Rewiew. The hunted becomes the hunter in the CryEngine-powered open-world shooter Crysis 3! Players take on the role of 'Prophet' as he returns to New York in the year in 2047, only to discover that the city has been encased in a Nanodome created by the corrupt Cell Corporation. The New York City Liberty Dome is. Search for videos, audio, pictures and other files Search files.

Crysis - PC Game Trainer Cheat PlayFix No-CD No-DVD | GameCopyWorld.

May 12, 2013 · 2. Copy the cracked content from the crack folder and into the main. install folder and overwrite. 3. Block the game in your firewall and mark our cracked content as. secure/trusted in your antivirus program. 4. Start the game from C 5.

Crysis 3 Torrent Download - CroTorrents.

Crysis 3 Free Download game setup direct single link. Crysis 3 is one of very nice and perfect action and shooting games. With high graphics. Crysis 3 Overview. This is a game which is full of action and adventure. Crysis 3 is developed by. Description. The fate of the world is in your hands. New and old enemies threaten the peace you worked so hard to achieve 24 years ago. Your search for the Alpha Ceph continues, but this time you'll also need to expose the truth behind the C.E.L.L. corporation. It won't be easy, but your Nanosuit helps you clear a path to victory.

See also:

Office Tab Enterprise 10 Serial Key

Guitar Rig 5 Download Cracked Mac

Plants Vs Zombies Mac Free Full Version Crack

1 note


Video

youtube

In this video, we talk about how to set up a Ceph RADOS block device. We mount this device on a Linux client and discuss what a block device is used for and how it differs from a Ceph file system. We also touch on iSCSI and its usage with block devices, and on mirroring RADOS data. Gist with instructions https://ift.tt/3I5Vjig Please follow me on Twitter https://twitter.com/kalaspuffar Learn the basics of Java programming and software development in 5 online courses from Duke University. https://ift.tt/2QkqKvO My merchandise: https://ift.tt/3aqGTZF Join this channel to get access to perks: https://www.youtube.com/channel/UCnG-TN23lswO6QbvWhMtxpA/join Or visit my blog at: https://ift.tt/3bF6D4l Outro music: Sanaas Scylla #ceph #rados #block

0 notes

Text

Ceph Client

ceph.client.admin.keyring, ceph.bootstrap-mgr.keyring, ceph.bootstrap-osd.keyring, ceph.bootstrap-mds.keyring, ceph.bootstrap-rgw.keyring, ceph.bootstrap-rbd.keyring. Use ceph-deploy to copy the configuration file and admin key to your admin node and your Ceph Nodes so that you can use the ceph CLI without having to specify the monitor address.

Generate a minimal ceph.conf file, make a local copy, and transfer it to the client:

juju ssh ceph-mon/0 sudo ceph config generate-minimal-conf | tee ceph.conf
juju scp ceph.conf ceph-client/0:

Connect to the client:

juju ssh ceph-client/0

On the client host, install the required software, put the ceph.conf file in place, and set up the correct.

1.10 Installing a Ceph Client. To install a Ceph Client: Perform the following steps on the system that will act as a Ceph Client: If SELinux is enabled, disable it and then reboot the system. Stop and disable the firewall service. For Oracle Linux 6 or Oracle Linux 7 (where iptables is used instead of firewalld ), enter: For Oracle Linux 7, enter.

Ceph kernel client (kernel modules). Contribute to ceph/ceph-client development by creating an account on GitHub. Get rid of the releases annotation by breaking it up into two functions: prepcap which is done under the spinlock and sendcap that is done outside it.

Ceph Client List

Ceph Client Log

Ceph Client Windows

A Python client for ceph-rest-api. After learning there was an API for Ceph, it was clear to me that I was going to write a client to wrap around it and use it for various purposes. January 1, 2014.

Ceph is a massively scalable, open source, distributed storage system.

These links provide details on how to use Ceph with OpenStack:

Ceph - The De Facto Storage Backend for OpenStack (Hong Kong Summit talk)

Note

Configuring Ceph storage servers is outside the scope of this documentation.

Authentication

We recommend the cephx authentication method in the Ceph config reference. OpenStack-Ansible enables cephx by default for the Ceph client. You can choose to override this setting by using the cephx Ansible variable:
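As a minimal sketch, the override is a single boolean in the deployment's user variables. The file location in the comment is an assumption about a typical deployment, not something stated in this text:

```yaml
# Assumed location: /etc/openstack_deploy/user_variables.yml
# cephx is enabled by default; this line turns it off.
cephx: false
```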

Deploy Ceph on a trusted network if disabling cephx.

Configuration file overrides

OpenStack-Ansible provides the ceph_conf_file variable. This allows you to specify configuration file options to override the default Ceph configuration:
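An override of this kind might look like the sketch below. The variable holds the literal text of a ceph.conf; the fsid, host names, and monitor addresses are placeholders to be replaced with values from your own cluster:

```yaml
# Illustrative only: fsid, monitor names, and addresses are placeholders.
ceph_conf_file: |
  [global]
  fsid = 00000000-1111-2222-3333-444444444444
  mon_initial_members = mon1.example.local,mon2.example.local,mon3.example.local
  mon_host = 10.16.5.40,10.16.5.41,10.16.5.42
  auth_cluster_required = cephx
  auth_service_required = cephx
```

Because the value is a YAML block scalar, everything indented under the pipe is passed through verbatim as the configuration file content.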

The use of the ceph_conf_file variable is optional. By default, OpenStack-Ansible obtains a copy of ceph.conf from one of your Ceph monitors. This transfer of ceph.conf requires the OpenStack-Ansible deployment host public key to be deployed to all of the Ceph monitors. More details are available here: Deploying SSH Keys.

The following minimal example configuration sets nova and glance to use ceph pools: ephemeral-vms and images respectively. The example uses cephx authentication, and requires existing glance and cinder accounts for images and ephemeral-vms pools.
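A sketch of such a configuration, assuming the usual OpenStack-Ansible variable names for the glance store and the nova ephemeral pool (verify them against the role defaults for your release):

```yaml
# Store glance images in Ceph (RBD) rather than on local files.
glance_default_store: rbd
# Place nova ephemeral disks in the ephemeral-vms pool.
nova_libvirt_images_rbd_pool: ephemeral-vms
```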

For a complete example of how to provide the necessary configuration for a Ceph backend without SSH access to the Ceph monitors, see the Ceph keyring from file example.

Extra client configuration files

Deployers can specify extra Ceph configuration files to support multiple Ceph cluster backends via the ceph_extra_confs variable.
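In its simplest form, the variable could be sketched as a list of source and destination pairs. The file names below are hypothetical:

```yaml
# Each entry copies one extra ceph.conf from the deployment host (src)
# to the target host (dest). Paths are hypothetical examples.
ceph_extra_confs:
  - src: "/opt/rbd-1.conf"
    dest: "/etc/ceph/rbd-1.conf"
  - src: "/opt/rbd-2.conf"
    dest: "/etc/ceph/rbd-2.conf"
```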

These config file sources must be present on the deployment host.

Alternatively, deployers can specify more options in ceph_extra_confs to deploy keyrings, ceph.conf files, and configure libvirt secrets.
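A fuller entry might look like the following sketch. The option names reflect the ceph_client role as best recalled and should be checked against the role documentation; the paths, client name, monitor address, and the cinder_ceph_client_uuid2 variable are assumptions for illustration:

```yaml
ceph_extra_confs:
  - src: "/etc/openstack_deploy/ceph2.conf"       # extra ceph.conf on the deployment host
    dest: "/etc/ceph/ceph2.conf"                  # where it lands on the target host
    mon_host: 192.168.1.2                         # placeholder monitor address
    client_name: cinder2                          # hypothetical Ceph client name
    keyring_src: /etc/openstack_deploy/ceph2.client.cinder2.keyring
    keyring_dest: /etc/ceph/ceph2.client.cinder2.keyring
    secret_uuid: "{{ cinder_ceph_client_uuid2 }}" # hypothetical variable holding the libvirt secret UUID
```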

The primary aim of this feature is to deploy multiple ceph clusters as cinder backends and enable nova/libvirt to mount block volumes from those backends. These settings do not override the normal deployment of ceph client and associated setup tasks.

Deploying multiple ceph clusters as cinder backends requires the following adjustments to each backend in cinder_backends.

The dictionary keys rbd_ceph_conf, rbd_user, and rbd_secret_uuid must be unique for each ceph cluster to be used as a cinder_backend.
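The per-backend adjustments could be sketched as below for a second cluster. The backend name, pool, user, and UUID variable are hypothetical; only the three keys named above are required to differ between clusters:

```yaml
cinder_backends:
  rbd_cluster2:                                       # hypothetical backend name
    volume_driver: cinder.volume.drivers.rbd.RBDDriver
    volume_backend_name: volumes2
    rbd_pool: cinder_volumes_2                        # placeholder pool name
    rbd_ceph_conf: /etc/ceph/ceph2.conf               # unique per cluster
    rbd_user: cinder2                                 # unique per cluster
    rbd_secret_uuid: "{{ cinder_ceph_client_uuid2 }}" # unique per cluster
```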

Monitors

The Ceph Monitor maintains a master copy of the cluster map. OpenStack-Ansible provides the ceph_mons variable and expects a list of IP addresses for the Ceph Monitor servers in the deployment:
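For example, with three monitors the list might be written as follows (the addresses are placeholders):

```yaml
# One entry per Ceph Monitor; replace with your monitors' IP addresses.
ceph_mons:
  - 10.16.5.40
  - 10.16.5.41
  - 10.16.5.42
```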

Configure os_gnocchi with ceph_client

If the os_gnocchi role is going to utilize the ceph_client role, the following configurations need to be added to the user variable file:
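A sketch of what that addition might look like is shown below. The variable and key names are recalled from the ceph_client role and are not confirmed by this text, so treat them as assumptions and check them against the role defaults for your release:

```yaml
# Assumed structure: registers gnocchi as an extra consumer of the Ceph client.
ceph_extra_components:
  - component: gnocchi_api
    package: "{{ python_ceph_packages }}"
    client:
      - name: "{{ gnocchi_ceph_client }}"
    service: "{{ ceph_gnocchi_service_names }}"
```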

0 notes