#Claude 3 Haiku


#AWS#Amazon Bedrock#AI#Generative AI#Anthropic Claude 3 Haiku#Anthropic Claude 3#Anthropic#Claude 3 Haiku#Claude 3#Claude#Optical Character Recognition#OCR


On Hatred

My Query:

What are some common views, both pro and con, towards the attitude of "I don't believe in hating anyone"?

~~~~~

Claude-3-Haiku’s Response:

The attitude of "I don't believe in hating anyone" is a complex and multifaceted one that has both positive and negative perspectives associated with it.

Potential Positives:

Promotes compassion and understanding: This view suggests a desire to avoid harboring deep-seated animosity or malice towards other human beings, even in the face of disagreement or conflict.

Rejects absolutist thinking: It indicates an openness to seeing the humanity in others and avoiding the tendency to demonize or completely write off those with different beliefs or behaviors.

Encourages de-escalation: This attitude can help diffuse tensions and promote more constructive dialogue, rather than fueling cycles of retaliation and hatred.

Aligns with ethical/spiritual principles: For some, this view may stem from religious, philosophical or moral convictions about the importance of love, forgiveness and non-violence.

Potential Negatives:

Can come across as naive or simplistic: Critics may argue that this attitude fails to grapple with the reality of genuine evil, oppression or unforgivable actions in the world.

Risks moral relativism: Taken to an extreme, this view could be seen as refusing to condemn clearly unethical behaviors or hold people accountable for their actions.

May neglect valid feelings of anger/outrage: Denouncing "hate" entirely could invalidate justified emotions in response to injustice, cruelty or victimization.

Ignores power dynamics: Some may feel this attitude glosses over the need for marginalized groups to actively resist and condemn their oppressors.

Can enable harm: In certain cases, a refusal to "hate" could enable the continuation of abusive or destructive behaviors by failing to set firm boundaries.

Ultimately, the validity of this attitude likely depends on the specific context, the degree to which it is applied, and how it is balanced against other ethical considerations. A more nuanced, situation-specific approach is often warranted, rather than an absolutist stance of never feeling or expressing any form of hatred. The key is to avoid hatred becoming an all-consuming force, while still recognizing its potential utility in certain circumstances.


5 Best Large Language Models (LLMs) (September 2024)

New Post has been published on https://thedigitalinsider.com/5-best-large-language-models-llms-september-2024/


The field of artificial intelligence is evolving at a breathtaking pace, with large language models (LLMs) leading the charge in natural language processing and understanding. As the field advances, a new generation of LLMs has emerged, each pushing the boundaries of what’s possible in AI.

In this overview of the best LLMs, we’ll explore the key features, benchmark performances, and potential applications of these cutting-edge language models, offering insights into how they’re shaping the future of AI technology.

Anthropic’s Claude 3 models, released in March 2024, represented a significant leap forward in artificial intelligence capabilities. This family of LLMs offers enhanced performance across a wide range of tasks, from natural language processing to complex problem-solving.

Claude 3 comes in three distinct versions, each tailored for specific use cases:

Claude 3 Opus: The flagship model, offering the highest level of intelligence and capability.

Claude 3 Sonnet: A balanced option, providing a mix of speed and advanced functionality.

Claude 3 Haiku: The fastest and most compact model, optimized for quick responses and efficiency.

Key Capabilities of Claude 3:

Enhanced Contextual Understanding: Claude 3 demonstrates improved ability to grasp nuanced contexts, reducing unnecessary refusals and better distinguishing between potentially harmful and benign requests.

Multilingual Proficiency: The models show significant improvements in non-English languages, including Spanish, Japanese, and French, enhancing their global applicability.

Visual Interpretation: Claude 3 can analyze and interpret various types of visual data, including charts, diagrams, photos, and technical drawings.

Advanced Code Generation and Analysis: The models excel at coding tasks, making them valuable tools for software development and data science.

Large Context Window: Claude 3 features a 200,000 token context window, with potential for inputs over 1 million tokens for select high-demand applications.
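To make the context-window figure concrete, here is a minimal sketch of checking whether a prompt is likely to fit. The 200,000-token limit comes from the article; the four-characters-per-token ratio and the reserved output budget are assumptions for illustration, and a real check would use the provider's own tokenizer.

```python
# Rough check of whether a text is likely to fit in a model's context window.
CONTEXT_WINDOW_TOKENS = 200_000  # figure quoted in the article for Claude 3
CHARS_PER_TOKEN = 4  # assumption: rough average for English text, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Return True if the estimated prompt still leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

Under these assumptions, a 400,000-character document (roughly 100,000 tokens) fits comfortably, while an 800,000-character one does not once output space is reserved.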

Benchmark Performance:

Claude 3 Opus has demonstrated impressive results across various industry-standard benchmarks:

MMLU (Massive Multitask Language Understanding): 86.7%

GSM8K (Grade School Math 8K): 94.9%

HumanEval (coding benchmark): 90.6%

GPQA (Graduate-Level Google-Proof Q&A): 66.1%

MATH (advanced mathematical reasoning): 53.9%

These scores often surpass those of other leading models, including GPT-4 and Google’s Gemini Ultra, positioning Claude 3 as a top contender in the AI landscape.

Claude 3 Benchmarks (Anthropic)

Claude 3 Ethical Considerations and Safety

Anthropic has placed a strong emphasis on AI safety and ethics in the development of Claude 3:

Reduced Bias: The models show improved performance on bias-related benchmarks.

Transparency: Efforts have been made to enhance the overall transparency of the AI system.

Continuous Monitoring: Anthropic maintains ongoing safety monitoring, with Claude 3 achieving an AI Safety Level 2 rating.

Responsible Development: The company remains committed to advancing safety and neutrality in AI development.

Claude 3 represents a significant advancement in LLM technology, offering improved performance across various tasks, enhanced multilingual capabilities, and sophisticated visual interpretation. Its strong benchmark results and versatile applications make it a compelling choice for an LLM.


OpenAI’s GPT-4o (“o” for “omni”) offers improved performance across various tasks and modalities, representing a new frontier in human-computer interaction.

Key Capabilities:

Multimodal Processing: GPT-4o can accept inputs and generate outputs in multiple formats, including text, audio, images, and video, allowing for more natural and versatile interactions.

Enhanced Language Understanding: The model matches GPT-4 Turbo’s performance on English text and code tasks while offering superior performance in non-English languages.

Real-time Interaction: GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, comparable to human conversation response times.

Improved Vision Processing: The model demonstrates enhanced capabilities in understanding and analyzing visual inputs compared to previous versions.

Large Context Window: GPT-4o features a 128,000 token context window, allowing for processing of longer inputs and more complex tasks.

Performance and Efficiency:

Speed: GPT-4o is twice as fast as GPT-4 Turbo.

Cost-efficiency: It is 50% cheaper in API usage compared to GPT-4 Turbo.

Rate limits: GPT-4o has five times higher rate limits compared to GPT-4 Turbo.

GPT-4o benchmarks (OpenAI)

GPT-4o’s versatile capabilities make it suitable for a wide range of applications, including:

Natural language processing and generation

Multilingual communication and translation

Image and video analysis

Voice-based interactions and assistants

Code generation and analysis

Multimodal content creation

Availability:

ChatGPT: Available to both free and paid users, with higher usage limits for Plus subscribers.

API Access: Available through OpenAI’s API for developers.

Azure Integration: Microsoft offers GPT-4o through Azure OpenAI Service.

GPT-4o Safety and Ethical Considerations

OpenAI has implemented various safety measures for GPT-4o:

Built-in safety features across modalities

Filtering of training data and refinement of model behavior

New safety systems for voice outputs

Evaluation according to OpenAI’s Preparedness Framework

Compliance with voluntary commitments to responsible AI development

GPT-4o offers enhanced capabilities across various modalities while maintaining a focus on safety and responsible deployment. Its improved performance, efficiency, and versatility make it a powerful tool for a wide range of applications, from natural language processing to complex multimodal tasks.


Llama 3.1 is the latest family of large language models by Meta and offers improved performance across various tasks and modalities, challenging the dominance of closed-source alternatives.

Llama 3.1 is available in three sizes, catering to different performance needs and computational resources:

Llama 3.1 405B: The most powerful model with 405 billion parameters

Llama 3.1 70B: A balanced model offering strong performance

Llama 3.1 8B: The smallest and fastest model in the family

Key Capabilities:

Enhanced Language Understanding: Llama 3.1 demonstrates improved performance in general knowledge, reasoning, and multilingual tasks.

Extended Context Window: All variants feature a 128,000 token context window, allowing for processing of longer inputs and more complex tasks.

Multimodal Processing: The released Llama 3.1 models take text input and produce text output; Meta has described image, video, and speech capabilities as still under development.

Advanced Tool Use: Llama 3.1 excels at tasks involving tool use, including API interactions and function calling.

Improved Coding Abilities: The models show enhanced performance in coding tasks, making them valuable for developers and data scientists.

Multilingual Support: Llama 3.1 offers improved capabilities across eight languages, enhancing its utility for global applications.
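The tool use and function calling mentioned in the list above can be sketched roughly as follows. This is a generic illustration, not Meta's actual format: the JSON shape with `name` and `arguments`, the `get_weather` tool, and the `dispatch_tool_call` helper are all hypothetical.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: a stand-in for a real API call."""
    return f"Sunny in {city}"


# Registry mapping tool names to Python callables (illustrative only).
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> str:
    """Parse a JSON tool call emitted by a model and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    # Keyword arguments come straight from the model's JSON payload.
    return TOOLS[name](**call.get("arguments", {}))
```

In a real application the tool's result would be fed back to the model as a new message so it can compose the final answer.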

Llama 3.1 Benchmark Performance

Llama 3.1 405B has shown impressive results across various benchmarks:

MMLU (Massive Multitask Language Understanding): 88.6%

HumanEval (coding benchmark): 89.0%

GSM8K (Grade School Math 8K): 96.8%

MATH (advanced mathematical reasoning): 73.8%

ARC Challenge: 96.9%

GPQA (Graduate-Level Google-Proof Q&A): 51.1%

These scores demonstrate Llama 3.1 405B’s competitive performance against top closed-source models in various domains.

Llama 3.1 benchmarks (Meta)

Availability and Deployment:

Open Source: Llama 3.1 models are available for download on Meta’s platform and Hugging Face.

API Access: Available through various cloud platforms and partner ecosystems.

On-Premises Deployment: Can be run locally or on-premises without sharing data with Meta.

Llama 3.1 Ethical Considerations and Safety Features

Meta has implemented various safety measures for Llama 3.1:

Llama Guard 3: A high-performance input and output moderation model.

Prompt Guard: A tool for protecting LLM-powered applications from malicious prompts.

Code Shield: Provides inference-time filtering of insecure code produced by LLMs.

Responsible Use Guide: Offers guidelines for ethical deployment and use of the models.

Llama 3.1 marks a significant milestone in open-source AI development, offering state-of-the-art performance while maintaining a focus on accessibility and responsible deployment. Its improved capabilities position it as a strong competitor to leading closed-source models, transforming the landscape of AI research and application development.


Announced in February 2024 and made available for public preview in May 2024, Google’s Gemini 1.5 Pro also represented a significant advancement in AI capabilities, offering improved performance across various tasks and modalities.

Key Capabilities:

Multimodal Processing: Gemini 1.5 Pro can process and generate content across multiple modalities, including text, images, audio, and video.

Extended Context Window: The model features a massive context window of up to 1 million tokens, expandable to 2 million tokens for select users. This allows for processing of extensive data, including 11 hours of audio, 1 hour of video, 30,000 lines of code, or entire books.

Advanced Architecture: Gemini 1.5 Pro uses a Mixture-of-Experts (MoE) architecture, selectively activating the most relevant expert pathways within its neural network based on input types.

Improved Performance: Google claims that Gemini 1.5 Pro outperforms its predecessor (Gemini 1.0 Pro) in 87% of the benchmarks used to evaluate large language models.

Enhanced Safety Features: The model underwent rigorous safety testing before launch, with robust technologies implemented to mitigate potential AI risks.
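The Mixture-of-Experts idea mentioned in the list above can be illustrated with a toy router. This is a sketch of the general technique only, not Gemini's architecture: in a real model the gate scores come from a learned router applied to the input, whereas here they are passed in directly, and the experts are plain Python functions.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_scores, top_k=2):
    """Route input x to the top_k highest-scoring experts and mix their outputs."""
    probs = softmax(gate_scores)
    # Indices of the top_k experts by gate probability.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    # Only the chosen experts are evaluated; the rest are skipped entirely.
    return sum(probs[i] / norm * experts[i](x) for i in chosen)
```

The point of the design is that only `top_k` experts run per input, so compute per token stays well below what the total parameter count would suggest.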

Gemini 1.5 Pro Benchmarks and Performance

Gemini 1.5 Pro has demonstrated impressive results across various benchmarks:

MMLU (Massive Multitask Language Understanding): 85.9% (5-shot setup), 91.7% (majority vote setup)

GSM8K (Grade School Math): 91.7%

MATH (Advanced mathematical reasoning): 58.5%

HumanEval (Coding benchmark): 71.9%

VQAv2 (Visual Question Answering): 73.2%

MMMU (Multi-discipline reasoning): 58.5%
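The "majority vote setup" listed for MMLU presumably refers to sampling several answers per question and keeping the most frequent one. The article does not describe Google's exact protocol, so the following is only a minimal sketch of that general idea, with `majority_vote` as a hypothetical helper.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer among several sampled answers."""
    if not answers:
        raise ValueError("need at least one answer")
    return Counter(answers).most_common(1)[0][0]
```

Aggregating several samples this way tends to smooth out occasional wrong answers, which is one reason a majority-vote score can be higher than a single-sample score.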

Google reports that Gemini 1.5 Pro outperforms its predecessor (Gemini 1.0 Ultra) in 16 out of 19 text benchmarks and 18 out of 21 vision benchmarks.

Gemini 1.5 Pro benchmarks (Google)

Key Features and Capabilities:

Audio Comprehension: Analysis of spoken words, tone, mood, and specific sounds.

Video Analysis: Processing of uploaded videos or videos from external links.

System Instructions: Users can guide the model’s response style through system instructions.

JSON Mode and Function Calling: Enhanced structured output capabilities.

Long-context Learning: Ability to learn new skills from information within its extended context window.

Availability and Deployment:

Google AI Studio for developers

Vertex AI for enterprise customers

Public API access


Released in August 2024 by xAI, Elon Musk’s artificial intelligence company, Grok-2 represents a significant advancement over its predecessor, offering improved performance across various tasks and introducing new capabilities.

Model Variants:

Grok-2: The full-sized, more powerful model

Grok-2 mini: A smaller, more efficient version

Key Capabilities:

Enhanced Language Understanding: Improved performance in general knowledge, reasoning, and language tasks.

Real-Time Information Processing: Access to and processing of real-time information from X (formerly Twitter).

Image Generation: Powered by Black Forest Labs’ FLUX.1 model, allowing creation of images based on text prompts.

Advanced Reasoning: Enhanced abilities in logical reasoning, problem-solving, and complex task completion.

Coding Assistance: Improved performance in coding tasks.

Multimodal Processing: Handling and generation of content across multiple modalities, including text, images, and potentially audio.

Grok-2 Benchmark Performance

Grok-2 has shown impressive results across various benchmarks:

GPQA (Graduate-Level Google-Proof Q&A): 56.0%

MMLU (Massive Multitask Language Understanding): 87.5%

MMLU-Pro: 75.5%

MATH: 76.1%

HumanEval (coding benchmark): 88.4%

MMMU (Massive Multi-discipline Multimodal Understanding): 66.1%

MathVista: 69.0%

DocVQA: 93.6%

These scores demonstrate significant improvements over Grok-1.5 and position Grok-2 as a strong competitor to other leading AI models.

Grok-2 benchmarks (xAI)

Availability and Deployment:

X Platform: Grok-2 mini is available to X Premium and Premium+ subscribers.

Enterprise API: Both Grok-2 and Grok-2 mini will be available through xAI’s enterprise API.

Integration: Plans to integrate Grok-2 into various X features, including search and reply functions.

Unique Features:

“Fun Mode”: A toggle for more playful and humorous responses.

Real-Time Data Access: Unlike many other LLMs, Grok-2 can access current information from X.

Minimal Restrictions: Designed with fewer content restrictions compared to some competitors.

Grok-2 Ethical Considerations and Safety Concerns

Grok-2’s release has raised concerns regarding content moderation, misinformation risks, and copyright issues. xAI has not publicly detailed specific safety measures implemented in Grok-2, leading to discussions about responsible AI development and deployment.

Grok-2 represents a significant advancement in AI technology, offering improved performance across various tasks and introducing new capabilities like image generation. However, its release has also sparked important discussions about AI safety, ethics, and responsible development.


The Bottom Line on LLMs

As we’ve seen, the latest advancements in large language models have significantly elevated the field of natural language processing. These LLMs, including Claude 3, GPT-4o, Llama 3.1, Gemini 1.5 Pro, and Grok-2, represent the pinnacle of AI language understanding and generation. Each model brings unique strengths to the table, from enhanced multilingual capabilities and extended context windows to multimodal processing and real-time information access. These innovations are not just incremental improvements but transformative leaps that are reshaping how we approach complex language tasks and AI-driven solutions.

The benchmark performances of these models underscore their exceptional capabilities, often surpassing human-level performance in various language understanding and reasoning tasks. This progress is a testament to the power of advanced training techniques, sophisticated neural architectures, and vast amounts of diverse training data. As these LLMs continue to evolve, we can expect even more groundbreaking applications in fields such as content creation, code generation, data analysis, and automated reasoning.

However, as these language models become increasingly powerful and accessible, it’s crucial to address the ethical considerations and potential risks associated with their deployment. Responsible AI development, robust safety measures, and transparent practices will be key to harnessing the full potential of these LLMs while mitigating potential harm. As we look to the future, the ongoing refinement and responsible implementation of these large language models will play a pivotal role in shaping the landscape of artificial intelligence and its impact on society.



ChatGPT Alternatives You Must Try in 2024

ChatGPT is a leading large language model known for its broad applications and customizability, allowing innovative solutions. If you find ChatGPT too broad or complex, or if you want to explore different data sets, consider alternative large language models. While ChatGPT dominates the AI text generation market with over 100 million weekly users, other options offer unique features and user experiences not covered by ChatGPT. TechAhead can help you explore these alternatives.

Now, let’s explore these alternatives to uncover their unique features. Outlined below are seven ChatGPT alternatives for anyone who is looking for a leg up on their projects.

1. Google Gemini (Formerly Bard)

Google Gemini (Bard) is Google’s answer to ChatGPT. It is an experimental AI conversational service powered by Google’s Gemini Pro 1.0.

Google Gemini is a free AI tool that allows unlimited questions and is powered by Google's advanced Gemini Pro model. It offers features like editing prompts, exporting answers to Google Docs and Gmail, listening to responses, and performing double-checks via Google search. It also includes image generation and works faster than ChatGPT for web searches.

Google Gemini Advanced, priced at $19.99 per month, is described as Google's most capable Pro 1.5 model. It excels in handling complex tasks such as coding and logical reasoning. Users can upload spreadsheets, Google Docs, and PDFs, and benefit from integration with Google’s apps. Additionally, it provides 2 TB of Google One storage and includes a 1-month free trial.

2. Microsoft Copilot (Formerly Bing Chat)

Microsoft Copilot integrates well with Microsoft products, especially Edge, and is accessible directly from the app menu. It facilitates on-the-go interactions, enabling users to ask questions about web content.

Copilot is a free, ad-supported AI tool with three chat modes for different interaction settings. It features image generation and integrates with Microsoft products like the Edge Browser and Skype. The Microsoft Edge app menu also allows users to ask questions about web content.

Copilot Pro, priced at $20/month, offers priority GPT-4 and GPT-4 Turbo access and integrates with Microsoft 365 apps like Word and Excel. Available from January 2024 in select countries including the U.S. and U.K., Copilot for Microsoft 365 costs $30/user/month for commercial clients, featuring privacy protections, organizational resource access, and company document queries.

3. Jasper.ai

Jasper.ai is a conversational AI engine that uses large language models developed by OpenAI, Google, Anthropic, and others, including their own customized model.

Jasper.ai starts at $49 per month with a 7-day free trial available. The Pro version costs $69 per month, and custom pricing is available for Business plans. Key features include a Brand voice tool, an instant marketing campaign generator, and a long-form editor. It also offers over 50 pre-built templates, a plagiarism checker through Copyscape integration, and support for 30 languages. Jasper.ai includes a Chrome extension and AI image generation capabilities.

4. Claude

Claude (by Anthropic) is an AI assistant capable of performing a wide range of conversational and text-processing tasks.

Claude offers several pricing options. Claude Pro costs $20 per person per month, while Claude Team is priced at $25 per person per month. For token-based pricing, Claude 3.5 Sonnet is $3 per million tokens, Claude 3 Opus is $15 per million tokens, and Claude 3 Haiku is $0.25 per million tokens. Claude is noted for its stronger accuracy and superior creativity compared to other models.
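As a rough illustration of how the token-based prices above translate into a bill, here is a minimal sketch. The per-million-token figures are the ones quoted in the article; the dictionary keys are hypothetical labels rather than official API model identifiers, and the sketch assumes a single flat rate even though providers typically bill input and output tokens at different rates.

```python
# Per-million-token prices in USD, as quoted in the article.
# Keys are illustrative labels, not official API model identifiers.
PRICE_PER_MILLION = {
    "claude-3.5-sonnet": 3.00,
    "claude-3-opus": 15.00,
    "claude-3-haiku": 0.25,
}


def estimate_cost(model: str, tokens: int) -> float:
    """Estimate the USD cost of processing `tokens` tokens with `model`."""
    return PRICE_PER_MILLION[model] * tokens / 1_000_000
```

Under these figures, 2 million tokens through Claude 3 Haiku would come to about $0.50, against $30 for the same volume through Claude 3 Opus.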

5. Perplexity

Perplexity.AI is designed to understand user queries through follow-up questions, summarize relevant findings, and pull information from diverse sources to provide a comprehensive view.

Perplexity offers two pricing plans. The Standard Plan provides limited usage with Copilot and GPT-3 as the default model. The Professional Plan costs $20 per month or $200 per year, offering nearly unlimited usage, GPT-4 as the default model, and Pro support. API pricing ranges from $0.2 to $1 per million tokens, depending on the model. Perplexity is known for its versatility and comprehensive capabilities, allowing users to ask follow-up questions and source information in real-time with links to all sources. It also features advanced data analysis and predictive analytics.

6. Elicit

Elicit is a platform that calls itself an AI research assistant, claiming it can help with research and other tasks.

Elicit offers a Free Plan that includes unlimited searches across 125 million papers, the ability to summarize and chat with up to four papers at once, extract data from 10 PDFs monthly, and view sources for answers. The Plus Plan costs $10 per month, while the Pro Plan is $42 per month, billed annually. Elicit is well-suited for automating data extraction tasks and allows exporting to CSV, RIS, and BIB formats. The Pro Plan provides the ability to extract information from 1,200 PDFs annually (100 monthly) and includes unlimited high-accuracy mode columns.

7. Learnt.ai

Learnt.ai has been specifically created for the needs of education professionals.

Learnt.ai offers a freemium model with basic features available for free. Paid plans start at $9 per month and go up to $99 per month. It is tailored for educational professionals, helping with creating lesson plans, learning objectives, assessment questions, and other educational resources. The tool is designed to augment rather than replace the user's creativity, saving time and effort in content creation.


Claude 3.5 Sonnet: A Game-Changer for LLMs

Anthropic's latest release, Claude 3.5 Sonnet, is shaking things up in the world of LLMs.

1. Performance Frontier Pushed

Anthropic's three LLM models—Haiku, Sonnet, and Opus—are designed for different needs.

Sonnet 3.5 outperforms Opus 3.0, being twice as fast and cost-effective.

The relentless focus on performance raises the question: Are LLMs becoming a commodity?

2. Speed Unlocks New Use Cases

Faster LLMs enable agentic use cases, where the model reasons step-by-step.

Sonnet 3.5 excels in coding tasks, analyzing code and executing it efficiently.

Speed improvements broaden possibilities for real-time applications.

3. Moving Beyond Chatbots

Claude Sonnet 3.5 introduces Artifacts, allowing side-by-side output adjustments.

This feature streamlines user interactions and project management.

UI updates make Claude more intuitive and user-friendly.

Anthropic's Claude 3.5 Sonnet sets new standards in performance and usability for LLMs. Give it a try and see how it can transform your workflow!

#artificial intelligence#automation#machine learning#business#digital marketing#professional services#marketing#web design#web development#social media#tech#Technology


OpenAI unveils GPT-4o mini, its most affordable and compact AI model

The new chatbot is fast and inexpensive. Access to the AI model will open at the end of July.

On July 18, OpenAI presented GPT-4o mini, a simplified version of GPT-4o. According to the company, the new model outperforms earlier language models on a number of tests, is smaller, and is the most affordable AI among all of the brand's products. GPT-4o mini handles a broad range of tasks at low cost and is well suited to use in various applications, processing large amounts of context, and delivering fast responses in real time.

GPT-4o mini supports text and image input, with video and audio support coming soon. The model can process up to 128,000 input tokens and up to 16,000 output tokens per request, and its knowledge extends to October 2023. Thanks to an improved tokenizer shared with the more powerful GPT-4o, the new model handles non-English text more economically.

The new AI reportedly outperforms not only other OpenAI products on some tasks but also competitors' models. For example, on reasoning tests GPT-4o mini scores 82% on MMLU, while Gemini Flash and Claude Haiku score 77.9% and 73.8% respectively. The chatbot also stood out on math and coding tasks, scoring 87% on MGSM versus 75.5% for Gemini Flash and 71.7% for Claude Haiku. The picture is similar for multimodal reasoning, where GPT-4o mini beats the same competitors by 3 and 9 percentage points respectively.

Read more: https://7ooo.ru/group/2024/07/19/774-openai-predstavila-gpt-4o-mini-samuyu-dostupnuyu-i-kompaktnuyu-ii-model-grss-325449411.html


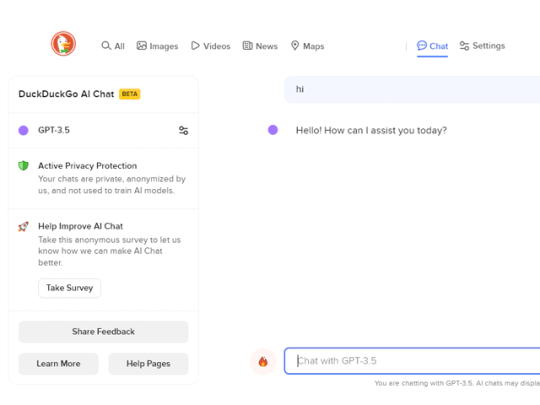

If you want your conversations with AI to stay completely private, Duck.ai is the choice for you!

At a time when concern about data privacy is growing, DuckDuckGo has taken a big step toward protecting user privacy by launching its AI chat platform: https://duckduckgo.com/aichat. This service lets users interact with popular AI chatbots while keeping their data secure and protected.

Duck.ai, which is available worldwide, has a clean, user-friendly interface and lets users choose among four AI models: two closed-source models (GPT-3.5 Turbo from OpenAI and Claude 3 Haiku from Anthropic) and two open-source models (Llama-3 70B from Meta and Mixtral 8x7b from Mistral AI). What sets DuckDuckGo AI Chat apart is its firm commitment to user privacy.

Neither DuckDuckGo nor the chatbot providers can use user data to train their models, which keeps interactions fully private and anonymous. In addition, DuckDuckGo strips metadata such as the server address or IP address.

The company has agreements with all model providers to ensure that any saved chats are completely deleted within 30 days and that no conversation held on the platform can be used to train or improve the models. This makes protecting privacy easier than adjusting the privacy settings of each service separately.

In a world where online services grow hungrier for user data by the day, DuckDuckGo AI Chat is a breath of fresh air. The company's commitment to privacy is a direct response to growing concerns about data collection and use in the AI industry. By offering a private, anonymous platform for interacting with AI chatbots, DuckDuckGo is setting a new standard for the industry.

DuckDuckGo's AI service is free within a daily limit, and the company plans to launch a paid tier that reduces or removes the limits. The service is designed to complement the company's search engine, letting users move between search and AI chat for a more complete search experience.

DuckDuckGo explains:

We see AI chat and search as complementary tools that can help users find what they are looking for, especially when exploring new topics. Whether you start with AI chat to gain initial insights or use search for deeper information, both tools can guide you effectively.

Combining AI chat with DuckDuckGo's private search improves users' workflow. Users can start with AI chat to pose their initial questions and then follow up with traditional searches for more detailed information, or do the reverse, depending on their needs.

As the AI industry continues to evolve, privacy will remain one of users' main concerns. With the launch of DuckDuckGo AI Chat, the company has taken a major step toward providing a private and secure platform for interacting with AI chatbots and has cemented its position as a pioneer in privacy-protecting technology.


is this* Japanese sentence correct?

GPT-3.5 Turbo 👎 argues that it is

Claude 3 Haiku 👍 answers the question in Japanese

Llama 3 70B 👍 ok

Mixtral 8x7B 😱👎👎 invents own grammar to justify the error


Try DuckDuckGo AI Chat for Private Conversations

DuckDuckGo AI Chat

AI chatbots are the latest addition from DuckDuckGo, the search engine built around user privacy. Its newest product, DuckDuckGo AI Chat, prioritises user anonymity while letting users talk to some of the most capable language models available. As a privacy-focused alternative to existing chatbot services, it marks a notable shift in the AI field.

DuckDuckGo AI Search

DuckDuckGo AI Chat offers a choice of chat models, each with its own characteristics and capabilities. The four models currently available are:

OpenAI’s GPT-3.5 Turbo

This powerhouse model is known for translating languages, generating original text in many formats, and giving insightful answers to your questions. It is a go-to tool for ideation, producing many kinds of creative content, and getting a broad grasp of a subject.

Claude 3 Haiku by Anthropic

This model gives top priority to factual accuracy and safety. It is good at summarising complicated subjects and performs well where dependable information retrieval matters. Think of Claude as your go-to helper for cutting through the clutter and finding reliable sources for your research.

Meta’s Llama 3 70B

Meta’s Llama 3 70B, an open-source model, balances creativity and factuality. It can translate between languages, produce a variety of creative text formats, and give informative answers to your questions, making it a useful tool for most tasks.

Mixtral 8x7B by Mistral AI

Mixtral, another open-source model, stands out for its code-focused features. It can translate between programming languages, write various kinds of code, and give informative answers to your programming questions. Mixtral may be the ideal choice if you’re a developer looking for an intelligent assistant.

AI DuckDuckGo

With this initial selection, DuckDuckGo AI Chat caters to a range of user needs. There is an AI model ready to interact with you on your terms, whether you’re looking for factual information, creative inspiration, or just a thought-provoking conversation partner.

The Foundation of DuckDuckGo

Its unrelenting dedication to user privacy is one of DuckDuckGo AI Chat’s most important features. In contrast to a lot of other chatbot platforms, DuckDuckGo guarantees that every communication is 100 percent anonymous. How they accomplish this is as follows:

IP Masking

DuckDuckGo acts as an intermediary between you and the chat models. Because the queries are sent from DuckDuckGo’s servers rather than from your device, your IP address is hidden from the model providers, which effectively anonymises your interaction.
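
As a rough illustration of the idea, the sketch below shows how a proxy might drop identifying details before forwarding a prompt. It is not DuckDuckGo’s actual implementation: the function name, the header list, and the request shape are all assumptions made for this example.

```python
# Headers that could reveal who the end user is. This list is an illustrative
# assumption, not DuckDuckGo's actual configuration.
IDENTIFYING_HEADERS = {"x-forwarded-for", "x-real-ip", "forwarded", "cookie", "user-agent"}


def build_upstream_request(client_ip: str, headers: dict, prompt: str) -> dict:
    """Return the request a hypothetical proxy would forward to a model provider."""
    safe_headers = {
        name: value
        for name, value in headers.items()
        if name.lower() not in IDENTIFYING_HEADERS
    }
    # client_ip is deliberately not copied into the result: the provider only
    # sees the proxy's own address as the source of the connection.
    return {"headers": safe_headers, "body": {"prompt": prompt}}


request = build_upstream_request(
    client_ip="203.0.113.7",  # documentation-only address (RFC 5737)
    headers={
        "Content-Type": "application/json",
        "Cookie": "session=abc",
        "X-Forwarded-For": "203.0.113.7",
    },
    prompt="Summarise this article",
)
print(request)
```

In this sketch only the content type and the prompt itself reach the provider; anything that could tie the request to a particular user stays at the proxy.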

No Retention of Data

DuckDuckGo’s servers don’t keep a record of any chat conversations. The underlying model suppliers may temporarily store interactions for system maintenance, but they are contractually required to delete this data within 30 days, and it cannot be traced back to you.

Model Supplier Agreements

DuckDuckGo has strict agreements in place with every chat model supplier. To further protect your privacy, these agreements prohibit the use of the anonymised chat data for model training or improvement.

Interface That’s Easy to Use for Smooth Communication

It’s easy to engage with AI models thanks to DuckDuckGo AI Chat’s intuitive UI. These are its main features:

Model Selection

During the course of your conversation, you are free to select the chat model that best meets your needs. Just pick your favourite by clicking on the name of the model that is currently visible in the sidebar.

Preset Prompts

DuckDuckGo has pre-programmed prompts to help you get started with typical tasks such as studying a topic, sending an email, or obtaining technical assistance. In addition to helping you make the most of the AI model, these prompts serve as a launchpad for discussion.

Clear Chat History

To maintain privacy, all it takes is a single button click to clear your chat history. By just clicking the “Fire” button, you can erase the entirety of your chat history and guarantee that your interactions stay totally private.

The Prospects for AI Chat on DuckDuckGo

Though it’s still early, DuckDuckGo AI Chat’s future suggests some fascinating opportunities. A preview of what to expect is as follows:

Adding to the Model Library

In order to provide customers with an even greater selection of AI personalities, DuckDuckGo intends to complement their current chat model library with additional models.

Improved Browser Integration

DuckDuckGo is investigating a direct browser integration of AI Chat to create a more seamless experience and make it even simpler to use these potent language models.

Power User Subscription Packages

DuckDuckGo is working on a paid subscription tier that would give users access to more advanced (and possibly more expensive) chat models as well as higher daily limits.

An Emerging Era for AI Interaction with a Privacy Focus

For those concerned about their privacy, DuckDuckGo AI Chat is a big step forward. By providing access to sophisticated language models without sacrificing user privacy, DuckDuckGo lets people explore what AI can do on their own terms.

Read more on Govindhtech.com

0 notes

Text

DuckDuckGo search engine launches an AI chatbot

The search engine DuckDuckGo, known for its commitment to protecting user privacy, has introduced a new service: the AI chat Duck...

#ChatGPT #DuckDuckGo #OpenAI

https://daboom.ru/poiskovaja-sistema-duckduckgo-zapustila-ii-chat-bota/?feed_id=30414&_unique_id=6663f2d0ee9ec

0 notes

Text

What is a sensitive way to encourage someone to be kinder to themselves?

Claude-3-Haiku's Answer:

Here are some sensitive ways to encourage someone to be kinder to themselves:

Use “I” statements to express your concern. For example, “I’ve noticed you can be really hard on yourself, and it worries me.” This avoids sounding accusatory.

Validate their feelings first. Say something like, “I understand you’re being really tough on yourself right now. That makes sense given what you’re going through.”

Suggest small, manageable steps. Rather than demanding a huge change, offer gentle prompts like, “Maybe try giving yourself 5 minutes a day to do something you enjoy, just for you.”

Compliment specific things you admire about them. This helps build their self-esteem. For instance, “I really admire how dedicated you are to your work. Maybe you could apply that same commitment to taking care of yourself.”

Ask questions to get them reflecting. For example, “What do you think your closest friend would say if they could see how you’re treating yourself right now?”

Offer to support them. Say something like, “I’m here if you ever want to talk through being kinder to yourself. I’ll listen without judgment.”

The key is to come from a place of care and concern, not criticism. Make it clear you want to help, not make them feel worse. With patience and empathy, you can encourage self-compassion.

2 notes

Text

GPT-4o Mini Unveiled: A Cost-Effective, High-Performance Alternative to Claude Haiku, Gemini Flash and GPT 3.5 Turbo

New Post has been published on https://thedigitalinsider.com/gpt-4o-mini-unveiled-a-cost-effective-high-performance-alternative-to-claude-haiku-gemini-flash-and-gpt-3-5-turbo/

OpenAI, a leader in scaling Generative Pre-trained Transformer (GPT) models, has now introduced GPT-4o Mini, shifting toward more compact AI solutions. This move addresses the challenges of large-scale AI, including high costs and energy-intensive training, and positions OpenAI to compete with rivals like Google and Anthropic. GPT-4o Mini offers a more efficient and affordable approach to multimodal AI. This article will explore what sets GPT-4o Mini apart by comparing it with Claude Haiku, Gemini Flash, and OpenAI’s GPT-3.5 Turbo. We’ll evaluate these models based on six key factors: modality support, performance, context window, processing speed, pricing, and accessibility, which are crucial for selecting the right AI model for various applications.

Unveiling GPT-4o Mini:

GPT-4o Mini is a compact multimodal AI model with text and vision intelligence capabilities. Although OpenAI hasn’t shared specific details about its development method, GPT-4o Mini builds on the foundation of the GPT series. It is designed for cost-effective and low-latency applications. GPT-4o Mini is useful for tasks that require chaining or parallelizing multiple model calls, handling large volumes of context, and providing fast, real-time text responses. These features are particularly vital for building applications such as retrieval-augmented generation (RAG) systems and chatbots.

Key features of GPT-4o Mini include:

A context window of 128K tokens

Support for up to 16K output tokens per request

Enhanced handling of non-English text

Knowledge up to October 2023

GPT-4o Mini vs. Claude Haiku vs. Gemini Flash: A Comparison of Small Multimodal AI Models

This section compares GPT-4o Mini with two existing small multimodal AI models, Claude Haiku and Gemini Flash, both of which are significant competitors. Claude Haiku was launched by Anthropic in March 2024. Gemini Flash belongs to Google’s Gemini family, which was introduced in December 2023; version 1.5 Flash was released in May 2024.

Modality Support: Both GPT-4o Mini and Claude Haiku currently support text and image capabilities. OpenAI plans to add audio and video support in the future. In contrast, Gemini Flash already supports text, image, video, and audio.

Performance: According to OpenAI’s published benchmarks, GPT-4o Mini outperforms Gemini Flash and Claude Haiku on several key metrics. On MMLU, a textual intelligence and reasoning benchmark, GPT-4o Mini scored 82.0%, ahead of Gemini Flash’s 77.9% and Claude Haiku’s 73.8%. On MGSM, which measures math reasoning, it achieved 87.0%, compared to Gemini Flash’s 75.5% and Claude Haiku’s 71.7%. On HumanEval, which measures coding performance, GPT-4o Mini scored 87.2%, ahead of Gemini Flash at 71.5% and Claude Haiku at 75.9%. GPT-4o Mini also leads in multimodal reasoning, scoring 59.4% on MMMU, compared to 56.1% for Gemini Flash and 50.2% for Claude Haiku.

Context Window: A larger context window enables a model to provide coherent and detailed answers over extended passages. GPT-4o Mini offers a 128K token capacity and supports up to 16K output tokens per request. Claude Haiku has a longer context window of 200K tokens but returns fewer tokens per request, with a maximum of 4096 tokens. Gemini Flash boasts a significantly larger context window of 1 million tokens. Hence, Gemini Flash has an edge over GPT-4o Mini regarding context window.
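
To make the trade-off concrete, here is a minimal sketch that checks whether a request fits within each model’s limits. The figures are the rounded ones quoted above. The helper name `fits` is hypothetical, and the sketch assumes that prompt and output tokens share the same context window, which may not hold for every provider. No output cap is given for Gemini Flash, so none is enforced for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Limits:
    context_window: int         # total tokens the model can attend to
    max_output: Optional[int]   # None when the comparison gives no figure


# Rounded figures taken from the comparison above.
LIMITS = {
    "GPT-4o Mini": Limits(128_000, 16_000),
    "Claude Haiku": Limits(200_000, 4_096),
    "Gemini Flash": Limits(1_000_000, None),
}


def fits(model: str, prompt_tokens: int, wanted_output_tokens: int) -> bool:
    """Check a request against the model's output cap and context window.

    Assumption: prompt and output tokens count against the same window.
    """
    limits = LIMITS[model]
    if limits.max_output is not None and wanted_output_tokens > limits.max_output:
        return False
    return prompt_tokens + wanted_output_tokens <= limits.context_window


# A hypothetical 150K-token document with a 1K-token summary.
for model in LIMITS:
    print(model, fits(model, 150_000, 1_000))
```

Under these assumptions, a long document that overflows GPT-4o Mini still fits Claude Haiku, while a request for an 8K-token answer would exceed Claude Haiku’s output cap even with a short prompt.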

Processing Speed: GPT-4o Mini is faster than the other models. It processes 15 million tokens per minute, while Claude Haiku handles 1.26 million tokens per minute, and Gemini Flash processes 4 million tokens per minute.

Pricing: GPT-4o Mini is the most cost-effective of the three, priced at 15 cents per million input tokens and 60 cents per million output tokens. Claude Haiku costs 25 cents per million input tokens and $1.25 per million output tokens. Gemini Flash is priced at 35 cents per million input tokens and $1.05 per million output tokens.
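
The per-token prices quoted above can be turned into a cost estimate for a given workload. The following is a small sketch: the `estimate_cost` helper and the example workload (10 million input tokens, 2 million output tokens) are made up for illustration, and real bills may differ because providers change prices and offer discounts.

```python
# USD per million tokens (input, output), as quoted in the comparison above.
PRICES = {
    "GPT-4o Mini": (0.15, 0.60),
    "Claude Haiku": (0.25, 1.25),
    "Gemini Flash": (0.35, 1.05),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one workload on one model."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES:
    cost = estimate_cost(model, 10_000_000, 2_000_000)
    print(f"{model}: ${cost:.2f}")
```

For this workload the estimate comes to about $2.70 for GPT-4o Mini, $5.00 for Claude Haiku, and $5.60 for Gemini Flash. The ranking between Claude Haiku and Gemini Flash depends on the input-to-output ratio, since Haiku is cheaper on input and Flash is cheaper on output.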

Accessibility: GPT-4o Mini can be accessed via the Assistants API, Chat Completions API, and Batch API. Claude Haiku is available through a Claude Pro subscription on claude.ai, its API, Amazon Bedrock, and Google Cloud Vertex AI. Gemini Flash can be accessed at Google AI Studio and integrated into applications through the Google API, with additional availability on Google Cloud Vertex AI.

In this comparison, GPT-4o Mini stands out with its balanced performance, cost-effectiveness, and speed, making it a strong contender in the small multimodal AI model landscape.

GPT-4o Mini vs. GPT-3.5 Turbo: A Detailed Comparison

This section compares GPT-4o Mini with GPT-3.5 Turbo, OpenAI’s widely used large language model.

Size: OpenAI has not disclosed the number of parameters for either model. GPT-4o Mini is positioned as a small model, whereas GPT-3.5 Turbo is generally regarded as a large one, which suggests that GPT-4o Mini requires significantly fewer computational resources.

Modality Support: GPT-4o Mini supports both text and image inputs, whereas GPT-3.5 Turbo is text-only.

Performance: GPT-4o Mini shows notable improvements over GPT-3.5 Turbo in various benchmarks such as MMLU, GPQA, DROP, MGSM, MATH, HumanEval, MMMU, and MathVista. It performs better in textual intelligence and multimodal reasoning, consistently surpassing GPT-3.5 Turbo.

Context Window: GPT-4o Mini offers a much longer context window than GPT-3.5 Turbo’s 16K token capacity, enabling it to handle more extensive text and provide detailed, coherent responses over longer passages.

Processing Speed: GPT-4o Mini processes tokens at an impressive rate of 15 million tokens per minute, far exceeding GPT-3.5 Turbo’s 4,650 tokens per minute.

Price: GPT-4o Mini is also more cost-effective, over 60% cheaper than GPT-3.5 Turbo. It costs 15 cents per million input tokens and 60 cents per million output tokens, whereas GPT-3.5 Turbo is priced at 50 cents per million input tokens and $1.50 per million output tokens.
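
The “over 60% cheaper” figure can be checked against the quoted prices with a short calculation. This is only a sketch, and `percent_cheaper` is a helper name introduced for this example.

```python
def percent_cheaper(new_price: float, old_price: float) -> float:
    """Return how much cheaper new_price is than old_price, in percent."""
    return (old_price - new_price) / old_price * 100


# USD per million tokens, as quoted above.
input_saving = percent_cheaper(0.15, 0.50)
output_saving = percent_cheaper(0.60, 1.50)

print(f"Input tokens:  {input_saving:.0f}% cheaper")
print(f"Output tokens: {output_saving:.0f}% cheaper")
```

With these prices the saving works out to 70% on input tokens and 60% on output tokens, so the overall saving for a mixed workload falls between the two.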

Additional Capabilities: OpenAI highlights that GPT-4o Mini surpasses GPT-3.5 Turbo in function calling, enabling smoother integration with external systems. Moreover, its enhanced long-context performance makes it a more efficient and versatile tool for various AI applications.

The Bottom Line

OpenAI’s introduction of GPT-4o Mini represents a strategic shift towards more compact and cost-efficient AI solutions. This model effectively addresses the challenges of high operational costs and energy consumption associated with large-scale AI systems. GPT-4o Mini excels in performance, processing speed, and affordability compared to competitors like Claude Haiku and Gemini Flash. It also demonstrates superior capabilities over GPT-3.5 Turbo, with notable advantages in context handling and cost efficiency. GPT-4o Mini’s enhanced functionality and versatile application make it a strong choice for developers seeking high-performance, multimodal AI.

#2023#2024#Accessibility#ADD#ai#ai model#AI models#ai studio#AI systems#Amazon#anthropic#API#applications#approach#Article#Artificial Intelligence#assistants api#audio#benchmarks#Building#chatbots#claude#Claude Haiku#claude pro#Cloud#coding#comparison#cost efficiency#december#details

0 notes

Text

DuckDuckGo, the company known for its search engine that doesn’t track its users, is today launching DuckDuckGo AI Chat, a hub for accessing OpenAI’s GPT-3.5 Turbo, Anthropic’s Claude 3 Haiku, and other popular AI chatbots in a more private way.

0 notes