#Cloud-based data extraction


Harnessing the Power of Cloud-Based Data Extraction: A Guide by Micro Com Systems LTD

In today's data-driven world, businesses are constantly seeking innovative solutions to extract valuable insights from vast amounts of information. Cloud-based data extraction has emerged as a game-changing technology, allowing organizations like Micro Com Systems LTD to efficiently collect, process, and analyze data from diverse sources. In this blog, we'll delve into the benefits and capabilities of cloud-based data extraction and how Micro Com Systems LTD leverages this technology to drive business success.

Scalability and Flexibility: One of the key advantages of cloud-based data extraction is its scalability and flexibility. Micro Com Systems LTD can scale up or down resources based on demand, ensuring optimal performance and cost efficiency. Whether extracting data from structured databases, unstructured documents, or web sources, cloud-based solutions offer the flexibility to handle diverse data types and volumes seamlessly.

Real-time Data Extraction: Cloud-based data extraction enables Micro Com Systems LTD to extract data in real time, providing timely insights for decision-making and operational efficiency. Whether monitoring market trends, customer behavior, or supply chain dynamics, real-time data extraction ensures that businesses stay agile and responsive in a fast-changing environment.

Automated Data Processing: Cloud-based data extraction solutions often come with advanced automation capabilities, allowing Micro Com Systems LTD to automate data processing tasks such as data cleansing, transformation, and enrichment. This automation reduces manual effort, minimizes errors, and speeds up data processing workflows, enabling faster data-driven decisions and actions.

Integration with Cloud Services: Cloud-based data extraction solutions seamlessly integrate with other cloud services and platforms, such as cloud storage, data warehouses, and analytics tools. Micro Com Systems LTD can leverage this integration to streamline data workflows, centralize data storage, and facilitate seamless data exchange between different systems and applications.

Advanced Data Analysis: Cloud-based data extraction is not just about collecting data; it's also about unlocking insights and value from that data. Micro Com Systems LTD can leverage advanced analytics tools and techniques available in the cloud to perform deep data analysis, generate actionable insights, and uncover valuable patterns and trends that drive business growth and innovation.

Enhanced Security and Compliance: Cloud-based data extraction solutions offer robust security features and compliance capabilities to protect sensitive data and ensure regulatory compliance. Micro Com Systems LTD can implement encryption, access controls, and audit trails to safeguard data integrity and privacy, providing peace of mind to both businesses and customers.

Cost Savings and Efficiency: By leveraging cloud-based data extraction, Micro Com Systems LTD can realize significant cost savings compared to traditional on-premises solutions. Cloud-based solutions eliminate the need for upfront hardware investments, reduce maintenance costs, and offer pay-as-you-go pricing models, allowing businesses to scale resources and pay only for what they use.

Collaboration and Accessibility: Cloud-based data extraction promotes collaboration and accessibility, enabling teams at Micro Com Systems LTD to access and work with data from anywhere, at any time. Remote access, real-time collaboration, and version control features enhance productivity and collaboration among teams, driving faster decision-making and problem-solving.
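To make the "Automated Data Processing" point above more concrete, here is a minimal Python sketch of the three steps it names: cleansing, transformation, and enrichment. It is only an illustration under stated assumptions. The record fields (`customer`, `country`, `amount`), the `REGION_BY_COUNTRY` lookup, and the function names are hypothetical and are not taken from any Micro Com Systems LTD product.

```python
# Hypothetical lookup table used for the enrichment step (an assumption, not real data).
REGION_BY_COUNTRY = {"GB": "Europe", "US": "North America"}


def cleanse(rows):
    """Drop rows whose amount is missing or not numeric; trim stray whitespace."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # unusable record, skip it
        cleaned.append({
            "customer": str(row.get("customer", "")).strip(),
            "country": str(row.get("country", "")).strip(),
            "amount": amount,
        })
    return cleaned


def transform(rows):
    """Bring values into one consistent format (title-case names, upper-case codes)."""
    return [
        {**row, "customer": row["customer"].title(), "country": row["country"].upper()}
        for row in rows
    ]


def enrich(rows):
    """Add a derived field (region) from the lookup table."""
    return [
        {**row, "region": REGION_BY_COUNTRY.get(row["country"], "Unknown")}
        for row in rows
    ]


def process(rows):
    """Run the three steps in order."""
    return enrich(transform(cleanse(rows)))


# Hypothetical raw records, as they might arrive from mixed sources.
raw_rows = [
    {"customer": "  acme ltd ", "country": "gb", "amount": "120.50"},
    {"customer": "Globex", "country": "US", "amount": "n/a"},
    {"customer": "Initech", "country": "us", "amount": 75},
]
result = process(raw_rows)
```

In this sketch the record with the unparseable amount is dropped during cleansing, and the remaining two come out with consistent casing and a `region` field. A real pipeline would more likely log or quarantine rejected rows than discard them silently.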

In conclusion, cloud-based data extraction is a transformative technology that empowers businesses like Micro Com Systems LTD to unlock the full potential of their data. By leveraging scalability, automation, advanced analytics, and enhanced security, cloud-based solutions enable businesses to extract actionable insights, drive innovation, and stay competitive in today's data-driven landscape.


Cars bricked by bankrupt EV company will stay bricked

On OCTOBER 23 at 7PM, I'll be in DECATUR, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

There are few phrases in the modern lexicon more accursed than "software-based car," and yet, this is how the failed EV maker Fisker billed its products, which retailed for $40-70k in the few short years before the company collapsed, shut down its servers, and degraded all those "software-based cars":

https://insideevs.com/news/723669/fisker-inc-bankruptcy-chapter-11-official/

Fisker billed itself as a "capital light" manufacturer, meaning that it didn't particularly make anything – rather, it "designed" cars that other companies built, allowing Fisker to focus on "experience," which is where the "software-based car" comes in. Virtually every subsystem in a Fisker car needs (or rather, needed) to periodically connect with its servers, either for regular operations or diagnostics and repair, creating frequent problems with brakes, airbags, shifting, battery management, locking and unlocking the doors:

https://www.businessinsider.com/fisker-owners-worry-about-vehicles-working-bankruptcy-2024-4

Since Fisker's bankruptcy, people with even minor problems with their Fisker EVs have found themselves owning expensive, inert lumps of conflict minerals and auto-loan debt; as one Fisker owner described it, "It's literally a lawn ornament right now":

https://www.businessinsider.com/fisker-owners-describe-chaos-to-keep-cars-running-after-bankruptcy-2024-7

This is, in many ways, typical Internet-of-Shit nonsense, but it's compounded by Fisker's capital light, all-outsource model, which led to extremely unreliable vehicles that have been plagued by recalls. The bankrupt company has proposed that vehicle owners should have to pay cash for these recalls, in order to reserve the company's capital for its creditors – a plan that is clearly illegal:

https://www.veritaglobal.net/fisker/document/2411390241007000000000005

This isn't even the first time Fisker has done this! Ten years ago, founder Henrik Fisker started another EV company called Fisker Automotive, which went bankrupt in 2014, leaving the company's "Karma" (no, really) long-range EVs (which were unreliable and prone to bursting into flames) in limbo:

https://en.wikipedia.org/wiki/Fisker_Karma

Which raises the question: why did investors reward Fisker's initial incompetence by piling in for a second attempt? I think the answer lies in the very factor that has made Fisker's failure so hard on its customers: the "software-based car." Investors love the sound of a "software-based car" because they understand that a gadget that is connected to the cloud is ripe for rent-extraction, because with software comes a bundle of "IP rights" that let the company control its customers, critics and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

A "software-based car" gets to mobilize the state to enforce its "IP," which allows it to force its customers to use authorized mechanics (who can, in turn, be price-gouged for licensing and diagnostic tools). "IP" can be used to shut down manufacturers of third-party parts. "IP" allows manufacturers to revoke features that came with your car and charge you a monthly subscription fee for them. All sorts of features can be sold as downloadable content, and clawed back when title to the car changes hands, so that the new owners have to buy them again. "Software-based cars" are easier to repo, making them perfect for the subprime auto-lending industry. And of course, "software-based cars" can gather much more surveillance data on drivers, which can be sold to sleazy, unregulated data-brokers:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

Unsurprisingly, there's a large number of Fisker cars that never sold, which the bankruptcy estate is seeking a buyer for. For a minute there, it looked like they'd found one: American Lease, which was looking to acquire the deadstock Fiskers for use as leased fleet cars. But now that deal seems dead, because no one can figure out how to restart Fisker's servers, and these vehicles are bricks without server access:

https://techcrunch.com/2024/10/08/fisker-bankruptcy-hits-major-speed-bump-as-fleet-sale-is-now-in-question/

It's hard to say why the company's servers are so intransigent, but there's a clue in the chaotic way that the company wound down its affairs. The company's final days sound like a scene from the last days of the German Democratic Republic, with apparatchiks from the failing state charging about in chaos, without any plans for keeping things running:

https://www.washingtonpost.com/opinions/2023/03/07/east-germany-stasi-surveillance-documents/

As it imploded, Fisker cycled through a string of chief financial officers, losing track of millions of dollars at a time:

https://techcrunch.com/2024/05/31/fisker-collapse-investigation-ev-ocean-suv-henrik-geeta/

When Fisker's landlord regained possession of its HQ, they found "complete disarray," including improperly stored drums of toxic waste:

https://techcrunch.com/2024/10/05/fiskers-hq-abandoned-in-complete-disarray-with-apparent-hazardous-waste-clay-models-left-behind/

And while Fisker's implosion is particularly messy, the fact that it landed in bankruptcy is entirely unexceptional. Most businesses fail (eventually) and most startups fail (quickly). Despite this, businesses – even those in heavily regulated sectors like automotive manufacturing – are allowed to design products and undertake operations that are not designed to outlast the (likely short-lived) company.

After the 2008 crisis and the collapse of financial institutions like Lehman Brothers, finance regulators acquired a renewed interest in succession planning. Lehman consisted of over 6,000 separate corporate entities, each one representing a bid to evade regulation and/or taxation. Unwinding that complex hairball took years, during which the entities that entrusted Lehman with their funds – pensions, charitable institutions, etc – were unable to access their money.

To avoid repeats of this catastrophe, regulators began to insist that banks produce "living wills" – plans for unwinding their affairs in the event of catastrophe. They had to undertake "stress tests" that simulated a wind-down as planned, both to make sure the plan worked and to estimate how long it would take to execute. Then banks were required to set aside sufficient capital to keep the lights on while the plan ran on.

This regulation has been indifferently enforced. Banks spent the intervening years insisting that they are capable of prudently self-regulating without all this interference, something they continue to insist upon even after the Silicon Valley Bank collapse:

https://pluralistic.net/2023/03/15/mon-dieu-les-guillotines/#ceci-nes-pas-une-bailout

The fact that the rules haven't been enforced tells us nothing about whether the rules would work if they were enforced. A string of high-profile bankruptcies of companies who had no succession plans and whose collapse stands to materially harm large numbers of people tells us that something has to be done about this.

Take 23andme, the creepy genomics company that enticed millions of people into sending them their genetic material (even if you aren't a 23andme customer, they probably have most of your genome, thanks to relatives who sent in cheek-swabs). 23andme is now bankrupt, and its bankruptcy estate is shopping for a buyer who'd like to commercially exploit all that juicy genetic data, even if that is to the detriment of the people it came from. What's more, the bankruptcy estate is refusing to destroy samples from people who want to opt out of this future sale:

https://bourniquelaw.com/2024/10/09/data-23-and-me/

On a smaller scale, there's Juicebox, a company that makes EV chargers, who are exiting the North American market and shutting down their servers, killing the advanced functionality that customers paid extra for when they chose a Juicebox product:

https://www.theverge.com/2024/10/2/24260316/juicebox-ev-chargers-enel-x-way-closing-discontinued-app

I actually owned a Juicebox, which ultimately caught fire and melted down, either due to a manufacturing defect or to the criminal ineptitude of Treeium, the worst solar installers in Southern California (or both):

https://pluralistic.net/2024/01/27/here-comes-the-sun-king/#sign-here

Projects like Juice Rescue are trying to reverse-engineer the Juicebox server infrastructure and build an alternative:

https://juice-rescue.org/

This would be much simpler if Juicebox's manufacturer, Enel X Way, had been required to file a living will that explained how its customers would go on enjoying their property when and if the company discontinued support, exited the market, or went bankrupt.

That might be a big lift for every little tech startup (though it would be superior to trying to get justice after the company fails). But in regulated sectors like automotive manufacture or genomic analysis, a regulation that says, "Either design your products and services to fail safely, or escrow enough cash to keep the lights on for the duration of an orderly wind-down in the event that you shut down" would be perfectly reasonable. Companies could make "software-based cars" but the more "software-based" the car was, the more funds they'd have to escrow to transition their servers when they shut down (and the less capital they'd have to build the car).

Such a rule should be in addition to more muscular rules simply banning the most abusive practices, like the Oregon state Right to Repair bill, which bans the "parts pairing" that makes repairing a Fisker car so onerous:

https://www.theverge.com/2024/3/27/24097042/right-to-repair-law-oregon-sb1596-parts-pairing-tina-kotek-signed

Or the Illinois state biometric privacy law, which strictly limits the use of the kind of genomic data that 23andme collected:

https://www.ilga.gov/legislation/ilcs/ilcs3.asp?ActID=3004

Failing to take action on these abusive practices is dangerous – and not just to the people who get burned by them. Every time a genomics research project turns into a privacy nightmare, that salts the earth for future medical research, making it much harder to conduct population-scale research, which can be carried out in privacy-preserving ways, and which pays huge scientific dividends that we all benefit from:

https://pluralistic.net/2022/10/01/the-palantir-will-see-you-now/#public-private-partnership

Just as Fisker's outrageous ripoff will make life harder for good cleantech companies:

https://pluralistic.net/2024/06/26/unplanned-obsolescence/#better-micetraps

If people are convinced that new, climate-friendly tech is a cesspool of grift and extraction, it will punish those firms that are making routine, breathtaking, exciting (and extremely vital) breakthroughs:

https://www.euronews.com/green/2024/10/08/norways-national-football-stadium-has-the-worlds-largest-vertical-solar-roof-how-does-it-w

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/10/software-based-car/#based

#pluralistic#enshittification#evs#automotive#bricked#fisker#ocean#cleantech#iot#internet of shit#autoenshittification


Ok regarding that “can i make Yves do my homework if I give him my childhood pictures” ask, exactly how much access does Yves have to our lives? Does he have images or videos from when we were still a baby or would they be new information to him?

A bunch of my baby pictures and videos are lost because my dad lost the computer that had them but we recently found my aunt’s old camera filled with our childhood pictures, it was a pleasant surprise for us but would it be for Yves too?

It absolutely would be. If Yves was there with you while your aunt showed you the photo gallery of her old camera, Yves would momentarily lose a bit of inhibition and let his pupils dilate to a maddening degree before instantly constricting them back to appear normal. These are rare, super deluxe edition photos of you; there isn't anything else like them out there, as they were most likely never uploaded to the internet or a cloud-based service, where he could easily hack them.

Coming across media from your childhood, or at least from those early days when people still went out to get their photos developed, is like winning the lottery for him. Although he tries to collect everything relating to your existence, there is only so much he can do in a day. He would rather prioritize the present and the future, as the past is the past; neither you nor he can change it, and he can only understand it or connect it to your current behaviours or thought patterns.

He does have some information about you as a baby or a child, but only if it is "readily available" to him (i.e., it can be found in predictable places, like your childhood home). That is why Yves would try to build a good relationship with the people you grew up with: to extract information.

Despite being as reclusive as he is, Yves would never fail to attend any family gathering he is invited to or expected at. He would encourage that drunk uncle to drink more if he knew the man had something to say about you, bribe your relatives with gifts and career opportunities, and perhaps even drug that really difficult and combative cousin to make them more bearable to interrogate.

As soon as he knew your aunt could be another goldmine of your data, he would get to work. He would waste no time building a rapport with her; it's a piece of cake given how obsessive and manipulative he is by nature.

Inevitably, your aunt will come to love him and see Yves as family. By extension, her relationship with you will skyrocket too; she will invite you to her place much more often, even though she might not be the most sociable person in the first place. Yves will find a way to make her bend to his whims.

The majority of their conversations would be about you; Yves would only occasionally talk about something else, and only if it kept her in the mood to spill more of your lore. His sharp ears and mind will pick up on clues as to where he might find more pictures or writings about you. He would then break into your aunt's home to give it a thorough shakedown and leave without a trace. Yves would repeat this process until he's positive that she has nothing left to offer. That camera is getting fucking stolen and replaced with a duplicate.

It wouldn't matter if your aunt was a minimalist or a severe hoarder; he would go through all her things just to try and find pieces of your puzzle. He would wade through cobwebs, dust piles, rat droppings and mould if he had to. Yves isn't scared to get dirty to obtain what he wants; "squeamish" isn't in his vocabulary.

Once she has been robbed of all your essence, Yves would become distant. Not hostile towards her, just cold and indifferent. He would still maintain some sort of relationship with her though, in case she becomes useful again later. From then on, he either puts his entire focus on your current peripheral and direct life, or starts to hunt other family members down; from his snooping, he has learned of other people who may have valuable input about your childhood.

All of this is happening in the background. You wouldn't suspect a thing; there wouldn't be a dip in his attention to you. In fact, he may have gotten a lot more smothering, as Yves would be shaking at the thought of testing out the new theories and hypotheses that were born from his new knowledge.

He just loves you so much that he can't help but get greedy. Yves wants all of you: past, present and future. And any version of you that could have been.

#yandere#yandere oc#yandere x reader#yandere male#oc yves#yandere concept#tw yandere#yandere oc x reader#yandere x you#male yandere oc x reader


A $2 million contract that United States Immigration and Customs Enforcement signed with Israeli commercial spyware vendor Paragon Solutions has been paused and placed under compliance review, WIRED has learned.

The White House’s scrutiny of the contract marks the first test of the Biden administration’s executive order restricting the government’s use of spyware.

The one-year contract between Paragon’s US subsidiary in Chantilly, Virginia, and ICE’s Homeland Security Investigations (HSI) Division 3 was signed on September 27 and first reported by WIRED on October 1. A few days later, on October 8, HSI issued a stop-work order for the award “to review and verify compliance with Executive Order 14093,” a Department of Homeland Security spokesperson tells WIRED.

The executive order signed by President Joe Biden in March 2023 aims to restrict the US government’s use of commercial spyware technology while promoting its “responsible use” that aligns with the protection of human rights.

DHS did not confirm whether the contract, which says it covers a “fully configured proprietary solution including license, hardware, warranty, maintenance, and training,” includes the deployment of Paragon’s flagship product, Graphite, a powerful spyware tool that reportedly extracts data primarily from cloud backups.

“We immediately engaged the leadership at DHS and worked very collaboratively together to understand exactly what was put in place, what the scope of this contract was, and whether or not it adhered to the procedures and requirements of the executive order,” a senior US administration official with first-hand knowledge of the workings of the executive order tells WIRED. The official requested anonymity to speak candidly about the White House’s review of the ICE contract.

Paragon Solutions did not respond to WIRED's request to comment on the contract's review.

The process laid out in the executive order requires a robust review of the due diligence regarding both the vendor and the tool, to see whether any concerns, such as counterintelligence, security, and improper use risks, arise. It also stipulates that an agency may not make operational use of the commercial spyware until at least seven days after providing this information to the White House or until the president's national security adviser consents.

“Ultimately, there will have to be a determination made by the leadership of the department. The outcome may be—based on the information and the facts that we have—that this particular vendor and tool does not spur a violation of the requirements in the executive order,” the senior official says.

While publicly available details of ICE’s contract with Paragon are relatively sparse, its existence alone raised alarms among civil liberties groups, with the nonprofit watchdog Human Rights Watch saying in a statement that “giving ICE access to spyware risks exacerbating” the department’s problematic practices. HRW also questioned what it calls the Biden administration’s “piecemeal approach” to spyware regulation.

The level of seriousness with which the US government approaches the compliance review of the Paragon contract will influence international trust in the executive order, experts say.

“We know the dangers mercenary spyware poses when sold to dictatorships, but there is also plenty of evidence of harms in democracies,” says John Scott-Railton, a senior researcher at the University of Toronto’s Citizen Lab who has been instrumental in exposing spyware-related abuses. “This is why oversight, transparency, and accountability around any US agency attempt to acquire these tools is essential.”

International efforts to rein in commercial spyware are gathering pace. On October 11, during the 57th session of the Human Rights Council, United Nations member states reached a consensus to adopt language acknowledging the threat that the misuse of commercial spyware poses to democratic values, as well as the protection of human rights and fundamental freedoms. “This is an important norm setting, especially for countries who claim to be democracies,” says Natalia Krapiva, senior tech-legal counsel at international nonprofit Access Now.

Although the US is leading global efforts to combat spyware through its executive order, trade and visa restrictions, and sanctions, the European Union has been more lenient. Only 11 of the 27 EU member states have joined the US-led initiative stipulated in the “Joint Statement on Efforts to Counter the Proliferation and Misuse of Commercial Spyware,” which now counts 21 signatories, including Australia, Canada, Costa Rica, Japan, and South Korea.

“An unregulated market is both a threat to the citizens of those countries, but also to those governments, and I think that increasingly our hope is that there is a recognition [in the EU] of that as well,” the senior US administration official tells WIRED.

The European Commission published on October 16 new guidelines on the export of cyber-surveillance items, including spyware; however, it has yet to respond to the EU Parliament's call to draft a legislative proposal or admonish countries for their misuse of the technology.

While Poland launched an inquiry into the previous government’s spyware use earlier this year, a probe in Spain over the use of spyware against Spanish politicians has so far led to no accusations against those involved, and one in Greece has cleared government agencies of any wrongdoing.

“Europe is in the midst of a mercenary spyware crisis,” says Scott-Railton. “I have looked on with puzzled wonderment as European institutions and governments fail to address this issue at scale, even though there are domestic and export-related international issues.”

With the executive order, the US focuses on its national security and foreign policy interests in the deployment of the technology in accordance with human rights and the rule of law, as well as mitigating counterintelligence risks (e.g. the targeting of US officials). Europe—though it acknowledges the foreign policy dimension—has so far primarily concentrated on human rights considerations rather than counterintelligence and national security threats.

Such a threat became apparent in August, when Google’s Threat Analysis Group (TAG) found that Russian government hackers were using exploits made by spyware companies NSO Group and Intellexa.

Meanwhile, Access Now and Citizen Lab speculated in May that Estonia may have been behind the hacking of exiled Russian journalists, dissidents, and others with NSO Group’s Pegasus spyware.

“In an attempt to protect themselves from Russia, some European countries are using the same tools against the same people that Russia is targeting,” says Access Now’s Krapiva. “By having easier access to this kind of vulnerabilities, because they are then sold on the black market, Russia is able to purchase them in the end.”

“It’s a huge mess,” she adds. “By attempting to protect national security, they actually undermine it in many ways.”

Citizen Lab’s Scott-Railton believes these developments should raise concern among European decisionmakers just as they have for their US counterparts, who emphasized the national security aspect in the executive order.

“What is it going to take for European heads of state to recognize they have a national security threat from this technology?” Scott-Railton says. “Until they recognize the twin human rights and national security threats, the way the US has, they are going to be at a tremendous security disadvantage.”


FINAL FANTASY VII REBIRTH Final Trailer

Final Trailer (Japanese version)

Gameplay Video

Gameplay Video (Japanese version)

Immersion Trailer

Full State of Play presentation

The demo for Final Fantasy VII Rebirth is available now via PlayStation Store.

Demo overview

This product is a playable demo of Final Fantasy VII Rebirth.

This demo lets you enjoy the Nibelheim episode that forms the opening chapter of the game’s story, as well as the Junon area episode where you can experience the world map and exploration elements of the gameplay.

Players who have save data from the demo can claim a kupo charm and adventuring items set in the full game.

**Kupo Charm – An accessory that increases the number of resource items extracted at a set rate.

**Survival Set – A selection of items to aid on your adventure, such as potions and ethers.

Players who complete the Nibelheim episode will be able to skip the same section in the full game.

*Save data needs to be present on the PlayStation 5 console.

*The Junon area episode will become playable after an update on February 21, 2024 and can be accessed after completing the Nibelheim episode.

*The Junon area featured in this demo has been altered to make the content more compact, so progress cannot be carried over to the full game.

*Before diving into the full game, be sure you have applied the latest update. This ensures you get the Kupo Charm, Survival Set, and the ability to skip sections covered in the Nibelheim episode demo.

What is Final Fantasy VII Rebirth?

After escaping from the mako-powered city of Midgar, Cloud and his friends have broken the shackles of fate and set out on a journey into untracked wastelands. New adventures await in a vast and multifaceted world, where players can sprint across grassy plains on a Chocobo and explore expansive environments.

*This game is a remake of the original Final Fantasy VII released in 1997. It is the second part of the Final Fantasy VII Remake trilogy and is based on the section of the original story leading up to the Forgotten Capital, with new elements added in.

New character key visuals

New character renders

Final Fantasy VII Rebirth, the second game in the Final Fantasy VII remake trilogy, will launch for PlayStation 5 on February 29, 2024.

#Final Fantasy VII Rebirth#FFVII Rebirth#Final Fantasy 7 Rebirth#FF7 Rebirth#Final Fantasy VII Remake#FFVIIR#Final Fantasy 7 Remake#FF7R#Final Fantasy VII#FFVII#Final Fantasy 7#FF7#Final Fantasy#Square Enix#video game#PS5#long post


Data warehousing solution

Unlocking the Power of Data Warehousing: A Key to Smarter Decision-Making

In today's data-driven world, businesses need to make smarter, faster, and more informed decisions. But how can companies achieve this? One powerful tool that plays a crucial role in managing vast amounts of data is data warehousing. In this blog, we’ll explore what data warehousing is, its benefits, and how it can help organizations make better business decisions.

What is Data Warehousing?

At its core, data warehousing refers to the process of collecting, storing, and managing large volumes of data from different sources in a central repository. The data warehouse serves as a consolidated platform where all organizational data—whether from internal systems, third-party applications, or external sources—can be stored, processed, and analyzed.

A data warehouse is designed to support query and analysis operations, making it easier to generate business intelligence (BI) reports, perform complex data analysis, and derive insights for better decision-making. Data warehouses are typically used for historical data analysis, as they store data from multiple time periods to identify trends, patterns, and changes over time.

Key Components of a Data Warehouse

To understand the full functionality of a data warehouse, it's helpful to know its primary components:

Data Sources: These are the various systems and platforms where data is generated, such as transactional databases, CRM systems, or external data feeds.

ETL (Extract, Transform, Load): This is the process by which data is extracted from different sources, transformed into a consistent format, and loaded into the warehouse.

Data Warehouse Storage: The central repository where cleaned, structured data is stored. This can be in the form of a relational database or a cloud-based storage system, depending on the organization’s needs.

OLAP (Online Analytical Processing): This allows for complex querying and analysis, enabling users to create multidimensional data models, perform ad-hoc queries, and generate reports.

BI Tools and Dashboards: These tools provide the interfaces that enable users to interact with the data warehouse, such as through reports, dashboards, and data visualizations.
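As a rough illustration of the ETL step described above, here is a minimal sketch using only Python's standard library. The sample CSV, the `orders` table, and the function names are assumptions made up for this example, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (names and values are made up).
RAW_CSV = """order_id,customer,amount,order_date
1, alice ,19.99,2024-01-05
2,BOB,5.00,2024-01-06
3,alice,,2024-01-07
"""

def extract(text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalise names, cast types, and drop incomplete rows."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip rows with a missing amount
        clean.append((
            int(row["order_id"]),
            row["customer"].strip().lower(),
            float(row["amount"]),
            row["order_date"].strip(),
        ))
    return clean

def load(conn, rows):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # in-memory stand-in for the warehouse
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

The third source row is dropped because its amount is missing, which is the kind of cleansing the Transform stage is meant to handle before anything reaches storage.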

Benefits of Data Warehousing

Improved Decision-Making: With data stored in a single, organized location, businesses can make decisions based on accurate, up-to-date, and complete information. Real-time analytics and reporting capabilities ensure that business leaders can take swift action.

Consolidation of Data: Instead of sifting through multiple databases or systems, employees can access all relevant data from one location. This eliminates redundancy and reduces the complexity of managing data from various departments or sources.

Historical Analysis: Data warehouses typically store historical data, making it possible to analyze long-term trends and patterns. This helps businesses understand customer behavior, market fluctuations, and performance over time.

Better Reporting: By using BI tools integrated with the data warehouse, businesses can generate accurate reports on key metrics. This is crucial for monitoring performance, tracking KPIs (Key Performance Indicators), and improving strategic planning.

Scalability: As businesses grow, so does the volume of data they collect. Data warehouses are designed to scale easily, handling increasing data loads without compromising performance.

Enhanced Data Quality: Through the ETL process, data is cleaned, transformed, and standardized. This means the data stored in the warehouse is of high quality—consistent, accurate, and free of errors.

Types of Data Warehouses

There are different types of data warehouses, depending on how they are set up and utilized:

Enterprise Data Warehouse (EDW): An EDW is a central data repository for an entire organization, allowing access to data from all departments or business units.

Operational Data Store (ODS): This is a type of data warehouse that is used for storing real-time transactional data for short-term reporting. An ODS typically holds data that is updated frequently.

Data Mart: A data mart is a subset of a data warehouse focused on a specific department, business unit, or subject. For example, a marketing data mart might contain data relevant to marketing operations.

Cloud Data Warehouse: With the rise of cloud computing, cloud-based data warehouses like Google BigQuery, Amazon Redshift, and Snowflake have become increasingly popular. These platforms allow businesses to scale their data infrastructure without investing in physical hardware.

How Data Warehousing Drives Business Intelligence

The purpose of a data warehouse is not just to store data, but to enable businesses to extract valuable insights. By organizing and analyzing data, businesses can uncover trends, customer preferences, and operational inefficiencies. Some of the ways in which data warehousing supports business intelligence include:

Customer Segmentation: Companies can analyze data to segment customers based on behavior, demographics, or purchasing patterns, leading to better-targeted marketing efforts.

Predictive Analytics: By analyzing historical data, businesses can forecast trends and predict future outcomes, such as sales, inventory needs, and staffing levels.

Improved Operational Efficiency: With data-driven insights, businesses can streamline processes, optimize supply chains, and reduce costs. For example, identifying inventory shortages or surplus can help optimize stock levels.
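To make the customer segmentation idea more concrete, here is a small rule-based sketch. The purchase records, thresholds, and segment names are all hypothetical, and a real project might use clustering or a scoring model instead of fixed rules.

```python
from collections import defaultdict

# Hypothetical purchase records: (customer_id, order_amount).
purchases = [
    ("c1", 120.0), ("c1", 80.0), ("c1", 60.0),
    ("c2", 15.0),
    ("c3", 300.0), ("c3", 20.0),
]

def segment_customers(records, spend_threshold=200.0, order_threshold=3):
    """Assign each customer a segment from total spend and order count."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for customer, amount in records:
        totals[customer] += amount
        counts[customer] += 1

    segments = {}
    for customer in totals:
        high_spend = totals[customer] >= spend_threshold
        frequent = counts[customer] >= order_threshold
        if high_spend and frequent:
            segments[customer] = "loyal"
        elif high_spend:
            segments[customer] = "big spender"
        elif frequent:
            segments[customer] = "regular"
        else:
            segments[customer] = "occasional"
    return segments

segments = segment_customers(purchases)
print(segments)
```

Each segment can then be targeted with its own campaign, which is the "better-targeted marketing" the list above refers to.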

Challenges in Data Warehousing

While the benefits of data warehousing are clear, there are some challenges to consider:

Complexity of Implementation: Setting up a data warehouse can be a complex and time-consuming process, requiring expertise in database management, ETL processes, and BI tools.

Data Integration: Integrating data from various sources with differing formats can be challenging, especially when dealing with legacy systems or unstructured data.

Cost: Building and maintaining a data warehouse can be expensive, particularly when managing large volumes of data. However, the investment is often worth it in terms of the business value generated.

Security: With the consolidation of sensitive data in one place, data security becomes critical. Organizations need robust security measures to prevent unauthorized access and ensure compliance with data protection regulations.

The Future of Data Warehousing

The world of data warehousing is constantly evolving. With advancements in cloud technology, machine learning, and artificial intelligence, businesses are now able to handle larger datasets, perform more sophisticated analyses, and automate key processes.

As companies increasingly embrace the concept of a "data-driven culture," the need for powerful data warehousing solutions will continue to grow. The integration of AI-driven analytics, real-time data processing, and more intuitive BI tools will only further enhance the value of data warehouses in the years to come.

Conclusion

In today’s fast-paced, data-centric world, having access to accurate, high-quality data is crucial for making informed business decisions. A robust data warehousing solution enables businesses to consolidate, analyze, and extract valuable insights from their data, driving smarter decision-making across all departments. While building a data warehouse comes with challenges, the benefits—improved efficiency, better decision-making, and enhanced business intelligence—make it an essential tool for modern organizations.


Data Warehousing: The Backbone of Data-Driven Decision Making

In today’s fast-paced business environment, the ability to make data-driven decisions quickly is paramount. However, to leverage data effectively, companies need more than just raw data. They need a centralized, structured system that allows them to store, manage, and analyze data seamlessly. This is where data warehousing comes into play.

Data warehousing has become the cornerstone of modern business intelligence (BI) systems, enabling organizations to unlock valuable insights from vast amounts of data. In this blog, we’ll explore what data warehousing is, why it’s important, and how it drives smarter decision-making.

What is Data Warehousing?

At its core, data warehousing refers to the process of collecting and storing data from various sources into a centralized system where it can be easily accessed and analyzed. Unlike traditional databases, which are optimized for transactional operations (e.g., data entry and updates), data warehouses are designed specifically for complex queries, reporting, and data analysis.

A data warehouse consolidates data from various sources—such as customer information systems, financial systems, and even external data feeds—into a single repository. The data is then structured and organized in a way that supports business intelligence (BI) tools, enabling organizations to generate reports, create dashboards, and gain actionable insights.

Key Components of a Data Warehouse

Data Sources: These are the different systems or applications that generate data. Examples include CRM systems, ERP systems, external APIs, and transactional databases.

ETL (Extract, Transform, Load): This is the process by which data is pulled from different sources (Extract), cleaned and converted into a usable format (Transform), and finally loaded into the data warehouse (Load).

Data Warehouse Storage: The actual repository where structured and organized data is stored. This could be in traditional relational databases or modern cloud-based storage platforms.

OLAP (Online Analytical Processing): OLAP tools enable users to run complex analytical queries on the data warehouse, creating reports, performing multidimensional analysis, and identifying trends.

Business Intelligence Tools: These tools are used to interact with the data warehouse, generate reports, visualize data, and help businesses make data-driven decisions.
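The multidimensional analysis that OLAP tools perform can be pictured as grouping the same facts along different dimensions. The sketch below is only an illustration in plain Python: the fact rows, the dimension names, and the `roll_up` helper are assumptions for this example, not the interface of any particular OLAP product.

```python
from collections import defaultdict

# Hypothetical fact rows: (region, product, quarter, revenue).
facts = [
    ("EU", "widget", "Q1", 100),
    ("EU", "widget", "Q2", 150),
    ("EU", "gadget", "Q1", 80),
    ("US", "widget", "Q1", 200),
    ("US", "gadget", "Q2", 120),
]
DIMENSIONS = ("region", "product", "quarter")

def roll_up(rows, by):
    """Sum revenue grouped by the chosen dimensions."""
    idx = [DIMENSIONS.index(name) for name in by]
    totals = defaultdict(int)
    for row in rows:
        key = tuple(row[i] for i in idx)
        totals[key] += row[-1]  # revenue is the last field
    return dict(totals)

by_region = roll_up(facts, ["region"])
by_region_quarter = roll_up(facts, ["region", "quarter"])
print(by_region)
print(by_region_quarter)
```

Moving from `by_region` to `by_region_quarter` is a drill-down; going the other way is a roll-up.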

Benefits of Data Warehousing

Improved Decision Making: By consolidating data into a single repository, decision-makers can access accurate, up-to-date information whenever they need it. This leads to more informed, faster decisions based on reliable data.

Data Consolidation: Instead of pulling data from multiple systems and trying to make sense of it, a data warehouse consolidates data from various sources into one place, eliminating the complexity of handling scattered information.

Historical Analysis: Data warehouses are typically designed to store large amounts of historical data. This allows businesses to analyze trends over time, providing valuable insights into long-term performance and market changes.

Increased Efficiency: With a data warehouse in place, organizations can automate their reporting and analytics processes. This means less time spent manually gathering data and more time focusing on analyzing it for actionable insights.

Better Reporting and Insights: By using data from a single, trusted source, businesses can produce consistent, accurate reports that reflect the true state of affairs. BI tools can transform raw data into meaningful visualizations, making it easier to understand complex trends.

Types of Data Warehouses

Enterprise Data Warehouse (EDW): This is a centralized data warehouse that consolidates data across the entire organization. It’s used for comprehensive, organization-wide analysis and reporting.

Data Mart: A data mart is a subset of a data warehouse that focuses on specific business functions or departments. For example, a marketing data mart might contain only marketing-related data, making it easier for the marketing team to access relevant insights.

Operational Data Store (ODS): An ODS is a database that stores real-time data and is designed to support day-to-day operations. While a data warehouse is optimized for historical analysis, an ODS is used for operational reporting.

Cloud Data Warehouse: With the rise of cloud computing, cloud-based data warehouses like Amazon Redshift, Google BigQuery, and Snowflake have become popular. These solutions offer scalable, cost-effective, and flexible alternatives to traditional on-premises data warehouses.

How Data Warehousing Supports Business Intelligence

A data warehouse acts as the foundation for business intelligence (BI) systems. BI tools, such as Tableau, Power BI, and QlikView, connect directly to the data warehouse, enabling users to query the data and generate insightful reports and visualizations.

For example, an e-commerce company can use its data warehouse to analyze customer behavior, sales trends, and inventory performance. The insights gathered from this analysis can inform marketing campaigns, pricing strategies, and inventory management decisions.

Here are some ways data warehousing drives BI and decision-making:

Customer Insights: By analyzing customer purchase patterns, organizations can better segment their audience and personalize marketing efforts.

Trend Analysis: Historical data allows companies to identify emerging trends, such as seasonal changes in demand or shifts in customer preferences.

Predictive Analytics: By leveraging machine learning models and historical data stored in the data warehouse, companies can forecast future trends, such as sales performance, product demand, and market behavior.

Operational Efficiency: A data warehouse can help identify inefficiencies in business operations, such as bottlenecks in supply chains or underperforming products.
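As a simple stand-in for the forecasting described above, the sketch below fits a least-squares straight line to past values and extends it one period ahead. This is a deliberately basic technique, not a machine learning model, and the monthly sales figures are invented for the example.

```python
def linear_trend_forecast(values, steps_ahead=1):
    """Fit y = intercept + slope * x by least squares and extrapolate."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)

# Hypothetical monthly sales pulled from the warehouse.
monthly_sales = [100, 110, 120, 130]
forecast = linear_trend_forecast(monthly_sales)
print(forecast)
```

A straight line ignores seasonality, so for seasonal demand a model that accounts for repeating patterns would likely be a better fit.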


Text

By Sesona Mdlokovana

Understanding Data Colonialism

Data colonialism is the unregulated extraction, commodification and monopolisation of data from developing countries by multinational corporations that are primarily based in the West. Companies like Meta (outlawed in Russia - InfoBRICS), Google, Microsoft, Amazon and Apple dominate digital infrastructures across the globe, offering low-cost or free services in exchange for vast amounts of governmental, personal and commercial data. In countries across Latin America, Africa and South Asia, these tech conglomerates use their technological and financial dominance to enforce unequal digital dependencies. For example:

- The dominance of Google in cloud and search services means that millions of government institutions and businesses across the Global South store highly sensitive data on Western-owned servers, often located outside of their jurisdictions.

- Meta's control of social media platforms such as WhatsApp, Facebook and Instagram has led to content moderation policies that disproportionately affect non-Western voices, while the company simultaneously profits from local user-generated content.

- Amazon Web Services (AWS) hosts an immense amount of cloud storage and creates a scenario where governments and local startups in BRICS nations have to rely on foreign digital infrastructure.

- AI models and fintech systems rely on data from Global South users, yet these countries see little economic benefit from its monetisation.

The BRICS bloc response: Strengthening Digital Sovereignty

In order to counter data colonialism, BRICS countries have to prioritise strategies and policies that assert digital sovereignty while facilitating indigenous technological growth. There are several ways in which this could be achieved:


Text

Transforming Businesses with IoT: How Iotric’s IoT App Development Services Drive Innovation

In today’s fast-paced digital world, companies must adopt smart technology to stay ahead. The Internet of Things (IoT) is revolutionizing industries by connecting devices, collecting real-time data, and automating processes for greater efficiency. Iotric, a leading IoT app development service provider, specializes in developing cutting-edge solutions that help businesses leverage IoT for growth and innovation.

Why IoT is Essential for Modern Businesses

IoT technology allows seamless communication between devices, enabling businesses to optimize operations, enhance customer experience, and reduce costs. From smart homes and wearable devices to industrial automation and healthcare monitoring, IoT is reshaping the way industries operate. With a sophisticated IoT app, companies can:

Enhance operational efficiency by automating processes

Gain real-time insights with connected devices

Reduce downtime through predictive maintenance

Improve customer experience with smart applications

Strengthen security with remote monitoring

Iotric: A Leader in IoT App Development

Iotric is a trusted name in IoT app development, offering end-to-end solutions tailored to various industries. Whether you need an IoT mobile app, cloud integration, or custom firmware development, Iotric delivers innovative solutions that align with your business goals.

Key Features of Iotric’s IoT App Development Service

Custom IoT App Development – Iotric builds customized IoT applications that seamlessly connect to various devices and systems, ensuring smooth data flow and user-friendly interfaces.

Cloud-Based IoT Solutions – With expertise in cloud integration, Iotric develops scalable and secure cloud-based IoT applications that enable real-time data access and analytics.

Embedded Software Development – Iotric focuses on developing efficient firmware for IoT devices, ensuring optimal performance and seamless connectivity.

IoT Analytics & Data Processing – By leveraging AI-driven analytics, Iotric helps businesses extract valuable insights from IoT data, improving decision-making and operational efficiency.

IoT Security & Compliance – Security is a top priority for Iotric, which ensures that IoT applications are protected against cyber threats and comply with industry standards.

Industries Benefiting from Iotric’s IoT Solutions

Healthcare

Iotric develops IoT-powered healthcare applications for remote patient monitoring, smart wearables, and real-time health tracking, supporting better patient care and early diagnosis.

Manufacturing

With industrial IoT (IIoT) solutions, Iotric helps manufacturers optimize production lines, reduce downtime, and improve predictive maintenance strategies.

Smart Homes & Cities

From smart lighting and security systems to intelligent transportation, Iotric’s IoT solutions contribute to building connected and sustainable cities.

Retail & E-commerce

Iotric’s IoT-powered inventory tracking, smart checkout systems, and personalized customer experiences revolutionize the retail sector.

Why Choose Iotric for IoT App Development?

Expert Team: A team of skilled IoT developers with deep industry knowledge

Cutting-Edge Technology: Leverages AI, machine learning, and big data for smart solutions

End-to-End Services: From consultation and development to deployment and support

Proven Track Record: Successful IoT projects across multiple industries

Final Thoughts

As businesses continue to embrace digital transformation, IoT remains a game-changer. With Iotric’s advanced IoT app development services, businesses can unlock new opportunities, enhance efficiency, and stay ahead of the competition. Whether you are a startup or an established enterprise, Iotric offers the expertise and innovation needed to bring your IoT vision to life.

Ready to revolutionize your business with IoT? Partner with Iotric today and experience the future of connected technology!


Text

The Role of Photon Insights in Academic Research

In recent years, the integration of Artificial Intelligence (AI) into academic research has gained significant momentum, offering transformative opportunities across many fields. One area where AI is having a significant impact is photonics, the science of generating, manipulating, and sensing photons, which has applications in medicine, telecommunications, and materials science. AI is showing its ability to enhance data analysis, encourage collaboration, and drive the development of new technologies.

Understanding the Landscape of Photonics

Photonics covers a broad range of technologies, from fibre optics and lasers to sensors and imaging systems. As research in this field grows more complex, sophisticated analytical tools become essential. Traditional methods of data processing and interpretation can be slow and inefficient, and often hold back the pace of discovery. This is where AI is emerging as a game changer, with robust solutions that improve research processes and reveal new knowledge.

Researchers can, for instance, use deep learning methods to enhance image processing in applications such as biomedical imaging. AI-driven algorithms can improve image resolution, reduce noise, and even automate feature extraction, which leads to more precise diagnoses. By automating this process, researchers can concentrate on interpreting results instead of getting caught up in managing data.

Accelerating Material Discovery

Research in photonics often involves investigating new materials, such as photonic crystals or metamaterials, that can drastically alter the propagation of light. Traditional methods of materials discovery are time-consuming and laborious, and often require extensive experimentation and testing. AI can speed up the process through predictive models and simulations.

Facilitating Collaboration

At a time when interdisciplinary collaboration is vital, AI tools are bridging the gap between researchers from different disciplines. Photonics research typically intersects with fields such as engineering, computer science, and biology. AI-powered platforms support this collaboration by providing central databases and shared information, making it easier for researchers to access relevant data and tools.

Cloud-based AI solutions can provide shared datasets, allowing researchers to collaborate without geographic limitations. Collaboration is essential in photonics, where combining diverse expertise can lead to revolutionary advances in technology and its applications.

Automating Experimental Procedures

Automation is another area in which AI is becoming a major factor in academic photonics research. Automated labs equipped with AI-driven technology can carry out experiments without human involvement. These systems can adjust parameters continuously based on feedback, tuning experimental conditions to produce the best possible outcomes.

Furthermore, robotic systems integrated with AI can perform routine tasks such as sample preparation and measurement. This is not only more efficient but also reduces human error, which leads to more accurate results. Through automation, researchers can devote more time to analysis and development, which speeds up the overall research process.

Predictive Analytics for Research Trends

The predictive capabilities of AI are valuable for analyzing and anticipating research trends in photonics. By studying the existing literature and research outputs, AI algorithms can pinpoint new themes and areas of research. This insight can help researchers prioritize their work and identify emerging trends that are likely to be highly impactful.

For organizations and funding bodies, these insights are essential for allocating resources and planning strategically. If they understand where research is heading, they can support projects that align with future needs, ultimately leading to advances that benefit society as a whole.

Ethical Considerations and Challenges

While the advantages of AI in speeding up photonics research are evident, ethical considerations must also be taken into account. Issues such as data privacy, algorithmic bias, and the potential misuse of AI technology warrant careful consideration. Institutions and researchers must adopt responsible AI practices to ensure that the applications they use enhance human decision-making rather than replace it.

In addition, incorporating AI into academic research calls for a level of digital literacy that not every researcher has. Investing in education and training in AI methods and tools is therefore vital to realize the full benefits.

Conclusion

The significance of AI in speeding up academic research, especially in photonics, is extensive and multifaceted. By improving data analysis, speeding up materials discovery, encouraging collaboration, automating experimental procedures, and providing predictive insights, AI is reshaping the research landscape. As the field of photonics continues to grow, the integration of AI technologies is certain to be a key factor in fostering innovation and expanding our knowledge of light-based applications.

By embracing these developments, scientists can open up new possibilities for research, ultimately leading to significant scientific and technological advances. As we move forward on this new frontier, collaboration between AI and academic researchers will prove essential to addressing the challenges and opportunities ahead. The synergy between the two will not only speed up discovery in photonics, but also has the potential to change our understanding of and interaction with the world around us.


Text

Say Goodbye to Manual Data Entry: Receipts Made Easy with AlgoDocs

Receipt monitoring is a pain for small and large enterprises alike, and even for individuals. Keeping track of spending can be difficult, from fading receipts to overstuffed shoeboxes. AlgoDocs is a straightforward and effective solution that streamlines your expenditure management.

Start your AlgoDocs adventure today by securing your Forever Free Subscription! Ready to witness the time-saving magic of PDF processing? Dive in now and enjoy complimentary document parsing for up to 50 pages each month. If your document needs exceed this limit, explore our cost-effective pricing options.

How AlgoDocs Simplifies Receipts

Quick Capture: Snap a photo of any receipt, and AlgoDocs extracts key data like date, merchant, totals, etc.

Accessible Anywhere: Cloud-based storage keeps your receipt data secure and accessible from any device.

Integration with Hundreds of Software Tools: Eliminate manual data entry by exporting receipt data into your favorite expense management tool.

Step-by-Step Guide

Upload the PDF or photo of your receipt.

Create extraction rules and, voilà, AlgoDocs extracts and stores the data.

Export the data as Excel, XML, or JSON, or simply integrate it with your software.
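To give a feel for what working with exported receipt data might look like downstream, here is a minimal sketch that serializes a few extracted fields to JSON and XML with Python's standard library. The field names and values are hypothetical and are not AlgoDocs's actual export schema or API.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical extracted fields; not AlgoDocs's actual output schema.
receipt = {"date": "2024-03-01", "merchant": "Corner Cafe", "total": "12.50"}

def to_json(fields):
    """Serialize the receipt fields as pretty-printed JSON."""
    return json.dumps(fields, indent=2, sort_keys=True)

def to_xml(fields, root_tag="receipt"):
    """Serialize the receipt fields as a flat XML element."""
    root = ET.Element(root_tag)
    for name, value in fields.items():
        ET.SubElement(root, name).text = str(value)
    return ET.tostring(root, encoding="unicode")

receipt_json = to_json(receipt)
receipt_xml = to_xml(receipt)
print(receipt_json)
print(receipt_xml)
```

Either format can then be fed into an expense tool's import feature, assuming that tool accepts it.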

Empower Your Business with AlgoDocs

Join the free AlgoDocs plan [www.algodocs.com]. Let AlgoDocs handle the receipts so you can focus on what truly matters - growing your business.

#AlgoDocs#TableExtraction#OCRAlgorithms#AIHandwritingRecognition#AlgoDocsOCRRevolution#ImageToExcel#PdfToExcel#imagetotext


Text

3.22 Billion years ago

The first work from our ongoing BASE project examining freshly drilled material from the 3.22-billion-year-old Moodies Group, South Africa. This was written by local on-site geologist Phumelele Mashele and project lead Christoph Heubeck.

Most rocks of this age have been metamorphosed and weathered, so the picture they give us of the ancient Earth is clouded and unclear. In contrast, the rocks of the Moodies Group are amazingly well preserved, though very weathered at the surface. This is why the Barberton Archean Surface Environment project was formed. Teaming up with the International Continental Drilling Project and the South African Geological Survey, we extracted drill core material of the Moodies Group to access the rocks below the weathered surface. In total we drilled several kilometres of rock and were able to extract over 90% of the drilled rock. The preservation quality is *astounding*, but you will have to wait for that data. Instead, here is a taster from Phumi and Christoph.

https://kids.frontiersin.org/articles/10.3389/frym.2024.1252881


Text

Best Data Science Courses Online - Skillsquad

Why is data science important?

Data science is important because it combines tools, methods, and technology to generate meaning from data. Modern organizations are inundated with data; there is a proliferation of devices that can automatically collect and store information. Online systems and payment portals capture more data in the fields of e-commerce, medicine, finance, and every other aspect of human life. We have text, audio, video, and image data available in vast quantities.

Best future of data science with Skillsquad

Artificial intelligence and machine learning innovations have made data processing faster and more efficient. Industry demand has created an ecosystem of courses, degrees, and job positions within the field of data science. Because of the cross-functional skill set and expertise required, data science shows strong projected growth over the coming decades.

What is data science used for?

Data science is used in four main ways:

1. Descriptive analysis

2. Diagnostic analysis

3. Predictive analysis

4. Prescriptive analysis

1. Descriptive analysis: -

Descriptive analysis examines data to gain insights into what happened or what is happening in the data environment. It is characterized by data visualizations such as pie charts, bar charts, line graphs, tables, or generated narratives. For example, a flight booking service may record data like the number of tickets booked each day. Descriptive analysis will reveal booking spikes, booking slumps, and high-performing months for this service.
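The flight booking example can be sketched in a few lines. The daily figures below are invented, and flagging any day more than one standard deviation from the mean is just one simple assumption about what counts as a spike or a slump.

```python
from statistics import mean, pstdev

# Hypothetical tickets booked per day for one week.
daily_bookings = {
    "Mon": 120, "Tue": 115, "Wed": 130, "Thu": 125,
    "Fri": 310, "Sat": 118, "Sun": 60,
}

def describe_bookings(bookings, z=1.0):
    """Summarize bookings and flag days far above or below the mean."""
    values = list(bookings.values())
    avg = mean(values)
    spread = pstdev(values)  # population standard deviation
    spikes = [day for day, v in bookings.items() if v > avg + z * spread]
    slumps = [day for day, v in bookings.items() if v < avg - z * spread]
    return {"mean": avg, "spikes": spikes, "slumps": slumps}

summary = describe_bookings(daily_bookings)
print(summary)
```

With this data, Friday is flagged as a spike and Sunday as a slump. The summary only describes what happened; explaining why is the job of diagnostic analysis.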

2. Diagnostic analysis: -

Diagnostic analysis is a deep-dive or detailed data examination to understand why something happened. It is characterized by techniques such as drill-down, data discovery, data mining, and correlations. Multiple data operations and transformations may be performed on a given data set to discover unique patterns in each of these techniques.

3. Predictive analysis: -

Predictive analysis uses historical data to make accurate forecasts about data patterns that may occur in the future. It is characterized by techniques such as machine learning, forecasting, pattern matching, and predictive modeling. In each of these techniques, computers are trained to work out causal connections in the data.

4. Prescriptive analysis: -

Prescriptive analysis takes predictive data to the next level. It not only predicts what is likely to happen but also suggests an optimal response to that outcome. It can analyze the potential implications of different choices and recommend the best course of action. It uses graph analysis, simulation, complex event processing, neural networks, and recommendation engines from machine learning.

Different data science technologies: -

Data science practitioners work with complex technologies such as:

- Artificial intelligence: Machine learning models and related software are used for predictive and prescriptive analysis.

- Cloud computing: Cloud technologies have given data scientists the flexibility and processing power required for advanced data analytics.

- Internet of things: IoT refers to various devices that can automatically connect to the internet. These devices collect data for data science initiatives. They generate massive amounts of data, which can be used for data mining and data extraction.

- Quantum computing: Quantum computers can perform complex calculations at high speed. Skilled data scientists use them for building complex quantitative algorithms.

We are providing the Best Data Science Courses Online

AWS Certification Program

Full Stack Java Developer Training Courses


Text

Future-Ready Enterprises: The Crucial Role of Large Vision Models (LVMs)

New Post has been published on https://thedigitalinsider.com/future-ready-enterprises-the-crucial-role-of-large-vision-models-lvms/


What are Large Vision Models (LVMs)

Over the last few decades, the field of Artificial Intelligence (AI) has experienced rapid growth, resulting in significant changes to various aspects of human society and business operations. AI has proven to be useful in task automation and process optimization, as well as in promoting creativity and innovation. However, as data complexity and diversity continue to increase, there is a growing need for more advanced AI models that can comprehend and handle these challenges effectively. This is where the emergence of Large Vision Models (LVMs) becomes crucial.

LVMs are a new category of AI models specifically designed for analyzing and interpreting visual information, such as images and videos, on a large scale, with impressive accuracy. Unlike traditional computer vision models that rely on manual feature crafting, LVMs leverage deep learning techniques, utilizing extensive datasets to generate authentic and diverse outputs. An outstanding feature of LVMs is their ability to seamlessly integrate visual information with other modalities, such as natural language and audio, enabling a comprehensive understanding and generation of multimodal outputs.

LVMs are defined by their key attributes and capabilities, including their proficiency in advanced image and video processing tasks related to natural language and visual information. This includes tasks like generating captions, descriptions, stories, code, and more. LVMs also exhibit multimodal learning by effectively processing information from various sources, such as text, images, videos, and audio, resulting in outputs across different modalities.

Additionally, LVMs possess adaptability through transfer learning, meaning they can apply knowledge gained from one domain or task to another, with the capability to adapt to new data or scenarios through minimal fine-tuning. Moreover, their real-time decision-making capabilities empower rapid and adaptive responses, supporting interactive applications in gaming, education, and entertainment.

How Can LVMs Boost Enterprise Performance and Innovation?

Adopting LVMs can provide enterprises with powerful and promising technology to navigate the evolving AI discipline, making them more future-ready and competitive. LVMs have the potential to enhance productivity, efficiency, and innovation across various domains and applications. However, it is important to consider the ethical, security, and integration challenges associated with LVMs, which require responsible and careful management.

Moreover, LVMs enable insightful analytics by extracting and synthesizing information from diverse visual data sources, including images, videos, and text. Their capability to generate realistic outputs, such as captions, descriptions, stories, and code based on visual inputs, empowers enterprises to make informed decisions and optimize strategies. The creative potential of LVMs emerges in their ability to develop new business models and opportunities, particularly those using visual data and multimodal capabilities.

Prominent examples of enterprises adopting LVMs for these advantages include Landing AI, a computer vision cloud platform addressing diverse computer vision challenges, and Snowflake, a cloud data platform facilitating LVM deployment through Snowpark Container Services. Additionally, OpenAI contributes to LVM development with models like GPT-4, CLIP, DALL-E, and OpenAI Codex, capable of handling various tasks involving natural language and visual information.

In the post-pandemic landscape, LVMs offer additional benefits by assisting enterprises in adapting to remote work, online shopping trends, and digital transformation. Whether enabling remote collaboration, enhancing online marketing and sales through personalized recommendations, or contributing to digital health and wellness via telemedicine, LVMs emerge as powerful tools.

Challenges and Considerations for Enterprises in LVM Adoption

While the promises of LVMs are extensive, their adoption is not without challenges and considerations. Ethical implications are significant, covering issues related to bias, transparency, and accountability. Instances of bias in data or outputs can lead to unfair or inaccurate representations, potentially undermining the trust and fairness associated with LVMs. Thus, ensuring transparency in how LVMs operate and the accountability of developers and users for their consequences becomes essential.

Security concerns add another layer of complexity, requiring the protection of sensitive data processed by LVMs and precautions against adversarial attacks. Sensitive information, ranging from health records to financial transactions, demands robust security measures to preserve privacy, integrity, and reliability.

Integration and scalability hurdles pose additional challenges, especially for large enterprises. Ensuring compatibility with existing systems and processes becomes a crucial factor to consider. Enterprises need to explore tools and technologies that facilitate and optimize the integration of LVMs. Container services, cloud platforms, and specialized platforms for computer vision offer solutions to enhance the interoperability, performance, and accessibility of LVMs.

To tackle these challenges, enterprises must adopt best practices and frameworks for responsible LVM use. Prioritizing data quality, establishing governance policies, and complying with relevant regulations are important steps. These measures ensure the validity, consistency, and accountability of LVMs, enhancing their value, performance, and compliance within enterprise settings.

Future Trends and Possibilities for LVMs

With the adoption of digital transformation by enterprises, the domain of LVMs is poised for further evolution. Anticipated advancements in model architectures, training techniques, and application areas will drive LVMs to become more robust, efficient, and versatile. For example, self-supervised learning, which enables LVMs to learn from unlabeled data without human intervention, is expected to gain prominence.

Likewise, transformer models, renowned for their ability to process sequential data using attention mechanisms, are likely to contribute to state-of-the-art outcomes in various tasks. Similarly, zero-shot learning, which allows LVMs to perform tasks they have not been explicitly trained on, is set to expand their capabilities even further.
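For readers curious about what an attention mechanism actually computes, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are made-up toy values standing in for three image patches, and the function names are illustrative only; real LVMs apply the same idea to learned, high-dimensional representations with many queries at once.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each key is compared with the query; the resulting weights decide
    how much of each value vector flows into the output.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy, hypothetical vectors (not taken from any real model).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([1.0, 0.0], keys, values)
print(weights, output)
```

The query here matches the first and third keys equally well, so those two patches receive equal weight and the second patch receives less.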

Simultaneously, the scope of LVM application areas is expected to widen, encompassing new industries and domains. Medical imaging, in particular, holds promise as an avenue where LVMs could assist in the diagnosis, monitoring, and treatment of various diseases and conditions, including cancer, COVID-19, and Alzheimer’s.

In the e-commerce sector, LVMs are expected to enhance personalization, optimize pricing strategies, and increase conversion rates by analyzing and generating images and videos of products and customers. The entertainment industry also stands to benefit as LVMs contribute to the creation and distribution of captivating and immersive content across movies, games, and music.

To fully utilize the potential of these future trends, enterprises must focus on acquiring and developing the necessary skills and competencies for the adoption and implementation of LVMs. In addition to technical challenges, successfully integrating LVMs into enterprise workflows requires a clear strategic vision, a robust organizational culture, and a capable team. Key skills and competencies include data literacy, which encompasses the ability to understand, analyze, and communicate data.

The Bottom Line

In conclusion, LVMs are effective tools for enterprises, promising transformative impacts on productivity, efficiency, and innovation. Despite challenges, embracing best practices and advanced technologies can overcome hurdles. LVMs are envisioned not just as tools but as pivotal contributors to the next technological era, requiring a thoughtful approach. A practical adoption of LVMs ensures future readiness, acknowledging their evolving role for responsible integration into business processes.

Text

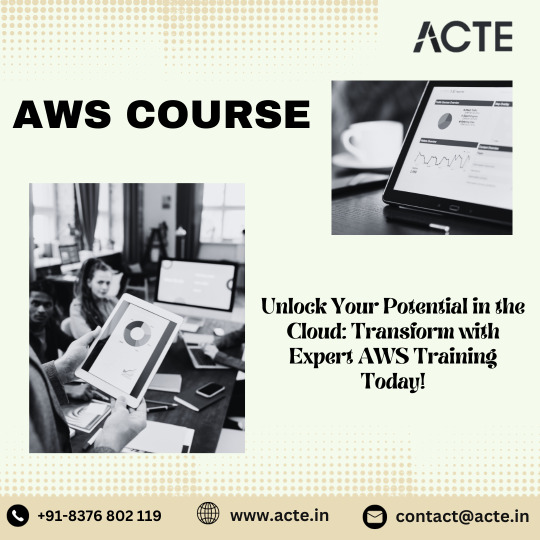

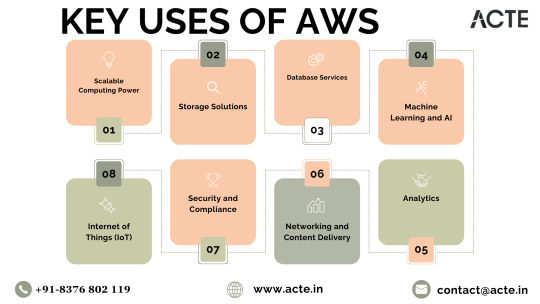

Navigating the Cloud: Unleashing Amazon Web Services' (AWS) Impact on Digital Transformation

In the ever-evolving realm of technology, cloud computing stands as a transformative force, offering unparalleled flexibility, scalability, and cost-effectiveness. At the forefront of this paradigm shift is Amazon Web Services (AWS), a comprehensive cloud computing platform provided by Amazon.com. For those eager to elevate their proficiency in AWS, specialized training initiatives like AWS Training in Pune offer invaluable insights into maximizing the potential of AWS services.

Exploring AWS: A Catalyst for Digital Transformation

As we traverse the dynamic landscape of cloud computing, AWS emerges as a pivotal player, empowering businesses, individuals, and organizations to fully embrace the capabilities of the cloud. Let's delve into the multifaceted ways in which AWS is reshaping the digital landscape and providing a robust foundation for innovation.

Decoding the Heart of AWS

AWS in a Nutshell: Amazon Web Services serves as a robust cloud computing platform, delivering a diverse range of scalable and cost-effective services. Tailored to meet the needs of individual users and large enterprises alike, AWS acts as a gateway, unlocking the potential of the cloud for various applications.

Core Function of AWS: At its essence, AWS is designed to offer on-demand computing resources over the internet. This revolutionary approach eliminates the need for substantial upfront investments in hardware and infrastructure, providing users with seamless access to a myriad of services.

AWS Toolkit: Key Services Redefined

Empowering Scalable Computing: Through Elastic Compute Cloud (EC2) instances, AWS furnishes virtual servers, enabling users to dynamically scale computing resources based on demand. This adaptability is paramount for handling fluctuating workloads without the constraints of physical hardware.

Versatile Storage Solutions: AWS presents a spectrum of storage options, such as Amazon Simple Storage Service (S3) for object storage, Amazon Elastic Block Store (EBS) for block storage, and Amazon Glacier for long-term archival. These services deliver robust and scalable solutions to address diverse data storage needs.

Streamlining Database Services: Managed database services like Amazon Relational Database Service (RDS) and Amazon DynamoDB (NoSQL database) streamline efficient data storage and retrieval. AWS simplifies the intricacies of database management, ensuring both reliability and performance.

AI and Machine Learning Prowess: AWS empowers users with machine learning services, exemplified by Amazon SageMaker. This facilitates the seamless development, training, and deployment of machine learning models, opening new avenues for businesses integrating artificial intelligence into their applications. To master AWS intricacies, individuals can leverage the Best AWS Online Training for comprehensive insights.

In-Depth Analytics: Amazon Redshift and Amazon Athena play pivotal roles in analyzing vast datasets and extracting valuable insights. These services empower businesses to make informed, data-driven decisions, fostering innovation and sustainable growth.

Networking and Content Delivery Excellence: AWS services, such as Amazon Virtual Private Cloud (VPC) for network isolation and Amazon CloudFront for content delivery, ensure low-latency access to resources. These features enhance the overall user experience in the digital realm.

Commitment to Security and Compliance: With an unwavering emphasis on security, AWS provides a comprehensive suite of services and features to fortify the protection of applications and data. Furthermore, AWS aligns with various industry standards and certifications, instilling confidence in users regarding data protection.

Championing the Internet of Things (IoT): AWS IoT services empower users to seamlessly connect and manage IoT devices, collect and analyze data, and implement IoT applications. This aligns seamlessly with the burgeoning trend of interconnected devices and the escalating importance of IoT across various industries.
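To make the idea of scaling computing resources with demand (described for EC2 above) more concrete, here is a small sketch of a proportional scaling rule. It is a simplified illustration under stated assumptions, not the algorithm AWS itself uses: the function name, the load figures, and the minimum and maximum fleet sizes are all hypothetical.

```python
import math


def desired_instances(current, observed_load, target_load, minimum=1, maximum=10):
    """Hypothetical proportional rule: resize the fleet so that the
    average load per instance moves back toward the target."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    wanted = math.ceil(current * observed_load / target_load)
    # Clamp to the allowed fleet size so a spike cannot scale without limit.
    return max(minimum, min(maximum, wanted))


# Four servers averaging 90% CPU against a 60% target: scale out.
print(desired_instances(4, 90, 60))
# The same four servers at 15% CPU: scale in.
print(desired_instances(4, 15, 60))
```

In practice, a managed auto scaling service would evaluate a rule of this kind against monitored metrics, so the operator only chooses the target and the limits.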

Closing Thoughts: AWS, the Catalyst for Transformation

In conclusion, Amazon Web Services stands as a pioneering force, reshaping how businesses and individuals harness the power of the cloud. By providing a dynamic, scalable, and cost-effective infrastructure, AWS empowers users to redirect their focus towards innovation, unburdened by the complexities of managing hardware and infrastructure. As technology advances, AWS remains a stalwart, propelling diverse industries into a future brimming with endless possibilities. The journey into the cloud with AWS signifies more than just migration; it's a profound transformation, unlocking novel potentials and propelling organizations toward an era of perpetual innovation.

Text

Cryptocurrencies to invest long term in 2023

With fiat currencies in constant devaluation, inflation that does not seem to let up, and job offers that are increasingly precarious, betting on entrepreneurship and investment seems to be the safest way to secure a future. With this in mind, we have put together a detailed list of the twelve best cryptocurrencies to invest in for the long term.

Bitcoin Minetrix: Bitcoin Minetrix has developed an innovative proposal that lets investors participate in cloud mining at low cost, without complications, scams, or expensive equipment. Launchpad development company is the first solution to decentralized mining that will allow participants to obtain mining credits for the extraction of BTC.

The proposal includes the possibility of staking, an attractive APY, and the potential to alleviate selling pressure when the native BTCMTX token launches on crypto exchange platforms.

The new pre-sale has attracted the attention of investors: within a few minutes of opening, it raised 100,000 dollars of the 15.6 million it is aiming for.