#Cluster Computing Market Size

Consumers purchase fewer products, or a narrower range of them, so the grocer must raise prices to recover its profits. Specific products are often unavailable in stores in minority neighborhoods. One basic premise of the Efficiency Wage model is that the “law of one price is repealed. Wages and Workers' productivity may differ between and across industries” (Romaguera 13). Even within the same chain, prices are often lower at stores located near prime real estate, where affluent citizens live, particularly when pricing is left to individual store owners and managers. First, we must assume that many minorities live in less affluent neighborhoods. Next, we must assume they purchase fewer products and have less income to spend on them. This is particularly true of higher-priced items, such as produce in grocery stores or hardwood lumber from the local Home Depot. These are not illogical assumptions: there is less demand, and less income available for resources. Consider the local BP stations in two very different neighborhoods. Those living in the less affluent neighborhood are more likely to have jobs closer to home, and so consume less fuel than those in the more affluent neighborhood. Therefore, to make the same profit as the station in the more affluent neighborhood, the station in the less affluent neighborhood must raise the price of fuel. In turn, the higher cost of fuel may cause those living nearby to become more conscious of fuel consumption or to use alternative methods of transportation. If another premise of the Efficiency Wage model holds, that “Net productivity of workers is a function of the wage they receive” (Romaguera 14), then wages in minority neighborhoods may be lower simply because they do not need to be higher. If one worker is not productive and is fired, many others are waiting to take his or her place and will work for the same wage. Again, the ability to purchase resources is reduced because of lower income.
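The fuel-station argument is simple arithmetic. Profit equals the margin per gallon times the gallons sold, so a station that sells fewer gallons needs a wider margin to earn the same profit. The sketch below illustrates this. The cost, profit target, and volumes are made-up figures, not data from the essay.

```python
def required_price(unit_cost: float, target_profit: float, volume: float) -> float:
    """Price per unit needed to earn `target_profit` when selling `volume` units.

    Profit = (price - unit_cost) * volume, so price = unit_cost + target_profit / volume.
    """
    if volume <= 0:
        raise ValueError("volume must be positive")
    return unit_cost + target_profit / volume


# Hypothetical figures: both stations pay $3.00 per gallon and want $1,500 profit per week.
affluent = required_price(unit_cost=3.00, target_profit=1500.0, volume=10000)
less_affluent = required_price(unit_cost=3.00, target_profit=1500.0, volume=6000)
print(f"{affluent:.2f} {less_affluent:.2f}")  # prints: 3.15 3.25
```

The sketch holds volume fixed. As the essay notes, a higher price may itself push local consumption down further.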
The same concept can be applied to housing and rent, only reversed. “In 2000, the proportion of African American households that had obtained home ownership was 65% lower than the proportion of white households that had obtained homeownership” (Ohio State University 3). With fewer households owning their homes, more depend on rentals, and rental owners will seek to charge as much as demand allows, regardless of race. This means that those living in less affluent neighborhoods may ultimately get less for their money in terms of rental unit quality, amenities, and space.

Social segmentation also affects the distribution of resources. It may not always do so directly. However, when marketing efforts are shaped by clustering or categorizing specific geographic locations or types of customers, allocation is indirectly affected. In Asian Social Science (December 2008), Yingchun Guo (3-4) explains how clustering works for electric power consumers. Customers are divided into four categories. The highest-ranking group consumes more power and has good credit, while the lowest-ranking group has less-than-ideal credit and consumes less power. Marketing strategies are likely to focus more heavily on the highest-ranking users, from whom the company stands to make the most profit. Customers in the highest-ranking group may be offered special rate packages and lower per-unit pricing than those in the lowest-ranking group. As a result, those in the lowest-ranking group will continue to use less power and are likely to focus on conservation measures, such as unplugging appliances and turning off more lights. They also own fewer major appliances, which may be smaller, and fewer electronics such as stereos, TVs, and computers. Because they consume less power and have poorer credit, they are less likely to move up in the rankings. They are even less likely to be offered the benefits, such as special rates, that preferred customers receive.
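Guo's actual clustering procedure is not reproduced here. As a simplified stand-in, the sketch below assigns customers to four ranked groups using two thresholds, one on consumption and one on credit. The threshold values, the credit-score field, and the ordering of the two middle groups are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    monthly_kwh: float   # power consumption
    credit_score: int    # hypothetical credit measure


# Hypothetical cut-offs; a real segmentation would derive the groups from data.
KWH_THRESHOLD = 900.0
CREDIT_THRESHOLD = 650


def segment(c: Customer) -> int:
    """Rank 1 (high use, good credit) to 4 (low use, poor credit); middle ranks are assumed."""
    high_use = c.monthly_kwh >= KWH_THRESHOLD
    good_credit = c.credit_score >= CREDIT_THRESHOLD
    if high_use and good_credit:
        return 1
    if good_credit:   # low use, good credit
        return 2
    if high_use:      # high use, poor credit
        return 3
    return 4          # low use, poor credit


customers = [
    Customer("A", 1200, 720),
    Customer("B", 500, 700),
    Customer("C", 1100, 600),
    Customer("D", 400, 580),
]
for c in customers:
    print(c.name, segment(c))  # A 1, B 2, C 3, D 4 (one per line)
```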

Cloud Native Storage Market Insights: Industry Share, Trends & Future Outlook 2032

The Cloud Native Storage Market Size was valued at USD 16.19 Billion in 2023 and is expected to reach USD 100.09 Billion by 2032, growing at a CAGR of 22.5% over the forecast period 2024-2032.
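The stated growth rate can be checked from the two market-size figures using the standard compound annual growth rate (CAGR) formula. The sketch below treats 2023 as the base year, so 2024-2032 spans nine compounding years. That is an assumption about how the report counts the period.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the report: USD 16.19B in 2023 to USD 100.09B in 2032.
rate = cagr(16.19, 100.09, 2032 - 2023)
print(f"{rate:.1%}")  # prints: 22.4%
```

This gives roughly 22.4%, which is consistent with the reported 22.5% once rounding in the underlying figures is allowed for.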

The cloud native storage market is experiencing rapid growth as enterprises shift towards scalable, flexible, and cost-effective storage solutions. The increasing adoption of cloud computing and containerization is driving demand for advanced storage technologies.

The cloud native storage market continues to expand as businesses seek high-performance, secure, and automated data storage solutions. With the rise of hybrid cloud, Kubernetes, and microservices architectures, organizations are investing in cloud native storage to enhance agility and efficiency in data management.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3454

Market Key Players:

Microsoft (Azure Blob Storage, Azure Kubernetes Service (AKS))

IBM (IBM Cloud Object Storage, IBM Spectrum Scale)

AWS (Amazon S3, Amazon EBS (Elastic Block Store))

Google (Google Cloud Storage, Google Kubernetes Engine (GKE))

Alibaba Cloud (Alibaba Object Storage Service (OSS), Alibaba Cloud Container Service for Kubernetes)

VMware (VMware vSAN, VMware Tanzu Kubernetes Grid)

Huawei (Huawei FusionStorage, Huawei Cloud Object Storage Service)

Citrix (Citrix Hypervisor, Citrix ShareFile)

Tencent Cloud (Tencent Cloud Object Storage (COS), Tencent Kubernetes Engine)

Scality (Scality RING, Scality ARTESCA)

Splunk (Splunk SmartStore, Splunk Enterprise on Kubernetes)

Linbit (LINSTOR, DRBD (Distributed Replicated Block Device))

Rackspace (Rackspace Object Storage, Rackspace Managed Kubernetes)

Robin.io (Robin Cloud Native Storage, Robin Multi-Cluster Automation)

MayaData (OpenEBS, Data Management Platform (DMP))

Diamanti (Diamanti Ultima, Diamanti Spektra)

MinIO (MinIO Object Storage, MinIO Kubernetes Operator)

Rook (Rook Ceph, Rook EdgeFS)

Ondat (Ondat Persistent Volumes, Ondat Data Mesh)

Ionir (Ionir Data Services Platform, Ionir Continuous Data Mobility)

Trilio (TrilioVault for Kubernetes, TrilioVault for OpenStack)

UpCloud (UpCloud Object Storage, UpCloud Managed Databases)

Arrikto (Kubeflow Enterprise, Rok (Data Management for Kubernetes))

Market Size, Share, and Scope

The market is witnessing significant expansion across industries such as IT, BFSI, healthcare, retail, and manufacturing.

Hybrid and multi-cloud storage solutions are gaining traction due to their flexibility and cost-effectiveness.

Enterprises are increasingly adopting object storage, file storage, and block storage tailored for cloud native environments.

Key Market Trends Driving Growth

Rise in Cloud Adoption: Organizations are shifting workloads to public, private, and hybrid cloud environments, fueling demand for cloud native storage.

Growing Adoption of Kubernetes: Kubernetes-based storage solutions are becoming essential for managing containerized applications efficiently.

Increased Data Security and Compliance Needs: Businesses are investing in encrypted, resilient, and compliant storage solutions to meet global data protection regulations.

Advancements in AI and Automation: AI-driven storage management and self-healing storage systems are revolutionizing data handling.

Surge in Edge Computing: Cloud native storage is expanding to edge locations, enabling real-time data processing and low-latency operations.

Integration with DevOps and CI/CD Pipelines: Developers and IT teams are leveraging cloud storage automation for seamless software deployment.

Hybrid and Multi-Cloud Strategies: Enterprises are implementing multi-cloud storage architectures to optimize performance and costs.

Increased Use of Object Storage: The scalability and efficiency of object storage are driving its adoption in cloud native environments.

Serverless and API-Driven Storage Solutions: The rise of serverless computing is pushing demand for API-based cloud storage models.

Sustainability and Green Cloud Initiatives: Energy-efficient storage solutions are becoming a key focus for cloud providers and enterprises.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3454

Market Segmentation:

By Component

Solution

Object Storage

Block Storage

File Storage

Container Storage

Others

Services

System Integration & Deployment

Training & Consulting

Support & Maintenance

By Deployment

Private Cloud

Public Cloud

By Enterprise Size

SMEs

Large Enterprises

By End Use

BFSI

Telecom & IT

Healthcare

Retail & Consumer Goods

Manufacturing

Government

Energy & Utilities

Media & Entertainment

Others

Market Growth Analysis

Factors Driving Market Expansion

The growing need for cost-effective and scalable data storage solutions

Adoption of cloud-first strategies by enterprises and governments

Rising investments in data center modernization and digital transformation

Advancements in 5G, IoT, and AI-driven analytics

Industry Forecast 2032: Size, Share & Growth Analysis

The cloud native storage market is projected to grow significantly over the next decade, driven by advancements in distributed storage architectures, AI-enhanced storage management, and increasing enterprise digitalization.

North America leads the market, followed by Europe and Asia-Pacific, with China and India emerging as key growth hubs.

The demand for software-defined storage (SDS), container-native storage, and data resiliency solutions will drive innovation and competition in the market.

Future Prospects and Opportunities

1. Expansion in Emerging Markets

Developing economies are expected to witness increased investment in cloud infrastructure and storage solutions.

2. AI and Machine Learning for Intelligent Storage

AI-powered storage analytics will enhance real-time data optimization and predictive storage management.

3. Blockchain for Secure Cloud Storage

Blockchain-based decentralized storage models will offer improved data security, integrity, and transparency.

4. Hyperconverged Infrastructure (HCI) Growth

Enterprises are adopting HCI solutions that integrate storage, networking, and compute resources.

5. Data Sovereignty and Compliance-Driven Solutions

The demand for region-specific, compliant storage solutions will drive innovation in data governance technologies.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-storage-market-3454

Conclusion

The cloud native storage market is poised for exponential growth, fueled by technological innovations, security enhancements, and enterprise digital transformation. As businesses embrace cloud, AI, and hybrid storage strategies, the future of cloud native storage will be defined by scalability, automation, and efficiency.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Self-supervised Learning Market Growth: A Deep Dive Into Trends and Insights

The global self-supervised learning market size is estimated to reach USD 89.68 billion by 2030, expanding at a CAGR of 35.2% from 2025 to 2030, according to a new report by Grand View Research, Inc. Self-supervised learning is a machine learning technique used prominently in Natural Language Processing (NLP), followed by computer vision and speech processing applications. Applications of self-supervised learning include paraphrasing, colorization, and speech recognition.
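The core idea of self-supervised learning is that training labels come from the data itself rather than from human annotators. The sketch below shows one common pretext task in NLP: hide words and ask the model to predict them. It is simplified to one masked word per example. The `make_masked_examples` helper and the `[MASK]` token are illustrative choices, not any vendor's API.

```python
import random


def make_masked_examples(sentence: str, mask_prob: float = 0.15, seed: int = 0):
    """Turn an unlabeled sentence into (input, target) pairs by hiding words.

    Each selected position yields one example whose label is the original word,
    so no manual annotation is needed.
    """
    rng = random.Random(seed)
    tokens = sentence.split()
    examples = []
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked = tokens[:i] + ["[MASK]"] + tokens[i + 1:]
            examples.append((" ".join(masked), token))
    return examples


text = "self supervised learning creates labels from raw unlabeled text"
for inp, target in make_masked_examples(text, mask_prob=0.3):
    print(f"{inp!r} -> {target!r}")
```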

The COVID-19 pandemic had a positive impact on the market. More businesses adopted AI and Machine Learning as a response to the COVID-19 pandemic. Many prominent market players such as U.S.-based Amazon Web Services, Inc., Google, and Microsoft witnessed a rise in revenue during the pandemic. Moreover, accelerated digitalization also contributed to the adoption of self-supervised learning applications. For instance, in April 2020, Google Cloud, a business segment of Google, launched an Artificial Intelligence (AI) chatbot that provides critical information to fight the COVID-19 pandemic.

Many market players offer solutions for applications such as text-to-speech and language translation and prediction, and these players are also conducting research in self-supervised learning. For instance, U.S.-based Meta has been advancing self-supervised learning research and has developed various algorithms and models. In February 2022, Meta announced new advances in its self-supervised computer vision model SEER. The updated model is more capable than its predecessor and is expected to help the company build computer vision products.

Request Free Sample PDF of Self-supervised Learning Market Size, Share & Trends Analysis Report

Self-supervised Learning Market Report Highlights

• In terms of end-use, the BFSI segment accounted for the largest revenue share of 18.3% in 2024 and is expected to retain its position over the forecast period. This can be attributed to the increasing adoption of technologies such as AI and ML in the segment. The Advertising & Media segment is anticipated to register lucrative growth over the forecast period.

• Based on technology, the natural language processing segment accounted for the dominant share in 2024. This can be attributed to the variety and penetration of NLP applications and their ability to handle vast amounts of unstructured text data across multiple industries.

• North America held the largest share of 35.7% in 2024 and is expected to retain its position over the forecast period. This can be attributed to the presence of a large number of market players in the region. Moreover, the presence of specialists and developed technology infrastructure are aiding the growth of the market.

• In July 2024, Google LLC launched the Agricultural Landscape Understanding (ALU) tool in India, an AI-based platform that uses high-resolution satellite imagery and machine learning to provide detailed insights on drought preparedness, irrigation, and crop management at an individual farm level.

• In May 2024, researchers from Meta AI, Google, INRIA, and University Paris Saclay created an automatic dataset curation technique for self-supervised learning (SSL) using embedding models and hierarchical k-means clustering. This method improves model performance by ensuring balanced datasets and reducing the cost and time associated with manual curation.
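The curation idea in the May 2024 item can be sketched in plain Python: cluster the embeddings, then sample evenly across clusters. This is a simplified illustration under stated assumptions. It runs a single level of k-means with farthest-first initialization, whereas the researchers describe hierarchical k-means. It also uses toy 2-D points in place of real embedding vectors, and the function names are hypothetical.

```python
import math
import random
from collections import defaultdict


def init_farthest(points, k):
    """Farthest-first initialisation: start from the first point, then keep adding
    the point farthest from every centroid chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, iters=20):
    """Lloyd's k-means on equal-length tuples; returns one cluster label per point."""
    centroids = init_farthest(points, k)
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Move each centroid to the mean of its members (keep it if the cluster is empty).
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels


def balanced_sample(points, labels, per_cluster, seed=0):
    """Draw up to `per_cluster` points from every cluster so no cluster dominates."""
    rng = random.Random(seed)
    by_cluster = defaultdict(list)
    for p, lab in zip(points, labels):
        by_cluster[lab].append(p)
    sample = []
    for members in by_cluster.values():
        sample.extend(rng.sample(members, min(per_cluster, len(members))))
    return sample


# Toy "embeddings": a large cluster of 90 points near the origin and a small one of 10.
common = [((i % 10) * 0.1, (i // 10) * 0.1) for i in range(90)]
rare = [(10 + i * 0.1, 10.0) for i in range(10)]
points = common + rare
labels = kmeans(points, k=2)
curated = balanced_sample(points, labels, per_cluster=10)
print(len(curated), sum(1 for p in curated if p[0] >= 10))  # prints: 20 10
```

The raw data is 90% "common" points. The curated sample is split evenly between the two clusters, which is the balancing effect the item describes.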

Self-supervised Learning Market Segmentation

Grand View Research has segmented the global self-supervised learning market based on end use, technology, and region:

Self-supervised Learning End Use Outlook (Revenue, USD Million, 2018 - 2030)

• Healthcare

• BFSI

• Automotive & Transportation

• Software Development (IT)

• Advertising & Media

• Others

Self-supervised Learning Technology Outlook (Revenue, USD Million, 2018 - 2030)

• Natural Language Processing (NLP)

• Computer Vision

• Speech Processing

Self-supervised Learning Regional Outlook (Revenue, USD Million, 2018 - 2030)

• North America

o U.S.

o Canada

o Mexico

• Europe

o UK

o Germany

o France

• Asia Pacific

o China

o Japan

o India

o Australia

o South Korea

• Latin America

o Brazil

• Middle East & Africa (MEA)

o KSA

o UAE

o South Africa

List of Key Players in Self-supervised Learning Market

• Amazon Web Services, Inc.

• Apple Inc.

• Baidu, Inc.

• Dataiku

• Databricks

• DataRobot, Inc.

• IBM Corporation

• Meta

• Microsoft

• SAS Institute Inc.

• Tesla

• The MathWorks, Inc.

Order a free sample PDF of the Self-supervised Learning Market Intelligence Study, published by Grand View Research.

Everyone is doing digital marketing these days. Small and medium-sized businesses are competing directly with large corporations online. Digital marketing levels the playing field for everyone, even those who have no real idea of how the internet works or what a proxy is. Large corporations may rely on their huge digital marketing budgets to dominate the market, but SMEs can be cleverer with their strategies and their use of data.

That last part is actually very important. How you use data to drive digital marketing campaigns can determine the success rate of those campaigns. More importantly, data allows digital services and marketing campaigns to be automated to a certain degree. How can this help small and medium businesses compete with the big boys?

Eliminating Mundane Tasks

Automation is the way forward in digital marketing. Rather than doing everything manually, small and medium-sized businesses can automate different parts of their marketing campaigns to achieve higher efficiency. Mundane tasks, such as tracking users along the sales funnel, can be fully automated, so marketers can focus on other, more important tasks.

Automation isn't just a tool for streamlining campaigns, either. It can also power other parts of a digital marketing campaign. For example, you can automate the growth of your Instagram or other social media accounts using scripts that automatically engage users and search for active followers. Some businesses even go as far as creating an army of fully automated social media accounts to gain traction and boost exposure.

This leads to digital services based solely on automation. With the right tools, there is nothing you cannot automate online. Even creating hundreds, or thousands, of social media accounts for campaign purposes becomes easy when the whole process is automated. The task can be completed in a matter of hours rather than several weeks.

Digital Services in the Cloud

The key to taking your digital marketing capacity to the next level is cloud computing, which has made a lot of things possible. Scripts designed to automate different parts of a campaign can now run in the cloud rather than on a desktop computer. With automation tools running in the cloud, you don't have to keep your laptop on or monitor every detail of the campaign.

Additional cloud services take this benefit even further. Digital services running in the cloud are not only more cost-efficient but also more reliable in general. Bots that access social media sites from cloud computing clusters can stay connected longer, and more reliably, to help boost your social media posts.

Capacity increases as well. Cloud computing has become so affordable that you can host more scripts without spending more than $10 a month on cloud servers. Of course, cloud servers are only one part of the equation. You also need multiple IP addresses (usually a proxy network) and other measures to make sure your operations run smoothly.

Data to the Rescue

Data collection is another task you can now automate at a higher level. Data-driven marketing and digital services have become competitive advantages that SMEs cannot afford to miss. Data is as valuable as it gets right now; some say it is more valuable than oil. Fortunately for smaller digital operations, collecting data on a large scale is also easier than ever.

Web scraping is the go-to method for collecting information from the World Wide Web, and today's web scraping tools are more advanced than ever. They can be programmed to automatically identify lead-related information such as email addresses, job titles, and even phone numbers. You can also automate how the scraped data is used for digital marketing: XML or CSV files generated by the scraping operation can be processed directly by tools like ActiveCampaign.
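Tracking users along the sales funnel, one of the mundane tasks this post suggests automating, comes down to counting how many distinct users reach each stage. The sketch below assumes events have been exported to a CSV file. The `user_id` and `stage` column names and the stage names are hypothetical.

```python
import csv
import io
from collections import defaultdict

# Hypothetical funnel stages, in order; real stage names depend on your tracking setup.
STAGES = ["visit", "signup", "trial", "purchase"]


def funnel_report(csv_file):
    """Return (stage, unique users, conversion from previous stage) for each stage.

    Conversion is None for the first stage, or when the previous stage had no users.
    """
    users_at = defaultdict(set)
    for row in csv.DictReader(csv_file):
        users_at[row["stage"]].add(row["user_id"])
    report = []
    previous = None
    for stage in STAGES:
        count = len(users_at[stage])
        rate = count / previous if previous else None
        report.append((stage, count, rate))
        previous = count
    return report


events = io.StringIO(
    "user_id,stage\n"
    "u1,visit\nu2,visit\nu3,visit\nu4,visit\n"
    "u1,signup\nu2,signup\n"
    "u1,trial\n"
    "u1,purchase\n"
)
for stage, count, rate in funnel_report(events):
    print(stage, count, "-" if rate is None else f"{rate:.0%}")
```

Counting users with sets means a user who triggers the same stage twice is counted once.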

Data acquisition is so important that even larger corporations are starting to use web scraping for the same purpose. For smaller businesses, however, web scraping can be made flexible and contextual. You don't have to wait until the entire scraping run is complete before you start refining your scraping parameters.

Bridging the Gap with Intermediary Servers or Proxies

While web scraping isn't illegal, sites like Google and Facebook don't like it when you collect large amounts of information from them. If you use your real IP address to scrape the web, or the IP address of your cloud server, you will get banned sooner than you can type your IP address without copying and pasting.

This is where proxies come in: acting as intermediary servers, they bridge the gap. Proxies and intermediary servers use dynamic IP addresses that are rotated frequently. You can take full advantage of web scraping without exposing your real IP address in the process. With proxies as the final piece of the puzzle, creating a constant stream of data becomes easy.

Behind every marketing tool, data scraping application, or data acquisition service, there is a robust and extensive proxy network supplying access and reliable data sourcing. As digital services rely increasingly on data, the importance of these networks will become more and more apparent.

A Comprehensive Handbook on Datasets for Machine Learning Initiatives

Introduction:

Machine learning is fundamentally dependent on data. Whether you are a novice delving into predictive modeling or a seasoned expert developing deep learning architectures, selecting an appropriate dataset is vital for success. This guide examines the main categories of datasets, where to obtain them, and how to select the most suitable ones for your machine learning projects.

The Importance of Datasets in Machine Learning

A dataset serves as the foundation for any machine learning model. High-quality and well-organized datasets enable models to identify significant patterns, whereas subpar data can result in inaccurate and unreliable outcomes. Datasets impact several aspects, including:

Model accuracy and efficiency

Feature selection and engineering

Generalizability of models

Training duration and computational requirements

Selecting the appropriate dataset is as critical as choosing the right algorithm. Let us now investigate the different types of datasets and their respective applications.

Categories of Machine Learning Datasets

Machine learning datasets are available in various formats and serve multiple purposes. The primary categories include:

1. Structured vs. Unstructured Datasets

Structured data: Arranged in a tabular format consisting of rows and columns (e.g., Excel, CSV files, relational databases).

Unstructured data: Comprises images, videos, audio files, and text that necessitate preprocessing prior to being utilized in machine learning models.

2. Supervised vs. Unsupervised Datasets

Supervised datasets consist of labeled information, where input-output pairs are clearly defined, and are typically employed in tasks related to classification and regression.

Unsupervised datasets, on the other hand, contain unlabeled information, allowing the model to independently identify patterns and structures, and are utilized in applications such as clustering and anomaly detection.

3. Time-Series and Sequential Data

These datasets are essential for forecasting and predictive analytics, including applications like stock market predictions, weather forecasting, and data from IoT sensors.
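Time-series data is usually reframed as a supervised problem before training: a window of past values becomes the input and the next value becomes the target. A minimal sketch follows. The temperature readings are made-up sample values.

```python
def sliding_windows(series, window):
    """Turn a time series into supervised (inputs, target) pairs.

    Each example uses `window` consecutive observations to predict the next one.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (series[i:i + window], series[i + window])
        for i in range(len(series) - window)
    ]


temps = [12.1, 12.8, 13.5, 13.2, 14.0, 14.6]
for inputs, target in sliding_windows(temps, window=3):
    print(inputs, "->", target)
```

When validating such a model, keep the time order intact: train on earlier windows and test on later ones, so the model never sees the future.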

4. Text and NLP Datasets

Text datasets serve various natural language processing functions, including sentiment analysis, the development of chatbots, and translation tasks.

5. Image and Video Datasets

These datasets are integral to computer vision applications, including facial recognition, object detection, and medical imaging.

Having established an understanding of the different types of datasets, we can now proceed to examine potential sources for obtaining them.

Domain-Specific Datasets

Healthcare and Medical Datasets

MIMIC-III – ICU patient data for medical research.

Chest X-ray Dataset – Used for pneumonia detection.

Finance and Economics Datasets

Yahoo Finance API – Financial market and stock data.

Quandl – Economic, financial, and alternative data.

Natural Language Processing (NLP) Datasets

Common Crawl – Massive open archive of web crawl data.

Sentiment140 – Labeled tweets for sentiment analysis.

Computer Vision Datasets

ImageNet – Large-scale image dataset for image classification and object detection.

COCO (Common Objects in Context) – Image dataset for segmentation and captioning tasks.

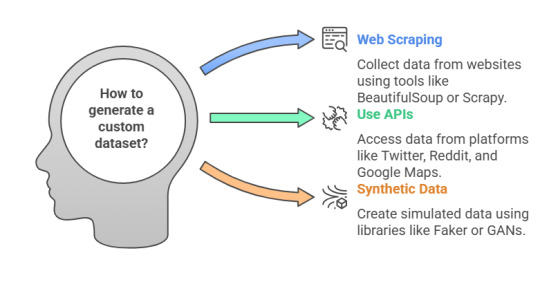

Custom Dataset Generation

When publicly available datasets do not fit your needs, you can:

Web Scraping: Use BeautifulSoup or Scrapy to collect custom data.

APIs: Utilize APIs from Twitter, Reddit, and Google Maps to generate unique datasets.

Synthetic Data: Create simulated datasets using libraries like Faker or Generative Adversarial Networks (GANs).
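Libraries such as Faker generate realistic fake values. The sketch below instead uses only Python's standard `random` module, so it runs without extra packages. The fields, value ranges, and labeling rule are hypothetical, chosen only to show how a labeled dataset can be simulated.

```python
import random


def synthetic_customers(n, seed=42):
    """Generate n fake customer records with a simple, known labeling rule."""
    rng = random.Random(seed)  # fixed seed makes the dataset reproducible
    rows = []
    for i in range(n):
        age = rng.randint(18, 80)
        monthly_spend = round(rng.uniform(10, 500), 2)
        # Hypothetical rule: flag low spenders as a churn risk.
        churn_risk = monthly_spend < 100
        rows.append({"id": i, "age": age, "monthly_spend": monthly_spend, "churn_risk": churn_risk})
    return rows


for row in synthetic_customers(3):
    print(row)
```

Because the labeling rule is known, a model trained on this data can be sanity-checked against it. Synthetic data meant to replace real data should reflect real-world distributions, which this toy generator does not attempt.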

Selecting an Appropriate Dataset

The choice of an appropriate dataset is influenced by various factors:

Size and Diversity – A dataset that is both large and diverse enhances the model's ability to generalize effectively.

Data Quality – High-quality data that is clean, accurately labeled, and devoid of errors contributes to improved model performance.

Relevance – It is essential to select a dataset that aligns with the specific objectives of your project.

Legal and Ethical Considerations – Ensure adherence to data privacy laws and regulations, such as GDPR and HIPAA.
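Several of these criteria, data quality in particular, can be checked quickly before training. The sketch below counts missing values, exact duplicate rows, and class balance in records held as Python dicts. The record layout and the `quality_report` name are illustrative assumptions.

```python
from collections import Counter


def quality_report(rows, label_key):
    """Summarise basic quality issues in a list of dict records."""
    missing = Counter()
    for row in rows:
        for key, value in row.items():
            if value is None or value == "":
                missing[key] += 1
    # Exact duplicates: identical key/value pairs (values must be hashable).
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in seen.values() if count > 1)
    # Class balance: how many records carry each label.
    labels = Counter(row.get(label_key) for row in rows)
    return {
        "rows": len(rows),
        "missing": dict(missing),
        "duplicates": duplicates,
        "label_counts": dict(labels),
    }


data = [
    {"text": "great product", "label": "pos"},
    {"text": "", "label": "neg"},
    {"text": "great product", "label": "pos"},
    {"text": "works fine", "label": None},
]
print(quality_report(data, "label"))
```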

In Summary

Datasets serve as the cornerstone of any machine learning initiative. Regardless of whether the focus is on natural language processing, computer vision, or financial forecasting, the selection of the right dataset is crucial for the success of your model. Utilize platforms such as GTS.AI to discover high-quality datasets, or consider developing your own through web scraping and APIs.

With the appropriate data in hand, your machine learning project is already significantly closer to achieving success.

How to Choose the Right Machine Learning Algorithm for Your Data

Selecting the right machine learning algorithm is crucial for building effective models and achieving accurate predictions.

With so many algorithms available, deciding which one to use can feel overwhelming. This blog will guide you through the key factors to consider and help you make an informed decision based on your data and problem.

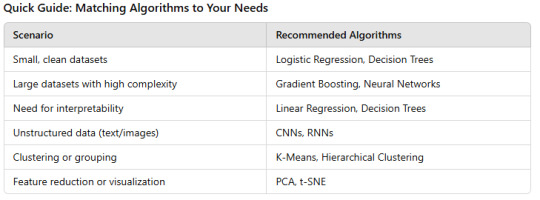

1. Understand Your Problem Type

The type of problem you’re solving largely determines the algorithm you’ll use.

Classification: When your goal is to assign data to predefined categories, like spam detection or disease diagnosis.

Algorithms:

Logistic Regression, Decision Trees, Random Forest, SVM, Neural Networks.

Regression:

When predicting continuous values, such as house prices or stock market trends.

Algorithms:

Linear Regression, Ridge Regression, Lasso Regression, Gradient Boosting.

Clustering:

For grouping similar data points, like customer segmentation or image clustering.

Algorithms:

K-Means, DBSCAN, Hierarchical Clustering.

Dimensionality Reduction:

For reducing the number of features while retaining important information, often used in data preprocessing.

Algorithms: PCA, t-SNE, Autoencoders.
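The problem types above can be summarized as a lookup table. In the sketch below, the `CANDIDATES` dictionary simply restates this post's lists. The `problem_type` helper is a hypothetical rule of thumb, not a substitute for checking your data and validating candidate models.

```python
# Candidate algorithms per problem type, restating the lists in this post.
CANDIDATES = {
    "classification": ["Logistic Regression", "Decision Trees", "Random Forest", "SVM", "Neural Networks"],
    "regression": ["Linear Regression", "Ridge Regression", "Lasso Regression", "Gradient Boosting"],
    "clustering": ["K-Means", "DBSCAN", "Hierarchical Clustering"],
    "dimensionality_reduction": ["PCA", "t-SNE", "Autoencoders"],
}


def problem_type(has_labels: bool, target_is_numeric: bool = False, reduce_features: bool = False) -> str:
    """Rough rule of thumb mapping a task description to a problem type."""
    if reduce_features:
        return "dimensionality_reduction"
    if not has_labels:
        return "clustering"
    return "regression" if target_is_numeric else "classification"


# Example: predicting house prices (labeled data, numeric target).
print(CANDIDATES[problem_type(has_labels=True, target_is_numeric=True)])
```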

2. Assess Your Data

The quality, size, and characteristics of your data significantly impact algorithm selection.

Data Size: For small datasets, simpler models like Linear Regression or Decision Trees often perform well.

For large datasets, algorithms like Neural Networks or Gradient Boosting can leverage more data effectively.

Data Type:

Structured data (tables with rows and columns):

Use algorithms like Logistic Regression or Random Forest.

Unstructured data (text, images, audio):

Deep learning models such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs) work best.

Missing Values and Outliers:

Tree-based ensembles such as Random Forest and Gradient Boosting are relatively robust to outliers, and many gradient boosting implementations handle missing values natively.

3. Consider Interpretability

Sometimes, understanding how a model makes predictions is as important as its accuracy.

High Interpretability Needed:

Choose simpler models like Decision Trees, Linear Regression, or Logistic Regression.

Accuracy Over Interpretability:

Complex models like Neural Networks or Gradient Boosting might be better.

4. Evaluate Training Time and Computational Resources

Some algorithms are computationally expensive and may not be suitable for large datasets or limited hardware.

Fast Algorithms: Logistic Regression, Naive Bayes, K-Nearest Neighbors (KNN; fast to train, though prediction can be slow on large datasets).

Resource-Intensive Algorithms: Neural Networks, Gradient Boosting, SVM with non-linear kernels.

5. Experiment and Validate

Even with careful planning, it’s essential to test multiple algorithms and compare their performance using techniques like cross-validation.
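K-fold cross-validation splits the data into k folds and uses each fold once as the test set, so every sample is tested exactly once. Libraries such as scikit-learn provide this (for example, `KFold`). The sketch below shows the mechanics in plain Python. It does not shuffle, so shuffle ordered data first. For time series, a forward-chaining split is more appropriate.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) lists for k-fold cross-validation without shuffling."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


for train, test in k_fold_indices(7, k=3):
    print("test:", test, "train size:", len(train))
```

In practice you would train a model on each `train` split, score it on the matching `test` split, and average the scores across folds.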

Use performance metrics such as accuracy, precision, recall, F1 score, or mean squared error to evaluate models.
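These metrics follow directly from counts of correct and incorrect predictions. The plain-Python sketch below covers binary classification metrics plus mean squared error for regression. The sample labels and values are made up.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    """Average squared difference between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


metrics = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print({name: round(value, 3) for name, value in metrics.items()})
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))
```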

Conclusion

Choosing the right machine learning algorithm requires understanding your problem, dataset, and resources.

By matching the algorithm to your specific needs and experimenting with different options, you can build a model that delivers reliable and actionable results.

Datasets for Machine Learning Projects: Making Content Work for Everyone

In the fast-paced world of artificial intelligence (AI) and machine learning (ML), datasets are fundamental to success. They power algorithms, enable pattern recognition, and drive groundbreaking innovation. GTS.AI recognizes the potential of high-quality datasets to help make content work for everyone. Accurate performance is essential, whether you are training a model to identify objects in images or to predict stock market trends. Let's dive into the different sources of datasets and how GTS.AI is breaking ground in handling datasets for modern AI projects.

Significance of Datasets in Machine Learning

Datasets are the core of ML models: they contain the information from which algorithms learn to perform tasks such as classification, regression, and clustering. Below are the reasons datasets matter.

Model Training: Algorithms learn relationships and patterns from labeled datasets. Models trained on insufficient or homogeneous data struggle to generalize, which limits their performance.

Performance Assessment: Test datasets are needed to evaluate a model's correctness and robustness. They should imitate real-world scenarios so that reliability can be assessed.

Inclusivity: Diverse datasets go a long way toward creating systems that perform equally well across population segments, reducing bias and improving the user experience.

Types of Datasets for Machine Learning

Based on the kind of application, various types of datasets are utilized:

Structured Data: Formatted, organized data such as Excel spreadsheets or SQL tables, generally used for applications such as fraud detection and recommendation systems.

Unstructured Data: Data without a predefined structure, such as text, images, and video, used in natural language processing (NLP) and computer vision projects.

Time-Series Data: Data points recorded at successive, usually regular, time intervals, critical for weather forecasting and financial analysis.

Anonymized and Synthetic Data: Data from which personal identifiers have been removed, or data generated artificially, used to augment training without exposing sensitive information.
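For the anonymized-data case, a common first step is to drop direct identifiers and replace record IDs with a keyed hash. The sketch below assumes records are Python dicts and that the secret key is stored outside the code. Strictly, this is pseudonymization rather than full anonymization, since the remaining fields may still allow re-identification.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with its HMAC-SHA256 digest (a 64-char hex token).

    The same value and key always yield the same token, so records can still be
    joined, but the token cannot be linked back to the value without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_records(records, id_field, drop_fields, secret_key):
    """Drop direct identifiers and pseudonymize the ID field of each record."""
    cleaned = []
    for record in records:
        row = {k: v for k, v in record.items() if k not in drop_fields}
        row[id_field] = pseudonymize(str(record[id_field]), secret_key)
        cleaned.append(row)
    return cleaned


if __name__ == "__main__":
    key = b"load-this-from-a-secrets-store"  # hypothetical placeholder key
    rows = [{"user_id": "u123", "email": "a@example.com", "age": 34}]
    print(anonymize_records(rows, "user_id", {"email"}, key))
```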

Popular Sources for ML Datasets

Kaggle: one of the most popular sites for finding datasets, experimenting, and competing against others.

UCI Machine Learning Repository: a credible source for academic and practical projects.

Open Government Data Portals: government sources such as data.gov, covering public policy, transportation, and more.

Custom Data Collection: specially curated datasets gathered through web scraping, surveys, or IoT devices.

What Should Dataset Managers Watch Out For?

Although datasets are essential, managing them comes with challenges.

Volume and Variety: The sheer size and variety of modern datasets demand robust storage and fast processing.

Quality Assurance: Mislabeled or imbalanced datasets reduce how well models learn from the data and introduce bias.

Privacy Concerns: Any handling of sensitive data must comply with all data protection laws.

Access and Licensing: Dataset ownership and usage permissions can be unclear or restrictive.
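The quality-assurance concern about imbalanced data can be checked before training. This minimal sketch counts labels with `collections.Counter`. The `warn_ratio` threshold of 3.0 is an arbitrary assumption, not an industry standard.

```python
from collections import Counter


def class_balance_report(labels, warn_ratio=3.0):
    """Return each class's share, the max/min class-count ratio, and an imbalance flag.

    warn_ratio is an assumed threshold: above it, the dataset is flagged as
    imbalanced and may need resampling or reweighting.
    """
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must not be empty")
    total = sum(counts.values())
    shares = {label: count / total for label, count in counts.items()}
    ratio = max(counts.values()) / min(counts.values())
    return shares, ratio, ratio > warn_ratio


if __name__ == "__main__":
    labels = ["fraud"] * 5 + ["legit"] * 95
    print(class_balance_report(labels))
```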

How GTS.AI Provides a Solution for Dataset Management

GTS.AI is aware of these challenges and provides cutting-edge solutions that help organizations use their datasets to full effect.

Custom Dataset Curation: We design datasets tailored to the requirements of your project, keeping a watchful eye on their quality and relevance.

Data Augmentation: We expand your datasets by generating synthetic data and balancing classes, helping you develop stronger models.

Annotation Services: Through precise labeling and semantic annotation, we prepare datasets for immediate use in AI systems.

Mitigation of Bias: Committed to inclusivity, we provide resources to identify and fix biases in datasets, ensuring fairness and equity.

Secure Data Handling: GTS.AI maintains the data you rely on under strict standards, safeguarding sensitive information and upholding regulatory compliance.
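Class balancing, mentioned under data augmentation, can be as simple as random oversampling. The sketch below shows that generic technique and assumes a small in-memory dataset. It is not a description of GTS.AI's own pipeline.

```python
import random
from collections import defaultdict


def random_oversample(samples, labels, seed=0):
    """Duplicate random minority-class samples until every class matches the largest.

    Generic random oversampling. Duplicates add no new information, so it can be
    paired with other augmentation or with class weighting.
    """
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)
    target = max(len(group) for group in by_class.values())
    rng = random.Random(seed)  # seeded for reproducible output
    out_samples, out_labels = [], []
    for label, group in by_class.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        out_samples.extend(group + extra)
        out_labels.extend([label] * (len(group) + len(extra)))
    return out_samples, out_labels


if __name__ == "__main__":
    xs, ys = random_oversample(list(range(10)), ["a"] * 8 + ["b"] * 2)
    print(ys.count("a"), ys.count("b"))
```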

Content Works for Everyone

At GTS.AI, we make certain that AI is accessible and impactful for all. Beyond providing quality datasets and annotation services, we help organizations develop AI systems that truly work and make an impact. From enhancing accessibility to promoting innovation, our solutions help ensure that content speaks to everyone.

Conclusion

Datasets are the lifeline of machine learning, expanding what AI systems can do. Globose Technology Solutions (GTS.AI) combines expertise, innovation, and a commitment to inclusiveness to deliver dataset solutions that empower organizations and transform industries. Ready to take your AI venture to the next level? Visit GTS.AI to discover how we can help make your content work for everyone.


Storage as a Service (STaaS) Market Expected to Surge at a 16.4% CAGR from 2020 to 2030

According to Future industry Insights, the storage-as-a-service industry is expected to grow at a remarkable CAGR of 16.4% between 2020 and 2030. This prediction rests on the increasing ease of data syncing, sharing, collaboration, and accessibility across smartphones and other devices.
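The headline growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. This sketch indexes the 2020 market size to 1.0 as an illustrative assumption, because the report excerpt does not state absolute market values.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Grow start_value at a constant annual rate for the given number of years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Illustrative only: 2020 market indexed to 1.0, growing 16.4% a year to 2030.
    multiple = project(1.0, 0.164, 10)
    print(f"2030 market = {multiple:.2f}x the 2020 market")
    print(f"Implied CAGR: {cagr(1.0, multiple, 10):.3f}")
```

Sustained for ten years, a 16.4% CAGR implies a 2030 market roughly 4.6 times its 2020 size.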

In addition, Storage as a Service (STaaS) has grown rapidly in the last several years due to its ability to increase operational flexibility at lower operating costs. This increase is especially noticeable in every industry segment where cloud services have had an impact. Automation has notably increased productivity fourfold while lowering costs and improving service quality at the same time.

One of the key advantages of STaaS is its capacity to accommodate massive volumes of data in the cloud, removing the need for on-premises storage. As a result, businesses can free up local storage space, eliminate the need for extensive backup procedures, and achieve substantial savings on disaster recovery plans.

Key Takeaways of Storage as a Service Market Study

SMEs are expected to hold 74% of the market share in 2020, as adopting STaaS becomes essential to cutting infrastructure costs and maintaining business continuity.

The BFSI segment held a market share of 22% in 2019 and is expected to continue on a similar trend because banking is getting digitized even in rural clusters.

Moreover, South Asia & Pacific is projected to register a CAGR of 23% from 2020 to 2030 in the global STaaS market, as countries in the region undergo rapid digitalization across sectors.

Additionally, cloud computing and the shift to remote work are set to remain strong undercurrents of the booming STaaS market.

COVID-19 Impact Analysis on Storage as a Service Market

The COVID-19 pandemic accelerated the adoption of remote work, leading businesses to upgrade their technology infrastructure to maintain continuity, and future trends point to continued technology investment. SaaS is now recognized as a way to expand profit margins, driven by a focus on cost reduction. Before the pandemic, only 2.9% of employees worked remotely; since then, remote work has surged as companies reevaluate their operational strategies.

In 2018 and 2019, the market for storage as a service expanded by around 15% year over year. Because of the COVID-19 pandemic, the market is anticipated to grow by about 18%–20% between 2021 and 2023.

In the medium term, the storage as a service market may find it difficult to maintain this pace of growth due to budget concerns. Deteriorating profitability and weak sales growth are also key issues, and together these have led to significant losses for companies of all sizes.

Partnerships and Innovations to Drive Growth

The global Storage as a Service industry is being reshaped by a rapidly evolving technical landscape, shifting consumer expectations, and fierce competition. This is forcing solution providers to search continually for novel and affordable solutions. Partnerships and collaborations with digital solution providers can also help storage as a service suppliers grow their clientele and market share.

For instance, Pure Storage and SAP formed a partnership in March 2020 to provide customers with shared competency centres, technical support, and technological integrations in STaaS, intelligent enterprise, cloud computing, storage, and virtualization.

Storage as a Service Market: Segmentation

Service Type

Cloud NAS

SAN

Cloud Backup

Archiving

Enterprise Size

Small & Medium Enterprises

Large Enterprises

Industry

Media & Entertainment

Government

Healthcare

IT & Telecom

Manufacturing

Education

Others

Region

North America

Latin America

Europe

East Asia

South Asia Pacific

Middle East & Africa


Alex Yeh, Founder & CEO of GMI Cloud – Interview Series

New Post has been published on https://thedigitalinsider.com/alex-yeh-founder-ceo-of-gmi-cloud-interview-series/


Alex Yeh is the Founder and CEO of GMI Cloud, a venture-backed digital infrastructure company with the mission of empowering anyone to deploy AI effortlessly and simplifying how businesses build, deploy, and scale AI through integrated hardware and software solutions.

What inspired you to start GMI Cloud, and how has your background influenced your approach to building the company?

GMI Cloud was founded in 2021, focusing primarily in its first two years on building and operating data centers to provide Bitcoin computing nodes. Over this period, we established three data centers in Arkansas and Texas.

In June of last year, we noticed strong demand from investors and clients for GPU computing power. Within a month, we made the decision to pivot toward AI cloud infrastructure. AI's rapid development and the wave of new business opportunities it brings are either impossible to foresee or hard to describe. By providing the essential infrastructure, GMI Cloud aims to stay closely aligned with the exciting, and often unimaginable, opportunities in AI.

Before GMI Cloud, I was a partner at a venture capital firm, regularly engaging with emerging industries. I see artificial intelligence as the 21st century’s latest “gold rush,” with GPUs and AI servers serving as the “pickaxes” for modern-day “prospectors,” spurring rapid growth for cloud companies specializing in GPU computing power rental.

Can you tell us about GMI Cloud’s mission to simplify AI infrastructure and why this focus is so crucial in today’s market?

Simplifying AI infrastructure is essential because today's AI stack is complex and fragmented, which limits accessibility and efficiency for businesses trying to harness AI's potential. Today's AI setups often involve several disconnected layers, from data preprocessing and model training to deployment and scaling, that require significant time, specialized skills, and resources to manage effectively. Many companies spend weeks or even months identifying the best-fitting layers of AI infrastructure, which delays projects and hurts user experience and productivity. A simplified stack helps in several ways:

Accelerating Deployment: A simplified infrastructure enables faster development and deployment of AI solutions, helping companies stay competitive and adaptable to changing market needs.

Lowering Costs and Reducing Resources: By minimizing the need for specialized hardware and custom integrations, a streamlined AI stack can significantly reduce costs, making AI more accessible, especially for smaller businesses.

Enabling Scalability: A well-integrated infrastructure allows for efficient resource management, which is essential for scaling applications as demand grows, ensuring AI solutions remain robust and responsive at larger scales.

Improving Accessibility: Simplified infrastructure makes it easier for a broader range of organizations to adopt AI without requiring extensive technical expertise. This democratization of AI promotes innovation and creates value across more industries.

Supporting Rapid Innovation: As AI technology advances, less complex infrastructure makes it easier to incorporate new tools, models, and methods, allowing organizations to stay agile and innovate quickly.

GMI Cloud’s mission to simplify AI infrastructure is essential for helping enterprises and startups fully realize AI’s benefits, making it accessible, cost-effective, and scalable for organizations of all sizes.

You recently secured $82 million in Series A funding. How will this new capital be used, and what are your immediate expansion goals?

GMI Cloud will utilize the funding to open a new data center in Colorado and primarily invest in H200 GPUs to build an additional large-scale GPU cluster. GMI Cloud is also actively developing its own cloud-native resource management platform, Cluster Engine, which is seamlessly integrated with our advanced hardware. This platform provides unparalleled capabilities in virtualization, containerization, and orchestration.

GMI Cloud offers GPU access at 2x the speed compared to competitors. What unique approaches or technologies make this possible?

A key aspect of GMI Cloud's unique approach is leveraging its status as an NVIDIA Cloud Partner (NCP), which gives GMI Cloud priority access to GPUs and other cutting-edge resources. This direct procurement from manufacturers, combined with strong financing options, ensures cost-efficiency and a highly secure supply chain.

With NVIDIA H100 GPUs available across five global locations, how does this infrastructure support your AI customers’ needs in the U.S. and Asia?

GMI Cloud has strategically established a global presence, serving multiple countries and regions, including Taiwan, the United States, and Thailand, with a network of IDCs (Internet Data Centers) around the world. Currently, GMI Cloud operates thousands of NVIDIA Hopper-based GPU cards, and it is on a trajectory of rapid expansion, with plans to multiply its resources over the next six months. This geographic distribution allows GMI Cloud to deliver seamless, low-latency service to clients in different regions, optimizing data transfer efficiency and providing robust infrastructure support for enterprises expanding their AI operations worldwide.

Additionally, GMI Cloud’s global capabilities enable it to understand and meet diverse market demands and regulatory requirements across regions, providing customized solutions tailored to each locale’s unique needs. With a growing pool of computing resources, GMI Cloud addresses the rising demand for AI computing power, offering clients ample computational capacity to accelerate model training, enhance accuracy, and improve model performance for a broad range of AI projects.

As a leader in AI-native cloud services, what trends or customer needs are you focusing on to drive GMI’s technology forward?

From GPUs to applications, GMI Cloud drives intelligent transformation for customers, meeting the demands of AI technology development.

Hardware Architecture:

Physical Cluster Architecture: Clusters such as the 1,250-GPU H100 configuration include GPU racks, leaf racks, and spine racks, with optimized server and network equipment configurations that deliver high-performance computing power.

Network Topology Structure: Designed with efficient InfiniBand (IB) and Ethernet fabrics, ensuring smooth data transmission and communication.
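The interview does not state GMI Cloud's switch models or port counts. The following is therefore only a generic sketch of how leaf and spine counts relate in a non-blocking two-tier leaf-spine fabric built from identical switches, each with `switch_radix` ports (an assumed parameter). Clusters larger than this formula allows need an additional switching tier.

```python
def two_tier_leaf_spine(switch_radix: int) -> dict:
    """Size a non-blocking two-tier leaf-spine fabric of identical switches.

    Assumptions: each leaf uses half its ports for endpoints (downlinks) and
    half for uplinks, with exactly one link from every leaf to every spine.
    """
    if switch_radix < 2 or switch_radix % 2:
        raise ValueError("switch_radix must be an even number >= 2")
    half = switch_radix // 2
    spines = half                 # one uplink from each leaf to each spine
    leaves = switch_radix         # every spine port connects a different leaf
    return {
        "leaves": leaves,
        "spines": spines,
        "endpoints": leaves * half,          # downlink ports across all leaves
        "leaf_spine_links": leaves * spines,
    }


if __name__ == "__main__":
    print(two_tier_leaf_spine(64))
```

With hypothetical 64-port switches, this gives 64 leaves, 32 spines, and 2,048 endpoint ports. The fabric is non-blocking because every leaf has as many uplinks as downlinks.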

Software and Services:

Cluster Engine: Utilizing an in-house developed engine to manage resources such as bare metal, Kubernetes/containers, and HPC Slurm, enabling optimal resource allocation for users and administrators.

Proprietary Cloud Platform: Cluster Engine is a proprietary cloud management system that optimizes resource scheduling, providing a flexible and efficient cluster management solution.

Inference Engine Roadmap:

Continuous computing with a guaranteed high SLA.

Time-sharing for fractional usage.

Spot instances.

Consulting and Custom Services: Offers consulting, data reporting, and customized services such as containerization, model training recommendations, and tailored MLOps platforms.

Robust Security and Monitoring Features: Includes role-based access control (RBAC), user group management, real-time monitoring, historical tracking, and alert notifications.
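Role-based access control and user group management, as listed above, can be sketched in a few lines of Python. The roles, groups, and permission strings below are hypothetical examples for illustration, not Cluster Engine's actual permission model.

```python
from dataclasses import dataclass, field

# Hypothetical roles, groups, and permissions for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"cluster:read"},
    "developer": {"cluster:read", "job:submit"},
    "admin": {"cluster:read", "job:submit", "cluster:scale", "user:manage"},
}
GROUP_ROLES = {"ml-team": {"developer"}, "platform-ops": {"admin"}}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)   # roles assigned directly
    groups: set = field(default_factory=set)  # groups the user belongs to

    def effective_roles(self) -> set:
        """Direct roles plus every role inherited from the user's groups."""
        roles = set(self.roles)
        for group in self.groups:
            roles |= GROUP_ROLES.get(group, set())
        return roles


def is_allowed(user: User, permission: str) -> bool:
    """Grant access if any effective role carries the requested permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in user.effective_roles())


if __name__ == "__main__":
    alice = User("alice", groups={"ml-team"})
    print(is_allowed(alice, "job:submit"), is_allowed(alice, "cluster:scale"))
```

Granting permissions through groups rather than to individual users keeps the policy small and easy to audit as teams grow.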

In your opinion, what are some of the biggest challenges and opportunities for AI infrastructure over the next few years?

Challenges:

Scalability and Costs: As models grow more complex, maintaining scalability and affordability becomes a challenge, especially for smaller companies.

Energy and Sustainability: High energy consumption demands more eco-friendly solutions as AI adoption surges.

Security and Privacy: Data protection in shared infrastructures requires evolving security and regulatory compliance.

Interoperability: Fragmented tools in the AI stack complicate seamless deployment and integration; in fact, they complicate deploying any AI at all. We can now cut development time in half and reduce the headcount for an AI project by a factor of three.

Opportunities:

Edge AI Growth: AI processing closer to data sources offers latency reduction and bandwidth conservation.

Automated MLOps: Streamlined operations reduce the complexity of deployment, allowing companies to focus on applications.

Energy-Efficient Hardware: Innovations can improve accessibility and reduce environmental impact.

Hybrid Cloud: Infrastructure that operates across cloud and on-prem environments is well-suited for enterprise flexibility.

AI-Powered Management: Using AI to autonomously optimize infrastructure reduces downtime and boosts efficiency.

Can you share insights into your long-term vision for GMI Cloud? What role do you see it playing in the evolution of AI and AGI?

I want to build the AI of the internet. I want to build the infrastructure that powers the future across the world.

I want to create an accessible platform, akin to Squarespace or Wix, but for AI. Anyone should be able to build their own AI application.

In the coming years, AI will see substantial growth, particularly with generative AI use cases, as more industries integrate these technologies to enhance creativity, automate processes, and optimize decision-making. Inference will play a central role in this future, enabling real-time AI applications that can handle complex tasks efficiently and at scale. Business-to-business (B2B) use cases are expected to dominate, with enterprises increasingly focused on leveraging AI to boost productivity, streamline operations, and create new value. GMI Cloud’s long-term vision aligns with this trend, aiming to provide advanced, reliable infrastructure that supports enterprises in maximizing the productivity and impact of AI across their organizations.

As you scale operations with the new data center in Colorado, what strategic goals or milestones are you aiming to achieve in the next year?

As we scale operations with the new data center in Colorado, we are focused on several strategic goals and milestones over the next year. The U.S. stands as the largest market for AI and AI compute, making it imperative for us to establish a strong presence in this region. Colorado’s strategic location, coupled with its robust technological ecosystem and favorable business environment, positions us to better serve a growing client base and enhance our service offerings.

What advice would you give to companies or startups looking to adopt advanced AI infrastructure?

For startups focused on AI-driven innovation, the priority should be on building and refining their products, not spending valuable time on infrastructure management. Partner with trustworthy technology providers who offer reliable and scalable GPU solutions, avoiding providers who cut corners with white-labeled alternatives. Reliability and rapid deployment are critical; in the early stages, speed is often the only competitive moat a startup has against established players. Choose cloud-based, flexible options that support growth, and focus on security and compliance without sacrificing agility. By doing so, startups can integrate smoothly, iterate quickly, and channel their resources into what truly matters—delivering a standout product in the marketplace.

Thank you for the great interview. Readers who wish to learn more should visit GMI Cloud.


Artificial Intelligence Course in Nagercoil

Dive into the Future with Jclicksolutions’ Artificial Intelligence Course in Nagercoil

Artificial Intelligence (AI) is rapidly transforming industries, opening up exciting opportunities for anyone eager to explore its vast potential. From automating processes to enhancing decision-making, AI technologies are changing how we live and work. If you're looking to gain a foothold in this field, the Artificial Intelligence course at Jclicksolutions in Nagercoil is the ideal starting point. This course is carefully designed to cater to both beginners and those with a tech background, offering comprehensive training in the concepts, tools, and applications of AI.

Why Study Artificial Intelligence?

AI is no longer a concept of the distant future. It is actively shaping industries such as healthcare, finance, automotive, retail, and more. Skills in AI can lead to careers in data science, machine learning engineering, robotics, and even roles as AI strategists and consultants. By learning AI, you’ll be joining one of the most dynamic and impactful fields, making you a highly valuable asset in the job market.

Course Overview at Jclicksolutions

The Artificial Intelligence course at Jclicksolutions covers foundational principles as well as advanced concepts, providing a balanced learning experience. It is designed to ensure that students not only understand the theoretical aspects of AI but also gain hands-on experience with its practical applications. The curriculum includes modules on machine learning, data analysis, natural language processing, computer vision, and neural networks.

1. Comprehensive and Structured Curriculum

The course covers every critical aspect of AI, starting from the basics and gradually moving into advanced topics. Students begin by learning fundamental concepts like data pre-processing, statistical analysis, and supervised vs. unsupervised learning. As they progress, they delve deeper into algorithms, decision trees, clustering, and neural networks. The course also includes a segment on deep learning, enabling students to explore areas like computer vision and natural language processing, which are essential for applications in image recognition and AI-driven communication.

2. Hands-On Learning with Real-World Projects

One of the standout features of the Jclicksolutions AI course is its emphasis on hands-on learning. Rather than just focusing on theoretical knowledge, the course is structured around real-world projects that allow students to apply what they’ve learned. For example, students might work on projects that involve creating machine learning models, analyzing large datasets, or designing AI applications for specific business problems. By working on these projects, students gain practical experience, making them job-ready upon course completion.

3. Experienced Instructors and Personalized Guidance

The instructors at Jclicksolutions are industry experts with years of experience in AI and machine learning. They provide invaluable insights, sharing real-life case studies and offering guidance based on current industry practices. With small class sizes, each student receives personalized attention, ensuring they understand complex topics and receive the support needed to build confidence in their skills.

4. Cutting-Edge Tools and Software

The AI course at Jclicksolutions also familiarizes students with the latest tools and platforms used in the industry, including Python, TensorFlow, and Keras. These tools are essential for building, training, and deploying AI models. Students learn how to use Jupyter notebooks for coding, experiment with datasets, and create data visualizations that reveal trends and patterns. By the end of the course, students are proficient in these tools, positioning them well for AI-related roles.

Career Opportunities after Completing the AI Course

With the knowledge gained from this AI course, students can pursue various roles in the tech industry. AI professionals are in demand across sectors such as healthcare, finance, retail, and technology. Here are some career paths open to graduates of the Jclicksolutions AI course:

Machine Learning Engineer: Design and develop machine learning systems and algorithms.

Data Scientist: Extract meaningful insights from data and help drive data-driven decision-making.

AI Consultant: Advise businesses on implementing AI strategies and solutions.

Natural Language Processing Specialist: Work on projects involving human-computer interaction, such as chatbots and voice recognition systems.

Computer Vision Engineer: Focus on image and video analysis for industries like automotive and healthcare.

To support students in their career journey, Jclicksolutions also offers assistance in building portfolios, resumes, and interview preparation, helping students transition from learning to employment.

Why Choose Jclicksolutions?

Located in Nagercoil, Jclicksolutions is known for its commitment to delivering high-quality tech education. The institute stands out for its strong focus on practical training, a supportive learning environment, and a curriculum that aligns with industry standards. Students benefit from a collaborative atmosphere, networking opportunities, and mentorship that goes beyond the classroom. This hands-on, project-based approach makes Jclicksolutions an excellent choice for those looking to make a mark in AI.

Enroll Today and Join the AI Revolution

AI is a transformative field that is reshaping industries and creating new opportunities. By enrolling in the Artificial Intelligence course at Jclicksolutions in Nagercoil, you’re setting yourself up for a promising future in tech. This course is more than just an educational program; it's a gateway to a career filled with innovation and possibilities. Whether you’re a beginner or an experienced professional looking to upskill, Jclicksolutions offers the resources, knowledge, and support to help you succeed.



Unlocking the Power of Generative AI in Retail: From High Investments to Collaborative Innovation

Generative AI is reshaping sectors from proptech to real estate and renovation startups like Allreno. Advanced AI models, including GANs, VAEs, and transformer-based architectures like GPT-4, excel at creating realistic, new content by learning from vast datasets. This potential, however, comes with costs—training large models requires significant investment in computing resources, extensive datasets, and specialized expertise.

For smaller businesses and startups, these barriers may seem daunting. However, with open-source models and pre-trained frameworks, generative AI technology is increasingly accessible. By leveraging cloud-based services, even businesses with limited budgets can apply advanced AI models. Retailers and startups like Allreno can collaborate with academic institutions or technology partners to bypass the need for large in-house infrastructure and still benefit from generative AI.

In the retail and real estate industries, businesses can achieve impactful results by focusing on domain-specific applications of generative AI. For example, a mid-sized fashion retailer could develop a model that designs clothing based on trends and customer data. Similarly, Allreno can apply AI to offer customers customizable virtual designs for bathroom vanities and home renovations, making the design process more interactive and tailored to individual preferences.

Generative AI also enables unique opportunities for collaborative innovation. By pooling resources, smaller businesses can form AI collectives, creating shared models more robust than what each could achieve individually. This approach allows independent retailers, from bookstores to renovation firms, to gain access to AI-driven insights and tailored recommendations that would otherwise be costly to develop alone.

Consider a real estate company or renovation startup like Allreno using AI to produce 3D architectural designs. These models can be repurposed for virtual tours in real estate or for real-time product customization in retail, enhancing the customer experience. The cross-industry potential of generative AI enables businesses to innovate in ways that resonate directly with their audience and create new pathways for customer engagement.

Digital clusters of businesses, each with a focus on generative AI, promote shared knowledge and reduce redundancy. This type of collaborative innovation accelerates technology adoption, ensuring companies can compete in rapidly evolving markets. Through these partnerships, businesses in proptech, retail, and beyond can streamline operations, boost efficiency, and build unique value for customers.

In conclusion, while developing and maintaining generative AI models requires resources, strategic adoption can make this technology accessible. Through targeted investments and a focus on domain-specific applications, companies like Allreno are proving that generative AI is within reach, enhancing both operational capabilities and customer experiences. As these advancements take hold, they set the stage for a future where retail and related sectors are defined by creativity, innovation, and personalized customer engagement.

Tags: ai design, bathroom renovation, renovation, bathroom design, interior design


Machine Learning Projects Tailored for Intermediate-Level Practitioners

Introduction:

Datasets are the foundation of machine learning projects, and their quality largely determines whether a model is accurate and dependable. Whether the focus is computer vision, natural language processing, or predictive analytics, choosing an appropriate dataset can greatly influence a project's success. This article examines the sources and categories of datasets most frequently used in ML initiatives.

The Significance of Datasets in Machine Learning

Datasets form the cornerstone of any machine learning model. The effectiveness of a model in generalizing to new data is contingent upon the quality, size, and diversity of the dataset. When selecting a dataset, several critical factors should be taken into account:

Relevance: The dataset must correspond to the specific problem being addressed.

Size: Generally, larger datasets contribute to enhanced model performance.

Cleanliness: Datasets should be devoid of errors and missing information.

Balanced Representation: Mitigating bias is essential for ensuring equitable model predictions.
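The cleanliness criterion can be checked mechanically before training. Here is a minimal sketch. It assumes that rows are dicts parsed from a CSV file and that a blank string or `None` counts as a missing value.

```python
def audit_dataset(rows, required_fields):
    """Count missing values per required field and exact duplicate rows.

    rows is a list of dicts (for example from csv.DictReader); a field counts as
    missing when it is absent, None, or an empty string. Values must be hashable
    so that duplicate rows can be detected.
    """
    missing = {name: 0 for name in required_fields}
    seen, duplicates = set(), 0
    for row in rows:
        for name in required_fields:
            if row.get(name) is None or row.get(name) == "":
                missing[name] += 1
        key = tuple(sorted(row.items()))  # order-independent row fingerprint
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}


if __name__ == "__main__":
    sample = [
        {"age": "34", "city": "Oslo"},
        {"age": "", "city": "Lima"},
        {"age": "34", "city": "Oslo"},
    ]
    print(audit_dataset(sample, ["age", "city"]))
```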

Types of Datasets Used in Machine Learning

Datasets can be classified into various types based on their applications:

Structured Datasets: These consist of systematically organized data presented in tabular formats (e.g., CSV files, SQL databases).

Unstructured Datasets: This category includes images, audio, video, and text data that necessitate further processing.

Labeled Datasets: Each data point is accompanied by a label, making them suitable for supervised learning applications.

Unlabeled Datasets: These datasets lack labels and are often employed in unsupervised learning tasks such as clustering.

Synthetic Datasets: These are artificially created datasets that mimic real-world conditions.
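Synthetic datasets are often generated from simple statistical models. This sketch draws labeled 2-D points from Gaussian blobs using only the standard library. The centers, spread, and seed are arbitrary assumptions. scikit-learn offers a similar generator, `sklearn.datasets.make_blobs`.

```python
import random


def make_synthetic_blobs(n_per_class, centers, spread=1.0, seed=0):
    """Generate labeled 2-D points from Gaussian blobs, one blob per center.

    Each center's index becomes the class label, so the output is ready for
    supervised learning; drop the labels to use it for clustering instead.
    """
    rng = random.Random(seed)  # seeded so the dataset can be regenerated exactly
    points, labels = [], []
    for label, (cx, cy) in enumerate(centers):
        for _ in range(n_per_class):
            points.append((rng.gauss(cx, spread), rng.gauss(cy, spread)))
            labels.append(label)
    return points, labels


if __name__ == "__main__":
    pts, ys = make_synthetic_blobs(3, centers=[(0, 0), (5, 5)], spread=0.5)
    for p, y in zip(pts, ys):
        print(f"{p[0]:6.2f} {p[1]:6.2f} -> class {y}")
```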

Categories of Datasets in Machine Learning

Machine learning datasets can be classified into various types based on their characteristics and applications:

1. Structured and Unstructured Datasets

Structured Data: Arranged in organized formats such as CSV files, SQL databases, and spreadsheets.

Unstructured Data: Comprises text, images, videos, and audio that do not conform to a specific format.

2. Supervised and Unsupervised Datasets

Supervised Learning Datasets: Consist of labeled data utilized for tasks involving classification and regression.

Unsupervised Learning Datasets: Comprise unlabeled data employed for clustering and anomaly detection.

Semi-supervised Learning Datasets: Combine both labeled and unlabeled data.

3. Small and Large Datasets

Small Datasets: Suitable for prototyping and preliminary experiments.

Large Datasets: Extensive datasets that necessitate considerable computational resources.

Popular Sources for Machine Learning Datasets

1. Google Dataset Search

Google Dataset Search facilitates the discovery of publicly accessible datasets sourced from a variety of entities, including research institutions and governmental organizations.

2. AWS Open Data Registry

The Registry of Open Data on AWS provides access to large public datasets, which is especially convenient for machine learning projects that run in the AWS cloud.

3. Image and Video Datasets

ImageNet (for image classification and object recognition)

COCO (Common Objects in Context) (for object detection and segmentation)

Open Images Dataset (a varied collection of labeled images)

4. NLP Datasets

Wikipedia Dumps (a text corpus suitable for NLP applications)

Stanford Sentiment Treebank (for sentiment analysis)

SQuAD (Stanford Question Answering Dataset) (designed for question-answering systems)

5. Time-Series and Finance Datasets

Yahoo Finance (providing stock market information)

Quandl, now Nasdaq Data Link (offering economic and financial datasets)

Google Trends (tracking public interest over time)

6. Healthcare and Medical Datasets

MIMIC-III (data related to critical care)

NIH Chest X-rays (a dataset for medical imaging)

PhysioNet (offering physiological and clinical data)

Guidelines for Selecting an Appropriate Dataset

Understand Your Problem Statement: Determine whether your task requires structured or unstructured data.

Verify Licensing and Usage Permissions: Confirm that the dataset's license permits your intended use, for example commercial use.

Prepare and Clean the Data: Real-world data usually needs cleaning and transformation before model training.

Consider Data Augmentation: In scenarios with limited data, augmenting the dataset can improve model performance.
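The cleaning and augmentation steps above can be sketched for a small tabular dataset as follows. The records, the cleaning rules (drop unparseable or duplicate rows), and the noise scale are all illustrative assumptions; which rules are appropriate depends on your data, and for images, augmentations such as flips and crops are more typical than added noise.

```python
import random

# Hypothetical raw records; real data would come from a file or database.
raw = [
    {"age": "34", "income": "52000"},
    {"age": "", "income": "61000"},      # missing age
    {"age": "34", "income": "52000"},    # exact duplicate
    {"age": "29", "income": "n/a"},      # non-numeric income
    {"age": "41", "income": "75000"},
]

def clean(records):
    """Drop rows with missing/non-numeric fields and exact duplicates; cast to float."""
    seen = set()
    out = []
    for r in records:
        try:
            row = (float(r["age"]), float(r["income"]))
        except ValueError:
            continue  # "" or "n/a" cannot be parsed as a number
        if row in seen:
            continue
        seen.add(row)
        out.append(row)
    return out

def augment_with_noise(rows, copies=2, scale=0.01, seed=0):
    """Append jittered copies of numeric rows (simple noise-based augmentation)."""
    rng = random.Random(seed)
    augmented = list(rows)
    for row in rows:
        for _ in range(copies):
            augmented.append(tuple(v * (1 + rng.gauss(0, scale)) for v in row))
    return augmented

cleaned = clean(raw)
print(cleaned)                            # [(34.0, 52000.0), (41.0, 75000.0)]
print(len(augment_with_noise(cleaned)))   # 6
```

Augmentation should only be applied to the training split; augmenting evaluation data would make results look better than they are.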

Conclusion

Choosing the appropriate dataset is vital to the success of any machine learning project. With so many freely available datasets, developers and researchers can build robust AI models across many fields. Whatever your experience level, the key is to select a dataset that fits your project objectives while maintaining quality and fairness.

Are you in search of datasets to enhance your machine learning project? Explore Globose Technology Solutions for a selection of curated AI datasets tailored to your requirements!


What Role Does Generative AI Play in Personalizing Sales Approaches?

In the rapidly evolving landscape of sales and marketing, technology is turning traditional methods into more sophisticated, data-driven approaches. Among the most significant advances is Generative Artificial Intelligence (AI), a technology that not only extends automation but also redefines personalization in sales strategies. By using Generative AI, businesses can better understand their customers, tailor their approaches, and ultimately drive higher conversion rates and customer satisfaction. This post explores the role of Generative AI in personalizing sales approaches, along with its applications, benefits, challenges, and future prospects.

Understanding Generative AI

Generative AI refers to a subset of artificial intelligence models that can create new content based on existing data. Unlike traditional AI models that typically analyze data or make predictions, Generative AI can generate text, images, music, and even code, mimicking human creativity. Models such as OpenAI's GPT-3 and GPT-4 and Google's PaLM and Gemini, along with various generative design algorithms, have ushered in a new era of machine learning in which computers can produce original work and contribute meaningfully to many tasks, including sales and marketing.

The Shift Towards Personalization in Sales

In recent years, personalization has become a cornerstone of effective sales strategies. The shift from one-size-fits-all approaches to tailored experiences is driven by the understanding that customers have unique needs, preferences, and behaviors. Personalized sales approaches foster deeper connections between businesses and consumers, enhancing customer loyalty and increasing the likelihood of conversion. However, achieving true personalization can be challenging due to the vast amounts of data generated by customers and the complexities involved in analyzing that data effectively.

How Generative AI Enhances Personalization

1. Data Analysis and Customer Insights

Generative AI, typically working alongside conventional machine learning models, can help process and analyze large datasets quickly. With these tools, businesses can gain insights into customer behavior, preferences, and pain points. Here are several ways AI contributes to data analysis:

Predictive Analytics: Generative AI can forecast customer behavior by identifying patterns in historical data, allowing sales teams to anticipate needs and tailor their approaches accordingly.

Segmentation: By clustering customers into different segments based on their characteristics and behavior, businesses can create targeted marketing strategies that resonate with specific audiences.

Sentiment Analysis: Generative AI can analyze customer feedback and reviews to gauge sentiment, providing insights into customer satisfaction and areas for improvement.
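As an illustration of segmentation, here is a minimal k-means clustering sketch in plain Python. Note that k-means is a classic, non-generative machine learning technique that is often used alongside Generative AI rather than being generative itself. The two features (annual spend in thousands and orders per year) and the customer values are hypothetical.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: returns (centroids, assignments) for a list of 2-D points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct customers
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments

# Hypothetical customers: (annual spend in $1000s, orders per year)
customers = [(1.0, 2), (1.2, 3), (0.8, 2), (9.5, 20), (10.0, 22), (8.7, 19)]
centroids, labels = kmeans(customers, k=2)
print(labels)  # first three share one segment id, last three the other
```

In practice, features should be scaled to comparable ranges before clustering, otherwise the feature with the largest values dominates the distance.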

2. Personalized Content Creation

One of the standout features of Generative AI is its ability to create personalized content. This capability can significantly enhance sales strategies through:

Tailored Messaging: AI can generate customized email templates, chat responses, and social media posts that align with individual customer preferences, improving engagement and response rates.

Dynamic Content: Generative AI can adapt content in real-time based on customer interactions. For example, an AI-driven chatbot can alter its responses based on the user’s previous inquiries and preferences, ensuring a more relevant experience.

Content Variability: Instead of relying on generic marketing materials, businesses can produce multiple versions of sales pitches or marketing content tailored to different audience segments, maximizing the chances of capturing attention.
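Tailored messaging with a text-generation model usually starts with a prompt that carries customer context. The sketch below assumes a hypothetical `Customer` record and `build_email_prompt` helper; it only assembles the prompt, and the call to an actual model is left as a commented placeholder because the client API depends on the provider you use.

```python
from dataclasses import dataclass, field

@dataclass
class Customer:
    # Hypothetical CRM fields; a real system would map its own schema here.
    name: str
    segment: str
    recent_purchases: list[str] = field(default_factory=list)
    preferred_tone: str = "friendly"

def build_email_prompt(customer: Customer, product: str) -> str:
    """Assemble a personalized instruction for a text-generation model."""
    history = ", ".join(customer.recent_purchases) or "no recent purchases"
    return (
        f"Write a short sales email to {customer.name}, a customer in the "
        f"'{customer.segment}' segment, in a {customer.preferred_tone} tone.\n"
        f"Recent purchases: {history}.\n"
        f"Introduce {product} and explain how it relates to what they already own.\n"
        "Keep it under 120 words and end with one clear call to action."
    )

alice = Customer("Alice", "small business", ["laptop stand", "USB-C hub"])
prompt = build_email_prompt(alice, "a wireless docking station")
print(prompt)
# text = generate(prompt)  # `generate` is a hypothetical model client call
```

Building one prompt per audience segment is a simple way to produce the multiple content variants described above.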

3. Enhanced Customer Interactions

Generative AI plays a crucial role in facilitating more meaningful customer interactions:

AI-Powered Chatbots: Chatbots powered by Generative AI can hold natural conversations with customers, answering queries and guiding them through the sales process. These bots can be improved over time by using logged interactions and customer feedback to refine their prompts or retrain the underlying model, so their responses and recommendations get better.

Virtual Sales Assistants: Generative AI can assist sales representatives by providing them with real-time data and insights during customer interactions. For instance, a virtual assistant could suggest personalized product recommendations based on the customer’s purchase history and preferences.

Voice and Tone Customization: Generative AI can help sales teams adjust their communication style according to the customer’s preferences. By analyzing past interactions, AI can recommend whether a more formal or casual tone would be more effective for engagement.
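Chatbots generally appear context-aware because recent conversation turns are sent back to the model with every request. This minimal sketch keeps a bounded history with `collections.deque`; the class name, turn limit, and prompt layout are assumptions, and `generate` is a hypothetical model call. It only covers context within one conversation; improving the bot across conversations requires separate feedback or retraining.

```python
from collections import deque

class ConversationMemory:
    """Keeps the most recent chat turns so each model call can see prior context."""

    def __init__(self, max_turns: int = 6):
        # A deque with maxlen drops the oldest turn automatically once full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self, system_note: str) -> str:
        """Render the instructions plus recent turns as a single prompt string."""
        lines = [system_note]
        lines += [f"{role}: {text}" for role, text in self.turns]
        lines.append("assistant:")  # cue the model to write the next reply
        return "\n".join(lines)

memory = ConversationMemory(max_turns=4)
memory.add("user", "Do you ship to Canada?")
memory.add("assistant", "Yes, we ship to Canada.")
memory.add("user", "Which of your backpacks are waterproof?")
prompt = memory.as_prompt("You are a helpful sales assistant for an outdoor store.")
print(prompt)
# reply = generate(prompt)        # hypothetical model client
# memory.add("assistant", reply)  # store the reply for the next turn
```

Bounding the history keeps prompts within the model's context limit; longer-lived preferences would need to be stored separately, for example in the CRM.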

4. Tailored Product Recommendations

Generative AI's ability to analyze customer data enables it to make highly personalized product recommendations:

Recommendation Engines: By processing data on customer preferences, past purchases, and browsing history, Generative AI can suggest products that align with individual tastes. This capability is particularly effective in e-commerce, where personalized product recommendations can drive higher conversion rates.

Cross-Selling and Upselling: AI can identify opportunities for cross-selling or upselling by understanding the customer's journey and interests, making it easier for sales teams to present relevant offers during their interactions.
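A simple, non-generative baseline for recommendations and cross-selling is to count which products are bought together. The sketch below does that with `collections.Counter` and `itertools.combinations`; the order data is invented, and a production recommendation engine would typically use richer signals such as browsing history. A generative model could then be used to phrase the suggestion for the customer.

```python
from collections import Counter
from itertools import combinations

def build_cooccurrence(orders):
    """Count how often each pair of products appears in the same order."""
    pairs = Counter()
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            pairs[(a, b)] += 1
            pairs[(b, a)] += 1  # store both directions for easy lookup
    return pairs

def recommend(product, pairs, top_n=2):
    """Return the products most often bought together with `product`."""
    scores = Counter({b: n for (a, b), n in pairs.items() if a == product})
    return [item for item, _ in scores.most_common(top_n)]

# Hypothetical order history
orders = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["laptop", "mouse"],
    ["laptop", "laptop bag", "monitor"],
    ["mouse", "mouse pad"],
]
pairs = build_cooccurrence(orders)
print(recommend("laptop", pairs))  # ['mouse', 'laptop bag']
```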

Benefits of Using Generative AI in Sales Personalization

1. Increased Efficiency

Generative AI automates various tasks involved in sales personalization, allowing sales teams to focus on building relationships and closing deals. By streamlining processes like data analysis and content generation, businesses can operate more efficiently.

2. Improved Customer Experience

Personalized sales approaches lead to better customer experiences. When customers receive relevant information and offers, they feel valued and understood, resulting in higher satisfaction levels and loyalty.

3. Higher Conversion Rates

Targeted content and recommendations generated by AI can improve conversion rates. When potential customers encounter personalized messaging that addresses their needs, they are more likely to engage and make purchases.
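Whether personalization actually lifts conversions is worth measuring on your own traffic. One standard approach is an A/B test evaluated with a two-proportion z-test, sketched below using only the standard library. The send and conversion counts are hypothetical, and the normal approximation assumes reasonably large samples.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variant A (control) and B (personalized).

    Returns (rate_a, rate_b, z, two_sided_p) using the pooled-proportion z-test.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a, p_b, z, p_value

# Hypothetical results: 10,000 control emails vs. 10,000 personalized emails
rate_a, rate_b, z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"control {rate_a:.1%}, personalized {rate_b:.1%}, z={z:.2f}, p={p:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone, but the test says nothing about whether the lift is large enough to justify the cost.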

4. Cost-Effectiveness

By automating repetitive tasks and optimizing sales processes, Generative AI can reduce costs associated with manual labor. This efficiency can lead to better allocation of resources and improved ROI on marketing efforts.

Challenges in Implementing Generative AI for Sales Personalization

1. Data Privacy Concerns

As businesses collect and analyze vast amounts of customer data, they must navigate privacy regulations such as the GDPR and CCPA, as well as ethical considerations. Striking the right balance between personalization and privacy is essential to maintaining customer trust.
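One common way to reduce privacy risk is to minimize and pseudonymize customer data before it reaches an AI system. The sketch below replaces the customer ID with a keyed HMAC-SHA256 token and drops direct identifiers; the field names and key are hypothetical. Pseudonymized data can still count as personal data under regulations such as the GDPR, so treat this as a risk-reduction step, not a compliance guarantee.

```python
import hashlib
import hmac

# Hypothetical secret; in practice load it from a secrets manager, never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

def pseudonymize(customer_id: str) -> str:
    """Map a customer ID to a stable token that cannot be linked back without the key."""
    return hmac.new(PSEUDONYM_KEY, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()

def redact_record(record: dict) -> dict:
    """Keep only fields needed for personalization and replace the direct identifier."""
    return {
        "customer": pseudonymize(record["customer_id"]),
        "segment": record["segment"],
        "recent_categories": record["recent_categories"],
        # email and name are deliberately dropped
    }

record = {"customer_id": "C-1042", "email": "a@example.com", "name": "Alice",
          "segment": "small business", "recent_categories": ["accessories"]}
print(redact_record(record))
```

Using a keyed HMAC rather than a plain hash makes it harder for someone without the key to rebuild the mapping by hashing guessed IDs.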

2. Integration with Existing Systems

Implementing Generative AI solutions may require significant changes to existing sales and marketing systems. Businesses must ensure seamless integration to avoid disruptions and maximize the benefits of AI technologies.

3. Quality of Generated Content

While Generative AI is powerful, it is not infallible. Ensuring the quality and relevance of the content generated by AI is crucial. Businesses should have mechanisms in place to review and refine AI-generated materials to maintain brand integrity.
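A review mechanism can start with automated checks that run before a human looks at the draft. The rules below (banned phrases, a word limit, the customer's name, unfilled placeholders) are hypothetical examples of brand guidelines, not a standard; they catch obvious problems but do not replace human review.

```python
import re

# Hypothetical brand rules; each team would maintain its own lists.
BANNED_PHRASES = ["guaranteed results", "risk-free", "act now"]
MAX_WORDS = 150

def review_generated_text(text: str, customer_name: str) -> list[str]:
    """Return a list of problems; an empty list means the draft passed these checks."""
    problems = []
    lowered = text.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            problems.append(f"banned phrase: {phrase!r}")
    word_count = len(text.split())
    if word_count > MAX_WORDS:
        problems.append(f"too long: {word_count} words (limit {MAX_WORDS})")
    if customer_name not in text:
        problems.append("customer name missing")
    # Catch leftovers like {{first_name}} or [FIRST_NAME]
    if re.search(r"\{\{.*?\}\}|\[[A-Z_]+\]", text):
        problems.append("unfilled template placeholder")
    return problems

draft = "Hi [FIRST_NAME], our new dock comes with guaranteed results."
print(review_generated_text(draft, "Alice"))
```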

4. Continuous Learning and Adaptation

Generative AI models require ongoing training and adaptation to remain effective. Businesses must invest in maintaining and updating their AI systems to keep pace with changing customer preferences and market dynamics.

The Future of Generative AI in Sales Personalization

As technology continues to evolve, the role of Generative AI in sales personalization is likely to expand further. Several trends are emerging that could shape the future landscape:

1. Enhanced Predictive Capabilities

Generative AI is likely to offer more advanced predictive analytics, allowing businesses to anticipate customer needs more accurately and tailor their sales strategies accordingly. This could enable hyper-personalized experiences that adapt in real time.

2. Greater Integration of AI with Human Intelligence

While AI will play an increasingly prominent role in sales, the need for human intelligence will remain. The future will likely see a hybrid approach where AI tools assist sales representatives in making informed decisions while maintaining the human touch in interactions.

3. Evolution of Customer Experience

Generative AI will continue to reshape customer experiences by creating more immersive and interactive environments. Businesses could leverage AI to create virtual showrooms, personalized video messages, or even AI-driven virtual sales representatives, making customer interactions more engaging and effective.

4. Ethical Considerations

As the use of AI in sales personalization grows, so will the focus on ethical considerations. Companies will need to establish transparent policies regarding data usage and AI-generated content, ensuring that they prioritize customer trust and privacy.

Conclusion

Generative AI is revolutionizing the way businesses approach sales personalization. By leveraging its capabilities in data analysis, content creation, and customer interaction, organizations can create tailored experiences that resonate with individual customers. The benefits of increased efficiency, improved customer experiences, and higher conversion rates underscore the significance of adopting Generative AI in sales strategies. However, challenges such as data privacy and content quality must be navigated carefully to maximize the potential of this technology. As we look to the future, the integration of Generative AI in sales is set to evolve, promising even more innovative approaches to personalizing sales experiences and enhancing customer relationships. Embracing these advancements will be essential for businesses seeking to thrive in a competitive landscape driven by technology and customer-centricity.
