#Container and Kubernetes Security

The global container and Kubernetes security market reached US$ 1,346.0 million in 2023. Looking forward, IMARC Group expects the market to reach US$ 8,137.1 million by 2032, a compound annual growth rate (CAGR) of 21.47% during 2024-2032. The rising prevalence of data breaches, growing demand for container and Kubernetes security, technological advancements, and favorable government policies are among the major factors propelling the market.

Container and Kubernetes Security Market Report, Market Size, Share, Trends, Analysis By Forecast Period

The 2024 Container and Kubernetes Security Market Report offers a comprehensive overview of the industry, summarizing key findings on market size, growth projections, and major trends. It segments the market by region, type, and product, with targeted analysis for strategic guidance. The report also evaluates industry dynamics, highlighting growth drivers, challenges, and opportunities. Key stakeholders will benefit from the SWOT and PESTLE analyses, which provide insights into competitive strengths, vulnerabilities, opportunities, and threats across regions and industry segments.

According to Straits Research, the global Container and Kubernetes Security Market was valued at USD 1,510.01 million in 2023. It is projected to grow from USD 1,907.14 million in 2024 to USD 12,348.3 million by 2032, a CAGR of 26.3% during the forecast period (2024–2032).
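The growth rates quoted above follow the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. As a quick sanity check, the minimal Python sketch below applies it to the Straits Research figures, assuming 2024 is the base year and 2032 the end year (eight compounding periods); under that assumption it reproduces the quoted 26.3%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: 2024 is the base year and 2032 the end year (8 compounding periods).
rate = cagr(1907.14, 12348.3, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

Running it prints `Implied CAGR: 26.3%`.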

New Features in the 2024 Report:

Expanded Industry Overview: A more detailed and comprehensive examination of the industry.

In-Depth Company Profiles: Enhanced profiles offering extensive information on key market players.

Customized Reports and Analyst Assistance: Tailored reports and direct access to analyst support are available on request.

Container and Kubernetes Security Market Insights: Analysis of the latest market developments and upcoming growth opportunities.

Regional and Country-Specific Reports: Personalized reports focused on specific regions and countries to meet your unique requirements.

Detailed table of contents of the Container and Kubernetes Security Market report: https://straitsresearch.com/report/container-and-kubernetes-security-market/toc

Report Structure

Economic Impact: Analysis of the economic effects on the industry.

Production and Opportunities: Examination of production processes, business opportunities, and potential.

Trends and Technologies: Overview of emerging trends, new technologies, and key industry players.

Cost and Market Analysis: Insights into manufacturing costs, marketing strategies, regional market shares, and market segmentation by type and application.

Request a free sample (full report starting from USD 995): https://straitsresearch.com/report/container-and-kubernetes-security-market/request-sample

Regional Analysis for Container and Kubernetes Security Market:

North America: The leading region in the Container and Kubernetes Security Market, driven by technological advancements, high consumer adoption rates, and favorable regulatory conditions. The United States and Canada are the main contributors to the region's robust growth.

Europe: Experiencing steady growth in the Container and Kubernetes Security Market, supported by stringent regulations, a strong focus on sustainability, and increased R&D investments. Key countries driving this growth include Germany, France, the United Kingdom, and Italy.

Asia-Pacific: The fastest-growing regional market, with significant growth due to rapid industrialization, urbanization, and a rising middle class. China, India, Japan, and South Korea are pivotal markets fueling this expansion.

Latin America, Middle East, and Africa: Emerging as growth regions for the Container and Kubernetes Security Market, with increasing demand driven by economic development and improved infrastructure. Key countries include Brazil and Mexico in Latin America; Saudi Arabia, the UAE, and South Africa in the Middle East and Africa.

Top Key Players in the Container and Kubernetes Security Market:

Alert Logic

Aqua Security

Capsule8

CloudPassage

NeuVector

Qualys

Trend Micro

Twistlock

StackRox

Sysdig

Container and Kubernetes Security Market Segmentation:

By Product

Cloud

On-Premises

By Component

Container Security Platform

Services

By Organizational Size

Small and Medium Enterprises

Large Enterprises

By Industry Vertical

BFSI

Retail and Consumer Goods

Healthcare and Life Science

Manufacturing

IT and Telecommunication

Government and Public Sector

Others

Get detailed market segmentation: https://straitsresearch.com/report/container-and-kubernetes-security-market/segmentation

FAQs Answered in the Container and Kubernetes Security Market Research Report

What recent brand-building initiatives have key players undertaken to enhance customer value in the Container and Kubernetes Security Market?

Which companies have broadened their focus by engaging in long-term societal initiatives?

Which firms have successfully navigated the challenges of the pandemic, and what strategies have they adopted to remain resilient?

What are the global trends in the Container and Kubernetes Security Market, and will demand increase or decrease in the coming years?

Where will strategic developments lead the industry in the mid to long term?

What factors influence the final price of Container and Kubernetes Security solutions?

How significant is the growth opportunity for the Container and Kubernetes Security Market, and how will increasing adoption in mining affect the market's growth rate?

What recent industry trends can be leveraged to create additional revenue streams?

Scope

Impact of COVID-19: This section analyzes both the immediate and long-term effects of COVID-19 on the industry, offering insights into the current situation and future implications.

Industry Chain Analysis: Explores how the pandemic has disrupted the industry chain, with a focus on changes in marketing channels and supply chain dynamics.

Impact of the Middle East Crisis: Assesses the impact of the ongoing Middle East crisis on the market, examining its influence on industry stability, supply chains, and market trends.

This report is available for purchase at https://straitsresearch.com/buy-now/container-and-kubernetes-security-market

About Us:

Straits Research is a leading research and intelligence organization, specializing in research, analytics, and advisory services along with providing business insights & research reports.

Contact Us:

Address: 825 3rd Avenue, New York, NY, USA, 10022

Tel: +1 646 905 0080 (U.S.) +91 8087085354 (India) +44 203 695 0070 (U.K.)

10 Best Docker Containers for Security in 2024

Many people now choose to run workloads in containers rather than in virtual machine instances. There are many great Docker containers to choose from for different use cases and applications. There are also excellent container security tool images and solutions we can use for Docker security, for securing Docker container image configurations, and for giving visibility to…

Day three of the tech convention. The last time I was at this exhibition hall, it was Comic-Con. Now it is full of cloud computing geeks, and I’m having to physically dodge out of the way of people helping run AI workflows (which, annoyingly, I do care about, because my job involves services that do image analysis using LLM-based systems, but the fact that your booth has enough AI art to drain a small lake does not make me interested in your company).

On an empty table south of the main hall, the roar of five thousand nerds becomes the white noise of the ocean, with only the sounds of a nearby tennis game (I’ve no idea why the booth for a Kubernetes container security service has built a full-sized tennis court in the hall, and I’m afraid they might tell me if I ask) disrupting its swell. I am adrift in a sea of humanity, and now I go to a talk on “A twelve factor approach to workload identification”. I hope one of the factors is coffee.

It’s not. But hope keeps us moving.

One day more.

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with online Microsoft Azure training, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory (now Microsoft Entra ID) and offers role-based access control (RBAC).
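The post does not say how AKS scaled individual services. One common mechanism is the Kubernetes Horizontal Pod Autoscaler (HPA), which, per the Kubernetes documentation, computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue) and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that calculation, clamped to assumed minimum and maximum replica counts; it is an illustration, not the controller's actual code.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count per the HPA scaling formula, clamped to [min_replicas, max_replicas].

    min_replicas, max_replicas, and tolerance are assumed example values.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three replicas running at 90% CPU against a 60% target gives ceil(3 × 1.5) = 5 replicas.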

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.
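Azure Pipelines describes builds as YAML stages, and by default a stage runs only if the stages it depends on have succeeded. The Python sketch below models just that fail-fast ordering for a build, test, and deploy sequence; the stage names and callables are hypothetical, and it does not reflect the Azure Pipelines YAML schema.

```python
from typing import Callable, List, Tuple

# A stage is a (name, step) pair; the step returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure (fail-fast).

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"Stage '{name}' failed; skipping remaining stages")
            break
        completed.append(name)
    return completed
```

With build, test, and deploy stages where the test stage fails, `run_pipeline` returns `['build']` and the deploy step never runs.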

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.
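SLA percentages are easier to compare when converted into allowed downtime. The short Python sketch below does that arithmetic for a 365-day year; the percentages in the loop are illustrative inputs, not a statement of Cosmos DB's actual SLA terms, which depend on account configuration and are published by Microsoft.

```python
# Assumption: a 365-day year (leap years ignored).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Maximum downtime per year permitted by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


# Illustrative inputs only; check the published SLA for real figures.
for sla in (99.9, 99.99, 99.999):
    print(f"{sla}% availability -> {allowed_downtime_minutes(sla):.1f} min/year")
```

A 99.99% availability target, for instance, allows roughly 52.6 minutes of downtime per year, while 99.999% allows about 5.3 minutes.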

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
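The flow above can be sketched end to end with in-memory stand-ins, which helps when reasoning about the data path before wiring up real services. In the Python sketch below, a plain list plays the role of an Event Hubs batch, a dictionary keyed by device ID stands in for a Cosmos DB container, and two functions play the Azure Functions and AKS API roles. The event fields `device_id` and `temperature` are hypothetical, and none of this uses the Azure SDKs.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, List

# Hypothetical stand-in for a Cosmos DB container: device ID -> list of readings.
store: DefaultDict[str, List[float]] = defaultdict(list)


def process_batch(events: Iterable[dict]) -> int:
    """Stand-in for the Azure Functions step: validate events and persist readings."""
    written = 0
    for event in events:
        device_id = event.get("device_id")
        reading = event.get("temperature")
        if device_id is None or reading is None:
            continue  # skip malformed events instead of failing the whole batch
        store[device_id].append(float(reading))
        written += 1
    return written


def device_average(device_id: str) -> float:
    """Stand-in for an API served by a microservice on AKS."""
    readings = store.get(device_id)
    if not readings:
        raise KeyError(device_id)
    return sum(readings) / len(readings)


# A list plays the role of one Event Hubs batch.
batch = [
    {"device_id": "sensor-1", "temperature": 21.5},
    {"device_id": "sensor-1", "temperature": 22.5},
    {"temperature": 30.0},  # malformed: no device_id
]
print(process_batch(batch), device_average("sensor-1"))
```

The malformed event is dropped, so two readings are stored and the average for `sensor-1` is 22.0.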

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

Navigating the DevOps Landscape: A Beginner's Comprehensive Roadmap

In the dynamic realm of software development, the DevOps methodology stands out as a transformative force, fostering collaboration, automation, and continuous enhancement. For newcomers eager to immerse themselves in this revolutionary culture, this all-encompassing guide presents the essential steps to initiate your DevOps expedition.

Grasping the Essence of DevOps Culture: DevOps transcends mere tool usage; it embodies a cultural transformation that prioritizes collaboration and communication between development and operations teams. Begin by comprehending the fundamental principles of collaboration, automation, and continuous improvement.

Immerse Yourself in DevOps Literature: Kickstart your journey by delving into indispensable DevOps literature. "The Phoenix Project" by Gene Kim, Kevin Behr, and George Spafford, along with "The DevOps Handbook" by Gene Kim, Jez Humble, Patrick Debois, and John Willis, provide invaluable insights into the theoretical underpinnings and practical implementations of DevOps.

Online Courses and Tutorials: Harness the educational potential of online platforms like Coursera, edX, and Udacity. Seek courses covering pivotal DevOps tools such as Git, Jenkins, Docker, and Kubernetes. These courses will furnish you with a robust comprehension of the tools and processes integral to the DevOps terrain.

Practical Application: While theory is crucial, hands-on experience is paramount. Establish your own development environment and embark on practical projects. Implement version control, construct CI/CD pipelines, and deploy applications to acquire firsthand experience in applying DevOps principles.

Explore the Realm of Configuration Management: Configuration management is a pivotal facet of DevOps. Familiarize yourself with tools like Ansible, Puppet, or Chef, which automate infrastructure provisioning and configuration, ensuring uniformity across diverse environments.

Containerization and Orchestration: Delve into the universe of containerization with Docker and orchestration with Kubernetes. Containers provide uniformity across diverse environments, while orchestration tools automate the deployment, scaling, and management of containerized applications.

Continuous Integration and Continuous Deployment (CI/CD): CI/CD is integral to DevOps. Gain proficiency in Jenkins, Travis CI, or GitLab CI to automate the testing and deployment of code changes. These tools enhance the speed and reliability of the release cycle, a central objective in DevOps methodologies.

Grasp Networking and Security Fundamentals: Expand your knowledge to encompass networking and security basics relevant to DevOps. Comprehend how security integrates into the DevOps pipeline, embracing the principles of DevSecOps. Gain insights into infrastructure security and secure coding practices to ensure robust DevOps implementations.
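Configuration management tools are built around idempotence: applying the same configuration twice should change nothing the second time. Ansible's `lineinfile` module, for example, ensures a given line is present in a file. The Python sketch below illustrates the idea with a hypothetical `ensure_line` helper; it is not how Ansible is implemented, and the file name and setting in the usage note are assumptions.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` is present in the file at `path`.

    Returns True if the file was changed and False if it was already in the
    desired state, so running it repeatedly is safe (idempotent).
    """
    lines = path.read_text().splitlines() if path.exists() else []
    if line in lines:
        return False  # desired state already met: change nothing
    lines.append(line)
    path.write_text("\n".join(lines) + "\n")
    return True
```

Calling `ensure_line(Path("sshd_config"), "PermitRootLogin no")` returns `True` the first time and `False` on every later run, which is what makes repeated runs safe across environments.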

Embarking on a DevOps expedition demands a comprehensive strategy that amalgamates theoretical understanding with hands-on experience. By grasping the cultural shift, exploring key literature, and mastering essential tools, you are well-positioned to evolve into a proficient DevOps practitioner, contributing to the triumph of contemporary software development.

Bridging the Gap: A Developer's Roadmap to Embracing DevOps Excellence

In the ever-evolving landscape of software development, the role of a DevOps engineer stands out as a crucial link between development and operations. For developers with an eye on this transformative career path, acquiring a unique skill set and embracing a holistic mindset becomes imperative. In the city of Hyderabad, DevOps Training offers a strategic avenue for individuals keen on validating their proficiency in DevOps practices and enhancing their career trajectory.

Charting the DevOps Odyssey: A Developer's Comprehensive Guide

Shifting gears from a developer to a DevOps engineer involves a nuanced approach, harmonizing development expertise with operational acumen. Here's a detailed step-by-step guide to assist developers aspiring to embark on the dynamic journey into the world of DevOps:

1. Grasp the Fundamentals of DevOps: Establish a solid foundation by delving into the core principles of DevOps, emphasizing collaboration, automation, and a culture of continuous improvement. Recognize the significance of the cultural shift required for successful DevOps implementation.

2. Master Git and Version Control: Dive into the world of version control with a mastery of Git, including branches and pull requests. Proficiency in these areas is pivotal for streamlined code collaboration, versioning, and effective tracking of changes.

3. Cultivate Scripting Skills (e.g., Python, Shell): Cultivate essential scripting skills to automate mundane tasks. Languages like Python and Shell scripting play a key role in the DevOps toolchain, providing a robust foundation for automation.

4. Explore Containers and Kubernetes: Immerse yourself in the realms of containerization with Docker and orchestration with Kubernetes. A comprehensive understanding of these technologies is fundamental for creating reproducible environments and managing scalable applications.

5. Grasp Infrastructure as Code (IaC): Familiarize yourself with Infrastructure as Code (IaC) principles. Tools like Terraform or Ansible empower the codification of infrastructure, streamlining deployment processes. The pursuit of the Best DevOps Online Training can offer profound insights into leveraging IaC effectively.

6. Experiment with Continuous Integration/Continuous Deployment (CI/CD): Take the leap into CI/CD territory with experimentation using tools like Jenkins or GitLab CI. The automation of code testing, integration, and deployment is pivotal for ensuring swift and reliable releases within the development pipeline.

7. Explore Monitoring and Logging: Gain proficiency in monitoring and troubleshooting by exploring tools like Prometheus or Grafana. A deep understanding of the health and performance of applications is crucial for maintaining a robust system.

8. Foster Collaboration with Other Teams: Cultivate effective communication and collaboration with operations, QA, and security teams. DevOps thrives on breaking down silos and fostering a collaborative environment to achieve shared goals.
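Infrastructure as Code tools such as Terraform compare the desired state written in code with the current state of real infrastructure and propose create, update, or destroy actions (shown by `terraform plan`). The Python sketch below is a deliberately simplified version of that diff; the resource names and attributes are hypothetical, and real tools also handle dependencies, replacements, and provider APIs.

```python
from typing import Dict, List, Tuple

# Resource name -> attributes (hypothetical shape).
Resources = Dict[str, dict]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Compare desired and current state; return (action, resource_name) pairs."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    return actions
```

Given a desired `bucket-logs` that does not exist yet, a `vm-web` whose size changed, and a leftover `vm-old`, `plan` returns `[('create', 'bucket-logs'), ('delete', 'vm-old'), ('update', 'vm-web')]`.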

Remember, the transition to a DevOps role is an evolutionary process, where gradual incorporation of DevOps practices into existing roles, coupled with hands-on projects, fortifies the foundation for a successful journey towards becoming a DevOps engineer.

Machine Learning Infrastructure: The Foundation of Scalable AI Solutions

Introduction: Why Machine Learning Infrastructure Matters

In today's digital-first world, the adoption of artificial intelligence (AI) and machine learning (ML) is revolutionizing every industry—from healthcare and finance to e-commerce and entertainment. However, while many organizations aim to leverage ML for automation and insights, few realize that success depends not just on algorithms, but also on a well-structured machine learning infrastructure.

Machine learning infrastructure provides the backbone needed to deploy, monitor, scale, and maintain ML models effectively. Without it, even the most promising ML solutions fail to meet their potential.

In this comprehensive guide from diglip7.com, we’ll explore what machine learning infrastructure is, why it’s crucial, and how businesses can build and manage it effectively.

What is Machine Learning Infrastructure?

Machine learning infrastructure refers to the full stack of tools, platforms, and systems that support the development, training, deployment, and monitoring of ML models. This includes:

Data storage systems

Compute resources (CPU, GPU, TPU)

Model training and validation environments

Monitoring and orchestration tools

Version control for code and models

Together, these components form the ecosystem where machine learning workflows operate efficiently and reliably.

Key Components of Machine Learning Infrastructure

To build robust ML pipelines, several foundational elements must be in place:

1. Data Infrastructure

Data is the fuel of machine learning. Key tools and technologies include:

Data Lakes & Warehouses: Store structured and unstructured data (e.g., AWS S3, Google BigQuery).

ETL Pipelines: Extract, transform, and load raw data for modeling (e.g., Apache Airflow, dbt).

Data Labeling Tools: For supervised learning (e.g., Labelbox, Amazon SageMaker Ground Truth).
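The extract, transform, and load steps listed above can be illustrated with the Python standard library alone. The sketch below extracts rows from CSV text, transforms them (normalizing emails, casting amounts, dropping rows without an amount), and loads them into an SQLite table. The column and table names are hypothetical; in production, an orchestrator such as Apache Airflow would schedule steps like these.

```python
import csv
import io
import sqlite3


def run_etl(raw_csv: str, conn: sqlite3.Connection) -> int:
    """Extract rows from CSV text, transform them, and load them into SQLite."""
    # Extract: parse the raw CSV into dictionaries keyed by column name.
    rows = csv.DictReader(io.StringIO(raw_csv))
    # Transform: normalize emails, cast amounts, skip rows with no amount.
    cleaned = [
        (row["email"].strip().lower(), float(row["amount"]))
        for row in rows
        if row.get("amount")
    ]
    # Load: write the cleaned rows into a table.
    conn.execute("CREATE TABLE IF NOT EXISTS purchases (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO purchases VALUES (?, ?)", cleaned)
    conn.commit()
    return len(cleaned)
```

Passing `sqlite3.connect(":memory:")` as the connection keeps the example self-contained.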

2. Compute Resources

Training ML models requires high-performance computing. Options include:

On-Premises Clusters: Cost-effective for large enterprises.

Cloud Compute: Scalable resources like AWS EC2, Google Cloud AI Platform, or Azure ML.

GPUs/TPUs: Essential for deep learning and neural networks.

3. Model Training Platforms

These platforms simplify experimentation and hyperparameter tuning:

TensorFlow, PyTorch, Scikit-learn: Popular ML libraries.

MLflow: Experiment tracking and model lifecycle management.

Kubeflow: ML workflow orchestration on Kubernetes.

4. Deployment Infrastructure

Once trained, models must be deployed in real-world environments:

Containers & Microservices: Docker, Kubernetes, and serverless functions.

Model Serving Platforms: TensorFlow Serving, TorchServe, or custom REST APIs.

CI/CD Pipelines: Automate testing, integration, and deployment of ML models.

5. Monitoring & Observability

Key to ensure ongoing model performance:

Drift Detection: Spot when model predictions diverge from expected outputs.

Performance Monitoring: Track latency, accuracy, and throughput.

Logging & Alerts: Tools like Prometheus, Grafana, or Seldon Core.
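Drift detection usually compares the distribution of recent inputs or predictions with a reference window. One common metric is the Population Stability Index (PSI), computed over binned proportions as the sum of (actual - expected) × ln(actual / expected). The Python sketch below implements it; the 0.1 and 0.25 thresholds in `drift_level` are a widely used rule of thumb, not a standard, and should be tuned per model.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both arguments are per-bin proportions over the same bins (each summing to 1).
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must use the same bins")
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score


def drift_level(score: float) -> str:
    """Rule-of-thumb interpretation; thresholds are assumptions to tune per model."""
    if score < 0.1:
        return "none"
    if score < 0.25:
        return "moderate"
    return "significant"
```

For four equal reference bins (0.25 each) and live proportions of 0.1, 0.2, 0.3, and 0.4, the PSI is about 0.23, which this rule of thumb classifies as a moderate shift.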

Benefits of Investing in Machine Learning Infrastructure

Here’s why having a strong machine learning infrastructure matters:

Scalability: Run models on large datasets and serve thousands of requests per second.

Reproducibility: Re-run experiments with the same configuration.

Speed: Accelerate development cycles with automation and reusable pipelines.

Collaboration: Enable data scientists, ML engineers, and DevOps to work in sync.

Compliance: Keep data and models auditable and secure for regulations like GDPR or HIPAA.

Real-World Applications of Machine Learning Infrastructure

Let’s look at how industry leaders use ML infrastructure to power their services:

Netflix: Uses a robust ML pipeline to personalize content and optimize streaming.

Amazon: Trains recommendation models using massive data pipelines and custom ML platforms.

Tesla: Collects real-time driving data from vehicles and retrains autonomous driving models.

Spotify: Relies on cloud-based infrastructure for playlist generation and music discovery.

Challenges in Building ML Infrastructure

Despite its importance, developing ML infrastructure has its hurdles:

High Costs: GPU servers and cloud compute aren't cheap.

Complex Tooling: Choosing the right combination of tools can be overwhelming.

Maintenance Overhead: Regular updates, monitoring, and security patching are required.

Talent Shortage: Skilled ML engineers and MLOps professionals are in short supply.

How to Build Machine Learning Infrastructure: A Step-by-Step Guide

Here’s a simplified roadmap for setting up scalable ML infrastructure:

Step 1: Define Use Cases

Know what problem you're solving. Fraud detection? Product recommendations? Forecasting?

Step 2: Collect & Store Data

Use data lakes, warehouses, or relational databases. Ensure it’s clean, labeled, and secure.

Step 3: Choose ML Tools

Select frameworks (e.g., TensorFlow, PyTorch), orchestration tools, and compute environments.

Step 4: Set Up Compute Environment

Use cloud-based Jupyter notebooks, Colab, or on-premises GPUs for training.

Step 5: Build CI/CD Pipelines

Automate model testing and deployment with Git, Jenkins, or MLflow.

Step 6: Monitor Performance

Track accuracy, latency, and data drift. Set alerts for anomalies.

Step 7: Iterate & Improve

Collect feedback, retrain models, and scale solutions based on business needs.
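Step 6 above calls for tracking latency and alerting on anomalies. A minimal way to do that is to compare a high percentile, such as p95, against a latency budget. The Python sketch below uses the nearest-rank percentile method; the choice of the 95th percentile and the budget values are assumptions, and monitoring systems such as Prometheus estimate quantiles from histogram buckets instead.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need at least one value and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def latency_alert(latencies_ms: Sequence[float], p95_budget_ms: float) -> bool:
    """True when the observed p95 latency exceeds the (assumed) budget."""
    return percentile(latencies_ms, 95) > p95_budget_ms
```

For latencies of 1 to 100 ms, the nearest-rank p95 is 95 ms, so a 90 ms budget would trigger an alert and a 100 ms budget would not.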

Machine Learning Infrastructure Providers & Tools

Below are some popular platforms that help streamline ML infrastructure (tool or platform: purpose; example use):

Amazon SageMaker: Full ML development environment; end-to-end ML pipelines.

Google Vertex AI: Cloud ML service; training, deploying, and managing ML models.

Databricks: Big data + ML; collaborative notebooks.

Kubeflow: Kubernetes-based ML workflows; model orchestration.

MLflow: Model lifecycle tracking; experiments, models, and metrics.

Weights & Biases: Experiment tracking; visualization and monitoring.

Expert Review

Reviewed by: Rajeev Kapoor, Senior ML Engineer at DataStack AI

"Machine learning infrastructure is no longer a luxury; it's a necessity for scalable AI deployments. Companies that invest early in robust, cloud-native ML infrastructure are far more likely to deliver consistent, accurate, and responsible AI solutions."

Frequently Asked Questions (FAQs)

Q1: What is the difference between ML infrastructure and traditional IT infrastructure?

Answer: Traditional IT supports business applications, while ML infrastructure is designed for data processing, model training, and deployment at scale. It often includes specialized hardware (e.g., GPUs) and tools for data science workflows.

Q2: Can small businesses benefit from ML infrastructure?

Answer: Yes, with the rise of cloud platforms like AWS SageMaker and Google Vertex AI, even startups can leverage scalable machine learning infrastructure without heavy upfront investment.

Q3: Is Kubernetes necessary for ML infrastructure?

Answer: While not mandatory, Kubernetes helps orchestrate containerized workloads and is widely adopted for scalable ML infrastructure, especially in production environments.

Q4: What skills are needed to manage ML infrastructure?

Answer: Familiarity with Python, cloud computing, Docker/Kubernetes, CI/CD, and ML frameworks like TensorFlow or PyTorch is essential.

Q5: How often should ML models be retrained?

Answer: It depends on data volatility. In dynamic environments (e.g., fraud detection), retraining may occur weekly or daily. In stable domains, monthly or quarterly retraining suffices.

Final Thoughts

Machine learning infrastructure isn’t just about stacking technologies—it's about creating an agile, scalable, and collaborative environment that empowers data scientists and engineers to build models with real-world impact. Whether you're a startup or an enterprise, investing in the right infrastructure will directly influence the success of your AI initiatives.

By building and maintaining a robust ML infrastructure, you ensure that your models perform optimally, adapt to new data, and generate consistent business value.

For more insights and updates on AI, ML, and digital innovation, visit diglip7.com.

What Are the Challenges of Scaling a Fintech Application?

Scaling a fintech application is no small feat. What starts as a minimum viable product (MVP) quickly becomes a complex digital ecosystem that must support thousands, or even millions, of users. As financial technology continues to disrupt traditional banking and investment models, the pressure to scale efficiently, securely, and reliably has never been greater.

Whether you're offering digital wallets, lending platforms, or investment tools, successful fintech software development requires more than just technical expertise. It demands a deep understanding of regulatory compliance, user behavior, security, and system architecture. As Fintech Services expand, so too do the challenges of maintaining performance and trust at scale.

Below are the key challenges companies face when scaling a fintech application.

1. Regulatory Compliance Across Regions

One of the biggest hurdles in scaling a fintech product is adapting to varying financial regulations across different markets. While your application may be fully compliant in your home country, entering a new region might introduce requirements like additional identity verification, data localization laws, or different transaction monitoring protocols.

Scaling means compliance must be baked into your infrastructure. Your tech stack must allow for modular integration of different compliance protocols based on regional needs. Failing to do so not only risks penalties but also erodes user trust—something that's critical for any company offering Fintech Services.
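To make "modular integration of compliance protocols" concrete, one possible design is a registry of per-region checks. With this design, entering a new market means registering a new profile rather than rewriting the transaction flow. The region codes, rule functions, and the 10,000 limit below are hypothetical placeholders, not real regulatory requirements.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Transaction:
    region: str
    amount: float
    identity_verified: bool
    stored_in_region: bool


# A check returns an error message, or None if the transaction passes.
Check = Callable[[Transaction], Optional[str]]


def require_identity(txn: Transaction) -> Optional[str]:
    return None if txn.identity_verified else "identity verification required"


def require_local_storage(txn: Transaction) -> Optional[str]:
    return None if txn.stored_in_region else "data must be stored in-region"


def make_amount_limit(limit: float) -> Check:
    def check(txn: Transaction) -> Optional[str]:
        return None if txn.amount <= limit else f"amount exceeds {limit}"
    return check


# Hypothetical region profiles; real rules come from legal and compliance review.
REGIONAL_CHECKS: Dict[str, List[Check]] = {
    "REGION_A": [require_identity],
    "REGION_B": [require_identity, require_local_storage, make_amount_limit(10_000)],
}


def compliance_errors(txn: Transaction) -> List[str]:
    """Run only the checks registered for the transaction's region."""
    checks = REGIONAL_CHECKS.get(txn.region)
    if checks is None:
        # Fail closed: a region without a profile is not allowed by default.
        return [f"no compliance profile for region {txn.region}"]
    errors = []
    for check in checks:
        error = check(txn)
        if error is not None:
            errors.append(error)
    return errors


txn = Transaction(region="REGION_B", amount=12_000, identity_verified=True, stored_in_region=False)
print(compliance_errors(txn))  # ['data must be stored in-region', 'amount exceeds 10000']
```

Failing closed for unknown regions is a deliberate choice here. A missing compliance profile blocks the transaction instead of silently skipping checks.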

2. Maintaining Data Security at Scale

Security becomes much more complex as the number of users grows. More users mean more data, more access points, and more potential vulnerabilities. Encryption and multi-factor authentication are baseline requirements, but applications operating at scale must go further with role-based access control (RBAC), real-time threat detection, and zero-trust architecture.

In fintech software development, a single breach can be catastrophic, affecting not only financial data but also brand reputation. Ensuring that your security architecture scales alongside user growth is essential.
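Role-based access control reduces to mapping users to roles and roles to permissions, then denying anything not explicitly granted. Below is a minimal sketch with made-up role and permission names. A real system would load these mappings from an identity provider or policy store rather than hard-coding them.

```python
from typing import Dict, Set

# Hypothetical roles and permissions for a fintech back office.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "support_agent": {"account:read"},
    "risk_analyst": {"account:read", "transaction:read", "transaction:flag"},
    "admin": {"account:read", "account:write", "transaction:read", "transaction:flag"},
}

# Hypothetical user-to-role assignments.
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"support_agent"},
    "bob": {"risk_analyst"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants the permission."""
    for role in USER_ROLES.get(user, set()):
        if permission in ROLE_PERMISSIONS.get(role, set()):
            return True
    return False


print(is_allowed("alice", "account:read"))      # True
print(is_allowed("alice", "transaction:flag"))  # False
print(is_allowed("mallory", "account:read"))    # False (unknown user)
```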

3. System Performance and Reliability

As usage grows, so do the demands on your servers, databases, and APIs. Fintech users expect fast, seamless transactions, and delays or downtime can directly affect business operations and customer satisfaction.

Building a highly available and resilient system often involves using microservices, container orchestration tools like Kubernetes, and distributed databases. Load balancing, horizontal scaling, and real-time monitoring must be in place to ensure consistent performance under pressure.
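Horizontal scaling only helps if traffic is spread across the replicas that are actually healthy. The toy round-robin balancer below illustrates the idea. In production, this job belongs to a dedicated load balancer or a Kubernetes Service, and the replica names here are hypothetical.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Cycle through replicas, skipping any that are currently marked unhealthy."""

    def __init__(self, replicas: List[str]) -> None:
        self.replicas = list(replicas)
        self.healthy: Dict[str, bool] = {r: True for r in self.replicas}
        self._next = 0

    def mark(self, replica: str, healthy: bool) -> None:
        # Typically driven by periodic health checks.
        self.healthy[replica] = healthy

    def add_replica(self, replica: str) -> None:
        # Scaling out: a new replica joins the rotation.
        self.replicas.append(replica)
        self.healthy[replica] = True

    def pick(self) -> str:
        # Try each replica at most once per call before giving up.
        for _ in range(len(self.replicas)):
            replica = self.replicas[self._next % len(self.replicas)]
            self._next += 1
            if self.healthy[replica]:
                return replica
        raise RuntimeError("no healthy replicas")


lb = RoundRobinBalancer(["api-0", "api-1", "api-2"])
lb.mark("api-1", False)
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['api-0', 'api-2', 'api-0', 'api-2']
```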

4. Complexity of Integrations

Fintech applications typically rely on multiple third-party integrations—payment processors, banking APIs, KYC/AML services, and more. As the application scales, managing these integrations becomes increasingly complex. Each new integration adds potential points of failure and requires monitoring, updates, and compliance reviews.

Furthermore, integrating with legacy banking systems, which may not support modern protocols or cloud infrastructure, adds another layer of difficulty.
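Each third-party integration is a potential point of failure, so calls to payment processors, banking APIs, or KYC providers are commonly wrapped in retries with exponential backoff and jitter. Below is a minimal sketch. It assumes the integration client raises a hypothetical `TransientError` for retryable failures. Real code should also retry only idempotent requests (or use idempotency keys), so that a retried payment is not charged twice.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 503, etc.)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return fn()
        except TransientError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of attempts: surface the last error
            # The delay cap doubles each retry (0.1, 0.2, 0.4, ...) up to max_delay;
            # sleeping a random fraction of it spreads out retries from many clients.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))


# Usage with a fake gateway that times out twice, then succeeds.
calls = {"n": 0}


def flaky_charge() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("gateway timeout")
    return "charged"


recorded_delays = []
result = call_with_backoff(flaky_charge, sleep=recorded_delays.append)
print(result, calls["n"], len(recorded_delays))  # charged 3 2
```

Injecting `sleep` keeps the function testable without real waiting.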

5. User Experience and Onboarding

Scaling is not just about infrastructure—it’s about people. A growing user base means more diverse needs, devices, and technical competencies. Ensuring that onboarding remains simple, intuitive, and compliant can be a challenge.

If users face friction during onboarding—like lengthy KYC processes or difficult navigation—they may abandon the application altogether. As your audience expands globally, you may also need to support multiple languages, currencies, and localization preferences, all of which add complexity to your frontend development.

6. Data Management and Analytics

Fintech applications generate vast amounts of transactional, behavioral, and compliance data. Scaling means implementing robust data infrastructure that can collect, store, and analyze this data in real time. You'll need to ensure that your analytics pipeline can handle increasing data volumes without latency or errors.

Moreover, actionable insights from data become critical for fraud detection, user engagement strategies, and personalization. Your data stack should evolve from basic reporting to real-time analytics, machine learning, and predictive modeling.
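A simple way to turn a transaction stream into a real-time fraud signal is a velocity check. It counts each user's transactions in a sliding time window and flags bursts. The 60-second window and the limit of 5 are arbitrary illustrative values, and the sketch assumes each user's timestamps arrive in order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityChecker:
    """Flag a user who makes more than `limit` transactions within `window` seconds."""

    def __init__(self, window: float = 60.0, limit: int = 5) -> None:
        self.window = window
        self.limit = limit
        self.events: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, user: str, timestamp: float) -> bool:
        """Record a transaction and return True if it should be flagged."""
        q = self.events[user]
        q.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while q and q[0] <= timestamp - self.window:
            q.popleft()
        return len(q) > self.limit


checker = VelocityChecker(window=60.0, limit=5)
flags = [checker.record("u1", float(t)) for t in range(0, 12, 2)]  # six transactions in 10 s
later = checker.record("u1", 100.0)  # the earlier events have expired
print(flags, later)  # [False, False, False, False, False, True] False
```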

7. Team and Process Scalability

As your application scales, so must your team and internal processes. Engineering teams must adopt agile methodologies and DevOps practices to keep pace with rapid iteration. Communication overhead increases, and maintaining product quality while shipping faster becomes a balancing act.

Documentation, version control, and automated testing become non-negotiable components of scalable development. Without them, technical debt grows quickly and future scalability is compromised.

8. Cost Management

Finally, scaling often leads to ballooning infrastructure and operational costs. Cloud services, third-party integrations, and security tools can become increasingly expensive. Without proper monitoring, you might find yourself with an unsustainable burn rate.

Cost-efficient scaling requires regular performance audits, architecture optimization, and intelligent use of auto-scaling and serverless technologies.

Real-World Perspective

A practical example of tackling these challenges can be seen in the approach used by Xettle Technologies, which emphasizes modular architecture, automated compliance workflows, and real-time analytics to support scaling without sacrificing security or performance. Their strategy demonstrates how thoughtful planning and the right tools can ease the complexities of scale in fintech ecosystems.

Conclusion

Scaling a fintech application is a multifaceted challenge that touches every part of your business—from backend systems to regulatory frameworks. It's not just about growing bigger, but about growing smarter. The demands of fintech software development extend far beyond coding; they encompass strategic planning, regulatory foresight, and deep customer empathy.

Companies that invest early in scalable architecture, robust security, and user-centric design are more likely to thrive in the competitive world of Fintech Services. By anticipating these challenges and addressing them proactively, you set a foundation for sustainable growth and long-term success.


Red Hat OpenShift Administration III: Scaling Deployments in the Enterprise

In the world of modern enterprise IT, scalability is not just a desirable trait—it's a mission-critical requirement. As organizations continue to adopt containerized applications and microservices architectures, the ability to seamlessly scale infrastructure and workloads becomes essential. That’s where Red Hat OpenShift Administration III comes into play, focusing on the advanced capabilities needed to manage and scale OpenShift clusters in large-scale production environments.

Why Scaling Matters in OpenShift

OpenShift, Red Hat’s Kubernetes-powered container platform, empowers DevOps teams to build, deploy, and manage applications at scale. But managing scalability isn’t just about increasing pod replicas or adding more nodes—it’s about making strategic, automated, and resilient decisions to meet dynamic demand, ensure availability, and optimize resource usage.

OpenShift Administration III (DO380) is the course designed to help administrators go beyond day-to-day operations and develop the skills needed to ensure enterprise-grade scalability and performance.

Key Takeaways from OpenShift Administration III

1. Advanced Cluster Management

The course teaches administrators how to manage large OpenShift clusters with hundreds or even thousands of nodes. Topics include:

Advanced node management

Infrastructure node roles

Cluster operators and custom resources

2. Automated Scaling Techniques

Learn how to configure and manage:

Horizontal Pod Autoscalers (HPA)

Vertical Pod Autoscalers (VPA)

Cluster Autoscalers

These tools allow the platform to intelligently adjust resource consumption based on workload demands.
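The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that arithmetic plus a min/max clamp. The real controller also accounts for missing metrics, pods that are not yet ready, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA calculation for one metric (e.g., average CPU utilization)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave replicas alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
print(desired_replicas(4, current_metric=15, target_metric=60))  # 1
```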

3. Optimizing Resource Utilization

One of the biggest challenges in scaling is maintaining cost-efficiency. OpenShift Administration III helps you fine-tune quotas, limits, and requests to avoid over-provisioning while ensuring optimal performance.
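Quotas and requests interact in a simple way. A ResourceQuota with `requests.cpu` and `requests.memory` caps the sum of requests across a project's pods, and a pod whose requests would push the total over the cap is rejected. The sketch below checks that condition. Its quantity parsing is deliberately minimal: CPU as cores or millicores such as `500m`, and memory only in `Mi` or `Gi`. Real Kubernetes quantities support many more suffixes.

```python
from typing import Dict, List


def cpu_millicores(q: str) -> int:
    """Parse CPU like '250m' or '2' into millicores."""
    return int(q[:-1]) if q.endswith("m") else int(float(q) * 1000)


def memory_mib(q: str) -> int:
    """Parse memory like '512Mi' or '2Gi' into MiB (only these two suffixes)."""
    if q.endswith("Gi"):
        return int(q[:-2]) * 1024
    if q.endswith("Mi"):
        return int(q[:-2])
    raise ValueError(f"unsupported memory quantity: {q}")


def fits_quota(existing: List[Dict[str, str]], new_pod: Dict[str, str], quota: Dict[str, str]) -> bool:
    """Return True if adding new_pod's requests keeps the project within its quota."""
    pods = existing + [new_pod]
    cpu = sum(cpu_millicores(p["cpu"]) for p in pods)
    mem = sum(memory_mib(p["memory"]) for p in pods)
    return cpu <= cpu_millicores(quota["requests.cpu"]) and mem <= memory_mib(quota["requests.memory"])


quota = {"requests.cpu": "2", "requests.memory": "4Gi"}
running = [{"cpu": "500m", "memory": "1Gi"}, {"cpu": "1", "memory": "2Gi"}]
print(fits_quota(running, {"cpu": "250m", "memory": "512Mi"}, quota))  # True
print(fits_quota(running, {"cpu": "750m", "memory": "512Mi"}, quota))  # False
```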

4. Managing Multitenancy at Scale

The course delves into managing enterprise workloads in a secure and multi-tenant environment. This includes:

Project-level isolation

Role-based access control (RBAC)

Secure networking policies
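Network isolation between projects rests on one NetworkPolicy rule. A pod that no ingress policy selects accepts all traffic, but once any policy selects it, only traffic allowed by at least one selecting policy gets through. The sketch models just that rule with label dictionaries and namespace-label sources. Ports, IP blocks, pod selectors in `from` clauses, and egress are all omitted, so treat it as an illustration rather than a faithful implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """matchLabels-style selection; an empty selector matches everything."""
    return all(labels.get(k) == v for k, v in selector.items())


@dataclass
class IngressPolicy:
    namespace: str
    pod_selector: Labels  # which pods in `namespace` this policy applies to
    allowed_namespace_selectors: List[Labels] = field(default_factory=list)


def ingress_allowed(
    policies: List[IngressPolicy],
    dst_namespace: str,
    dst_pod_labels: Labels,
    src_namespace_labels: Labels,
) -> bool:
    selecting = [
        p for p in policies
        if p.namespace == dst_namespace and matches(p.pod_selector, dst_pod_labels)
    ]
    if not selecting:
        return True  # the pod is not isolated: no policy selects it
    # Allowed if any selecting policy has a rule matching the source namespace.
    return any(
        matches(sel, src_namespace_labels)
        for p in selecting
        for sel in p.allowed_namespace_selectors
    )


# Default-deny for project team-a, then allow its API pods to receive traffic from team=a only.
policies = [
    IngressPolicy("team-a", pod_selector={}),
    IngressPolicy("team-a", pod_selector={"app": "api"}, allowed_namespace_selectors=[{"team": "a"}]),
]
same_team = ingress_allowed(policies, "team-a", {"app": "api"}, {"team": "a"})
other_team = ingress_allowed(policies, "team-a", {"app": "api"}, {"team": "b"})
unselected = ingress_allowed(policies, "team-b", {"app": "web"}, {"team": "a"})
print(same_team, other_team, unselected)  # True False True
```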

5. High Availability and Disaster Recovery

Scaling isn't just about growing—it’s about being resilient. Learn how to:

Configure etcd backup and restore

Maintain control plane and application availability

Build disaster recovery strategies

Who Should Take This Course?

This course is ideal for:

OpenShift administrators responsible for large-scale deployments

DevOps engineers managing Kubernetes-based platforms

System architects looking to standardize on Red Hat OpenShift across enterprise environments

Final Thoughts

As enterprises push towards digital transformation, the demand for scalable, resilient, and automated platforms continues to grow. Red Hat OpenShift Administration III equips IT professionals with the skills and strategies to confidently scale deployments, handle complex workloads, and maintain robust system performance across the enterprise.

Whether you're operating in a hybrid cloud, multi-cloud, or on-premises environment, mastering OpenShift scalability ensures your infrastructure can grow with your business.

Ready to take your OpenShift skills to the next level? Contact HawkStack Technologies today to learn about our Red Hat Learning Subscription (RHLS) and instructor-led training options for DO380 – Red Hat OpenShift Administration III. For more details, visit www.hawkstack.com.



Step-by-Step Guide to Hiring an MLOps Engineer

Steps to Hire an MLOps Engineer

1. Define the role

Decide what you need: model deployment, CI/CD for ML, monitoring, cloud infrastructure, etc.

Choose the level (junior, mid, or senior) depending on how advanced the project is.

2. Write a concise job description

Include responsibilities such as:

ML workflow automation (CI/CD)

Model lifecycle management (from training to deployment)

Model performance tracking

Working with Docker, Kubernetes, Airflow, MLflow, etc.

Emphasize the required experience with ML libraries (TensorFlow, PyTorch), cloud platforms (AWS, GCP, Azure), and DevOps tools.

3. Source candidates

Use specialized platforms: LinkedIn, Stack Overflow, GitHub, and AI/ML forums (e.g., MLOps Community, Weights & Biases forums).

Consider freelancers or agencies for temporary or project-based work.

4. Screen resumes for technical skills

Look for experience in:

Building robust machine learning pipelines

Deploying in cloud-based environments

Managing production ML systems

5. Run a technical interview and assessment

Include coding and system design rounds.

Check the candidate's understanding of:

CI/CD for ML

Container management

Monitoring and logging (e.g., Prometheus, Grafana)

Experiment tracking

Optional: a hands-on exercise or take-home assignment (e.g., build a simple training-to-deployment pipeline).
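To calibrate such a take-home, it helps to know how small a reasonable solution can be. The sketch below is one possible shape, with hypothetical function names and toy data. It fits a one-variable linear model by least squares, evaluates it on held-out data, and promotes it to an in-memory stand-in for a model registry only if it passes an assumed error threshold. A candidate's submission would normally use real tooling such as MLflow, but the evaluation gate before deployment is the part worth probing.

```python
from typing import Dict, List, Tuple

Data = List[Tuple[float, float]]
Model = Tuple[float, float]  # (slope, intercept)


def train(data: Data) -> Model:
    """Ordinary least squares for y = slope * x + intercept (needs two or more distinct x values)."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def evaluate(model: Model, data: Data) -> float:
    """Mean absolute error on held-out data."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in data) / len(data)


REGISTRY: Dict[str, Model] = {}  # hypothetical stand-in for a real model registry


def run_pipeline(train_data: Data, test_data: Data, max_mae: float) -> bool:
    """Train, evaluate, and deploy only if the evaluation gate passes."""
    model = train(train_data)
    if evaluate(model, test_data) > max_mae:
        return False  # gate failed: keep whatever is currently deployed
    REGISTRY["production"] = model
    return True


train_set = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # y = 2x + 1
test_set = [(4.0, 9.0), (5.0, 11.0)]
promoted = run_pipeline(train_set, test_set, max_mae=0.5)
print(promoted, REGISTRY["production"])  # True (2.0, 1.0)
```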

6. Evaluate soft skills and culture fit

The role requires close collaboration with data scientists, software engineers, and product managers.

Assess communication, documentation style, and teamwork.

7. Make an offer and onboard

Provide thorough onboarding instructions.

Start the new hire on a real project so their impact shows early.


Most Important Points to Remember

MLOps ≠ DevOps: MLOps adds complexity that DevOps alone does not cover, such as model versioning, data drift, and data pipelines.

Infrastructure experience is a must: Hire individuals who have experience with cloud, containers, and orchestration tools.

Cross-functional thinking: MLOps sits at the intersection of IT operations, software development, and machine learning, so clear communication is crucial.

Tool knowledge: MLflow, Kubeflow, Airflow, DVC, Terraform, Docker, and Kubernetes are typical.

Security and scalability: Consider whether the candidate has built secure, scalable machine learning systems.

Model monitoring and feedback loops: Make sure they know how to monitor a model and maintain its performance over time.
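Monitoring in practice means comparing live metrics against a baseline and alerting on sustained degradation. A candidate should be able to reason about something like the rolling-accuracy monitor below. The window size and the 5-point tolerance are illustrative assumptions, and the sketch presumes that ground-truth labels eventually arrive for each prediction.

```python
from collections import deque
from typing import Deque


class AccuracyMonitor:
    """Track accuracy over the last `window` labeled predictions and flag degradation."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.results: Deque[bool] = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, prediction: int, label: int) -> None:
        self.results.append(prediction == label)

    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def degraded(self) -> bool:
        """True once the window is full and accuracy falls below baseline - tolerance."""
        if len(self.results) < self.results.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.90, window=10)
for pred, label in [(1, 1)] * 9 + [(0, 1)]:
    monitor.record(pred, label)
before = (monitor.accuracy(), monitor.degraded())
for pred, label in [(0, 1)] * 3:
    monitor.record(pred, label)
after = (monitor.accuracy(), monitor.degraded())
print(before, after)  # (0.9, False) (0.6, True)
```

A degradation flag like this would typically feed an alert or a retraining job, closing the feedback loop.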


Top 10 DevOps Containers in 2023


If you want to learn more about DevOps and how to build an effective DevOps stack, it helps to know the containerized solutions commonly found in production DevOps stacks. I have been working on a deployment of DevOps containers in my home lab that lets me use infrastructure as code for some really cool projects. Let's consider the top 10 DevOps containers that serve as individual container building blocks…


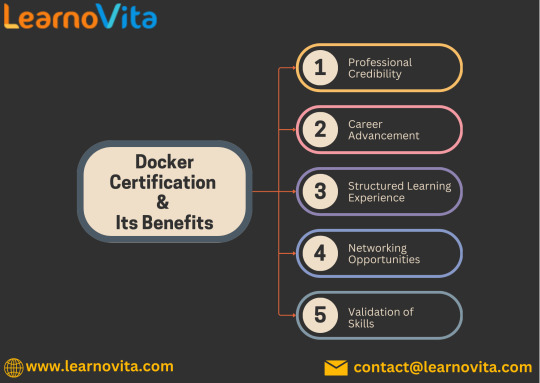

Docker Certification Explained: Benefits and Insights

In the realm of software development and DevOps, Docker has become a household name. As organizations increasingly adopt containerization, the demand for professionals skilled in Docker continues to rise. One way to validate your expertise is through Docker certification. This post explains what Docker certification is, outlines its benefits, and offers insights into how it can enhance your career.

If you want to advance your career through a Docker certification course, take a systematic approach: choose a course that suits your learning preferences and meaningfully expands your skills.

What is Docker Certification?

Docker certification is an official credential that verifies your skills and knowledge in using Docker technology. The primary certification offered is the Docker Certified Associate (DCA), which assesses your understanding of essential Docker concepts, tools, and best practices.

Key Areas Covered in the Certification

The DCA exam evaluates your proficiency in several critical areas, including:

Docker Basics: Understanding containers, images, and the Docker architecture.

Networking: Setting up and managing networks for containerized applications.

Security: Implementing security best practices to protect your applications and data.

Orchestration: Using Docker Swarm and Kubernetes for effective container orchestration.

Storage Management: Managing data volumes and persistent storage effectively.

Benefits of Docker Certification

1. Enhanced Professional Credibility

Holding a Docker certification boosts your credibility in the eyes of potential employers. It serves as proof of your expertise and commitment to mastering Docker technology, distinguishing you from non-certified candidates.

2. Career Advancement Opportunities

With the growing adoption of containerization, the demand for Docker-certified professionals is increasing. A Docker certification can open doors to new job opportunities, promotions, and potentially higher salaries. Many organizations actively seek certified individuals for key roles in DevOps and software development.

3. Structured Learning Pathway

Preparing for Docker certification provides a structured approach to learning. The comprehensive curriculum ensures you cover essential topics and gain a solid understanding of Docker, best practices, and advanced techniques, equipping you for real-world challenges.

Online training and placement programs can make it easier to master this material and advance your career, since they offer thorough instruction and job placement support to anyone looking to improve their skills.

4. Networking Benefits

Becoming certified can connect you to a community of professionals who are also certified. Engaging with this network can provide valuable opportunities for knowledge sharing, mentorship, and job referrals, enriching your career journey.

5. Validation of Skills

For those transitioning into DevOps roles or looking to solidify their Docker expertise, certification serves as tangible proof of your skills. It demonstrates your commitment to professional growth and your capability to work with modern technologies.

Insights for Success

1. Preparation is Key

To succeed in the Docker certification exam, invest time in studying the relevant topics. Utilize official resources, online courses, and practice exams to reinforce your knowledge.

2. Hands-On Experience

Practical experience is invaluable. Engage in real-world projects or personal experiments with Docker to solidify your understanding and application of the concepts.

3. Stay Updated

The tech landscape is constantly evolving. Stay updated with the latest Docker features, tools, and best practices to ensure your knowledge remains relevant.

Conclusion

Docker certification is a powerful asset for anyone looking to advance their career in software development or DevOps. It enhances your credibility, opens up new opportunities, and provides a structured learning experience. If you’re serious about your professional growth, pursuing Docker certification is a worthwhile endeavor. Embrace the journey, and unlock the doors to your future success.


Security and Compliance in Cloud Deployments: A Proactive DevOps Approach

As cloud computing becomes the backbone of modern digital infrastructure, organizations are increasingly migrating applications and data to the cloud for agility, scalability, and cost-efficiency. However, this shift also brings elevated risks around security and compliance. To ensure safety and regulatory alignment, companies must adopt a proactive DevOps approach that integrates security into every stage of the development lifecycle—commonly referred to as DevSecOps.

Why Security and Compliance Matter in the Cloud

Cloud environments are dynamic and complex. Without the proper controls in place, they can easily become vulnerable to data breaches, configuration errors, insider threats, and compliance violations. Unlike traditional infrastructure, cloud-native deployments are continuously evolving, which requires real-time security measures and automated compliance enforcement.

Neglecting these areas can lead to:

Financial penalties for regulatory violations (e.g., GDPR, HIPAA) and failed compliance audits (e.g., SOC 2)

Data loss and reputation damage

Business continuity risks due to breaches or downtime

The Role of DevOps in Cloud Security

DevOps is built around principles of automation, collaboration, and continuous delivery. By extending these principles to include security (DevSecOps), teams can ensure that infrastructure and applications are secure from the ground up, rather than bolted on as an afterthought.

A proactive DevOps approach focuses on:

Shift-Left Security: Security checks are moved earlier in the development process to catch issues before deployment.

Continuous Compliance: Policies are codified and integrated into CI/CD pipelines to maintain adherence to industry standards automatically.

Automated Risk Detection: Real-time scanning tools identify vulnerabilities, misconfigurations, and policy violations continuously.

Infrastructure as Code (IaC) Security: IaC templates are scanned for compliance and security flaws before provisioning cloud infrastructure.
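Scanning IaC before provisioning amounts to evaluating policy rules against the planned resources. Tools such as Checkov do this for real Terraform and CloudFormation files. The sketch below only illustrates the idea on a hypothetical, already-parsed resource format with two made-up rules: no publicly readable buckets, and encryption at rest required.

```python
from typing import Any, Callable, Dict, List, Optional

Resource = Dict[str, Any]
Rule = Callable[[Resource], Optional[str]]


def no_public_buckets(res: Resource) -> Optional[str]:
    if res.get("type") == "storage_bucket" and res.get("public_read", False):
        return "bucket must not be publicly readable"
    return None


def encryption_required(res: Resource) -> Optional[str]:
    if res.get("type") in {"storage_bucket", "database"} and not res.get("encrypted", False):
        return "encryption at rest must be enabled"
    return None


RULES: List[Rule] = [no_public_buckets, encryption_required]


def scan(resources: List[Resource]) -> List[str]:
    """Return one finding per (resource, failed rule); an empty list means the plan passes."""
    findings = []
    for res in resources:
        for rule in RULES:
            message = rule(res)
            if message:
                findings.append(f"{res.get('name', '<unnamed>')}: {message}")
    return findings


# Hypothetical parsed plan.
plan = [
    {"type": "storage_bucket", "name": "reports", "public_read": True, "encrypted": True},
    {"type": "database", "name": "ledger", "encrypted": False},
    {"type": "vm", "name": "worker"},
]
for finding in scan(plan):
    print(finding)
```

In a CI/CD pipeline, a non-empty findings list would fail the build before anything is provisioned.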

Key Components of a Proactive Cloud Security Strategy

Identity and Access Management (IAM): Ensure least-privilege access using role-based policies and multi-factor authentication.

Encryption: Enforce encryption of data both at rest and in transit using cloud-native tools and third-party integrations.

Vulnerability Scanning: Use automated scanners to check applications, containers, and VMs for known security flaws.

Compliance Monitoring: Track compliance posture continuously against frameworks such as ISO 27001, PCI-DSS, and NIST.

Logging and Monitoring: Centralized logging and anomaly detection help detect threats early and support forensic investigations.

Secrets Management: Store and manage credentials, tokens, and keys using secure vaults.
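Secrets management also means keeping credentials out of source code in the first place, which is why pre-commit secret scanning is a common shift-left control. The sketch checks two simplified patterns: the widely documented `AKIA` prefix of AWS access key IDs, and a literal `password = "..."` assignment. Dedicated scanners ship far larger and better-tuned rule sets.

```python
import re
from typing import List, Tuple

# Simplified, illustrative patterns only.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"""(?i)\bpassword\s*=\s*["'][^"']+["']"""),
}


def find_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for each suspicious line."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


sample = '''db_host = "db.internal"
password = "hunter2"
key = "AKIAABCDEFGHIJKLMNOP"
password = os.environ["DB_PASSWORD"]
'''
print(find_secrets(sample))  # [(2, 'hardcoded_password'), (3, 'aws_access_key_id')]
```

Line 4 is not flagged because the password comes from the environment rather than a literal, which is the pattern a vault-backed setup encourages.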

Best Practices for DevSecOps in the Cloud

Integrate Security into CI/CD Pipelines: Use tools like Snyk, Aqua, and Checkov to run security checks automatically.

Perform Regular Threat Modeling: Continuously assess evolving attack surfaces and prioritize high-impact risks.

Automate Patch Management: Ensure all components are regularly updated and unpatched vulnerabilities are minimized.

Enable Policy as Code: Define and enforce compliance rules through version-controlled code in your DevOps pipeline.

Train Developers and Engineers: Security is everyone’s responsibility—conduct regular security training and awareness sessions.

How Salzen Cloud Ensures Secure Cloud Deployments

At Salzen Cloud, we embed security and compliance at the core of our cloud solutions. Our team works with clients to develop secure-by-design architectures that incorporate DevSecOps principles from planning to production. Whether it's automating compliance reports, hardening Kubernetes clusters, or configuring IAM policies, we ensure cloud operations are secure, scalable, and audit-ready.

Conclusion

In the era of cloud-native applications, security and compliance can no longer be reactive. A proactive DevOps approach ensures that every component of your cloud environment is secure, compliant, and continuously monitored. By embedding security into CI/CD workflows and automating compliance checks, organizations can mitigate risks while maintaining development speed.

Partner with Salzen Cloud to build secure and compliant cloud infrastructures with confidence.


What Makes a Great DevSecOps Developer: Insights for Hiring Managers

In the fast-paced software industry, security can no longer be an afterthought. That's where DevSecOps comes in: it shifts security left and integrates it across the development lifecycle. As more tech companies adopt this approach, demand for DevSecOps developers is rising sharply.

But what exactly makes a great hire?

If you are a hiring manager looking to build secure, scalable, and reliable infrastructure, understanding what to look for in a DevSecOps hire is key. In this article, we look at the top skills and traits to prioritize.

Balancing Speed, Security, and Scalability in Modern Development Teams

Security mindset from day one

A DevSecOps developer is more than a DevOps engineer with security expertise: they consider risk, compliance, and threat modelling from the outset. When hiring DevSecOps developers, look for someone who can:

Find weaknesses in the pipeline early on.

Integrate automated security tools such as Checkmarx, Aqua, or Snyk.

Write secure code alongside developers.

Security is something they build for, not something they add on.

Strong background in DevOps and CI/CD

Skilled DevSecOps specialists know the processes and tools that enable continuous integration and continuous delivery. Look for prior experience with platforms like GitHub Actions, Jenkins, or GitLab CI.

They should be able to set up pipelines that not only run tests but also manage configurations, enforce policies, and run automated security scans.
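A pipeline that enforces policies usually ends with a gate: read the scanner's findings and fail the build if anything at or above a chosen severity remains. The sketch assumes the findings were already normalized into dicts with `id` and `severity` keys. This is a hypothetical format, since each real scanner has its own report schema. CI systems such as GitHub Actions, Jenkins, and GitLab CI treat a non-zero exit code as a failed step, so the returned value would be passed to `sys.exit()`.

```python
from typing import Dict, List

SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: List[Dict[str, str]], threshold: str = "high") -> List[Dict[str, str]]:
    """Return findings whose severity is at or above the threshold."""
    limit = SEVERITY_ORDER[threshold]
    # Unknown severities rank 0, so they never block (a policy choice worth revisiting).
    return [f for f in findings if SEVERITY_ORDER.get(f["severity"].lower(), 0) >= limit]


def gate(findings: List[Dict[str, str]], threshold: str = "high") -> int:
    """Print blocking findings and return a CI exit code (0 = pass, 1 = fail)."""
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"BLOCKING {f['severity'].upper()}: {f['id']}")
    return 1 if blocking else 0


# Hypothetical findings; a real pipeline would parse these from the scanner's report.
report = [
    {"id": "EXAMPLE-001", "severity": "medium"},
    {"id": "EXAMPLE-002", "severity": "critical"},
]
exit_code = gate(report)  # prints "BLOCKING CRITICAL: EXAMPLE-002"
print(exit_code)  # 1
```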

If you're looking to hire remote developers, make sure your candidate has experience managing pipelines in collaborative, cloud-based environments and is comfortable working with remote teams.

Cloud and infrastructure knowledge

DevSecOps developers must understand cloud-native security, whether their stack runs on AWS, Azure, or GCP. This covers runtime monitoring, network policies, IAM roles, and containerization.

Hands-on experience with Terraform, Docker, and Kubernetes is essential. When hiring DevSecOps developers, ask about their experience managing secrets securely and protecting infrastructure as code.

Communication and collaboration skills

In the past, security was a silo. In DevSecOps, it's everyone's responsibility. This means your hire must be able to work effectively with security analysts, product teams, and software engineers.

The most qualified applicants will not only identify problems but also help resolve them, train team members, and streamline processes. When you hire software engineers to collaborate with DevSecOps experts, look for team players who share responsibilities and support a security culture.

Problem-solving and constant learning

Security threats evolve quickly, and so do the methods used to counter them. Outstanding DevSecOps developers stay up to date on the newest approaches, threats, and compliance requirements. They are also proactive, looking for ways to improve systems before problems occur.

Top candidates stand out for their dedication to automation, documentation, and ongoing process development.

Closing Remarks

If you want to hire DevSecOps developers who will truly add value to your team, technical expertise alone is not enough: you need strategic thinkers who support security without sacrificing delivery.

As more tech businesses move toward cloud-native designs, DevSecOps is becoming more than a nice-to-have; it is an essential part of building robust systems. Whether you need to build an internal team or engage remote engineers for flexibility, look for experts who can confidently balance speed, stability, and security.
