#ArgoCD Kubernetes deployment

Top 10 DevOps Containers in 2023

If you want to learn more about DevOps and building an effective DevOps stack, several containerized solutions are commonly found in production DevOps stacks. I have been working on a deployment in my home lab of DevOps containers that allows me to use infrastructure as code for really cool projects. Let’s consider the top 10 DevOps containers that serve as individual container building blocks…

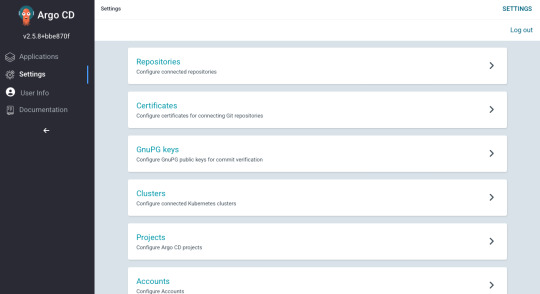

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a Continuous Delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Further user-oriented documentation is available for additional features. Refer to the upgrade guide if you want to upgrade your Argo CD installation. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and provides ways to sync the live state back to the desired target state, either automatically or manually. Any modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
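To make the comparison between target state and live state concrete, here is a minimal Python sketch. It is not Argo CD's implementation: the `sync_status` function, the dictionary layout, and the diff messages are assumptions made for illustration, and only the Synced/OutOfSync labels are borrowed from Argo CD.

```python
def sync_status(desired, live):
    """Compare desired (Git) and live (cluster) resources keyed by name.

    Returns a (status, diff) tuple. The data model is hypothetical.
    """
    diff = {}
    for name in sorted(set(desired) | set(live)):
        if name not in live:
            diff[name] = "missing in cluster"
        elif name not in desired:
            diff[name] = "not tracked in Git"
        elif desired[name] != live[name]:
            diff[name] = "spec differs"
    return ("OutOfSync" if diff else "Synced"), diff


# Hypothetical example: Git asks for 3 replicas, the cluster runs 2.
desired = {"web": {"image": "nginx:1.27", "replicas": 3}}
live = {"web": {"image": "nginx:1.27", "replicas": 2}}

status, diff = sync_status(desired, live)
print(status, diff)
```

A real controller compares full Kubernetes objects and ignores fields that the cluster fills in on its own; the sketch only shows the shape of the check.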

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes Secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). It detects the OutOfSync application state and can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web UI that provides a real-time view of application activity

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

Unlocking SRE Success: Roles and Responsibilities That Matter

In today’s digitally driven world, ensuring the reliability and performance of applications and systems is more critical than ever. This is where Site Reliability Engineering (SRE) plays a pivotal role. Originally developed by Google, SRE is a modern approach to IT operations that focuses strongly on automation, scalability, and reliability.

But what exactly do SREs do? Let’s explore the key roles and responsibilities of a Site Reliability Engineer and how they drive reliability, performance, and efficiency in modern IT environments.

🔹 What is a Site Reliability Engineer (SRE)?

A Site Reliability Engineer is a professional who applies software engineering principles to system administration and operations tasks. The main goal is to build scalable and highly reliable systems that function smoothly even during high demand or failure scenarios.

🔹 Core SRE Roles

SREs act as a bridge between development and operations teams. Their core responsibilities are usually grouped under these key roles:

1. Reliability Advocate

Ensures high availability and performance of services

Implements Service Level Objectives (SLOs), Service Level Indicators (SLIs), and Service Level Agreements (SLAs)

Identifies and removes reliability bottlenecks
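One way SLOs are turned into something actionable is an error budget: the amount of unavailability the objective still permits. The following Python sketch shows that arithmetic under simple assumptions (an availability SLO measured in minutes over a rolling window); the function names and the 30-day default are illustrative choices, not taken from any particular tool.

```python
def error_budget_minutes(slo_percent, window_days=30):
    """Allowed downtime, in minutes, for an availability SLO over the window."""
    total_minutes = window_days * 24 * 60
    return total_minutes * (100 - slo_percent) / 100


def budget_remaining(slo_percent, downtime_minutes, window_days=30):
    """Minutes of error budget left after the observed downtime (negative if overspent)."""
    return error_budget_minutes(slo_percent, window_days) - downtime_minutes


# Hypothetical numbers: a 99.9% SLO and 12 minutes of downtime so far.
print(round(error_budget_minutes(99.9), 2))
print(round(budget_remaining(99.9, downtime_minutes=12), 2))
```

A team might pause risky releases once the remaining budget approaches zero, which ties the reliability target to day-to-day deployment decisions.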

2. Automation Engineer

Automates repetitive manual tasks using tools and scripts

Builds CI/CD pipelines for smoother deployments

Reduces human error and increases deployment speed

3. Monitoring & Observability Expert

Sets up real-time monitoring tools like Prometheus, Grafana, and Datadog

Implements logging, tracing, and alerting systems

Proactively detects issues before they impact users

4. Incident Responder

Handles outages and critical incidents

Leads root cause analysis (RCA) and postmortems

Builds incident playbooks for faster recovery

5. Performance Optimizer

Analyzes system performance metrics

Conducts load and stress testing

Optimizes infrastructure for cost and performance

6. Security and Compliance Enforcer

Implements security best practices in infrastructure

Ensures compliance with industry standards (e.g., ISO, GDPR)

Coordinates with security teams for audits and risk management

7. Capacity Planner

Forecasts traffic and resource needs

Plans for scaling infrastructure ahead of demand

Uses tools for autoscaling and load balancing

🔹 Day-to-Day Responsibilities of an SRE

Here are some common tasks SREs handle daily:

Deploying code with zero downtime

Troubleshooting production issues

Writing automation scripts to streamline operations

Reviewing infrastructure changes

Managing Kubernetes clusters or cloud services (AWS, GCP, Azure)

Performing system upgrades and patches

Running game days or chaos engineering practices to test resilience

🔹 Tools & Technologies Commonly Used by SREs

Monitoring: Prometheus, Grafana, ELK Stack, Datadog

Automation: Terraform, Ansible, Chef, Puppet

CI/CD: Jenkins, GitLab CI, ArgoCD

Containers & Orchestration: Docker, Kubernetes

Cloud Platforms: AWS, Google Cloud, Microsoft Azure

Incident Management: PagerDuty, Opsgenie, VictorOps

🔹 Why SRE Matters for Modern Businesses

Reduces system downtime and increases user satisfaction

Improves deployment speed without compromising reliability

Enables proactive problem solving through observability

Bridges the gap between developers and operations

Drives cost-effective scaling and infrastructure optimization

🔹 Final Thoughts

Site Reliability Engineering is more than just monitoring systems; it is about building a resilient, scalable, and efficient infrastructure that keeps digital services running smoothly. With a blend of coding, systems knowledge, and problem-solving skills, SREs play a crucial role in modern DevOps and cloud-native environments.

📥 Click Here: Site Reliability Engineering certification training program

Mastering OpenShift at Scale: Red Hat OpenShift Administration III (DO380)

In today’s cloud-native world, organizations are increasingly adopting Kubernetes and Red Hat OpenShift to power their modern applications. As these environments scale, so do the challenges of managing complex workloads, automating operations, and ensuring reliability. That’s where Red Hat OpenShift Administration III: Scaling Kubernetes Workloads (DO380) steps in.

What is DO380?

DO380 is an advanced-level training course offered by Red Hat that focuses on scaling, performance tuning, and managing containerized applications in production using Red Hat OpenShift Container Platform. It is designed for experienced OpenShift administrators and DevOps professionals who want to deepen their knowledge of Kubernetes-based platform operations.

Who Should Take DO380?

This course is ideal for:

✅ System Administrators managing large-scale containerized environments

✅ DevOps Engineers working with CI/CD pipelines and automation

✅ Platform Engineers responsible for OpenShift clusters

✅ RHCEs or OpenShift Certified Administrators (EX280 holders) aiming to level up

Key Skills You Will Learn

Here’s what you’ll master in DO380:

🔧 Advanced Cluster Management

Configure and manage OpenShift clusters for performance and scalability.

📈 Monitoring & Tuning

Use tools like Prometheus, Grafana, and the OpenShift Console to monitor system health, tune workloads, and troubleshoot performance issues.

📦 Autoscaling & Load Management

Configure Horizontal Pod Autoscaling (HPA), Cluster Autoscaler, and manage workloads efficiently with resource quotas and limits.

🔐 Security & Compliance

Implement security policies, use node taints/tolerations, and manage namespaces for better isolation and governance.

🧪 CI/CD Pipeline Integration

Automate application delivery using Tekton pipelines and manage GitOps workflows with ArgoCD.

Course Prerequisites

Before enrolling in DO380, you should be familiar with:

Red Hat OpenShift Administration I (DO180)

Red Hat OpenShift Administration II (DO280)

Kubernetes fundamentals (kubectl, deployments, pods, services)

Certification Path

DO380 also helps you prepare for the Red Hat Certified Specialist in OpenShift Scaling and Performance (EX380) exam, which counts towards the Red Hat Certified Architect (RHCA) credential.

Why DO380 Matters

With enterprise workloads becoming more dynamic and resource-intensive, scaling OpenShift effectively is not just a bonus — it’s a necessity. DO380 equips you with the skills to:

✅ Maximize infrastructure efficiency

✅ Ensure high availability

✅ Automate operations

✅ Improve DevOps productivity

Conclusion

Whether you're looking to enhance your career, improve your organization's cloud-native capabilities, or take the next step in your Red Hat certification journey — Red Hat OpenShift Administration III (DO380) is your gateway to mastering OpenShift at scale.

Ready to elevate your OpenShift expertise?

Explore DO380 training options with HawkStack Technologies and get hands-on with real-world OpenShift scaling scenarios.

For more details www.hawkstack.com

Kubernetes Cluster Management at Scale: Challenges and Solutions

As Kubernetes has become the cornerstone of modern cloud-native infrastructure, managing it at scale is a growing challenge for enterprises. While Kubernetes excels in orchestrating containers efficiently, managing multiple clusters across teams, environments, and regions presents a new level of operational complexity.

In this blog, we’ll explore the key challenges of Kubernetes cluster management at scale and offer actionable solutions, tools, and best practices to help engineering teams build scalable, secure, and maintainable Kubernetes environments.

Why Scaling Kubernetes Is Challenging

Kubernetes is designed for scalability—but only when implemented with foresight. As organizations expand from a single cluster to dozens or even hundreds, they encounter several operational hurdles.

Key Challenges:

1. Operational Overhead

Maintaining multiple clusters means managing upgrades, backups, security patches, and resource optimization—multiplied by every environment (dev, staging, prod). Without centralized tooling, this overhead can spiral quickly.

2. Configuration Drift

Cluster configurations often diverge over time, causing inconsistent behavior, deployment errors, or compliance risks. Manual updates make it difficult to maintain consistency.

3. Observability and Monitoring

Standard logging and monitoring solutions often fail to scale with the ephemeral and dynamic nature of containers. Observability becomes noisy and fragmented without standardization.

4. Resource Isolation and Multi-Tenancy

Balancing shared infrastructure with security and performance for different teams or business units is tricky. Kubernetes namespaces alone may not provide sufficient isolation.

5. Security and Policy Enforcement

Enforcing consistent RBAC policies, network segmentation, and compliance rules across multiple clusters can lead to blind spots and misconfigurations.

Best Practices and Scalable Solutions

To manage Kubernetes at scale effectively, enterprises need a layered, automation-driven strategy. Here are the key components:

1. GitOps for Declarative Infrastructure Management

GitOps leverages Git as the source of truth for infrastructure and application deployment. With tools like ArgoCD or Flux, you can:

Apply consistent configurations across clusters.

Automatically detect and roll back configuration drift.

Audit all changes through Git commit history.

Benefits:

· Immutable infrastructure

· Easier rollbacks

· Team collaboration and visibility

2. Centralized Cluster Management Platforms

Use centralized control planes to manage the lifecycle of multiple clusters. Popular tools include:

Rancher – Simplified Kubernetes management with RBAC and policy controls.

Red Hat OpenShift – Enterprise-grade PaaS built on Kubernetes.

VMware Tanzu Mission Control – Unified policy and lifecycle management.

Google Anthos / Azure Arc / Amazon EKS Anywhere – Cloud-native solutions with hybrid/multi-cloud support.

Benefits:

· Unified view of all clusters

· Role-based access control (RBAC)

· Policy enforcement at scale

3. Standardization with Helm, Kustomize, and CRDs

Avoid bespoke configurations per cluster. Use templating and overlays:

Helm: Define and deploy repeatable Kubernetes manifests.

Kustomize: Customize raw YAMLs without forking.

Custom Resource Definitions (CRDs): Extend Kubernetes API to include enterprise-specific configurations.

Pro Tip: Store and manage these configurations in Git repositories following GitOps practices.

4. Scalable Observability Stack

Deploy a centralized observability solution to maintain visibility across environments.

Prometheus + Thanos: For multi-cluster metrics aggregation.

Grafana: For dashboards and alerting.

Loki or ELK Stack: For log aggregation.

Jaeger or OpenTelemetry: For tracing and performance monitoring.

Benefits:

· Cluster health transparency

· Proactive issue detection

· Developer-friendly insights

5. Policy-as-Code and Security Automation

Enforce security and compliance policies consistently:

OPA + Gatekeeper: Define and enforce security policies (e.g., restrict container images, enforce labels).

Kyverno: Kubernetes-native policy engine for validation and mutation.

Falco: Real-time runtime security monitoring.

Kube-bench: Run CIS Kubernetes benchmark checks automatically.

Security Tip: Regularly scan clusters and workloads using tools like Trivy, Kube-hunter, or Aqua Security.
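The kind of rule a policy engine enforces (restricting container images, requiring labels) can be illustrated with a small Python sketch. This is not OPA, Gatekeeper, or Kyverno syntax; the `violations` function, the required label names, and the registry prefix are all hypothetical and only show the shape of an admission-style check.

```python
# Hypothetical policy settings for illustration only.
ALLOWED_REGISTRIES = ("registry.example.com/",)
REQUIRED_LABELS = {"app", "team"}


def violations(manifest):
    """Return a list of policy violations for a Pod-like manifest dict."""
    problems = []
    labels = manifest.get("metadata", {}).get("labels", {})
    missing = REQUIRED_LABELS - set(labels)
    if missing:
        problems.append("missing labels: " + ", ".join(sorted(missing)))
    for container in manifest.get("spec", {}).get("containers", []):
        # str.startswith accepts a tuple of allowed prefixes.
        if not container["image"].startswith(ALLOWED_REGISTRIES):
            problems.append("image not from an allowed registry: " + container["image"])
    return problems


pod = {
    "metadata": {"labels": {"app": "web"}},
    "spec": {"containers": [{"name": "web", "image": "docker.io/library/nginx:1.27"}]},
}
for problem in violations(pod):
    print(problem)
```

Dedicated policy engines apply such rules at admission time across every cluster, which is what makes them preferable to ad hoc scripts at scale.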

6. Autoscaling and Cost Optimization

To avoid resource wastage or service degradation:

Horizontal Pod Autoscaler (HPA) – Auto-scales pods based on metrics.

Vertical Pod Autoscaler (VPA) – Adjusts container resources.

Cluster Autoscaler – Scales nodes up/down based on workload.

Karpenter (AWS) – Next-gen open-source autoscaler with rapid provisioning.
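For intuition about how the Horizontal Pod Autoscaler picks a replica count, the Kubernetes documentation describes the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that rule; the function name and the min/max defaults are assumptions, and the real controller handles many more cases (missing metrics, stabilization windows, multiple metrics).

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA-style calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: do not scale
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical example: 4 pods at 90% average CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
```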

Conclusion

As Kubernetes adoption matures, organizations must rethink their management strategy to accommodate growth, reliability, and governance. The transition from a handful of clusters to enterprise-wide Kubernetes infrastructure requires automation, observability, and strong policy enforcement.

By adopting GitOps, centralized control planes, standardized templates, and automated policy tools, enterprises can achieve Kubernetes cluster management at scale—without compromising on security, reliability, or developer velocity.

CI/CD Pipelines in the Cloud: How to Achieve Faster, Safer Deployments

In today’s digital-first world, speed and reliability in software delivery are critical. With cloud infrastructure becoming the new normal, CI/CD pipelines (Continuous Integration and Continuous Deployment) are key to enabling faster, safer deployments. They help development teams automate code integration, testing, and deployment—removing bottlenecks and minimizing risks.

In this blog, we’ll explore how CI/CD works in cloud environments, why it’s essential for modern development, and how it supports seamless delivery of scalable, secure applications.

🛠️ What Is CI/CD?

Continuous Integration (CI): Developers frequently merge code into a shared repository. Each change triggers automated tests to catch bugs early.

Continuous Deployment (CD): After passing tests, code is automatically deployed to production or staging environments without manual intervention.

Together, CI/CD creates a feedback loop that ensures rapid, reliable software delivery.

🌐 Why CI/CD Pipelines Matter in the Cloud

CI/CD is not a luxury—it’s a necessity in the cloud. Here’s why:

Cloud environments are dynamic: With auto-scaling, microservices, and distributed systems, manual deployments are error-prone and slow.

Rapid release cycles: Customers expect continuous improvements. CI/CD helps teams ship features weekly or even daily.

Consistency and traceability: Every change is logged, tested, and version-controlled—reducing deployment risks.

The cloud provides the perfect infrastructure for CI/CD pipelines, offering scalability, flexibility, and automation capabilities.

🔁 How a Cloud-Based CI/CD Pipeline Works

A typical CI/CD pipeline in the cloud includes:

Code Commit: Developers push code to a Git repository (e.g., GitHub, GitLab, Bitbucket).

Build & Test: Cloud-native CI tools (like GitHub Actions, AWS CodeBuild, or CircleCI) compile the code and run unit/integration tests.

Artifact Creation: Build artifacts (e.g., Docker images) are stored in cloud repositories (e.g., Amazon ECR, Azure Container Registry).

Deployment: Tools like AWS CodeDeploy, Azure DevOps, or ArgoCD deploy the artifacts to target environments.

Monitoring: Real-time monitoring and alerts ensure the deployment is successful and stable.
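The stages above run in order, and a failure in one stage stops the later ones. A minimal Python sketch of that control flow follows; the `run_pipeline` function and the stage names are hypothetical, and each stage is a stand-in callable rather than a real build or deploy step.

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order and stop at the first failing step.

    Returns (completed_stage_names, failed_stage_name_or_None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None


# Stand-in steps: each returns True on success. The deploy step fails here.
stages = [
    ("commit", lambda: True),
    ("build_and_test", lambda: True),
    ("artifact", lambda: True),
    ("deploy", lambda: False),
    ("monitor", lambda: True),
]

completed, failed = run_pipeline(stages)
print("completed:", completed, "failed:", failed)
```

Real CI systems express the same idea declaratively, with each stage gated on the success of the previous one.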

✅ Benefits of Cloud CI/CD

1. Faster Time-to-Market

Automated testing and deployment reduce manual overhead, accelerating release cycles.

2. Improved Code Quality

Each commit is tested, catching bugs early and ensuring only clean code reaches production.

3. Consistent Deployments

Standardized pipelines eliminate the “it worked on my machine” problem, ensuring repeatable results.

4. Efficient Collaboration

CI/CD fosters DevOps culture, encouraging collaboration between developers, testers, and operations.

5. Scalability on Demand

Cloud CI/CD systems scale automatically with the size and complexity of the application.

🔐 Security and Compliance in CI/CD

Modern pipelines are integrating security and compliance checks directly into the deployment process (DevSecOps). These include:

Static and dynamic code analysis

Container vulnerability scans

Secrets detection

Infrastructure compliance checks

By catching vulnerabilities early, businesses reduce risks and ensure regulatory alignment.

📊 CI/CD with IaC and Automated Testing

CI/CD becomes even more powerful when combined with:

Infrastructure as Code (IaC) for consistent cloud infrastructure provisioning

Automated Testing to validate performance, functionality, and security

Together, they create a fully automated, end-to-end deployment ecosystem that supports cloud-native scalability and resilience.

🏢 How Salzen Cloud Builds Smart CI/CD Solutions

At Salzen Cloud, we design and implement cloud-native CI/CD pipelines tailored to your business needs. Whether you're deploying microservices on Kubernetes, serverless applications, or hybrid environments, we help you:

Automate build, test, and deployment workflows

Integrate security and compliance into your pipelines

Optimize for speed, reliability, and rollback safety

Monitor performance and deployment health in real time

Our solutions empower teams to ship faster, fix faster, and innovate with confidence.

🧩 Conclusion

CI/CD is the backbone of modern cloud development. It streamlines the software delivery process, reduces manual errors, and provides the agility needed to stay competitive.

To achieve faster, safer deployments in the cloud:

Adopt CI/CD pipelines for all projects

Integrate IaC and automated testing

Shift security left with DevSecOps practices

Continuously monitor and optimize your pipelines

With the right CI/CD strategy, your team can move fast without breaking things—delivering value to users at cloud speed.

Want help designing a CI/CD pipeline for your cloud environment? Let Salzen Cloud show you how.

Mastering GitOps with Kubernetes: The Future of Cloud-Native Application Management

In the world of modern cloud-native application management, GitOps has emerged as a game-changer. By combining the power of Git as a single source of truth with Kubernetes for infrastructure orchestration, GitOps enables seamless deployment, monitoring, and management of applications. Let’s dive into what GitOps is, how it integrates with Kubernetes, and why it’s a must-have for DevOps teams.

What is GitOps?

GitOps is a DevOps practice that uses Git repositories as the single source of truth for declarative infrastructure and application configurations. The GitOps workflow automates deployment processes, ensuring:

Consistency: Changes are tracked and version-controlled.

Simplicity: The Git repository acts as the central command center.

Reliability: Rollbacks are effortless, thanks to Git’s history.

Why GitOps and Kubernetes are a Perfect Match

Kubernetes is designed for container orchestration and declarative infrastructure, making it an ideal companion for GitOps. Here’s why the two fit perfectly together:

Declarative Configuration: Kubernetes inherently uses declarative YAML manifests, which align well with GitOps principles. All changes can be stored and managed in Git.

Automated Deployments: Tools like ArgoCD and Flux monitor Git repositories for updates and automatically apply changes to Kubernetes clusters. This reduces manual intervention and human error.

Continuous Delivery: Kubernetes controllers continuously work to keep the cluster in the desired state, while the GitOps tool keeps that desired state aligned with what is declared in Git. This makes the delivery side of the CI/CD pipeline more predictable.

Auditability: With Git, every infrastructure or application change is version-controlled. This enhances traceability and simplifies compliance.

Benefits of GitOps with Kubernetes

Enhanced Developer Productivity: Developers can focus on writing code and committing changes without worrying about the complexities of infrastructure management.

Improved Security: With Git as the central source of truth, changes flow through commits and reviews, so far fewer people need direct access to the Kubernetes cluster, which reduces security risks.

Faster Recovery: Rolling back to a previous state is as simple as reverting a Git commit and letting the GitOps tool sync the change.

Scalability: GitOps is well suited to managing large-scale Kubernetes clusters, ensuring consistency across multiple environments.

Getting Started with GitOps on Kubernetes

To implement GitOps with Kubernetes, follow these steps:

Set Up a Git Repository: Create a repository for your Kubernetes manifests and configurations. Structure it logically to separate environments (e.g., dev, staging, production).

Choose a GitOps Tool: Popular tools include the following.

ArgoCD: A Kubernetes-native continuous delivery tool.

Flux: A powerful tool for GitOps workflows.

Define Infrastructure as Code (IaC): Write your Kubernetes configurations (deployments, services, etc.) as YAML files and store them in Git.

Enable Continuous Reconciliation: Configure your GitOps tool to watch the Git repository and sync changes automatically to the Kubernetes cluster.

Monitor and Iterate: Use Kubernetes monitoring tools (e.g., Prometheus, Grafana) to observe the cluster's state and refine configurations as needed.
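The continuous reconciliation step can be sketched in a few lines of Python. This is an illustration of the idea, not how ArgoCD or Flux are implemented: the `reconcile` function, the in-memory `cluster` dictionary, and the action names are assumptions made for the example.

```python
def reconcile(desired, cluster, prune=True):
    """Make the in-memory `cluster` match `desired` and return the actions taken."""
    actions = []
    for name, spec in desired.items():
        if name not in cluster:
            cluster[name] = dict(spec)
            actions.append(("create", name))
        elif cluster[name] != spec:
            cluster[name] = dict(spec)
            actions.append(("update", name))
    if prune:
        # Remove resources that exist in the cluster but are no longer in Git.
        for name in [n for n in cluster if n not in desired]:
            del cluster[name]
            actions.append(("prune", name))
    return actions


# Hypothetical state: Git declares two resources, the cluster has drifted.
desired = {"web": {"replicas": 3}, "api": {"replicas": 2}}
cluster = {"web": {"replicas": 1}, "legacy": {"replicas": 1}}

print(reconcile(desired, cluster))  # first pass applies changes
print(reconcile(desired, cluster))  # second pass finds nothing to do
```

Running the function twice shows the property that matters most: once the cluster matches Git, reconciliation becomes a no-op, so it is safe to repeat on a timer or on every commit.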

Real-World Use Cases

Application Deployment: Automate the deployment of new application versions across multiple environments.

Cluster Management: Manage infrastructure upgrades and scaling operations through Git.

Disaster Recovery: Restore clusters to a known-good state by reverting to a previous Git commit.

Challenges to Overcome

While GitOps offers many advantages, there are a few challenges to consider:

Learning Curve: Teams need to understand GitOps workflows and tools.

Complexity at Scale: Managing large, multi-cluster environments requires careful repository organization.

Tooling Dependencies: GitOps tools must be properly configured and maintained.

The Future of GitOps and Kubernetes

As enterprises increasingly adopt cloud-native architectures, GitOps will become a cornerstone of efficient, reliable, and secure application management. By integrating GitOps with Kubernetes, organizations can achieve faster delivery cycles, improved operational stability, and better scalability.

Conclusion

GitOps with Kubernetes is more than just a trend; it is a paradigm shift in how infrastructure and applications are managed. Whether you're a startup or an enterprise, adopting GitOps practices will empower your DevOps teams to build and manage cloud-native applications with confidence.

Looking to implement GitOps in your organization? HawkStack offers tailored solutions to help you streamline your DevOps processes with Kubernetes and GitOps. Contact us today to learn more!

The following is an example project that uses ArgoCD to manage Kubernetes deployments.

How can you automate Kubernetes clusters with ArgoCD?: "Automate your Kubernetes cluster with ArgoCD & MHM Digitale Lösungen UG!"

Automation, application development, and infrastructure as code are the keys to successful DevOps projects. Use continuous deployment and continuous integration to automate your #Kubernetes cluster with #ArgoCD. Learn more at #MHMDigitalSolutionsUG! #DevOps #Anwendungsentwicklung #InfrastructureAsCode

With ArgoCD and MHM Digitale Lösungen UG, you can automate your Kubernetes cluster quickly and easily. ArgoCD is an open-source continuous delivery tool that automates changes to Kubernetes applications and tracks them in real time. It lets developers ensure that their changes are rolled out according to preferences and policies. MHM…

GitOps: Argo cd and application deployment demo – part II

Hello people! In the previous article we deployed a GitOps tool, but so far we have not done anything interesting with it.

5 GitOps Tools that you need to know!

I have been getting heavily into GitOps in the home lab lately and carrying those skills into production environments. GitOps is a methodology that focuses on deployments being sourced from a Git repo. Using GitOps, you can encapsulate everything in your Git repo and then make sure your apps are applied to your environment in a declarative way. Let’s look at 5 tools that you need to know for…

Fleet-Argocd-Plugin Streamlines Multi-Cluster Kubernetes

Introducing Google’s Fleet-Argocd-Plugin, Simplifying Multi-Cluster Management for GKE Fleets

Empower your teams with self-service Kubernetes using GKE fleets and Argo CD

It can be challenging to manage apps across several Kubernetes clusters, particularly when those clusters are spread across various environments or even cloud providers. Google Kubernetes Engine (GKE) fleets and Argo CD, a declarative, GitOps continuous delivery platform for Kubernetes, are combined in one potent and secure solution. Workload Identity and Connect Gateway further improve the solution.

This blog post explains how to use these offerings to build a robust, team-centric multi-cluster architecture. Google uses a sample GKE fleet with a control cluster that hosts Argo CD and application clusters that run your workloads. Connect Gateway and Workload Identity strengthen security and streamline authentication, allowing Argo CD to manage clusters securely without having to maintain cumbersome Kubernetes Service Accounts.

Additionally, it uses GKE Enterprise Teams to control resources and access, helping ensure that every team has the appropriate namespaces and permissions inside this secure environment.

Lastly, Google presents the fleet-argocd-plugin, a custom Argo CD generator intended to make cluster management in this complex setup easier. By automatically importing your GKE fleet cluster list into Argo CD and keeping the cluster information synchronized, the plugin makes it simpler for platform administrators to manage resources and for application teams to concentrate on deployments.

Follow along as Google Cloud shows how to:

Build a GKE fleet that includes control and application clusters.

Install Argo CD on the control cluster with Workload Identity and Connect Gateway set up.

Set up GKE Enterprise Teams to have more precise access control.

Install the fleet-argocd-plugin and use it to manage your multi-cluster, secure fleet with team awareness.

By the end, you will have built a robust, automated multi-cluster system using GKE fleets, Argo CD, Connect Gateway, Workload Identity, and Teams, ready to meet the varied demands and security requirements of your company. Let’s get started!

Create a multi-cluster infrastructure using Argo CD and the GKE fleet

The procedure for configuring a prototype GKE fleet is simple:

In the selected Google Cloud Project, enable the necessary APIs. This project serves as the host project for the fleet.

Installing the gcloud SDK and logging in with gcloud auth are prerequisites.

Register your application clusters with the fleet host project.

Set up teams within your fleet. Assume you have a single frontend team with a webserver namespace.

a. Using fleet teams and fleet namespaces, you can control which team has access to particular namespaces on particular clusters.

Now set up and deploy Argo CD to the control cluster. Create a new GKE cluster to serve as the control cluster and set up Workload Identity on it.

To communicate with the Argo CD API server, install the Argo CD CLI. It must be version 2.8.0 or later. The CLI installation guide contains comprehensive installation instructions.

Install Argo CD on the control cluster.

Argo CD generator customization

You have now installed Argo CD on the control cluster and your GKE fleet is operational. In Argo CD, application clusters are registered with the control cluster by saving their credentials (such as the API server address and authentication details) as Kubernetes Secrets in the Argo CD namespace. There is a way to greatly simplify this process!

A customized Argo CD plugin generator called fleet-argocd-plugin simplifies cluster administration by:

Automatically configuring the cluster secret objects for every application cluster and loading your GKE fleet cluster list into Argo CD

Monitoring the state of your fleet on Google Cloud and ensuring that your Argo CD cluster list is consistently current and in sync
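To make the cluster secret objects mentioned above more concrete, here is a minimal Python sketch of the kind of Secret Argo CD reads for a registered cluster. It is not the plugin's actual code. The function name `fleet_cluster_secret`, the Connect Gateway URL layout, and the `<membership>.<location>.<project number>` naming (inferred from the example output later in this post) are assumptions for illustration; the `argocd.argoproj.io/secret-type: cluster` label and the `argocd-k8s-auth gcp` exec provider follow Argo CD's declarative cluster setup.

```python
import json


def fleet_cluster_secret(project_number: str, membership: str,
                         location: str = "us-central1") -> dict:
    """Build an Argo CD cluster Secret manifest for one fleet member (illustrative)."""
    # Assumed Connect Gateway endpoint layout; verify it against your own fleet.
    server = (
        "https://connectgateway.googleapis.com/v1/"
        f"projects/{project_number}/locations/{location}/gkeMemberships/{membership}"
    )
    # Argo CD obtains a Google identity token through its auth helper,
    # so no long-lived service account key is stored in the Secret.
    config = {
        "execProviderConfig": {
            "command": "argocd-k8s-auth",
            "args": ["gcp"],
            "apiVersion": "client.authentication.k8s.io/v1beta1",
        },
        "tlsClientConfig": {"insecure": False, "caData": ""},
    }
    # Hypothetical naming scheme, modeled on the demo output.
    cluster_name = f"{membership}.{location}.{project_number}"
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": cluster_name,
            "namespace": "argocd",
            # This label is how Argo CD recognizes a Secret as a cluster entry.
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {
            "name": cluster_name,
            "server": server,
            "config": json.dumps(config),
        },
    }


secret = fleet_cluster_secret("141594892609", "app-cluster-1")
print(json.dumps(secret, indent=2))
```

Writing one of these by hand for every cluster, and keeping it current as the fleet changes, is the chore the plugin is meant to remove.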

Let’s now see how to build and configure the Argo CD generator.

Install the fleet-argocd-plugin on your control cluster.

a. In this demonstration, the fleet-argocd-plugin is built and deployed using Cloud Build.

Grant the fleet-argocd-plugin the appropriate fleet management permissions so that it functions as intended.

a. Create an IAM service account for your Argo CD control cluster and grant it the necessary permissions. The configuration follows the official onboarding guide for GKE Workload Identity Federation.

b. You must also grant the Google Compute Engine service account access to the images in your artifacts repository.

Run the fleet plugin on your Argo CD control cluster!

Demo time

Let’s take a quick look to confirm that the GKE fleet and Argo CD are working well together. You should see that the secrets for your application clusters have been generated automatically.

Demo 1: Argo CD’s automated fleet management

Alright, let’s check this out! The guestbook sample app will be used. Start by deploying it to the frontend team’s clusters. After that, you should be able to see the guestbook app running on your application clusters without having to manually handle any cluster secrets!

export TEAM_ID=frontend
envsubst '$FLEET_PROJECT_NUMBER $TEAM_ID' < applicationset-demo.yaml | kubectl apply -f - -n argocd

kubectl config set-context --current --namespace=argocd
argocd app list -o name

Example Output:

argocd/app-cluster-1.us-central1.141594892609-webserver

argocd/app-cluster-2.us-central1.141594892609-webserver

Demo 2: Fleet-argocd-plugin makes fleet evolution simple

Let’s say you decide to give the frontend team another cluster. Create a new GKE cluster and bind it to the frontend team. Then check whether your guestbook app has been deployed to the new cluster.

gcloud container clusters create app-cluster-3 --enable-fleet --region=us-central1
gcloud container fleet memberships bindings create app-cluster-3-b \
  --membership app-cluster-3 \
  --scope frontend \
  --location us-central1

argocd app list -o name

Example Output: a new app shows up!

argocd/app-cluster-1.us-central1.141594892609-webserver

argocd/app-cluster-2.us-central1.141594892609-webserver

argocd/app-cluster-3.us-central1.141594892609-webserver
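The behavior in this demo can be modeled as a generator that emits one application per cluster and team namespace. The sketch below is a simplified Python model, not the ApplicationSet controller or the plugin itself; the helper name `expected_apps` and the naming pattern are assumptions taken from the example output above.

```python
def expected_apps(clusters, project_number, namespaces, location="us-central1"):
    """Return the app names a per-cluster, per-namespace template would yield."""
    return [
        f"argocd/{cluster}.{location}.{project_number}-{namespace}"
        for cluster in clusters
        for namespace in namespaces
    ]


project = "141594892609"
before = expected_apps(["app-cluster-1", "app-cluster-2"], project, ["webserver"])
after = expected_apps(
    ["app-cluster-1", "app-cluster-2", "app-cluster-3"], project, ["webserver"]
)

# Adding a cluster to the team's scope is the only input that changed,
# so the difference is exactly the new application.
new_apps = sorted(set(after) - set(before))
print(new_apps)  # ['argocd/app-cluster-3.us-central1.141594892609-webserver']
```

The point of the model is that nobody edits the application list directly: it is derived from fleet membership, so binding a cluster to the team is enough.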

Final reflections

In this blog post, we’ve demonstrated how to build a reliable and automated multi-cluster platform by combining the capabilities of GKE fleets, Argo CD, Connect Gateway, Workload Identity, and GKE Enterprise Teams. With these technologies you can improve security, streamline Kubernetes operations, and enable your teams to manage and deploy apps effectively throughout your fleet.

As you continue with multi-cluster Kubernetes, remember that GKE fleets and Argo CD offer a strong foundation for a scalable, secure, and efficient platform.

Read more on Govindhtech.com

#MulticlusterGKE#GKE#Kubernetes#GKEFleet#AgroCD#Google#GoogleCloud#govindhtech#NEWS#technews#TechnologyNews#technologies#technology#technologytrends

1 note


Text

Upscale your Continuous Deployment at Enterprise-grade with ArgoCD

#argocD #ContinuousDeployment #cd #cicd #argo #kubernetes #k8s #azure #aks #eks #git #gitops #scalability #enterprise #opensource

So far, we have discussed ArgoCD and how to use it. When it comes to running any application at production or enterprise level, there are a lot of factors to consider. What are they? Before we get to that, it is important to understand what the tool is capable of. Let’s recall: did you know that Argo CD can support thousands of applications and connect to hundreds of Kubernetes clusters?…

View On WordPress

0 notes

Text

GitOps: A Streamlined Approach to Kubernetes Automation

In today’s fast-paced DevOps world, automation is the key to achieving efficiency, scalability, and reliability in software deployments. Kubernetes has become the de facto standard for container orchestration, but managing its deployments effectively can be challenging. This is where GitOps comes into play, providing a streamlined approach for automating the deployment and maintenance of Kubernetes clusters by leveraging Git repositories as a single source of truth.

What is GitOps?

GitOps is a declarative way of managing infrastructure and application deployments using Git as the central control mechanism. Instead of manually applying configurations to Kubernetes clusters, GitOps ensures that all desired states of the system are defined in a Git repository and automatically synchronized to the cluster.

With GitOps, every change to the infrastructure and applications goes through version-controlled pull requests, enabling transparency, auditing, and easy rollbacks if necessary.

How GitOps Works with Kubernetes

GitOps enables a Continuous Deployment (CD) approach to Kubernetes by maintaining configuration and application states in a Git repository. Here’s how it works:

Define Desired State – Kubernetes manifests (YAML files), Helm charts, or Kustomize configurations are stored in a Git repository.

Automatic Synchronization – A GitOps operator (such as ArgoCD or Flux) continuously monitors the repository for changes.

Deployment Automation – When a change is detected, the operator applies the new configurations to the Kubernetes cluster automatically.

Continuous Monitoring & Drift Detection – GitOps ensures the actual state of the cluster matches the desired state. If discrepancies arise, it can either notify or automatically correct them.
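The four steps above amount to a reconciliation loop. Here is a minimal Python sketch of that idea, with plain dictionaries standing in for the Git repository and the cluster. The function names `diff_state` and `reconcile` are hypothetical, and real operators such as ArgoCD or Flux do far more (rendering, ordering, health checks), so read this as an illustration of the pattern only.

```python
def diff_state(desired: dict, actual: dict) -> dict:
    """Map each drifted resource name to a (current, wanted) pair."""
    drift = {}
    for name in desired.keys() | actual.keys():
        wanted, current = desired.get(name), actual.get(name)
        if wanted != current:
            drift[name] = (current, wanted)
    return drift


def reconcile(desired: dict, actual: dict, self_heal: bool = True):
    """Run one reconciliation pass and return (new_actual, drift).

    With self_heal=False the drift is only reported (the "notify" option).
    With self_heal=True the cluster is made to match Git, which in this
    sketch also prunes resources that Git no longer declares.
    """
    drift = diff_state(desired, actual)
    if not self_heal or not drift:
        return actual, drift
    return dict(desired), drift


# Desired state as committed to Git (illustrative values).
git_state = {"web": {"image": "nginx:1.27", "replicas": 3}}
# Someone scaled "web" by hand and left a debug pod behind.
cluster_state = {
    "web": {"image": "nginx:1.27", "replicas": 5},
    "debug": {"image": "busybox", "replicas": 1},
}

cluster_state, drift = reconcile(git_state, cluster_state)
print(sorted(drift))               # ['debug', 'web']
print(cluster_state == git_state)  # True
```

Running the same pass again finds no drift, which is the property that makes the loop safe to repeat continuously.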

Benefits of GitOps for Kubernetes

✅ Improved Security & Compliance – Since all changes are tracked in Git, auditing is straightforward, ensuring security and compliance.

✅ Faster Deployments & Rollbacks – Automation speeds up deployments while Git history allows for easy rollbacks if issues arise.

✅ Enhanced Collaboration – Teams work with familiar Git workflows (pull requests, approvals) instead of manually modifying clusters.

✅ Reduced Configuration Drift – Ensures the cluster is always in sync with the repository, minimizing configuration discrepancies.

Popular GitOps Tools for Kubernetes

Several tools help implement GitOps in Kubernetes environments:

ArgoCD – A declarative GitOps CD tool for Kubernetes.

Flux – A GitOps operator that automates deployment using Git repositories.

Kustomize – A Kubernetes-native configuration management tool, often used to produce the manifests that a GitOps operator applies.

Helm – A package manager for Kubernetes that simplifies application deployment.

Conclusion

GitOps simplifies Kubernetes management by integrating version control, automation, and continuous deployment. By leveraging Git as the single source of truth, organizations can achieve better reliability, faster deployments, and improved operational efficiency. As Kubernetes adoption grows, embracing GitOps becomes an essential strategy for modern DevOps workflows.

Are you ready to streamline your Kubernetes automation with GitOps? Start implementing today with tools like ArgoCD, Flux, and Helm, and take your DevOps strategy to the next level! 🚀

For more details, visit www.hawkstack.com

#GitOps #Kubernetes #DevOps #ArgoCD #FluxCD #ContinuousDeployment #CloudNative

0 notes

Text

Kubernetes vs. Traditional Infrastructure: Why Clusters and Pods Win

In today’s fast-paced digital landscape, agility, scalability, and reliability are not just nice-to-haves—they’re necessities. Traditional infrastructure, once the backbone of enterprise computing, is increasingly being replaced by cloud-native solutions. At the forefront of this transformation is Kubernetes, an open-source container orchestration platform that has become the gold standard for managing containerized applications.

But what makes Kubernetes a superior choice compared to traditional infrastructure? In this article, we’ll dive deep into the core differences, and explain why clusters and pods are redefining modern application deployment and operations.

Understanding the Fundamentals

Before drawing comparisons, it’s important to clarify what we mean by each term:

Traditional Infrastructure

This refers to monolithic, VM-based environments typically managed through manual or semi-automated processes. Applications are deployed on fixed servers or VMs, often with tight coupling between hardware and software layers.

Kubernetes

Kubernetes abstracts away infrastructure by using clusters (groups of nodes) to run pods (the smallest deployable units of computing). It automates deployment, scaling, and operations of application containers across clusters of machines.

Key Comparisons: Kubernetes vs Traditional Infrastructure

Feature | Traditional Infrastructure | Kubernetes
Scalability | Manual scaling of VMs; slow and error-prone | Auto-scaling of pods and nodes based on load
Resource Utilization | Inefficient due to over-provisioning | Efficient bin-packing of containers
Deployment Speed | Slow and manual (e.g., SSH into servers) | Declarative deployments via YAML and CI/CD
Fault Tolerance | Rigid failover; high risk of downtime | Self-healing, with automatic pod restarts and rescheduling
Infrastructure Abstraction | Tightly coupled; app knows about the environment | Decoupled; Kubernetes abstracts compute, network, and storage
Operational Overhead | High; requires manual configuration, patching | Low; centralized, automated management
Portability | Limited; hard to migrate across environments | High; deploy to any Kubernetes cluster (cloud, on-prem, hybrid)

Why Clusters and Pods Win

1. Decoupled Architecture

Traditional infrastructure often binds application logic tightly to specific servers or environments. Kubernetes promotes microservices and containers, isolating app components into pods. These can run anywhere without knowing the underlying system details.

2. Dynamic Scaling and Scheduling

In a Kubernetes cluster, pods can scale automatically based on real-time demand. The Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler help dynamically adjust resources—unthinkable in most traditional setups.
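As a rough illustration of how the Horizontal Pod Autoscaler decides on a replica count, the Kubernetes documentation describes the core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The Python sketch below implements just that formula plus the default 10% tolerance. The function name is hypothetical, and the real controller also clamps to minReplicas and maxReplicas and applies stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA calculation: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the workload alone,
    # which avoids flapping on small metric changes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 62% against a 60% target is inside the tolerance: no change.
print(desired_replicas(4, 62, 60))  # 4
# 30% against a 60% target: scale in.
print(desired_replicas(4, 30, 60))  # 2
```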

3. Resilience and Self-Healing

Kubernetes watches your workloads continuously. If a pod crashes or a node fails, the system automatically reschedules the workload on healthy nodes. This built-in self-healing drastically reduces operational overhead and downtime.

4. Faster, Safer Deployments

With declarative configurations and GitOps workflows, teams can deploy with speed and confidence. Rolling updates and rollbacks are built into Deployments, and canary or blue/green strategies can be layered on with labels, Services, or add-ons such as Argo Rollouts, streamlining what’s often a risky manual process in traditional environments.

5. Unified Management Across Environments

Whether you're deploying to AWS, Azure, GCP, or on-premises, Kubernetes provides a consistent API and toolchain. No more re-engineering apps for each environment—write once, run anywhere.

Addressing Common Concerns

“Kubernetes is too complex.”

Yes, Kubernetes has a learning curve. But its complexity replaces operational chaos with standardized automation. Tools like Helm, ArgoCD, and managed services (e.g., GKE, EKS, AKS) help simplify the onboarding process.

“Traditional infra is more secure.”

Security in traditional environments often depends on network perimeter controls. Kubernetes promotes zero trust principles, pod-level isolation, and RBAC, and integrates with service meshes like Istio for granular security policies.

Real-World Impact

Companies like Spotify, Shopify, and Airbnb have migrated from legacy infrastructure to Kubernetes to:

Reduce infrastructure costs through efficient resource utilization

Accelerate development cycles with DevOps and CI/CD

Enhance reliability through self-healing workloads

Enable multi-cloud strategies and avoid vendor lock-in

Final Thoughts

Kubernetes is more than a trend—it’s a foundational shift in how software is built, deployed, and operated. While traditional infrastructure served its purpose in a pre-cloud world, it can’t match the agility and scalability that Kubernetes offers today.

Clusters and pods don’t just win—they change the game.

0 notes

Text

GitOps – Streamlining Kubernetes with Automated Workflows

In the world of cloud-native applications, managing Kubernetes clusters efficiently has become more critical than ever. As businesses scale, the need for automation and streamlined processes in deploying and maintaining these clusters becomes paramount. Enter GitOps – an innovative approach that leverages Git repositories to automate and manage Kubernetes clusters seamlessly.

What is GitOps?

GitOps is an operational framework that applies DevOps best practices—such as version control, collaboration, and continuous integration/continuous deployment (CI/CD)—to infrastructure automation. At its core, GitOps uses Git as the single source of truth for your entire system.

How GitOps Simplifies Kubernetes Management

In traditional setups, managing Kubernetes clusters involves manual processes and human intervention, often leading to configuration drift, errors, and inefficiencies. With GitOps, all configuration files (such as YAML) are stored in a Git repository. Whenever a change is needed, it is made in the repository and automatically applied to the Kubernetes cluster.

GitOps focuses on automation through continuous reconciliation. This means that the desired state of your system, defined in Git, is continuously compared to the actual state in Kubernetes. If there’s any discrepancy, GitOps tools like Flux or ArgoCD detect and automatically correct it, ensuring consistency and minimizing manual errors.

Key Benefits of Using GitOps with Kubernetes

Version Control and Auditability: Every change to your cluster configuration is stored in Git, providing a clear audit trail. You can easily track who made changes and when they were applied.

Automated Deployments: By automating the deployment process, GitOps significantly reduces the time it takes to roll out changes. This speeds up release cycles and boosts developer productivity.

Improved Security: GitOps ensures that only approved changes (those committed in the Git repository) are deployed to your clusters. This minimizes the risk of unauthorized or accidental modifications.

Easier Rollbacks: Since every configuration is versioned, you can quickly roll back to a previous state in case something goes wrong, providing added stability and control.

Scalability: GitOps allows teams to manage large-scale Kubernetes clusters with ease, even in multi-cloud or hybrid cloud environments. The framework simplifies the management of complex environments by making the cluster's desired state declarative and version-controlled.
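The auditability and rollback benefits above follow from treating cluster configuration as an append-only history. The Python sketch below models that idea with an in-memory list; the `Commit` type and `revert_last` helper are hypothetical stand-ins for Git itself. It shows a rollback as a new commit that restores the previous state, so the audit trail stays intact.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    sha: str      # illustrative identifier, not a real Git hash
    author: str
    state: dict   # desired cluster state recorded by this commit


def revert_last(history: list, new_sha: str, author: str) -> list:
    """Return a new history whose latest commit restores the previous state."""
    if len(history) < 2:
        raise ValueError("nothing to roll back to")
    previous = history[-2]
    # Append rather than delete: the faulty change remains visible in the log.
    return history + [Commit(new_sha, author, dict(previous.state))]


history = [
    Commit("a1", "dana", {"web": {"image": "shop:1.4"}}),
    Commit("b2", "lee", {"web": {"image": "shop:1.5"}}),  # faulty release
]
history = revert_last(history, "c3", "dana")

print([(c.sha, c.author) for c in history])  # who changed what, in order
print(history[-1].state == history[0].state)  # True
```

Once the revert lands in the repository, the GitOps operator treats it like any other change and brings the cluster back to the earlier configuration.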

How HawkStack Supports GitOps and Kubernetes Automation

At HawkStack, we specialize in providing advanced DevOps tools and support for businesses looking to streamline their Kubernetes operations. Our team leverages GitOps principles to offer Kubernetes automation, ensuring a highly efficient, scalable, and secure infrastructure.

Whether you're starting your cloud-native journey or looking to enhance your existing Kubernetes setup, HawkStack’s tailored services can help you implement GitOps strategies to optimize performance and reduce operational complexity.

Conclusion

GitOps is revolutionizing the way Kubernetes clusters are deployed and managed. By using Git as a source of truth and automating key processes, businesses can enjoy faster deployments, enhanced security, and scalable management. With HawkStack's expertise in DevOps and Kubernetes automation, you're set to embrace this modern approach and drive business growth efficiently.

Visit HawkStack today to learn more about our services and how we can help you implement GitOps for your Kubernetes clusters.

0 notes