#Custom Reports Via SQL Queries


Unlock the Power of Data: Start Your Power BI Training Journey Today!

Introduction: The Age of Data Mastery

The world runs on data — from e-commerce trends to real-time patient monitoring, logistics optimization to financial forecasting. But data without clarity is chaos. That’s why the demand for data-driven professionals is skyrocketing.

If you’re wondering where to begin, the answer lies in Power BI training, which teaches you to visualize, interpret, and tell stories with data. When paired with Azure Data Factory (ADF) training, it takes you beyond being a data user: you build the skills of a data engineer, storyteller, and business enabler.

Section 1: Power BI — Your Data Storytelling Toolkit

What is Power BI?

Power BI is a suite of business analytics tools by Microsoft that connects to hundreds of data sources, cleans and shapes the data, and turns it into interactive reports and dashboards.

Key Features:

Data modeling and transformation (Power Query & DAX)

Drag-and-drop visual report building

Real-time dashboard updates

Integration with Excel, SQL databases, SharePoint, and cloud platforms

Easy sharing via Power BI Service and Power BI Mobile

Why you need Power BI training:

It’s beginner-friendly yet powerful enough for experts

You learn to analyze trends, uncover insights, and support decisions

Widely used by Fortune 500 companies and startups alike

Power BI course content usually includes:

Data import and transformation

Data relationships and modeling

DAX formulas

Visualizations and interactivity

Publishing and sharing dashboards

Section 2: Azure Data Factory (ADF) Training – Automate Your Data Flows

While Power BI helps with analysis and reporting, tools like Azure Data Factory (ADF) are essential for preparing that data before analysis.

What is Azure Data Factory?

Azure Data Factory is a cloud-based ETL (Extract, Transform, Load) tool by Microsoft used to create data-driven workflows for moving and transforming data.
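ADF pipelines are authored in Azure (through its visual designer or JSON definitions) rather than in Python. The extract, transform, load pattern they automate can still be illustrated locally. This minimal sketch uses only Python's standard library. The CSV contents and the `sales` table are hypothetical, SQLite stands in for a destination data store, and none of this reflects ADF's own API.

```python
import csv
import io
import sqlite3

# Hypothetical raw CSV export from a source system (input to the "extract" step).
RAW_CSV = """order_id,region,amount
1,North,120.50
2,South,
3,North,79.50
4,East,200.00
"""

def extract(text):
    """Extract: read CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with a missing amount and convert types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), row["region"], float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a reporting table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id INTEGER, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

# A report query over the loaded data, similar to what a Power BI visual would show.
totals = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)  # [('East', 200.0), ('North', 200.0)]
```

Each function covers one stage, which is roughly the separation an ADF pipeline expresses as separate activities.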

ADF Training helps you master:

Building pipelines to move data from databases, CRMs, APIs, and more

Scheduling automated data refreshes

Monitoring pipeline executions

Using triggers and parameters

Integrating with Azure services and on-prem data

Azure Data Factory training complements your Power BI course by:

Giving you end-to-end data skills: from ingestion → transformation → reporting

Teaching you how to scale workflows using cloud resources

Prepping you for roles in Data Engineering and Cloud Analytics

Section 3: Real-Life Applications and Benefits

After completing Power BI and Azure Data Factory (ADF) training, you’ll be ready to tackle real-world business scenarios in roles such as:

Business Intelligence Analyst

Track KPIs and performance in real-time dashboards

Help teams make faster, better decisions

Data Engineer

Build automated workflows to handle terabytes of data

Integrate enterprise data from multiple sources

Marketing Analyst

Visualize campaign performance and audience behavior

Use dashboards to influence creative and budgeting

Healthcare Data Analyst

Analyze patient data for improved diagnosis

Predict outbreaks or resource needs with live dashboards

Small Business Owner

Monitor sales, inventory, customer satisfaction — all in one view

Automate reports instead of doing them manually every week

Section 4: What Will You Achieve?

Tangible Career Growth

Access to high-demand roles: Data Analyst, Power BI Developer, Azure Data Engineer, Cloud Analyst

Average salaries range from $70,000 to $130,000 annually (varies by country)

Future-Proof Skills

Data skills are relevant in every sector: retail, finance, healthcare, manufacturing, and IT

Learn the Microsoft ecosystem, which is widely used by enterprises worldwide

Practical Confidence

Work on real projects, not just theory

Build a portfolio of dashboards, ADF pipelines, and data workflows

Certification Readiness

Prepares you for exams such as Microsoft Certified: Power BI Data Analyst Associate (PL-300) and Azure Data Engineer Associate (DP-203)

Conclusion: Data Skills That Drive You Forward

In an era when businesses are racing toward digital transformation, those who understand data will lead the way. Learning Power BI and completing Azure Data Factory (ADF) training gives you a complete, end-to-end data toolkit.

Whether you’re stepping into IT, upgrading your current role, or launching your own analytics venture, now is the time to act. These skills don’t just give you a job — they build your confidence, capability, and career clarity.



Generative AI Platform Development Explained: Architecture, Frameworks, and Use Cases That Matter in 2025

The rise of generative AI is no longer confined to experimental labs or tech demos—it’s transforming how businesses automate tasks, create content, and serve customers at scale. In 2025, companies are not just adopting generative AI tools—they’re building custom generative AI platforms that are tailored to their workflows, data, and industry needs.

This blog dives into the architecture, leading frameworks, and powerful use cases of generative AI platform development in 2025. Whether you're a CTO, AI engineer, or digital transformation strategist, this is your comprehensive guide to making sense of this booming space.

Why Generative AI Platform Development Matters Today

Generative AI has matured from narrow use cases (like text or image generation) to enterprise-grade platforms capable of handling complex workflows. Here’s why organizations are investing in custom platform development:

Data ownership and compliance: Public AI services such as ChatGPT may not offer the privacy and data-handling guarantees many businesses need.

Domain-specific intelligence: Off-the-shelf models often lack nuance for healthcare, finance, law, etc.

Workflow integration: Businesses want AI to plug into their existing tools—CRMs, ERPs, ticketing systems—not operate in isolation.

Customization and control: A platform allows fine-tuning, governance, and feature expansion over time.

Core Architecture of a Generative AI Platform

A generative AI platform is more than just a language model with a UI. It’s a modular system with several architectural layers working in sync. Here’s a breakdown of the typical architecture:

1. Foundation Model Layer

This is the brain of the system, typically built on:

LLMs (e.g., GPT-4, Claude, Mistral, LLaMA 3)

Multimodal models (for image, text, audio, or code generation)

You can:

Use open-source models

Fine-tune foundation models

Integrate multiple models via a routing system
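A routing system can be as simple as a rule table that sends each request to the most suitable model. This sketch assumes keyword-based routing. The model names are hypothetical placeholders, and production routers often use classifiers or cost and latency rules instead.

```python
import re
from dataclasses import dataclass

@dataclass
class Route:
    model: str       # hypothetical model identifier
    keywords: tuple  # words that send a request to this model

# Hypothetical routing table: the names are placeholders, not real endpoints.
ROUTES = [
    Route(model="code-model", keywords=("function", "bug", "python", "sql")),
    Route(model="vision-model", keywords=("image", "photo", "diagram")),
]
DEFAULT_MODEL = "general-llm"

def route(prompt: str) -> str:
    """Return the first model whose keywords appear in the prompt, else the default."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))  # strip punctuation
    for r in ROUTES:
        if words & set(r.keywords):
            return r.model
    return DEFAULT_MODEL

print(route("Why does this SQL query fail?"))  # code-model
```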

2. Retrieval-Augmented Generation (RAG) Layer

This layer allows dynamic grounding of the model in your enterprise data using:

Vector databases (e.g., Pinecone, Weaviate, FAISS)

Embeddings for semantic search

Document pipelines (PDFs, SQL, APIs)

RAG helps keep generative outputs factual, current, and contextual by grounding them in retrieved documents, although it reduces rather than eliminates hallucination.
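This minimal sketch makes RAG's "retrieve, then augment the prompt" flow concrete. It replaces real embeddings and a vector database with a toy bag-of-words vector and cosine similarity computed in memory, so it runs with the standard library alone. The documents are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base snippets.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Premium plans include 24/7 phone support.",
    "Passwords can be reset from the account settings page.",
]

def embed(text):
    """Toy 'embedding': a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query, docs):
    """Augment the user question with retrieved context before calling an LLM."""
    context = "\n".join(retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How do I reset my password?", DOCS))
```

In a real platform, `embed` would call an embedding model and `retrieve` would query a vector store such as those listed above. The resulting prompt is then sent to the LLM.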

3. Orchestration & Agent Layer

In 2025, most platforms include AI agents to perform tasks:

Execute multi-step logic

Query APIs

Take user actions (e.g., book, update, generate report)

Frameworks like LangChain, LlamaIndex, and CrewAI are widely used.
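Agent frameworks differ in their APIs, so this sketch doesn't imitate any of them. It shows the underlying idea in plain Python: a registry of tools and a loop that executes a multi-step plan, passing each result to the next step. The tools and order data are hypothetical. The plan is hard-coded here, whereas a real agent would have an LLM generate it.

```python
# Hypothetical tools an agent can call; a real platform would wrap APIs here.
def lookup_order(order_id):
    orders = {"A100": "shipped", "A101": "processing"}  # stand-in for an order API
    return orders.get(order_id, "unknown")

def draft_reply(status):
    return f"Your order is currently {status}."

TOOLS = {"lookup_order": lookup_order, "draft_reply": draft_reply}

def run_agent(plan, tools):
    """Execute a multi-step plan; a step with arg None receives the previous result."""
    result = None
    trace = []
    for tool_name, arg in plan:
        if tool_name not in tools:
            raise ValueError(f"Unknown tool: {tool_name}")
        result = tools[tool_name](arg if arg is not None else result)
        trace.append((tool_name, result))  # keep a log of each step
    return result, trace

# Hard-coded plan standing in for one generated by an LLM.
plan = [("lookup_order", "A100"), ("draft_reply", None)]
answer, trace = run_agent(plan, TOOLS)
print(answer)  # Your order is currently shipped.
```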

4. Data & Prompt Engineering Layer

The control center for:

Prompt templates

Tool calling

Memory persistence

Feedback loops for fine-tuning
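Prompt templates and memory persistence can be illustrated with the standard library's `string.Template` and a small class that keeps only the most recent turns. The template text, company name, and conversation are hypothetical. Real platforms typically persist memory in a database rather than in a Python list.

```python
from string import Template

# Hypothetical prompt template with named placeholders.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $company.\n"
    "Conversation so far:\n$history\n"
    "User: $question\nAssistant:"
)

class ConversationMemory:
    """Keeps only the last `max_turns` exchanges so prompts stay short."""

    def __init__(self, max_turns=3):
        self.max_turns = max_turns
        self.turns = []

    def add(self, user, assistant):
        self.turns.append((user, assistant))
        self.turns = self.turns[-self.max_turns:]  # drop the oldest turns

    def render(self):
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)

memory = ConversationMemory(max_turns=2)
memory.add("Hi", "Hello! How can I help?")
memory.add("Do you ship abroad?", "Yes, to selected countries.")
memory.add("How long does it take?", "Usually within a week.")

prompt = SUPPORT_PROMPT.substitute(
    company="ExampleCo", history=memory.render(), question="Can I track my parcel?"
)
print(prompt)
```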

5. Security & Governance Layer

Enterprise-grade platforms include:

Role-based access

Prompt logging

Data redaction and PII masking

Human-in-the-loop moderation
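Two of these controls, PII masking and role-based access, can be sketched in a few lines. The regular expressions catch only simple email and phone formats, and the role table is hypothetical. Treat this as an illustration of where such checks sit, not as a complete redaction solution.

```python
import re

# Simple patterns for two common PII types; production systems need far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")

def redact(text):
    """Mask emails and phone-like numbers before text is logged or sent to a model."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "admin": {"query", "view_logs"},
}

def can(role, action):
    """Role-based access check; unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())

print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
# Contact [EMAIL] or [PHONE].
```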

6. UI/UX & API Layer

This exposes the platform to users via:

Chat interfaces (Slack, Teams, Web apps)

APIs for integration with internal tools

Dashboards for admin controls

Popular Frameworks Used in 2025

Here's a quick overview of frameworks dominating generative AI platform development today:

| Framework | Purpose | Why It Matters |
| --- | --- | --- |
| LangChain | Agent orchestration & tool use | Dominant for building AI workflows |
| LlamaIndex | Indexing + RAG | Powerful for knowledge-based apps |
| Ray + Hugging Face | Scalable model serving | Production-ready deployments |
| FastAPI | API backend for GenAI apps | Lightweight and easy to scale |
| Pinecone / Weaviate | Vector DBs | Core for context-aware outputs |
| OpenAI Function Calling / Tools | Tool use & plugin-like behavior | Plug-in capabilities without agents |
| Guardrails.ai / Rebuff.ai | Output validation | For safe and filtered responses |

Most Impactful Use Cases of Generative AI Platforms in 2025

Custom generative AI platforms are now being deployed across virtually every sector. Below are some of the most impactful applications:

1. AI Customer Support Assistants

Auto-resolve up to 70% of tickets using contextual data from the CRM and knowledge base

Integrate with Zendesk, Freshdesk, Intercom

Use RAG to pull product info dynamically

2. AI Content Engines for Marketing Teams

Generate email campaigns, ad copy, and product descriptions

Align with tone, brand voice, and regional nuances

Automate A/B testing and SEO optimization

3. AI Coding Assistants for Developer Teams

Context-aware suggestions from internal codebase

Documentation generation, test script creation

Debugging assistant with natural language inputs

4. AI Financial Analysts for Enterprise

Generate earnings summaries, budget predictions

Parse and summarize internal spreadsheets

Draft financial reports with integrated charts

5. Legal Document Intelligence

Draft NDAs and contracts from templates

Highlight risk clauses

Translate legal jargon to plain language

6. Enterprise Knowledge Assistants

Index all internal documents, chat logs, SOPs

Let employees query processes instantly

Enforce role-based visibility

Challenges in Generative AI Platform Development

Despite the promise, building a generative AI platform isn’t plug-and-play. Key challenges include:

Data quality and labeling: Garbage in, garbage out.

Latency in RAG systems: Slow response times affect UX.

Model hallucination: Even with context, LLMs can fabricate.

Scalability issues: From GPU costs to query limits.

Privacy & compliance: Especially in finance, healthcare, legal sectors.

What’s New in 2025?

Private LLMs: Enterprises increasingly train or fine-tune their own models (via platforms such as Databricks, which acquired MosaicML).

Multi-Agent Systems: Networks of agents collaborate to perform tasks in parallel.

Guardrails and AI Policy Layers: Compliance-ready platforms with audit logs, content filters, and human approvals.

Auto-RAG Pipelines: Tools now auto-index and update knowledge bases without manual effort.

Conclusion

Generative AI platform development in 2025 is not just about building chatbots. It's about creating intelligent ecosystems that plug into your business, understand your data, and drive real ROI. With the right architecture, frameworks, and enterprise-grade controls, these platforms are becoming the new digital workforce.


Demystifying Data Analytics: Techniques, Tools, and Applications

Introduction: In today’s digital landscape, data analytics plays a critical role in transforming raw data into actionable insights. Organizations rely on data-driven decision-making to optimize operations, enhance customer experiences, and gain a competitive edge. At Tudip Technologies, the focus is on leveraging advanced data analytics techniques and tools to uncover valuable patterns, correlations, and trends. This blog explores the fundamentals of data analytics, key methodologies, industry applications, challenges, and emerging trends shaping the future of analytics.

What is Data Analytics? Data analytics is the process of collecting, processing, and analyzing datasets to extract meaningful insights. It includes various approaches, ranging from understanding past events to predicting future trends and recommending actions for business optimization.

Types of Data Analytics:

Descriptive Analytics – Summarizes historical data to reveal trends and patterns.

Diagnostic Analytics – Investigates past data to understand why specific events occurred.

Predictive Analytics – Uses statistical models and machine learning to forecast future outcomes.

Prescriptive Analytics – Provides data-driven recommendations to optimize business decisions.

Key Techniques & Tools in Data Analytics

Essential Data Analytics Techniques:

Data Cleaning & Preprocessing – Ensuring accuracy, consistency, and completeness in datasets.

Exploratory Data Analysis (EDA) – Identifying trends, anomalies, and relationships in data.

Statistical Modeling – Applying probability and regression analysis to uncover hidden patterns.

Machine Learning Algorithms – Implementing classification, clustering, and deep learning models for predictive insights.

Popular Data Analytics Tools:

Python – Extensive libraries like Pandas, NumPy, and Matplotlib for data manipulation and visualization.

R – A statistical computing powerhouse for in-depth data modeling and analysis.

SQL – Essential for querying and managing structured datasets in databases.

Tableau & Power BI – Creating interactive dashboards for data visualization and reporting.

Apache Spark – Handling big data processing and real-time analytics.

At Tudip Technologies, data engineers and analysts utilize scalable data solutions to help businesses extract insights, optimize processes, and drive innovation using these powerful tools.
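Several of these techniques fit in a few lines of Python. This sketch uses hypothetical monthly sales figures and the standard library's `statistics` module instead of Pandas or NumPy, so it has no dependencies. It shows three steps: data cleaning, descriptive summaries, and a simple predictive step, an ordinary least-squares trend line extrapolated one period ahead.

```python
import statistics

# Hypothetical monthly sales figures; None marks a missing value.
raw_sales = [120, 135, None, 150, 160, 158, None, 175]

# Data cleaning: drop missing values.
sales = [x for x in raw_sales if x is not None]

# Descriptive analytics: summarize what happened.
summary = {
    "count": len(sales),
    "mean": statistics.mean(sales),
    "median": statistics.median(sales),
    "stdev": round(statistics.stdev(sales), 2),
}

# Predictive analytics: least-squares trend line y = a + b * x over period index x.
xs = list(range(len(sales)))
x_mean, y_mean = statistics.mean(xs), statistics.mean(sales)
b = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, sales)) / sum(
    (x - x_mean) ** 2 for x in xs
)
a = y_mean - b * x_mean
next_period = a + b * len(sales)  # forecast for the next period

print(summary)
print(f"Trend: {b:.2f} per period; next-period forecast: {next_period:.1f}")
```

A straight-line trend is the simplest predictive model. Real forecasting would validate the model against held-out data before trusting the projection.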

Applications of Data Analytics Across Industries:

Business Intelligence – Understanding customer behavior, market trends, and operational efficiency.

Healthcare – Predicting patient outcomes, optimizing treatments, and managing hospital resources.

Finance – Detecting fraud, assessing risks, and enhancing financial forecasting.

E-commerce – Personalizing marketing campaigns and improving customer experiences.

Manufacturing – Enhancing supply chain efficiency and predicting maintenance needs for machinery.

By integrating data analytics into various industries, organizations can make informed, data-driven decisions that lead to increased efficiency and profitability.

Challenges in Data Analytics

Data Quality – Ensuring clean, reliable, and structured datasets for accurate insights.

Privacy & Security – Complying with data protection regulations to safeguard sensitive information.

Skill Gap – The demand for skilled data analysts and scientists continues to rise, requiring continuous learning and upskilling.

With expertise in data engineering and analytics, Tudip Technologies addresses these challenges by employing best practices in data governance, security, and automation.

Future Trends in Data Analytics

Augmented Analytics – AI-driven automation for faster and more accurate data insights.

Data Democratization – Making analytics accessible to non-technical users via intuitive dashboards.

Real-Time Analytics – Enabling instant data processing for quicker decision-making.

As organizations continue to evolve in the data-centric era, leveraging the latest analytics techniques and technologies will be key to maintaining a competitive advantage.

Conclusion: Data analytics is no longer optional—it is a core driver of digital transformation. Businesses that leverage data analytics effectively can enhance productivity, streamline operations, and unlock new opportunities. At Tudip Learning, data professionals focus on building efficient analytics solutions that empower organizations to make smarter, faster, and more strategic decisions. Stay ahead in the data revolution! Explore new trends, tools, and techniques that will shape the future of data analytics.

Read the full blog post, Demystifying Data Analytics: Techniques, Tools, and Applications, here: https://tudiplearning.com/blog/demystifying-data-analytics-techniques-tools-and-applications/



Big Data Analysis Application Programming

Big data is not just a buzzword—it's a powerful asset that fuels innovation, business intelligence, and automation. With the rise of digital services and IoT devices, the volume of data generated every second is immense. In this post, we’ll explore how developers can build applications that process, analyze, and extract value from big data.

What is Big Data?

Big data refers to extremely large datasets that cannot be processed or analyzed using traditional methods. These datasets exhibit the 5 V's:

Volume: Massive amounts of data

Velocity: Speed of data generation and processing

Variety: Different formats (text, images, video, etc.)

Veracity: Trustworthiness and quality of data

Value: The insights gained from analysis

Popular Big Data Technologies

Apache Hadoop: Distributed storage and processing framework

Apache Spark: Fast, in-memory big data processing engine

Apache Kafka: Distributed event streaming platform

NoSQL Databases: MongoDB, Cassandra, HBase

Data Lakes: Amazon S3, Azure Data Lake

Big Data Programming Languages

Python: Easy syntax, great for data analysis with libraries like Pandas, PySpark

Java & Scala: Often used with Hadoop and Spark

R: Popular for statistical analysis and visualization

SQL: Used for querying large datasets

Basic PySpark Example

```python
from pyspark.sql import SparkSession

# Create Spark session
spark = SparkSession.builder.appName("BigDataApp").getOrCreate()

# Load dataset
data = spark.read.csv("large_dataset.csv", header=True, inferSchema=True)

# Basic operations
data.printSchema()
data.select("age", "income").show(5)
data.groupBy("city").count().show()

# Release resources when finished
spark.stop()
```

Steps to Build a Big Data Analysis App

Define data sources (logs, sensors, APIs, files)

Choose appropriate tools (Spark, Hadoop, Kafka, etc.)

Ingest and preprocess the data (ETL pipelines)

Analyze using statistical, machine learning, or real-time methods

Visualize results via dashboards or reports

Optimize and scale infrastructure as needed
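The ingest, preprocess, analyze, and visualize steps can be sketched as a chain of Python generators. Each generator handles one record at a time, which is the same streaming idea that Spark and Kafka apply at cluster scale. The log format and events are hypothetical, and the text report stands in for a dashboard.

```python
from collections import Counter

# Hypothetical raw log lines from a data source.
LOG_LINES = [
    "2024-01-01T10:00:00,user1,login",
    "2024-01-01T10:05:00,user2,purchase",
    "malformed line",
    "2024-01-01T10:07:00,user1,purchase",
]

def ingest(lines):
    """Ingest: stream records one at a time instead of loading everything."""
    for line in lines:
        yield line.strip()

def preprocess(records):
    """Preprocess: parse comma-separated records and drop malformed ones."""
    for rec in records:
        parts = rec.split(",")
        if len(parts) != 3:
            continue
        timestamp, user, event = parts
        yield {"ts": timestamp, "user": user, "event": event}

def analyze(events):
    """Analyze: count events by type."""
    return Counter(e["event"] for e in events)

def report(counts):
    """Visualize: render a simple text report, most frequent events first."""
    return "\n".join(f"{event}: {n}" for event, n in counts.most_common())

counts = analyze(preprocess(ingest(LOG_LINES)))
print(report(counts))
```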

Common Use Cases

Customer behavior analytics

Fraud detection

Predictive maintenance

Real-time recommendation systems

Financial and stock market analysis

Challenges in Big Data Development

Data quality and cleaning

Scalability and performance tuning

Security and compliance (GDPR, HIPAA)

Integration with legacy systems

Cost of infrastructure (cloud or on-premise)

Best Practices

Automate data pipelines for consistency

Use cloud services (AWS EMR, GCP Dataproc) for scalability

Use partitioning and caching for faster queries

Monitor and log data processing jobs

Secure data with access control and encryption
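Spark exposes partitioning and caching through calls such as `DataFrame.repartition`, `DataFrameWriter.partitionBy`, and `DataFrame.cache`. This plain-Python sketch only illustrates the two ideas. Hash partitioning lets a lookup scan one partition instead of all the data, and `functools.lru_cache` memoizes repeated queries. The records and partition count are hypothetical.

```python
import zlib
from collections import defaultdict
from functools import lru_cache

NUM_PARTITIONS = 4

def partition_for(key, n=NUM_PARTITIONS):
    """Stable hash partitioning: the same key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % n

# Hypothetical records keyed by city.
records = [("Paris", 10), ("Lyon", 5), ("Paris", 7), ("Nice", 3), ("Lyon", 1)]

partitions = defaultdict(list)
for city, value in records:
    partitions[partition_for(city)].append((city, value))

@lru_cache(maxsize=128)
def total_for(city):
    """Scan only the partition that can contain this key; cache the answer."""
    part = partitions[partition_for(city)]
    return sum(v for c, v in part if c == city)

print(total_for("Paris"))  # 17
print(total_for("Paris"))  # 17 again, served from the cache
print(total_for.cache_info().hits)  # 1
```

`zlib.crc32` is used instead of Python's built-in `hash` because string hashing is randomized per process, which would make partition assignment differ between runs. The cache would need to be cleared with `total_for.cache_clear()` if the partitions change.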

Conclusion

Big data analysis programming is a game-changer across industries. With the right tools and techniques, developers can build scalable applications that drive innovation and strategic decisions. Whether you're processing millions of rows or building a real-time data stream, the world of big data has endless potential. Dive in and start building smart, data-driven applications today!


AX 2012 Interview Questions and Answers for Beginners and Experts

Microsoft Dynamics AX 2012 is a powerful ERP solution that helps organizations streamline their operations. Whether you're a beginner or an expert, preparing for an AX 2012 interview requires a thorough understanding of its core concepts, functionality, and technical aspects. Below is a list of commonly asked AX 2012 interview questions along with their answers.

Basic AX 2012 Interview Questions

What is Microsoft Dynamics AX 2012? Microsoft Dynamics AX 2012 is an enterprise resource planning (ERP) solution developed by Microsoft. It is designed for large and mid-sized organizations to manage finance, supply chain, manufacturing, and customer relationship management.

What are the key features of AX 2012?

Role-based user experience

Strong financial management capabilities

Advanced warehouse and supply chain management

Workflow automation

Enhanced reporting with SSRS (SQL Server Reporting Services)

What is the difference between AX 2009 and AX 2012?

AX 2012 introduced a new data model, including surrogate keys.

Visual Studio integration was added alongside the MorphX IDE (for example, for SSRS reports and managed code). MorphX itself was replaced by Visual Studio only in the later Dynamics 365 for Finance and Operations.

Improved workflow and role-based access control.

What is the AOT (Application Object Tree) in AX 2012? The AOT is a hierarchical structure used to store and manage objects such as tables, forms, reports, classes, and queries in AX 2012.

Explain the use of the Data Dictionary in AX 2012. The Data Dictionary contains the definitions of tables, data types, relations, and indexes used in AX 2012. It helps ensure data integrity and consistency across the system.

Technical AX 2012 Interview Questions

What are the different types of tables in AX 2012?

Regular tables

TempDB (temporary) tables

InMemory tables

System tables

What is the difference between InMemory and TempDB tables?

InMemory tables hold data in memory on the tier (client or AOS) where they are instantiated and are not persisted.

TempDB tables store temporary data in SQL Server's tempdb database and are session-specific.

What is X++ and how is it used in AX 2012? X++ is an object-oriented programming language used in AX 2012 to develop business logic, create custom modules, and automate processes.

What is the purpose of CIL (Common Intermediate Language) in AX 2012? X++ code can be compiled into .NET CIL so that it runs on the .NET runtime (for example, batch jobs and services), which improves performance.

How do you debug X++ code in AX 2012? Debugging can be done with the X++ debugger or, for code that runs as CIL, with the Visual Studio debugger attached to the AOS process.

Advanced AX 2012 Interview Questions

What is a Query Object in AX 2012? A Query object is used to retrieve data from tables using joins, ranges, and sorting.

What are Services in AX 2012, and what types are available?

Document Services (for exchanging data)

Custom Services (for exposing X++ logic as a service)

System Services (metadata, query, and user session services)

Explain the concept of Workflows in AX 2012. Workflows automate business processes, such as approvals, by defining steps and assigning tasks to users.

What is the purpose of the SysOperation Framework in AX 2012? It replaces the RunBaseBatch framework for running processes, including asynchronous batch execution, with better scalability.

How do you optimize performance in AX 2012?

Using indexes effectively

Optimizing queries

Implementing caching strategies

Using batch processing for large data operations

Conclusion

By studying these AX 2012 interview questions, candidates can prepare effectively for interviews. Whether you're a beginner or an experienced professional, mastering these topics will boost your confidence and help you secure a role on Microsoft Dynamics AX 2012 projects.


What is the best way to begin learning Power BI, and how can a beginner smoothly progress to advanced skills?

Introduction to Power BI

Power BI is a revolutionary tool in the data analytics universe, famous for its capacity to transform raw data into engaging visual insights. From small startups to global multinationals, it is a vital resource for making informed decisions based on data.

Learning the Core Features of Power BI

At its essence, Power BI provides three fundamental features:

Data Visualization – Designing dynamic and interactive visuals.

Data Modelling – Organizing and structuring data for proper analysis.

DAX (Data Analysis Expressions) – Driving complex calculations and insights.

Setting Up for Success

Start by downloading and installing Power BI Desktop. Getting familiar with its interface, menus, and features is an essential first step in your learning process.

Starting with the Basics

Beginners often start by importing data from Excel or SQL. Your initial focus should be on creating straightforward visuals, such as bar charts or line graphs, while learning to establish relationships between datasets.

Importance of Structured Learning Paths

A structured learning path, such as a Power BI certification course in Washington, San Francisco, or New York, accelerates your progress. These courses provide a solid foundation and hands-on practice.

Working with Hands-On Projects

Hands-on projects are the best way to cement your knowledge. Begin by building a simple sales dashboard that tracks revenue trends, which gives you immediate real-world exposure.

Building Analytical Skills

Early on, practice presenting data in tables and matrices. Visualizing trends with bar or line charts improves your ability to communicate data insights clearly.

Moving on to Intermediate Skills

After becoming familiar with the fundamentals, it's time to go deeper. Learn how to work with calculated columns, measures, and the Power Query Editor. Optimizing your data model will improve report performance.

Learning Advanced Visualization Methods

Take your reports to the next level with custom visuals. Add slicers to filter data and drill-through pages for detailed analysis, making your dashboards more interactive.

Mastering Data Analysis Expressions (DAX)

DAX is the powerhouse behind Power BI’s analytics capabilities. Start with simple functions like SUM and AVERAGE, and gradually explore advanced calculations involving CALCULATE and FILTER.

Working with Power BI Service

Publishing your reports online via Power BI Service allows for easy sharing and collaboration. Learn to manage datasets, create workspaces, and collaborate effectively.

Collaborative Features in Power BI

Use shared workspaces to collaborate with your team, and keep report files under version control to avoid conflicting edits.

Data Security and Governance

Data security is paramount. Learn to set up roles (such as row-level security), manage permissions, and follow data governance policies to protect data integrity.

Power BI Integration with Other Tools

Maximize the power of Power BI by integrating it with Excel or linking it with cloud platforms such as Azure or Google Drive.

Preparing for Power BI Certification

Certification proves your proficiency. A Power BI certification course in San Francisco or any other city can help you master the exam content, ranging from data visualization to advanced DAX.

Highlighting Top Power BI Courses

Courses in Washington and New York City offer tailored content for professionals at all levels, and their comprehensive curricula help students learn in depth.

Keeping Current with Power BI Trends

Stay ahead by exploring updates, joining the Power BI community, and engaging with forums to share knowledge.

Common Challenges and How to Overcome Them

Troubleshooting is part of the process. Practice resolving common issues, such as data import errors or incorrect DAX outputs, to build resilience.

Building a Portfolio with Power BI

A well-rounded portfolio showcases your skills. Include projects that demonstrate your ability to solve real-world problems, enhancing your appeal to employers.

Conclusion and Next Steps

Your Power BI journey starts small but leads to big rewards. Whether you are learning on your own or taking a Power BI certification course in New York, embrace the journey and enjoy the transformation as you become a Power BI expert.


Is it worth doing a BSc in data science from BSE Institute?

Yes, the BSc in Data Science from BSE Institute is a legitimate, industry-aligned program with a modern curriculum. It offers a broad data science foundation covering Python, R, Jupyter Notebook, and machine learning. Here’s why it’s worth considering:

1. Curriculum Breakdown: It’s a Real Data Science Program

🔗 BSc Data Science – BSE Institute

Core Technical Components

Programming & Tools

Python & R: Core languages for data analysis, machine learning, and visualization.

Jupyter Notebook: Hands-on practice for creating live code, equations, and visualizations (critical for real-world data storytelling).

SQL: Database management and querying.

Machine Learning: Supervised/unsupervised learning, regression, classification, and clustering algorithms.

Data Visualization: Tools like Tableau, Power BI, and Matplotlib for creating dashboards and reports.

Big Data Basics: Introduction to Hadoop/Spark (industry-standard frameworks).

Practical Projects

Real-world datasets: Work on projects across domains like healthcare, e-commerce, social media, and finance.

Capstone projects: Solve industry problems (e.g., customer churn prediction, sentiment analysis).

Industry Certifications

NISM modules: Optional for finance enthusiasts, but not mandatory for all students.

2. Industry Experience: Beyond Just Finance

Internships: Partnerships with IT firms, healthcare startups, e-commerce giants, and fintech companies. Example: You could intern at a health-tech startup analyzing patient data or at an e-commerce firm optimizing supply chains.

Live Case Studies: Solve problems for companies across sectors (retail, logistics, banking, etc.).

3. Career Scope: Not Limited to Stocks

Jobs Across Industries

Healthcare: Role: Healthcare Data Analyst. Work: Predict disease outbreaks, optimize hospital operations.

E-commerce: Role: Customer Insights Analyst. Work: Analyze buying patterns, improve recommendations.

Tech/IT: Role: Data Engineer/Analyst. Work: Clean, process, and visualize data for AI models.

Finance (Optional): Role: Financial Data Analyst. Work: Only if you choose finance electives or internships.

Placements

Average Package: ₹4–8 LPA (varies by role and sector).

Top Recruiters: Tech: TCS, Infosys, Wipro (for data roles). E-commerce: Amazon, Flipkart.

Startups: PharmEasy, Swiggy (data-driven decision-making).

4. How It Compares to Generic BSc Data Science Programs

| Aspect | BSE Institute’s BSc | Generic BSc Data Science |
| --- | --- | --- |
| Tools | Jupyter, Python, R, Tableau, Spark | Often limited to Excel, basic Python |
| Projects | Industry-backed, cross-domain | Academic/theoretical |
| Finance Focus | Optional (via electives/NISM) | Rarely offered |
| Placements | Sector-agnostic + finance options | Limited industry connections |

5. Why Choose This Program?

Modern Tools: Learn Jupyter Notebook, Python/R, and cloud platforms (AWS/Google Cloud basics).

Flexibility: Work in healthcare, tech, retail, or finance—you pick your niche.

Cost-Effective: Fees (~₹2–3L) are lower than private engineering colleges.

Mumbai Advantage: Access to internships across industries (IT parks, hospitals, startups).

6. Potential Drawbacks

Not for Hardcore Coders: Less focus on software engineering (e.g., Java/C++).

Brand Recognition: While BSE is respected, it’s not an IIT/NIT. You’ll need to prove your skills.

7. Who Should Enroll?

You’re a fit if:

You want a practical, tool-driven data science degree (not just theory).

You’re okay with self-learning to master advanced topics like deep learning later.

You want flexibility to work in healthcare, tech, or finance.

Not a fit if: You want a coding-heavy engineering degree (opt for a BTech).

The BSE Institute’s BSc Data Science is a legitimate, industry-relevant program with a modern curriculum (Jupyter, Python, ML) and cross-sector opportunities. While it offers finance electives, it also prepares students for data roles in healthcare, tech, and retail.

Worth it if:

You want a 3-year, affordable degree with hands-on tools.

You’re proactive about internships and building projects.

Program Link: BSc Data Science – BSE Institute


A Complete Guide to Oracle Fusion Technical and Oracle Integration Cloud (OIC)

Oracle Fusion Applications have transformed enterprise resource planning (ERP) by providing a cloud-based, integrated, and scalable solution. Oracle Fusion Technical skills, often taught through Oracle Fusion Technical + OIC online training, are crucial for managing, customizing, and extending these applications. Oracle Integration Cloud (OIC) is a powerful platform for connecting cloud and on-premises applications, enabling seamless automation and data exchange. This guide explores the key aspects of Oracle Fusion Technical and OIC, their functionality, and best practices for implementation.

Understanding Oracle Fusion Technical

Oracle Fusion Technical involves the backend functionalities that enable customization, reporting, data migration, and integration within Fusion Applications. Some core aspects include:

1. BI Publisher (BIP) Reports

BI Publisher (BIP) is a powerful reporting tool that allows users to create, modify, and schedule reports in Oracle Fusion Applications. It supports multiple data sources, including SQL queries, Web Services, and Fusion Data Extracts.

Features:

Customizable templates using RTF, Excel, and XSL

Scheduling and bursting capabilities

Integration with Fusion Security

2. Oracle Transactional Business Intelligence (OTBI)

OTBI is a self-service reporting tool that provides real-time analytics for business users. It enables ad-hoc analysis and dynamic dashboards using subject areas.

Key Benefits:

No SQL knowledge required

Drag-and-drop report creation

Real-time data availability

3. File-Based Data Import (FBDI)

FBDI is a robust mechanism for bulk data uploads into Oracle Fusion Applications. It is widely used for migrating data from legacy systems.

Process Overview:

Download the predefined FBDI template

Populate data and generate CSV files

Upload files via the Fusion application

Load data using scheduled processes
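The CSV-and-ZIP part of this process can be sketched in Python. This is a minimal sketch, not Oracle's tooling: the predefined FBDI template's own macro normally generates these files, the file name and columns below are hypothetical placeholders, and the real column order must come from the template for the object you are loading. The sketch writes data rows only, on the assumption that the template defines the columns.

```python
import csv
import io
import zipfile


def build_fbdi_zip(csv_name: str, rows: list[list[str]]) -> bytes:
    """Write rows to a CSV file, package it in a ZIP archive, and return the ZIP bytes."""
    text_buffer = io.StringIO()
    # QUOTE_MINIMAL quotes only values containing commas, quotes, or newlines.
    writer = csv.writer(text_buffer, quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    writer.writerows(rows)  # data rows only; no header row in this sketch

    zip_buffer = io.BytesIO()
    with zipfile.ZipFile(zip_buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(csv_name, text_buffer.getvalue())
    return zip_buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical supplier rows; a real load must match the template's columns.
    sample_rows = [
        ["SUP-001", "Acme Supplies", "2024-01-15"],
        ["SUP-002", "Globex, Inc.", "2024-02-01"],
    ]
    payload = build_fbdi_zip("SuppliersInterface.csv", sample_rows)
    print(len(payload), "bytes ready to upload")
```

The resulting ZIP is what would then be uploaded through the Fusion application before the scheduled load processes run.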

4. REST and SOAP APIs in Fusion

Oracle Fusion provides REST and SOAP APIs to facilitate integration with external systems.

Use Cases:

Automating business processes

Fetching and updating data from external applications

Integrating with third-party tools
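As a rough sketch of the fetching use case, the Python below builds and sends a filtered query to a Fusion REST resource using only the standard library. The host, the `invoices` resource, and the credentials are hypothetical placeholders, and basic authentication is assumed; the `/fscmRestApi/resources/11.13.18.05` path prefix and the `q`, `limit`, `offset`, and `onlyData` query parameters reflect the common Fusion REST pattern but should be checked against the REST API guide for your release.

```python
import base64
import json
import urllib.parse
import urllib.request

# Assumed path prefix for Fusion REST resources; confirm the version for your release.
BASE_PATH = "/fscmRestApi/resources/11.13.18.05"


def build_query_url(host: str, resource: str, filter_expr: str,
                    limit: int = 25, offset: int = 0) -> str:
    """Build a GET URL that filters, pages, and trims the response of a REST resource."""
    params = {
        "q": filter_expr,    # row-filter expression, e.g. "InvoiceAmount>1000"
        "limit": limit,      # maximum rows per page
        "offset": offset,    # index of the first row to return (for paging)
        "onlyData": "true",  # return field values without hypermedia links
    }
    return f"https://{host}{BASE_PATH}/{resource}?{urllib.parse.urlencode(params)}"


def fetch_json(url: str, username: str, password: str) -> dict:
    """GET the URL with HTTP basic authentication and decode the JSON body."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        url, headers={"Authorization": f"Basic {token}", "Accept": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

For example, `fetch_json(build_query_url("fusion.example.com", "invoices", "InvoiceAmount>1000"), "user", "secret")` would request the first 25 matching rows from the hypothetical host; collection responses typically list the rows under an `items` key.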

Introduction to Oracle Integration Cloud (OIC)

Oracle Integration Cloud (OIC) is a middleware platform that connects various cloud and on-premises applications. It offers prebuilt adapters, process automation, and AI-powered insights to streamline integrations.

Key Components of OIC:

Application Integration - Connects multiple applications using prebuilt and custom integrations.

Process Automation - Automates business workflows using structured and unstructured processes.

Visual Builder - A low-code development platform for building web and mobile applications.

OIC Adapters and Connectivity

OIC provides a wide range of adapters to simplify integration:

ERP Cloud Adapter - Connects with Oracle Fusion Applications

FTP Adapter - Enables file-based integrations

REST/SOAP Adapter - Facilitates API-based integrations

Database Adapter - Interacts with on-premises or cloud databases

Implementing an OIC Integration

Step 1: Define Integration Requirements

Before building an integration, determine the source and target applications, data transformation needs, and error-handling mechanisms.

Step 2: Choose the Right Integration Pattern

OIC supports various integration styles, including:

App-Driven Orchestration - Used for complex business flows requiring multiple steps.

Scheduled Integration - Automates batch processes at predefined intervals.

File Transfer Integration - Moves large volumes of data between systems.

Step 3: Create and Configure the Integration

Select the source and target endpoints (e.g., ERP Cloud, Salesforce, FTP).

Configure mappings and transformations using OIC’s drag-and-drop mapper.

Add error handling to manage integration failures effectively.

Step 4: Test and Deploy

Once configured, test the integration in OIC’s test environment before deploying it to production.

Best Practices for Oracle Fusion Technical and OIC

For Oracle Fusion Technical:

Use OTBI for ad-hoc reports and BIP for pixel-perfect reporting.

Leverage FBDI for bulk data loads and REST APIs for real-time integrations.

Follow security best practices, including role-based access control (RBAC) for reports and APIs.

For Oracle Integration Cloud:

Use prebuilt adapters whenever possible to reduce development effort.

Implement error handling and logging to track failures and improve troubleshooting.

Optimize data transformations using XSLT and built-in functions to enhance performance.

Schedule integrations efficiently to avoid API rate limits and performance bottlenecks.
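OIC has its own fault handling inside integrations, but client code that calls Fusion or OIC endpoints benefits from the same habits. The sketch below is a generic Python pattern, not an OIC feature: it retries a failing call with exponential backoff and logs each failure, which helps with transient errors and with requests rejected because of rate limits. The function name and delay values are illustrative assumptions.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("integration_client")


def call_with_retry(operation: Callable[[], T], attempts: int = 4, base_delay: float = 1.0) -> T:
    """Call `operation`, retrying failures with exponential backoff and logging each one."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except Exception as exc:  # real code should catch only errors known to be transient
            logger.warning("attempt %d of %d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise  # out of retries: let the caller's error handling take over
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            time.sleep(base_delay * 2 ** (attempt - 1))
```

For example, `call_with_retry(lambda: urllib.request.urlopen(url, timeout=30).read())` would retry a plain HTTP call up to four times, waiting 1, 2, and then 4 seconds between attempts.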

Conclusion

Oracle Fusion Technical and Oracle Integration Cloud (OIC) are vital in modern enterprise applications. Mastering these technologies enables businesses to create seamless integrations, automate processes, and generate insightful reports. Organizations can maximize efficiency and drive digital transformation by following best practices and leveraging the right tools.

Whether you are an IT professional, consultant, or business user, understanding Oracle Fusion Technical and OIC is essential for optimizing business operations in the cloud era. With the right approach, you can harness the full potential of Oracle’s powerful ecosystem.


BigQuery And Spanner With External Datasets Boosts Insights

BigQuery and Spanner work better together by extending operational insights with external datasets.

Analyzing data from several databases has always been difficult for data analysts. They must employ ETL procedures to transfer data from transactional databases into analytical data storage due to data silos. If you have data in both Spanner and BigQuery, BigQuery has made the issue somewhat simpler to tackle.

Previously, you could use federated queries for this: with the EXTERNAL_QUERY table-valued function (TVF), you wrap your Spanner query and combine its result set with BigQuery data. Although effective, this method had drawbacks, including limited query monitoring and query optimization insights, and it added complexity by requiring the analyst to write intricate SQL when integrating data from two sources.

Google Cloud now offers BigQuery external datasets for Spanner in public preview, which represents a significant advancement. By linking a Spanner schema to a BigQuery dataset, this productivity-boosting feature lets data analysts browse, analyze, and query Spanner tables just as they would native BigQuery tables. BigQuery and Spanner tables can be combined using familiar GoogleSQL to create analytics pipelines and dashboards without additional data migration or complicated ETL procedures.

Using Spanner external datasets to get operational insights

Spanner external datasets make it simple to gather operational insights that were previously impossible without transferring data.

Operational dashboards: A service provider uses BigQuery for historical analytics and Spanner for real-time transaction data. This enables them to develop thorough real-time dashboards that assist frontline employees in carrying out daily service duties while providing them with direct access to the vital business indicators that gauge the effectiveness of the company.

Customer 360: By combining extensive analytical insights on customer loyalty from purchase history in their data lake with in-store transaction data, a retail company gives contact center employees a comprehensive picture of its top consumers.

Threat intelligence: Information security businesses’ Security Operations (SecOps) personnel must use AI models based on long-term data stored in their analytical data store to assess real-time streaming data entering their operations data store. To compare incoming threats with pre-established threat patterns, SecOps staff must be able to query historical and real-time data using familiar SQL via a single interface.

Leading commerce data SaaS firm Attain was among the first to integrate BigQuery external datasets and claims that it has increased data analysts’ productivity.

Advantages of Spanner external datasets

The following advantages are offered by Spanner and BigQuery working together for data analysts seeking operational insights on their transactions and analytical data:

Simplified query writing: Eliminate the need for laborious federated queries by working directly with data in Spanner as if it were already in BigQuery.

Unified transaction analytics: Combine data from BigQuery and Spanner to create integrated dashboards and reports.

Real-time insights: BigQuery reads the most recent data directly from Spanner when a query runs, giving reliable, current insights without affecting production Spanner workloads or requiring intricate synchronization procedures.

Low-latency performance: BigQuery speeds up queries against Spanner by using parallelism and Spanner Data Boost features, which produces results more quickly.

How it operates

Suppose you want to include new e-commerce transactions from a Spanner database in your BigQuery queries.

All of your previous transactions are stored in BigQuery, and your analytical dashboards are built on this data. Sometimes, however, you may need to examine a combined view of recent and previous transactions. At that point, you can use BigQuery to create an external dataset that links to your Spanner database.

Assume that you have a project called “myproject” in Spanner, along with an instance called “myinstance” and a database called “ecommerce,” where you keep track of the transactions that are currently occurring on your e-commerce website. Using the new “Link to an external database” option, you can create an external dataset in BigQuery exactly like any other dataset (screenshot; image credit: Google Cloud).

Browse a Spanner external dataset

You can also browse the chosen Spanner database as an external dataset in BigQuery Studio in the Google Cloud console. Selecting and expanding this dataset shows all of your Spanner tables (screenshot; image credit: Google Cloud).

Sample queries

You can now run any query you choose on the tables in your external dataset, which are actually the tables of your Spanner database.

Let’s look at today’s transactions using customer segments that BigQuery calculates and stores, for instance:

SELECT o.id, o.customer_id, o.total_value, s.segment_name FROM current_transactions.ecommerce_order o LEFT JOIN crm_dataset.customer_segments s ON o.customer_id = s.customer_id WHERE o.order_date = '2024-09-01'

Note that current_transactions is an external dataset that refers to a Spanner database, whereas crm_dataset is a standard BigQuery dataset.

An additional example would be a single view of every transaction a client has ever made, both past and present:

SELECT id, customer_id, total_value FROM current_transactions.ecommerce_order UNION ALL SELECT id, customer_id, total_value FROM transactions_history

Once again, transactions_history is stored in BigQuery, while current_transactions is an external dataset.

Note that you don’t need to manually transfer the data using any ETL procedures since it is retrieved live from Spanner!
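The join query above can also be run from Python. This sketch assumes the `google-cloud-bigquery` client library is installed, Application Default Credentials are configured, the dataset names match the example, and `order_date` is a DATE column; it passes the date as a named query parameter instead of a hard-coded literal. `TODAYS_ORDERS_SQL` and `run_todays_orders` are hypothetical names.

```python
import datetime

# Hypothetical constant: the join query from the text, with the date turned into
# a named query parameter (@order_date) instead of a hard-coded literal.
TODAYS_ORDERS_SQL = """
SELECT o.id, o.customer_id, o.total_value, s.segment_name
FROM current_transactions.ecommerce_order AS o  -- Spanner external dataset
LEFT JOIN crm_dataset.customer_segments AS s    -- native BigQuery dataset
  ON o.customer_id = s.customer_id
WHERE o.order_date = @order_date
"""


def run_todays_orders(order_date: datetime.date) -> list:
    """Run the query with google-cloud-bigquery and return the result rows."""
    # Imported here so the module still loads where the library is not installed.
    from google.cloud import bigquery

    client = bigquery.Client()  # default project and Application Default Credentials
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("order_date", "DATE", order_date)]
    )
    # result() waits for the query job to finish and yields Row objects.
    return list(client.query(TODAYS_ORDERS_SQL, job_config=job_config).result())
```

For example, `run_todays_orders(datetime.date(2024, 9, 1))` would return the matching rows as a list of `Row` objects.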

You may see the query plan when the query is finished. You can see how the ecommerce_order table was utilized in a query and how many entries were read from a particular database by selecting the EXECUTION GRAPH tab.

Read more on Govindhtech.com


Data Science with SQL: Managing and Querying Databases

Data science is about extracting insights from vast amounts of data, and one of the most critical steps in this process is managing and querying databases. Structured Query Language (SQL) is the standard language used to communicate with relational databases, making it essential for data scientists and analysts. Whether you're pulling data for analysis, building reports, or integrating data from multiple sources, SQL is the go-to tool for efficiently managing and querying large datasets.

This blog post will guide you through the importance of SQL in data science, common use cases, and how to effectively use SQL for managing and querying databases.

Why SQL is Essential for Data Science

Data scientists often work with structured data stored in relational databases like MySQL, PostgreSQL, or SQLite. SQL is crucial because it allows them to retrieve and manipulate this data without needing to work directly with raw files. Here are some key reasons why SQL is a fundamental tool for data scientists:

Efficient Data Retrieval: SQL allows you to quickly retrieve specific data points or entire datasets from large databases using queries.

Data Management: SQL supports the creation, deletion, and updating of databases and tables, allowing you to maintain data integrity.

Scalability: SQL works with databases of any size, from small-scale personal projects to enterprise-level applications.

Interoperability: SQL integrates easily with other tools and programming languages, such as Python and R, which makes it easier to perform further analysis on the retrieved data.

SQL provides a flexible yet structured way to manage and manipulate data, making it indispensable in a data science workflow.

Key SQL Concepts for Data Science

1. Databases and Tables

A relational database stores data in tables, which are structured in rows and columns. Each table represents a different entity, such as customers, orders, or products. Understanding the structure of relational databases is essential for writing efficient queries and working with large datasets.

Table: A collection of data arranged in rows and columns.

Column: A specific field of the table, like “Customer Name” or “Order Date.”

Row: A single record in the table, representing a specific entity, such as a customer’s details or a product’s information.

By structuring data in tables, SQL allows you to maintain relationships between different data points and query them efficiently.
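To see rows and columns concretely, here is a small sketch using Python's built-in `sqlite3` module with an in-memory SQLite database; the `customers` table and its data are made up for illustration.

```python
import sqlite3

# In-memory SQLite database; the customers table and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers (customer_id, customer_name, city) VALUES (?, ?, ?)",
    [(1, "Asha", "Mumbai"), (2, "Ben", "Leeds")],
)

cursor = conn.execute("SELECT * FROM customers ORDER BY customer_id")
columns = [description[0] for description in cursor.description]  # the table's columns
rows = cursor.fetchall()                                           # one tuple per row (record)
print(columns)  # ['customer_id', 'customer_name', 'city']
print(rows)     # [(1, 'Asha', 'Mumbai'), (2, 'Ben', 'Leeds')]
```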

2. SQL Queries

The commands used to communicate with a database are called SQL queries. Data can be selected, inserted, updated, and deleted using queries. In data science, the most commonly used SQL commands include:

SELECT: Retrieves data from a database.

INSERT: Adds new data to a table.

UPDATE: Modifies existing data in a table.

DELETE: Removes data from a table.

Each of these commands can be combined with various clauses (like WHERE, JOIN, and GROUP BY) to refine the results, filter data, and even combine data from multiple tables.
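A minimal sketch of the four commands, again using Python's `sqlite3` module and a hypothetical `products` table. The `?` placeholders pass values separately from the SQL text, which is the usual way to avoid SQL injection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# INSERT: add new rows
conn.executemany("INSERT INTO products (name, price) VALUES (?, ?)",
                 [("Keyboard", 25.0), ("Mouse", 10.0), ("Monitor", 150.0)])

# UPDATE: change existing rows that match the WHERE clause
conn.execute("UPDATE products SET price = price - 30 WHERE name = ?", ("Monitor",))

# DELETE: remove rows that match the WHERE clause (here, the Mouse)
conn.execute("DELETE FROM products WHERE price < ?", (15.0,))
conn.commit()

# SELECT: read back what is left
remaining = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(remaining)  # [('Keyboard', 25.0), ('Monitor', 120.0)]
```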

3. Joins

A SQL join allows you to combine data from two or more tables based on a related column. This is crucial in data science when you have data spread across multiple tables and need to combine them to get a complete dataset.

INNER JOIN: Returns only the rows where the join column values match in both tables.

LEFT JOIN: Returns all rows from the left table and the matching rows from the right table. If no match is found, the right table's columns are NULL.

RIGHT JOIN: The mirror image of a left join; returns every row from the right table and the matching rows from the left table.

FULL JOIN: Returns all rows from both tables, with NULL in the columns of whichever table has no matching row.

Because joins make it possible to combine and evaluate data from several sources, they are crucial when working with relational databases.
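The difference between an inner and a left join shows up clearly on a customer with no orders. This sketch uses SQLite through Python's `sqlite3` module with hypothetical `customers` and `orders` tables; RIGHT and FULL joins are left out because SQLite only supports them from version 3.39.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO orders VALUES (10, 1, 50.0), (11, 1, 20.0), (12, 2, 35.0);
""")

# INNER JOIN: only customers that have at least one matching order
inner = conn.execute("""
    SELECT c.name, o.amount FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.customer_id
    ORDER BY o.order_id
""").fetchall()

# LEFT JOIN: every customer; order columns are NULL (None in Python) when nothing matches
left = conn.execute("""
    SELECT c.name, o.amount FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.customer_id
    ORDER BY c.customer_id, o.order_id
""").fetchall()

print(inner)  # [('Asha', 50.0), ('Asha', 20.0), ('Ben', 35.0)]
print(left)   # [('Asha', 50.0), ('Asha', 20.0), ('Ben', 35.0), ('Chen', None)]
```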

4. Aggregations and Grouping

Aggregation functions like COUNT, SUM, AVG, MIN, and MAX are useful for summarizing data. SQL allows you to aggregate data, which is particularly useful for generating reports and identifying trends.

COUNT: Returns the number of rows that match a specific condition.

SUM: Determines a numeric column's total value.

AVG: Provides a numeric column's average value.

MIN/MAX: Determines a column's minimum or maximum value.

You can apply aggregate functions to each group of rows that have the same values in designated columns by using GROUP BY. This is helpful for further in-depth analysis and category-based data breakdown.
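A sketch of GROUP BY with the aggregate functions listed above, using SQLite through Python's `sqlite3` module and a hypothetical `sales` table:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("North", 100.0), ("North", 300.0),
                  ("South", 50.0), ("South", 70.0), ("South", 30.0)])

summary = conn.execute("""
    SELECT region,
           COUNT(*)    AS n_sales,
           SUM(amount) AS total,
           AVG(amount) AS average,
           MIN(amount) AS smallest,
           MAX(amount) AS largest
    FROM sales
    GROUP BY region          -- one output row per distinct region
    ORDER BY region
""").fetchall()
print(summary)
# [('North', 2, 400.0, 200.0, 100.0, 300.0), ('South', 3, 150.0, 50.0, 30.0, 70.0)]
```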

5. Filtering Data with WHERE

The WHERE clause is used to filter data based on specific conditions. This is critical in data science because it allows you to extract only the relevant data from a database.
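A short WHERE example on a hypothetical `orders` table in SQLite, with the filter values bound through placeholders:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, status TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(1, "shipped", 120.0), (2, "pending", 80.0), (3, "shipped", 40.0)])

# Keep only shipped orders above a threshold; both conditions must hold (AND).
rows = conn.execute(
    "SELECT order_id, amount FROM orders WHERE status = ? AND amount > ? ORDER BY order_id",
    ("shipped", 50.0),
).fetchall()
print(rows)  # [(1, 120.0)]
```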

Managing Databases in Data Science

Managing databases means keeping data organized, up-to-date, and accurate. Good database management helps ensure that data is easy to access and analyze. Here are some key tasks when managing databases:

1. Creating and Changing Tables

Sometimes you’ll need to create new tables or change existing ones. SQL’s CREATE and ALTER commands let you define or modify tables.

CREATE TABLE: Sets up a new table with specific columns and data types.

ALTER TABLE: Changes an existing table, allowing you to add or remove columns.

For instance, if you’re working on a new project and need to store customer emails, you might create a new table to store that information.
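Following the customer-email example, here is a SQLite sketch that creates a hypothetical `customer_emails` table and then adds a column with ALTER TABLE. Removing a column with `ALTER TABLE ... DROP COLUMN` also works in SQLite 3.35 and later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# CREATE TABLE: define the columns and their types
conn.execute("""
    CREATE TABLE customer_emails (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL
    )
""")

# ALTER TABLE: add a column to the existing table
conn.execute("ALTER TABLE customer_emails ADD COLUMN opted_in INTEGER DEFAULT 0")

# PRAGMA table_info returns one row per column: (cid, name, type, notnull, default, pk)
columns = [row[1] for row in conn.execute("PRAGMA table_info(customer_emails)")]
print(columns)  # ['customer_id', 'email', 'opted_in']
```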

2. Ensuring Data Integrity

Maintaining data integrity means ensuring that the data is accurate and reliable. SQL provides ways to enforce rules that keep your data consistent.

Primary Keys: A unique identifier for each row, ensuring that no duplicate records exist.

Foreign Keys: Links between tables that keep related data connected.

Constraints: Rules like NOT NULL or UNIQUE to make sure the data meets certain conditions before it’s added to the database.

Keeping your data clean and correct is essential for accurate analysis.
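The constraints above can be seen rejecting bad data in this SQLite sketch. Table names are hypothetical; note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`, while many other databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,   -- primary key: one unique id per row
        email       TEXT NOT NULL UNIQUE   -- required and never repeated
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (customer_id)  -- foreign key
    );
    INSERT INTO customers VALUES (1, 'asha@example.com');
""")


def try_insert(sql, params):
    """Return None if the insert succeeds, otherwise the constraint error message."""
    try:
        conn.execute(sql, params)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


duplicate = try_insert("INSERT INTO customers VALUES (?, ?)", (2, "asha@example.com"))
missing = try_insert("INSERT INTO customers VALUES (?, ?)", (3, None))
orphan = try_insert("INSERT INTO orders VALUES (?, ?)", (100, 99))  # customer 99 does not exist
valid = try_insert("INSERT INTO orders VALUES (?, ?)", (101, 1))
print(duplicate, missing, orphan, valid, sep="\n")
```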

3. Indexing for Faster Performance

As databases grow, queries can take longer to run. Indexing can speed up this process by creating a shortcut for the database to find data quickly.

CREATE INDEX: Builds an index on a column to make queries faster.

DROP INDEX: Removes an index when it’s no longer needed.

By adding indexes to frequently searched columns, you can speed up your queries, which is especially helpful when working with large datasets.
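One way to check that an index is actually used is to compare query plans before and after creating it. This SQLite sketch uses `EXPLAIN QUERY PLAN`, whose wording varies between SQLite versions and is specific to SQLite; other databases have their own `EXPLAIN` variants. The table and index names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 500, float(i)) for i in range(10_000)],
)

QUERY = "SELECT order_id, amount FROM orders WHERE customer_id = ?"


def plan(sql):
    """Return the 'detail' text of each EXPLAIN QUERY PLAN row for sql."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))]


before = plan(QUERY)  # without an index: a full scan of orders
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # with the index: a search using idx_orders_customer
conn.execute("DROP INDEX idx_orders_customer")  # remove it once it is no longer needed
print(before)
print(after)
```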

Querying Databases for Data Science

Writing efficient SQL queries is key to good data science. Whether you're pulling data for analysis, combining data from different sources, or summarizing results, well-written queries help you get the right data quickly.

1. Optimizing Queries

Efficient queries make sure you’re not wasting time or computer resources. Here are a few tips:

Use SELECT Columns Instead of SELECT *: Select only the columns you need, not the entire table, to speed up queries.

Filter Early: Apply WHERE clauses early to reduce the number of rows processed.

Limit Results: Use LIMIT to restrict the number of rows returned when you only need a sample of the data.

Use Indexes: Make sure frequently queried columns are indexed for faster searches.

Following these practices ensures that your queries run faster, even when working with large databases.
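The tips above can be combined in one query. This is a SQLite sketch on a hypothetical `events` table with a wide `payload` column that the query deliberately does not fetch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT, payload TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, kind, payload) VALUES (?, ?, ?)",
    [(i % 100, "click" if i % 3 else "purchase", "x" * 200) for i in range(3_000)],
)
conn.execute("CREATE INDEX idx_events_kind ON events (kind)")  # index the filtered column

sample = conn.execute("""
    SELECT event_id, user_id          -- only the columns needed, not SELECT *
    FROM events
    WHERE kind = 'purchase'           -- filter rows before they are returned
    ORDER BY event_id
    LIMIT 5                           -- just a small sample
""").fetchall()
print(sample)  # [(1, 0), (4, 3), (7, 6), (10, 9), (13, 12)]
```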

2. Using Subqueries and CTEs

Subqueries and Common Table Expressions (CTEs) are helpful when you need to break complex queries into simpler parts.

Subqueries: Smaller queries within a larger query to filter or aggregate data.

CTEs: Temporary result sets that you can reference within a main query, making it easier to read and understand.

These tools help organize your SQL code and make it easier to manage, especially for more complicated tasks.
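A sketch of both techniques on a hypothetical `orders` table in SQLite: a subquery compares each order with the overall average, and a CTE names the per-customer totals before filtering them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
                 [(1, 100.0), (1, 300.0), (2, 50.0), (3, 500.0), (3, 20.0)])

# Subquery: orders larger than the overall average order value (194.0 here)
subquery_sql = """
    SELECT order_id, amount FROM orders
    WHERE amount > (SELECT AVG(amount) FROM orders)
    ORDER BY order_id
"""

# CTE: name an intermediate result (per-customer totals), then query it like a table
cte_sql = """
    WITH customer_totals AS (
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    )
    SELECT customer_id, total FROM customer_totals
    WHERE total > 200
    ORDER BY customer_id
"""

big_orders = conn.execute(subquery_sql).fetchall()
big_customers = conn.execute(cte_sql).fetchall()
print(big_orders)     # [(2, 300.0), (4, 500.0)]
print(big_customers)  # [(1, 400.0), (3, 520.0)]
```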

Connecting SQL to Other Data Science Tools

SQL is often used alongside other tools for deeper analysis. Many programming languages and data tools, like Python and R, work well with SQL databases, making it easy to pull data and then analyze it.

Python and SQL: Libraries like pandas and SQLAlchemy let Python users work directly with SQL databases and further analyze the data.

R and SQL: R connects to SQL databases using packages like DBI and RMySQL, allowing users to work with large datasets stored in databases.

By using SQL with these tools, you can handle and analyze data more effectively, combining the power of SQL with advanced data analysis techniques.
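A minimal sketch of the Python side using only the standard library (`sqlite3` and `statistics`) and a hypothetical `scores` table: SQL filters the rows and Python continues the analysis.

```python
import sqlite3
import statistics

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets rows be read by column name
conn.execute("CREATE TABLE scores (student TEXT, subject TEXT, score REAL)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?, ?)",
    [("Asha", "math", 82.0), ("Ben", "math", 74.0), ("Chen", "math", 91.0),
     ("Asha", "stats", 88.0), ("Ben", "stats", 69.0)],
)

# SQL does the retrieval and filtering...
rows = conn.execute("SELECT student, score FROM scores WHERE subject = ?", ("math",)).fetchall()

# ...and Python carries on with the analysis.
math_scores = [row["score"] for row in rows]
mean_score = statistics.mean(math_scores)
median_score = statistics.median(math_scores)
print(round(mean_score, 2), median_score)  # 82.33 82.0
```

With pandas installed, `pandas.read_sql_query(sql, conn)` accepts a `sqlite3` connection and returns the query result as a DataFrame instead.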

Conclusion

If you work with data, you need to know SQL. It allows you to manage, query, and analyze large datasets easily and efficiently. Whether you're combining data, filtering results, or generating summaries, SQL provides the tools you need to get the job done. By learning SQL, you’ll improve your ability to work with structured data and make smarter, data-driven decisions in your projects.


Elevate Your Career with SAP ABAP Online Training

Are you ready to boost your expertise in SAP ABAP? Our comprehensive online training program is designed to provide you with the skills and knowledge required to excel in this highly sought-after field.

Why Choose Our SAP ABAP Online Training?

Expert Instructors

Our training is led by industry veterans with extensive SAP ABAP experience. These professionals bring their real-world knowledge into the virtual classroom, ensuring you receive practical and relevant instruction.

Flexible Learning Schedule

We understand the demands of busy professionals and students. Our online training program offers the flexibility to learn at your own pace, with 24/7 access to course materials and recorded sessions.

Comprehensive Coverage

Our curriculum is meticulously designed to cover all essential aspects of SAP ABAP, from the basics to advanced topics. You will gain a thorough understanding of data dictionary objects, reports, module pool programming, and forms.

Hands-On Experience

Theory is important, but practice makes perfect. Our training includes numerous hands-on exercises and real-time projects to help you apply what you've learned and gain practical experience.

Certification Support

Achieving SAP ABAP certification can open many doors in your career. We provide extensive resources and support to help you prepare for certification exams, including practice tests and study guides.

Detailed Course Overview

Introduction to SAP ABAP

Learn the fundamentals of SAP ABAP, including its architecture, data types, and operators. Understand its role within the SAP ecosystem and its importance for enterprise solutions.

Data Dictionary

Gain a deep understanding of data dictionary objects. Learn to create and manage tables, views, indexes, data elements, and domains. Understand the relationships and dependencies between these objects.

Reports

Master the art of report generation. Develop classical reports, interactive reports, and ALV (ABAP List Viewer) reports. Learn to customize reports to meet specific business requirements.

Module Pool Programming

Dive into dialog programming with module pools. Create custom transactions and understand the process of screen creation, flow logic, and event handling.

Forms

Explore SAPscript and Smart Forms for designing and managing print layouts. Learn to create custom forms for invoices, purchase orders, and other business documents.

Enhancements and Modifications

Discover how to enhance standard SAP applications using techniques like user exits, BADIs (Business Add-Ins), and enhancement frameworks. Learn to modify standard functionalities without affecting the core system.

Performance Tuning

Understand the best practices for optimizing the performance of your ABAP programs. Learn about SQL performance tuning, efficient coding practices, and performance analysis tools.

Advanced Topics

Delve into advanced areas such as Object-Oriented ABAP (OOABAP), Web Dynpro for ABAP, and ABAP on SAP HANA. These modules will prepare you for the latest trends and technologies in the SAP world.

Get Started Today

Don’t miss out on the opportunity to enhance your SAP ABAP skills with our expert-led online training. Enroll now and take the first step towards advancing your career.

For more details and enrollment information, visit our website at www.feligrat.com or reach out to us via email at [email protected]. Our team is ready to assist you with any queries and provide additional information about the course.


Oracle SQL Reporting Tools and Solutions: A Comparative Analysis

Effective reporting is crucial for organizations to gain insights from their data and make informed decisions. Oracle SQL, a powerful database query language, plays a pivotal role in extracting, transforming, and presenting data for reporting purposes. However, to create meaningful reports efficiently, you need the right tools and solutions. In this article, we'll conduct a comparative analysis of various Oracle SQL reporting tools and solutions to help you choose the one that best fits your reporting needs.

1. Oracle SQL Developer

Overview: Oracle SQL Developer is a free, integrated development environment (IDE) that simplifies database development tasks, including SQL query writing and report generation. It offers a user-friendly interface with various features to aid in report creation.

Key Features:

SQL Query Builder: Allows users to build complex SQL queries visually.

Query Result Formatting: Provides options to customize the appearance of query results.

Report Exporting: Supports exporting query results to various formats (e.g., CSV, Excel, PDF).

Data Modeler: Helps design and manage database schemas for reporting.

PL/SQL Support: Allows you to create stored procedures and functions for report-specific calculations.

Pros:

Free and widely used.

Integrates seamlessly with Oracle databases.

Offers a variety of customization options for query results.

Suitable for developers and database administrators.

Cons:

Lacks advanced reporting features like data visualization.

Limited scheduling and automation capabilities.

2. Oracle Business Intelligence Enterprise Edition (OBIEE)

Overview: OBIEE is a comprehensive business intelligence platform offered by Oracle. It is designed for creating interactive dashboards, reports, and ad-hoc queries from Oracle databases and other data sources.

Key Features:

Data Visualization: Supports rich data visualization tools for creating interactive reports and dashboards.

Advanced Analytics: Provides predictive analytics and data mining capabilities.

Integration: Can integrate with various data sources, including Oracle databases, Excel, and other databases.

Report Scheduling: Allows for automated report delivery via email or other channels.

Security and Access Control: Offers robust security features for data access and sharing.

Pros:

Powerful data visualization and interactive reporting capabilities.

Supports large-scale enterprise reporting needs.

Suitable for organizations requiring advanced analytics and complex reporting.

Cons:

High cost of licensing and maintenance.

Has a steep learning curve for beginners.

May be overly complex for smaller organizations with basic reporting needs.

3. Oracle Application Express (APEX)

Overview: Oracle APEX is a low-code development platform that enables users to create web applications and reports, including SQL-based reports, with minimal coding.

Key Features:

SQL Workshop: Includes a SQL Query Builder for creating reports.

Interactive Reporting: Supports interactive, drill-down reports.

Templates and Themes: Offers customizable report templates and themes.

RESTful Web Services: Allows integration with other applications and services.

Scalability: Scales well for both small and large reporting projects.

Pros:

User-friendly, with a low learning curve.

Quick and easy report development with minimal coding.

Ideal for small to medium-sized organizations.

Cons:

May lack advanced reporting features required by larger enterprises.

Limited support for complex data modeling.

4. Oracle Reports

Overview: Oracle Reports is a traditional reporting tool included in Oracle's development suite. It allows developers to design and generate sophisticated reports from Oracle databases.

Key Features:

Report Design: Provides a design interface for creating pixel-perfect reports.

Data Sources: Supports various data sources, including Oracle databases.

Report Distribution: Offers report distribution options, including email and file output.

Integration: Can be integrated with other Oracle development tools.

Pros:

Suitable for organizations with legacy reporting needs.

Allows for precise report design control.

Integrates well with Oracle databases.

Cons:

Older technology with limited support for modern reporting features.

Not well-suited for complex data visualizations or interactive reports.

5. Oracle BI Publisher

Overview: Oracle BI Publisher is a reporting tool designed for generating highly formatted, pixel-perfect reports from various data sources, including Oracle databases.

Key Features:

Template-Based Reporting: Uses templates for report layout and formatting.

Data Integration: Supports multiple data sources, including databases and web services.

Output Formats: Offers output in various formats, including PDF, Excel, and Word.

Bursting and Scheduling: Allows automated report distribution and scheduling.

Pros:

Ideal for organizations requiring precise, highly formatted reports.

Supports data integration from multiple sources.

Suitable for organizations with regulatory reporting requirements.

Cons:

Limited support for interactive or data visualization-based reporting.

May not be cost-effective for organizations with diverse reporting needs.

Conclusion

Selecting the right Oracle SQL reporting tool or solution depends on your organization's specific requirements and resources. Oracle SQL Developer is a solid choice for basic reporting needs and is especially beneficial for developers and database administrators. OBIEE is a powerful option for enterprises requiring advanced analytics and interactive reporting, but it comes with a higher cost and complexity. Oracle APEX is a user-friendly, low-code solution for smaller organizations with simpler reporting needs. Oracle Reports and Oracle BI Publisher are suitable for organizations with legacy systems or highly formatted reporting requirements.

Ultimately, the choice of a reporting tool should align with your organization's reporting goals, budget, and technical capabilities. It may also be beneficial to consult with Oracle experts or seek vendor support to ensure that the selected tool meets your specific reporting requirements.


How to Create Moodle Custom Report Using SQL Queries on LearnerScript?


Web Application Penetration Testing Checklist

Web application penetration testing, or web pen testing, is a way for a business to test its own software by mimicking cyber attacks in order to find and fix vulnerabilities before the software is made public. As such, it involves more than simply shaking the doors and rattling the digital windows of your company's online applications. It uses a methodical approach, employing known, commonly used attacks and tools to test web apps for potential vulnerabilities. In the process, it can also uncover programming mistakes and faults and assess the overall vulnerability of the application to issues such as buffer overflows, input validation flaws, code execution, authentication bypass, SQL injection, CSRF, and XSS.

Penetration Types and Testing Stages

Penetration testing can be performed at various points during application development and by various parties including developers, hosts and clients. There are two essential types of web pen testing:

Internal: Tests are done on the enterprise's network while the app is still relatively secure and can reveal LAN vulnerabilities and susceptibility to an attack by an employee.

External: Testing is done from outside via the Internet, more closely approximating how customers, and hackers, would encounter the app once it is live.

The earlier in the software development process that web pen testing begins, the more efficient and cost-effective it will be. Fixing problems while an application is being built, rather than after it is completed and online, saves time, money, and potential damage to a company's reputation.

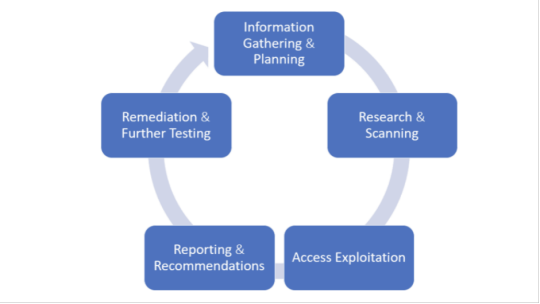

The web pen testing process typically includes five stages:

1. Information Gathering and Planning: This comprises forming goals for testing, such as what systems will be under scrutiny, and gathering further information on the systems that will be hosting the web app.

2. Research and Scanning: Before mimicking an actual attack, a lot can be learned by scanning the application's static code. This can reveal many vulnerabilities. In addition to that, a dynamic scan of the application in actual use online will reveal additional weaknesses, if it has any.

3. Access and Exploitation: Using a standard array of hacking attacks, from SQL injection to password cracking, this part of the test tries to exploit any vulnerabilities found. The goal is to determine whether data can be stolen or unauthorized access gained to other systems.

4. Reporting and Recommendations: At this stage a thorough analysis is done to reveal the type and severity of the vulnerabilities, the kind of data that might have been exposed, and whether authentication or authorization has been compromised.

5. Remediation and Further Testing: Before the application is launched, patches and fixes must be made to eliminate the detected vulnerabilities. Additional pen tests should then be performed to confirm that all loopholes are closed.

Information Gathering

1. Retrieve and analyze the robots.txt file using a tool such as GNU Wget.

2. Identify the software version, database details and other technical components. Request invalid pages so that the error codes reveal bugs.

3. Use techniques such as reverse DNS queries, DNS zone transfers and web-based DNS searches.

4. Perform directory-style searching and vulnerability scanning, and probe for URLs, using tools such as Nmap and Nessus.

5. Identify the application's entry points using tools such as Burp Proxy, OWASP ZAP, TamperIE, WebScarab and Tamper Data.

6. Perform TCP/ICMP and service fingerprinting using traditional fingerprinting tools such as Nmap and Amap.

7. Test for recognized file types, extensions and directories by requesting common file extensions such as .ASP, .EXE, .HTML and .PHP.

8. Examine the source code of the application's front-end pages.

9. Social media platforms often help with information gathering too. GitHub links and domain-name searches can reveal more about the target, and OSINT (open-source intelligence) tools can collect a great deal of information on it.
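Step 1 above only fetches robots.txt. The analysis can be as simple as listing its Disallow entries, because site owners often use them to hide admin or backup paths from crawlers.

The following is a minimal Python sketch under a few assumptions:
- The file has already been downloaded, for example with `wget https://example.com/robots.txt`, where example.com stands in for the target.
- The function name `disallowed_paths` is hypothetical.
- Only the basic `Field: value` syntax is handled.

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Return the non-empty Disallow paths listed in a robots.txt body."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop comments ("# ...") and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        field, _, value = line.partition(":")
        # An empty "Disallow:" means "allow everything", so skip it.
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


if __name__ == "__main__":
    sample = """User-agent: *
Disallow: /admin/
Disallow: /backup  # old site copy
Disallow:
Allow: /public
"""
    print(disallowed_paths(sample))  # ['/admin/', '/backup']
```

Each returned path is only a lead to check manually. robots.txt is advisory and says nothing about whether a path is actually protected.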

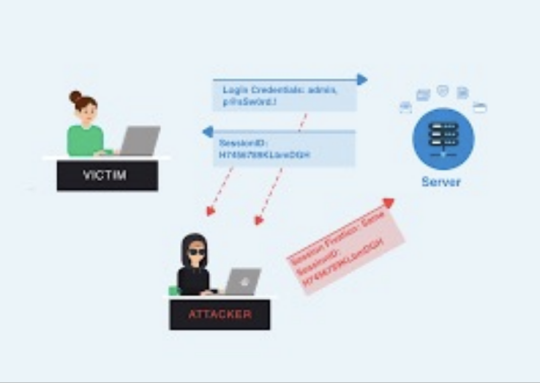

Authentication Testing

1. Check whether it is possible to “reuse” the session after logout, and verify the user session's idle timeout.

2. Verify whether any sensitive information remains stored in the browser cache or storage.

3. Test the password reset function by trying to crack the secret questions through social engineering and guessing.

4. Verify whether a “Remember my password” mechanism is implemented by checking the HTML code of the login page.

5. Check whether hardware devices communicate directly and independently with the authentication infrastructure over an additional communication channel.

6. Test CAPTCHA for authentication vulnerabilities.

7. Verify whether any weak security questions or answers are presented.

8. Test for SQL injection. A successful SQL injection could lead to the loss of customer trust, and attackers can steal PII (personally identifiable information) such as phone numbers, addresses and credit card details. Placing a web application firewall in front of the application can filter malicious SQL queries out of the traffic.
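Item 8 is easiest to understand with a concrete login query. The sketch below uses Python's built-in `sqlite3` module and an in-memory database with a hypothetical `users` table. Passwords are stored in plaintext only to keep the example short; real systems should store salted hashes.

The sketch contrasts two ways of building the login query:
- A query built by string concatenation, which the classic `alice' --` payload bypasses.
- A parameterized query, which is the standard fix and holds up whether or not a web application firewall is also in place.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # BAD: user input is pasted into the SQL text, so quotes and comments
    # in the input change the structure of the query.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # GOOD: "?" placeholders make the driver send the input as data only.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


if __name__ == "__main__":
    db = make_demo_db()
    payload = "alice' --"  # closes the string and comments out the password check
    print(login_vulnerable(db, payload, "wrong"))  # True: authentication bypassed
    print(login_safe(db, payload, "wrong"))        # False
```

During a test, a login that accepts `alice' --` with a wrong password is a strong sign that the query is built by concatenation.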

Authorization Testing

1. Test whether role and privilege manipulation grants access to resources.

2. Test for path traversal by performing input vector enumeration and analyzing the input validation functions present in the web application.

3. Test for cookie and parameter tampering using web spider tools.

4. Test for HTTP request tampering and check whether it grants illegal access to reserved resources.
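For item 2, it helps to know what correct input validation looks like, so a tester can recognize when it is missing. The following Python sketch shows one common defensive pattern, assuming files are served from a single base directory. It resolves the requested path and rejects it if it escapes that directory.

Two assumptions apply:
- `safe_join` is a hypothetical helper name.
- Payloads such as `..%2f` are assumed to have been URL-decoded (for example with `urllib.parse.unquote`) before the call.

```python
import os


def safe_join(base_dir: str, user_path: str) -> str:
    """Join user_path onto base_dir, refusing paths that escape base_dir."""
    base = os.path.realpath(base_dir)
    # realpath collapses "..", follows symlinks and makes the path absolute;
    # an absolute user_path such as "/etc/passwd" replaces base in join().
    target = os.path.realpath(os.path.join(base, user_path))
    if os.path.commonpath([base, target]) != base:
        raise ValueError(f"path traversal attempt: {user_path!r}")
    return target


if __name__ == "__main__":
    for candidate in ["reports/q1.pdf", "../../etc/passwd", "/etc/passwd"]:
        try:
            print("allowed:", safe_join("/srv/files", candidate))
        except ValueError as err:
            print("blocked:", err)
```

When testing, traversal payloads that the application accepts but this kind of check would reject point to missing or incomplete validation.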

Configuration Management Testing

1. Check the file directory, perform file enumeration, review the server and application documentation, and check the application's admin interfaces.

2. Analyze the web server banner and perform network scanning.

3. Check for old documentation, backups and referenced files that may expose source code, passwords or installation paths.

4. Verify the ports associated with the SSL/TLS services using Nmap and Nessus.

5. Review the OPTIONS HTTP method using Netcat and Telnet.

6. Test the enabled HTTP methods and test for XST (Cross-Site Tracing), which can be abused to steal the credentials of legitimate users.

7. Perform an application configuration management test to review information in the source code, log files and default error codes.
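Items 2, 5 and 6 all come down to reading response headers: the `Server` banner, and the `Allow` header returned for an OPTIONS request. Below is a minimal Python sketch, assuming the headers have already been collected.

Several choices here are assumptions for illustration:
- The optional `fetch_options` helper uses the standard `http.client` module and is only an example; Netcat or Telnet work just as well.
- The function names are hypothetical.
- The set of methods treated as risky is my own choice. TRACE is included because it is what makes XST possible.

Some servers return no `Allow` header at all. In that case each method has to be tried individually.

```python
import http.client

# Methods that usually should not be enabled on a public web app
# (assumption: adjust to what the application legitimately needs).
RISKY_METHODS = {"TRACE", "TRACK", "PUT", "DELETE", "CONNECT"}


def fetch_options(host: str, port: int = 80, path: str = "/") -> dict[str, str]:
    """Send an OPTIONS request and return the response headers (example only)."""
    conn = http.client.HTTPConnection(host, port, timeout=10)
    try:
        conn.request("OPTIONS", path)
        return dict(conn.getresponse().getheaders())
    finally:
        conn.close()


def audit_headers(headers: dict[str, str]) -> list[str]:
    """Flag risky HTTP methods and version-revealing banners."""
    h = {name.lower(): value for name, value in headers.items()}
    findings = []
    allowed = {m.strip().upper() for m in h.get("allow", "").split(",") if m.strip()}
    for method in sorted(allowed & RISKY_METHODS):
        findings.append(f"method enabled: {method}")
    server = h.get("server", "")
    if any(ch.isdigit() for ch in server):
        findings.append(f"Server banner reveals version: {server}")
    if "x-powered-by" in h:
        findings.append(f"X-Powered-By disclosed: {h['x-powered-by']}")
    return findings


if __name__ == "__main__":
    sample = {"Server": "Apache/2.4.41 (Ubuntu)", "Allow": "GET, POST, OPTIONS, TRACE"}
    for finding in audit_headers(sample):
        print(finding)
```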

Session Management Testing

1. Check the URLs in the restricted area to test for CSRF (Cross-Site Request Forgery).

2. Test for exposed session variables by inspecting encryption, session token reuse, proxies and caching.

3. Collect a sufficient number of cookie samples, analyze the algorithm used to generate them, and try to forge a valid cookie in order to perform an attack.

4. Test the cookie attributes using intercepting proxies such as Burp Proxy, OWASP ZAP or Tamper Data.

5. Test for session fixation, which attackers can use to steal a user's session (session hijacking).
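Items 3 and 4 can be partly automated once cookies have been captured through a proxy. The Python sketch below assumes the raw `Set-Cookie` header values and a list of sampled session IDs are already at hand. It does two things:
- Checks for the `Secure`, `HttpOnly` and `SameSite` attributes.
- Applies two crude predictability tests: repeated values, and purely numeric IDs that increase by a constant step.

Both function names are hypothetical. Passing these checks does not prove a token is unpredictable; they only catch the most obvious weaknesses.

```python
def audit_set_cookie(header: str) -> list[str]:
    """Report missing security attributes in one Set-Cookie header value."""
    parts = [p.strip() for p in header.split(";")]
    name = parts[0].split("=", 1)[0]
    attrs = {}
    for part in parts[1:]:
        if part:
            key, _, value = part.partition("=")
            attrs[key.strip().lower()] = value.strip().lower()
    problems = []
    if "secure" not in attrs:
        problems.append(f"{name}: missing Secure (may be sent over plain HTTP)")
    if "httponly" not in attrs:
        problems.append(f"{name}: missing HttpOnly (readable from JavaScript)")
    if attrs.get("samesite") not in ("lax", "strict"):
        problems.append(f"{name}: SameSite is not Lax or Strict (weaker CSRF defense)")
    return problems


def looks_predictable(samples: list[str]) -> bool:
    """Crude check on collected session IDs: duplicates or a simple counter."""
    if len(set(samples)) < len(samples):
        return True
    if len(samples) >= 3 and all(s.isdigit() for s in samples):
        values = [int(s) for s in samples]
        steps = {b - a for a, b in zip(values, values[1:])}
        return len(steps) == 1  # constant increment, like a counter
    return False


if __name__ == "__main__":
    for problem in audit_set_cookie("SESSIONID=abc123; Path=/; HttpOnly"):
        print(problem)
    print(looks_predictable(["1001", "1002", "1003"]))  # True
```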

Data Validation Testing

1. Perform source code analysis to find JavaScript coding errors.

2. Perform union query SQL injection testing, standard SQL injection testing and blind SQL injection testing using tools such as sqlninja, sqldumper and SQL Power Injector.

3. Analyze the HTML code and test whether stored XSS is present and can be leveraged, using tools such as XSS Proxy, Backframe, Burp Proxy, OWASP ZAP and XSS Assistant.

4. Perform LDAP injection testing to look for sensitive information about users and hosts.

5. Perform IMAP/SMTP injection testing to see whether the back-end mail server can be accessed.

6. Perform XPath injection testing to see whether confidential information can be accessed.

7. Perform XML injection testing to learn about the XML structure.

8. Perform code injection testing to identify input validation errors.

9. Perform buffer overflow testing to see whether input can expose stack and heap memory or alter the application's control flow.

10. Test for HTTP response splitting and request smuggling, which can affect cookies and HTTP redirect information.
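For the stored XSS test in item 3, the core question is whether user-supplied text comes back in the page unescaped. The Python sketch below assumes a hypothetical comment feature backed by an in-memory list. It contains three pieces:
- A renderer that fails the test.
- A renderer that passes it by escaping with the standard `html.escape`.
- A simple check that stores a probe payload and looks for it verbatim in the output.

Real applications should rely on their template engine's auto-escaping rather than hand-written functions like these.

```python
import html
from typing import Callable

PROBE = "<script>alert(1)</script>"  # harmless marker payload


def render_unsafe(comments: list[str]) -> str:
    # BAD: stored text is inserted into the HTML as-is.
    return "".join(f"<p>{c}</p>" for c in comments)


def render_safe(comments: list[str]) -> str:
    # GOOD: <, >, & and quotes are converted to HTML entities first.
    return "".join(f"<p>{html.escape(c)}</p>" for c in comments)


def stored_xss_found(render: Callable[[list[str]], str]) -> bool:
    """Store the probe as a comment, render the page, look for it verbatim."""
    store: list[str] = ["Nice article!"]
    store.append(PROBE)   # step 1: the "attacker" submits the payload
    page = render(store)  # step 2: a later visitor loads the page
    return PROBE in page


if __name__ == "__main__":
    print(stored_xss_found(render_unsafe))  # True
    print(stored_xss_found(render_safe))    # False
```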

Denial of Service Testing

1. Send a large number of requests that perform database operations and watch for slowdowns and error messages. A continuous ping command can also serve this purpose, and a script that opens browsers in an endless loop can help mimic a DDoS attack scenario.

2. Perform manual source code analysis and submit inputs of varying lengths to the application.

3. Test for SQL wildcard attacks to see how the application responds. Enterprise networks should also choose effective DDoS prevention services to protect themselves.

4. Test user-specified object allocation to find the maximum number of objects the application can handle.

5. Enter an extremely large number into any input field the application uses as a loop counter. Beyond testing, protect the website from future attacks and check what DDoS-related downtime would cost your company.

6. Use a script to automatically submit an extremely long value and check whether the server can log the request.
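Items 3 and 6 target search features that pass user input to a SQL `LIKE` clause. A term made of `%` and `_` wildcards can force an expensive scan, and an oversized value can exhaust resources. A common mitigation is to cap the input length and escape the wildcard characters so they match literally. The sketch below shows this in Python with the built-in `sqlite3` module. The `products` table, the `search_products` name and the 64-character limit are all assumptions for illustration.

```python
import sqlite3

MAX_TERM_LENGTH = 64  # hypothetical limit; pick one that fits the application


def escape_like(term: str) -> str:
    """Reject over-long input and make %, _ and \\ match literally in LIKE."""
    if len(term) > MAX_TERM_LENGTH:
        raise ValueError("search term too long")
    return term.replace("\\", "\\\\").replace("%", "\\%").replace("_", "\\_")


def search_products(conn: sqlite3.Connection, term: str) -> list[str]:
    pattern = f"%{escape_like(term)}%"
    rows = conn.execute(
        "SELECT name FROM products WHERE name LIKE ? ESCAPE '\\' ORDER BY name",
        (pattern,),
    )
    return [row[0] for row in rows]


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE products (name TEXT)")
    db.executemany("INSERT INTO products VALUES (?)", [("a_b",), ("axb",), ("100%",)])
    print(search_products(db, "_"))  # ['a_b'], not every row
```

Without the escaping step, searching for `_` would match every non-empty name. That is the behavior a wildcard test looks for.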

Conclusion:

Web applications present a unique and potentially vulnerable target for cyber criminals. Most web apps exist to make services and products accessible to customers and employees, but it is critical that they do not make it easier for criminals to break into systems. Planning carefully around the information gathered, and executing the tests over multiple iterations, will greatly reduce vulnerabilities and risk.
