#Data Analysis with Power BI

Text

Bridging Gaps in Distribution: Integrating SAP with Microsoft's Power Platform - Pinnacle

The distribution industry is a complex ecosystem involving inventory management, customer service, logistics, and real-time decision-making. For businesses leveraging SAP as their core Enterprise Resource Planning (ERP) system, efficiency often hinges on maximizing the utility of their data. However, SAP alone may not always provide the level of agility and customization needed to address nuanced distribution workflows. This is where Microsoft’s Power Platform, comprising Power BI, Power Apps, and Power Automate, comes into play. When integrated with SAP, this trio empowers businesses to enhance data analysis, streamline operations, and improve customer service. Let’s explore how these tools complement SAP to transform distribution workflows. Join us now [email protected]

#Data Analysis with Power BI#Integrating Power BI with SAP#Applications of Power Apps in Distribution#SAP and Microsoft’s Power Platform

Text

#Finance#Business#Work Meme#Work Humor#Excel#Hilarious#funny meme#funny#accounting#office humor#consulting#big data#data analysis#data visualization#data analytics#data#dashboard commentary#tableau#power bi

Text

🚀 Ready to become a Data Science pro? Join our comprehensive Data Science Course and unlock the power of data! 📊💡

🔍 Learn: Excel, Power BI, Python & R, Machine Learning, Data Visualization, Real-world Projects

👨🏫 Taught by industry experts 💼 Career support & networking

Text

Power BI Data Analysis

You can start Power BI training courses now in Thane. The training covers analyzing and interpreting data with Power BI to derive actionable insights, which may involve creating visualizations, reports, and dashboards. You will also learn to design and develop reports and dashboards, which involves transforming raw data into a format suitable for analysis, and to develop and maintain the data infrastructure that supports Power BI reports so that data stays accessible and accurate. Join now to start your career with the Edureka learning center. For more information, contact us.

For More Information

Name: edurekathane

Email Id: [email protected]

Address: 3rd Floor, Guruprerana, Opp. Jagdish Book Depot, Above Choice Interiors, Naik Wadi, Near Thane Station, Thane (W) 400602

Contact no: 9987408100

Text

Elevate your business operations with DOIT-BI's high-quality solutions that not only streamline workflows but also accelerate data analysis, resulting in enhanced overall productivity.

Text

☝ High-quality solutions by DOIT-BI:

1. Help streamline workflows

2. Speed up data analysis

3. Improve overall productivity.

Text

#business analytics#business analysis training#business analytics institute in india#power bi#business analyst certification#businessanalysis#business analyst#business analysis course#business analyst careers#business analyst skills#business analyst course#data analysis#data analytics#tableau#excel

Text

Power BI - The Complete Course

🔍 Master Data Analysis with This End-to-End Power BI Course

Whether you're just starting out or aiming to upskill, our Power BI Data Analysis Course is designed to take you from beginner to intermediate level with confidence. This hands-on course enables you to independently carry out real-world data analysis using Power BI.

💡 What You’ll Learn:

Throughout the course, you’ll learn how to extract data from multiple sources like Excel, web data, and SQL Server. You’ll also get in-depth training on how to clean, transform, and model data to uncover insights.

From building interactive dashboards to using DAX functions for calculations, this course provides a comprehensive approach. You’ll even discover how to leverage AI insights for trend detection and anomaly spotting.

🛠️ Key Topics Covered:

Power BI Installation & Setup

Importing & Transforming Data

Connecting to SQL Server & Web Data

Data Modeling & Relationships

Calculated Columns & Quick Measures

Visualizing Data with Charts & Filters

Publishing Reports to Power BI Service

Deep Dive into DAX Functions

🎯 Learning Outcomes: By the end of this course, you will:

Understand Power BI's core features and business value

Clean and prepare data for analysis

Build professional dashboards and reports

Use AI-powered tools to derive smart insights

Navigate and share dashboards using Power BI Service

All practice datasets are provided so you can apply what you learn and build your portfolio. Perfect for aspiring data analysts, this course helps you turn raw data into powerful business decisions.

Ready to transform your data skills? Start learning Power BI today!

Text

Your Data Career Starts Here: DICS Institute in Laxmi Nagar

In a world driven by data, those who can interpret it hold the power. From predicting market trends to driving smarter business decisions, data analysts are shaping the future. If you’re looking to ride the data wave and build a high-demand career, your journey begins with choosing the Best Data Analytics Institute in Laxmi Nagar.

Why Data Analytics? Why Now?

Companies across the globe are investing heavily in data analytics to stay competitive. This boom has opened up exciting opportunities for data professionals with the right skills. But success in this field depends on one critical decision — where you learn. And that’s where Laxmi Nagar, Delhi’s thriving educational hub, comes into play.

Discover Excellence at the Best Data Analytics Institute in Laxmi Nagar

When it comes to learning data analytics, you need more than just lectures — you need an experience. The Best Data Analytics Institute in Laxmi Nagar offers exactly that, combining practical training with industry insights to ensure you’re not just learning, but evolving.

Here’s what makes it a top choice for aspiring analysts:

Real-World Curriculum: Learn the tools and technologies actually used in the industry — Python, SQL, Power BI, Excel, Tableau, and more — with modules designed to match current job market needs.

Project-Based Learning: The institute doesn’t just teach concepts — it puts them into practice. You’ll work on live projects, business case studies, and analytics problems that mimic real-life scenarios.

Expert Mentors: Get trained by data professionals with years of hands-on experience. Their mentorship gives you an insider’s edge and prepares you to tackle interviews and workplace challenges with confidence.

Smart Class Formats: Whether you’re a student, jobseeker, or working professional, the flexible batch options — including weekend and online classes — ensure you don’t miss a beat.

Career Support That Works: From resume crafting and portfolio building to mock interviews and job referrals, the placement team works closely with students until they land their dream role.

Enroll in the Best Data Analytics Course in Laxmi Nagar

The Best Data Analytics Course in Laxmi Nagar goes beyond the basics. It’s a complete roadmap for mastering data — right from data collection and cleaning, to analysis, visualization, and even predictive modeling.

This course is ideal for beginners, professionals looking to upskill, or anyone ready for a career switch. You’ll gain hands-on expertise, problem-solving skills, and a strong foundation that puts you ahead of the curve.

Your Data Career Starts Here

The future belongs to those who understand data. With the Best Data Analytics Institute in Laxmi Nagar and the Best Data Analytics Course in Laxmi Nagar, you’re not just preparing for a job — you’re investing in a thriving, future-proof career.

Ready to become a data expert? Enroll today and take the first step toward transforming your future — one dataset at a time.

#Data Analytics#Data Science#Business Intelligence#Machine Learning#Data Visualization#Python for Data Analysis#SQL Training#Power BI

Text

Ravi Bommakanti, CTO of App Orchid – Interview Series

New Post has been published on https://thedigitalinsider.com/ravi-bommakanti-cto-of-app-orchid-interview-series/

Ravi Bommakanti, Chief Technology Officer at App Orchid, leads the company’s mission to help enterprises operationalize AI across applications and decision-making processes. App Orchid’s flagship product, Easy Answers™, enables users to interact with data using natural language to generate AI-powered dashboards, insights, and recommended actions.

The platform integrates structured and unstructured data—including real-time inputs and employee knowledge—into a predictive data fabric that supports strategic and operational decisions. With in-memory Big Data technology and a user-friendly interface, App Orchid streamlines AI adoption through rapid deployment, low-cost implementation, and minimal disruption to existing systems.

Let’s start with the big picture—what does “agentic AI” mean to you, and how is it different from traditional AI systems?

Agentic AI represents a fundamental shift from the static execution typical of traditional AI systems to dynamic orchestration. To me, it’s about moving from rigid, pre-programmed systems to autonomous, adaptable problem-solvers that can reason, plan, and collaborate.

What truly sets agentic AI apart is its ability to leverage the distributed nature of knowledge and expertise. Traditional AI often operates within fixed boundaries, following predetermined paths. Agentic systems, however, can decompose complex tasks, identify the right specialized agents for sub-tasks—potentially discovering and leveraging them through agent registries—and orchestrate their interaction to synthesize a solution. This concept of agent registries allows organizations to effectively ‘rent’ specialized capabilities as needed, mirroring how human expert teams are assembled, rather than being forced to build or own every AI function internally.
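To make the registry idea concrete, here is a minimal Python sketch, assuming a registry is simply a lookup from a capability name to an agent. `AgentRegistry`, `orchestrate`, and the capability names are hypothetical illustrations, not App Orchid's or any vendor's API, and the task decomposition is a hard-coded plan rather than something an agent reasons out on its own.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical agent shape: takes a sub-task description, returns a result string.
Agent = Callable[[str], str]


class AgentRegistry:
    """Toy registry: maps a capability name to the agent that provides it."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, capability: str, agent: Agent) -> None:
        self._agents[capability] = agent

    def discover(self, capability: str) -> Agent:
        # A real registry might search internal and external catalogs; this is a dict lookup.
        if capability not in self._agents:
            raise LookupError(f"no agent registered for {capability!r}")
        return self._agents[capability]


def orchestrate(plan: List[Tuple[str, str]], registry: AgentRegistry) -> List[str]:
    """Run each (capability, sub_task) step with whichever agent the registry returns."""
    return [registry.discover(capability)(sub_task) for capability, sub_task in plan]


# Usage: two stand-in "specialized agents" and a pre-decomposed plan.
registry = AgentRegistry()
registry.register("sql", lambda task: f"SQL agent handled: {task}")
registry.register("summarize", lambda task: f"Summary agent handled: {task}")

plan = [("sql", "fetch Q3 sales by region"), ("summarize", "explain the Q3 trend")]
for line in orchestrate(plan, registry):
    print(line)
```

Swapping in a different provider for a capability only means re-registering it; the orchestrator never changes, which is the "rent rather than build" idea in miniature.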

So, instead of monolithic systems, the future lies in creating ecosystems where specialized agents can be dynamically composed and coordinated – much like a skilled project manager leading a team – to address complex and evolving business challenges effectively.

How is Google Agentspace accelerating the adoption of agentic AI across enterprises, and what’s App Orchid’s role in this ecosystem?

Google Agentspace is a significant accelerator for enterprise AI adoption. By providing a unified foundation to deploy and manage intelligent agents connected to various work applications, and leveraging Google’s powerful search and models like Gemini, Agentspace enables companies to transform siloed information into actionable intelligence through a common interface.

App Orchid acts as a vital semantic enablement layer within this ecosystem. While Agentspace provides the agent infrastructure and orchestration framework, our Easy Answers platform tackles the critical enterprise challenge of making complex data understandable and accessible to agents. We use an ontology-driven approach to build rich knowledge graphs from enterprise data, complete with business context and relationships – precisely the understanding agents need.

This creates a powerful synergy: Agentspace provides the robust agent infrastructure and orchestration capabilities, while App Orchid provides the deep semantic understanding of complex enterprise data that these agents require to operate effectively and deliver meaningful business insights. Our collaboration with the Google Cloud Cortex Framework is a prime example, helping customers drastically reduce data preparation time (up to 85%) while leveraging our platform’s industry-leading 99.8% text-to-SQL accuracy for natural language querying. Together, we empower organizations to deploy agentic AI solutions that truly grasp their business language and data intricacies, accelerating time-to-value.

What are real-world barriers companies face when adopting agentic AI, and how does App Orchid help them overcome these?

The primary barriers we see revolve around data quality, the challenge of evolving security standards – particularly ensuring agent-to-agent trust – and managing the distributed nature of enterprise knowledge and agent capabilities.

Data quality remains the bedrock issue. Agentic AI, like any AI, provides unreliable outputs if fed poor data. App Orchid tackles this foundationally by creating a semantic layer that contextualizes disparate data sources. Building on this, our unique crowdsourcing features within Easy Answers engage business users across the organization—those who understand the data’s meaning best—to collaboratively identify and address data gaps and inconsistencies, significantly improving reliability.

Security presents another critical hurdle, especially as agent-to-agent communication becomes common, potentially spanning internal and external systems. Establishing robust mechanisms for agent-to-agent trust and maintaining governance without stifling necessary interaction is key. Our platform focuses on implementing security frameworks designed for these dynamic interactions.

Finally, harnessing distributed knowledge and capabilities effectively requires advanced orchestration. App Orchid leverages concepts like the Model Context Protocol (MCP), which is increasingly pivotal. This enables the dynamic sourcing of specialized agents from repositories based on contextual needs, facilitating fluid, adaptable workflows rather than rigid, pre-defined processes. This approach aligns with emerging standards, such as Google’s Agent2Agent protocol, designed to standardize communication in multi-agent systems. We help organizations build trusted and effective agentic AI solutions by addressing these barriers.

Can you walk us through how Easy Answers™ works—from natural language query to insight generation?

Easy Answers transforms how users interact with enterprise data, making sophisticated analysis accessible through natural language. Here’s how it works:

Connectivity: We start by connecting to the enterprise’s data sources – we support over 200 common databases and systems. Crucially, this often happens without requiring data movement or replication, connecting securely to data where it resides.

Ontology Creation: Our platform automatically analyzes the connected data and builds a comprehensive knowledge graph. This structures the data into business-centric entities we call Managed Semantic Objects (MSOs), capturing the relationships between them.

Metadata Enrichment: This ontology is enriched with metadata. Users provide high-level descriptions, and our AI generates detailed descriptions for each MSO and its attributes (fields). This combined metadata provides deep context about the data’s meaning and structure.

Natural Language Query: A user asks a question in plain business language, like “Show me sales trends for product X in the western region compared to last quarter.”

Interpretation & SQL Generation: Our NLP engine uses the rich metadata in the knowledge graph to understand the user’s intent, identify the relevant MSOs and relationships, and translate the question into precise data queries (like SQL). We achieve an industry-leading 99.8% text-to-SQL accuracy here.

Insight Generation (Curations): The system retrieves the data and determines the most effective way to present the answer visually. In our platform, these interactive visualizations are called ‘curations’. Users can automatically generate or pre-configure them to align with specific needs or standards.

Deeper Analysis (Quick Insights): For more complex questions or proactive discovery, users can leverage Quick Insights. This feature allows them to easily apply ML algorithms shipped with the platform to specified data fields to automatically detect patterns, identify anomalies, or validate hypotheses without needing data science expertise.

This entire process, often completed in seconds, democratizes data access and analysis, turning complex data exploration into a simple conversation.
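The query-to-SQL steps above rest on a semantic layer that maps business terms to physical columns. The following Python sketch shows only that mapping idea, assuming a single pre-joined view called `sales_view`; the view, columns, and function names are hypothetical, and the keyword matching is a deliberately naive stand-in, far simpler than the NLP engine described in the interview.

```python
from typing import Dict, Tuple

# Hypothetical semantic layer over one pre-joined view named "sales_view".
# Business terms on the left; physical SQL on the right.
MEASURES: Dict[str, str] = {
    "sales": "SUM(net_amount)",
    "order count": "COUNT(order_id)",
}
DIMENSIONS: Dict[str, str] = {
    "region": "region_name",
    "product": "product_name",
}
VIEW = "sales_view"


def parse_question(question: str) -> Tuple[str, str]:
    """Naive intent parsing: expects '<...measure...> by <...dimension...>'."""
    text = question.lower().strip().rstrip("?")
    if " by " not in text:
        raise ValueError("expected a question of the form '<measure> by <dimension>'")
    measure_part, dimension_part = text.split(" by ", 1)
    measure = next((m for m in MEASURES if m in measure_part), None)
    dimension = next((d for d in DIMENSIONS if d in dimension_part), None)
    if measure is None or dimension is None:
        raise ValueError("question uses a term the semantic layer does not define")
    return measure, dimension


def to_sql(question: str) -> str:
    """Translate a question into SQL using only the mappings above."""
    measure, dimension = parse_question(question)
    column = DIMENSIONS[dimension]
    alias = measure.replace(" ", "_")
    return (
        f"SELECT {column}, {MEASURES[measure]} AS {alias} "
        f"FROM {VIEW} GROUP BY {column}"
    )


print(to_sql("Show me sales by region"))
```

The useful property is that the user never names `net_amount` or `region_name`; only the semantic layer knows the physical schema, so a schema change means editing the mapping rather than retraining users.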

How does Easy Answers bridge siloed data in large enterprises and ensure insights are explainable and traceable?

Data silos are a major impediment in large enterprises. Easy Answers addresses this fundamental challenge through our unique semantic layer approach.

Instead of costly and complex physical data consolidation, we create a virtual semantic layer. Our platform builds a unified logical view by connecting to diverse data sources where they reside. This layer is powered by our knowledge graph technology, which maps data into Managed Semantic Objects (MSOs), defines their relationships, and enriches them with contextual metadata. This creates a common business language understandable by both humans and AI, effectively bridging technical data structures (tables, columns) with business meaning (customers, products, sales), regardless of where the data physically lives.

Ensuring insights are trustworthy requires both traceability and explainability:

Traceability: We provide comprehensive data lineage tracking. Users can drill down from any curation or insight back to the source data, viewing all applied transformations, filters, and calculations. This provides full transparency and auditability, crucial for validation and compliance.

Explainability: Insights are accompanied by natural language explanations. These summaries articulate what the data shows and why it’s significant in business terms, translating complex findings into actionable understanding for a broad audience.

This combination bridges silos by creating a unified semantic view and builds trust through clear traceability and explainability.
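Traceability of this kind can be approximated by carrying the lineage alongside the data itself. This Python sketch assumes every transformation goes through one `apply` method that records a human-readable step; `TracedTable`, `load`, and the `erp.orders` source name are hypothetical, and a real lineage system would likely record much more (query text, schemas, timestamps).

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class TracedTable:
    """Rows plus the ordered list of steps that produced them."""

    rows: List[Row]
    lineage: List[str] = field(default_factory=list)

    def apply(self, step: str, fn: Callable[[List[Row]], List[Row]]) -> "TracedTable":
        # Never mutate in place: each step returns a new table with one more lineage entry.
        return TracedTable(fn(self.rows), self.lineage + [step])


def load(source: str, rows: List[Row]) -> TracedTable:
    return TracedTable(list(rows), [f"source: {source}"])


orders = load("erp.orders", [
    {"region": "West", "amount": 120.0},
    {"region": "East", "amount": 80.0},
    {"region": "West", "amount": 50.0},
])
west = orders.apply("filter: region == 'West'",
                    lambda rows: [r for r in rows if r["region"] == "West"])
total = west.apply("aggregate: SUM(amount)",
                   lambda rows: [{"total": sum(r["amount"] for r in rows)}])

print(total.rows)
for step in total.lineage:
    print(step)
```

Because every number in `total.rows` carries the chain `source -> filter -> aggregate`, an auditor can replay the steps against the source rather than trusting the final figure.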

How does your system ensure transparency in insights, especially in regulated industries where data lineage is critical?

Transparency is absolutely non-negotiable for AI-driven insights, especially in regulated industries where auditability and defensibility are paramount. Our approach ensures transparency across three key dimensions:

Data Lineage: This is foundational. As mentioned, Easy Answers provides end-to-end data lineage tracking. Every insight, visualization, or number can be traced back meticulously through its entire lifecycle—from the original data sources, through any joins, transformations, aggregations, or filters applied—providing the verifiable data provenance required by regulators.

Methodology Visibility: We avoid the ‘black box’ problem. When analytical or ML models are used (e.g., via Quick Insights), the platform clearly documents the methodology employed, the parameters used, and relevant evaluation metrics. This ensures the ‘how’ behind the insight is as transparent as the ‘what’.

Natural Language Explanation: Translating technical outputs into understandable business context is crucial for transparency. Every insight is paired with plain-language explanations describing the findings, their significance, and potentially their limitations, ensuring clarity for all stakeholders, including compliance officers and auditors.

Furthermore, we incorporate additional governance features for industries with specific compliance needs, such as role-based access controls, approval workflows for certain actions or reports, and comprehensive audit logs tracking user activity and system operations. This multi-layered approach ensures insights are accurate, fully transparent, explainable, and defensible.
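Role-based access control paired with an audit log can be sketched in a few lines. This Python example assumes a static role-to-permission table and an in-memory log; the role names, actions, and `authorize` function are hypothetical, and a production system would load policies from configuration and write to tamper-resistant storage.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"view_report"},
    "manager": {"view_report", "approve_report"},
    "compliance_officer": {"view_report", "view_audit_log"},
}

# Each entry: (UTC timestamp, user, action, resource, allowed?)
AuditEntry = Tuple[str, str, str, str, bool]
audit_log: List[AuditEntry] = []


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check the role's permissions and log the attempt, whether allowed or denied."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        (datetime.now(timezone.utc).isoformat(), user, action, resource, allowed)
    )
    return allowed


print(authorize("dana", "analyst", "approve_report", "q3_churn_report"))  # denied
print(authorize("lee", "manager", "approve_report", "q3_churn_report"))   # allowed
for entry in audit_log:
    print(entry)
```

Logging denials as well as grants is the design choice that matters for auditors: the log shows who tried to do what, not only what succeeded.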

How is App Orchid turning AI-generated insights into action with features like Generative Actions?

Generating insights is valuable, but the real goal is driving business outcomes. With the correct data and context, an agentic ecosystem can drive actions to bridge the critical gap between insight discovery and tangible action, moving analytics from a passive reporting function to an active driver of improvement.

Here’s how it works: When the Easy Answers platform identifies a significant pattern, trend, anomaly, or opportunity through its analysis, it leverages AI to propose specific, contextually relevant actions that could be taken in response.

These aren’t vague suggestions; they are concrete recommendations. For instance, instead of just flagging customers at high risk of churn, it might recommend specific retention offers tailored to different segments, potentially calculating the expected impact or ROI, and even drafting communication templates. When generating these recommendations, the system considers business rules, constraints, historical data, and objectives.

Crucially, this maintains human oversight. Recommended actions are presented to the appropriate users for review, modification, approval, or rejection. This ensures business judgment remains central to the decision-making process while AI handles the heavy lifting of identifying opportunities and formulating potential responses.

Once an action is approved, we can trigger an agentic flow for seamless execution through integrations with operational systems. This could mean triggering a workflow in a CRM, updating a forecast in an ERP system, launching a targeted marketing task, or initiating another relevant business process – thus closing the loop from insight directly to outcome.
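The human-in-the-loop gate described here can be modeled as a small state machine in which only an approved action may execute. This Python sketch is an assumption-laden illustration: `ProposedAction`, `Status`, and the lambda standing in for a CRM workflow trigger are hypothetical, not App Orchid's Generative Actions API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"


@dataclass
class ProposedAction:
    description: str
    execute: Callable[[], str]  # hypothetical hook into a CRM/ERP integration
    status: Status = Status.PROPOSED
    reviewer: Optional[str] = None

    def _review(self, reviewer: str, outcome: Status) -> None:
        if self.status is not Status.PROPOSED:
            raise ValueError(f"action already {self.status.value}")
        self.status = outcome
        self.reviewer = reviewer

    def approve(self, reviewer: str) -> None:
        self._review(reviewer, Status.APPROVED)

    def reject(self, reviewer: str) -> None:
        self._review(reviewer, Status.REJECTED)

    def run(self) -> str:
        # The gate: nothing reaches an operational system without human approval.
        if self.status is not Status.APPROVED:
            raise PermissionError(f"cannot execute an action that is {self.status.value}")
        result = self.execute()
        self.status = Status.EXECUTED
        return result


offer = ProposedAction(
    "Send a retention offer to the high-churn-risk segment",
    execute=lambda: "CRM workflow 'retention_offer' triggered",
)
try:
    offer.run()
except PermissionError as err:
    print("blocked:", err)
offer.approve("account_manager_1")
print(offer.run())
```

Recording the reviewer on the action keeps the approval decision attached to the outcome, which also feeds the audit trail discussed earlier.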

How are knowledge graphs and semantic data models central to your platform’s success?

Knowledge graphs and semantic data models are the absolute core of the Easy Answers platform; they elevate it beyond traditional BI tools that often treat data as disconnected tables and columns devoid of real-world business context. Our platform uses them to build an intelligent semantic layer over enterprise data.

This semantic foundation is central to our success for several key reasons:

Enables True Natural Language Interaction: The semantic model, structured as a knowledge graph with Managed Semantic Objects (MSOs), properties, and defined relationships, acts as a ‘Rosetta Stone’. It translates the nuances of human language and business terminology into the precise queries needed to retrieve data, allowing users to ask questions naturally without knowing underlying schemas. This is key to our high text-to-SQL accuracy.

Preserves Critical Business Context: Unlike simple relational joins, our knowledge graph explicitly captures the rich, complex web of relationships between business entities (e.g., how customers interact with products through support tickets and purchase orders). This allows for deeper, more contextual analysis reflecting how the business operates.

Provides Adaptability and Scalability: Semantic models are more flexible than rigid schemas. As business needs evolve or new data sources are added, the knowledge graph can be extended and modified incrementally without requiring a complete overhaul, maintaining consistency while adapting to change.

This deep understanding of data context provided by our semantic layer is fundamental to everything Easy Answers does, from basic Q&A to advanced pattern detection with Quick Insights, and it forms the essential foundation for our future agentic AI capabilities, ensuring agents can reason over data meaningfully.
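The relationship-preserving idea can be illustrated by treating MSOs as nodes and business relationships as edges, then searching for a connecting path when a question spans two entities. This is a minimal Python sketch under that assumption; the entity and relationship names echo the customers/products example in the answer above, but `build_graph`, `find_path`, and the breadth-first search are hypothetical illustrations, not App Orchid's implementation.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical MSO relationships: (from_entity, relationship, to_entity)
RELATIONSHIPS: List[Tuple[str, str, str]] = [
    ("Customer", "opened", "SupportTicket"),
    ("SupportTicket", "concerns", "Product"),
    ("Customer", "placed", "PurchaseOrder"),
    ("PurchaseOrder", "contains", "Product"),
]

Graph = Dict[str, List[Tuple[str, str]]]


def build_graph(edges: List[Tuple[str, str, str]]) -> Graph:
    """Adjacency list that can be walked in both directions."""
    graph: Graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))
        graph.setdefault(dst, []).append((f"inverse of {rel}", src))
    return graph


def find_path(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest relationship path between two entities."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [f"-{rel}->", nxt])
    return None


graph = build_graph(RELATIONSHIPS)
print(find_path(graph, "Customer", "Product"))
```

A path such as Customer, SupportTicket, Product is exactly the join sequence a query generator would need, derived from declared business relationships rather than hand-written joins.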

What foundational models do you support, and how do you allow organizations to bring their own AI/ML models into the workflow?

We believe in an open and flexible approach, recognizing the rapid evolution of AI and respecting organizations’ existing investments.

For foundational models, we maintain integrations with leading options from multiple providers, including Google’s Gemini family, OpenAI’s GPT models, and prominent open-source alternatives like Llama. This allows organizations to choose models that best fit their performance, cost, governance, or specific capability needs. These models power various platform features, including natural language understanding for queries, SQL generation, insight summarization, and metadata generation.

Beyond these, we provide robust pathways for organizations to bring their own custom AI/ML models into the Easy Answers workflow:

Models developed in Python can often be integrated directly via our AI Engine.

We offer seamless integration capabilities with major cloud ML platforms such as Google Vertex AI and Amazon SageMaker, allowing models trained and hosted there to be invoked.

Critically, our semantic layer plays a key role in making these potentially complex custom models accessible. By linking model inputs and outputs to the business concepts defined in our knowledge graph (MSOs and properties), we allow non-technical business users to leverage advanced predictive, classification or causal models (e.g., through Quick Insights) without needing to understand the underlying data science – they interact with familiar business terms, and the platform handles the technical translation. This truly democratizes access to sophisticated AI/ML capabilities.

Looking ahead, what trends do you see shaping the next wave of enterprise AI—particularly in agent marketplaces and no-code agent design?

The next wave of enterprise AI is moving towards highly dynamic, composable, and collaborative ecosystems. Several converging trends are driving this:

Agent Marketplaces and Registries: We’ll see a significant rise in agent marketplaces functioning alongside internal agent registries. This facilitates a shift from monolithic builds to a ‘rent and compose’ model, where organizations can dynamically discover and integrate specialized agents—internal or external—with specific capabilities as needed, dramatically accelerating solution deployment.

Standardized Agent Communication: For these ecosystems to function, agents need common languages. Standardized agent-to-agent communication protocols, such as MCP (Model Context Protocol), which we leverage, and initiatives like Google’s Agent2Agent protocol, are becoming essential for enabling seamless collaboration, context sharing, and task delegation between agents, regardless of who built them or where they run.

Dynamic Orchestration: Static, pre-defined workflows will give way to dynamic orchestration. Intelligent orchestration layers will select, configure, and coordinate agents at runtime based on the specific problem context, leading to far more adaptable and resilient systems.

No-Code/Low-Code Agent Design: Democratization will extend to agent creation. No-code and low-code platforms will empower business experts, not just AI specialists, to design and build agents that encapsulate specific domain knowledge and business logic, further enriching the pool of available specialized capabilities.

App Orchid’s role is providing the critical semantic foundation for this future. For agents in these dynamic ecosystems to collaborate effectively and perform meaningful tasks, they need to understand the enterprise data. Our knowledge graph and semantic layer provide exactly that contextual understanding, enabling agents to reason and act upon data in relevant business terms.

How do you envision the role of the CTO evolving in a future where decision intelligence is democratized through agentic AI?

The democratization of decision intelligence via agentic AI fundamentally elevates the role of the CTO. It shifts from being primarily a steward of technology infrastructure to becoming a strategic orchestrator of organizational intelligence.

Key evolutions include:

From Systems Manager to Ecosystem Architect: The focus moves beyond managing siloed applications to designing, curating, and governing dynamic ecosystems of interacting agents, data sources, and analytical capabilities. This involves leveraging agent marketplaces and registries effectively.

Data Strategy as Core Business Strategy: Ensuring data is not just available but semantically rich, reliable, and accessible becomes paramount. The CTO will be central in building the knowledge graph foundation that powers intelligent systems across the enterprise.

Evolving Governance Paradigms: New governance models will be needed for agentic AI – addressing agent trust, security, ethical AI use, auditability of automated decisions, and managing emergent behaviors within agent collaborations.

Championing Adaptability: The CTO will be crucial in embedding adaptability into the organization’s technical and operational fabric, creating environments where AI-driven insights lead to rapid responses and continuous learning.

Fostering Human-AI Collaboration: A key aspect will be cultivating a culture and designing systems where humans and AI agents work synergistically, augmenting each other’s strengths.

Ultimately, the CTO becomes less about managing IT costs and more about maximizing the organization’s ‘intelligence potential’. It’s a shift towards being a true strategic partner, enabling the entire business to operate more intelligently and adaptively in an increasingly complex world.

Thank you for the great interview. Readers who wish to learn more should visit App Orchid.

#adoption#agent#Agentic AI#agents#ai#AI adoption#AI AGENTS#AI systems#AI-powered#AI/ML#Algorithms#Amazon#amp#Analysis#Analytics#anomalies#anomaly#app#App Orchid#applications#approach#attributes#audit#autonomous#bedrock#bi#bi tools#Big Data#black box#box

Text

How to use COPILOT in Microsoft Word | Tutorial

This page contains a video tutorial by Reza Dorrani on how to use Microsoft 365 Copilot in Microsoft Word. The video covers: Starting a draft with Copilot in Word. Adding content to an existing document using Copilot. Rewriting text with Copilot. Generating summaries with Copilot. Overall, using Copilot as a dynamic writing companion to enhance productivity in Word. Is there something…

#Advanced Excel#Automation tools#Collaboration#copilot#Data analysis#Data management#Data visualization#Excel#Excel formulas#Excel functions#Excel skills#Excel tips#Excel tutorials#MIcrosoft Copilot#Microsoft Excel#Microsoft Office#Microsoft Word#Office 365#Power BI#productivity#Task automation

Text

The Future of Data Analytics: Emerging Trends in Power BI

In the ever-evolving landscape of data analytics, Power BI continues to lead the charge with cutting-edge innovations that empower businesses to make data-driven decisions. As organizations increasingly rely on analytics to stay competitive, understanding the emerging trends in Power BI becomes essential for leveraging its full potential.

Here’s a look at what the future holds for Power BI and how these trends can revolutionize data analytics.

AI-Driven Insights

Artificial Intelligence (AI) is reshaping data analytics, and Power BI is at the forefront of this transformation. Features like AI-powered visualizations and natural language processing (NLP) allow users to interact with their data intuitively.

With tools like Quick Insights and AI Builder, businesses can uncover hidden patterns and predict trends, enabling smarter decision-making. The integration of AI makes it easier than ever for users to derive actionable insights without extensive technical expertise.
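The internals of Quick Insights aren't described here, so the following is only a generic stand-in: a Python sketch of z-score anomaly flagging, one of the simplest ways to spot a value that breaks from its pattern. The function name, the sample numbers, and the threshold of 2.0 are assumptions for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], threshold: float = 2.0) -> List[int]:
    """Return indexes of points more than `threshold` population std devs from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


daily_sales = [100, 102, 98, 101, 99, 100, 180, 97]
print(zscore_anomalies(daily_sales))  # -> [6]
```

With small samples a single outlier also inflates the standard deviation, so median-based variants are often preferred; treat the threshold as something to tune rather than a fixed rule.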

Real-Time Data Analytics

The demand for real-time insights is surging as businesses seek to make quicker decisions. Power BI’s real-time dashboards and streaming data capabilities provide up-to-the-second updates, enabling organizations to monitor key metrics and respond proactively.

This trend is especially crucial for industries like retail, finance, and healthcare, where timely decisions can have a significant impact.
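For the streaming side, one documented route is the Power BI REST API's push datasets operation that posts rows to a table (`POST .../datasets/{datasetId}/tables/{tableName}/rows` with a JSON body of the form `{"rows": [...]}`). The Python sketch below only builds that request with the standard library. The dataset ID, table name, sample row, and token are placeholders, obtaining an Azure AD token is not shown, and the endpoint, limits, and required permissions should be checked against Microsoft's current REST reference before relying on it.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_push_rows_request(
    dataset_id: str, table: str, rows: List[Dict[str, Any]], access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST that appends rows to a push dataset table."""
    url = (
        f"{API_ROOT}/datasets/{urllib.parse.quote(dataset_id, safe='')}"
        f"/tables/{urllib.parse.quote(table, safe='')}/rows"
    )
    body = json.dumps({"rows": rows}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholders: substitute a real dataset ID, table name, and access token.
req = build_push_rows_request(
    dataset_id="00000000-0000-0000-0000-000000000000",
    table="RealtimeSales",
    rows=[{"timestamp": "2024-01-01T12:00:00Z", "store": "West-01", "amount": 42.5}],
    access_token="<access-token>",
)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it; omitted because it needs real credentials.
```

Keeping request construction separate from sending makes the payload easy to test and lets a scheduler or event handler decide when to push each batch.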

Enhanced Integration with Third-Party Tools

Power BI’s seamless integration with tools like Microsoft Excel, Azure, and third-party applications is becoming even more robust. As businesses work with diverse data sources, enhanced integration ensures a unified view of their data ecosystem. This capability streamlines workflows, reduces silos, and fosters better collaboration across teams.

Custom Visualizations and Reports

Tailored insights are becoming a necessity for organizations aiming to address specific business needs. Power BI’s customization capabilities allow users to create bespoke dashboards and reports, ensuring that analytics align with unique goals. This trend reflects the growing importance of personalized solutions in today’s data-driven world.

Growing Adoption of Mobile Analytics

With remote work and mobile operations on the rise, Power BI’s mobile app is gaining traction. Users can access and interact with dashboards from anywhere, ensuring that decision-makers remain informed and agile, regardless of location.

Power BI continues to evolve, offering businesses strong capabilities in data visualization, real-time monitoring, and AI-driven analytics. By staying ahead of these emerging trends, organizations can harness the full potential of Power BI to drive growth and innovation. For more details, reach out to a Power BI solutions provider.

#power bi integration#power bi solutions#data visualization#data analysis#data science course#data science#machine learning#business intelligence


Text

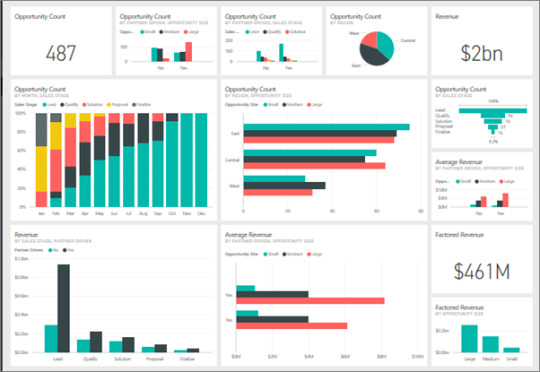

Create custom business intelligence dashboards in Power BI

#data visualization#data analytics#data analysis#power bi services#power bi reporting#power bi service#power bi consulting services


Text

My Journey in Learning Power BI: Crafting Visually Appealing Dashboards for My Services and Clients

Introduction

As an IT consultant at Valantic Hamburg, I focus primarily on developing chatbots using platforms like Cognigy, IBM Watson Assistant, and Discovery. Throughout my experience, I have recognized the importance of effectively communicating the usage data of my clients’ customers who interact with these chatbots. This realization led me to explore Microsoft Power BI, a tool that allows…

#Business Intelligence#Chatbots#Cognigy#Dashboard Design#Data Analysis#Data Analytics#Data Visualization#IBM Watson Assistant#IT Consulting#Microsoft Power BI#PL-300 Exam#Power BI


Text

Optimizing Power BI Dashboards for Real-Time Data Insights

Today, having access to real-time data is crucial for organizations to stay ahead of the competition. Power BI, a powerful business intelligence tool, allows businesses to create dynamic, real-time dashboards that provide immediate insights for informed decision-making. But how can you ensure your Power BI dashboards are optimized for real-time data insights? Let’s explore some key strategies.

1. Enable Real-Time Data Feeds

To get started with real-time data in Power BI, you'll need to connect live data sources. Power BI supports streaming datasets, which let data be pushed into a dashboard as it arrives. Make sure sources such as APIs, IoT devices, or real-time databases are properly configured to stream data. Power BI provides three types of real-time datasets:

Push datasets

Streaming datasets

PubNub datasets

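Each serves a different use case. Push datasets store the data in Power BI, so you can also build reports on it. Streaming datasets keep data only in a short-lived cache that feeds dashboard tiles. PubNub datasets read from an existing PubNub stream without storing the data in Power BI. Selecting the appropriate type is key to a smooth real-time experience.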
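For a push dataset, rows are typically sent through the Power BI REST API's "Push Datasets - PostRows" operation (a POST to `/datasets/{datasetId}/tables/{tableName}/rows` with a JSON body of the form `{"rows": [...]}`). The minimal sketch below uses only the Python standard library and builds that request without sending it. It assumes you already have a dataset ID, a table whose columns match the row keys, and a Microsoft Entra ID access token; acquiring the token (for example with MSAL) is out of scope. The dataset ID, table name, column names, and token are hypothetical placeholders.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timezone

# Base URL of the Power BI REST API; "myorg" targets the caller's organization.
API_BASE = "https://api.powerbi.com/v1.0/myorg"


def build_post_rows_request(dataset_id, table_name, rows, access_token):
    """Build, but do not send, a 'Push Datasets - PostRows' request."""
    url = (
        f"{API_BASE}/datasets/{urllib.parse.quote(dataset_id)}"
        f"/tables/{urllib.parse.quote(table_name)}/rows"
    )
    body = json.dumps({"rows": rows}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Assumed: a valid Entra ID bearer token obtained elsewhere.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def push_rows(request, timeout=10):
    """Send the request; urlopen raises urllib.error.HTTPError on a 4xx/5xx reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical row; keys must match the columns defined on the pushed table.
    reading = {
        "Timestamp": datetime.now(timezone.utc).isoformat(),
        "Region": "North",
        "Amount": 125.0,
    }
    req = build_post_rows_request("your-dataset-id", "Sales", [reading], "your-access-token")
    print(req.get_method(), req.full_url)  # build only; call push_rows(req) to send
```

Keeping request construction separate from sending makes the payload easy to inspect or test before any data reaches a live dataset.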

2. Utilize DirectQuery for Up-to-Date Data

When real-time reporting is critical, DirectQuery mode can help. With DirectQuery, Power BI does not import the data; instead, it queries your database when visuals are rendered, so reports reflect the current state of the source. (Dashboard tiles built on DirectQuery are served from a cache that Power BI refreshes periodically.) However, make sure your data source can handle the extra load, because every report interaction sends queries to it, which matters most with large datasets.

3. Set Up Data Alerts for Timely Notifications

Data alerts in Power BI enable you to monitor important metrics automatically. When certain thresholds are met or exceeded, Power BI can trigger alerts, notifying stakeholders in real time. These alerts can be set up on tiles pinned from reports with gauge, KPI, and card visuals. By configuring alerts, you ensure immediate attention is given to critical changes in your data, enabling faster response times.
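In Power BI, data alerts are configured in the service UI and evaluated by Power BI, not written in code. To make the rule concrete, here is a conceptual Python sketch of a threshold alert: a limit, a direction, and a minimum gap between notifications so recipients are not flooded. It is not Power BI's implementation, and the one-hour default gap is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ThresholdAlert:
    """Conceptual alert: fires when a value meets or crosses a threshold,
    then stays quiet for `cooldown` before it can fire again."""
    threshold: float
    condition: str = "above"                 # "above" or "below"
    cooldown: timedelta = timedelta(hours=1)  # assumed notification gap
    last_fired: Optional[datetime] = field(default=None)

    def check(self, value: float, now: datetime) -> bool:
        if self.condition == "above":
            breached = value >= self.threshold  # "met or exceeded"
        elif self.condition == "below":
            breached = value <= self.threshold
        else:
            raise ValueError(f"unknown condition: {self.condition!r}")
        if not breached:
            return False
        if self.last_fired is not None and now - self.last_fired < self.cooldown:
            return False  # still breached, but inside the cooldown window
        self.last_fired = now
        return True
```

For example, with `ThresholdAlert(threshold=100.0)`, a reading of 120 fires, a reading of 130 ten minutes later is suppressed, and a reading of 150 two hours later fires again.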

4. Optimize Dashboard Performance

To make real-time insights more actionable, ensure your dashboards are optimized for performance. Consider the following best practices:

Limit visual complexity: Use fewer visuals to reduce rendering time.

Use aggregation: Rather than showing detailed data, use summarized views for quicker insights.

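Leverage custom visuals: If a native visual is slow, you can test optimized alternatives from AppSource, Power BI’s visuals marketplace. Measure with Performance Analyzer before switching, because custom visuals are not automatically faster.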
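When you control the feed yourself (for example, when pushing rows as in section 1), one way to apply the aggregation advice is to summarize raw events before they reach Power BI, so visuals render a handful of rows per minute instead of every event. The Python sketch below groups events into one row per minute and region with a count and a total. The field names `Timestamp`, `Region`, and `Amount` are hypothetical; inside Power BI itself you would typically achieve the same with summarized tables or measures instead.

```python
from collections import defaultdict
from datetime import datetime


def aggregate_per_minute(events):
    """Collapse raw events into one row per (minute, region) with count and total."""
    buckets = defaultdict(lambda: {"Count": 0, "Total": 0.0})
    for event in events:
        ts = datetime.fromisoformat(event["Timestamp"])
        minute = ts.replace(second=0, microsecond=0)  # truncate to the minute
        bucket = buckets[(minute, event["Region"])]
        bucket["Count"] += 1
        bucket["Total"] += event["Amount"]
    # Sort by (minute, region) so the output order is deterministic.
    return [
        {"Minute": minute.isoformat(), "Region": region, **totals}
        for (minute, region), totals in sorted(buckets.items())
    ]
```

The trade-off is granularity: individual events are no longer visible, so keep the detailed data somewhere queryable if users need to drill down.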

5. Utilize Power BI Dataflows for Data Preparation

Data preparation can sometimes be the bottleneck in providing real-time insights. Power BI Dataflows help streamline the ETL (Extract, Transform, Load) process by automating data transformations and ensuring that only clean, structured data is used in dashboards. This reduces the time it takes for data to be available in the dashboard, speeding up the entire pipeline.

6. Enable Row-Level Security (RLS) for Personalized Real-Time Insights

Real-time data can vary across different users in an organization. With Row-Level Security (RLS), you can control access to the data so that users only see information relevant to them. For example, sales teams can receive live updates on their specific region without seeing data from other territories, making real-time insights more personalized and relevant.
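In Power BI, RLS rules are DAX filter expressions attached to roles; a dynamic rule might compare a column with `USERPRINCIPALNAME()`. The Python sketch below only models the effect of such a rule so the behavior is easy to see: each user sees rows for their own region(s), and a user with no mapping sees nothing. The user-to-region table and the email addresses are hypothetical, and this is not how Power BI enforces RLS internally.

```python
# Hypothetical mapping from signed-in user (lowercased) to permitted regions.
USER_REGIONS = {
    "ana@contoso.com": {"North"},
    "ben@contoso.com": {"South", "West"},
}


def rows_visible_to(user, rows, user_regions=USER_REGIONS):
    """Return only rows whose Region is assigned to `user`.
    Users without a mapping get no rows (deny by default)."""
    allowed = user_regions.get(user.lower(), set())
    return [row for row in rows if row["Region"] in allowed]
```

Denying by default is the safer design choice here: a missing mapping shows an empty view instead of leaking other territories' data.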

7. Monitor Data Latency

While real-time dashboards sound ideal, you should also monitor data latency. Even with live datasets, delays can occur depending on your data source’s performance or on how data is processed along the way. Optimize your data pipeline to keep latency as low as possible: tune refresh rates to how fresh the data actually needs to be, and if you add caching to reduce query load, keep cache lifetimes short so the cache itself does not make insights stale.
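A simple way to monitor latency is to record, for each row, when the event occurred and when it became available downstream, then track percentiles rather than averages so occasional slow batches stay visible. The Python sketch below assumes your pipeline records both timestamps as ISO 8601 strings; where those timestamps come from, and the 5-second budget in the example, are assumptions. It uses the nearest-rank percentile for simplicity.

```python
import math
from datetime import datetime


def latency_seconds(event_time, arrival_time):
    """Seconds between when an event occurred and when it arrived downstream."""
    delta = datetime.fromisoformat(arrival_time) - datetime.fromisoformat(event_time)
    return delta.total_seconds()


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0..100) of a non-empty sequence."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(pairs, budget_seconds):
    """Summarize (event_time, arrival_time) pairs and flag a p95 over budget."""
    lags = [latency_seconds(event, arrival) for event, arrival in pairs]
    p95 = percentile(lags, 95)
    return {"p50": percentile(lags, 50), "p95": p95, "over_budget": p95 > budget_seconds}
```

For instance, lags of 1, 2, 3, and 12 seconds give a p50 of 2 seconds and a p95 of 12 seconds, which exceeds a 5-second budget even though most rows arrive quickly.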

8. Embed Real-Time Dashboards into Apps

Embedding Power BI dashboards into your business applications can significantly enhance how your teams use real-time data. Whether it’s a customer support tool, sales management app, or project management system, embedding Power BI visuals ensures that decision-makers have the most recent data at their fingertips without leaving their workflow.
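In the "app owns data" embedding scenario, your backend usually requests a short-lived embed token from the Power BI REST API and hands it to the front end, where the Power BI JavaScript client renders the dashboard. The sketch below builds, without sending, a request to the "Dashboards - Generate Token In Group" endpoint with `View` access. The workspace ID, dashboard ID, and Entra ID access token are hypothetical placeholders, and Microsoft's current embedding guidance may favor other token endpoints, so check the REST API reference before relying on this one.

```python
import json
import urllib.parse
import urllib.request

# Base URL of the Power BI REST API.
API_BASE = "https://api.powerbi.com/v1.0/myorg"


def build_dashboard_embed_token_request(group_id, dashboard_id, access_token):
    """Build, but do not send, a request for a view-only dashboard embed token.
    The JSON response is expected to carry the token for the client-side SDK."""
    url = (
        f"{API_BASE}/groups/{urllib.parse.quote(group_id)}"
        f"/dashboards/{urllib.parse.quote(dashboard_id)}/GenerateToken"
    )
    body = json.dumps({"accessLevel": "View"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Assumed: a service principal or master-user token obtained elsewhere.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
```

Generating the token on the server keeps the Entra ID credentials out of the browser; only the scoped, expiring embed token reaches the client application.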

9. Regularly Update Data Sources and Models

Data sources evolve over time. Regularly update your data models, refresh connections, and ensure that the data you’re pulling in is still relevant. As your business needs change, it’s essential to refine and adjust your real-time data streams and visuals to reflect these shifts.

Conclusion

Optimizing Power BI dashboards for real-time data insights requires a combination of leveraging the right data connections, optimizing performance, and ensuring the dashboards are designed for quick interpretation. With the ability to access real-time data, businesses can improve their agility, make faster decisions, and stay ahead in an increasingly data-driven world.

By following these strategies, you can ensure that your Power BI dashboards are fully optimized to deliver the timely insights your organization needs to succeed.


Text

#business analytics#business analysis training#business analytics institute in india#business analyst certification#businessanalysis#business analyst#business analysis course#business analyst careers#business analyst course#business analyst skills#power bi#sql#tableau#excel#data analytics#data analysis#courses
