#Data Backup

Explore tagged Tumblr posts


I know we all already know this, but this is a good point: tis the season to buy electronics cheap.


Secure Configurations on your Technology

Ensuring secure configurations on your technology is crucial to protect against cyber threats and vulnerabilities. Here are some tips to make sure your configurations are secure:

- Change Default Settings: Always change default usernames and passwords on your devices.

- Enable Firewalls: Use firewalls to block unauthorized access to your network.

- Regular Updates: Keep your software and firmware up to date to patch any security holes.

- Strong Passwords: Use complex and unique passwords for all your accounts and devices.

- Disable Unnecessary Services: Turn off services and features you don't use to reduce potential entry points for attackers.

- Monitor and Audit: Regularly monitor and audit your configurations to ensure they remain secure.
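As a rough illustration of the "Monitor and Audit" tip, here is a minimal Python sketch that checks one device's settings against the tips above. The setting names (`firewall_enabled`, `auto_update`, `enabled_services`, `required_services`) and the list of default credentials are assumptions made up for this example, not the format of any real device or tool.

```python
# Hypothetical default credentials; a real audit would use a much longer list.
DEFAULT_CREDENTIALS = {("admin", "admin"), ("admin", "password"), ("root", "root")}


def audit_config(config):
    """Return a list of findings for one device's (hypothetical) configuration."""
    findings = []
    if (config.get("username"), config.get("password")) in DEFAULT_CREDENTIALS:
        findings.append("default credentials in use")
    if not config.get("firewall_enabled", False):
        findings.append("firewall disabled")
    if not config.get("auto_update", False):
        findings.append("automatic updates disabled")
    # Any enabled service that is not explicitly required counts as unnecessary.
    unused = set(config.get("enabled_services", [])) - set(config.get("required_services", []))
    for service in sorted(unused):
        findings.append(f"unnecessary service enabled: {service}")
    return findings


# Example device, invented for illustration.
router = {
    "username": "admin",
    "password": "admin",
    "firewall_enabled": True,
    "auto_update": False,
    "enabled_services": ["ssh", "telnet", "upnp"],
    "required_services": ["ssh"],
}

for finding in audit_config(router):
    print(finding)
```

Running a check like this on a schedule is one simple way to notice when a setting drifts back to an insecure value.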

Stay vigilant and keep your technology secure! #CyberSecurity #SecureConfigurations #StaySafe – www.centurygroup.net

#Cybersecurity#managed it services#data backup#Secured Configuration#cloud technology services#phishing


Myth Busted: Data Backup ≠ Data Security

Think your data's safe just because it's backed up? Think again. Backups help you recover—but they won’t stop a breach, ransomware attack, or insider threat.

What you really need: a multi-layered defense with encryption, access controls, and real-time monitoring.

#CyberSecurity #SmallBusinessTips #FileAgo #DataSecurity #TechMyths #RansomwareProtection #BackupIsNotEnough


I present, for your consideration:

My dog, Tank, on a couch sitting on a blanket;

20 LTO Ultrium 5 magnetic tapes with 1500 GB uncompressed capacity each;

An Appa plushie peeking his forehead out from behind the couch; and

KN95 masks by the door.


Preventing Ransomware Attacks: Proactive Measures to Shield Your Business

New Post has been published on https://thedigitalinsider.com/preventing-ransomware-attacks-proactive-measures-to-shield-your-business/

All forms of cyber attacks are dangerous to organizations in one way or another. Even small data breaches can lead to time-consuming and expensive disruptions to day-to-day operations.

One of the most destructive forms of cybercrime businesses face is ransomware. These types of attacks are highly sophisticated both in their design and in the way they’re delivered. Even just visiting a website or downloading a compromised file can bring an entire organization to a complete standstill.

Mounting a strong defense against ransomware attacks requires careful planning and a disciplined approach to cyber readiness.

Strong Endpoint Security

Any device that’s used to access your business network or adjacent systems is known as an “endpoint.” While all businesses have multiple endpoints they need to be mindful of, organizations with decentralized teams tend to have significantly more they need to track and protect. This is typically due to remote working employees accessing company assets from personal laptops and mobile devices.

The more endpoints a business needs to manage, the higher the chances that attackers can find hackable points of entry. To mitigate these risks effectively, it’s essential to first identify all the potential access points a business has. Businesses can then use a combination of EDR (Endpoint Detection and Response) solutions and access controls to help reduce the risk of unauthorized individuals posing as legitimate employees.

Having an updated BYOD (Bring Your Own Device) policy in place is also important when improving cybersecurity. These policies outline specific best practices for employees when using their own devices for business-related purposes – whether they’re in the office or working remotely. This can include avoiding the use of public Wi-Fi networks, keeping devices locked when not in use, and keeping security software up-to-date.

Better Password Policies and Multi-Factor Authentication

Whether they know it or not, your employees are the first line of defense when it comes to avoiding ransomware attacks. Poorly configured user credentials and bad password management habits can easily contribute to an employee inadvertently putting an organization at more risk of a security breach than necessary.

While most people like having a fair amount of flexibility when creating a password they can easily remember, it’s important as a business to establish certain best practices that need to be followed. This includes ensuring employees are creating longer and more unique passwords, leveraging MFA (multi-factor authentication) security features, and refreshing their credentials at regular intervals throughout the year.

Data Backup and Recovery

Having regular backups of your databases and systems is one way to increase your operational resilience in the wake of a major cyberattack. In the event your organization is hit with ransomware and your critical data becomes inaccessible, you’ll be able to rely on your backups to help recover your systems. While this process can take some time, it’s a much more reliable alternative to paying a ransom amount.

When planning your backups, follow the 3-2-1 rule. It stipulates that you should:

Have three up-to-date copies of your data (the original plus two backups)

Use two different types of storage media (internal drive, external drive, etc.)

Keep at least one copy stored off premises

Following this best practice lowers the likelihood that “all” your backups become compromised and gives you the best chance for recovering your systems successfully.
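The 3-2-1 rule is simple enough to check mechanically. The sketch below is only an illustration: the `BackupCopy` class and the sample inventory are hypothetical, and a real check would read this information from your backup tooling.

```python
from dataclasses import dataclass


@dataclass
class BackupCopy:
    name: str
    media: str     # e.g. "internal disk", "external disk", "tape", "cloud"
    offsite: bool  # True if the copy is stored off premises


def satisfies_3_2_1(copies):
    """Check an inventory of copies against the 3-2-1 rule."""
    enough_copies = len(copies) >= 3                      # three copies
    enough_media = len({c.media for c in copies}) >= 2    # two storage types
    has_offsite = any(c.offsite for c in copies)          # one copy off site
    return enough_copies and enough_media and has_offsite


# Hypothetical inventory: the live data plus two backups.
copies = [
    BackupCopy("production database", "internal disk", offsite=False),
    BackupCopy("nightly backup", "external disk", offsite=False),
    BackupCopy("weekly archive", "cloud", offsite=True),
]

print(satisfies_3_2_1(copies))      # True
print(satisfies_3_2_1(copies[:2]))  # False: only two copies, none off site
```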

Network Segmentation and Access Control

One of the most challenging things about ransomware is its ability to spread rapidly to other connected systems. A viable strategy for limiting this ability is to segment your networks, breaking them up into smaller, isolated segments of a wider network.

Network segmentation makes it so that if one system becomes compromised, attackers still won’t have open access to the rest of the network. This makes it much harder for malware to spread.

Maintaining strict access control policies is another way you can reduce your attack surface. Access control systems limit the amount of free access that users have in a system at any given time. In these types of systems, the best practice is to ensure that regardless of who someone is, they should still only ever have just enough permissions in place to access the information they need to accomplish their tasks – nothing more, nothing less.
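The "just enough permissions" idea is usually called least privilege. A minimal deny-by-default sketch, with made-up role and permission names, might look like this:

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "support": {"tickets:read", "tickets:write"},
    "finance": {"invoices:read", "invoices:write"},
    "auditor": {"invoices:read", "tickets:read"},
}


def is_allowed(role, permission):
    """Deny by default: allow only permissions explicitly granted to the role."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("auditor", "invoices:read"))   # True: explicitly granted
print(is_allowed("auditor", "invoices:write"))  # False: auditors are read-only
print(is_allowed("intern", "tickets:read"))     # False: unknown roles get nothing
```

The key design choice is that anything not listed is refused, so a forgotten entry fails safe rather than open.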

Vulnerability Management and Penetration Testing

To create a safer digital environment for your business, it’s important to regularly scan systems for new vulnerabilities that may have surfaced. While businesses may spend a lot of time putting various security initiatives into place, as the organization grows, these initiatives may not be as effective as they used to be.

However, identifying security gaps across business infrastructures can be incredibly time-consuming for many organizations. Working with penetration testing partners is a great way to fill this gap.

Pentesting services can be invaluable when helping businesses pinpoint precisely where their security systems are failing. By using simulated real-world attacks, penetration testers can help businesses see where their most significant security weaknesses are and prioritize the adjustments that will bring the most value when protecting against ransomware attacks.

Data Security Compliance and Ethical AI Practices

There are various considerations you want to make when implementing new security protocols for your business. Ransomware attacks can do much more than disrupt day-to-day operations. They can also lead to data security compliance issues that can lead to a long list of legal headaches and do irreparable damage to your reputation.

Because of this, it’s important to ensure all critical business data is encrypted. Encryption makes data unreadable to anyone not authorized to view it. While this in itself won’t necessarily stop cybercriminals from stealing data, it can help keep the stolen information from being read or sold to other parties. Data encryption may also already be a requirement for your business, depending on the regulatory bodies that govern your industry.

Another thing to consider is that while AI-enabled security solutions are becoming more widely used, there are certain compliance standards that need to be followed when implementing them. Understanding any implications associated with leveraging data-driven technologies will help ensure you’re able to get maximum benefit out of using them without inadvertently breaching data privacy rights.

Keep Your Business Better Protected

Protecting your business from ransomware attacks requires a proactive approach to risk management and prevention. By following the strategies discussed, you’ll be able to lower your susceptibility to an attack while having the right protocols in place if and when you need them.

#access control#ai#approach#assets#Attack surface#attackers#authentication#backup#backups#breach#Business#BYOD#compliance#control systems#credentials#cyber#cyber attacks#cyber readiness#cyberattack#cybercrime#cybercriminals#cybersecurity#data#data backup#Data Breaches#data privacy#data security#data storage#data-driven#databases


Top External Hard Drives to Buy in 2025

In 2025, external hard drives continue to be essential for data storage, backup, and portability. Whether you’re looking for high-speed SSDs, large-capacity HDDs, or rugged drives for travel, there are plenty of great options. Below, we’ve compiled a list of the best external hard drives to buy in 2025, categorized based on performance, storage capacity, and use case. Best Overall External Hard…

#best hard drives 2025#data backup#External hard drives#gaming hard drives#high-capacity storage#portable SSD#professional storage solutions#SSD vs HDD#storage devices#tech accessories


Network Right

Network Right is the leading Managed IT Services and IT Support provider in the San Francisco Bay Area, serving startups and tech companies across San Francisco, San Jose, Oakland, Berkeley, San Mateo, Santa Clara, and Alameda. Offering comprehensive remote and onsite IT solutions, their services include IT infrastructure, IT security, data backup, network management support, VoIP, A/V solutions, and more. They also provide IT Helpdesk support and Fractional IT Manager roles, ensuring full-spectrum IT management. Trusted by fast-growing companies, Network Right combines expert solutions with unparalleled customer support to accelerate business growth.

Contact us:

333 Bryant St #250, San Francisco, CA 94107

(415) 209-5808

Opening Hours:

Monday to Friday: 9 AM–6 PM

Saturday to Sunday: Closed

Social Links:

https://www.linkedin.com/company/networkright/

#IT Support#Managed IT Services#IT Helpdesk#IT Infrastructure#IT Security#Data Backup#Network Management#VoIP Solutions#A/V Solutions#Cybersecurity#IT Consulting#Professional IT Services


impermanent/cloud storage fad rant coming along:

why is everything so hell bent on making me store my data in the cloud?

like the PS4 was designed to work beside an external HDD or SSD if you ran low on space, the PS5 on the other hand *really* wants you to use their cloud storage (the options to back up data are so limited when you try to back up PS4 data vs PS5)--for which you must be subscribed to PS+ also!

computers come integrated with OneDrive storage on them for easy backing up of files (sure, I've lost files to corruption or deletion before, this is nifty for things that *should* be backed up continuously like a thesis or a research paper--but then again, space is finite, so to get more space, that's more money!) but they also word the storage options so weirdly. what do you mean "free up space" frees up the physical space and not the cloud storage backup?

and now my antivirus has removed the interface for me to choose *where* to store my back ups, and has now renamed and re-shopped the "backup" panel to "cloud backup"--for which they have their own specialty server--which you have to subscribe to on top of your anti-virus.

I'm just so tired of the cloud. it's so impermanent. it's not practical in the long run. and so many devices are now hardwired to best back up on cloud software than hard-drive to hard-drive. maybe I'm just getting old but this doesn't seem sustainable or fiscally applicable.

#technology rant#cloud storage#data backup#subscription services#turning into the sims 3 trait technophobe rn


What is Zero Trust Architecture?

Zero Trust Architecture (ZTA) is a security model that operates on the principle "never trust, always verify." Unlike traditional security models that assume everything within a network is trustworthy, ZTA requires verification for every access request, regardless of whether it originates inside or outside the network.

Why is it Important?

In today's digital landscape, cyber threats are becoming increasingly sophisticated. Zero Trust Architecture helps mitigate risks by continuously verifying every user and device, ensuring that only authorized entities can access sensitive information.

How Does It Protect You?

1. Enhanced Security: By requiring strict verification, ZTA minimizes the risk of unauthorized access and data breaches.

2. Reduced Attack Surface: Limiting access to only what is necessary decreases potential entry points for attackers.

3. Real-time Monitoring: Continuous monitoring and verification help detect and respond to threats promptly.
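To make "never trust, always verify" concrete, here is a small Python sketch of a per-request check. Everything in it (the `AccessRequest` fields, the `GRANTS` table, the user names) is hypothetical. The point it illustrates is that the decision depends on identity, device state, and explicit grants, and never on whether the request comes from inside the network.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool
    resource: str
    from_internal_network: bool


# Hypothetical per-user grants.
GRANTS = {"alice": {"payroll-db"}, "bob": {"wiki"}}


def authorize(request):
    """Verify every request; network location is deliberately ignored."""
    if not request.mfa_verified:
        return False
    if not request.device_compliant:
        return False
    return request.resource in GRANTS.get(request.user, set())


# An internal request without MFA is still refused.
print(authorize(AccessRequest("alice", False, True, "payroll-db", True)))  # False
# A fully verified external request is allowed.
print(authorize(AccessRequest("alice", True, True, "payroll-db", False)))  # True
```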

Adopt Zero Trust Architecture with Century Solutions Group to fortify your cybersecurity defenses and protect your business from evolving cyber threats! #ZeroTrust #CyberSecurity #CenturySolutionsGroup

Learn More: https://centurygroup.net/cloud-computing/cyber-security/


Check out this insightful infographic highlighting 8 key points on why securing Microsoft 365 is critical for your business. From preventing cyber-attacks to ensuring compliance and safeguarding data, it's essential to stay proactive in securing your cloud environment. Protect your organization by understanding these crucial steps.

For more details, view the full blog: https://www.znetlive.com/blog/why-securing-microsoft-365-is-essential-8-key-points/

#office 365#microsoft 365#microsoft 365 backup#data backup#data security#data protection#Microsoft 365 security#acronis backup#cyber security#how to protect M365 data


Understanding Cloud Outages: Causes, Consequences, and Mitigation Strategies

Cloud computing has transformed business operations, providing unmatched scalability, flexibility, and cost-effectiveness. However, even leading cloud platforms are vulnerable to cloud outages.

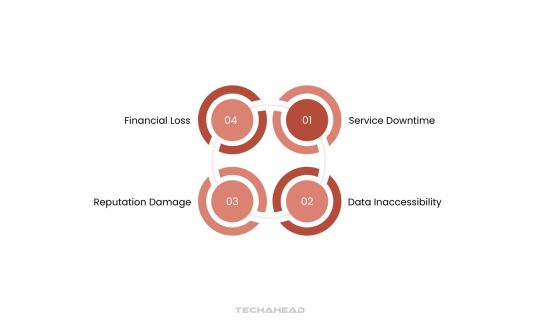

Cloud outages can severely disrupt service delivery, jeopardizing business continuity and causing substantial financial setbacks. When a vendor’s servers experience downtime or fail to meet SLA commitments, the consequences can be far-reaching.

During a cloud outage, organizations often lose access to critical applications and data, rendering essential operations inoperable. This unavailability halts productivity, delays decision-making, and undermines customer trust.

Although cloud technology promises high reliability, no system is entirely immune to disruptions. Even the most reputable cloud service providers occasionally face interruptions due to unforeseen issues. These outages highlight the inherent challenges of cloud computing and the necessity for businesses to prepare for such contingencies.

While cloud computing offers transformative benefits, the risks of cloud outages demand proactive strategies. Organizations must adopt robust mitigation plans to ensure resilience and sustain operations during these inevitable disruptions.

Key Takeaways:

Cloud outages occur when services become unavailable. These disruptions impact businesses by affecting operations, causing financial loss, and harming reputation.

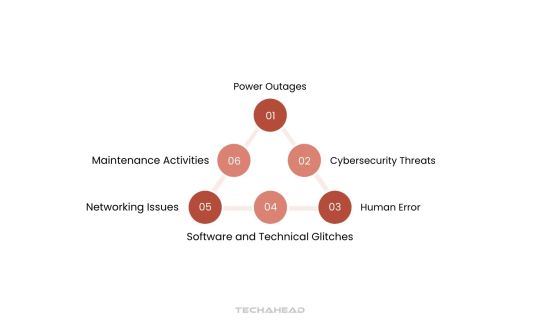

Power failures disrupt data centers, cybersecurity threats like DDoS attacks can compromise services, and human errors or technical failures can lead to downtime. Network problems and scheduled maintenance can also cause outages.

Outages have significant consequences; these include financial loss from service interruptions, reputational damage due to loss of customer trust, and legal implications from data breaches or non-compliance.

Distributing workloads across multiple regions, implementing strong security protocols, and continuously monitoring systems help prevent outages. Planning maintenance and having disaster recovery protocols ensure quick recovery from disruptions.

Businesses should focus on minimizing risks to ensure service availability and protect against potential disruptions.

What are Cloud Outages?

Cloud outages are periods when cloud-hosted applications and services become temporarily inaccessible. During these downtimes, users face slow response times, connectivity issues, or complete service disruptions. These interruptions can severely impact businesses across multiple dimensions.

The financial repercussions of cloud outages are immediate and far-reaching. When services go offline, organizations lose revenue as customers are unable to complete transactions. Additionally, businesses cannot track critical performance metrics, which can lead to operational inefficiencies and delayed decision-making.

Beyond monetary losses, cloud outages also cause reputational damage. Frustrated customers often perceive these disruptions as a sign of unreliability. A lack of transparent communication during downtime further exacerbates customer dissatisfaction. Over time, this can erode trust and push clients toward competitors offering more dependable solutions.

Another critical concern during cloud outages is the potential for legal consequences. If an outage leads to data loss, breaches, or compromised privacy, businesses may face litigation, regulatory penalties, and increased scrutiny. The fallout from such incidents can add both financial and reputational burdens.

Long-term consequences of cloud outages include reduced customer satisfaction, loss of client loyalty, and ongoing revenue declines. Organizations may also incur significant costs to restore affected systems and prevent future outages. Inadequate cloud infrastructure increases the risk of repeated disruptions, making businesses more vulnerable to prolonged downtimes.

To mitigate these risks, organizations must proactively invest in robust backup and recovery systems. Reliable disaster recovery plans and redundancies help minimize downtime, ensuring business continuity during unforeseen cloud outages. This strategic approach safeguards revenue streams, protects customer trust, and fortifies operational resilience.

Common Causes of Cloud Outages

Cloud outages can stem from various factors, both within and beyond the control of cloud vendors. These challenges must be addressed to ensure cloud services meet Service Level Agreements (SLAs) with optimal performance and reliability.

Power Outages

Power disruptions are one of the most prevalent causes of cloud outages. Data centers operate on an enormous scale, consuming anywhere from tens to hundreds of megawatts of electricity. These facilities often rely on national power grids or third-party-operated power plants.

Consistently maintaining sufficient electricity supply becomes increasingly difficult as demand surges alongside market growth. Limited power scalability can leave cloud infrastructure vulnerable to sudden disruptions, impacting the availability of hosted services. To address this, cloud vendors invest heavily in backup solutions like on-site generators and alternative energy sources.

Cybersecurity Threats

Cyber attacks, such as Distributed Denial of Service (DDoS) attacks, overwhelm data centers with malicious traffic, disrupting legitimate access to cloud services. Despite robust security measures, attackers continuously identify loopholes to exploit. These intrusions may trigger automated protective mechanisms that mistakenly block legitimate users, leading to unexpected downtime.

In severe cases, breaches result in data leaks, service shutdowns, or prolonged outages. Cloud vendors constantly refine their defense systems to combat these evolving threats and ensure service continuity despite rising cybersecurity challenges.

Human Error

Human errors, though rare, can have catastrophic effects on cloud infrastructure. A single misconfiguration or incorrect command may trigger a chain reaction, causing widespread outages. Even leading cloud providers have experienced significant disruptions due to human oversight.

For instance, in February 2017 a mistyped command during routine debugging of Amazon S3 in AWS's US-East-1 region took far more servers offline than intended, disrupting many websites and services. Although anomaly detection systems can identify such issues early, complete restoration often requires system-wide restarts, prolonging the recovery period. Cloud vendors mitigate this risk through rigorous protocols, automation tools, and comprehensive staff training.

Software and Technical Glitches

Cloud infrastructure relies on a complex interplay of hardware and software components. Even minor bugs or glitches within this ecosystem can trigger unexpected cloud outages. Technical faults may remain undetected during routine monitoring until they manifest as critical service disruptions. When these incidents occur, identifying and resolving the root cause can take time, leaving end-users unable to access essential services. Cloud vendors implement automated monitoring, rigorous testing, and proactive maintenance to identify vulnerabilities before they impact operations.

Networking Issues

Networking failures are another significant contributor to cloud outages. Cloud vendors often rely on telecommunications providers and government-operated networks for global connectivity. Issues in these external networks, such as damaged infrastructure or cross-border disruptions, are beyond the vendor’s direct control. To mitigate these risks, leading cloud providers operate data centers across geographically diverse regions. Dynamic workload balancing allows cloud vendors to shift operations to unaffected regions, ensuring uninterrupted service delivery even during network failures.

Maintenance Activities

Scheduled and unscheduled maintenance is essential for improving cloud infrastructure performance and cloud security. Cloud vendors routinely conduct upgrades, fixes, and system optimizations to enhance service delivery. However, these maintenance activities may require temporary service interruptions, workload transfers, or full system restarts.

During this period, end-users may experience service disruptions classified as cloud outages. Vendors strive to minimize downtime through well-planned maintenance windows, redundancy systems, and real-time communication with customers.

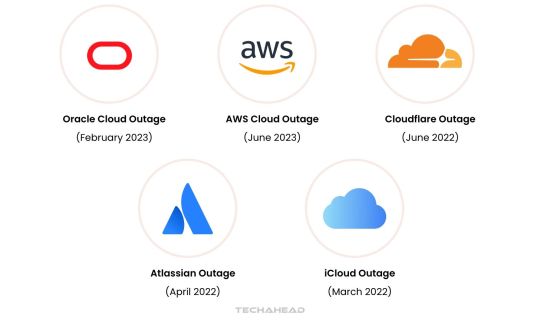

Global Cloud Outage Statistics and Notable Cases

Cloud outages remain a critical challenge for organizations worldwide, often disrupting essential operations. Below are significant real-world examples and insights drawn from these incidents to uncover key lessons.

Oracle Cloud Outage (February 2023)

In February 2023, Oracle Cloud Infrastructure encountered a severe outage triggered by an erroneous DNS configuration update. This impacted Oracle’s Ashburn data center, causing widespread service interruptions. The outage affected Oracle’s internal systems and global customers, highlighting the importance of robust change management protocols in cloud operations.

AWS Cloud Outage (June 2023)

AWS faced an extensive service disruption in June 2023, affecting prominent services, including the New York Metropolitan Transportation Authority and the Boston Globe. The root cause was a subsystem failure managing AWS Lambda’s capacity, revealing the need for stronger subsystem reliability in serverless environments.

Cloudflare Outage (June 2022)

A network configuration change caused an unplanned outage at Cloudflare in June 2022. The incident lasted 90 minutes and disrupted major platforms like Discord, Shopify, and Peloton. This outage underscores the necessity for rigorous testing of configuration updates, especially in global networks.

Atlassian Outage (April 2022)

Atlassian suffered one of its most prolonged outages in April 2022, lasting up to two weeks for some users. The disruption was caused by a maintenance script that mistakenly deleted customer sites, compounded by ineffective communication. This case emphasizes the importance of clear communication strategies during extended outages.

iCloud Outage (March 2022)

Slack’s AWS Outage (February 2022)

In February 2022, Slack users faced a five-hour disruption due to a configuration error in its AWS cloud infrastructure. Over 11,000 users experienced issues like message failures and file upload problems. The outage highlights the need for quick troubleshooting processes to minimize downtime.

IBM Outage (January 2022)

IBM encountered two significant outages in January 2022, the first lasting five hours in the Dallas region. A second, one-hour outage impacted virtual private cloud services globally due to a remediation misstep. These incidents highlight the importance of precision during issue resolution.

AWS Outage (December 2021)

AWS’s December 2021 outage disrupted key services, including API Gateway and EC2 instances, for nearly 11 hours. The issue stemmed from an automated error in the “us-east-1” region, causing network congestion akin to a DDoS attack. This underscores the necessity for robust automated system safeguards.

Google Cloud Outage (November 2021)

A two-hour outage impacted Google Cloud in November 2021, disrupting platforms like Spotify, Etsy, and Snapchat. The root cause was a load-balancing network configuration issue. This incident highlights the role of advanced network architecture in maintaining service availability.

Microsoft Azure Cloud Outage (October 2021)

Microsoft Azure experienced a six-hour service disruption in October 2021 due to a software issue during a VM architecture migration. Users faced difficulties deploying virtual machines and managing basic services. This case stresses the need for meticulous oversight during major architectural changes.

These examples serve as critical reminders of vulnerabilities in cloud systems. Businesses can minimize the impact of cloud outages through proactive measures like redundancy, real-time monitoring, and advanced disaster recovery planning.

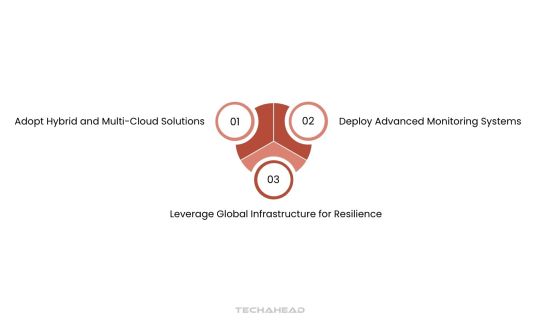

Ways to Manage Cloud Outages

While natural disasters are unavoidable, strategic measures can help you mitigate and overcome cloud outages effectively.

Adopt Hybrid and Multi-Cloud Solutions

Redundancy is key to minimizing cloud outages. Relying on a single provider introduces a single point of failure, which can disrupt your operations. Implementing failover mechanisms ensures continuous service delivery during an outage.

Hybrid cloud solutions combine private and public cloud infrastructure. Critical workloads remain operational on the private cloud even when the public cloud fails. This approach not only safeguards core business functions but also ensures compliance with data regulations.

According to Cisco’s 2022 survey of 2,577 IT decision-makers, 73% of respondents utilized hybrid cloud for backup and disaster recovery. This demonstrates its effectiveness in reducing downtime risks.

Multi-cloud solutions utilize multiple public cloud providers simultaneously. By distributing workloads across diverse cloud platforms, businesses eliminate single points of failure. If one service provider experiences downtime, another provider ensures service continuity.
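In code, the failover idea comes down to trying providers in order until one succeeds. The sketch below simulates it with two stand-in functions. `primary_cloud` and `secondary_cloud` are hypothetical placeholders, not real provider SDK calls, and production failover would normally also involve health checks, DNS, and data replication.

```python
def call_with_failover(providers, request):
    """Try each provider in order and return the first successful response."""
    errors = []
    for name, handler in providers:
        try:
            return name, handler(request)
        except ConnectionError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def primary_cloud(request):
    # Simulated outage at the first provider.
    raise ConnectionError("region unavailable")


def secondary_cloud(request):
    return f"handled {request}"


providers = [("primary", primary_cloud), ("secondary", secondary_cloud)]
print(call_with_failover(providers, "GET /orders"))
# ('secondary', 'handled GET /orders')
```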

Deploy Advanced Monitoring Systems

Cloud outages do not always cause full system failures. They can manifest as delayed responses, missed queries, or slower performance. Such anomalies, if ignored, can impact user experience before they escalate into major outages.

Implementing cloud monitoring systems helps you proactively detect irregularities in performance. These tools identify early warning signs, allowing you to resolve potential disruptions before they affect end users. Real-time monitoring ensures seamless operations and reduces the risk of unplanned outages.
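As a rough sketch of what detecting early warning signs can mean in practice, the example below raises an alert when the rolling average of recent response times crosses a threshold. The window size, threshold, and sample latencies are arbitrary values chosen for illustration. Real monitoring tools offer far richer detection.

```python
from collections import deque
from statistics import mean


class LatencyMonitor:
    """Flag sustained slowdowns using a rolling average of response times."""

    def __init__(self, window=5, threshold_ms=500.0):
        self.samples = deque(maxlen=window)  # keeps only the newest samples
        self.threshold_ms = threshold_ms

    def record(self, latency_ms):
        """Add a sample; return True if the rolling average breaches the threshold."""
        self.samples.append(latency_ms)
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and mean(self.samples) > self.threshold_ms


monitor = LatencyMonitor(window=3, threshold_ms=500.0)
alerts = [monitor.record(ms) for ms in [120, 150, 900, 950, 1000]]
print(alerts)  # [False, False, False, True, True]
```

Averaging over a window means a single slow request does not trigger an alert, while a sustained slowdown does.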

Leverage Global Infrastructure for Resilience

Natural disasters and regional disruptions are inevitable, but you can minimize their impact. Distributing IT infrastructure across multiple geographical locations provides a robust solution against localized cloud outages.

Instead of relying on a single data center, consider global redundancy strategies. Deploy backup systems in geographically diverse regions, such as U.S. Central, U.S. West, or European data centers. This ensures uninterrupted service delivery, even if one location goes offline.

For businesses operating in Europe, adopting multi-region solutions also supports GDPR compliance. This way, customer data remains protected, and operations continue seamlessly, regardless of cloud disruptions.

By leveraging global infrastructure, businesses can enhance reliability, improve redundancy, and build resilience against unforeseen cloud outages.

Additional Preventive Measures for Businesses

To effectively mitigate the risk of cloud outages, CIOs can adopt a multi-faceted approach that enhances resilience and ensures business continuity:

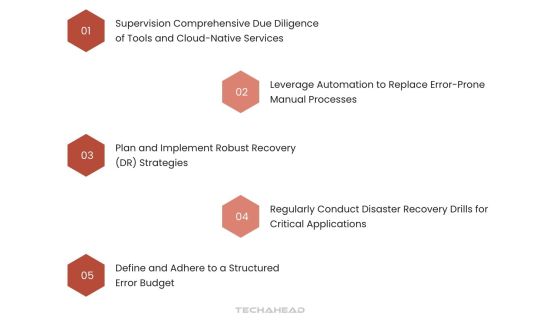

Conduct Comprehensive Due Diligence of Tools and Cloud-Native Services

Conduct a thorough evaluation of cloud-native services, ensuring they meet organizational requirements for scalability, security, and performance. This involves reviewing vendor capabilities, compatibility with existing infrastructure, and potential vulnerabilities that could lead to cloud outages. Regular audits help identify gaps early, preventing disruptions.

Leverage Automation to Replace Error-Prone Manual Processes

Automating operational tasks, such as provisioning, monitoring, and patch management, minimizes the human errors often linked to cloud outages. Automation tools also enhance efficiency by streamlining workflows, allowing IT teams to focus on proactive system improvements rather than reactive troubleshooting.

Plan and Implement Robust Disaster Recovery (DR) Strategies

A well-structured DR strategy is critical to quickly recover from cloud outages. This involves identifying mission-critical applications, determining acceptable recovery time objectives (RTOs), and creating recovery workflows. Comprehensive planning ensures minimal data loss and rapid resumption of services, even during large-scale disruptions.

Regularly Conduct Disaster Recovery Drills for Critical Applications

Testing DR plans through realistic drills allows organizations to simulate cloud outages and measure the effectiveness of their recovery protocols. These exercises reveal weaknesses in existing plans, providing actionable insights for improvement. Frequent testing also builds confidence in the system’s ability to handle unexpected disruptions.
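One simple way to turn drill results into actionable insights is to compare the measured recovery time for each application against its RTO. The application names, targets, and drill timings below are invented for illustration.

```python
from datetime import timedelta

# Hypothetical recovery time objectives per application.
RTO_TARGETS = {
    "checkout": timedelta(minutes=30),
    "reporting": timedelta(hours=8),
}


def rto_breaches(measured):
    """Return the applications whose measured recovery time exceeded the RTO."""
    return sorted(app for app, took in measured.items() if took > RTO_TARGETS[app])


# Hypothetical results from one disaster recovery drill.
drill = {
    "checkout": timedelta(minutes=45),
    "reporting": timedelta(hours=2),
}

print(rto_breaches(drill))  # ['checkout']
```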

Define and Adhere to a Structured Error Budget

An error budget establishes a clear threshold for acceptable service disruptions, balancing innovation and stability. It quantifies the permissible level of failure, enabling organizations to implement risk management frameworks effectively. This approach ensures proactive maintenance, minimizing the chances of severe cloud outages while allowing room for improvement.
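An error budget is usually derived from an availability target (SLO): the budget is the fraction of time the service is allowed to be unavailable, multiplied by the length of the period. The sketch below works through that arithmetic. The 99.9% target and the 30-day period are example values, not recommendations.

```python
def error_budget_minutes(slo, period_days=30):
    """Allowed downtime, in minutes, for an availability SLO over a period."""
    total_minutes = period_days * 24 * 60
    return (1.0 - slo) * total_minutes


def budget_remaining(slo, downtime_minutes, period_days=30):
    """Minutes of error budget left after the downtime already incurred."""
    return error_budget_minutes(slo, period_days) - downtime_minutes


# A 99.9% SLO over 30 days allows about 43.2 minutes of downtime.
print(round(error_budget_minutes(0.999), 1))                    # 43.2
# After a 30-minute incident, about 13.2 minutes remain.
print(round(budget_remaining(0.999, downtime_minutes=30), 1))   # 13.2
```

When the remaining budget approaches zero, teams typically slow down risky changes and prioritize stability work until the budget recovers.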

By combining these preventive measures with ongoing monitoring and optimization, CIOs can significantly reduce the likelihood and impact of cloud outages, safeguarding critical operations and maintaining customer trust.

Conclusion

Although cloud outages are unavoidable when depending on cloud services, understanding their causes and consequences is crucial. Organizations can mitigate the risks of cloud outages by proactively adopting best practices that ensure operational resilience.

Key strategies include implementing redundancy to eliminate single points of failure, enabling continuous monitoring to detect issues early, and scheduling regular backups to safeguard critical data. Robust security measures are also essential to protect against vulnerabilities that could exacerbate outages.

In today’s cloud-reliant environment, being proactive is vital. Businesses that anticipate potential disruptions are better positioned to maintain seamless operations and customer trust. Proactive planning not only minimizes the operational impact of cloud outages but also reinforces long-term business continuity.

For seamless cloud computing, consider a partner like TechAhead. We can help with migration and consulting for your cloud environment.

Source URL: https://www.techaheadcorp.com/blog/understanding-cloud-outages-causes-consequences-and-mitigation-strategies/

0 notes

Text

#Oracle EBS Cloning#oracle database cloning#database cloning#erp cloning#data protection#data backup#Oracle EBS snapshot

0 notes

Text

The Most Dangerous Data Blind Spots in Healthcare and How to Successfully Fix Them

New Post has been published on https://thedigitalinsider.com/the-most-dangerous-data-blind-spots-in-healthcare-and-how-to-successfully-fix-them/

Data continues to be a significant sore spot for the healthcare industry, with increasing security breaches, cumbersome systems, and data redundancies undermining the quality of care delivered.

Adding to the pressure, the US Department of Health and Human Services (HHS) is set to introduce more stringent regulations around interoperability and handling of electronic health records (EHRs), with transparency a top priority.

However, it’s clear that technology has played a crucial role in streamlining and organizing information-sharing in the industry, which is a significant advantage when outstanding services heavily rely on speed and accuracy.

Healthcare organizations have been turning to emerging technologies to alleviate growing pressures, which could possibly save them $360 billion annually. In fact, 85% of companies are investing or planning to invest in AI to streamline operations and reduce delays in patient care. Technology is cited as a top strategic priority in healthcare for 56% of companies versus 34% in 2022, according to insights from Bain & Company and KLAS Research.

Yet there are a number of factors healthcare providers should be mindful of when looking to deploy advanced technology, especially considering that AI solutions are only as good as the information used to train them.

Let’s take a look at the biggest data pain points in healthcare and technology’s role in alleviating them.

Enormous Amounts of Data

It’s no secret that healthcare organizations have to deal with a massive amount of data, and it’s only growing in size: By next year, healthcare data is expected to hit 10 trillion gigabytes.

The sheer volume of data that needs to be stored is a driving force behind cloud storage popularity, although this isn’t a problem-free answer, especially when it comes to security and interoperability. That’s why 69% of healthcare organizations prefer localized cloud storage (i.e., private clouds on-premises).

However, this can easily become challenging to manage for a number of reasons. In particular, this huge amount of data has to be stored for years in order to be HHS-compliant.

AI is helping providers tackle this challenge by automating processes that are otherwise resource-intensive in terms of manpower and time. There is a plethora of solutions on the market designed to ease data management, whether that’s in the form of tracking patient data via machine learning integrations with big data analytics or utilizing generative AI to speed up diagnostics.

For AI to do its job well, organizations must ensure they’re keeping their digital ecosystems as interoperable as possible to minimize disruptions in data exchanges that have devastating repercussions for their patients’ well-being.

Moreover, it’s crucial that these solutions are scalable according to an organization’s fluctuating needs in terms of performance and processing capabilities. Upgrading and replacing solutions because they fail to scale is a time-consuming and expensive process that few healthcare providers can afford. That’s because it means further training, realigning processes, and ensuring interoperability hasn’t been compromised with the introduction of a new technology.

Data Redundancies

With all that data to manage and track, it’s no surprise that things slip through the cracks, and in an industry where lives are on the line, data redundancies are a worst-case scenario that only serves to undermine the quality of patient care. Shockingly, 24% of patient records are duplicates, and this challenge is worsened when consolidating information across multiple electronic medical record (EMR) systems.

AI has a big role to play in handling data redundancies, helping companies streamline operations and minimize data errors. Automation solutions are especially useful in this context, speeding up data entry processes in Health Information Management Systems (HIMS), lowering the risk of human error in creating and maintaining more accurate EHRs, and slashing risks of duplicated or incorrect information.
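
To make the duplicate-record problem concrete, here is a minimal Python sketch that groups records sharing a normalized name and date of birth. The field names (`id`, `name`, `dob`) are hypothetical, and exact matching on two fields is a deliberate simplification; real patient matching typically uses more attributes and fuzzy comparison.

```python
from collections import defaultdict


def match_key(record: dict) -> tuple:
    """Build a normalized key from name and date of birth (simplified)."""
    name = " ".join(record["name"].lower().split())  # collapse case and spacing
    return (name, record["dob"])


def find_duplicates(records):
    """Return lists of record ids that share the same normalized key."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


if __name__ == "__main__":
    sample = [
        {"id": 1, "name": "Jane  Doe", "dob": "1980-04-02"},
        {"id": 2, "name": "jane doe", "dob": "1980-04-02"},
        {"id": 3, "name": "John Roe", "dob": "1975-11-30"},
    ]
    print(find_duplicates(sample))
```

Flagged groups would normally go to a human reviewer rather than being merged automatically, since two different patients can share a name and birth date.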

However, these solutions aren’t always flawless, and organizations need to prioritize fault tolerance when integrating them into their systems. It’s vital to have certain measures in place so that when a component fails, the software can continue functioning properly.

Key mechanisms of fault tolerance include guaranteed delivery of data and information in instances of system failure, data backup and recovery, load balancing across multiple workflows, and redundancy management.

This essentially ensures that the wheels keep turning until a system administrator is available to manually address the problem, preventing disruptions from bringing the entire system to a screeching halt. Fault tolerance is a valuable feature to look for when selecting a solution, and it can help healthcare organizations narrow down their product search.
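
One of the mechanisms above, guaranteed delivery, can be approximated with retries plus a holding area for messages that still fail. The Python sketch below is illustrative only: `send` stands in for whatever transport a system uses, and the `dead_letter` list is a hypothetical placeholder for a durable queue that an administrator could replay later.

```python
import time


def deliver_with_retry(send, message, attempts=3, base_delay=0.5, dead_letter=None):
    """Try send(message) up to `attempts` times; park the message if all fail."""
    for attempt in range(attempts):
        try:
            send(message)
            return True
        except ConnectionError:
            if attempt < attempts - 1:
                # Exponential backoff before the next attempt.
                time.sleep(base_delay * (2 ** attempt))
    if dead_letter is not None:
        dead_letter.append(message)  # keep it for manual or later replay
    return False
```

The rest of the system keeps running while failed messages wait in the holding area, which is the behaviour the paragraph above describes.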

Additionally, it’s crucial for organizations to make sure they’ve got the right framework in place for redundancy and error occurrences. That’s where data modeling comes in as it helps organizations map out requirements and data processes to maximize success.

A word of caution though: building the best data models entails analyzing all the optional information derived from pre-existing data. That’s because this enables the accurate identification of a patient and delivers timely and relevant information about them for swift, insight-driven intervention. An added bonus of data modeling is that it’s easier to pinpoint APIs and curate these for automatically filtering and addressing redundancies like data duplications.

Fragmented and Siloed Data

We know there are a lot of moving parts in data management, but compound this with the fast-paced nature of healthcare and it’s easily a recipe for disaster. Data silos are among the most dangerous blind spots in this industry, and in life-or-death situations where practitioners aren’t able to access a complete picture of a patient’s record, the consequences are beyond catastrophic.

While AI and technology are helping organizations manage and process data, integrating a bunch of APIs and new software isn’t always smooth sailing, particularly if it requires outsourcing help whenever a new change or update is made. Interoperability and usability are at the crux of maximizing technology’s role in healthcare data handling and should be prioritized by organizations.

Most platforms are developer-centric, involving high levels of coding with complex tools that are beyond most people’s skill sets. This limits the changes that can be made within a system and means that every time an organization wants to make an update, it has to outsource the work to a trained developer.

That’s a significant headache for people operating in an industry that really can’t sacrifice more time and energy to needlessly complicated processes. Technology should facilitate instant action, not hinder it, which is why healthcare providers and organizations need to opt for solutions that can be rapidly and seamlessly integrated into their existing digital ecosystem.

What to Look for in a Solution

Opt for platforms that can be templatized so they can be imported and implemented easily without having to build and write complex code from scratch, like Enterprise Integration Platform as a Service (EiPaaS) solutions. Specifically, these services use drag-and-drop features that are user-friendly so that changes can be made without the need to code.

Because they’re so easy to use, these platforms democratize access: team members from across departments can implement changes without fear of causing massive disruptions.

Another vital consideration is auditing, which helps providers ensure they’re maintaining accountability and consistently connecting the dots so data doesn’t go missing. Actions like tracking transactions, logging data transformations, documenting system interactions, monitoring security controls, measuring performance, and flagging failure points should be non-negotiable for tackling these data challenges.

In fact, audit trails serve to set organizations up for continuous success in data management. Not only do they strengthen the safety of a system to ensure better data handling, but they are also valuable for enhancing business logic so operations and process workflows are as airtight as possible.

Audit trails also empower teams to be as proactive and alert as possible and to keep abreast of data in terms of where it comes from, when it was logged, and where it is sent. This bolsters the bottom line of accountability in the entire processing stage to minimize the risk of errors in data handling as much as possible.
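
As a sketch of what such an audit record might capture, the Python below logs where data came from, when it was logged, and where it was sent. Chaining each entry to the previous one with a SHA-256 hash is an added assumption here, not something the article prescribes; it is one simple way to make later tampering detectable. All function and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_audit_entry(trail, source, destination, action, when=None):
    """Append an entry whose hash covers its content and the previous hash."""
    when = when or datetime.now(timezone.utc)
    entry = {
        "source": source,
        "destination": destination,
        "action": action,
        "logged_at": when.isoformat(),
        "prev_hash": trail[-1]["hash"] if trail else "",
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    trail.append(entry)
    return entry


def verify_trail(trail):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = ""
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

A production system would also need durable storage and access controls around the trail itself, which this sketch leaves out.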

The best healthcare solutions are designed to cover all bases in data management, so no stone is left unturned. AI isn’t perfect, but keeping these risks and opportunities in mind will help providers make the most of it in the healthcare landscape.

#2022#ai#amp#Analytics#APIs#as a service#audit#automation#backup#backup and recovery#bases#Big Data#big data analytics#billion#Building#Business#challenge#change#Cloud#cloud storage#clouds#code#coding#Companies#continuous#data#data analytics#data backup#Data Management#data modeling

0 notes

Text

https://www.bloglovin.com/@vastedge/hybrid-cloud-backup-strategy-specifics-benefits

Learn how to create a resilient hybrid cloud backup strategy that combines the best of both private and public clouds. Explore key considerations such as data security, cost management, and disaster recovery to ensure your data is protected and accessible at all times.

#hybrid cloud#cloud backup strategy#data backup#cloud security#disaster recovery#hybrid cloud benefits#cloud storage solutions.

0 notes

Text

Back. Up. Everything.

You cannot trust the internet, you cannot trust the cloud, you need to have physical backups of your data, because sometimes computer components fry and sometimes huge-arse corporations decide they can't be fucked storing people's data any more.

Get an external hard drive. Set up your own network-attached storage (NAS; like your own little cloud). Burning important files to DVDs is another option that came up when I had this rant on Twitter. The Wayback Machine is great, but if you're deadset on keeping videos, download them. I use the extension Video DownloadHelper; it's how I managed to archive a ton of stuff off a site that's since vanished off the internet over stupid copyright reasons.

Keep the 3-2-1 data backup strategy in mind:

Keep at least three copies of your data.

Store two backup copies of your data on different storage devices.

Store one backup copy off-site or on the cloud.

This is not always going to be feasible if you (like me) have found yourself babysitting terabytes of video that a company won't (not can't; won't) release on YouTube just because it was paywalled content in 2018, but I have it on an external drive and some of it in the cloud... but the cloud in question is my Google Drive, so if my account goes inactive, that's not safe either.
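
If you want to sanity-check a setup against the 3-2-1 rule above, here is a rough Python sketch. The `DataCopy` class and the device names are made up for illustration; the check simply encodes the three conditions as listed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    """One copy of the data (hypothetical structure)."""
    device: str            # e.g. "laptop-ssd", "external-hdd", "google-drive"
    offsite: bool          # True for cloud storage or a drive kept elsewhere
    primary: bool = False  # True for the working copy, False for backups


def satisfies_3_2_1(copies):
    """Check: 3+ copies, 2 backups on different devices, 1 backup off-site."""
    backups = [c for c in copies if not c.primary]
    backup_devices = {c.device for c in backups}
    return (
        len(copies) >= 3
        and len(backup_devices) >= 2
        and any(c.offsite for c in backups)
    )


if __name__ == "__main__":
    setup = [
        DataCopy("laptop-ssd", offsite=False, primary=True),
        DataCopy("external-hdd", offsite=False),
        DataCopy("google-drive", offsite=True),
    ]
    print(satisfies_3_2_1(setup))
```

Note that the check says nothing about whether the off-site copy is itself at risk, such as a cloud account that could go inactive.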

doing this with anything is an awful hit, but god, there are so many youtube accounts that serve as the last existing records of so many dead people's voices. feels especially perverse

35K notes
