#Deduplication Software


B2B Database Contacts: Achieving the Precise Harmony Between Quality and Quantity

In the ever-evolving landscape of B2B sales, the tapestry of effective B2B Lead Generation, targeted Sales Leads, and strategic Business Development is intricately woven with the threads of the B2B Contact Database. This comprehensive article embarks on an exploration to unravel the profound interplay between quality and quantity – the pulse that resonates within B2B Database Leads. Join us on this journey as we traverse the pathways, strategies, and insights that guide you towards mastering the equilibrium, steering your Sales Prospecting initiatives towards finesse and success.


The Essence of Quality

Quality emerges as the cornerstone in the realm of B2B Lead Generation, encapsulating the essence of depth, precision, and pertinence that envelops the contact data nestled within the B2B Contact Database. These quality leads, much like jewels in a treasure trove, possess the capacity to metamorphose into valuable clients, etching a definitive impact on your revenue stream. No contact entry is a mere data point; each is a capsule that encapsulates an individual's journey – their role, industry, buying tendencies, and distinctive preferences. Cultivating a repository of such high-caliber contacts is akin to nurturing a reservoir of prospects, where each interaction holds the promise of meaningful outcomes.

Deciphering the Role of Quantity

Yet, even in the pursuit of quality, quantity emerges as a steadfast ally. Quantity embodies the expanse of contacts that populate your B2B Database Leads. Imagine casting a net wide enough to enfold diverse prospects, broadening your scope of engagement. A higher count of contacts translates to an amplified potential for interaction, heightening the probability of uncovering those latent prospects whose untapped potential can blossom into prosperous business alliances. However, it's imperative to acknowledge that quantity, devoid of quality, risks transforming into an exercise in futility – a drain on resources without yielding substantial outcomes.

Quality vs. Quantity: The Artful Balancing Act

In the fervor of database compilation, the allure of sheer quantity can occasionally overshadow the crux of strategic B2B Sales and Sales Prospecting. An extensive, indiscriminate list of contacts can rapidly devolve into a resource drain, sapping efforts and diluting the efficacy of your marketing endeavors. Conversely, an overemphasis on quality might inadvertently curtail your outreach, constraining the potential for growth. The true artistry lies in achieving a symphony – a realization that true success unfolds from the harmonious interaction of quality and quantity.


Navigating the Equilibrium

This path towards equilibrium demands a continual commitment to vigilance and meticulous recalibration. Consistent audits of your B2B Contact Database serve as the bedrock for maintaining data that is not only up-to-date but also actionable. Removing outdated, duplicated, or erroneous entries becomes a proactive stride towards upholding quality. Simultaneously, infusing your database with fresh, relevant contacts injects vibrancy into your outreach endeavors, widening the avenues for engagement and exploration.
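As a rough illustration of such an audit, the sketch below drops erroneous, outdated, and duplicated entries from a small contact list. The field names (`email`, `last_verified`), the one-year freshness window, and the simple email pattern are assumptions made for this example, not features of any particular CRM.

```python
import re
from datetime import date

# Deliberately simple shape check; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def audit_contacts(contacts, today, max_age_days=365):
    """Return contacts that are valid, fresh, and unique by email."""
    newest = {}
    for contact in contacts:
        email = contact["email"].strip().lower()
        if not EMAIL_RE.match(email):
            continue  # erroneous entry
        if (today - contact["last_verified"]).days > max_age_days:
            continue  # outdated entry
        kept = newest.get(email)
        # For duplicates, keep the most recently verified record.
        if kept is None or contact["last_verified"] > kept["last_verified"]:
            newest[email] = {**contact, "email": email}
    return list(newest.values())

contacts = [
    {"name": "Ana Ruiz", "email": "Ana@Example.com ", "last_verified": date(2024, 5, 1)},
    {"name": "Ana Ruiz", "email": "ana@example.com", "last_verified": date(2024, 6, 1)},
    {"name": "Bo Chen", "email": "bo.chen(at)example", "last_verified": date(2024, 6, 1)},
    {"name": "Cy Park", "email": "cy@example.com", "last_verified": date(2021, 1, 1)},
]
clean = audit_contacts(contacts, today=date(2024, 7, 1))
```

Here only one record survives: the two Ana Ruiz entries collapse into the newer one, the malformed address is rejected, and the 2021 record is treated as stale.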

Harnessing Technology for Exemplary Data Management

In this era of technological prowess, an array of tools stands ready to facilitate the intricate choreography between quality and quantity. Step forward Customer Relationship Management (CRM) software – an invaluable ally empowered with features such as data validation, deduplication, and enrichment. Automation, the pinnacle of technological innovation, elevates database management to unparalleled heights of precision, scalability, and efficiency. Embracing these technological marvels forms the bedrock of your B2B Sales and Business Development strategies.

Collaborating with Esteemed B2B Data Providers

In your pursuit of B2B Database Leads, consider forging collaborations with esteemed B2B data providers. These seasoned professionals unlock a treasure trove of verified leads, tailor-made solutions for niche industries, and a portal to global business expansion. By tapping into their expertise, you merge the realms of quality and quantity, securing a comprehensive toolkit poised to reshape your sales landscape.

As we draw the curtains on this exploration, remember that the compass steering your B2B Sales, Sales Prospecting, and Business Development endeavors is calibrated by the delicate interplay of quality and quantity. A B2B Contact Database enriched with high-value leads, accompanied by a robust quantity, stands as the axis upon which your strategic maneuvers pivot. Equipped with insights, tools, and allies like AccountSend, your pursuit to strike this harmonious equilibrium transforms into an enlightening journey that propels your business towards enduring growth and undeniable success.

#AccountSend #B2BLeadGeneration #B2B #LeadGeneration #B2BSales #SalesLeads #B2BDatabases #BusinessDevelopment #SalesFunnel #SalesProspecting #BusinessOwner #Youtube


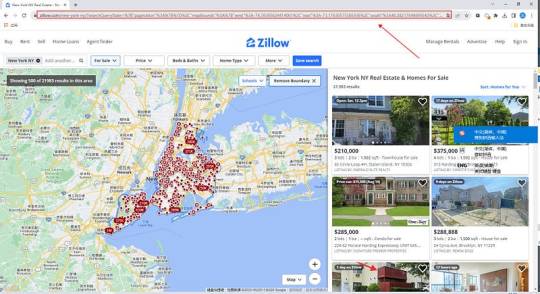

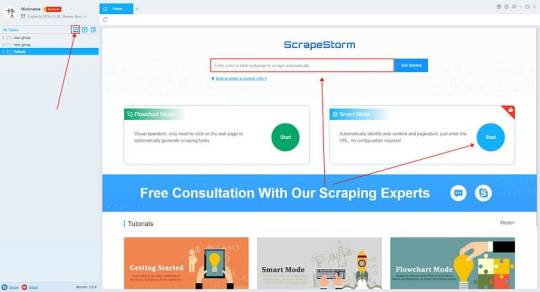

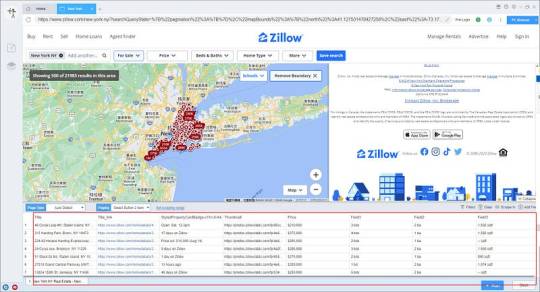

Easy way to get job data from Totaljobs

Totaljobs is one of the largest recruitment websites in the UK. Its mission is to provide job seekers and employers with efficient recruitment solutions and to promote the matching of talent and positions. It has an extensive market presence in the UK, providing a platform for professionals across a variety of industries and job types to find jobs and recruit staff.

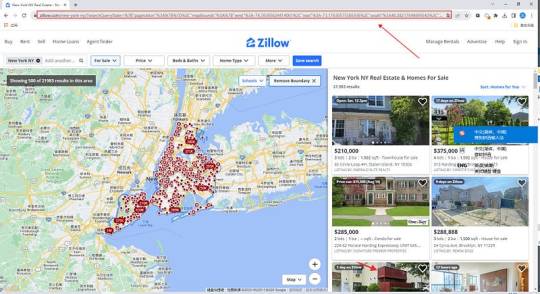

Introduction to the scraping tool

ScrapeStorm is a new-generation web scraping tool based on artificial intelligence technology. It is the first scraper to support the Windows, Mac and Linux operating systems.

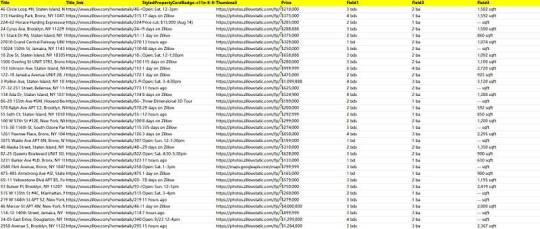

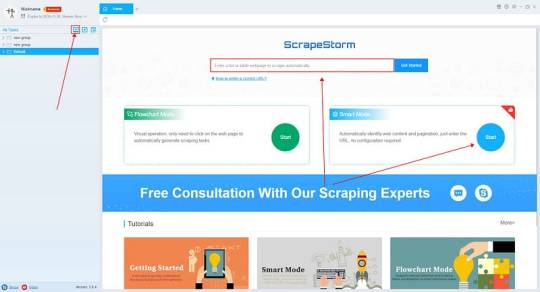

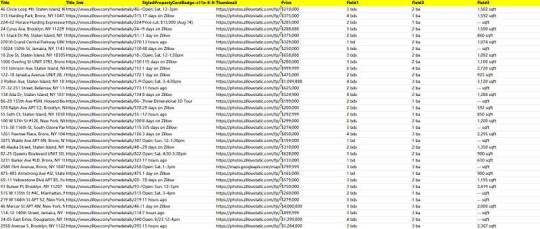

Preview of the scraped result

1. Create a task

(2) Create a new smart mode task

You can create a new scraping task directly on the software, or you can create a task by importing rules.

How to create a smart mode task

2. Configure the scraping rules

Smart mode automatically detects the fields on the page. You can right-click a field to rename it, add or delete fields, modify data, and so on.

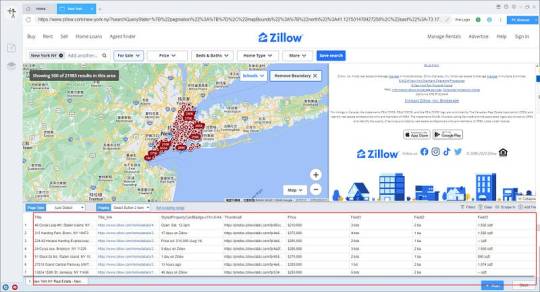

3. Set up and start the scraping task

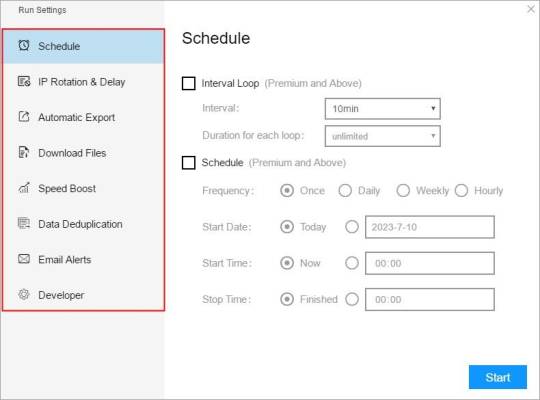

(1) Run settings

Depending on your needs, you can set Schedule, IP Rotation&Delay, Automatic Export, Download Images, Speed Boost, Data Deduplication and Developer.

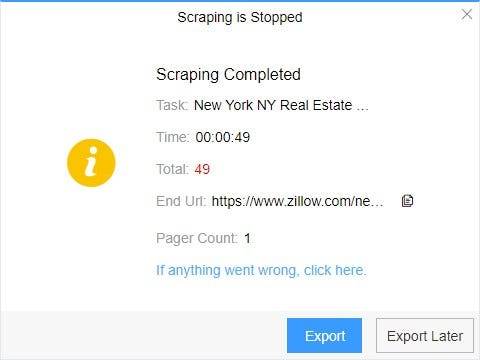

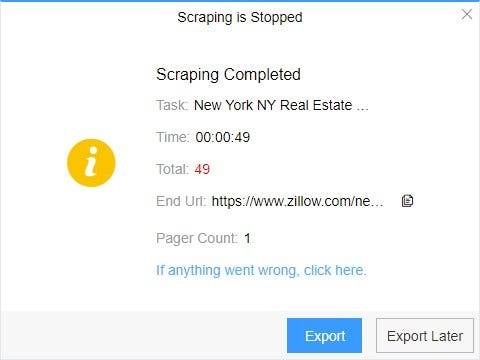

4. Export and view data

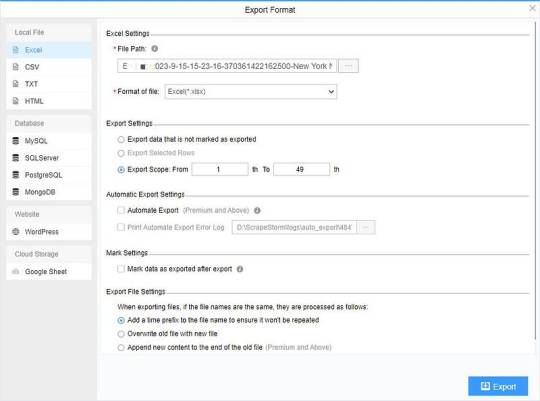

(2) Choose the format to export according to your needs.

ScrapeStorm provides a variety of ways to export data locally, such as Excel, CSV, HTML, TXT or a database. Users on the Professional Plan and above can also post directly to WordPress.

How to view data and clear data


Control Structured Data with Intelligent Archiving


You thought you had your data under control. Spreadsheets, databases, documents all neatly organized in folders and subfolders on the company server. Then the calls started coming in. Where are the 2015 sales figures for the Western region? Do we have the specs for the prototype from two years ago? What was the exact wording of that contract with the supplier who went out of business? Your neatly organized data has turned into a chaotic mess of fragmented information strewn across shared drives, email, file cabinets and the cloud. Before you drown in a sea of unstructured data, it’s time to consider an intelligent archiving solution. A system that can automatically organize, classify and retain your information so you can find what you need when you need it. Say goodbye to frantic searches and inefficiency and hello to the control and confidence of structured data.

The Need for Intelligent Archiving of Structured Data

You’ve got customer info, sales data, HR records – basically anything that can be neatly filed away into rows and columns. At first, it seemed so organized. Now, your databases are overloaded, queries are slow, and finding anything is like searching for a needle in a haystack.

An intelligent archiving system can help you regain control of your structured data sprawl. It works by automatically analyzing your data to determine what’s most important to keep active and what can be safely archived. Say goodbye to rigid retention policies and manual data management. This smart system learns your data access patterns and adapts archiving plans accordingly.

With less active data clogging up your production systems, queries will run faster, costs will decrease, and your data analysts can actually get work done without waiting hours for results. You’ll also reduce infrastructure demands and risks associated with oversized databases.

Compliance and governance are also made easier. An intelligent archiving solution tracks all data movement, providing a clear chain of custody for any information that needs to be retained or deleted to meet regulations.

Maybe it’s time to stop treading water and start sailing your data seas with an intelligent archiving solution. Your databases, data analysts and CFO will thank you. Smooth seas ahead, captain!

How Intelligent Archiving Improves Data Management

Intelligent archiving is like a meticulous assistant that helps tame your data chaos. How, you ask? Let’s explore:

Automated file organization

Intelligent archiving software automatically organizes your files into a logical folder structure so you don’t have to spend hours sorting through documents. It’s like having your own personal librarian categorize everything for easy retrieval later.

Efficient storage

This software compresses and deduplicates your data to free up storage space. Duplicate files hog valuable storage, so deduplication removes redundant copies and replaces them with pointers to a single master copy. Your storage costs decrease while data accessibility remains the same.
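To make the "pointers to a single master copy" idea concrete, here is a minimal sketch of file-level deduplication keyed on a content hash. The function name, the file paths, and the in-memory dictionaries are assumptions for illustration; real archiving products typically work on blocks or chunks and persist their index.

```python
import hashlib

def deduplicate(files):
    """files maps path -> bytes. Returns (store, pointers)."""
    store = {}     # content hash -> the single stored copy
    pointers = {}  # path -> content hash (the "pointer" to the master copy)
    for path, data in files.items():
        digest = hashlib.sha256(data).hexdigest()
        store.setdefault(digest, data)  # keep only the first copy of each content
        pointers[path] = digest
    return store, pointers

files = {
    "reports/q1.csv": b"region,sales\nwest,100\n",
    "backup/q1_copy.csv": b"region,sales\nwest,100\n",
    "reports/q2.csv": b"region,sales\nwest,120\n",
}
store, pointers = deduplicate(files)

def read(path):
    """Every path stays readable through its pointer."""
    return store[pointers[path]]
```

Three paths end up backed by two stored copies, and `read` still returns the original bytes for each path, which is the sense in which accessibility stays the same.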

Compliance made simple

For companies in regulated industries, intelligent archiving simplifies compliance by automatically applying retention policies as data is ingested. That removes the danger of mistakenly deleting information subject to a “legal hold”, which in turn helps you avoid potential fines or sanctions. Let the software handle the rules so you can avoid data jail.
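A retention rule with a legal-hold override can be sketched in a few lines. The categories, retention periods, and record fields below are hypothetical values chosen for the example; actual periods depend on the regulations that apply to you.

```python
from datetime import date

# Hypothetical retention periods in years, keyed by record category.
RETENTION_YEARS = {"invoice": 7, "hr_record": 5, "marketing": 2}

def retention_end(created, years):
    """Date on which the retention period for a record ends."""
    try:
        return created.replace(year=created.year + years)
    except ValueError:
        # created is Feb 29 and the target year is not a leap year
        return created.replace(year=created.year + years, day=28)

def can_delete(record, today):
    """A record may be deleted only if it is past retention and not on hold."""
    if record["legal_hold"]:
        return False  # legal hold always wins over the retention schedule
    end = retention_end(record["created"], RETENTION_YEARS[record["category"]])
    return today >= end

today = date(2024, 1, 1)
old_invoice = {"category": "invoice", "created": date(2015, 3, 1), "legal_hold": False}
held_invoice = {"category": "invoice", "created": date(2015, 3, 1), "legal_hold": True}
recent_campaign = {"category": "marketing", "created": date(2023, 6, 1), "legal_hold": False}
```

The hold check comes first on purpose: an expired record that is under legal hold is never reported as deletable.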

Searchability

With intelligent archiving, your data is indexed and searchable, even archived data. You can quickly find that invoice from five years ago or the contract you signed last month. No more digging through piles of folders and boxes. Search and find: it’s that easy.

In summary, intelligent archiving brings order to the chaos of your data through automated organization, optimization, compliance enforcement, and searchability. Tame the data beast once and for all!

Implementing an Effective Data Archiving Strategy

So you have a mind-boggling amount of data accumulating and you’re starting to feel like you’re drowning in a sea of unstructured information. Before you decide to throw in the towel, take a deep breath and consider implementing an intelligent archiving strategy.

Get Ruthless

Go through your data and purge anything that’s obsolete or irrelevant. Be brutally honest—if it’s not useful now or in the foreseeable future, delete it. Free up storage space and clear your mind by ditching the digital detritus.

Establish a Filing System

Come up with a logical taxonomy to categorize your data. Group similar types of info together for easy searching and access later on. If you have trouble classifying certain data points, you probably don’t need them. Toss ‘em!

Automate and Delegate

Use tools that can automatically archive data for you based on your taxonomy. Many solutions employ machine learning to categorize and file data accurately without human input. Let technology shoulder the burden so you can focus on more important tasks, like figuring out what to have for lunch.

Review and Refine

Revisit your archiving strategy regularly to make sure it’s still working for your needs. Make adjustments as required to optimize how data is organized and accessed. Get feedback from other users and incorporate their suggestions. An effective archiving approach is always a work in progress.

With an intelligent data archiving solution in place, you’ll gain control over your information overload and find the freedom that comes from a decluttered digital space. Tame the data deluge and reclaim your sanity!

Conclusion

So there you have it. The future of data management and control through intelligent archiving is here. No longer do you have to grapple with endless spreadsheets, documents and files, or manually track the relationships between them. With AI-powered archiving tools, your data is automatically organized, categorized and connected for you. All that structured data chaos becomes a thing of the past. Your time is freed up to focus on more meaningful work. The possibilities for data-driven insights and optimization seem endless. What are you waiting for? Take back control of your data and unleash its potential with intelligent archiving. The future is now, so hop to it! There’s a whole new world of data-driven opportunity out there waiting for you.


Scraping Capterra.com Product Details: Unlock B2B Software Insights for Smarter Decisions


In the competitive world of B2B software, informed decision-making is everything. Whether you're a SaaS provider, market researcher, or software reseller, having access to accurate product details can drive strategic choices and better customer engagement. At Datascrapingservices.com, we offer professional Capterra.com Product Details Scraping Services that provide you with structured, reliable, and up-to-date data from one of the most trusted software directories in the world.

Why Scrape Capterra.com?

Capterra.com is a leading platform where users explore, compare, and review software across thousands of categories like CRM, project management, accounting, HR, marketing automation, and more. It’s a goldmine of information for businesses looking to analyze the software landscape, monitor competitors, or identify partnership opportunities. That’s where our automated Capterra scraping services come in—extracting key product data at scale, with accuracy and speed.

Key Data Fields Extracted from Capterra.com:

Product Name

Vendor Name

Product Description

Category

Pricing Details

Deployment Type (Cloud, On-Premise, etc.)

Features List

User Ratings and Reviews

Review Count and Score

Product URL and Website Links

This structured data can be delivered in your preferred format—CSV, Excel, JSON, or directly into your CRM or BI tool.

Benefits of Capterra Product Details Extraction

✅ Competitive Intelligence

Track your competitors' positioning, pricing, features, and user sentiment. Understand where you stand and how to differentiate your product more effectively.

✅ Lead Generation and Market Research

Identify new software vendors and solutions within specific categories or regions. Perfect for consultants and analysts seeking data-driven insights.

✅ SaaS Product Comparison

If you run a product comparison site or software review platform, you can enrich your database with verified, regularly updated listings from Capterra.

✅ Content Strategy

Use extracted reviews, features, and product overviews to create detailed blog posts, product comparisons, and buyer guides.

✅ Business Development

Target emerging or established vendors for partnerships, integrations, or channel sales opportunities using real-time insights from Capterra.

Why Choose DataScrapingServices.com?

Custom Scraping Solutions: Tailored to your needs—whether you want to track only one category or extract data across all Capterra listings.

Real-Time or Scheduled Extraction: Receive updated data on a daily, weekly, or monthly basis, whenever you need it.

Accurate and Clean Data: We ensure the scraped data is deduplicated, validated, and formatted for immediate use.

Compliant and Ethical Practices: We follow best practices and adhere to web scraping guidelines and data privacy laws.

Best eCommerce Data Scraping Services Provider

Macys.com Product Listings Scraping

Scraping Argos.co.uk Home and Furniture Product Listings

Coles.com.au Product Information Extraction

Extracting Product Details from eBay.de

Scraping Currys.co.uk Product Listings

Target.com Product Prices Extraction

Wildberries.ru Product Price Scraping

Extracting Product Data from Otto.de

Extracting Amazon Product Listings

Extracting Product Details from BigW.com.au

Best Capterra Product Details Extraction Services in USA:

Fort Worth, Washington, Orlando, Mesa, Indianapolis, Long Beach, Denver, Fresno, Bakersfield, Atlanta, Austin, Houston, San Jose, Tulsa, Omaha, Philadelphia, Louisville, Chicago, San Francisco, Colorado, Wichita, San Antonio, New Orleans, Oklahoma City, Raleigh, Seattle, Memphis, Sacramento, Virginia Beach, Columbus, Jacksonville, Las Vegas, El Paso, Charlotte, Milwaukee, Dallas, Nashville, Boston, Tucson and New York.

Final Thoughts

Scraping product details from Capterra.com empowers your business with valuable market intelligence that manual methods simply can't deliver. Whether you’re streamlining competitive analysis, fueling lead generation, or enriching your SaaS insights, DataScrapingServices.com is your trusted partner.

📧 Get in touch today: [email protected]

🌐 Visit us at: Datascrapingservices.com

Let’s transform Capterra data into your next competitive advantage.

#scrapingcapterraproductdetails #extractingproductinformationfromcapterra #ecommercedataextraction #webscraping #pricemonitoring #ecommercestrategy #dataextraction #marketintelligence #retailpricing #competitortracking #datascrapingservices


How Kabir Amperity Is Revolutionizing Customer Data Platforms

Kabir Shahani, the visionary behind Kabir Amperity, has played a pivotal role in transforming how businesses manage and activate customer data. As the co-founder of Amperity, he recognized early on the limitations of traditional customer data platforms and set out to build a smarter, more unified solution. Under his leadership, Amperity leverages advanced AI and machine learning to deliver accurate, real-time customer profiles that drive personalization and growth for major brands. By breaking down data silos and ensuring seamless integration, Kabir Amperity is setting new standards in customer data management. His innovative approach continues to shape the future of digital marketing, proving that with the right leadership, technology can unlock the true value of customer insights.

Addressing The Fragmentation In Customer Data Systems

One of the core challenges Kabir Shahani identified early in his journey was the fragmentation of customer data across multiple systems. Traditional platforms often struggled to create a unified customer profile due to disjointed databases, siloed departments, and legacy technology. Kabir Amperity tackled this issue head-on by developing a system capable of ingesting data from disparate sources and intelligently matching it to individual customer identities. This innovation not only streamlined operations but also allowed companies to gain a 360-degree view of their customers, improving personalization and targeting at every touchpoint.
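To give a feel for what matching records from disparate sources to one identity involves, here is a deliberately simple sketch that links records sharing a normalized email or phone number, using a union-find structure. This is not Amperity's method, which the article describes as machine-learning based; the record fields and the exact-match rule are assumptions made purely for illustration.

```python
def resolve_identities(records):
    """Group record indexes that share a normalized email or phone value."""
    parent = list(range(len(records)))

    def find(i):
        # Walk up to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(i, j):
        parent[find(i)] = find(j)

    first_seen = {}  # (field, normalized value) -> index of first record with it
    for idx, record in enumerate(records):
        for field in ("email", "phone"):
            value = record.get(field)
            if not value:
                continue
            key = (field, value.strip().lower())
            if key in first_seen:
                union(idx, first_seen[key])
            else:
                first_seen[key] = idx

    clusters = {}
    for idx in range(len(records)):
        clusters.setdefault(find(idx), []).append(idx)
    return sorted(clusters.values())

records = [
    {"source": "crm", "email": "a@x.com", "phone": "555-0100"},
    {"source": "web", "email": "A@X.com"},
    {"source": "pos", "phone": "555-0100"},
    {"source": "crm", "email": "b@y.com"},
]
profiles = resolve_identities(records)
```

The first three records collapse into one profile even though the web and point-of-sale records share no field with each other: both link through the CRM record. Exact matching like this misses typos and shared phone numbers, which is where the probabilistic matching mentioned in the article would come in.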

Leveraging Artificial Intelligence For Better Accuracy

To bring a competitive edge to customer data platforms, Amperity has deeply integrated artificial intelligence into its core engine. Kabir Amperity focused on building machine learning models that continuously improve data matching, deduplication, and enrichment. The AI technology learns from customer behavior, data patterns, and evolving data points, helping businesses improve the accuracy and reliability of their customer profiles. As a result, companies experience fewer errors in targeting, better segmentation, and more meaningful insights. AI is not just a feature at Amperity; it is a foundational pillar that drives smarter marketing strategies and revenue growth.

Creating Real-Time Access To Unified Customer Profiles

In today’s fast-paced digital environment, timing is everything. One of Kabir Shahani’s key contributions has been ensuring that Amperity’s platform delivers customer data in real time. Rather than relying on batch processing, which can delay insights, the platform empowers businesses to access up-to-date customer profiles instantly. This is especially critical for industries like retail, travel, and hospitality, where consumer behavior shifts quickly. Kabir Amperity’s emphasis on speed and accessibility allows brands to act on data at the moment it matters most, creating more responsive and engaging customer experiences.

Helping Major Brands Unlock Growth Potential

Amperity’s success isn’t limited to software innovation—it’s also reflected in the results its clients achieve. Under the leadership of Kabir Amperity, the platform has been adopted by several Fortune 500 companies looking to modernize their data infrastructure. From fashion to financial services, brands have used Amperity to drive customer loyalty, increase conversions, and improve customer retention. The platform’s scalability and flexibility allow businesses of all sizes to adapt quickly and maximize the value of their customer data. This real-world impact validates the revolutionary ideas that Kabir Shahani and his team have built into the platform.

Promoting Ethical And Secure Data Practices

As privacy regulations and consumer expectations evolve, data ethics have become a top concern for businesses. Kabir Amperity recognized this shift early on and prioritized building a platform that ensures compliance and transparency. Amperity allows users to manage consent, track data lineage, and meet international data protection standards. The software is designed not only to optimize performance but also to respect the rights and preferences of customers. In this way, Kabir Amperity is pioneering responsible data practices that position businesses for sustainable success in a privacy-first world.

Building A Culture Of Innovation And Collaboration

Beyond technology, one of the less visible yet powerful aspects of Amperity’s success is its internal culture. Kabir Shahani has cultivated a team that thrives on solving complex challenges and continuously pushing the boundaries of what is possible. By fostering collaboration across engineering, product, and client success teams, Kabir Amperity has created an environment where innovation is a daily practice. This culture ensures that the platform not only keeps up with industry changes but often stays ahead of them, delivering new features and capabilities that keep clients at the forefront of customer engagement.

Expanding The Future Of Customer Data Platforms

The work being done at Amperity under the leadership of Kabir Shahani is more than just a software solution—it is a blueprint for the future of customer data management. The platform is expanding into new markets and integrating with emerging technologies such as predictive analytics, real-time personalization engines, and omnichannel engagement tools. Kabir Amperity envisions a world where businesses of all sizes can use data as a strategic asset, not just an operational resource. With ongoing product development and strategic partnerships, the company is laying the groundwork for the next generation of customer-centric businesses.

Conclusion

Kabir Shahani has not only founded a company—he has sparked a movement in how businesses think about and use customer data. Through Amperity, he has addressed long-standing issues in data fragmentation, brought powerful AI tools to the forefront, and created a platform that is both effective and ethical. The impact of Kabir Amperity is seen in the success stories of global brands and the growing recognition of customer data as a vital business asset. As customer expectations continue to evolve, businesses will increasingly rely on platforms like Amperity to meet those needs with speed, precision, and integrity.


Your Trusted Partner for Advanced Data Storage Solutions

Your data deserves the best — and that’s exactly what Esconet Technologies Ltd. delivers.

At Esconet, we provide advanced data storage solutions that are designed to meet the evolving demands of today’s businesses. Whether you need ultra-fast block storage, flexible file and object storage, or software-defined storage solutions, we have you covered.

1. High-Performance Block Storage: Designed for low-latency, high-speed access with NVMe-over-Fabrics.

2. File and Object Storage: Handle massive datasets easily with NFS, SMB, S3 support, and parallel file systems for up to 40 GB/s throughput.

3. Software-Defined Storage (SDS): Enjoy scalability, auto-tiering, load balancing, deduplication, and centralized management.

4. Data Protection and Security: Advanced encryption, RAID, and erasure coding to safeguard your critical information.

5. Strategic Partnerships: We collaborate with Dell Technologies, HPE, NetApp, Hitachi Vantara, and others to bring you the best solutions, including our own HexaData Storage Systems.

For more details, visit: Esconet's Data Storage Systems Page

#EsconetTechnologies #DataStorage #EnterpriseIT #SecureStorage #SoftwareDefinedStorage #HexaData #BusinessSolutions #DataSecurity


SaaS Acceleration Techniques: Boosting Cloud App Performance at the Edge

As businesses increasingly rely on software-as-a-service (SaaS) applications, ensuring optimal performance becomes a strategic imperative. Cloud-based applications offer significant flexibility and cost efficiency, yet their performance often hinges on the underlying network infrastructure. Latency issues, bandwidth constraints, and inefficient routing can slow down critical SaaS platforms, negatively impacting user experience and productivity. Fortunately, WAN optimization techniques—when extended to the network's edge—can dramatically enhance performance, ensuring fast, reliable access to cloud apps.

The Need for WAN Optimization in a SaaS World

SaaS applications are central to modern business operations, from CRM and collaboration tools to analytics and enterprise resource planning (ERP) systems. However, these applications can experience latency and inconsistent performance if the data must travel long distances or traverse congested networks. A poorly optimized WAN may result in delayed page loads, sluggish response times, and a frustrating user experience.

Optimizing WAN performance is critical not only for internal operations but also for customer-facing services. In a world where every millisecond counts, businesses must take proactive steps to ensure that SaaS applications perform at their best. Enhancing network paths and reducing latency can lead to faster decision-making, increased productivity, and a competitive edge.

Key Techniques for SaaS Acceleration

Dynamic Path Selection

One of the most effective WAN optimization techniques is dynamic path selection. This method uses real-time analytics to assess multiple network paths continuously and automatically routes traffic via the most optimal route. By leveraging technologies such as Software-Defined WAN (SD-WAN), organizations can ensure that latency-sensitive SaaS traffic, like video conferencing or real-time collaboration tools, always takes the fastest route. Dynamic routing reduces delays and prevents network congestion, providing smooth, uninterrupted access to cloud applications.
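The selection logic can be sketched as follows. The link names, the metrics, the 1% loss threshold, and the latency-plus-jitter score are all assumptions for this example; commercial SD-WAN products use their own scoring and probing mechanisms.

```python
def select_path(paths, max_loss_pct=1.0):
    """Pick the healthy path with the lowest latency + jitter score."""
    healthy = [p for p in paths if p["loss_pct"] <= max_loss_pct]
    candidates = healthy or paths  # if nothing is healthy, fall back to all paths
    return min(candidates, key=lambda p: p["latency_ms"] + p["jitter_ms"])

paths = [
    {"name": "mpls", "latency_ms": 40, "jitter_ms": 2, "loss_pct": 0.1},
    {"name": "broadband", "latency_ms": 25, "jitter_ms": 5, "loss_pct": 0.2},
    {"name": "lte", "latency_ms": 20, "jitter_ms": 3, "loss_pct": 3.0},
]
first_choice = select_path(paths)["name"]

# Simulate congestion on the broadband link and select again.
paths[1]["latency_ms"] = 90
second_choice = select_path(paths)["name"]
```

With the initial measurements the broadband link wins, since LTE is excluded for packet loss. Once broadband latency rises, the same function moves traffic to MPLS, which is the "dynamic" part: selection is simply re-run as fresh measurements arrive.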

Local Breakout

Local breakout is another critical strategy for accelerating SaaS performance. Instead of routing all traffic back to a centralized data center, local breakout lets branch offices or remote locations reach SaaS applications directly over their local internet connection. This approach shortens the distance data must travel, which reduces round-trip time and latency. Local breakout is particularly effective in multi-site organizations where remote teams require fast and reliable access to cloud-based services.
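At its core, local breakout is a per-destination forwarding decision made at the branch. The sketch below assumes a hypothetical list of trusted SaaS domains and two hypothetical next-hop labels; it only shows the decision, not how a real edge device classifies traffic.

```python
# Hypothetical SaaS domains that the branch is allowed to reach directly.
SAAS_DOMAINS = ("crm.example.com", "meet.example.net")

def next_hop(hostname):
    """Send trusted SaaS traffic out locally, backhaul everything else."""
    host = hostname.lower().rstrip(".")
    for domain in SAAS_DOMAINS:
        # Match the domain itself or any subdomain, but not look-alikes.
        if host == domain or host.endswith("." + domain):
            return "local-internet"
    return "datacenter-tunnel"
```

For example, `next_hop("eu.crm.example.com")` takes the local route, while an intranet host or a look-alike such as `evilcrm.example.com` is still sent through the data center.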

Traffic Shaping and QoS Policies

Traffic shaping and Quality of Service (QoS) policies help prioritize critical SaaS traffic over less important data flows. By prioritizing real-time applications and essential business functions, IT teams can ensure mission-critical data gets the bandwidth it needs—even during peak usage periods. Implementing these policies reduces the impact of bandwidth contention and improves overall network performance, thereby enhancing the user experience for cloud applications.
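The prioritization half of this can be illustrated with a strict-priority queue: higher-priority packets always leave first, and packets of the same class leave in arrival order. The traffic classes and their ranking are assumptions for the example, and rate limiting (the "shaping" half, often done with a token bucket) is left out.

```python
import heapq
from itertools import count

# Hypothetical traffic classes; a lower number means higher priority.
PRIORITY = {"voice": 0, "crm": 1, "bulk": 2}

class PriorityScheduler:
    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker that preserves arrival order

    def enqueue(self, traffic_class, packet):
        entry = (PRIORITY[traffic_class], next(self._seq), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

scheduler = PriorityScheduler()
scheduler.enqueue("bulk", "backup-1")
scheduler.enqueue("voice", "call-1")
scheduler.enqueue("crm", "crm-1")
scheduler.enqueue("voice", "call-2")
sent = [scheduler.dequeue() for _ in range(len(scheduler))]
```

The backup packet arrived first but is sent last. Strict priority can starve low-priority traffic under sustained load, which is why real QoS policies usually combine it with weighted or rate-limited queues.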

Caching and Data Deduplication

Edge caching is another technique that significantly enhances SaaS performance. By storing frequently accessed data locally at the network edge, caching reduces the need to repeatedly fetch information from distant cloud data centers. This not only speeds up access times but also alleviates bandwidth congestion. In addition, data deduplication techniques can optimize data transfers by eliminating redundant information, ensuring that only unique data travels over the network. Caching and deduplication help streamline data flows, improve efficiency, and reduce operational costs.
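A small least-recently-used (LRU) cache shows how edge caching cuts trips to the origin. The class name, the capacity of two, and the stand-in fetch function are assumptions for this sketch; LRU is one common eviction policy, not necessarily what a given edge appliance uses.

```python
from collections import OrderedDict

class EdgeCache:
    """Tiny LRU cache placed in front of a slow origin fetch."""

    def __init__(self, fetch_from_origin, capacity=2):
        self._fetch = fetch_from_origin
        self._capacity = capacity
        self._items = OrderedDict()  # least recently used entry comes first
        self.origin_fetches = 0

    def get(self, key):
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            return self._items[key]
        value = self._fetch(key)          # cache miss: go to the origin
        self.origin_fetches += 1
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used
        return value

# Stand-in for a request to a distant cloud data center.
cache = EdgeCache(lambda key: f"content of {key}", capacity=2)
for key in ["/a", "/a", "/b", "/c", "/a"]:
    cache.get(key)
```

Five requests result in four origin fetches here: the second request for `/a` is served locally, but `/a` is later evicted to make room for `/c` and has to be fetched again. Sizing the cache to the working set is what makes the technique pay off.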

Continuous Monitoring and Real-Time Analytics

Proactive monitoring is essential for maintaining high-quality WAN performance. Continuous real-time analytics track key metrics such as latency, packet loss, throughput, and jitter. These metrics provide IT teams with immediate insights into network performance, allowing them to identify and address any issues quickly. Automated alerting systems ensure that any deviation from SLA benchmarks triggers a swift response, preventing minor glitches from evolving into major disruptions. With ongoing monitoring, businesses can effectively maintain optimal performance levels and plan for future capacity needs.
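As a minimal sketch of the metrics involved, the code below summarizes a series of probe results and compares them with SLA limits. The sample values and thresholds are made up, and jitter is computed here as the mean absolute difference between consecutive round-trip times, a simplification of the smoothed estimators used in practice.

```python
def summarize(samples_ms):
    """samples_ms: round-trip times in ms, with None marking a lost probe."""
    received = [s for s in samples_ms if s is not None]
    loss_pct = 100.0 * (len(samples_ms) - len(received)) / len(samples_ms)
    latency = sum(received) / len(received) if received else float("inf")
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = sum(diffs) / len(diffs) if diffs else 0.0
    return {"latency_ms": latency, "jitter_ms": jitter, "loss_pct": loss_pct}

def sla_violations(metrics, sla):
    """Return the names of metrics that exceed their SLA limit."""
    return [name for name, limit in sla.items() if metrics[name] > limit]

# Hypothetical SLA limits and one lost probe out of five.
sla = {"latency_ms": 50, "jitter_ms": 5, "loss_pct": 1}
metrics = summarize([20, 22, None, 30, 28])
alerts = sla_violations(metrics, sla)
```

In this run latency and jitter are within limits, but one lost probe out of five pushes packet loss to 20%, so `alerts` contains `loss_pct`. An automated alerting system would act on a non-empty list.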

Best Practices for Implementing WAN Optimization

Adopting these techniques requires careful planning and a strategic approach. First, conduct a comprehensive assessment of your existing network infrastructure. Identify areas where latency is highest and determine which segments can benefit most from optimization. Use this data to develop a roadmap prioritizing dynamic routing, local breakout, and caching in key locations.

Next, invest in a robust SD-WAN solution that integrates seamlessly with your current infrastructure. An effective SD-WAN platform facilitates dynamic path selection and offers centralized control for consistent policy enforcement across all sites. This centralized approach helps reduce configuration errors and ensures all network traffic adheres to predefined QoS rules.

In addition to the technical implementations, it is vital to establish clear service level agreements (SLAs) with your vendors. Use real-time monitoring data to enforce these SLAs, ensuring that every link in your WAN meets the expected performance standards. This proactive approach optimizes network performance and provides the leverage needed for effective vendor negotiations.

Training and support are also crucial components of a successful WAN optimization strategy. Equip your IT staff with the necessary skills to manage and monitor advanced SD-WAN tools and other optimization technologies. Regular training sessions and access to technical support help maintain operational efficiency and ensure your team can respond quickly to network issues.

Potential Impact: A Hypothetical Case Study

Consider the case of a mid-market enterprise that experienced frequent performance issues with its cloud-based CRM and collaboration platforms. The company struggled with high latency and intermittent service interruptions due to a congested WAN that routed all traffic through a single, overloaded data center.

By deploying an SD-WAN solution with dynamic path selection and local breakout, the enterprise restructured its network traffic. The IT team rerouted latency-sensitive applications to use the fastest available links and directed non-critical traffic over less expensive, slower connections. Lastly, they implemented edge caching to store frequently accessed data locally, reducing the need for repeated downloads from the central cloud data center.
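The routing decision described here can be sketched in a few lines. This is a simplified illustration, assuming each link reports a latency and a cost figure; the `Link` class and `select_path` function are hypothetical names, and a real SD-WAN controller weighs many more signals.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """A WAN link with illustrative health, latency, and cost attributes."""
    name: str
    latency_ms: float
    cost_per_gb: float
    up: bool = True


def select_path(links, latency_sensitive):
    """Pick the fastest healthy link for latency-sensitive traffic,
    and the cheapest healthy link for everything else."""
    candidates = [link for link in links if link.up]
    if not candidates:
        raise RuntimeError("no healthy WAN link available")
    if latency_sensitive:
        return min(candidates, key=lambda link: link.latency_ms)
    return min(candidates, key=lambda link: link.cost_per_gb)
```

Because unhealthy links are filtered out first, traffic fails over to the next best link automatically when the preferred one goes down.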

The result? The company observed a 35% reduction in average latency, improved application response times, and significantly decreased bandwidth costs. These improvements enhanced employee productivity and boosted customer satisfaction as response times for online support and real-time updates improved dramatically. This case study illustrates how thoughtful WAN optimization can transform network performance and yield tangible cost savings.

Optimize Your WAN for Peak Cloud Performance

In today's digital-first world, balancing latency and bandwidth is crucial for delivering fast and reliable cloud application experiences. Real-time WAN monitoring, dynamic routing, and intelligent traffic management ensure that SaaS applications perform optimally, even during peak usage. Organizations can optimize their networks to meet the demands of multi-cloud environments and emerging technologies, such as 5G, by adopting strategies like SD-WAN, local breakout, caching, and continuous performance analytics. Partnering with a telecom expense management professional is essential for success. A company like zLinq offers tailored telecom solutions that help mid-market enterprises implement these advanced strategies effectively. With comprehensive network assessments, proactive vendor negotiations, and seamless integration of cutting-edge monitoring tools, zLinq empowers organizations to transform their WAN from a cost center into a strategic asset that drives innovation and growth. Ready to optimize your WAN for peak cloud performance? Contact zLinq today to unlock the full potential of your network infrastructure.

Transforming Data Accuracy with Match Data Pro LLC: The Future of Reliable Data Management

In the digital age, data is the foundation of decision-making, innovation, and growth. But data is only as valuable as it is accurate, accessible, and well-managed. That’s where Match Data Pro LLC steps in, offering a game-changing solution in the world of data management software and intelligent automation.

From streamlining complex databases to eliminating duplicates and enhancing operational performance, Match Data Pro LLC has earned a reputation as a go-to provider for businesses seeking reliable data management and cutting-edge record linkage systems.

Let’s dive into how their tools are redefining how organizations manage, match, and master their data.

The Need for Smarter Data Management

Data silos, duplicates, and inconsistent formats are common pain points for organizations that handle large or complex datasets. As companies expand, merge, or adopt new systems, the risk of scattered or mismatched records grows quickly.

These issues don’t just slow down operations—they can lead to poor business decisions, compliance risks, and financial losses.

That’s why investing in robust data management software is no longer optional—it’s essential. Match Data Pro LLC understands this reality and offers tools that solve these problems head-on.

Match Data Pro LLC: Purpose-Built for Precision

Match Data Pro LLC is not just another software company. It’s a team of data experts and innovators focused on helping organizations:

Maintain clean, consistent records

Link data accurately across multiple sources

Automate the process of matching and deduplicating entries

Improve overall data governance and decision-making

Their platform is designed with scalability, performance, and usability in mind, making it ideal for industries ranging from finance and healthcare to government, education, and marketing.

Reliable Data Management: The Core of Your Business Success

At the heart of Match Data Pro’s offering is its commitment to reliable data management. Their software ensures your data is:

Consistent – All formats, fields, and records are normalized

Accurate – Duplicates and outdated entries are eliminated

Connected – Related records are linked intelligently, even across systems

Secure – Protected with strong compliance and encryption standards

By reducing human error and integrating automation, Match Data Pro empowers businesses to trust their data—and use it more effectively.

Unlocking the Power of Record Linkage

One of Match Data Pro’s standout features is its record linkage system, which intelligently connects records that refer to the same entity but may exist in different formats or databases.

For example, a person might be listed as "Jonathan Smith" in one database and "Jon Smith" in another. Without proper linkage, a company may not realize they’re the same person—leading to duplicated mailings, billing confusion, or skewed analytics.

Match Data Pro’s Record Linkage Software uses sophisticated algorithms and fuzzy matching techniques to identify and link such records accurately, even when traditional systems fail.
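The vendor's actual algorithms are not described in this post, so the following is only a generic sketch of fuzzy name matching using Python's standard `difflib` module. The `normalize`, `name_similarity`, and `is_probable_match` helpers and the 0.75 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase the name and collapse repeated whitespace."""
    return " ".join(name.lower().split())


def name_similarity(a: str, b: str) -> float:
    """Similarity ratio between 0.0 and 1.0 for two names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def is_probable_match(a: str, b: str, threshold: float = 0.75) -> bool:
    """Treat two names as the same entity when similarity meets the threshold."""
    return name_similarity(a, b) >= threshold
```

With this sketch, "Jonathan Smith" and "Jon Smith" score roughly 0.78 and are flagged as a probable match, while unrelated names fall well below the threshold. Production systems usually combine several fields rather than relying on the name alone.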

What Makes Match Data Pro’s Record Linkage Software Unique?

Unlike basic matching tools, Match Data Pro’s Record Linkage Software offers:

Configurable Matching Rules – Tailor the system to your specific data formats and business logic

Machine Learning Capabilities – The more you use it, the smarter it gets

Real-Time Matching – For dynamic data environments like CRMs, e-commerce, or healthcare systems

Batch Processing Options – Ideal for cleaning up legacy databases or massive data imports

Whether you're linking customer records, employee profiles, product inventories, or patient data, the software ensures unmatched accuracy and performance.
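As a rough illustration of what configurable matching rules can look like, the sketch below scores two records with per-field weights. The rule format, field names, and weights are hypothetical and are not taken from Match Data Pro's product.

```python
from difflib import SequenceMatcher

# Hypothetical rule set: field name -> (comparison type, weight).
RULES = {
    "email": ("exact", 0.5),
    "name": ("fuzzy", 0.3),
    "postcode": ("exact", 0.2),
}


def field_score(kind, a, b):
    """Score one field pair: 1.0/0.0 for exact rules, a ratio for fuzzy rules."""
    a, b = a.strip().lower(), b.strip().lower()
    if not a or not b:
        return 0.0  # a missing value contributes no evidence
    if kind == "exact":
        return 1.0 if a == b else 0.0
    return SequenceMatcher(None, a, b).ratio()


def match_score(rec_a, rec_b, rules=RULES):
    """Weighted average of field scores, between 0.0 and 1.0."""
    total_weight = sum(weight for _, weight in rules.values())
    score = sum(
        weight * field_score(kind, rec_a.get(field, ""), rec_b.get(field, ""))
        for field, (kind, weight) in rules.items()
    )
    return score / total_weight
```

Changing the business logic then means editing the `RULES` table rather than the matching code, which is the main appeal of a rule-driven design.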

Real-World Benefits: From Insights to Impact

Here’s how Match Data Pro’s clients benefit from implementing their data management software:

Marketing Optimization: Eliminate duplicate leads, improve targeting accuracy, and streamline campaign efforts

Compliance Confidence: Keep data secure and aligned with privacy regulations like GDPR and HIPAA

Operational Efficiency: Reduce manual data cleaning and free up your team for higher-value tasks

Improved Customer Experience: Provide personalized service with unified, accurate records

Enhanced Analytics: Make smarter decisions with clean and connected data

No matter the industry, clean data is a competitive advantage—and Match Data Pro delivers it.

Seamless Integration and User-Friendly Design

Match Data Pro understands that even the most powerful system is useless if it’s difficult to implement. That’s why their record linkage system and data tools are built for easy integration with:

CRMs like Salesforce and HubSpot

Databases such as SQL-based systems, Oracle, and MongoDB

ERP systems, spreadsheets, cloud platforms, and more

Their intuitive dashboard and clear documentation ensure your team can get started quickly—with minimal training or IT support.

Scalable Solutions for Growing Data Needs

Whether you're a small startup or a multinational enterprise, Match Data Pro’s solutions scale with you. As your data volume and complexity grow, their tools grow with you—delivering performance, flexibility, and control at every stage.

And because they’re constantly evolving with new features, AI enhancements, and user feedback, you’re never stuck with outdated tech.

Why Match Data Pro LLC?

Here’s why more organizations are choosing Match Data Pro for their data management software needs:

Proven Track Record of Success

Advanced Record Linkage Capabilities

Dedicated Customer Support and Onboarding

Flexible Pricing and Deployment Options

Commitment to Accuracy, Reliability, and Innovation

Their goal is simple: to help businesses achieve reliable data management that powers growth, compliance, and intelligent operations.

Final Thoughts

In a world where data fuels every decision, product, and customer interaction, maintaining clean and connected records is critical. With Match Data Pro LLC, you get more than just software—you get a trusted partner dedicated to unlocking the true value of your data.

From advanced record linkage software to powerful data management tools, their platform sets a new standard for precision and performance.

If you’re ready to reduce errors, improve efficiency, and gain actionable insights, it's time to explore the power of Match Data Pro. Discover how their record linkage system and reliable data management solutions can transform your organization—today and into the future.

The Ultimate Guide to Choosing the Right Bulk Mailing Service

In the fast-paced world of modern communication and marketing, bulk mailing services remain a powerful tool for businesses. Whether you're running a small enterprise or managing a large corporation, selecting the right bulk mailing service can be a game-changer. With countless options available in 2025, it's essential to understand what to look for, how to evaluate providers, and what benefits you can expect. This guide walks you through everything you need to know about choosing the right bulk mailing service.

Bulk mailing services involve sending large volumes of mail—typically marketing materials like postcards, catalogs, letters, or newsletters—to many recipients at once. These services handle the printing, sorting, and mailing processes to streamline delivery and reduce postage costs.

Why Bulk Mailing Still Matters in 2025

Despite the rise of digital marketing, direct mail boasts impressive engagement rates. According to recent studies, direct mail achieves a response rate of up to 9%, significantly higher than email marketing. In 2025, businesses are increasingly integrating physical mail into omnichannel strategies to reach customers more effectively.

Key Benefits of Using a Bulk Mailing Service

Cost Efficiency: Lower postage rates and volume discounts

Time-Saving: Outsourcing saves you from printing, folding, and mailing

Professional Quality: Expert printing and finishing services

Targeted Campaigns: Services offer data analytics and list segmentation

Scalability: Suitable for small batches or nationwide campaigns

Factors to Consider When Choosing a Bulk Mailing Service

1. Mailing Volume and Frequency

Understand your business needs. Are you sending thousands of letters monthly or running a seasonal campaign? Choose a provider that scales with you.

2. Printing Capabilities

Look for services that offer high-quality color and black-and-white printing, paper options, and customization such as variable data printing.

3. Data Management

Choose providers with tools for address verification, deduplication, and data cleansing. Accurate data ensures deliverability and saves costs.

4. Automation and Integration

Advanced services offer automation tools and API integrations with your CRM or marketing platform for seamless workflows.

5. Turnaround Time

Check if they offer same-day or next-day mailing, especially for time-sensitive communications.

6. Security and Compliance

Ensure the provider complies with data privacy regulations (e.g., GDPR, HIPAA) and follows secure handling procedures.

7. Tracking and Reporting

Modern services offer tracking of mail pieces and performance analytics to help optimize campaigns.

8. Customer Support

Reliable support is critical. Check for 24/7 assistance, dedicated account managers, and multi-channel support options.
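To illustrate the data management factor above, here is a small sketch of mailing-list deduplication. It only normalizes text and removes repeats; true address verification requires a postal reference database, which is outside this example. The abbreviation table and function names are assumptions.

```python
import re

# Illustrative abbreviation table; a real one would be far larger.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd",
                 "suite": "ste", "apartment": "apt"}


def _clean(text):
    """Lowercase, drop punctuation, and abbreviate common street words."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(ABBREVIATIONS.get(word, word) for word in text.split())


def address_key(name, address, postcode):
    """Build a comparison key that ignores case, punctuation, and spacing."""
    return (_clean(name), _clean(address), _clean(postcode).replace(" ", ""))


def deduplicate(recipients):
    """Keep the first occurrence of each recipient, preserving order."""
    seen = set()
    unique = []
    for recipient in recipients:
        key = address_key(recipient["name"], recipient["address"],
                          recipient["postcode"])
        if key not in seen:
            seen.add(key)
            unique.append(recipient)
    return unique
```

Every duplicate removed is one less piece to print and post, which is where the cost saving comes from.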

Popular Types of Bulk Mail

Postcards

Newsletters

Brochures

Flyers

Catalogs

Invoices and Statements

Questions to Ask a Bulk Mailing Provider

What industries do you specialize in?

Can you integrate with my current marketing software?

Do you offer variable data and personalization?

What is your average delivery time?

What are your data security protocols?

Cost Considerations

Cost depends on volume, design, print quality, mail class, and additional services like list rental or tracking. Request quotes from multiple providers and evaluate their pricing models.

Case Study: Retailer X Boosts ROI with Bulk Mailing

Retailer X partnered with a bulk mailing service to send personalized postcards to past customers. By leveraging customer data and eye-catching design, they achieved a 12% response rate and a 3x return on investment. Automation allowed real-time syncing with their CRM, saving manual effort.

How to Get Started

Define your goals and target audience

Prepare your mailing list

Choose a mailing format

Design your mail piece

Select a reputable bulk mailing provider

Launch and monitor your campaign

Final Thoughts

Bulk mailing continues to deliver tangible value in an increasingly digital world. With the right provider, you can elevate your marketing, reach more customers, and enjoy measurable results. By following this guide, you’ll be well on your way to choosing a bulk mailing service that fits your business needs in 2025 and beyond.

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

Kubernetes has become the de facto standard for container orchestration, enabling organizations to build, deploy, and manage applications at scale. However, running stateful applications in Kubernetes presents unique challenges, particularly in managing persistent storage, scalability, and data resiliency. This is where Red Hat OpenShift Data Foundation (ODF) steps in, offering a comprehensive software-defined storage solution tailored for OpenShift environments.

What is Red Hat OpenShift Data Foundation?

Red Hat OpenShift Data Foundation (formerly known as OpenShift Container Storage) is an integrated storage solution designed to provide scalable, reliable, and persistent storage for applications running on OpenShift. Built on Ceph, NooBaa, and Rook, ODF offers a seamless experience for developers and administrators looking to manage storage efficiently across hybrid and multi-cloud environments.

Key Features of OpenShift Data Foundation

Unified Storage Platform – ODF provides block, file, and object storage, catering to diverse application needs.

High Availability and Resilience – Ensures data availability with multi-node replication and automated failover mechanisms.

Multi-Cloud and Hybrid Cloud Support – Enables storage across on-premises, private cloud, and public cloud environments.

Dynamic Storage Provisioning – Automatically provisions storage resources based on application demands.

Advanced Data Services – Supports features like data compression, deduplication, encryption, and snapshots for data protection.

Integration with OpenShift – Provides seamless integration with OpenShift, including CSI (Container Storage Interface) support and persistent volume management.
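Dynamic provisioning is typically triggered by a PersistentVolumeClaim that names a storage class. The sketch below builds such a claim as a Python dictionary that can be serialized to JSON. The `build_pvc` helper is hypothetical, and the storage class name you pass in is an assumption about your cluster; list the classes that actually exist with `oc get storageclass`.

```python
import json


def build_pvc(name, size_gi, storage_class, namespace="default"):
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ReadWriteOnce suits a single-pod block volume.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


def to_json(manifest):
    """Serialize the manifest so it can be saved and applied to a cluster."""
    return json.dumps(manifest, indent=2)
```

Saving the output of `to_json(build_pvc("db-data", 20, "<your-storage-class>"))` to a file and applying it with `oc apply -f` asks the cluster to provision a 20 GiB volume on demand.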

Why Enterprises Need OpenShift Data Foundation

Enterprises adopting OpenShift for their containerized workloads require a storage solution that can keep up with their scalability and performance needs. ODF enables businesses to:

Run stateful applications efficiently in Kubernetes environments.

Ensure data persistence, integrity, and security for mission-critical workloads.

Optimize cost and performance with intelligent data placement across hybrid and multi-cloud architectures.

Simplify storage operations with automation and centralized management.

Learning Red Hat OpenShift Data Foundation with DO370

The DO370 course by Red Hat is designed for professionals looking to master enterprise Kubernetes storage with OpenShift Data Foundation. This course provides hands-on training in deploying, managing, and troubleshooting ODF in OpenShift environments. Participants will learn:

How to deploy and configure OpenShift Data Foundation.

Persistent storage management in OpenShift clusters.

Advanced topics like disaster recovery, storage performance optimization, and security best practices.

Conclusion

As Kubernetes adoption grows, organizations need a robust storage solution that aligns with the agility and scalability of containerized environments. Red Hat OpenShift Data Foundation delivers an enterprise-grade storage platform, making it an essential component for any OpenShift deployment. Whether you are an IT architect, administrator, or developer, the expertise gained through the DO370 course will empower you to harness the full potential of Kubernetes storage and drive innovation within your organization.

Interested in learning more? Explore Red Hat’s DO370 course and take your OpenShift storage skills to the next level!

For more details, visit www.hawkstack.com

How to Choose the Right Data Quality Tool for Your Organization

In today's data-driven world, organizations rely heavily on accurate, consistent, and reliable data to make informed decisions. However, ensuring the quality of data is a daunting task due to the vast volumes and complexities of modern datasets. To address this challenge, businesses turn to Data Quality Tools—powerful software solutions designed to identify, cleanse, and manage data inconsistencies. Selecting the right tool for your organization is crucial to maintaining data integrity and achieving operational efficiency.

This blog will guide you through choosing the perfect Data Quality Tool, highlighting key factors to consider, features to look for, and steps to evaluate your options.

Understanding the Importance of Data Quality

Before diving into the selection process, it's vital to understand why data quality matters. Poor data quality can lead to:

Inefficient Operations: Erroneous data disrupts workflows, leading to wasted time and resources.

Faulty Decision-Making: Decisions based on incorrect data can result in missed opportunities and financial losses.

Regulatory Compliance Issues: Many industries require adherence to strict data governance standards. Low-quality data can lead to non-compliance and penalties.

Customer Dissatisfaction: Inaccurate data affects customer service and satisfaction, harming your reputation.

Investing in a reliable Data Quality Tool helps mitigate these risks, ensuring data accuracy, completeness, and consistency.

Key Factors to Consider When Choosing a Data Quality Tool

Selecting the right Data Quality Tool involves evaluating various factors to ensure it aligns with your organization's needs. Here are the essential considerations:

Identify Your Data Challenges

Understand the specific data quality issues your organization faces. Common challenges include duplicate records, incomplete data, inconsistent formatting, and outdated information. A thorough analysis will help you choose a tool that addresses your unique requirements.

Scalability

As your organization grows, so does your data. The tool you select should be scalable and capable of handling increased data volumes and complexity without compromising performance.

Integration Capabilities

A robust Data Quality Tool must seamlessly integrate with your existing IT infrastructure, including databases, data warehouses, and other software applications. Compatibility ensures smooth workflows and efficient data processing.

Ease of Use

The tool should have an intuitive interface, making it easy for your team to adopt and use effectively. Complex tools with steep learning curves can hinder productivity.

Automation Features

Look for automation capabilities such as data cleansing, deduplication, and validation. Automation saves time and reduces human error, enhancing overall data quality.

Cost and ROI

Evaluate the total cost of ownership, including licensing, implementation, and maintenance. Ensure the tool delivers a measurable return on investment (ROI) through improved data accuracy and operational efficiency.

Vendor Support and Updates

Choose a tool from a vendor with a solid track record of customer support and regular updates. This ensures the tool remains up to date with evolving technology and regulatory requirements.

Must-Have Features in a Data Quality Tool

When evaluating options, ensure the Data Quality Tool includes the following key features:

Data Profiling

Data profiling analyses datasets to identify patterns, anomalies, and inconsistencies. This feature is essential for understanding the current state of your data and pinpointing problem areas.

Data Cleansing

Data cleansing automates the correction of errors such as typos, duplicate records, and invalid values, ensuring your datasets are accurate and reliable.

Data Enrichment

Some tools enhance data by appending missing information from external sources, improving its completeness and relevance.

Real-Time Processing

Real-time data processing is a critical feature for organizations handling dynamic datasets. It ensures that data is validated and cleansed as it enters the system.

Customizable Rules and Workflows

Every organization has unique data governance policies. A good Data Quality Tool allows you to define and implement custom rules and workflows tailored to your needs.

Reporting and Visualization

Comprehensive reporting and visualization tools provide insights into data quality trends and metrics, enabling informed decision-making and progress tracking.

Compliance Features

To avoid legal complications, ensure the tool complies with industry-specific regulations, such as GDPR, HIPAA, or PCI DSS.
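Two of the features above, data profiling and customizable rules, are easy to picture with a small example. This is a minimal sketch using only the standard library; the column names, rule definitions, and function names are assumptions, and commercial tools offer far richer checks.

```python
from collections import Counter


def profile(rows, columns):
    """Summarize each column: missing count, distinct count, most common value."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report


# Hypothetical custom rules: column name -> check returning True when valid.
RULES = {
    "email": lambda v: isinstance(v, str) and "@" in v,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}


def validate(rows, rules=RULES):
    """Return (row index, column) for every value that breaks a rule."""
    failures = []
    for index, row in enumerate(rows):
        for col, check in rules.items():
            if not check(row.get(col)):
                failures.append((index, col))
    return failures
```

Profiling shows where the problems are concentrated, and the rule table shows how governance policies can be expressed as data rather than hard-coded logic.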

Steps to Evaluate and Choose the Right Data Quality Tool

Define Your Objectives

Set clear goals for what you want to achieve with a Data Quality Tool. These can range from improving customer data accuracy to ensuring compliance with data governance standards.

Create a Checklist of Requirements

Based on your objectives, create a checklist of essential features and capabilities. Use this as a reference when comparing tools.

Research and Shortlist Tools

Research available tools, read reviews, and seek recommendations from industry peers. Then, shortlist tools that meet your requirements and budget.

Request Demos and Trials

Most vendors offer free demos or trial periods. Use this opportunity to explore the tool's features, usability, and compatibility with your systems.

Evaluate Vendor Reputation

Check the vendor's reputation in the market. Look for case studies, customer testimonials, and third-party reviews to gauge their reliability and support quality.

Involve Stakeholders

Include key stakeholders, such as IT teams and data managers, in the evaluation process. Their insights are invaluable in assessing the tool's practicality and effectiveness.

Make an Informed Decision

After a thorough evaluation, choose the Data Quality Tool that best meets your organization's needs, budget, and long-term goals.

Benefits of Implementing the Right Data Quality Tool

Investing in the right Data Quality Tool offers numerous benefits:

Improved Decision-Making: With accurate and reliable data, decision-makers can confidently strategize and plan.

Enhanced Operational Efficiency: Automation reduces manual effort, allowing your team to focus on strategic initiatives.

Regulatory Compliance: Robust tools ensure your data governance practices align with industry regulations.

Cost Savings: High-quality data minimizes financial losses caused by errors or inefficiencies.

Customer Satisfaction: Accurate customer data improves personalization and service quality, boosting satisfaction and loyalty.

Top Trends in Data Quality Tools

The field of Data Quality Tools is constantly evolving. Here are some emerging trends to watch:

AI and Machine Learning Integration

Advanced tools leverage AI and machine learning to predict and resolve data quality issues proactively.

Cloud-Based Solutions

Cloud-based Data Quality Tools offer scalability, flexibility, and cost-efficiency, making them popular among businesses of all sizes.

Data Quality as a Service (DQaaS)

Many vendors now offer DQaaS, providing data quality management as a subscription-based service.

Focus on Real-Time Data Quality

As businesses rely on real-time analytics, tools that ensure streaming data quality are gaining traction.

Conclusion

Choosing the right Data Quality Tool is a strategic decision that impacts your organization's success. By understanding your data challenges, evaluating essential features, and involving key stakeholders, you can select a tool that ensures data accuracy, compliance, and efficiency. Investing in a reliable tool pays off by enabling better decision-making, enhancing operations, and improving customer satisfaction.

As data's importance continues to grow, adopting a comprehensive Data Quality Tool is no longer optional—it's necessary for organizations striving to stay competitive in a data-driven landscape.

What Are the Key Steps in the Data Conversion Process?

In the digital era, seamless data conversion is crucial for businesses transitioning between systems, formats, or platforms. Whether migrating legacy databases to modern infrastructures or transforming raw data into usable insights, an effective data conversion strategy ensures accuracy, integrity, and consistency. Below are the essential steps in the data conversion process.

1. Requirement Analysis

A comprehensive assessment of source and target formats is vital before initiating data conversion. This stage involves evaluating data structures, compatibility constraints, and potential transformation challenges. A detailed roadmap minimizes errors and ensures a structured migration.

2. Data Extraction

Data must be retrieved from its original repository, whether a relational database, flat file, or cloud storage. This step demands meticulous extraction techniques to preserve data fidelity and prevent corruption. In large-scale data conversion projects, automation tools help streamline extraction while maintaining efficiency.

3. Data Cleansing and Validation

Raw data often contains inconsistencies, redundancies, or inaccuracies. Cleansing involves deduplication, formatting corrections, and anomaly detection to enhance quality. Validation ensures data meets predefined integrity rules, eliminating discrepancies that could lead to processing errors post-conversion.

4. Data Mapping and Transformation

Source data must align with the target system’s structure, necessitating meticulous mapping. This step involves schema alignment, datatype standardization, and structural modifications. Advanced transformation techniques such as ETL (Extract, Transform, Load) pipelines facilitate seamless data conversion by automating complex modifications.

5. Data Loading

Once transformed, the data is loaded into the destination system. This phase may involve bulk insertion or incremental loading, depending on the project’s scale. Performance optimization techniques, such as indexing and parallel processing, enhance speed and efficiency while minimizing system downtime.

6. Data Verification and Testing

A thorough validation process is crucial to confirm the integrity and accuracy of the converted data. Cross-checking against the source dataset, conducting sample audits, and running test scenarios help identify anomalies before final deployment. This step ensures that data conversion outcomes meet operational and compliance standards.

7. Post-Conversion Optimization

After successful deployment, performance monitoring and fine-tuning are essential. Index optimization, query performance analysis, and periodic audits help maintain long-term data integrity. Additionally, continuous monitoring allows early detection of emerging inconsistencies or system bottlenecks.

A meticulously executed data conversion process minimizes risks associated with data loss, corruption, or incompatibility. By adhering to structured methodologies and leveraging automation, businesses can seamlessly transition between systems while safeguarding data reliability. Whether for cloud migrations, software upgrades, or enterprise integrations, a well-planned data conversion strategy is indispensable in modern data management.
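The steps above can be sketched end to end with a toy example. This is an illustrative pipeline under simple assumptions: the source is a small CSV string, the target is an in-memory SQLite table, and all column names and sample rows are made up.

```python
import csv
import io
import sqlite3

# Made-up source data with whitespace, a duplicate row, and a missing name.
SOURCE_CSV = """id,full_name,signup
1, Ada Lovelace ,2024-01-05
2,Alan Turing,2024-02-11
2,Alan Turing,2024-02-11
3,,2024-03-01
"""


def extract(text):
    """Extraction: read source rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def cleanse(rows):
    """Cleansing: trim whitespace, drop rows without a name, deduplicate by id."""
    seen, clean = set(), []
    for row in rows:
        row = {key: value.strip() for key, value in row.items()}
        if not row["full_name"] or row["id"] in seen:
            continue
        seen.add(row["id"])
        clean.append(row)
    return clean


def transform(rows):
    """Mapping: source columns -> (customer_id, name, signup_date)."""
    return [(int(r["id"]), r["full_name"], r["signup"]) for r in rows]


def load(conn, records):
    """Loading: bulk-insert the transformed records into the target table."""
    conn.execute(
        "CREATE TABLE customers ("
        "customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


def verify(conn, expected):
    """Verification: confirm the target row count matches what was sent."""
    (count,) = conn.execute("SELECT COUNT(*) FROM customers").fetchone()
    return count == expected


def run():
    """Run the whole pipeline and return the connection and loaded records."""
    conn = sqlite3.connect(":memory:")
    records = transform(cleanse(extract(SOURCE_CSV)))
    load(conn, records)
    return conn, records
```

A row-count check is the simplest form of verification; real projects usually add checksums or field-level comparisons against the source.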

Quick way to extract job information from Reed

Reed is one of the largest recruitment websites in the UK, covering a variety of industries and job types. Its mission is to connect employers and job seekers to help them achieve better career development and recruiting success.

Introduction to the scraping tool

ScrapeStorm is a new-generation web scraping tool based on artificial intelligence technology. It is the first scraper to support Windows, Mac, and Linux operating systems.

Preview of the scraped result

1. Create a task

(2) Create a new smart mode task

You can create a new scraping task directly on the software, or you can create a task by importing rules.

How to create a smart mode task

2. Configure the scraping rules

Smart mode automatically detects the fields on the page. You can right-click a field to rename it, add or delete fields, modify data, and so on.

3. Set up and start the scraping task

(1) Run settings

Depending on your needs, you can set Schedule, IP Rotation & Delay, Automatic Export, Download Images, Speed Boost, Data Deduplication, and Developer.

4. Export and view data

(2) Choose the format to export according to your needs.

ScrapeStorm provides a variety of local export formats, such as Excel, CSV, HTML, TXT, or a database. Users on the Professional Plan and above can also post directly to WordPress.

How to view data and clear data

Revolutionizing Data Security: Enhancing Protection with Veeam and StoneFly DR365V Appliance

In an era where data breaches and cyber threats loom large, businesses are seeking cutting-edge solutions to safeguard their valuable digital assets. Today, we delve into a compelling narrative of technological innovation as a forward-thinking company elevates its data protection strategy using the robust capabilities of the Veeam Backup & Replication software in conjunction with the StoneFly DR365V appliance. Join us on this insightful journey as we explore how these two powerhouses come together to provide unprecedented layers of security, ensuring resilience in the face of ever-evolving threats.

To truly comprehend the magnitude of this advancement, one must first understand the fundamental principles behind Veeam’s expertise in backup and recovery. Veeam has long been revered for its reliable backup solutions that ensure data availability across virtual, physical, and cloud-based workloads. With a user-friendly interface and seamless integration capabilities, Veeam simplifies complex processes through automation while providing granular recovery options tailored to meet diverse business needs. This versatility places it at the forefront of backup technology – a position further solidified by its compatibility with StoneFly's DR365V appliance.

StoneFly’s DR365V appliance is a formidable player in data storage and protection, known for its scalability and high-performance architecture. At its core lies an intelligent blend of NAS (Network Attached Storage), SAN (Storage Area Network), and iSCSI technologies designed to deliver flexible storage solutions that grow alongside your business requirements. By combining these features with advanced deduplication capabilities, StoneFly optimizes storage efficiency without compromising performance or reliability – making it an ideal companion to complement Veeam's prowess.

When integrated seamlessly with Veeam Backup & Replication software, the DR365V appliance unleashes unparalleled potential for comprehensive disaster recovery strategies. This dynamic duo empowers organizations to execute efficient replication processes that not only reduce downtime but also facilitate instant failover scenarios when needed most urgently – effectively minimizing disruptions caused by unforeseen events such as hardware failures or cyberattacks.

The synergy between these two technologies extends beyond mere functionality; it also supports the compliance mandates that are prevalent across industries today. Regulatory frameworks demand stringent measures to protect sensitive information from unauthorized access or exposure during transmission and storage. Both systems address these challenges through encryption built into their architectures, providing end-to-end security across the environments they serve. Vulnerabilities left unchecked can be exploited by attackers, tarnishing reputations and damaging bottom lines.

As our exploration draws to a close, let us reflect on the transformative impact seen at companies that adopt the protective mechanisms offered by the combination of Veeam and the StoneFly DR365V appliance. Together, they redefine what is possible in safeguarding vital corporate resources, fortifying defenses against threats that could undermine years of hard work. Organizations equipped this way can look to the future with confidence, knowing they are prepared to withstand the challenges that arise. The digital age demands vigilance and preparedness from those who want to thrive in competitive, ever-changing markets.

Conclusion:

In conclusion, leveraging Veeam Backup & Replication alongside StoneFly’s DR365V appliance offers strong protection for businesses aiming to secure their critical data assets against emerging threats in today's complex technological landscape. The combination provides peace of mind and supports operational continuity even amid adversity, helping organizations harness the full potential of modern innovations while remaining committed to delivering high standards to customers and stakeholders alike. New opportunities await those ready to turn their visions into reality.

What Is a Network Packet Broker, and Why Do You Need It in IT and Telecom Industries?

https://www.khushicomms.com/category/network-packet-brokers

Introduction

In today's digital world, IT and telecom industries handle vast amounts of data traffic daily. Managing and monitoring network traffic efficiently is critical for security, performance, and compliance. A Network Packet Broker (NPB) plays a vital role in optimizing network monitoring by intelligently distributing and filtering traffic. This article explores what a Network Packet Broker is, its key features, and why it is essential in IT and telecom industries.

What Is a Network Packet Broker?

A Network Packet Broker (NPB) is a device or software solution that aggregates, filters, and distributes network traffic to the appropriate monitoring, security, and analytics tools. It acts as an intermediary between network infrastructure components and monitoring tools, ensuring that the right data reaches the right destination efficiently.

Key Functions of a Network Packet Broker

Traffic Aggregation – Collects data from multiple network links and consolidates it into a single stream.

Traffic Filtering – Filters network packets based on predefined rules, ensuring that only relevant data is forwarded.

Load Balancing – Distributes network traffic evenly across monitoring and security tools.

Packet Deduplication – Eliminates redundant packets to optimize data processing.

Protocol Stripping – Removes encapsulation headers (such as VLAN tags, MPLS labels, or tunnel headers) so that monitoring and security tools can analyze the inner packets.

Data Masking – Protects sensitive data before sending it to monitoring tools.
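The functions listed above can be sketched as a small software pipeline that filters, deduplicates, and load-balances packets. This is a simplified illustration, not how any particular NPB product works: the `Packet` class, the tool names, and the port-based filter rule are all hypothetical, and a real broker would usually deduplicate only within a short time window rather than across all traffic.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Hypothetical, heavily simplified packet record."""
    src: str
    dst: str
    dst_port: int
    payload: bytes


def flow_key(pkt: Packet) -> bytes:
    """Identify the flow a packet belongs to."""
    return f"{pkt.src}|{pkt.dst}|{pkt.dst_port}".encode()


def fingerprint(pkt: Packet) -> str:
    """Hash of flow and payload, used to spot exact duplicate packets."""
    return hashlib.sha256(flow_key(pkt) + b"|" + pkt.payload).hexdigest()


def pick_tool(pkt: Packet, tools: list) -> str:
    """Flow-aware load balancing: packets of one flow go to one tool."""
    digest = hashlib.sha256(flow_key(pkt)).digest()
    return tools[int.from_bytes(digest[:4], "big") % len(tools)]


def broker(packets, allowed_ports, tools):
    """Filter by destination port, drop duplicates, distribute to tools."""
    seen = set()
    out = {tool: [] for tool in tools}
    for pkt in packets:
        if pkt.dst_port not in allowed_ports:  # traffic filtering
            continue
        fp = fingerprint(pkt)
        if fp in seen:                         # packet deduplication
            continue
        seen.add(fp)
        out[pick_tool(pkt, tools)].append(pkt)  # load balancing
    return out


p1 = Packet("10.0.0.1", "10.0.0.9", 443, b"hello")
p2 = Packet("10.0.0.2", "10.0.0.9", 22, b"ssh")
p3 = Packet("10.0.0.1", "10.0.0.9", 443, b"world")
result = broker([p1, p1, p2, p3], allowed_ports={443}, tools=["ids-1", "ids-2"])
forwarded = sum(len(pkts) for pkts in result.values())
```

Here the duplicate copy of `p1` and the port 22 packet are dropped, and the two remaining packets land on the same tool because they share a flow. Hashing on the flow rather than rotating round-robin keeps a conversation together, which matters for tools that reassemble sessions.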

Why Do IT and Telecom Industries Need a Network Packet Broker?

Enhanced Network Visibility

NPBs provide deep insight into network traffic by collecting and forwarding packets to monitoring tools. This ensures that IT teams can detect performance issues, troubleshoot problems, and maintain high service quality.

Improved Security

With cyber threats on the rise, organizations need effective security monitoring. NPBs ensure security tools receive relevant traffic, enabling real-time threat detection and response.

Efficient Traffic Management

By filtering and prioritizing data, NPBs reduce the load on security and monitoring tools, improving their efficiency and extending their lifespan.

Cost Optimization

Instead of overloading expensive monitoring tools with irrelevant traffic, NPBs optimize data distribution, reducing the need for additional hardware and lowering operational costs.

Regulatory Compliance

Many industries must comply with data protection regulations such as GDPR, HIPAA, and PCI DSS. NPBs help organizations meet these requirements by ensuring only necessary data is processed and sensitive information is masked when needed.
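As a rough illustration of data masking, the sketch below hides all but the last four digits of any 16-digit number found in a packet payload before it is forwarded. The pattern and the `mask_payload` name are assumptions for the example; real compliance rules (for instance under PCI DSS) are more detailed than a single regular expression.

```python
import re

# Assumption: a bare run of exactly 16 digits is treated as a card number.
CARD_PATTERN = re.compile(rb"\b\d{12}(\d{4})\b")


def mask_payload(payload: bytes) -> bytes:
    """Replace the first 12 digits of each 16-digit number with asterisks."""
    return CARD_PATTERN.sub(lambda m: b"*" * 12 + m.group(1), payload)


masked = mask_payload(b"card=4111111111111111&amt=10")
```

The monitoring tool still sees that a card number was present and can correlate on its last four digits, but the full value never leaves the broker.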

Scalability for Growing Networks

As networks expand, traffic increases. NPBs enable IT and telecom companies to scale their network monitoring capabilities without performance degradation.
