# Enable Encryption for Microsoft SQL Server Connections

Revolutionize Your Business with SQL Server 2022's Cloud Connectivity

Empowering Innovation: Unlocking the Future with SQL Server 2022

In today’s rapidly evolving digital landscape, staying ahead requires leveraging cutting-edge technology that seamlessly integrates with the cloud. SQL Server 2022 stands at the forefront of this revolution, offering a powerful, cloud-connected database platform designed to transform your business operations and unlock new opportunities. With its innovative features and enhanced security, SQL Server 2022 provides a comprehensive solution for modern enterprises aiming to harness the full potential of their data.

One of the most compelling aspects of SQL Server 2022 is its integration with Azure, Microsoft's cloud platform. This integration enables businesses to build hybrid environments that combine on-premises infrastructure with cloud capabilities. Two examples:

- The link feature for Azure SQL Managed Instance replicates databases to Azure for disaster recovery and read-only workloads.
- Azure Synapse Link for SQL replicates operational data to Azure Synapse Analytics in near real time, so analytical queries do not compete with the production workload.

The platform's built-in query intelligence helps databases perform well with less manual tuning. Intelligent query processing features, such as parameter sensitive plan optimization and memory grant feedback, adapt execution plans to observed workload patterns. This can deliver faster results and lower resource consumption. Your team can then spend more time on strategic initiatives and less on troubleshooting performance issues, accelerating your digital transformation journey.

Security remains a top priority in SQL Server 2022, which introduces ledger, a feature that provides cryptographic verification of data integrity. Ledger makes data tamper-evident rather than tamper-proof. Changes to ledger tables are recorded in a chain of cryptographic hashes, so any tampering can be detected by verifying the database against previously saved digests. This is a critical requirement in industries such as finance, healthcare, and government. Combined with encryption and role-based access controls, SQL Server 2022 provides a fortified environment for safeguarding sensitive information against evolving cyber threats.
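The hash-chaining idea behind ledger can be illustrated with a minimal Python sketch. This is a conceptual model only. It assumes that each block's SHA-256 hash covers its rows plus the previous block's hash. SQL Server's actual ledger format differs, and in SQL Server you verify a database with the `sys.sp_verify_database_ledger` stored procedure rather than with code like this. The function names below are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # arbitrary starting value for the first block (assumption)


def block_hash(prev_hash: str, rows: List[Dict]) -> str:
    """Hash a block's rows together with the previous block's hash."""
    payload = json.dumps({"prev": prev_hash, "rows": rows}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(blocks: List[List[Dict]]) -> List[str]:
    """Return one hash per block; each hash depends on every block before it."""
    hashes = []
    prev = GENESIS_HASH
    for rows in blocks:
        prev = block_hash(prev, rows)
        hashes.append(prev)
    return hashes


def verify_chain(blocks: List[List[Dict]], saved_digest: str) -> bool:
    """Recompute the chain and compare its last hash with a digest stored elsewhere."""
    hashes = build_chain(blocks)
    return bool(hashes) and hashes[-1] == saved_digest


blocks = [
    [{"account": 1, "balance": 100}],
    [{"account": 1, "balance": 80}],
]
digest = build_chain(blocks)[-1]      # keep this digest outside the database
print(verify_chain(blocks, digest))   # True
blocks[0][0]["balance"] = 1000        # rewrite history in the first block
print(verify_chain(blocks, digest))   # False
```

Every hash depends on all earlier blocks, so altering any historical row changes the final hash. For the same reason, the saved digest has to live somewhere an attacker cannot also rewrite.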

Furthermore, SQL Server 2022 simplifies data integration with support for various data sources, formats, and platforms. Its data virtualization capabilities, built on PolyBase, can query CSV, Parquet, and Delta files in S3-compatible object storage without first copying the data into the database. The platform is versatile enough for comprehensive data management strategies: it can consolidate data lakes, integrate with business intelligence tools, and support real-time analytics. This connectivity helps organizations derive actionable insights faster and make better-informed decisions.

Businesses seeking to optimize their data infrastructure should also weigh the cost of licensing options, such as the SQL Server 2022 Standard edition license price. Investing in SQL Server 2022 enables organizations not only to future-proof their operations but also to take advantage of the cloud's agility and intelligence.

In conclusion, SQL Server 2022 is more than just a database; it’s a strategic asset that propels your business into the cloud-connected era. By harnessing its innovative features, enhanced security, and seamless integration capabilities, your organization can achieve greater efficiency, scalability, and resilience. Embrace the future today with SQL Server 2022 and turn your data into your most valuable competitive advantage.

Mastering Power Apps: A Step-by-Step Guide for 2025

In today’s digital transformation era, businesses need custom applications to improve productivity and efficiency. Microsoft Power Apps enables users to build low-code applications that integrate with Microsoft 365, Dynamics 365, and other services. Whether you’re a beginner or an experienced developer, this guide will help you master Power Apps in 2025 with best practices and step-by-step instructions.

What is Power Apps?

Power Apps is a low-code application development platform by Microsoft that allows users to create business applications without extensive coding. It offers three types of apps:

Canvas Apps – Highly customizable apps with a drag-and-drop interface.

Model-Driven Apps – Apps based on Microsoft Dataverse for structured data-driven applications.

Power Pages – Used for building external-facing web portals.

By mastering Power Apps, you can digitize business processes, streamline workflows, and create applications that connect with multiple data sources.

Getting Started with Power Apps

1. Set up Your Power Apps Account

To start building apps, sign in to Power Apps with your Microsoft 365 account. If you don’t have an account, you can start with a free trial.

2. Understanding the Power Apps Interface

When you log in, you will see:

Home Dashboard – Quick access to apps, templates, and learning resources.

Apps Section – Where you create and manage your applications.

Data Section – Connect to data sources like SharePoint, SQL, and Excel.

Flows – Integrate with Power Automate to add automation.

3. Choosing the Right App Type

Use Canvas Apps if you want full design control.

Use Model-Driven Apps for structured data and predefined components.

Use Power Pages if you need an external-facing portal.

Step-by-Step Guide to Building Your First Power App

Step 1: Choose a Template or Start from Scratch

Power Apps provides pre-built templates that help you get started quickly. Alternatively, you can start from a blank canvas.

Step 2: Connect to Data Sources

Power Apps integrates with many data sources and services, such as:

SharePoint

Microsoft Dataverse

SQL Server

OneDrive & Excel

Power Automate

Step 3: Design Your App

Use the drag-and-drop builder to add elements such as:

Text fields

Buttons

Dropdowns

Galleries

Step 4: Add Logic and Automation

You can use Power Fx, a simple formula-based language, to add logic to your app. Additionally, integrate with Power Automate to automate workflows, such as sending emails or notifications.

Step 5: Test and Publish Your App

To test and release your app:

Use preview mode to test functionality.

Publish the app for use on desktop and mobile devices.

Share it with users in your organization.

Best Practices for Mastering Power Apps

1. Use Microsoft Dataverse for Scalability

For large-scale applications, use Dataverse instead of Excel or SharePoint to handle structured data efficiently.

2. Optimize Performance

Limit the number of data connections.

Use delegation-friendly functions.

Minimize on-premises data gateway usage for better speed.
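The effect of delegation can be simulated in a few lines. This is Python, not Power Fx. It is a conceptual model that assumes the documented Power Apps behavior:

- A delegable query is evaluated by the data source over all records.
- A non-delegable query is applied only to the first N records the app retrieves. N is the data row limit, 500 by default and configurable up to 2,000.

The function names are hypothetical.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]

DATA_ROW_LIMIT = 500  # Power Apps default data row limit (configurable up to 2,000)


def delegated_filter(source: List[Row], predicate: Callable[[Row], bool]) -> List[Row]:
    """Delegable query: the data source evaluates the filter over every row."""
    return [row for row in source if predicate(row)]


def local_filter(source: List[Row], predicate: Callable[[Row], bool],
                 row_limit: int = DATA_ROW_LIMIT) -> List[Row]:
    """Non-delegable query: only the first `row_limit` rows reach the app, which then filters locally."""
    return [row for row in source[:row_limit] if predicate(row)]


def is_open(row: Row) -> bool:
    return row["status"] == "Open"


# 1,000 orders; every 10th one is "Open".
orders = [{"id": i, "status": "Open" if i % 10 == 0 else "Closed"} for i in range(1000)]

print(len(delegated_filter(orders, is_open)))  # 100
print(len(local_filter(orders, is_open)))      # 50: open orders past row 500 are silently missed
```

The non-delegable version produces no error, only an incomplete result. That is why delegation warnings in the editor deserve attention once a data source grows past the row limit.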

3. Enhance Security

Implement role-based access control (RBAC).

Use environment security policies.

Encrypt sensitive data.

4. Leverage Power Automate for Workflow Automation

Power Automate helps automate repetitive tasks like:

Approvals and notifications

Data synchronization

Email automation

Advanced Features to Explore in 2025

AI Builder

Leverage AI-powered automation by integrating AI models to analyze images, text, and business data.

Power Apps Portals (Now Power Pages)

Build external-facing applications for customers, vendors, and partners with Power Pages.

Integration with Power BI

Use Power BI dashboards inside Power Apps to provide real-time analytics and insights.

Conclusion

Mastering Power Apps in 2025 will give you the ability to build business applications, automate workflows, and improve productivity without deep coding knowledge. By following this guide, you’ll be able to create custom Power Apps solutions that enhance efficiency and innovation in your organization. Start today and become proficient in Power Apps, Power Automate, and Dataverse!

Trending Courses: Generative AI, Prompt Engineering,

Visualpath stands out as the leading and best institute for software online training in Hyderabad. We provide Power Apps and Power Automate Training. You will get the best course at an affordable cost.

Call/WhatsApp – +91-7032290546

Visit: https://visualpath.in/online-powerapps-training.html

Innovations in Data Orchestration: How Azure Data Factory is Adapting

Introduction

As businesses generate and process vast amounts of data, the need for efficient data orchestration has never been greater. Data orchestration involves automating, scheduling, and managing data workflows across multiple sources, including on-premises, cloud, and third-party services.

Azure Data Factory (ADF) has been a leader in ETL (Extract, Transform, Load) and data movement, and it continues to evolve with new innovations to enhance scalability, automation, security, and AI-driven optimizations.

In this blog, we will explore how Azure Data Factory is adapting to modern data orchestration challenges and the latest features that make it more powerful than ever.

1. The Evolution of Data Orchestration

🚀 Traditional Challenges

Manual data integration between multiple sources

Scalability issues in handling large data volumes

Latency in data movement for real-time analytics

Security concerns in hybrid and multi-cloud setups

🔥 The New Age of Orchestration

With advancements in cloud computing, AI, and automation, modern data orchestration solutions like ADF now provide:

✅ Serverless architecture for scalability

✅ AI-powered optimizations for faster data pipelines

✅ Real-time and event-driven data processing

✅ Hybrid and multi-cloud connectivity

2. Key Innovations in Azure Data Factory

✅ 1. Metadata-Driven Pipelines for Dynamic Workflows

ADF now supports metadata-driven data pipelines, allowing organizations to:

Automate data pipeline execution based on dynamic configurations

Reduce redundancy by using parameterized pipelines

Improve reusability and maintenance of workflows
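In ADF, this pattern is usually built with two activities:

- A Lookup activity reads a control table.
- A ForEach activity runs one parameterized Copy activity per row of that table.

The Python sketch below mimics that control flow outside ADF. The control-table columns and the `copy_table` function are hypothetical stand-ins, not ADF APIs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CopyTask:
    """One row of a hypothetical control table."""
    source_table: str
    sink_path: str
    enabled: bool = True
    watermark_column: str = ""  # empty string means a full load


def copy_table(task: CopyTask) -> str:
    """Stand-in for a parameterized Copy activity; returns a description of the run."""
    mode = f"incremental on {task.watermark_column}" if task.watermark_column else "full load"
    return f"copy {task.source_table} -> {task.sink_path} ({mode})"


def run_pipeline(control_table: List[CopyTask]) -> List[str]:
    """Lookup + ForEach: one generic copy step driven entirely by metadata."""
    return [copy_table(task) for task in control_table if task.enabled]


control_table = [
    CopyTask("dbo.Customers", "raw/customers/"),
    CopyTask("dbo.Orders", "raw/orders/", watermark_column="ModifiedDate"),
    CopyTask("dbo.Legacy", "raw/legacy/", enabled=False),
]

for line in run_pipeline(control_table):
    print(line)
```

With this design, onboarding a new table means adding a row to the control table rather than building and deploying another pipeline.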

✅ 2. AI-Powered Performance Optimization

Microsoft has introduced AI-powered recommendations in ADF to:

Suggest best data pipeline configurations

Automatically optimize execution performance

Detect bottlenecks and improve parallelism

✅ 3. Low-Code and No-Code Data Transformations

Mapping Data Flows provide a visual drag-and-drop interface

Wrangling Data Flows allow users to clean data using Power Query

Built-in connectors eliminate the need for custom scripting

✅ 4. Real-Time & Event-Driven Processing

ADF now integrates with Azure Event Grid, Azure Functions, and Azure Stream Analytics, enabling:

Real-time data movement from IoT devices and logs

Trigger-based workflows for automated data processing

Streaming data ingestion into Azure Synapse, Data Lake, or Cosmos DB
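In ADF, this trigger-based pattern is typically a storage event trigger. Event Grid raises a `Microsoft.Storage.BlobCreated` event, and the trigger starts a pipeline only when the blob path matches its `blobPathBeginsWith` and `blobPathEndsWith` filters. ADF then exposes the folder and file name to the pipeline through `@triggerBody().folderPath` and `@triggerBody().fileName`.

The Python sketch below models that matching logic under two simplifying assumptions: a plain `container/folder/file` path and hypothetical class names. It is not ADF code.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class StorageEvent:
    event_type: str  # e.g. "Microsoft.Storage.BlobCreated"
    blob_path: str   # simplified "container/folder/file" path (assumption)


@dataclass
class StorageEventTrigger:
    begins_with: str  # plays the role of blobPathBeginsWith
    ends_with: str    # plays the role of blobPathEndsWith
    event_types: Tuple[str, ...] = ("Microsoft.Storage.BlobCreated",)

    def match(self, event: StorageEvent) -> Optional[Dict[str, str]]:
        """Return pipeline parameters if the event should start a run, else None."""
        if event.event_type not in self.event_types:
            return None
        if not (event.blob_path.startswith(self.begins_with)
                and event.blob_path.endswith(self.ends_with)):
            return None
        folder, _, file_name = event.blob_path.rpartition("/")
        return {"folderPath": folder, "fileName": file_name}


trigger = StorageEventTrigger(begins_with="telemetry/devices/", ends_with=".json")
events = [
    StorageEvent("Microsoft.Storage.BlobCreated", "telemetry/devices/d1.json"),
    StorageEvent("Microsoft.Storage.BlobCreated", "telemetry/devices/d1.csv"),
    StorageEvent("Microsoft.Storage.BlobDeleted", "telemetry/devices/d2.json"),
]
for event in events:
    params = trigger.match(event)
    if params is not None:
        print("start pipeline with", params)
```

Only the first event starts a run. The second has the wrong extension, and the third is a delete rather than a create.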

✅ 5. Hybrid and Multi-Cloud Data Integration

ADF now provides:

Expanded connector support (AWS S3, Google BigQuery, SAP, Databricks)

Enhanced Self-Hosted Integration Runtime for secure on-prem connectivity

Cross-cloud data movement with Azure, AWS, and Google Cloud

✅ 6. Enhanced Security & Compliance Features

Private Link support for secure data transfers

Azure Key Vault integration for credential management

Role-based access control (RBAC) for governance

✅ 7. Auto-Scaling & Cost Optimization Features

Auto-scaling compute resources based on workload

Cost analysis tools for optimizing pipeline execution

Pay-per-use model to reduce costs for infrequent workloads

3. Use Cases of Azure Data Factory in Modern Data Orchestration

🔹 1. Real-Time Analytics with Azure Synapse

Ingesting IoT and log data into Azure Synapse

Using event-based triggers for automated pipeline execution

🔹 2. Automating Data Pipelines for AI & ML

Integrating ADF with Azure Machine Learning

Scheduling ML model retraining with fresh data

🔹 3. Data Governance & Compliance in Financial Services

Secure movement of sensitive data with encryption

Using ADF with Microsoft Purview (formerly Azure Purview) for data lineage tracking

🔹 4. Hybrid Cloud Data Synchronization

Moving data from on-prem SAP, SQL Server, and Oracle to Azure Data Lake

Synchronizing multi-cloud data between AWS S3 and Azure Blob Storage

4. Best Practices for Using Azure Data Factory in Data Orchestration

✅ Leverage Metadata-Driven Pipelines for dynamic execution

✅ Enable Auto-Scaling for better cost and performance efficiency

✅ Use Event-Driven Processing for real-time workflows

✅ Monitor & Optimize Pipelines using Azure Monitor & Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

5. Conclusion

Azure Data Factory continues to evolve with innovations in AI, automation, real-time processing, and hybrid cloud support. By adopting these modern orchestration capabilities, businesses can:

Reduce manual efforts in data integration

Improve data pipeline performance and reliability

Enable real-time insights and decision-making

As data volumes grow and cloud adoption increases, Azure Data Factory’s future-ready approach ensures that enterprises stay ahead in the data-driven world.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Career Path and Growth Opportunities for Integration Specialists

The Growing Demand for Integration Specialists

Introduction

In today’s interconnected digital landscape, businesses rely on seamless data exchange and system connectivity to optimize operations and improve efficiency. Integration specialists play a crucial role in designing, implementing, and maintaining integrations between various software applications, ensuring smooth communication and workflow automation. With the rise of cloud computing, APIs, and enterprise applications, integration specialists are essential for driving digital transformation.

What is an Integration Specialist?

An Integration Specialist is a professional responsible for developing and managing software integrations between different systems, applications, and platforms. They design workflows, troubleshoot issues, and ensure data flows securely and efficiently across various environments. Integration specialists work with APIs, middleware, and cloud-based tools to connect disparate systems and improve business processes.

Types of Integration Solutions

Integration specialists work with different types of solutions to meet business needs:

API Integrations

Connects different applications via Application Programming Interfaces (APIs).

Enables real-time data sharing and automation.

Examples: RESTful APIs, SOAP APIs, GraphQL.
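Much of the day-to-day work in an API integration is reshaping one system's payload into the format another system expects. The Python sketch below shows that step for a JSON payload. The CRM and ERP field names in `FIELD_MAP` are hypothetical. A real integration would also call the source and target APIs and handle authentication.

```python
import json
from typing import Any, Dict

# Hypothetical mapping from a CRM's contact fields to an ERP's customer fields.
FIELD_MAP = {
    "firstName": "first_name",
    "lastName": "last_name",
    "email": "email_address",
}


def transform_contact(payload: str) -> Dict[str, Any]:
    """Parse a JSON payload from the source API and reshape it for the target API."""
    source = json.loads(payload)
    missing = [field for field in FIELD_MAP if field not in source]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    target = {dst: source[src] for src, dst in FIELD_MAP.items()}
    # Normalize the email so both systems match records on the same value.
    target["email_address"] = target["email_address"].strip().lower()
    return target


incoming = '{"firstName": "Ada", "lastName": "Lovelace", "email": " Ada@Example.com "}'
print(transform_contact(incoming))
# {'first_name': 'Ada', 'last_name': 'Lovelace', 'email_address': 'ada@example.com'}
```

Rejecting incomplete payloads with a clear error, instead of forwarding partial records, makes integration failures easier to spot during monitoring.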

Cloud-Based Integrations

Connects cloud applications like SaaS platforms.

Uses integration platforms as a service (iPaaS).

Examples: Zapier, Workato, MuleSoft, Boomi (formerly Dell Boomi).

Enterprise System Integrations

Integrates large-scale enterprise applications.

Connects ERP (Enterprise Resource Planning), CRM (Customer Relationship Management), and HR systems.

Examples: Salesforce, SAP, Oracle, Microsoft Dynamics.

Database Integrations

Ensures seamless data flow between databases.

Uses ETL (Extract, Transform, Load) processes for data synchronization.

Examples: SQL Server Integration Services (SSIS), Talend, Informatica.
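Tools such as SSIS, Talend, and Informatica implement ETL at scale, but the three stages themselves are simple. The Python sketch below uses only the standard library (`csv` and `sqlite3`). It extracts rows from a CSV string, cleans them, and loads them into a table. The sample data and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,USD
2,5.00,USD
3,,usd
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Extract: read rows from a CSV source (an in-memory string here)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> List[Tuple[int, float, str]]:
    """Transform: drop incomplete rows, de-duplicate on order_id, normalize types and codes."""
    seen = set()
    clean = []
    for row in rows:
        if not row["amount"] or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        clean.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return clean


def load(rows: List[Tuple[int, float, str]], conn: sqlite3.Connection) -> None:
    """Load: insert the cleaned rows, replacing any existing row with the same order_id."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 19.99, 'USD'), (2, 5.0, 'USD')]
```

Because the load step replaces rows by key, running the job twice leaves the table unchanged. This idempotency is a useful property for scheduled synchronization.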

Key Stages of System Integration

Requirement Analysis & Planning

Identify business needs and integration goals.

Analyze existing systems and data flow requirements.

Choose the right integration approach and tools.

Design & Architecture

Develop a blueprint for the integration solution.

Select API frameworks, middleware, or cloud services.

Ensure scalability, security, and compliance.

Development & Implementation

Build APIs, data connectors, and automation workflows.

Implement security measures (encryption, authentication).

Conduct performance optimization and data validation.

Testing & Quality Assurance

Perform functional, security, and performance testing.

Identify and resolve integration errors and data inconsistencies.

Conduct user acceptance testing (UAT).

Deployment & Monitoring

Deploy integration solutions in production environments.

Monitor system performance and error handling.

Ensure smooth data synchronization and process automation.

Maintenance & Continuous Improvement

Provide ongoing support and troubleshooting.

Optimize integration workflows based on feedback.

Stay updated with new technologies and best practices.

Best Practices for Integration Success

✔ Define clear integration objectives and business needs.

✔ Use secure and scalable API frameworks.

✔ Optimize data transformation processes for efficiency.

✔ Implement robust authentication and encryption.

✔ Conduct thorough testing before deployment.

✔ Monitor and update integrations regularly.

✔ Stay updated with emerging iPaaS and API technologies.
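One widely used way to implement robust authentication between integrated systems, for example with webhooks, is an HMAC signature. The sender signs the raw request body with a shared secret, and the receiver recomputes the signature before trusting the message. The Python sketch below uses the standard `hmac` module. The secret and payloads are hypothetical, and each vendor defines its own header name and signature format.

```python
import hashlib
import hmac


def sign(payload: bytes, secret: bytes) -> str:
    """Sender side: compute a hex HMAC-SHA256 signature over the raw request body."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, signature: str, secret: bytes) -> bool:
    """Receiver side: recompute the signature and compare it in constant time."""
    expected = sign(payload, secret)
    return hmac.compare_digest(expected, signature)


secret = b"shared-secret-from-a-vault"  # hypothetical; load real secrets from a vault, never hard-code them
body = b'{"orderId": 42, "status": "shipped"}'
signature = sign(body, secret)          # typically sent in a request header

print(verify(body, signature, secret))                                      # True
print(verify(b'{"orderId": 42, "status": "refunded"}', signature, secret))  # False
```

`hmac.compare_digest` is used instead of `==` so that comparison time does not leak how many characters of a forged signature were correct.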

Conclusion

Integration specialists are at the forefront of modern digital ecosystems, ensuring seamless connectivity between applications and data sources. Whether working with cloud platforms, APIs, or enterprise systems, a well-executed integration strategy enhances efficiency, security, and scalability. Businesses that invest in robust integration solutions gain a competitive edge, improved automation, and streamlined operations.

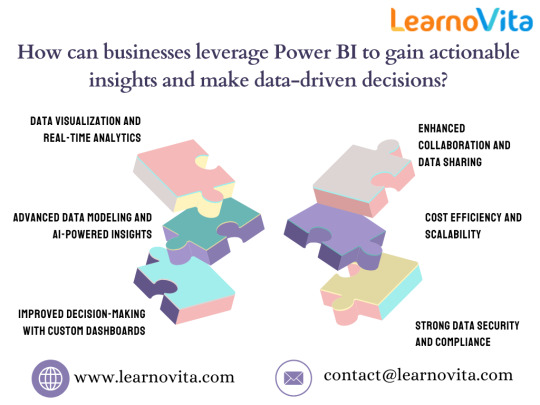

Navigate the Future of Data Visualization with Power BI

Introduction

In the digital age, data is one of the most valuable assets for businesses. However, raw data is often complex, unstructured, and difficult to interpret. To extract meaningful insights, businesses need robust data visualization tools. Microsoft Power BI is a game-changer in this domain, enabling organizations to transform raw data into visually appealing, actionable insights.

This article explores the power of Power BI, its capabilities, and how businesses can leverage it to navigate the future of data visualization effectively.

Understanding Power BI

Power BI is a business intelligence (BI) tool developed by Microsoft that helps users visualize data and share insights across an organization. It integrates seamlessly with multiple data sources and provides powerful analytics and reporting functionalities.

Key Features of Power BI:

Interactive Dashboards: Create compelling, dynamic dashboards that allow users to explore data intuitively.

Data Connectivity: Connect to a wide range of data sources, including databases, cloud services, and Excel files.

AI-powered Insights: Utilize artificial intelligence (AI) to detect patterns, forecast trends, and gain deeper insights.

Custom Visualizations: Design personalized reports with a variety of visualization options, including charts, graphs, and maps.

Real-time Analytics: Monitor real-time data streams to make informed decisions promptly.

Cloud-based and On-premises Solutions: Access data from anywhere through the cloud-based Power BI service, or deploy reports on-premises with Power BI Report Server.

The Growing Importance of Data Visualization

As businesses become more data-driven, the need for effective data visualization keeps growing. Organizations that use visualization tools like Power BI gain a competitive edge by making faster, better-informed decisions.

Benefits of Data Visualization:

Simplifies Complex Data: Converts raw data into understandable visual representations.

Enhances Decision-making: Allows decision-makers to identify trends, opportunities, and risks easily.

Boosts Collaboration: Enables teams to work together effectively by sharing interactive reports and dashboards.

Tracks Key Performance Indicators (KPIs): Monitors business performance in real time.

Enhances Predictive Analytics: Uses AI and machine learning to forecast future trends and outcomes.

How Power BI is Revolutionizing Data Visualization

1. Seamless Data Integration

Power BI supports a vast range of data sources, including Microsoft Azure, SQL databases, Google Analytics, Salesforce, and more. With its user-friendly interface, businesses can pull data from multiple platforms and consolidate it into a single dashboard for a comprehensive view.

2. AI-powered Analytics

Power BI incorporates AI-driven analytics, allowing users to generate automatic insights using natural language queries and advanced data models. Features like Power BI’s AI visualizations and Q&A function make data exploration more intuitive and accessible.

3. Customizable Dashboards and Reports

With Power BI, users can create personalized dashboards with drag-and-drop functionality. Whether it’s a sales report, financial forecast, or customer segmentation analysis, Power BI offers a variety of visualization tools to present data in an engaging manner.

4. Mobile Accessibility

Power BI’s mobile app ensures that business leaders can access reports and dashboards from anywhere, providing flexibility in decision-making and enhancing operational efficiency.

5. Security and Compliance

Data security is a top priority for businesses, and Power BI provides enterprise-grade security features, including role-based access controls, encryption, and compliance with GDPR and other industry regulations.

Best Practices for Effective Data Visualization with Power BI

1. Define Clear Objectives

Before creating a dashboard, identify key business goals and the specific data points required to measure performance.

2. Choose the Right Visuals

Select appropriate visualization types for different data sets. For example, line charts are best for trend analysis, while pie charts work well for proportion comparisons.

3. Keep it Simple

Avoid cluttering dashboards with too much information. Focus on key insights and maintain a clean, user-friendly design.

4. Use Real-time Data

Leverage Power BI’s real-time analytics capabilities to ensure decision-makers have access to the most up-to-date information.

5. Ensure Data Accuracy

Validate data sources and apply necessary data transformations to maintain accuracy and reliability in reports.
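In Power BI, this kind of checking is usually done in Power Query before data reaches a report. As a tool-neutral illustration, the Python sketch below flags missing required values and duplicate keys in a small dataset. The column names and rules are hypothetical.

```python
from typing import Dict, List


def validate(rows: List[Dict], key: str, required: List[str]) -> List[str]:
    """Return human-readable issues found in a dataset before it feeds a report."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        # Required columns must be present and non-empty.
        for col in required:
            if row.get(col) in (None, ""):
                issues.append(f"row {i}: missing {col}")
        # The key column must be unique, or totals will be double-counted.
        k = row.get(key)
        if k in seen:
            issues.append(f"row {i}: duplicate {key} {k}")
        seen.add(k)
    return issues


sales = [
    {"order_id": 1, "region": "North", "revenue": 1200},
    {"order_id": 2, "region": "", "revenue": 800},
    {"order_id": 2, "region": "South", "revenue": 800},
]
for issue in validate(sales, key="order_id", required=["region", "revenue"]):
    print(issue)
# row 1: missing region
# row 2: duplicate order_id 2
```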

The Future of Data Visualization with Power BI

As technology evolves, Power BI continues to integrate advanced capabilities such as:

Augmented Analytics: AI-powered automation for deeper data insights.

IoT and Big Data Integration: Seamless connection with Internet of Things (IoT) devices and massive datasets.

Enhanced Collaboration Features: Improved real-time collaboration tools for data analysts and business teams.

Advanced Machine Learning Models: AI-driven predictions for business growth and optimization.

Conclusion

Microsoft Power BI is reshaping the way businesses analyze and visualize data. With its AI-driven analytics, real-time capabilities, and seamless integration, Power BI empowers organizations to navigate the future of data visualization with confidence. By leveraging Power BI's advanced features, businesses can unlock valuable insights, drive innovation, and make data-driven decisions that propel them ahead of the competition. Whether you are a small business or a large enterprise, investing in Power BI can transform the way you understand and use your data.

Windows GPU Hosting Servers by Cloudminister Technologies

Cloudminister Technologies GPU Hosting Server for Windows

In today's data-driven world, businesses and developers need more than conventional hosting. Workloads such as artificial intelligence (AI), machine learning (ML), and large-scale data processing demand high-performance computing that standard CPUs cannot deliver efficiently. Cloudminister Technologies' GPU hosting servers are designed for exactly these workloads.

We will examine GPU hosting servers on Windows from Cloudminister Technologies' point of view in this comprehensive guide, going over their features, benefits, and reasons for being the best option for your company.

A GPU Hosting Server: What Is It?

A GPU hosting server is a dedicated server equipped with Graphics Processing Units (GPUs) for high-performance parallel computing. A CPU has a relatively small number of powerful cores optimized for sequential work. A GPU has thousands of simpler cores that apply the same operation to many pieces of data at once. This makes GPUs ideal for applications that require real-time processing and large-scale data computations.

Cloudminister Technologies provides cutting-edge GPU hosting solutions to companies that deal with:

AI and ML Model Training: Faster training and iteration on machine learning models.

Data Analytics: Rapid processing of large datasets to produce actionable insights.

Video Processing and 3D Rendering: Smooth rendering for multimedia, animation, and gaming applications.

Blockchain Mining: Strong GPU capabilities for cryptocurrency mining.

Why Opt for GPU Hosting from Cloudminister Technologies?

1. Hardware with High Performance

Cloudminister Technologies builds its servers on the newest NVIDIA and AMD GPUs, designed to give resource-intensive applications exceptional speed and performance.

Important Points to Remember:

High-end GPU variants are available for quicker processing.

Dedicated GPU servers that only your apps can use; there is no resource sharing, guaranteeing steady performance.

Parallel processing optimization enables improved output and quicker work completion.

2. Compatibility with Windows OS

For companies that depend on Windows apps, Cloudminister's GPU hosting servers are a great option because they completely support Windows-based environments.

The Benefits of Windows Hosting with Cloudminister

Smooth Integration: Utilize programs developed using Microsoft technologies, like PowerShell, Microsoft SQL Server, and ASP.NET, without encountering compatibility problems.

Developer-Friendly: Supports well-known development tools, including Visual Studio, .NET Core, and DirectX, so developers can work in a familiar environment.

Licensing Management: To ensure compliance and save time, Cloudminister handles Windows licensing.

3. Scalability

Cloudminister Technologies' infrastructure is built to scale, so companies can grow without running into hardware constraints.

Features of Scalability:

Flexible Resource Allocation: Adjust your storage, RAM, and GPU power according to task demands.

On-Demand Scaling: Only pay for what you use; scale back when not in use and increase resources during periods of high usage.

Custom Solutions: Custom GPU configurations and enterprise-level customization according to particular business requirements.

4. Robust Security

Cloudminister Technologies places a high premium on security. Multiple layers of protection are incorporated into their GPU hosting solutions to guarantee the safety and security of your data.

Among the security features are:

DDoS Protection: Defends against Distributed Denial of Service (DDoS) attacks that could disrupt your server.

Frequent Backups: Automatic backups for fast data recovery in an emergency.

Secure Data Transfer: End-to-end encryption protects data as it moves across networks.

Advanced Firewalls: Block unauthorized access and malicious network traffic.

5. 24/7 Technical Assistance

Cloudminister Technologies provides round-the-clock technical assistance to guarantee prompt and effective resolution of any problems. For help with server maintenance, configuration, and troubleshooting, their knowledgeable staff is always on hand.

Support Services:

Live Monitoring: Ongoing observation to proactively identify and address problems.

Dedicated Account Managers: Tailored assistance for business customers with particular technical needs.

Managed Services: Cloudminister provides fully managed hosting services, including upkeep and upgrades, for customers who require a hands-off option.

Advantages of Cloudminister Technologies Windows-Based GPU Hosting

There are numerous commercial benefits to using Cloudminister to host GPU servers on Windows.

User-Friendly Interface: The Windows GUI simplifies server management and lowers the learning curve for IT staff.

Broad Compatibility: Complete support for Windows-specific frameworks and applications, including the Microsoft Azure SDK, DirectX, and the .NET Framework.

Optimized Performance: Windows GPU drivers and updates keep the hardware running at its best and reduce downtime.

GPU Hosting Use Cases at Cloudminister Technologies

Cloudminister's GPU hosting servers are made to specifically cater to the demands of different sectors.

Machine Learning and Artificial Intelligence: Powerful GPU servers let you develop and train machine learning models more quickly. Ideal for PyTorch, Keras, TensorFlow, and other deep learning frameworks.

Media and Entertainment: GPU servers provide the processing capacity required for VFX creation, 3D modeling, animation, and video rendering. They support programs like Blender, Autodesk Maya, and Adobe After Effects.

Big Data Analytics: Process enormous amounts of data and gain real-time insights with tools like Apache Hadoop and Apache Spark.

Game Development: Create and test games on strong GPUs that handle 3D rendering, simulations, and integration with game engines like Unreal Engine and Unity.

Flexible Pricing and Plans

Cloudminister Technologies provides adjustable pricing structures to suit companies of all sizes:

Pay-as-you-go: You are charged only for the resources you use, which helps you manage spending efficiently.

Custom Packages: Hosting packages designed specifically for businesses with certain needs in terms of GPU, RAM, and storage.

Free Trials: Before making a long-term commitment, test the service risk-free.

Reliable Support and Services

To guarantee optimum server performance, Cloudminister Technologies provides a comprehensive range of support services:

24/7 Monitoring: Proactive server monitoring to reduce downtime and maximize uptime.

Automated Backups: Regular backups with simple restore options to prevent data loss.

Managed Services: Professional management of the hosting environment for companies that want a full-service, outsourced solution.

In conclusion

Cloudminister Technologies' GPU hosting servers are the ideal choice if your company relies on high-performance computing for AI, big data, rendering, or simulation. Their Windows-compatible GPU servers offer the scalability, security, and speed required to manage even the most resource-intensive workloads.

Cloudminister Technologies is the best partner for companies looking for dependable GPU hosting solutions because of its flexible pricing, strong support, and state-of-the-art technology.

To find out how Cloudminister Technologies' GPU hosting services may improve your company's operations, get in contact with them right now.

Visit: www.cloudminister.com

Qlik SaaS: Transforming Data Analytics in the Cloud

In the era of digital transformation, businesses need fast, scalable, and efficient analytics solutions to stay ahead of the competition. Qlik SaaS (Software-as-a-Service) is a cloud-based business intelligence (BI) and data analytics platform that offers advanced data integration, visualization, and AI-powered insights. By leveraging Qlik SaaS, organizations can streamline their data workflows, enhance collaboration, and drive smarter decision-making.

This article explores the features, benefits, and use cases of Qlik SaaS and why it is a game-changer for modern businesses.

What is Qlik SaaS?

Qlik SaaS is the cloud-native version of Qlik Sense, a powerful data analytics platform that enables users to:

Integrate and analyze data from multiple sources

Create interactive dashboards and visualizations

Utilize AI-driven insights for better decision-making

Access analytics anytime, anywhere, on any device

Unlike traditional on-premises solutions, Qlik SaaS eliminates the need for hardware management, allowing businesses to focus solely on extracting value from their data.

Key Features of Qlik SaaS

1. Cloud-Based Deployment

Qlik SaaS runs entirely in the cloud, providing instant access to analytics without requiring software installations or server maintenance.

2. AI-Driven Insights

With Qlik Cognitive Engine, users benefit from machine learning and AI-powered recommendations, improving data discovery and pattern recognition.

3. Seamless Data Integration

Qlik SaaS connects to multiple cloud and on-premises data sources, including:

Databases (Microsoft SQL Server, PostgreSQL, Snowflake)

Cloud storage (Google Drive, OneDrive, AWS S3)

Enterprise applications (Salesforce, SAP, Microsoft Dynamics)

4. Scalability and Performance Optimization

Businesses can scale their analytics operations without worrying about infrastructure limitations. Dynamic resource allocation ensures high-speed performance, even with large datasets.

5. Enhanced Security and Compliance

Qlik SaaS offers enterprise-grade security, including:

Role-based access controls

End-to-end data encryption

Compliance with regulations and standards (GDPR, HIPAA, ISO 27001)

6. Collaborative Data Sharing

Teams can collaborate in real time, share reports, and build custom dashboards to gain deeper insights.

Benefits of Using Qlik SaaS

1. Cost Savings

By adopting Qlik SaaS, businesses eliminate the costs associated with on-premises hardware, software licensing, and IT maintenance. The subscription-based model ensures cost-effectiveness and flexibility.

2. Faster Time to Insights

Qlik SaaS enables users to quickly load, analyze, and visualize data without lengthy setup times. This speeds up decision-making and improves operational efficiency.

3. Increased Accessibility

With cloud-based access, employees can work with data from any location and any device, improving flexibility and productivity.

4. Continuous Updates and Innovations

Unlike on-premises BI solutions that require manual updates, Qlik SaaS receives automatic updates, ensuring users always have access to the latest features.

5. Improved Collaboration

Qlik SaaS fosters better collaboration by allowing teams to share dashboards, reports, and insights in real time, driving a data-driven culture.

Use Cases of Qlik SaaS

1. Business Intelligence & Reporting

Organizations use Qlik SaaS to track KPIs, monitor business performance, and generate real-time reports.

2. Sales & Marketing Analytics

Sales and marketing teams leverage Qlik SaaS for:

Customer segmentation and targeting

Sales forecasting and pipeline analysis

Marketing campaign performance tracking

3. Supply Chain & Operations Management

Qlik SaaS helps optimize logistics by providing real-time visibility into inventory, production efficiency, and supplier performance.

4. Financial Analytics

Finance teams use Qlik SaaS for:

Budget forecasting

Revenue and cost analysis

Fraud detection and compliance monitoring

Final Thoughts

Qlik SaaS is revolutionizing data analytics by offering a scalable, AI-powered, and cost-effective cloud solution. With its seamless data integration, robust security, and collaborative features, businesses can harness the full power of their data without the limitations of traditional on-premises systems.

As organizations continue their journey towards digital transformation, Qlik SaaS stands out as a leading solution for modern data analytics.

The Power of Power BI: Transforming Data into Action

In today’s digital age, data is one of the most valuable assets for businesses. However, having data alone is not enough—it needs to be transformed into meaningful insights that drive decision-making. This is where Power BI, Microsoft’s business intelligence tool, comes into play. For those looking to enhance their skills, Power BI Online Training & Placement programs offer comprehensive education and job placement assistance, making it easier to master this tool and advance your career.

Power BI enables organizations to visualize data, analyze trends, and make data-driven decisions that lead to business growth. Let’s explore how Power BI can revolutionize the way businesses use data.

1. What is Power BI?

Power BI is a powerful business analytics tool that allows businesses to connect, visualize, and analyze their data from multiple sources. It enables users to create interactive dashboards, reports, and real-time analytics to make informed decisions.

2. Key Features That Make Power BI a Game-Changer

a) Interactive Dashboards and Data Visualization

Power BI turns raw data into stunning, easy-to-understand charts, graphs, and reports. These visuals help businesses quickly identify patterns and make better decisions.

b) Real-Time Data Insights

With Power BI’s real-time analytics, businesses can track sales, customer interactions, and operational metrics as they happen. This allows organizations to react quickly to market changes and optimize strategies instantly.

c) AI-Powered Analytics

Power BI uses artificial intelligence and machine learning to analyze complex data sets and uncover hidden patterns. Features like Power BI Q&A allow users to ask questions in plain language and receive instant, data-driven answers.

d) Seamless Data Integration

Power BI connects to more than 100 data sources, including:

Microsoft Excel, SQL Server, and Azure

Google Analytics, Salesforce, and SAP

Cloud services like AWS and Google Cloud

This makes it easy for businesses to combine data from different platforms for a holistic view of their operations.

e) Enhanced Security and Compliance

Power BI helps keep sensitive business data secure and supports compliance with regulations and standards such as GDPR, HIPAA, and ISO/IEC 27001. Role-based access controls and data encryption add an extra layer of protection.

3. How Power BI Helps Businesses Make Smarter Decisions

a) Data-Driven Strategy Development

With Power BI, businesses can analyze past performance and forecast future trends, helping leaders make strategic, data-backed decisions.

b) Optimizing Operational Efficiency

Companies can monitor supply chains, employee productivity, and business processes in real time, allowing them to identify inefficiencies and improve operations.

c) Improving Customer Insights

By analyzing customer behavior and purchasing patterns, businesses can create personalized marketing strategies, boost customer engagement, and increase sales.

d) Financial Performance and Budgeting

Power BI allows finance teams to track revenues, expenses, and profitability with automated reports, making budget planning and financial forecasting easier.

4. Why Businesses Should Invest in Power BI

Cost-effective – Power BI offers affordable solutions for businesses of all sizes.

User-friendly – No extensive coding knowledge is required to create dashboards and reports.

Scalable – Whether you’re a startup or a large enterprise, Power BI grows with your business needs.

Cloud and on-premises options – Businesses can choose between cloud-based or on-premises deployment for flexibility.

5. Industries Benefiting from Power BI

Retail and e-commerce – Track sales, customer behavior, and product performance.

Healthcare – Monitor patient data, optimize resource allocation, and improve efficiency.

Finance and banking – Analyze financial performance, detect fraud, and manage risk.

Manufacturing – Optimize supply chain operations, reduce costs, and improve productivity.

Education – Track student performance, manage resources, and enhance learning experiences.

Conclusion

Power BI is revolutionizing the way businesses harness the power of data. By turning complex data into actionable insights, businesses can optimize operations, improve customer engagement, and drive revenue growth. Whether you are a small business, enterprise, or entrepreneur, Power BI provides the tools you need to make smarter, data-driven decisions.

What Is Amazon EBS? Features Of Amazon EBS And Pricing

Amazon Elastic Block Store: High-performance, user-friendly block storage at any size

What is Amazon EBS?

Amazon Elastic Block Store (Amazon EBS) provides high-performance, scalable block storage for use with Amazon EC2 instances. With Amazon EBS, you can create and manage the following block storage resources:

Amazon EBS volumes: Storage volumes that you attach to Amazon EC2 instances. Once a volume is attached to an instance, you can use it like a local hard drive, for example to install software and store files.

Amazon EBS snapshots: Durable, point-in-time backups of Amazon EBS volumes. You can back up the data on a volume by taking a snapshot of it, and you can later restore new volumes from that snapshot.

Advantages of the Amazon Elastic Block Store

Quickly scale

Scale quickly for your most demanding, high-performance workloads, including mission-critical applications from vendors such as Microsoft, SAP, and Oracle.

Outstanding performance

Guard against failures with high-availability features such as replication within an Availability Zone (AZ), and with the 99.999% durability of io2 Block Express volumes.

Optimize cost and storage

Choose the storage option that best suits your workload. Volume types range widely, from low cost per GB to the highest IOPS and throughput.

Safeguard

You can encrypt your block storage resources without having to build, manage, and secure your own key management infrastructure. You can also lock snapshots and block public access to them to prevent unwanted access to your data.

Easy data security

Amazon EBS Snapshots are point-in-time copies that protect block storage both on premises and in the cloud. You can use them for disaster recovery, to move data across Regions and accounts, and to improve backup compliance. Through its integration with Amazon Data Lifecycle Manager, AWS further simplifies snapshot lifecycle management: you define policies that automate snapshot creation, deletion, retention, and sharing.

How it functions

Amazon Elastic Block Store is a high-performance, scalable, and easy-to-use block storage service designed for Amazon Elastic Compute Cloud (Amazon EC2).

Use cases

Create your cloud-based, I/O-intensive, mission-critical apps

Migrate mid-range, on-premises storage area network (SAN) applications to the cloud, and attach high-performance, highly available block storage to mission-critical applications.

Utilize relational or NoSQL databases

Deploy and scale the databases of your choice, such as Oracle, Microsoft SQL Server, PostgreSQL, MySQL, Cassandra, MongoDB, and SAP HANA.

Right-size your big data analytics engines

Easily detach and reattach volumes, and scale clusters for big data analytics engines such as Hadoop and Spark.

Features of Amazon EBS

It offers the following features:

Multiple volume types: Amazon EBS offers a range of volume types that let you optimize storage performance and cost for a wide variety of workloads. They fall into two main categories: SSD-backed storage for transactional workloads and HDD-backed storage for throughput-intensive workloads.

Scalability: You can create Amazon EBS volumes with the capacity and performance your workload requires. As your needs change, you can use Elastic Volumes operations to increase capacity or adjust performance dynamically, without downtime.

Backup and recovery: Back up the data on your volumes with Amazon EBS snapshots. You can later use those snapshots to restore volumes quickly or to move data across Availability Zones, AWS Regions, or AWS accounts.

Data protection: Use Amazon EBS encryption to encrypt your volumes and snapshots. Encryption operations take place on the servers that host Amazon EC2 instances, which protects both data at rest and data in transit between an instance and its attached volume, as well as the snapshots created from that volume.

Data availability and durability: io2 Block Express volumes offer 99.999% durability (an annual failure rate of 0.001%). Other volume types offer 99.8% to 99.9% durability (an annual failure rate of 0.1% to 0.2%). To further protect against data loss from the failure of a single component, volume data is automatically replicated across multiple servers within an Availability Zone.

Data archiving: EBS Snapshots Archive provides a low-cost storage tier for full, point-in-time copies of EBS snapshots that you need to retain for 90 days or longer, whether for regulatory and compliance purposes or for future project releases.
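To make the annual failure rates concrete, here is a small back-of-the-envelope sketch. It assumes, purely for illustration, that volumes fail independently and that the quoted annual failure rate applies uniformly to every volume; the fleet size of 1,000 is hypothetical. Under those assumptions a 1,000-volume fleet would see roughly 0.01 expected failures per year on io2 Block Express versus roughly 1 to 2 on the other volume types.

```python
def expected_failures_per_year(volume_count: int, afr_pct: float) -> float:
    """Expected number of volume failures per year.

    afr_pct is the annual failure rate as a percentage (0.001 means 0.001%).
    Assumes independent failures at a uniform per-volume rate.
    """
    return volume_count * afr_pct / 100


def prob_at_least_one_failure(volume_count: int, afr_pct: float) -> float:
    """Probability that at least one volume in the fleet fails within a year."""
    p = afr_pct / 100
    return 1 - (1 - p) ** volume_count


fleet = 1000  # hypothetical fleet size
for label, afr in [("io2 Block Express", 0.001), ("other types (low)", 0.1), ("other types (high)", 0.2)]:
    print(f"{label}: {expected_failures_per_year(fleet, afr):.2f} expected failures/year, "
          f"P(at least one) = {prob_at_least_one_failure(fleet, afr):.3f}")
```

The probability column is a reminder that even a 0.1% rate makes at least one failure likely across a large fleet, which is why snapshots still matter.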

Related services

These services work with Amazon EBS:

Amazon Elastic Compute Cloud (Amazon EC2) lets you launch and manage virtual machines, called EC2 instances, in the AWS Cloud. EBS volumes attached to those instances can store data and installed software, much like hard drives.

AWS Key Management Service (AWS KMS) is a managed service for creating and managing cryptographic keys. You can use AWS KMS keys to encrypt the data stored on your Amazon EBS volumes and in your Amazon EBS snapshots.

Amazon Data Lifecycle Manager is a managed service that automates the creation, retention, and deletion of EBS snapshots and EBS-backed AMIs. You can use it to automate backups of your Amazon EC2 instances and Amazon EBS volumes.

EBS direct APIs let you create EBS snapshots, write data directly to them, read data from them, and identify the differences or changes between two snapshots.

Recycle Bin is a data recovery feature that lets you restore accidentally deleted EBS snapshots and EBS-backed AMIs.
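The idea behind comparing two snapshots with the EBS direct APIs can be illustrated with a toy model. This sketch is not the EBS direct API request or response format: it assumes a hypothetical in-memory representation in which each snapshot maps a block index to a checksum of that block, and it treats a block as changed if it exists in only one snapshot or its checksum differs.

```python
from typing import Dict, List

# Hypothetical model: block index -> checksum of that block's contents.
Snapshot = Dict[int, str]


def changed_blocks(first: Snapshot, second: Snapshot) -> List[int]:
    """Return sorted indexes of blocks that differ between two snapshots.

    A block counts as changed when it is present in only one snapshot or
    when its checksum differs between them.
    """
    indexes = set(first) | set(second)
    return sorted(i for i in indexes if first.get(i) != second.get(i))


base = {0: "a1", 1: "b2", 2: "c3"}
later = {0: "a1", 1: "b9", 2: "c3", 3: "d4"}
print(changed_blocks(base, later))  # [1, 3]
```

Only the changed blocks would need to be read or copied, which is what makes snapshot comparison useful for incremental backup tooling.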

Accessing Amazon EBS

You can create and manage your Amazon EBS resources through the following interfaces:

Amazon EC2 console

A web interface for creating and managing volumes and snapshots.

AWS Command Line Interface

A command-line tool that lets you manage Amazon EBS resources with commands in your command-line shell. It runs on Linux, macOS, and Windows.

AWS Tools for PowerShell

A set of PowerShell modules for scripting Amazon EBS resource activities from the command line.

AWS CloudFormation

A fully managed AWS service that lets you describe your AWS resources in reusable JSON or YAML templates; it then provisions and configures those resources for you.

Amazon EC2 Query API

Amazon EC2 Query API requests are HTTP or HTTPS requests that use the GET or POST verb and include a query parameter named Action.

AWS SDKs

Language-specific APIs for building applications that interact with AWS services. AWS SDKs are available for many popular programming languages.
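To show what an EC2 Query API request looks like on the wire, here is a minimal sketch that builds the query string with an Action parameter. The Region endpoint and the volume ID are illustrative assumptions, and the sketch deliberately omits the AWS Signature Version 4 signing that real requests require, so the resulting URL would be rejected if sent as-is. In practice, the AWS CLI or an AWS SDK builds and signs these requests for you.

```python
from typing import Dict, Optional
from urllib.parse import urlencode

# Illustrative values: pick the endpoint for your Region. Real requests
# must also carry a Signature Version 4 signature, omitted here.
ENDPOINT = "https://ec2.us-east-1.amazonaws.com/"
API_VERSION = "2016-11-15"


def build_query_url(action: str, params: Optional[Dict[str, str]] = None) -> str:
    """Build an unsigned EC2 Query API GET URL with the required Action parameter."""
    query = {"Action": action, "Version": API_VERSION}
    query.update(params or {})
    return ENDPOINT + "?" + urlencode(query)


# Hypothetical volume ID, for illustration only.
url = build_query_url("DescribeVolumes", {"VolumeId.1": "vol-0123456789abcdef0"})
print(url)
```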

Amazon EBS Pricing

With Amazon EBS, you pay only for what you provision. See the Amazon EBS pricing page for details.
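"Pay for what you provision" means storage is charged on the size you allocate, not on how much of it you fill. This sketch covers only the storage portion of the bill; the rate of 0.08 per GB-month is a placeholder assumption, not a quoted price, and some volume types also charge separately for provisioned IOPS or throughput.

```python
def monthly_storage_cost(provisioned_gb: float, price_per_gb_month: float) -> float:
    """Monthly storage charge based on provisioned size, not on data actually stored.

    price_per_gb_month is a placeholder; use the published rate for your
    volume type and Region.
    """
    return provisioned_gb * price_per_gb_month


HYPOTHETICAL_RATE = 0.08  # illustrative price per GB-month

# A 500 GB volume holding only 100 GB of data is still billed for 500 GB.
print(monthly_storage_cost(500, HYPOTHETICAL_RATE))
```

Because billing follows provisioned capacity, Elastic Volumes lets you start smaller and grow a volume later instead of over-provisioning up front.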

Read more on Govindhtech.com

Why You Should Hire a Power BI Developer for Your Business

In today’s data-driven world, the ability to leverage business intelligence (BI) is crucial for making informed decisions and staying competitive. Power BI, a powerful data visualization and analytics tool from Microsoft, has emerged as a leading solution for transforming raw data into actionable insights. If you want to enhance your organization’s ability to analyze and interpret data, hiring a Power BI developer is a smart move. Here's why you should consider hiring a Power BI developer and how it can benefit your business.

Unlock the Full Potential of Power BI

Power BI is more than just a data visualization tool; it’s a robust business intelligence platform that integrates with a variety of data sources, from databases to cloud services. However, to truly unlock its full potential, it’s essential to have a Power BI developer who understands the intricacies of the platform. A skilled developer will know how to design interactive dashboards, automate reporting, and ensure your BI solutions are tailored to meet your organization’s specific needs.

Custom Dashboards and Reports

Every business has unique data needs, and a one-size-fits-all approach doesn’t always work. By hiring a Power BI developer, you can get custom dashboards and reports that are aligned with your business objectives. Power BI developers are adept at creating reports that allow stakeholders to easily visualize and interpret complex data. Whether you're analyzing sales trends, operational efficiency, or customer insights, a developer can design intuitive, interactive reports that make decision-making easier.

Streamlined Data Integration

One of Power BI’s greatest strengths is its ability to integrate with a wide range of data sources, including Excel, SQL Server, Google Analytics, and even cloud platforms like Azure. A Power BI developer is an expert in connecting and blending data from various sources, ensuring you have access to comprehensive, up-to-date information in one unified platform. This streamlined data integration process helps eliminate silos and enables better decision-making across your organization.

Enhanced Data Security

With the growing threat of data breaches, it’s essential to ensure your business intelligence systems are secure. When you hire a Power BI developer, they can set up proper data security measures within Power BI, ensuring that sensitive information is protected. Developers can implement row-level security, encryption, and access control features that restrict data access based on user roles, ensuring that only authorized users can view specific data sets.
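Row-level security is easiest to picture as a filter applied per role before any data reaches a report. In Power BI itself, RLS is defined as roles with DAX filter expressions (for example, a role whose filter is `[Region] = "EMEA"`); the Python sketch below only models the idea, and its role names and table are hypothetical. Unknown roles see nothing, which is the safer default.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class SalesRow:
    region: str
    amount: float


# Hypothetical roles: each role may see only the listed regions.
ROLE_REGIONS: Dict[str, Set[str]] = {
    "emea_manager": {"EMEA"},
    "global_admin": {"EMEA", "APAC", "AMER"},
}


def visible_rows(rows: List[SalesRow], role: str) -> List[SalesRow]:
    """Return only the rows the role may see; unknown roles see nothing."""
    allowed = ROLE_REGIONS.get(role, set())
    return [row for row in rows if row.region in allowed]


data = [SalesRow("EMEA", 120.0), SalesRow("APAC", 80.0), SalesRow("AMER", 200.0)]
print([r.region for r in visible_rows(data, "emea_manager")])  # ['EMEA']
```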

Improved Business Decision-Making

The ultimate goal of Power BI is to provide actionable insights that lead to smarter business decisions. By hiring a Power BI developer, you ensure that your business intelligence solutions are designed with this goal in mind. Developers can build custom metrics, automated alerts, and forecasting models that enable you to make data-driven decisions faster. With real-time data insights, your business can stay ahead of the competition and respond quickly to market changes.

Scalability and Flexibility

As your business grows, so do your data needs. Power BI is a scalable solution, and a Power BI developer ensures your BI infrastructure grows alongside your business. Whether you need to add more data sources, users, or features, a skilled developer can help implement changes smoothly, ensuring your BI solution continues to support your organization’s evolving requirements.

Hire a Power BI Developer from Techcronus

If you’re looking to leverage the full power of Power BI for your business, hiring a dedicated Power BI developer is the key. At Techcronus, we offer expert Power BI development services tailored to your business needs. Our developers can help you design custom dashboards, integrate data sources, ensure data security, and ultimately enhance your decision-making processes. Whether you need a Power BI consultant or a full-time developer, we have the expertise to help your business harness the power of data.

Contact us today to learn more about how our Power BI development services can transform your business insights and improve your operations.

Mastering Microsoft Power BI: A Guide to Business Intelligence

In today’s data-driven world, Microsoft Power BI has become a powerhouse for turning raw data into insightful, visual stories. As businesses look for streamlined ways to manage and interpret data, this tool stands out with its powerful analytical capabilities and user-friendly interface. This article will dive deep into how Power BI can transform the way you handle data, showcasing its key features and offering practical tips to get you started on your journey to becoming a Power BI expert.

What is Microsoft Power BI?

Microsoft Power BI is an interactive data visualization tool that transforms scattered data into clear and actionable insights. Built with non-technical users in mind, Power BI enables you to connect, analyze, and present data across a variety of sources, from Excel and Google Analytics to databases such as SQL Server and Azure SQL Database. Whether you're working with big data or small data sets, Power BI's capabilities cater to a broad range of needs.

Why Should You Use Microsoft Power BI?

The world of business intelligence can seem overwhelming, but Power BI simplifies data analysis by focusing on accessibility, versatility, and scalability. Let’s explore why this tool is a must-have for businesses and individuals:

1. User-Friendly Interface

Unlike many complex data tools, Power BI’s interface is intuitive. Users can quickly navigate through dashboards, reports, and data models without extensive training, making it accessible even to beginners.

2. Customizable Visualizations

Power BI allows you to turn data into various visualizations—bar charts, line graphs, maps, and more. With these options, it’s easy to find the format that best conveys your insights. Customization options also let you change colors, themes, and layouts to align with your brand.

3. Real-Time Data Access

Power BI can connect live to many data sources (for example, through DirectQuery), so reports can show current information, and imported data can be kept up to date with scheduled refresh. This is particularly helpful for businesses that need immediate insights for critical decision-making.

4. Seamless Integration with Other Microsoft Tools

As part of the Microsoft ecosystem, Power BI integrates smoothly with tools like Excel, Azure, and Teams. You can import data directly from Excel or push reports into SharePoint or Teams for collaborative work.

5. Strong Security Features

Security is essential in today’s data environment. Power BI offers robust security protocols, including row-level security, data encryption, and permissions management. This helps ensure that your data is safe, especially when collaborating across teams.

Core Components of Microsoft Power BI

To get a full understanding of Power BI, let’s break down its main components, which help users navigate the tool effectively:

1. Power BI Desktop

Power BI Desktop is the main application for creating and publishing reports. You can import data, create data models, and build interactive visualizations on the Desktop app. This tool is ideal for those who need a comprehensive data analysis setup on their personal computers.

2. Power BI Service

This is the online, cloud-based version of Power BI, where users can share, collaborate, and access reports from any location. It’s especially beneficial for teams that need a collaborative platform.

3. Power BI Mobile Apps

With Power BI Mobile, you can view reports and dashboards on the go. Whether you're on a tablet or smartphone, the app gives you access to your Power BI content for insights wherever you are.

4. Power BI Report Server

This on-premises version of Power BI offers the flexibility of running reports locally, ideal for organizations that require data storage within their own servers. Power BI Report Server supports hybrid approaches and data sovereignty requirements.

Getting Started with Power BI: Step-by-Step Guide

Ready to dive in? Here’s a quick guide to get you set up and familiarized with Power BI.

Step 1: Download Power BI Desktop

To start, download Power BI Desktop from the Microsoft website. It is free and includes all the essential tools you need for data exploration and visualization.

Step 2: Connect Your Data

In Power BI, you can connect to multiple data sources like Excel, Google Analytics, SQL Server, and even cloud-based services. Go to the “Get Data” option, select your data source, and import the necessary datasets.

Step 3: Clean and Prepare Your Data

Once you’ve imported data, Power BI’s Power Query Editor allows you to clean and structure it. You can remove duplicates, handle missing values, and transform the data to fit your analysis needs.
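The two most common cleaning steps, removing duplicate rows and replacing missing values, can be sketched in plain Python to show what they do to a table. This is a rough analogy to Power Query's "Remove Duplicates" and "Replace Values" steps, not Power Query code; the column names and the "Unknown" default are hypothetical.

```python
from typing import Dict, List, Optional

Row = Dict[str, Optional[str]]


def clean(rows: List[Row], defaults: Dict[str, str]) -> List[Row]:
    """Fill missing values with per-column defaults, then drop exact duplicate rows."""
    seen = set()
    result: List[Row] = []
    for row in rows:
        # Replace None or empty strings with the column's default, if one is given.
        filled = {col: defaults.get(col, val) if val in (None, "") else val
                  for col, val in row.items()}
        key = tuple(sorted(filled.items()))
        if key not in seen:  # keep only the first copy of each row
            seen.add(key)
            result.append(filled)
    return result


raw = [
    {"customer": "Acme", "country": "DE"},
    {"customer": "Acme", "country": "DE"},    # exact duplicate
    {"customer": "Globex", "country": None},  # missing value
]
print(clean(raw, {"country": "Unknown"}))
```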

Step 4: Build a Data Model

Data models create relationships between tables, allowing for more complex calculations. Power BI’s modeling tools let you establish relationships, add calculated columns, and create complex measures using DAX (Data Analysis Expressions).
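A relationship plus a filtered measure is the core of most Power BI models. The Python sketch below mimics that idea with two hypothetical tables, a Sales fact table related to a Product table on `product_id`, and a function that loosely mirrors a DAX measure such as `CALCULATE(SUM(Sales[Amount]), Product[Category] = "Hardware")`. It illustrates the concept only; in Power BI you would write the measure in DAX.

```python
from typing import Optional

# Hypothetical dimension table keyed by product_id.
products = {
    1: {"name": "Laptop", "category": "Hardware"},
    2: {"name": "License", "category": "Software"},
}

# Hypothetical fact table; product_id is the relationship column.
sales = [
    {"product_id": 1, "amount": 1200.0},
    {"product_id": 2, "amount": 300.0},
    {"product_id": 1, "amount": 800.0},
]


def total_sales(category: Optional[str] = None) -> float:
    """Sum Sales amounts, optionally filtered through the Product relationship."""
    return sum(
        row["amount"]
        for row in sales
        if category is None or products[row["product_id"]]["category"] == category
    )


print(total_sales())            # 2300.0
print(total_sales("Hardware"))  # 2000.0
```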

Step 5: Create Visualizations

Now comes the exciting part—creating visualizations. Choose from various chart types and customize them with themes and colors. Drag and drop data fields onto the visualization pane to see your data come to life.

Step 6: Share Your Report

When your report is ready, publish it to the Power BI Service for sharing. You can also set up scheduled refreshes so your report remains current.

Essential Tips for Effective Power BI Use

For the best experience, keep these tips in mind:

Use Filters Wisely: Filters refine data without overloading the report. Use slicers or filters for more personalized reports.

Leverage Bookmarks: Bookmarks allow you to save specific views, helping you and your team switch between insights easily.

Master DAX: DAX functions provide more in-depth analytical power. Start with basics like SUM, AVERAGE, and CALCULATE, then advance to more complex formulas.

Optimize Performance: Avoid slow reports by limiting data size and choosing the right visualizations. Performance tips are crucial, especially with large datasets.

Common Use Cases for Microsoft Power BI

Power BI’s versatility enables it to be used in various industries and scenarios:

1. Sales and Marketing Analytics

Sales teams use Power BI to track sales performance, identify trends, and make data-driven decisions. Marketing teams can track campaigns, measure customer engagement, and segment data for tailored marketing.

2. Financial Analysis

Finance professionals turn to Power BI for analyzing budgets, forecasting revenue, and creating financial reports. Its ability to aggregate data from multiple sources makes financial insights comprehensive.

3. Human Resources

Power BI aids HR in tracking employee performance, analyzing turnover rates, and understanding recruitment metrics. HR dashboards offer real-time insights into workforce dynamics.

4. Customer Service

Customer service teams use Power BI for tracking support requests, measuring response times, and analyzing customer satisfaction. It helps in identifying service gaps and improving customer experience.

Why Power BI Matters in Today’s Business Landscape

Power BI has revolutionized business intelligence by making data accessible and actionable for everyone—from executives to analysts. Its ease of use, coupled with powerful analytical tools, has democratized data within organizations. Businesses can now make faster, more informed decisions, enhancing their competitive edge.

Final Thoughts

Whether you're in sales, finance, HR, or customer service, Microsoft Power BI offers the tools you need to turn complex data into a strategic asset. Its approachable design, coupled with robust analytical power, makes it the perfect choice for anyone looking to improve their data skills. By mastering Power BI, you’re not just investing in a tool—you’re investing in a future where data drives every decision.

Securing ASP.NET Applications: Best Practices

With the increase in cyberattacks and vulnerabilities, securing web applications is more critical than ever, and ASP.NET is no exception. ASP.NET, a popular web application framework by Microsoft, requires diligent security measures to safeguard sensitive data and protect against common threats. In this article, we outline best practices for securing ASP.NET applications, helping developers defend against attacks and ensure data integrity.

1. Enable HTTPS Everywhere

One of the most essential steps in securing any web application is enforcing HTTPS to ensure that all data exchanged between the client and server is encrypted. HTTPS protects against man-in-the-middle attacks and ensures data confidentiality.
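Enforcing HTTPS usually means two things: redirecting plain-HTTP requests to HTTPS and sending a Strict-Transport-Security (HSTS) header so browsers stop trying HTTP at all. In ASP.NET Core this is typically handled by the built-in `UseHttpsRedirection` and `UseHsts` middleware; the Python sketch below only illustrates the logic. The one-year `max-age` is an illustrative policy choice, and the sketch assumes the HTTPS site listens on the default port 443.

```python
from typing import Dict, Tuple
from urllib.parse import urlsplit, urlunsplit

HSTS_VALUE = "max-age=31536000; includeSubDomains"  # one year, illustrative


def enforce_https(url: str) -> Tuple[int, Dict[str, str]]:
    """Return (status, headers) for an incoming request URL."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        # Drop any explicit port, assuming HTTPS is served on the default 443.
        target = urlunsplit(("https", parts.hostname or "", parts.path, parts.query, parts.fragment))
        # 308 Permanent Redirect keeps the original request method.
        return 308, {"Location": target}
    # HSTS is only meaningful when sent over HTTPS.
    return 200, {"Strict-Transport-Security": HSTS_VALUE}


print(enforce_https("http://example.com:80/login?next=/home"))
```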

2. Use Strong Authentication and Authorization

Proper authentication and authorization are critical to preventing unauthorized access to your application. ASP.NET provides tools like ASP.NET Identity for managing user authentication and role-based authorization.

Tips for Strong Authentication:

Use Multi-Factor Authentication (MFA) to add an extra layer of security by requiring a second factor, such as a code from an authenticator app or an SMS message.

Implement strong password policies (length, complexity, expiration).

Consider using OAuth or OpenID Connect for secure, third-party login options (Google, Microsoft, etc.).
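Authenticator apps generally implement time-based one-time passwords (TOTP, RFC 6238), which are HMAC-based one-time passwords (HOTP, RFC 4226) computed over 30-second time steps. The sketch below shows the algorithm with only the standard library. It assumes the shared secret is already available as raw bytes (real enrollment usually exchanges it as Base32 in a QR code) and uses HMAC-SHA1, the common default. ASP.NET Core Identity ships its own authenticator support, so treat this as an explanation of the mechanism rather than a drop-in component.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, drift_steps: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    candidates = (totp(secret, now + d * step, step)
                  for d in range(-drift_steps, drift_steps + 1)
                  if now + d * step >= 0)
    # Constant-time comparison avoids leaking how many digits matched.
    return any(hmac.compare_digest(code, submitted) for code in candidates)


# RFC 6238 test secret (ASCII "12345678901234567890").
secret = b"12345678901234567890"
print(totp(secret, at=59, digits=8))
```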

3. Protect Against Cross-Site Scripting (XSS)

XSS attacks happen when malicious scripts are injected into web pages that are viewed by other users. To prevent XSS in ASP.NET, all user input should be validated and properly encoded.

Tips to Prevent XSS:

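Rely on the framework's built-in encoding: Razor views HTML-encode output by default, and the AntiXSS encoder has been included in .NET Framework since version 4.5.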

Validate and sanitize all user input—never trust incoming data.

Use a Content Security Policy (CSP) to restrict which types of content (e.g., scripts) can be loaded.
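Output encoding is what actually neutralizes an injected script: the browser receives `&lt;script&gt;` as text instead of a tag. The Python sketch below uses the standard `html.escape` function to show the effect and pairs it with an example CSP header value. It covers only text placed in an HTML body; attributes, JavaScript, and URLs each need context-specific encoding, and the policy shown is an illustrative starting point, not a recommendation for every site.

```python
import html

# Example policy: only same-origin resources and scripts; no plugins.
CSP_HEADER = ("Content-Security-Policy",
              "default-src 'self'; script-src 'self'; object-src 'none'")


def render_comment(user_text: str) -> str:
    """Embed untrusted text in an HTML fragment after HTML-encoding it."""
    return f'<p class="comment">{html.escape(user_text, quote=True)}</p>'


print(render_comment('<script>alert("x")</script>'))
```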

4. Prevent SQL Injection Attacks

SQL injection occurs when attackers manipulate input data to execute malicious SQL queries. This can be prevented by avoiding direct SQL queries with user input.

How to Prevent SQL Injection:

Use parameterized queries or stored procedures instead of concatenating SQL queries.

Leverage ORM tools (e.g., Entity Framework), which parameterize the queries they generate. Raw SQL executed through an ORM still needs parameters.
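The difference between a parameterized and a concatenated query is easy to demonstrate. In ASP.NET you would use `SqlParameter` objects in ADO.NET or LINQ queries in Entity Framework; the sketch below uses Python's built-in `sqlite3` module and an in-memory database to show the same principle. The table and payload are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user(name: str):
    # The ? placeholder sends `name` as data; it never becomes part of the SQL text.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


def find_user_unsafe(name: str):
    # Vulnerable: concatenation lets the input rewrite the query. Do not do this.
    return conn.execute("SELECT id, name FROM users WHERE name = '" + name + "'").fetchall()


payload = "' OR '1'='1"
print(find_user(payload))              # [] because the payload is treated as a literal name
print(len(find_user_unsafe(payload)))  # 2 because the injected OR clause matches every row
```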

5. Use Anti-Forgery Tokens to Prevent CSRF Attacks

Cross-Site Request Forgery (CSRF) tricks users into unknowingly submitting requests to a web application. ASP.NET provides anti-forgery tokens to validate incoming requests and prevent CSRF attacks.
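Anti-forgery tokens work because a forged cross-site request cannot know a secret value the server embedded in its own form. ASP.NET Core generates and checks these tokens for you (for example, through the `[ValidateAntiForgeryToken]` attribute and Razor form tag helpers), and its implementation differs in detail. The Python sketch below shows the basic synchronizer-token pattern, assuming a hypothetical in-memory session store.

```python
import hmac
import secrets
from typing import Dict

# Hypothetical server-side session store: session id -> anti-forgery token.
_session_tokens: Dict[str, str] = {}


def issue_token(session_id: str) -> str:
    """Create an unguessable token, remember it for the session, and embed it in the form."""
    token = secrets.token_urlsafe(32)
    _session_tokens[session_id] = token
    return token


def validate_token(session_id: str, submitted: str) -> bool:
    """Reject a state-changing request whose form token doesn't match the session's token."""
    expected = _session_tokens.get(session_id)
    if expected is None or not submitted:
        return False
    return hmac.compare_digest(expected, submitted)
```

A forged request from another site carries the victim's session cookie but not the token, so `validate_token` returns `False` and the request is refused.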

6. Secure Sensitive Data with Encryption

Sensitive data such as personal information should be encrypted both in transit and at rest. Passwords are the exception: store them as salted, deliberately slow hashes rather than in reversibly encrypted form.

How to Encrypt Data in ASP.NET:

Use the Data Protection API (DPAPI) to encrypt cookies, tokens, and user data.

Encrypt sensitive configuration data (e.g., connection strings) in the web.config file.
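For passwords specifically, the usual practice is a salted, slow hash rather than reversible encryption, so a leaked database does not directly reveal passwords. ASP.NET Core Identity's default password hasher is based on PBKDF2; the sketch below shows the same technique with Python's `hashlib.pbkdf2_hmac`. The iteration count and the `iterations$salt$hash` storage format are illustrative choices, not a required standard.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune to your hardware and current guidance


def hash_password(password: str) -> str:
    """Hash a password with a fresh random salt; returns 'iterations$salt$hash'."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and iteration count, then compare."""
    iterations, salt_hex, digest_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 bytes.fromhex(salt_hex), int(iterations))
    return hmac.compare_digest(digest.hex(), digest_hex)
```

Storing the iteration count with each hash lets you raise the work factor later without invalidating existing passwords.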

7. Regularly Patch and Update Dependencies

Outdated libraries and frameworks often contain vulnerabilities that attackers can exploit. Keeping your environment updated is crucial.

Best Practices for Updates:

Use package managers (e.g., NuGet) to keep your libraries up to date.

Use tools like OWASP Dependency-Check or Snyk to monitor vulnerabilities in your dependencies.
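At their core, dependency scanners compare the versions you have installed against the minimum patched versions listed in security advisories. For .NET projects, `dotnet list package --vulnerable` does this against a real advisory feed; the Python sketch below only illustrates the comparison. The package names and versions are hypothetical, and the parser assumes plain dotted versions without pre-release tags.

```python
from typing import Dict, List, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Parse a plain dotted version such as '6.0.12' (no pre-release suffixes)."""
    return tuple(int(part) for part in v.split("."))


def is_older(a: str, b: str) -> bool:
    """True if version a is lower than b, treating missing components as zero."""
    pa, pb = parse_version(a), parse_version(b)
    width = max(len(pa), len(pb))
    return pa + (0,) * (width - len(pa)) < pb + (0,) * (width - len(pb))


def outdated(installed: Dict[str, str], minimum_safe: Dict[str, str]) -> List[str]:
    """List installed packages below the minimum patched version from an advisory list."""
    return sorted(name for name, version in installed.items()
                  if name in minimum_safe and is_older(version, minimum_safe[name]))


# Hypothetical data, for illustration only.
installed = {"Contoso.Json": "12.0.1", "Contoso.Logging": "3.4.0"}
advisories = {"Contoso.Json": "13.0.1"}
print(outdated(installed, advisories))  # ['Contoso.Json']
```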

8. Implement Logging and Monitoring

Detailed logging is essential for tracking suspicious activities and troubleshooting security issues.

Best Practices for Logging:

Log all authentication attempts (successful and failed) to detect potential brute force attacks.

Use a centralized logging system like Serilog, ELK Stack, or Azure Monitor.

Monitor critical security events such as multiple failed login attempts, permission changes, and access to sensitive data.
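Logging every attempt and flagging bursts of failures can be combined in one small component. In a .NET application you would write these events through a library such as Serilog; the sketch below uses Python's standard `logging` module to show the logic. The threshold of five failures in 300 seconds is an illustrative default, and only the username is logged, never the submitted password.

```python
import logging
from collections import defaultdict, deque
from typing import Deque, Dict

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("auth")


class FailedLoginMonitor:
    """Log login attempts and flag accounts with too many recent failures."""

    def __init__(self, threshold: int = 5, window_seconds: float = 300.0) -> None:
        self.threshold = threshold
        self.window = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, user: str, success: bool, at: float) -> bool:
        """Record one attempt at time `at`; return True if the account looks under attack."""
        if success:
            log.info("login success user=%s", user)
            return False
        log.warning("login failure user=%s", user)  # never log the password itself
        failures = self._failures[user]
        failures.append(at)
        # Forget failures that fall outside the sliding window.
        while failures and at - failures[0] > self.window:
            failures.popleft()
        if len(failures) >= self.threshold:
            log.error("possible brute force user=%s failures=%d", user, len(failures))
            return True
        return False
```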

9. Use Dependency Injection for Security

In ASP.NET Core, Dependency Injection (DI) allows for loosely coupled services that can be injected where needed. This helps manage security services such as authentication and encryption more effectively.
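The security benefit of DI is that consumers depend on an abstraction, so a hardened implementation (or a test double) can be swapped in without touching them. In ASP.NET Core you register services with the built-in container, for example `builder.Services.AddScoped<IMyService, MyService>()`, and they arrive through constructors. The Python sketch below wires the same constructor-injection pattern by hand; `Encryptor`, `ProfileService`, and `FakeEncryptor` are hypothetical names, and the fake performs no real encryption.

```python
from typing import Protocol


class Encryptor(Protocol):
    """Abstraction the service depends on; any object with encrypt() fits."""

    def encrypt(self, data: bytes) -> bytes: ...


class ProfileService:
    """Receives its encryptor from outside instead of constructing one itself."""

    def __init__(self, encryptor: Encryptor) -> None:
        self._encryptor = encryptor

    def protect_note(self, note: str) -> bytes:
        return self._encryptor.encrypt(note.encode("utf-8"))


class FakeEncryptor:
    """Test double only: reverses bytes so the wiring is observable. NOT encryption."""

    def encrypt(self, data: bytes) -> bytes:
        return data[::-1]


service = ProfileService(FakeEncryptor())
print(service.protect_note("abc"))  # b'cba'
```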

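10. Use Security Headers

Security headers such as X-Content-Type-Options and X-Frame-Options help prevent MIME-type sniffing and clickjacking, and a Content-Security-Policy header limits the impact of XSS. The older X-XSS-Protection header is ignored by modern browsers and should not be relied on for XSS protection.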
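A common approach is to add a fixed set of headers to every response in one place, such as middleware. The Python sketch below shows that idea with a plain dictionary. The values are typical examples, not the only correct ones. Following current OWASP guidance, it sets `X-XSS-Protection: 0` to disable the legacy browser filter and relies on a Content Security Policy instead. Headers the application already set take precedence.

```python
from typing import Dict

SECURITY_HEADERS: Dict[str, str] = {
    "X-Content-Type-Options": "nosniff",  # block MIME-type sniffing
    "X-Frame-Options": "DENY",            # refuse framing (clickjacking)
    "Content-Security-Policy": "default-src 'self'; frame-ancestors 'none'",
    "X-XSS-Protection": "0",              # disable the legacy filter; rely on CSP
}


def add_security_headers(headers: Dict[str, str]) -> Dict[str, str]:
    """Return response headers with security defaults added.

    Values the application already set win over the defaults.
    """
    merged = dict(SECURITY_HEADERS)
    merged.update(headers)
    return merged


print(add_security_headers({"Content-Type": "text/html"}))
```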

Conclusion

Securing ASP.NET applications is a continuous and evolving process that requires attention to detail. By implementing these best practices—from enforcing HTTPS to using security headers—you can reduce the attack surface of your application and protect it from common threats. Keeping up with modern security trends and integrating security at every development stage ensures a robust and secure ASP.NET application.

Security is not a one-time effort; it's a continuous commitment.

To know more: https://www.inestweb.com/best-practices-for-securing-asp-net-applications/


Using Azure Data Factory with Azure Synapse Analytics


Introduction

Azure Data Factory (ADF) and Azure Synapse Analytics are two powerful cloud-based services from Microsoft that enable seamless data integration, transformation, and analytics at scale.

ADF serves as an ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) orchestration tool, while Azure Synapse provides a robust data warehousing and analytics platform.

By integrating ADF with Azure Synapse Analytics, businesses can build automated, scalable, and secure data pipelines that support real-time analytics, business intelligence, and machine learning workloads.

Why Use Azure Data Factory with Azure Synapse Analytics?

1. Unified Data Integration & Analytics

ADF provides a no-code/low-code environment to move and transform data before storing it in Synapse, which then enables powerful analytics and reporting.

2. Support for a Variety of Data Sources

ADF can ingest data through more than 90 built-in connectors, including:

On-premises databases (SQL Server, Oracle, MySQL, etc.)

Cloud storage (Azure Blob Storage, Amazon S3, Google Cloud Storage)

APIs, web services, and third-party applications (SAP, Salesforce, etc.)

3. Serverless and Scalable Processing

With Azure Synapse, users can choose between:

Dedicated SQL Pools (provisioned resources for high-performance querying)

Serverless SQL Pools (on-demand processing with pay-as-you-go pricing)

4. Automated Data Workflows

ADF allows users to design workflows that automatically fetch, transform, and load data into Synapse without manual intervention.

5. Security & Compliance

Both services provide enterprise-grade security, including:

Managed identities for authentication

Role-based access control (RBAC) for data governance

Encryption keys and secrets managed in Azure Key Vault

Key Use Cases

1. Ingesting Data into Azure Synapse

ADF serves as a powerful ingestion engine for structured, semi-structured, and unstructured data sources.

Examples include:

Batch Data Loading: Move large datasets from on-premises or cloud storage into Synapse.

Incremental Data Load: Sync only new or changed data to improve efficiency.

Streaming Data Processing: Ingest real-time data from services like Azure Event Hubs or IoT Hub.

2. Data Transformation & Cleansing

ADF provides two primary ways to transform data:

Mapping Data Flows: A visual, code-free way to clean and transform data.

Stored Procedures & SQL Scripts in Synapse: Perform complex transformations using SQL.

3. Building ETL/ELT Pipelines

ADF allows businesses to design automated workflows that:

Extract data from various sources

Transform data using Data Flows or SQL queries

Load structured data into Synapse tables for analytics

4. Real-Time Analytics & Business Intelligence

Synapse integrates with Power BI, so data that ADF pipelines load into Synapse can drive near-real-time dashboards and reports.

Synapse supports machine learning models for predictive analytics.

How to Integrate Azure Data Factory with Azure Synapse Analytics

Step 1: Create an Azure Data Factory Instance

Sign in to the Azure portal and create a new Data Factory instance.

Choose the region and resource group for deployment.

Step 2: Connect ADF to Data Sources

Use Linked Services to establish connections to storage accounts, databases, APIs, and SaaS applications.

Example: Connect ADF to an Azure Blob Storage account to fetch raw data.

Step 3: Create Data Pipelines in ADF

Use a Copy activity to move data into Synapse tables.

Configure triggers to automate pipeline execution.
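In the ADF authoring UI, a Copy activity like the one in Step 3 is saved as a JSON pipeline definition. As a rough sketch of its shape, the hypothetical Python helper below builds a trimmed definition as a dict; the pipeline, activity, and dataset names are made up, and real definitions typically also carry store and format settings on the source.

```python
import json
from typing import Any, Dict


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str) -> Dict[str, Any]:
    """Build a trimmed ADF pipeline definition with a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyToSynapse",
                    "type": "Copy",
                    # Datasets are referenced by name; each points at a linked service.
                    "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
                    "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},  # e.g. CSV files in Blob Storage
                        # SqlDWSink is the Copy sink type for Azure Synapse Analytics;
                        # allowCopyCommand loads through the COPY statement.
                        "sink": {"type": "SqlDWSink", "allowCopyCommand": True},
                    },
                }
            ]
        },
    }


pipeline = copy_pipeline("LoadSalesPipeline", "RawSalesCsv", "SynapseSalesTable")
print(json.dumps(pipeline, indent=2))
```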

Step 4: Transform Data Before Loading

Use Mapping Data Flows for complex transformations like joins, aggregations, and filtering. Alternatively, perform ELT by loading raw data into Synapse and running SQL scripts.

Step 5: Load Transformed Data into Synapse Analytics

Depending on your use case, load data into tables in a Dedicated SQL Pool, or write it to Azure Data Lake Storage and query it on demand with a Serverless SQL Pool.

Step 6: Monitor & Optimize Pipelines

Use ADF monitoring to track pipeline execution and troubleshoot failures.

Tune performance in Synapse by optimizing indexes and partitions.

Best Practices for Using ADF with Azure Synapse Analytics

1. Use Incremental Loads for Efficiency

Instead of copying entire datasets, use delta processing to transfer only new or modified records.

Leverage Watermark Columns or Change Data Capture (CDC) for incremental loads.
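The watermark technique can be sketched in a few lines of Python with `sqlite3` standing in for the source and target; the `orders` table and its `modified_at` column are hypothetical. In ADF the same three steps are usually a Lookup activity (read the old watermark), a Copy activity with a filtered query, and a step that stores the new watermark.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, modified_at TEXT)"


def incremental_load(source: sqlite3.Connection, target: sqlite3.Connection,
                     last_watermark: str) -> str:
    # Copy only rows changed since the previous run (the "delta").
    # ISO-8601 strings in one fixed format compare correctly as text.
    rows = source.execute(
        "SELECT id, amount, modified_at FROM orders WHERE modified_at > ? ORDER BY modified_at",
        (last_watermark,),
    ).fetchall()
    # Upsert so updated rows overwrite their earlier copies in the target.
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    target.commit()
    # New watermark = latest modified_at copied; unchanged if nothing was copied.
    return rows[-1][2] if rows else last_watermark


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute(SCHEMA)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T00:00:00"), (2, 20.0, "2024-01-02T00:00:00")],
)
wm = incremental_load(source, target, "1970-01-01T00:00:00")  # first run copies both rows
source.execute("UPDATE orders SET amount = 25.0, modified_at = '2024-01-03T00:00:00' WHERE id = 2")
wm = incremental_load(source, target, wm)  # second run copies only order 2
```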

2. Optimize Performance in Data Flows

Use partitioning strategies to parallelize data processing.

Minimize data movement by filtering records at the source.
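In Mapping Data Flows, partitioning is configured in each transformation's Optimize settings rather than coded, and hash partitioning is one of the options. Purely to illustrate the concept, here is a minimal Python sketch: rows with the same key always land in the same partition, so partitions can be processed independently. The function names are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def hash_partition(rows: List[Row], key: str, num_partitions: int) -> List[List[Row]]:
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    partitions: List[List[Row]] = [[] for _ in range(num_partitions)]
    for row in rows:
        # crc32 is stable across runs (unlike Python's salted hash() for str),
        # so the same key always lands in the same partition.
        idx = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        partitions[idx].append(row)
    return partitions


def process_partitions(partitions: List[List[Row]],
                       fn: Callable[[List[Row]], Any]) -> List[Any]:
    # Each partition is independent, so they can be processed concurrently.
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        return list(pool.map(fn, partitions))
```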

3. Secure Data Pipelines

Use managed identity authentication instead of hardcoded credentials.

Enable Private Link to restrict data movement to the internal Azure network.

4. Automate Error Handling

Implement retry policies in ADF pipelines for transient failures.

Set up alerts and logging for real-time error tracking.
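In ADF, retries are declared per activity in its `policy` block (a `retry` count and `retryIntervalInSeconds`) rather than coded by hand. The hypothetical Python helper below attaches such a block to an activity definition dict; the timeout value is an assumption for illustration.

```python
import copy
from typing import Any, Dict


def with_retry_policy(activity: Dict[str, Any], retries: int, interval_seconds: int,
                      timeout: str = "0.02:00:00") -> Dict[str, Any]:
    """Return a copy of an ADF activity definition with a retry policy attached."""
    updated = copy.deepcopy(activity)  # leave the caller's definition untouched
    updated["policy"] = {
        "timeout": timeout,                          # d.hh:mm:ss; 2 hours here (assumed)
        "retry": retries,                            # extra attempts after a failure
        "retryIntervalInSeconds": interval_seconds,  # wait between attempts
    }
    return updated


copy_activity = {"name": "CopyToSynapse", "type": "Copy"}  # trimmed, hypothetical
resilient = with_retry_policy(copy_activity, retries=3, interval_seconds=60)
```

Retries suit transient problems such as throttling or brief network drops; persistent failures should still surface through the alerts described above.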

5. Leverage Cost Optimization Strategies

Choose Serverless SQL Pools for ad-hoc querying to avoid unnecessary provisioning.

Use data lifecycle policies to move old data to cheaper storage tiers.

Conclusion

Azure Data Factory and Azure Synapse Analytics together create a powerful, scalable, and cost-effective solution for enterprise data integration, transformation, and analytics.

ADF simplifies data movement, while Synapse offers advanced querying and analytics capabilities.

By following best practices and leveraging automation, businesses can build efficient ETL pipelines that power real-time insights and decision-making.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Integration Specialist: Bridging the Gap Between Systems and Efficiency

The Key to Scalable, Secure, and Future-Ready IT Solutions.

Introduction

In today’s interconnected digital landscape, businesses rely on seamless data exchange and system connectivity to optimize operations and improve efficiency. Integration specialists play a crucial role in designing, implementing, and maintaining integrations between various software applications, ensuring smooth communication and workflow automation. With the rise of cloud computing, APIs, and enterprise applications, integration specialists are essential for driving digital transformation.

What is an Integration Specialist?

An Integration Specialist is a professional responsible for developing and managing software integrations between different systems, applications, and platforms. They design workflows, troubleshoot issues, and ensure data flows securely and efficiently across various environments. Integration specialists work with APIs, middleware, and cloud-based tools to connect disparate systems and improve business processes.

Types of Integration Solutions

Integration specialists work with different types of solutions to meet business needs:

API Integrations

Connects different applications via Application Programming Interfaces (APIs).

Enables real-time data sharing and automation.

Examples: RESTful APIs, SOAP APIs, GraphQL.

Cloud-Based Integrations

Connects cloud applications like SaaS platforms.

Uses integration platforms as a service (iPaaS).

Examples: Zapier, Workato, MuleSoft, Dell Boomi.

Enterprise System Integrations

Integrates large-scale enterprise applications.

Connects ERP (Enterprise Resource Planning), CRM (Customer Relationship Management), and HR systems.

Examples: Salesforce, SAP, Oracle, Microsoft Dynamics.

Database Integrations

Ensures seamless data flow between databases.

Uses ETL (Extract, Transform, Load) processes for data synchronization.

Examples: SQL Server Integration Services (SSIS), Talend, Informatica.
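The ETL flow behind these tools can be sketched in plain Python, with two in-memory `sqlite3` databases standing in for the source and target systems. The `customers` and `dim_customer` tables and the cleaning rules are hypothetical; SSIS, Talend, or Informatica would express the same steps as configured tasks.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, str, str]


def extract(source: sqlite3.Connection) -> List[tuple]:
    # Extract: read raw records from the source system.
    return source.execute("SELECT id, email, country FROM customers").fetchall()


def transform(rows: List[tuple]) -> List[Row]:
    cleaned = []
    for cid, email, country in rows:
        if not email:
            continue  # drop records that fail a basic validation rule
        # Normalize formats so both systems agree on the representation.
        cleaned.append((cid, email.strip().lower(), (country or "").upper()))
    return cleaned


def load(target: sqlite3.Connection, rows: List[Row]) -> int:
    # Load: upsert by primary key so re-running the job does not duplicate rows.
    target.executemany(
        "INSERT OR REPLACE INTO dim_customer (id, email, country) VALUES (?, ?, ?)", rows
    )
    target.commit()
    return len(rows)


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, country TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, " Alice@Example.com ", "us"), (2, None, "de"), (3, "bob@example.com", None)],
)
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, email TEXT, country TEXT)")
loaded = load(target, transform(extract(source)))
```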

Key Stages of System Integration

Requirement Analysis & Planning

Identify business needs and integration goals.

Analyze existing systems and data flow requirements.

Choose the right integration approach and tools.

Design & Architecture

Develop a blueprint for the integration solution.

Select API frameworks, middleware, or cloud services.

Ensure scalability, security, and compliance.

Development & Implementation

Build APIs, data connectors, and automation workflows.

Implement security measures (encryption, authentication).

Conduct performance optimization and data validation.

Testing & Quality Assurance

Perform functional, security, and performance testing.

Identify and resolve integration errors and data inconsistencies.

Conduct user acceptance testing (UAT).

Deployment & Monitoring

Deploy integration solutions in production environments.

Monitor system performance and error handling.

Ensure smooth data synchronization and process automation.

Maintenance & Continuous Improvement

Provide ongoing support and troubleshooting.

Optimize integration workflows based on feedback.

Stay updated with new technologies and best practices.
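For the testing stage, a common way to find data inconsistencies is to reconcile source and target records by key. A minimal Python sketch under that assumption; the function names and sample rows are hypothetical.

```python
import hashlib
from typing import Dict, Hashable, List, Sequence


def row_fingerprint(row: Sequence) -> str:
    # Hash the whole row so any changed column shows up as a mismatch.
    # Both sides must use the same value types (e.g. 1 vs 1.0 hash differently).
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key_index: int = 0) -> Dict[str, List[Hashable]]:
    src = {row[key_index]: row_fingerprint(row) for row in source_rows}
    tgt = {row[key_index]: row_fingerprint(row) for row in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


report = reconcile(
    source_rows=[(1, "alice", "US"), (2, "bob", "DE"), (3, "carol", "FR")],
    target_rows=[(1, "alice", "US"), (2, "bob", "de"), (4, "dave", "IN")],
)
```

Running a check like this after each deployment, and not only during testing, also supports the monitoring stage.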

Best Practices for Integration Success

✔ Define clear integration objectives and business needs.

✔ Use secure and scalable API frameworks.

✔ Optimize data transformation processes for efficiency.

✔ Implement robust authentication and encryption.

✔ Conduct thorough testing before deployment.

✔ Monitor and update integrations regularly.

✔ Stay updated with emerging iPaaS and API technologies.

Conclusion

Integration specialists are at the forefront of modern digital ecosystems, ensuring seamless connectivity between applications and data sources. Whether working with cloud platforms, APIs, or enterprise systems, a well-executed integration strategy enhances efficiency, security, and scalability. Businesses that invest in robust integration solutions gain a competitive edge, improved automation, and streamlined operations.


Unraveling the Power of Integration Runtime in Azure Data Factory: A Comprehensive Guide

Table of Contents

Introduction

Understanding Integration Runtime

Key Features and Benefits

Use Cases

Best Practices for Integration Runtime Implementation

Conclusion

FAQs on Integration Runtime in Azure Data Factory

Introduction

In an era of data-driven decision-making, businesses depend on robust data integration solutions to streamline their operations and gain valuable insights. Azure Data Factory stands out as a leading choice for orchestrating and automating data workflows in the cloud. At the heart of Azure Data Factory lies the Integration Runtime (IR), a flexible and efficient engine that enables seamless data movement across diverse environments. In this comprehensive guide, we dive deep into Integration Runtime in Azure Data Factory, exploring its key features, benefits, use cases, and best practices. Whether you are a seasoned data engineer or a newcomer to Azure Data Factory, this article aims to equip you with the knowledge to harness the full potential of Integration Runtime for your data integration needs.

Understanding Integration Runtime

Integration Runtime serves as the backbone of Azure Data Factory, enabling efficient data movement and transformation across different sources and destinations. It is the compute infrastructure that Azure Data Factory uses to connect data stores, compute services, and pipelines.

There are three types of Integration Runtime in Azure Data Factory:

1. Azure Integration Runtime

This type of Integration Runtime is fully managed by Microsoft and is best suited for data movement between Azure services and across cloud environments.

2. Self-hosted Integration Runtime