#FakeApp

Note

Hello just wanted to ask! What do you use for smau?

heya! thanks for this ask ♡ i hope it will clear some things up :)

i use memi message (ignore the 2.4 stars it is decent at times but its kinda glitchy for me) for texting and gcs!

other good alternates that i use sometimes are :

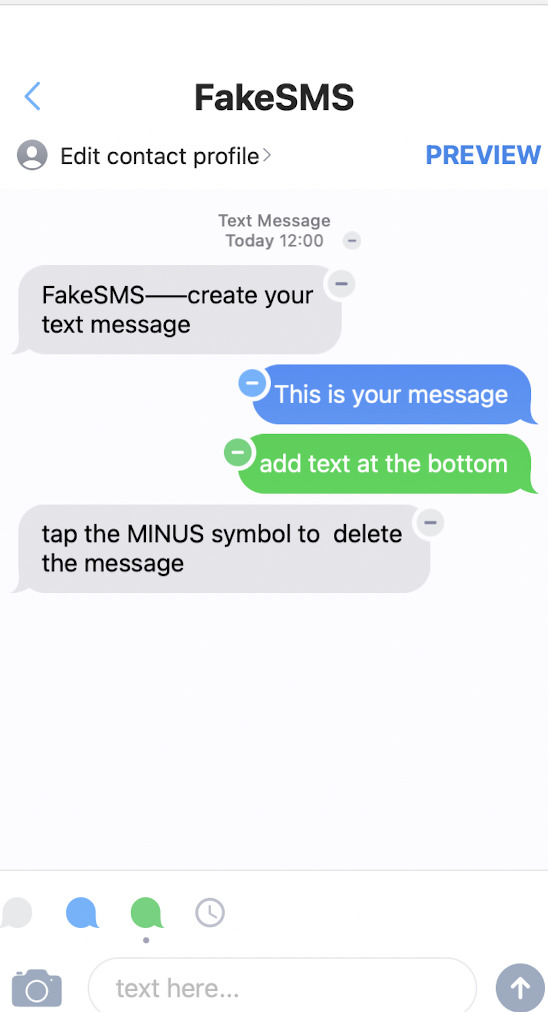

fake chat is really nice and customizable BUT NOT GREAT because of 2 things!!

you cant do group chats

it doesnt save contacts or message history!!

typestory is an alternate app for groupchats!! (you can use it in conjunction with fakeapp <3 these two are also compatible with mac and other devices so its a lot more versatile! ofc, it does require more work)

theres just one big problem

you cant make your own texts in the gc

by that i mean you cant answer anything - because typestory is meant to be a story, meaning that only the characters speak (aka blue and green bubbles)

as for social media (twitter) i use twinote!! definitely the best one out there, looks really legit and is also fairly easy to use! the time function is a lil wack but thats fine and manageable

REASONS WHY ITS VERY GOOD AND MELON APPROVED

making accounts is really easy!!

everything looks perfect

you can even set up notifications, follows

and twitter dms!!! (a function i will definitely be using)

if you have any more questions, feel free to send another dm! thank you ♡♡


Text

Fake Messaging Apps Exploit Chinese Users through Malicious Google Ads

Chinese-speaking users are facing a targeted threat through malicious Google ads promoting restricted messaging apps like Telegram, as part of an ongoing malvertising campaign. Malwarebytes' Jérôme Segura revealed in a Thursday report that the threat actor is exploiting Google advertiser accounts to generate harmful ads, directing unsuspecting users to download Remote Administration Trojans (RATs). These programs grant attackers complete control over a victim's machine, enabling them to introduce additional malware.

"The threat actor is abusing Google advertiser accounts to create malicious ads and pointing them to pages where unsuspecting users will download Remote Administration Trojan (RATs) instead," noted Segura.

This activity, known as FakeAPP, continues a previous attack wave that targeted Hong Kong users searching for messaging apps like WhatsApp and Telegram on search engines in late October 2023.

In the latest phase of the campaign, the threat actors have expanded their scope by including the messaging app LINE in their list of targeted applications. Users are redirected to counterfeit websites hosted on Google Docs or Google Sites.

The Google infrastructure is leveraged to embed links leading to other sites controlled by the threat actor. This facilitates the delivery of malicious installer files, ultimately deploying trojans such as PlugX and Gh0st RAT.

Malwarebytes investigators have traced the origin of the fraudulent ads to two advertiser accounts, namely Interactive Communication Team Limited and Ringier Media Nigeria Limited, both based in Nigeria.

Commenting on the tactics employed by the threat actor, Segura highlighted that they prioritize quantity over quality, continually introducing new payloads and infrastructure for command-and-control purposes.



Text

“We’re not so far from the collapse of reality.”

Major advances in AI now let people convincingly swap faces in videos, making it seem as though a celebrity or public figure did or said something they never did. Software like FakeApp makes this possible, and the technology is evolving quickly, so people should be careful about what they believe.


Text

Jordan Peele’s simulated Obama PSA is a double-edged warning against fake news

This deepfaked warning against deepfakes almost makes its point too well.

"We're entering an era in which our enemies can make anyone say anything at any point in time."

By Aja Romano Apr 18, 2018

Jordan Peele just used deepfakes — the nightmarish dystopian tool we last saw being used to generate fake celebrity porn — to deliver the deepest fake of them all.

The Get Out director teamed up with his brother-in-law, BuzzFeed CEO Jonah Peretti, to produce a public service announcement made by Barack Obama.

Obama’s message? Don’t believe everything you see and read on the internet.

“It may sound basic, but how we move forward in the age of information is going to be the difference between whether we survive or whether we become some kind of fucked-up dystopia,” Obama tells viewers in the BuzzFeed video. He also declares that Black Panther’s villain Killmonger was “right” about his plan for world domination, “Ben Carson is in the Sunken Place” — a reference to one of the heartiest memes from Peele’s Oscar-winning Get Out screenplay — and Trump is a “dipshit.”

As anyone who’s familiar with deepfakes has guessed by now, “Obama” in this video is actually Peele himself, doing his famous interpretation of the former president. The algorithmic machine learning technology of deepfakes allows anyone to create a very convincing simulation of a human subject given ample photographic evidence on which to train the machine about what the image should look like.

Given the sheer amount of media coverage around Obama, it was fairly easy for BuzzFeed’s video producer Jared Sosa to create the simulation — though to get the simulation right still required 56 hours of training the machine, according to BuzzFeed’s report on the video.

“Deepfakes” is the term coined by a Reddit user who made a script for the process and released it onto a subreddit he made, also called deepfakes. Another user took that script and modified it into a downloadable program, FakeApp. But although the term came to the world’s attention in conjunction with celebrity porn, the first complex face-capturing tools used to demonstrate the techniques deployed by FakeApp were originally applied to manipulating political figures.

A 2016 research experiment saw the technique being applied to world leaders like George W. Bush, Vladimir Putin, and Obama. Subsequent research applied the technique just to Obama — and the researchers were immediately wary of the monster they’d created.

“You can’t just take anyone’s voice and turn it into an Obama video,” Steve Seitz, one of the researchers, stated in a press release. “We very consciously decided against going down the path of putting other people’s words into someone’s mouth.”

Barely six months later, deepfakes was born. And as Peele and BuzzFeed have proven, you clearly can just take anyone’s voice and turn it into an Obama video — provided the voice is convincing enough.

Though Reddit ultimately banned all faked porn generated via deepfakes, the Pandora’s box of fake reality generation has been opened, and anything — from Obama to Nicolas Cage — is fair game.

Given all this context, it’s arguable that Peele’s contribution might not actually be helping people understand how serious the potential for reality distortion is, so much as giving them a taste of how fun this tech might be to play around with.

Still, in the age of “fake news,” Peele and Peretti clearly felt the message was timely. “We’re entering an era in which our enemies can make it look like anyone is saying anything at any point in time,” the PSA begins.

Point proven.

You Won’t Believe What Obama Says In This Video! 😉


Photo

Latest list of malicious Android apps. If you have already installed any of these applications, remove them immediately. #maliciousapps #jokervirus #fakeapp https://www.instagram.com/p/CgC5JJ3jJYn/?igshid=NGJjMDIxMWI=


Text

The DeepFakeApp is scary for a variety of reasons, chief among them the ability to make someone you do not like appear to say or do something they have never done. This is not limited to revenge porn (although that in and of itself can be reputation-shattering, not to mention traumatizing). You could turn your enemies into racists, make them murderers, or put them on a screen in places they would never be.

All the while, most viewers are none the wiser, not realizing they’re watching a fake made with a face-swapping application.


Text

Here Come the Fake Videos, Too

By Kevin Roose, NY Times, March 4, 2018

The scene opened on a room with a red sofa, a potted plant and the kind of bland modern art you’d see on a therapist’s wall.

In the room was Michelle Obama, or someone who looked exactly like her. Wearing a low-cut top with a black bra visible underneath, she writhed lustily for the camera and flashed her unmistakable smile.

Then, the former first lady’s doppelgänger began to strip.

The video, which appeared on the online forum Reddit, was what’s known as a “deepfake”--an ultrarealistic fake video made with artificial intelligence software. It was created using a program called FakeApp, which superimposed Mrs. Obama’s face onto the body of a pornographic film actress. The hybrid was uncanny--if you didn’t know better, you might have thought it was really her.

Until recently, realistic computer-generated video was a laborious pursuit available only to big-budget Hollywood productions or cutting-edge researchers. Social media apps like Snapchat include some rudimentary face-morphing technology.

But in recent months, a community of hobbyists has begun experimenting with more powerful tools, including FakeApp--a program that was built by an anonymous developer using open-source software written by Google. FakeApp makes it free and relatively easy to create realistic face swaps and leave few traces of manipulation. Since a version of the app appeared on Reddit in January, it has been downloaded more than 120,000 times, according to its creator.

Deepfakes are one of the newest forms of digital media manipulation, and one of the most obviously mischief-prone. It’s not hard to imagine this technology’s being used to smear politicians, create counterfeit revenge porn or frame people for crimes. Lawmakers have already begun to worry about how deepfakes could be used for political sabotage and propaganda.

Even on morally lax sites like Reddit, deepfakes have raised eyebrows. Recently, FakeApp set off a panic after Motherboard, the technology site, reported that people were using it to create pornographic deepfakes of celebrities. Pornhub, Twitter and other sites quickly banned the videos, and Reddit closed a handful of deepfake groups, including one with nearly 100,000 members.

Some users on Reddit defended deepfakes and blamed the media for overhyping their potential for harm. Others moved their videos to alternative platforms, rightly anticipating that Reddit would crack down under its rules against nonconsensual pornography. And a few expressed moral qualms about putting the technology into the world.

Then, they kept making more.

The deepfake creator community is now in the internet’s shadows. But while out in the open, it gave an unsettling peek into the future.

“This is turning into an episode of Black Mirror,” wrote one Reddit user. The post raised the ontological questions at the heart of the deepfake debate: Does a naked image of Person A become a naked image of Person B if Person B’s face is superimposed in a seamless and untraceable way? In a broader sense, on the internet, what is the difference between representation and reality?

The user then signed off with a shrug: “Godspeed rebels.”

After lurking for several weeks in Reddit’s deepfake community, I decided to see how easy it was to create a (safe for work, nonpornographic) deepfake using my own face.

I started by downloading FakeApp and enlisting two technical experts to help me. The first was Mark McKeague, a colleague in The New York Times’s research and development department. The second was a deepfake creator I found through Reddit, who goes by the nickname Derpfakes.

Because of the controversial nature of deepfakes, Derpfakes would not give his or her real name. Derpfakes started posting deepfake videos on YouTube a few weeks ago, specializing in humorous offerings like Nicolas Cage playing Superman. The account has also posted some how-to videos on deepfake creation.

What I learned is that making a deepfake isn’t simple. But it’s not rocket science, either.

The first step is to find, or rent, a moderately powerful computer. FakeApp uses a suite of machine learning tools called TensorFlow, which was developed by Google’s A.I. division and released to the public in 2015. The software teaches itself to perform image-recognition tasks through trial and error. The more processing power on hand, the faster it works.

To get more speed, Mark and I used a remote server rented through Google Cloud Platform. It provided enough processing power to cut the time frame down to hours, rather than the days or weeks it might take on my laptop.

Once Mark set up the remote server and loaded FakeApp on it, we were on to the next step: data collection.

Picking the right source data is crucial. Short video clips are easier to manipulate than long clips, and scenes shot at a single angle produce better results than scenes with multiple angles. Genetics also help. The more the faces resemble each other, the better.

I’m a brown-haired white man with a short beard, so Mark and I decided to try several other brown-haired, stubbled white guys. We started with Ryan Gosling. (Aim high, right?) I also sent Derpfakes, my outsourced Reddit expert, several video options to choose from.

Next, we took several hundred photos of my face, and gathered images of Mr. Gosling’s face using a clip from a recent TV appearance. FakeApp uses these images to train the deep learning model and teach it to emulate our facial expressions.

To get the broadest photo set possible, I twisted my head at different angles, making as many different faces as I could.

Mark then used a program to crop those images down, isolating just our faces, and manually deleted any blurred or badly cropped photos. He then fed the frames into FakeApp. In all, we used 417 photos of me, and 1,113 of Mr. Gosling.

When the images were ready, Mark pressed “start” on FakeApp, and the training began. His computer screen filled with images of my face and Mr. Gosling’s face, as the program tried to identify patterns and similarities.

About eight hours later, after our model had been sufficiently trained, Mark used FakeApp to finish putting my face on Mr. Gosling’s body. The video was blurry and bizarre, and Mr. Gosling’s face occasionally flickered into view. Only the legally blind would mistake the person in the video for me.

We did better with a clip of Chris Pratt, the scruffy star of “Jurassic World,” whose face shape is a little more similar to mine. For this test, Mark used a bigger data set--1,861 photos of me, 1,023 of him--and let the model run overnight.

A few days later, Derpfakes, who had also been training a model, sent me a finished deepfake made using the footage I had sent and a video of the actor Jake Gyllenhaal. This one was much more lifelike, a true hybrid that mixed my facial features with his hair, beard and body.

Derpfakes repeated the process with videos of Jimmy Kimmel and Liev Schreiber, both of which turned out well. As an experienced deepfake creator, Derpfakes had a more intuitive sense of which source videos would produce a clean result, and more experience with the subtle blending and tweaking that takes place at the end of the deepfake process.

In all, our deepfake experiment took three days and cost $85.96 in Google Cloud Platform credits. That seemed like a small price to pay for stardom.

On the day of the school shooting last month in Parkland, Fla., a screenshot of a BuzzFeed News article, “Why We Need to Take Away White People’s Guns Now More Than Ever,” written by a reporter named Richie Horowitz, began making the rounds on social media.

The whole thing was fake. No BuzzFeed employee named Richie Horowitz exists, and no article with that title was ever published on the site. But the doctored image pulsed through right-wing outrage channels and was boosted by activists on Twitter. It wasn’t an A.I.-generated deepfake, or even a particularly sophisticated Photoshop job, but it did the trick.

Online misinformation, no matter how sleekly produced, spreads through a familiar process once it enters our social distribution channels. The hoax gets 50,000 shares, and the debunking an hour later gets 200. The carnival barker gets an algorithmic boost on services like Facebook and YouTube, while the expert screams into the void.

There’s no reason to believe that deepfake videos will operate any differently. People will share them when they’re ideologically convenient and dismiss them when they’re not. The dupes who fall for satirical stories from The Onion will be fooled by deepfakes, and the scrupulous people who care about the truth will find ways to detect and debunk them.

“There’s no choice,” said Hao Li, an assistant professor of computer science at the University of Southern California. Mr. Li, who is also the founder of Pinscreen, a company that uses artificial intelligence to create lifelike 3-D avatars, said the weaponization of A.I. was inevitable and would require a sudden shift in public awareness.

“I see this as the next form of communication,” he said. “I worry that people will use it to blackmail others, or do bad things. You have to educate people that this is possible.”

So, O.K. Here I am, telling you this: An A.I. program powerful enough to turn Michelle Obama into a pornography star, or transform a schlubby newspaper columnist into Jake Gyllenhaal, is in our midst. Manipulated video will soon become far more commonplace.

And there’s probably nothing we can do except try to bat the fakes down as they happen, pressure social media companies to fight misinformation aggressively, and trust our eyes a little less every day.


Photo

I'm up to $95.48 saved and only need a few dollars more to cash out on #LuckyPusher... Problem is... It took me only a few days of playing to reach $95.48, and after that not a single green coin was ever offered again, so I've been playing for about 2 weeks with zero chance of ever reaching the $100 payout. I'm deleting this #scamapp as well as all the others that have me stuck just a few dollars away from the payout but refuse to let me actually reach it. #scammerseverywhere #scamsdaily #scamalert #badapp #badapps #dontwasteyourtime #nopayout #nevercashout #fakeapp https://www.instagram.com/p/CFVYY29j5mx/?igshid=whwspuzdb1uq



Text

I’m a little bent out of shape that FakeApp for deepfakes only runs on NVIDIA right now. There’s so much potential there.


Photo

#appinfluencer #definition #personagem #pessoa #aplicativo #empatia #fakeapp x face #realidade https://www.instagram.com/p/CBdD01vHkVh/?igshid=1c6yrg8jua2iu


Photo

In this week's episode David, John and Kyle discuss a missing woman that was spotted on The Bachelor (2:00), a twist on robotic telepresence nicknamed “Human Uber” (8:05), a Kickstarter for a unique movie viewing experience (12:20) and a creepy software app that can replace faces in any video (18:00). We also review the series premiere of Netflix’s Altered Carbon entitled “Out of the Past” (27:00). Finally, we discuss the first week of Celebrity Big Brother including the first two live evictions (37:50).

http://www.dualredundancy.com/2018/02/podcast-193-altered-carbon-and.html

#the bachelor#rebekah martinez#missing bachelor#human uber#chameleon mask#kickstarter#poptheatr#poptheatr kickstarter#nicolas cage#fakeapp#altered carbon#altered carbon pilot#altered carbon podcast#altered carbon review#altered carbon premiere#altered carbon out of the past#celebrity big brother#big brother#bbceleb#celebbb#big brother podcast#celebrity big brother podcast#omarosa#celebrity big brother omarosa#omarosa big brother#shannon elizabeth#james maslow#metta world peace



Video


STLQR... for those of us loving others from a distance today... and every other day... and hour... and minute... 😂🔪📱🎬 // #rowlbertosmedia #stlqr #fakeapp #comedy #clinger #stage5 #stage5clinger #maneater #actress #actor #singlesawarenessday #stalker #stalking #femalekiller #obsession #obsessed #humor #newapp #greenscreen #rayerichards #actinglife #actorslife #sketchcomedy

