#Geolocation Database


IP Address Geolocation API | Detect City By IP | DB-IP

DB-IP provides a powerful IP address geolocation API that lets users easily determine the geographic location of an IP address. With this tool, you can detect a city by IP, offering precise data for enhanced analytics, targeted content, and improved security. The API delivers real-time results, making it ideal for businesses that need accurate location-based information. Whether used for fraud prevention or customized user experiences, DB-IP offers reliable, scalable geolocation services tailored to diverse industry needs.
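Under the hood, an IP-to-city database is essentially a sorted list of address ranges, each mapped to a location, and a lookup is a binary search over those ranges. The sketch below shows that idea for a locally downloaded file; it does not call DB-IP's hosted API. It assumes a CSV layout of `ip_start,ip_end,continent,country,stateprov,city,latitude,longitude`, modeled on DB-IP's downloadable "IP to City Lite" file, so check the real column order before relying on it. The helper names (`ip_key`, `load_ranges`, `lookup`) are hypothetical, and the sample rows use reserved documentation address ranges with placeholder place names, not real DB-IP data.

```python
import bisect
import csv
import io
import ipaddress
from dataclasses import dataclass
from typing import List, Optional, Tuple

Key = Tuple[int, int]  # (IP version, integer value of the address)


def ip_key(ip: str) -> Key:
    """Sortable key; including the version keeps IPv4 and IPv6 values from colliding."""
    addr = ipaddress.ip_address(ip)
    return (addr.version, int(addr))


@dataclass(frozen=True)
class IpRange:
    start: Key   # first address in the range
    end: Key     # last address in the range (inclusive)
    country: str
    region: str
    city: str


def load_ranges(csv_text: str) -> List[IpRange]:
    """Parse rows shaped like ip_start,ip_end,continent,country,stateprov,city,lat,lon.

    The column order is an assumption modeled on DB-IP's Lite CSV; verify it first.
    """
    ranges = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        start, end, _continent, country, region, city = row[:6]
        ranges.append(IpRange(ip_key(start), ip_key(end), country, region, city))
    ranges.sort(key=lambda r: r.start)  # binary search requires sorted starts
    return ranges


def lookup(ranges: List[IpRange], ip: str) -> Optional[IpRange]:
    """Return the range containing ip, or None if no range covers it."""
    target = ip_key(ip)
    starts = [r.start for r in ranges]  # rebuilt per call for brevity; cache it in real use
    i = bisect.bisect_right(starts, target) - 1  # last range starting at or before target
    if i >= 0 and ranges[i].start <= target <= ranges[i].end:
        return ranges[i]
    return None


# Placeholder rows in reserved documentation ranges (not real DB-IP data).
SAMPLE = """\
192.0.2.0,192.0.2.255,NA,US,Example Region,Example City,0,0
198.51.100.0,198.51.100.255,EU,DE,Sample Region,Sample Town,0,0
"""

ranges = load_ranges(SAMPLE)
hit = lookup(ranges, "198.51.100.42")
print(hit.city if hit else "unknown")
print(lookup(ranges, "203.0.113.7"))
```

Because each row covers a whole block of addresses, the answer is only as precise as the range boundaries and as fresh as the file; a hosted API hides this, but the same range-matching idea is what determines which city an address maps to.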


Operatives from Elon Musk’s so-called Department of Government Efficiency (DOGE) are building a master database at the Department of Homeland Security (DHS) that could track and surveil undocumented immigrants, two sources with direct knowledge tell WIRED.

DOGE is knitting together immigration databases from across DHS and uploading data from outside agencies including the Social Security Administration (SSA), as well as voting records, sources say. This, experts tell WIRED, could create a system that could later be searched to identify and surveil immigrants.

The scale at which DOGE is seeking to interconnect data, including sensitive biometric data, has never been done before, raising alarms with experts who fear it may lead to disastrous privacy violations for citizens, certified foreign workers, and undocumented immigrants.

A United States Citizenship and Immigration Services (USCIS) data lake, or centralized repository, existed at DHS prior to DOGE. It included data related to immigration cases, like requests for benefits, supporting evidence in immigration cases, and whether an application has been received and is pending, approved, or denied. Since at least mid-March, however, DOGE has been uploading mass amounts of data to this preexisting USCIS data lake, including data from the Internal Revenue Service (IRS), SSA, and voting data from Pennsylvania and Florida, two DHS sources with direct knowledge tell WIRED.

“They are trying to amass a huge amount of data,” a senior DHS official tells WIRED. “It has nothing to do with finding fraud or wasteful spending … They are already cross-referencing immigration with SSA and IRS as well as voter data.”

Since President Donald Trump’s return to the White House earlier this year, WIRED and other outlets have reported extensively on DOGE’s attempts to gain unprecedented access to government data, but until recently little has been publicly known about the purpose of such requests or how they would be processed. Reporting from The New York Times and The Washington Post has made clear that one aim is to cross-reference datasets and leverage access to sensitive SSA systems to effectively cut immigrants off from participating in the economy, which the administration hopes would force them to leave the country. The scope of DOGE’s efforts to support the Trump administration’s immigration crackdown appears to be far broader than this, though. Among other things, it seems to involve centralizing immigrant-related data from across the government to surveil, geolocate, and track targeted immigrants in near real time.

DHS and the White House did not immediately respond to requests for comment.

DOGE’s collection of personal data on immigrants around the US has dovetailed with the Trump administration’s continued immigration crackdown. “Our administration will not rest until every single violent illegal alien is removed from our country,” Karoline Leavitt, White House press secretary, said in a press conference on Tuesday.

On Thursday, Gerald Connolly, a Democrat from Virginia and ranking member on the House Oversight Committee, sent a letter to the SSA office of the inspector general stating that representatives have spoken with an agency whistleblower who has warned them that DOGE was building a “master database” containing SSA, IRS, and HHS data.

“The committee is in possession of multiple verifiable reports showing that DOGE has exfiltrated sensitive government data across agencies for unknown purposes,” a senior oversight committee aide claims to WIRED. “Also concerning, a pattern of technical malfeasance has emerged, showing these DOGE staffers are not abiding by our nation’s privacy and cybersecurity laws and their actions are more in line with tactics used by adversaries waging an attack on US government systems. They are using excessive and unprecedented system access to intentionally cover their tracks and avoid oversight so they can creep on Americans’ data from the shadows.”

“There's a reason these systems are siloed,” says Victoria Noble, a staff attorney at the Electronic Frontier Foundation. “When you put all of an agency's data into a central repository that everyone within an agency or even other agencies can access, you end up dramatically increasing the risk that this information will be accessed by people who don't need it and are using it for improper reasons or repressive goals, to weaponize the information, use it against people they dislike, dissidents, surveil immigrants or other groups.”

One of DOGE’s primary hurdles to creating a searchable data lake has been obtaining access to agency data. Even within an agency like DHS, there are several disparate pools of data across ICE, USCIS, Customs and Border Protection, and Homeland Security Investigations (HSI). Though some access is shared, particularly for law enforcement purposes, these pools have not historically been commingled by default because the data is only meant to be used for specific purposes, experts tell WIRED. ICE and HSI, for instance, are law enforcement bodies and sometimes need court orders to access an individual's information for criminal investigations, whereas USCIS collects sensitive information as part of the regular course of issuing visas and green cards.

DOGE operatives Edward Coristine, Kyle Schutt, Aram Moghaddassi, and Payton Rehling have already been granted access to systems at USCIS, FedScoop reported earlier this month. The USCIS databases contain information on refugees and asylum seekers and possibly data on green card holders, naturalized US citizens, and Deferred Action for Childhood Arrivals recipients, a DHS source familiar with the systems tells WIRED.

DOGE wants to upload information to the data lake from myUSCIS, the online portal where immigrants can file petitions, communicate with USCIS, view their application history, and respond to requests for evidence supporting their case, two DHS sources with direct knowledge tell WIRED. Combined with immigrants’ IP address information, which sources tell WIRED that DOGE also wants, this data could be used to help geolocate undocumented immigrants, experts say.

Voting data, at least from Pennsylvania and Florida, also appears to have been uploaded to the USCIS data lake. In the case of Pennsylvania, two DHS sources tell WIRED that it is being joined with biometric data from USCIS’s Customer Profile Management System, identified on DHS’s website as a “person-centric repository of biometric and associated biographic information provided by applicants, petitioners, requestors, and beneficiaries” who have been “issued a secure card or travel document identifying the receipt of an immigration benefit.”

“DHS, for good reason, has always been very careful about sharing data,” says a former DHS staff member who spoke to WIRED on the condition of anonymity because they were not authorized to speak to the press. “Seeing this change is very jarring. The systemization of it all is what gets scary, in my opinion, because it could allow the government to go after real or perceived enemies or ‘aliens,’ ‘enemy aliens.’”

While government agencies frequently share data, this process is documented and limited to specific purposes, according to experts. Still, the consolidation appears to have administration buy-in: On March 20, President Trump signed an executive order requiring all federal agencies to facilitate “both the intra- and inter-agency sharing and consolidation of unclassified agency records.” DOGE officials and Trump administration agency leaders have also suggested centralizing all government data into one single repository. “As you think about the future of AI, in order to think about using any of these tools at scale, we gotta get our data in one place,” General Services Administration acting administrator Stephen Ehikian said in a town hall meeting on March 20. In an interview with Fox News in March, Airbnb cofounder and DOGE member Joe Gebbia asserted that this kind of data sharing would create an “Apple-like store experience” of government services.

According to the former staffer, it was historically “extremely hard” to get access to data that DHS already owned across its different departments. A combined data lake would “represent significant departure in data norms and policies.” But, they say, “it’s easier to do this with data that DHS controls” than to try to combine it with sensitive data from other agencies, because accessing data from other agencies can have even more barriers.

That hasn’t stopped DOGE operatives from spending the last few months requesting access to immigration information that was, until recently, siloed across different government agencies. According to documents filed in the American Federation of State, County and Municipal Employees, AFL-CIO v. Social Security Administration lawsuit on March 15, members of DOGE who were stationed at SSA requested access to SAVE, a USCIS database that local, state, and federal governments use to verify a person’s immigration status.

According to two DHS sources with direct knowledge, the SSA data was uploaded to the USCIS system on March 24, only nine days after DOGE received access to SSA’s sensitive government data systems. An SSA source tells WIRED that the types of information are consistent with the agency's Numident database, which is the file of information contained in a Social Security number application. The Numident record would include a person’s Social Security number, full names, birthdates, citizenship, race, ethnicity, sex, mother’s maiden name, an alien number, and more.

Oversight for the protection of this data also appears to now be more limited. In March, DHS announced cuts to the Office for Civil Rights and Civil Liberties (CRCL), the Office of the Immigration Detention Ombudsman, and the Office of the Citizenship and Immigration Services Ombudsman, all key offices that served as significant safeguards against misuse of data. “We didn't make a move in the data world without talking to the CRCL,” says the former DHS employee.

CRCL, which investigates possible rights abuses by DHS and whose creation was mandated by Congress, had been a particular target of DOGE. According to ProPublica, in a February meeting with the CRCL team, Schutt said, “This whole program sounds like money laundering.”

Schutt did not immediately respond to a request for comment.

Musk loyalists and DOGE operatives have spoken at length about parsing government data to find instances of supposed illegal immigration. Antonio Gracias, who according to Politico is leading DOGE’s “immigration task force,” told Fox and Friends that DOGE was looking at voter data as it relates to undocumented immigrants. “Just because we were curious, we then looked to see if they were on the voter rolls,” he said. “And we found in a handful of cooperative states that there were thousands of them on the voter rolls and that many of them had voted.” (Very few noncitizens voted in the 2024 election, and naturalized immigrants were more likely to vote Republican.) Gracias is also part of the DOGE team at SSA and founded the investment firm Valor Equity Partners. He also worked with Musk for many years at Tesla and helped the centibillionaire take the company public.

“As part of their fixation on this conspiracy theory that undocumented people are voting, they're also pulling in tens of thousands, millions of US citizens who did nothing more than vote or file for Social Security benefits,” Cody Venzke, a senior policy counsel at the American Civil Liberties Union focused on privacy and surveillance, tells WIRED. “It's a massive dragnet that's going to have all sorts of downstream consequences for not just undocumented people but US citizens and people who are entitled to be here as well.”

Over the past few weeks, DOGE leadership within the IRS has orchestrated a “hackathon” aimed at plotting out a “mega API” allowing privileged users to view all agency data from a central access point. Sources tell WIRED the project will likely be hosted on Foundry, software developed by Palantir, a company cofounded by Musk ally and billionaire tech investor Peter Thiel. An API is an application programming interface that allows different software systems to exchange data. While the Treasury Department has denied the existence of a contract for this work, IRS engineers were invited to another three-day “training and building session” on the project at Palantir’s Georgetown offices in Washington, DC, this week, according to a document viewed by WIRED.

“Building it out as a series of APIs they can connect to is more feasible and quicker than putting all the data in a single place, which is probably what they really want,” one SSA source tells WIRED.

On April 5, DHS struck an agreement with the IRS to use tax data to search for more than seven million migrants working and living in the US. ICE has also recently paid Palantir millions of dollars to update and modify an ICE database focused on tracking down immigrants, 404 Media reported.

Multiple current and former government IT sources tell WIRED that it would be easy to connect the IRS’s Palantir system with the ICE system at DHS, allowing users to query data from both systems simultaneously. A system like the one being created at the IRS with Palantir could enable near-instantaneous access to tax information for use by DHS and immigration enforcement. It could also be leveraged to share and query data from other agencies, including immigration data from DHS. Other DHS sub-agencies, like USCIS, use Databricks software to organize and search their data, but these could also easily be connected to outside Foundry instances, experts say. Last month, Palantir and Databricks struck a deal making the two software platforms more interoperable.

“I think it's hard to overstate what a significant departure this is and the reshaping of longstanding norms and expectations that people have about what the government does with their data,” says Elizabeth Laird, director of equity in civic technology at the Center for Democracy and Technology, who noted that agencies trying to match different datasets can also lead to errors. “You have false positives and you have false negatives. But in this case, you know, a false positive where you're saying someone should be targeted for deportation.”

Mistakes in the context of immigration can have devastating consequences: In March, authorities arrested and deported Kilmar Abrego Garcia, a Salvadoran national, due to, the Trump administration says, “an administrative error.” Still, the administration has refused to bring Abrego Garcia back, defying a Supreme Court ruling.

“The ultimate concern is a panopticon of a single federal database with everything that the government knows about every single person in this country,” Venzke says. “What we are seeing is likely the first step in creating that centralized dossier on everyone in this country.”


Frontend Project Ideas

INTERMEDIATE

1. Chat Application

2. Expense Tracker

3. Weather Dashboard

4. Portfolio CMS

5. Blog CMS

6. Interactive Maps Data

7. Weather App

8. Geolocation

9. Task Management App

10. Online Quiz Platform

11. Calendar App

12. Social Media Dashboard

13. Stock Market Tracker

14. Travel Planner

15. Online Code Editor

16. Movie Database

17. Recipe-Sharing Platform

18. Portfolio Generator

19. Interactive Data Visualization

20. Pomodoro Timer

21. Weather Forecast App

#codeblr#code#coding#learn to code#progblr#programming#software#studyblr#full stack development#full stack developer#full stack web development#webdevelopment#front end developers#front end development#technology#tech#web developers#learning


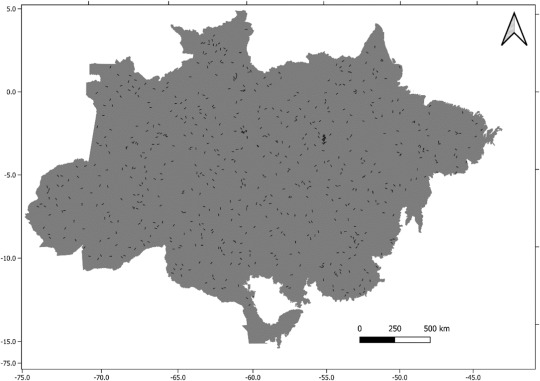

A biomass map of the Brazilian Amazon from multisource remote sensing

The Amazon Forest, the largest contiguous tropical forest in the world, stores a significant fraction of the carbon on land. Changes in climate and land use affect total carbon stocks, making it critical to continuously update and revise the best estimates for the region, particularly considering changes in forest dynamics. Forest inventory data cover only a tiny fraction of the Amazon region, and this coverage is not sufficient to ensure reliable data interpolation and validation. This paper presents a new above-ground biomass map for the Brazilian Amazon, together with its associated uncertainty, both at a resolution of 250 m, with 2016 as the baseline year of the satellite dataset (i.e., the year of the satellite observations). A significant increase in data availability from forest inventories and remote sensing has enabled progress towards high-resolution biomass estimates. This work uses the largest airborne LiDAR database ever collected in the Amazon, mapping 360,000 km² through transects distributed across all vegetation categories in the region. The map uses airborne laser scanning (ALS) data, calibrated by field forest inventories, and extrapolates them to the whole region using a machine learning approach with inputs from Synthetic Aperture Radar (PALSAR), vegetation indices from the Moderate-Resolution Imaging Spectroradiometer (MODIS) satellite, and precipitation information from the Tropical Rainfall Measuring Mission (TRMM). A total of 174 field inventories geolocated using a Differential Global Positioning System (DGPS) were used to validate the biomass estimates. The experimental design allowed for a comprehensive representation of several vegetation types, producing an above-ground biomass map with a maximum value of 518 Mg ha−1, a mean of 174 Mg ha−1, and a standard deviation of 102 Mg ha−1.
This unique dataset enabled a better representation of the regional distribution of forest biomass and structure, supporting further studies and providing critical information for decision-making on forest conservation, planning, carbon emission estimates, and mechanisms for supporting carbon emission reductions.
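To make the per-hectare figures more tangible, the arithmetic below converts a biomass density into the biomass held in one 250 m pixel and a rough carbon equivalent. A 250 m × 250 m pixel covers 62,500 m², i.e. 6.25 ha. The 174 Mg ha−1 input is simply the map's reported mean; the carbon fraction of 0.47 is an assumption (a commonly used default for tropical wood), not a value taken from the paper, and the function names are hypothetical.

```python
# Hypothetical worked example; CARBON_FRACTION is an assumed default, not from the paper.
PIXEL_SIZE_M = 250.0      # map resolution stated in the abstract
M2_PER_HA = 10_000.0      # square meters per hectare
CARBON_FRACTION = 0.47    # assumed share of dry biomass that is carbon


def pixel_area_ha(pixel_size_m: float) -> float:
    """Area of one square pixel in hectares (250 m x 250 m = 6.25 ha)."""
    return pixel_size_m * pixel_size_m / M2_PER_HA


def pixel_biomass_mg(agb_mg_per_ha: float, pixel_size_m: float = PIXEL_SIZE_M) -> float:
    """Above-ground biomass (Mg) contained in one pixel of the given density."""
    return agb_mg_per_ha * pixel_area_ha(pixel_size_m)


def biomass_to_carbon_mg(biomass_mg: float, carbon_fraction: float = CARBON_FRACTION) -> float:
    """Rough carbon mass (Mg) in a given dry biomass, under the assumed fraction."""
    return biomass_mg * carbon_fraction


mean_agb = 174.0  # Mg/ha, the map's reported mean
biomass = pixel_biomass_mg(mean_agb)
carbon = biomass_to_carbon_mg(biomass)
print(f"{pixel_area_ha(PIXEL_SIZE_M)} ha per pixel, {biomass:.1f} Mg biomass, ~{carbon:.1f} Mg carbon")
```

Swapping in the map's maximum (518 Mg ha−1) or another carbon fraction only changes the inputs; the pixel-area conversion stays the same.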

Read the paper.

#brazil#science#ecology#politics#brazilian politics#amazon rainforest#mod nise da silveira#image description in alt


AHH Lena ive got a problem!! so i think my blog got hacked into???? but theres nothing different/changed so im not sure, but i was looking at stuff and it says i was "active" at some place ive never been to? but the thing is that weekend i had traveled somewhat close (like to the neighboring state, but it was on the other side away from me) and im not sure if it could just be a glitch? but im still quite worried. i mean i just changed my password but it says it was active recently and i dont know what to do and i dont want to delete my blog (its super small anyways so it wouldnt matter tho)

Don’t panic friend! I’ve got some ideas for you based on some research I’ve done.

(Also apologies for any typos, i’m typing this out on mobile in a waiting room lol)

So, i hadn’t heard of this “active sessions” section of Tumblr before, but quickly found it on the web version under account settings. According to Tumblr’s FAQs, this shows any logins/access sessions to your Tumblr account by browser, and includes location info, to help you keep your account secure.

Looking at mine, I recognized various devices I’ve used over the past several months, with the locations as my home town. Two logs stood out to me though. 1 - my current session (marked as “current” in green) says my location is in a different part of the state. Odd, but could be due to having a new phone? 2 - apparently a session back in April came from a completely different state. Very odd right?

If i’d come across this back in April, i probably would’ve freaked out like you, anon. But given that it happened 3 months ago (and i haven’t noticed any unusual activity on my account), i couldn’t help but wonder how accurate these locations are…

Hence a research rabbit hole about IP addresses. You’ll notice underneath the city/state display is a string of numbers. This is the IP address of the browser’s network connection. There are several free websites where you can look up that IP address and see what location it maps to. Apparently, IP-based locations aren’t always accurate, because they come from geolocation databases that only estimate where an address is and get updated over time. So at the time of that connection, my location was displaying as one place when I was really somewhere else. But when I search that IP address now, it shows my current and accurate location.

I’ve also experienced odd location issues in other areas… like when I access Netflix from a new device and it sends a confirmation email, it usually has the city wrong.

So… this is what I did to look into the odd location activity on my account, and i’m comfortable saying it was an IP address geolocation error. It’s possible that’s what you’re seeing on your account too.

If not… the next step i would recommend is to double-check the email address you have on the account. If someone actually hacked your account, that would be one of the first things they’d change in order to keep access. Really look at the address, because sometimes they’ll try to throw you off by making a similar email but with, like, an added dot or an extra letter that you wouldn’t catch at first glance. You can change it back to your own address in addition to changing your password.
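The “similar-looking email” trick above comes down to an address that is not identical to yours but only a character or two away. A minimal sketch of that check, assuming you know your real address: it measures Levenshtein edit distance (insertions, deletions, substitutions) and flags anything that differs by at most 2 edits. The threshold of 2 and the function names are arbitrary, hypothetical choices for illustration, not a Tumblr feature, so reading the address carefully in your settings is still the real check.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, or substitutions to turn a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def looks_like_impostor(expected: str, on_file: str, max_edits: int = 2) -> bool:
    """True if on_file is not your address but differs from it by only a few characters."""
    a, b = expected.strip().lower(), on_file.strip().lower()
    if a == b:
        return False  # exact match, ignoring case and surrounding spaces
    return edit_distance(a, b) <= max_edits


mine = "anon.blogger@example.com"  # placeholder address
for candidate in ["anon.blogger@example.com",
                  "anon.bloger@example.com",    # missing letter
                  "anon..blogger@example.com",  # added dot
                  "someone.else@example.org"]:  # unrelated address
    print(candidate, looks_like_impostor(mine, candidate))
```

A totally different address is not flagged here on purpose: that case is obvious at a glance, while the near-miss is the one that is easy to overlook.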

Those are my two main ideas. I’m not an expert in these things but that’s where i would start, especially if you’re not seeing any suspicious activity on your account. Anyone else with ideas or experience here, feel free to chime in!


Mobile Apps Enhancing Personal Safety

Introduction to Personal Safety Challenges

In an era where safety concerns permeate daily life, individuals seek innovative solutions to protect themselves in unpredictable environments. Urban landscapes, late-night commutes, and solitary activities amplify the need for reliable tools that empower users to navigate potential threats with confidence. The rise of smartphone technology has catalyzed a transformative approach to personal security, offering accessible and immediate resources at one’s fingertips. Among these advancements, the mobile app for violence prevention stands out as a beacon of proactive safety, integrating cutting-edge features to mitigate risks and foster peace of mind. This article explores how these applications redefine personal safety, their functionalities, and their profound impact on communities worldwide.

The Role of Technology in Violence Prevention

Smartphones have transcended their role as communication devices, evolving into lifelines for personal protection. A mobile app for violence prevention leverages real-time data, geolocation, and user-friendly interfaces to create a robust safety net. These applications enable users to alert trusted contacts or authorities during emergencies, share live locations, and access safety resources tailored to their surroundings. By harnessing artificial intelligence, some apps analyze patterns of behavior or environmental cues to warn users of potential dangers, such as high-crime areas or suspicious activities. This proactive approach empowers individuals to make informed decisions, transforming reactive responses into preventive strategies. The seamless integration of technology into daily routines ensures that safety is always within reach, fostering a sense of control in uncertain situations.

Features That Empower Users

The strength of a mobile app for violence prevention lies in its diverse and intuitive features, designed to address multifaceted safety concerns. Real-time location sharing allows users to inform loved ones of their whereabouts, ensuring accountability during vulnerable moments, such as walking alone at night. Emergency alert systems enable rapid communication with pre-selected contacts or local law enforcement, reducing response times in critical situations. Some applications offer discreet panic buttons, allowing users to seek help without drawing attention. Additionally, educational resources within these apps provide tips on de-escalating conflicts or recognizing warning signs, equipping users with knowledge to navigate risks confidently. By combining these functionalities, such apps create a comprehensive safety ecosystem that prioritizes prevention and preparedness.

Guarding Personal Security Through Connectivity

Guarding personal security is deeply embedded in the connectivity fostered by these applications. By linking users with their communities, these tools amplify collective vigilance. For instance, some apps allow users to report suspicious activities anonymously, contributing to a shared database that informs others of potential threats in real time. This crowd-sourced approach strengthens community resilience, as individuals become active participants in safeguarding their environments. Furthermore, integration with wearable devices, such as smartwatches, enhances accessibility, allowing users to trigger alerts without reaching for their phones. This interconnected framework not only protects individuals but also cultivates a culture of mutual care, where safety becomes a collective responsibility.
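At its core, the crowd-sourced reporting described above is a radius query: given the user's position, show recent reports within some distance. The following is a minimal sketch, assuming each report carries a latitude, longitude, and timestamp. The `Report` type, the 1 km radius, and the 2-hour window are hypothetical choices, not taken from any particular app. Distances use the haversine formula on a spherical Earth with a mean radius of 6,371 km, which is accurate enough at neighborhood scale.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


@dataclass
class Report:  # hypothetical shape of an anonymous community report
    lat: float
    lon: float
    created: datetime
    description: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_reports(reports: List[Report], lat: float, lon: float, now: datetime,
                   radius_km: float = 1.0,
                   max_age: timedelta = timedelta(hours=2)) -> List[Report]:
    """Reports within radius_km of (lat, lon) and no older than max_age, nearest first."""
    recent = [r for r in reports if now - r.created <= max_age]
    close = [r for r in recent if haversine_km(lat, lon, r.lat, r.lon) <= radius_km]
    return sorted(close, key=lambda r: haversine_km(lat, lon, r.lat, r.lon))


now = datetime(2024, 1, 1, 22, 0)
reports = [
    Report(40.7128, -74.0060, now - timedelta(minutes=30), "A"),  # at the user's position
    Report(40.7200, -74.0060, now - timedelta(minutes=10), "B"),  # roughly 0.8 km north
    Report(40.7580, -73.9855, now - timedelta(minutes=5), "C"),   # roughly 5 km away
    Report(40.7130, -74.0060, now - timedelta(hours=5), "D"),     # close but stale
]
print([r.description for r in nearby_reports(reports, 40.7128, -74.0060, now)])
```

A production app would push this filter into a spatially indexed database rather than scanning a list, but the distance-and-recency rule is the same.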

Impact on Vulnerable Populations

The transformative potential of a mobile app for violence prevention is particularly significant for vulnerable populations, including women, students, and the elderly. These groups often face heightened risks in public spaces, making accessible safety tools essential. For example, apps tailored for college campuses provide features like safe route planning, connecting students with campus security or peer escorts. Similarly, applications designed for seniors incorporate health alerts alongside safety features, addressing both medical and environmental risks. By catering to specific needs, these apps empower marginalized groups to reclaim their autonomy and navigate their surroundings with greater confidence. The ripple effect of this empowerment extends to families and communities, fostering a broader culture of safety and inclusion.

Challenges and Future Directions

Despite their promise, mobile apps for violence prevention face challenges that warrant attention. Accessibility remains a concern, as not all individuals have access to smartphones or reliable internet connections. Developers must prioritize inclusive design, ensuring that apps are compatible with older devices and available in multiple languages. Privacy is another critical issue, as users entrust sensitive data, such as location information, to these platforms. Robust encryption and transparent data policies are essential to maintain user trust. Looking ahead, advancements in machine learning and predictive analytics hold potential to enhance app capabilities, offering more precise risk assessments and personalized safety recommendations. By addressing these challenges, the future of these applications promises even greater impact in guarding personal security.


The Ultimate Guide to Developing a Multi-Service App Like Gojek

In today's digital-first world, convenience drives consumer behavior. The rise of multi-service platforms like Gojek has revolutionized the way people access everyday services—from booking a ride and ordering food to getting a massage or scheduling home cleaning. These apps simplify life by merging multiple services into a single mobile solution.

If you're an entrepreneur or business owner looking to develop a super app like Gojek, this guide will walk you through everything you need to know—from ideation and planning to features, technology, cost, and launching.

1. Understanding the Gojek Model

What is Gojek?

Gojek is an Indonesian multi-service app that started as a ride-hailing service and evolved into a digital giant offering over 20 on-demand services. It now serves millions of users across Southeast Asia, making it one of the most successful super apps in the world.

Why Is the Gojek Model Successful?

Diverse Services: Gojek bundles transport, delivery, logistics, and home services in one app.

User Convenience: One login for multiple services.

Loyalty Programs: Rewards and incentives for repeat users.

Scalability: Built to adapt and scale rapidly.

2. Market Research and Business Planning

Before writing a single line of code, you must understand the market and define your niche.

Key Steps:

Competitor Analysis: Study apps like Gojek, Grab, Careem, and Uber.

User Persona Development: Identify your target audience and their pain points.

Service Selection: Decide which services to offer at launch—e.g., taxi rides, food delivery, parcel delivery, or healthcare.

Monetization Model: Plan your revenue streams (commission-based, subscription, ads, etc.).

3. Essential Features of a Multi-Service App

A. User App Features

User Registration & Login

Multi-Service Dashboard

Real-Time Tracking

Secure Payments

Reviews & Ratings

Push Notifications

Loyalty & Referral Programs

B. Service Provider App Features

Service Registration

Availability Toggle

Request Management

Earnings Dashboard

Ratings & Feedback

C. Admin Panel Features

User & Provider Management

Commission Tracking

Service Management

Reports & Analytics

Promotions & Discounts Management

4. Choosing the Right Tech Stack

The technology behind your app will determine its performance, scalability, and user experience.

Backend

Programming Languages: JavaScript/TypeScript (on Node.js), Python, or Java

Databases: MongoDB, MySQL, Firebase (Cloud Firestore or Realtime Database)

Hosting: AWS, Google Cloud, Microsoft Azure

APIs: REST or GraphQL

Frontend

Mobile Platforms: Android (Kotlin/Java), iOS (Swift)

Cross-Platform: Flutter or React Native

Web Dashboard: Angular, React.js, or Vue.js

Other Technologies

Payment Gateways: Stripe, Razorpay, PayPal

Geolocation: Google Maps API

Push Notifications: Firebase Cloud Messaging (FCM)

Chat Functionality: Socket.IO or Firebase

5. Design and User Experience (UX)

Design is crucial in a super app where users interact with multiple services.

UX/UI Design Tips:

Intuitive Interface: Simplify navigation between services.

Consistent Aesthetics: Maintain color schemes and branding across all screens.

Microinteractions: Small animations or responses that enhance user satisfaction.

Accessibility: Consider voice commands and larger fonts for inclusivity.

6. Development Phases

A well-planned development cycle ensures timely delivery and quality output.

A. Discovery Phase

Finalize scope

Create wireframes and user flows

Define technology stack

B. MVP Development

Start with a Minimum Viable Product including essential features to test market response.

C. Full-Scale Development

Once the MVP is validated, build advanced features and integrations.

D. Testing

Conduct extensive testing:

Unit Testing

Integration Testing

User Acceptance Testing (UAT)

Performance Testing

7. Launching the App

Pre-Launch Checklist

App Store Optimization (ASO)

Marketing campaigns

Beta testing and feedback

Final round of bug fixes

Post-Launch

Monitor performance

User support

Continuous updates

Roll out new features based on feedback

8. Marketing Your Multi-Service App

Marketing is key to onboarding users and service providers.

Strategies:

Pre-Launch Hype: Use teasers, landing pages, and early access invites.

Influencer Collaborations: Partner with local influencers.

Referral Programs: Encourage user growth via rewards.

Local SEO: Optimize for city-based searches.

In-App Promotions: Offer discounts and bundle deals.

9. Legal and Compliance Considerations

Don't overlook legal matters when launching a multi-service platform.

Key Aspects:

Licensing: Obtain the licenses required in your country for each service you offer.

Data Protection: Comply with GDPR, HIPAA (for health data in the US), or other applicable local data laws.

Contracts: Create terms of service for providers and users.

Taxation: Prepare for tax compliance across services.

10. Monetization Strategies

There are several ways to make money from your app.

Common Revenue Models:

Commission Per Transaction: Standard in ride-sharing and food delivery.

Subscription Plans: For users or service providers.

Ads: In-app promotions and sponsored listings.

Surge Pricing: Dynamic pricing based on demand.

Premium Features: Offer enhanced services at a cost.
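The commission and surge pricing models above can be combined into one fare calculation. The sketch below is a minimal illustration under stated assumptions: the surge multiplier is simply the ratio of open requests to available providers, clamped between 1.0 and a hypothetical cap of 2.0, and the 20% commission rate is a placeholder, not an industry standard. The names `surge_multiplier`, `compute_fare`, and `FareBreakdown` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FareBreakdown:
    total: float                 # amount charged to the customer
    platform_commission: float   # platform's share of the fare
    provider_payout: float       # amount passed on to the driver or service provider


def surge_multiplier(open_requests: int, available_providers: int,
                     max_multiplier: float = 2.0) -> float:
    """Scale price with demand/supply, never below 1.0 and never above the (hypothetical) cap."""
    if available_providers <= 0:
        return max_multiplier  # no supply at all: apply the maximum surge
    ratio = open_requests / available_providers
    return round(min(max(1.0, ratio), max_multiplier), 2)


def compute_fare(base_fare: float, multiplier: float,
                 commission_rate: float = 0.20) -> FareBreakdown:
    """Apply the surge multiplier, then split the total between platform and provider."""
    total = round(base_fare * multiplier, 2)
    commission = round(total * commission_rate, 2)
    return FareBreakdown(total, commission, round(total - commission, 2))


fare = compute_fare(10.0, surge_multiplier(open_requests=30, available_providers=20))
print(fare)  # FareBreakdown(total=15.0, platform_commission=3.0, provider_payout=12.0)
```

Keeping the multiplier and the commission split in separate functions lets you change the pricing rule without touching how payouts are calculated.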

11. Challenges and How to Overcome Them

A. Managing Multiple Services

Solution: Use microservices architecture to manage each feature/module independently.

B. Balancing Supply and Demand

Solution: Use AI-based demand forecasting on historical order data to anticipate peaks, and onboard providers in advance.
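As a rough illustration of demand prediction, the sketch below swaps in simple exponential smoothing, a classical forecasting technique rather than a full AI model, to estimate next-period orders from historical counts and convert that into a provider headcount. The smoothing factor `alpha`, the `orders_per_provider` capacity figure, and the sample history are assumptions you would tune on your own data; the function names are hypothetical.

```python
import math


def exponential_smoothing_forecast(history: list[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing: each observation pulls the level toward itself by alpha."""
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the interval (0, 1]")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level  # the smoothed level is the forecast for the next period


def providers_needed(forecast_orders: float, orders_per_provider: float) -> int:
    """Round up so the forecast demand is fully covered."""
    return math.ceil(forecast_orders / orders_per_provider)


hourly_orders = [100, 120, 110]  # hypothetical order counts for the same hour on past days
forecast = exponential_smoothing_forecast(hourly_orders, alpha=0.5)
print(forecast, providers_needed(forecast, orders_per_provider=4))  # 110.0 28
```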

C. User Retention

Solution: Gamify the app with loyalty points, badges, and regular updates.

D. Operational Costs

Solution: Optimize cloud resources, automate processes, and start with limited geography.

12. Scaling the App

Once you establish your base, consider expansion.

Tips:

Add New Services: Include healthcare, legal help, or finance.

Geographical Expansion: Move into new cities or countries.

Language Support: Add multi-lingual capabilities.

API Integrations: Partner with external platforms for payment, maps, or logistics.

13. Cost of Developing a Multi-Service App Like Gojek

Costs can vary based on complexity, features, region, and team size.

Estimated Breakdown:

MVP Development: $20,000 – $40,000

Full-Feature App: $50,000 – $150,000+

Monthly Maintenance: $2,000 – $10,000

Marketing Budget: $5,000 – $50,000 (initial phase)

Hiring an experienced team or opting for a white-label solution can help manage costs and time.
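To see how the estimated ranges above add up, the sketch below totals a first-year budget for the MVP route, assuming twelve months of maintenance and a single initial marketing push. It only recombines the figures quoted in this section; actual costs depend on your scope, region, and team.

```python
# Figures from the estimated breakdown above, in USD as (low, high)
MVP_DEVELOPMENT = (20_000, 40_000)
MONTHLY_MAINTENANCE = (2_000, 10_000)
INITIAL_MARKETING = (5_000, 50_000)


def first_year_budget(months: int = 12) -> tuple[int, int]:
    """Assumes the MVP route, `months` of maintenance, and one initial marketing push."""
    low = MVP_DEVELOPMENT[0] + months * MONTHLY_MAINTENANCE[0] + INITIAL_MARKETING[0]
    high = MVP_DEVELOPMENT[1] + months * MONTHLY_MAINTENANCE[1] + INITIAL_MARKETING[1]
    return low, high


print(first_year_budget())  # (49000, 210000)
```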

Conclusion

Building a multi-service app like Gojek is an ambitious but achievable project. With the right strategy, a well-defined feature set, and an expert development team, you can tap into the ever-growing on-demand economy. Begin by understanding your users, develop a scalable platform, market effectively, and continuously improve based on feedback. The super app revolution is just beginning—get ready to be a part of it.

Frequently Asked Questions (FAQs)

1. How long does it take to develop a Gojek-like app?

Depending on complexity and team size, it typically takes 4 to 8 months to build a fully functional version of a multi-service app.

2. Can I start with only a few services and expand later?

Absolutely. It's recommended to begin with 2–3 core services, test the market, and expand based on user demand and operational capability.

3. Is it better to build from scratch or use a white-label solution?

If you want custom features and long-term scalability, building from scratch is ideal. White-label solutions are faster and more affordable for quicker market entry.

4. How do I onboard service providers to my platform?

Create a simple registration process, offer initial incentives, and run targeted local campaigns to onboard and retain quality service providers.

5. What is the best monetization model for a super app?

The most successful models include commission-based earnings, subscription plans, and in-app advertising, depending on your services and user base.



Geolocation Pricing | Geolocation Database Providers | DB-IP

DB-IP provides a comprehensive way to put geolocation pricing strategies into practice. By supplying accurate geolocation data, DB-IP lets businesses tailor their pricing models to local market conditions and geography. With this data, prices can be adjusted to compete with local competitors and to fit regional demographics, which improves overall marketing effectiveness. Backed by DB-IP's extensive database, companies can target their customers more precisely and optimize their pricing strategies, leading to more accurate and profitable pricing decisions.
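A minimal sketch of geolocation-based pricing, assuming you have already resolved the visitor's IP address to an ISO country code (for example, through a geolocation database such as DB-IP; the lookup itself is not shown). The per-country multipliers in `REGION_MULTIPLIERS` and the function name `localized_price` are hypothetical, and unknown countries fall back to the base price.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Mapping, Optional

# Hypothetical per-country multipliers; real values would come from your own market research.
REGION_MULTIPLIERS: Mapping[str, Decimal] = {
    "US": Decimal("1.00"),
    "DE": Decimal("0.95"),
    "IN": Decimal("0.40"),
}


def localized_price(base_price: Decimal, country_code: Optional[str],
                    multipliers: Mapping[str, Decimal] = REGION_MULTIPLIERS) -> Decimal:
    """Adjust a base price by the visitor's country; unknown or missing codes keep the base price."""
    multiplier = multipliers.get((country_code or "").upper(), Decimal("1.00"))
    return (base_price * multiplier).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# The country code would come from an IP geolocation lookup (not shown here).
print(localized_price(Decimal("50.00"), "in"))   # 20.00
print(localized_price(Decimal("19.99"), None))   # 19.99
```

`Decimal` is used instead of `float` so that currency amounts round predictably to the cent.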



Tech Stack You Need for Building an On-Demand Food Delivery App

I remember the first time I considered launching a food delivery app—it felt exciting and overwhelming at the same time. I had this vision of a sleek, user-friendly platform that could bring local restaurant food straight to customers' doors, but I wasn’t sure where to begin. The first big question that hit me? What technology stack do I need to build a reliable, scalable food delivery app solution?

If you’re a restaurant owner, small business operator, or part of an enterprise considering the same path, this guide is for you. Let me break it down and share what I’ve learned about choosing the tech stack for on-demand food delivery app development.

Why the Right Tech Stack Matters

Before we get into specifics, let’s talk about why choosing the right tech stack is so crucial. Think of your app like a restaurant kitchen—you need the right tools and appliances to make sure the operations run smoothly. In the same way, the technology behind your app ensures fast performance, strong security, and a seamless user experience. If you're serious about investing in a robust food delivery application development plan, your tech choices will make or break the project.

1. Frontend Development (User Interface)

This is what your customers actually see and interact with on their screens. A smooth, intuitive interface is key to winning users over.

Languages: HTML5, CSS3, JavaScript

Frameworks: React Native, Flutter (for cross-platform apps); native development uses Swift (for iOS) and Kotlin (for Android)

Personally, I love React Native. It lets you build apps for both iOS and Android using a single codebase, which means faster development and lower costs. For a startup or small business, that’s a win.

2. Backend Development (Server-Side Logic)

This is the engine room of your food delivery app. It handles user authentication, order processing, real-time tracking, and so much more.

Languages and Runtimes: Node.js (JavaScript), Python, Ruby, Java

Frameworks: Express.js, Django, Spring Boot

Databases: MongoDB, PostgreSQL, MySQL

APIs: RESTful APIs, GraphQL for communication between the frontend and backend

If you ask a solid food delivery app development company, they'll likely recommend Node.js: its non-blocking, event-driven I/O handles many concurrent connections efficiently, which makes it a good fit for apps expecting high traffic.

3. Real-Time Features & Geolocation

When I order food, I want to see the delivery route and ETA—that’s made possible through real-time tech and location-based services.

Maps & Geolocation: Google Maps API, Mapbox, HERE

Real-Time Communication: Socket.io, Firebase, Pusher

Real-time tracking is a must in today’s market, and any modern food delivery app development solution must integrate this smoothly.
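To make the ETA idea concrete, here is a minimal sketch that estimates delivery time from straight-line distance using the haversine formula. It is an approximation under stated assumptions: the average courier speed (20 km/h) and the detour factor (1.3), which inflates straight-line distance to approximate road distance, are hypothetical values. A production app would typically request road-network routes and ETAs from a maps provider such as Google Maps or Mapbox instead.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def eta_minutes(courier: tuple[float, float], destination: tuple[float, float],
                avg_speed_kmh: float = 20.0, detour_factor: float = 1.3) -> float:
    """Rough ETA: straight-line distance, inflated by a detour factor, at an assumed average speed."""
    road_km = haversine_km(*courier, *destination) * detour_factor
    return road_km / avg_speed_kmh * 60


# Hypothetical courier and customer positions about 2 km apart
print(round(eta_minutes((-6.2000, 106.8166), (-6.2180, 106.8166)), 1))
```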

4. Cloud & Hosting Platforms

You need a secure and scalable place to host your app and store data. Here’s what I found to work well:

Cloud Providers: AWS, Google Cloud, Microsoft Azure

Storage: Amazon S3, Firebase Storage

CDN: Cloudflare, AWS CloudFront

I personally prefer AWS for its broad range of services and reliability, especially when scaling your app as you grow.

5. Payment Gateways

Getting paid should be easy and secure—for both you and your customers.

Popular Gateways: Stripe, Razorpay, PayPal, Square

Local Payment Options: UPI, Paytm, Google Pay (especially in regions like India)

A versatile food delivery application development plan should include multiple payment options to suit different markets.

6. Push Notifications & Messaging

Engagement is everything. I always appreciate updates on my order or a tempting offer notification from my favorite local café.

Services: Firebase Cloud Messaging (FCM), OneSignal, Twilio

These tools help maintain a strong connection with your users and improve retention.

7. Admin Panel & Dashboard

Behind every smooth app is a powerful admin panel where business owners can manage orders, customers, payments, and analytics.

Frontend Frameworks: Angular, Vue.js

Backend Integration: Node.js or Laravel with MySQL/PostgreSQL

This is one part you definitely want your food delivery app development company to customize according to your specific business operations.

8. Security & Authentication

Trust me—when handling sensitive data like payment info or user addresses, security is non-negotiable.

Authentication: OAuth 2.0, JWT (JSON Web Tokens)

Data Encryption: TLS (the successor to SSL) over HTTPS

Compliance: GDPR, PCI DSS for payment card data

A dependable on-demand food delivery app development process always includes a strong focus on security and privacy from day one.
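To illustrate how JWT-based authentication works, the sketch below signs and verifies an HS256 token using only Python's standard library: the header and claims are base64url-encoded, joined with a dot, and signed with HMAC-SHA256. It is meant for understanding only; in production you would normally rely on a maintained library such as PyJWT. The function names and the shared secret are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    """Base64url without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _json_segment(obj: dict) -> str:
    return _b64url_encode(json.dumps(obj, separators=(",", ":")).encode("utf-8"))


def sign_jwt(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature, signed with HMAC-SHA256 (HS256)."""
    header_segment = _json_segment({"alg": "HS256", "typ": "JWT"})
    signing_input = f"{header_segment}.{_json_segment(claims)}"
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def verify_jwt(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Check structure, algorithm, signature, and expiry; return the claims if all pass."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never let the token pick its own algorithm
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")  # constant-time comparison avoids timing leaks
    claims = json.loads(_b64url_decode(payload_b64))
    if "exp" in claims and (time.time() if now is None else now) >= claims["exp"]:
        raise ValueError("token expired")
    return claims


token = sign_jwt({"sub": "user-42", "exp": int(time.time()) + 3600}, secret=b"change-me")
print(verify_jwt(token, secret=b"change-me")["sub"])  # user-42
```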

Final Thoughts

Choosing the right tech stack isn’t just a technical decision—it’s a business one. Whether you’re building your app in-house or partnering with a trusted food delivery app development company, knowing the components involved helps you make smarter choices and ask the right questions.

When I look back at my own journey in food delivery app solution planning, the clarity came once I understood the tools behind the scenes. Now, as the industry continues to grow, investing in the right technology gives your business the best chance to stand out.

So if you’re serious about launching a top-tier app that delivers both food and fantastic user experience, your tech stack is where it all begins. And hey, if you need help, companies like Delivery Bee are doing some really exciting things in this space. I’d definitely recommend exploring their food delivery app development solutions.


Why E-commerce Businesses Need an Address Verification API in 2025

In the ever-evolving world of e-commerce, precision and speed are key to ensuring customer satisfaction and operational efficiency. As we step into 2025, the stakes are higher than ever. With growing consumer expectations, intensified competition, and increased instances of fraud, e-commerce businesses must equip themselves with advanced tools to stay ahead. One such essential tool is the Address Verification API.

The Critical Role of Address Verification in E-commerce

Address verification is the process of validating the accuracy and deliverability of a customer's shipping address. For e-commerce companies, this step is not just administrative—it’s mission-critical. An incorrect address can result in failed deliveries, increased shipping costs, frustrated customers, and a tarnished brand reputation.

What is an Address Verification API?

An Address Verification API (Application Programming Interface) integrates with an e-commerce platform to automatically check and standardize address data during checkout or in the backend. These APIs use real-time data from postal services and geolocation tools to ensure the address exists and is correctly formatted for delivery.
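The "standardize" step can be sketched locally. The example below normalizes a US street line using a small subset of USPS street-suffix abbreviations (STREET to ST, AVENUE to AVE, and so on) and checks ZIP code format. It is a simplified illustration under stated assumptions: it only abbreviates the final word, and it cannot confirm that an address exists or is deliverable, which is what a real verification API checks against postal data. The function names are hypothetical.

```python
import re

# A small subset of USPS street-suffix abbreviations (USPS Publication 28 has the full list).
SUFFIX_ABBREVIATIONS = {
    "STREET": "ST",
    "AVENUE": "AVE",
    "ROAD": "RD",
    "BOULEVARD": "BLVD",
    "DRIVE": "DR",
    "LANE": "LN",
}


def standardize_street(line: str) -> str:
    """Uppercase, strip periods and commas, collapse spaces, and abbreviate a trailing suffix."""
    words = re.sub(r"[.,]", " ", line).upper().split()
    if words and words[-1] in SUFFIX_ABBREVIATIONS:
        words[-1] = SUFFIX_ABBREVIATIONS[words[-1]]
    return " ".join(words)


def is_valid_us_zip(zip_code: str) -> bool:
    """Format check only: five digits, optionally a hyphen and four more digits (ZIP+4)."""
    return re.fullmatch(r"\d{5}(-\d{4})?", zip_code.strip()) is not None


print(standardize_street("123 Main Street."), is_valid_us_zip("94105-1234"))  # 123 MAIN ST True
```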

Why It Matters More in 2025

1. Increased Consumer Expectations

Customers now expect fast, error-free deliveries. A single mistake in address entry can lead to significant delays, which in turn can damage customer trust.

2. Growth of Cross-border E-commerce

International shipping introduces complexity due to varying address formats and languages. Address Verification APIs help standardize global addresses to ensure successful deliveries.

3. Rising Costs of Failed Deliveries

According to industry reports, the cost of failed deliveries exceeds $5 billion annually. In 2025, reducing these unnecessary expenses is crucial to maintain profitability.

4. Fraud Prevention

Address verification APIs can help flag potentially fraudulent orders by cross-referencing addresses with official postal databases, for example catching addresses that do not exist, which reduces chargebacks and fake orders.

Benefits of Address Verification API for E-commerce

1. Enhanced Customer Experience

By catching errors at the point of entry, these APIs eliminate the frustration of missed or delayed deliveries.

2. Operational Efficiency

Accurate addresses reduce the burden on customer service teams and cut down on reshipping and return processing.

3. Cost Savings

Avoiding failed deliveries, reshipments, and customer complaints leads to significant savings over time.

4. Better Analytics and Insights

Clean, verified address data improves customer segmentation and targeting for marketing campaigns.

5. Scalability

As your business grows, a robust API ensures that increasing order volumes don’t translate to increasing delivery errors.

Popular Integrations and Features

Address Validation API for Shopify

WooCommerce Address Autocomplete API

Magento Address Validation Tool

Real-Time USPS Address Verification

Global Address Verification for International E-commerce

How to Implement an Address Verification API

Choose the Right Provider: Evaluate based on coverage, response time, integration ease, and support.

Integrate with Your Platform: Use plugins or custom code for seamless integration.

Test Thoroughly: Validate on different devices and address formats.

Monitor & Optimize: Track delivery success rates and optimize rules based on analytics.
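For the monitoring step, a simple starting point is to compare delivery success rates between orders whose addresses were verified and those that were not. The sketch below assumes hypothetical order records with `address_verified` and `delivered` fields; adapt the field names to your own order data.

```python
from collections import defaultdict


def success_rate_by(orders: list[dict], key: str) -> dict:
    """Share of orders marked as delivered, grouped by the value of `key`."""
    totals: dict = defaultdict(int)
    delivered: dict = defaultdict(int)
    for order in orders:
        group = order[key]
        totals[group] += 1
        if order["delivered"]:
            delivered[group] += 1
    return {group: delivered[group] / totals[group] for group in totals}


# Hypothetical order log: was the address verified at checkout, and did delivery succeed?
orders = [
    {"address_verified": True, "delivered": True},
    {"address_verified": True, "delivered": True},
    {"address_verified": True, "delivered": True},
    {"address_verified": True, "delivered": False},
    {"address_verified": False, "delivered": True},
    {"address_verified": False, "delivered": False},
]
print(success_rate_by(orders, "address_verified"))  # {True: 0.75, False: 0.5}
```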

Conclusion

In 2025, an Address Verification API is no longer optional for e-commerce success—it’s essential. From preventing fraud and reducing costs to enhancing customer satisfaction, this API offers measurable benefits. Businesses that prioritize clean, accurate data will stand out in the crowded online marketplace.


Top 5 Alternative Data Career Paths and How to Learn Them

The world of data is no longer confined to neat rows and columns in traditional databases. We're living in an era where insights are being unearthed from unconventional, often real-time, sources – everything from satellite imagery tracking retail traffic to social media sentiment predicting stock movements. This is the realm of alternative data, and it's rapidly creating some of the most exciting and in-demand career paths in the data landscape.

Alternative data refers to non-traditional information sources that provide unique, often forward-looking, perspectives that conventional financial reports, market research, or internal operational data simply cannot. Think of it as peering through a new lens to understand market dynamics, consumer behavior, or global trends with unprecedented clarity.

Why is Alternative Data So Critical Now?

Real-time Insights: Track trends as they happen, not just after quarterly reports or surveys.

Predictive Power: Uncover leading indicators that can forecast market shifts, consumer preferences, or supply chain disruptions.

Competitive Edge: Gain unique perspectives that your competitors might miss, leading to smarter strategic decisions.

Deeper Context: Analyze factors previously invisible, from manufacturing output detected by sensors to customer foot traffic derived from geolocation data.

This rich, often unstructured, data demands specialized skills and a keen understanding of its nuances. If you're looking to carve out a niche in the dynamic world of data, here are five compelling alternative data career paths and how you can equip yourself for them.

1. Alternative Data Scientist / Quant Researcher

This is often the dream role for data enthusiasts, sitting at the cutting edge of identifying, acquiring, cleaning, and analyzing alternative datasets to generate actionable insights, particularly prevalent in finance (for investment strategies) or detailed market intelligence.

What they do: They actively explore new, unconventional data sources, rigorously validate their reliability and predictive power, develop sophisticated statistical models and machine learning algorithms (especially for unstructured data like text or images) to extract hidden signals, and present their compelling findings to stakeholders. In quantitative finance, this involves building systematic trading strategies based on these unique data signals.

Why it's growing: The competitive advantage gleaned from unique insights derived from alternative data is immense, particularly in high-stakes sectors like finance where even marginal improvements in prediction can yield substantial returns.

Key Skills:

Strong Statistical & Econometric Modeling: Expertise in time series analysis, causality inference, regression, hypothesis testing, and advanced statistical methods.

Machine Learning: Profound understanding and application of supervised, unsupervised, and deep learning techniques, especially for handling unstructured data (e.g., Natural Language Processing for text, Computer Vision for images).

Programming Prowess: Master Python (with libraries like Pandas, NumPy, Scikit-learn, PyTorch/TensorFlow) and potentially R.

Data Engineering Fundamentals: A solid grasp of data pipelines, ETL (Extract, Transform, Load) processes, and managing large, often messy, datasets.

Domain Knowledge: Critical for contextualizing and interpreting the data, understanding potential biases, and identifying genuinely valuable signals (e.g., financial markets, retail operations, logistics).

Critical Thinking & Creativity: The ability to spot unconventional data opportunities and formulate innovative hypotheses.

How to Learn:

Online Specializations: Look for courses on "Alternative Data for Investing," "Quantitative Finance with Python," or advanced Machine Learning/NLP. Platforms like Coursera, edX, and DataCamp offer relevant programs, often from top universities or financial institutions.

Hands-on Projects: Work with publicly available alternative datasets (e.g., from Kaggle, free satellite imagery from agencies like NASA, or open-source web-scraped data) to build and validate predictive models.

Academic Immersion: Follow leading research papers and attend relevant conferences in quantitative finance and data science.

Networking: Connect actively with professionals in quantitative finance or specialized data science roles that focus on alternative data.

2. Alternative Data Engineer

While the Alternative Data Scientist unearths the insights, the Alternative Data Engineer is the architect and builder of the robust infrastructure essential for managing these unique and often challenging datasets.

What they do: They meticulously design and implement scalable data pipelines to ingest both streaming and batch alternative data, orchestrate complex data cleaning and transformation processes at scale, manage cloud infrastructure, and ensure high data quality, accessibility, and reliability for analysts and scientists.

Why it's growing: Alternative data is inherently diverse, high-volume, and often unstructured or semi-structured. Without specialized engineering expertise and infrastructure, its potential value remains locked away.

Key Skills:

Cloud Platform Expertise: Deep knowledge of major cloud providers like AWS, Azure, or GCP, specifically for scalable data storage (e.g., S3, ADLS, GCS), compute (e.g., EC2, Azure VMs, GCE), and modern data warehousing (e.g., Snowflake, BigQuery, Redshift).

Big Data Technologies: Proficiency in distributed processing frameworks like Apache Spark, streaming platforms like Apache Kafka, and data lake solutions.

Programming: Strong skills in Python (for scripting, API integration, and pipeline orchestration), and potentially Java or Scala for large-scale data processing.

Database Management: Experience with both relational (e.g., PostgreSQL, MySQL) and NoSQL databases (e.g., MongoDB, Cassandra) for flexible data storage needs.

ETL Tools & Orchestration: Mastery of tools like dbt, Airflow, Prefect, or Azure Data Factory for building, managing, and monitoring complex data workflows.

API Integration & Web Scraping: Practical experience in fetching data from various web sources, public APIs, and sophisticated web scraping techniques.
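To make the ETL skills above concrete, here is a minimal extract-transform-load sketch using only Python's standard library. It assumes a hypothetical CSV of daily store foot-traffic counts: `extract` parses the CSV, `transform` trims whitespace and drops rows with missing values, and `load` writes the cleaned records into a SQLite table. Real alternative data pipelines would run similar steps at far larger scale with tools like Spark and Airflow.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: daily foot-traffic counts per store, with one missing value.
RAW_CSV = """store_id,date,visits
S1,2025-01-01,120
S1,2025-01-02,
S2,2025-01-01, 95
"""


def extract(raw_text: str) -> list[dict]:
    """Extract: parse CSV text into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim whitespace, drop rows missing a count, convert counts to integers."""
    cleaned = []
    for row in rows:
        visits = row["visits"].strip()
        if not visits:
            continue
        cleaned.append((row["store_id"].strip(), row["date"].strip(), int(visits)))
    return cleaned


def load(records: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write cleaned records into a SQLite table and return how many were inserted."""
    conn.execute("CREATE TABLE IF NOT EXISTS foot_traffic (store_id TEXT, date TEXT, visits INTEGER)")
    conn.executemany("INSERT INTO foot_traffic VALUES (?, ?, ?)", records)
    conn.commit()
    return len(records)


conn = sqlite3.connect(":memory:")
print(load(transform(extract(RAW_CSV)), conn))  # 2
```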

How to Learn:

Cloud Certifications: Pursue certifications like AWS Certified Data Engineer – Associate (the successor to the retired AWS Certified Data Analytics – Specialty), Google Cloud Professional Data Engineer, or Azure Data Engineer Associate.

Online Courses: Focus on "Big Data Engineering," "Data Pipeline Development," and specific cloud services tailored for data workloads.

Practical Experience: Build ambitious personal projects involving data ingestion from diverse APIs (e.g., social media APIs, financial market APIs), advanced web scraping, and processing with big data frameworks.

Open-Source Engagement: Contribute to or actively engage with open-source projects related to data engineering tools and technologies.

3. Data Product Manager (Alternative Data Focus)

This strategic role acts as the crucial bridge between intricate business challenges, the unique capabilities of alternative data, and the technical execution required to deliver impactful data products.

What they do: They identify market opportunities for new alternative data products or enhancements, define a clear product strategy, gather and prioritize requirements from various stakeholders, manage the end-to-end product roadmap, and collaborate closely with data scientists, data engineers, and sales teams to ensure the successful development, launch, and adoption of innovative data-driven solutions. They understand both the data's raw potential and the specific business problem it is designed to solve.

Why it's growing: As alternative data moves from niche to mainstream, companies desperately need strategists who can translate its complex technical potential into tangible, commercially viable products and actionable business insights.

Key Skills:

Product Management Fundamentals: Strong grasp of agile methodologies, product roadmap planning, user story creation, and sophisticated stakeholder management.

Business Acumen: A deep, nuanced understanding of the specific industry where the alternative data is being applied (e.g., quantitative finance, retail strategy, real estate analytics).

Data Literacy: The ability to understand the technical capabilities, inherent limitations, potential biases, and ethical considerations associated with diverse alternative datasets.

Exceptional Communication: Outstanding skills in articulating product vision, requirements, and value propositions to both highly technical teams and non-technical business leaders.

Market Research: Proficiency in identifying unmet market needs, analyzing competitive landscapes, and defining unique value propositions for data products.

Basic SQL/Data Analysis: Sufficient technical understanding to engage meaningfully with data teams and comprehend data capabilities and constraints.

How to Learn:

Product Management Courses: General PM courses provide an excellent foundation (e.g., from Product School, or online specializations on platforms like Coursera/edX).

Develop Deep Domain Expertise: Immerse yourself in industry news, read analyst reports, attend conferences, and thoroughly understand the core problems of your target industry.

Foundational Data Analytics/Science: Take introductory courses in Python/R, SQL, and data visualization to understand the technical underpinnings.

Networking: Actively engage with existing data product managers and leading alternative data providers.

4. Data Ethicist / AI Policy Analyst (Alternative Data Specialization)

The innovative application of alternative data, particularly when combined with AI, frequently raises significant ethical, privacy, and regulatory concerns. This crucial role ensures that data acquisition and usage are not only compliant but also responsible and fair.

What they do: They meticulously develop and implement robust ethical guidelines for the collection, processing, and use of alternative data. They assess potential biases inherent in alternative datasets and their potential for unfair outcomes, ensure strict compliance with evolving data privacy regulations (like GDPR, CCPA, and similar data protection acts), conduct comprehensive data protection and impact assessments, and advise senior leadership on broader AI policy implications related to data governance.

Why it's growing: With escalating public scrutiny, rapidly evolving global regulations, and high-profile incidents of data misuse, ethical and compliant data practices are no longer merely optional; they are absolutely critical for maintaining an organization's reputation, avoiding severe legal penalties, and fostering public trust.

Key Skills:

Legal & Regulatory Knowledge: A strong understanding of global and regional data privacy laws (e.g., GDPR, CCPA), emerging AI ethics frameworks, and industry-specific regulations that govern data use.

Risk Assessment & Mitigation: Expertise in identifying, analyzing, and developing strategies to mitigate ethical, privacy, and algorithmic bias risks associated with complex data sources.

Critical Thinking & Bias Detection: The ability to critically analyze datasets and algorithmic outcomes for inherent biases, fairness issues, and potential for discriminatory impacts.

Communication & Policy Writing: Exceptional skills in translating complex ethical and legal concepts into clear, actionable policies, guidelines, and advisory reports for diverse audiences.

Stakeholder Engagement: Proficiency in collaborating effectively with legal teams, compliance officers, data scientists, engineers, and business leaders.

Basic Data Literacy: Sufficient understanding of how data is collected, stored, processed, and used by AI systems to engage meaningfully with technical teams.
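One common starting point for bias detection is to compare outcome rates across groups. The sketch below computes the disparate impact ratio (the selection rate of one group divided by that of a reference group) and flags values below 0.8, the "four-fifths" rule of thumb from US employment selection guidelines. The group labels and sample data are hypothetical, and a single ratio is a screening heuristic, not a complete fairness assessment.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict:
    """Fraction of positive outcomes (e.g. approvals) per group."""
    totals: dict = defaultdict(int)
    positives: dict = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes: list[tuple[str, bool]], group: str, reference_group: str) -> float:
    """Selection rate of `group` divided by that of `reference_group`."""
    rates = selection_rates(outcomes)
    return rates[group] / rates[reference_group]


# Hypothetical model decisions: group A approved 8 of 10 times, group B 4 of 10 times.
outcomes = [("A", i < 8) for i in range(10)] + [("B", i < 4) for i in range(10)]
ratio = disparate_impact_ratio(outcomes, group="B", reference_group="A")
print(ratio, "flag for review" if ratio < 0.8 else "within four-fifths threshold")  # 0.5 flag for review
```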

How to Learn:

Specialized Courses & Programs: Look for postgraduate programs or dedicated courses in Data Ethics, AI Governance, Technology Law, or Digital Policy, often offered by law schools, public policy institutes, or specialized AI ethics organizations.

Industry & Academic Research: Stay current by reading reports and white papers from leading organizations (e.g., World Economic Forum), academic research institutions, and major tech companies' internal ethics guidelines.

Legal Background (Optional but Highly Recommended): A formal background in law or public policy can provide a significant advantage.

Engage in Professional Forums: Actively participate in discussions and communities focused on data ethics, AI policy, and responsible AI.

5. Data Journalist / Research Analyst (Alternative Data Focused)

This captivating role harnesses the power of alternative data to uncover compelling narratives, verify claims, and provide unique, data-driven insights for public consumption or critical internal strategic decision-making in sectors like media, consulting, or advocacy.

What they do: They meticulously scour publicly available alternative datasets (e.g., analyzing satellite imagery for environmental impact assessments, tracking social media trends for shifts in public opinion, dissecting open government data for policy analysis, or using web-scraped data for market intelligence). They then expertly clean, analyze, and, most importantly, effectively visualize and communicate their findings through engaging stories, in-depth reports, and interactive dashboards.

Why it's growing: The ability to tell powerful, evidence-based stories from unconventional data sources is invaluable for modern journalism, influential think tanks, specialized consulting firms, and even for robust internal corporate communications.

Key Skills:

Data Cleaning & Wrangling: Expertise in preparing messy, real-world data for analysis, typically using tools like Python (with Pandas), R (with Tidyverse), or advanced Excel functions.

Data Visualization: Proficiency with powerful visualization tools such as Tableau Public, Datawrapper, Flourish, or programming libraries like Matplotlib, Seaborn, and Plotly for creating clear, impactful, and engaging visual narratives.

Storytelling & Communication: Exceptional ability to translate complex data insights into clear, concise, and compelling narratives that resonate with both expert and general audiences.

Research & Investigative Skills: A deep sense of curiosity, persistence in finding and validating diverse data sources, and the analytical acumen to uncover hidden patterns and connections.

Domain Knowledge: A strong understanding of the subject matter being investigated (e.g., politics, environmental science, consumer trends, public health).

Basic Statistics: Sufficient statistical knowledge to understand trends, interpret correlations, and draw sound, defensible conclusions from data.

How to Learn:

Data Journalism Programs: Some universities offer specialized master's or certificate programs in data journalism.

Online Courses: Focus on courses in data visualization, storytelling with data, and introductory data analysis on platforms like Coursera, Udemy, or specific tool tutorials.

Practical Experience: Actively engage with open data portals (e.g., data.gov, WHO, World Bank), and practice analyzing, visualizing, and writing about these datasets.

Build a Portfolio: Create a strong portfolio of compelling data stories and visualizations based on alternative data projects, demonstrating your ability to communicate insights effectively.

The landscape of data is evolving at an unprecedented pace, and alternative data is at the heart of this transformation. These career paths offer incredibly exciting opportunities for those willing to learn the specialized skills required to navigate and extract profound value from this rich, unconventional frontier. Whether your passion lies in deep technical analysis, strategic product development, ethical governance, or impactful storytelling, alternative data provides a fertile ground for a rewarding and future-proof career.


Could Palantir-Type Surveillance Help Turn Much of the World Into a Digital Prison?

COGwriter

There are concerns that the Trump Administration’s data merging plans will eliminate privacy and cause other problems:

‘Destroy the Ring’: Trump’s Palantir deal alarms Hill Republicans

Republican privacy advocates in Congress are criticizing the Trump administration’s work with the tech giant Palantir to analyze what could become a massive pool of government data on Americans.

President Donald Trump signed an order in March that directed federal agencies to remove “unnecessary barriers” to data consolidation. Even before that, as The New York Times reported last week, Palantir had expanded the reach of its artificial intelligence product within the US government — potentially building an interagency database that would merge huge sets of government information on Americans, from medical to financial.

Palantir provides tech to companies and governments that helps them act on the information they collect — a service gaining traction as large language models make it easier to analyze data at scale. …

“It’s dangerous,” Rep. Warren Davidson, R-Ohio, told Semafor. “When you start combining all those data points on an individual into one database, it really essentially creates a digital ID. And it’s a power that history says will eventually be abused.”

“I hope to turn it off, fundamentally,” Davidson added. He compared a Palantir-facilitated merged database to the dangerously powerful ring from The Lord of the Rings: “The only good thing to do with One Ring to Rule Them All is to destroy the Ring.”

Resistance like Davidson’s is striking because few congressional Republicans have publicly challenged the president since his second term began.
https://www.semafor.com/article/06/05/2025/destroy-the-ring-trumps-palantir-deal-alarms-hill-republicans

Big Tech in the USA already has massive databases, and if the US government taps into them, this will encourage other governments around the world to do the same type of thing.

Could Palantir help turn the USA into a type of digital prison?


The Rutherford Institute suggests that it could:

Palantir-Powered Surveillance Is Turning America Into a Digital Prison

“We are fast approaching the stage of the ultimate inversion: the stage where the government is free to do anything it pleases, while the citizens may act only by permission.” — Ayn Rand

Call it what it is: a panopticon presidency.

President Trump’s plan to fuse government power with private surveillance tech to build a centralized, national citizen database is the final step in transforming America from a constitutional republic into a digital dictatorship armed with algorithms and powered by unaccountable, all-seeing artificial intelligence.

This isn’t about national security. It’s about control.

According to news reports, the Trump administration is quietly collaborating with Palantir Technologies—the data-mining behemoth co-founded by billionaire Peter Thiel—to construct a centralized, government-wide surveillance system that would consolidate biometric, behavioral, and geolocation data into a single, weaponized database of Americans’ private information.

This isn’t about protecting freedom. It’s about rendering freedom obsolete.

What we’re witnessing is the transformation of America into a digital prison—one where the inmates are told we’re free while every move, every word, every thought is monitored, recorded, and used to assign a “threat score” that determines our place in the new hierarchy of obedience.

This puts us one more step down the road to China’s dystopian system of social credit scores and Big Brother surveillance.

The tools enabling this all-seeing surveillance regime are not new, but under Trump’s direction, they are being fused together in unprecedented ways—with Palantir at the center of this digital dragnet.

Palantir, long criticized for its role in powering ICE (Immigration and Customs Enforcement) raids and predictive policing, is now poised to become the brain of Trump’s surveillance regime.

Under the guise of “data integration” and “public safety,” this public-private partnership would deploy AI-enhanced systems to comb through everything from facial recognition feeds and license plate readers to social media posts and cellphone metadata—cross-referencing it all to assess a person’s risk to the state. …

Building on this foundation of historical abuse, the government has evolved its tactics, replacing human informants with algorithms and wiretaps with metadata, ushering in an age where pre-crime prediction is treated as prosecution.

In the age of AI, your digital footprint is enough to convict you—not in a court of law, but in the court of preemptive suspicion.

Every smartphone ping, GPS coordinate, facial scan, online purchase, and social media like becomes part of your “digital exhaust”—a breadcrumb trail of metadata that the government now uses to build behavioral profiles. The FBI calls it “open-source intelligence.” But make no mistake: this is dragnet surveillance, and it is fundamentally unconstitutional. 06/03/25 https://www.rutherford.org/publications_resources/john_whiteheads_commentary/trumps_palantir_powered_surveillance_is_turning_america_into_a_digital_prison

Yes, this sounds like the USA is getting closer to the UK, which is also looking at “pre-crime” and similar ideas in a way reminiscent of the Tom Cruise movie Minority Report (see UK working with Artificial Intelligence to identify people before they commit crimes–idea reminiscient of ‘Minority Report’).

Despite some claiming that the USA is the land of the free and home of the brave, like some other nations, it is the land of the surveilled and the home of the highly regulated.

Prior to Donald Trump’s re-election, we put out the following video on our Bible News Prophecy YouTube channel:


666, the Censorship Industrial Complex, and AI

We are seeing more and more censorship, particularly on the internet. Matt Taibbi has termed the coordination of this by governments, academia, Big Tech, and “fact checkers” the ‘Censorship Industrial Complex.’ Is more censorship prophesied to happen? What did the prophet Amos write in the 5th and 8th chapters of his book? What about writings from the Apostle Peter? According to federal judges, is the USA government coercing censorship in violation of the First Amendment to its Constitution? What about the Digital Services Act from the European Union? Is artificial intelligence (AI) being used? What about for the video game ‘Call of Duty’? Has Google made changes that are against religious speech? Have YouTube, Facebook, Twitter (X), and others in Big Tech been censoring factually accurate information? Is the US government using AI to monitor social media for emotions in order to suppress information? What is mal-information? Is a famine of the word of God prophesied to come? Will the 666 Beast and the Antichrist use computers and AI for totalitarian censorship and control?

Here is a link to our video: 666, the Censorship Industrial Complex, and AI.

We are getting closer to the day when the totalitarian Beast of Revelation will be able to use technologies unheard of when God inspired Amos and John to write their warnings, like computers and AI, to assist his 666 reign and to enforce the coming ‘famine of the word’ of God.

Snares are being laid. The Trump Administration is assisting in that.

Notice item 10 of my list of 25 items to prophetically watch in 2025:

10. Knowledge Increasing

Notice something from ancient times:

6 And the LORD said, “Indeed the people are one and they all have one language, and this is what they begin to do; now nothing that they propose to do will be withheld from them. 7 Come, let Us go down and there confuse their language, that they may not understand one another’s speech.”

8 So the LORD scattered them abroad from there over the face of all the earth, and they ceased building the city. 9 Therefore its name is called Babel, because there the Lord confused the language of all the earth; and from there the Lord scattered them abroad over the face of all the earth. (Genesis 11:6-9)

While many languages came out of this, notice another prophecy for the end times:

4 “But you, Daniel, shut up the words, and seal the book until the time of the end; many shall run to and fro, and knowledge shall increase.” (Daniel 12:4)

We are seeing massive developments in health, robotics, artificial intelligence, and various sciences.

The arrival and use of “artificial intelligence,” automobiles, jet planes, computers, cellular telephones, and the internet certainly align with that prophecy.

Computers have also made it easier to communicate, even among people of different languages. And while that can be a good thing … they also have the ability to increase surveillance.

The Bible shows more restrictions are coming.

In my book, Unintended Consequences and Donald Trump’s Presidency: Is Donald Trump Fulfilling Biblical, Islamic, Greco-Roman Catholic, Buddhist, and other America-Related Prophecies?, I had the following warnings:

Donald Trump has been in favor of many aspects of government surveillance and loss of personal privacy rights. (p. 54)

Donald Trump is Apocalyptic … He has made statements suggesting a loss of privacy and favoring unlimited government control. This type of thing will be exploited by 666 of Revelation 13:16-18. (pp. 86,91)

Deceit and surveillance are prophesied to increase.

Notice what will be coming to at least Europe:

14 And he deceives those who dwell on the earth by those signs which he was granted to do in the sight of the beast, telling those who dwell on the earth to make an image to the beast who was wounded by the sword and lived. 15 He was granted power to give breath to the image of the beast, that the image of the beast should both speak and cause as many as would not worship the image of the beast to be killed. 16 He causes all, both small and great, rich and poor, free and slave, to receive a mark on their right hand or on their foreheads, 17 and that no one may buy or sell except one who has the mark or the name of the beast, or the number of his name.

18 Here is wisdom. Let him who has understanding calculate the number of the beast, for it is the number of a man: His number is 666. (Revelation 13:14-18)

The above will help make much of the world a “digital prison.”

Let me add that Europe has already taken steps to push Big Tech to remove speech it does not want to allow–and it is also increasing its ability to surveil.

Some of what the Trump Administration is doing will likely be used by European leaders to justify the beastly surveillance and control the Bible prophesies will occur.

Do not place your confidence in any governments in this world, but in Jesus and the coming Kingdom of God.

Related Items:

Preparing for the ‘Short Work’ and The Famine of the Word What is the ‘short work’ of Romans 9:28? Who is preparing for it? Will Philadelphian Christians instruct many in the end times? Here is a link to a related video sermon titled: The Short Work. Here is a link to another: Preparing to Instruct Many.

25 items to prophetically watch in 2025 Much is happening. Dr. Thiel points to 25 items to watch (cf. Mark 13:37) in this article. Here is a link to a related sermon video: 25 Items to Watch in 2025.

When Will the Great Tribulation Begin? 2025, 2026, or 2027? Can the Great Tribulation begin today? What happens before the Great Tribulation in the “beginning of sorrows”? What happens in the Great Tribulation and the Day of the Lord? Is this the time of the Gentiles? When is the earliest that the Great Tribulation can begin? What is the Day of the Lord? Who are the 144,000? A short video is available titled: Great Tribulation Trends 2025.

Lost Tribes and Prophecies: What will happen to Australia, the British Isles, Canada, Europe, New Zealand and the United States of America? Where did those people come from? Can you totally rely on DNA? What about other peoples? Do you really know what will happen to Europe and the English-speaking peoples? What about Africa, Asia, South America, and the Islands? This free online book provides scriptural, scientific, historical references, and commentary to address those matters. Here are links to related sermons: Lost tribes, the Bible, and DNA; Lost tribes, prophecies, and identifications; 11 Tribes, 144,000, and Multitudes; Israel, Jeremiah, Tea Tephi, and British Royalty; Gentile European Beast; Royal Succession, Samaria, and Prophecies; Asia, Islands, Latin America, Africa, and Armageddon; When Will the End of the Age Come?; Rise of the Prophesied King of the North; Christian Persecution from the Beast; WWIII and the Coming New World Order; and Woes, WWIV, and the Good News of the Kingdom of God.

Donald Trump in Prophecy Prophecy, Donald Trump? Are there prophecies that Donald Trump may fulfill? Are there any prophecies that he has already helped fulfill? Is a Donald Trump presidency proving to be apocalyptic? Three related videos are available: Donald: ‘Trump of God’ or Apocalyptic? and Donald Trump’s Prophetic Presidency and Donald Trump and Unintended Consequences.

Unintended Consequences and Donald Trump’s Presidency: Is Donald Trump Fulfilling Biblical, Islamic, Greco-Roman Catholic, Buddhist, and other America-Related Prophecies? Is Donald Trump going to save the USA or are there going to be many disastrous unintended consequences of his statements and policies? What will happen? The link takes you to a book available at Amazon.com.

Unintended Consequences and Donald Trump’s Presidency: Is Donald Trump Fulfilling Biblical, Islamic, Greco-Roman Catholic, Buddhist, and other America-Related Prophecies? A Kindle edition is available for only US$3.99, and you do not need an actual Kindle device to read it. Why? Amazon will allow you to download it to almost any device: please click HERE to download one of Amazon’s free reader apps. After you get your free Kindle reader, go to Unintended Consequences and Donald Trump’s Presidency: Is Donald Trump Fulfilling Biblical, Islamic, Greco-Roman Catholic, Buddhist, and other America-Related Prophecies?



Atlas Nulled Script 2.14