#Kubernetes monitoring

Explore tagged Tumblr posts

Text

Centralized Logging and Monitoring for Kubernetes: Streamline Your Cluster's Performance

When it comes to Kubernetes, logging and monitoring are crucial for ensuring the smooth operation of your cluster. Without a centralized logging and monitoring solution, you'll be left scrambling to troubleshoot issues and optimize performance. At IAMDevBox.com, we've seen firsthand the benefits of implementing a centralized logging and monitoring strategy. By aggregating logs from across your cluster, you can identify bottlenecks, detect anomalies, and respond quickly to issues. In this article, we'll explore the importance of centralized logging and monitoring for Kubernetes, and provide tips on how to get started. Read more: Centralized Logging and Monitoring for Kubernetes: Streamline Your Cluster's Performance

0 notes

Text

Driving Innovation: A Case Study on DevOps Implementation in BFSI Domain

Banking, Financial Services, and Insurance (BFSI), technology plays a pivotal role in driving innovation, efficiency, and customer satisfaction. However, for one BFSI company, the journey toward digital excellence was fraught with challenges in its software development and maintenance processes. With a diverse portfolio of applications and a significant portion outsourced to external vendors, the company grappled with inefficiencies that threatened its operational agility and competitiveness. Identified within this portfolio were 15 core applications deemed critical to the company’s operations, highlighting the urgency for transformative action.

Aspirations for the Future:

Looking ahead, the company envisioned a future state characterized by the establishment of a matured DevSecOps environment. This encompassed several key objectives:

Near-zero Touch Pipeline: Automating product development processes for infrastructure provisioning, application builds, deployments, and configuration changes.

Matured Source-code Management: Implementing robust source-code management processes, complete with review gates, to uphold quality standards.

Defined and Repeatable Release Process: Instituting a standardized release process fortified with quality and security gates to minimize deployment failures and bug leakage.

Modernization: Embracing the latest technological advancements to drive innovation and efficiency.

Common Processes Among Vendors: Establishing standardized processes to enhance understanding and control over the software development lifecycle (SDLC) across different vendors.

Challenges Along the Way:

The path to realizing this vision was beset with challenges, including:

Lack of Source Code Management

Absence of Documentation

Lack of Common Processes

Missing CI/CD and Automated Testing

No Branching and Merging Strategy

Inconsistent Sprint Execution

These challenges collectively hindered the company’s ability to achieve optimal software development, maintenance, and deployment processes. They underscored the critical need for foundational practices such as source code management, documentation, and standardized processes to be addressed comprehensively.

Proposed Solutions:

To overcome these obstacles and pave the way for transformation, the company proposed a phased implementation approach:

Stage 1: Implement Basic DevOps: Commencing with the implementation of fundamental DevOps practices, including source code management and CI/CD processes, for a select group of applications.

Stage 2: Modernization: Progressing towards a more advanced stage involving microservices architecture, test automation, security enhancements, and comprehensive monitoring.

To Expand Your Awareness: https://devopsenabler.com/contact-us

Injecting Security into the SDLC:

Recognizing the paramount importance of security, dedicated measures were introduced to fortify the software development lifecycle. These encompassed:

Security by Design

Secure Coding Practices

Static and Dynamic Application Security Testing (SAST/DAST)

Software Component Analysis

Security Operations

Realizing the Outcomes:

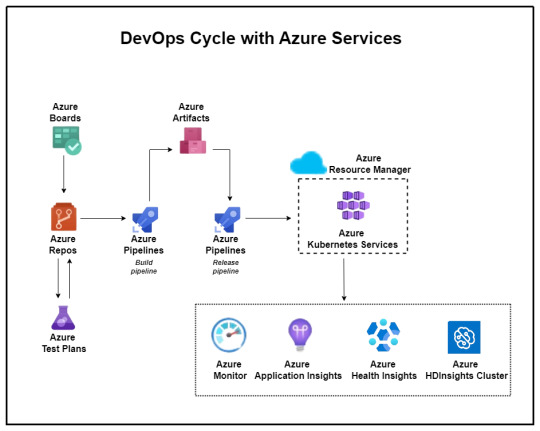

The proposed solution yielded promising outcomes aligned closely with the company’s future aspirations. Leveraging Microsoft Azure’s DevOps capabilities, the company witnessed:

Establishment of common processes and enhanced visibility across different vendors.

Implementation of Azure DevOps for organized version control, sprint planning, and streamlined workflows.

Automation of builds, deployments, and infrastructure provisioning through Azure Pipelines and Automation.

Improved code quality, security, and release management processes.

Transition to microservices architecture and comprehensive monitoring using Azure services.

The BFSI company embarked on a transformative journey towards establishing a matured DevSecOps environment. This journey, marked by challenges and triumphs, underscores the critical importance of innovation and adaptability in today’s rapidly evolving technological landscape. As the company continues to evolve and innovate, the adoption of DevSecOps principles will serve as a cornerstone in driving efficiency, security, and ultimately, the delivery of superior customer experiences in the dynamic realm of BFSI.

Contact Information:

Phone: 080-28473200 / +91 8880 38 18 58

Email: [email protected]

Address: DevOps Enabler & Co, 2nd Floor, F86 Building, ITI Limited, Doorvaninagar, Bangalore 560016.

#BFSI#DevSecOps#software development#maintenance#technology stack#source code management#CI/CD#automated testing#DevOps#microservices#security#Azure DevOps#infrastructure as code#ARM templates#code quality#release management#Kubernetes#testing automation#monitoring#security incident response#project management#agile methodology#software engineering

0 notes

Text

Lens Kubernetes: Simple Cluster Management Dashboard and Monitoring

Lens Kubernetes: Simple Cluster Management Dashboard and Monitoring #homelab #kubernetes #KubernetesManagement #LensKubernetesDesktop #KubernetesClusterManagement #MultiClusterManagement #KubernetesSecurityFeatures #KubernetesUI #kubernetesmonitoring

Kubernetes is a well-known container orchestration platform. It allows admins and organizations to operate their containers and support modern applications in the enterprise. Kubernetes management is not for the “faint of heart.” It requires the right skill set and tools. Lens Kubernetes desktop is an app that enables managing Kubernetes clusters on Windows and Linux devices. Table of…

View On WordPress

#Kubernetes cluster management#Kubernetes collaboration tools#Kubernetes management#Kubernetes performance improvements#Kubernetes real-time monitoring#Kubernetes security features#Kubernetes user interface#Lens Kubernetes 2023.10#Lens Kubernetes Desktop#multi-cluster management

0 notes

Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Python is the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation with tools like Ansible, Terraform, and Boto3.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is an interpreted language, which may not be as fast as compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and OpenSSL, enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark, Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes

·

View notes

Text

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensure data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

2 notes

·

View notes

Text

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is declarative Kubernetes GitOps continuous delivery.

In DevOps, ArgoCD is a Continuous Delivery (CD) technology that has become well-liked for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Declarative and version-controlled application definitions, configurations, and environments are ideal. Automated, auditable, and easily comprehensible application deployment and lifecycle management are essential.

Getting Started

Quick Start

kubectl create namespace argocd kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

For some features, more user-friendly documentation is offered. Refer to the upgrade guide if you want to upgrade your Argo CD. Those interested in creating third-party connectors can access developer-oriented resources.

How it works

Argo CD defines the intended application state by employing Git repositories as the source of truth, in accordance with the GitOps pattern. There are various approaches to specify Kubernetes manifests:

Applications for Customization

Helm charts

JSONNET files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

The deployment of the intended application states in the designated target settings is automated by Argo CD. Deployments of applications can monitor changes to branches, tags, or pinned to a particular manifest version at a Git commit.

Architecture

The implementation of Argo CD is a Kubernetes controller that continually observes active apps and contrasts their present, live state with the target state (as defined in the Git repository). Out Of Sync is the term used to describe a deployed application whose live state differs from the target state. In addition to reporting and visualizing the differences, Argo CD offers the ability to manually or automatically sync the current state back to the intended goal state. The designated target environments can automatically apply and reflect any changes made to the intended target state in the Git repository.

Components

API Server

The Web UI, CLI, and CI/CD systems use the API, which is exposed by the gRPC/REST server. Its duties include the following:

Status reporting and application management

Launching application functions (such as rollback, sync, and user-defined actions)

Cluster credential management and repository (k8s secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

A Kubernetes controller known as the application controller keeps an eye on all active apps and contrasts their actual, live state with the intended target state as defined in the repository. When it identifies an Out Of Sync application state, it may take remedial action. It is in charge of calling any user-specified hooks for lifecycle events (Sync, PostSync, and PreSync).

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Capacity to oversee and implement across several clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/Roll-anywhere to any Git repository-committed application configuration

Analysis of the application resources’ health state

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web user interface that shows program activity in real time

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Tokens of access for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus measurements

To override helm parameters in Git, use parameter overrides.

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

2 notes

·

View notes

Text

Cloud Agnostic: Achieving Flexibility and Independence in Cloud Management

As businesses increasingly migrate to the cloud, they face a critical decision: which cloud provider to choose? While AWS, Microsoft Azure, and Google Cloud offer powerful platforms, the concept of "cloud agnostic" is gaining traction. Cloud agnosticism refers to a strategy where businesses avoid vendor lock-in by designing applications and infrastructure that work across multiple cloud providers. This approach provides flexibility, independence, and resilience, allowing organizations to adapt to changing needs and avoid reliance on a single provider.

What Does It Mean to Be Cloud Agnostic?

Being cloud agnostic means creating and managing systems, applications, and services that can run on any cloud platform. Instead of committing to a single cloud provider, businesses design their architecture to function seamlessly across multiple platforms. This flexibility is achieved by using open standards, containerization technologies like Docker, and orchestration tools such as Kubernetes.

Key features of a cloud agnostic approach include:

Interoperability: Applications must be able to operate across different cloud environments.

Portability: The ability to migrate workloads between different providers without significant reconfiguration.

Standardization: Using common frameworks, APIs, and languages that work universally across platforms.

Benefits of Cloud Agnostic Strategies

Avoiding Vendor Lock-InThe primary benefit of being cloud agnostic is avoiding vendor lock-in. Once a business builds its entire infrastructure around a single cloud provider, it can be challenging to switch or expand to other platforms. This could lead to increased costs and limited innovation. With a cloud agnostic strategy, businesses can choose the best services from multiple providers, optimizing both performance and costs.

Cost OptimizationCloud agnosticism allows companies to choose the most cost-effective solutions across providers. As cloud pricing models are complex and vary by region and usage, a cloud agnostic system enables businesses to leverage competitive pricing and minimize expenses by shifting workloads to different providers when necessary.

Greater Resilience and UptimeBy operating across multiple cloud platforms, organizations reduce the risk of downtime. If one provider experiences an outage, the business can shift workloads to another platform, ensuring continuous service availability. This redundancy builds resilience, ensuring high availability in critical systems.

Flexibility and ScalabilityA cloud agnostic approach gives companies the freedom to adjust resources based on current business needs. This means scaling applications horizontally or vertically across different providers without being restricted by the limits or offerings of a single cloud vendor.

Global ReachDifferent cloud providers have varying levels of presence across geographic regions. With a cloud agnostic approach, businesses can leverage the strengths of various providers in different areas, ensuring better latency, performance, and compliance with local regulations.

Challenges of Cloud Agnosticism

Despite the advantages, adopting a cloud agnostic approach comes with its own set of challenges:

Increased ComplexityManaging and orchestrating services across multiple cloud providers is more complex than relying on a single vendor. Businesses need robust management tools, monitoring systems, and teams with expertise in multiple cloud environments to ensure smooth operations.

Higher Initial CostsThe upfront costs of designing a cloud agnostic architecture can be higher than those of a single-provider system. Developing portable applications and investing in technologies like Kubernetes or Terraform requires significant time and resources.

Limited Use of Provider-Specific ServicesCloud providers often offer unique, advanced services—such as machine learning tools, proprietary databases, and analytics platforms—that may not be easily portable to other clouds. Being cloud agnostic could mean missing out on some of these specialized services, which may limit innovation in certain areas.

Tools and Technologies for Cloud Agnostic Strategies

Several tools and technologies make cloud agnosticism more accessible for businesses:

Containerization: Docker and similar containerization tools allow businesses to encapsulate applications in lightweight, portable containers that run consistently across various environments.

Orchestration: Kubernetes is a leading tool for orchestrating containers across multiple cloud platforms. It ensures scalability, load balancing, and failover capabilities, regardless of the underlying cloud infrastructure.

Infrastructure as Code (IaC): Tools like Terraform and Ansible enable businesses to define cloud infrastructure using code. This makes it easier to manage, replicate, and migrate infrastructure across different providers.

APIs and Abstraction Layers: Using APIs and abstraction layers helps standardize interactions between applications and different cloud platforms, enabling smooth interoperability.

When Should You Consider a Cloud Agnostic Approach?

A cloud agnostic approach is not always necessary for every business. Here are a few scenarios where adopting cloud agnosticism makes sense:

Businesses operating in regulated industries that need to maintain compliance across multiple regions.

Companies require high availability and fault tolerance across different cloud platforms for mission-critical applications.

Organizations with global operations that need to optimize performance and cost across multiple cloud regions.

Businesses aim to avoid long-term vendor lock-in and maintain flexibility for future growth and scaling needs.

Conclusion

Adopting a cloud agnostic strategy offers businesses unparalleled flexibility, independence, and resilience in cloud management. While the approach comes with challenges such as increased complexity and higher upfront costs, the long-term benefits of avoiding vendor lock-in, optimizing costs, and enhancing scalability are significant. By leveraging the right tools and technologies, businesses can achieve a truly cloud-agnostic architecture that supports innovation and growth in a competitive landscape.

Embrace the cloud agnostic approach to future-proof your business operations and stay ahead in the ever-evolving digital world.

2 notes

·

View notes

Text

Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the quick-moving world of technology. This dynamic process, whose name is a combination of "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams, not merely a set of practises. The outcome? greater software delivery speed, dependability, and effectiveness.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker and Kubernetes, the cornerstones of containerization, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, Ruby, and coding skills are invaluable assets for DevOps professionals. They empower the creation of automation scripts and tools, enabling customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies's DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.

11 notes

·

View notes

Text

Journey to Devops

The concept of “DevOps” has been gaining traction in the IT sector for a couple of years. It involves promoting teamwork and interaction, between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to provide software to meet customer needs and maintain an edge, in the industry. In this article we will explore the elements of becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

In order to pursue a career as a DevOps Engineer it is crucial to possess a grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby or PHP is essential. Additionally, having knowledge about operating systems, databases and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system is extensively utilized by DevOps teams, for code repository management. It aids in monitoring code alterations facilitating collaboration, among team members and preserving a record of modifications made to the codebase.

Ansible: Ansible is an open source tool used for managing configurations deploying applications and automating tasks. It simplifies infrastructure management. Saves time when performing tasks.

Docker: Docker, on the other hand is a platform for containerization that allows DevOps engineers to bundle applications and dependencies into containers. This ensures consistency and compatibility across environments from development, to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps us monitor the health and performance of our IT infrastructure. It also helps us to identify and resolve issues in real-time and ensure the high availability and reliability of IT systems as well.

Terraform: Terraform is an infrastructure as code (IAC) tool that helps manage and provision IT infrastructure. It helps us automate the process of provisioning and configuring IT resources and ensures consistency between development and production environments.

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise to various people. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

You can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and continuously learn and improve your skills. With the right skills, experience, and network, you can achieve great success in this field and earn valuable experience.

2 notes

·

View notes

Text

Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS

2 notes

·

View notes

Text

Top 10 DevOps Containers in 2023

Top 10 DevOps Containers in your Stack #homelab #selfhosted #DevOpsContainerTools #JenkinsContinuousIntegration #GitLabCodeRepository #SecureHarborContainerRegistry #HashicorpVaultSecretsManagement #ArgoCD #SonarQubeCodeQuality #Prometheus #nginxproxy

If you want to learn more about DevOps and building an effective DevOps stack, several containerized solutions are commonly found in production DevOps stacks. I have been working on a deployment in my home lab of DevOps containers that allows me to use infrastructure as code for really cool projects. Let’s consider the top 10 DevOps containers that serve as individual container building blocks…

View On WordPress

#ArgoCD Kubernetes deployment#DevOps container tools#GitLab code repository#Grafana data visualization#Hashicorp Vault secrets management#Jenkins for continuous integration#Prometheus container monitoring#Secure Harbor container registry#SonarQube code quality#Traefik load balancing

0 notes

Text

What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate via OAuth2 or JWT

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a Web Development Company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website—you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.

0 notes

Text

CNAPP Explained: The Smartest Way to Secure Cloud-Native Apps with EDSPL

Introduction: The New Era of Cloud-Native Apps

Cloud-native applications are rewriting the rules of how we build, scale, and secure digital products. Designed for agility and rapid innovation, these apps demand security strategies that are just as fast and flexible. That’s where CNAPP—Cloud-Native Application Protection Platform—comes in.

But simply deploying CNAPP isn’t enough.

You need the right strategy, the right partner, and the right security intelligence. That’s where EDSPL shines.

What is CNAPP? (And Why Your Business Needs It)

CNAPP stands for Cloud-Native Application Protection Platform, a unified framework that protects cloud-native apps throughout their lifecycle—from development to production and beyond.

Instead of relying on fragmented tools, CNAPP combines multiple security services into a cohesive solution:

Cloud Security

Vulnerability management

Identity access control

Runtime protection

DevSecOps enablement

In short, it covers the full spectrum—from your code to your container, from your workload to your network security.

Why Traditional Security Isn’t Enough Anymore

The old way of securing applications with perimeter-based tools and manual checks doesn’t work for cloud-native environments. Here’s why:

Infrastructure is dynamic (containers, microservices, serverless)

Deployments are continuous

Apps run across multiple platforms

You need security that is cloud-aware, automated, and context-rich—all things that CNAPP and EDSPL’s services deliver together.

Core Components of CNAPP

Let’s break down the core capabilities of CNAPP and how EDSPL customizes them for your business:

1. Cloud Security Posture Management (CSPM)

Checks your cloud infrastructure for misconfigurations and compliance gaps.

See how EDSPL handles cloud security with automated policy enforcement and real-time visibility.

2. Cloud Workload Protection Platform (CWPP)

Protects virtual machines, containers, and functions from attacks.

This includes deep integration with application security layers to scan, detect, and fix risks before deployment.

3. CIEM: Identity and Access Management

Monitors access rights and roles across multi-cloud environments.

Your network, routing, and storage environments are covered with strict permission models.

4. DevSecOps Integration

CNAPP shifts security left—early into the DevOps cycle. EDSPL’s managed services ensure security tools are embedded directly into your CI/CD pipelines.

5. Kubernetes and Container Security

Containers need runtime defense. Our approach ensures zero-day protection within compute environments and dynamic clusters.

How EDSPL Tailors CNAPP for Real-World Environments

Every organization’s tech stack is unique. That’s why EDSPL never takes a one-size-fits-all approach. We customize CNAPP for your:

Cloud provider setup

Mobility strategy

Data center switching

Backup architecture

Storage preferences

This ensures your entire digital ecosystem is secure, streamlined, and scalable.

Case Study: CNAPP in Action with EDSPL

The Challenge

A fintech company using a hybrid cloud setup faced:

Misconfigured services

Shadow admin accounts

Poor visibility across Kubernetes

EDSPL’s Solution

Integrated CNAPP with CIEM + CSPM

Hardened their routing infrastructure

Applied real-time runtime policies at the node level

✅ The Results

75% drop in vulnerabilities

Improved time to resolution by 4x

Full compliance with ISO, SOC2, and GDPR

Why EDSPL’s CNAPP Stands Out

While most providers stop at integration, EDSPL goes beyond:

🔹 End-to-End Security: From app code to switching hardware, every layer is secured. 🔹 Proactive Threat Detection: Real-time alerts and behavior analytics. 🔹 Customizable Dashboards: Unified views tailored to your team. 🔹 24x7 SOC Support: With expert incident response. 🔹 Future-Proofing: Our background vision keeps you ready for what’s next.

EDSPL’s Broader Capabilities: CNAPP and Beyond

While CNAPP is essential, your digital ecosystem needs full-stack protection. EDSPL offers:

Network security

Application security

Switching and routing solutions

Storage and backup services

Mobility and remote access optimization

Managed and maintenance services for 24x7 support

Whether you’re building apps, protecting data, or scaling globally, we help you do it securely.

Let’s Talk CNAPP

You’ve read the what, why, and how of CNAPP — now it’s time to act.

📩 Reach us for a free CNAPP consultation. 📞 Or get in touch with our cloud security specialists now.

Secure your cloud-native future with EDSPL — because prevention is always smarter than cure.

0 notes

Text

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies – your trusted Red Hat Certified Training Partner. For more details www.hawkstack.com

0 notes

Text

Breaking Barriers With DevOps: A Digital Transformation Journey

In today's rapidly evolving technological landscape, the term "DevOps" has become ingrained. But what does it truly entail, and why is it of paramount importance within the realms of software development and IT operations? In this comprehensive guide, we will embark on a journey to delve deeper into the principles, practices, and substantial advantages that DevOps brings to the table.

Understanding DevOps

DevOps, a fusion of "Development" and "Operations," transcends being a mere collection of practices; it embodies a cultural and collaborative philosophy. At its core, DevOps aims to bridge the historical gap that has separated development and IT operations teams. Through the promotion of collaboration and the harnessing of automation, DevOps endeavors to optimize the software delivery pipeline, empowering organizations to efficiently and expeditiously deliver top-tier software products and services.

Key Principles of DevOps

Collaboration: DevOps champions the concept of seamless collaboration between development and operations teams. This approach dismantles the conventional silos, cultivating communication and synergy.

Automation: Automation is the crucial for DevOps. It entails the utilization of tools and scripts to automate mundane and repetitive tasks, such as code integration, testing, and deployment. Automation not only curtails errors but also accelerates the software delivery process.

Continuous Integration (CI): Continuous Integration (CI) is the practice of automatically combining code alterations into a shared repository several times daily. This enables teams to detect integration issues in the embryonic stages of development, expediting resolutions.

Continuous Delivery (CD): Continuous Delivery (CD) is an extension of CI, automating the deployment process. CD guarantees that code modifications can be swiftly and dependably delivered to production or staging environments.

Monitoring and Feedback: DevOps places a premium on real-time monitoring of applications and infrastructure. This vigilance facilitates the prompt identification of issues and the accumulation of feedback for incessant enhancement.

Core Practices of DevOps

Infrastructure as Code (IaC): Infrastructure as Code (IaC) encompasses the management and provisioning of infrastructure using code and automation tools. This practice ensures uniformity and scalability in infrastructure deployment.

Containerization: Containerization, expressed by tools like Docker, covers applications and their dependencies within standardized units known as containers. Containers simplify deployment across heterogeneous environments.

Orchestration: Orchestration tools, such as Kubernetes, oversee the deployment, scaling, and monitoring of containerized applications, ensuring judicious resource utilization.

Microservices: Microservices architecture dissects applications into smaller, autonomously deployable services. Teams can fabricate, assess, and deploy these services separately, enhancing adaptability.

Benefits of DevOps

When an organization embraces DevOps, it doesn't merely adopt a set of practices; it unlocks a treasure of benefits that can revolutionize its approach to software development and IT operations. Let's delve deeper into the wealth of advantages that DevOps bequeaths:

1. Faster Time to Market: In today's competitive landscape, speed is of the essence. DevOps expedites the software delivery process, enabling organizations to swiftly roll out new features and updates. This acceleration provides a distinct competitive edge, allowing businesses to respond promptly to market demands and stay ahead of the curve.

2. Improved Quality: DevOps places a premium on automation and continuous testing. This relentless pursuit of quality results in superior software products. By reducing manual intervention and ensuring thorough testing, DevOps minimizes the likelihood of glitches in production. This improves consumer happiness and trust in turn.

3. Increased Efficiency: The automation-centric nature of DevOps eliminates the need for laborious manual tasks. This not only saves time but also amplifies operational efficiency. Resources that were once tied up in repetitive chores can now be redeployed for more strategic and value-added activities.

4. Enhanced Collaboration: Collaboration is at the heart of DevOps. By breaking down the traditional silos that often exist between development and operations teams, DevOps fosters a culture of teamwork. This collaborative spirit leads to innovation, problem-solving, and a shared sense of accountability. When teams work together seamlessly, extraordinary results are achieved.

5. Increased Resistance: The ability to identify and address issues promptly is a hallmark of DevOps. Real-time monitoring and feedback loops provide an early warning system for potential problems. This proactive approach not only prevents issues from escalating but also augments system resilience. Organizations become better equipped to weather unexpected challenges.

6. Scalability: As businesses grow, so do their infrastructure and application needs. DevOps practices are inherently scalable. Whether it's expanding server capacity or deploying additional services, DevOps enables organizations to scale up or down as required. This adaptability ensures that resources are allocated optimally, regardless of the scale of operations.

7. Cost Savings: Automation and effective resource management are key drivers of long-term cost reductions. By minimizing manual intervention, organizations can save on labor costs. Moreover, DevOps practices promote efficient use of resources, resulting in reduced operational expenses. These cost savings can be channeled into further innovation and growth.

In summation, DevOps transcends being a fleeting trend; it constitutes a transformative approach to software development and IT operations. It champions collaboration, automation, and incessant improvement, capacitating organizations to respond to market vicissitudes and customer requisites with nimbleness and efficiency.

Whether you aspire to elevate your skills, embark on a novel career trajectory, or remain at the vanguard in your current role, ACTE Technologies is your unwavering ally on the expedition of perpetual learning and career advancement. Enroll today and unlock your potential in the dynamic realm of technology. Your journey towards success commences here. Embracing DevOps practices has the potential to usher in software development processes that are swifter, more reliable, and of higher quality. Join the DevOps revolution today!

10 notes

·

View notes

Text

Why DevOps and Microservices Are a Perfect Match for Modern Software Delivery

In today’s time, businesses are using scalable and agile software development methods. Two of the most transformative technologies, DevOps and microservices, have achieved substantial momentum. Both of these have advantages, but their full potential is seen when used together. DevOps gives automation and cooperation, and microservices divide complex monolithic apps into manageable services. They form a powerful combination and allow faster releases, higher quality, and more scalable systems.

Here's why DevOps and microservices are ideal for modern software delivery:

1. Independent Deployments Align Perfectly with Continuous Delivery

One of the best features of microservices is that each service can be built, tested, and deployed separately. This decoupling allows businesses to release features or changes without building or testing the complete program. DevOps, which focuses on continuous integration and delivery (CI/CD), thrives in this environment. Individual microservices can be fitted into CI/CD pipelines to enable more frequent and dependable deployments. The result is faster innovation cycles and reduced risk, as smaller changes are easier to manage and roll back if needed.

2. Team Autonomy Enhances Ownership and Accountability

Microservices encourage small, cross-functional teams to take ownership of specialized services from start to finish. This is consistent with the DevOps principle of breaking down the division between development and operations. Teams that receive experienced DevOps consulting services are better equipped to handle the full lifecycle, from development and testing to deployment and monitoring, by implementing best practices and automation tools.

3. Scalability Is Easier to Manage with Automation

Scaling a monolithic application often entails scaling the entire thing, even if only a portion is under demand. Microservices address this by enabling each service to scale independently based on demand. DevOps approaches like infrastructure-as-code (IaC), containerization, and orchestration technologies like Kubernetes make scaling strategies easier to automate. Whether scaling up a payment module during the holiday season or shutting down less-used services overnight, DevOps automation complements microservices by ensuring systems scale efficiently and cost-effectively.

4. Fault Isolation and Faster Recovery with Monitoring

DevOps encourages proactive monitoring, alerting, and issue response, which are critical to the success of distributed microservices systems. Because microservices isolate failures inside specific components, they limit the potential impact of a crash or performance issue. DevOps tools monitor service health, collect logs, and evaluate performance data. This visibility allows for faster detection and resolution of issues, resulting in less downtime and a better user experience.

5. Shorter Development Cycles with Parallel Workflows

Microservices allow teams to work on multiple components in parallel without waiting for each other. Microservices development services help enterprises in structuring their applications to support loosely connected services. When combined with DevOps, which promotes CI/CD automation and streamlined approvals, teams can implement code changes more quickly and frequently. Parallelism greatly reduces development cycles and enhances response to market demands.

6. Better Fit for Cloud-Native and Containerized Environments

Modern software delivery is becoming more cloud-native, and both microservices and DevOps support this trend. Microservices are deployed in containers, which are lightweight, portable, and isolated. DevOps tools are used to automate processes for deployment, scaling, and upgrades. This compatibility guarantees smooth delivery pipelines, consistent environments from development to production, and seamless rollback capabilities when required.

7. Streamlined Testing and Quality Assurance

Microservices allow for more modular testing. Each service may be unit-tested, integration-tested, and load-tested separately, increasing test accuracy and speed. DevOps incorporates test automation into the CI/CD pipeline, guaranteeing that every code push is validated without manual intervention. This collaboration results in greater software quality, faster problem identification, and reduced stress during deployments, especially in large, dynamic systems.

8. Security and Compliance Become More Manageable

Security can be implemented more accurately in a microservices architecture since services are isolated and can be managed by service-level access controls. DevOps incorporates DevSecOps, which involves integrating security checks into the CI/CD pipeline. This means security scans, compliance checks, and vulnerability assessments are performed early and frequently. Microservices and DevOps work together to help enterprises adopt a shift-left security approach. They make securing systems easier while not slowing development.