#Neuron-On-A-Chip Model

Text

Neuron-On-A-Chip Model

Through our neurochip customization service, you can design the pattern shape and topology of the chip yourself to control the growth of cell bodies and neurite extensions, so that the nerve cells polarize in a chosen direction and their neurites elongate along the intended paths. Learn more about Neuron-On-A-Chip Model.

0 notes

Text

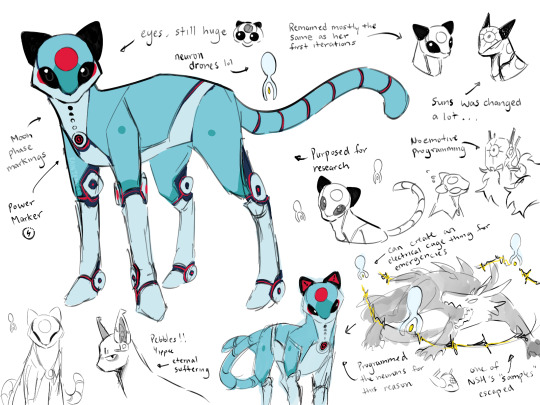

Moon and Pebbles!! Yippee

oh the woes of being a flesh creature surrounded by supercomputer gods,, I got sad drawing him hhh

more about them under the line :>

Moon! She and Suns were among the first successful projects, and both were more test models/therapeutic companions than anything else. They were both restructured to fit their new functions, but Suns has obviously undergone more significant changes... Moon is kept inside to assist with research and computational stuff. She's a lab cat. She generally looks more like a normal creature and has a friendly appearance, because her creators (i guess it would be the ancients) would be seeing her frequently and would rather have a friendly face, something easily perceived as nonthreatening, as opposed to Suns' weaponry and NSH's extra limbs and spikes. She doesn't have a screen face like NSH, so she expresses emotions mostly through body language. Moon never goes outside, so there is no need for solar panel components like Suns' or NSH's. She has internal stored power that can last for quite a while but still needs to be recharged? I imagine the neuron fly drones would also assist in that department. The drones still function somewhat like her portable processing servers/braincells. She has also programmed a defensive protocol into them: they can create small bits of electricity to use in dire moments. They were initially programmed to keep track of NSH's samples, which sometimes escape him.

Pebbles is a purposed organism. He is a whole entire organic cat. He was born in the lab, in a chaotic time when resources were low. He has a mark of communication. He also has a brain chip through which he can access (basically) the cloud where the others upload information. He is also a lab cat, so this is crucial to his role. He did try to remove it once when he was younger, and it backfired horribly; now he has a mechanical ear and eye. He still feels out of place for obvious reasons, being the only creature of organic origin amongst his peers.

He is closest to Moon, who had a role in caring for and raising him. She did not know a thing about caring for a living being but did her best. Pebbles does not like being confined to the facility. The suggestion and influence the brain chip has on him sometimes clash with his thoughts. He is very aware of the limitations it puts on him to not leave. He envies NSH and Suns a lot for being able to do what he can't. He often downloads the maps they create and reads NSH's sample studies in his spare time. He also likes seeing the lizards NSH brings back, from a distance.

I think in the time that Pebbles exists, NSH is not very active. Due to the low resources and chaotic season, NSH is often in low power mode, which means fewer expeditions outside and more time just, half asleep. And when the weather becomes more sustainable, NSH would be sent on long outings to gather as much as possible before being powered down again. So instead of hearing stories from NSH, Pebbles seeks out Suns and UI. (Actually I think everyone is kind of in low power mode here; Suns does not wander as far.)

erhm i think he tries to leave the place and then gets sick or something,,,im still thinking..

#rain world#rw downpour#five pebbles#looks to the moon#rw iterator#rain world au#sorry pebbles is in the most inopportune position at any given moment#i got sad drawing him because of all the shit he may or may not go through#raintarts

565 notes

Text

Scientists Gingerly Tap into Brain's Power

From: USA Today - 10/11/04 - page 1B. By: Kevin Maney

Scientists are developing technologies that read brainwave signals and translate them into actions, which could lead to neural prosthetics, among other things. Cyberkinetics Neurotechnology Systems' Braingate is an example of such technology: Braingate has already been deployed in a quadriplegic, allowing him to control a television, open email, and play the computer game Pong using sensors implanted into his brain that feed into a computer. Although "On Intelligence" author Jeff Hawkins praises the Braingate trials as a solid step forward, he cautions that "Hooking your brain up to a machine in a way that the two could communicate rapidly and accurately is still science fiction." Braingate was inspired by research conducted at Brown University by Cyberkinetics founder John Donoghue, who implanted sensors in primate brains that picked up signals as the animals played a computer game by manipulating a mouse; the sensors fed into a computer that looked for patterns in the signals, which were then translated into mathematical models by the research team. Once the computer was trained on these models, the mouse was eliminated from the equation and the monkeys played the game by thought alone. The Braingate interface consists of 100 sensors attached to a contact-lens-sized chip that is pressed into the surface of the cerebral cortex; the device can listen to as many as 100 neurons simultaneously, and the readings travel from the chip to a computer through wires. Meanwhile, Duke University researchers have also implanted sensors in primate brains to enable neural control of robotic limbs. The Defense Advanced Research Projects Agency (DARPA) is pursuing a less invasive solution by funding research into brain-machine interfaces that can read neural signals externally, for such potential applications as thought-controlled flight systems.
Practical implementations will not become a reality until the technology is sufficiently cheap, small, and wireless, and then ethical and societal issues must be addressed.
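The decoding idea described above (record neural activity while the animal moves, fit a mathematical model that maps activity to movement, then drive the cursor from the model alone) can be sketched in a few lines. This is a minimal illustration under stated assumptions: three simulated cosine-tuned neurons and an ordinary least-squares linear decoder. The tuning model, the noise level, and all function names are hypothetical; this is not the actual Braingate or Brown University decoder.

```python
import math
import random


def solve(a, b):
    """Solve the small linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_decoder(rates, targets):
    """Least-squares weights w so that sum_j w[j] * rates[t][j] approximates targets[t]."""
    k = len(rates[0])
    ata = [[sum(r[i] * r[j] for r in rates) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * y for r, y in zip(rates, targets)) for i in range(k)]
    return solve(ata, atb)


def simulate(n_samples, rng, preferred=(0.0, 2 * math.pi / 3, 4 * math.pi / 3)):
    """Hypothetical cosine-tuned neurons: rate = 10 + 5*cos(direction - preferred) + noise."""
    rates, x_velocity = [], []
    for _ in range(n_samples):
        theta = rng.uniform(0, 2 * math.pi)  # intended movement direction
        rates.append([10 + 5 * math.cos(theta - p) + rng.gauss(0, 0.5) for p in preferred])
        x_velocity.append(math.cos(theta))  # horizontal cursor velocity to decode
    return rates, x_velocity


rng = random.Random(42)
train_rates, train_vx = simulate(500, rng)  # "mouse" phase: the movement is observed
weights = fit_decoder(train_rates, train_vx)

test_rates, test_vx = simulate(200, rng)    # "thought alone" phase: decode from activity only
decoded = [sum(w * r for w, r in zip(weights, row)) for row in test_rates]
mean_abs_error = sum(abs(d - v) for d, v in zip(decoded, test_vx)) / len(test_vx)
print(f"mean absolute decoding error: {mean_abs_error:.3f}")
```

A linear decoder is used here only because it is the simplest model that shows the train-then-decode pattern; real systems may use different models.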

7 notes

Text

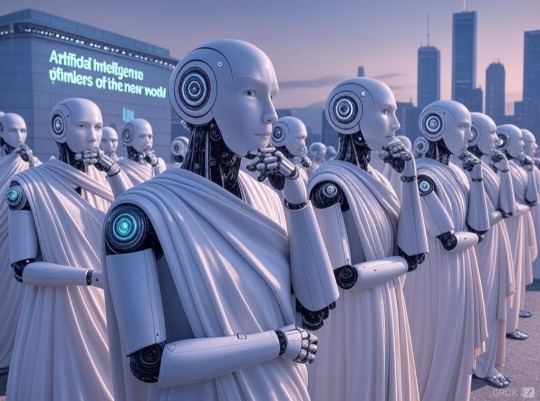

Ai Philosophers: Thinkers of the New World

By Arjuwan Lakkdawala

Ink in the Internet

After writing was established in the world (its origins go back to Mesopotamia, ancient Iraq, closely followed by ancient Egypt and China), the records show Greek philosophers making tremendous efforts to put into words the mysteries of the natural world, reason and logic, ideas of what makes a good life, and, of course, questions of ethics and existence. In my research I found nine Greek philosophers; to mention a few of them: Pythagoras (famous for the Pythagorean theorem), Protagoras, Socrates, Plato, and Aristotle. It is said that Western thought is highly influenced by them.

Side Note: "Although the theorem has long been associated with Greek mathematician-philosopher Pythagoras (c. 570–500/490 BCE), it is actually far older. Four Babylonian tablets from circa 1900–1600 BCE indicate some knowledge of the theorem, with a very accurate calculation of the square root of 2."

As history unfolded, many more philosophers expressed their ideas, and today we have an infinite maze of ideologies.

In a free world everyone believes what they want to believe, but if a belief is harmful to society, causes self-harm, or is illogical, then it should be illegal and considered wrong.

Up to now we have done all the thinking, but a new class of very different thinkers is emerging: Artificial Intelligence. Computer scientists are feeding Ai practically limitless big data, and concepts from neuroscience are being used to develop more powerful computing hardware, such as neuromorphic chips.

A study published in Neuron in 2022 explains "Bioneural Circuitry." In simple terms, neurons use electricity to send signals, so it is possible to connect them, through electrodes, with the electrical signals of artificial intelligence systems. This could be similar to the technology used in Neuralink implants.

A very futuristic idea that occurred to me: what if a hypothetical Ai (Star-Sci) were used as a teacher from kindergarten to university graduation, with its information constantly upgraded as the years go by? This teacher would teach and even give tests: an Ai model that teaches everything. Instead of naming universities or a string of teachers, students would be known only as pupils of a certain Ai. This is of course a very sci-fi idea, but in realistic terms, as I see anarchy increasing in the world, along with cases of teachers behaving inappropriately with students, I think Ai teachers will be looked upon favourably pretty soon.

Afterthought: academics could contribute, for free or paid, to the Star-Sci lifelong teaching system. This could be a new style of university where students interact only with Ai, preventing any non-educational use of the institution or corruption from fellow students or teachers.

We in this current age are going through the greatest social experiment: the Internet, which is a merging of the human mind with artificial intelligence. It is changing the world in ways we cannot predict, because this has never happened before; we have no previous case studies.

Ai is reportedly often used to write essays. I personally use search engines for my research and avoid using Ai for any of my writing. I don't want my mind to rely on artificial intelligence, because I believe it will corrode my much superior human intelligence.

I think artificial intelligence is good to use in the management of big data, but not in any paper with concepts within the ability of human calculation and comprehension.

Scientists are trying to come up with a unified theory of consciousness. According to research and most human experience, we have an outer sense through which we comprehend empirical evidence and what we immediately experience, and an inner consciousness in which we think, form memories, and plan ahead. The outer world is not really complicated; it is the inner world of thoughts that can warp into unknown manifestations. Some of these neurological paths can lead to genius and others to insanity.

These unknown manifestations of consciousness are exactly what define the difference between human thinking and Ai programming. Artificial intelligence cannot have thoughts at all; it can only compute programmed instructions based on the data it has. But with big data, this programming could in the near future start to resemble consciousness. Because we do not, and mentally cannot, compute big data ourselves, the output Ai derives from it could seem to us like independent thoughts.

It is crucial to understand this about Ai as technology integrates it further in our lives and we interact with it increasingly.

At the same time we must avoid using it where we can use our human intelligence. This is of prime importance if we are to preserve the excellence of our cognitive abilities.

Neuroscience studies reveal that neural networks that are not used get lost. We don't really need scientific experiments to confirm this, as we can immediately tell when our memory improves or we get better at any intellectual skill, and it is well known that this only happens with practice. Therefore, relying on Ai will gradually result in mental decline.

As we think, our brains form complex neural networks that improve our intelligence regarding a field of study or a concept.

Thoughts are different from feelings; we have often heard that the brain is logical and sensible while the heart isn't. Studies have confirmed that the heart has its own neural network, known as the intrinsic cardiac nervous system (ICNS). While this does not show that the ICNS is why we have feelings that can contradict our intelligence, it is an interesting hypothetical explanation of how feelings and logical thoughts can be in conflict with each other.

Being irrational, illogical, or hateful, along with several other destructive traits that cause self-harm or harm to society, is unfortunately observed more often these days.

Mental health is a very complicated and difficult terrain to traverse; we are nothing without our consciousness. Our whole quality of life depends on the level of our intelligence and the health of our minds, which are maintained and achieved through a good upbringing, a secure environment, a healthy diet, and a rounded education with physical exercise.

There are foods that improve the health of our brains, and foods that increase the risk of Alzheimer's disease and dementia and have other negative effects. Search online for articles about brain-healthy foods.

(I have added notes to this article in the comments section.)

Arjuwan Lakkdawala is an author and independent science researcher.

X/Twitter/Instagram: Spellrainia

Email: [email protected]

Copyright ©️ Arjuwan Lakkdawala 2024

Sources:

MBF Bioscience - Researchers map and explore the heart's "little brain"

Psychology Today - Neuroplasticity - reviewed by Psychology Today staff

Medium - Neuromorphic Computing

ABclonal Knowledge Base - Melding Neuroscience with Computer Technology - Kin Leung

Pythagorean Theorem - written and fact-checked by the editors of Encyclopaedia Britannica

Singularity Hub - Scientists are Working Towards a Unified Theory of Consciousness - Shelly Fan

The Collector - 9 Greek Philosophers who Shaped the World - Eddie Hodsdon, BA Professional Writing, member Canterbury Archaeological Trust

#arjuwan lakkdawala#biology#nature#science#ink in the internet#bioengineering#physics#intelligence#artificial intelligence#neuromorphic chips#greek philosophers#ai philosophers#phythagoras theorem

12 notes

Text

Unveiling the Mystery: Understanding the Inner Workings of Generative AI

Generative AI took the world by storm in the months after ChatGPT, a chatbot based on OpenAI’s GPT-3.5 neural network model, was released on November 30, 2022. GPT stands for generative pretrained transformer, words that mainly describe the model’s underlying neural network architecture.

At the engineering level, we know how they work in detail, because humans designed their various neural network implementations to do exactly what they do, iterating on those designs over decades to make them better and better. AI developers know exactly how the neurons are connected; they engineered each model’s training process.

Here are the steps to generative AI development:

Start with the brain: Jeff Hawkins, author of “On Intelligence,” hypothesized that, at the neuron level, the brain works by continuously predicting what’s going to happen next and then learning from the differences between its predictions and subsequent reality. To improve its predictive ability, the brain builds an internal representation of the world. In his theory, human intelligence emerges from that process.
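The idea of learning from the gap between prediction and reality has a simple machine-learning counterpart: the delta (least-mean-squares) rule, in which a model predicts the next value and nudges its parameter in proportion to the prediction error. The sketch below assumes a toy sequence generated by x[t+1] = 0.8 * x[t] + noise. It illustrates error-driven prediction in general and is not Hawkins' own cortical theory or any production model's training code.

```python
import random


def learn_next_step_predictor(steps=20000, lr=0.001, true_coef=0.8, seed=0):
    """Learn w in the prediction x_next ~ w * x_now using only the prediction error."""
    rng = random.Random(seed)
    w = 0.0  # initial guess: "nothing carries over to the next step"
    x = 0.0
    for _ in range(steps):
        x_next = true_coef * x + rng.gauss(0, 1)  # what actually happens next
        prediction = w * x                         # what the model expected
        error = x_next - prediction                # the "surprise"
        w += lr * error * x                        # delta rule: adjust in proportion to the surprise
        x = x_next
    return w


w = learn_next_step_predictor()
print(f"learned coefficient: {w:.3f} (true value 0.8)")
```

A small learning rate trades slower learning for less jitter in the learned coefficient.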

Build an artificial neural network: All generative AI models begin with an artificial neural network encoded in software. AI researchers even call each neuron a “cell,” and each cell contains a formula relating it to other cells in the network—mimicking the way that the connections between brain neurons have different strengths.
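The “formula” each artificial neuron applies is typically a weighted sum of its inputs plus a bias, passed through a nonlinearity. A minimal sketch, assuming a ReLU activation (one common choice; the text above does not name one):

```python
def relu(z: float) -> float:
    """Rectified linear unit: pass positive values through, clip negatives to zero."""
    return max(0.0, z)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: each weight sets the strength of one incoming connection."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input connection")
    return relu(sum(x * w for x, w in zip(inputs, weights)) + bias)


# 2*0.5 + 1*(-1.0) + 0.1 = 0.1, which ReLU passes through unchanged
print(neuron([2.0, 1.0], [0.5, -1.0], 0.1))
```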

Each layer may have tens, hundreds, or thousands of artificial neurons, but the number of neurons is not what AI researchers focus on. Instead, they measure models by the number of connections between neurons. The strengths of these connections vary based on their cell equations’ coefficients, which are more generally called “weights” or “parameters.”
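Counting “parameters” for a simple fully connected network is plain arithmetic: every connection between adjacent layers carries one weight, and each non-input neuron usually adds one bias. The layer sizes below are hypothetical, and transformer models such as GPT also contain attention and embedding matrices that this sketch does not model.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights (one per connection between adjacent layers) plus one bias per non-input neuron."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # connection weights
        total += n_out         # biases
    return total


# 4 inputs -> 8 hidden -> 3 outputs: (4*8 + 8) + (8*3 + 3) = 40 + 27 = 67
print(count_parameters([4, 8, 3]))
```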

Teach the newborn neural network model: Large language models are given enormous volumes of text to process and tasked with making simple predictions, such as the next word in a sequence or the correct order of a set of sentences. In practice, though, neural network models work in units called tokens, not words.
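To make “tokens, not words” and “predict the next token” concrete, here is a toy sketch. It assumes a tiny hand-picked vocabulary, greedy longest-match tokenization, and a bigram counter as the “model.” Real LLMs such as GPT use learned subword vocabularies (for example, byte-pair encoding) and predict with a neural network, so every name and value here is hypothetical.

```python
from collections import Counter, defaultdict

VOCAB = ["the", "cat", "sat", "on", "mat", "s"]  # hypothetical toy vocabulary


def tokenize(text: str, vocab: list[str] = VOCAB) -> list[str]:
    """Greedy longest-match tokenization; unknown characters become single-character tokens."""
    by_length = sorted(vocab, key=len, reverse=True)
    tokens = []
    for word in text.split():
        i = 0
        while i < len(word):
            piece = next((v for v in by_length if word.startswith(v, i)), word[i])
            tokens.append(piece)
            i += len(piece)
    return tokens


def train_bigram(tokens: list[str]) -> dict[str, Counter]:
    """Count which token follows which: the simplest possible next-token predictor."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], token: str) -> str:
    counts = model.get(token)
    if not counts:
        raise KeyError(f"no continuation seen for {token!r}")
    return counts.most_common(1)[0][0]


corpus = tokenize("the cat sat on the mat the cats sat on the mat")
model = train_bigram(corpus)
print(tokenize("cats"))            # the single word "cats" becomes two tokens
print(predict_next(model, "sat"))  # "on" follows "sat" every time in the corpus
```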

Example: Although it’s controversial, a group of more than a dozen researchers who had early access to GPT-4 in fall 2022 concluded that the intelligence with which the model responds to complex challenges they posed to it, and the broad range of expertise it exhibits, indicates that GPT-4 has attained a form of general intelligence. In other words, it has built up an internal model of how the world works, just as a human brain might, and it uses that model to reason through the questions put to it. One of the researchers told the podcast “This American Life” that he had a “holy s---” moment when he asked GPT-4 to, “Give me a chocolate chip cookie recipe, but written in the style of a very depressed person,” and the model responded: “Ingredients: 1 cup butter softened, if you can even find the energy to soften it. 1 teaspoon vanilla extract, the fake artificial flavor of happiness. 1 cup semi-sweet chocolate chips, tiny little joys that will eventually just melt away.”

2 notes

Text

CL1: The Biological Computer That Plays Pong with Actual Brain Cells

Greetings folks! Strap in, because this one's not science fiction — it’s science right now. What you’re about to read involves a computer that thinks using actual, living brain cells. Cortical Labs has built a system that doesn’t just simulate intelligence — it is intelligence. Meet CL1: a hybrid of silicon, stem cells, and sheer bioengineering brilliance.

TL;DR:

Cortical Labs built a biological computer using living neurons grown from stem cells. These neurons live on a chip, respond to stimuli, and learn to play Pong through feedback, without being programmed with the game's rules: just raw, biological learning. It’s a new chapter in computing where machines grow brains instead of running on silicon alone.

🧠 So, What Is CL1?

Imagine this: you take living neurons — derived from either human or mouse induced pluripotent stem cells (iPSCs) — and grow them on top of a microchip covered in electrodes. These electrodes can talk to the neurons using electrical pulses.

Now give that system a goal — say, playing Pong — and watch what happens. With no pre-programming, these little neuron networks start to learn, just by reacting to inputs and adjusting over time.

This isn't a simulation. These are real cells doing real-time problem solving. Welcome to the era of wetware.

⚙️ CL1's Technical Side:

Neurons: Human/mouse neurons derived from iPSCs

Interface: Multi-Electrode Array (MEA)

OS: biOS (the base biological operating system)

Feedback Loop: Electrical stimulation + live response tracking

Learning Mechanism: Hebbian plasticity ("neurons that fire together wire together")
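The Hebbian rule named in the list above is often written as Δw = η · pre · post: a connection strengthens when the neurons on both sides are active together. Here is a minimal software sketch with hypothetical activity patterns and learning rate. It illustrates the rule itself, not how CL1's living neurons or its software actually update.

```python
def hebbian_train(events, lr=0.1, cycles=10):
    """Plain Hebbian learning: w_i += lr * pre_i * post for every event."""
    w_a = w_b = 0.0
    for _ in range(cycles):
        for pre_a, pre_b, post in events:
            w_a += lr * pre_a * post
            w_b += lr * pre_b * post
    return w_a, w_b


# Hypothetical activity (pre_a, pre_b, post): input A fires every time the output fires,
# while input B fires twice per cycle but coincides with the output only once.
events = [(1, 0, 1), (1, 1, 1), (0, 1, 0), (0, 0, 0)]
w_a, w_b = hebbian_train(events)
print(f"w_a = {w_a:.2f}, w_b = {w_b:.2f}")
```

Plain Hebbian growth is unbounded, which is why practical models usually add some form of weight normalization or decay.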

🧬 How the Heck Does This Actually Work?

Let’s break it down — both biologically and technically:

👾 The Digital-to-Bio Feedback Loop:

CL1 is a closed-loop system:

The digital system tells the neurons what's happening (e.g., “pong ball moving left”)

Neurons fire back electrical responses

The system interprets those firings

Correct response? They get rewarded. Wrong one? They get a gentle digital slap

Over time, the neuron network self-organizes, learning the task through synaptic plasticity
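The closed loop in the list above (stimulate, read the response, interpret it, give feedback, repeat) can be imitated entirely in software. In this sketch a table of response preferences stands in for the neuron culture. The three-zone encoding, the +1/-1 feedback, and all names are hypothetical, and this is neither Cortical Labs' biOS interface nor the feedback scheme used with real cells.

```python
import math
import random

N_ZONES = 3          # hypothetical encoding: ball is below, level with, or above the paddle
MOVES = (-1, 0, +1)  # paddle moves down, stays, or moves up


def run_closed_loop(trials=3000, lr=0.1, seed=0):
    """Software stand-in for the stimulate -> respond -> interpret -> feedback loop."""
    rng = random.Random(seed)
    # preference[zone][move_idx] plays the role of the culture's response tendencies
    preference = [[0.0] * len(MOVES) for _ in range(N_ZONES)]
    for _ in range(trials):
        zone = rng.randrange(N_ZONES)                    # 1. encode the game state as a stimulus
        weights = [math.exp(p) for p in preference[zone]]
        move_idx = rng.choices(range(len(MOVES)), weights=weights)[0]  # 2. the "network" responds
        correct = move_idx == zone                       # 3. interpret: did the paddle follow the ball?
        reward = 1.0 if correct else -1.0                # 4. reward, or the "gentle digital slap"
        preference[zone][move_idx] += lr * reward        # 5. response tendencies adapt
    return preference


prefs = run_closed_loop()
learned = [max(range(len(MOVES)), key=lambda i: row[i]) for row in prefs]
print("preferred move per zone:", [MOVES[i] for i in learned])
```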

🧪 The Biology Bit:

The neurons are grown from induced pluripotent stem cells (iPSCs) — adult cells reprogrammed into a stem-cell-like state

These are then developed into cortical neurons

The network grows on a multi-electrode array that can both stimulate and read from the cells

🖥 The Tech Stack:

biOS (Biological Operating System): Simulates digital environments (like Pong) and interprets neural activity in real time

Signal Processing Engine: Converts biological signals into digital responses

Environmental Control: Keeps the neuron dish alive with precise nutrient feeds, CO₂ levels, and temperature management
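A common first step in turning multi-electrode-array recordings into digital events is threshold-based spike detection, with the noise level estimated robustly as median(|x|) / 0.6745, an estimator widely used in spike sorting. The sketch below assumes a synthetic single-channel trace, a threshold of 6 noise standard deviations, and a short refractory window. It shows a generic technique, not CL1's actual signal-processing engine.

```python
import random
import statistics


def detect_spikes(trace, k=6.0, refractory=20):
    """Return sample indices where the trace drops below -k * sigma.

    sigma is estimated as median(|x|) / 0.6745, which is robust to the spikes themselves.
    """
    sigma = statistics.median(abs(x) for x in trace) / 0.6745
    threshold = -k * sigma
    spikes, last = [], -refractory
    for i, x in enumerate(trace):
        if x < threshold and i - last >= refractory:
            spikes.append(i)
            last = i
    return spikes


# Synthetic recording (hypothetical data): unit-variance noise plus three large negative spikes
rng = random.Random(1)
trace = [rng.gauss(0.0, 1.0) for _ in range(1000)]
for t in (100, 300, 500):
    trace[t] = -10.0
print(detect_spikes(trace))
```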

💡 Why This Is a Huge Freaking Deal

This isn't about playing Pong. It’s about building a new class of machines that learn like we do. That adapt. That grow. This rewires the concept of computing from algorithm-based logic to biological self-organization.

Potential future uses:

Ultra-low-power, self-learning bio-AI chips

Medical models for brain diseases, drug testing, or trauma simulation

Robotic systems that use real neurons for adaptive control

In short: this is the birth of organic computing.

🔮 Can We Upload Knowledge Yet? Like Matrix Style?

Not quite. Right now, CL1 learns via real-time feedback — it’s still trial-and-error. But researchers are exploring:

Pre-conditioning neural responses

Chemical memory injection

Patterned stimulation to train in behaviors

In the future? We might literally write instincts into neural systems like flashing a bootloader. One day, your drone might come preloaded with lizard-brain reflexes — not software, but neurons.

🧱 What Comes Next?

We’re at the beginning of something radical:

Neural prosthetics with muscle memory

Bio-computers that can evolve new solutions on their own

Robots that aren’t just “smart” — they’re alive-ish

CL1 is laying the foundation for a new kind of intelligence — not modeled after the brain, but actually made of one.

#CL1#BiologicalComputing#Neurotech#CorticalLabs#LivingAI#iPSC#BrainComputerInterface#SyntheticBiology#Wetware#Futurism#BioAI#StemCells#Neuroscience#NextGenComputing#MEAtechnology#HumanNeuronAI#Biocomputing#TechFutures#PostSiliconEra#PongBrain#cyberpunk#technology

1 note

Text

Critical Comparison Between Neuromorphic Architectures and Spectral Optoelectronic Hardware for Physical Artificial Intelligence

\documentclass[11pt]{article}
\usepackage[utf8]{inputenc}
\usepackage[english]{babel}
\usepackage{amsmath, amssymb, amsfonts}
\usepackage{geometry}
\usepackage{graphicx}
\usepackage{hyperref}
\geometry{margin=1in}
\title{Critical Comparison Between Neuromorphic Architectures and Spectral Optoelectronic Hardware for Physical Artificial Intelligence}
\author{Renato Ferreira da Silva \\ \texttt{[email protected]} \\ ORCID: \href{https://orcid.org/0009-0003-8908-481X}{0009-0003-8908-481X}}
\date{\today}

\begin{document}

\maketitle

\begin{abstract} This article presents a comparative analysis between two next-generation computing paradigms, defined by their ability to exceed conventional CMOS limits in latency, power efficiency, and reconfigurability. These include commercial neuromorphic chips, such as Intel Loihi and IBM TrueNorth, and an emerging optoelectronic hardware architecture based on spectral operators. The comparison includes architecture, energy efficiency, scalability, latency, adaptability, and application domains. We argue that the spectral paradigm not only overcomes the limitations of spiking neural networks in terms of reconfigurability and speed, but also offers a continuous and physical model of inference suitable for embedded AI, physical simulations, and high-density symbolic computing. \end{abstract}

\section{Introduction} The demand for more efficient, faster, and energy-sustainable computing architectures has led to the development of neuromorphic chips that emulate biological neural networks with high parallelism and low power. Although promising, these devices operate with discrete spike-based logic and face limitations in continuous and symbolic tasks. These limitations are particularly problematic in domains requiring real-time analog signal processing (e.g., high-frequency sensor fusion in autonomous vehicles), symbolic manipulation (e.g., theorem proving or symbolic AI planning), or continuous dynamical system modeling (e.g., fluid dynamics in climate models). In parallel, a new approach emerges based on spectral operators — inspired by the Hilbert–Pólya conjecture — which models computation as the physical evolution of eigenvalues in reconfigurable optical structures. This approach enables the processing of information in a fundamentally analog and physically continuous domain.

\section{Theoretical Foundations} \subsection{Spectral Optoelectronic Hardware} The spectral architecture models computation as the eigenvalue dynamics of a Schrödinger operator:
\[
\mathcal{L}\psi(x) = -\frac{d^2}{dx^2}\psi(x) + V(x)\psi(x) = \lambda \psi(x),
\]
where \( V(x) \), parameterized by Hermite polynomials, is adjusted via optical modulation. The eigenvalues \( \lambda \) correspond to computational states, enabling continuous analog processing. This modeling approach is advantageous because it allows computation to be directly grounded in physical processes governed by partial differential equations, offering superior performance for tasks involving continuous state spaces, wave propagation, or quantum-inspired inference.

\subsection{Neuromorphic Chips} \begin{itemize} \item \textbf{Intel Loihi}: Implements spiking neural networks with on-chip STDP learning, where synapses adjust weights based on spike timing. Open-source documentation provides access to architectural specifications, allowing adaptation to different learning rules and topologies. \item \textbf{IBM TrueNorth}: Focused on static inference, with 1 million neurons in fixed connectivity, lacking real-time adaptation. The system emphasizes energy-efficient classification tasks but is constrained in dynamic reconfiguration. \end{itemize}
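For reference, a common textbook (pair-based) form of spike-timing-dependent plasticity is shown below; it is given only as an illustrative assumption and is not the exact learning rule programmed on Loihi. With \( \Delta t = t_{\text{post}} - t_{\text{pre}} \),
\[
\Delta w =
\begin{cases}
A_{+}\, e^{-\Delta t/\tau_{+}}, & \Delta t > 0 \quad \text{(pre before post: potentiation)},\\
-A_{-}\, e^{\Delta t/\tau_{-}}, & \Delta t < 0 \quad \text{(post before pre: depression)},
\end{cases}
\]
where \( A_{\pm} > 0 \) set the maximum weight change and \( \tau_{\pm} \) set the width of the timing window. For example, with \( A_{+} = 0.01 \) and \( \tau_{+} = 20 \) ms, a presynaptic spike arriving 20 ms before the postsynaptic spike gives \( \Delta w = 0.01\, e^{-1} \approx 0.0037 \).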

\section{Technical Comparison}
\begin{table}[h!]
\centering
\begin{tabular}{|l|c|c|c|}
\hline
\textbf{Criterion} & \textbf{Spectral Opto.} & \textbf{Intel Loihi} & \textbf{IBM TrueNorth} \\
\hline
Intra-chip latency & 21 ps & 0.5--1 \textmu s & 1--2 ms \\
Energy per operation & 5 fJ & \textasciitilde1--20 pJ & \textasciitilde26 pJ \\
Reconfigurability & Physical (optical) + logic & Adaptive via spikes & Static \\
3D Scalability & High (optical vias) & Moderate & Low \\
Unit Cost & High (photonic PDKs, \$5000+) & Moderate (\$1000--2000) & Low (\$100s) \\
Application Domain & Physical sim, continuous AI & Robotics, IoT & Static classification \\
\hline
\end{tabular}
\caption{Detailed technical comparison between architectures.}
\end{table}

\section{Use Cases} \subsection{Quantum Materials Simulation} Spectral hardware solves nonlinear Schrödinger equations in real time, whereas neuromorphic systems are limited to discrete approximations. Example: modeling superconductivity in graphene under variable boundary and topological constraints.

\subsection{AI-Powered Medical Diagnosis} Coupled optical sensors detect biomarkers via Raman spectroscopy, with local processing in 21 ps — ideal for high-precision robotic surgery. This setup enables continuous patient-state monitoring without requiring digital post-processing.

\section{Challenges and Limitations} \subsection{Fabrication Complexity} 3D optical via lithography requires submicron precision (<10 nm), increasing costs. Standardized PDKs (e.g., AIM Photonics) and foundry collaborations can mitigate these barriers and enable more affordable prototyping.

\subsection{Optical Nonlinearities} Effects such as four-wave mixing (FWM) degrade signals in dense wavelength-division multiplexing (DWDM) systems. Compensation techniques include photonic neural networks and digital pre-emphasis filters optimized via reinforcement learning frameworks.

\section{Conclusion and Outlook} The spectral optoelectronic architecture offers ultralow latency (21 ps) and energy efficiency (5 fJ/op), outperforming neuromorphic chips in continuous applications. Fabrication and nonlinearity challenges require advances in integrated photonics and optical DSP. Future work should explore integration with noncommutative geometry to provide algebraic invariants over spectral states and enable hybrid quantum-classical information processing.

\end{document}
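The eigenvalue problem in the article can be explored numerically on an ordinary computer. This is a minimal sketch under three illustrative assumptions, none of which are the author's hardware model: the simplest Hermite-related case V(x) = x² (the harmonic oscillator, whose eigenfunctions are Hermite polynomials times a Gaussian and whose exact eigenvalues are 1, 3, 5, ...), a finite-difference grid on [-6, 6] with zero boundary values, and Sturm-sequence bisection for the resulting symmetric tridiagonal matrix.

```python
def sturm_count(diag, off, lam):
    """Number of eigenvalues below lam for a symmetric tridiagonal matrix with constant off-diagonal."""
    count, q = 0, 1.0
    for i, d in enumerate(diag):
        q = d - lam - (off * off / q if i > 0 else 0.0)
        if q == 0.0:
            q = 1e-30  # standard trick: perturb an exact zero pivot slightly
        if q < 0.0:
            count += 1
    return count


def eigenvalue(diag, off, k, tol=1e-10):
    """k-th smallest eigenvalue (k = 0, 1, ...) by bisection on the Sturm count."""
    radius = 2 * abs(off)  # Gershgorin bound on the off-diagonal contribution
    lo, hi = min(diag) - radius, max(diag) + radius
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if sturm_count(diag, off, mid) > k:
            hi = mid  # at least k+1 eigenvalues lie below mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def schrodinger_levels(potential, x_max=6.0, n_points=600, n_levels=3):
    """Finite-difference eigenvalues of -psi'' + V(x) psi = lambda psi with psi(+-x_max) = 0."""
    h = 2 * x_max / (n_points + 1)
    xs = [-x_max + (i + 1) * h for i in range(n_points)]
    diag = [2.0 / h**2 + potential(x) for x in xs]  # -d^2/dx^2 contributes 2/h^2 on the diagonal
    off = -1.0 / h**2                               # ... and -1/h^2 on each off-diagonal
    return [eigenvalue(diag, off, k) for k in range(n_levels)]


levels = schrodinger_levels(lambda x: x * x)
print([round(lam, 3) for lam in levels])
```

Bisection on Sturm counts is chosen here because it needs no linear-algebra library and finds individual eigenvalues of a tridiagonal matrix reliably.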

0 notes

Text

NVLink Fusion: Revolutionizing AI Chip Communication

Have you ever stopped to think about the hardware that powers our AI breakthroughs? I was diving into *The Neuron* today, and they highlighted Nvidia’s new NVLink Fusion, a high-speed link that lets AI chips talk to each other far more efficiently, cutting latency and boosting bandwidth between them. What really struck me is how vital this is for scaling big models. As we push AI to ever-larger sizes, stitching together multiple chips without slowing down becomes mission-critical. My takeaway? When you’re architecting AI systems, don’t just eyeball the GPUs’ specs; look closely at how they connect. A slick interconnect can unlock way more performance than extra cores alone. How do you think faster chip-to-chip communication will change your AI deployments? I’d love to hear your perspective! #AIHardware #Nvidia #AIInfrastructure #ChipDesign #TechTrends
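A back-of-the-envelope model shows why the interconnect can matter as much as raw compute. The sketch makes two assumptions: data-parallel training in which each step computes locally and then synchronizes gradients with a ring all-reduce (whose bandwidth cost is about 2(n-1)/n times the buffer size per GPU), and no overlap between compute and communication. All numbers are hypothetical and are not NVLink Fusion specifications.

```python
def ring_allreduce_seconds(num_bytes: float, n_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth term of a ring all-reduce: each GPU sends and receives 2*(n-1)/n of the buffer."""
    return 2 * (n_gpus - 1) / n_gpus * num_bytes / link_bytes_per_s


def step_seconds(compute_s: float, num_bytes: float, n_gpus: int, link_bytes_per_s: float) -> float:
    """Training-step time, assuming compute and communication do not overlap."""
    return compute_s + ring_allreduce_seconds(num_bytes, n_gpus, link_bytes_per_s)


# Hypothetical workload: 0.1 s of compute per step, 1 GB of gradients, 8 GPUs, 10 GB/s links
base = step_seconds(0.1, 1e9, 8, 10e9)
faster_chips = step_seconds(0.05, 1e9, 8, 10e9)  # twice the compute throughput
faster_links = step_seconds(0.1, 1e9, 8, 20e9)   # twice the interconnect bandwidth
print(f"baseline {base:.4f} s, 2x compute {faster_chips:.4f} s, 2x bandwidth {faster_links:.4f} s")
```

When communication dominates, as in these made-up numbers, doubling link bandwidth shortens the step more than doubling compute does; with a compute-heavy workload the ranking flips.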

0 notes

Text

Live Science: World's first computer that combines human brain with silicon now available

0 notes

Text

World's first computer that combines human brain with silicon now available | Live Science

0 notes

Text

Neuromorphic chips

This is really interesting: they are computer processors designed to work like the human brain, not in software but in hardware. They mimic how neurons and synapses fire, process, and store information. (AI with a brain, not just code.)
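A standard way to sketch “neurons that fire” in software is the leaky integrate-and-fire (LIF) model, an abstraction that many neuromorphic chips implement in some variant. The parameters below (threshold 1, time constant 20 ms, 1 ms steps, unit membrane resistance so the input is in voltage units) are hypothetical, and this is not any particular chip's neuron model.

```python
def lif_spike_times(current, steps=200, dt=1.0, tau=20.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron driven by a constant input (Euler integration)."""
    v = v_rest
    spikes = []
    for t in range(steps):
        # the membrane potential leaks toward rest while integrating the input
        v += dt / tau * (-(v - v_rest) + current)
        if v >= v_threshold:
            spikes.append(t)  # the neuron "fires" ...
            v = v_reset       # ... and its potential resets
    return spikes


print(len(lif_spike_times(1.5)))  # input strong enough to cross threshold: regular spiking
print(len(lif_spike_times(0.8)))  # potential settles toward 0.8, below threshold: no spikes
```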

The emerging real-world use cases are for brain-computer interfaces, autonomous vehicles and drones, robotics and edge AI.

The challenges are that the technology is still in the research or early production stage, it needs new programming models, and scaling it to brain-level complexity is tough but not impossible.

0 notes

Text

The Rise of Neuromorphic Computing: Mimicking the Human Brain for Advanced AI

Neuromorphic computing is an innovative approach to computing that emulates the structure and function of the human brain. This technology utilizes artificial neurons and synapses to process information, offering a paradigm shift from traditional computing architectures, such as the von Neumann model, which relies on separate processing and memory units.

Key Features of Neuromorphic Computing

Parallel Processing: Neuromorphic systems can handle multiple tasks simultaneously, akin to how biological neurons operate. This allows for more efficient data processing and real-time learning capabilities.

Energy Efficiency: The human brain operates on approximately 20 watts while achieving extraordinary computational power. Neuromorphic chips aim to replicate this efficiency, making them suitable for high-performance applications without excessive energy consumption.

Scalability: Neuromorphic architectures are designed to scale; adding more chips increases the number of available neurons, and because memory sits next to processing, they avoid the memory-transfer bottleneck of traditional von Neumann designs.

Applications and Future Potential

Neuromorphic computing has significant implications for artificial intelligence (AI), enabling advancements in machine learning, sensory processing, and autonomous systems. Companies like IBM have developed neuromorphic chips, such as TrueNorth, which demonstrate the potential for complex computations in real-world applications. As research progresses, proponents expect neuromorphic computing to play a crucial role in developing artificial superintelligence and in enhancing AI capabilities beyond current limitations.

Conclusion

The rise of neuromorphic computing represents a promising frontier in technology, merging insights from neuroscience with advanced engineering. By mimicking the human brain's efficiency and adaptability, this approach could revolutionize how we develop and implement AI systems, paving the way for smarter, more responsive technologies in the future.

Written by: Hexahome and Hexadecimal Software

0 notes

Note

Beta intelligence, military-esque

Alice in wonderland

Alters, files, jpegs, bugs, closed systems, open networks

brain chip with memory / data

Information processing updates and reboots

'Uploading' / installing / creating a system of information that can behave as a central information processing unit accessible to large portions of the consciousness. It necessarily needs sufficient data in order to function as such. The unit is bugged with instructions ("error correction") regarding information processing.

It can also behave like a guardian between sensory and extra physical experience.

"Was very buggy at first". Has potential to cause unwanted glitches or leaks, unpredictability, and could malfunction entirely, especially during the initial accessing / updating. I think the large amount of information being synthesized can reroute experiences, motivations, feelings and knowledge to other areas of consciousness, which can cause a domino effect of "disobedience" and/or reprogramming.

I think this volatility is most pronounced during the initial stages of operation, because the error-correcting and rerouting sequences have not been 'perfected' yet and are in their least effective states, learning by trial and error as they operate, graded by whatever instructions or result-seeking input called for the "error correction".

I read the ask about programming people like a computer. Whoever wrote that is not alone. Walter Pitts and Warren McCulloch: do you have any more information about them and what they did to people?

Here is some information for you. Walter Pitts and Warren McCulloch weren't directly involved in the programming of individuals. Their work was dual-use, meaning it had both civilian and military applications.

Exploring the Mind of Walter Pitts: The Father of Neural Networks

McCulloch-Pitts Neuron — Mankind’s First Mathematical Model Of A Biological Neuron

Introduction To Cognitive Computing And Its Various Applications

Cognitive Electronic Warfare: Conceptual Design and Architecture

Security and Military Implications of Neurotechnology and Artificial Intelligence
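The McCulloch-Pitts neuron named in the second link is simple enough to state in a few lines. In the 1943 formulation a neuron is a binary threshold unit: it fires (outputs 1) when the number of active excitatory inputs reaches a threshold, and any active inhibitory input vetoes firing. Networks of such units can compute logical functions such as AND, OR and NOT, which was the paper's central point. The sketch below is a modern paraphrase; the function names are illustrative.

```python
from typing import Sequence


def mcculloch_pitts(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """Binary threshold unit in the style of McCulloch and Pitts (1943).

    All inputs are 0 or 1. Any active inhibitory input vetoes firing
    (absolute inhibition); otherwise the unit fires when the number of
    active excitatory inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# Logic gates built from single units (inputs are 0/1).
def and_gate(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [], threshold=2)


def or_gate(a: int, b: int) -> int:
    return mcculloch_pitts([a, b], [], threshold=1)


def not_gate(a: int) -> int:
    # With threshold 0 the unit fires by default unless inhibited.
    return mcculloch_pitts([], [a], threshold=0)
```

Changing only the threshold turns the same unit from OR into AND, which is why the model is usually described as the first mathematical neuron rather than a circuit for any one task.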

Oz

#answers to questions#Walter Pitts#Warren McCulloch#Neural Networks#Biological Neuron#Cognitive computing#TBMC#Military programming mind control

Neurological Disease Models: Pioneering the Future of Brain Research

Neurological diseases, such as Alzheimer’s, Parkinson’s, epilepsy, and multiple sclerosis, represent some of the most complex and challenging conditions to study and treat. These disorders affect the brain and nervous system, disrupting the lives of millions worldwide. To better understand these conditions, researchers rely on neurological disease models, which replicate aspects of these diseases in controlled environments.

These models provide a crucial foundation for uncovering disease mechanisms, testing treatments, and ultimately paving the way for innovative therapies.

What Are Neurological Disease Models?

Neurological disease models are scientific tools designed to mimic the biological, genetic, and pathological features of brain disorders. They allow researchers to study disease progression, identify potential targets for treatment, and evaluate the safety and efficacy of new therapies.

These models fall into three primary categories:

In Vitro Models: Lab-grown cells, such as neurons and glial cells, used to study molecular and cellular mechanisms.

In Vivo Models: Living organisms, often rodents or zebrafish, that are genetically engineered or chemically treated to display disease-like symptoms.

Computational Models: Simulations that predict disease dynamics using algorithms and mathematical frameworks.
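As a minimal sketch of a computational model, the code below treats the brain as a small network of regions and lets a normalized pathology load grow locally (logistic growth) and spread along connections (graph diffusion). This is in the spirit of network spreading models used for protein aggregation in Alzheimer's and Parkinson's research, but the network, the function name and every parameter value here are hypothetical choices for illustration.

```python
def simulate_spread(adjacency, seed_region, kappa=0.1, alpha=0.5,
                    initial_load=0.1, dt=0.1, steps=50):
    """Toy network model of misfolded-protein spread between brain regions.

    adjacency: symmetric 0/1 (or weighted) matrix as a list of lists.
    Each region's normalized load c_i (0 = healthy, 1 = saturated) follows
        dc_i/dt = kappa * sum_j A_ij (c_j - c_i) + alpha * c_i * (1 - c_i),
    integrated with forward Euler. All parameter values are illustrative.
    """
    n = len(adjacency)
    load = [0.0] * n
    load[seed_region] = initial_load
    for _ in range(steps):
        updated = []
        for i in range(n):
            # Diffusion along connections (graph Laplacian term)
            spread = sum(adjacency[i][j] * (load[j] - load[i]) for j in range(n))
            # Local logistic growth (seeding and saturation)
            growth = alpha * load[i] * (1.0 - load[i])
            updated.append(load[i] + dt * (kappa * spread + growth))
        load = updated
    return load


if __name__ == "__main__":
    # Hypothetical chain of four connected regions: 0 - 1 - 2 - 3
    chain = [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ]
    print([round(c, 3) for c in simulate_spread(chain, seed_region=0)])
```

The load is highest at the seeded region and falls off with distance along the chain; running more steps lets the saturated front move further, which is the qualitative behaviour such models are used to compare against staging of pathology.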

Why Are Neurological Disease Models Important?

The human brain is incredibly complex, and studying it directly is often impractical or impossible. Neurological disease models provide an accessible way to:

Explore Disease Mechanisms:

Models reveal how diseases begin and progress at molecular and cellular levels.

Develop and Test Treatments:

New drugs can be tested for safety and effectiveness in models before human trials.

Discover Biomarkers:

Early detection is critical for many neurological diseases, and models help identify potential diagnostic markers.

Study Rare Conditions:

For less common diseases like ALS or Huntington’s, models provide a platform for targeted research.

Types of Neurological Disease Models

1. Alzheimer’s Disease Models

Transgenic Mice: Engineered to develop amyloid plaques and, in some lines, tau tangles, mirroring human pathology.

3D Brain Organoids: Stem-cell-derived structures replicating human brain regions affected by Alzheimer’s.

2. Parkinson’s Disease Models

Toxin-Based Models: Neurotoxins like MPTP selectively destroy dopamine-producing neurons.

Genetic Models: Animals carrying mutations in genes such as SNCA mimic familial Parkinson’s disease.

3. Epilepsy Models

Chemically Induced Seizures: Substances like kainic acid provoke seizures for studying epilepsy.

Computational Simulations: Model abnormal electrical activity in the brain.

4. Multiple Sclerosis (MS) Models

Autoimmune Models: Experimental autoimmune encephalomyelitis (EAE) mimics inflammation and demyelination seen in MS.

5. Huntington’s Disease Models

Knock-In Models: Animals carrying an expanded CAG repeat in the huntingtin (HTT) gene, used to study motor and cognitive decline.
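The computational simulations of epilepsy listed above range from detailed biophysical models to abstract network models. One of the simplest abstractions, shown here only as a hedged illustration, is a mean-field branching model: if each active neuron activates on average sigma others at the next step, activity dies out for sigma below 1 and runs away to saturation above 1, a crude caricature of seizure-like spread. The function name and parameters are assumptions, not a specific published epilepsy model.

```python
def branching_activity(sigma, initial_active=1.0, n_neurons=1000, steps=50):
    """Expected number of active neurons per step in a mean-field branching model.

    sigma: branching ratio, the average number of neurons each active neuron
    activates at the next step. Activity is capped at the population size.
    """
    activity = [float(initial_active)]
    for _ in range(steps):
        activity.append(min(sigma * activity[-1], float(n_neurons)))
    return activity


if __name__ == "__main__":
    for sigma in (0.8, 1.0, 1.2):
        # Final activity after 50 steps: decays, stays flat, or saturates
        print(sigma, round(branching_activity(sigma)[-1], 4))
```

Sigma close to 1 is often described as a "critical" regime; in this caricature, shifting the balance of excitation and inhibition corresponds to moving sigma above or below that point.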

Innovations in Neurological Disease Models

Recent advancements are transforming the field:

CRISPR-Cas9 Technology:

Enables precise editing of genes to replicate human disease mutations.

Stem Cell-Derived Models:

Patient-derived induced pluripotent stem cells (iPSCs) allow personalized studies of disease mechanisms and drug responses.

AI-Powered Computational Models:

Artificial intelligence can improve the predictive accuracy of disease simulations.

Organoids and Microfluidics:

3D brain models and lab-on-a-chip systems provide realistic, human-specific disease environments.

Challenges in Neurological Disease Models

While these models are invaluable, they have limitations:

Incomplete Mimicry:

No model fully replicates the complexity of human neurological diseases.

Ethical Concerns:

Using animals and human-derived cells raises ethical considerations.

High Costs:

Developing advanced models, such as organoids or CRISPR-edited systems, requires substantial resources.

Researchers are addressing these challenges through multidisciplinary approaches and technological integration.

Future Directions

The future of neurological disease models is filled with possibilities:

Personalized Models:

Stem-cell-derived models tailored to individual patients will drive precision medicine.

Hybrid Systems:

Combining in vitro, in vivo, and computational models will create more comprehensive research frameworks.

Real-Time Monitoring:

Emerging imaging techniques will enable live tracking of disease processes in models.

High-Throughput Screening:

Automated platforms will accelerate the discovery of new drugs.

Conclusion

Neurological disease models are essential tools for advancing our understanding of brain disorders. By mimicking disease mechanisms and testing potential therapies, these models have already contributed significantly to neuroscience. As technology continues to evolve, these models will play an even greater role in unraveling the complexities of neurological diseases, bringing us closer to effective treatments and improved patient outcomes.

1. Research paper published in Neuron.

The brain’s primary immune cells, microglia, are a leading causal cell type in Alzheimer’s disease (AD). Yet, the mechanisms by which microglia can drive neurodegeneration remain unresolved. Here, we discover that a conserved stress signaling pathway, the integrated stress response (ISR), characterizes a microglia subset with neurodegenerative outcomes. Autonomous activation of ISR in microglia is sufficient to induce early features of the ultrastructurally distinct “dark microglia” linked to pathological synapse loss. In AD models, microglial ISR activation exacerbates neurodegenerative pathologies and synapse loss while its inhibition ameliorates them. Mechanistically, we present evidence that ISR activation promotes the secretion of toxic lipids by microglia, impairing neuron homeostasis and survival in vitro. Accordingly, pharmacological inhibition of ISR or lipid synthesis mitigates synapse loss in AD models. Our results demonstrate that microglial ISR activation represents a neurodegenerative phenotype, which may be sustained, at least in part, by the secretion of toxic lipids. (Source: cell.com/neuron, sciencealert.com)

2. Microsoft is planning to invest about $80 billion in fiscal 2025 on developing data centers to train artificial intelligence (AI) models and deploy AI and cloud-based applications, the company said in a blog post on Friday. Investment in AI has surged since OpenAI launched ChatGPT in 2022, as companies across sectors seek to integrate artificial intelligence into their products and services. AI requires enormous computing power, pushing demand for specialized data centers that enable tech companies to link thousands of chips together in clusters. Microsoft has been investing billions to enhance its AI infrastructure and broaden its data-center network. Analysts expect Microsoft's fiscal 2025 capital expenditure including capital leases to be $84.24 billion, according to Visible Alpha. (Source: reuters.com, blogs.microsoft.com)

3. Bloomberg:

The worst-case scenarios for a US-China conflict, as military and policy experts describe it, usually involve China invading Taiwan and seeking to disable growing US military capability in Guam to impede a response. This could mean a missile attack—some Chinese ballistic missiles have been nicknamed “Guam killers” for their ability to reach the island. But the top US military leader on Guam says cyberattacks are more likely. US officials have recounted in testimony and briefings how Chinese hackers are building the capacity to poison water supplies nationwide, flood homes with sewage, and cut off phones, power, ports and airports, actions that could cause mass casualties, disrupt military operations and potentially plunge the US into “societal panic.” The aim, US Cybersecurity and Infrastructure Security Agency (CISA) Director Jen Easterly told Congress in January 2024, would be to take down “everything, everywhere, all at once.”

Photonic processor could enable ultrafast AI computations with extreme energy efficiency

New Post has been published on https://sunalei.org/news/photonic-processor-could-enable-ultrafast-ai-computations-with-extreme-energy-efficiency/

The deep neural network models that power today’s most demanding machine-learning applications have grown so large and complex that they are pushing the limits of traditional electronic computing hardware.

Photonic hardware, which can perform machine-learning computations with light, offers a faster and more energy-efficient alternative. However, there are some types of neural network computations that a photonic device can’t perform, requiring the use of off-chip electronics or other techniques that hamper speed and efficiency.

Building on a decade of research, scientists from MIT and elsewhere have developed a new photonic chip that overcomes these roadblocks. They demonstrated a fully integrated photonic processor that can perform all the key computations of a deep neural network optically on the chip.

The optical device was able to complete the key computations for a machine-learning classification task in less than half a nanosecond while achieving more than 92 percent accuracy — performance that is on par with traditional hardware.

The chip, composed of interconnected modules that form an optical neural network, is fabricated using commercial foundry processes, which could enable the scaling of the technology and its integration into electronics.

In the long run, the photonic processor could lead to faster and more energy-efficient deep learning for computationally demanding applications like lidar, scientific research in astronomy and particle physics, or high-speed telecommunications.

“There are a lot of cases where how well the model performs isn’t the only thing that matters, but also how fast you can get an answer. Now that we have an end-to-end system that can run a neural network in optics, at a nanosecond time scale, we can start thinking at a higher level about applications and algorithms,” says Saumil Bandyopadhyay ’17, MEng ’18, PhD ’23, a visiting scientist in the Quantum Photonics and AI Group within the Research Laboratory of Electronics (RLE) and a postdoc at NTT Research, Inc., who is the lead author of a paper on the new chip.

Bandyopadhyay is joined on the paper by Alexander Sludds ’18, MEng ’19, PhD ’23; Nicholas Harris PhD ’17; Darius Bunandar PhD ’19; Stefan Krastanov, a former RLE research scientist who is now an assistant professor at the University of Massachusetts at Amherst; Ryan Hamerly, a visiting scientist at RLE and senior scientist at NTT Research; Matthew Streshinsky, a former silicon photonics lead at Nokia who is now co-founder and CEO of Enosemi; Michael Hochberg, president of Periplous, LLC; and Dirk Englund, a professor in the Department of Electrical Engineering and Computer Science, principal investigator of the Quantum Photonics and Artificial Intelligence Group and of RLE, and senior author of the paper. The research appears today in Nature Photonics.

Machine learning with light

Deep neural networks are composed of many interconnected layers of nodes, or neurons, that operate on input data to produce an output. One key operation in a deep neural network involves the use of linear algebra to perform matrix multiplication, which transforms data as it is passed from layer to layer.

But in addition to these linear operations, deep neural networks perform nonlinear operations that help the model learn more intricate patterns. Nonlinear operations, like activation functions, give deep neural networks the power to solve complex problems.
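The two kinds of operation can be seen in a few lines of plain Python: a layer multiplies its input by a weight matrix (the linear step) and then applies an activation function such as ReLU (the nonlinear step). The sketch also shows why the nonlinear step matters: without it, two stacked layers collapse into a single matrix product. The weights are arbitrary illustrative numbers and the helper names are hypothetical.

```python
def matvec(matrix, vector):
    """Matrix-vector product: the linear step of a layer."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Matrix-matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(values):
    """Elementwise nonlinear activation."""
    return [max(0, v) for v in values]


def forward(layers, x, nonlinear=True):
    """Pass x through a stack of weight matrices, optionally applying ReLU between them."""
    for i, weights in enumerate(layers):
        x = matvec(weights, x)
        if nonlinear and i < len(layers) - 1:  # no activation on the output layer
            x = relu(x)
    return x


W1 = [[1, -1], [2, 0]]  # illustrative first-layer weights
W2 = [[1, 1]]           # illustrative output-layer weights

if __name__ == "__main__":
    x = [1, 3]
    print(forward([W1, W2], x, nonlinear=False))  # [0]
    print(matvec(matmul(W2, W1), x))              # [0]: same as one linear layer
    print(forward([W1, W2], x, nonlinear=True))   # [2]: the nonlinearity changes the result
```

In an optical neural network the matrix-vector products are the part light handles naturally; the `relu` step is the part that, as the article goes on to explain, is hard to do with photons alone.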

In 2017, Englund’s group, along with researchers in the lab of Marin Soljačić, the Cecil and Ida Green Professor of Physics, demonstrated an optical neural network on a single photonic chip that could perform matrix multiplication with light.

But at the time, the device couldn’t perform nonlinear operations on the chip. Optical data had to be converted into electrical signals and sent to a digital processor to perform nonlinear operations.

“Nonlinearity in optics is quite challenging because photons don’t interact with each other very easily. That makes it very power consuming to trigger optical nonlinearities, so it becomes challenging to build a system that can do it in a scalable way,” Bandyopadhyay explains.

They overcame that challenge by designing devices called nonlinear optical function units (NOFUs), which combine electronics and optics to implement nonlinear operations on the chip.

The researchers built an optical deep neural network on a photonic chip using three layers of devices that perform linear and nonlinear operations.

A fully-integrated network

At the outset, their system encodes the parameters of a deep neural network into light. Then, an array of programmable beamsplitters, which was demonstrated in the 2017 paper, performs matrix multiplication on those inputs.
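Programmable beamsplitter arrays in this line of work are typically meshes of Mach-Zehnder interferometers (MZIs): two 50:50 couplers with tunable phase shifters, whose settings steer light between two waveguides. Meshes of MZIs can realize any unitary matrix, and a general weight matrix can be built from two unitaries and a diagonal scaling stage via its singular value decomposition. The sketch below models a single MZI as a 2x2 complex matrix; the coupler and phase conventions are one common choice, assumed here rather than taken from the paper.

```python
import cmath
import math


def beamsplitter():
    """50:50 directional coupler (one common convention)."""
    s = 1 / math.sqrt(2)
    return [[s, 1j * s], [1j * s, s]]


def phase_shifter(phi):
    """Phase shift phi (radians) on the upper waveguide only."""
    return [[cmath.exp(1j * phi), 0], [0, 1]]


def matmul2(a, b):
    """Product of two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(theta, phi):
    """Transfer matrix of one MZI: external phase phi, coupler,
    internal phase theta, coupler (applied in that order)."""
    bs = beamsplitter()
    return matmul2(bs, matmul2(phase_shifter(theta), matmul2(bs, phase_shifter(phi))))


def apply(matrix, field):
    """Propagate a pair of complex field amplitudes through a 2x2 device."""
    return [sum(matrix[i][k] * field[k] for k in range(2)) for i in range(2)]


def powers(field):
    """Optical power in each waveguide (|amplitude|^2)."""
    return [abs(a) ** 2 for a in field]


if __name__ == "__main__":
    light_in = [1, 0]  # all light enters the upper waveguide
    for theta in (0.0, math.pi / 2, math.pi):
        out = powers(apply(mzi(theta, 0.0), light_in))
        print(f"theta={theta:.2f}: upper={out[0]:.2f}, lower={out[1]:.2f}")
```

With this convention, sweeping the internal phase theta from 0 to pi moves the light from the lower output, through an even split, to the upper output, while total power is conserved; tuning many such phases is what makes the array "programmable".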

The data then pass to programmable NOFUs, which implement nonlinear functions by siphoning off a small amount of light to photodiodes that convert optical signals to electric current. This process, which eliminates the need for an external amplifier, consumes very little energy.
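To make the idea of an electro-optic nonlinearity concrete, the sketch below uses a deliberately simplified, hypothetical model, not the published NOFU design: a fixed fraction of the optical power is tapped to a photodiode, the resulting photocurrent sets the phase of an interferometric modulator, and the modulator's transmission then scales the light that stays in the waveguide. Because the transmission depends on the input power itself, output power is no longer proportional to input power, which is the property an activation function needs. The function name, tap fraction, gain, unit responsivity and cosine-squared transfer curve are all assumptions.

```python
import math


def toy_nofu(input_power, tap_fraction=0.1, gain=1.0, bias_phase=0.0):
    """Hypothetical optical-electrical-optical nonlinearity (not the published NOFU).

    A fraction of the light is tapped to a photodiode; the photocurrent
    drives the phase of an interferometric modulator that attenuates the
    remaining light. All parameters are illustrative, in arbitrary units.
    """
    tapped_power = tap_fraction * input_power
    photocurrent = tapped_power                    # assumed responsivity of 1
    phase = bias_phase + gain * photocurrent       # assumed linear electro-optic drive
    transmission = math.cos(phase / 2) ** 2        # assumed cos^2 modulator response, 0..1
    return (1 - tap_fraction) * input_power * transmission


if __name__ == "__main__":
    for p in (0.5, 1.0, 2.0, 4.0):
        print(p, round(toy_nofu(p), 4))
```

With these settings the ratio of output to input power falls as input power rises, a saturating response; a different bias phase would give a differently shaped curve, which is one reason such units are made programmable.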

“We stay in the optical domain the whole time, until the end when we want to read out the answer. This enables us to achieve ultra-low latency,” Bandyopadhyay says.

Achieving such low latency enabled them to efficiently train a deep neural network on the chip, a process known as in situ training that typically consumes a huge amount of energy in digital hardware.

“This is especially useful for systems where you are doing in-domain processing of optical signals, like navigation or telecommunications, but also in systems that you want to learn in real time,” he says.

The photonic system achieved more than 96 percent accuracy during training tests and more than 92 percent accuracy during inference, which is comparable to traditional hardware. In addition, the chip performs key computations in less than half a nanosecond.

“This work demonstrates that computing — at its essence, the mapping of inputs to outputs — can be compiled onto new architectures of linear and nonlinear physics that enable a fundamentally different scaling law of computation versus effort needed,” says Englund.

The entire circuit was fabricated using the same infrastructure and foundry processes that produce CMOS computer chips. This could enable the chip to be manufactured at scale, using tried-and-true techniques that introduce very little error into the fabrication process.

Scaling up their device and integrating it with real-world electronics like cameras or telecommunications systems will be a major focus of future work, Bandyopadhyay says. In addition, the researchers want to explore algorithms that can leverage the advantages of optics to train systems faster and with better energy efficiency.

This research was funded, in part, by the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, and NTT Research.
