#OCR datasets

Text

How to Choose the Right OCR Dataset for Your Project

Introduction:

In the realm of Artificial Intelligence and Machine Learning, Optical Character Recognition (OCR) technology is pivotal for the digitization and extraction of textual data from images, scanned documents, and various visual formats. Choosing an appropriate OCR dataset is vital to guarantee precise, efficient, and dependable text recognition for your project. Below are guidelines for selecting the most suitable OCR dataset to meet your specific requirements.

Establish Your Project Specifications

Before selecting an OCR dataset, clearly outline the scope and goals of your project. Consider the following aspects:

What types of documents or images will be processed?

Which languages and scripts must be recognized?

What degree of accuracy and precision is necessary?

Is there a requirement for support of handwritten, printed, or mixed text formats?

What particular industries or applications (such as finance, healthcare, or logistics) does your OCR system aim to serve?

A comprehensive understanding of these specifications will assist in refining your search for the optimal dataset.

Verify Dataset Diversity

A high-quality OCR dataset should encompass a variety of samples that represent real-world variation. Seek datasets that feature:

A range of fonts, sizes, and styles

Diverse document layouts and formats

Various image qualities (including noisy, blurred, and scanned documents)

Combinations of handwritten and printed text

Multiple languages and scripts

Data diversity is crucial for ensuring that your OCR model generalizes effectively and maintains accuracy across various applications.
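One way to check diversity before committing to a dataset is to count how samples are distributed across the attributes listed above. The following is a minimal sketch, assuming each sample carries a metadata record; the field names (`language`, `text_type`, `quality`) and the 5% threshold are illustrative assumptions, not part of any particular dataset's schema.

```python
from collections import Counter

def audit_diversity(records, fields, min_share=0.05):
    """For each field, return the values whose share of samples is below min_share."""
    total = len(records)
    report = {}
    for field in fields:
        if total == 0:
            report[field] = {}
            continue
        counts = Counter(r.get(field, "unknown") for r in records)
        report[field] = {
            value: count / total
            for value, count in counts.items()
            if count / total < min_share
        }
    return report

# Hypothetical metadata for 100 samples (made up for illustration).
samples = (
    [{"language": "en", "text_type": "printed", "quality": "clean"}] * 90
    + [{"language": "en", "text_type": "handwritten", "quality": "blurred"}] * 8
    + [{"language": "hi", "text_type": "printed", "quality": "noisy"}] * 2
)
underrepresented = audit_diversity(samples, ["language", "text_type", "quality"])
print(underrepresented)
```

Values that show up in the report are candidates for additional collection or augmentation before training.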

Assess Labeling Accuracy and Quality

A well-annotated dataset is critical for training a successful OCR model. Confirm that the dataset you select includes:

Accurately labeled text with bounding boxes

High fidelity in transcription and annotation

Well-organized metadata for seamless integration into your machine-learning workflow

Inadequately labeled datasets can result in inaccuracies and inefficiencies in text recognition.
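Some labeling problems can be caught automatically before training. The sketch below assumes a hypothetical annotation format in which each record has a `bbox` given as `(x_min, y_min, x_max, y_max)` in pixels and a `text` transcription; real datasets use their own formats, so the field names would need adapting.

```python
def validate_annotation(ann, image_width, image_height):
    """Return a list of problems found in one annotation (empty list means OK)."""
    problems = []
    x_min, y_min, x_max, y_max = ann["bbox"]
    if x_min >= x_max or y_min >= y_max:
        problems.append("box has zero or negative area")
    if x_min < 0 or y_min < 0 or x_max > image_width or y_max > image_height:
        problems.append("box extends outside the image")
    if not ann.get("text", "").strip():
        problems.append("empty transcription")
    return problems

# Hypothetical annotations for a 200x100 pixel image.
good = {"bbox": (10, 10, 120, 40), "text": "Invoice"}
bad = {"bbox": (50, 30, 40, 60), "text": " "}
print(validate_annotation(good, 200, 100))
print(validate_annotation(bad, 200, 100))
```

Checks like these only catch structural errors; verifying that a transcription actually matches the image still requires human review or a comparison against a trusted OCR pass.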

Assess the Size and Scalability of the Dataset

The size of the dataset plays a pivotal role in model training. Although larger datasets typically produce better results, they also demand greater computational resources. Consider the following:

Whether the dataset's size is compatible with your available computational resources

If it is feasible to generate additional labeled data if necessary

The potential for future expansion of the dataset to incorporate new data variations

Striking a balance between dataset size and quality is essential for achieving optimal performance while minimizing unnecessary resource consumption.

Analyze Dataset Licensing and Costs

OCR datasets are subject to various licensing agreements—some are open-source, while others necessitate commercial licenses. Take into account:

Whether the dataset is available at no cost or requires a financial investment

Licensing limitations that could impact the deployment of your project

The cost-effectiveness of acquiring a high-quality dataset compared to developing a custom-labeled dataset

Adhering to licensing agreements is vital to prevent legal issues in the future.

Conduct Tests with Sample Data

Prior to fully committing to an OCR dataset, it is prudent to evaluate it using a small sample of your project’s data. This evaluation assists in determining:

The dataset’s applicability to your specific requirements

The effectiveness of OCR models trained with the dataset

Any potential deficiencies that may necessitate further data augmentation or preprocessing

Conducting pilot tests aids in refining dataset selections before large-scale implementation.
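A common way to score such a pilot test is the character error rate (CER): the edit distance between the model's output and the reference transcription, divided by the number of reference characters. The sketch below is one minimal way to compute it; the sample pairs are made up for illustration.

```python
def edit_distance(a, b):
    """Levenshtein distance between strings a and b (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def character_error_rate(pairs):
    """pairs: iterable of (reference, ocr_output). Lower is better; 0.0 is perfect."""
    pairs = list(pairs)
    total_edits = sum(edit_distance(ref, hyp) for ref, hyp in pairs)
    total_chars = sum(len(ref) for ref, _ in pairs)
    return total_edits / total_chars if total_chars else 0.0

# Hypothetical pilot sample: (ground truth, model output).
pilot = [("TOTAL 12.50", "TOTAL 12.50"), ("Invoice", "lnvoice")]
cer = character_error_rate(pilot)
print(f"CER: {cer:.3f}")
```

Comparing CER across candidate datasets on the same held-out sample of your own documents gives a like-for-like basis for the decision.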

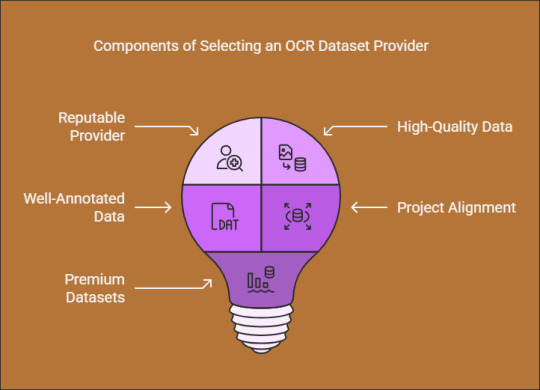

Select a Trustworthy OCR Dataset Provider

Choosing a reputable dataset provider helps ensure access to high-quality, well-annotated data that aligns with your project objectives. One such provider is Globose Technology Solutions, which offers premium OCR datasets tailored for accurate data extraction and AI model training. Explore their OCR dataset solutions for more information.

Conclusion

Selecting an appropriate OCR dataset is essential for developing a precise and effective text recognition model. By assessing the requirements of your project, ensuring a diverse dataset, verifying the accuracy of labels, and considering licensing agreements, you can identify the most fitting dataset for your AI application. Prioritizing high-quality datasets from trusted sources will significantly improve the reliability and performance of your OCR system.

0 notes

Text

Optical Character Recognition (OCR) technology has significantly transformed the manner in which machines decode and process textual information from images, scanned documents, and handwritten notes.

0 notes

Text

OCR Datasets

0 notes

Text

OCR technology has revolutionized data collection processes, providing many benefits to various industries. By harnessing the power of OCR with AI, businesses can unlock valuable insights from unstructured data, increase operational efficiency, and gain a competitive edge in today's digital landscape. At Globose Technology Solutions, we are committed to leading innovative solutions that empower businesses to thrive in the age of AI.

#OCR Data Collection #Data Collection Company #Data Collection #globose technology solutions #datasets #technology #data annotation #data annotation for ml

0 notes

Text

At 8:22 am on December 4 last year, a car traveling down a small residential road in Alabama used its license-plate-reading cameras to take photos of vehicles it passed. One image, which does not contain a vehicle or a license plate, shows a bright red “Trump” campaign sign placed in front of someone’s garage. In the background is a banner referencing Israel, a holly wreath, and a festive inflatable snowman.

Another image taken on a different day by a different vehicle shows a “Steelworkers for Harris-Walz” sign stuck in the lawn in front of someone’s home. A construction worker, with his face unblurred, is pictured near another Harris sign. Other photos show Trump and Biden (including “Fuck Biden”) bumper stickers on the back of trucks and cars across America. One photo, taken in November 2023, shows a partially torn bumper sticker supporting the Obama-Biden lineup.

These images were generated by AI-powered cameras mounted on cars and trucks, initially designed to capture license plates, but which are now photographing political lawn signs outside private homes, individuals wearing T-shirts with text, and vehicles displaying pro-abortion bumper stickers—all while recording the precise locations of these observations. Newly obtained data reviewed by WIRED shows how a tool originally intended for traffic enforcement has evolved into a system capable of monitoring speech protected by the US Constitution.

The detailed photographs all surfaced in search results produced by the systems of DRN Data, a license-plate-recognition (LPR) company owned by Motorola Solutions. The LPR system can be used by private investigators, repossession agents, and insurance companies; a related Motorola business, called Vigilant, gives cops access to the same LPR data.

However, files shared with WIRED by artist Julia Weist, who is documenting restricted datasets as part of her work, show how those with access to the LPR system can search for common phrases or names, such as those of politicians, and be served with photographs where the search term is present, even if it is not displayed on license plates.

A search result for the license plates from Delaware vehicles with the text “Trump” returned more than 150 images showing people’s homes and bumper stickers. Each search result includes the date, time, and exact location of where a photograph was taken.

“I searched for the word ‘believe,’ and that is all lawn signs. There’s things just painted on planters on the side of the road, and then someone wearing a sweatshirt that says ‘Believe.’” Weist says. “I did a search for the word ‘lost,’ and it found the flyers that people put up for lost dogs and cats.”

Beyond highlighting the far-reaching nature of LPR technology, which has collected billions of images of license plates, the research also shows how people’s personal political views and their homes can be recorded into vast databases that can be queried.

“It really reveals the extent to which surveillance is happening on a mass scale in the quiet streets of America,” says Jay Stanley, a senior policy analyst at the American Civil Liberties Union. “That surveillance is not limited just to license plates, but also to a lot of other potentially very revealing information about people.”

DRN, in a statement issued to WIRED, said it complies with “all applicable laws and regulations.”

Billions of Photos

License-plate-recognition systems, broadly, work by first capturing an image of a vehicle; then they use optical character recognition (OCR) technology to identify and extract the text from the vehicle's license plate within the captured image. Motorola-owned DRN sells multiple license-plate-recognition cameras: a fixed camera that can be placed near roads, identify a vehicle’s make and model, and capture images of vehicles traveling up to 150 mph; a “quick deploy” camera that can be attached to buildings and monitor vehicles at properties; and mobile cameras that can be placed on dashboards or be mounted to vehicles and capture images when they are driven around.

Over more than a decade, DRN has amassed more than 15 billion “vehicle sightings” across the United States, and it claims in its marketing materials that it amasses more than 250 million sightings per month. Images in DRN’s commercial database are shared with police using its Vigilant system, but images captured by law enforcement are not shared back into the wider database.

The system is partly fueled by DRN “affiliates” who install cameras in their vehicles, such as repossession trucks, and capture license plates as they drive around. Each vehicle can have up to four cameras attached to it, capturing images in all angles. These affiliates earn monthly bonuses and can also receive free cameras and search credits.

In 2022, Weist became a certified private investigator in New York State. In doing so, she unlocked the ability to access the vast array of surveillance software accessible to PIs. Weist could access DRN’s analytics system, DRNsights, as part of a package through investigations company IRBsearch. (After Weist published an op-ed detailing her work, IRBsearch conducted an audit of her account and discontinued it. The company did not respond to WIRED’s request for comment.)

“There is a difference between tools that are publicly accessible, like Google Street View, and things that are searchable,” Weist says. While conducting her work, Weist ran multiple searches for words and popular terms, which found results far beyond license plates. In data she shared with WIRED, a search for “Planned Parenthood,” for instance, returned stickers on cars, on bumpers, and in windows, both for and against the reproductive health services organization. Civil liberties groups have already raised concerns about how license-plate-reader data could be weaponized against those seeking abortion.

Weist says she is concerned with how the search tools could be misused when there is increasing political violence and divisiveness in society. While not linked to license plate data, one law enforcement official in Ohio recently said people should “write down” the addresses of people who display yard signs supporting Vice President Kamala Harris, the 2024 Democratic presidential nominee, exemplifying how a searchable database of citizens’ political affiliations could be abused.

A 2016 report by the Associated Press revealed widespread misuse of confidential law enforcement databases by police officers nationwide. In 2022, WIRED revealed that hundreds of US Immigration and Customs Enforcement employees and contractors were investigated for abusing similar databases, including LPR systems. The alleged misconduct in both reports ranged from stalking and harassment to sharing information with criminals.

While people place signs in their lawns or bumper stickers on their cars to inform people of their views and potentially to influence those around them, the ACLU’s Stanley says it is intended for “human-scale visibility,” not that of machines. “Perhaps they want to express themselves in their communities, to their neighbors, but they don't necessarily want to be logged into a nationwide database that’s accessible to police authorities,” Stanley says.

Weist says the system, at the very least, should be able to filter out images that do not contain license plate data and not make mistakes. “Any number of times is too many times, especially when it's finding stuff like what people are wearing or lawn signs,” Weist says.

“License plate recognition (LPR) technology supports public safety and community services, from helping to find abducted children and stolen vehicles to automating toll collection and lowering insurance premiums by mitigating insurance fraud,” Jeremiah Wheeler, the president of DRN, says in a statement.

Weist believes that, given the relatively small number of images showing bumper stickers compared to the large number of vehicles with them, Motorola Solutions may be attempting to filter out images containing bumper stickers or other text.

Wheeler did not respond to WIRED's questions about whether there are limits on what can be searched in license plate databases, why images of homes with lawn signs but no vehicles in sight appeared in search results, or if filters are used to reduce such images.

“DRNsights complies with all applicable laws and regulations,” Wheeler says. “The DRNsights tool allows authorized parties to access license plate information and associated vehicle information that is captured in public locations and visible to all. Access is restricted to customers with certain permissible purposes under the law, and those in breach have their access revoked.”

AI Everywhere

License-plate-recognition systems have flourished in recent years as cameras have become smaller and machine-learning algorithms have improved. These systems, such as DRN and rival Flock, mark part of a change in the way people are surveilled as they move around cities and neighborhoods.

Increasingly, CCTV cameras are being equipped with AI to monitor people’s movements and even detect their emotions. The systems have the potential to alert officials, who may not be able to constantly monitor CCTV footage, to real-world events. However, whether license plate recognition can reduce crime has been questioned.

“When government or private companies promote license plate readers, they make it sound like the technology is only looking for lawbreakers or people suspected of stealing a car or involved in an amber alert, but that’s just not how the technology works,” says Dave Maass, the director of investigations at civil liberties group the Electronic Frontier Foundation. “The technology collects everyone's data and stores that data often for immense periods of time.”

Over time, the technology may become more capable, too. Maass, who has long researched license-plate-recognition systems, says companies are now trying to do “vehicle fingerprinting,” where they determine the make, model, and year of the vehicle based on its shape and also determine if there’s damage to the vehicle. DRN’s product pages say one upcoming update will allow insurance companies to see if a car is being used for ride-sharing.

“The way that the country is set up was to protect citizens from government overreach, but there’s not a lot put in place to protect us from private actors who are engaged in business meant to make money,” says Nicole McConlogue, an associate professor of law at the Mitchell Hamline School of Law, who has researched license-plate-surveillance systems and their potential for discrimination.

“The volume that they’re able to do this in is what makes it really troubling,” McConlogue says of vehicles moving around streets collecting images. “When you do that, you're carrying the incentives of the people that are collecting the data. But also, in the United States, you’re carrying with it the legacy of segregation and redlining, because that left a mark on the composition of neighborhoods.”

19 notes

Text

we all agree that ocr is good right. we arent demonizing all machine learning. right. we are recognizing that the problem with machine learning as a field is things like coercively and nonconsensually obtained and organized datasets which are biased in curation leading to bias in the algorithms. right. right?

11 notes

Text

really i think that unless your opinions about AI disentangle

1. large language models (chatgpt et al; low factual reliability, but can sometimes come up with interesting concepts)

2. diffusion and similar image generators (stable diffusion et al; varying quality, but can produce some impressive work especially if you lean into the weirdness)

3. classification models (OCR, speech-to-text; have been in use for over a decade depending on the domain)

4. the entire rest of the field before 2010 or so

you're going to suffer from confused thinking

expanding on point 3 a bit because it's one i'm familiar with: for speech-to-text, image-to-text, handwriting recognition, and similar things, nobody does any non-ML approaches anymore. ML approaches are fast enough, more reliable, generalize easier to other languages, and don't require as much work to create. something like cursorless, hands-free text editing for people with carpal tunnel or whatever, 100% relies on an ML model these days. this has zero bearing on copyright of gathering datasets (many speech-to-text datasets are gathered in controlled conditions specifically for creating a dataset) or AI "taking jobs" (nobody is going to pay a stenographer to follow them around with a laptop) or whatever

7 notes

Text

A Survey of OCR Datasets for Document Processing

Introduction:

Optical Character Recognition (OCR) has emerged as an essential technology for the digitization and processing of documents across various sectors, including finance, healthcare, education, and legal fields. As advancements in machine learning continue, the demand for high-quality OCR datasets has become increasingly critical for enhancing accuracy and efficiency. This article examines some of the most prominent OCR datasets utilized in document processing and highlights their importance in training sophisticated AI models.

Significance of OCR Datasets

OCR datasets play a vital role in the development of AI models capable of accurately extracting and interpreting text from a wide range of document types. These datasets are instrumental in training, validating, and benchmarking OCR systems, thereby enhancing their proficiency in managing diverse fonts, languages, layouts, and handwriting styles. A well-annotated OCR dataset is essential for ensuring that AI systems can effectively process both structured and unstructured documents with a high degree of precision.

Prominent OCR Datasets for Document Processing

IAM Handwriting Database

This dataset is extensively utilized for recognizing handwritten text.

It comprises labeled samples of English handwritten text.

It is beneficial for training models to identify both cursive and printed handwriting.

MJSynth (Synth90k) Dataset

This dataset is primarily focused on scene text recognition.

It contains millions of synthetic word images accompanied by annotations.

It aids in training OCR models to detect text within complex backgrounds.

ICDAR Datasets

This collection consists of various OCR datasets released in conjunction with the International Conference on Document Analysis and Recognition (ICDAR).

It includes datasets for both handwritten and printed text, document layouts, and multilingual OCR.

These datasets are frequently employed for evaluating and benchmarking OCR models.

SROIE (Scanned Receipt OCR and Information Extraction) Dataset

This dataset concentrates on OCR applications for receipts and financial documents.

It features scanned receipts with labeled text and key-value pairs.

It is particularly useful for automating invoice and receipt processing tasks.

Google’s Open Images OCR Dataset

This dataset is a component of the Open Images collection, which includes text annotations found in natural scenes.

It facilitates the training of models aimed at extracting text from a variety of image backgrounds.

RVL-CDIP (Tobacco Documents Dataset)

This dataset comprises 400,000 scanned grayscale document images.

It is organized into 16 categories, including forms, emails, and memos.

It serves as a resource for document classification and OCR training.

DocBank Dataset

This is a comprehensive dataset designed for the analysis of document layouts.

It features extensive annotations for text blocks, figures, and tables.

It is beneficial for training models that necessitate an understanding of document structure.

Selecting the Appropriate OCR Dataset

When choosing an OCR dataset, it is important to take into account:

Document Type: Differentiating between handwritten and printed text, as well as structured and unstructured documents.

Language Support: Whether the dataset covers multiple languages or a single language.

Annotations: The presence of bounding boxes, key-value pairs, and additional metadata.

Complexity: The capability to manage noisy, skewed, or degraded documents.
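These criteria can be applied as a simple filter over a list of candidate datasets. The sketch below is illustrative only: the tags are a loose paraphrase of the descriptions in this survey, not official metadata, and the `shortlist` helper is a hypothetical name.

```python
# Tags paraphrased from the survey above (assumption: not official dataset metadata).
CATALOG = [
    {"name": "IAM Handwriting Database", "tags": {"handwritten", "english"}},
    {"name": "MJSynth", "tags": {"synthetic", "scene-text"}},
    {"name": "SROIE", "tags": {"receipts", "scanned", "key-value"}},
    {"name": "RVL-CDIP", "tags": {"classification", "scanned"}},
    {"name": "DocBank", "tags": {"layout", "tables"}},
]

def shortlist(catalog, required_tags):
    """Return names of datasets whose tags include every required tag."""
    required = set(required_tags)
    return [entry["name"] for entry in catalog if required <= entry["tags"]]

print(shortlist(CATALOG, ["scanned"]))
print(shortlist(CATALOG, ["handwritten", "english"]))
```

A shortlist like this only narrows the field; licensing, annotation quality, and a pilot evaluation still need to be checked for each candidate.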

Conclusion

OCR datasets are vital for training artificial intelligence models in document processing. By carefully selecting the appropriate dataset, organizations and researchers can improve the performance and reliability of their OCR systems. As advancements in AI-driven document processing continue, utilizing high-quality datasets will be essential for achieving optimal outcomes.

0 notes

Text

The Impact of OCR Datasets on Enhancing Text Recognition Precision in Artificial Intelligence

Introduction

Optical Character Recognition (OCR) technology has significantly transformed the manner in which machines decode and process textual information from images, scanned documents, and handwritten notes. From streamlining data entry processes to facilitating instantaneous language translation, OCR is integral to numerous AI-driven applications. Nevertheless, the effectiveness of OCR models is heavily influenced by the quality and variety of datasets utilized during their training. This article will examine the ways in which OCR datasets contribute to the enhancement of text recognition precision in AI.

1. Superior OCR Datasets Facilitate Enhanced Model Training

OCR models depend on machine learning algorithms that learn from annotated datasets. These datasets encompass images of text in a multitude of fonts, sizes, backgrounds, and orientations, enabling the AI model to identify patterns and progressively enhance its accuracy. High-quality datasets expose models to a wide range of text samples, thereby reducing errors in practical applications.

2. Varied OCR Datasets Promote Generalization

A well-constructed OCR dataset comprises an assortment of handwriting styles, printed text, and multilingual content. This variety aids the AI model in generalizing its learning, allowing for accurate text recognition across diverse contexts, including legal documents, invoices, street signs, and historical manuscripts. In the absence of varied datasets, OCR models may struggle with real-world variation, resulting in subpar performance.

3. Enhanced Capability to Manage Noisy and Distorted Text

In practical situations, text may be presented under challenging conditions, such as poor lighting, blurriness, skewed angles, or background interference. Well-annotated OCR datasets prepare models to cope with such distortions, ensuring that text recognition remains precise even in less-than-ideal circumstances. This capability is particularly advantageous in applications such as automated document scanning and license plate recognition.
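When a dataset lacks degraded samples, one option is to synthesize them. The sketch below adds salt-and-pepper noise to a grayscale image represented as rows of 0-255 integers. The function name and the plain-list image format are assumptions chosen to keep the example dependency-free; in practice an image library would normally be used.

```python
import random

def add_salt_and_pepper(image, amount=0.05, seed=None):
    """Return a noisy copy of a grayscale image (list of rows of 0-255 ints).

    Each pixel is replaced by pure black (0) or pure white (255)
    with probability `amount`. The input image is not modified.
    """
    rng = random.Random(seed)
    noisy = [row[:] for row in image]
    for row in noisy:
        for x in range(len(row)):
            if rng.random() < amount:
                row[x] = rng.choice((0, 255))
    return noisy

# A flat mid-gray 20x20 test image (made up for illustration).
clean = [[128] * 20 for _ in range(20)]
noisy = add_salt_and_pepper(clean, amount=0.1, seed=0)
changed = sum(p != 128 for row in noisy for p in row)
print(f"{changed} of 400 pixels altered")
```

Blur, skew, and lighting changes can be simulated in the same spirit, although synthetic degradation is a supplement to real noisy samples rather than a replacement for them.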

4. Labeling and Annotation Enhance AI Precision

OCR datasets are frequently subjected to manual labeling and annotation to guarantee precision. Each dataset comprises detailed annotations of text regions that assist AI models in understanding the correct positioning, structure, and segmentation of text. Sophisticated annotation methods, such as bounding boxes and polygon segmentation, significantly enhance OCR precision by refining text localization and extraction.
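To make the two annotation styles concrete, the sketch below converts a polygon annotation into its enclosing axis-aligned bounding box and computes intersection over union (IoU), a standard measure of how well a predicted box matches a labeled one. The coordinates are made up for illustration.

```python
def polygon_to_bbox(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) enclosing a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))

def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0

# A slightly skewed text line labeled as a four-point polygon (hypothetical values).
polygon = [(10, 12), (110, 8), (112, 40), (12, 44)]
box = polygon_to_bbox(polygon)
print(box)
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

The polygon hugs skewed or curved text more tightly than its enclosing box, which is why polygon labels tend to be preferred for text that is not axis-aligned.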

5. Industry-Specific Datasets Boost Performance in Specialized Applications

Various sectors necessitate OCR solutions customized to their specific requirements. For instance:

Healthcare: OCR is employed to digitize medical records and prescriptions.

Finance: OCR facilitates the processing of invoices, checks, and bank statements.

Retail & E-commerce: OCR extracts product information from receipts and packaging.

Utilizing industry-specific OCR datasets allows AI models to attain greater accuracy in specialized applications, minimizing errors and enhancing efficiency.

6. Ongoing Dataset Expansion Promotes Model Advancement

The field of OCR technology is in a state of continuous evolution, with new datasets playing a crucial role in ongoing enhancements. As AI models undergo retraining with updated and expanded datasets, they become adept at addressing emerging text recognition challenges, including novel fonts, languages, and handwriting styles. This adaptability ensures that OCR solutions remain pertinent and highly precise.

Final Thoughts

OCR datasets are essential for improving text recognition accuracy in AI. By supplying diverse, high-quality, and well-annotated data, they empower AI models to effectively process and interpret text across various contexts. As advancements in AI progress, the significance of well-organized OCR datasets will continue to increase, fostering innovation in automation, document processing, and beyond.

To discover how high-quality OCR datasets can enhance your AI model's performance, please visit GTS AI’s OCR Dataset Case Study.

How GTS.AI Makes Complete OCR Datasets

Globose Technology Solutions creates comprehensive OCR datasets by combining advanced data collection, precise annotation, and rigorous validation processes. The company gathers text data from diverse sources, including scanned documents, handwritten notes, invoices, and signage, ensuring a wide range of real-world text variations. Using cutting-edge annotation techniques like bounding boxes and polygon segmentation, GTS.AI accurately labels text while addressing challenges such as blur, skewed angles, and noisy backgrounds. The datasets support multiple languages, fonts, and writing styles, making them highly adaptable for AI-driven text recognition across industries like finance, healthcare, and automation. With continuous updates and customizable solutions, GTS.AI ensures that its OCR datasets enhance AI accuracy and reliability.

0 notes

Text

How AI Software Development Is Revolutionizing Business Automation and Decision-Making in 2025

In 2025, artificial intelligence is no longer a buzzword—it’s a core component of competitive strategy. Businesses that once viewed AI as a future investment are now rapidly adopting AI software development to optimize workflows, automate processes, and elevate decision-making precision. From streamlining operations to predicting market trends, AI is reshaping the way enterprises function.

So, how exactly is AI software development driving this transformation? Let’s explore the key areas where it’s making the biggest impact.

1. Automating Repetitive and Manual Processes

One of the most immediate benefits of AI software is its ability to handle repetitive tasks with speed and accuracy. Whether it's data entry, invoice processing, or customer support, AI-powered bots and systems now take over tasks that once consumed human hours.

Examples of Automation Use Cases:

AI Chatbots: Handling FAQs, booking appointments, and basic troubleshooting.

Document Processing: Extracting data from PDFs, invoices, and scanned images using Optical Character Recognition (OCR).

Workflow Automation: Tools like robotic process automation (RPA) now integrate with AI to handle complex, rule-based workflows.

This shift not only boosts productivity but also reduces operational costs and error rates.

2. Enhancing Decision-Making with Predictive Analytics

AI software development is increasingly integrated with big data and analytics platforms to provide predictive insights. Machine learning algorithms analyze massive datasets to forecast sales, detect anomalies, and recommend optimal actions.

How it helps businesses:

Retail: Predicting inventory needs and customer demand.

Finance: Flagging fraudulent transactions in real-time.

Marketing: Identifying high-conversion audiences through behavioral analysis.

These insights empower business leaders to make faster, more informed, and risk-mitigated decisions.

3. Personalized Customer Experience

In 2025, personalization is the norm, not the exception. AI algorithms now track and analyze user behavior to deliver tailored experiences—whether on websites, apps, or emails.

Where AI boosts personalization:

E-commerce: Recommending products based on past purchases and browsing patterns.

Healthcare: Offering treatment suggestions tailored to patient history.

Education: Creating custom learning paths for students based on performance data.

AI-driven personalization doesn’t just improve engagement—it significantly increases conversion rates and customer loyalty.

4. AI-Powered Business Intelligence

AI-powered dashboards and analytics tools now provide dynamic visualizations, natural language queries, and real-time alerts. Instead of digging through spreadsheets, executives can ask a question in plain English and receive instant insights.

Examples:

“What was our highest selling product in Q1 2025?”

“Which region saw the largest sales dip last month?”

“Show me trends in customer churn by industry.”

By embedding AI in BI tools, businesses gain clarity and strategic foresight like never before.

5. Smarter Resource Management and Scheduling

AI-based scheduling systems now automate workforce planning, meeting coordination, and even supply chain logistics. These systems learn from historical data and external factors to suggest the best use of resources.

Impacts include:

Reduced employee idle time

Better project planning

Real-time rescheduling during disruptions

From manufacturing to services, AI is optimizing how time, talent, and tools are allocated.

6. AI in Decision-Making: From Insights to Actions

It’s not just about insights anymore—it’s about intelligent action. AI software can now trigger responses based on real-time data. For example:

A drop in inventory can auto-trigger a restock order.

A surge in website traffic can prompt extra server allocation.

A new lead can automatically enter a nurturing campaign.

This kind of closed-loop decision-making is redefining agility in business operations.
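The three examples above follow the same pattern: a condition on incoming data triggers an action without a human in the loop. The sketch below shows that pattern with plain hand-written rules rather than a learned model; the metric names and thresholds are hypothetical, and in an AI-driven system the conditions might instead come from a forecast or classifier.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # receives the latest metrics
    action: str                        # description of the action to trigger

# Hypothetical thresholds chosen only for illustration.
RULES = [
    Rule("restock", lambda m: m.get("inventory", float("inf")) < 20,
         "create restock order"),
    Rule("scale_up", lambda m: m.get("requests_per_sec", 0) > 1000,
         "allocate extra server"),
    Rule("nurture", lambda m: m.get("new_lead", False),
         "enroll lead in nurturing campaign"),
]

def evaluate(metrics, rules=RULES):
    """Return the actions whose conditions hold for the given metrics."""
    return [rule.action for rule in rules if rule.condition(metrics)]

print(evaluate({"inventory": 5, "requests_per_sec": 200}))
print(evaluate({"inventory": 500, "requests_per_sec": 5000, "new_lead": True}))
```

Keeping the conditions separate from the actions makes it straightforward to swap a fixed threshold for a model prediction later.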

7. Industry-Specific AI Applications

Every sector is feeling the impact of AI software development:

Healthcare: AI diagnostic tools and virtual health assistants

Finance: AI-powered trading platforms and robo-advisors

Real Estate: Automated property valuation and virtual assistants

Logistics: Route optimization and delivery tracking

HR: Resume screening and employee sentiment analysis

AI is no longer a one-size-fits-all solution. Today’s tools are deeply customized to the needs and nuances of each industry.

8. The Role of Custom AI Software Development

Off-the-shelf tools can only go so far. In 2025, businesses are increasingly turning to custom AI software development to build solutions that align precisely with their goals, data sources, and tech stacks.

Why custom AI matters:

Seamless integration with existing systems

Proprietary model development

Data privacy and compliance assurance

Competitive differentiation

This custom approach enables companies to create AI capabilities that others simply can’t replicate.

9. Challenges Still Exist—but Solutions Are Evolving

While the benefits are massive, businesses must navigate:

Data quality and availability

AI model transparency (explainability)

Skill gaps in AI development

Ethical and legal concerns

Fortunately, AI development platforms, low-code tools, and ethical AI frameworks are rapidly evolving to bridge these gaps.

Conclusion

AI software development is no longer a futuristic vision—it’s the foundation of smart, agile, and competitive businesses in 2025. From automating operations to enhancing strategic decision-making, AI is proving to be the ultimate force multiplier.

Companies that embrace AI today are setting themselves up not just for survival, but for exponential growth.


Text

OCR technology has revolutionized data collection processes, providing many benefits to various industries. By harnessing the power of OCR with AI, businesses can unlock valuable insights from unstructured data, increase operational efficiency, and gain a competitive edge in today's digital landscape. At Globose Technology Solutions, we are committed to leading innovative solutions that empower businesses to thrive in the age of AI.

#OCR Data Collection#Data Collection Compnay#Data Collection#image to text api#pdf ocr ai#ocr and data extraction#data collection company#datasets#ai#machine learning for ai#machine learning


Text

AI software development helping businesses grow through smart technology

Artificial Intelligence (AI) and Machine Learning (ML) are transforming the way businesses operate, and specialised software development companies are at the forefront of this change. These companies help organisations harness advanced technologies to improve efficiency, reduce manual work, and make smarter decisions.

What AI software developers offer

AI software development companies build intelligent systems that require little to no human input. Their main areas of expertise include:

Machine learning: Using methods like supervised, unsupervised, and reinforcement learning, along with deep learning and neural networks, they create systems that learn and improve over time.

Natural language processing (NLP): They develop real-time speech recognition and conversational AI tools that improve communication and user experience.

Computer vision: These solutions help machines interpret visual data, with applications such as facial recognition, CCTV analysis, and image interpretation that enhance security and operational efficiency.

Real-world AI solutions

AI developers apply their skills in many practical ways across industries:

Big data and ML models: They clean and analyze massive datasets like utility meter records to detect errors and inconsistencies.

Manual task automation: By using Optical Character Recognition (OCR), they eliminate the need for manual data entry from documents.

Healthcare insights: Deep learning models can predict a patient’s health trends using symptoms, physiological data, and medical history.

Vision systems: High-performance vision systems and sensors are designed for tasks like machine inspection and quality control in manufacturing.

The AI implementation process

To ensure successful integration of AI, developers follow a clear and strategic process:

Define the use case: Start by identifying the specific problem the AI solution will solve.

Verify data availability: Check whether the necessary data is available and properly recorded.

Data exploration: Analyse the data to understand its patterns and relevance to the problem.

Build the model: Test various features and involve subject matter experts to build the most effective model.

Validate the model: Use performance measures to evaluate and refine the model.

Deploy and automate: Begin with a small rollout of the AI solution, gather feedback, and then expand to a broader audience.

Monitor and improve: Continuously monitor the model’s performance and make updates as needed.
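As a rough illustration of the "validate the model" step, the sketch below computes three common performance measures for a binary classifier. The post does not say which measures to use, so precision, recall, and F1 are assumptions here, and the function name is hypothetical.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 for labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    actual = [1, 0, 1, 1, 0, 0]
    predicted = [1, 0, 0, 1, 1, 0]
    print(precision_recall_f1(actual, predicted))
```

Which measure matters most depends on the use case defined in the first step: a fraud detector may favour recall, while a spam filter may favour precision.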

Supporting business growth with AI

AI software development is a powerful tool for businesses aiming to innovate and stay competitive. With the right approach and expertise, AI can streamline operations, uncover insights, and drive smarter decisions.


Text

What Are the Most Popular Azure AI Services?

Azure AI services are a collection of cloud-hosted AI offerings that help developers and enterprises build intelligent, production-ready, and responsible applications using prebuilt tools, APIs, and models.

These services help organizations modernize business processes quickly and build responsible AI solutions that can ship at the pace of the business. They are accessible through REST APIs and client library SDKs in popular programming languages.

Understanding Azure AI Services

Azure AI services cover a wide range of tools, frameworks, and pre-trained models designed to support building, deploying, and managing AI-driven solutions.

They use data science, natural language processing (NLP), computer vision, and other AI techniques to address complex business problems and drive digital transformation.

Some Lesser Known Facts About Azure AI

Azure provides 99.9% availability for its services.

Microsoft invests heavily in cybersecurity, with $20 billion in spending over five years and more than 8,500 security experts.

Compared to companies that rely on on-premises solutions, Azure cloud users are more than twice as likely to find it easier to innovate with AI and ML: 77% versus only 34%.

Some of the Available Azure AI services

Azure AI provides a range of function-specific services designed to meet your business needs. These services help businesses accelerate innovation, improve user experience, and resolve complex challenges with the help of AI. The most popular Azure AI services are described below.

Anomaly Detector

Anomaly Detector is an Azure AI service that lets developers detect and evaluate anomalies in their time-series data without extensive data science expertise.

The service provides a range of endpoints that support both batch analysis and real-time evaluation. The underlying models are configured and tuned using the customer’s own data, so the service adapts to the specific needs of their organization.
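To make the idea of flagging deviations in sequential data concrete, here is a minimal z-score sketch. It is not the algorithm the Azure service uses and does not call its API; the function name and the threshold of 2.0 are assumptions chosen for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def find_anomalies(series: Sequence[float], threshold: float = 2.0) -> List[int]:
    """Return indexes of points more than `threshold` standard deviations from the mean."""
    mu = mean(series)
    sigma = pstdev(series)
    if sigma == 0:
        return []  # a constant series has no deviations to flag
    return [i for i, x in enumerate(series) if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    readings = [10, 11, 9, 10, 12, 10, 50, 11, 10, 9]
    print(find_anomalies(readings))
```

A plain z-score is sensitive to the very outliers it is trying to find, which is one reason production services rely on more robust, seasonality-aware models.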

Azure OpenAI Service

Azure OpenAI Service enables enterprises to apply the capabilities of powerful AI models to their own use cases.

The service acts as an access point to state-of-the-art models including Codex, DALL-E, and GPT-3.5, which are among the leading advances in AI.

Through the incorporation of these Azure AI and ML services into organizational processes, enterprises can explore innovative directions for creativity and effective solutions.

Azure AI Vision

Azure AI Vision is a unified service that offers advanced capabilities for analyzing images and videos and returning information based on the visual features the user is interested in.

The service can analyze images that meet certain requirements: the file must be in BMP, GIF, JPEG, or PNG format, be smaller than 4 MB, and have dimensions greater than 50 x 50 pixels.
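The image requirements listed above can be checked before uploading anything. The helper below is a hypothetical pre-flight check, not part of any Azure SDK, and it assumes "4 MB" means 4 x 1024 x 1024 bytes.

```python
from typing import List

ALLOWED_FORMATS = {"BMP", "GIF", "JPEG", "PNG"}
MAX_SIZE_BYTES = 4 * 1024 * 1024  # assumption: "4 MB" read as 4 MiB
MIN_SIDE_PIXELS = 50


def image_problems(fmt: str, size_bytes: int, width: int, height: int) -> List[str]:
    """Return a list of reasons the image fails the stated requirements (empty if none)."""
    problems = []
    if fmt.upper() not in ALLOWED_FORMATS:
        problems.append(f"unsupported format: {fmt}")
    if size_bytes >= MAX_SIZE_BYTES:
        problems.append("file is not below 4 MB")
    if width <= MIN_SIDE_PIXELS or height <= MIN_SIDE_PIXELS:
        problems.append("dimensions are not above 50 x 50 pixels")
    return problems


if __name__ == "__main__":
    print(image_problems("png", 120_000, 640, 480))
    print(image_problems("tiff", 5 * 1024 * 1024, 40, 40))
```

Returning every problem at once, rather than stopping at the first, gives users a complete picture of what to fix.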

Azure AI Vision is applicable in areas like spatial analysis, optical character recognition (OCR), and image analysis.

Azure AI Speech

Azure AI Speech is a managed service that provides advanced speech capabilities including speech-to-text, text-to-speech, speech translation, and speaker recognition.

It enables developers to quickly build high-quality voice-enabled application features and design custom voice assistants.

Azure AI Speech includes customizable models and voices, and developers can add specific terminology to the base vocabulary or build their own custom models.

The service is flexible and can be deployed in various environments, including the cloud or on-premises via containers.

With Azure AI Speech, teams can transcribe recordings in over 100 languages and variants, extract customer insights from call center transcriptions, improve user experiences with voice-enabled interfaces, and capture important discussions during meetings.

Azure Machine Learning

Azure Machine Learning is a cloud-based platform for building, training, and deploying machine learning models at scale.

It provides a shared workspace where data scientists and engineers can collaborate on AI projects, streamlining the key stages of the model development lifecycle.

Azure AI Content Moderator

Azure AI Content Moderator is an AI-powered service that helps organizations handle content that could be inappropriate, harmful, or unsuitable.

It uses AI-driven automated moderation to scan text, images, and videos and applies content flags automatically. Moderation logic can be built into applications to meet compliance requirements or maintain the intended experience for users.

It is a comprehensive tool designed to detect harmful user-generated and AI-generated content in applications and services.

Azure AI Document Intelligence

Azure AI Document Intelligence is an intelligent document processing service that uses AI and OCR to quickly extract text and structure from documents.

It uses advanced machine learning models to extract text, key-value pairs, tables, and structure from documents accurately and efficiently.

Azure AI Document Intelligence turns static documents into usable data and lets teams focus on acting on information rather than gathering it.

The service supports building intelligent document processing workflows, with the flexibility to start from prebuilt models or build custom ones, deployed on-premises or in the cloud through the AI Document Intelligence SDK or studio.

Azure AI QnA Maker

QnA Maker is a cloud-based NLP service that lets teams create a conversational question-and-answer layer over their existing data.

It is designed to return the best-matching answer for each question from a custom knowledge base. QnA Maker is commonly used to build conversational applications such as chatbots, speech-enabled applications, and social media apps.

It organizes knowledge into question-and-answer sets and recognizes relationships within structured or semi-structured content to create links between the Q&A pairs.
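The idea of returning the best-matched response from a set of question-and-answer pairs can be sketched with simple word overlap. This is a toy stand-in, not how QnA Maker ranks answers; the function names, the sample knowledge base, and the 0.2 cutoff are all hypothetical.

```python
import re
from typing import List, Optional, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_answer(question: str, kb: List[Tuple[str, str]], min_score: float = 0.2) -> Optional[str]:
    """Return the answer whose stored question has the highest Jaccard overlap with the query."""
    query = tokens(question)
    best_score, best = 0.0, None
    for known_question, answer in kb:
        known = tokens(known_question)
        union = query | known
        score = len(query & known) / len(union) if union else 0.0
        if score > best_score:
            best_score, best = score, answer
    return best if best_score >= min_score else None


# Illustrative knowledge base; the entries are made up.
KB = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page."),
    ("What are your opening hours?", "We are open 9:00 to 17:00, Monday to Friday."),
]

if __name__ == "__main__":
    print(best_answer("how can I reset the password", KB))
    print(best_answer("where is the moon", KB))
```

The minimum score matters as much as the ranking: returning no answer is usually better than returning a confidently wrong one.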

Azure AI Personalizer

Azure AI Personalizer is an AI service that helps applications make better decisions at scale using reinforcement learning.

The service evaluates information about the state of the application, scenario, and/or users (the context), together with a set of possible decisions and their attributes (the actions), to select the best action.

Feedback from the application (the reward) is sent back to Personalizer so that it can improve its decision-making in near real time.
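The loop described here (observe the situation, pick one of several choices, then learn from the feedback) can be sketched with an epsilon-greedy bandit. This is a deliberately simple stand-in and not the Personalizer SDK or its algorithm; the class name, method names, and example values are hypothetical.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Optional


class EpsilonGreedyChooser:
    """Tiny contextual bandit: keeps a running average reward per (context, action)."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.actions = list(actions)
        self.epsilon = epsilon              # probability of exploring a random action
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)
        self.values = defaultdict(float)

    def choose(self, context: Hashable) -> str:
        """Explore with probability epsilon, otherwise pick the best-known action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def reward(self, context: Hashable, action: str, value: float) -> None:
        """Fold the observed reward into the running average for this pair."""
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (value - self.values[key]) / self.counts[key]


if __name__ == "__main__":
    chooser = EpsilonGreedyChooser(["article", "video"], epsilon=0.0)
    chooser.reward("mobile", "video", 1.0)    # user engaged with the video
    chooser.reward("mobile", "article", 0.0)  # user ignored the article
    print(chooser.choose("mobile"))
```

Setting epsilon above zero trades a little short-term reward for the chance to discover that preferences have changed.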

It is a powerful option for developers and teams that want to deliver adaptive, personalized experiences for each user. The service can be accessed through an SDK, a REST API, or the Personalizer web portal.

Azure AI Metrics Advisor

Azure AI Metrics Advisor is a time-series monitoring service that provides a set of APIs for data ingestion, anomaly detection, and root cause analysis.

It automates the process of applying models to data and offers a web-based workspace for data ingestion, anomaly detection, and diagnostics, with no data science expertise required.

Metrics Advisor supports ingesting multi-dimensional metric data from various storage services, such as Azure Blob Storage, MongoDB, SQL Server, and others.

The service is part of Azure’s AI services and uses AI to monitor data and detect anomalies in time-series data.

Azure AI Immersive Reader

Azure AI Immersive Reader is an AI service that helps people read and comprehend text.

It improves reading comprehension and achievement through AI and lets developers embed reading and comprehension capabilities into their applications.

Azure AI Immersive Reader builds on Azure’s AI services to simplify deploying an inclusive solution that supports readers of any age or ability with features such as read-aloud narration, translation into other languages, and focus aids that use highlighting and other formatting.

What are the benefits of Azure AI Services?

Azure AI services allow businesses and developers to create secure and scalable apps. With the help of pre-built models and flexible deployment options, AI integrates with real-life scenarios seamlessly. Given below are the benefits of AI services by Azure.

Comprehensive Ecosystem

Microsoft Azure AI services provide a broad AI ecosystem that can meet diverse business needs.

Whatever capability you need, whether NLP, computer vision, machine learning, or speech recognition, Azure offers APIs and pre-built models that you can integrate with your apps.

Flexibility and Scalability

Scalability is one of the platform's main strengths. As your company grows, Azure AI services can scale accordingly.

Regardless of the size of your business, Azure AI allocates resources as they are needed.

Boosted Customer Experience

Today, meeting customer demands efficiently has become even more important than before. Azure AI allows businesses to create personalized experiences with the help of sentiment analysis.

By using the insights that AI provides, businesses can create customized offers, deals, and interactions to provide maximum customer satisfaction.

Data-oriented Decision Making

You can get robust data analytics in Azure AI services that assist businesses in making better, more informed choices. Businesses can identify patterns and trends in their data that might otherwise go overlooked by utilizing machine learning.

The result is better forecasting, smarter planning, and a clearer view of what lies ahead.

Azure AI helps transform raw data into insightful knowledge that produces tangible outcomes, whether it be forecasting customer demands, optimizing supply chains, or identifying market changes.

Streamlined Business Processes

Azure AI also helps streamline business operations by automating routine tasks and carrying out complicated workflows.

This reduces errors, minimizes manual intervention, and frees up staff to focus on more valuable work.

Pricing Benefits of Using Azure AI Services

The pricing policies of Azure are one of the best things about it. Let’s find out how you can save with its pricing models.

Monthly or yearly plans: Some services offer fixed pricing plans. These include a set number of uses each month, and you can pay monthly or yearly. This helps if you want to plan your budget ahead of time.

You are charged for what you use: Many of Azure's AI services are pay-as-you-go. This means that you only pay for what you use, such as the volume of data your app processes or the number of calls it makes to the service. This works well for apps whose monthly usage is hard to predict.

Free use and free trials: Microsoft frequently offers a free trial or a restricted period of free use. This enables you to test the services before you buy them.

Additional fees for personalized AI models: The cost of training your AI model (for instance, with Azure Machine Learning or Custom Vision) is determined by the amount of data you utilize and the amount of processing power required.

Costs of management and storage: Paying for things like data storage and AI system management, particularly if it operates in real-time, may be necessary in addition to the basic service.

Savings and exclusive deals: Azure gives you a discount if you pay in advance, use the service frequently, or are a nonprofit or student. They occasionally provide exclusive discounts as well.

Exchange rates and currency: If you aren't paying in US dollars, Microsoft converts the prices using London closing spot exchange rates.

Conclusion

Azure AI services embody a dynamic collection of utilities and capabilities that equip engineers and enterprises to utilize the disruptive promise of machine intelligence.

Through a wide selection of ready-to-use frameworks, endpoints, and development kits, Azure AI offerings support the design of smart, scalable products that accelerate digital progress and technological creativity.

From pattern spotting to vision analysis, and voice interaction to language interpretation, Azure AI services span a wide array of functions, establishing it as a full-spectrum ecosystem for intelligence-powered developments.

These features not only speed up building timelines but also reinforce ethical AI usage, helping firms launch tools rapidly while adhering to compliance and responsible guidelines.

Additionally, the provision of Azure AI tools via REST-based services and native SDKs in widely used programming environments ensures straightforward use and seamless connectivity with current pipelines and systems.

To sum up, Azure AI resources go beyond basic technology: they serve as a foundation for crafting smart, visionary systems that reshape our digital interactions.

By adopting these intelligent Azure AI services, professionals and businesses can not only remain pioneers in tech advancement but also help shape a smarter, more equitable digital tomorrow.

If you plan to adopt these services, working with an experienced Azure AI consultant can help you get the best results.

Frequently Asked Questions

Q1. What are AI services in Azure?

These are a set of Azure products that make it easy to add AI capabilities to applications. Common examples include Azure AI Search, Azure AI Content Safety, Azure OpenAI Service, and Azure AI Speech.

Q2. Which one is easier to use, Azure AI or AWS?

Azure AI is often considered easier to use than AWS.

Q3. What are Microsoft AI tools?

They are typically a set of apps and services that provide task automation, boosted productivity, and deep insights with the help of AI.

Q4. How is Azure AI Beneficial?

It supports continuous adjustments, updates, application deployment, and model development.


Text

Text Processing Software Development

Text processing is one of the oldest and most essential domains in software development. From simple word counting to complex natural language processing (NLP), developers can build powerful tools that manipulate, analyze, and transform text data in countless ways.

What is Text Processing?

Text processing refers to the manipulation or analysis of text using software. It includes operations such as searching, editing, formatting, summarizing, converting, or interpreting text.

Common Use Cases

Spell checking and grammar correction

Search engines and keyword extraction

Text-to-speech and speech-to-text conversion

Chatbots and virtual assistants

Document formatting or generation

Sentiment analysis and opinion mining

Popular Programming Languages for Text Processing

Python: With libraries like NLTK, spaCy, and TextBlob

Java: Common in enterprise-level NLP solutions (Apache OpenNLP)

JavaScript: Useful for browser-based or real-time text manipulation

C++: High-performance processing for large datasets

Basic Python Example: Word Count

    def word_count(text):
        # Split on whitespace and count the resulting words.
        words = text.split()
        return len(words)

    sample_text = "Text processing is powerful!"
    print("Word count:", word_count(sample_text))

Essential Libraries and Tools

NLTK: Natural Language Toolkit for tokenizing, parsing, and tagging text.

spaCy: Industrial-strength NLP for fast processing.

Regex (Regular Expressions): For pattern matching and text cleaning.

BeautifulSoup: For parsing HTML and extracting text.

Pandas: Great for handling structured text like CSV or tabular data.
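As a small illustration of the regular-expression entry above, the sketch below uses only Python's built-in re module for cleaning and pattern matching. The function names are hypothetical, and the tag-stripping pattern is a rough heuristic; a real HTML parser such as BeautifulSoup is the safer choice for actual web pages.

```python
import re
from typing import List


def clean_text(raw: str) -> str:
    """Remove tag-like fragments and collapse runs of whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)  # drop anything that looks like an HTML tag
    text = re.sub(r"\s+", " ", text)     # collapse spaces, tabs, and newlines
    return text.strip()


def find_dates(text: str) -> List[str]:
    """Find dates written as YYYY-MM-DD."""
    return re.findall(r"\b\d{4}-\d{2}-\d{2}\b", text)


if __name__ == "__main__":
    print(clean_text("<p>Hello,   world!</p>\n"))
    print(find_dates("Released 2025-01-15, updated 2025-03-02."))
```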

Best Practices

Always clean and normalize text data before processing.

Use tokenization to split text into manageable units (words, sentences).

Handle encoding carefully, especially when dealing with multilingual data.

Structure your code modularly to support text pipelines.

Profile your code if working with large-scale datasets.
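Several of these practices fit into one short pipeline. The sketch below uses only the standard library; the function names are hypothetical, and the sentence splitter is a naive rule that will stumble on abbreviations, which is where NLTK or spaCy tokenizers do better.

```python
import re
import unicodedata
from typing import List


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization, case-fold, and trim surrounding whitespace."""
    return unicodedata.normalize("NFKC", text).casefold().strip()


def split_sentences(text: str) -> List[str]:
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def tokenize_words(sentence: str) -> List[str]:
    """Return the runs of word characters in a sentence."""
    return re.findall(r"\w+", sentence)


def pipeline(raw: str) -> List[List[str]]:
    """Normalize, split into sentences, then tokenize each sentence into words."""
    return [tokenize_words(s) for s in split_sentences(normalize(raw))]


if __name__ == "__main__":
    # Decode bytes explicitly so the encoding is never left to a platform default.
    raw = "Text processing is powerful! Start simple.".encode("utf-8").decode("utf-8")
    print(pipeline(raw))
```

Keeping each stage as its own function makes it straightforward to swap one out, or to profile stages separately on large datasets.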

Advanced Topics

Named Entity Recognition (NER)

Topic Modeling (e.g., using LDA)

Machine Learning for Text Classification

Text Summarization and Translation

Optical Character Recognition (OCR)

Conclusion

Text processing is at the core of many modern software solutions. From basic parsing to complex machine learning, mastering this domain opens doors to a wide range of applications. Start simple, explore available tools, and take your first step toward developing intelligent text-driven software.
