#Personalization using vector databases

Explore tagged Tumblr posts

Text

#best vector database for large-scale AI#Deep learning data management#How vector databases work in AI#Personalization using vector databases#role of vector search in semantic AI#vector database for AI

0 notes

Text

@odditymuse you know who this is for

Jace hadn’t actually been paying all that much attention to his surroundings. Perhaps that was the cause of his eventual downfall. The Stark Expo was just so loud, though. Not only to his ears, but to his mind. So much technology in one space, and not just the simple sort, like a bunch of smartphones—not that smartphones could necessarily be classified as simple technology, but when one was surrounded by them all day, every day, they sort of lost their luster—but the complex sort that Jace had to really listen to in order to understand.

After about an hour of wandering, he noticed someone at one of the booths having difficulty with theirs. A hard-light hologram interface; fully tactile holograms with gesture-control and haptic feedback. At least, that was what the poor woman was trying to demonstrate. However, the gestural database seemed to be corrupted. No matter how she tried, the holograms simply wouldn’t do what she wanted them to, either misinterpreting her gesture commands or completely reinterpreting them.

Jace had offered up a suggestion after less than a minute of looking at the device:

“Have you tried realigning the inertial-motion sensor array that tracks hand movement vectors? Your gesture database might not be the actual problem.” That was what she was currently looking at, and it wasn’t. “Even a small drift on, say, the Z-axis,” the axis with the actual misalignment issue, “would feed bad data into the system. Which would, in turn, make the gesture AI try to compensate, learning garbage in the process.”

He’d received a hell of a dirty look for his attempted assistance, and frankly, if she didn’t want to accept his help, that was fine—Jace was used to people ignoring him, even when he was, supposedly, the expert on the matter. But then the woman’s eyes went big, locked onto something behind him, and a single word uttered in a man’s voice had Jace spinning around and freezing, like a deer in headlights.

“Fascinating.”

Oh god. Oh no. That was Tony Stark. The Tony Stark. The one person Jace did not want to meet and hadn’t expected to even be milling around here, if he’d bothered to come at all.

“…Um.” Well done, Jace. Surely that will help your cause.

7 notes

·

View notes

Note

Hi Barb! I was wondering how you went about making your visual assets (ex. the paw print logo) for Blood Moon. Was there a particular program you used? Did you commission a graphic designer? Thanks so much!

I use this website for graphic elements in my games:

This includes the paw print (though it doesn't seem to be there anymore?), the splatter, and the brushstrokes I use for some of my games.

If I want photos or videos (like in Drown With Me) I use this website:

The font I used for Blood Moon was Fonturn:

Occasionally I will try to make graphic elements on my own, but only if it makes sense for them to be a little rudimentary (I'm not really good at art and my computer is prehistoric).

I made the logo for Something a Little Super by myself in an ancient version of photoshop.

For music I lean VERY heavily on these two guys:

Sound effects come from several websites but most often:

I hope that helps.💙

33 notes

·

View notes

Note

its not really archival when youre completely creating a different flag and choosing not to reblog live posts

again, those posts are pngs. despite us posting pngs ourselves, the real archive is the google drive database (which will become a neocities eventually). every post we make is simply to let followers and tumblr users know that there is a new vector available to them.

not every flagmaker on tumblr or anywhere else has professional academic understanding of design and visual experience. and that's not their fault. but still, sometimes you can see the pixel artefacting of a person simply using the bucket tool. there's nothing wrong with doing that, but there are people out there (like ourselves) who would rather have a cleaner, better resolution image of said flags.

what is in the queue is currently all flags that applied to any one of the mods. we are open to requests to allow others to have vectors of what applies to them, but for now what our current working flagmaker does is find flags that are visually messy or visibly using a template that is pixelated to vector so it is better archivable.

if you want me to be a better archive, i guess i'll start copy-pasting flags as they are into pluralpedia and transid.org since i've got accounts on there. but what i want to do is to make better images to post onto those archives instead. tumblr isn't a perfect website. we all know how shit its search function is both on the tumblr explore and on specific blogs. that's why we don't care to reblog anything. regardless of how well we can tag items, tagging is useless without a tag compilation.

sure, sometimes it's not a 1:1 recreation. sometimes people don't make a flag that is viable for 1:1 recreations.

tl;dr, the work we put into archiving is not visible on tumblr. reblogging is not our form of archiving. — bishop

4 notes

·

View notes

Text

🔥🔥🔥AzonKDP Review: World's First Amazon Publishing AI Assistant

AzonKDP is an AI-powered publishing assistant that simplifies the entire process of creating and publishing Kindle books. From researching profitable keywords to generating high-quality content and designing captivating book covers, AzonKDP handles every aspect of publishing. This tool is perfect for anyone, regardless of their writing skills or technical expertise.

Key Features of AzonKDP

AI-Powered Keyword Research

One of the most crucial aspects of successful publishing is selecting the right keywords. AzonKDP uses advanced AI to instantly research profitable keywords, ensuring your books rank highly on Amazon and Google. By tapping into data that’s not publicly available, AzonKDP targets the most lucrative niches, helping your books gain maximum visibility and reach a wider audience.

Niche Category Finder

Finding the right category is essential for becoming a best-seller. AzonKDP analyzes Amazon’s entire category database, including hidden ones, to place your book in a low-competition, high-demand niche. This strategic placement ensures maximum visibility and boosts your chances of becoming a best-seller.

AI Book Creator

Writing a book can be a daunting task, but AzonKDP makes it effortless. The AI engine generates high-quality, plagiarism-free content tailored to your chosen genre or topic. Whether you’re writing a novel, self-help book, business guide, or children’s book, AzonKDP provides you with engaging content that’s ready for publication.

AI-Powered Cover Design

A book cover is the first thing readers see, and it needs to be captivating. AzonKDP’s AI-powered cover designer allows you to create professional-grade covers in seconds. Choose from a variety of beautiful, customizable templates and make your book stand out in the competitive market.

Automated AI Publishing

Formatting a book to meet Amazon’s publishing standards can be time-consuming and technical. AzonKDP takes care of this with its automated publishing feature. With just one click, you can format and publish your book to Amazon KDP, Apple Books, Google Books, and more, saving you hours of work and technical headaches.

Competitor Analysis

Stay ahead of the competition with AzonKDP’s competitor analysis tool. It scans the market to show how well competing books are performing, what keywords they’re using, and how you can outshine them. This valuable insight allows you to refine your strategy and boost your book’s performance.

Multi-Language Support

Want to reach a global audience? AzonKDP allows you to create and publish ebooks in over 100 languages, ensuring your content is accessible to readers worldwide. This feature helps you tap into lucrative international markets and expand your reach.

Multi-Platform Publishing

Don’t limit yourself to Amazon. AzonKDP enables you to publish your ebook on multiple platforms, including Amazon KDP, Apple Books, Google Play, Etsy, eBay, Kobo, JVZoo, and more. This multi-platform approach maximizes your sales potential and reaches a broader audience.

AI SEO-Optimizer

Not only does AzonKDP help your books rank on Amazon, but it also optimizes your content for search engines like Google. The AI SEO-optimizer ensures your book has the best chance of driving organic traffic, increasing your visibility and sales.

Built-in Media Library

Enhance your ebook with professional-quality visuals from AzonKDP’s built-in media library. Access over 2 million stock images, videos, and vectors to personalize your content and make it more engaging for readers.

Real-Time Market Trends Hunter

Stay updated with the latest Amazon market trends using AzonKDP’s real-time trends hunter. It shows you which categories and keywords are trending, allowing you to stay ahead of the curve and adapt to market changes instantly.

One-Click Book Translation Translate your ebook into multiple languages with ease. AzonKDP’s one-click translation feature ensures your content is available in over 100 languages, helping you reach a global audience and increase your sales.

>>>>>Get More Info

#affiliate marketing#Easy eBook publishing#Digital book marketing#AzonKDP Review#AzonKDP#Amazon Kindle#Amazon publishing AI#Ai Ebook Creator

5 notes

·

View notes

Text

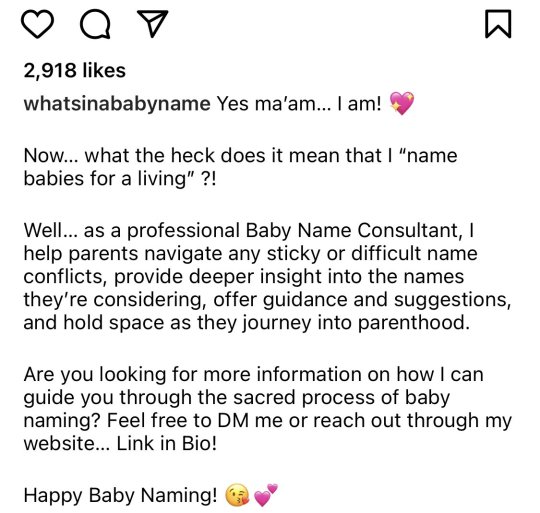

Re: “Emily” yes, it’s a very nice name, but it was also the #1 girls name in the US from 1999-2007 and only dropped out of the top 10 in 2017.

Right now, it’s a safe enough name, but a decade ago you would have to ask yourself, do I want my kid to have to go by Emily X. all through elementary school because there are three in her class? My sister was named Jessica in the 1980s and went through that and hated it. On the other hand, names are more diverse now, so even when it was #1 she might still be the only one - but there would also be Emmas plus “alternate spellings” like Emilie, Emilee, and Emmalee, which were all in the top 1000 when Emily was in the top 10.

And sure, that’s all info you can get yourself, but plenty of people have made money off of writing baby name books that put all that info in one easy place so you don’t have to do dozens of searches of Social Security’s databases, plus things like celebrities with the same name and other possible teasing vectors, etc etc.

Idk, naming kids is hard. They’re gonna have to deal with that name for a long-ass time, and if they have to change it as an adult it’s kind of a pain in the ass. Plenty of people get help, even if most can’t or wouldn’t spend the money on a personal consultant. And yeah, if this lady can give us one fewer kayleigh or brooklynne or whatever, more power to her.

I wish this was my job.

#I didn’t name my son my top choice bc it was the no 1 boy name that year#also ironic that the person posting that has mysharona in their username#because I was almost named Sharona after that song 😫#I was still named after a song but not that one

86K notes

·

View notes

Text

The Role of Data Science in Artificial Intelligence

In today’s digital world, two of the most powerful forces driving innovation are Data Science and Artificial Intelligence (AI). While distinct, these fields are tightly intertwined, with data science providing the essential foundation upon which AI operates. As organizations increasingly turn to intelligent systems to enhance user-friendliness, decision-making, automate operations, and personalize customer experiences, The synergy between data science and AI becomes more vital than ever.

This blog explores how data science underpins the functionality and effectiveness of AI, from model training to real-world deployment, and there are many opportunities in this field, and you have better career growth in the industry.

What Is Data Science in Artificial Intelligence?

At its core, data science is about transforming raw data into meaningful and actionable insights. It encompasses the entire lifecycle of data – collection, cleaning, transformation, analysis, visualization, and interpretation. A data scientist leverages statistical methods, coding abilities, and subject matter knowledge to identify trends and support informed decision-making.

Key components of data science include:

Data Collection: Gathering data from various sources such as sensors, user interactions, surveys, or external databases.

Data Cleaning: Removing inaccuracies, filling in missing values, and ensuring data consistency.

Exploratory Data Analysis (EDA): Visualizing data distributions and identifying trends or anomalies.

Predictive Modeling: Applying algorithms to forecast results or categorize data.

Reporting: Presenting insights via dashboards or reports for stakeholders.

Understanding Artificial Intelligence

Artificial intelligence is the field of creating systems that can perform tasks typically requiring human intelligence. These tasks include speech recognition, decision-making, understanding language, and image identification.

Data science in artificial intelligence can be broken down into subfields:

Machine Learning (ML): Systems learn from data to make predictions without being explicitly programmed.

Deep Learning: A subset of ML using neural networks to analyze large volumes of unstructured data like images or audio.

Natural Language Processing (NLP): Enables machines to understand, process, and interact using human language.

Computer Vision: Enables machines to process and make sense of visual data to inform decisions.

Popular AI applications include autonomous vehicles, fraud detection systems, chatbots, language translation tools, and virtual assistants.

How Data Science Powers AI

AI systems’ effectiveness depends heavily on the quality of the data they are trained with and this is precisely where data science plays a crucial role. Data science provides the structured framework, techniques, and infrastructure needed to build, evaluate, and refine AI models.

Data Preparation Before training an AI model, data scientists gather relevant data, clean it, and transform it into a usable format. Poor-quality data leads to poor predictions, making this step crucial.

Feature EngineeringNot all raw data is directly useful. Data scientists extract features—specific variables that are most relevant for model training. For instance, in a housing price prediction model, features might include location, square footage, and age of the house.

Model Selection and Training Data science provides the tools to train models using different algorithms (e.g., decision trees, support vector machines, neural networks). Data scientists test multiple models and tune hyperparameters to find the best performer.

Model Evaluation Once trained, models must be validated using techniques like cross-validation, confusion matrices, precision-recall scores, and ROC curves. This ensures the AI performs accurately and reliably on real-world data.

Deployment and Monitoring Data science doesn’t end with a deployed model. Continuous monitoring ensures models adapt to changing data patterns and remain effective over time.

Practical Applications of AI Powered by Data Science

The collaboration between AI and data science is transforming nearly every industry. Here are some practical examples from the real world:

Healthcare

AI systems diagnose diseases, predict patient risks, and personalize treatment plans. Data science helps clean and standardize patient data, ensuring AI models provide accurate diagnostics and recommendation

2.E-commerce

Major retailers such as Amazon utilize AI-driven recommendation engines to offer personalized product suggestions. These models rely on user behavior data—what you search, click, and buy—cleaned and structured by data science techniques.

3.Finance

Banks use AI for fraud detection, credit scoring, and algorithmic trading. Data scientists develop and test models using historical transaction data, continuously updating them to catch evolving fraud tactics.

4. Transportation

From optimizing delivery routes to powering autonomous vehicles, data science fuels AI algorithms that analyze traffic patterns, sensor data, and geospatial information.

5.Entertainment

Services like Netflix and Spotify leverage AI to customize content recommendations to individual user preferences. Data science processes viewing/listening histories to help the AI understand individual preferences.

Challenges in Integrating Data Science with Artificial Intelligence

Even though data science and AI have great potential, combining them still comes with some challenges.

Data Quality and Volume AI models require vast amounts of clean, labeled data. Poor data can result in misleading predictions or model failures.

Bias and Ethics AI can pick up biases from the data it learns from, which can cause unfair or unequal results. For instance, a hiring tool trained on biased past data might prefer one group of people over another

3. Infrastructure and Scalability Processing and storing large datasets demands robust infrastructure. Organizations must invest in cloud computing, databases, and data pipelines.

Interpretability AI models, particularly deep learning models, often work like black boxes, making it hard to understand how they make decisions. Data scientists play a critical role in making these models interpretable and transparent for stakeholders.

The Future of Data Science in AI

As both fields grow, they will rely on each other even more.Here’s a glimpse of what the future may bring:

AutoML: Automated machine learning platforms will make it easier for non-experts to develop AI models, guided by principles from data science. Compared to other platforms, it’s a very easy learning platform.

AI Ops: Data science will play a major role in using AI to automate IT operations and system monitoring.

Edge AI: With growing demand for real-time decision-making, data science will help optimize models for edge devices like mobile phones and IoT sensors.

Personalized AI: AI will become more personalized, understanding individual behavior better—thanks to refined data science models and techniques.

The demand for professionals who can bridge the gap between data science and AI is rapidly growing. Roles like machine learning engineers, data engineers, and AI researchers are in high demand.

Conclusion

Data science is the backbone of artificial intelligence. It handles everything from collecting and cleaning data to building and testing models, helping AI to learn and get better. Without good data, AI can’t work—and data science makes sure that data is clear, useful, and reliable.

If you want to build smart systems or drive innovation in any field, understanding data science isn’t just helpful—it’s essential.

Start learning data science now, and you’ll be ready to unlock the full power of AI in the future.

#students#DataScience#MachineLearning#ArtificialIntelligence#AI#DeepLearning#PythonProgramming#BigData#Analytics#DataAnalytics#DataVisualization#DataScientist#DataScienceLife#TechTrends#Programming#DataEngineer#nschool academy

0 notes

Text

Fallout

My games main inspiration is the fallout franchise, A post-apocalyptic RPG game franchise set in various locations around America in a 60's futuristic styled environment, and I have looked at locations and designs from fallout 3's Operation Anchorage DLC to establish a setting and timeframe for the game itself.

The Battle of Anchorage, which is where the DLC takes place was a war that was fought by China and the United States from Late 2066 and early 2077, the environment features dead trees, snow covered terrain and numerous military installations across the DLC's depiction of anchorage.

The main idea was to have the player fight the Chinese Chimera take, though I did consider a swapped perspective as a backup in-case I was unable to source a suitable reference model, though it would be a lot harder considering there are only 2 tanks ever seen in fallout, those being the tanks from the anchorage DLC, and Wrecks of tanks in the base game.

The game using these aspects also works as a reference to Battlezone again with their Military Bradley Training version, which my game could be seen as a fallout universe based version of that game.

Combined with the setting, The games ideal controller contributes to the Fallout theming even more.

The Pip-Boy 3000 (Personal Information Processor is a utility device serving as a database of personal information about its user, which also includes a functional radio, Geiger counter, and flashlight, not to mention its ability to monitor and display vital information to the wearer (hunger, thirst, tiredness, and general health).

youtube

It features multiple dials and knobs for navigation of its various screens, which will be used as the controller binds for the game using its 'Ext Terminal' function, exclusive to the real world replica.

Its default green display will be one of the games main stylistic features, outside of the vector graphic replication.

[Fallout 1 & 2] - [Fallout Tactics] - [Fallout 3 & New Vegas] - [Fallout 4 & 76] (With an example of a Holotape game to give an idea of how the concept could look like if I were to work on it)

If I'm able to, I've also toyed around with the idea of making a concept Holotape design for the game. Holotapes were used throughout every fallout game as a was for information to be stored on, though their designs changed with each major release, and changing the most in Bethesda's release of their own fallout games.

0 notes

Text

What Are the Key Technologies Behind Successful Generative AI Platform Development for Modern Enterprises?

The rise of generative AI has shifted the gears of enterprise innovation. From dynamic content creation and hyper-personalized marketing to real-time decision support and autonomous workflows, generative AI is no longer just a trend—it’s a transformative business enabler. But behind every successful generative AI platform lies a complex stack of powerful technologies working in unison.

So, what exactly powers these platforms? In this blog, we’ll break down the key technologies driving enterprise-grade generative AI platform development and how they collectively enable scalability, adaptability, and business impact.

1. Large Language Models (LLMs): The Cognitive Core

At the heart of generative AI platforms are Large Language Models (LLMs) like GPT, LLaMA, Claude, and Mistral. These models are trained on vast datasets and exhibit emergent abilities to reason, summarize, translate, and generate human-like text.

Why LLMs matter:

They form the foundational layer for text-based generation, reasoning, and conversation.

They enable multi-turn interactions, intent recognition, and contextual understanding.

Enterprise-grade platforms fine-tune LLMs on domain-specific corpora for better performance.

2. Vector Databases: The Memory Layer for Contextual Intelligence

Generative AI isn’t just about creating something new—it’s also about recalling relevant context. This is where vector databases like Pinecone, Weaviate, FAISS, and Qdrant come into play.

Key benefits:

Store and retrieve high-dimensional embeddings that represent knowledge in context.

Facilitate semantic search and RAG (Retrieval-Augmented Generation) pipelines.

Power real-time personalization, document Q&A, and multi-modal experiences.

3. Retrieval-Augmented Generation (RAG): Bridging Static Models with Live Knowledge

LLMs are powerful but static. RAG systems make them dynamic by injecting real-time, relevant data during inference. This technique combines document retrieval with generative output.

Why RAG is a game-changer:

Combines the precision of search engines with the fluency of LLMs.

Ensures outputs are grounded in verified, current knowledge—ideal for enterprise use cases.

Reduces hallucinations and enhances trust in AI responses.

4. Multi-Modal Learning and APIs: Going Beyond Text

Modern enterprises need more than text. Generative AI platforms now incorporate multi-modal capabilities—understanding and generating not just text, but also images, audio, code, and structured data.

Supporting technologies:

Vision models (e.g., CLIP, DALL·E, Gemini)

Speech-to-text and TTS (e.g., Whisper, ElevenLabs)

Code generation models (e.g., Code LLaMA, AlphaCode)

API orchestration for handling media, file parsing, and real-world tools

5. MLOps and Model Orchestration: Managing Models at Scale

Without proper orchestration, even the best AI model is just code. MLOps (Machine Learning Operations) ensures that generative models are scalable, maintainable, and production-ready.

Essential tools and practices:

ML pipeline automation (e.g., Kubeflow, MLflow)

Continuous training, evaluation, and model drift detection

CI/CD pipelines for prompt engineering and deployment

Role-based access and observability for compliance

6. Prompt Engineering and Prompt Orchestration Frameworks

Crafting the right prompts is essential to get accurate, reliable, and task-specific results from LLMs. Prompt engineering tools and libraries like LangChain, Semantic Kernel, and PromptLayer play a major role.

Why this matters:

Templates and chains allow consistency across agents and tasks.

Enable composability across use cases: summarization, extraction, Q&A, rewriting, etc.

Enhance reusability and traceability across user sessions.

7. Secure and Scalable Cloud Infrastructure

Enterprise-grade generative AI platforms require robust infrastructure that supports high computational loads, secure data handling, and elastic scalability.

Common tech stack includes:

GPU-accelerated cloud compute (e.g., AWS SageMaker, Azure OpenAI, Google Vertex AI)

Kubernetes-based deployment for scalability

IAM and VPC configurations for enterprise security

Serverless backend and function-as-a-service (FaaS) for lightweight interactions

8. Fine-Tuning and Custom Model Training

Out-of-the-box models can’t always deliver domain-specific value. Fine-tuning using transfer learning, LoRA (Low-Rank Adaptation), or PEFT (Parameter-Efficient Fine-Tuning) helps mold generic LLMs into business-ready agents.

Use cases:

Legal document summarization

Pharma-specific regulatory Q&A

Financial report analysis

Customer support personalization

9. Governance, Compliance, and Explainability Layer

As enterprises adopt generative AI, they face mounting pressure to ensure AI governance, compliance, and auditability. Explainable AI (XAI) technologies, model interpretability tools, and usage tracking systems are essential.

Technologies that help:

Responsible AI frameworks (e.g., Microsoft Responsible AI Dashboard)

Policy enforcement engines (e.g., Open Policy Agent)

Consent-aware data management (for HIPAA, GDPR, SOC 2, etc.)

AI usage dashboards and token consumption monitoring

10. Agent Frameworks for Task Automation

Generative AI platform Development are evolving beyond chat. Modern solutions include autonomous agents that can plan, execute, and adapt to tasks using APIs, memory, and tools.

Tools powering agents:

LangChain Agents

AutoGen by Microsoft

CrewAI, BabyAGI, OpenAgents

Planner-executor models and tool calling (OpenAI function calling, ReAct, etc.)

Conclusion

The future of generative AI for enterprises lies in modular, multi-layered platforms built with precision. It's no longer just about having a powerful model—it’s about integrating it with the right memory, orchestration, compliance, and multi-modal capabilities. These technologies don’t just enable cool demos—they drive real business transformation, turning AI into a strategic asset.

For modern enterprises, investing in these core technologies means unlocking a future where every department, process, and decision can be enhanced with intelligent automation.

0 notes

Text

Machine Learning Algorithms for Predicting Atrial Fibrillation Recurrence

Introduction

Atrial fibrillation (AF) is a chronic, recurrent condition that poses significant challenges in long-term management, particularly after therapeutic interventions such as catheter ablation, cardioversion, or antiarrhythmic drug therapy. Despite advances in treatment, AF recurrence remains a major concern, with recurrence rates varying widely based on individual patient characteristics. Traditional risk assessment models rely on clinical variables such as age, comorbidities, and echocardiographic findings. However, these methods often lack precision in predicting which patients are most likely to experience AF recurrence.

Machine learning (ML) algorithms are revolutionizing AF management by providing data-driven, highly accurate predictive models. By analyzing vast amounts of patient data—including electrocardiographic (ECG) patterns, imaging studies, genetic markers, and wearable device outputs—ML models can identify subtle risk factors that may be overlooked in conventional assessments. These advanced computational techniques enable personalized treatment planning, early intervention strategies, and improved long-term outcomes for AF patients.

Machine Learning in Risk Stratification and Predictive Modeling

Machine learning algorithms offer superior risk stratification by integrating diverse data sources to create predictive models tailored to individual patients. Unlike traditional scoring systems that rely on a limited number of variables, ML models analyze complex interactions between multiple risk factors to generate more precise recurrence predictions.

Supervised learning techniques, such as decision trees, random forests, and support vector machines, are commonly used to classify patients based on their likelihood of AF recurrence. These models learn from historical patient data, identifying key predictors such as left atrial volume, fibrosis patterns on cardiac MRI, and variability in ECG waveforms. Deep learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), enhances predictive accuracy by recognizing intricate patterns in ECG signals, making them invaluable for detecting early signs of arrhythmic recurrence.

The Role of Big Data and Wearable Technology

The growing availability of big data from electronic health records (EHRs), continuous ECG monitoring devices, and genetic databases has fueled the development of more sophisticated ML models for AF prediction. Wearable technologies, including smartwatches and biosensors, continuously collect heart rhythm data, providing real-time insights into arrhythmia patterns and recurrence risks.

ML algorithms process this vast dataset, identifying subtle changes in heart rate variability, P-wave dispersion, and premature atrial contractions that may signal an impending recurrence. By leveraging this continuous stream of patient data, AI-driven models offer real-time risk assessments, enabling physicians to adjust treatment plans proactively. Remote monitoring platforms further enhance patient engagement, allowing early medical intervention and reducing the likelihood of recurrent AF episodes going undetected.

Personalized Treatment Strategies Based on ML Predictions

One of the key advantages of ML-driven AF recurrence prediction is its ability to support personalized treatment strategies. By accurately identifying high-risk patients, clinicians can implement targeted interventions, such as intensified rhythm control strategies, early repeat ablation, or tailored pharmacotherapy, to prevent recurrent AF episodes.

Pharmacological management can also be optimized using ML predictions. For example, patients identified as having a high likelihood of AF recurrence may benefit from earlier initiation of antiarrhythmic drugs or more aggressive anticoagulation therapy to mitigate stroke risk. Conversely, those with a low recurrence probability can avoid unnecessary long-term medication use, reducing the risk of adverse effects. This precision-based approach improves therapeutic outcomes while minimizing treatment burden for patients.

Future Directions and Challenges in ML-Driven AF Management

While ML algorithms have shown great promise in predicting AF recurrence, several challenges remain in their clinical implementation. The need for high-quality, standardized data across diverse patient populations is critical for model generalization and reliability. Biases in training datasets, stemming from disparities in healthcare access and demographic representation, must be addressed to ensure equitable predictions for all patient groups.

Additionally, integrating ML models into routine clinical practice requires seamless interoperability with existing healthcare systems, including EHR platforms and decision-support tools. Ongoing research is focused on developing user-friendly, explainable AI models that provide clear, actionable insights to healthcare providers rather than opaque black box predictions. As technology continues to advance, collaborations between data scientists, cardiologists, and regulatory bodies will be essential in refining ML applications for widespread clinical adoption.

Conclusion

Machine learning algorithms are transforming the prediction and management of atrial fibrillation recurrence by leveraging vast datasets, wearable technology, and advanced computational models. By enhancing risk stratification, enabling real-time monitoring, and supporting personalized treatment strategies, ML-driven approaches improve patient outcomes and reduce the burden of recurrent AF episodes.

As research progresses, further refinement of ML models and their integration into routine clinical workflows will be crucial in optimizing AF management. By embracing AI-driven predictive analytics, healthcare providers can shift from reactive treatment approaches to proactive, data-informed interventions, ultimately improving the quality of life for AF patients and advancing the field of precision cardiology.

1 note

·

View note

Text

AI Agent Development: A Complete Guide to Building Smart, Autonomous Systems in 2025

Artificial Intelligence (AI) has undergone an extraordinary transformation in recent years, and 2025 is shaping up to be a defining year for AI agent development. The rise of smart, autonomous systems is no longer confined to research labs or science fiction — it's happening in real-world businesses, homes, and even your smartphone.

In this guide, we’ll walk you through everything you need to know about AI Agent Development in 2025 — what AI agents are, how they’re built, their capabilities, the tools you need, and why your business should consider adopting them today.

What Are AI Agents?

AI agents are software entities that perceive their environment, reason over data, and take autonomous actions to achieve specific goals. These agents can range from simple chatbots to advanced multi-agent systems coordinating supply chains, running simulations, or managing financial portfolios.

In 2025, AI agents are powered by large language models (LLMs), multi-modal inputs, agentic memory, and real-time decision-making, making them far more intelligent and adaptive than their predecessors.

Key Components of a Smart AI Agent

To build a robust AI agent, the following components are essential:

1. Perception Layer

This layer enables the agent to gather data from various sources — text, voice, images, sensors, or APIs.

NLP for understanding commands

Computer vision for visual data

Voice recognition for spoken inputs

2. Cognitive Core (Reasoning Engine)

The brain of the agent where LLMs like GPT-4, Claude, or custom-trained models are used to:

Interpret data

Plan tasks

Generate responses

Make decisions

3. Memory and Context

Modern AI agents need to remember past actions, preferences, and interactions to offer continuity.

Vector databases

Long-term memory graphs

Episodic and semantic memory layers

4. Action Layer

Once decisions are made, the agent must act. This could be sending an email, triggering workflows, updating databases, or even controlling hardware.

5. Autonomy Layer

This defines the level of independence. Agents can be:

Reactive: Respond to stimuli

Proactive: Take initiative based on context

Collaborative: Work with other agents or humans

Use Cases of AI Agents in 2025

From automating tasks to delivering personalized user experiences, here’s where AI agents are creating impact:

1. Customer Support

AI agents act as 24/7 intelligent service reps that resolve queries, escalate issues, and learn from every interaction.

2. Sales & Marketing

Agents autonomously nurture leads, run A/B tests, and generate tailored outreach campaigns.

3. Healthcare

Smart agents monitor patient vitals, provide virtual consultations, and ensure timely medication reminders.

4. Finance & Trading

Autonomous agents perform real-time trading, risk analysis, and fraud detection without human intervention.

5. Enterprise Operations

Internal copilots assist employees in booking meetings, generating reports, and automating workflows.

Step-by-Step Process to Build an AI Agent in 2025

Step 1: Define Purpose and Scope

Identify the goals your agent must accomplish. This defines the data it needs, actions it should take, and performance metrics.

Step 2: Choose the Right Model

Leverage:

GPT-4 Turbo or Claude for text-based agents

Gemini or multimodal models for agents requiring image, video, or audio processing

Step 3: Design the Agent Architecture

Include layers for:

Input (API, voice, etc.)

LLM reasoning

External tool integration

Feedback loop and memory

Step 4: Train with Domain-Specific Knowledge

Integrate private datasets, knowledge bases, and policies relevant to your industry.

Step 5: Integrate with APIs and Tools

Use plugins or tools like LangChain, AutoGen, CrewAI, and RAG pipelines to connect agents with real-world applications and knowledge.

Step 6: Test and Simulate

Simulate environments where your agent will operate. Test how it handles corner cases, errors, and long-term memory retention.

Step 7: Deploy and Monitor

Run your agent in production, track KPIs, gather user feedback, and fine-tune the agent continuously.

Top Tools and Frameworks for AI Agent Development in 2025

LangChain – Chain multiple LLM calls and actions

AutoGen by Microsoft – For multi-agent collaboration

CrewAI – Team-based autonomous agent frameworks

OpenAgents – Prebuilt agents for productivity

Vector Databases – Pinecone, Weaviate, Chroma for long-term memory

LLMs – OpenAI, Anthropic, Mistral, Google Gemini

RAG Pipelines – Retrieval-Augmented Generation for knowledge integration

Challenges in Building AI Agents

Even with all this progress, there are hurdles to be aware of:

Hallucination: Agents may generate inaccurate information.

Context loss: Long conversations may lose relevancy without strong memory.

Security: Agents with action privileges must be protected from misuse.

Ethical boundaries: Agents must be aligned with company values and legal standards.

The Future of AI Agents: What’s Coming Next?

2025 marks a turning point where AI agents move from experimental to mission-critical systems. Expect to see:

Personalized AI Assistants for every employee

Decentralized Agent Networks (Autonomous DAOs)

AI Agents with Emotional Intelligence

Cross-agent Collaboration in real-time enterprise ecosystems

Final Thoughts

AI agent development in 2025 isn’t just about automating tasks — it’s about designing intelligent entities that can think, act, and grow autonomously in dynamic environments. As tools mature and real-time data becomes more accessible, your organization can harness AI agents to unlock unprecedented productivity and innovation.

Whether you’re building an internal operations copilot, a trading agent, or a personalized shopping assistant, the key lies in choosing the right architecture, grounding the agent in reliable data, and ensuring it evolves with your needs.

1 note

·

View note

Text

The largest set of free and lovely summer icons, images and stickers!

Now this summer it’s here, it’s time to add tropical subjects and sunshine to your designs! Summer tabs can enhance and give a new look to your projects, whether you are a member of a graphic designer, material producer or businessman. To bring color and vitality to their projects, the Iconadda vector provides a vast range of summer icons, attractive summer stickers and summer images.

Why apply stickers, images and icons to the summer? Summer-themed graphics are perfect for marketing, social media, websites and so on. They add a bright, nice stretch to your content that raises the reader interaction. Summer vector icons and tropical images can bring a splash of excitement to your projects, whether you create them for a beach party, summer promotions or holiday blog.

Summer icon, the biggest vector for your projects Our summer icon sets are some of the products: The beach’s palms, sandcastle and umbrellas are some of sun and beach icons. Suitcases, passports and fly are some of symbols on vacation and travel. Ice cream, tropical drinks and Popsicals are some of food and drink icons. Picnic curves, swimming pools and surfboards outside are some of fun icons.

These free summer icons come in SVG and PNG formats, which are easy to incorporate into their designs.

Grand Summer Images for Creative Task Enhance blog entries, web banners and marketing strategies with our free summer images. Among the items in our database: Sumratime flat image for contemporary design Summer water painting with creative twist Sumratime minimum illustration for elegant assignments Our summer vector images are perfect for prints and digital media since they are all of superior quality and can be customized.

Where can I get free summer illustration, stickers and icons? Free summer icons, Vector Summer Pictures and a wide range of eye-catching summer stickers are available in Iconadda. You can use our high-resolution assets for personal and commercial projects

Get summer stickers and icons instantly! To make your designs look energetic and tense, utilize our choice and download the best consemium graphics. Now visit iconadda to utilize our creative resources!

#SummerVibes#SunnyDays#SummerIcons#TropicalStickers#BeachIllustrations#VacationVibes#SummerAesthetic#HotSeason#SummerFun#TravelStickers#PalmTreeIcons#OceanWaves#SunshineGraphics#BeachDay#SummertimeArt#HelloSummer#IceCreamIcons#FlipFlopStickers#HolidayIllustrations#SeasideVibes#SunsetGraphics#SummerFestival#OutdoorAdventure#TropicalParadise#WatermelonIcons#PoolPartyStickers#ExoticSummer#BeachLifeAssets#WarmWeatherArt#SunkissedDesigns

0 notes

Text

Inside the AiBiCi University Data Breach: A Wake-Up Call for Cybersecurity in Higher Education - Blog 3

Introduction

AiBiCi University (ABC), a state institution in the southern Philippines, manages vast amounts of sensitive data, including personally identifiable information (PII) of students, faculty, and administrative staff. However, the university faced a major cybersecurity crisis when an unknown hacker collective breached the student database and leaked private data onto the dark web. The investigation revealed that the breach stemmed from a faculty member’s weak administrator password. Furthermore, ABC University lacked a dedicated Data Protection Officer (DPO) and had outdated cybersecurity measures, leaving it vulnerable to cyber threats.

This incident raised significant concerns among faculty and students about potential financial fraud and identity theft. The National Privacy Commission (NPC) launched an investigation into ABC University for violations of RA 10173 (Data Privacy Act of 2012) concerning data protection, breach disclosure, and institutional responsibility. The university now faces the challenge of addressing these issues, complying with NPC regulations, and reinforcing its data security protocols to prevent future breaches.

Literature Review

1. The Role of Data Protection in Higher Education

Educational institutions manage vast amounts of personal data, making them attractive targets for cybercriminals (Solove, 2006). Universities must balance accessibility with security to prevent unauthorized access to sensitive information. Proper data protection strategies, including encryption, access control, and compliance with legal frameworks, are essential in mitigating risks associated with data breaches (Smith et al., 2018).

2. Password Security and Insider Threats

Weak passwords and insider threats pose major cybersecurity risks. Studies have shown that compromised credentials account for a significant percentage of data breaches (Verizon, 2022). The use of multi-factor authentication (MFA) and role-based access control (RBAC) significantly reduces the risk of unauthorized access (OWASP, 2021). Additionally, continuous monitoring and security awareness training are crucial in preventing faculty and staff from becoming attack vectors.

3. Compliance with Data Protection Laws

RA 10173, also known as the Data Privacy Act of 2012, outlines the legal responsibilities of organizations handling personal data. Organizations that fail to comply with these regulations face penalties, reputational damage, and loss of stakeholder trust (National Privacy Commission, 2020). Compliance requires the appointment of a DPO, conducting Privacy Impact Assessments (PIA), and implementing Incident Response Plans (IRP) to effectively manage data breaches.

Questions and Answers

1. Which clauses of the 2012 Data Privacy Act might AiBiCi University have violated?

ABC University likely violated multiple provisions of RA 10173, particularly those that govern data security, breach notification, and unauthorized processing of personal data. Section 11 emphasizes the principles of data processing, which require organizations to process personal information with transparency, legitimate purpose, and proportionality. ABC University’s failure to secure administrator accounts demonstrates a lapse in maintaining these principles. Furthermore, Section 20 mandates that institutions implement security measures for personal data protection. By failing to update their cybersecurity protocols and enforce strong authentication mechanisms, ABC University neglected its duty to safeguard sensitive data.

Additionally, the university’s breach may fall under Section 25, which penalizes unauthorized processing of personal information. Since the exposed data ended up on the dark web, the university’s failure to prevent unauthorized access means it did not implement sufficient security controls. Section 28, which prohibits unauthorized disclosure, was also likely violated, as confidential student information was made available to third parties without consent. Lastly, Section 30 requires organizations to notify the National Privacy Commission (NPC) and affected individuals immediately after discovering a breach. If ABC University delayed or failed to report the incident, it could face penalties for non-compliance.

2. How can AiBiCi State University make sure that RA 10173 is followed to stop these kinds of incidents?

To ensure compliance with RA 10173, ABC University must implement a structured and proactive approach to data protection. First, the university should appoint a Data Protection Officer (DPO) who is responsible for overseeing data security policies, conducting audits, and ensuring adherence to legal requirements. A strong DPO can bridge the gap between compliance and operational security, reducing the risk of future breaches.

Second, ABC University must conduct Privacy Impact Assessments (PIA) before implementing new information systems or handling sensitive data. These assessments help identify vulnerabilities and allow administrators to take preventive actions. Additionally, implementing multi-layered authentication, such as requiring multi-factor authentication (MFA) for administrative access, would significantly reduce unauthorized logins caused by weak passwords.

Another essential step is to develop a robust incident response plan that outlines the university’s actions in case of a security breach. The plan should include predefined steps for containment, investigation, and notification to the NPC and affected individuals. Furthermore, encrypting sensitive data and ensuring secure storage practices will prevent unauthorized entities from easily accessing private information even if a breach occurs. Lastly, ABC University must regularly update its cybersecurity policies to align with evolving best practices and compliance standards.

3. How should the Data Protection Officer (DPO) of the university react to the NPC's inquiry?

The DPO plays a critical role in responding to the NPC’s inquiry and mitigating the impact of the breach. The first step is to conduct an internal audit to assess the extent of the breach, identify weaknesses in the security framework, and document findings. Transparency is key in demonstrating due diligence, so the DPO must cooperate fully with the NPC’s investigation, providing necessary documents, security logs, and a report on the university’s cybersecurity policies.

In addition, the DPO must notify all affected individuals, informing them of potential risks such as identity theft and advising them on protective measures. To strengthen security immediately, the university should enforce mandatory password resets and improve access control policies. Finally, the DPO must develop and implement a comprehensive Data Privacy Program, ensuring that the institution remains compliant with RA 10173 while fostering a security-first culture.

4. What best practices in cybersecurity might have stopped this hack?

Best practices such as enforcing strong password policies, implementing multi-factor authentication (MFA), and conducting regular cybersecurity training could have prevented this breach. By requiring employees to use complex passwords and MFA, the university could have significantly reduced unauthorized access. Additionally, real-time monitoring and intrusion detection systems would have identified the attack early, allowing for immediate countermeasures. Frequent security audits and strict access controls would have further minimized vulnerabilities.

5. What long-term measures should AiBiCi State University take to improve data security and protection?

ABC University should invest in advanced encryption technologies, automated security systems, and ongoing cybersecurity training. Establishing a cybersecurity task force, conducting risk assessments, and fostering a security-conscious culture will ensure long-term protection. Partnering with cybersecurity firms and adopting international security standards such as ISO 27001 will also strengthen institutional resilience against cyber threats.

What I Have Learned

This case highlights the critical role of data privacy and cybersecurity in educational institutions. I have learned the importance of enforcing strict security policies, such as multi-factor authentication, regular security audits, and continuous employee training. The role of a Data Protection Officer (DPO) is essential in ensuring compliance with RA 10173 and addressing breaches effectively. Understanding legal frameworks like the Data Privacy Act of 2012 helps institutions mitigate risks and respond appropriately to data incidents. This case also emphasizes the importance of transparency and accountability in handling cybersecurity breaches.

Conclusion

The AiBiCi University data breach highlights the critical need for stronger cybersecurity measures in educational institutions. By implementing proactive security strategies and adhering to RA 10173, universities can protect their stakeholders from financial fraud, identity theft, and reputational damage. Moving forward, ABC University must prioritize cybersecurity investments, ensuring a secure and resilient digital environment for students and faculty alike.

References

National Privacy Commission. (2020). RA 10173: Data Privacy Act of 2012.

OWASP. (2021). Best Practices for Secure Authentication.

Smith, J., et al. (2018). Cybersecurity in Higher Education Institutions.

Solove, D. (2006). Understanding Privacy and Data Protection.

Verizon. (2022). Data Breach Investigations Report.

#blog3 #march12/2025 #jaymarasigan #BSIT-IS(3A)

0 notes

Link

[ad_1] Currently, three trending topics in the implementation of AI are LLMs, RAG, and Databases. These enable us to create systems that are suitable and specific to our use. This AI-powered system, combining a vector database and AI-generated responses, has applications across various industries. In customer support, AI chatbots retrieve knowledge base answers dynamically. The legal and financial sectors benefit from AI-driven document summarization and case research. Healthcare AI assistants help doctors with medical research and drug interactions. E-learning platforms provide personalized corporate training. Journalism uses AI for news summarization and fact-checking. Software development leverages AI for coding assistance and debugging. Scientific research benefits from AI-driven literature reviews. This approach enhances knowledge retrieval, automates content creation, and personalizes user interactions across multiple domains. In this tutorial, we will create an AI-powered English tutor using RAG. The system integrates a vector database (ChromaDB) to store and retrieve relevant English language learning materials and AI-powered text generation (Groq API) to create structured and engaging lessons. The workflow includes extracting text from PDFs, storing knowledge in a vector database, retrieving relevant content, and generating detailed AI-powered lessons. The goal is to build an interactive English tutor that dynamically generates topic-based lessons while leveraging previously stored knowledge for improved accuracy and contextual relevance. Step 1: Installing the necessary libraries !pip install PyPDF2 !pip install groq !pip install chromadb !pip install sentence-transformers !pip install nltk !pip install fpdf !pip install torch PyPDF2 extracts text from PDF files, making it useful for handling document-based information. groq is a library that provides access to Groq’s AI API, enabling advanced text generation capabilities. ChromaDB is a vector database designed to retrieve text efficiently. Sentence-transformers generate text embeddings, which helps in storing and retrieving information meaningfully. nltk (Natural Language Toolkit) is a well-known NLP library for text preprocessing, tokenization, and analysis. fpdf is a lightweight library for creating and manipulating PDF documents, allowing generated lessons to be saved in a structured format. torch is a deep learning framework commonly used for machine learning tasks, including AI-based text generation. Step 2: Downloading NLP Tokenization Data import nltk nltk.download('punkt_tab') The punkt_tab dataset is downloaded using the above code. nltk.download(‘punkt_tab’) fetches a dataset required for sentence tokenization. Tokenization is splitting text into sentences or words, which is crucial for breaking down large text bodies into manageable segments for processing and retrieval.Step 3: Setting Up NLTK Data Directory working_directory = os.getcwd() nltk_data_dir = os.path.join(working_directory, 'nltk_data') nltk.data.path.append(nltk_data_dir) nltk.download('punkt_tab', download_dir=nltk_data_dir) We will set up a dedicated directory for nltk data. The os.getcwd() function retrieves the current working directory, and a new directory nltk_data is created within it to store NLP-related resources. The nltk.data.path.append(nltk_data_dir) command ensures that this directory stores downloaded nltk datasets. The punkt_tab dataset, required for sentence tokenization, is downloaded and stored in the specified directory. Step 4: Importing Required Libraries import os import torch from sentence_transformers import SentenceTransformer import chromadb from chromadb.utils import embedding_functions import numpy as np import PyPDF2 from fpdf import FPDF from functools import lru_cache from groq import Groq import nltk from nltk.tokenize import sent_tokenize import uuid from dotenv import load_dotenv Here, we import all necessary libraries used throughout the notebook. os is used for file system operations. torch is imported to handle deep learning-related tasks. sentence-transformers provides an easy way to generate embeddings from text. chromadb and its embedding_functions module help in storing and retrieving relevant text. numpy is a mathematical library used for handling arrays and numerical computations. PyPDF2 is used for extracting text from PDFs. fpdf allows the generation of PDF documents. lru_cache is used to cache function outputs for optimization. groq is an AI service that generates human-like responses. nltk provides NLP functionalities, and sent_tokenize is specifically imported to split text into sentences. uuid generates unique IDs, and load_dotenv loads environment variables from a .env file. Step 5: Loading Environment Variables and API Key load_dotenv() api_key = os.getenv('api_key') os.environ["GROQ_API_KEY"] = api_key #or manually retrieve key from and add it here Through above code, we will load, environment variables from a .env file. The load_dotenv() function reads environment variables from the .env file and makes them available within the Python environment. The api_key is retrieved using os.getenv(‘api_key’), ensuring secure API key management without hardcoding it in the script. The key is then stored in os.environ[“GROQ_API_KEY”], making it accessible for later API calls. Step 6: Defining the Vector Database Class class VectorDatabase: def __init__(self, collection_name="english_teacher_collection"): self.client = chromadb.PersistentClient(path="./chroma_db") self.encoder = SentenceTransformer('all-MiniLM-L6-v2') self.embedding_function = embedding_functions.SentenceTransformerEmbeddingFunction(model_name="all-MiniLM-L6-v2") self.collection = self.client.get_or_create_collection(name=collection_name, embedding_function=self.embedding_function) def add_text(self, text, chunk_size): sentences = sent_tokenize(text, language="english") chunks = self._create_chunks(sentences, chunk_size) ids = [str(uuid.uuid4()) for _ in chunks] self.collection.add(documents=chunks, ids=ids) def _create_chunks(self, sentences, chunk_size): chunks = [] for i in range(0, len(sentences), chunk_size): chunk = ' '.join(sentences[i:i+chunk_size]) chunks.append(chunk) return chunks def retrieve(self, query, k=3): results = self.collection.query(query_texts=[query], n_results=k) return results['documents'][0] This class defines a VectorDatabase that interacts with chromadb to store and retrieve text-based knowledge. The __init__() function initializes the database, creating a persistent chroma_db directory for long-term storage. The SentenceTransformer model (all-MiniLM-L6-v2) generates text embeddings, which convert textual information into numerical representations that can be efficiently stored and searched. The add_text() function breaks the input text into sentences and divides them into smaller chunks before storing them in the vector database. The _create_chunks() function ensures that text is properly segmented, making retrieval more effective. The retrieve() function takes a query and returns the most relevant stored documents based on similarity. Step 7: Implementing AI Lesson Generation with Groq class GroqGenerator: def __init__(self, model_name="mixtral-8x7b-32768"): self.model_name = model_name self.client = Groq() def generate_lesson(self, topic, retrieved_content): prompt = f"Create an engaging English lesson about topic. Use the following information:n" prompt += "nn".join(retrieved_content) prompt += "nnLesson:" chat_completion = self.client.chat.completions.create( model=self.model_name, messages=[ "role": "system", "content": "You are an AI English teacher designed to create an elaborative and engaging lesson.", "role": "user", "content": prompt ], max_tokens=1000, temperature=0.7 ) return chat_completion.choices[0].message.content This class, GroqGenerator, is responsible for generating AI-powered English lessons. It interacts with the Groq AI model via an API call. The __init__() function initializes the generator using the mixtral-8x7b-32768 model, designed for conversational AI. The generate_lesson() function takes a topic and retrieved knowledge as input, formats a prompt, and sends it to the Groq API for lesson generation. The AI system returns a structured lesson with explanations and examples, which can then be stored or displayed. Step 8: Combining Vector Retrieval and AI Generation class RAGEnglishTeacher: def __init__(self, vector_db, generator): self.vector_db = vector_db self.generator = generator @lru_cache(maxsize=32) def teach(self, topic): relevant_content = self.vector_db.retrieve(topic) lesson = self.generator.generate_lesson(topic, relevant_content) return lesson The above class, RAGEnglishTeacher, integrates the VectorDatabase and GroqGenerator components to create a retrieval-augmented generation (RAG) system. The teach() function retrieves relevant content from the vector database and passes it to the GroqGenerator to produce a structured lesson. The lru_cache(maxsize=32) decorator caches up to 32 previously generated lessons to improve efficiency by avoiding repeated computations. In conclusion, we successfully built an AI-powered English tutor that combines a Vector Database (ChromaDB) and Groq’s AI model to implement Retrieval-Augmented Generation (RAG). The system can extract text from PDFs, store relevant knowledge in a structured manner, retrieve contextual information, and generate detailed lessons dynamically. This tutor provides engaging, context-aware, and personalized lessons by utilizing sentence embeddings for efficient retrieval and AI-generated responses for structured learning. This approach ensures learners receive accurate, informative, and well-organized English lessons without requiring manual content creation. The system can be expanded further by integrating additional learning modules, improving database efficiency, or fine-tuning AI responses to make the tutoring process more interactive and intelligent. Use the Colab Notebook here. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 70k+ ML SubReddit. 🚨 Meet IntellAgent: An Open-Source Multi-Agent Framework to Evaluate Complex Conversational AI System (Promoted) Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions. ✅ [Recommended] Join Our Telegram Channel [ad_2] Source link

0 notes

Text

A 5-Step Data Science Guide Anyone Can Follow

Data science has become a cornerstone of modern business and technological advancements. It's the art of extracting valuable insights from data, enabling data-driven decisions that can revolutionize industries. If you're intrigued by the world of data science, here's a 5-step guide to help you embark on your data science journey:

Step 1: Build a Strong Foundation in Mathematics and Statistics

Probability and Statistics: Understand probability distributions, hypothesis testing, and statistical inference.

Linear Algebra: Grasp concepts like matrices, vectors, and linear transformations.

Calculus: Learn differential and integral calculus to understand optimization techniques.

Step 2: Master Programming Languages

Python: A versatile language widely used in data science for data manipulation, analysis, and machine learning.

R: A statistical programming language specifically designed for data analysis and visualization.

SQL: Master SQL to interact with databases and extract relevant data.

Step 3: Dive into Data Analysis and Visualization

Pandas and NumPy: Python libraries for data manipulation and analysis.

Matplotlib and Seaborn: Python libraries for data visualization.

Tableau and Power BI: Powerful tools for creating interactive data visualizations.

Step 4: Learn Machine Learning

Supervised Learning: Understand algorithms like linear regression, logistic regression, decision trees, and random forests.

Unsupervised Learning: Explore techniques like clustering, dimensionality reduction, and anomaly detection.

Deep Learning: Learn about neural networks and their applications in various domains.

Step 5: Gain Practical Experience

Personal Projects: Work on data science projects to apply your skills and build a portfolio.

Kaggle Competitions: Participate in data science competitions to learn from others and improve your skills.

Internships and Co-ops: Gain hands-on experience in a real-world setting.

Remember, data science is a continuous learning process. Stay updated with the latest trends and technologies by following blogs, attending conferences, and participating in online communities.

Xaltius Academy offers comprehensive data science training programs to equip you with the skills and knowledge needed to excel in this growing field. Our expert instructors and hands-on labs will prepare you for success in your data science career.

By following these steps and staying committed to learning, you can embark on a rewarding career in data science.

1 note

·

View note