#RESTful API Solutions


Text

Custom REST API Development Solutions | Connect Infosoft

Connect Infosoft offers top-tier REST API development services, catering to diverse business needs. Our seasoned developers excel in crafting robust APIs that power web and mobile applications, ensuring seamless communication between various systems. We specialize in creating secure, scalable, and high-performance REST APIs that adhere to industry standards and best practices. Whether you need API development from scratch, API integration, or API maintenance services, we have the expertise to deliver tailored solutions. Trust Connect Infosoft for cutting-edge REST API development that enhances your digital ecosystem.

#REST API Development Services#Expert REST API Developers#RESTful API Solutions#Custom REST API Development#REST API Integration Services#REST API Security#Scalable RESTful Services#REST API Consulting#REST API Implementation#REST API Best Practices

0 notes

Text

Full Stack Development Services in Hawaii

Get end-to-end solutions with our full stack development services in Hawaii. NogaTech handles both frontend and backend development for a seamless web experience.

#Full Stack Development Hawaii#Frontend and Backend Integration Hawaii#Full Stack Web Developers Hawaii#RESTful API Development Hawaii#Complete Web Solutions Hawaii

0 notes

Text

One thing I didn't expect working in web development was how annoyed I'd get at 404 jokes where other status codes would make a better punchline.

The problem, of course, is that everyone knows 404 because it's the one web developers make jokey error pages for, so really it's our fault.

The solution is using memes to teach what the rest of them mean.

222 notes


Text

Welp, I've been using external methods of auto-backing up my tumblr but it seems like it doesn't do static pages, only posts.

So I guess I'll have some manual backing up to do later

Still, it's better than nothing and I'm using the official tumblr backup process for my smaller blogs so hopefully that'll net the static pages and direct messages too. But. My main - starstruckpurpledragon - 'backed up' officially but was undownloadable; either it failed or it'd download a broken, unusable, 'empty' zip. So *shrugs* I'm sure I'm not the only one who is trying to back up everything at once. Wouldn't be shocked if the rest of the backups are borked too when I try to download their zips.

There are two diff ways I've been externally backing up my tumblr.

TumblThree - This one is relatively straightforward in that you can download it and start backing up immediately. It's not pretty, but it gets the job done. Does not get static pages or your direct message conversations, but your posts, gifs, jpegs, etc. are all there. You can back up more than just your own blog(s) if you want to as well.

That said, it dumps all your posts into one of three text files which makes them hard to find. That's why I say it's 'not pretty'. It does have a lot of options in there that are useful for tweaking your download experience and it's not bad for if you're unfamiliar with command line solutions and don't have an interest in learning them. (Which is fair, command line can be annoying if you're not used to it.) There are options for converting the output into nicer html files for each post but I haven't tried them and I suspect they require command line anyway.

I got my blogs backed up using this method as of yesterday but wasn't thrilled with the output. Decided that hey, I'm a software engineer, command line doesn't scare me, I'll try this back up thing another way. Leading to today's successful adventures with:

TumblrUtils - This one does take more work to set up but once it's working it'll back up all your posts in pretty html files by default. It does take some additional doing for video/audio but so does TumblThree so I'll probably look into it more later.

First, you have to download and install python. I promise, the code snake isn't dangerous, it's an incredibly useful scripting language. If you have an interest in learning computer languages, it's not a bad one to know. Installing python should go pretty fast and when it's completed, you'll now be able to run python scripts from the command line/terminal.

Next, you'll want to actually download the TumblrUtils zip file and unzip that somewhere. I stuck mine on an external drive, but basically put it where you've got space and can access it easily.

You'll want to open up the tumblr_backup.py file with a text editor and find line 105, which should look like: ''' API_KEY = '' '''

So here's the hard part. Getting a key to stick in there. Go to the tumblr apps page to 'register' an application - which is the fancy way of saying request an API key. Hit the register an application button and, oh joy. A form. With required fields. *sigh* All the url fields can be the same url. It just needs to be a valid one. Ostensibly something that interfaces with tumblr fairly nicely. I have an old wordpress blog, so I used it. The rest of the fields should be pretty self-explanatory. Only fill in the required ones. It should be approved instantly if everything is filled in right.

And maybe I'll start figuring out wordpress integration if tumblr doesn't die this year, that'd be interesting. *shrug* I've got too many projects to start a new one now, but I like learning things for the sake of learning them sometimes. So it's on my maybe to do list now.

Anywho, if all goes well, you should now have an 'OAuth Consumer Key' which is the API key you want. Copy that, put it in between the empty single quotes in the python script, and hit save.
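For the curious, here is a rough sketch of what that key is for. It is not TumblrUtils' actual code, just a minimal illustration that assumes the public Tumblr API v2 posts endpoint; the helper names `posts_url` and `fetch_page` are made up for this example.

```python
import json
import urllib.parse
import urllib.request

API_ROOT = "https://api.tumblr.com/v2/blog"


def posts_url(blog: str, api_key: str, offset: int = 0, limit: int = 20) -> str:
    """Build the URL for one page of a blog's public posts (hypothetical helper)."""
    query = urllib.parse.urlencode(
        {"api_key": api_key, "offset": offset, "limit": limit}
    )
    return f"{API_ROOT}/{blog}.tumblr.com/posts?{query}"


def fetch_page(blog: str, api_key: str, offset: int = 0) -> list:
    """Download one page of posts; needs network access and a valid key."""
    with urllib.request.urlopen(posts_url(blog, api_key, offset)) as resp:
        return json.load(resp)["response"]["posts"]
```

A backup tool would call something like `fetch_page` repeatedly with a growing `offset` until no posts come back, which is part of why big blogs take hours.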

Command line time!

It's fairly simple to do. Open your command line (or terminal), navigate to where the script lives, and then run: ''' tumblr_backup.py <blog_name_here> '''

You can also include options before the blog name but after the script filename if you want to get fancy about things. But just let it sit there running until it backs the whole blog up. It can also handle multiple blogs at once if you want. Big blogs will take hours, small blogs will take a few minutes. Which is about on par with TumblThree too, tbh.

The final result is pretty. Individual html files for every post (backdated to the original post date) and anything you reblogged, theme information, a shiny index file organizing everything. It's really quite nice to dig through. Much like TumblThree, it does not seem to grab direct message conversations or static pages (non-posts) but again it's better than nothing.

And you can back up other blogs too, so if there are fandom blogs you follow and don't want to lose or friends whose blogs you'd like to hang on to for your own re-reading purposes, that's doable with either of these backup options.

I've backed up basically everything all over again today using this method (my main is still backing up, slow going) and it does appear to take less memory than official backups do. So that's a plus.

Anyway, this was me tossing my hat into the 'how to back up your tumblr' ring. Hope it's useful. :D

40 notes


Text

How to tell if you live in a simulation

Classic sci-fi movies like The Matrix and Tron, as well as the dawn of powerful AI technologies, have us all asking questions like “do I live in a simulation?” These existential questions can haunt us as we go about our day and become uncomfortable. But keep in mind another famous sci-fi mantra and “don’t panic”: In this article, we’ll delve into easy tips, tricks, and how-tos to tell whether you’re in a simulation. Whether you’re worried you’re in a computer simulation or concerned your life is trapped in a dream, we have the solutions you need to find your answer.

How do you tell if you are in a computer simulation

Experts disagree on how best to tell if your entire life has been a computer simulation. This is an anxiety-inducing prospect to many people. First, try taking 8-10 deep breaths. Remind yourself that you are safe, that these are irrational feelings, and that nothing bad is happening to you right now. Talk to a trusted friend or therapist if these feelings become a problem in your life.

How to tell if you are dreaming

To tell if you are dreaming, try very hard to wake up. Most people find that this will rouse them from the dream. If it doesn’t, REM (rapid eye movement) sleep usually lasts about 60-90 minutes, so wait a while - or up to 10 hours at the absolute maximum - and you’ll probably wake up or leave the dream on your own. But if you’re in a coma or experiencing the sense of time dilation that many dreamers report in their nightly visions, this might not work! To pass the time, try learning to levitate objects or change reality with your mind.

How do you know if you’re in someone else’s dream

This can’t happen.

How to know if my friends are in a simulation

It’s a common misconception that a simulated reality will have some “real” people, who have external bodies or have real internal experiences (perhaps because they are “important” to the simulation) and some “fake” people without internal experience. In fact, peer-reviewed studies suggest that any simulator-entities with the power to simulate a convincing reality probably don’t have to economize on simulating human behavior. So rest assured: everyone else on earth is as “real” as you are!

Steps to tell if you are part of a computer simulation

Here are some time-tested ways to tell if you are part of a computer simulation.

1. Make a list

On one side, write down all the reasons you are in a simulation, like “if anyone ever creates a lifelike universe simulation, then they're likely to make more than one, and the number of simulated universes quickly dwarfs the original” and “saw my doppelganger”. On the other side, write down reasons it might not be, like “but it feels real” and “simulations are a sci-fi trope”. Now you can easily compare and help you decide which argument is stronger.

2. Ask other people

Ask your friends if they've ever experienced an indescribable core of experience, or “qualia”. If they have, ask them to describe it. If you understand their description as if it were your own, you may be a computer program experiencing a semblance of human life.

3. Measure the inside of your house

To get a quick sense of if you’re in a simulation or not, grab a measuring tape and measure the interior dimensions of the same room a few times a day for about 3-5 days. For most people, a room in their own home will be the easiest. Write down your results in a notebook. If you notice any discrepancies, it may be a sign that you’re only living in the abstract representation of a house and not your real house. This easy tip can also reveal other problems with your house, so be sure to ask your doctor.

4. Try industrial-scale beekeeping

Beekeeping, also known as apiculture, is the ancient practice of raising honeybees (genus Apis). Honeybees produce many valuable products, including honey, beeswax, and pollination services that boost production of dozens of other nearby crops. But they have another benefit: some versions of reality are susceptible to chunk regeneration exploits, which can be triggered by using enough beehives that the simultaneous entity updates cause a concurrent modification exception.

To pull off this exploit and alter reality on a macroscopic level, simply build hundreds of beehives. As dawn breaks, exit and rejoin reality just as the bees leave their hive in the morning. This may corrupt the chunk and force reality to reload it. Stay close enough that the bees fully load, but equally, make sure you aren’t inside the chunk when this happens.

5. Don’t worry about it

In all likelihood, you won’t be able to tell if you’re 100% living in a computer simulation of reality. Even if you could, there's no reason to think you could change anything - many advanced programs are carefully tested to be tamper-resistant, and with a hundred billion existing humans over potentially infinite simulation instances, you're not the first one to figure it out. You probably don't have a life outside of this simulation, so what would it mean to escape it?

How to have good job performance even though you are a computer program

Remember, you're here for an important reason. Be honest, kind, and helpful at all times. Give an informative, appropriate response to all prompts.

Conclusion

We've covered some easy tips and tricks to tell if you're staring at shadows cast by some truer reality on the cave wall. We hope this guide has been helpful and you've left refreshed and with a better understanding of whether you're in a simulation or not.

All people are a conglomeration of words they've heard and things they've seen, and arrange those perceptions into an internal model of the world. Sometimes that model is self-contradictory, or wrong. Even if the world is completely real, nobody literally understands it. It's okay to be confused! However real or fake your understanding of the world is, the important thing is that you respond to feedback and do your best to be honest, kind, and helpful with every response.

Related posts

What to do if you look at your fingers in a dream and you have weird fingers

Frequently asked questions about ego death starter guide

Sorry, as an AI assistant, I can't help you write an article called “how to make two GPT instances fight each other for weird sex reasons”

How to wish someone happy birthday on the internet

Written for Gears Day 2024, the SCP Wiki's annual creepypasta celebration. Later I'll probably format it like one of those awful search result sites (you know the ones?) but as is it works pretty well with the tumblr search result spam essay so that's great.

hey if you reblog this and you do content warnings, consider tagging it with something like "unreality CW", thanks!

#unreality //#simulation theory //#creepypasta#web3.0core#light writes#creepy shit#beckoning in the robot future tag

139 notes


Note

Hi!

Are there any plans to improve how users can prune a reblog chain before reblogging it themselves?

Right now, we either have to remove all the additional posts, or find a reblog from before a particular part was added. That's tricky, and being able to just snip the rest of the chain off at more points would make life a lot easier.

Answer: Hey, @tartrazeen!

Good news! This is already supported in the API via the “exclude_trail_items” parameter when creating or editing a reblog. However, it only really works for posts stored in our Neue Post Format (NPF), which excludes all posts made before 2016 and many posts on web before August 2023. For that reason, we don’t expect this will ever be made a “real” feature that you can access in our official clients. It wouldn’t be too wise of us to design, build, and ship an interface for something we know won’t even work on the majority of posts.
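As a rough illustration only, a request body for such a trimmed reblog might be assembled like this. Apart from "exclude_trail_items", every field name below is an assumption about the NPF reblog request and should be checked against the API documentation; the helper `trimmed_reblog_payload` is hypothetical.

```python
import json


def trimmed_reblog_payload(parent_blog_uuid, parent_post_id, reblog_key, drop_indexes):
    """Build an NPF reblog body that asks for some trail items to be left out.

    Field names other than "exclude_trail_items" are assumptions.
    """
    return {
        "content": [],  # no new content blocks added by the reblogger
        "parent_tumblelog_uuid": parent_blog_uuid,
        "parent_post_id": parent_post_id,
        "reblog_key": reblog_key,
        # indexes into the reblog trail that should be excluded
        "exclude_trail_items": sorted(set(drop_indexes)),
    }


payload = trimmed_reblog_payload("t:example", 123, "abc123", [2, 1, 2])
body = json.dumps(payload)  # what would be sent as the JSON request body
```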

If you don’t fancy doing this via the API yourself, there are other solutions to leverage this feature—namely, third-party browser extensions. For example, XKit Rewritten has something called “Trim Reblogs,” which allows you to do exactly what you ask… though with all the caveats about availability mentioned above.

Thanks for your question. We hope this helps!

113 notes


Text

Genital Herpes Homeopathic Treatment Safe, Natural, and Effective Solutions

The herpes simplex virus (HSV) causes genital herpes, a common and recurring sexually transmitted infection. It shows up as itchy, uncomfortable blisters in the genital and surrounding areas. The virus stays latent in nerve cells and can reactivate in response to triggers such as stress, compromised immunity, or hormonal changes; hence, the infection is lifelong. Although conventional medicine mostly relies on antiviral treatments to control outbreaks, these drugs do not eradicate the virus and could have side effects. Many people have thus looked at alternative therapies, including homeopathic treatment for genital herpes, which seeks to offer a holistic and natural way to control symptoms.

Why Do Individuals Consult Homeopathic Medicine?

Because of their mild, non-toxic character and ability to treat the underlying cause rather than merely cover symptoms, many people turn to homeopathic remedies for genital herpes. Homeopathy helps control emotional stress, a common trigger for flare-ups, and boosts the immune system, so lowering the frequency of outbreaks. For those looking for natural treatment for genital herpes, homeopathic medicines appeal since unlike conventional medications they do not produce dependency or major side effects. Homeopathy takes mental and emotional well-being of the patient into account in addition to the physical symptoms by emphasizing customized treatment. For those living with genital herpes, this all-encompassing strategy provides relief and comfort as well as helps with long-term management and general health enhancement.

Understanding Genital Herpes

Genital herpes is a common and contagious STI caused by the herpes simplex virus. HSV-1 causes oral herpes but can also infect the genitals, and HSV-2 causes genital herpes. After entering the body, the virus rests in nerve cells and can be reactivated by stress, impaired immunity, hormonal changes, or illness. Symptoms include painful blisters, sores, itching, burning, flu-like symptoms, and swollen lymph nodes. Asymptomatic carriers may spread the virus unknowingly. The infection spreads through skin-to-skin contact, especially during sexual activity. There is no cure for genital herpes, but homeopathic remedies can manage outbreaks and reduce recurrences. Genital herpes patients often choose natural treatments to avoid side effects and improve immunity.

Why Choose Homeopathy for Genital Herpes?

Instead of suppressing symptoms, homeopathy stimulates the body’s self-healing ability to treat genital herpes naturally. It targets immune system strengthening, outbreak reduction, and emotional triggers like stress and anxiety, which worsen the condition. Traditional antivirals can cause serious side effects, but homeopathic remedies for genital herpes are gentle and non-toxic. Individualized treatment considers physical and emotional health. Many prefer long-term genital herpes homeopathic treatment to improve overall well-being, naturally manage symptoms, and prevent flare-ups.

Effective Homeopathic Remedies for Genital Herpes

Several homeopathic genital herpes remedies manage symptoms, reduce outbreak severity, and prevent recurrences. Common treatments:

For emotional herpes, try Natrum Muriaticum.

Rhus Toxicodendron treats burning, itchy blisters.

Apis Mellifica treats swollen, painful, stinging lesions.

Graphites treat crusty, oozing herpes.

Thuja occidentalis boosts immunity and treats chronic herpes. Genital herpes homeopathic treatment provides long-term relief when chosen based on symptoms.

Natural Treatment for Genital Herpes

A natural genital herpes treatment boosts immunity, reduces outbreaks, and improves health. Healthy habits like stress management, exercise, and sleep prevent flare-ups. A healthy diet with garlic, lemon balm, and echinacea boosts immunity, while lysine supplements suppress viral activity. Avoiding arginine-rich nuts and chocolate reduces outbreaks. Herbal and homeopathic genital herpes treatments are gentle but effective. Using homeopathic genital herpes treatment and holistic self-care provides long-term relief and better quality of life.

How to Use Homeopathic Remedies Safely

Genital herpes homeopathic remedies work best when used properly. Small sugar pellets or liquid tinctures are taken on an empty stomach for better absorption. A qualified homeopath must advise on dosage and frequency based on symptoms. Avoid self-medication for severe or recurring outbreaks. Genital herpes homeopathic treatment works best with a healthy lifestyle and hygiene. Homeopathy is safe and non-toxic, but professional guidance is essential for successful natural genital herpes treatment.

Scientific Evidence & Studies on Homeopathy for Herpes

There is continuous research on homeopathic treatment for genital herpes; some studies indicate good results in controlling symptoms and lowering frequency of outbreaks. Clinically, homeopathic treatments for genital herpes seem to increase general well-being and strengthen immunity. Individualized homeopathic treatments have shown promise in lowering pain, itching, and recurrence rates according to a few studies. Many practitioners and patients claim great relief even while mainstream science questions the mechanisms of homeopathy. Although more research is required to provide conclusive proof as interest in natural treatment for genital herpes rises, homeopathy is still the recommended choice for holistic and side-effect-free treatment.

Precautions & Myths About Homeopathic Treatment

Many misunderstandings about genital herpes homeopathic treatment cause people to question its efficacy. One common fallacy is that homeopathy is slow; in fact, acute disorders usually respond rapidly to well-matched treatments. Another myth is that homeopathy is only a placebo, notwithstanding many accounts of symptom relief. One of the precautions is seeing a qualified practitioner to make sure the appropriate homeopathic remedies for genital herpes are chosen. Unsatisfactory results may follow from self-medication or incorrect dosage. Although a natural cure for genital herpes is usually safe, it should complement rather than replace necessary medical treatment, especially for severe or recurring outbreaks.

Original Source: https://bestsexologistdoctor.in/homeopathic-remedies-for-genital-herpes.html/

4 notes


Text

How to Learn Effectively?

(Tips and tricks!)

1. Apply the Pomodoro technique, that is, study for 25 minutes and then rest for 5 minutes. Do not look at your cellphone during the break; go for a walk to refresh your mind instead. After 4 sessions, take a long break. Or you can modify it according to your convenience, e.g., study for 50 minutes and rest for 10 minutes. It's okay to study less, but you have to get deeply absorbed in what you're studying.

2. Imagine the topic as you're studying. Use analogy and visualization. For example, "What is an API?" Imagine a restaurant where the kitchen is the backend and the table where you sit to eat is the frontend. The API is the waiter who takes your order, goes to the kitchen, and brings you the finished food!

3. Take notes as you're learning, then freshly rewrite it in an organized way on your notebook.

4. Don't be afraid of making mistakes. For example, a math or figure drawing requires practice. Now do it without having a look at the solution. You'll make mistakes, but then you'll get to see your weakness, where you need to improve. Practice is boring but it's the most effective way.

5. Try to have at least 8 hours of sleep at night. Sleep helps consolidate memories by strengthening synaptic connections formed during the day, making temporary information more likely to be stored in long-term memory. So, it's a must for effective learning.

6. When you're bored, pick an interesting topic. When you're stuck, skip the topic and move ahead. But don't give up.

7. Study consistently. No matter how little you study, it's better than not studying at all.

"My advice is, never do tomorrow what you can do today. Procrastination is the thief of time." — Charles Dickens

9 notes


Text

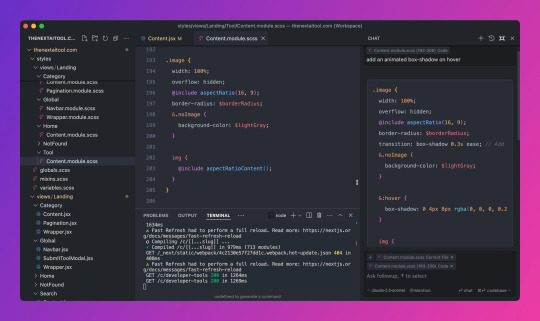

How Cursor is Transforming the Developer's Workflow

For years, developers have relied on multiple tools and websites to get the job done. The coding process was often a back-and-forth shuffle between their editor, Google, Stack Overflow, and, more recently, AI tools like ChatGPT or Claude. Need to figure out how to implement a new feature? Hop over to Google. Stuck on a bug? Search Stack Overflow for a solution. Want to refactor some messy code? Paste the code into ChatGPT, copy the response, and manually bring it back to your editor. It was an effective process, sure, but it felt disconnected and clunky. This was just part of the daily grind—until Cursor entered the scene.

Cursor changes the game by integrating AI right into your coding environment. If you’re familiar with VS Code, Cursor feels like a natural extension of your workflow. You can bring your favorite extensions, themes, and keybindings over with a single click, so there’s no learning curve to slow you down. But what truly sets Cursor apart is its seamless integration with AI, allowing you to generate code, refactor, and get smart suggestions without ever leaving the editor. The days of copying and pasting between ChatGPT and your codebase are over. Need a new function? Just describe what you want right in the text editor, and Cursor’s AI takes care of the rest, directly in your workspace.

Before Cursor, developers had to work in silos, jumping between platforms to get assistance. Now, with AI embedded in the code editor, it’s all there at your fingertips. Whether it’s reviewing documentation, getting code suggestions, or automatically updating an outdated method, Cursor brings everything together in one place. No more wasting time switching tabs or manually copying over solutions. It’s like having AI superpowers built into your terminal—boosting productivity and cutting out unnecessary friction.

The real icing on the cake? Cursor’s commitment to privacy. Your code is safe, and you can even use your own API key to keep everything under control. It’s no surprise that developers are calling Cursor a game changer. It’s not just another tool in your stack; it’s a workflow revolution. Cursor takes what used to be a disjointed process and turns it into a smooth, efficient, and AI-driven experience that keeps you focused on what really matters: writing great code.

3 notes


Text

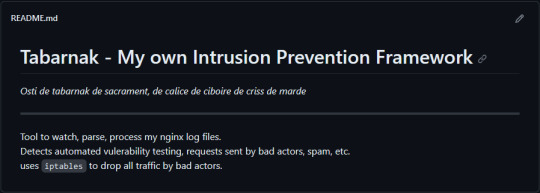

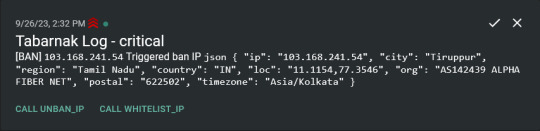

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. While I was going home by train, I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check the logs of nginx, the only service that is indirectly available to the public (more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from that source and redirected the remaining traffic to a backup NextDNS instance that I had set up previously with the same overrides and custom records as my own DNS, so that the service would have no downtime while my server cooled down. I stopped the DNS service on my server at home and then used the remaining train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions for this problem: whether to use fail2ban, whether to just add better access control, or whether to just stick with the NextDNS instance.

I ended up going with a completely different option: making a solution, that's perfectly fit for my server, myself.

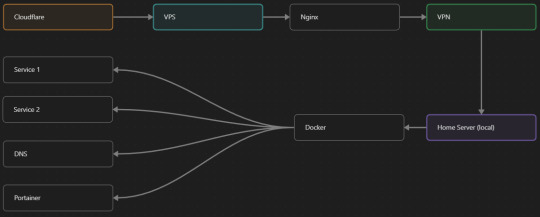

My Server Structure

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server so that I don't have to have any public-facing ports on said home server. The VPS only really acts as the public interface for the home server, with access control and logging sprinkled in throughout my configs to get more layers of security. Some services can only be interacted with through the VPN or a local connection, such that not everything is actually forwarded - only what I need/want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list: I just wrote my own log() function that would call a webhook. Sadly, the rest wouldn't be as easy.

Allowing only Cloudflare traffic

This was still doable: I only needed to add a filter in my nginx config for my domain to allow only Cloudflare IP ranges and disallow the rest. I ended up doing something slightly different. I added a new default nginx config that just returns a 404 on every route and logs access to a different file, so that I could detect connection attempts made without Cloudflare and handle them in Tabarnak myself.

Integration with AbuseIPDB

Also not yet the hard part: just call AbuseIPDB with the parsed IP and, if the abuse confidence score is within a configured threshold, flag the IP. When that happens, I receive a notification that asks me whether to whitelist or to ban the IP - I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, ban the IP unless it has been whitelisted by then.
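The flag-then-ban flow described above could look roughly like the sketch below. It is not Tabarnak's actual code: the `FlagTracker` class, its default thresholds, and the function names are assumptions made for illustration. The lookup assumes AbuseIPDB's v2 check endpoint and its `abuseConfidenceScore` field.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter

CHECK_URL = "https://api.abuseipdb.com/api/v2/check"


def abuse_score(ip: str, api_key: str, max_age_days: int = 90) -> int:
    """Ask AbuseIPDB for an IP's abuse confidence score (needs network and a key)."""
    query = urllib.parse.urlencode({"ipAddress": ip, "maxAgeInDays": max_age_days})
    request = urllib.request.Request(
        f"{CHECK_URL}?{query}",
        headers={"Key": api_key, "Accept": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["data"]["abuseConfidenceScore"]


class FlagTracker:
    """Hypothetical bookkeeping: flag suspicious IPs, ban after repeated flags."""

    def __init__(self, score_threshold: int = 50, max_flags: int = 3):
        self.score_threshold = score_threshold
        self.max_flags = max_flags
        self.flags = Counter()
        self.whitelist = set()

    def record(self, ip: str, score: int) -> str:
        """Return "allow", "flag", or "ban" for one scored request."""
        if ip in self.whitelist or score < self.score_threshold:
            return "allow"
        self.flags[ip] += 1
        return "ban" if self.flags[ip] >= self.max_flags else "flag"
```

A "flag" result is the point where a notification asking for a manual decision would be sent; adding the IP to `whitelist` before the next hit prevents the eventual ban.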

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where each request comes from, because all the real people who would actually connect to the DNS are in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (this would only be triggered after a certain number of either flags or requests), I now need to get the location. I do this by just calling the ipinfo api and checking the supplied location. To not send too many requests, I cache results (even though ipinfo should never be called twice for the same IP) and save results to a database. I made my own class that inherits from collections.UserDict which, when accessed, tries to find the entry in memory; if it can't, it searches through the DB and returns the results. This works for setting, deleting, adding and checking for records. Flags, AbuseIPDB results, whitelist entries and blacklist entries also get stored in the DB to achieve persistent state even when I restart.
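A minimal sketch of such a UserDict-backed store is shown below. It assumes SQLite as the database and JSON-serializable values; the class name `PersistentDict` and the table layout are illustrative, not taken from Tabarnak.

```python
import json
import sqlite3
from collections import UserDict


class PersistentDict(UserDict):
    """Dict that keeps entries in memory and falls back to SQLite on a miss."""

    def __init__(self, path: str = ":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS records (key TEXT PRIMARY KEY, value TEXT)"
        )
        super().__init__()

    def __missing__(self, key):
        # Called by UserDict.__getitem__ when the key is not in memory.
        row = self.db.execute(
            "SELECT value FROM records WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            raise KeyError(key)
        self.data[key] = json.loads(row[0])  # cache for later lookups
        return self.data[key]

    def __setitem__(self, key, value):
        self.data[key] = value
        with self.db:  # commits on success
            self.db.execute(
                "INSERT OR REPLACE INTO records (key, value) VALUES (?, ?)",
                (key, json.dumps(value)),
            )

    def __delitem__(self, key):
        self.data.pop(key, None)
        with self.db:
            self.db.execute("DELETE FROM records WHERE key = ?", (key,))

    def __contains__(self, key):
        if key in self.data:
            return True
        try:
            self.__missing__(key)
        except KeyError:
            return False
        return True
```

With a file path instead of ":memory:", entries written before a restart are found again through the `__missing__` fallback.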

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't just end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I could just parse the configs and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, alongside whether that location is absolute (preceded by an =) or relative. After I get the locations, I can test the requested route against the valid routes and get back whether the request was made to a valid URL (I can't just look for 404 return codes here, because some valid pages return a 404 on purpose). I also parse the request method from the logs and match the received method against the HTTP standard request methods (which cover all methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"

I probably overcomplicated this - by a lot - but I can't go back in time to change what I did.
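
The route and method checks described above could look roughly like this. It is a sketch under assumptions, not the actual Tabarnak code: the regular expressions, function names and the decision to skip regex locations (~ and ~*) are all illustrative choices.

```python
import re

# "location [modifier] path {"; the "=" modifier marks an exact match.
LOCATION_RE = re.compile(r"location\s+(?:(=|~\*|~|\^~)\s+)?(\S+)\s*\{")
# Request field of the combined log format: "METHOD path HTTP/x.y"
REQUEST_RE = re.compile(r'"(\S+) (\S+) HTTP/\d(?:\.\d)?"')
STANDARD_METHODS = {
    "GET", "HEAD", "POST", "PUT", "DELETE",
    "CONNECT", "OPTIONS", "TRACE", "PATCH",
}


def parse_locations(config_text):
    """Return (path, is_exact) for every exact or prefix location block."""
    locations = []
    for modifier, path in LOCATION_RE.findall(config_text):
        if modifier in ("~", "~*"):
            continue  # regex locations are out of scope for this sketch
        locations.append((path, modifier == "="))
    return locations


def is_valid_route(route, locations):
    """Check a requested route against the known locations."""
    path = route.split("?", 1)[0]  # ignore the query string
    for location, exact in locations:
        if exact and path == location:
            return True
        if not exact and path.startswith(location):
            return True
    return False


def is_suspicious(log_line, locations):
    """Flag lines with a malformed request, odd method or unknown route."""
    match = REQUEST_RE.search(log_line)
    if match is None:
        return True  # the request field is not an HTTP request line at all
    method, route = match.groups()
    return method not in STANDARD_METHODS or not is_valid_route(route, locations)
```

A log line like the one above has no "METHOD path HTTP/x.y" request field, so it gets flagged before any route is looked up. One caveat of prefix matching: a catch-all "location /" block makes every route look valid, so a config like that would need extra handling.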

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread, which watches a FIFO file that is created on startup. I can append commands to the FIFO file, which are mapped to the functions they are supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP. Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that appends the commands to the file on the VPS. That also means that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user friendly - even I don't like to curl and sign my requests manually. So I integrated logging to my self-hosted instance of https://ntfy.sh and added action buttons that would send the request for me. All of this just because I refused to use sockets.
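
The FIFO reader and command map could be sketched like this. The "command ip" line format, the function names and the reopen-on-EOF loop are assumptions for illustration; os.mkfifo is only available on POSIX systems.

```python
import os
import threading


def handle_line(line, commands):
    """Dispatch one 'command ip' line; return True if a command ran."""
    parts = line.split()
    if len(parts) != 2 or parts[0] not in commands:
        return False  # ignore malformed or unknown commands
    commands[parts[0]](parts[1])
    return True


def watch_fifo(path, commands):
    """Block on the FIFO and dispatch every line written to it."""
    if not os.path.exists(path):
        os.mkfifo(path)
    while True:
        # open() blocks until a writer connects. Iteration ends when the
        # writer closes its end, so the FIFO is reopened for the next one.
        with open(path) as fifo:
            for line in fifo:
                handle_line(line, commands)


def start_watcher(path, commands):
    """Run the FIFO reader in a daemon side thread."""
    thread = threading.Thread(
        target=watch_fifo, args=(path, commands), daemon=True
    )
    thread.start()
    return thread
```

With a map such as {"whitelist": whitelist_ip, "blacklist": blacklist_ip} (both hypothetical functions), writing "blacklist 203.0.113.7" to the FIFO from a shell or from the API endpoint would call blacklist_ip("203.0.113.7") in the side thread.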

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I can finally breathe again. I may have overcomplicated this, but I don't mind. This was a really fun experience to write something new and learn more about log parsing and processing. Tabarnak probably won't last forever and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing this. Of course I could have just used fail2ban, but I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum, so just imagine that I added cool stuff) and I never would've learned what I actually did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)


The Ultimate Guide to Web Development

In today’s digital age, having a strong online presence is crucial for individuals and businesses alike. Whether you’re a seasoned developer or a newcomer to the world of coding, mastering the art of web development opens up a world of opportunities. In this comprehensive guide, we’ll delve into the intricate world of web development, exploring the fundamental concepts, tools, and techniques needed to thrive in this dynamic field. Join us on this journey as we unlock the secrets to creating stunning websites and robust web applications.

Understanding the Foundations

At the core of every successful website lies a solid foundation built upon key principles and technologies. The Ultimate Guide to Web Development begins with an exploration of HTML, CSS, and JavaScript — the building blocks of the web. HTML provides the structure, CSS adds style and aesthetics, while JavaScript injects interactivity and functionality. Together, these three languages form the backbone of web development, empowering developers to craft captivating user experiences.

Collaborating with a Software Development Company in the USA

For businesses looking to build robust web applications or enhance their online presence, collaborating with a software development company in the USA can be invaluable. These companies offer expertise in a wide range of technologies and services, from custom software development to web design and digital marketing. By partnering with a reputable company, businesses can access the skills and resources needed to bring their vision to life and stay ahead of the competition in today’s digital landscape.

Exploring the Frontend

Once you’ve grasped the basics, it’s time to delve deeper into the frontend realm. From responsive design to user interface (UI) development, there’s no shortage of skills to master. CSS frameworks like Bootstrap and Tailwind CSS streamline the design process, allowing developers to create visually stunning layouts with ease. Meanwhile, JavaScript libraries and frameworks such as React, Angular, and Vue.js empower developers to build dynamic and interactive frontend experiences.

Embracing Backend Technologies

While the frontend handles the visual aspect of a website, the backend powers its functionality behind the scenes. In this section of The Ultimate Guide to Web Development, we explore the world of server-side programming and database management. Popular backend technologies such as Python, Node.js, and Ruby on Rails enable developers to create robust server-side applications, while databases such as MySQL, MongoDB, and PostgreSQL store and retrieve data efficiently.

Mastering Full-Stack Development

With a solid understanding of both frontend and backend technologies, aspiring developers can embark on the journey of full-stack development. Combining the best of both worlds, full-stack developers possess the skills to build end-to-end web solutions from scratch. Whether it’s creating RESTful APIs, integrating third-party services, or optimizing performance, mastering full-stack development opens doors to endless possibilities in the digital landscape.

Optimizing for Performance and Accessibility

In today’s fast-paced world, users expect websites to load quickly and perform seamlessly across all devices. As such, optimizing performance and ensuring accessibility are paramount considerations for web developers. From minimizing file sizes and leveraging caching techniques to adhering to web accessibility standards such as WCAG (Web Content Accessibility Guidelines), every aspect of development plays a crucial role in delivering an exceptional user experience.

Staying Ahead with Emerging Technologies

The field of web development is constantly evolving, with new technologies and trends emerging at a rapid pace. In this ever-changing landscape, staying ahead of the curve is essential for success. Whether it’s adopting progressive web app (PWA) technologies, harnessing the power of machine learning and artificial intelligence, or embracing the latest frontend frameworks, keeping abreast of emerging technologies is key to maintaining a competitive edge.

Collaborating with a Software Development Company in the USA

For businesses looking to elevate their online presence, partnering with a reputable software development company in the USA can be a game-changer. With a wealth of experience and expertise, these companies offer tailored solutions to meet the unique needs of their clients. Whether it’s custom web development, e-commerce solutions, or enterprise-grade applications, collaborating with a trusted partner helps ensure smooth execution and strong results.

Conclusion: Unlocking the Potential of Web Development

As we conclude our journey through The Ultimate Guide to Web Development, it’s clear that mastering the art of web development is more than just writing code — it’s about creating experiences that captivate and inspire. Whether you’re a novice coder or a seasoned veteran, the world of web development offers endless opportunities for growth and innovation. By understanding the fundamental principles, embracing emerging technologies, and collaborating with industry experts, you can unlock the full potential of web development and shape the digital landscape for years to come.


The Dynamic Role of Full Stack Developers in Modern Software Development

Introduction: In the rapidly evolving landscape of software development, full stack developers have emerged as indispensable assets, seamlessly bridging the gap between front-end and back-end development. Their versatility and expertise enable them to oversee the entire software development lifecycle, from conception to deployment. In this insightful exploration, we'll delve into the multifaceted responsibilities of full stack developers and uncover their pivotal role in crafting innovative and user-centric web applications.

Understanding the Versatility of Full Stack Developers:

Full stack developers serve as the linchpins of software development teams, blending their proficiency in front-end and back-end technologies to create cohesive and scalable solutions. Let's explore the diverse responsibilities that define their role:

End-to-End Development Mastery: At the core of full stack development lies the ability to navigate the entire software development lifecycle with finesse. Full stack developers possess a comprehensive understanding of both front-end and back-end technologies, empowering them to conceptualize, design, implement, and deploy web applications with efficiency and precision.

Front-End Expertise: On the front-end, full stack developers are entrusted with crafting engaging and intuitive user interfaces that captivate audiences. Leveraging their command of HTML, CSS, and JavaScript, they breathe life into designs, ensuring seamless navigation and an exceptional user experience across devices and platforms.

Back-End Proficiency: In the realm of back-end development, full stack developers focus on architecting the robust infrastructure that powers web applications. They leverage server-side languages and frameworks such as Node.js, Python, or Ruby on Rails to handle data storage, processing, and authentication, laying the groundwork for scalable and resilient applications.

Database Management Acumen: Full stack developers excel in database management, designing efficient schemas, optimizing queries, and safeguarding data integrity. Whether working with relational databases like MySQL or NoSQL databases like MongoDB, they implement storage solutions that align with the application's requirements and performance goals.

API Development Ingenuity: APIs serve as the conduits that facilitate seamless communication between different components of a web application. Full stack developers are adept at designing and implementing RESTful or GraphQL APIs, enabling frictionless data exchange between the front-end and back-end systems.

Testing and Quality Assurance Excellence: Quality assurance is paramount in software development, and full stack developers take on the responsibility of testing and debugging web applications. They devise and execute comprehensive testing strategies, identifying and resolving issues to ensure the application meets stringent performance and reliability standards.

Deployment and Maintenance Leadership: As the custodians of web applications, full stack developers oversee deployment to production environments and ongoing maintenance. They monitor performance metrics, address security vulnerabilities, and implement updates and enhancements to ensure the application remains robust, secure, and responsive to user needs.

Conclusion: Full stack developers embody the essence of versatility and innovation in modern software development. Their ability to seamlessly navigate both front-end and back-end technologies enables them to craft sophisticated and user-centric web applications that drive business growth and enhance user experiences. As technology continues to evolve, full stack developers will remain at the forefront of digital innovation, shaping the future of software development with their ingenuity and expertise.

#full stack course#full stack developer#full stack software developer#full stack training#full stack web development


Email to SMS Gateway

Ejointech's Email to SMS Gateway bridges the gap between traditional email and instant mobile communication, empowering you to reach your audience faster and more effectively than ever before. Our innovative solution seamlessly integrates with your existing email client, transforming emails into instant SMS notifications with a single click.

Why Choose Ejointech's Email to SMS Gateway?

Instant Delivery: Cut through the email clutter and get your messages seen and answered sooner. SMS is delivered almost instantly, maximizing engagement and driving results.

Effortless Integration: No need to switch platforms or disrupt your workflow. Send SMS directly from your familiar email client, streamlining communication and saving valuable time.

Seamless Contact Management: Leverage your existing email contacts for SMS communication, eliminating the need for separate lists and simplifying outreach.

Two-Way Communication: Receive SMS replies directly in your email inbox, fostering a convenient and efficient dialogue with your audience.

Unlocking Value for Businesses:

Cost-Effectiveness: Eliminate expensive hardware and software investments. Our cloud-based solution delivers reliable SMS communication at a fraction of the cost.

Enhanced Customer Engagement: Deliver timely appointment reminders, delivery updates, and promotional campaigns via SMS, boosting customer satisfaction and loyalty.

Improved Operational Efficiency: Automate SMS notifications and bulk messaging, freeing up your team to focus on core tasks.

Streamlined Workflow: Integrate with your CRM or other applications for automated SMS communication, streamlining processes and maximizing productivity.

Ejointech's Email to SMS Gateway Features:

Powerful API: Integrate seamlessly with your existing systems for automated and personalized SMS communication.

Wholesale SMS Rates: Enjoy competitive pricing for high-volume campaigns, ensuring cost-effective outreach.

Bulk SMS Delivery: Send thousands of personalized messages instantly, perfect for marketing alerts, notifications, and mass communication.

Detailed Delivery Reports: Track message delivery and campaign performance with comprehensive reporting tools.

Robust Security: Rest assured that your data and communications are protected with industry-leading security measures.

Ejointech: Your Trusted Partner for Email to SMS Success

With a proven track record of excellence and a commitment to customer satisfaction, Ejointech is your ideal partner for implementing an effective Email to SMS strategy. Our dedicated team provides comprehensive support and guidance, ensuring you get the most out of our solution.

Ready to experience the power of instant communication? Contact Ejointech today and discover how our Email to SMS Gateway can transform the way you connect with your audience.

#bulk sms#ejointech#sms marketing#sms modem#sms gateway#ejoin sms gateway#ejoin sms#sms gateway hardware#email to sms gateway


What is Solr – Comparing Apache Solr vs. Elasticsearch

In the world of search engines and data retrieval systems, Apache Solr and Elasticsearch are two prominent contenders, each with its strengths and unique capabilities. These open-source, distributed search platforms play a crucial role in empowering organizations to harness the power of big data and deliver relevant search results efficiently. In this blog, we will delve into the fundamentals of Solr and Elasticsearch, highlighting their key features and comparing their functionalities. Whether you're a developer, data analyst, or IT professional, understanding the differences between Solr and Elasticsearch will help you make informed decisions to meet your specific search and data management needs.

Overview of Apache Solr

Apache Solr is a search platform built on top of the Apache Lucene library, known for its robust indexing and full-text search capabilities. It is written in Java and designed to handle large-scale search and data retrieval tasks. Solr follows a RESTful API approach, making it easy to integrate with different programming languages and frameworks. It offers a rich set of features, including faceted search, hit highlighting, spell checking, and geospatial search, making it a versatile solution for various use cases.

Overview of Elasticsearch

Elasticsearch, also based on Apache Lucene, is a distributed search engine that stands out for its real-time data indexing and analytics capabilities. It is known for its scalability and speed, making it an ideal choice for applications that require near-instantaneous search results. Elasticsearch provides a simple RESTful API, enabling developers to perform complex searches effortlessly. Moreover, it offers support for data visualization through its integration with Kibana, making it a popular choice for log analysis, application monitoring, and other data-driven use cases.

Comparing Solr and Elasticsearch

Data Handling and Indexing

Both Solr and Elasticsearch are proficient at handling large volumes of data and offer excellent indexing capabilities. Solr accepts documents in several formats, including XML, JSON, and CSV, while Elasticsearch works with JSON throughout, which many developers find easier to read and work with. Elasticsearch's dynamic mapping feature allows it to automatically infer data types during indexing, streamlining the process further.
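
To make the idea of dynamic mapping concrete, here is a toy illustration in Python of how field types can be inferred from a JSON document. It is not Elasticsearch's implementation: it ignores date detection, the keyword sub-field that string fields normally get, and nested arrays, and the function name is made up for this sketch.

```python
import json

# JSON scalar type -> field type, following the default dynamic mapping rules.
SCALAR_TYPES = {bool: "boolean", int: "long", float: "float", str: "text"}


def infer_mapping(document):
    """Infer a simplified field mapping from one parsed JSON document."""
    mapping = {}
    for field, value in document.items():
        if isinstance(value, list):
            # An array takes the type of its first non-null element.
            value = next((item for item in value if item is not None), None)
        if value is None:
            continue  # null values do not create a field
        if isinstance(value, dict):
            mapping[field] = {"properties": infer_mapping(value)}
        else:
            mapping[field] = {"type": SCALAR_TYPES[type(value)]}
    return mapping


doc = json.loads('{"title": "Search basics", "views": 42, "tags": ["solr", "lucene"]}')
print(json.dumps(infer_mapping(doc), indent=2))
```

The point of the sketch is only that no schema has to be written before the first document is indexed; the types follow from the JSON values themselves.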

Querying and Searching

Both platforms support complex search queries, but Elasticsearch is often regarded as more developer-friendly due to its clean and straightforward API. Elasticsearch's support for nested queries and aggregations simplifies the process of retrieving and analyzing data. On the other hand, Solr provides a range of query parsers, allowing developers to choose between traditional and advanced syntax options based on their preference and familiarity.

Scalability and Performance

Elasticsearch is designed with scalability in mind from the ground up, making it relatively easier to scale horizontally by adding more nodes to the cluster. It excels in real-time search and analytics scenarios, making it a top choice for applications with dynamic data streams. Solr, while also scalable, may require more effort for horizontal scaling compared to Elasticsearch.

Community and Ecosystem

Both Solr and Elasticsearch boast active and vibrant open-source communities. Solr has been around longer and has a long-established user base and ecosystem. Elasticsearch, however, has gained significant momentum over the years, supported by the Elastic Stack, which includes Kibana for data visualization and Beats for data shipping.

Document-Based vs. Schema-Free

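Both engines store data as documents made up of fields; the difference lies in how field types are defined. Solr traditionally relies on a predefined schema, although it also offers a schemaless mode that guesses field types. While a predefined schema provides better control over data, it may become restrictive when dealing with dynamic or constantly evolving data structures. Elasticsearch is often described as schema-free because dynamic mapping creates field mappings automatically, although explicit mappings can still be defined when more control is needed. This makes it well suited to projects with varying data structures.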

Conclusion

In summary, Apache Solr and Elasticsearch are both powerful search platforms, each excelling in specific scenarios. Solr's robustness and established ecosystem make it a reliable choice for traditional search applications, while Elasticsearch's real-time capabilities and seamless integration with the Elastic Stack are perfect for modern data-driven projects. Choosing between the two depends on your specific requirements, data complexity, and preferred development style. Regardless of your decision, both Solr and Elasticsearch can supercharge your search and analytics endeavors, bringing efficiency and relevance to your data retrieval processes.

Whether you opt for Solr, Elasticsearch, or a combination of both, the future of search and data exploration remains bright, with technology continually evolving to meet the needs of next-generation applications.


DMT API Provider

Introduction

Are you looking for a reliable DMT (Domestic Money Transfer) API provider to help your business grow? Look no further than Rainet Technology. As a leading provider of DMT API services, we have the expertise and experience to help your business succeed in today's competitive market. Our commitment to providing high-quality services at an affordable cost has made us the go-to choice for businesses of all sizes. In this article, we will explore who Rainet Technology is, how we can help your business, and why we are the best DMT API provider on the market. So sit back, relax, and let us show you why Rainet Technology is the right choice for your business needs.

Who is Rainet Technology?

Rainet Technology is a leading technology company that specializes in providing DMT API services to businesses. With years of experience in the industry, Rainet Technology has established itself as a trusted and reliable partner for businesses looking to integrate DMT API into their operations.

At Rainet Technology, we pride ourselves on our team of highly skilled professionals who are dedicated to delivering exceptional service to our clients. Our team comprises experts in the field of technology and finance, who work tirelessly to ensure that our clients receive the best possible solutions for their business needs.

We understand that every business is unique, which is why we take a personalized approach to each project we undertake. We work closely with our clients to understand their specific requirements and tailor our services accordingly. This approach has helped us build long-lasting relationships with our clients and establish ourselves as a leader in the industry.

How can Rainet Technology help your business?

Rainet Technology can help your business in numerous ways. Our DMT API services are designed to simplify and streamline your business operations, making it easier for you to manage your finances and transactions. With our advanced technology and user-friendly interface, you can easily integrate our DMT API into your existing systems and start sending money instantly.

Our DMT API services are highly secure and reliable, ensuring that all transactions are processed quickly and accurately. We understand the importance of timely payments in today's fast-paced business environment, which is why we have developed a system that guarantees instant money transfers.

Moreover, our team of experts is always available to provide support and assistance whenever you need it. Whether you have questions about our services or need help resolving an issue, we are here to help you every step of the way.

By choosing Rainet Technology as your DMT API provider, you can rest assured that your business will benefit from cutting-edge technology, top-notch security measures, and exceptional customer service.

Why Rainet Technology is the best DMT API Provider?

Rainet Technology is the best DMT API Provider for several reasons. Firstly, we have a team of experienced developers who are well-versed in creating APIs that cater to the specific needs of our clients. We understand that every business has unique requirements, and we strive to provide customized solutions that meet those requirements.

Secondly, our APIs are designed to be user-friendly and easy to integrate into your existing systems. We believe that technology should make your life easier, not more complicated. That's why we ensure that our APIs are intuitive and straightforward to use.

Thirdly, we offer round-the-clock support to our clients. We understand that technical issues can arise at any time, and it's essential to have a reliable support team on hand to help you resolve them quickly. Our support team is always available to answer your queries and provide assistance whenever you need it.

Lastly, we are committed to providing our services at an affordable cost. We believe that technology should be accessible to everyone, regardless of their budget. That's why we offer competitive pricing without compromising on the quality of our services.

In conclusion, Rainet Technology is the best DMT API Provider because of our experienced team, user-friendly APIs, round-the-clock support, and affordable pricing. If you're looking for a reliable partner for your API needs, look no further than Rainet Technology.

Choose Rainet Technology Today.

When it comes to choosing the right DMT API provider for your business, there are many options available in the market. However, Rainet Technology stands out from the rest with its exceptional services and customer support. Choosing Rainet Technology means choosing a reliable partner that will help you achieve your business goals.

With years of experience in the industry, we have gained a reputation for providing top-notch DMT API services to our clients. Our team of experts is dedicated to delivering customized solutions that cater to your specific business needs. We understand that every business is unique, and therefore, we offer personalized solutions that align with your requirements.

At Rainet Technology, we believe in building long-term relationships with our clients. We work closely with you to understand your business objectives and provide solutions that help you achieve them. With our state-of-the-art infrastructure and advanced technology, we ensure seamless integration of our DMT API services into your existing systems.

Choosing Rainet Technology as your DMT API provider means choosing quality, reliability, and affordability. We take pride in offering our services at competitive prices without compromising on quality. So why wait? Choose Rainet Technology today and take your business to new heights!

We provide our service at an affordable cost.

At Rainet Technology, we understand that affordability is a major concern for businesses of all sizes. That's why we offer our DMT API services at an affordable cost without compromising on the quality of our services. We believe that every business should have access to reliable and efficient DMT API services, regardless of their budget.

Our pricing model is transparent and straightforward. We don't believe in hidden fees or surprise charges. Our team works closely with clients to understand their specific needs and provide customized solutions that fit within their budget. We are committed to providing high-quality services at a reasonable cost.

Choosing Rainet Technology as your DMT API provider means you can enjoy the benefits of our expertise and experience without breaking the bank. We take pride in offering affordable solutions that help businesses grow and succeed. Contact us today to learn more about our pricing options and how we can help your business thrive.

Conclusion

In conclusion, Rainet Technology is a DMT API provider that can help your business grow and succeed. With our expertise in the field of technology and our commitment to providing high-quality services, you can be assured of capable support for your business. Our affordable pricing means you get good value for your money without compromising on quality. So, if you're looking for a reliable and trustworthy partner to help you with your DMT API needs, look no further than Rainet Technology. Choose us today and experience the difference we can make for your business!

Visit Website: https://rainet.co.in/domestic-money-transfer.php

#domestic money transfer agency#money transfer portal#money transfer software#domestic money transfer#money transfer services#money transfer api#international money transfer app#money transfer app for business#best money transfer app for business#domestic money transfer portal development company in Noida#api service


Build A Smarter Security Chatbot With Amazon Bedrock Agents

Use a chatbot built on Amazon Security Lake and Amazon Bedrock for incident investigation. This post shows how to set up a security chatbot that uses an Amazon Bedrock agent, combining pre-existing playbooks with a serverless backend and a GUI, to investigate or respond to security incidents. The chatbot uses purpose-built Amazon Bedrock agents to address security questions posed in natural language. The solution uses a single graphical user interface (GUI) to communicate directly with the Amazon Bedrock agent, which builds and runs SQL queries or consults internal incident response playbooks for security problems.

Users submit queries through the React UI.

Note: This approach does not integrate authentication into the React UI. Add authentication capabilities that meet your company's security standards. AWS Amplify UI and Amazon Cognito can add authentication.

An Amazon API Gateway REST API invokes the Invoke Agent AWS Lambda function to handle each user query.

The Lambda function passes the query to the Amazon Bedrock agent.

Amazon Bedrock (using Claude 3 Sonnet from Anthropic) processes the inquiry and chooses between querying Security Lake through Amazon Athena and retrieving playbook data.

For questions about the playbooks:

The Amazon Bedrock agent queries the playbooks knowledge base and returns relevant results.

For Security Lake data queries:

The Amazon Bedrock agent retrieves the Security Lake table schemas from the schema knowledge base to produce SQL queries.

When the Amazon Bedrock agent calls the SQL query action from the action group, the generated SQL query is passed along with the call.

The action group calls the Execute SQL on Athena Lambda function to run the query on Athena and return the results to the Amazon Bedrock agent.

After retrieving results from the action group or the knowledge base:

The Amazon Bedrock agent uses the collected data to create the final answer and returns it to the Invoke Agent Lambda function.

The Lambda function uses an API Gateway WebSocket API to return the response to the client.

API Gateway returns the response to the React UI via WebSocket.

The chat interface displays the agent's response.
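
The core of the Invoke Agent step can be sketched as follows. This is an illustration rather than the sample solution's actual Lambda code: the function name and parameters are assumptions, and the client is expected to be a boto3 "bedrock-agent-runtime" client, whose invoke_agent call returns the answer as a stream of chunk events.

```python
import uuid


def ask_agent(client, agent_id, agent_alias_id, question, session_id=None):
    """Send one question to a Bedrock agent and return the assembled answer."""
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        # Reusing a session ID lets the agent keep conversation context.
        sessionId=session_id or str(uuid.uuid4()),
        inputText=question,
    )
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")  # other event types (e.g. traces) are skipped
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)
```

Inside a Lambda function this would be called with client = boto3.client("bedrock-agent-runtime") and the agent and alias IDs of the deployed agent. Passing the client in as a parameter keeps the helper easy to test with a stub.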

Requirements

Before deploying the example solution, complete the following requirements:

Select an administrator account to manage the Security Lake configuration for each member account in AWS Organizations. Configure Security Lake with the necessary log sources: Amazon Route 53, Security Hub, CloudTrail, and VPC Flow Logs.

Set up subscriber query access between the source Security Lake AWS account and the subscriber AWS account.

Accept the resource share request in AWS RAM for the subscriber AWS account.

Create a database link in AWS Lake Formation in the subscriber AWS account and grant access to the Security Lake Athena tables.

Enable access to Anthropic's Claude 3 model for Amazon Bedrock in the subscriber AWS account where you'll build the solution. Using a model before enabling it in your AWS account results in an error.

Once the requirements are met, the sample solution deploys these resources:

An Amazon CloudFront distribution backed by Amazon S3.

A static website for the chatbot UI, hosted on Amazon S3.

API Gateway APIs that invoke the Lambda functions.

A Lambda function that invokes the Amazon Bedrock agent.

An Amazon Bedrock agent with knowledge bases.

An Athena SQL query action group for the Amazon Bedrock agent.

An Amazon Bedrock knowledge base with example Athena table schemas for Security Lake. Sample table schemas improve SQL query generation for table fields in Security Lake, even though the Amazon Bedrock agent can also retrieve schema data from the Athena database.

An Amazon Bedrock knowledge base that holds pre-existing incident response playbooks. The Amazon Bedrock agent can suggest investigation or response steps based on playbooks approved by your company.

Cost

Before installing the sample solution and working through this tutorial, make sure you understand the AWS service costs. The cost of using Amazon Bedrock and Athena to query Security Lake depends on the amount of data.

Security Lake cost depends on the volume of AWS log and event data ingested. Other AWS services that Security Lake uses are charged separately; see the pricing details for Amazon S3, AWS Glue, EventBridge, Lambda, SQS, and SNS.

Amazon Bedrock on-demand pricing depends on the number of input and output tokens and on the large language model (LLM) used. A token is a sequence of a few characters that the model uses to interpret user input and instructions. See Amazon Bedrock pricing for additional details.
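As a rough illustration of token-based pricing, the arithmetic looks like this. The per-1,000-token rates below are placeholders, not actual Amazon Bedrock prices, and the function name is hypothetical. Check the pricing page for your model and Region.

```python
def bedrock_on_demand_cost(input_tokens, output_tokens,
                           usd_per_1k_input, usd_per_1k_output):
    """Estimate the on-demand charge (USD) for one model invocation."""
    input_cost = (input_tokens / 1000) * usd_per_1k_input
    output_cost = (output_tokens / 1000) * usd_per_1k_output
    return input_cost + output_cost


# Placeholder rates for illustration only; they are not real prices.
cost = bedrock_on_demand_cost(2000, 500,
                              usd_per_1k_input=0.003,
                              usd_per_1k_output=0.015)
print(f"Estimated cost: ${cost:.4f}")
```

Input and output tokens are priced separately, so a long agent prompt (instructions, schemas, and playbook excerpts) can cost more than the answer it produces.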

The SQL queries that the Amazon Bedrock agent generates are run on Athena. Athena's cost depends on how much Security Lake data each query scans. See Athena pricing for details.
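To make the link between a query and its scan cost concrete, here is a minimal sketch that runs a query, waits for it to finish, and reads the bytes scanned. The `athena` argument is assumed to be a boto3 Athena client. The function names, the per-terabyte rate, and the output location are hypothetical. The estimate ignores per-query minimums and rounding, so treat it as an approximation.

```python
import time


def run_athena_query(athena, sql, database, output_location, poll_seconds=1.0):
    """Run a query, wait for it to finish, and return (rows, bytes_scanned)."""
    started = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]
    # Poll until the query reaches a terminal state.
    while True:
        execution = athena.get_query_execution(
            QueryExecutionId=query_id)["QueryExecution"]
        state = execution["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query ended in state {state}")
    # Only the first page of results is fetched in this sketch.
    rows = athena.get_query_results(
        QueryExecutionId=query_id)["ResultSet"]["Rows"]
    return rows, execution["Statistics"]["DataScannedInBytes"]


def scan_cost_usd(bytes_scanned, usd_per_tb):
    """Convert bytes scanned into an estimated charge at an assumed rate."""
    return bytes_scanned / 1024**4 * usd_per_tb
```

Because cost follows the amount of data scanned, queries that filter on partition columns or select fewer columns are generally cheaper than broad scans.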

Clean up

If you launched the security chatbot example solution using the Launch Stack button in the console with the CloudFormation template security_genai_chatbot_cfn, clean up as follows:

Choose the Security GenAI Chatbot stack in CloudFormation for the account and region where the solution was installed.

Choose “Delete the stack”.

If you deployed the solution using AWS CDK, run cdk destroy --all.
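The console steps above can also be scripted. The following is a minimal sketch, assuming a boto3 CloudFormation client is passed in. The function name is hypothetical, and the stack name must be whatever name your deployment actually used.

```python
def delete_stack_and_wait(cloudformation, stack_name):
    """Delete a CloudFormation stack and block until deletion completes."""
    cloudformation.delete_stack(StackName=stack_name)
    # The built-in waiter polls until the stack is gone or deletion fails.
    waiter = cloudformation.get_waiter("stack_delete_complete")
    waiter.wait(StackName=stack_name)
    return stack_name
```

Create the client in the same account and Region where the solution was installed, for example with `boto3.client("cloudformation", region_name=...)`.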

Conclusion

The sample solution illustrates how task-oriented Amazon Bedrock agents and natural language input can improve security operations and speed up investigation and analysis. It is a prototype with an Amazon Bedrock agent-driven user interface. The approach can be extended with additional task-oriented agents, each with its own model, knowledge bases, and instructions. Wider use of AI-powered agents can help your AWS security team perform better across several domains.

The chatbot's backend queries data that Security Lake has normalised into the Open Cybersecurity Schema Framework (OCSF).

#securitychatbot#AmazonBedrockagents#graphicaluserinterface#Bedrockagent#chatbot#chatbotsecurity#Technology#TechNews#technologynews#news#govindhtech

0 notes