#SET options in SQL


Optimizing SQL Server Plan Cache: Strategies for Managing SET Option Variance

In the realm of SQL Server management, ensuring optimal performance often involves meticulous examination and tuning of the plan cache. This cache, a crucial component for executing queries efficiently, can become cluttered with numerous execution plans, particularly when queries spam the cache due to varying SET options. Consequently, this article delves into practical T-SQL code examples and…


#Identifying spamming queries#Plan Cache management#query optimization#SET options in SQL#SQL Server performance


The Story of KLogs: What happens when a Mechanical Engineer codes

Since i no longer work at Warehouse Automation Startup (WAS for short) and haven't for many years i feel as though i should recount the tale of the most bonkers program i ever wrote, but we need to establish some background

WAS has its HQ very far away from the big customer site and i worked as a Field Service Engineer (FSE) on site. so i learned early on that if a problem needed to be solved fast, WE had to do it. we never got many updates on what was coming down the pipeline for us or what issues were being worked on. this made us very independent

As such, we got good at reading the robot logs ourselves. it took too much time to send the logs off to HQ for analysis and get back what the problem was. we can read. now GETTING the logs is another thing.

the early robots we cut our teeth on used 2.4 GHz wifi to communicate with FSEs so dumping the logs was as simple as pushing a button in a little application and it would spit out a txt file

later on our robots were upgraded to use a 2.4 mHz xbee radio to communicate with us. which was FUCKING SLOW. and log dumping became a much more tedious process. you had to connect, go to logging mode, and then the robot would vomit all the logs in the past 2 min OR the entirety of its memory bank (only 2 options) into a terminal window. you would then save the terminal window and open it in a text editor to read them. it could take up to 5 min to dump the entire log file and if you didnt dump fast enough, the ACK messages from the control server would fill up the logs and erase the error as the memory overwrote itself.

this missing logs problem was a Big Deal for software who now weren't getting every log from every error so a NEW method of saving logs was devised: the robot would just vomit the log data in real time over a DIFFERENT radio and we would save it to a KQL server. Thanks Daddy Microsoft.

now whats KQL you may be asking. why, its Microsofts very own SQL clone! its Kusto Query Language. never mind that the system uses a SQL database for daily operations. lets use this proprietary Microsoft thing because they are paying us

so yay, problem solved. we now never miss the logs. so how do we read them if they are split up line by line in a database? why with a query of course!

select * from tbLogs where RobotUID = [64CharLongString] and timestamp > [UnixTimeCode]

if this makes no sense to you, CONGRATULATIONS! you found the problem with this setup. Most FSE's were BAD at SQL which meant they didnt read logs anymore. If you do understand what the query is, CONGRATULATIONS! you see why this is Very Stupid.

You could not search by robot name. each robot had some arbitrarily assigned 64 character long string as an identifier and the timestamps were not set to local time. so you had to run a lookup query to find the right name and do some time zone math to figure out what part of the logs to read. oh yeah and you had to download KQL to view them. so now we had both SQL and KQL on our computers
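To make that lookup and time zone math concrete, here is a minimal sketch of what every FSE had to do by hand. The table and column names come from the query above; `ROBOT_UIDS`, `local_to_unix`, `build_log_query`, and the robot name are hypothetical stand-ins, and the real system's lookup and timestamp format may well have differed.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical name-to-UID lookup; the real mapping lived in the database.
ROBOT_UIDS = {"Robot-12": "a" * 64}


def local_to_unix(local_str, utc_offset_hours):
    """Convert a local 'YYYY-MM-DD HH:MM' time to a Unix timestamp."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    local = datetime.strptime(local_str, "%Y-%m-%d %H:%M").replace(tzinfo=tz)
    return int(local.timestamp())


def build_log_query(robot_name, local_str, utc_offset_hours):
    """Fill in the two values the query needs: the UID and the timestamp."""
    uid = ROBOT_UIDS[robot_name]
    start = local_to_unix(local_str, utc_offset_hours)
    # String formatting is for illustration only; real code should use
    # parameterized queries.
    return (
        f"select * from tbLogs where RobotUID = '{uid}' "
        f"and timestamp > {start}"
    )


query = build_log_query("Robot-12", "2021-01-01 00:00", -5)
```

Two lookups and one offset calculation is not much, but doing it by hand for every error is exactly the friction described here.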

NOBODY in the field liked this.

But Daddy Microsoft comes to the rescue

see we didnt JUST get KQL with part of that deal. we got the entire Microsoft cloud suite. and some people (like me) had been automating emails and stuff with Power Automate

This is Microsoft Power Automate. its Microsoft's version of Scratch but it has hooks into everything Microsoft. SharePoint, Teams, Outlook, Excel, it can integrate with all of it. i had been using it to send an email once a day with a list of all the robots in maintenance.

this gave me an idea

and i checked

and Power Automate had hooks for KQL

KLogs is actually short for Kusto Logs

I did not know how to program in Power Automate but damn it anything is better than writing KQL queries. so i got to work. and about 2 months later i had a BEHEMOTH of a Power Automate program. it lagged the webpage and many times when i tried to edit something my changes wouldn't take and i would have to click in very specific ways to ensure none of my variables were getting nuked. i dont think this was the intended purpose of Power Automate but this is what it did

the KLogger would watch a list of Teams chats and when someone typed "klogs" or pasted a copy of an ERROR message, it would spring into action.

it extracted the robot name from the message and timestamp from teams

it would look up the name in the database to find the 64 character long string UID and the location that robot was assigned to

it would reply to the message in teams saying it found a robot name and was getting logs

it would run a KQL query for the database and get the control system logs then export them into a CSV

it would save the CSV with a .xls extension into a folder in SharePoint (it would make a new folder for each day and location if it didnt have one already)

it would send ANOTHER message in teams with a LINK to the file in SharePoint

it would then enter a loop and scour the robot logs looking for the keyword ESTOP to find the error. (it did this because Kusto was SLOWER than the xbee radio and had up to a 10 min delay on syncing)

if it found the error, it would adjust its start and end timestamps to capture it and export the robot logs book-ended from the event by ~ 1 min. if it didnt, it would use the timestamp from when it was triggered +/- 5 min

it saved THOSE logs to SharePoint the same way as before

it would send ANOTHER message in teams with a link to the files

it would then check if the error was 1 of 3 very specific types of camera error. if it was, it would extract the base64 jpg image saved in KQL as a byte array, do the math to convert it, and save that as a jpg in SharePoint (and link it of course)

and then it would terminate. and if it encountered an error anywhere in all of this, i had logic where it would spit back an error message in Teams as plaintext explaining what step failed and the program would close gracefully
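The time-window step in the middle of that list is the part that is easiest to get wrong, so here is a rough sketch of it. This is not the original Power Automate flow; `pick_window` and its parameters are hypothetical, and timestamps are assumed to be Unix seconds.

```python
def pick_window(trigger_ts, log_rows, keyword="ESTOP",
                event_pad=60, fallback_pad=300):
    """Return (start, end) timestamps for the robot log export.

    log_rows is an iterable of (timestamp, message) pairs. If a row
    mentions the keyword, book-end that event by roughly one minute.
    Otherwise fall back to the trigger time plus or minus five minutes.
    """
    for ts, message in log_rows:
        if keyword in message:
            return ts - event_pad, ts + event_pad
    return trigger_ts - fallback_pad, trigger_ts + fallback_pad


rows = [(1000, "ACK"), (1030, "ESTOP triggered"), (1045, "ACK")]
found = pick_window(2000, rows)          # window around the ESTOP row
missing = pick_window(2000, [(1000, "ACK")])  # fallback window
```

The fallback matters because of the sync delay mentioned above: the error row may simply not be in the database yet when the flow runs.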

I deployed it without asking anyone at one of the sites that was struggling. i just pointed it at their chat and turned it on. it had a bit of a rocky start (spammed chat) but man did the FSE's LOVE IT.

about 6 months later software deployed their answer to reading the logs: a webpage that acted as a nice GUI to the KQL database. much better than a CSV file

it still needed you to scroll through a big drop-down of robot names and enter a timestamp, but i noticed something. all that did was just change part of the URL and refresh the webpage

SO I MADE KLOGS 2 AND HAD IT GENERATE THE URL FOR YOU AND REPLY TO YOUR MESSAGE WITH IT. (it also still did the control server and jpg stuff). Theres a non-zero chance that klogs was still in use long after i left that job
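For the curious, the URL trick is only a few lines in most languages. This is a sketch under assumptions: the base URL and the `robot` and `start` parameter names are made up, since the real page's URL format is not given here.

```python
from urllib.parse import urlencode


def build_log_url(base_url, robot_name, start_ts):
    """Build a link that opens the log viewer already filtered.

    The 'robot' and 'start' parameter names are hypothetical.
    """
    params = urlencode({"robot": robot_name, "start": start_ts})
    return f"{base_url}?{params}"


link = build_log_url("https://logs.example.com/view", "Robot 12", 1609459200)
```

`urlencode` takes care of escaping spaces and other awkward characters in the robot name, which matters when the name comes straight out of a chat message.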

now i dont recommend anyone use power automate like this. its clunky and weird. i had to make a variable called "Carrage Return" which was a blank text box that i pressed enter one time in because it was incapable of understanding \n or generating a new line in any capacity OTHER than this (thanks support forum).

im also sure this probably is giving the actual programmer people anxiety. imagine working at a company and then some rando you've never seen but only heard about as "the FSE whos really good at root causing stuff", in a department that does not do any coding, managed to, in their spare time, build and release an entire workflow piggybacking on your work without any oversight, code review, or permission.....and everyone liked it

#comet tales#lazee works#power automate#coding#software engineering#it was so funny whenever i visited HQ because i would go “hi my name is LazeeComet” and they would go “OH i've heard SO much about you”


Python Libraries to Learn Before Tackling Data Analysis

To tackle data analysis effectively in Python, it's crucial to become familiar with several libraries that streamline the process of data manipulation, exploration, and visualization. Here's a breakdown of the essential libraries:

1. NumPy

- Purpose: Numerical computing.

- Why Learn It: NumPy provides support for large multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently.

- Key Features:

- Fast array processing.

- Mathematical operations on arrays (e.g., sum, mean, standard deviation).

- Linear algebra operations.
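As a small illustration of the features listed above, the following sketch runs a few array operations. The `readings` array is made-up sample data, not something from a real dataset.

```python
import numpy as np

# Made-up 2x3 array of sample values.
readings = np.array([[1.0, 2.0, 3.0],
                     [4.0, 5.0, 6.0]])

total = readings.sum()              # sum over every element
col_means = readings.mean(axis=0)   # mean of each column
spread = readings.std()             # population standard deviation
gram = readings @ readings.T        # 2x2 matrix product (linear algebra)
```

Each call works on the whole array at once, which is where NumPy gets its speed compared with looping over Python lists.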

2. Pandas

- Purpose: Data manipulation and analysis.

- Why Learn It: Pandas offers data structures like DataFrames, making it easier to handle and analyze structured data.

- Key Features:

- Reading/writing data from CSV, Excel, SQL databases, and more.

- Handling missing data.

- Powerful group-by operations.

- Data filtering and transformation.
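A short sketch of three of these features (missing data, group-by, and filtering) might look like the following. The `region` and `sales` columns are invented sample data, and filling gaps with zero is only one of several reasonable choices.

```python
import pandas as pd

# Invented sample data with one missing sales figure.
df = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "sales": [100.0, float("nan"), 150.0, 200.0],
})

# Handle missing data by treating the gap as zero sales.
df["sales"] = df["sales"].fillna(0.0)

# Group-by: total sales per region.
by_region = df.groupby("region")["sales"].sum()

# Filtering: keep only rows with sales above 120.
big_sales = df[df["sales"] > 120]
```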

3. Matplotlib

- Purpose: Data visualization.

- Why Learn It: Matplotlib is one of the most widely used plotting libraries in Python, allowing for a wide range of static, animated, and interactive plots.

- Key Features:

- Line plots, bar charts, histograms, scatter plots.

- Customizable charts (labels, colors, legends).

- Integration with Pandas for quick plotting.

4. Seaborn

- Purpose: Statistical data visualization.

- Why Learn It: Built on top of Matplotlib, Seaborn simplifies the creation of attractive and informative statistical graphics.

- Key Features:

- High-level interface for drawing attractive statistical graphics.

- Easier to use for complex visualizations like heatmaps, pair plots, etc.

- Visualizations based on categorical data.

5. SciPy

- Purpose: Scientific and technical computing.

- Why Learn It: SciPy builds on NumPy and provides additional functionality for complex mathematical operations and scientific computing.

- Key Features:

- Optimized algorithms for numerical integration, optimization, and more.

- Statistics, signal processing, and linear algebra modules.

6. Scikit-learn

- Purpose: Machine learning and statistical modeling.

- Why Learn It: Scikit-learn provides simple and efficient tools for data mining, analysis, and machine learning.

- Key Features:

- Classification, regression, and clustering algorithms.

- Dimensionality reduction, model selection, and preprocessing utilities.

7. Statsmodels

- Purpose: Statistical analysis.

- Why Learn It: Statsmodels allows users to explore data, estimate statistical models, and perform tests.

- Key Features:

- Linear regression, logistic regression, time series analysis.

- Statistical tests and models for descriptive statistics.

8. Plotly

- Purpose: Interactive data visualization.

- Why Learn It: Plotly allows for the creation of interactive and web-based visualizations, making it ideal for dashboards and presentations.

- Key Features:

- Interactive plots like scatter, line, bar, and 3D plots.

- Easy integration with web frameworks.

- Dashboards and web applications with Dash.

9. TensorFlow/PyTorch (Optional)

- Purpose: Machine learning and deep learning.

- Why Learn It: If your data analysis involves machine learning, these libraries will help in building, training, and deploying deep learning models.

- Key Features:

- Tensor processing and automatic differentiation.

- Building neural networks.

10. Dask (Optional)

- Purpose: Parallel computing for data analysis.

- Why Learn It: Dask enables scalable data manipulation by parallelizing Pandas operations, making it ideal for big datasets.

- Key Features:

- Works with NumPy, Pandas, and Scikit-learn.

- Handles large data and parallel computations easily.

Focusing on NumPy, Pandas, Matplotlib, and Seaborn will set a strong foundation for basic data analysis.


Symfony Clickjacking Prevention Guide

Clickjacking is a deceptive technique where attackers trick users into clicking on hidden elements, potentially leading to unauthorized actions. As a Symfony developer, it's crucial to implement measures to prevent such vulnerabilities.

🔍 Understanding Clickjacking

Clickjacking involves embedding a transparent iframe over a legitimate webpage, deceiving users into interacting with hidden content. This can lead to unauthorized actions, such as changing account settings or initiating transactions.

🛠️ Implementing X-Frame-Options in Symfony

The X-Frame-Options HTTP header is a primary defense against clickjacking. It controls whether a browser should be allowed to render a page in a <frame>, <iframe>, <embed>, or <object> tag.

Method 1: Using an Event Subscriber

Create an event subscriber to add the X-Frame-Options header to all responses:

<?php
// src/EventSubscriber/ClickjackingProtectionSubscriber.php
namespace App\EventSubscriber;

use Symfony\Component\EventDispatcher\EventSubscriberInterface;
use Symfony\Component\HttpKernel\Event\ResponseEvent;
use Symfony\Component\HttpKernel\KernelEvents;

class ClickjackingProtectionSubscriber implements EventSubscriberInterface
{
    public static function getSubscribedEvents(): array
    {
        // Call onKernelResponse for every response the kernel produces.
        return [
            KernelEvents::RESPONSE => 'onKernelResponse',
        ];
    }

    public function onKernelResponse(ResponseEvent $event): void
    {
        // Tell the browser never to render this response inside a frame.
        $response = $event->getResponse();
        $response->headers->set('X-Frame-Options', 'DENY');
    }
}

This approach ensures that all responses include the X-Frame-Options header, preventing the page from being embedded in frames or iframes.

Method 2: Using NelmioSecurityBundle

The NelmioSecurityBundle provides additional security features for Symfony applications, including clickjacking protection.

Install the bundle:

composer require nelmio/security-bundle

Configure the bundle in config/packages/nelmio_security.yaml:

nelmio_security:
    clickjacking:
        paths:
            '^/.*': DENY

This configuration adds the X-Frame-Options: DENY header to all responses, preventing the site from being embedded in frames or iframes.

🧪 Testing Your Application

To ensure your application is protected against clickjacking, use our Website Vulnerability Scanner. This tool scans your website for common vulnerabilities, including missing or misconfigured X-Frame-Options headers.

Screenshot of the free tools webpage where you can access security assessment tools.

After scanning for a Website Security check, you'll receive a detailed report highlighting any security issues:

An Example of a vulnerability assessment report generated with our free tool, providing insights into possible vulnerabilities.

🔒 Enhancing Security with Content Security Policy (CSP)

While X-Frame-Options is effective, modern browsers support the more flexible Content-Security-Policy (CSP) header, which provides granular control over framing.

Add the following header to your responses:

$response->headers->set('Content-Security-Policy', "frame-ancestors 'none';");

This directive prevents any domain from embedding your content, offering robust protection against clickjacking.
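For a quick self-check of either header, a small script can inspect the response headers your application returns. The sketch below is in Python rather than PHP and only looks at a dictionary of headers. `has_framing_protection` is a hypothetical helper, not part of Symfony or any scanner, and it checks only that a restriction is present, not that the policy is strict enough.

```python
def has_framing_protection(headers):
    """Return True if the headers restrict framing in some way.

    Accepts X-Frame-Options set to DENY or SAMEORIGIN, or any CSP
    frame-ancestors directive. A permissive frame-ancestors value
    still counts as present here, so review the policy by hand.
    """
    # Header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}

    xfo = normalized.get("x-frame-options", "").strip().upper()
    csp = normalized.get("content-security-policy", "")
    directives = [d.strip().lower() for d in csp.split(";") if d.strip()]
    has_frame_ancestors = any(
        d.startswith("frame-ancestors") for d in directives
    )
    return xfo in {"DENY", "SAMEORIGIN"} or has_frame_ancestors


protected = has_framing_protection(
    {"Content-Security-Policy": "frame-ancestors 'none';"}
)
unprotected = has_framing_protection({"Content-Type": "text/html"})
```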

🧰 Additional Security Measures

CSRF Protection: Ensure that all forms include CSRF tokens to prevent cross-site request forgery attacks.

Regular Updates: Keep Symfony and all dependencies up to date to patch known vulnerabilities.

Security Audits: Conduct regular security audits to identify and address potential vulnerabilities.

📢 Explore More on Our Blog

For more insights into securing your Symfony applications, visit our Pentest Testing Blog. We cover a range of topics, including:

Preventing clickjacking in Laravel

Securing API endpoints

Mitigating SQL injection attacks

🛡️ Our Web Application Penetration Testing Services

Looking for a comprehensive security assessment? Our Web Application Penetration Testing Services offer:

Manual Testing: In-depth analysis by security experts.

Affordable Pricing: Services starting at $25/hr.

Detailed Reports: Actionable insights with remediation steps.

Contact us today for a free consultation and enhance your application's security posture.


Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

Feature | Short-Term Course | Long-Term Course
Duration | Weeks to a few months | Six months to two years
Depth of Knowledge | Basic and Intermediate Concepts | Advanced and Specialized Concepts
Cost | More Affordable | Higher Investment
Learning Style | Fast-Paced | Detailed and Comprehensive
Career Impact | Quick Entry-Level Jobs | Better Career Growth and High-Level Jobs
Certification | Basic Certificate | Industry-Recognized Certifications
Practical Projects | Limited | Extensive and Real-World Projects

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want a quick job or basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but offer better returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics course in Delhi.

Your future in data analytics starts here with Uncodemy!


What Are the Qualifications for a Data Scientist?

In today's data-driven world, the role of a data scientist has become one of the most coveted career paths. With businesses relying on data for decision-making, understanding customer behavior, and improving products, the demand for skilled professionals who can analyze, interpret, and extract value from data is at an all-time high. If you're wondering what qualifications are needed to become a successful data scientist, how DataCouncil can help you get there, and why a data science course in Pune is a great option, this blog has the answers.

The Key Qualifications for a Data Scientist

To succeed as a data scientist, a mix of technical skills, education, and hands-on experience is essential. Here are the core qualifications required:

1. Educational Background

A strong foundation in mathematics, statistics, or computer science is typically expected. Most data scientists hold at least a bachelor’s degree in one of these fields, with many pursuing higher education such as a master's or a Ph.D. A data science course in Pune with DataCouncil can bridge this gap, offering the academic and practical knowledge required for a strong start in the industry.

2. Proficiency in Programming Languages

Programming is at the heart of data science. You need to be comfortable with languages like Python, R, and SQL, which are widely used for data analysis, machine learning, and database management. A comprehensive data science course in Pune will teach these programming skills from scratch, ensuring you become proficient in coding for data science tasks.

3. Understanding of Machine Learning

Data scientists must have a solid grasp of machine learning techniques and algorithms such as regression, clustering, and decision trees. By enrolling in a DataCouncil course, you'll learn how to implement machine learning models to analyze data and make predictions, an essential qualification for landing a data science job.

4. Data Wrangling Skills

Raw data is often messy and unstructured, and a good data scientist needs to be adept at cleaning and processing data before it can be analyzed. DataCouncil's data science course in Pune includes practical training in tools like Pandas and NumPy for effective data wrangling, helping you develop a strong skill set in this critical area.

5. Statistical Knowledge

Statistical analysis forms the backbone of data science. Knowledge of probability, hypothesis testing, and statistical modeling allows data scientists to draw meaningful insights from data. A structured data science course in Pune offers the theoretical and practical aspects of statistics required to excel.

6. Communication and Data Visualization Skills

Being able to explain your findings in a clear and concise manner is crucial. Data scientists often need to communicate with non-technical stakeholders, making tools like Tableau, Power BI, and Matplotlib essential for creating insightful visualizations. DataCouncil’s data science course in Pune includes modules on data visualization, which can help you present data in a way that’s easy to understand.

7. Domain Knowledge

Apart from technical skills, understanding the industry you work in is a major asset. Whether it’s healthcare, finance, or e-commerce, knowing how data applies within your industry will set you apart from the competition. DataCouncil's data science course in Pune is designed to offer case studies from multiple industries, helping students gain domain-specific insights.

Why Choose DataCouncil for a Data Science Course in Pune?

If you're looking to build a successful career as a data scientist, enrolling in a data science course in Pune with DataCouncil can be your first step toward reaching your goals. Here’s why DataCouncil is the ideal choice:

Comprehensive Curriculum: The course covers everything from the basics of data science to advanced machine learning techniques.

Hands-On Projects: You'll work on real-world projects that mimic the challenges faced by data scientists in various industries.

Experienced Faculty: Learn from industry professionals who have years of experience in data science and analytics.

100% Placement Support: DataCouncil provides job assistance to help you land a data science job in Pune or anywhere else, making it a great investment in your future.

Flexible Learning Options: With both weekday and weekend batches, DataCouncil ensures that you can learn at your own pace without compromising your current commitments.

Conclusion

Becoming a data scientist requires a combination of technical expertise, analytical skills, and industry knowledge. By enrolling in a data science course in Pune with DataCouncil, you can gain all the qualifications you need to thrive in this exciting field. Whether you're a fresher looking to start your career or a professional wanting to upskill, this course will equip you with the knowledge, skills, and practical experience to succeed as a data scientist.

Explore DataCouncil’s offerings today and take the first step toward unlocking a rewarding career in data science! Looking for the best data science course in Pune? DataCouncil offers comprehensive data science classes in Pune, designed to equip you with the skills to excel in this booming field. Our data science course in Pune covers everything from data analysis to machine learning, with competitive data science course fees in Pune. We provide job-oriented programs, making us the best institute for data science in Pune with placement support. Explore online data science training in Pune and take your career to new heights!


The Role of Machine Learning Engineer: Combining Technology and Artificial Intelligence

Artificial intelligence has transformed our daily lives over the past year more than we could have imagined, changing how we work, communicate, and solve problems. Today, artificial intelligence drives progress in every sector, from daily life to the healthcare industry. In this blog, we will learn how machine learning engineers build systems that learn from data and get better over time, playing a huge part in the development of artificial intelligence (AI). We will also look at machine learning engineering as a career.

What is Machine Learning Engineering?

A machine learning engineer is a specialist who designs and builds AI models to make complex challenges easier to solve. The role merges data science and software engineering, so skills from both fields matter. The main job of a machine learning engineer is to design and build software that automates AI models. Demand for this role has grown in recent years: as artificial intelligence becomes a driving force in daily life, it becomes important to run AI systems in a clear and automated way.

A machine learning engineer creates systems that help computers learn and make decisions on tasks humans usually perform, like recognizing voices, identifying images, or predicting results. Unlike regular programming, which follows strict rules, machine learning focuses on teaching computers to find patterns in data and improve their predictions over time.

Responsibilities of a Machine Learning Engineer:

Collecting and Preparing Data

Machine learning needs a lot of data to work well. These engineers spend a lot of time finding and organizing data. That means looking for useful data sources and fixing any missing information. Good data preparation is essential because it sets the foundation for building successful models.

Building and Training Models

The main task of a machine learning engineer is creating models that learn from data. Using tools like TensorFlow, PyTorch, and many more, they build algorithms suited to specific tasks. Training a model is challenging and requires careful adjustments and monitoring to ensure it is accurate and useful.

Checking Model Performance

Once a model is trained, it is important to check how well it works. Machine learning engineers use metrics such as accuracy to measure model performance. They usually test the model on separate data that it did not see during training, to estimate how it will perform in real-world situations, and make improvements as needed.
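A minimal sketch of testing on separate data, using only the Python standard library. The "model" here is a deliberately simple threshold rule, and the data, the 25% test fraction, and all function names are assumptions made for this illustration, not a recommended setup.

```python
import random


def train_test_split(examples, test_fraction=0.25, seed=0):
    """Shuffle a copy of the examples and hold back a fraction for testing."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_threshold(train):
    """Toy model: the midpoint between the mean input of each class."""
    zeros = [x for x, y in train if y == 0]
    ones = [x for x, y in train if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Made-up data: the label is 1 when the input is 10 or larger.
data = [(x, 1 if x >= 10 else 0) for x in range(20)]
train_set, test_set = train_test_split(data)
threshold = fit_threshold(train_set)

# Score the model only on examples it never saw during fitting.
predictions = [1 if x >= threshold else 0 for x, _ in test_set]
test_accuracy = accuracy(predictions, [y for _, y in test_set])
```

Accuracy is only one metric; on imbalanced data, measures such as precision and recall are often more informative.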

Deploying and Maintaining the Model

After testing, ML engineers put the model into action so it can work with real-time data. They monitor the model to make sure it stays accurate over time, as data can change and affect results. Regular updates help keep the model effective.
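One simple way to notice that incoming data has changed is to compare a feature's average in live data against its average in the training data. The sketch below does this in units of the training standard deviation; the threshold of 2.0 and the function names are arbitrary assumptions for illustration, and production systems typically use more robust statistical tests.

```python
from statistics import mean, stdev


def mean_shift_in_std_units(reference, live):
    """How far the live mean has moved, measured in reference standard deviations."""
    spread = stdev(reference)
    if spread == 0:
        raise ValueError("reference data has no variation")
    return abs(mean(live) - mean(reference)) / spread


def has_drifted(reference, live, threshold=2.0):
    """Flag drift when the shift exceeds an (assumed, tunable) threshold."""
    return mean_shift_in_std_units(reference, live) > threshold


# Made-up feature values seen during training and after deployment.
training_values = [10, 11, 9, 10, 12, 8, 10]
stable_live_values = [10, 9, 11]
shifted_live_values = [20, 21, 19]

stable_flag = has_drifted(training_values, stable_live_values)
shifted_flag = has_drifted(training_values, shifted_live_values)
```

A flag like this would usually trigger a closer look or a retraining run instead of an automatic model update.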

Working with Other Teams

ML engineers often work closely with data scientists, software engineers, and experts in the field. This teamwork ensures that the machine learning solution fits the business goals and integrates smoothly with other systems.

Important skills needed to become a Machine Learning Engineer:

Programming Languages

Python and R are popular choices in machine learning. Other languages such as Java or C++ can also help, especially for projects that need high performance.

Data Handling and Processing

Working with large datasets is necessary in Machine Learning. ML engineers should know how to use SQL and other database tools and be skilled in preparing and cleaning data before using it in models.
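As a small example of using SQL to prepare data, the sketch below loads a few rows into an in-memory SQLite database through Python's built-in `sqlite3` module, skips rows with missing readings, and aggregates per sensor. The table name, column names, and values are made up for illustration.

```python
import sqlite3

# An in-memory database keeps the example self-contained.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE measurements (sensor TEXT, reading REAL)")
conn.executemany(
    "INSERT INTO measurements VALUES (?, ?)",
    [("a", 1.0), ("a", 3.0), ("b", None), ("b", 4.0)],
)

# Drop rows with missing readings, then summarize each sensor.
rows = conn.execute(
    "SELECT sensor, COUNT(*), AVG(reading) "
    "FROM measurements "
    "WHERE reading IS NOT NULL "
    "GROUP BY sensor "
    "ORDER BY sensor"
).fetchall()
conn.close()
```

Doing the filtering and aggregation in the database means only the summarized rows have to be pulled into the model-training code.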

Machine Learning Frameworks

ML engineers need to know frameworks like TensorFlow, Keras, PyTorch, and scikit-learn. Each of these tools has its own strengths for building and training models, so choosing the right one depends on the project.

Mathematics and Statistics

A strong background in math, including calculus, linear algebra, probability, and statistics, helps ML engineers understand how algorithms work and make accurate predictions.

Why become a Machine Learning Engineer?

A career as a machine learning engineer is both challenging and creative, allowing you to work with the latest technology. This field is always changing, with new tools and ideas coming up every year. If you enjoy solving complex problems and want to make a real impact, ML engineering offers an exciting path.

Conclusion

Machine learning engineers play an important role in AI and data science, turning data into useful insights and creating systems that learn on their own. This career is great for people who love technology, enjoy learning, and want to make a difference. With its many opportunities and uses, artificial intelligence is a growing field that promises exciting innovations that will shape our future. AI is changing the world, and we should keep our knowledge of the field up to date. Read the latest AI-related blogs here.

Text

Which is better full stack development or testing?

Full Stack Development vs Software Testing: Which Career Path is Right for You?

In today’s rapidly evolving IT industry, choosing the right career path can be challenging. Two popular options are Full Stack Development and Software Testing. Both of these fields offer unique opportunities and cater to different skill sets, making it essential to assess which one aligns better with your interests, goals, and long-term career aspirations.

At FirstBit Solutions, we take pride in offering premium-quality teaching, with expert-led courses designed to provide real-world skills. Our goal is to help you succeed, no matter which path you choose. Whether you’re interested in development or testing, our 100% unlimited placement call guarantee ensures ample job opportunities. In this answer, we’ll explore both career paths to help you make an informed decision.

Understanding Full Stack Development

What is Full Stack Development?

Full Stack Development involves working on both the front-end (client-side) and back-end (server-side) of web applications. Full stack developers handle everything from designing the user interface (UI) to managing databases and server logic. They are versatile professionals who can oversee a project from start to finish.

Key Skills Required for Full Stack Development

To become a full stack developer, you need a diverse set of skills, including:

Front-End Technologies: HTML, CSS, and JavaScript are the fundamental building blocks of web development. Additionally, proficiency in front-end frameworks like React, Angular, or Vue.js is crucial for creating dynamic and responsive web interfaces.

Back-End Technologies: Understanding back-end languages and runtimes such as Node.js (JavaScript), Python, Ruby, Java, or PHP is essential for server-side development. Additionally, knowledge of frameworks like Express.js, Django, or Spring can help streamline development processes.

Databases: Full stack developers must know how to work with both SQL (e.g., MySQL, PostgreSQL) and NoSQL (e.g., MongoDB) databases.

Version Control and Collaboration: Proficiency in tools like Git, GitHub, and agile methodologies is important for working in a collaborative environment.

Job Opportunities in Full Stack Development

Full stack developers are in high demand due to their versatility. Companies often prefer professionals who can handle both front-end and back-end tasks, making them valuable assets in any development team. Full stack developers can work in:

Web Development

Mobile App Development

Enterprise Solutions

Startup Ecosystems

The flexibility to work on multiple layers of development opens doors to various career opportunities. Moreover, the continuous rise of startups and digital transformation initiatives has further fueled the demand for full stack developers.

Benefits of Choosing Full Stack Development

High Demand: The need for full stack developers is constantly increasing across industries, making it a lucrative career choice.

Versatility: You can switch between front-end and back-end tasks, giving you a holistic understanding of how applications work.

Creativity: If you enjoy creating visually appealing interfaces while also solving complex back-end problems, full stack development allows you to engage both creative and logical thinking.

Salary: Full stack developers typically enjoy competitive salaries due to their wide skill set and ability to handle various tasks.

Understanding Software Testing

What is Software Testing?

Software Testing is the process of evaluating and verifying that a software product or application is free of defects, meets specified requirements, and functions as expected. Testers ensure the quality and reliability of software by conducting both manual and automated tests.

Key Skills Required for Software Testing

To succeed in software testing, you need to develop the following skills:

Manual Testing: Knowledge of testing techniques, understanding different testing types (unit, integration, system, UAT, etc.), and the ability to write test cases are fundamental for manual testing.

Automated Testing: Proficiency in tools like Selenium, JUnit, TestNG, or Cucumber is essential for automating repetitive test scenarios and improving efficiency.

Attention to Detail: Testers must have a keen eye for identifying potential issues, bugs, and vulnerabilities in software systems.

Scripting Knowledge: Basic programming skills in languages like Java, Python, or JavaScript are necessary to write and maintain test scripts for automated testing.
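To make the scripting and automation skills above concrete, here is a minimal sketch of an automated unit test written with Python's built-in `unittest` module. The function under test, `apply_discount`, is a hypothetical example invented for this illustration; tools such as Selenium or TestNG apply the same idea to browsers and Java code.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


# Run the test case programmatically and keep the result for inspection.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test covers one behavior (a normal case, an edge case, and an error case), which is the same structure a tester would follow when writing manual test cases.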

Job Opportunities in Software Testing

As the demand for high-quality software increases, so does the need for skilled software testers. Companies are investing heavily in testing to ensure that their products perform optimally in the competitive market. Software testers can work in:

Manual Testing

Automated Testing

Quality Assurance (QA) Engineering

Test Automation Development

With the rise of Agile and DevOps methodologies, the role of testers has become even more critical. Continuous integration and continuous delivery (CI/CD) pipelines rely on automated testing to deliver reliable software faster.

Benefits of Choosing Software Testing

Job Security: With software quality being paramount, skilled testers are in high demand, and the need for testing professionals will only continue to grow.

Quality Assurance: If you have a knack for perfection and enjoy ensuring that software works flawlessly, testing could be a satisfying career.

Automated Testing Growth: The shift toward automation opens up new opportunities for testers to specialize in test automation tools and frameworks, which are essential for faster releases.

Flexibility: Testing provides opportunities to work across different domains and industries, as almost every software product requires thorough testing.

Full Stack Development vs Software Testing: A Comparative Analysis

Let’s break down the major factors that could influence your decision:

| Factors | Full Stack Development | Software Testing |
| --- | --- | --- |
| Skills | Proficiency in front-end and back-end technologies, databases | Manual and automated testing, attention to detail, scripting |
| Creativity | High: involves creating and designing both UI and logic | Moderate: focuses on improving software through testing and validation |
| Job Roles | Web Developer, Full Stack Engineer, Mobile App Developer | QA Engineer, Test Automation Engineer, Software Tester |
| Career Growth | Opportunities to transition into senior roles like CTO or Solution Architect | Growth towards roles in automation and quality management |
| Salary | Competitive with wide-ranging opportunities | Competitive, with automation testers in higher demand |
| Demand | High demand due to increasing digitalization and web-based applications | Consistently high, especially in Agile/DevOps environments |
| Learning Curve | Steep: requires mastering multiple languages and technologies | Moderate: requires a focus on testing tools, techniques, and automation |

Why Choose FirstBit Solutions for Full Stack Development or Software Testing?

At FirstBit Solutions, we provide comprehensive training in both full stack development and software testing. Our experienced faculty ensures that you gain hands-on experience and practical knowledge in the field of your choice. Our 100% unlimited placement call guarantee ensures that you have ample opportunities to land your dream job, no matter which course you pursue. Here’s why FirstBit is your ideal training partner:

Expert Trainers: Learn from industry veterans with years of experience in development and testing.

Real-World Projects: Work on real-world projects that simulate industry scenarios, providing you with the practical experience needed to excel.

Job Assistance: Our robust placement support ensures you have access to job openings with top companies.

Flexible Learning: Choose from online and offline batch options to fit your schedule.

Conclusion: Which Career Path is Right for You?

Ultimately, the choice between full stack development and software testing comes down to your personal interests, skills, and career aspirations. If you’re someone who enjoys building applications from the ground up, full stack development might be the perfect fit for you. On the other hand, if you take satisfaction in ensuring that software is of the highest quality, software testing could be your calling.

At FirstBit Solutions, we provide top-notch training in both fields, allowing you to pursue your passion and build a successful career in the IT industry. With our industry-aligned curriculum, expert guidance, and 100% placement call guarantee, your future is in good hands.

So, what are you waiting for? Choose the course that excites you and start your journey toward a rewarding career today!

#education#programming#tech#technology#training#python#full stack developer#software testing#itservices#java#.net#.net developers#datascience

Text

VPS Windows Hosting in India: The Ultimate Guide for 2024

In the ever-evolving landscape of web hosting, Virtual Private Servers (VPS) have become a preferred choice for both businesses and individuals. Striking a balance between performance, cost-effectiveness, and scalability, VPS hosting serves those seeking more than what shared hosting provides without the significant expense of a dedicated server. Within the myriad of VPS options, VPS Windows Hosting stands out as a popular choice for users who have a preference for the Microsoft ecosystem.

This comprehensive guide will explore VPS Windows Hosting in India, shedding light on its functionality, key advantages, its relevance for Indian businesses, and how to select the right hosting provider in 2024.

What is VPS Windows Hosting?

VPS Windows Hosting refers to a hosting type where a physical server is partitioned into various virtual servers, each operating with its own independent Windows OS. Unlike shared hosting, where resources are shared among multiple users, VPS provides dedicated resources, including CPU, RAM, and storage, which leads to enhanced performance, security, and control.

Why Choose VPS Windows Hosting in India?

The rapid growth of India’s digital landscape and the rise in online businesses make VPS hosting an attractive option. Here are several reasons why Windows VPS Hosting can be an optimal choice for your website or application in India:

Seamless Compatibility: Windows VPS is entirely compatible with Microsoft applications such as ASP.NET, SQL Server, and Microsoft Exchange. For websites or applications that depend on these technologies, Windows VPS becomes a natural option.

Scalability for Expanding Businesses: A notable advantage of VPS hosting is its scalability. As your website or enterprise grows, upgrading server resources can be done effortlessly without downtime or cumbersome migration. This aspect is vital for startups and SMEs in India aiming to scale economically.

Localized Hosting for Improved Speed: Numerous Indian hosting providers have data centers within the country, minimizing latency and enabling quicker access for local users, which is particularly advantageous for targeting audiences within India.

Enhanced Security: VPS hosting delivers superior security compared to shared hosting, which is essential in an era where cyber threats are increasingly prevalent. Dedicated resources ensure your data remains isolated from others on the same physical server, diminishing the risk of vulnerabilities.

Key Benefits of VPS Windows Hosting

Dedicated Resources: VPS Windows hosting ensures dedicated CPU, RAM, and storage, providing seamless performance, even during traffic surges.

Full Administrative Control: With Windows VPS, you gain full administrator access (the Windows equivalent of root access), allowing you to customize server settings, install applications, and make necessary adjustments.

Cost Efficiency: VPS hosting provides the advantages of dedicated hosting at a more economical price point. This is incredibly beneficial for businesses looking to maintain a competitive edge in India’s market.

Configurability: Whether you require specific Windows applications or custom software, VPS Windows hosting allows you to tailor the server to meet your unique needs.

Managed vs. Unmanaged Options: Depending on your technical ability, you can opt for managed VPS hosting, where the provider manages server maintenance, updates, and security, or unmanaged VPS hosting, where you retain full control of the server and its management.

How to Select the Right VPS Windows Hosting Provider in India

With a plethora of hosting providers in India offering VPS Windows hosting, selecting one that meets your requirements is crucial. Here are several factors to consider:

Performance & Uptime: Choose a hosting provider that guarantees a minimum uptime of 99.9%. Reliable uptime ensures your website remains accessible at all times, which is crucial for any online venture.

Data Center Location: Confirm that the hosting provider has data centers located within India or in proximity to your target users. This will enhance loading speeds and overall user satisfaction.

Pricing & Plans: Evaluate pricing plans from various providers to ensure you’re receiving optimal value. Consider both initial costs and renewal rates, as some providers may offer discounts for longer commitments.

Customer Support: Opt for a provider that offers 24/7 customer support, especially if you lack an in-house IT team. Look for companies that offer support through various channels like chat, phone, and email.

Security Features: Prioritize providers offering robust security features such as firewall protection, DDoS mitigation, automatic backups, and SSL certificates.

Backup and Recovery: Regular backups are vital for data protection. Verify if the provider includes automated backups and quick recovery options for potential issues.

Top VPS Windows Hosting Providers in India (2024)

To streamline your research, here's a brief overview of some of the top VPS Windows hosting providers in India for 2024:

Host.co.in

Recognized for its competitive pricing and exceptional customer support, Host.co.in offers a range of Windows VPS plans catering to businesses of various sizes.

BigRock

Among the most well-known hosting providers in India, BigRock guarantees reliable uptime, superb customer service, and diverse hosting packages, including Windows VPS.

MilesWeb

MilesWeb offers fully managed VPS hosting solutions at attractive prices, making it a great option for businesses intent on prioritizing growth over server management.

GoDaddy

As a leading name in hosting, GoDaddy provides flexible Windows VPS plans designed for Indian businesses, coupled with round-the-clock customer support.

Bluehost India

Bluehost delivers powerful VPS solutions for users requiring high performance, along with an intuitive control panel and impressive uptime.

Conclusion

VPS Windows Hosting in India is an outstanding option for individuals and businesses in search of a scalable, cost-effective, and performance-oriented hosting solution. With dedicated resources and seamless integration with Microsoft technologies, it suits websites that experience growing traffic or require ample resources.

As we advance into 2024, the necessity for VPS Windows hosting is expected to persist, making it imperative to choose a hosting provider that can accommodate your developing requirements. Whether launching a new website or upgrading your existing hosting package, VPS Windows hosting is a strategic investment for the future of your online endeavors.

FAQs

Is VPS Windows Hosting costly in India?

While VPS Windows hosting is pricier than shared hosting, it is much more affordable than dedicated servers. Many providers in India offer competitive rates, making it accessible for small and medium-sized enterprises.

Can I upgrade my VPS Windows Hosting plan easily?

Absolutely, VPS hosting plans provide significant scalability. You can effortlessly enhance your resources like CPU, RAM, and storage without experiencing downtime.

What type of businesses benefit from VPS Windows Hosting in India?

Businesses that demand high performance, improved security, and scalability find the most advantage in VPS hosting. It’s particularly ideal for sites that utilize Windows-based technologies like ASP.NET and SQL Server.

Text

Backend update

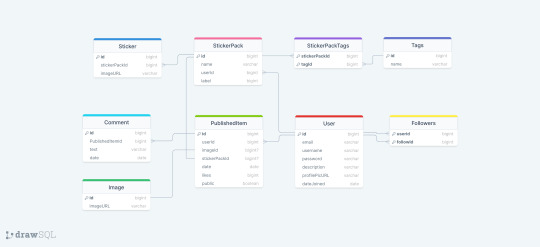

Had the most horrible time working with Sequelize today! As I usually do whenever I work with Sequelize! Sequelize is an SQL ORM - instead of writing raw SQL, an ORM gives you the option to code it in a way that looks much more like OOP, which is arguably simpler if you are used to programming that way. So to explain my project a little bit, it's a full stack web app - an online photo editor for dragging and dropping stickers onto a canvas/picture. Here is the diagram.

I'm doing it with Next which I've never used before, I only did vanilla js, React and a lil bit of Angular before. The architecture of a next project immediately messed me up so much, it's way different from the ones I've used before and I often got lost in the folders and where to put stuff properly (this is a huge thing to me because I always want it to be organized by the industry standard and I had no reference Next projects from any previous jobs/college so it got really overwhelming really soon :/) . The next problem was setting up my MySQL database with Sequelize because I know from my past experience that Sequelize is very sensitive to where you position certain files/functions and in which order are they. I made all the models (Sequelize equivalent of tables) and when it was time to sync, it would sync only two models out of nine. I figured it was because the other ones weren't called anywhere. Btw a fun fact

So I imported them into the index.js file I made in my database folder. It was reporting a "db.define() is not a function" error now. That was weird because it didn't report that for the first two tables that went through. To make a really long story short - because I was used to a server/client architecture, I didn't properly run the index.js file, but just did an "npm run dev" and was counting on all of the files to run in the order I am used to, that was not the case tho. After about an hour, I figured I just needed to run index.js solo first. The only reason those first two tables went through in the beginning is because of the test api calls I made to them in a separate file :I I cannot wait to finish this project, it is for my bachelors thesis or whatever it's called...wish me luck to finish this by 1.9. XD

Also if you have any questions about any of the technologies I used here, feel free to message me c: <3 Bye!

#codeblr#code#programming#webdevelopment#mysql#nextjs#sequelize#full stack web development#fullstackdeveloper#student#computer science#women in stem#backend#studyblr

Text

5 Best Online Free SQL Formatter Tools for Efficient Code Formatting

Introduction:

SQL (Structured Query Language) is a powerful tool for managing and manipulating relational databases. Writing clean and well-formatted SQL code is crucial for readability, collaboration, and maintenance. To aid developers in formatting their SQL code, several online tools are available for free. In this article, we will explore five of the best online free SQL formatter tools, with a special emphasis on one standout tool: sqlformatter.org.

sqlformatter.org: sqlformatter.org is a user-friendly and powerful online SQL formatter that stands out for its simplicity and effectiveness. The tool supports various SQL dialects, including MySQL, PostgreSQL, and SQLite. Users can easily paste their SQL code into the input area, adjust formatting options, and instantly see the formatted output. The website also provides the option to download the formatted SQL code. With its clean interface and robust formatting capabilities, sqlformatter.org is a top choice for developers seeking a hassle-free SQL formatting experience.

Poor SQL Formatter: Poor SQL Formatter is another excellent online tool that focuses on making poorly formatted SQL code more readable. It offers a simple interface where users can paste their SQL code and quickly format it. The tool supports various SQL databases, and users can customize the formatting options according to their preferences. Additionally, Poor SQL Formatter provides a side-by-side comparison of the original and formatted code, making it easy to spot and understand the changes.

Online-SQL-Formatter: Online-SQL-Formatter is a versatile tool that supports multiple SQL dialects, including standard SQL, MySQL, and PostgreSQL. The website provides a clean and intuitive interface where users can input their SQL code and customize formatting options. The tool also allows users to validate their SQL syntax and format the code accordingly. With its comprehensive set of features, Online-SQL-Formatter is a reliable choice for developers working with various SQL database systems.

DBeaver SQL Formatter: DBeaver is a popular open-source database tool, and it offers a built-in SQL formatter as part of its feature set. While primarily known as a database management tool, DBeaver's SQL formatter is a valuable resource for developers seeking a unified environment for database-related tasks. Users can format their SQL code directly within the DBeaver interface, making it a seamless experience for those already using the tool for database development.

Online Formatter by Dan's Tools: Dan's Tools provides a comprehensive suite of online tools, including a user-friendly SQL formatter. This tool supports various SQL dialects and offers a straightforward interface for formatting SQL code. Users can customize formatting options and quickly obtain well-organized SQL code. The website also provides additional SQL-related tools, making it a convenient hub for developers working on SQL projects.

Conclusion:

Formatting SQL code is an essential aspect of database development, and the availability of free online tools makes this process more accessible and efficient. Whether you prefer the simplicity of sqlformatter.org, the readability focus of Poor SQL Formatter, the versatility of Online-SQL-Formatter, the integration with DBeaver, or the convenience of Dan's Tools, these tools cater to different preferences and requirements. Choose the one that aligns with your workflow and coding style to enhance the readability and maintainability of your SQL code.

Text

Why Power BI Takes the Lead Against SSRS

In an era where data steers the course of businesses and fuels informed decisions, the choice of a data visualization and reporting tool becomes paramount. Amidst the myriad of options, two stalwarts stand out: Power BI and SSRS (SQL Server Reporting Services). As organizations, including those seeking Power BI training in Gurgaon, strive to extract meaningful insights from their data, the debate about which tool to embrace gains prominence. In this digital age, where data is often referred to as the "new oil," selecting the right tool can make or break a business's competitive edge.

Understanding the Landscape

What is Power BI?

Microsoft Power BI is a powerful business analytics application that enables organizations to visualize data and communicate insights across the organization. With its intuitive interface and user-friendly features, Power BI transforms raw data into interactive visuals, making it easier to interpret and draw actionable conclusions.

What is SSRS?

On the other hand, SSRS, also developed by Microsoft, focuses on traditional reporting. It enables the creation, management, and delivery of traditional paginated reports. SSRS has been a reliable choice for years, but the advent of Power BI has brought new dimensions to data analysis.

The Advantages of Power BI Over SSRS

In the realm of data analysis and reporting tools, Power BI shines as a modern marvel, surpassing SSRS in various crucial aspects. Let's explore the advantages that set Power BI apart:

1. Interactive Visualizations

Power BI's forte lies in its ability to transform raw data into interactive and captivating visual representations. Unlike SSRS, which predominantly deals with static reports, Power BI empowers users to explore data dynamically, enabling them to drill down into specifics and gain deeper insights. This interactive approach enhances data comprehension and decision-making processes.

2. Real-time Insights

While SSRS offers a snapshot of data at a particular moment, Power BI steps ahead with real-time data analysis capabilities. Modern businesses, including those enrolling in a Power BI training institute in Bangalore, require up-to-the-minute insights to stay competitive, and Power BI caters precisely to this need. It connects seamlessly to various data sources, ensuring that decisions are based on the latest information.

3. User-Friendly Interface

Power BI's intuitive interface stands in stark contrast to SSRS's somewhat technical setup. With its drag-and-drop functionality, Power BI eliminates the need for extensive coding knowledge. This accessibility allows a wider range of users, from business analysts to executives, to create and customize reports without depending heavily on IT departments.

4. Scalability

As a company grows, so does the amount of data it handles. Power BI's cloud-based architecture ensures scalability without compromising performance. Whether you're dealing with a small dataset or handling enterprise-level data, Power BI can handle the load, guaranteeing smooth operations and robust analysis.

5. Natural Language Queries

One of Power BI's standout features is its ability to understand natural language queries. Users can interact with the tool using everyday language and receive relevant visualizations in response. This bridge between human language and data analytics simplifies the process for non-technical users, making insights accessible to all.

The SEO Advantage

In the digital age, search engine optimization (SEO) plays a vital role in ensuring your content, including information about Power BI training in Mumbai, reaches the right audience. When it comes to comparing Power BI and SSRS in terms of SEO, Power BI once again takes the lead.

With their interactive visual content, Power BI-enhanced articles attract more engagement. This higher engagement leads to longer on-page time, lower bounce rates, and improved SEO rankings. Search engines recognize user behavior as a marker of content quality and relevance, boosting the visibility of Power BI-related articles.

For more information, contact us at:

Call: 8750676576, 871076576

Email: [email protected]

Website: www.advancedexcel.net

#power bi training in gurgaon#power bi coaching in gurgaon#power bi classes in mumbai#power bi course in mumbai#power bi training institute in bangalore#power bi coaching in bangalore

Text

Unlock a High-Growth Career with the Best Data Science Courses in Delhi and Gurgaon

In the age of digital transformation, data is the new currency. Companies across industries are heavily investing in data-driven strategies to stay ahead in the competition. This has led to an unprecedented demand for data scientists and data analysts. If you're aiming to enter this exciting field, enrolling in the best data science certification course is your first step toward success.

For aspiring professionals in India, two locations stand out for their quality education and job prospects – the data scientist course in Delhi and the data science course in Gurgaon. Both offer practical, industry-aligned training that prepares you for real-world challenges in the field of data science.

Explore the Data Scientist Course in Delhi – A Capital Choice for Learners

Delhi is not only the capital of India but also a hub for education and IT. Many top institutes in Delhi offer structured and advanced data scientist courses that combine theory with hands-on experience. These courses often include:

Python and R programming

Statistics and data analysis

Machine learning algorithms

Data visualization tools (Tableau, Power BI)

Deep learning and artificial intelligence

Capstone projects using real datasets

The data scientist course in Delhi is ideal for fresh graduates, IT professionals, engineers, and business analysts who want to transition into a data-centric role. These courses are often available in flexible formats – weekend, part-time, and full-time – to cater to different schedules.

Why Enroll in a Data Science Course in Gurgaon?

Gurgaon (Gurugram) is known as the tech and corporate hub of India. It houses several Fortune 500 companies, MNCs, and startups, making it a hotspot for data-driven jobs. A data science course in Gurgaon connects learners directly with the job market. These courses are structured to ensure that learners gain:

Strong foundations in statistics and data interpretation

Knowledge of programming tools like Python, SQL, and Spark

Practical exposure through live projects and internships

Interview preparation and placement support

With experienced mentors and access to corporate tie-ups, students in Gurgaon benefit from real-time industry exposure. This makes them highly employable and job-ready by the time they finish their certification.

Choosing the Best Data Science Certification Course – What to Look For

When selecting a course, make sure it’s not just about flashy advertisements or promises. The best data science certification course should meet certain essential criteria:

Updated Syllabus: Aligned with current industry demands like AI, cloud computing, and big data.

Practical Exposure: Real-world case studies, datasets, and project-based learning.

Certification Recognition: Provided by reputed institutions or globally accepted platforms.

Mentorship and Support: Personalized guidance, doubt sessions, and career counseling.

Placement Assistance: Active help with resume building, mock interviews, and job referrals.

Final Thoughts

Whether you're looking to join a data scientist course in Delhi or a data science course in Gurgaon, one thing is clear – the opportunities in this field are vast and growing. With the right certification and skill set, you can unlock career options in tech giants, finance, healthcare, e-commerce, and more.

Don’t wait for the right time. The data revolution is happening now. Enroll in the best data science certification course and future-proof your career today!

Dataflow Migration Essentials: Moving Tableau Hyper Extracts to Power BI Dataflows

Migrating from Tableau to Power BI is more than just a visual transition—it’s a shift in how data is handled, transformed, and managed. A critical part of this transition involves moving Tableau’s Hyper Extracts into the Power BI environment, particularly into Power BI Dataflows, which are essential for scalable and maintainable data architecture. Understanding the nuances of this process ensures a smoother migration and long-term success with Power BI.

Understanding Tableau Hyper Extracts

Tableau Hyper Extracts are high-performance, compressed data sources that enhance query speed and interactivity. These files are optimized for Tableau’s internal engine, offering fast access and flexibility when working offline or with large datasets. However, Power BI doesn’t natively consume Hyper files. Therefore, migrating Hyper data requires a structured transformation process to integrate the same logic and performance into Power BI Dataflows.

What Are Power BI Dataflows?

Power BI Dataflows are cloud-based ETL (Extract, Transform, Load) processes powered by Power Query Online. They allow you to define reusable, centralized data transformation logic that feeds multiple Power BI datasets. Using Dataflows helps teams enforce governance, share transformations across reports, and offload heavy data prep from the desktop to the cloud.

Migration Steps: Hyper to Dataflow

Exporting Hyper Data: Begin by exporting your Tableau Hyper Extracts into a format Power BI can ingest—preferably CSV or a SQL-compatible format. You may need to automate this if you’re handling multiple files or frequent refreshes.

Recreating Transformations in Power Query: Tableau Prep flows and calculated fields often contain transformation logic that must be rebuilt in Power Query. Review each Hyper extract’s source logic and convert it into M code using Power Query Online within Power BI Dataflows.

Uploading to Data Lake (Optional): For large datasets, consider storing your CSV or transformed files in Azure Data Lake or SharePoint and referencing them directly in your Dataflow. This promotes scalability and aligns with Power BI's modern data architecture.

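Building the Dataflow: Use Power BI Service to create a new Dataflow. Connect to your source (e.g., Azure storage, a SQL database, or a local folder through an on-premises data gateway), import the data, and apply transformations. Ensure column types and naming conventions match the original Hyper Extract, and record the relationships between tables so they can be recreated in the datasets that consume the Dataflow.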

Scheduling Refresh: Set up automatic refreshes for your Dataflows. Just as Tableau Extracts can be refreshed on a schedule from Tableau Server or Tableau Cloud, Power BI Dataflows support scheduled refresh in the Power BI Service, so reports stay current without manual intervention.
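
As a rough illustration of the export and type-matching steps above, the following Python sketch checks an exported CSV against a column list written down from the original extract before it is loaded into a Dataflow. The column names, the EXPECTED_SCHEMA mapping, and the check_export helper are hypothetical and are not part of Tableau or Power BI. The sketch uses only the Python standard library and assumes the export has a header row.

```python
import csv
import io
from datetime import date

# Hypothetical schema: column name -> parser that raises ValueError on bad input.
EXPECTED_SCHEMA = {
    "order_id": int,
    "order_date": date.fromisoformat,
    "region": str,
    "sales": float,
}


def check_export(csv_text, schema):
    """Return a list of human-readable problems found in an exported CSV."""
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []

    missing = [column for column in schema if column not in header]
    extra = [column for column in header if column not in schema]
    if missing:
        problems.append(f"missing columns: {missing}")
    if extra:
        problems.append(f"unexpected columns: {extra}")

    # Line 1 is the header, so data rows start at line 2.
    for line_no, row in enumerate(reader, start=2):
        for column, parse in schema.items():
            value = row.get(column)
            if value is None or value == "":
                continue  # absent or empty cells are treated as nulls
            try:
                parse(value)
            except (ValueError, TypeError):
                problems.append(
                    f"line {line_no}: {column}={value!r} could not be parsed"
                )
    return problems
```

Calling check_export on the text of an export returns an empty list when every column is present and every value parses. Anything else in the list is worth fixing before the same column types are set in Power Query.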

Key Considerations

Performance Tuning: Poorly structured Dataflows can slow down refreshes. Avoid unnecessary joins, filter data at the source, and optimize M queries.

Governance: Centralize your logic in Dataflows to promote consistency across Power BI datasets and prevent data silos.

Compatibility Check: Validate that all logic from Hyper Extracts—especially custom calculations—can be translated effectively using Power Query’s capabilities.
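
One way to approach the compatibility check is to reconcile the data exported from Tableau against the output of the migrated Dataflow. The Python sketch below compares row counts and numeric column totals between two CSV exports. The summarize and reconcile helpers and the column names are hypothetical, and the sketch assumes both sides can be exported to CSV with matching headers. A matching total is only a coarse checksum, so it does not prove that every calculation was translated correctly.

```python
import csv
import io
import math


def summarize(csv_text, numeric_columns):
    """Return (row_count, {column: total}) for the given numeric columns."""
    totals = {column: 0.0 for column in numeric_columns}
    rows = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows += 1
        for column in numeric_columns:
            # Empty cells count as zero; a missing column raises KeyError.
            totals[column] += float(row[column] or 0)
    return rows, totals


def reconcile(source_csv, migrated_csv, numeric_columns, rel_tol=1e-9):
    """Return a list of differences between the source and migrated exports."""
    src_rows, src_totals = summarize(source_csv, numeric_columns)
    mig_rows, mig_totals = summarize(migrated_csv, numeric_columns)

    diffs = []
    if src_rows != mig_rows:
        diffs.append(f"row count: {src_rows} vs {mig_rows}")
    for column in numeric_columns:
        if not math.isclose(src_totals[column], mig_totals[column], rel_tol=rel_tol):
            diffs.append(f"{column}: {src_totals[column]} vs {mig_totals[column]}")
    return diffs
```

An empty result means the two exports agree on row count and on each total within the tolerance. Row order does not matter, which is useful because the two tools rarely export rows in the same order.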

Final Thoughts

Migrating Tableau Hyper Extracts to Power BI Dataflows isn't a simple copy-paste operation—it requires a deliberate strategy to map, transform, and optimize your data for the Power BI ecosystem. By leveraging the flexibility of Power BI Dataflows and the robust cloud infrastructure behind them, organizations can unlock deeper analytics, better performance, and long-term BI scalability.

Visit https://tableautopowerbimigration.com to explore expert tools, strategies, and support tailored for every stage of your Tableau to Power BI migration journey.

Master the Future: Join the Best Data Science Course in Kharadi Pune at GoDigi Infotech

In today's data-driven world, Data Science has emerged as one of the most powerful and essential skill sets across industries. Whether it’s predicting customer behavior, improving business operations, or advancing AI technologies, data science is at the core of modern innovation. For individuals seeking to build a high-demand career in this field, enrolling in a Data Science Course in Kharadi Pune is a strategic move. And when it comes to top-notch training with real-world application, GoDigi Infotech stands out as the premier destination.

Why Choose a Data Science Course in Kharadi Pune?

Kharadi, a thriving IT and business hub in Pune, is rapidly becoming a magnet for tech professionals and aspiring data scientists. Choosing a data science course in Kharadi Pune places you at the heart of a booming tech ecosystem. Proximity to leading IT parks, startups, and MNCs means students have better internship opportunities, networking chances, and job placements.

Moreover, Pune is known for its educational excellence, and Kharadi, in particular, blends professional exposure with an ideal learning environment.

GoDigi Infotech – Leading the Way in Data Science Education

GoDigi Infotech is a recognized name when it comes to professional IT training in Pune. Specializing in future-forward technologies, GoDigi has designed its Data Science Course in Kharadi Pune to meet industry standards and deliver practical knowledge that can be immediately applied in real-world scenarios.

Here’s why GoDigi Infotech is the best choice for aspiring data scientists:

Experienced Trainers: Learn from industry experts with real-time project experience.

Practical Approach: Emphasis on hands-on training, real-time datasets, and mini-projects.

Placement Assistance: Strong industry tie-ups and dedicated placement support.

Flexible Batches: Weekday and weekend options to suit working professionals and students.

Comprehensive Curriculum: Covering Python, Machine Learning, Deep Learning, SQL, Power BI, and more.

Course Highlights – What You’ll Learn

The Data Science Course at GoDigi Infotech is crafted to take you from beginner to professional. The curriculum covers:

Python for Data Science: Basic to advanced Python programming; data manipulation using Pandas and NumPy; data visualization using Matplotlib and Seaborn.

Statistics & Probability: Descriptive statistics and probability distributions; hypothesis testing; inferential statistics.

Machine Learning: Supervised and unsupervised learning; algorithms such as Linear Regression, Decision Trees, Random Forest, SVM, and K-Means Clustering.

Deep Learning: Neural networks; TensorFlow and Keras frameworks; Natural Language Processing (NLP).

Data Handling Tools: SQL for database management; Power BI/Tableau for data visualization; Excel for quick analysis.

Capstone Projects: Real-life business problems; end-to-end data science project execution.

By the end of the course, learners will have built an impressive portfolio that showcases their data science expertise.

Career Opportunities After Completing the Course

The demand for data science professionals is surging across sectors such as finance, healthcare, e-commerce, and IT. By completing a Data Science Course in Kharadi Pune at GoDigi Infotech, you unlock access to roles such as:

Data Scientist

Data Analyst

Machine Learning Engineer

AI Developer

Business Intelligence Analyst

Statistical Analyst

Data Engineer

Whether you are a fresh graduate or a working professional planning to shift to the tech domain, this course offers the ideal foundation and growth trajectory.

Why GoDigi’s Location in Kharadi Gives You the Edge

Being located in Kharadi, GoDigi Infotech offers unmatched advantages:

Networking Opportunities: Get access to tech meetups, seminars, and hiring events.

Internships & Live Projects: Collaborations with startups and MNCs in and around Kharadi.

Easy Accessibility: Well-connected with public transport, metro, and major roads.

Who Should Enroll in This Course?

The Data Science Course in Kharadi Pune by GoDigi Infotech is perfect for:

Final year students looking for a tech career

IT professionals aiming to upskill

Analysts or engineers looking to switch careers

Entrepreneurs and managers wanting to understand data analytics

Enthusiasts with a non-technical background willing to learn

There’s no strict prerequisite—just your interest and commitment to learning.

Visit Us: GoDigi Infotech - Google Map Location

Located in the heart of Kharadi, Pune, GoDigi Infotech is your stepping stone to a data-driven future. Explore the campus, talk to our advisors, and take the first step toward a transformative career.

Conclusion: Your Data Science Journey Begins Here

In a world where data is the new oil, the ability to analyze and act on information is a superpower. If you're ready to build a rewarding, future-proof career, enrolling in a Data Science Course in Kharadi Pune at GoDigi Infotech is your smartest move. With expert training, practical exposure, and strong placement support, GoDigi is committed to turning beginners into industry-ready data scientists. Don’t wait for opportunities; create them. Enroll today with GoDigi Infotech and turn data into your career advantage.
