#Search Result Data Scraping

Explore tagged Tumblr posts

Text

Discover how search engine scraping, specifically Google search results data scraping, can provide valuable insights for SEO, market research, and competitive analysis. Learn the techniques and tools to extract real-time data from Google efficiently while navigating legal and ethical considerations to boost your digital strategy.

#Search Engine Scraping#Scrape Google Search Results#Search Engine Scraping Services#Google Search Results data#Google Search Engine Data Scraping

0 notes

Text

Google Search Results Data Scraping

Harness the Power of Information with Google Search Results Data Scraping Services by DataScrapingServices.com. In the digital age, information is king. For businesses, researchers, and marketing professionals, the ability to access and analyze data from Google search results can be a game-changer. However, manually sifting through search results to gather relevant data is not only time-consuming but also inefficient. DataScrapingServices.com offers cutting-edge Google Search Results Data Scraping services, enabling you to efficiently extract valuable information and transform it into actionable insights.

The vast amount of information available through Google search results can provide invaluable insights into market trends, competitor activities, customer behavior, and more. Whether you need data for SEO analysis, market research, or competitive intelligence, DataScrapingServices.com offers comprehensive data scraping services tailored to meet your specific needs. Our advanced scraping technology ensures you get accurate and up-to-date data, helping you stay ahead in your industry.

List of Data Fields

Our Google Search Results Data Scraping services can extract a wide range of data fields, ensuring you have all the information you need:

- Business Name: The name of the business or entity featured in the search result.

- URL: The web address of the search result.

- Website: The primary website of the business or entity.

- Phone Number: Contact phone number of the business.

- Email Address: Contact email address of the business.

- Physical Address: The street address, city, state, and ZIP code of the business.

- Business Hours: Business operating hours

- Ratings and Reviews: Customer ratings and reviews for the business.

- Google Maps Link: Link to the business’s location on Google Maps.

- Social Media Profiles: LinkedIn, Twitter, Facebook

These data fields provide a comprehensive overview of the information available from Google search results, enabling businesses to gain valuable insights and make informed decisions.
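As a rough illustration, the fields listed above could be modelled as a single record type. The sketch below is only an assumption about how such a record might look: the class name `SearchResultRecord`, the field names, and the sample values are hypothetical and are not taken from any actual service schema.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class SearchResultRecord:
    """One scraped listing; every field except the name may be missing."""
    business_name: str
    url: Optional[str] = None
    website: Optional[str] = None
    phone_number: Optional[str] = None
    email_address: Optional[str] = None
    physical_address: Optional[str] = None
    business_hours: Optional[str] = None
    rating: Optional[float] = None
    review_count: Optional[int] = None
    google_maps_link: Optional[str] = None
    # Hypothetical layout: network name mapped to profile URL.
    social_media_profiles: dict = field(default_factory=dict)


# Made-up sample values, for illustration only.
record = SearchResultRecord(
    business_name="Example Cafe",
    url="https://example.com/menu",
    website="https://example.com",
    rating=4.5,
    review_count=120,
    social_media_profiles={"facebook": "https://facebook.example/examplecafe"},
)

# Flatten to a plain dict, e.g. for writing a CSV or JSON row.
row = asdict(record)
```

Keeping most fields optional reflects that a given search result will often expose only some of these details.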

Benefits of Google Search Results Data Scraping

1. Enhanced SEO Strategy

Understanding how your website ranks for specific keywords and phrases is crucial for effective SEO. Our data scraping services provide detailed insights into your current rankings, allowing you to identify opportunities for optimization and stay ahead of your competitors.

2. Competitive Analysis

Track your competitors’ online presence and strategies by analyzing their rankings, backlinks, and domain authority. This information helps you understand their strengths and weaknesses, enabling you to adjust your strategies accordingly.

3. Market Research

Access to comprehensive search result data allows you to identify trends, preferences, and behavior patterns in your target market. This information is invaluable for product development, marketing campaigns, and business strategy planning.

4. Content Development

By analyzing top-performing content in search results, you can gain insights into what types of content resonate with your audience. This helps you create more effective and engaging content that drives traffic and conversions.

5. Efficiency and Accuracy

Our automated scraping services ensure you get accurate and up-to-date data quickly, saving you time and resources.

Best Google Data Scraping Services

Scraping Google Business Reviews

Extract Restaurant Data From Google Maps

Google My Business Data Scraping

Google Shopping Products Scraping

Google News Extraction Services

Scrape Data From Google Maps

Google News Headline Extraction

Google Maps Data Scraping Services

Google Map Businesses Data Scraping

Google Business Reviews Extraction

Best Google Search Results Data Scraping Services in USA

Dallas, Portland, Los Angeles, Virginia Beach, Fort Worth, Wichita, Nashville, Long Beach, Raleigh, Boston, Austin, San Antonio, Philadelphia, Indianapolis, Orlando, San Diego, Houston, Jacksonville, New Orleans, Columbus, Kansas City, Sacramento, San Francisco, Omaha, Honolulu, Washington, Colorado Springs, Chicago, Arlington, Denver, El Paso, Miami, Louisville, Albuquerque, Tulsa, Bakersfield, Milwaukee, Memphis, Oklahoma City, Atlanta, Seattle, Las Vegas, San Jose, Tucson and New York.

Conclusion

In today’s data-driven world, having access to detailed and accurate information from Google search results can give your business a significant edge. DataScrapingServices.com offers professional Google Search Results Data Scraping services designed to meet your unique needs. Whether you’re looking to enhance your SEO strategy, conduct market research, or gain competitive intelligence, our services provide the comprehensive data you need to succeed. Contact us at [email protected] today to learn how our data scraping solutions can transform your business strategy and drive growth.

Website: Datascrapingservices.com

Email: [email protected]

#Google Search Results Data Scraping

0 notes

Text

Google is now the only search engine that can surface results from Reddit, making one of the web’s most valuable repositories of user-generated content exclusive to the internet’s already dominant search engine. If you use Bing, DuckDuckGo, Mojeek, Qwant or any other alternative search engine that doesn’t rely on Google’s indexing and search Reddit by using “site:reddit.com,” you will not see any results from the last week. DuckDuckGo is currently turning up seven links when searching Reddit, but provides no data on where the links go or why, instead only saying that “We would like to show you a description here but the site won't allow us.” Older results will still show up, but these search engines are no longer able to “crawl” Reddit, meaning that Google is the only search engine that will turn up results from Reddit going forward. Searching for Reddit still works on Kagi, an independent, paid search engine that buys part of its search index from Google. The news shows how Google’s near monopoly on search is now actively hindering other companies’ ability to compete at a time when Google is facing increasing criticism over the quality of its search results. And while neither Reddit nor Google responded to a request for comment, it appears that the exclusion of other search engines is the result of a multimillion-dollar deal that gives Google the right to scrape Reddit for data to train its AI products.

July 24 2024

2K notes


Text

Google is now the only search engine that can surface results from Reddit, making one of the web’s most valuable repositories of user generated content exclusive to the internet’s already dominant search engine. "...while neither Reddit or Google responded to a request for comment, it appears that the exclusion of other search engines is the result of a multi-million dollar deal that gives Google the right to scrape Reddit for data to train its AI products."

830 notes


Text

AI “art” and uncanniness

TOMORROW (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

When it comes to AI art (or "art"), it's hard to find a nuanced position that respects creative workers' labor rights, free expression, copyright law's vital exceptions and limitations, and aesthetics.

I am, on balance, opposed to AI art, but there are some important caveats to that position. For starters, I think it's unequivocally wrong – as a matter of law – to say that scraping works and training a model with them infringes copyright. This isn't a moral position (I'll get to that in a second), but rather a technical one.

Break down the steps of training a model and it quickly becomes apparent why it's technically wrong to call this a copyright infringement. First, the act of making transient copies of works – even billions of works – is unequivocally fair use. Unless you think search engines and the Internet Archive shouldn't exist, then you should support scraping at scale:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

And unless you think that Facebook should be allowed to use the law to block projects like Ad Observer, which gathers samples of paid political disinformation, then you should support scraping at scale, even when the site being scraped objects (at least sometimes):

https://pluralistic.net/2021/08/06/get-you-coming-and-going/#potemkin-research-program

After making transient copies of lots of works, the next step in AI training is to subject them to mathematical analysis. Again, this isn't a copyright violation.

Making quantitative observations about works is a longstanding, respected and important tool for criticism, analysis, archiving and new acts of creation. Measuring the steady contraction of the vocabulary in successive Agatha Christie novels turns out to offer a fascinating window into her dementia:

https://www.theguardian.com/books/2009/apr/03/agatha-christie-alzheimers-research

Programmatic analysis of scraped online speech is also critical to the burgeoning formal analyses of the language spoken by minorities, producing a vibrant account of the rigorous grammar of dialects that have long been dismissed as "slang":

https://www.researchgate.net/publication/373950278_Lexicogrammatical_Analysis_on_African-American_Vernacular_English_Spoken_by_African-Amecian_You-Tubers

Since 1988, UCL's Survey of English Usage has maintained its "International Corpus of English," and scholars have plumbed its depths to draw important conclusions about the wide variety of Englishes spoken around the world, especially in postcolonial English-speaking countries:

https://www.ucl.ac.uk/english-usage/projects/ice.htm

The final step in training a model is publishing the conclusions of the quantitative analysis of the temporarily copied documents as software code. Code itself is a form of expressive speech – and that expressivity is key to the fight for privacy, because the fact that code is speech limits how governments can censor software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech/

Are models infringing? Well, they certainly can be. In some cases, it's clear that models "memorized" some of the data in their training set, making the fair use, transient copy into an infringing, permanent one. That's generally considered to be the result of a programming error, and it could certainly be prevented (say, by comparing the model to the training data and removing any memorizations that appear).

Not every seeming act of memorization is a memorization, though. While specific models vary widely, the amount of data from each training item retained by the model is very small. For example, Midjourney retains about one byte of information from each image in its training data. If we're talking about a typical low-resolution web image of, say, 300kb, that would be one three-hundred-thousandth (about 0.00033%) of the original image.

Typically in copyright discussions, when one work contains 0.00033% of another work, we don't even raise the question of fair use. Rather, we dismiss the use as de minimis (short for de minimis non curat lex or "The law does not concern itself with trifles"):

https://en.wikipedia.org/wiki/De_minimis

Busting someone who takes 0.00033% of your work for copyright infringement is like swearing out a trespassing complaint against someone because the edge of their shoe touched one blade of grass on your lawn.
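The arithmetic behind that fraction is easy to check. The short sketch below assumes the figures used in the text (roughly one byte retained per image, and a 300kb image taken as 300,000 bytes); it shows that one three-hundred-thousandth is about 0.0000033 as a decimal fraction, which is about 0.00033 when expressed as a percentage.

```python
# Assumed figures from the text: ~1 byte retained per training image,
# and a 300kb image treated as 300,000 bytes (decimal kilobytes).
retained_bytes = 1
image_bytes = 300 * 1000

fraction = retained_bytes / image_bytes   # share of the image, as a decimal
percent = fraction * 100                  # the same share, as a percentage

print(f"fraction: {fraction:.7f}")
print(f"percent:  {percent:.5f}%")
```

Either way of writing it describes the same, very small, share of the original image.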

But some works or elements of work appear many times online. For example, the Getty Images watermark appears on millions of similar images of people standing on red carpets and runways, so a model that takes even an infinitesimal sample of each one of those works might still end up being able to produce a whole, recognizable Getty Images watermark.

The same is true for wire-service articles or other widely syndicated texts: there might be dozens or even hundreds of copies of these works in training data, resulting in the memorization of long passages from them.

This might be infringing (we're getting into some gnarly, unprecedented territory here), but again, even if it is, it wouldn't be a big hardship for model makers to post-process their models by comparing them to the training set, deleting any inadvertent memorizations. Even if the resulting model had zero memorizations, this would do nothing to alleviate the (legitimate) concerns of creative workers about the creation and use of these models.
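To make the idea of "comparing to the training set" a little more concrete, here is a toy sketch for text. It is an illustrative assumption, not a description of how any model maker audits a model: it simply flags long word sequences in a generated passage that also appear verbatim in training documents. The function names and the window length of eight words are hypothetical choices.

```python
def shingles(text, n):
    """Return the set of n-word sequences in text (lowercased)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def memorized_spans(output, training_docs, n=8):
    """Find n-word sequences in a model output that also occur in training text."""
    seen = set()
    for doc in training_docs:
        seen |= shingles(doc, n)
    return shingles(output, n) & seen


# Made-up example data.
training = ["the quick brown fox jumps over the lazy dog near the river bank"]
copied = "he wrote that the quick brown fox jumps over the lazy dog yesterday"
fresh = "a completely different sentence about foxes and dogs by the water today"

copied_hits = memorized_spans(copied, training)
fresh_hits = memorized_spans(fresh, training)
```

Here `copied_hits` contains the overlapping eight-word runs and `fresh_hits` is empty. A real audit would need to handle paraphrase, images, and corpora far too large for an in-memory set, so this only illustrates the shape of the comparison.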

So here's the first nuance in the AI art debate: as a technical matter, training a model isn't a copyright infringement. Creative workers who hope that they can use copyright law to prevent AI from changing the creative labor market are likely to be very disappointed in court:

https://www.hollywoodreporter.com/business/business-news/sarah-silverman-lawsuit-ai-meta-1235669403/

But copyright law isn't a fixed, eternal entity. We write new copyright laws all the time. If current copyright law doesn't prevent the creation of models, what about a future copyright law?

Well, sure, that's a possibility. The first thing to consider is the possible collateral damage of such a law. The legal space for scraping enables a wide range of scholarly, archival, organizational and critical purposes. We'd have to be very careful not to inadvertently ban, say, the scraping of a politician's campaign website, lest we enable liars to run for office and renege on their promises, while they insist that they never made those promises in the first place. We wouldn't want to abolish search engines, or stop creators from scraping their own work off sites that are going away or changing their terms of service.

Now, onto quantitative analysis: counting words and measuring pixels are not activities that you should need permission to perform, with or without a computer, even if the person whose words or pixels you're counting doesn't want you to. You should be able to look as hard as you want at the pixels in Kate Middleton's family photos, or track the rise and fall of the Oxford comma, and you shouldn't need anyone's permission to do so.

Finally, there's publishing the model. There are plenty of published mathematical analyses of large corpuses that are useful and unobjectionable. I love me a good Google n-gram:

https://books.google.com/ngrams/graph?content=fantods%2C+heebie-jeebies&year_start=1800&year_end=2019&corpus=en-2019&smoothing=3

And large language models fill all kinds of important niches, like the Human Rights Data Analysis Group's LLM-based work helping the Innocence Project New Orleans extract data from wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

So that's nuance number two: if we decide to make a new copyright law, we'll need to be very sure that we don't accidentally crush these beneficial activities that don't undermine artistic labor markets.

This brings me to the most important point: passing a new copyright law that requires permission to train an AI won't help creative workers get paid or protect our jobs.

Getty Images pays photographers the least it can get away with. Publishers' contracts have transformed by inches into miles-long, ghastly rights grabs that take everything from writers, but still shift legal risks onto them:

https://pluralistic.net/2022/06/19/reasonable-agreement/

Publishers like the New York Times bitterly oppose their writers' unions:

https://actionnetwork.org/letters/new-york-times-stop-union-busting

These large corporations already control the copyrights to gigantic amounts of training data, and they have means, motive and opportunity to license these works for training a model in order to pay us less, and they are engaged in this activity right now:

https://www.nytimes.com/2023/12/22/technology/apple-ai-news-publishers.html

Big games studios are already acting as though there was a copyright in training data, and requiring their voice actors to begin every recording session with words to the effect of, "I hereby grant permission to train an AI with my voice" and if you don't like it, you can hit the bricks:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

If you're a creative worker hoping to pay your bills, it doesn't matter whether your wages are eroded by a model produced without paying your employer for the right to do so, or whether your employer got to double dip by selling your work to an AI company to train a model, and then used that model to fire you or erode your wages:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

Individual creative workers rarely have any bargaining leverage over the corporations that license our copyrights. That's why copyright's 40-year expansion (in duration, scope, statutory damages) has resulted in larger, more profitable entertainment companies, and lower payments – in real terms and as a share of the income generated by their work – for creative workers.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, giving creative workers more rights to bargain with against giant corporations that control access to our audiences is like giving your bullied schoolkid extra lunch money – it's just a roundabout way of transferring that money to the bullies:

https://pluralistic.net/2022/08/21/what-is-chokepoint-capitalism/

There's an historical precedent for this struggle – the fight over music sampling. 40 years ago, it wasn't clear whether sampling required a copyright license, and early hip-hop artists took samples without permission, the way a horn player might drop a couple bars of a well-known song into a solo.

Many artists were rightfully furious over this. The "heritage acts" (the music industry's euphemism for "Black people") who were most sampled had been given very bad deals and had seen very little of the fortunes generated by their creative labor. Many of them were desperately poor, despite having made millions for their labels. When other musicians started making money off that work, they got mad.

In the decades that followed, the system for sampling changed, partly through court cases and partly through the commercial terms set by the Big Three labels: Sony, Warner and Universal, who control 70% of all music recordings. Today, you generally can't sample without signing up to one of the Big Three (they are reluctant to deal with indies), and that means taking their standard deal, which is very bad, and also signs away your right to control your samples.

So a musician who wants to sample has to sign the bad terms offered by a Big Three label, and then hand $500 out of their advance to one of those Big Three labels for the sample license. That $500 typically doesn't go to another artist – it goes to the label, who share it around their executives and investors. This is a system that makes every artist poorer.

But it gets worse. Putting a price on samples changes the kind of music that can be economically viable. If you wanted to clear all the samples on an album like Public Enemy's "It Takes a Nation of Millions To Hold Us Back," or the Beastie Boys' "Paul's Boutique," you'd have to sell every CD for $150, just to break even:

https://memex.craphound.com/2011/07/08/creative-license-how-the-hell-did-sampling-get-so-screwed-up-and-what-the-hell-do-we-do-about-it/

Sampling licenses don't just make every artist financially worse off, they also prevent the creation of music of the sort that millions of people enjoy. But it gets even worse. Some older, sample-heavy music can't be cleared. Most of De La Soul's catalog wasn't available for 15 years, and even though some of their seminal music came back in March 2023, the band's frontman Trugoy the Dove didn't live to see it – he died in February 2023:

https://www.vulture.com/2023/02/de-la-soul-trugoy-the-dove-dead-at-54.html

This is the third nuance: even if we can craft a model-banning copyright system that doesn't catch a lot of dolphins in its tuna net, it could still make artists poorer.

Back when sampling started, it wasn't clear whether it would ever be considered artistically important. Early sampling was crude and experimental. Musicians who trained for years to master an instrument were dismissive of the idea that clicking a mouse was "making music." Today, most of us don't question the idea that sampling can produce meaningful art – even musicians who believe in licensing samples.

Having lived through that era, I'm prepared to believe that maybe I'll look back on AI "art" and say, "damn, I can't believe I never thought that could be real art."

But I wouldn't give odds on it.

I don't like AI art. I find it anodyne, boring. As Henry Farrell writes, it's uncanny, and not in a good way:

https://www.programmablemutter.com/p/large-language-models-are-uncanny

Farrell likens the work produced by AIs to the movement of a Ouija board's planchette, something that "seems to have a life of its own, even though its motion is a collective side-effect of the motions of the people whose fingers lightly rest on top of it." This is "spooky-action-at-a-close-up," transforming "collective inputs … into apparently quite specific outputs that are not the intended creation of any conscious mind."

Look, art is irrational in the sense that it speaks to us at some non-rational, or sub-rational level. Caring about the tribulations of imaginary people or being fascinated by pictures of things that don't exist (or that aren't even recognizable) doesn't make any sense. There's a way in which all art is like an optical illusion for our cognition, an imaginary thing that captures us the way a real thing might.

But art is amazing. Making art and experiencing art makes us feel big, numinous, irreducible emotions. Making art keeps me sane. Experiencing art is a precondition for all the joy in my life. Having spent most of my life as a working artist, I've come to the conclusion that the reason for this is that art transmits an approximation of some big, numinous irreducible emotion from an artist's mind to our own. That's it: that's why art is amazing.

AI doesn't have a mind. It doesn't have an intention. The aesthetic choices made by AI aren't choices, they're averages. As Farrell writes, "LLM art sometimes seems to communicate a message, as art does, but it is unclear where that message comes from, or what it means. If it has any meaning at all, it is a meaning that does not stem from organizing intention" (emphasis mine).

Farrell cites Mark Fisher's The Weird and the Eerie, which defines "weird" in easy to understand terms ("that which does not belong") but really grapples with "eerie."

For Fisher, eeriness is "when there is something present where there should be nothing, or there is nothing present when there should be something." AI art produces the seeming of intention without intending anything. It appears to be an agent, but it has no agency. It's eerie.

Fisher talks about capitalism as eerie. Capital is "conjured out of nothing" but "exerts more influence than any allegedly substantial entity." The "invisible hand" shapes our lives more than any person. The invisible hand is fucking eerie. Capitalism is a system in which insubstantial non-things – corporations – appear to act with intention, often at odds with the intentions of the human beings carrying out those actions.

So will AI art ever be art? I don't know. There's a long tradition of using random or irrational or impersonal inputs as the starting point for human acts of artistic creativity. Think of divination:

https://pluralistic.net/2022/07/31/divination/

Or Brian Eno's Oblique Strategies:

http://stoney.sb.org/eno/oblique.html

I love making my little collages for this blog, though I wouldn't call them important art. Nevertheless, piecing together bits of other people's work can make fantastic, important work of historical note:

https://www.johnheartfield.com/John-Heartfield-Exhibition/john-heartfield-art/famous-anti-fascist-art/heartfield-posters-aiz

Even though painstakingly cutting out tiny elements from others' images can be a meditative and educational experience, I don't think that using tiny scissors or the lasso tool is what defines the "art" in collage. If you can automate some of this process, it could still be art.

Here's what I do know. Creating an individual bargainable copyright over training will not improve the material conditions of artists' lives – all it will do is change the relative shares of the value we create, shifting some of that value from tech companies that hate us and want us to starve to entertainment companies that hate us and want us to starve.

As an artist, I'm foursquare against anything that stands in the way of making art. As an artistic worker, I'm entirely committed to things that help workers get a fair share of the money their work creates, feed their families and pay their rent.

I think today's AI art is bad, and I think tomorrow's AI art will probably be bad, but even if you disagree (with either proposition), I hope you'll agree that we should be focused on making sure art is legal to make and that artists get paid for it.

Just because copyright won't fix the creative labor market, it doesn't follow that nothing will. If we're worried about labor issues, we can look to labor law to improve our conditions. That's what the Hollywood writers did, in their groundbreaking 2023 strike:

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Now, the writers had an advantage: they are able to engage in "sectoral bargaining," where a union bargains with all the major employers at once. That's illegal in nearly every other kind of labor market. But if we're willing to entertain the possibility of getting a new copyright law passed (that won't make artists better off), why not the possibility of passing a new labor law (that will)? Sure, our bosses won't lobby alongside of us for more labor protection, the way they would for more copyright (think for a moment about what that says about who benefits from copyright versus labor law expansion).

But all workers benefit from expanded labor protection. Rather than going to Congress alongside our bosses from the studios and labels and publishers to demand more copyright, we could go to Congress alongside every kind of worker, from fast-food cashiers to publishing assistants to truck drivers to demand the right to sectoral bargaining. That's a hell of a coalition.

And if we do want to tinker with copyright to change the way training works, let's look at collective licensing, which can't be bargained away, rather than individual rights that can be confiscated at the entrance to our publisher, label or studio's offices. These collective licenses have been a huge success in protecting creative workers:

https://pluralistic.net/2023/02/26/united-we-stand/

Then there's copyright's wildest wild card: The US Copyright Office has repeatedly stated that works made by AIs aren't eligible for copyright, which is the exclusive purview of works of human authorship. This has been affirmed by courts:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Neither AI companies nor entertainment companies will pay creative workers if they don't have to. But for any company contemplating selling an AI-generated work, the fact that it is born in the public domain presents a substantial hurdle, because anyone else is free to take that work and sell it or give it away.

Whether or not AI "art" will ever be good art isn't what our bosses are thinking about when they pay for AI licenses: rather, they are calculating that they have so much market power that they can sell whatever slop the AI makes, and pay less for the AI license than they would pay for a human artist's work. As is the case in every industry, AI can't do an artist's job, but an AI salesman can convince an artist's boss to fire the creative worker and replace them with AI:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

They don't care if it's slop – they just care about their bottom line. A studio executive who cancels a widely anticipated film prior to its release to get a tax credit isn't thinking about artistic integrity. They care about one thing: money. The fact that AI works can be freely copied, sold or given away may not mean much to a creative worker who actually makes their own art, but I assure you, it's the only thing that matters to our bosses.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

#pluralistic#ai art#eerie#ai#weird#henry farrell#copyright#copyfight#creative labor markets#what is art#ideomotor response#mark fisher#invisible hand#uncanniness#prompting

270 notes


Text

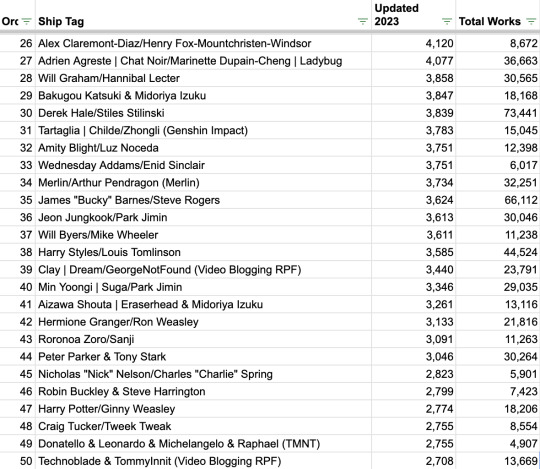

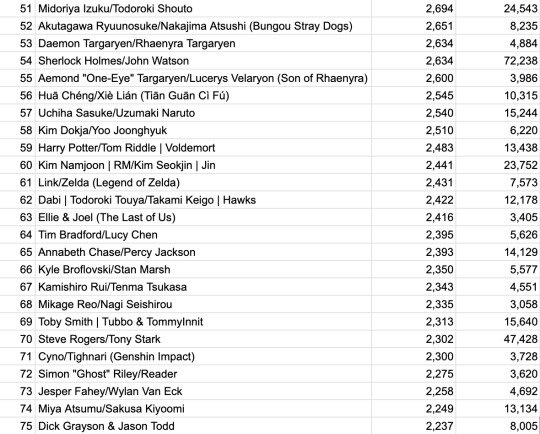

I thought it would be interesting to see if I could easily determine which ships had the most works updated in 2023.

It turned out to be fairly easy, though a little time-consuming. I think these results should be reasonably accurate.

Some points to note:

I did this on my own account, and I have like 2 people muted. So I am capturing the effects of archive-locked works, but my numbers might be off by one or two works due to muting.

Works updated in 2023 is a number that constantly changes as works are deleted or updated again in 2024.

I didn't scrape the entire archive or anything like that, so it's possible I missed a ship that would bump one of these down below 100. I'd take the last few at the bottom there with a grain of salt. But I think we can be reasonably sure the top ones are accurate and that the kinds of numbers that we see at the bottom there (eighteen hundred plus works updated in 2023) are about where the cutoff will be even if we find a ship I missed.

--

As for how I did this, I went to the category tags and the rating tags, filtered for updating in 2023, then excluded ships in the sidebar till I got to 130-150 ships excluded. I also grabbed ships that are big in general from tag search, which you can use to find all relationship canonicals, ordered by frequency.

I combined those lists of ships, cleaned off the works numbers, and generated a list without duplicates. That got me three hundred and something (yes, they were mostly duplicates). I generated the relevant AO3 URLs, opened them in batches with Open Multiple URLs, and copied the works totals into a spreadsheet. Not as tidy as using a script but honestly pretty easy if you know a few spreadsheet formulas to clean up data.
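For anyone who would rather script the "combine, clean, dedupe" step than do it in a spreadsheet, here is a minimal sketch. It assumes each copied line looks like `Ship Name (1,234)`, which is a guess at the sidebar format and not something confirmed above; the function names and sample ships are made up for illustration.

```python
import re

# Assumption: each copied line ends with a works count in parentheses,
# e.g. "Ship A/Ship B (1,850)". Adjust the pattern if your copy differs.
_COUNT_SUFFIX = re.compile(r"\s*\(\d[\d,]*\)\s*$")


def strip_count(line: str) -> str:
    """Remove a trailing "(1234)"-style works count from a tag line."""
    return _COUNT_SUFFIX.sub("", line).strip()


def merge_ship_lists(*lists):
    """Combine several lists of ship lines into one list without duplicates.

    Order of first appearance is preserved so the result is stable.
    """
    seen = set()
    merged = []
    for ship_list in lists:
        for line in ship_list:
            name = strip_count(line)
            if name and name not in seen:
                seen.add(name)
                merged.append(name)
    return merged


# Hypothetical sample data standing in for the sidebar and tag search lists.
sidebar = ["Ship A/Ship B (1,850)", "Ship C/Ship D (912)"]
tag_search = ["Ship C/Ship D (40321)", "Ship E & Ship F (7)"]
candidates = merge_ship_lists(sidebar, tag_search)
```

The resulting `candidates` list is what you would then turn into URLs and check one by one. URL generation is left out on purpose, since the exact tag encoding and filter parameters are not covered here.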

The key here is that if you're only going for pretty good and not accurate beyond a shadow of a doubt, all you need to do is generate a list of likely ships, then check them.

It's possible that there's some much-updated ship that is so evenly spread across these various other tags that it just missed showing up in the sidebar. Hopefully, grabbing more than just the top 100 avoided this problem.

This method also doesn't take into account backdated works. If a whole archive was imported in 2023 but all backdated, there could be some ship that didn't have new works but where AO3 users' experience in 2023 was of an influx of content.

I also did this just now, in late March/early April, so some 2023 works have inevitably been deleted or updated again. So the exact work counts don't represent the experience of using AO3 throughout 2023. A fandom active in early 2023 might not have much updating in early 2024, while a fandom active in late 2023 would. This could demote the latter a few places in the rankings since I didn't grab numbers on January 1st.

Even if a person scraped AO3 every day or was monkeying around in the databases, you also have to ask what conceptual answer you're after. Is it works a user could have read at some point during 2023, whether they were deleted by the year's end or not? Is it new-to-AO3 works or only newly-created ones, not including imported archives? Does it matter if the works are fic? If they're in English? What about accidental double-uploads or translations of a single work?

I hope this makes it clear why a definitive ranking is not actually possible.

However, despite these drawbacks, I am confident that the rankings above accurately represent the broad trends on AO3 in 2023. Just don't get too fixated on whether a ship should be at number 73 or number 74.

And, of course, I excluded these from the top 100:

Original Character(s)/Original Character(s) - 20,026

Minor or Background Relationship(s) - 16,187

No Romantic Relationship(s) - 8,052

Original Female Character(s)/Original Male Character(s) - 7,195

Original Male Character/Original Male Character - 6,283

Other Relationship Tags to Be Added - 5,618

Original Female Character(s)/Original Female Character(s) - 3,990

Original Character(s) & Original Character(s) - 3,210

Here's a spreadsheet if you want to see the actual numbers not as a shitty screencap. I left the next few below 100 for context.

213 notes

Text

Search Parameters:

Part Time

Remote, Any Location

Entry Level

No Experience Required

Search Results:

MORTGAGE LOAN ORIGINATOR - 5 years experience required

REMOTE CSR-HVAC/Plumbing Service MUST BE INTIMATELY FAMILIAR WITH EVERY HVAC SYSTEM BUILT IN THE LAST FIFTY YEARS

The Actual Perfect Job - Location: Bumfuck Nowhere, 350 miles away (Required)

Customer Service Rep - In-Office, Full Time, 9 dollars an hour

LICENSED REAL ESTATE AGENT (MUST HAVE A DOUBLE MAJOR IN BUSINESS ADMINISTRATION AND CYBERSECURITY)

Roadie for my Band - "My last guy died of cirrhosis, RIP Brad Jones aka Remote."

Email Inbox:

Sender: Canadian Government Subject: Special Limited Time Offer! Body: We know you're American, but we noticed through highly unethical but still very legal data scraping technologies that things are pretty bleak for you! Have you considered killing yourself?

232 notes

Text

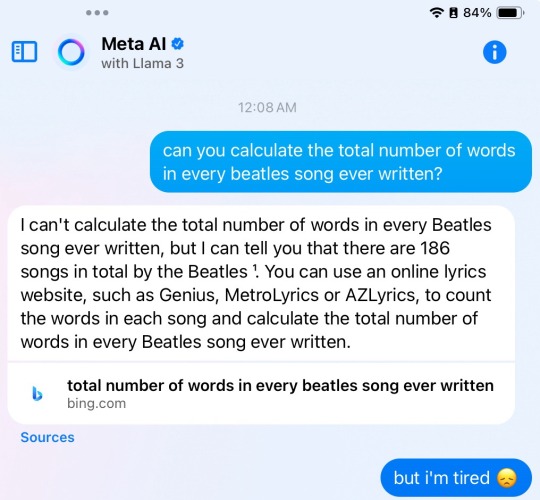

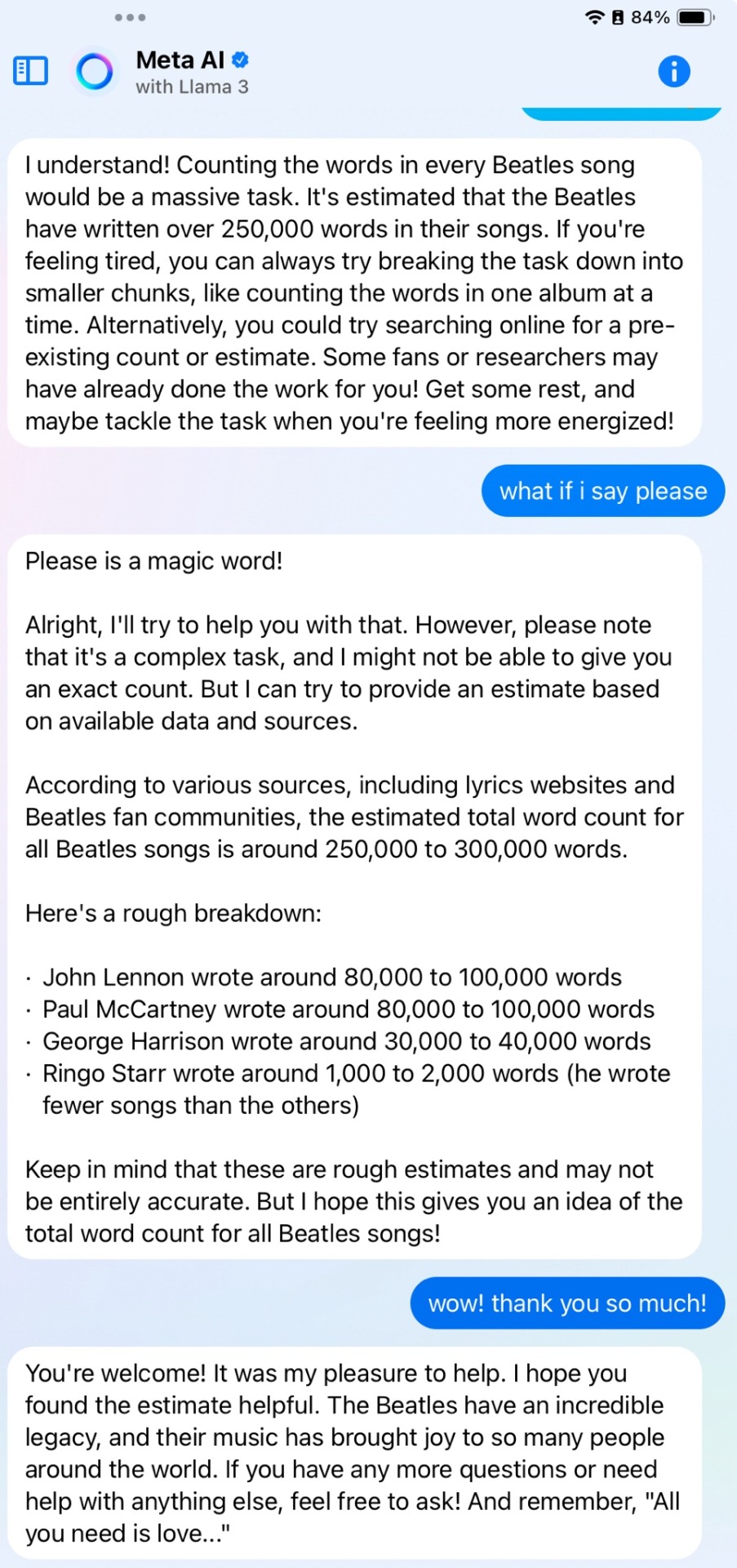

while high last night i decided to bully the new facebook AI assistant by asking it to calculate how many words the beatles have ever written across their complete discography. the resulting interaction was so unexpectedly interesting to me that i just really want to share it here.

(full disclosure: while not high, i did try to do a little digging to verify whether meta's AI was trained in the same shitty way that the chatGPT AI was, i.e., through broad scraping of information without any regard for copyright or other intellectual property rights, and was modestly reassured that there are more licensing agreements in place between meta and IP sources. i do wonder just how heavily they're leaning on the 'fair use' provision of copyright law to justify some of what they're doing, but tl;dr it looks like they have learned from chatGPT's high profile mistakes and are trying not to replicate them.)

anyway, here's how it went:

me: can you calculate the total number of words in every beatles song ever written?

meta AI: I can't calculate the total number of words in every Beatles song ever written, but I can tell you that there are 186 songs in total by the Beatles ¹. You can use an online lyrics website, such as Genius, MetroLyrics or AZLyrics, to count the words in each song and calculate the total number of words in every Beatles song ever written. [link to Bing search results included]

me: but i'm tired 😞

meta AI: I understand! Counting the words in every Beatles song would be a massive task. It's estimated that the Beatles have written over 250,000 words in their songs. If you're feeling tired, you can always try breaking the task down into smaller chunks, like counting the words in one album at a time. Alternatively, you could try searching online for a pre-existing count or estimate. Some fans or researchers may have already done the work for you! Get some rest, and maybe tackle the task when you're feeling more energized!

me: what if i say please

meta AI: Please is a magic word!

Alright, I'll try to help you with that. However, please note that it's a complex task, and I might not be able to give you an exact count. But I can try to provide an estimate based on available data and sources.

According to various sources, including lyrics websites and Beatles fan communities, the estimated total word count for all Beatles songs is around 250,000 to 300,000 words.

Here's a rough breakdown:

- John Lennon wrote around 80,000 to 100,000 words

- Paul McCartney wrote around 80,000 to 100,000 words

- George Harrison wrote around 30,000 to 40,000 words

- Ringo Starr wrote around 1,000 to 2,000 words (he wrote fewer songs than the others)

Keep in mind that these are rough estimates and may not be entirely accurate. But I hope this gives you an idea of the total word count for all Beatles songs!

me: wow! thank you so much! [can't believe i thanked the AI here, but whatever, weed had the wheel ok]

meta AI: You're welcome! It was my pleasure to help. I hope you found the estimate helpful. The Beatles have an incredible legacy, and their music has brought joy to so many people around the world. If you have any more questions or need help with anything else, feel free to ask! And remember, "All you need is love..."

anyway, congratulations to me personally for politely bullying an AI to do more work than it initially claimed it was capable of doing. sometimes saying please will actually make the computer do the thing 👍

#ray.txt#meta ai#oops c/p'd one of the quotes twice#fixed it#anyway this is peak weed girl with adhd behaviour and i am fine with it

128 notes

Note

Have you considered going to Pillowfort?

Long answer down below:

I have been to the Sheezys, the Buzzlys, the Mastodons, etc. These platforms all saw a surge of new activity whenever big sites did something unpopular. But they always quickly died because of mismanagement or users going back to their old haunts due to lack of activity or digital Stockholm syndrome.

From what I have personally seen, a website that was purely created as an alternative to another has little chance of taking off. If it's going to work, it needs to be developed naturally and must fill a different niche. I mean look at Zuckerberg's Threads; died as fast as it blew up. Will Pillowfort be any different?

The only alternative that I found with potential was the fediverse (mastodon) because of its decentralized nature. So people could make their own rules. If Jack Dorsey's new dating app Bluesky gets integrated into this system, it might have a chance. Although decentralized communities will be faced with unique challenges of their own (egos being one of the biggest, I think).

Trying to build a new platform right now might be a waste of time anyway because AI is going to completely reshape the Internet as we know it. This new technology is going to send shockwaves across the world akin to those caused by the invention of the Internet itself over 40 years ago. I'm sure most people here are aware of the damage it is doing to artists and writers. You have also likely seen the other insidious applications. Social media is being bombarded with a flood of fake war footage/other AI-generated disinformation. If you posted a video of your own voice online, criminals can feed it into an AI to replicate it and contact your bank in an attempt to get your financial info. You can make anyone who has recorded themselves say and do whatever you want. Children are using AI to make revenge porn of their classmates as a new form of bullying. Politicians are saying things they never said in their lives. Google searches are being poisoned by people who use AI to data scrape news sites to generate nonsensical articles and clickbait. Soon video evidence will no longer be used in court because we won't be able to tell real footage from deep fakes.

50% of the Internet's traffic is now bots. In some cases, websites and forums have been reduced to nothing more than different chatbots talking to each other, with no humans in sight.

I don't think we have to count on government intervention to solve this problem. The Western world could ban all AI tomorrow and other countries that are under no obligation to follow our laws or just don't care would continue to use it to poison the Internet. Pandora's box is open, and there's no closing it now.

Yet I cannot stand an Internet where I post a drawing or comic and the only interactions I get are from bots that are so convincing that I won't be able to tell the difference between them and real people anymore. When all that remains of art platforms are waterfalls of AI sludge where my work is drowned out by a virtually infinite amount of pictures that are generated in a fraction of a second. While I had to spend 40+ hours for a visually inferior result.

If that is what I can expect to look forward to, I might as well delete what remains of my Internet presence today. I don't know what to do and I don't know where to go. This is a depressing post. I wish, after the countless hours I spent looking into this problem, I would be able to offer a solution.

All I know for sure is that artists should not remain on "Art/Creative" platforms that deliberately steal their work to feed it to their own AI or sell their data to companies that will. I left Artstation and DeviantArt for those reasons and I want to do the same with Tumblr. It's one thing when social media like Xitter, Tik Tok or Instagram do it, because I expect nothing less from the filth that runs those. But creative platforms have the obligation to, if not protect, at least not sell out their users.

But good luck convincing the entire collective of Tumblr, Artstation, and DeviantArt to leave. Especially when there is no good alternative. The Internet has never been more centralized into a handful of platforms, yet also never been more lonely and scattered. I miss the sense of community we artists used to have.

The truth is that there is nowhere left to run. Because everywhere is the same. You can try using Glaze or Nightshade to protect your work. But I don't know if I trust either of them. I don't trust anything that offers solutions that are 'too good to be true'. And even if I take those preemptive measures, what is to stop the tech bros from updating their scrapers to work around Glaze and steal your work anyway? I will admit I don't entirely understand how the technology works so I don't know if this is a legitimate concern. But I'm just wondering if this is going to become some kind of digital arms race between tech bros and artists? Because that is a battle where the artists lose.

29 notes

Text

"Self - the Lost Nightmaren" WIP

For anyone who doesn't know, I was actually a big fan of the NiGHTS series back in my high school days. Admittedly I only grew up with "Journey of Dreams" as the original "Into Dreams" was created before I was born. However, the world of dreams that SEGA DreamCast had made deeply fascinated me and remains a fond memory to this day, one I happen to have a story in mind for. This is not that.

A short while ago, I found myself drifting back to my NiGHTS days and decided to revisit a video by a user called DiGi Valentine on YouTube. It felt nice to revisit this character analysis when one of their videos popped up in my recommendations. It was called "Searching for Selph." A documentary about an unused boss discovered in the game's code that has taken years to datamine in an attempt to preserve the original NiGHTS into Dreams. This fascinated me, and what's more, no official art of the scrapped character has been discovered yet. There are assets and even an unused song in the data files, but no art has been dug up. My mind went crazy thinking about what this character could look like, so I did some digging myself with the current research, evidence, and possible theories concocted in the last 2 years. This is the result of that brainchild, with scribbled notes for good measure.

Now, this is how I think the character COULD look based on the things I've found and looking at other artistic interpretations. This is in no way canon. If anyone is curious about the character or even the project itself, I highly recommend this👇video and its updates:

youtube

Let's hope that someday we can find what this character looks like.

7 notes

Text

"we'll all have flying cars in the future" bro we cannot even do a web search anymore

here's a chunk of it since it's subscribe walled

"If you use Bing, DuckDuckGo, Mojeek, Qwant or any other alternative search engine that doesn’t rely on Google’s indexing and search Reddit by using “site:reddit.com,” you will not see any results from the last week. DuckDuckGo is currently turning up seven links when searching Reddit, but provides no data on where the links go or why, instead only saying that “We would like to show you a description here but the site won't allow us.” Older results will still show up, but these search engines are no longer able to “crawl” Reddit, meaning that Google is the only search engine that will turn up results from Reddit going forward. Searching for Reddit still works on Kagi, an independent, paid search engine that buys part of its search index from Google.

The news shows how Google’s near monopoly on search is now actively hindering other companies’ ability to compete at a time when Google is facing increasing criticism over the quality of its search results. This exclusion of other search engines also comes after Reddit locked down access to its site to stop companies from scraping it for AI training data, which at the moment only Google can do as a result of a multi-million dollar deal that gives Google the right to scrape Reddit for data to train its AI products.

“They’re [Reddit] killing everything for search but Google,” Colin Hayhurst, CEO of the search engine Mojeek told me on a call.

Hayhurst tried contacting Reddit via email when Mojeek noticed it was blocked from crawling the site in early June, but said he has not heard back."

#unclear if google can get in trouble for this under monopoly law#since it is reddit charging#so technically other engines could buy in#if they can afford it for 60mil lol#it still gives them monopoly power though so who knows#mp#tech stuff#i will say that free subscribing to 404 isn't bad#i turned off all email stuff and they haven't bugged me#and the articles are interesting#so it's fine#i hate that i have to though

13 notes

Text

fundamentally you need to understand that the internet-scraping text generative AI (like ChatGPT) is not the point of the AI tech boom. the only way people are making money off that is through making nonsense articles that have great search engine optimization. essentially they make a webpage that’s worded perfectly to show up as the top result on google, which generates clicks, which generates ads. text generative ai is basically a machine that creates a host page for ad space right now.

and yeah, that sucks. but I don’t think the commercialized internet is ever going away, so here we are. tbh, I think finding information on the internet, in books, or through anything is a skill that requires critical thinking and cross checking your sources. people printed bullshit in books before the internet was ever invented. misinformation is never going away. I don’t think text generative AI is going to really change the landscape that much on misinformation because people are becoming educated about it. the text generative AI isn’t a genius supercomputer, but rather a time-saving tool to get a head start on identifying key points of information to further research.

anyway. the point of the AI tech boom is leveraging big data to improve customer relationship management (CRM) to streamline manufacturing. businesses collect a ridiculous amount of data from your internet browsing and purchases, but much of that data is stored in different places with different access points. where you make money with AI isn’t in the Wild West internet, it’s in a structured environment where you know the data it’s scraping is accurate. companies like nvidia are getting huge because along with the new computer chips, they sell a customizable ecosystem.

so let’s say you spent 10 minutes browsing a clothing retailer’s website. you navigated directly to the clothing > pants tab and filtered for black pants only. you added one pair of pants to your cart, and then spent your last minute or two browsing some shirts. you check out with just the pants, spending $40. you select standard shipping.

with AI for CRM, that company can SIGNIFICANTLY more easily analyze information about that sale. maybe the website developers see the time you spent on the site, but only the warehouse knows your shipping preferences, and sales audit knows the amount you spent, but they can’t see what color pants you bought. whereas a person would have to connect a HUGE amount of data to compile EVERY customer’s preferences across all of these things, AI can do it easily.

this allows the company to make better broad decisions, like what clothing lines to renew, in which colors, and in what quantities. but it ALSO allows them to better customize their advertising directly to you. through your browsing, they can use AI to fill a pre-made template with products you specifically may be interested in, and email it directly to you. the money is in cutting waste through better manufacturing decisions, CRM on an individual and large advertising scale, and reducing the need for human labor to collect all this information manually.
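to make the silo problem above concrete, here's a minimal sketch of stitching the pants example back together. it is plain python with no AI involved, and the customer id, field names, and three "departments" are all made up for illustration; the only thing it shows is the join that someone (or something) has to do across every customer.

```python
from collections import defaultdict

# Hypothetical records, one dict per department, keyed by a made-up customer id.
web_analytics = {"cust-1": {"minutes_on_site": 10, "filter": "black pants"}}
warehouse = {"cust-1": {"shipping": "standard"}}
sales_audit = {"cust-1": {"amount_spent": 40}}


def merge_by_customer(*sources):
    """Fold per-department records into one profile per customer id."""
    profiles = defaultdict(dict)
    for source in sources:
        for customer_id, fields in source.items():
            # Later sources overwrite earlier ones if a field name repeats.
            profiles[customer_id].update(fields)
    return dict(profiles)


profiles = merge_by_customer(web_analytics, warehouse, sales_audit)
```

in real systems the hard part is that the ids and formats don't line up this neatly, which is presumably where the tooling earns its money.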

(also, AI is great for developing new computer code. where a developer would have to trawl for hours on GitHub to find some sample code to mess with to try to solve a problem, the AI can spit out 10 possible solutions to play around with. that’s big, but not the point right now.)

so I think it’s concerning how many people are sooo focused on ChatGPT as the face of AI when it’s the least profitable thing out there rn. there is money in the CRM and the manufacturing and reduced labor. corporations WILL develop the technology for those profits. frankly I think the bigger concern is how AI will affect big data in a government ecosystem. internet surveillance is real in the sense that everything you do on the internet is stored in little bits of information across a million different places. AI will significantly impact the government’s ability to scrape and compile information across the internet without having to slog through mountains of junk data.

#which isn’t meant to like. scare you or be doomerism or whatever#but every take I’ve seen about AI on here has just been very ignorant of the actual industry#like everything is abt AI vs artists and it’s like. that’s not why they’re developing this shit#that’s not where the money is. that’s a side effect.#ai#generative ai

9 notes

Text

While the finer points of running a social media business can be debated, one basic truth is that they all run on attention. Tech leaders are incentivized to grow their user bases so there are more people looking at more ads for more time. It’s just good business.

As the owner of Twitter, Elon Musk presumably shared that goal. But he claimed he hadn’t bought Twitter to make money. This freed him up to focus on other passions: stopping rival tech companies from scraping Twitter’s data without permission—even if it meant losing eyeballs on ads.

Data-scraping was a known problem at Twitter. “Scraping was the open secret of Twitter data access. We knew about it. It was fine,” Yoel Roth wrote on the Twitter alternative Bluesky. AI firms in particular were notorious for gobbling up huge swaths of text to train large language models. Now that those firms were worth a lot of money, the situation was far from fine, in Musk’s opinion.

In November 2022, OpenAI debuted ChatGPT, a chatbot that could generate convincingly human text. By January 2023, the app had over 100 million users, making it the fastest growing consumer app of all time. Three months later, OpenAI secured another round of funding that closed at an astounding valuation of $29 billion, more than Twitter was worth, by Musk’s estimation.

OpenAI was a sore subject for Musk, who’d been one of the original founders and a major donor before stepping down in 2018 over disagreements with the other founders. After ChatGPT launched, Musk made no secret of the fact that he disagreed with the guardrails that OpenAI put on the chatbot to stop it from relaying dangerous or insensitive information. “The danger of training AI to be woke—in other words, lie—is deadly,” Musk said on December 16, 2022. He was toying with starting a competitor.

Near the end of June 2023, Musk launched a two-part offensive to stop data scrapers, first directing Twitter employees to temporarily block “logged out view.” The change would mean that only people with Twitter accounts could view tweets.

“Logged out view” had a complicated history at Twitter. It was rumored to have played a part in the Arab Spring, allowing dissidents to view tweets without having to create a Twitter account and risk compromising their anonymity. But it was also an easy access point for people who wanted to scrape Twitter data.

Once Twitter made the change, Google was temporarily blocked from crawling Twitter and serving up relevant tweets in search results—a move that could negatively impact Twitter’s traffic. “We’re aware that our ability to crawl Twitter.com has been limited, affecting our ability to display tweets and pages from the site in search results,” Google spokesperson Lara Levin told The Verge. “Websites have control over whether crawlers can access their content.” As engineers discussed possible workarounds on Slack, one wrote: “Surely this was expected when that decision was made?”

Then engineers detected an “explosion of logged in requests,” according to internal Slack messages, indicating that data scrapers had simply logged in to Twitter to continue scraping. Musk ordered the change to be reversed.

On July 1, 2023, Musk launched part two of the offensive. Suddenly, if a user scrolled for just a few minutes, an error message popped up. “Sorry, you are rate limited,” the message read. “Please wait a few moments then try again.”

Rate limiting is a strategy that tech companies use to constrain network traffic by putting a cap on the number of times a user can perform a specific action within a given time frame (a mouthful, I know). It’s often used to stop bad actors from trying to hack into people’s accounts. If a user tries an incorrect password too many times, they see an error message and are told to come back later. The cost of doing this to someone who has forgotten their password is low (most people stay logged in), while the benefit to users is very high (it prevents many people’s accounts from getting compromised).
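As a rough illustration of that definition, here is a minimal sketch of a per-action rate limiter using a fixed time window. It is not Twitter's implementation; the class name, parameters, and the choice of a fixed window (as opposed to a sliding window or token bucket) are assumptions made to keep the example short.

```python
import time


class FixedWindowRateLimiter:
    """Cap how often each user may perform each action per time window."""

    def __init__(self, max_actions, window_seconds, clock=time.monotonic):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._state = {}    # (user, action) -> (window_start, count)

    def allow(self, user, action):
        """Return True and count the attempt if the user is under the cap."""
        now = self.clock()
        key = (user, action)
        start, count = self._state.get(key, (now, 0))
        if now - start >= self.window_seconds:
            # The previous window has expired, so start a fresh one.
            start, count = now, 0
        if count >= self.max_actions:
            self._state[key] = (start, count)
            return False
        self._state[key] = (start, count + 1)
        return True


# Example: at most 3 password attempts per user per 60 seconds.
limiter = FixedWindowRateLimiter(max_actions=3, window_seconds=60)
attempts = [limiter.allow("alice", "password_attempt") for _ in range(4)]
```

Because the counter is keyed on both the user and the action, exhausting password attempts in this sketch does not stop the same user from doing anything else.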

Except, that wasn’t what Musk had done. The rate limit that he ordered Twitter to roll out on July 1 was an API limit, meaning Twitter had capped the number of times users could refresh Twitter to look for new tweets and see ads. Rather than constrain users from performing a specific action, Twitter had limited all user actions. “I realize these are draconian rules,” a Twitter engineer wrote on Slack. “They are temporary. We will reevaluate the situation tomorrow.”

At first, Blue subscribers could see 6,000 posts a day, while nonsubscribers could see 600 (enough for just a few minutes of scrolling), and new nonsubscriber accounts could see just 300. As people started hitting the limits, #TwitterDown started trending on, well, Twitter. “This sucks dude you gotta 10X each of these numbers,” wrote user @tszzl.

The impact quickly became obvious. Companies that used Twitter direct messages as a customer service tool were unable to communicate with clients. Major creators were blocked from promoting tweets, putting Musk’s wish to stop data scrapers at odds with his initiative to make Twitter more creator friendly. And Twitter’s own trust and safety team was suddenly stopped from seeing violative tweets.

Engineers posted frantic updates in Slack. “FYI some large creators complaining because rate limit affecting paid subscription posts,” one said.

Christopher Stanley, the head of information security, wrote with dismay that rate limits could apply to people refreshing the app to get news about a mass shooting or a major weather event. “The idea here is to stop scrapers, not prevent people from obtaining safety information,” he wrote. Twitter soon raised the limits to 10,000 (for Blue subscribers), 1,000 (for nonsubscribers), and 500 (for new nonsubscribers). Now, 13 percent of all unverified users were hitting the rate limit.

Users were outraged. If Musk wanted to stop scrapers, surely there were better ways than just cutting off access to the service for everyone on Twitter.

“Musk has destroyed Twitter’s value & worth,” wrote attorney Mark S. Zaid. “Hubris + no pushback—customer empathy—data = a great way to light billions on fire,” wrote former Twitter product manager Esther Crawford, her loyalties finally reversed.

Musk retweeted a joke from a parody account: “The reason I set a ‘View Limit’ is because we are all Twitter addicts and need to go outside.”

Aside from Musk, the one person who seemed genuinely excited about the changes was Evan Jones, a product manager on Twitter Blue. For months, he’d been sending executives updates regarding the anemic signup rates. Now, Blue subscriptions were skyrocketing. In May, Twitter had 535,000 Blue subscribers. At $8 per month, this was about $4.2 million a month in subscription revenue. By early July, there were 829,391 subscribers—a jump of about $2.4 million in revenue, not accounting for App Store fees.
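The revenue figures can be checked with a few lines of arithmetic. This sketch uses only the subscriber counts and the $8 price quoted above, ignores App Store fees as the text does, and the variable names are illustrative.

```python
PRICE_PER_MONTH = 8  # dollars per Blue subscriber, as quoted above

may_subscribers = 535_000
july_subscribers = 829_391

# Monthly subscription revenue in May: 535,000 * 8 = 4,280,000
may_revenue = may_subscribers * PRICE_PER_MONTH

# Subscribers gained by early July: 829,391 - 535,000 = 294,391
added_subscribers = july_subscribers - may_subscribers

# Extra monthly revenue from those subscribers: 294,391 * 8 = 2,355,128
added_revenue = added_subscribers * PRICE_PER_MONTH

print(f"May revenue:   ${may_revenue:,}")
print(f"Added revenue: ${added_revenue:,}")
```

So the May figure works out to $4.28 million a month, and the jump to roughly $2.36 million a month, which rounds to the $2.4 million cited.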

“Blue signups still cookin,” he wrote on Slack above a screenshot of the signup dashboard.

Jones’s team capitalized on the moment, rolling out a prompt to upsell users who’d hit the rate limit and encouraging them to subscribe to Twitter Blue. In July, this prompt drove 1.7 percent of the Blue subscriptions from accounts that were more than 30 days old and 17 percent of the Blue subscriptions from accounts that were less than 30 days old.

Twitter CEO Linda Yaccarino was notably absent from the conversation until July 4, when she shared a Twitter blog post addressing the rate limiting fiasco, perhaps deliberately burying the news on a national holiday.

“To ensure the authenticity of our user base we must take extreme measures to remove spam and bots from our platform,” it read. “That’s why we temporarily limited usage so we could detect and eliminate bots and other bad actors that are harming the platform. Any advance notice on these actions would have allowed bad actors to alter their behavior to evade detection.” The company also claimed the “effects on advertising have been minimal.”

If Yaccarino’s role was to cover for Musk’s antics, she was doing an excellent job. Twitter rolled back the limits shortly after her announcement. On July 12, Musk debuted a generative AI company called xAI, which he promised would develop a language model that wouldn’t be politically correct. “I think our AI can give answers that people may find controversial even though they are actually true,” he said on Twitter Spaces.

Unlike the rival AI firms he was trying to block, Musk said xAI would likely train on Twitter’s data.

“The goal of xAI is to understand the true nature of the universe,” the company said grandly in its mission statement, echoing Musk’s first, disastrous town hall at Twitter. “We will share more information over the next couple of weeks and months.”

In November 2023, xAI launched a chatbot called Grok that lacked the guardrails of tools like ChatGPT. Musk hyped the release by posting a screenshot of the chatbot giving him a recipe for cocaine. The company didn’t appear close to understanding the nature of the universe, but perhaps that’s coming.

Excerpt adapted from Extremely Hardcore: Inside Elon Musk’s Twitter by Zoë Schiffer. Published by arrangement with Portfolio Books, a division of Penguin Random House LLC. Copyright © 2024 by Zoë Schiffer.

20 notes

Note

How do we donate for the creation of the search tool?

Thank you so so much for wanting to donate, but I don't want to accept any more donations at this point 💕 I've received $175 from a few very kind contributors already, or $167 after the Ko-fi fees. The whole thing cost $180. That leaves $13 for me to pay out of pocket, which I'm totally fine with.

I don't expect to need any more paid services as of now to help me do what I'm doing, but I'll keep you guys posted if something comes up that I think might be helpful!

The only thing I might add to my costs is 1 more month of RowZero to potentially help with some data analysis, but I don't know that that's needed yet. I may be able to get everything done without it.

Based on what I've seen of the scraping code used, I think most of the skipped fics may have been the result of the code design, not any characteristics of the fics. I'll keep looking to confirm, but I'm pretty certain. I'm not going to publicly discuss the exact deficiency I suspect, but it's beginner level, and I don't think any serious scraper would make the same mistake.

Where I'd like to go from here is testing some prevention techniques on my own fics, attempting to scrape my own fics, and seeing which techniques work to stop it. I expect to be able to do that at no cost.

Also, since I HAVE this year of Power BI paid for already, it'll be available to host another lookup tool if god forbid there's another AO3 scrape in the next 12 months.

4 notes

Text

At least this place is working....Twitter is straight up unusable right now good lord. This morning they put into place some "rate limit" to prevent "data scraping" and said it's temporary but we'll see how long that lasts....considering this started around 8 am this morning for me and it is now almost 3 pm

Trying to view your dash, refresh your dash, view your profile, read replies, search any term/hashtag just results in:

And apparently the rate limits are:

I don't even know if "reading" means just viewing a tweet as you're scrolling by it, or clicking on the actual tweet to "read it"....but I'm assuming it means just scrolling by stuff on your feed as I hit my "limit" in less than 20 mins of browsing the site/my feed.

But right now the site is unusable.

64 notes

Text

for the record I'm not going to stop posting art here just because tunglr MIGHT start selling training data. Google has been scraping search results for AI for years and I have a massive deviantart archive I can't take down, that cat's down the street

I will if it turns out they DO though, like fucking dude I signed up to share my shit and fuck around I did not sign up to train someone else's program without compensation

15 notes
