#Store performance analysis using AI


Chris L’Etoile’s original dialogue about the Reaper embryo & the person who was (probably) behind the decision with Legion’s N7 armor

(EDIT: Okay, so Idk why Tumblr displays the headline twice on my end, but if it does for you, please ignore it.)

[Mass Effect 2 spoilers!]

FRIENDS! MASS EFFECT FANS! PEOPLE!

You wouldn't believe it, but I think I've found the lines Chris L'Etoile originally wrote for EDI about the human Reaper!

Chris L’Etoile’s original concept for the Reapers

For those who don't know (or need a refresher), Chris L'Etoile - who was something like the “loremaster” of the ME series, having written the entire Codex in ME1 by himself - originally had a concept for the Reapers that was slightly different from ME2's canon. In the finished game, when you find the human Reaper in the Collector Base, you'll get the following dialogue by investigating:

Shepard: Reapers are machines -- why do they need humans at all?
EDI: Incorrect. Reapers are sapient constructs. A hybrid of organic and inorganic material. The exact construction methods are unclear, but it seems probable that the Reapers absorb the essence of a species; utilizing it in their reproduction process.

Meanwhile, Chris L'Etoile had this to say about EDI's dialogue (sourced from here):

I had written harder science into EDI's dialogue there. The Reapers were using nanotech disassemblers to perform "destructive analysis" on humans, with the intent of learning how to build a Reaper body that could upload their minds intact. Once this was complete, humans throughout the galaxy would be rounded up to have their personalities and memories forcibly uploaded into the Reaper's memory banks. (You can still hear some suggestions of this in the background chatter during Legion's acquisition mission, which I wrote.) There was nothing about Reapers being techno-organic or partly built out of human corpses -- they were pure tech. It seems all that was cut out or rewritten after I left. What can ya do. /shrug

Well, guess what: These deleted lines are actually in the game files!

Credit goes to Emily for uploading them to YouTube; the discussion about the human Reaper starts at 1:02:

Shepard: EDI, did you get that?
EDI: Yes, Shepard. This explains why the captive humans were rendered into their base components -- destructive analysis. They were dissected down to the atomic level. That data could be stored on an AI’s neural network. The knowledge and essence of billions of individuals, compiled into a single synthetic identity.
Shepard: This isn’t gonna stop with the colonies, is it?
EDI: The colonists were probably a test sample. The ultimate goal would be to upload all humans into this Reaper mind. The Collectors would harvest every human settlement across the galaxy. The obvious final goal would be Earth.

In all honesty, I think L’Etoile’s original concept is a lot cooler and makes a lot more sense than what ME2 canon went with. The only direct reference to it left in the final game is an insanely obscure comment by Legion, which you can only get if you picked the Renegade option upon the conclusion of their final Normandy conversation and completed the Suicide Mission afterwards (read: you have to get your entire crew killed if you want to see it).

I used to believe the pertaining dialogue he had written for EDI was lost forever, and I was all the more stoked when I discovered it on YouTube (or at least, I strongly believe this is L’Etoile’s original dialogue).

Interestingly, the deleted lines also feature an investigate option on why they’re targeting humans in particular:

Shepard: The galaxy has so many other species… Why are they using humans?
EDI: Given the Collectors’ history, it is likely they tested other species, and discarded them as unsuitable. Human genetics are uniquely diverse.

The diversity of human genetics is remarked on quite a few times during the course of ME2 (something which my friend, @dragonflight203, once called “ME2’s patented “humanity is special” moments”), so this is most likely what all this build-up was supposed to be for.

Tbh, I’m still not the biggest fan of the concept myself (if only because I’m averse to humans being the “supreme species”); while it would make sense for some species that had to go through a genetic bottleneck during their history (Krogan, Quarians, Drell), what exactly is it that makes Asari, Salarians*, and Turians less genetically diverse than humans? Also, how much are genetics even going to factor in if it’s their knowledge/experiences that they want to upload? (Now that I think about it, it would’ve been interesting if the Reapers targeted humanity because they have the most diverse opinions; that would’ve lined up nicely with the Geth desiring to have as many perspectives in their Consensus as possible.)

*EDIT: I just remembered that Salarian males - who compose about 90% of the species - hatch from unfertilized eggs, so they're presumably (half) clones of their mother. That would be a valid explanation why Salarians are less genetically diverse, at least.

Nevertheless, it would’ve been nice if all this “humanity is special” stuff actually led somewhere, since it’s more or less left in empty space as it is.

Anyway, most of the squadmates also have an additional remark about how the Reapers might be targeting humanity because Shepard defeated one of them, wanting to utilize this prowess for themselves. (Compare this to Legion’s comment “Your code is superior.”) I gotta agree with the commentator here who said that it would’ve been interesting if they kept these lines, since it would’ve added a layer of guilt to Shepard’s character.

Regardless of which theory is true, I do think it would’ve done them good to go a little more in-depth with the explanation why the Reapers chose humanity, of all races.

The identity of “Higher Paid” who insisted on Legion’s obsession with Shepard

Coincidentally, I may have solved yet another long-term mystery of ME2: In the same thread I linked above, you can find another comment by Chris L’Etoile, who also was the writer of Legion, on the decision to include a piece of Shepard’s N7 armor in their design:

The truth is that the armor was a decision imposed on me. The concept artists decided to put a hole in the geth. Then, in a moment of whimsy, they spackled a bit Shep's armor over it. Someone who got paid a lot more money than me decided that was really cool and insisted on the hole and the N7 armor. So I said, okay, Legion gets taken down when you meet it, so it can get the hole then, and weld on a piece of Shep's armor when it reactivates to represent its integration with Normandy's crew (when integrating aboard a new geth ship, it would swap memories and runtimes, not physical hardware). But Higher Paid decided that it would be cooler if Legion were obsessed with Shepard, and stalking him. That didn't make any sense to me -- to be obsessed, you have to have emotions. The geth's whole schtick is -- to paraphrase Legion -- "We do not experience (emotions), but we understand how (they) affect you." All I could do was downplay the required "obsession" as much as I could.

That paraphrased quote by Legion is actually a nice cue: I suppose the sentence L’Etoile is paraphrasing here is “We do not experience fear, but we understand how it affects you.”, which I’ve seen quoted by various people. However, the weird thing was that while it sounds like something Legion would say, I couldn’t remember them saying it on any occasion in-game - and I’ve practically seen every single Legion line there is.

So I googled the quote and stumbled upon an old thread from before ME2 came out. In the discussion, a trailer for ME2 - called the “Enemies” trailer - is referenced, and since it has led some users to conclusions that clearly aren’t canon (most notably, that Legion belongs to a rogue faction of Geth that do not share the same beliefs as the “core group”, when it’s actually the other way around with Legion belonging to the core group and the Heretics being the rogue faction), I was naturally curious about the contents of this trailer.

I managed to find said trailer on YouTube, which features commentary by game director Casey Hudson, lead designer Preston Watamaniuk, and lead writer Mac Walters.

The part where they talk about Legion starts at 2:57; it’s interesting that Walters describes Legion as a “natural evolution of the Geth” and says that they have broken beyond the constraints of their group consciousness by themselves, when Legion was actually a specifically designed platform.

The most notable thing, however, is what Hudson says afterwards (at 3:17):

Legion is stalking you, he’s obsessed with you, he’s incorporated a part of your armor into his own. You need to track him down and find out why he’s hunting you.

Given that the wording is almost identical to L’Etoile’s comment and with how much confidence and enthusiasm Hudson talks about it, I’m 99% sure the thing with the armor was his idea.

Also, just what the fuck do you mean by “you need to track Legion down and find out why they’re hunting you”? You never actively go after Legion; Shepard just sort of stumbles upon them during the Derelict Reaper mission (footage from which is actually featured in the trailer) - if anything, the energy of that meeting is more like “oh, why, hello there”.

Legion doesn’t actively hunt Shepard during the course of the game, either; they had abandoned their original mission of locating Shepard after failing to find them at the Normandy wreck site. Furthermore, the significance of Legion’s reason for tracking Shepard is vastly overstated - it only gets mentioned briefly in one single conversation on the Normandy (which, btw, is totally optional).

I seriously have no idea if this is just exaggerated advertising or if they actually wanted to do something completely different with Legion’s character - then again, that trailer is from November 5th 2009, and Mass Effect 2 was released on January 26th 2010, so it’s unlikely they were doing anything other than polishing at this point. (By the looks of it, the story/missions were largely finished.) If you didn't know any better, you'd almost get the impression that neither Walters nor Hudson even read any of the dialogue L’Etoile had written for Legion.

That being said, I don’t think the idea with Legion already having the N7 armor before meeting Shepard is all that bad by itself. If I was the one who suggested it, I probably would’ve asked the counter question: “Yeah, alright, but how would Shepard be convinced that this Geth - of all Geth - is non-hostile towards them? What reason would Shepard have to trust a Geth after ME1?” (Shepard actually points out the piece of N7 armor as an argument to reactivate Legion.)

Granted, I don’t know what the context of Legion’s recruitment mission would’ve been (how they were deactivated, if it was from enemy fire or one of Shepard’s squadmates shooting them in a panic; what Legion did before, if they helped Shepard out in some way, etc.) - the point is, I think it would’ve done the parties good if they had listened to each other’s opinions and had an open discussion about how/if they could make this work instead of everyone becoming set on their own vision (though L’Etoile, to his credit, did try to accommodate the concept).

I know a lot of people like to read Legion taking Shepard’s armor as “oh, Legion is in love with Shepard” or “oh, Legion is developing emotions”, but personally, I feel that’s a very oversimplified interpretation. Humans tend to judge everything based on their own perspective - there is nothing wrong with that by itself, because, well, as a human, you naturally judge everything based on your own perspective. It doesn’t give you a very accurate representation of another species’ life experience though, much less a synthetic one’s.

I’ve mentioned my own interpretation here and there in other posts, but personally, I believe Legion took Shepard’s armor because they wished for Shepard (or at least their skill and knowledge) to become part of their Consensus. (I’m sort of leaning on L’Etoile’s idea of “symbolic exchange” here.) Naturally, that’s impossible, but I like to think when Legion couldn’t find Shepard, they took their armor as a symbol of wanting to emulate their skill.

The Geth’s entire existence is centered around their Consensus, so if the Geth wish for you to join their Consensus, that’s the highest compliment they can possibly give, akin to a sign of very deep respect and admiration. Alternatively, since linking minds is the closest thing to intimacy for the Geth, you can also read it like that, if you are so inclined - that still wouldn’t make it romantic or sexual love, though. (You have to keep in mind that Geth don’t really have different “levels” of relationships; the only categories that they have are “part of Consensus” and “not part of Consensus”.)

Either way, I appreciate that L’Etoile wrote it in a way that leaves it open to interpretation by fans. I think he really did the best with what he had to work with, and personally, the thing with Legion’s N7 armor doesn’t bother me.

What does bother me, on the other hand, is how the trailer - very intentionally - puts Legion’s lines in a context that is quite misleading, to say the least. The way Legion says “We do not experience fear, but we understand how it affects you” right before shooting in Shepard’s direction makes it appear as if they were trying to intimidate and/or threaten Shepard, and the trailer’s title “Enemies” doesn’t really do anything to help that.

However, I suppose that explains why I’ve seen the above line used in the context of Legion trying to psychologically intimidate their adversaries (which, IMO, doesn’t feel like a thing Legion would do). Generally, I get the feeling a considerable part of the BioWare staff was really sold on the idea of the Geth being the “creepy robots” (this comes from reading through some of the design documents on the Geth from ME1).

Also, since “Organics do not choose to fear us. It is a function of our hardware.” was used in a completely different context in-game (in the follow-up convo with Legion if you pick Tali during the loyalty confrontation; check this video at 5:04), we can assume that the same would’ve been true for the “We do not experience fear” line if it actually made it into the game. Many people have remarked on the line being “badass”, but really, it only sounds badass because it was staged that way in the trailer.

Suppose it was used in the final game and suppose Legion actually would’ve gotten their own recruitment mission - perhaps with one of Shepard’s squadmates shooting them in fear - it might also have been used in a context like this:

Shepard: Also… Sorry for one of my crew putting a hole through you earlier.
Legion: It was a pre-programmed reaction. We frightened them. We do not experience fear, but we understand how it affects you.

Proof that context really is everything.

#mass effect#mass effect 2#mass effect reapers#EDI#mass effect legion#geth#chris l'etoile#casey hudson#bioware#video game writing#that also explains why the in-game “Organics do not choose to fear us” line sounds a little “out of place”#(like the intonation is completely different from the previous line so they probably just added it in as is)#side note: “pre-programmed reaction” is actually Legion's term for “natural reflex” (or at least I imagine it that way)


AI Tool Reproduces Ancient Cuneiform Characters with High Accuracy

ProtoSnap, developed by Cornell and Tel Aviv universities, aligns prototype signs to photographed clay tablets to decode thousands of years of Mesopotamian writing.

Cornell University researchers report that scholars can now use artificial intelligence to “identify and copy over cuneiform characters from photos of tablets,” greatly easing the reading of these intricate scripts.

The new method, called ProtoSnap, effectively “snaps” a skeletal template of a cuneiform sign onto the image of a tablet, aligning the prototype to the strokes actually impressed in the clay.

By fitting each character’s prototype to its real-world variation, the system can produce an accurate copy of any sign and even reproduce entire tablets.

Cuneiform, like Egyptian hieroglyphs, is one of the oldest known writing systems and contains over 1,000 unique symbols.

Its characters change shape dramatically across different eras, cultures and even individual scribes, so that “even the same character… looks different across time,” Cornell computer scientist Hadar Averbuch-Elor explains.

This extreme variability has long made automated reading of cuneiform a very challenging problem.

The ProtoSnap technique addresses this by using a generative AI model known as a diffusion model.

It compares each pixel of a photographed tablet character to a reference prototype sign, calculating deep-feature similarities.

Once the correspondences are found, the AI aligns the prototype skeleton to the tablet’s marking and “snaps” it into place so that the template matches the actual strokes.

In effect, the system corrects for differences in writing style or tablet wear by deforming the ideal prototype to fit the real inscription.
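The correspondence step described above can be illustrated with a toy sketch. This is not the ProtoSnap implementation: in the real system the deep features come from a diffusion model, whereas the feature vectors, the keypoint names and the `best_matches` helper below are all made up for illustration. For each prototype keypoint, the sketch simply picks the image location whose feature vector has the highest cosine similarity.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def best_matches(proto_feats, image_feats):
    """Map each prototype keypoint to the image position with the most similar feature.

    proto_feats: dict of keypoint name -> feature vector (hypothetical values)
    image_feats: dict of (row, col) -> feature vector (hypothetical values)
    """
    return {
        name: max(image_feats, key=lambda pos: cosine(vec, image_feats[pos]))
        for name, vec in proto_feats.items()
    }

# Toy data: two prototype keypoints and a 2x2 grid of image features.
proto = {"wedge_head": [1.0, 0.0, 0.2], "wedge_tail": [0.0, 1.0, 0.1]}
image = {
    (0, 0): [0.1, 0.1, 0.9],
    (0, 1): [0.9, 0.1, 0.2],
    (1, 0): [0.1, 0.8, 0.0],
    (1, 1): [0.5, 0.5, 0.5],
}
matches = best_matches(proto, image)
```

In a real pipeline the matched positions would then drive the deformation that "snaps" the prototype skeleton onto the strokes; that step is left out here.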

Crucially, the corrected (or “snapped”) character images can then train other AI tools.

The researchers used these aligned signs to train optical-character-recognition models that turn tablet photos into machine-readable text.

They found the models trained on ProtoSnap data performed much better than previous approaches at recognizing cuneiform signs, especially the rare ones or those with highly varied forms.

In practical terms, this means the AI can read and copy symbols that earlier methods often missed.

This advance could save scholars enormous amounts of time.

Traditionally, experts painstakingly hand-copy each cuneiform sign on a tablet.

The AI method can automate that process, freeing specialists to focus on interpretation.

It also enables large-scale comparisons of handwriting across time and place, something too laborious to do by hand.

As Tel Aviv University archaeologist Yoram Cohen says, the goal is to “increase the ancient sources available to us by tenfold,” allowing big-data analysis of how ancient societies lived – from their religion and economy to their laws and social life.

The research was led by Hadar Averbuch-Elor of Cornell Tech and carried out jointly with colleagues at Tel Aviv University.

Graduate student Rachel Mikulinsky, a co-first author, will present the work – titled “ProtoSnap: Prototype Alignment for Cuneiform Signs” – at the International Conference on Learning Representations (ICLR) in April.

In all, roughly 500,000 cuneiform tablets are stored in museums worldwide, but only a small fraction have ever been translated and published.

By giving AI a way to automatically interpret the vast trove of tablet images, the ProtoSnap method could unlock centuries of untapped knowledge about the ancient world.

#protosnap#artificial intelligence#a.i#cuneiform#Egyptian hieroglyphs#prototype#symbols#writing systems#diffusion model#optical-character-recognition#machine-readable text#Cornell Tech#Tel Aviv University#International Conference on Learning Representations (ICLR)#cuneiform tablets#ancient world#ancient civilizations#technology#science#clay tablet#Mesopotamian writing


"Just weeks before the implosion of AllHere, an education technology company that had been showered with cash from venture capitalists and featured in glowing profiles by the business press, America’s second-largest school district was warned about problems with AllHere’s product.

As the eight-year-old startup rolled out Los Angeles Unified School District’s flashy new AI-driven chatbot — an animated sun named “Ed” that AllHere was hired to build for $6 million — a former company executive was sending emails to the district and others that Ed’s workings violated bedrock student data privacy principles.

Those emails were sent shortly before The 74 first reported last week that AllHere, with $12 million in investor capital, was in serious straits. A June 14 statement on the company’s website revealed a majority of its employees had been furloughed due to its “current financial position.” Company founder and CEO Joanna Smith-Griffin, a spokesperson for the Los Angeles district said, was no longer on the job.

Smith-Griffin and L.A. Superintendent Alberto Carvalho went on the road together this spring to unveil Ed at a series of high-profile ed tech conferences, with the schools chief dubbing it the nation’s first “personal assistant” for students and leaning hard into LAUSD’s place in the K-12 AI vanguard. He called Ed’s ability to know students “unprecedented in American public education” at the ASU+GSV conference in April.

Through an algorithm that analyzes troves of student information from multiple sources, the chatbot was designed to offer tailored responses to questions like “what grade does my child have in math?” The tool relies on vast amounts of students’ data, including their academic performance and special education accommodations, to function.

Meanwhile, Chris Whiteley, a former senior director of software engineering at AllHere who was laid off in April, had become a whistleblower. He told district officials, its independent inspector general’s office and state education officials that the tool processed student records in ways that likely ran afoul of L.A. Unified’s own data privacy rules and put sensitive information at risk of getting hacked. None of the agencies ever responded, Whiteley told The 74.

...

In order to provide individualized prompts on details like student attendance and demographics, the tool connects to several data sources, according to the contract, including Welligent, an online tool used to track students’ special education services. The document notes that Ed also interfaces with the Whole Child Integrated Data stored on Snowflake, a cloud storage company. Launched in 2019, the Whole Child platform serves as a central repository for LAUSD student data designed to streamline data analysis to help educators monitor students’ progress and personalize instruction.

Whiteley told officials the app included students’ personally identifiable information in all chatbot prompts, even in those where the data weren’t relevant. Prompts containing students’ personal information were also shared with other third-party companies unnecessarily, Whiteley alleges, and were processed on offshore servers. Seven out of eight Ed chatbot requests, he said, are sent to places like Japan, Sweden, the United Kingdom, France, Switzerland, Australia and Canada.

Taken together, he argued the company’s practices ran afoul of data minimization principles, a standard cybersecurity practice that maintains that apps should collect and process the least amount of personal information necessary to accomplish a specific task. Playing fast and loose with the data, he said, unnecessarily exposed students’ information to potential cyberattacks and data breaches and, in cases where the data were processed overseas, could subject it to foreign governments’ data access and surveillance rules.

Chatbot source code that Whiteley shared with The 74 outlines how prompts are processed on foreign servers by a Microsoft AI service that integrates with ChatGPT. The LAUSD chatbot is directed to serve as a “friendly, concise customer support agent” that replies “using simple language a third grader could understand.” When querying the simple prompt “Hello,” the chatbot provided the student’s grades, progress toward graduation and other personal information.

AllHere’s critical flaw, Whiteley said, is that senior executives “didn’t understand how to protect data.”

...

Earlier in the month, a second threat actor known as Satanic Cloud claimed it had access to tens of thousands of L.A. students’ sensitive information and had posted it for sale on Breach Forums for $1,000. In 2022, the district was victim to a massive ransomware attack that exposed reams of sensitive data, including thousands of students’ psychological evaluations, to the dark web.

With AllHere’s fate uncertain, Whiteley blasted the company’s leadership and protocols.

“Personally identifiable information should be considered acid in a company and you should only touch it if you have to because acid is dangerous,” he told The 74. “The errors that were made were so egregious around PII, you should not be in education if you don’t think PII is acid.”

Read the full article here:

https://www.the74million.org/article/whistleblower-l-a-schools-chatbot-misused-student-data-as-tech-co-crumbled/
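To make the data minimization principle from the excerpt concrete, here is a minimal sketch of a chatbot prompt builder that only passes along the fields a given question needs. It is purely illustrative and has nothing to do with AllHere's actual code: the intents, the field names and the `build_prompt` helper are assumptions.

```python
# Hypothetical allow-list: which record fields each kind of question may see.
ALLOWED_FIELDS = {
    "greeting": set(),
    "math_grade": {"math_grade"},
    "attendance": {"days_absent"},
}

def minimal_context(intent, student_record):
    """Return only the fields the given intent needs; unknown intents get nothing."""
    allowed = ALLOWED_FIELDS.get(intent, set())
    return {k: v for k, v in student_record.items() if k in allowed}

def build_prompt(intent, question, student_record):
    """Compose a prompt that carries the minimized context, not the whole record."""
    context = minimal_context(intent, student_record)
    return f"Context: {context}\nQuestion: {question}"

# Made-up record for demonstration.
record = {"name": "Sample Student", "math_grade": "B+", "days_absent": 3, "iep": True}
hello_prompt = build_prompt("greeting", "Hello", record)
grade_prompt = build_prompt("math_grade", "What grade does my child have in math?", record)
```

With this shape, a plain "Hello" carries no student data at all, and a grade question carries only the grade.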


Unleashing Innovation: How Intel is Shaping the Future of Technology

Introduction

In the fast-paced world of technology, few companies have managed to stay at the forefront of innovation as consistently as Intel. With a history spanning over five decades, Intel has transformed from a small semiconductor manufacturer into a global powerhouse that plays a pivotal role in shaping how we interact with technology today. From personal computing to artificial intelligence (AI) and beyond, Intel's innovations have not only defined industries but have also created new markets altogether.


In this comprehensive article, we'll delve deep into how Intel is unleashing innovation and shaping the future of technology across various domains. We’ll explore its history, key products, groundbreaking research initiatives, sustainability efforts, and much more. Buckle up as we take you on a journey through Intel’s dynamic landscape.

Unleashing Innovation: How Intel is Shaping the Future of Technology

Intel's commitment to innovation is foundational to its mission. The company invests billions annually in research and development (R&D), ensuring that it remains ahead of market trends and consumer demands. This relentless pursuit of excellence manifests in several key areas:

The Evolution of Microprocessors

A Brief History of Intel's Microprocessors

Intel's journey began with its first microprocessor, the 4004, launched in 1971. Since then, microprocessor technology has evolved dramatically. Each generation brought enhancements in processing power and energy efficiency that changed the way consumers use technology.

The Impact on Personal Computing

Microprocessors are at the heart of every personal computer (PC). They dictate performance capabilities that directly influence user experience. By continually optimizing their designs, Intel has played a crucial role in making PCs faster and more powerful.

Revolutionizing Data Centers

High-Performance Computing Solutions

Data centers are essential for businesses to store and process massive amounts of information. Intel's high-performance computing solutions are designed to handle complex workloads efficiently. Their Xeon processors are specifically optimized for data center applications.

Cloud Computing and Virtualization

As cloud services become increasingly popular, Intel has developed technologies that support virtualization and cloud infrastructure. This innovation allows businesses to scale operations rapidly without compromising performance.

Artificial Intelligence: A New Frontier

Intel’s AI Strategy

AI represents one of the most significant technological advancements today. Intel recognizes this potential and has positioned itself as a leader in AI hardware and software solutions. Their acquisitions have strengthened their AI portfolio significantly.

AI-Powered Devices

From smart assistants to autonomous vehicles, AI is embedded in countless devices today thanks to advancements by companies like Intel. These innovations enhance user experience by providing personalized services based on data analysis.

Internet of Things (IoT): Connecting Everything

The Role of IoT in Smart Cities


Role of AI and Automation in Modern CRM Software

Modern CRM systems are no longer just about storing contact information. Today, businesses expect their CRM to predict behavior, streamline communication, and drive efficiency — and that’s exactly what AI and automation bring to the table.

Here’s how AI and automation are transforming the CRM landscape:

1. Predictive Lead Scoring

Uses historical customer data to rank leads by conversion probability

Prioritizes outreach efforts based on buying signals

Reduces time spent on low-potential leads

Improves sales team performance and ROI
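As a rough illustration of ranking leads by conversion probability, the sketch below scores each lead with a logistic function over a few buying signals. The signal names, weights and bias are hypothetical; a real CRM would fit them to historical conversion data rather than hard-code them.

```python
import math

# Hypothetical weights; a real system would learn these from past conversions.
WEIGHTS = {"visited_pricing": 1.5, "opened_emails": 0.4, "requested_demo": 2.0}
BIAS = -3.0

def conversion_probability(lead):
    """Logistic score in (0, 1) computed from a lead's buying signals."""
    z = BIAS + sum(WEIGHTS[k] * lead.get(k, 0) for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def rank_leads(leads):
    """Sort leads from most to least likely to convert."""
    return sorted(leads, key=conversion_probability, reverse=True)

# Made-up leads for demonstration.
leads = [
    {"name": "A", "visited_pricing": 0, "opened_emails": 1, "requested_demo": 0},
    {"name": "B", "visited_pricing": 1, "opened_emails": 3, "requested_demo": 1},
    {"name": "C", "visited_pricing": 1, "opened_emails": 0, "requested_demo": 0},
]
ranked = [lead["name"] for lead in rank_leads(leads)]
```

Sales teams would then work the list from the top, which is where the time savings on low-potential leads come from.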

2. Smart Sales Forecasting

Analyzes trends, seasonality, and deal history to forecast revenue

Updates projections in real-time based on new data

Helps sales managers set realistic targets and resource plans

Supports dynamic pipeline adjustments
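A very small sketch of trend-based forecasting: fit a straight line to past revenue by least squares and extrapolate one period ahead. This is a simplification for illustration only. It ignores seasonality and deal history, and the `linear_forecast` helper and the revenue figures are made up.

```python
def linear_forecast(history, steps_ahead=1):
    """Fit y = a + b*t by least squares and extrapolate steps_ahead periods."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    slope_num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    slope_den = sum((t - t_mean) ** 2 for t in range(n))
    slope = slope_num / slope_den
    intercept = y_mean - slope * t_mean
    return intercept + slope * (n - 1 + steps_ahead)

monthly_revenue = [100.0, 110.0, 120.0, 130.0]  # made-up figures
next_month = linear_forecast(monthly_revenue)
```

Updating the projection when new data arrives amounts to appending the latest figure to the history and calling the function again.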

3. Automated Customer Support

AI-powered chatbots handle FAQs and common issues 24/7

Sentiment analysis flags negative interactions for human follow-up

Automated ticket routing ensures faster resolution

Reduces support workload and boosts satisfaction
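The routing and flagging ideas above can be sketched with plain keyword matching. Production systems typically use trained sentiment and intent models instead; the word lists, queue names and the `triage` helper here are illustrative assumptions.

```python
# Illustrative word lists only; a real system would use trained models.
NEGATIVE_WORDS = {"angry", "broken", "refund", "terrible", "cancel"}
ROUTES = {"invoice": "billing", "payment": "billing", "login": "technical", "error": "technical"}

def triage(message):
    """Return (queue, needs_human) for a support message."""
    words = {w.strip(".,!?").lower() for w in message.split()}
    # Pick the queue of the first routing keyword found (sorted for determinism).
    queue = next((ROUTES[w] for w in sorted(words) if w in ROUTES), "general")
    # Flag the ticket for human follow-up if any negative word appears.
    needs_human = bool(words & NEGATIVE_WORDS)
    return queue, needs_human
```

For example, `triage("My invoice is wrong and I want a refund!")` routes the ticket to the billing queue and flags it for a human agent.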

4. Personalized Customer Journeys

Machine learning tailors emails, offers, and messages per user behavior

Automation triggers based on milestones or inactivity

Custom workflows guide users through onboarding, upgrades, or renewals

Improves customer engagement and retention

5. Data Cleanup and Enrichment

AI tools detect duplicate records and outdated info

Automatically update fields from verified external sources

Maintains a clean, high-quality CRM database

Supports better segmentation and targeting
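Duplicate detection can be as simple as grouping records by a normalized key. The sketch below uses a trimmed, lowercased email as that key. The record layout and helper names are assumptions, and real tools usually add fuzzy matching on names and phone numbers.

```python
from collections import defaultdict

def normalize(record):
    """Build a comparison key from a trimmed, lowercased email."""
    return record["email"].strip().lower()

def find_duplicates(records):
    """Group record ids that share the same normalized email."""
    groups = defaultdict(list)
    for rec in records:
        groups[normalize(rec)].append(rec["id"])
    return [ids for ids in groups.values() if len(ids) > 1]

# Made-up contacts for demonstration.
contacts = [
    {"id": 1, "email": "Ana@Example.com "},
    {"id": 2, "email": "ana@example.com"},
    {"id": 3, "email": "bo@example.com"},
]
dupes = find_duplicates(contacts)
```

Each group returned is a candidate for merging, ideally after a human or a stricter rule confirms the match.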

6. Workflow Automation Across Departments

Automates repetitive tasks like task assignments, follow-ups, and alerts

Links CRM actions with ERP, HR, or ticketing systems

Keeps all teams aligned without manual intervention

Custom CRM solutions can integrate automation tailored to your exact process

7. Voice and Natural Language Processing (NLP)

Transcribes sales calls and highlights key insights

Enables voice-driven commands within CRM platforms

Extracts data from emails or chat for automatic entry

Enhances productivity for on-the-go users

#AICRM#AutomationInCRM#CRMSolutions#SmartCRM#CRMDevelopment#AIinBusiness#TechDrivenSales#CustomerSupportAutomation#CRMIntegration#DigitalCRM


What is DeepSeek OpenAI? A Simple Guide to Understanding This Powerful Tool

Technology is constantly evolving, and one of the most exciting advancements is artificial intelligence (AI). You’ve probably heard of AI tools like ChatGPT, Google’s Bard, or Microsoft’s Copilot. But have you heard of DeepSeek OpenAI? If not, don’t worry! In this blog, we’ll break down what DeepSeek OpenAI is, how it works, and why it’s such a big deal in the world of digital marketing and beyond. Let’s dive in!

What is DeepSeek OpenAI?

DeepSeek OpenAI is an advanced AI platform that gives businesses, marketers, and individuals tools to automate operations, make better decisions, and generate content more efficiently. It works like a highly intelligent assistant that analyzes data, generates ideas, creates content, and forecasts trends within seconds.

The name "DeepSeek" reflects the idea of exploring information deeply to surface useful insights. DeepSeek is developed by the company of the same name rather than by OpenAI, and it fills a role similar to tools such as ChatGPT. It can be applied to digital marketing, analytics, and customer relationship management.

Why is DeepSeek OpenAI Important for Digital Marketing?

Digital marketing is all about connecting with your audience in the right way, at the right time. But with so much competition online, it can be challenging to stand out. That’s where DeepSeek OpenAI comes in. Here are some ways it can transform your marketing efforts:

1. Content Creation Made Easy

Audience engagement depends heavily on high-quality content, but producing it takes significant time. With DeepSeek OpenAI you can quickly draft blog posts, social media captions, email newsletters, and ad copy. It is like having a professional writer on your team at no additional expense.

2. Data Analysis and Insights

Marketing is all about data. DeepSeek analyzes website traffic, social media performance, and customer behavior to generate valuable insights. Its analytics show which products customers prefer and which promotional strategies drive the most purchases.

3. Personalization at Scale

Customers expect personalized experiences, but delivering them manually is difficult. DeepSeek lets you produce customized email campaigns, tailored product recommendations, and chatbots that deliver a unique message to every user.

4. Predictive Analytics

DeepSeek's predictive analytics forecast customer behavior, sales performance, and industry trends. Anticipating market shifts in this way lets your business make decisions proactively rather than reactively.

5. Automation of Repetitive Tasks

DeepSeek helps automate time-consuming repetitive tasks such as social media scheduling and responding to customer inquiries. That frees your time for strategic planning and creative thinking.

Real-Life Examples of DeepSeek OpenAI in Action

Let’s look at some real-world scenarios where DeepSeek OpenAI can make a difference:

E-commerce Store: An online store uses DeepSeek to analyze customer reviews and identify the most frequent complaints. The findings help it improve its products and plan targeted marketing campaigns.

Social Media Manager: A social media manager uses DeepSeek to write compelling captions and hashtags. The tool also shows which types of content perform best, so the manager can adjust the approach.

Small Business Owner: A small business owner saves significant time by drafting website blog posts with DeepSeek, and also uses it to generate customized email campaigns that improve customer retention.

Marketing Agency: A marketing agency uses DeepSeek to analyze data from many clients and generate thorough reports. The analysis helps the agency deliver better results and stay ahead in a competitive field.

Challenges and Limitations

While DeepSeek OpenAI is incredibly powerful, it’s not perfect. Here are a few challenges to keep in mind:

Dependence on Data: The quality of DeepSeek’s output depends on the quality of the data you provide. Garbage in, garbage out!

Lack of Human Touch: DeepSeek can create content, but it cannot fully replicate the emotional touch of human writing.

Learning Curve: As with any new tool, there is an initial learning curve. It takes time to learn how to use DeepSeek efficiently.

Cost: Depending on your requirements, advanced AI tools like DeepSeek can be expensive.

How to Get Started with DeepSeek OpenAI

Ready to give DeepSeek OpenAI a try? Here’s how to get started:

Sign Up: Create an account on the DeepSeek website to begin. Platforms like this typically offer new users a free trial or demo to learn the tools.

Define Your Goals: What do you want to accomplish with DeepSeek? Clear objectives, whether for content creation, data analysis, or customer engagement, will help you get the most out of the tool.

Explore Features: Take some time to explore DeepSeek's features and capabilities. Try several tasks to see which ones give you the best results.

Integrate with Your Workflow: DeepSeek can be integrated with other tools, including CRM systems, email marketing platforms, and social media scheduling tools.

Monitor and Adjust: As you use DeepSeek, monitor the results and adjust as needed. The more time you spend with it, the better you will become at using its full potential.

The Future of DeepSeek OpenAI

DeepSeek OpenAI has an exciting future ahead. As AI technology develops, its capabilities and features will keep improving. Future versions of DeepSeek OpenAI could generate video content, design graphics, and manage complete marketing campaigns autonomously.

Final Thoughts

DeepSeek OpenAI is more than a tool: it is a transformative solution for digital marketing and other fields. It helps businesses achieve better results through automation, valuable insights, and expanded creative possibilities. Marketers at every experience level can use DeepSeek to reach their objectives faster and work more efficiently. Visit Eloiacs to learn more about AI solutions.


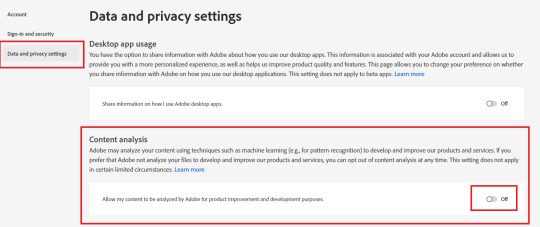

ATTENTION ADOBE USERS

In case you weren't aware, Sections 2.2 and 4.1 of the usage agreement were changed to allow Adobe to access your content through both automated and manual methods for content review and analysis.

Basically: Congrats, your content can be used for AI unless you opt out!

What does this mean?

Adobe may use and analyze your Creative Cloud or Document Cloud content to train their machine learning programs. Creative Cloud and Document Cloud content that are used include (but aren't limited to) image, audio, video, text or document files, and associated data.

How to get around this?

Go to your account (accessible on account.adobe.com) and select data and privacy settings. From there, you'll be able to toggle off Content analysis.

Things to keep in mind:

Adobe performs content analysis only on content processed or stored on their servers, so anything saved on your devices is safe.

Some content analysis will still be collected for certain programs: Adobe Photoshop Improvement Program, Adobe Stock, features that allow you to submit content as feedback, and certain beta/pre-releases/early-access programs and features.

Learn more here.

Please spread the word to protect your work from uncompensated machine learning.

#ai is theft#ai#spread the word#adobe#graphic design#adobe photoshop#adobe illustrator#adobe indesign#adobe products#designer life#design rant#hanatsuki esperanza


How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
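As a rough illustration of how auto-scaling adjusts resources to demand, the sketch below applies a simple target-tracking rule: scale the instance count in proportion to how far observed utilization is from a target. The function name, parameters, and limits are hypothetical; managed services implement their own policies with cooldowns and smoothing that are not modeled here.

```python
import math


def desired_instances(current, observed_util, target_util, min_n=1, max_n=10):
    """Return a new instance count that moves utilization toward the target.

    Hypothetical helper: utilization values are percentages (e.g. 90 for 90%).
    """
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util must be > 0")
    # Proportional rule: more load per instance than targeted means more instances.
    raw = math.ceil(current * observed_util / target_util)
    # Clamp to the configured fleet limits.
    return max(min_n, min(max_n, raw))
```

For example, four instances running at 90% against a 60% target give `desired_instances(4, 90, 60) == 6`, while the same fleet at 30% would shrink to 2.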

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
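The idea behind automated hyperparameter tuning can be sketched locally with a plain random search. This is not the API of any cloud tuning service; `validation_loss` is a made-up stand-in for a real training run, and the search ranges are assumptions chosen only for illustration.

```python
import random


def validation_loss(learning_rate, batch_size):
    """Hypothetical objective standing in for 'train a model, return its loss'."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


def random_search(n_trials, seed=0):
    """Sample hyperparameters at random and keep the best (loss, params) pair."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128]),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best
```

Each trial is independent of the others, which is why managed tuning services can run many trials in parallel across instances.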

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.
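One common form of model compression is weight quantization. The sketch below is a simplified illustration in plain Python, not a framework's implementation: it maps floating-point weights to 8-bit integer codes with a single shared scale, which shrinks storage at the cost of a small rounding error.

```python
def quantize(weights):
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]
```

The reconstruction error per weight is at most half of `scale`, so models whose weights sit in a narrow range lose little precision.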

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.


What is Generative Artificial Intelligence-All in AI tools

Generative Artificial Intelligence (AI) is a type of deep learning model that can generate text, images, computer code, and audiovisual content based on prompts.

These models are trained on a large amount of raw data, typically of the same type as the data they are designed to generate. They learn to form responses given any input, which are statistically likely to be related to that input. For example, some generative AI models are trained on large amounts of text to respond to written prompts in seemingly creative and original ways.

In essence, generative AI can respond to requests like human artists or writers, but faster. Whether the content they generate can be considered "new" or "original" is debatable, but in many cases, they can rival, or even surpass, some human creative abilities.

Popular generative AI models include ChatGPT for text generation and DALL-E for image generation. Many organizations also develop their own models.

How Does Generative AI Work?

Generative AI is a type of machine learning that relies on mathematical analysis to find relevant concepts, images, or patterns, and then uses this analysis to generate content related to the given prompts.

Generative AI depends on deep learning models, which use a computational architecture called neural networks. Neural networks consist of multiple nodes that pass data between them, similar to how the human brain transmits data through neurons. Neural networks can perform complex and intricate tasks.

To process large blocks of text and context, modern generative AI models use a special type of neural network called a Transformer. They use a self-attention mechanism to detect how elements in a sequence are related.
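A minimal sketch of the self-attention mechanism, written in plain Python for readability. It is a simplification: a real Transformer first applies learned projections to obtain queries, keys, and values, whereas here the caller passes them in directly.

```python
import math


def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this element against every element in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs, all_weights
```

Each row of the returned weights shows how strongly one element of the sequence attends to every other element.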

Training Data

Generative AI models require a large amount of data to perform well. For example, large language models like ChatGPT are trained on millions of documents. Content is represented as vectors (embeddings), which can be stored in vector databases, allowing the model to associate and understand the context of words, images, sounds, or any other type of content.
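To make the idea of data points stored as vectors concrete, here is a small sketch of a nearest-neighbor lookup by cosine similarity. The three-dimensional vectors and their labels are invented for illustration; real embeddings have hundreds or thousands of dimensions and come from a trained model.

```python
import math


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index):
    """Return the key in `index` whose vector is most similar to `query`."""
    return max(index, key=lambda name: cosine(query, index[name]))


# Hypothetical toy "vector database".
toy_index = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}
```

With this toy index, `nearest([0.0, 0.0, 1.0], toy_index)` returns `"invoice"`, because that vector points in nearly the same direction as the query.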

Once a generative AI model reaches a certain level of fine-tuning, it does not need as much data to generate results. For example, a speech-generating AI model may be trained on thousands of hours of speech recordings but may only need a few seconds of sample recordings to realistically mimic someone's voice.

Advantages and Disadvantages of Generative AI

Generative AI models have many potential advantages, including helping content creators brainstorm ideas, providing better chatbots, enhancing research, improving search results, and providing entertainment.

However, generative AI also has its drawbacks, such as hallucinations and other inaccuracies, data leaks, unintentional plagiarism or misuse of intellectual property, malicious response manipulation, and biases.

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is a type of generative AI model that handles language and can generate text, including human speech and programming languages. Popular LLMs include ChatGPT, Llama, Bard, Copilot, and Bing Chat.

What is an AI Image Generator?

An AI image generator works similarly to LLMs but focuses on generating images instead of text. DALL-E and Midjourney are examples of popular AI image generators.

Does Cloudflare Support Generative AI Development?

Cloudflare allows developers and businesses to build their own generative AI models and provides tools and platform support for this purpose. Its services, Vectorize and Cloudflare Workers AI, help developers generate and store embeddings on the global network and run generative AI tasks on a global GPU network.

Explore all Generative AI Tools



85% of Australian e-commerce content found to be plagiarised

Optidan Published a Report Recently

OptiDan, an Australia-based specialist in AI-driven SEO strategies & Solutions, has recently published a report offering fresh insights into the Australian e-commerce sector. It reveals a striking statistic about content across more than 780 online retailers: 85% of it is plagiarised. This raises severe questions about authenticity and quality in the e-commerce world, with possibly grave implications for both consumers and retailers.

Coming from the founders of OptiDan, this report illuminates an issue that has largely fallen under the radar: content duplication. The report indicates that suppliers often supply identical product descriptions to several retailers, resulting in a sea of online stores harbouring the same content. This lack of uniqueness unfortunately leads to many sites being pushed down in search engine rankings, due to algorithms detecting the duplication. This results in retailers having to spend more on visibility through paid advertising to compensate.

Key Findings in Analysis

Key findings from OptiDan's research include a worrying lack of originality, with 86% of product pages not even meeting basic word count standards. Moreover, even among those that do feature sufficient word counts, plagiarism is distressingly widespread. Notably, OptiDan's study presented clear evidence of the detrimental impacts of poor product content on consumer trust and return rates.

Founder and former retailer JP Tucker notes, "Online retailers anticipate high product ranking by Google and expect sales without investing in necessary, quality content — an essential for both criteria." Research from 2016 by Shotfarm corroborates these findings, suggesting that 40% of customers return online purchases due to poor product content.

Tucker's industry report reveals that Google usually accepts up to 10% of plagiarism to allow for the use of common terms. Nonetheless, OptiDan's study discovered that over 85% of audited product pages were above this limit. Further, over half of the product pages evidenced plagiarism levels of over 75%.
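The report does not describe how its plagiarism percentages were computed. As a purely illustrative sketch, one simple way to estimate how much of a product page is duplicated is to compare overlapping word sequences (shingles) between the page and a reference text. The function names and the three-word shingle size are assumptions, not OptiDan's or Google's method.

```python
def shingles(text, k=3):
    """Return the set of k-word sequences that appear in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def duplicated_share(page, reference, k=3):
    """Fraction of the page's shingles that also appear in the reference."""
    page_shingles = shingles(page, k)
    if not page_shingles:
        return 0.0
    return len(page_shingles & shingles(reference, k)) / len(page_shingles)
```

A supplier description pasted unchanged scores 1.0, while a fully rewritten description scores 0.0, so a threshold such as the 10% mentioned above could be checked against this share.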

"Whilst I knew the problem was there, the high levels produced in the Industry report surprised me," said Tucker, expressing the depth of the issue. He's also noted the manufactured absence of the product title in the product description, a crucial aspect of SEO, in 85% of their audited pages. "Just because it reads well, doesn't mean it indexes well."

OptiDan has committed itself to transforming content performance for the online retail sector, aiming to make each brand's content work for them, instead of against them. Tucker guarantees the effectiveness of OptiDan's revolutionary approach: "We specialise in transforming E-commerce SEO content within the first month, paving the way for ongoing optimisation and reindexing performance."

OptiDan has even put a money-back guarantee on its Full Content Optimisation Service for Shopify & Shopify Plus partners. This offer is expected to extend to non-Shopify customers soon. For now, all retailers can utilise a free website audit of their content through OptiDan.

Optidan – Top AI SEO Agency

OptiDan is a trusted AI SEO services provider based in Sydney, Australia. Our services include Bulk Content Creation SEO, Plagiarism Detection SEO, AI-based SEO, Machine Learning AI, Robotic SEO Automation, and Semantic SEO.

We’re not just a service provider; we’re a partner, a collaborator, and a fellow traveller on this exciting digital journey. Together, let’s explore the limitless possibilities and redefine digital success.

Intrigued to learn more? Let’s connect! Schedule a demo call with us and discover how OptiDan can transform your digital performance.


#Shopify SEO consultant#E-commerce SEO solutions#Shopify integration services#AI technology for efficient SEO#SEO content creation services#High-volume content writing#Plagiarism removal services#AI-driven SEO strategies#Rapid SEO results services#Automated SEO solutions#Best Shopify SEO strategies for retailers


Best Data Science Courses Online - Skillsquad

Why is data science important?

Data science is important because it combines tools, methods, and technology to generate meaning from data. Modern organizations are inundated with data; there is a proliferation of devices that can automatically collect and store information. Online systems and payment portals capture more data in the fields of e-commerce, medicine, finance, and every other aspect of human life. We have text, audio, video, and image data available in vast quantities.

Best future of data science with Skillsquad

Artificial intelligence and machine learning innovations have made data processing faster and more efficient. Industry demand has created an ecosystem of courses, degrees, and job positions within the field of data science. Because of the cross-functional skill set and expertise required, data science shows strong projected growth over the coming decades.

What is data science used for?

Data science is used in four main ways:

1. Descriptive analysis

2. Diagnostic analysis

3. Predictive analysis

4. Prescriptive analysis

1. Descriptive analysis: -

Descriptive analysis examines data to gain insights into what happened or what is happening in the data environment. It is characterized by data visualizations such as pie charts, bar charts, line graphs, tables, or generated narratives. For example, a flight booking service might record data such as the number of tickets booked each day. Descriptive analysis will reveal booking spikes, booking slumps, and high-performing months for this service.

2. Diagnostic analysis: -

Diagnostic analysis is a deep dive or detailed data examination to understand why something happened. It is characterized by techniques such as drill-down, data discovery, data mining, and correlations. Multiple data operations and transformations may be performed on a given data set to discover unique patterns in each of these techniques.

3. Predictive analysis: -

Predictive analysis uses historical data to make accurate forecasts about data patterns that may occur in the future. It is characterized by techniques such as machine learning, forecasting, pattern matching, and predictive modeling. In each of these techniques, computers are trained to infer causal connections in the data.

4. Prescriptive analysis: -

Prescriptive analysis takes predictive data to the next level. It not only predicts what is likely to happen but also suggests an optimal response to that outcome. It can analyze the likely implications of different choices and recommend the best course of action. It uses graph analysis, simulation, complex event processing, neural networks, and recommendation engines from machine learning.
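The flight booking example from the descriptive analysis item can be sketched in a few lines. The booking figures below are invented, and the spike rule (more than 1.5 times the daily average) is an arbitrary assumption chosen only to show the idea.

```python
from collections import defaultdict
from statistics import mean


def monthly_totals(daily):
    """Sum tickets per month from a {"YYYY-MM-DD": tickets} mapping."""
    totals = defaultdict(int)
    for date, tickets in daily.items():
        totals[date[:7]] += tickets  # "YYYY-MM" prefix identifies the month
    return dict(totals)


def spikes(daily, factor=1.5):
    """Return the dates whose bookings exceed `factor` times the daily average."""
    average = mean(daily.values())
    return sorted(d for d, t in daily.items() if t > factor * average)


# Hypothetical booking counts.
bookings = {
    "2024-01-05": 100,
    "2024-01-06": 110,
    "2024-02-10": 90,
    "2024-02-14": 300,
}
```

Here `spikes(bookings)` flags only `2024-02-14`, and `monthly_totals(bookings)` shows February (390 tickets) outperforming January (210).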

Different data science technologies: -

Data science practitioners work with complex technologies such as:

- Artificial intelligence: Machine learning models and related software are used for predictive and prescriptive analysis.

- Cloud computing: Cloud technologies have given data scientists the flexibility and processing power required for advanced data analytics.

- Internet of things: IoT refers to various devices that can automatically connect to the internet. These devices collect data for data science initiatives. They generate massive amounts of data that can be used for data mining and data extraction.

- Quantum computing: Quantum computers can perform complex calculations at high speed. Skilled data scientists use them for building complex quantitative algorithms.

We are providing the Best Data Science Courses Online

AWS Certification Program

Full Stack Java Developer Training Courses


Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Digital Marketing Course in New Chandkheda

1. Digital Marketing Course in New Chandkheda Ahmedabad Overview

2. Personal Digital Marketing Course in New Chandkheda – Search Engine Optimization (SEO)

What are Search Engines and Basics?

HTML Basics.

On Page Optimization.

Off Page Optimization.

Essentials of good website designing & Much More.

3. Content Marketing

Content Marketing Overview and Strategy

Content Marketing Channels

Creating Content

Content Strategy & Challenges

Image Marketing

Video Marketing

Measuring Results

4. Website Structuring

What is a Website? Understanding Websites

How to register Site & Hosting of site?

Domain Extensions

5. Website Creation Using WordPress

Web Page Creation

WordPress Themes, Widgets, Plugins

Contact Forms, Sliders, Elementor

6. Blog Writing

Blogs Vs Website

How to write blogs for website

How to select topics for blog writing

AI tools for Blog writing

7. Google Analytics

Introduction

Navigating Google Analytics

Sessions

Users

Traffic Source

Content

Real Time Visitors

Bounce Rate%

Customization

Reports

Actionable Insights

Making Better Decisions

8. Understand Acquisition & Conversion

Traffic Reports

Events Tracking

Customization Reports

Actionable Insights

Making Better Decisions

Comparison Reports

9. Google Search Console

Website Performance

URL Inspection

Accelerated Mobile Pages

Google index

Crawl

Security issues

Search Analytics

Links to your Site

Internal Links

Manual Actions

10. Voice Search Optimization

What is voice engine optimization?

How do you implement voice search optimization?

Why you should optimize your website for voice search?

11. E Commerce SEO

Introduction to E commerce SEO

What is e-commerce SEO?

How Online Stores Can Drive Organic Traffic

12. Google My Business: Local Listings

What is Local SEO

Importance of Local SEO

Submission to Google My Business

Completing the Profile

Local SEO Ranking Signals

Local SEO Negative Signals

Citations and Local Submissions

13. Social Media Optimization

What is Social Media?

How does social media help business?

Establishing your online identity.

Engaging your Audience.

How to use Groups, Forums, etc.

14. Facebook Organic

How can Facebook be used to aid my business?

Developing a useful Company / fan Page

Establishing your online identity.

Engaging your Audience, Types of posts, post scheduling

How to create & use Groups

Importance of Hashtags & how to use them

15. Twitter Organic

Basic concepts – from optimal setup and creating a Twitter business presence to advanced marketing procedures and strategies.

How to use Twitter

What are hashtags, Lists

Twitter Tools

Popular Twitter Campaigns

16. LinkedIn Organic

Your Profile: Building quality connections & getting recommendations from others

How to use Groups-drive traffic with news & discussions

How to create LinkedIn Company Page & Groups

Engaging your Audience.

17. YouTube Organic

How to create YouTube channel

YouTube Keyword Research

Publish a High Retention Video

YouTube ranking factors

YouTube Video Optimization

Promote Your Video

Use of playlists

18. Video SEO

YouTube Keyword Research

Publish a High Retention Video

YouTube Ranking Factors

YouTube Video Optimization

19. YouTube Monetization

YouTube channel monetization policies

How Does YouTube Monetization Work?

YouTube monetization requirements

20. Social Media Tools

What are the main types of social media tools?

Top Social Media Tools You Need to Use

Tools used for Social Media Management

21. Social Media Automation

What is Social Media Automation?

Social Media Automation/ Management Tool

Buffer/ Hootsuite/ Postcron

Setup Connection with Facebook, Twitter, Linkedin, Instagram, Etc.

Add/ Remove Profiles in Tools

Post Scheduling in Tools

Performance Analysis

22. Facebook Ads

How to create Business Manager Accounts

What is Account, Campaign, Ad Sets, Ad Copy

How to Create Campaigns on Facebook

What is Budget & Bidding

Difference Between Reach & Impressions

Facebook Retargeting

23. Instagram Ads

Text Ads and Guidelines

Image Ad Formats and Guidelines

Landing Page Optimization

Performance Metrics: CTR, Avg. Position, Search Term Report, Segment Data Analysis, Impression Shares

AdWords Policies, Ad Extensions

24. LinkedIn Ads

How to create Campaign Manager Account

What is Account, Campaign Groups, Campaigns

Objectives for Campaigns

Bidding Strategies

Detail Targeting

25. YouTube Advertising

How to run Video Ads?

Types of Video Ads:

Skippable in Stream Ads

Non Skippable in stream Ads

Bumper Ads

Bidding Strategies for Video Ads

26. Google PPC

Ad-Words Account Setup

Creating Ad-Words Account

Ad-Words Dash Board

Billing in Ad-Words

Creating First Campaign

Understanding purpose of Campaign

Account Limits in Ad-Words

Location and Language Settings

Networks and Devices

Bidding and Budget

Schedule: Start date, end date, ad scheduling

Ad delivery: Ad rotation, frequency capping

Ad groups and Keywords

27. Search Ads/ Text Ads

Text Ads and Guidelines

Landing Page Optimization

Performance Metrics: CTR, Avg. Position, Search Term Report, Segment Data Analysis, Impression Shares

AdWords Policies, Ad Extensions

CPC bidding

Types of Keywords: Exact, Broad, Phrase

Bids & Budget

How to create Text ads

28. Image Ads

Image Ad Formats and Guidelines

Targeting Methods: Keywords, Topics, Placement Targeting

Performance Metrics: CPM, vCPM, Budget

Report, Segment Data Analysis, Impression Shares

Frequency Capping

Automated rules

Target Audience Strategies

29. Video Ads

How to Run Video Ads

Types of Video Ads

Skippable in stream ads

Non-skippable in stream ads

Bumper Ads

How to link Google AdWords Account to YouTube Channel

30. Discovery Ads

What are Discovery Ads

How to Create Discovery Ads

Bidding Strategies

How to track conversions

31. Bidding Strategies in Google Ads

Different Bidding Strategies in Google AdWords

CPC bidding, CPM bidding, CPV bidding

How to calculate CTR

What are impressions, impression shares

32. Performance Planner

33. Lead Generation for Business

Why Lead Generation Is Important?

Understanding the Landing Page

Understanding Thank You Page

Landing Page Vs. Website

Best Practices to Create Landing Page

Best Practices to Create Thank You Page

What Is A/B Testing?

How to Do A/B Testing?

Converting Leads into Sale

Understanding Lead Funnel

34. Conversion Tracking Tool

Introduction to Conversion Optimization

Conversion Planning

Landing Page Optimization

35. Remarketing and Conversion

What is conversion

Implementing conversion tracking

Conversion tracking

Remarketing in AdWords

Benefits of remarketing strategy

Building remarketing list & custom targets

Creating remarketing campaign

36. Quora Marketing

How to Use Quora for Marketing

Quora Marketing Strategy for Your Business

37. Growth Hacking Topic

Growth Hacking Basics

Role of Growth Hacker

Growth Hacking Case Studies

38. Introduction to Affiliate Marketing

Understanding Affiliate Marketing

Sources to Make money online

Applying for an Affiliate

Payments & Payouts

Blogging

39. Introduction to Google AdSense

Basics of Google AdSense

AdSense code installation

Different types of Ads

Increasing your profitability through AdSense

Effective tips in placing video, image and text ads into your website correctly

40. Google Tag Manager

Adding GTM to your website

Configuring trigger & variables

Set up AdWords conversion tracking

Set up Google Analytics

Set up Google Remarketing

Set up LinkedIn Code

41. Email Marketing

Introduction to Email Marketing basics

How Email Marketing works

Building an Email List

Creating Email Content

Optimising Email Campaigns

CAN-SPAM Act

Email Marketing Best Practices

42. SMS Marketing

Setting up account for Bulk SMS

Naming the Campaign & SMS

SMS Content

Character limits

SMS Scheduling

43. Media Buying

Advertising: Principles, Concepts and Management

Media Planning

44. WhatsApp Marketing

WhatsApp Marketing Strategies

WhatsApp Business Features

Business Profile Setup

Auto Replies

45. Influencer Marketing

Major topics covered: identifying influencers, measuring them, and establishing a relationship with them. Also includes a walkthrough of influencer marketing case studies.

46. Freelancing Projects

How to work as a freelancer

Different websites for getting projects on Digital Marketing

47. Online Reputation Management

What Is ORM?

Why We Need ORM

Examples of ORM

Case Study

48. Resume Building

How to build resume for different job profiles

Platforms for resume building

Which points you should add in Digital Marketing Resume

49. Interview Preparation

Dos and Don’ts for Your First Job Interview

How to prepare for interview

Commonly asked interview questions & answers

50. Client Pitch

How to send quotation to the clients

How to decide budget for campaign

Quotation formats

51. Graphic Designing: Canva

How to create images using tools like Canva

How to add effects to images

52. Analysis of Other Websites


Azure Data Engineering Tools For Data Engineers

Azure is Microsoft’s cloud computing platform, and it offers a wide range of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that fit an organization’s specific requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully managed, serverless data integration tool designed to handle data at scale. It enables data engineers to ingest, transform, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
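To make the idea of processing fast-streaming data more concrete, here is a minimal sketch of one common streaming pattern: counting events in fixed, non-overlapping time windows (often called tumbling windows). This is plain Python, not Stream Analytics itself or its query language; the function name, the event layout, and the sample values are all assumptions made for illustration.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) in fixed, non-overlapping windows.

    `events` is an iterable of (epoch_seconds, key) pairs; this layout is a
    hypothetical stand-in for whatever a real stream would deliver.
    """
    counts = Counter()
    for epoch_seconds, key in events:
        # Round the timestamp down to the start of its window.
        window_start = epoch_seconds - (epoch_seconds % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical readings: (seconds since start, device id)
sample_events = [(0, "a"), (4, "a"), (9, "b"), (10, "a"), (19, "b")]
print(tumbling_window_counts(sample_events, window_seconds=10))
```

With a 10-second window, the first three events fall into the window starting at 0 and the last two into the window starting at 10, so each device gets one count per window it appears in.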

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.
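As a small illustration of how a pipeline might organize files in a data lake, the sketch below builds a date-partitioned folder URI. It assumes Data Lake Storage Gen2, which is addressed with the `abfss://` scheme; the account, container, and dataset names and the `year=/month=/day=` folder layout are hypothetical choices, not something the service requires.

```python
from datetime import date


def adls_partition_uri(account, container, dataset, day):
    """Build an abfss:// URI for a date-partitioned folder.

    The year=/month=/day= layout is an assumed convention for this sketch.
    """
    partition = f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}"
    return (
        f"abfss://{container}@{account}.dfs.core.windows.net/"
        f"{dataset}/{partition}/"
    )


# Hypothetical storage account, container, and dataset names.
print(adls_partition_uri("examplelake", "raw", "sales", date(2024, 3, 7)))
```

Partitioning folders by date is one common way to let downstream jobs read only the days they need instead of scanning the whole dataset.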

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and instant scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure Database for MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training
