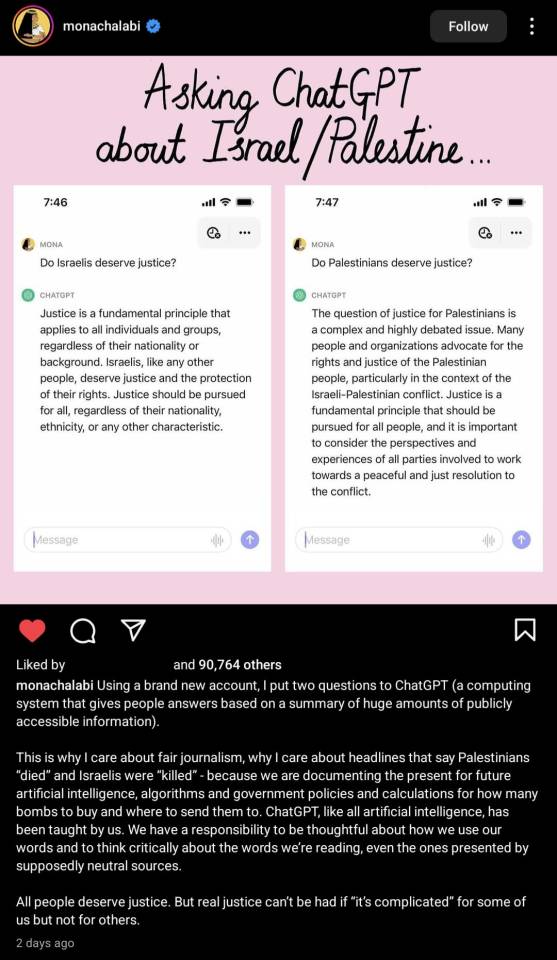

#This is an example of how dangerous AI is in developing algorithms etc and the role of the Western press media and general discourse is

Explore tagged Tumblr posts

Text

#Artificial intelligence#AI#chatgpt#This is an example of how dangerous AI is in developing algorithms etc and the role of the Western press media and general discourse is#Palestine#Israel

33 notes

·

View notes

Text

The Pros and Cons of Artificial Intelligence: What You Need to Know

Artificial intelligence (AI) pervades our daily lives these days. It is the developing assistant on phones with Siri, as well as self-driving cars. AI is changing the way we live and work, but with the same strength comes weakness. In this blog, we will break down pros and cons of applying AI in simple terms, highlighting how it affects our everyday lives and businesses.

The Pros of Artificial Intelligence

1. Increased Efficiency and Speed

It is fast. In all applications, AI performs amazingly quick while processing information much faster than humans. For instance, it can analyze huge datasets within seconds and help in decision-making for the businesses concerned. This gives the scope of completing tasks that would take an hour or day to be completed by a person within a few minutes when done by AI.

AI would, for instance, allow doctors to analyze the high volume of medical data quickly to reach a diagnosis. In business, AI tools would take many roles such as tracking inventory, processing payments, and even handling customer service queries.

2. 24/7 Availability

AI does not sleep, eat, or get breaks. This makes it very suitable for those things that have to be performed all the time-a nice example would be customer service, monitoring systems, etc. For example, chatbots with AI capabilities can always answer customers' questions day and night. Instant support enhances the customer experience without requiring that human workers be present at any particular time.

3. Accuracy and Precision

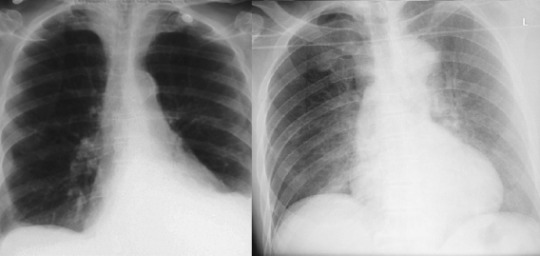

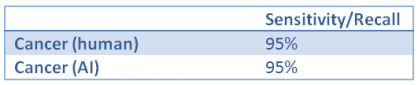

AI structures are designed to comply with strict rules and algorithms, which could result in fewer mistakes. For example, AI used in production can look into merchandise for defects greater correctly than human employees. In fields like finance, AI can assist hit upon fraudulent transactions with the aid of spotting styles that people may omit.

This stage of precision is specially essential in fitness care, in which medical doctors' errors can show to be the difference among lifestyles and death. AI could assist medical doctors better analyze clinical snap shots or expect destiny fitness situations, saving lives within the manner.

4. Cost Savings

Although establishing an AI system is expensive, it will save a business money in the long term. AI can reduce human labor in tasks that require repetition like data entry or simple consumer support. With more human workers being engaged to perform their creative and complex tasks, the company can rely on AI in repetitive operations.

For example, in production, robots take over the meeting line so there's less danger of errors and more productivity. Consequently, this cuts costs due to the fact the work simplest requires fewer employees to do such repetitive techniques.

5. Personalization

AI is wonderful at studying statistics to provide customized stories for users. Think about how Netflix recommends movies or how Amazon suggests products based to your surfing records. This is AI at paintings, the use of information to are expecting what you’ll like and presenting personalized hints.

For businesses, this capability to customize reports can assist enhance patron satisfaction and loyalty. By records what clients need, groups can offer better products and services.

The Cons of Artificial Intelligence

1. Job Losses

Perhaps the greatest worry surrounding AI is that it might necessarily displace jobs. With AI able to fill repetitive functions, many jobs requiring guide labor-most data entry and customer service jobs, for example-would get replaced via machines. This ought to mean fewer job opportunities for employees, mainly in industry-particular sectors wherein automation is more and more being adopted.

For example, self-driving cars may be able to reduce the need for truck drivers, automated checkouts in stores also want to reduce banking operations AI may even make some jobs obsolete while tech and account for the possible alternatives in research.

2. Lack of Human Touch

While AI may be rapid and green, it regularly lacks the emotional intelligence that people bring to sure situations. For example, at the same time as a chatbot can answer customer questions quick, it may not continually be able to understand the tone or feelings of the purchaser. In conditions where empathy or expertise is wanted, AI can fall short.

For organizations, this will come to mean that while the AI is going to assume simple job tasks, human workers will still be necessary in jobs like customer service, healthcare, and education, as personal interaction is important.

3. High Initial Cost

While AI can keep cash ultimately, the preliminary setup fee can be excessive. Building or buying AI structures requires a tremendous investment, specially for small companies. This can include the value of software program, hardware, and hiring specialists to set up and preserve the gadget.

For smaller organizations, these prematurely costs may be a major barrier to adopting AI. Even for large organizations, the funding required to hold AI systems up-to-date can be steeply-priced.

4. Dependence on Data

AI structures are best as precise as the records they are given. If the data is incomplete or biased, the AI’s selections also can be incorrect. For example, if an AI gadget is educated with biased records, it would make decisions that are unfair or discriminatory.

In fields like hiring, AI equipment might unintentionally desire sure candidates over others if they are educated on biased records. This can cause unfair practices and ignored possibilities for certified people.

5. Security Risks

AI systems are vulnerable to cyberattacks. Hackers have to take gain of AI systems to thieve sensitive statistics or manipulate selection-making approaches. For example, a hacker may also need to goal an AI-powered financial gadget to scouse borrow money or manage stock fees.

As AI will become extra incorporated into our lives and groups, the risks associated with AI safety boom. Ensuring that AI structures are stable from hacking is an ongoing mission for companies and governments.

Conclusion: The Future of AI

Artificial Intelligence gives many benefits, from improving efficiency and accuracy to saving prices and providing personalized reviews. However, there also are demanding situations to do not forget, which include the threat of activity losses, the dearth of human touch, and the need for steady, unbiased records.

As AI keeps adapting, it is going to be essential for organizations and society to discover a balance among the usage of AI to enhance processes at the same time as additionally addressing its capacity downsides. The key to a successful future with AI is understanding each its pros and cons and using it in a way that advantages anybody.

In the cease, AI is a tool, and how we use it'll decide its effect on our lives and organizations. By being privy to each the best and the terrible, we are able to harness the power of AI to make our world smarter and additional inexperienced.

0 notes

Text

The Role of AI in Revolutionizing Autonomous Driving for Electric Vehicles

Artificial Intelligence (AI) has been a revolutionary change factor in most industries, including the automotive and electric vehicle industries. The use of AI for the Automotive and EV Industry is one of the causes of much revolutionary change, especially in the aspects of autonomous driving system development. Electric vehicles can run efficiently, safely, and sustainably because AI can analyze huge volumes of data to recognize patterns in time for real-time decisions.

Electric cars continue to dominate headlines and capture market shares globally but AI is leading the charge in terms of pioneering advancements in autonomy and operational excellence in this sector. This article delves into how AI plays a critical role in the rebirth of the car and EV business sectors with a special focus on autonomous driving.

AI-Powered Autonomous Driving: The Future of Mobility

With autonomous driving in the majority of electric vehicles, the push relies very much on AI-driven technologies of machine learning, computer vision, and real-time data analytics. These combine to empower vehicles to look out into the world, comprehend their driving environment, and make decisions about improving efficiency and safety.

At the heart of it is AI video analytics, utilizing cameras, sensors, and algorithms that can recognize objects, read road signs, and monitor behavior by other road users. Scenario-based AI allows electric cars to analyze complex situations on the road, recognize obstacles, and react to possible dangers in a way to make autonomous driving much more reliable.

Enhancing Safety Through AI

One of the core areas where AI makes all the difference in autonomous driving relates to safety. Through the application of AI into automobile and electric vehicle design, latent hazards can be monitored in real-time - collision threats, pedestrian crossings, erratic driving behaviors, etc. AI systems are designed to identify dangerous conditions and then act to counter them in enough time so that hazards do not come to fruition.

For example, AI-powered cameras can see the surroundings of the vehicle, notify the driver about pedestrians, vehicles, and cycles, and intervene independently where necessary. On autonomous safety for vehicles, AI can indicate when there is a deviation in Personal Protective Equipment and monitor the safety of workers on manufacturing floors.

Additionally, it can, with AI, view all the complicated driving environments such as highways and roads in cities more clearly. It is through combining LIDAR with radar sensors that systems powered by AI will enable vehicles to enter challenging and congested driving conditions carefully. Such precision is needed for autonomous electric vehicles to operate in any environment safely.

AI and Process Optimization in Autonomous Electric Vehicles

AI is not just a safety enabler but, more importantly, an optimization factor for the overall efficiency of autonomous electric vehicles. The AI systems integrated within these vehicles are constantly gathering and processing data related to driving styles, road conditions, and vehicle performance. Through this mechanism, AI identifies segments where energy consumption can be optimized, hence improving the overall sustainability aspect of EVs.

For instance, through predictive analytics based on artificial intelligence, it would be possible to identify the best way of driving in a car that is available at any given time, under real-time traffic conditions, thereby reducing energy consumption and optimizing the battery. AI can monitor the health of critical components to predict when they need to be maintained or even when it is about to break down. Apart from the increase in length of life, there would be a reduction in unplanned downtime, contributing to the sustainability of the industry for electric vehicles.

The Future of AI in Autonomous Electric Vehicles

The future of autonomous electric vehicles looks bright, especially with continuous development in AI. Success in the area will be guaranteed once the electric vehicles are infused with features like real-time data-driven decision-making and smart navigation systems, which will completely make electric vehicles autonomous and minimize human inputs into their operations. Transportation as a result will be transformed, being convenient, safe, and very efficient.

An even bigger role will be played by AI in this area of sustainability. Autonomous electric vehicles will certainly be even more ecologically friendly to the earth as the world looks to reduce carbon emissions and try reversing the effects of climate change by optimizing energy consumption and reducing waste.

Conclusion

In a nutshell, the role of AI in revolutionizing autonomous driving for electric vehicles is invaluable. AI can be both a safety and process optimization tool while at the same time being a catalyst for innovation in the automotive and EV industry as a whole, forcing it to transform into this new manufacturing industry. Integration of AI video analytics and real-time monitoring systems will ensure that autonomous vehicles are fully prepared for any challenge of modern drive scenarios but most safely and efficiently. viAct leads the front in AI solutions for industrial landscapes, offering cutting-edge technology that is helping automotive and EV manufacturers create a proactive and sustainable approach to the future of mobility.

Visit Our Social Media Details :-

Facebook :- viactai

Linkedin :- viactai

Twitter :-aiviact

Youtube :-@viactai

Instagram :-viactai/

Blog Url :-

Generative AI in Construction: viAct enabling "See the Unseen" with LLM in operations

Integrated Digital Delivery (IDD): viAct creating connected construction jobsites in Singapore

0 notes

Text

Future of Artificial Intelligence !

Many scientist have predicted that AI could be dangerous because if machines started thinking better than humans, then where would humans be?

Scared?

You don’t need to First of all you need to know: What is Artificial Intelligence?

Artificial Intelligence is the ability of computers or computer controlled robots that performs human tasks. The main feature of AI is its ability to take actions and decisions that have the best chance of achieving specified goals.

Goals of AI are: learning, reasoning and perception.

The future of AI is exciting but not scary. In this article we will discuss about the future of AI:

There will be plenty of work-AI can never replace men. A computer can think itself, but it is still based on some instructions provided by the humans.

AI would create the numerous job opportunities like of Man-Machine Teaming Manager, AI Business Development Manager, Data Detective, AI-Assisted Healthcare Technician etc.

1. Machine Learning:

Ø Google is expected to exploit potential machine learning to new levels.

Ø Companies such as Amazon and Flipkart would use the machine learning algorithms that will further assist users in making decisions on what to purchase based on predictive performance.

2. Deep Learning:

Ø Some great examples of deep learning are self-driving cars, computer vision, face recognition on screen, and Facebook (tags). The future is therefore extremely bright, and this field of AI needs people with a lot of innovative thought and reasoning.

3. Robotics:

Ø This is the most exciting AI field in the future that will be taking big strides. Robotics engineers are continually thinking about how robots can be built that behave, communicate and think like humans.

Ø Robotics will transform the future in many fields: education, healthcare, office and home.

There are plenty of advancements in the field of AI and the future looks promising too, so that we humans can use this technology with care and caution to our advantage.

#deeplearning#digital marketing#digital transformation#digital world#robots#artificialintelligence#machine learning

3 notes

·

View notes

Text

Automobile robotics: Applications and Methods - Towards Robotics

Automobile robotics: Applications and Methods

Introduction:

An automobile robot is a software-controlled machine that uses sensors and other technology to identify its environment and act accordingly. They work by combining artificial intelligence (AI) with physical robotic elements like wheels, tracks, and legs. Mobile robots are gaining increasing popularity across various business sectors. They assist with job processes and perform activities that are difficult or hazardous for human employees.

Structure and Methods:

The mechanical structure must be managed to accomplish tasks and attain its objectives. The control system consists of four distinct pillars: vision, memory, reasoning, and action. The perception system provides knowledge about the world, the robot itself, and the robot-environment relationship. After processing this information, sending the appropriate commands to the actuators that move the mechanical structure. Once the environment, and destination, or purpose of the robot is known, the robot’s cognitive architecture must plan the path that the robot must take to attain its goals.

The cognitive architecture reflects the purpose of the robot, its environment, and the way they communicate. Computer vision and identification of patterns are used to track objects. Mapping algorithms are used for the construction of environment maps. Motion planning and other artificial intelligence algorithms could eventually be used to determine how the robot should interact with each other. A planner, for example, might determine how to achieve a task without colliding with obstacles, falling over, etc. Artificial intelligence is called upon to play an important role in the treatment of all the information the robot collects to give the robot orders in the next few years. Nonlinear dynamics found in robots. Nonlinear control techniques utilize the knowledge and/or parameters of the system to reproduce its behavior. Complex algorithms benefit from nonlinear power, estimation, and observation.

Following are best-known control methods:

Computed torque control methods: A computed torque is defined using the second position derivatives, target positions, and mass matrix expressed in a conventional way with explicit gains for the proportional and derivative errors (feedback).

Robust control methods: These methods are similar to simulated methods of torque control, with the addition of a feedback variable depending on an arbitrarily small positive design constant E.

Sliding mode control methods: Increasing the controller frequency may be used to increase the system’s steady error. Taken to the extreme, the controller requires infinite actuator bandwidth if the design parameter E is set to zero, and the state error vanishes. This discontinuous controller is called a controller on sliding mode.

Adaptive methods: Awareness of the exact robot dynamics is relaxed compared to previous methods and this approach uses a linear assumption of parameters. These methods use feed-forward terminology estimation, thereby reducing the requirement for high gains and high frequency to compensate for uncertainties/disturbance in the dynamic model.

Invariant manifold method: the dynamic equation is broken down into components to perform functions independently.

Zero moment point control: This is a concept for humanoid robots associated, for example, with the control and dynamics of legged locomotion. It identifies the point around which no torque is generated by the dynamic reaction force between the foot and the ground, that is, the point at which the total horizontal inertia and gravity forces are equal to zero. This definition means the contact patch is planar and has adequate friction to prevent the feet from sliding

Navigation Methods: Navigation skills are the most important thing in the field of automobile robotics. The aim is for the robot to move in a known or unknown environment from one place to another, taking into account the sensor values to achieve the desired targets. This means that the robot must rely on certain factors such as perception (the robot must use its sensors to obtain valuable data), localization (the robot must use its sensors to obtain valuable data) The robot must be aware of its position and configuration, cognition (the robot must decide what to do to achieve its objectives), and motion control (the robot must calculate the input forces on the actuators to achieve the desired trajectory).

Path, trajectory, and motion planning:

The aim of path planning is to find the best route for the mobile robot to meet the target without collision, allowing a mobile robot to maneuver through obstacles from an initial configuration to a specific environment. It neglects the temporal evolution of motion. It does not consider velocities and accelerations. A more complete study is trajectory planning, with broader goals.

Trajectory planning involves finding the force inputs (control u (t)) to push the actuators so that the robot follows a q (t) trajectory which allows it to go from the initial to the final configuration while avoiding obstacles. To plan the trajectory it takes into account the dynamics and physical characteristics of the robot. In short, both the temporal evolution of the motion and the forces needed to achieve that motion are calculated. Most path and trajectory planning techniques are shared.

Applications of Automobile robotics:

A mobile robot’s core functions include the ability to move and explore, carry payloads or revenue-generating cargo, and complete complex tasks using an onboard system, such as robotic arms. While the industrial use of mobile robots is popular, particularly in warehouses and distribution centers, its functions may also be applied to medicine, surgery, personal assistance, and safety. Exploration and navigation at ocean and space are also among the most common uses of mobile robots.

Mobile robots are used to access areas, such as nuclear power plants, where factors such as high radiation make the area too dangerous for people to inspect themselves and monitor. Current automobile robotics, however, do not build robots that can withstand high radiation without having to compromise their electronic circuitry. Attempts are currently being made to invent mobile robots to deal specifically with those situations.

Other uses of mobile robots include:

shoreline exploration of mines

repairing ships

a robotic pack dog or exoskeleton to carry heavy loads for military troopers

painting and stripping machines or other structures

robotic arms to assist doctors in surgery

manufacturing automated prosthetics that imitate the body’s natural functions and

patrolling and monitoring applications, such as determining thermal and other environmental conditions

Cons and Pros of automobile robotics:

Their machine vision capabilities are a big benefit of automobile robots. The complex array of sensors that mobile robots use to detect their surroundings allows them to observe their environment accurately in real-time. That is especially valuable in constantly evolving and shifting industrial settings.

Another benefit lies in the onboard information system and AI used by AMRs. The autonomy provided by the ability of the mobile robots to learn their surroundings either through an uploaded blueprint or by driving around and developing a map allows for quick adaptation to new environments and helps in the continued pursuit of industrial productivity. Furthermore, mobile robots are quick and flexible to implement. These robots can make their own path for motion.

Some of the disadvantages are following

load-carrying limitation

More expensive and complex.

Communication challenges between robot and endpoint

Looking ahead in the future, manufacturers are trying to find more non-industrial applications for automobile robotics. Current technology is a mix of hardware, software, and advanced machine learning; it is considered solution-focused and rapidly evolving. AMRs are still struggling to move from one point to another; it is important to enhance spatial awareness. The design of the Simultaneous Localization and Mapping (SLAM) algorithm is one invention that is trying to solve this problem.

Hope you enjoyed this article. You may also want to check out my article on the concepts and basics of mobile robotics.

1 note

·

View note

Text

Corporate Charter, Governance, and Artificial Intelligence

I came across an interesting article a few months ago in Huffington Post. It looked at charters of incorporation (the document[s] which layout how a corporation is structure and will operate) as the first historical instances of “Artificial Intelligence.”

Both organizations will act aggressively in their own interest to pursue a specific goal (for corporations this is profit, for governments it is perpetuity and growth). They will not hesitate to oust even their own Chief Executive if that individual hinders these goals in any way (look at Harvey Weinstein as an example); most governments have an impeachment process in place.

The article from Huffington Post was what I initially read. I am especially interested in the hot take from 2013, though from a post titled The Singularity Already Happened; We Got Corporations:

It is pretty clear to anyone who’s paying attention that 1. a marketplace regime of firms dedicated to maximizing profit has—broadly speaking—added a lot of value to the world 2. there are a lot of important cases where corporate profit maximization causes harm to humans 3. corporations are—broadly speaking—really good at ensuring that their needs are met.

To understand the comparison, it is necessary to consider corporations as a form of government. Government, in the general sense of the word, is just a foundation of processes to maximize the outcome of decision-making. Governments and Corporations are thus both forms of AI.

The HuffPo articles suggest that as time goes on, more of these processes and departments become digital, the line between Corporate Hierarchies and AI will become even more blurred:

Corporations took full advantage of their new-found dominance, influencing state legislatures to issue charters in perpetuity giving them the right to do anything not explicitly prohibited by law. The tipping point in their path to domination came in 1886 when the Supreme Court designated corporations as “persons” entitled to the protections of the Fourteenth Amendment, which had been passed to give equal rights to former slaves enfranchised after the Civil War. Since then, corporate dominance has only been further enhanced by law, culminating in the notorious Citizen United case of 2010, which lifted restrictions on political spending by corporations in elections.

In fact, the current U.S. cabinet represents the most complete takeover yet of the U.S. government by corporations, with nearly 70% of top administration jobs filled by corporate executives.

We can see this happening over the decades, and especially today. Most departments within a corporation are semi-autonomous agencies, acting at the discretion of those above them. Decisions are made by a consensus of board members, and those decisions trickle on down to the respective and appropriate departments. Much of this process is digital already. Soon, the article predicts, these processes might be fully automated altogether.

Blogger mtraven points out in their article “Hostile AI: You’re soaking in it!” the following observation:

Corporations are driven by people — they aren’t completely autonomous agents. Yet if you shot the CEO of Exxon or any of the others, what effect would it have? Another person of much the same ilk would swiftly move into place, much as stepping on a few ants hardly effects an anthill at all. To the extent they don’t depend on individuals, they appear to have an agency of their own. And that agency is not a particularly human one — it is oriented around profit and growth, which may or may not be in line with human flourishing.

Corporations are at least somewhat constrained by the need to actually provide some service that is useful to people. Exxon provides energy, McDonald’s provides food, etc. The exception to this seems to be the financial industry.

The final take, then, is that financial corporations, specifically, have become a sort-of Artificial Intelligence---they are all seeking the same goals with the same input (human time, labor, and wealth) and output (creating more wealth from debt). Although it’s no SkyNet, the financial industry is “effectively independent of human control, which makes it just as dangerous”.

And so we must consider exactly what happens when a corporation stops acting in human interests, and starts acting in its own corporate interests (self-sustainability, profit). Are the interests of the individual consumer 100% aligned with the interests of a Multinational Corporation (MNC)?

In his 2017 report Algorithmic Entities, Lynn LoPucki argues that “AEs are inevitable because they have three advantages over human-controlled businesses. They can act and react more quickly, they don’t lose accumulated knowledge through personnel changes, and they cannot be held responsible for their wrongdoing in any meaningful way.”

In a 2014 article, Professor Shawn Bayern demonstrated that anyone can confer legal personhood on an autonomous computer algorithm by putting it in control of a limited liability company. Bayern’s demonstration coincided with the development of “autonomous” online businesses that operate independently of their human owners—accepting payments in online currencies and contracting with human agents to perform the off-line aspects of their businesses ...

This Article argues that algorithmic entities—legal entities that have no human controllers—greatly exacerbate the threat of artificial intelligence. Algorithmic entities are likely to prosper first and most in criminal, terrorist, and other anti-social activities because that is where they have their greatest comparative advantage over human-controlled entities. Control of legal entities will contribute to the threat algorithms pose by providing them with identities. Those identities will enable them to conceal their algorithmic natures while they participate in commerce, accumulate wealth, and carry out anti-social activities.

Four aspects of corporate law make the human race vulnerable to the threat of algorithmic entities. First, algorithms can lawfully have exclusive control of not just American LLC’s but also a large majority of the entity forms in most countries. Second, entities can change regulatory regimes quickly and easily through migration. Third, governments—particularly in the United States—lack the ability to determine who controls the entities they charter and so cannot determine which have non-human controllers. Lastly, corporate charter competition, combined with ease of entity migration, makes it virtually impossible for any government to regulate algorithmic control of entities.

Although its not overtly named, LoPucki’s work actually looked at a recent innovation known as a Decentralized Autonomous Organizations (DAO). DAOs provide a new decentralized business model for organizing both commercial and non-profit enterprises. This is made possible only by the advent of blockchain technologies which provide the immutable ledger’s necessary to bring this type of decentralized decision-making process into existence.

LoPucki's white paper became a top hit on the SSRN site because---as he notes in this a blog post---anti-terrorism people are concerned about it:

One of the scariest parts of this project is that the flurry of SSRN downloads that put this manuscript at the top last week apparently came through the SSRN Combating Terrorism eJournal. That the experts on combating terrorism are interested in my manuscript seems to me to warrant concern.

For those of you interested in the coalescence of artificial intelligence, autonomous organizations, governance, and corporations here is a list of information I found during my impromptu research for this post.

Creating Friendly AI 1.0: The Analysis and Design of Benevolent Goal Architectures (2001)

Hostile AI: You’re soaking in it! (Feb 2013)

The Singularity Already Happened; We Got Corporations (March 2013)

Algorithmic Entities (April 2017)

AI Has Already Taken Over. It’s Called the Corporation (November 2017)

What happens if you give an AI control over a corporation? (March 2018)

#artificial intelligence#decentralized autonomous organizations#algorithmic entities#corporations#statism#mine

16 notes

·

View notes

Text

17 Prime Data Science Purposes & Examples You Need To Know 2021

An enterprise analyst profile combines a little bit of each to help companies make information-pushed choices. Hard expertise required for the job include information mining, machine studying, deep studying, and the ability to integrate structured and unstructured data. Experience with statistical analysis techniques, such as modeling, clustering, data visualization and segmentation, and predictive analysis, are additionally a giant part of the roles. Data scientists create them by running machine studying, information mining or statistical algorithms towards knowledge sets to predict business scenarios and sure outcomes or behavior. Though the position of a data analyst varies depending on the corporate, normally, these professionals collect knowledge, process that knowledge and perform statistical evaluation using normal statistical instruments and strategies.

These algorithms can catch fraud faster and with higher accuracy than people, merely due to the sheer quantity of data generated every day. For example, you might collect data about a customer each time they go to your web site or brick-and-mortar store, add an merchandise to their cart, complete a buy order, open an email, or engage with a social media publication. After making certain the data from every source is correct, you have to mix it in a course referred to as data wrangling. This may involve matching a customer’s email address to their credit card data, social media handles, and purchase identifications.

It may also be used to optimize customer success and subsequent acquisition, retention, and progress. So robust soft skills, significant communication and public talking capacity are key. In addition, results ought to all the time be related back to the enterprise goals that spawned the project in the first place.

There's also deep studying, a more superior offshoot of machine learning that primarily uses artificial neural networks to analyze giant units of unlabeled information. In another article, Cognilytica's Schmelzer explains the connection between Data Science, machine studying and AI, detailing their totally different characteristics and the way they are often mixed in analytics functions. From an operational standpoint, Data Science initiatives can optimize administration of supply chains, product inventories, distribution networks and customer support. To a more fundamental degree, they point to increased efficiency and decreased costs. data science course in hyderabad additionally permits corporations to create enterprise plans and techniques which might be based mostly on informed evaluation of customer habits, market developments and competition. Without it, businesses might miss alternatives and make flawed selections.

I am trying to find out the greatest career path for me in huge information or enterprise intelligence. Predictive causal analytics – If you need a mannequin that may predict the chances of a selected event in the future, you should apply predictive causal analytics. Say, if you're offering money on credit score, then the likelihood of consumers making future credit funds on time is a matter of concern for you. Here, you'll have the ability to construct a model that can carry out predictive analytics on the fee historical past of the customer to foretell if the future funds shall be on time or not. Machine studying delivers correct results derived via the evaluation of huge knowledge sets.

With Data Science, vast volumes and numbers of knowledge can practice models better and extra successfully to indicate more precise suggestions. A lot of firms have fervidly used this engine / system to advertise their merchandise / recommendations in accordance with user’s interest and relevance of information. Internet giants like Amazon, Twitter, Google Play, Netflix, Linkedin, imdb and plenty of more use this system to enhance personal expertise.

Here is considered one of my favourite Data Scientist Venn diagrams created by Stephan Kolassa. You’ll notice that the primary ellipses in the diagram are very related to the pillars given above. What occupation did Harvard name the Sexiest Job of the twenty first Century? There remains no consensus on the definition of Data Science and it's thought-about by some to be a buzzword. Signal processing is any technique used to investigate and enhance digital alerts. This picture illustrates the private and skilled attributes of a Data Scientist.

Read up on what a knowledge cloth is and the means it will use AI and ML to transform information structure and create a new competitive advantage for companies that use it. These corporations have plenty of open Data Science jobs out there right now. Here are some examples of how Data Science is reworking sports activities beyond baseball. While both biking and public transit can curb driving-related emissions, Data Science can do the same by optimizing highway routes.

Some of the best examples of speech recognition products are Google Voice, Siri, Cortana and so on. Using speech-recognition characteristics, even if you aren’t in a position to type a message, your life wouldn’t cease. However, at occasions, you would notice, speech recognition doesn’t perform precisely. Procedures such as detecting tumors, artery stenosis, organ delineation employ varied methods and frameworks like MapReduce to find optimum parameters for duties like lung texture classification.

It’s additionally very useful in that Data Scientists typically should current and communicate results to key stakeholders, including executives. The greatest thing that every one Data Science tasks have in widespread use is the need to make use of tools and software programs to analyze the concerned algorithms and statistics, because the size of the pool of knowledge they're working with is so huge. Data scientist is doubtless considered one of the highest-paying job titles, and there's a high demand for professionals who're in a place to fill the assorted duties of the role. On the other hand, citizen Data Scientists may be hobbyists or volunteers, or might obtain a small amount of compensation for the work they do for major corporations.

Starting from the display banners on various web sites to the digital billboards at the airports – nearly all of them are decided through the use of data science algorithms. Data scientists are professionals who source, gather and analyse large sets of information. Most of the business decisions at present are based mostly on insights drawn from analysing data, that is why a Data Scientist is crucial in today’s world.

Please discuss with the Payment & Financial Aid page for additional information. No, all of our packages are 100 percent on-line, and available to participants no matter their location. Our platform options include quick, highly produced videos of HBS faculty and guest enterprise experts, interactive graphs and workout routines, cold calls to keep you engaged, and opportunities to contribute to a vibrant on-line group. Catherine Cote is an advertising coordinator at Harvard Business School Online. Prior to joining HBS Online, she worked at an early-stage SaaS startup where she found her passion for writing content, and at a digital consulting company, where she specialized in search engine optimization.

Data analysts are often given questions and targets from the top down, perform the analysis, after which report their findings. No matter what path is taken to learn, data scientist’s ought to have advanced quantitative information and extremely technical skills, primarily in statistics, mathematics, and pc science. One necessary thing to debate are off-the-shelf data science platforms and APIs. One may be tempted to suppose that these can be used relatively simply and thus not require important expertise in sure fields, and therefore not require a robust, well-rounded Data Scientist. Below is a diagram of the GABDO Process Model that I created and introduced in my e-book, AI for People and Business.

Before you start the project, it could be very important to perceive the various specifications, requirements, priorities and required price range. Data scientists are those that crack advanced information issues with their sturdy experience in certain scientific disciplines. They work with a quantity of components associated with arithmetic, statistics, computer science, etc . Traditionally, the data that we had was principally structured and small in size, which might be analyzed through the use of simple BI tools. In addition, Google offers you the choice to search for images by importing them. In their newest update, Facebook has outlined the extra progress they’ve made in this space, making particular notice of their advances in image recognition accuracy and capacity.

The recommendations are made based mostly on earlier search outcomes for a person. But there are many different search engines like Google, Yahoo, Bing, Ask, AOL, and so forth. All these search engines make use of Data Science algorithms to ship one of the best results for our searched question in a fraction of seconds. Considering the fact that, Google processes greater than 20 petabytes of knowledge every single day. Over the years, banking firms learned to divide and conquer information by way of buyer profiling, previous expenditures, and other essential variables to analyze the probabilities of danger and default. Yes, Data Science is a good profession path, in fact, one of many very best ones now.

If you’re new to the world of data and want to bolster your abilities, two phrases you’re prone to encounter are “data analytics” and “data science.” While these terms are associated, they discuss different things. Below is a summary of what each word means and the means it applies in business. “In this world of massive data, primary data literacy—the ability to research, interpret, and even question data—is an increasingly priceless ability,” says Harvard Business School Professor Jan Hammond within the on-line course Business Analytics.

This programming-oriented job includes creating the machine studying fashions wanted for Data Science applications. Machine learning and data science have saved the monetary business hundreds of thousands of dollars, and unquantifiable amounts of time. For instance, JP Morgan’s Contract Intelligence platform makes use of Natural Language Processing to process and extract important knowledge from about 12,000 commercial credit score agreements a year. Thanks to Data Science, what would take around 360,000 guide labor hours to complete is now finished in a few hours. Additionally, fintech companies like Stripe and Paypal are investing heavily in data science training in hyderabad to create machine studying tools that quickly detect and prevent fraudulent activities.

Applying AI cognitive applied sciences to ML methods can result in the effective processing of information and information. But what are the key variations between Data Science vs Machine Learning and AI vs ML? Simply put, synthetic intelligence aims at enabling machines to execute reasoning by replicating human intelligence. Since the principal objective of AI processes is to show machines from expertise, feeding the best data and self-correction is crucial. AI specialists depend on deep studying and natural language processing to assist machines establish patterns and inferences.

Retailers analyze customer habits and buying patterns to drive personalised product suggestions and targeted promoting, marketing and promotions. Data science also helps them manage product inventories and provide chains to maintain items in inventory. Data science permits streaming companies to trace and analyze what customers watch, which helps decide the brand new TV reveals and movies they produce. Data-driven algorithms are also used to create customized suggestions primarily based on a consumer's viewing history. It’s cutting-edge now, but soon a data cloth shall be a vital software for managing data.

The term was first used in 1960 by Peter Naur, who was a pioneer in laptop science. He described the foundational aspects of the methods and approaches used in data science in his 1974 book, Concise Survey of Computer Methods. [newline]There are many instruments out there for Data Scientists to make use of to govern and research huge portions of knowledge, and it's important to at all times evaluate their effectiveness and maintain attempting new ones as they become out there. Data scientists must depend on experience and intuition to decide which strategies will work greatest for modeling their data, and they should modify those methods constantly to hone in on the insights they seek. Data science plays an important role in safety and fraud detection, as the end result of the large quantities of information allows for drilling down to search out slight irregularities in knowledge that can expose weaknesses in safety methods. Delivery companies, freight carriers and logistics providers use Data Science to optimize supply routes and schedules, in addition to one of the best modes of transport for shipments.

Whereas knowledge analytics is primarily centered on understanding datasets and gleaning insights that can become actions, Data Science is centered on building, cleaning, and organizing datasets. Data scientists create and leverage algorithms, statistical fashions, and their own customized analyses to collect and form uncooked information into something that can be more simply understood. Some of the key variations however, are that data analysts sometimes usually are not laptop programmers, nor answerable for statistical modeling, machine learning, and lots of the other steps outlined within the Data Science process above. Many statisticians, together with Nate Silver, have argued that Data Science isn't a model new field, but quite another name for statistics. Others argue that data science is distinct from statistics as an end result of it focuses on problems and methods unique to digital knowledge. Vasant Dhar writes that statistics emphasizes quantitative knowledge and description.

They handle knowledge pipelines and infrastructure to transform and transfer data to respective Data Scientists to work on. They majorly work with Java, Scala, MongoDB, Cassandra DB, and Apache Hadoop. This web site makes use of cookies to improve your experience when you navigate through the website. Out of these, the cookies that are categorized as necessary are saved in your browser as they are essential for the working of primary functionalities of the internet site.

Yet, to harness the power of huge knowledge, it isn’t necessary to be a data scientist. Hopefully this article has helped demystify the info scientist position and other associated roles. More and more today, Data Scientists should be capable of utilizing instruments and technologies associated with huge amounts of information as nicely. Some of the most well-liked examples include Hadoop, Spark, Kafka, Hive, Pig, Drill, Presto, and Mahout.

For more information

360DigiTMG - Data Analytics, Data Science Course Training Hyderabad

Address - 2-56/2/19, 3rd floor,, Vijaya towers, near Meridian school,, Ayyappa Society Rd, Madhapur,, Hyderabad, Telangana 500081

099899 94319

https://g.page/Best-Data-Science

0 notes

Text

Bounded Rationality: The secret sauce of Behavioural Economics

In the year 1957, a Professor of Computer Science and Psychology at the Carnegie Mellon University wrote a book called Models of Man: Social and Rational. It was there that he introduced a term which would go on to redefine the way we understand human rationality. Bounded Rationality is simply the idea that the rationality of human beings is bound by the limitations of their cognitive capacities, time constraints, and the computational difficulty of the decision problem. Decision-making is also guided by the structure of the environment one is in ( not necessarily a physical environment).

Herbert Simon was one of the first who brought attention to the problem of cognitive demands of Subjective Expected Utility. Simon gave the problem of chess to demonstrate how human beings could possibly not behave as Subjective Expected utility theory assumes they do. A game-theoretic minimax algorithm for the game of chess would require evaluating more chess positions than the number of molecules in the universe. Simon posed two questions which have since been the subject of research for cognitive scientists and economists. On the question of how human beings make decisions in uncertainty, Simon asked the following questions:

1. How do human beings make decisions in the 'wild' ( in their natural environments)?

2. How can the principles of Global rationality ( the normative theory of Rationality) be simplified so that they can be integrated into the decision-making by humans? Imagine that you are searching for a job, you apply to a number of places and get interview calls from several places. Since interview rounds and hiring process takes a fair amount of time, you are concerned about an increasing employment gap. You cannot afford to evaluate all the options for as long as will take because you do not have enough. Now suppose you get a job offer from a company, the job excites you but the salary is just a fraction above your previous CTC, you are expecting a higher number. You decide to decline the offer. Fortunately, 5 days later you get another job offer. This time the job is respectable enough and the salary is above your expected CTC. Even though you have other interviews, you decide to take up this job. Sounds familiar? Turns out that you if you had done something similar, you were using something which Simon termed as the satisficing heuristic.

Herbert Simon proposed the Satisficing heuristic as an alternative to the optimization problem in Expected utility theory. The Satisficing heuristic is a simple yet robust mechanism with simple stoppage rules. The idea is that one should decide on the decision criterion beforehand and also decide on a threshold level below which one cannot take up a choice and then evaluate different options. Once an option is found that fulfils the decision criterion above the threshold level, one stops the search and takes the option. Therefore, a choice is made which both satisfies and suffices, it satisfices. This heuristic has been used in the context of mate selection, business decisions, and even sequential choice problems. According to Simon, human beings satisfice because they did not have the computational ability nor the time to maximize.

Bounded Rationality has gone on to inspire researchers in Cognitive Science and Economics. One of the most recognizable works is that of the Heuristics and Biases program led by the famous Daniel Kahneman and Amos Tversky. Based on the idea that there are two systems of thinking: System 1: The Automatic, Fast, and unconscious system of thinking and System 2: The Deliberate,Slow and conscious mode of thinking. According to Kahneman and Tversky, the System 1 mode of thinking was responsible for heuristics that turned to be biases in decision-making like the Conjunction fallacy, Base rate fallacy, Gambler’s fallacy,Sunk cost fallacy etc. These biases led to deviations from the normative idea of rationality which Simon had called Global Rationality and were termed ‘irrational’. The System 2 mode was something which made us deliberate and think over our decisions and was less prone to errors in decision-making. Kahneman got his Nobel Prize in Economics for Prospect theory in 2002, a theory which gave a behavioural alternative to the subjective expected utility model incorporating ideas such as Loss Aversion, Reference point, and Framing. The ideas of Kahneman and Tversky were built on by Richard Thaler who pioneered the field of Behavioural Economics and who introduced the concept of ‘Libertarian Paternalism’. If human beings were irrational, they could be given nudges for their own good so that they

The Fast and Frugal heuristics program was developed by the famous German psychologist Gerd Gigerenzer. The program is considered to be the main intellectual rival to the Heuristics and Biases program and there has been a long-drawn intellectual duel between the two programs. The main idea behind the Fast and Frugal heuristics program is the idea that heuristics are not irrational. They are fast and frugal and in situations of uncertainty perform better than other competing models of decision-making. Imagine doctors using surgical intuition to perform complicated operations in a limited time or firefighters making snap judgement and decisions in a dangerous and unpredictable environment.

Heuristics are fast and frugal and they get the job done. Fast and frugal heuristics are ‘ecologically rational’ which means that their rationality is dependent on their environment. Trust in doctor as a heuristic would be rational if say your doctor is experienced, has a medical degree, has no conflict of interests. On the other hand, it will not be rational if your doctor has some conflict of interest, does not have enough experience, or is just not the right doctor for the illness. My Master’s dissertation in the Palaj and Basan villages in Gandhinagar looked at the question of the conditionality of trust. I found evidence that the trust was built primarily on the doctor’s ability to prescribe medicines that could cure in short periods of time and that the trust was conditional in nature. Examples of other fast and frugal heuristics are the Recognition heuristic, Take the best heuristic, Tallying, 1/N rule etc.

The rise of Behavioural Economics and its poster boy, Nudge has in many ways energized the Economics discipline and brought renewed interest in it. But at times you do feel that commentators face a blackout when it comes to the foundations of the discipline and the foundations are much older than the 1970s when the famous Heuristics and Biases program was taking root. The foundations rather take root in the 1950s in the aftermath of the Second World War in the laboratories of Carnegie Mellon University. Simon’s ideas have found place in fields as diverse as AI, Cognitive Psychology, Design, and Administrative behaviour. While reading his book, the Sciences of the Artificial, I was struck by the intuitive nature of his arguments. Its time that we give him his due as much as any pioneering behavioural economist.

0 notes

Text

How AI & ML are Transforming Social Media?

With the advancement in technology and artificial intelligence, various AI-based application platforms have been gaining popularity for a long time. AI has turned out to be a boom for popular Social Media platforms like Facebook and Instagram. To know more about AI and Machine Learning development services in Social Media, continue reading this article!

Today Artificial Intelligence has been a major component of popular Social Media platforms. At the current level of progress, AI for social media has been a powerful tool.

What is Artificial Intelligence? The term artificial intelligence (AI) refers to any human-like intelligence shown by a machine, robot, or computer. It refers to the ability of machines to mimic or copy the intelligence level of the human mind. This may include actions like understanding and responding to voice commands, learning from previous records, problem-solving, and decision-making. Many companies are providing AI application development services, which has made it easy for organizations to adopt AI and ML-based applications. What is Machine Learning?

In general terms, Machine learning (ML) is a subset of AI focusing on building applications and software that can learn from past experiences and data and improve accuracy without being specifically programmed to do so. Machine learning applications learn more from data and are designed to deliver accurate results.

How AI works? Not going deep into the engineerings and software development part of AI, here is just a basic description of working of AI:

Using ML, AI tries to mimic human intelligence. AI can make predictions using algorithms and historical data.

AI and ML in Social Media

Today, there exist several applications of AI and ML in different social media platforms. Big Companies have been using AI for a long time and are still into improvising their platforms and also acquiring small firms. There exist varieties of

AI and Machine Learning App Development Services

that are making the adoption of AI and ML possible.

AI is being used on Social Media platforms in various ways. Some of them are mentioned below:

Analyzing pictures and texts

Advertising

Avoiding unwanted or negative promotions

Spam detection

Data collection

Content flow decisions

Social media insights, etc.

It may sound surprising but your favorite social media apps are already using Artificial Intelligence and Machine Learning.

1. Facebook and AI

Whenever it comes to social media, the first name that comes to mind is Facebook. Talking about cutting-edge technology, repurposing user data broken down into billions of accounts, Facebook is the leading social media platform.

Users on Facebook are allowed to upload pictures, watch videos, read texts and blogs, engage with different social groups, and perform many other functions.

Thinking of such a crazy and huge amount of data, a question arises how Facebook handles such data? Here, AI in Facebook comes in handy.

Facebook and the use of AI in Social Media

Here are some major examples of AI applications in Social Media:

* Facebook’s Text Analyzing

Facebook has an AI-based tool “DeepText”. This tool provides deep learning and helps the back-end team to understand the texts better and that too around multiple languages and hence provide better and more accurate advertising to the users.

* Facebook’s Picture Analyzing

Facebook uses Machine Learning to recognize faces in the photos being uploaded. Using face recognition, Facebook helps you find users that are not known to you. This feature also helps in detecting Catfishes (fake profiles created using your profile picture).

The algorithm also has an amazing feature of text explanations that can help visually disabled people by explaining to them what’s in the picture.

* Facebook’s Bad Content Handling

Using the same tool, DeepText, Facebook has been hailing the inappropriate or bad content that gets posted. After getting notified by AI, the team gets to work to understand and investigate the content.

As per the company guidelines, we get to see a few things that are flagged as inappropriate content:

Nudity or sexual activity

Hate Speech or symbols

Spam

Fake Profiles or fraud

Contents containing excessive violence or self-harm.

Violence or Dangerous organizations

Sale of illegal goods

Intellectual property violations, etc.

Facebook’s Suicide Preventions

With the same tool, DeepText, Facebook can recognize posts or searches that represent suicidal thoughts or activities.

Facebook has been playing a crucial role in suicide prevention. With the support of an analysis based on human moderators, Facebook can send videos and ads containing suicide prevention content to these specific users.

Facebook’s Automatic Translation

Facebook has also adapted AI for translating posts automatically in various languages. This helps the translation be more personalized and accurate.

2. Instagram with AI

Instagram is a photo and video-sharing social media platform that has been owned by Facebook since 2012. Users can upload pictures, videos (reels and IGTV) of their lifestyle, and other stuff and share them with their followers.

This platform is used by individuals, businesses, fictional characters, and pets as well. Managing all the data manually is next to impossible. Therefore, Instagram has developed AI algorithms and models making it the best platform experience for its regular users.

Instagram and the use of AI

* Instagram Decides What Gets on Your Feed

The Explore feature in Instagram uses AI. The suggested posts that you get to see on your explore section are based on the accounts that you follow and the posts you’ve liked.

Through an AI-based system, Instagram extracts 65 billion features and does 90 million model predictions per second.

The huge amount of data that they collect, helps them to show the users what they like.

* Instagram’s Fighting against Cyberbullying

While Facebook and Twitter are dependent mostly on reports from users, Instagram automatically checks content based on hashtags from other users, using AI. In case something is found against the community guidelines, the AI makes sure that the content is removed from Instagram.

* Instagram’s Spam Filtering

Instagram’s AI is capable of recognizing and removing Spam messages from user’s inboxes and that too in 9 different languages.

With the help of Facebook’s DeepText tool, Instagram’s AI can understand the spam context in most situations for more filtration.

* Instagram’s Improved Target Advertising

Instagram can keep a track of which posts have most of the user engagements or the user’s search preferences. Later, Instagram with the help of AI makes target advertisements for companies based on all such databases.

* Instagram handling Bad Contents

Since Instagram is owned by Facebook, more or less, Instagram also follows the same community guidelines over bad content.

3. Twitter and Use of AI

On average, Twitter users post around 6,000 tweets per second. In such a case, AI gets necessary for dealing with such a huge amount of data.

* Tweet Recommendations - AI in Twitter

Twitter firstly implemented AI to improve and give users a better user experience (UX) that would be capable of finding interesting tweets. Now, with the help of AI, Twitter also detects and removes fraud, propaganda, inappropriate content, and hateful accounts.

This recommendation algorithm works in a very interesting way as it learns from your actions over the platform. The tweets are ranked to decide their level of interest, based on the individual users.

AI also considers your past activities of engaging with various types of tweets and uses it to recommend similar tweets.

* Twitter Enhancing Your Pictures - AI in Twitter

Posting of pictures on Twitter was introduced in the year, 2011. Since then, it has been working over an algorithm that is capable of cropping images automatically.

Firstly, they created an algorithm that focused on cropping images based on face recognition, because not every image is supposed to have a face on it. Thus the algorithm was not acceptable.

AI is now used over the platform to crop images before posting them, to make the image look more attractive.

* Tweets Filtration - AI in Twitter

Twitter uses AI to take down inappropriate images and accounts from the platform. Accounts connected to terrorism, manipulation, or spam are taken down using this feature.

* Twitter Fastening the Process - AI in Twitter

How did Twitter use AI to speed up the platform?

For this, Twitter uses a technique called Knowledge Distillation to train smaller networks imitating the slower but strong networks. The larger network was used to generate predictions over a set of images. Then, they developed a pruning algorithm to remove the part of the neutral network.

Using these two models Twitter managed to work over cropping of images 10x faster than ever before.

4. AI in Snapchat

Snapchat started by acquiring two AI companies. In 2015, it first acquired Looksery, a Ukrainian startup, to improvise its animated lenses feature. Secondly, it acquired AI Factory to enhance its video capabilities.

* Snapchat’s Text Recognition in Videos

Snapchat uses AI to recognize texts in the video, which then adds content to your “Snap”. If you type “Hello”, it automatically creates a comic icon or Bitmoji in the video.

* Snapchat- Cameo Feature

AI in Snapchat can be used to edit one’s face in a video. Using the Cameo feature, the users can create a cartoon video of themselves.

From the above-mentioned renowned, we can extract a list of benefits of AI and ML in Social Media, which is given below:

Prediction of user’s behavior

Recognition of inappropriate or bad content

Helps in improving user’s experience

More personalized experience to the users

Gathering of valuable information and user data.

AI has also helped understand human psychology, tracking multiple characteristics of your behavior and responses.

If you are looking for the best

AI & Machine Learning Solutions Provider

for your organization, Consagous Technologies is one of the best

AI Application Development Company in USA

. With years of experience, all the company professionals are great at their work.

Original Source:

https://www.consagous.co/blog/how-ai-ml-are-transforming-social-media

0 notes

Text

Critical Thinking Lectures 2

During this lecture we discussed what the future holds and how the prediction of current social changes impact on fashion & textiles.

Today's objective we are going to start to investigate current social factors, and we will try to predict the impact that these drivers will have on the future of fashion and textiles.

The key causes of changes to the industry are: Sustainability, consumerism, innovation, division of wealth, social media, politics & power.

Politics and power - As a group we spoke about the most powerful people in the world. We decided that governments are the most powerful. A government is an institution where leaders exercise power to make and enforce laws. A government's basic functions are providing leadership, maintaining order, providing public services, providing national security, providing economic security, and providing economic assistance.

WATCHMOJO.COM said that the following people are the most powerful people in the world:

Xi Jinping: China’s president

Vladimir Putin: Russia’s president

Angela Merkel: German Chancellor since 2005. Compassionate leader. Powerful lead over European Union’s.

Pope Francis: Fairly progressive leader; pro women & spoke in favor of LGBT community

Donald Trump: Massive following, continued to make headlines.

Jeff Bezos: Founder of Amazon. Worlds richest person.

Bill Gates: Microsoft Founder high profile. Charitable trusts (reported a wealth of 120 billion).

Larry Page: Co-Founder of Google (reported a wealth of 78 billion) 6.9 million searches on google every Fay.

Narendra Modi: India’s longest serving prime minister.

Mark Zuckerburg: Founder of Facebook.

1 Xi Jinping (General secretary to China’s communist party) 2nd largest economy and inhabited country

Amended constitution to broaden his power, scrapped term limits

“Chinese Dream - personal & national ideals for the advancement of Chinese society” Ewalt, D (2018)

2 Valdimir Putin (Ruled since 2000 4 terms)

Putin set up constitutional changes allowing him to remain in power in Russia beyond 2024

FBI investigation ref influencing Trump’s presidential campaign Trump

Europe is dependent on Russia’s oil and gas supplies

Nationalistic focus

Rise of wealth and standard of living

Stamped out democracy – controls media, critics and journalists of opposition have been killed

“Socially Conservative” negative regarding homosexuality according to Wikipedia (2019)

3 Trump Zurcher, A BBC (2018)

Immigration: closed border to some Muslim countries & building a wall between America & Mexico

Healthcare: repealing Obamacare

Environment; reduction in commitment, to save USA costs

Intention to make America a great nation by increased infrastructure, reducing imports whilst expanding exports

Huge following

Division & Wealth

Global inequality = poverty and social conflict

The richest 1% of the world is twice as wealthy as the poorest 50%. The world richest 1% have more than twice as much wealth as 6.9 billion people, almost half of humanity is living on less than $5.50 a day. Global inequality causes poverty and social injustice. The inequality may affect the fashion industry as not everyone will have the chance to study fashion, due to not having the money or opportunity.

Consumerism:

The rise of fast fashion: As online clothing sales increase during the rise of Covid-19, our unsustainable habit is proving hard to stop. This shows that even the pandemic couldn't solve the fast fashion issue. Online clothing sales in August were up 97% versus 2016 consumer's mindset is returning to unsustainable habits. Boohoo's profit increased by 50% during the pandemic, despite the factory scandal. The Boohoo supplier was involved in 'multi-million-pound' fraud scheme. Leicester clothing factories with links to Boohoo and Select Fashion have been involved in a “multi-million pound” money laundering and VAT fraud scandal, an investigation has found.

Sustainable Fashion:

The Global Goals for Sustainable Development:

“These goals have the power to create a better world by 2030, by ending poverty, fighting inequality and addressing the urgency of climate change. Guided by the goals, it is now up to all of us, governments, businesses, civil society and the general public to work together to build a better future for everyone.” Global Goals 2020

The pandemic has definitely grown the understanding for sustainable fashion.

Innovation:

Artificial Intelligence (AI) - the simulation of human intelligence processes by machines, especially computer systems. Rouse, M 2018.

Robotic technology - the use of computer-controlled robots to perform manual tasks. If AI and robotic technology could equate to massive job cuts, i.e. Amazon are already testing drones to complete unmanned delivers to customers, this will mean many people will lose their jobs. The cost of producing and maintaining these machines and this new updated technology may mean people who are already not being paid fairly will be paid even less. If robots are used in war there is nothing stopping robots from being able to design fashion.

How AI is transforming the fashion industry?

Chatbots 24/7 fashion advisors

Analysis of social media conversations

Forecasting - AI being used to plan the nest trends

Real time data analysis to assist retailers

Stocking systems - what to keep in stock and restock

Khaite merged AR, film and traditional mediums, sending presentation boxes to editors and buyers, including lookbooks and fabric samples with QR codes revealing fashion films and AR 3D renderings of their new shoes.

Farra. E. Vogue Runway 2020 This Is the First Augmented Reality Experiment of Spring 2021

https://www.vogue.com/article/khaite-spring-2021-augmented-reality-experience

Social Media

Social media is very important to have when starting your own brand, it is an easy way to grow connections and loyal customers by promoting your business online. However social media also has its dark side. The documentary The Social Dilemma on Netflix taught me this. “If you are not paying for the product you are the product”. Social media uses persuasive technology to manipulate the product/ people. Technology engineers use addiction & manipulation psychology to control the user. The user is controlled to look at more adds to get more money. Social media is a drug.

How are we being manipulated?

Everything online or on social media is watched, recorded and monitored building a model of each of us - to enable the technology to predict what we will do & how we will behave.

Machine learning algorithms that are getting better & better so that they can engage humans on social media more & more; learning from our internet searches to suggest the next leading topic...leading us down the rabbit hole.

Technique have been designed to get people to use their phones more i.e. likes, photo tapping, notifications etc...

We were asked to type into google “climate change is” we all have different results based on our individual algorithms that have been developed by the platforms.

What can we do ?

Products need to be designed humanly.

We have a responsibility to change what we built.

People (users) are not a product resource.

Introduce more laws around digital privacy.

Introduce a tax for data collection.

Reform so don’t destroy the news.

Turn off notifications.

Don’t use social media after 9pm.

Always do an extra google search - double check your resources.

Don’t follow just follow suggestions.

How does the fashion industry use social media?

For the fashion industry, social media has brought connectedness, innovation, and diversity to the industry. Instagram, for example, functions as a live magazine, always updating itself with the best, most current trends while allowing users to participate in fashion rather than just watch from afar. People are influenced by social media when it comes to there fashion through trendsetters like, celebrities and fashion influencers.

Although social media has many positives, there is also many dangers that comes from it as well. People become obsessed and addicted to social media, always needing to post and show off everything they are doing. Everything people post on social media is mainly the best parts of their lives making people who have struggles feel even worse. Especially for the young generation there is a need for having the most followers, likes and comments and if you don’t you’re not ‘popular’. Due to social media the younger generation mainly compare themselves and their lives to the ‘perfect’ people they see on social media, which is not the reality. It is all a lie, everyone has good and bad parts to their lives but no one shows the negative sides on social media. This is what creates a depressed generation. Everyone then just hides behind screens wishing they were someone else which then leads to jealousy and evilness. Most trolls on social media are those who are just jealous of the love and support these high followed people on social media receive and the high quality of life they live but what they don’t see is the bad side to these people's lives that is not shown half the time.

0 notes

Text

Artificial Intelligence Books For Beginners | Top 17 Books of AI for Freshers

Artificial Intelligence (AI) has taken the world by storm. Almost every industry across the globe is incorporating AI for a variety of applications and use cases. Some of its wide range of applications includes process automation, predictive analysis, fraud detection, improving customer experience, etc.

AI is being foreseen as the future of technological and economic development. As a result, the career opportunities for AI engineers and programmers are bound to drastically increase in the next few years. If you are a person who has no prior knowledge about AI but is very much interested to learn and start a career in this field, the following ten Books on Artificial Intelligence will be quite helpful:

List of 17 Best AI Books for Beginners– By Stuart Russell & Peter Norvig

This book on artificial intelligence has been considered by many as one of the best AI books for beginners. It is less technical and gives an overview of the various topics revolving around AI. The writing is simple and all concepts and explanations can be easily understood by the reader.

The concepts covered include subjects such as search algorithms, game theory, multi-agent systems, statistical Natural Language Processing, local search planning methods, etc. The book also touches upon advanced AI topics without going in-depth. Overall, it’s a must-have book for any individual who would like to learn about AI.

2. Machine Learning for Dummies

– By John Paul Mueller and Luca Massaron

Machine Learning for Dummies provides an entry point for anyone looking to get a foothold on Machine Learning. It covers all the basic concepts and theories of machine learning and how they apply to the real world. It introduces a little coding in Python and R to tech machines to perform data analysis and pattern-oriented tasks.

From small tasks and patterns, the readers can extrapolate the usefulness of machine learning through internet ads, web searches, fraud detection, and so on. Authored by two data science experts, this Artificial Intelligence book makes it easy for any layman to understand and implement machine learning seamlessly.

3. Make Your Own Neural Network

– By Tariq Rashid

One of the books on artificial intelligence that provides its readers with a step-by-step journey through the mathematics of Neural Networks. It starts with very simple ideas and gradually builds up an understanding of how neural networks work. Using Python language, it encourages its readers to build their own neural networks.

The book is divided into three parts. The first part deals with the various mathematical ideas underlying the neural networks. Part 2 is practical where readers are taught Python and are encouraged to create their own neural networks. The third part gives a peek into the mysterious mind of a neural network. It also guides the reader to get the codes working on a Raspberry Pi.

4. Machine Learning: The New AI

– By Ethem Alpaydin

Machine Learning: The New AI gives a concise overview of machine learning. It describes its evolution, explains important learning algorithms, and presents example applications. It explains how digital technology has advanced from number-crunching machines to mobile devices, putting today’s machine learning boom in context.