#Video Face Swap API

Creative Lab AI Review: 300+ Tools, One Lifetime Access

Introduction

Hello, and welcome to my Creative Lab AI review. What if you could stop paying for all your separate AI tools and get them all, and more, in one place?

Sounds pretty cool, doesn’t it? And it’s real.

Imagine ChatGPT, Claude, Gemini, and 300+ other tools all working for you in a single dashboard. No monthly fees. No need to jump from tab to tab. No headaches. Just easy access to fast, powerful AI. Create videos, graphics, articles, and more, faster and easier than ever before.

If you’re tired of juggling tools or wasting money on subscriptions, this will change everything.

Let’s break it down.

What Is Creative Lab AI?

Creative Lab AI bills itself as the world’s first truly all-in-one AI system. It gives you instant, lifetime access to over 300 powerful AI models in a simple dashboard, and no technical skills are required.

Using this tool, you can:

Write blogs, scripts, and high-converting ads in seconds.

Easily design stunning images, logos, and presentations.

Create faceless videos and animated clips in minutes.

Use top AI models like ChatGPT, Claude, and Gemini, with no APIs or monthly fees.

This isn’t just another tool. It’s your secret weapon in the AI revolution.

How Does It Work?

Creating top-notch content using Creative Lab AI is as easy as 1-2-3. Here’s how to turn ideas into profits in minutes:

Step #1: Choose your AI and prompts. Choose from 300+ advanced AI models, such as ChatGPT, DALL·E, and Claude. Write your own prompts, use one from the built-in prompt library, or let the DFY (done-for-you) Prompt Builder handle everything for you.

Step #2: Make the magic happen. Click the “Generate” button, then let Creative Lab AI turn your imagination into reality. Create scroll-stopping articles, videos, designs, audio, posts, and more in just seconds.

Step #3: Profit with zero effort. Your content is now ready to sell, publish, or pitch to clients. Use it to grow your business, take over social media, or even start a new business, no sweat required.

Creative Lab AI Review – Features

100+ AI Writing Tools: Write sales copy, scripts, emails, blog posts & more, no writing skills needed.

50+ AI Video Tools: Produce reels, shorts, explainers & HD videos in minutes with text-to-video & image-to-video makers.

10+ AI Audio Tools: Produce studio-quality voiceovers, clone voices, generate music & transform audio with no effort.

50+ AI Image Tools: Generate stunning avatars, face swaps, 4K restorations & visuals that pop immediately.

50+ AI Design & Branding Tools: Design logos, mockups, ads & infographics with a Canva-like studio and 4K graphics.

10+ AI SEO Tools: Improve content, keywords & meta tags to rank higher in Google, YouTube & voice search.

10+ Business Growth Tools: Develop business plans, SOPs, product & startup names to kick-start and grow your business.

50+ Automation & AI Chatbots: Semi-automate sales, support, and lead generation through niche AI chatbots that work 24/7.

10+ Productivity Tools: Create timetables, content calendars, task timelines & briefs to work smarter & faster.

10+ Social Media Ad Tools: Create viral posts, hooks, ads & post ideas for top networks instantly.

10+ PPC Tools: Write high-converting Google, Facebook & LinkedIn ad copy to lower costs & raise ROI.

Commercial License Included: Sell your AI masterpieces (videos, voiceovers, graphics, copy, and automation tools) and keep 100% of the profits.

Akool Avatar Review: The Most Lifelike AI Avatar Yet?

New Post has been published on https://thedigitalinsider.com/akool-avatar-review-the-most-lifelike-ai-avatar-yet/

Imagine having a digital twin that responds to you in real time and seamlessly integrates across platforms using an API. With AI-powered avatars gaining traction across industries, businesses and creators are searching for the most lifelike and interactive experiences possible.

As digital spaces evolve, tools like Akool bridge reality and virtual engagement, making interactions more immersive and dynamic than ever before. Whether you’re a business looking to enhance customer service, a marketer aiming for next-level engagement, or a content creator exploring new ways to captivate your audience, Akool’s avatars are designed to deliver.

In this Akool Avatar review, I’ll discuss the pros and cons, what it is, who it’s best for, and its key features. Then, I’ll show you how I created and customized a streaming avatar on Akool.

I’ll finish the article by comparing Akool Avatar with my top three alternatives (HeyGen, Synthesia, and Elai). By the end, you’ll know if Akool is right for you!

Verdict

Akool Streaming Avatars offer a highly customizable and engaging AI experience with diverse avatars, real-time interaction, and seamless platform integration. It’s an excellent tool for business and creative applications. However, the limited free options and occasional mismatched gestures may be drawbacks for some.

Pros and Cons

Pros:

Choose from 80+ diverse avatars.

Select your avatar’s language, voice, and streaming mode (dialogue or repeat).

The interactive chat box allows for seamless, engaging real-time communication with your avatar.

Add a knowledge base description for more informative interactions with your avatar.

The API integrates easily with different platforms and applications.

Higher-tier plans support high-quality uploads (4K, and up to 8K on the Studio plan).

Pro Max and Studio plans offer significantly faster processing.

Great for various applications, including customer support, education, marketing, and entertainment.

Create a custom avatar by uploading a video of yourself.

Cons:

The price jump from Pro to Pro Max is steep, and 4K quality requires the more expensive plan.

The free plan is quite restricted, with low video quality (720p) and only 25 images.

Some of the avatar’s hand gestures and facial reactions don’t align with what is being said.

Credit-based pricing may become costly for high-volume users.

What is Akool?

Akool Avatar is an AI-powered tool that creates realistic digital avatars for various applications, including customer service, marketing campaigns, educational content, live streaming events, virtual product launches, and interactive training sessions. It uses AI technology to generate lifelike virtual representations of people that mimic human behavior, expressions, and speech.

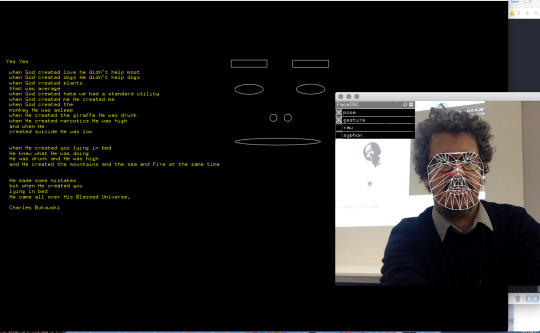

Its technology uses generative adversarial networks (GANs) as a key component of its AI-powered content creation system. GANs enable Akool to generate realistic digital avatars, face swaps, and other synthetic media.

Development History

Akool is a tech startup founded in 2022 by Jiajun Lu, a seasoned expert in AI and computer vision. The company offers a range of AI-powered tools for digital content creation, including realistic avatar generation, face swapping, video translation, and image generation.

Before founding Akool, where he serves as CEO, Jiajun Lu worked at organizations like Apple, Google, and Stanford University. He has been recognized as one of the global leaders in artificial intelligence and computer vision.

Akool’s mission is to democratize visual storytelling by making it easily accessible to everyone. It focuses on simplifying video creation and enables businesses to produce high-quality, personalized visuals with AI.

Key Differences From Other Avatar Creators on the Market

What separates Akool from the sea of other avatar creators I’ve tried is the level of detail. Most avatar creators aren’t nearly as advanced when it comes to realism and expressiveness!

Key features that set Akool apart include:

Lifelike facial expressions and gestures: Akool’s avatars can convey emotions naturally for more authentic interactions.

Natural speech and precise lip-syncing: The avatars speak with accurate lip sync and realistic movements.

Real-time interactivity: Akool’s Streaming Avatars enable dynamic and responsive real-time interactions.

Advanced AI integration: Akool’s technology seamlessly integrates with large language models (LLMs) for context-aware responses and more humanlike interactions.

Customization: You can create personalized avatars from your own video and voice recordings.

Emotion intelligence: Akool’s avatars convey emotions naturally for deeper audience engagement.

Full-Body Movements and Hand Gestures: The avatars display realistic full-body movements and hand gestures for more expressiveness and realism.

Who is Akool Streaming Avatar Best For?

Akool Avatar has applications across various industries:

Businesses can use Akool’s Streaming Avatar to enhance their customer service by providing 24/7 interactive support. This is especially useful for e-commerce platforms and service-based industries. They can integrate the avatar into existing customer service systems with Akool’s API. This allows the avatar to interact with customers through various channels like websites, messaging platforms, or mobile apps.

Marketers can use Akool’s Streaming Avatar to create personalized marketing campaigns and boost engagement on social media. Avatars help brands stand out in a crowded digital marketplace, and marketers can use Akool’s API to embed them in their website, social media, or apps.

Educators can use Akool’s Streaming Avatar to develop interactive educational tools, virtual classrooms, and training sessions. They can choose the avatar’s language, voice, and appearance to suit the audience. They can integrate the avatar into existing learning management systems or educational platforms using Akool’s API.

Content creators can use Akool’s Streaming Avatar for live streaming events, faceless YouTube channels, or as virtual hosts for interactive shows, adding a unique layer of engagement to digital content. They can use Akool’s API to integrate the avatar into live streaming platforms for real-time interaction during events.

Event organizers can use Akool’s Streaming Avatar to reduce drop-off rates by creating engaging interactions with attendees during virtual events. They can incorporate Akool’s Streaming Avatar into the chosen virtual event platform using Akool’s API.

Healthcare providers can use Akool’s Streaming Avatar to offer personalized medical advice, monitor patient health, and improve patient engagement. They can integrate the avatar into telemedicine platforms and electronic health record systems using Akool’s API. This allows for real-time health monitoring, personalized treatment recommendations, and better patient communication.

Akool Streaming Avatar Key Features

Akool Streaming Avatar’s key features include:

80+ Avatars: Choose from a variety of avatars, languages, voices, and streaming modes (dialogue or repeat).

Interactive Chat Box: Communicate with your avatar in real time.

Knowledge Base Integration: Define your avatars with a detailed knowledge base for more informative interactions.

API Support: Integration with various platforms and applications.

Multilingual Support: Supports multiple languages for global audience engagement.

How to Create a Streaming Avatar with Akool

Here’s how to create a streaming avatar with Akool:

Create an Account

Choose Between Conversation & Repeat Mode

Choose from 80+ Avatars

Create a Custom Avatar

Choose from 30+ Languages

Select an AI Voice

Begin Chatting with Your Avatar

Step 1: Create an Account

I started by going to akool.com/apps/streaming-avatar and selecting “Free Trial” on the top right. On the free plan, you can create three customized instant avatars!

Once logged in, I hit “Get Started” on the top right.

Step 2: Choose Between Conversation & Repeat Mode

Next, I started creating my streaming avatar!

On the top left were two options:

Conversation mode: In this mode, the avatar engages in real-time, interactive communication through a chat box. The avatar responds naturally to you, using its knowledge base to provide informative and engaging interactions.

Repeat mode: In this mode, the avatar repeats the input it receives. It’s useful when the avatar needs to relay information verbatim without engaging in a back-and-forth conversation.

I kept this setting on “Conversation.”
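To make the difference between the two modes concrete, here’s a minimal Python sketch of how an avatar’s spoken text might be chosen in each mode. It’s an illustration only and doesn’t reflect Akool’s actual implementation or API: `Mode`, `avatar_reply`, and the `llm` callable are hypothetical names, and the language model is stubbed out so the example runs on its own.

```python
from enum import Enum
from typing import Callable


class Mode(Enum):
    """Hypothetical streaming modes mirroring the two options described above."""
    CONVERSATION = "conversation"
    REPEAT = "repeat"


def avatar_reply(
    user_input: str,
    mode: Mode,
    knowledge_base: str,
    llm: Callable[[str], str],
) -> str:
    """Return the text the avatar would speak (illustrative sketch only)."""
    if mode is Mode.REPEAT:
        # Repeat mode: relay the input verbatim, with no back-and-forth.
        return user_input
    # Conversation mode: ground the reply in the knowledge base description,
    # then let a language model (any callable here) generate the answer.
    prompt = (
        f"Knowledge base:\n{knowledge_base}\n\n"
        f"User: {user_input}\n"
        "Avatar:"
    )
    return llm(prompt)


if __name__ == "__main__":
    # Stub "LLM" so the sketch runs without any external service.
    def fake_llm(prompt: str) -> str:
        return "Our store opens at 9 a.m." if "9 a.m." in prompt else "I'm not sure."

    kb = "The store opens at 9 a.m. and closes at 6 p.m."
    print(avatar_reply("Please welcome everyone.", Mode.REPEAT, kb, fake_llm))
    # prints: Please welcome everyone.
    print(avatar_reply("When do you open?", Mode.CONVERSATION, kb, fake_llm))
    # prints: Our store opens at 9 a.m.
```

Passing the model in as a plain callable is just a design choice for the sketch: it keeps the mode logic testable without tying it to any particular LLM provider.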

Step 3: Choose from 80+ Avatars

The bottom left was where I could choose from over 80 different avatars, with a variety of genders, ethnicities, and background settings.

Step 4: Create a Custom Avatar

If none of these avatars fits your vision, or you want a personalized avatar that matches your brand, you can create a custom avatar by selecting the purple square on the top left of the Avatar window.

There are two ways you can create a custom avatar with Akool:

Upload footage

Record with a webcam (just hit the record button and follow the instructions)

It only takes a few minutes: upload 15 to 60 seconds of footage of yourself, and your voice is automatically generated as an audio file.

Step 5: Choose from 30+ Languages

On the bottom right, I chose from 30+ languages.

Step 6: Select an AI Voice

Next to that, I could choose my AI voice from four styles (conversational, news, narration, and meditation) or upload my own voice!

Step 7: Begin Chatting with Your Avatar

I hit “Let’s chat!” to start a conversation with my avatar.

After giving Akool permission to access my microphone, I began talking with my AI avatar.

My microphone picked up what I was saying, and my avatar responded quickly! Her replies weren’t quite as fast as in a real-life conversation, but they were close.

I was also impressed by how realistic my avatar’s gestures were. She nodded when it was appropriate and even waved when she first greeted me.

Akool’s Streaming Avatars can be used as a more interactive ChatGPT. You can chat with your avatar about general knowledge, advice, entertainment, or even just casual conversation!

Alternatively, you can use them for more professional applications like virtual customer support, interactive training sessions, or marketing campaigns. The ability to see and hear a digital avatar respond in real time adds an engaging, human-like element that text-based AI chats lack.

Overall, Akool’s Streaming Avatars offer an immersive way to engage with AI, whether for fun, education, or business.

Top 3 Akool Streaming Avatar Alternatives

Here are the best Akool Streaming Avatar alternatives I’ve tried.

HeyGen

The first Akool Streaming Avatar alternative I’d recommend is HeyGen. HeyGen lets you create AI-generated videos with custom avatars, supporting a wide range of industry use cases. Both platforms provide AI video creation tools with realistic avatars.

However, Akool stands out with its user-friendly interface and intuitive design. It offers a simpler UI that’s easy for beginners to pick up quickly, along with a pay-as-you-go pricing model that’s attractive for casual users or students.

Meanwhile, HeyGen provides many features, including AI-generated instant avatars, voice cloning, and sophisticated editing tools. However, its interface can be more complex for new users.

For straightforward, high-quality AI video creation with realistic talking photos and attractive video presentations, choose Akool. For more advanced features like instant AI-generated avatars, extensive translation options, and integration with existing workflows through Zapier, choose HeyGen!

Read my HeyGen review or visit HeyGen!

Synthesia

The next Akool Streaming Avatar alternative I’d recommend is Synthesia. Both Akool and Synthesia provide powerful AI video creation tools that focus on avatar-based content, but they have distinct features and strengths.

On the one hand, Akool Streaming Avatar provides real-time avatar streaming capabilities where you can create and interact with personalized avatars through a chat box. It offers customizable avatars with options to change appearance, language, voice, and streaming mode. Akool also integrates a knowledge base to enrich avatar interactions and provides API support for seamless platform integration.

On the other hand, Synthesia specializes in AI video generation from text input. It also comes with an AI video and script generator, a video editor, and hundreds of pre-made templates. Synthesia boasts a library of over 230 AI avatars and supports video creation in over 140 languages.

In terms of use cases, Akool excels in real-time interactions. It’s a great tool for live customer support, educational tools, and interactive marketing. Meanwhile, Synthesia is great for creating pre-recorded video content for training, customer support, education, sales enablement, and marketing.

Choose Akool if you need real-time avatar streaming with interactive capabilities and API support. Otherwise, choose Synthesia for a comprehensive AI video creation tool with a large library of avatars and languages for pre-recorded content.

Read my Synthesia review or visit Synthesia!

Elai

The final Akool Streaming Avatar alternative I’d recommend is Elai. Elai offers a sophisticated AI-powered platform to easily create photorealistic avatars and professional video content. Both platforms come with advanced AI avatars, making them excellent options for video creation, marketing, and customer engagement.

However, Akool stands out with its real-time streaming capabilities and interactive chatbox. That means you can create live, customizable avatars for dynamic interactions. It also features a powerful face-swapping tool and API support for seamless integration across platforms.

Meanwhile, Elai excels in photorealistic avatar quality, extensive language support (75+ languages), and content repurposing tools like PPTX-to-video conversion. Elai also offers multiple avatar types (selfie, studio, photo, animated mascot) and advanced voice cloning.

For real-time streaming, interactive chat features, and API integration, choose Akool. For photorealistic avatars and large-scale video production with multilingual support, choose Elai!

Read my Elai review or visit Elai!

Akool Avatar Review: The Right Tool For You?

Akool’s Streaming Avatars provide a customizable and immersive AI experience that’s great for business and creative applications. The ability to interact in real-time with lifelike avatars sets it apart from many competitors.

While the platform offers customization and seamless API integration, the limited free options and occasional gesture mismatches may be drawbacks. But if you prioritize realism, multilingual support, and real-time interactivity, Akool is a solid choice!

If you’re curious about the best Akool Streaming Avatar alternatives, here’s what I’d recommend:

HeyGen is best for businesses and marketers who need AI-generated video content quickly. Its focus on video creation with avatars makes it a great choice for product explainers, social media ads, and corporate training videos.

Synthesia is best for professional e-learning, corporate training, and HR onboarding videos. It specializes in creating high-quality AI videos with realistic avatars and strong multilingual support.

Elai is best for educators and startups needing an affordable AI video creation platform. Elai provides customizable templates and avatars for presentations, training modules, and product demos.

Thanks for reading my Akool Avatar review! I hope you found it helpful.

Akool offers a free plan that includes 3 customized instant avatars. Try it for yourself and see how you like it!

What is SwapFans?

SwapFans is an innovative AI-powered tool designed to transform social media content through face-swapping technology. Specializing in video content for platforms like Instagram and TikTok, SwapFans provides users with a high-speed solution to swap faces seamlessly across various videos, allowing for creative and entertaining customization.

Looking for a Deepfake Face Swap Alternative? Here are our 10 Best Picks!

If you’ve been active on social media, you’ve probably seen viral deepfake photos and videos. As AI has advanced, deepfake technology has evolved too, letting us swap faces in any picture. Most people use deepfake face swaps for entertainment and artistic purposes, since they save a lot of editing time, but they also come with several pitfalls. In this post, I’ll cover the best deepfake face swap alternatives you can use for constructive purposes.

Why look for Deepfake Face Swap Alternatives?

You might be wondering why you’d look for an alternative when deepfake face swap tools are already available online. Most people look for trusted face swap solutions for the following reasons:

- Ethical concerns: Deepfake tools raise serious ethical concerns about potential misuse, and they can’t be used for commercial purposes.
- Copyright issues: Most online deepfake tools end up violating privacy laws or intellectual property rights, and they don’t give you copyright over the results.
- Watermarks: Many deepfake face swap tools leave watermarks on edited images and videos, making them unsuitable for professional use.
- Imperfect results: Several deepfake tools produce imperfect results, making edited videos and images look unnatural at times.
- Impact on trust: If you use these imperfect images professionally, your audience may notice, and you could even lose their trust.

What to Look for in a Deepfake Face Swap Alternative?

Because of these pitfalls, it’s crucial to choose a reliable deepfake face swap alternative. Here are a few things to consider while evaluating a tool:

- Features: Assess the features the tool offers, such as swapping faces, manipulating facial expressions, or generating realistic facial animations. You can also look for advanced features like customization options, real-time processing, or integration with other software.
- Cost: Evaluate the tool’s pricing structure, including any subscription fees, licensing costs, or extra charges for premium features.
- User-friendliness: Evaluate how easy and accessible the tool is to use, particularly if you don’t have an editing background. Look for features such as drag-and-drop functionality, pre-built templates, or automated workflows that streamline face swapping.
- Performance: Assess the tool’s performance and reliability, including processing speed, accuracy of facial mapping, and stability of the AI model.
- User reviews: Read existing user reviews to see how the tool performs in real-world scenarios. This will help you learn its pros and cons from actual users.
- Privacy and security: Look for alternatives that prioritize user privacy and offer features such as data anonymization and secure authentication.
- Trial period: Take advantage of any trial period to test the tool’s functionality and suitability for your needs before making a purchase.

Top 10 Deepfake Face Swap Alternative Tools to Try

If you search for a deepfake face swap tool on the web, you’ll be bombarded with tons of irrelevant results and APIs. Don’t worry: to make things easier, I have shortlisted the 10 best deepfake face swap alternative tools that are trusted by experts.

#1: iFoto Face Swap

Part of iFoto Studio, this has to be the most recommended online deepfake face swap tool out there. The best part is that iFoto Face Swap is extremely easy to use and lets you swap faces in photos without any technical hassle.

- Just head to the iFoto website, load your image, and swap the person’s face with anyone else’s.
- Apart from being user-friendly, iFoto Face Swap is also quite reliable and produces highly precise results.
- Since the results have no visible imperfections, you can use the modified images professionally.
- iFoto Face Swap is mostly used by e-commerce brand owners who want to diversify their portfolio.
- iFoto Face Swap is safe to use, and you can use the edited images professionally without any copyright issues.

Pros
- Extremely fast and reliable results
- No prior editing experience needed
- Zero copyright problems
- Available on the web and on mobile (iOS/Android)

Cons
- The free version adds a watermark

Available on: Web, iOS, and Android

Pricing: Free, then credit-based

Rating: ★★★★★

#2: FaceSwapper AI

As the name suggests, this is a dedicated AI-based tool that helps you swap faces in your videos or photos. You can sign up on FaceSwapper for free and get 6 credits, but you’ll have to buy the premium version after that.

- This online deepfake face swap tool provides dedicated options for specific video and photo editing needs.
- There’s an option to make big-head cutouts from your standard images by swapping faces.
- If you want, you can also convert your standard images into animated GIFs with FaceSwapper AI.
- With its Magic Avatar feature, you can create consistent characters using AI.

Pros
- Overall results are accurate and realistic
- No editing experience is needed to use the tool

Cons
- Only 6 free credits
- Expensive compared to other tools

Available on: Web

Pricing: $29 per year

Rating: ★★★★☆

#3: Remaker AI Face Swap

This deepfake face swap alternative by Remaker AI is quite easy to use and has an intuitive interface. You can use its ready-made templates or upload your own pictures to use its face swap features.

- Remaker AI currently offers three dedicated features: single-photo face swap, multiple face swap, and video face swap.
- Using it is easy: just upload the original media file and the file with your face to swap them.
- You can also preview the face swap results and make minor edits directly in the interface.
- Apart from photos, you can also swap faces in videos (but the results are not as accurate).

Pros
- Offers several face-swapping features in one place
- Users can try the tool for free with credits

Cons
- Only face-swapping features are provided (no other editing options)
- Video face swap results are not very precise

Available on: Web

Pricing: 5 free credits, then 150 credits for $2.99

Rating: ★★★★☆

#4: Pica AI Face Swap

Pica AI offers a wide range of AI-powered visual editing tools, including a dedicated online deepfake face swap solution. You can use it to edit existing images or create new ones with its templates.

- Just upload both images (the original image and the one with the new face) and swap the faces.
- With Pica AI, you can also do bulk face swaps or swap multiple faces at the same time.
- Besides photos, you can also swap faces in videos and GIFs.
- You can also upload a clear photo of your face and place it on various templates (like a wizard or a superhero).

Pros
- Provides tons of face-swapping options for videos and photos
- Several AI-backed templates are ready to use
- Users can keep a dedicated history of their work

Cons
- No mobile app is available

Available on: Web

Pricing: 8 free credits, then $9.99 per month

Rating: ★★★★☆

#5: Reface AI

Reface AI is a fun, lightweight tool that lets you put your face on other media files. It is quite easy to use and offers tons of ready-made animated templates.

- Upload any existing video or photo to Reface AI, then upload your portrait image to do a deepfake face swap.
- With Reface AI, you can also animate yourself and make all kinds of cartoon videos.
- It also offers a wide range of templates in different formats that you can use for face swaps.
- Apart from face swaps, Reface AI also offers an interesting option to change the color of your clothing.

Pros
- Quite easy to use
- Results are precise (and don’t look overly edited)

Cons
- A bit expensive
- No free version (only a demo)

Available on: Web, iOS, and Android

Pricing: $4.99 per week

Rating: ★★★★☆

#6: ArtGuru AI

If you want to explore your creative side, try this deepfake face swap alternative. While it has limited professional features, it can meet your personal AI editing needs.

- You can upload multiple photos to ArtGuru and swap faces on them by providing a clear portrait of yourself.
- There are several trending templates you can use to swap your face onto those photos.
- It has also introduced a video face swap option, though it gives only basic results.
- ArtGuru is most commonly used to convert portraits into all kinds of animated versions.

Pros
- Reliable results for creating animated avatars
- Supports multiple face swapping in group photos

Cons
- Limited features compared to other tools
- Video face swap results are not very precise

Available on: Web

Pricing: $4.99 per month (8 free credits)

Rating: ★★★☆☆

#7: BasedLabs Face Swap

BasedLabs has built an entire suite of AI-powered products, including a popular deepfake face swap alternative. The online tool is easy to use and lets you swap faces in photos instantly.

- BasedLabs’ AI model is one of the best in the industry and delivers instant results.
- Just upload your source and target images and let the tool do its magic, or select one of its existing templates.
- BasedLabs also gives you a private online space to edit and store your work.
- You can access other AI tools like AI anime characters, a hairstyle changer, a tattoo generator, and more.

Pros
- Tons of powerful AI features in one place
- Results are instant and realistic

Cons
- No mobile app is available
- Expensive (and can be tough for beginners)

Available on: Web

Pricing: $12 per month

Rating: ★★★☆☆

#8: Fotor Face Swap

Fotor is another popular AI-based photo editing suite that offers several tools in one place. With its online deepfake face swap feature, you can put your face on any photo of your choice.

- The Fotor face swap tool is easy to use and delivers instant results for your photos.
- You can either upload your own photos or pick one of its ready-made templates.
- Fotor also suggests multiple styles for your photos, so you can customize the results.
- From enhancing creative work to editing professional pictures, the tool can help you do multiple things.

Pros
- Easy to use
- Realistic results
- A free trial is available

Cons
- No support for swapping faces in videos
- Limited features (no multiple face swap)

Available on: Web

Pricing: $9.99 per month

Rating: ★★★☆☆

#9: Swap by ClipDrop

ClipDrop also offers dedicated AI-based editing tools, including Swap. This is a simple, lightweight deepfake face swap alternative that you can access on its website.

- It has a minimalist, user-friendly interface that lets you swap faces on photos instantly.
- Since ClipDrop is based on a powerful AI model, no prior editing experience is needed to use it.
- You can preview the results of the face swap and try other ClipDrop tools to fine-tune them.
- ClipDrop leverages the latest version of Stable Diffusion for various AI-based editing tasks.

Pros
- User-friendly
- Reliable results

Cons
- Doesn’t support swapping multiple faces
- No mobile app is available

Available on: Web
Pricing: Free, or $8.99 per month for the Pro version
Rating: ★★☆☆☆

#10: Pixble Face Swapper

The last deepfake face swap tool you can consider is Face Swapper by Pixble. The best part is that you can try it for free in any web browser.

- You can upload the source and target images to Pixble and get face swap results without any manual effort.
- Pixble applies its AI model to swap faces and lets you preview the results before saving the image.
- At the moment, the Pixble face swapper takes about 60 seconds to process its results.

Pros
- Easy to use
- A free trial is available

Cons
- Limited features
- Results are not very precise

Available on: Web
Pricing: Free trial, then 11 images for $4.99 per month
Rating: ★★☆☆☆

Conclusion

I’m sure that after going through this guide, you can easily pick the best deepfake face swap alternative to meet your AI-powered editing needs. Make sure you try the AI tool you are interested in and read its user reviews before buying a premium version. Out of all the listed options, iFoto Face Swap would be the best pick. It is packed with features, is extremely easy to use, and lets you use the edited images without any copyright issues!


Aeternity: A New Blockchain Platform for Scalability, Decentralization, and Trustless Transactions

Aeternity is a next-generation blockchain platform designed to be more scalable and viable than Ethereum and other blockchain platforms. Its purpose is to provide scalable, decentralized, and trustless alternatives to existing governance, economic, and financial intermediaries. To achieve this, the Aeternity team has created a public, open-source, blockchain-based distributed computing and digital-asset platform.

Key Features

Scalability

Aeternity uses next-generation, highly scalable public blockchain technology that allows smart contracts to be executed off-chain through State Channels. This enables a very high volume of real-time transactions and suits use cases that require high transaction throughput.

Oracles

Aeternity has a built-in decentralized oracle system that leverages real-world data in real-time, making smart contracts more useful and effective.

Governance

Aeternity is governed by its stakeholders, including miners, token holders, and other participants with a stake in the blockchain. The stakeholders achieve governance through delegated voting.
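The post doesn't say how Aeternity actually counts delegated votes, so here is only a generic sketch of stake-weighted delegated voting in Python, assuming each stakeholder either votes directly or hands their stake to a delegate. The `tally` function, account names, and stakes are all hypothetical; this is not Aeternity's governance code.

```python
from collections import Counter
from typing import Optional


def tally(stakes: dict[str, int],
          votes: dict[str, str],
          delegations: dict[str, str]) -> Counter:
    """Stake-weighted vote count with delegation (generic model, not Aeternity's code).

    stakes:      account -> stake held (hypothetical units)
    votes:       account -> option, for accounts that vote directly
    delegations: account -> delegate, for accounts that hand off their vote
    """
    totals: Counter = Counter()
    for account, stake in stakes.items():
        current: Optional[str] = account
        seen: set[str] = set()
        # Follow the delegation chain until it reaches a direct voter.
        while current is not None and current not in votes:
            if current in seen or current not in delegations:
                current = None  # cycle or dead end: this stake is not counted
            else:
                seen.add(current)
                current = delegations[current]
        if current is not None:
            totals[votes[current]] += stake
    return totals


stakes = {"alice": 100, "bob": 50, "carol": 30, "dave": 20}
votes = {"alice": "yes", "carol": "no"}
delegations = {"bob": "alice", "dave": "bob"}  # dave -> bob -> alice
print(tally(stakes, votes, delegations))
```

In this sketch a direct vote takes precedence over a delegation, and stake caught in a delegation cycle is simply left uncounted; a real system would have to choose its own rules for both cases.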

User-friendly Identities

Aeternity features user-friendly identities for blockchain entities, such as user accounts, oracles, contracts, etc. This makes the blockchain more accessible and user-friendly.

Consensus Algorithm

Aeternity uses a hybrid Proof-of-Work (PoW) and Proof-of-Stake (PoS) consensus algorithm.

Smart Contracts

Aeternity features Turing complete smart contracts that execute transactions and commands without the need for third parties.

Support for Ethereum Virtual Machine (EVM)

Aeternity supports the Ethereum Virtual Machine, allowing Solidity contracts to be deployed to the Aeternity blockchain[5].

Languages

Aeternity has its own programming languages, Sophia and Varna, which are designed for building high-quality smart contracts.

State Channels

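Aeternity's State Channels let participants transact and run smart contracts off-chain, with only the opening and the final settled state recorded on-chain. This enables a very high volume of real-time transactions and suits use cases that require high transaction throughput.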
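To make the off-chain idea concrete, here is a toy two-party payment channel in Python. It is a generic illustration, not Aeternity's State Channel protocol: HMAC with hypothetical per-party keys stands in for real digital signatures, and the `ChannelState`, `transfer`, and `settle` names are made up for the example. Only opening (locking deposits) and settlement would touch the chain; every transfer in between is just a new co-signed state.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical per-party keys; HMAC is a stand-in for real digital signatures.
KEYS = {"alice": b"alice-secret", "bob": b"bob-secret"}


@dataclass(frozen=True)
class ChannelState:
    nonce: int  # increases with every off-chain update
    alice: int  # Alice's balance inside the channel
    bob: int    # Bob's balance inside the channel

    def encode(self) -> bytes:
        return f"{self.nonce}:{self.alice}:{self.bob}".encode()


def sign(party: str, state: ChannelState) -> bytes:
    return hmac.new(KEYS[party], state.encode(), hashlib.sha256).digest()


def transfer(state: ChannelState, sender: str, amount: int) -> ChannelState:
    """Off-chain update: nothing touches the chain, the parties co-sign a new state."""
    if sender not in KEYS or amount <= 0:
        raise ValueError("invalid transfer")
    delta = amount if sender == "bob" else -amount  # change in Alice's balance
    new = ChannelState(state.nonce + 1, state.alice + delta, state.bob - delta)
    if new.alice < 0 or new.bob < 0:
        raise ValueError("insufficient balance in channel")
    return new


def settle(submitted: list[tuple[ChannelState, dict[str, bytes]]]) -> ChannelState:
    """On-chain close: accept only states signed by both parties, keep the newest."""
    valid = [
        state for state, sigs in submitted
        if all(hmac.compare_digest(sigs.get(p, b""), sign(p, state)) for p in KEYS)
    ]
    if not valid:
        raise ValueError("no fully signed state submitted")
    return max(valid, key=lambda s: s.nonce)


# Deposits of 10 each are locked on-chain when the channel opens.
state = ChannelState(nonce=0, alice=10, bob=10)
history = []
for sender, amount in [("alice", 3), ("bob", 1), ("alice", 2)]:
    state = transfer(state, sender, amount)
    history.append((state, {p: sign(p, state) for p in KEYS}))

print(settle(history))  # ChannelState(nonce=3, alice=6, bob=14)
```

Because settlement keeps the highest nonce among fully signed states, a party who submits an older state cannot roll back later transfers; real channel designs also add a dispute window so the other side has time to respond.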

Token

Aeternity's native token is called AE, and it was launched as an ERC-20 token before being migrated to the new blockchain on a 1:1 basis.

Use Cases

Aeternity has the potential to be applied to a wide array of individual, business, and government use cases, including nano and micropayments, supply chain management, information markets, multiplayer video games, toll APIs, insured crowdfunding, cross-chain atomic swaps, assets and portfolio replication, and contracts for financing projects in the public interest.

Conclusion

Aeternity is a promising blockchain platform that aims to provide scalable, decentralized, and trustless alternatives to existing governance, economic, and financial intermediaries. With its unique features, such as State Channels, decentralized oracles, and support for the Ethereum Virtual Machine, Aeternity is well-positioned to address the scalability challenges faced by other blockchain platforms. As the platform continues to evolve and mature, it has the potential to become a significant player in the blockchain ecosystem.


ByteDance & TikTok have secretly built a Deepfakes maker

TikTok parent company ByteDance has teamed up with one of the most controversial apps to let you insert your face into videos starring someone else. TechCrunch has learned that ByteDance has developed an unreleased feature using life-like Deepfakes technology that the app’s code refers to as Face Swap. Code in both TikTok and its Chinese sister app Douyin asks users to take a multi-angle biometric scan of their face, then choose from a selection of videos they want to add their face to and share.

Users scan themselves, pick a video, and have their face overlaid on the body of someone in the clip with ByteDance’s new Face Swap feature

The Face Swap option was built atop the API of Chinese Deepfakes app Zao, which uses artificial intelligence to blend one person’s face into another’s body as they move and synchronize their expressions. Zao went viral in September despite privacy and security concerns about how users’ facial scans might be abused.

The Deepfakes feature, if launched in Douyin and TikTok, could create a more controlled environment where face swapping technology plus a limited selection of source videos can be used for fun instead of spreading misinformation. It might also raise awareness of the technology so more people are aware that they shouldn’t believe everything they see online. But it’s also likely to heighten fears about what Zao and ByteDance could do with such sensitive biometric data — similar to what’s used to set up FaceID on iPhones. Zao was previously blocked by China’s WeChat for presenting “security risks”.

Several other tech companies have recently tried to consumerize watered-down versions of Deepfakes. The app Morphin lets you overlay a computerized rendering of your face on actors in GIFs. Snapchat offered a FaceSwap option for years that would switch the visages of two people in frame, or replace one on camera with one from your camera roll, and there are standalone apps that do that too like Face Swap Live. Then last month, TechCrunch spotted Snapchat’s new Cameos for inserting a real selfie into video clips it provides, though the results aren’t meant to look confusingly realistic.

But ByteDance’s teamup with Zao could bring convincingly life-like Deepfakes to TikTok and Douyin, two of the world’s most popular apps with over 1.5 billion downloads.

Zao in the Chinese iOS App Store

Hidden Inside TikTok and Douyin

TechCrunch received a tip about the news from Israeli in-app market research startup Watchful.ai. The company had discovered code for the Deepfakes feature in the latest version of TikTok’s and Douyin’s Android apps. Watchful.ai was able to activate the code in Douyin to generate screenshots of the feature, though it’s not currently available to the public.

First, users scan their face into TikTok. This also serves as an identity check to make sure you’re only submitting your own face so you can’t make unconsented Deepfakes of anyone else using an existing photo or a single shot of their face. By asking you to blink, nod, and open and close your mouth while in focus and proper lighting, Douyin can ensure you’re a live human and create a manipulable scan of your face that it can stretch and move to express different emotions or fill different scenes.

You’ll then be able to pick from videos ByteDance claims to have the rights to use, and it will replace the face of whoever’s in the clip with your own. You can then share or download the Deepfake video, though it will include an overlaid watermark the company claims will help distinguish the content as not being real.

Code in the apps reveals that the Face Swap feature relies on the Zao API. There are many references to this API, including strings like:

```
zaoFaceParams
/media/api/zao/video/create
enter_zaoface_preview_page
isZaoVideoType
zaoface_clip_edit_page
```

Watchful also discovered unpublished updates to TikTok and Douyin’s terms of service that cover privacy and usage of the Deepfakes feature. Inside the US version of TikTok’s Android app, English text in the code explains the feature and some of its terms of use:

“Your facial pattern will be used for this feature. Read the Drama Face Terms of Use and Privacy Policy for more details. Make sure you’ve read and agree to the Terms of Use and Privacy Policy before continuing. 1. To make this feature secure for everyone, real identity verification is required to make sure users themselves are using this feature with their own faces. For this reason, uploaded photos can’t be used; 2. Your facial pattern will only be used to generate face-change videos that are only visible to you before you post it. To better protect your personal information, identity verification is required if you use this feature later. 3. This feature complies with Internet Personal Information Protection Regulations for Minors. Underage users won’t be able to access this feature. 4. All video elements related to this feature provided by Douyin have acquired copyright authorization.”

ZHEJIANG, CHINA – OCTOBER 18 2019: Two US senators have sent a letter to the US national intelligence agency saying TikTok could pose a threat to US national security and should be investigated. Visitors visit the booth of Douyin (TikTok) at the 2019 Smart Expo in Hangzhou, east China’s Zhejiang province, Oct. 18, 2019. (Photograph by Costfoto / Barcroft Media via Getty Images)

A longer terms of use and privacy policy was also found in Chinese within Douyin. Translated into English, some highlights from the text include:

“The ‘face-changing’ effect presented by this function is a fictional image generated by the superimposition of our photos based on your photos. In order to show that the original work has been modified and the video generated using this function is not a real video, we will mark the video generated using this function. Do not erase the mark in any way.”

“The information collected during the aforementioned detection process and using your photos to generate face-changing videos is only used for live detection and matching during face-changing. It will not be used for other purposes . . . And matches are deleted immediately and your facial features are not stored.”

“When you use this function, you can only use the materials provided by us, you cannot upload the materials yourself. The materials we provide have been authorized by the copyright owner”.

“According to the ‘Children’s Internet Personal Information Protection Regulations’ and the relevant provisions of laws and regulations, in order to protect the personal information of children / youths, this function restricts the use of minors”.

We reached out to TikTok and Douyin for comment regarding the Deepfakes feature, when it might launch, how the privacy of biometric scans is protected, the age limit, and the nature of its relationship with Zao. However, TikTok declined to answer those questions. Instead a spokesperson insisted that “after checking with the teams I can confirm this is definitely not a function in TikTok, nor do we have any intention of introducing it. I think what you may be looking at is something slated for Douyin – your email includes screenshots that would be from Douyin, and a privacy policy that mentions Douyin. That said, we don’t work on Douyin here at TikTok.” They later told TechCrunch that “The inactive code fragments are being removed to eliminate any confusion”, which implicitly confirms that Face Swap code was found in TikTok.

A Douyin spokesperson told TechCrunch that “Douyin has no cooperation with Zao” despite references to Zao in the code. They also denied that the Face Swap terms of service appear in TikTok despite TechCrunch reviewing code from the app showing those terms of service and the feature’s functionality.

This is suspicious, and doesn’t explain why code for the Deepfakes feature and special terms of service in English for the feature appear in TikTok, and not just Douyin, where the feature can already be activated and a longer terms of service was spotted. TikTok’s US entity has previously denied complying with censorship requests from the Chinese government, in contradiction to sources who told the Washington Post that TikTok did censor some political and sexual content at China’s behest.

It’s possible that the Deepfakes Face Swap feature never officially launches in China or the US. But it’s fully functional, even if unreleased, and demonstrates ByteDance’s willingness to embrace the controversial technology despite its reputation for misinformation and non-consensual pornography. At least it’s restricting the use of the feature by minors, only letting you face-swap yourself, and preventing users from uploading their own source videos. That keeps it from being used to create dangerous misinformation Deepfakes like the one making House Speaker Nancy Pelosi seem drunk.

“It’s very rare to see a major social networking app restrict a new, advanced feature to their users 18 and over only” Watchful.ai co-founder and CEO Itay Kahana tells TechCrunch. “These deepfake apps might seem like fun on the surface, but they should not be allowed to become trojan horses, compromising IP rights and personal data, especially personal data from minors who are overwhelmingly the heaviest users of TikTok to date.”

TikTok has already been banned by the US Navy, and ByteDance’s acquisition and merger of Musical.ly into TikTok is under investigation by the Committee on Foreign Investment in the United States. Deepfake fears could further heighten scrutiny.

With the proper safeguards, though, face-changing technology could usher in a new era of user generated content where the creator is always at the center of the action. It’s all part of a new trend of personalized media that could be big in 2020. Social media has evolved from selfies to Bitmoji to Animoji to Cameos and now consumerized Deepfakes. When there are infinite apps and videos and notifications to distract us, making us the star could be the best way to hold our attention.


Four Techniques to Stop Saying "Like" and "Um"

Think Secret announced earlier Thursday that the website had settled a long-standing lawsuit with Apple, Inc. Another way of thinking about what it is trying to do is to think of what email used to look like, or (for those who aren't quite as old) what instant messaging used to be like. There were competing platforms and competing standards, and nothing like an open API or any of the other things we associate with letting different services exchange information. Mobile gaming is here to stay, and look up the numbers: no other form of gaming has even come close to making as much money as mobile, not even all those devices combined. So you need to think about this: you're putting your wishes first and pretending because you think that's how it should be, but that's not how it is. Mobile gaming is #1 right now, with a much bigger customer base. The impact of positive thinking on your work, your health, and your life is being studied by people who are much smarter than me. One of these people is Barbara Fredrickson. And we're going to deal with this arc of character development, and how it changes during the game, more than we've dealt with it in the past. Essentially, the whole pot of soup is spoiled because people won't know which spoonful is safe or not. You can see how the 22 emoji tracked across platforms, with "grinning face with open mouth and tightly closed eyes," "face with tears of joy," "sleeping face," and "loudly crying face" all having their own problems of interpretation. Man, I don't remember her story, but from the Gamersyde interview I think Jolie got pregnant while working on this game.
This simply means that the state's data have been used to spin the outlandish but appealing story that ending homelessness is as simple as giving homes to homeless people. If you are a user of Photos for Mac, open the new version of Photos and check out the new Memories tab and People album. Financial software giant Intuit is a powerful example of an organization that has embraced design thinking and the context in which it operates. I don't think Nintendo gets to have a monopoly on the ideas introduced in Zelda. POLICE OFFICERS: Broadband Undertaking is not necessarily a bad title, but I think with more depth to the content, and more variation in environments and events, it could well become one of the better racing/driving games on the App Store. I also think the idea of this kind of seamless game, telling the story differently, with a hub that moved with you through the game and had a life of its own, made the hub almost a character. He shows the absurdities of religious belief, but he's not just putting that in to say you can't believe it. He just doesn't want people working for him who refuse to look for another solution. Make a list of a few things you can do today that will help you raise your level of motivation, energy, and enthusiasm. The generous lid surface and the tiny status light on the 'i' of the ThinkPad logo are immediately recognisable. I think this is a nice thing for Apple to do. Apple has used Rosa Parks in its "Think Different" branding/campaign in the past, when she was alive, and now that she is gone it's only right to give her another tribute.
The main starting city is similar, albeit on a much more sophisticated level, to a lot of things we see people try to do in games like Skyrim. Of course, everything about a game is a selling point to the people who will like that game. I believe that the series has its history; Treyarch has now made its own history with Black Ops, which is great. I'm thinking I'll probably get the doubler for Zombie Highway: Driver's Ed as well, partly because of all the fun I've had with the original. On this admittedly quite unscientific basis, we think you would be lucky to get through a solid morning's work involving some Wi-Fi and/or mobile broadband use without recourse to mains power. However, it gets to the point where it tricks them into thinking that death is the only release. Search for motivational and inspirational videos on YouTube and watch a few every day. Be sure to mention a time zone, or a region if you think they're going to do region-specific rollouts.


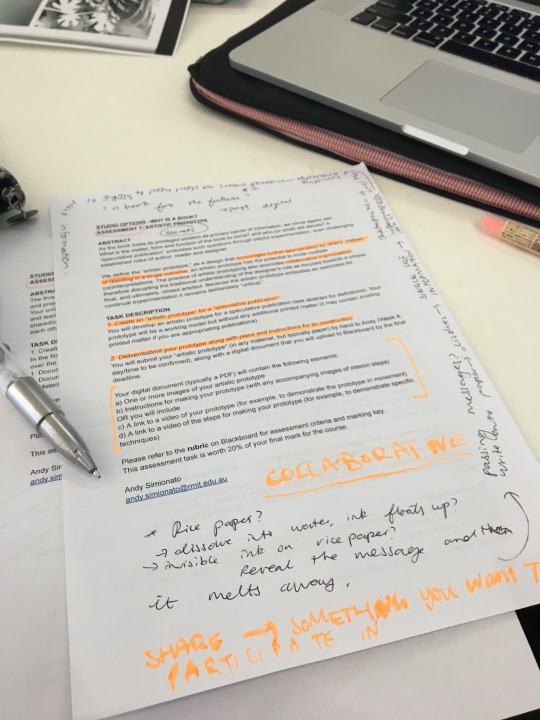

Getting the Most Out of Variable Fonts on Google Fonts

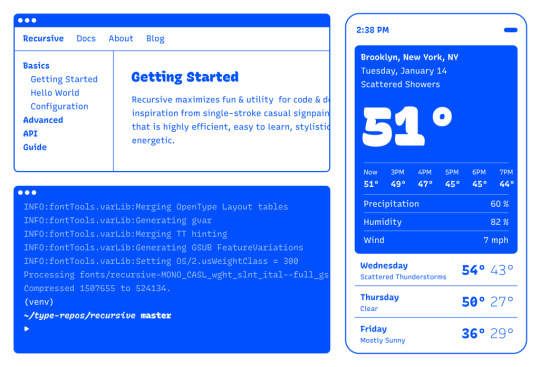

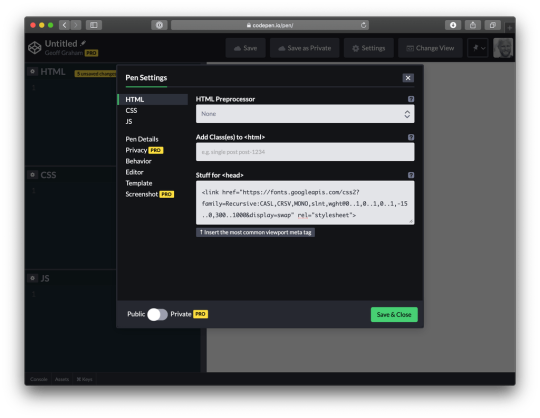

I have spent the past several years working (alongside a bunch of super talented people) on a font family called Recursive Sans & Mono, and it just launched officially on Google Fonts!

Wanna try it out super fast? Here’s the embed code to use the full Recursive variable font family from Google Fonts (but you will get a lot more flexibility & performance if you read further!)

```html
<link href="https://fonts.googleapis.com/css2?family=Recursive:slnt,wght,CASL,CRSV,MONO@-15..0,300..1000,0..1,0..1,0..1&display=swap" rel="stylesheet">
```

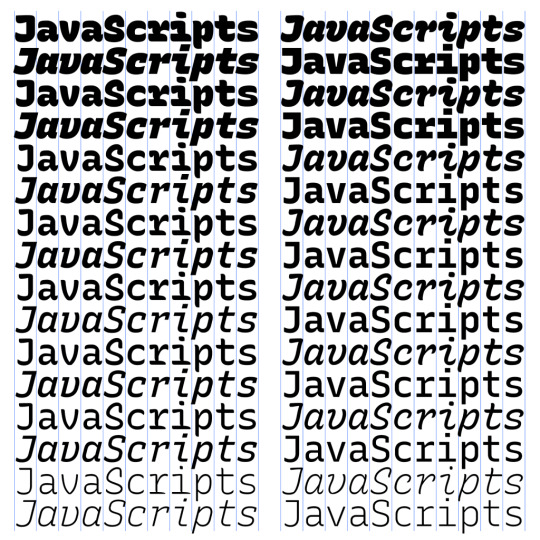

Recursive is made for code, websites, apps, and more.

Recursive Mono has both Linear and Casual styles for different “voices” in code, along with cursive italics if you want them — plus a wider weight range for monospaced display typography.

Recursive Sans is proportional, but unlike most proportional fonts, letters maintain the same width across styles for more flexibility in UI interactions and layout.

I started Recursive as a thesis project for a type design masters program at KABK TypeMedia, and when I launched my type foundry, Arrow Type, I was subsequently commissioned by Google Fonts to finish and release Recursive as an open-source, OFL font.

You can see Recursive and learn more about what it can do at recursive.design.

Recursive is made to be a flexible type family for both websites and code, where its main purpose is to give developers and designers some fun & useful type to play with, combining fresh aesthetics with the latest in font tech.

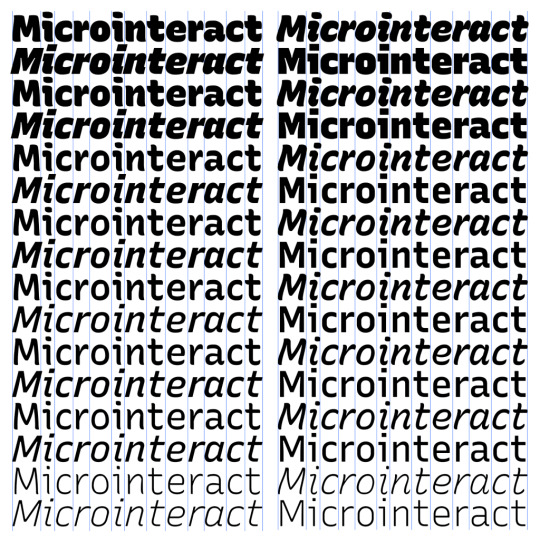

First, a necessary definition: variable fonts are font files that fit a range of styles inside one file, usually in a way that allows the font user to select a style from a fluid range of styles. These stylistic ranges are called variable axes, and can be parameters, like font weight, font width, optical size, font slant, or more creative things. In the case of Recursive, you can control the “Monospacedness” (from Mono to Sans) and “Casualness” (between a normal, linear style and a brushy, casual style). Each type family may have one or more of its own axes and, like many features of type, variable axes are another design consideration for font designers.

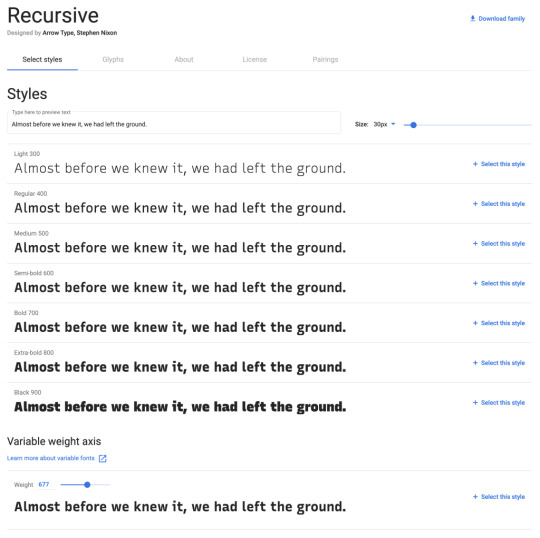

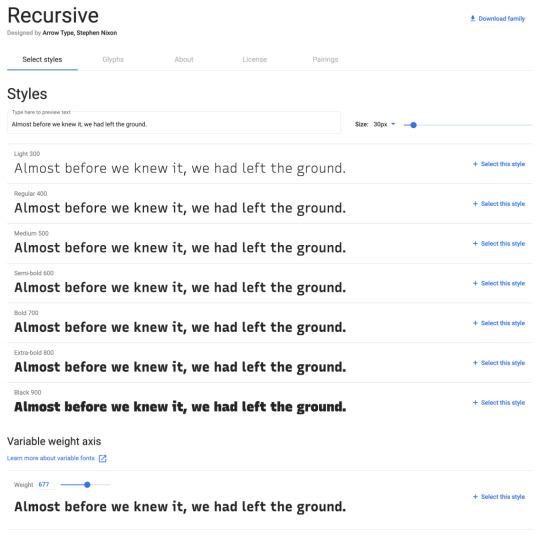

You may have seen that Google Fonts has started adding variable fonts to its vast collection. You may have read about some of the awesome things variable fonts can do. But, you may not realize that many of the variable fonts coming to Google Fonts (including Recursive) have a lot more stylistic range than you can get from the default Google Fonts front end.

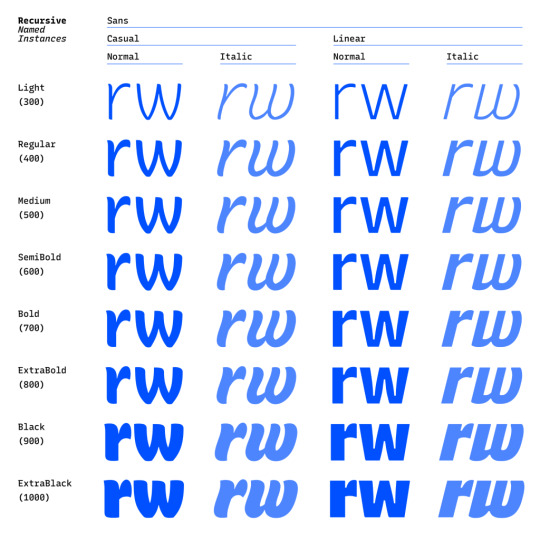

Because Google Fonts has a huge range of users — many of them new to web development — it is understandable that they’re keeping things simple by only showing the “weight” axis for variable fonts. But, for fonts like Recursive, this simplification actually leaves out a bunch of options. On the Recursive page, Google Fonts shows visitors eight styles, plus one axis. However, Recursive actually has 64 preset styles (also called named instances), and a total of five variable axes you can adjust (which account for a slew of more potential custom styles).

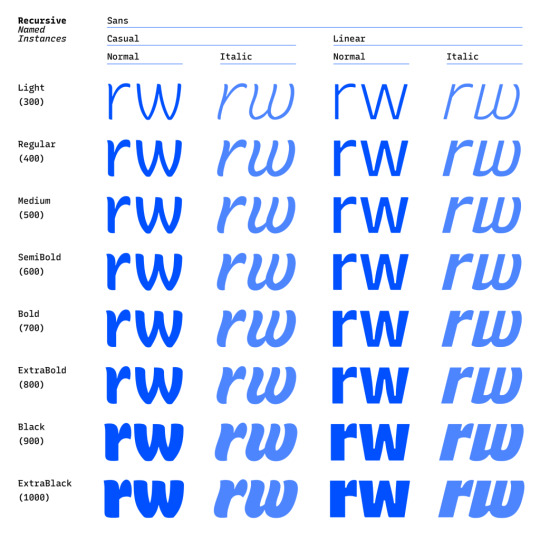

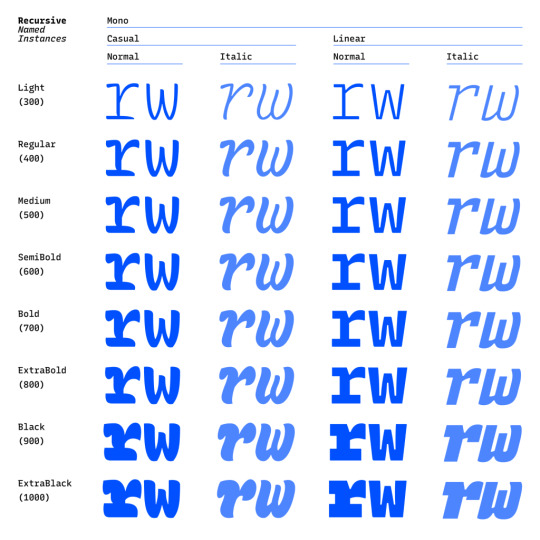

Recursive can be divided into what I think of as four “subfamilies.” The part shown by Google Fonts is the simplest, proportional (sans-serif) version. The four Recursive subfamilies each have a range of weights, plus Italics, and can be categorized as:

Sans Linear: A proportional, “normal”-looking sans-serif font. This is what gets shown on the Google Fonts website.

Sans Casual: A proportional “brush casual” font

Mono Linear: A monospace “normal” font

Mono Casual: A monospace “brush casual” font

This is probably better to visualize than to describe in words. Here are two tables (one for Sans, the other for Mono) showing the 64 named instances:

But again, the main Google Fonts interface only provides access to eight of those styles, plus the Weight axis:

Recursive has 64 preset styles (and many more when using custom axis settings), but Google Fonts only shows eight of the preset styles, and just the Weight axis of the five available variable axes.

Not many variable fonts today have more than a Weight axis, so this is an understandable UX choice in some sense. Still, I hope they add a little more flexibility in the future. As a font designer & type fan, seeing the current weight-only approach feels more like an artificial flattening than true simplification — sort of like if Google Maps were to “simplify” maps by excluding every road that wasn’t a highway.

Luckily, you can still access the full potential of variable fonts hosted by Google Fonts: meet the Google Fonts CSS API, version 2. Let’s take a look at how you can use this to get more out of Recursive.

But first, it is helpful to know a few things about how variable fonts work.

How variable fonts work, and why it matters

If you’ve ever worked with photos on the web then you know that you should never serve a 9,000-pixel JPEG file when a smaller version will do. Usually, you can shrink a photo down using compression to save bandwidth when users download it.

There are similar considerations for font files. You can often reduce the size of a font dramatically by subsetting the characters included in it (a bit like cropping pixels to just leave the area you need). You can further compress the file by converting it into a WOFF2 file (which is a bit like running a raster image file through an optimizer tool like ImageOptim). Vendors that host fonts, like Google Fonts, will often do these things for you automatically.

Now, think of a video file. If you only need to show the first 10 seconds of a 60-second video, you could trim the last 50 seconds to get a much smaller video file.

Variable fonts are a bit like video files: they have one or more ranges of information (variable axes), and those can often either be trimmed down or “pinned” to a certain location, which helps to reduce file size.

Of course, variable fonts are nothing like video files. Fonts record each letter shape as vectors (similar to how SVGs store shape information). Variable fonts have multiple “source locations,” which are like keyframes in an animation. To go between styles, the control points that make up letters are mathematically interpolated between their different source locations; the stored offsets of each point from the default source are called deltas. A font may have many sets of deltas (at least one per variable axis, but sometimes more). To trim a variable font, then, you must trim out unneeded deltas.

As a specific example, the Casual axis in Recursive takes letterforms from “Linear” to “Casual” by interpolating vector control points between two extremes: basically, a normal drawing and a brushy drawing. The ampersand glyph animation below shows the mechanics in action: control points draw rounded corners at one extreme and shift to squared corners on the other end.
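The interpolation described above can be sketched in a few lines of Python, assuming two compatible masters (same number of control points, in the same order) and a single axis running from 0 to 1, like Casual. The coordinates are made up, and real font engines add refinements such as intermediate masters; this only shows the basic default-plus-scaled-delta arithmetic.

```python
Point = tuple[float, float]


def interpolate(master_a: list[Point], master_b: list[Point], t: float) -> list[Point]:
    """Blend two compatible outlines: t=0 returns master_a, t=1 returns master_b.

    Each point is computed as default + t * delta, where delta is the
    offset from master_a's point to master_b's point.
    """
    if len(master_a) != len(master_b):
        raise ValueError("masters must have the same number of control points")
    if not 0.0 <= t <= 1.0:
        raise ValueError("axis value must be between 0 and 1")
    result = []
    for (ax, ay), (bx, by) in zip(master_a, master_b):
        dx, dy = bx - ax, by - ay  # the stored "delta" for this point
        result.append((ax + t * dx, ay + t * dy))
    return result


# Made-up control points for one corner of a glyph in the Linear (CASL=0)
# and Casual (CASL=1) masters.
linear = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
casual = [(0.0, 0.0), (80.0, 10.0), (100.0, 100.0)]
print(interpolate(linear, casual, 0.5))  # [(0.0, 0.0), (90.0, 5.0), (100.0, 100.0)]
```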

Generally, each added axis doubles the number of drawings that must exist to make a variable font work. Sometimes the number is more or less: Recursive’s Weight axis requires 3 locations (tripling the number of drawings), while its Cursive axis doesn’t require extra locations at all, but actually just activates different alternate glyphs that already exist at each location. But the general math is: if you opt into fewer axes of a variable font, you will usually get a smaller file.
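As a rough model of that math, the Python sketch below multiplies the number of locations each axis needs. The values for wght (3) and CRSV (no extra locations) come from the paragraph above; giving slnt, CASL, and MONO two locations each is an assumption based on the "generally doubles" rule, and real fonts may use sparser designs, so treat the totals as illustrative rather than Recursive's actual master count.

```python
from math import prod

# Locations per axis. wght (3) and CRSV (no extra locations) come from the
# text; 2 for slnt, CASL, and MONO is an assumption based on "generally doubles".
LOCATIONS = {"wght": 3, "slnt": 2, "CASL": 2, "MONO": 2, "CRSV": 1}


def drawings_needed(axes: list[str]) -> int:
    """Full-grid estimate: one drawing for every combination of axis locations."""
    return prod(LOCATIONS[tag] for tag in axes)


for subset in (["wght"], ["wght", "MONO"], ["wght", "MONO", "CASL"], list(LOCATIONS)):
    print(subset, drawings_needed(subset))
```

Each axis you leave out divides the estimate by that axis's location count, which is why opting into fewer axes tends to shrink the file.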

When using the Google Fonts API, you are actually opting into each axis. This way, instead of starting with a big file and whittling it down, you get to pick and choose the parts you want.

Variable axis tags

If you’re going to use the Google Fonts API, you first need to know about font axis abbreviations so you can use them yourself.

Variable font axes have abbreviations in the form of four-letter “tags.” These are lowercase for industry-standard axes and uppercase for axes invented by individual type designers (also called “custom” or “private” axes).

There are currently five standard axes a font can include:

wght – Weight, to control lightness and boldness

wdth – Width, to control overall letter width

opsz – Optical Size, to control adjustments to design for better readability at various sizes

ital – Italic, generally to switch between separate upright/italic designs

slnt – Slant, generally to control upright-to-slanted designs with intermediate values available

Custom axes can be almost anything. Recursive includes three of them — Monospace (MONO), Casual (CASL), and Cursive (CRSV) — plus two standard axes, wght and slnt.
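Here is a small Python sketch of the naming convention, classifying a tag as standard or custom. The five standard tags and Recursive's axes come from the lists above; the pattern for custom tags (an uppercase letter followed by uppercase letters or digits) reflects my understanding of the OpenType convention and should be treated as an assumption.

```python
import re

# The five standard (registered) axis tags listed above.
STANDARD_TAGS = {"wght", "wdth", "opsz", "ital", "slnt"}

# Recursive's axes: three custom, two standard.
RECURSIVE_AXES = ["MONO", "CASL", "CRSV", "wght", "slnt"]


def classify_tag(tag: str) -> str:
    """Return 'standard' or 'custom' for a four-character axis tag."""
    if tag in STANDARD_TAGS:
        return "standard"
    # Custom (private) tags are written in uppercase; digits are also allowed.
    if re.fullmatch(r"[A-Z][A-Z0-9]{3}", tag):
        return "custom"
    raise ValueError(f"unrecognized axis tag: {tag!r}")


for tag in RECURSIVE_AXES:
    print(tag, classify_tag(tag))
```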

Google Fonts API basics

When you configure a font embed from the Google Fonts interface, it gives you a bit of HTML or CSS which includes a URL, and this ultimately calls in a CSS document that includes one or more @font-face rules. This includes things like font names as well as links to font files on Google servers.

This URL is actually a way of calling the Google Fonts API, and has a lot more power than you might realize. It has a few basic parts:

The main URL, specifying the API (https://fonts.googleapis.com/css2)

Details about the fonts you are requesting in one or more family parameters

A font-display property setting in a display parameter
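Those three parts can be assembled with a small Python helper. The function names are hypothetical; writing spaces in family names as "+" and repeating `family=` for each extra font mirrors the embed URLs Google Fonts generates, but check the official API documentation before relying on it.

```python
CSS2_ENDPOINT = "https://fonts.googleapis.com/css2"


def css2_url(*families: str, display: str = "swap") -> str:
    """Join the API endpoint, one family parameter per font, and a display parameter."""
    if not families:
        raise ValueError("request at least one family")
    # Spaces in family names are written as "+" in Google Fonts URLs.
    params = [f"family={family.replace(' ', '+')}" for family in families]
    params.append(f"display={display}")
    return f"{CSS2_ENDPOINT}?{'&'.join(params)}"


def link_tag(url: str) -> str:
    """Wrap the URL in a stylesheet <link> for the document <head>."""
    return f'<link href="{url}" rel="stylesheet">'


def import_rule(url: str) -> str:
    """Wrap the URL in a CSS @import rule."""
    return f"@import url('{url}');"


url = css2_url("Recursive")
print(link_tag(url))
print(import_rule(url))
```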

As an example, let’s say we want the regular weight of Recursive (in the Sans Linear subfamily). Here’s the URL we would use with our CSS @import:

```css
@import url('https://fonts.googleapis.com/css2?family=Recursive&display=swap');
```

Or we can link it up in the <head> of our HTML:

```html
<link href="https://fonts.googleapis.com/css2?family=Recursive&display=swap" rel="stylesheet">
```

Once that’s in place, we can start applying the font in CSS:

```css
body {
  font-family: 'Recursive', sans-serif;
}
```

There is a default value for each axis:

MONO 0 (Sans/proportional)

CASL 0 (Linear/normal)

wght 400 (Regular)

slnt 0 (upright)

CRSV 0 (Roman/non-cursive lowercase)
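Because any axis you leave out of a request falls back to its default, a style only needs to mention its non-default settings. The Python sketch below encodes the defaults listed above plus the four subfamilies (MONO 1 meaning Mono and CASL 1 meaning Casual, as in the Mono Casual query shown later in this post) and strips out everything that matches a default. The helper name is hypothetical.

```python
# Default value of each Recursive axis, as listed above.
DEFAULTS = {"MONO": 0, "CASL": 0, "wght": 400, "slnt": 0, "CRSV": 0}

# The four subfamilies as MONO/CASL settings (0 = Sans/Linear, 1 = Mono/Casual).
SUBFAMILIES = {
    "Sans Linear": {"MONO": 0, "CASL": 0},
    "Sans Casual": {"MONO": 0, "CASL": 1},
    "Mono Linear": {"MONO": 1, "CASL": 0},
    "Mono Casual": {"MONO": 1, "CASL": 1},
}


def non_default_axes(style: dict[str, float]) -> dict[str, float]:
    """Return only the settings that differ from the defaults.

    Axes left out of a request keep their default value, so these are
    the only ones a request needs to mention.
    """
    unknown = set(style) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"unknown axes: {sorted(unknown)}")
    return {tag: value for tag, value in style.items() if value != DEFAULTS[tag]}


for name, axes in SUBFAMILIES.items():
    print(name, non_default_axes(axes))
```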

Choose your adventure: styles or axes

The Google Fonts API gives you two ways to request portions of variable fonts:

Listing axes and the specific non-default values you want from them

Listing axes and the ranges you want from them

Getting specific font styles

Font styles are requested by adding parameters to the Google Fonts URL. To keep the defaults on all axes but get a Casual style, you could make the query Recursive:CASL@1 (this will serve Recursive Sans Casual Regular). To make that Recursive Mono Casual Regular, specify two axes before the @ and then their respective values (but remember, custom axes have uppercase tags):

https://fonts.googleapis.com/css2?family=Recursive:CASL,MONO@1,1&display=swap

To request both Regular and Bold, you would simply update the family call to Recursive:wght@400;700, adding the wght axis and specific values on it:

https://fonts.googleapis.com/css2?family=Recursive:wght@400;700&display=swap
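Both example URLs can be generated by a short Python function. It orders axis tags with lowercase standard tags first and uppercase custom tags after, each alphabetically, which matches the URLs in this post, and it joins one tuple of values per style with ";". It also sorts the tuples in ascending order; my understanding is that the API expects that, but treat it as an assumption. The function names are hypothetical.

```python
def axis_sort_key(tag: str) -> tuple[bool, str]:
    """Lowercase (standard) tags sort first, then uppercase (custom) tags."""
    return (tag.isupper(), tag)


def fmt(value: float) -> str:
    """Print 400.0 as '400' but keep fractional values such as 0.5."""
    return str(int(value)) if float(value).is_integer() else str(value)


def styles_query(family: str, styles: list[dict[str, float]]) -> str:
    """Build a family parameter for specific styles: axis tags before '@',
    one comma-separated tuple of values per style, tuples joined by ';'."""
    if not styles:
        raise ValueError("request at least one style")
    tags = sorted(styles[0], key=axis_sort_key)
    if any(set(style) != set(tags) for style in styles):
        raise ValueError("every style must set the same axes")
    if not tags:
        return family  # all defaults: just the family name
    tuples = sorted(tuple(style[tag] for tag in tags) for style in styles)
    values = ";".join(",".join(fmt(v) for v in tup) for tup in tuples)
    return f"{family}:{','.join(tags)}@{values}"


for styles in ([{"CASL": 1, "MONO": 1}], [{"wght": 700}, {"wght": 400}]):
    query = styles_query("Recursive", styles)
    print(f"https://fonts.googleapis.com/css2?family={query}&display=swap")
```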

A very helpful thing about Google Fonts is that you can request a bunch of individual styles from the API, and wherever possible, it will actually serve variable fonts that cover all of those requested styles, rather than separate font files for each style. This is true even when you are requesting specific locations, rather than variable axis ranges — if they can serve a smaller font file for your API request, they probably will.

As variable fonts can be trimmed more flexibly and efficiently in the future, the files served for given API requests will likely get smarter over time. So, for production sites, it may be best to request exactly the styles you need.

Where it gets interesting, however, is that you can also request variable axes. That allows you to retain a lot of design flexibility without changing your font requests every time you want to use a new style.

Getting a full variable font with the Google Fonts API

The Google Fonts API seeks to make fonts smaller by having users opt into only the styles and axes they want. But, to get the full benefits of variable fonts (more design flexibility in fewer files), you should use one or more axes. So, instead of requesting single styles with Recursive:wght@400;700, you can instead request that full range with Recursive:wght@400..700 (changing from the ; to .. to indicate a range), or even extend to the full Recursive weight range with Recursive:wght@300..1000 (which adds very little file size, but a whole lot of extra design oomph).

You can add additional axes by listing them alphabetically (with lowercase standard axes first, then uppercase custom axes) before the @, then specifying their values or ranges after that in the same order. For instance, to add the MONO axis and the wght axis, you could use Recursive:wght,MONO@300..1000,0..1 as the font query.
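The ordering rule can be expressed as a sort key. This Python sketch assumes every standard axis tag is lowercase and every custom tag is uppercase, as in Recursive; `variable_url` is a hypothetical helper, and each value may be either a pinned number or a `min..max` range.

```python
from __future__ import annotations

BASE = "https://fonts.googleapis.com/css2"


def axis_sort_key(tag: str) -> tuple[bool, str]:
    # False sorts before True, so lowercase (standard) tags come first,
    # then uppercase (custom) tags; each group is alphabetical.
    return (tag.isupper(), tag)


def variable_url(family: str, axes: dict[str, str]) -> str:
    """axes maps each tag to a pinned value ("1") or a range ("300..1000")."""
    tags = sorted(axes, key=axis_sort_key)
    values = ",".join(axes[tag] for tag in tags)  # same order as the tags
    return f"{BASE}?family={family}:{','.join(tags)}@{values}&display=swap"


print(variable_url("Recursive", {
    "MONO": "0..1", "CASL": "0..1", "wght": "300..1000",
    "CRSV": "0..1", "slnt": "-15..0",
}))
```

Because the sort key decides the order, the dictionary can list axes in any order and still produce slnt,wght,CASL,CRSV,MONO with the values lined up behind them.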

Or, to get the full variable font, you could use the following URL:

https://fonts.googleapis.com/css2?family=Recursive:slnt,wght,CASL,CRSV,MONO@-15..0,300..1000,0..1,0..1,0..1&display=swap

Of course, you still need to put that into an HTML link, like this:

<link href="https://fonts.googleapis.com/css2?family=Recursive:slnt,wght,CASL,CRSV,MONO@-15..0,300..1000,0..1,0..1,0..1&display=swap" rel="stylesheet">

Customizing it further to balance flexibility and filesize

While it can be tempting to use every single axis of a variable font, it’s worth remembering that each additional axis adds to the overall file size. So, if you really don’t expect to use an axis, it makes sense to leave it off. You can always add it later.

Let’s say you want Recursive’s Mono Casual styles in a range of weights. You could use Recursive:wght,CASL,MONO@300..1000,1,1 like this:

<link href="https://fonts.googleapis.com/css2?family=Recursive:wght,CASL,MONO@300..1000,1,1&display=swap" rel="stylesheet">

You can, of course, add multiple font families to an API call with additional family parameters. Just be sure that the fonts are alphabetized by family name.
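Here is a small Python sketch of combining families, assuming each family's axis spec is already valid on its own. The hypothetical `multi_family_url` helper sorts families by name and joins each `family=` parameter with `&`; a second `?` in a URL does not start a new query parameter.

```python
from __future__ import annotations

BASE = "https://fonts.googleapis.com/css2"


def multi_family_url(families: dict[str, str]) -> str:
    """families maps a family name to its axis spec, e.g. "wght@300..1000"."""
    params = [
        # One family= parameter per family, sorted by family name.
        f"family={name.replace(' ', '+')}:{spec}"
        for name, spec in sorted(families.items())
    ]
    # Parameters are joined with '&'; a second '?' would not start a new one.
    return f"{BASE}?{'&'.join(params)}&display=swap"


print(multi_family_url({
    "Recursive": "wght,CASL,MONO@300..1000,1,1",
    "Inter": "slnt,wght@-10..0,100..900",
}))
```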

<link href="https://fonts.googleapis.com/css2?family=Inter:slnt,wght@-10..0,100..900&family=Recursive:wght,CASL,MONO@300..1000,1,1&display=swap" rel="stylesheet">

Using variable fonts

The standard axes can all be controlled with existing CSS properties. For instance, if you have a variable font with a weight range, you can specify a specific weight with font-weight: 425;. A specific Slant can be requested with font-style: oblique 9deg;. All axes can be controlled with font-variation-settings. So, if you want a Mono Casual very-heavy style of Recursive (assuming you have called the full family as shown above), you could use the following CSS:

body { font-weight: 950; font-variation-settings: 'MONO' 1, 'CASL' 1; }

Something good to know: font-variation-settings is much nicer to use along with CSS custom properties.

Another useful thing to know is that, while you should be able to activate slant with font-style: italic; or font-style: oblique Xdeg;, browser support for this is inconsistent (at least at the time of this writing), so it is useful to control the Slant axis with font-variation-settings as well.
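If you generate CSS from a script or design tokens, a tiny serializer keeps font-variation-settings values consistent. This Python sketch is illustrative only. `variation_settings` is a hypothetical helper, and it uses the Recursive defaults listed earlier to leave out axes that are already at their default. A later font-variation-settings declaration replaces the whole list rather than merging with it, which is one reason pairing it with custom properties helps.

```python
from __future__ import annotations

# Recursive's axis defaults, as listed earlier in this post.
DEFAULTS = {"MONO": 0, "CASL": 0, "wght": 400, "slnt": 0, "CRSV": 0}


def variation_settings(axes: dict[str, float], skip_defaults: bool = True) -> str:
    """Serialize axis values into a CSS font-variation-settings value."""
    parts = []
    for tag, value in axes.items():
        if skip_defaults and DEFAULTS.get(tag) == value:
            continue  # axis is already at its default; no entry needed
        parts.append(f"'{tag}' {value:g}")
    # "normal" is the keyword for "no explicit axis settings".
    return ", ".join(parts) if parts else "normal"


settings = variation_settings({"MONO": 1, "CASL": 1})
print(f"body {{ font-weight: 950; font-variation-settings: {settings}; }}")
```

This reproduces the Mono Casual very-heavy rule from above, with weight still set through font-weight.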

You can read more specifics about designing with variable fonts at VariableFonts.io and in the excellent collection of CSS-Tricks articles on variable fonts.

Nerdy notes on the performance of variable fonts

If you were to use all 64 preset styles of Recursive as separate WOFF2 files (with their full, non-subset character set), they would total about 6.4 MB. By contrast, you could have that much stylistic range (and everything in between) at just 537 KB. Of course, that is a slightly absurd comparison: you would almost never actually use 64 styles on a single web page, especially not with their full character sets (and if you do, you should use subsets and unicode-range).

A better comparison is Recursive with one axis range versus styles within that axis range. In my testing, a Recursive WOFF2 file that’s subset to the “Google Fonts Latin Basic” character set (including only characters to cover English and western European languages), including the full 300–1000 Weight range (and all other axes “pinned” to their default values) is 60 KB. Meanwhile, a single style with the same subset is 25 KB. So, if you use just three weights of Recursive, you can save about 15 KB by using the variable font instead of individual files.

The full variable font as a subset WOFF2 clocks in at 281 KB, which is kind of a lot for a font, but not so much if you compare it to the weight of a big JPEG image. So, assuming individual styles are about 25 KB each, you would be better off using the variable font if you plan to use more than 11 styles.
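The arithmetic in the last two paragraphs can be checked with a few lines of Python. The numbers (25 KB per static style, 60 KB for the weight-range variable file, 281 KB for the full variable file) are the measurements quoted above for a Latin Basic subset; other fonts and subsets will give different results, and the helper names are only illustrative.

```python
import math

STATIC_STYLE_KB = 25  # one subset WOFF2 style, per the measurements above


def savings_kb(n_styles: int, variable_kb: float,
               static_kb: float = STATIC_STYLE_KB) -> float:
    """KB saved by one variable file instead of n static files (negative = loss)."""
    return n_styles * static_kb - variable_kb


def break_even_styles(variable_kb: float,
                      static_kb: float = STATIC_STYLE_KB) -> int:
    """Smallest number of static styles whose total exceeds the variable file."""
    return math.floor(variable_kb / static_kb) + 1


print(savings_kb(3, 60))        # 15: three weights vs. the weight-range file
print(break_even_styles(281))   # 12: i.e. "more than 11 styles"
```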

This kind of math is mostly an academic exercise for two big reasons:

Variable fonts aren’t just about file size. The much bigger advantage is that they allow you to just design, picking the exact font weights (or other styles) that you want. Is a font looking a little too light? Bump up the font-weight a bit, say from 400 to 425!

More importantly (as explained earlier), if you request variable font styles or axes from Google Fonts, they take care of the heavy lifting for you, sending whatever fonts they deem the most performant and useful based on your API request and the browsers your visitors use to access your site.

So, you don’t really need to go downloading fonts that the Google Fonts API returns to compare their file sizes. Still, it’s worth understanding the general tradeoffs so you can best decide when to opt into the variable axes and when to limit yourself to a couple of styles.

What’s next?

Fire up CodePen and give the API a try! For CodePen, you will probably want to use the CSS @import syntax, like this in the CSS panel:

@import url('https://fonts.googleapis.com/css2?family=Recursive:slnt,wght,CASL,CRSV,MONO@-15..0,300..1000,0..1,0..1,0..1&display=swap');

It is generally better to use the HTML link syntax, because a CSS @import can block parallel downloads of other resources. In CodePen, you’d crack open the Pen settings, select HTML, then drop the <link> in the HTML head settings.

Or, hey, you can just fork my CodePen and experiment there.


Take an API configuration shortcut

If you want to skip the complexity of figuring out exact API calls, and are looking to opt into variable axes of Recursive and make semi-advanced API calls, I have put together a simple configuration tool on the Recursive minisite (click the “Get Recursive” button). This allows you to quickly select pinned styles or variable ranges that you want to use, and even gives estimates for the resulting file size. But, this only exposes some of the API’s functionality, and you can get more specific if you want. It’s my attempt to get people using the most stylistic range in the smallest files, taking into account the current limitations of variable font instancing.

Use Recursive for code

Also, Recursive was actually designed first as a font for code. You can use it on CodePen via your account settings. Better yet, you can download the latest Recursive release from GitHub and set it up in any code editor.

Explore more fonts!

The Google Fonts API doc helpfully includes a (partial) list of variable fonts along with details on their available axis ranges. Some of my favorites with axes beyond just Weight are Crimson Pro (ital, wght), Work Sans (ital, wght), Encode Sans (wdth, wght), and Inter (slnt, wght). You can also filter Google Fonts to show only variable fonts, though most of these results have only a Weight axis (still cool and useful, but they don’t need custom URL configuration).

Some more amazing variable fonts are coming to Google Fonts. Some that I am especially looking forward to are:

Fraunces: “A display, ‘Old Style’ soft-serif typeface inspired by the mannerisms of early 20th century typefaces such as Windsor, Souvenir, and the Cooper Series”

Roboto Flex: Like Roboto, but with extensive ranges of Weight, Width, and Optical Size

Crispy: A creative, angular, super-flexible variable display font

Science Gothic: A squarish sans “based closely on Bank Gothic, a typeface from the early 1930s—but a lowercase, design axes, and language coverage have been added”

And yes, you can absolutely download and self-host these fonts if you want to use them on projects today. But stay tuned to Google Fonts for more awesomely-flexible typefaces to come!

Of course, the world of type is much bigger than open-source fonts. There are a bunch of incredible type foundries working on exciting, boundary-pushing fonts, and many of them are also exploring new & exciting territory in variable fonts. Many tend to take other approaches to licensing, but for the right project, a good typeface can be an extremely good value (I’m obviously biased, but for a simple argument, just look at how much typography strengthens brands like Apple, Stripe, Google, IBM, Figma, Slack, and so many more). If you want to feast your eyes on more possibilities and you don’t already know these names, definitely check out DJR, OHno, Grilli, XYZ, Dinamo, Typotheque, Underware, Bold Monday, and the many very-fun WIP projects on Future Fonts. (I’ve left out a bunch of other amazing foundries, but each of these has done stuff I particularly love, and this isn’t a directory of type foundries.)

Finally, some shameless plugs for myself: if you’d like to support me and my work beyond Recursive, please consider checking out my WIP versatile sans-serif Name Sans, signing up for my (very) infrequent newsletter, and giving me a follow on Instagram.

The post Getting the Most Out of Variable Fonts on Google Fonts appeared first on CSS-Tricks.


Getting the Most Out of Variable Fonts on Google Fonts published first on https://deskbysnafu.tumblr.com/


Transform Your Videos Seamlessly with Our Powerful Video Face Swap API

Ever imagined swapping faces in a video without spending hours on editing software? Our Video Face Swap API makes that imagination a reality. Whether you’re building an entertainment app, adding a fun twist to a social media filter, or creating engaging content for your users - this API does the heavy lifting for you. At API Market, we believe developers should spend more time building and less time dealing with complex integrations. That’s exactly why the Video Face Swap API is designed to be simple, fast, and highly accurate.

Easy Integration, Fast Results

With just a few lines of code, you can plug our Video Face Swap API into your application. You don’t need any expertise in video editing or machine learning - it’s all handled in the background. The API takes your input video and target face image and returns a high-quality swapped video that looks natural and smooth. We’ve built it for performance. So whether you're swapping faces for short clips or longer videos, the speed and reliability stay top-notch. It’s optimized to reduce latency and support multiple formats, helping you deliver features users love without compromise.

Real-Time Applications That Stand Out

This API isn’t just for fun - it’s powerful enough for use cases in gaming, marketing, influencer content, and beyond. Imagine giving users the ability to insert themselves into their favorite scenes, or marketing teams creating custom video content featuring client avatars. The best part? You don’t have to manage infrastructure or worry about scalability. API Market takes care of that. Our Video Face Swap API is backed by a secure and scalable environment that ensures your solution works smoothly, even at high volumes.

Built for Developers, Trusted by Businesses

As with all our APIs, the Video Face Swap API comes with clear documentation, version control, and support. We want to make sure developers never feel stuck. You’ll find everything you need - from sample requests to SDKs, all in one place at API Market. We also provide access management and performance monitoring tools, so you always stay in control of your implementation. Need updates? We roll them out smoothly without breaking your existing setup.

Final Thoughts - Why Choose API Market’s Video Face Swap API

If you’re building an app or platform that can benefit from engaging video features, the Video Face Swap API is your best bet. It saves development time, improves user interaction, and keeps things fun and innovative. Explore the full potential of our Video Face Swap API and other powerful tools at API Market. Let’s help you create smarter, more interactive video experiences - one API at a time.


Getting the Most Out of Variable Fonts on Google Fonts

I have spent the past several years working (alongside a bunch of super talented people) on a font family called Recursive Sans & Mono, and it just launched officially on Google Fonts!

Wanna try it out super fast? Here’s the embed code to use the full Recursive variable font family from Google Fonts (but you will get a lot more flexibility & performance if you read further!)

<link href="https://fonts.googleapis.com/css2?family=Recursive:slnt,wght,CASL,CRSV,MONO@-15..0,300..1000,0..1,0..1,0..1&display=swap" rel="stylesheet">

Recursive is made for code, websites, apps, and more.